Abstract

This paper presents a canonical dual method for solving a quadratic discrete value selection problem subjected to inequality constraints. By using a linear transformation, the problem is first reformulated as a standard quadratic 0–1 integer programming problem. Then, by the canonical duality theory, this challenging problem is converted to a concave maximization over a convex feasible set in continuous space. It is proved that if this canonical dual problem has a solution in its feasible space, the corresponding global solution to the primal problem can be obtained directly by a general analytical form. Otherwise, the problem could be NP-hard. In this case, a quadratic perturbation method and an associated canonical primal-dual algorithm are proposed. Numerical examples are illustrated to demonstrate the efficiency of the proposed method and algorithm.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

Keywords

- Canonical Dual Problem

- Canonical Duality

- Concave Maximization

- Canonical Perturbation Method

- Nonconvex Analysis

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Many decision-making problems, such as portfolio selection, capital budgeting, production planning, resource allocation, and computer networks, etc., can often be formulated as quadratic programming problems with discrete variables. See for examples, [4, 5, 9, 24]. In engineering applications, the decision variables can not have arbitrary values. Instead, either some or all of the variables must be selected from a list of integer or discrete values for practical reasons. For examples, structural members may have to be selected from selections available in standard sizes, member thicknesses may have to be selected from the commercially available ones, the number of bolts for a connection must be an integer, the number of reinforcing bars in a concrete member must be an integer, etc. [23]. However, these integer programming problems are computationally highly demanding. Nevertheless, some numerical methods are now available.

Several review articles on nonlinear optimization problems with discrete variables are available [1, 4, 28, 33, 37, 38], and some popular methods have been discussed, including branch and bound methods, a hybrid method that combines a branch and bound method with a dynamic programming technique [29], sequential linear programming, rounding-off techniques, cutting plane techniques [2], heuristic techniques, penalty function approaches, simulated annealing [25], and genetic algorithms, etc. The relaxation methods have also been proposed recently, leading to second order cone programming (SOC) [21] and improved linearization strategy [35].

Branch and bound is perhaps the most widely known and used deterministic method for discrete optimization problems. When applied to linear problems, this method can be implemented in a way to yield a global minimum point. However for nonlinear problems there is no such guarantee, unless the problem is convex. The branch and bound method has been used successfully to deal with problems with discrete design variables. However for the problem with a large number of discrete design variables, the number of subproblems (nodes) becomes large, making the method inefficient.

Simulated annealing (SA) and genetic algorithms (GA) belong to the category of stochastic search methods [22] which based on an element of random choice. Because of this, one has to sacrifice the possibility of an absolute guarantee of success within a finite amount of computation.

Canonical duality theory provides a new and potentially useful methodology for solving a large class of nonconvex/nonsmooth/discrete problems (see the review articles [13, 19]). It was shown in [8, 12] that the Boolean integer programming problems are actually equivalent to certain canonical dual problems in continuous space without duality gap, which can be solved deterministically under certain conditions. This theory has been generalized for solving multi-integer programming [39] and the well-known max cut problems [40]. It is also shown in [13, 16] that by the canonical duality theory, the NP-hard quadratic integer programming problem is identical to a continuous unconstrained Lipschitzian global optimization problem, which can be solved via deterministic methods (but not in polynomial times) (see [20]). The canonical duality theory has been used successfully for solving a large class of challenging problems not only in global optimization, but also in nonconvex analysis and continuum mechanics [17].

In this paper, our goal is to solve a general quadratic programming problem with its decision variables taking values from discrete sets. The elements from these discrete sets are not required to be binary or uniformly distributed. An effective numerical method is developed based on the canonical duality theory [10]. The rest of the paper is organized as follows. Section 2 presents a mathematical statement of the general discrete value quadratic programming problem and how it can be transformed into a standard 0–1 programming problem in higher dimensional space. Section 3 presents a brief review on the canonical duality theory. Detailed canonical dual transformation procedure is presented in Sect. 4 to show how the integer programming problem can be converted to a concave maximization in a convex space. A perturbed computational method is developed in Sect. 5. Some numerical examples are illustrated in Sect. 6 to demonstrate the effectiveness and efficiency of the proposed method. The paper is ended with some concluding remarks.

2 Primal Problem and Equivalent Transformation

The discrete programming problem to be addressed is given below:

where \({Q}= \{ q_{ij} \} \in {\mathbb R}^{n\times n}\) is a symmetric matrix, \(\mathbf{A}=\{ a_{ij} \} \in {\mathbb R}^{m \times n}\) is a matrix with \(rank(\mathbf{A})=m<n\), \(\mathbf{c}=[c_1,\cdots ,c_n]^T \in {\mathbb R}^n\) and \(\mathbf{b}= [b_1, \cdots , b_m]^T \in {\mathbb R}^m\) are given vectors. Here, for each \(i=1, \cdots , n\),

where \(u_{i,j}, j=1, \cdots , K_i\), are given real numbers. In this paper, we let \({K}=\sum _{i=1}^n K_i\).

Problem \(({\mathscr {P}}_a)\) arises in many real-world applications, say, the pipe network optimization problems in water distribution systems, where the choices of pipelines are discrete values. Such problems have been studied extensively by traditional direct approaches (see [41]). Due to the constraint of discrete values, this problem is considered to be NP-hard and the traditional methods can only provide upper bound results. In this paper, we will show that the canonical duality theory will provide either a lower bound approach to this challenging problem, or the global optimal solution under certain conditions.

In order to convert the discrete value problem \(({\mathscr {P}}_a)\) to the standard 0–1 programming problem, we introduce the following transformation,

where, for each \(i=1, \cdots ,n \), \(u_{i,j} \in U_i,\; j=1, \cdots , K_i\). Then, the discrete programming problem \(({\mathscr {P}}_{a})\) can be written as the following 0–1 programming problem:

where

Theorem 1

Problem \(({\mathscr {P}}_{b})\) is equivalent to Problem \(({\mathscr {P}}_{a})\).

Proof

For any \(i=1,2, \cdots , n\), it is clear that constraints (6) and (7) are equivalent to the existence of only one \(j \in \{ 1, \cdots , K_i\}\), such that \(y_{i,j}=1\) while \(y_{i,j}=0\) for all other j. Thus, from the definition of \( \mathbf{y}\), the conclusion follows readily. \(\square \)

Problem \(({\mathscr {P}}_b)\) is a standard 0–1 quadratic programming problem with both equality and inequality constraints. Let

and, for a given integer K, let

Thus, on the feasible space

the integer constrained problem \(({\mathscr {P}}_b)\) can be reformulated as a standard constrained 0–1 programming problem:

3 Canonical Duality Theory: A Brief Review

The basic idea of the canonical duality theory can be demonstrated by solving the following general nonconvex problem (the primal problem \(({\mathscr {P}})\) in short)

where \( \mathbf{A}\in {\mathbb R}^{n\times n} \) is a given symmetric indefinite matrix, \(\mathbf{f}\in {\mathbb R}^n\) is a given vector (input), \(\langle \mathbf{x}, \mathbf{x}^* \rangle \) denotes the bilinear form between \(\mathbf{x}\) and its dual variable \(\mathbf{x}^*\), \({\mathscr {X}}_a \subset {\mathbb R}^n\) is a given feasible space, and \(W: {\mathscr {X}}_a \rightarrow {\mathbb R}\cup \{\infty \}\) is a general nonconvex objective function.

It must be emphasized that, different from the objective function extensively used in mathematical optimization, a real-valued function \(W(\mathbf{x})\) is called to be objective in continuum physics and the canonical duality theory only if (see [10] Chap. 6, p. 288)

where \({\mathscr {Q}} = \{ \mathbf{Q}\in {\mathbb R}^{n\times n} | \;\; \mathbf{Q}^{-1} = \mathbf{Q}^T \;\; \det \mathbf{Q}= 1 \}\) is a special rotation group.

Geometrically speaking, an objective function does not depend on the rotation, but only on certain measure of its variable. In Euclidean space \( {\mathbb R}^n\), the simplest objective function is the \(\ell _2\)-norm \(\Vert \mathbf{x}\Vert \) in \({\mathbb R}^n\) since \(\Vert \mathbf{Q} \mathbf{x}\Vert ^2 = \mathbf{x}^T \mathbf{Q}^T \mathbf{Q} \mathbf{x}= \Vert \mathbf{x}\Vert ^2 \;\; \forall \mathbf{Q}\in {\mathscr {Q}}\). By Cholesky factorization, any positive definite matrix has a unique decomposition \(C = D^* D\). Thus, any convex quadratic function is objective. Physically, an objective function does not depend on observers [7], which is essential for any real-world mathematical modeling.

The key step in the canonical duality theory is to choose a nonlinear operator

and a canonical function \({V}: {\mathscr {E}}_a \rightarrow {\mathbb R}\) such that the nonconvex objective function \({W}( \mathbf{x})\) can be recast by adopting a canonical form \({W}( \mathbf{x}) = {V}({\varLambda }(\mathbf{x}))\). Thus, the primal problem \(({\mathscr {P}})\) can be written in the following canonical form:

where \({U}(\mathbf{x}) = \langle \mathbf{x}, \mathbf{f}\rangle - \frac{1}{2}\langle \mathbf{x}, \mathbf{A}\mathbf{x}\rangle \). By the definition introduced in [10], a differentiable function \({V}(\varvec{\xi })\) is said to be a canonical function on its domain \({\mathscr {E}}_a\) if the duality mapping \(\varvec{\varsigma }= \nabla {V}(\varvec{\xi })\) from \({\mathscr {E}}_a\) to its range \( {\mathscr {S}}_a \subset {\mathbb R}^p \) is invertible. Let \(\langle \varvec{\xi }; \varvec{\varsigma }\rangle \) denote the bilinear form on \({\mathscr {E}}_a \times {\mathscr {S}}_a\). Thus, for the given canonical function \({V}(\varvec{\xi })\), its Legendre conjugate \({V}^*(\varvec{\varsigma })\) can be defined uniquely by the Legendre transformation (cf. Gao [10])

where the notation \(\mathrm{sta}\{ g(\varvec{\xi }) | \; \varvec{\xi }\in {\mathscr {E}}_a\}\) stands for finding stationary point of \(g(\varvec{\xi })\) on \({\mathscr {E}}_a\). It is easy to prove that the following canonical duality relations hold on \( {\mathscr {E}}_a \times {\mathscr {S}}_a\):

By this one-to-one canonical duality, the nonconvex term \(W( \mathbf{x})={V}({\varLambda }(\mathbf{x}))\) in the problem \(({\mathscr {P}})\) can be replaced by \(\langle {\varLambda }(\mathbf{x}) ; \varvec{\varsigma }\rangle - {V}^*(\varvec{\varsigma })\) such that the nonconvex function \({P}(\mathbf{x})\) is reformulated as the Gao-Strang total complementary function [10]:

By using this total complementary function, the canonical dual function \({P}^d (\varvec{\varsigma })\) can be obtained as

where \({U}^{\varLambda }(\mathbf{x})\) is defined by

In many applications, the geometrically nonlinear operator \({\varLambda }(\mathbf{x})\) is usually a quadratic function [3, 34]

where \(D_k \in {\mathbb R}^{n \times n}\) and \(\mathbf{b}_k\in {\mathbb R}^n (k = 1, \cdots , p)\). Let \(\varvec{\varsigma }= [\varsigma _1,\cdots , \varsigma _p]^T\). In this case, the canonical dual function can be written in the following form:

where

Let

It is easy to prove that \({\mathscr {S}}_a^+\) is convex. Moreover, \({\mathscr {S}}_a^+\) is nonempty as long as there exists one \(D_k \succ 0\).

Therefore, the canonical dual problem can be proposed as

which is a concave maximization problem over a convex set \({\mathscr {S}}^+_a \subset {\mathbb R}^p\).

Theorem 2

([10]). Problem \(({\mathscr {P}}^d)\) is canonically dual to \(({\mathscr {P}})\) in the sense that if \(\varvec{\bar{\varsigma }}\) is a critical point of \(P^d(\varvec{\varsigma })\), then

is a critical point of \(\varPi (\mathbf{x})\) and

If \(\varvec{\bar{\varsigma }}\) is a solution to \(({\mathscr {P}}^d)\), then \(\bar{\mathbf{x}}\) is a global minimizer of \(({\mathscr {P}}) \) and

Conversely, if \(\bar{\mathbf{x}}\) is a solution to \(({\mathscr {P}})\), it must be in the form of (21) for critical solution \(\varvec{\bar{\varsigma }}\) of \(P^d(\varvec{\varsigma })\).

To help explaining the theory, we consider a simple nonconvex optimization in \({\mathbb R}^n\):

where \({\alpha }, {\lambda }> 0\) are given parameters. The criticality condition \(\nabla P(\mathbf{x})=0\) leads to a nonlinear algebraic equation system in \({\mathbb R}^n\)

Clearly, to solve this n-dimensional nonlinear algebraic equation directly is difficult. Also traditional convex optimization theory can not be used to identify global minimizer. However, by the canonical dual transformation , this problem can be solved. To do so, we let \(\xi ={\varLambda }(u)=\frac{1}{2}\Vert \mathbf{x}\Vert ^2-{\lambda }\in {\mathbb R}\). Then, the nonconvex function \(W(\mathbf{x}) = \frac{1}{2}\alpha (\frac{1}{2}\Vert \mathbf{x}\Vert ^2 -{\lambda })^2\) can be written in canonical form \(V(\xi ) = \frac{1}{2}\alpha \xi ^2\). Its Legendre conjugate is given by \(V^{*}(\varsigma )=\frac{1}{2}\alpha ^{-1}\varsigma ^2\), which is strictly convex. Thus, the total complementary function for this nonconvex optimization problem is

For a fixed \(\varsigma \in {\mathbb R}\), the criticality condition \(\nabla _{\mathbf{x}} \varXi (\mathbf{x}, \varsigma )=0\) leads to

For each \(\varsigma \ne 0 \), the Eq. (27) gives \(\mathbf{x}=\mathbf{f}/\varsigma \) in vector form. Substituting this into the total complementary function \(\varXi \), the canonical dual function can be easily obtained as

The critical point of this canonical function is obtained by solving the following dual algebraic equation

For any given parameters \(\alpha \), \({\lambda }\) and the vector \(\mathbf{f}\in {\mathbb R}^n\), this cubic algebraic equation has at most three roots satisfying \(\varsigma _1 \ge 0 \ge \varsigma _2\ge \varsigma _3\), and each of these roots leads to a critical point of the nonconvex function \(P(\mathbf{x})\), i.e., \(\mathbf{x}_i=\mathbf{f}/\varsigma _i\), \(i=1,2,3\). By the fact that \(\varsigma _ 1 \in {\mathscr {S}}^+_a = \{ \varsigma \in {\mathbb R}\; |\; \varsigma > 0 \}\), then Theorem 1 tells us that \(\mathbf{x}_1\) is a global minimizer of \({P}(\mathbf{x})\).

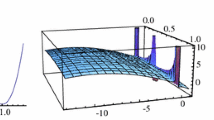

Consider one dimension problem with \(\alpha = 1\), \({\lambda }=2\), \(f= \frac{1}{2}\), the primal function and canonical dual function are shown in Fig. 1, where, \(x_1= 2.11491\) is a global minimizer of P(x), \(\varsigma _1=0.236417\) is a global maximizer of \({P}^d(\varsigma )\), and \({P}(x_1)=-1.02951={P}^d(\varsigma _1)\) (See the two black dots).

If we let \(\mathbf{f}= 0\), the graph of \({P}(\mathbf{x})\) is symmetric (i.e., the so-called double-well potential or the Mexican hat for \(n=2\) [11]) with infinite number of global minimizers satisfying \(\Vert \mathbf{x}\Vert ^2 = 2 {\lambda }\). In this case, the canonical dual \({P}^d (\varsigma ) = - \frac{1}{2}{\alpha }^{-1} \varsigma ^2 - {\lambda }\varsigma \) is strictly concave with only one critical point (local maximizer) \(\varsigma _3 = - {\alpha }{\lambda }< 0 \) (for \({\alpha }, {\lambda }> 0\)). The corresponding solution \(\mathbf{x}_3 = \mathbf{f}/\varsigma _3 = 0\) is a local maximizer. By the canonical dual equation (29) we have \(\varsigma _1 = \varsigma _2 = 0\) located on the boundary of \({\mathscr {S}}^+_a\), which corresponding to the two global minimizers \(x_{1,2} = \pm \sqrt{2 {\lambda }}\) for \(n=1\), see Fig. 1b.

This simple example shows a fundamental issue in global optimization, i.e., the optimal solutions of a nonconvex problem depends sensitively on the linear term (input) \(\mathbf{f}\). Geometrically speaking , the objective function \({W}( \mathbf{x})\) in \({P}(\mathbf{x})\) possesses certain symmetry. If there is no linear term, i.e., the subjective function in \({P}(\mathbf{x})\), the nonconvex problem usually has more than one global minimizer due to the symmetry. Traditional direct approaches and the popular SDP method are usually failed to deal with this situation. By the canonical duality theory, we understand that in this case the canonical dual function has no critical point in its open set \({\mathscr {S}}^+_a\). Therefore, by adding a linear perturbation \(\mathbf{f}\) to break this symmetry, the canonical duality theory can be used to solve the nonconvex problems to obtain one of global optimal solutions. This idea was originally from Gao’s work (1996) on post-buckling analysis of large deformed beam. The potential energy of this beam model is a double-well function, similar to this example, without the force \(\mathbf{f}\), the beam could have two buckling states (corresponding to two minimizers) and one un-buckled state (local maximizer). Later on (2008) in the Gao and Ogden work on analytical solutions in phase transformation [14], they further discovered that the nonconvex system has no phase transition unless the force distribution f(x) vanished at certain points. They also discovered that if force field f(x) changes dramatically, all the Newton type direct approaches failed even to find any local minimizer. This discovery is fundamentally important for understanding NP-hard problems in global optimization and chaos in nonconvex dynamical systems. The linear perturbation method has been used successfully for solving global optimization problems [16, 18, 32, 40]. Comprehensive reviews of the canonical duality theory and its applications in nonconvex analysis and global optimization can be found in [11, 13, 15].

4 Canonical Dual Problem

Now we are ready to apply the canonical duality theory for solving the integer programming problem \(({\mathscr {P}}_c)\) presented in Sect. 2. As indicated in [12, 13], the key step for solving this NP-hard problem is to use a so-called canonical measure \(\varvec{\rho }= \{y_i (y_i -1)\} \in {\mathbb R}^K\) such that the integer constraint \(y_{i} \in \{ 0, 1 \}\) can be equivalently written in the canonical form

where the notation \(\mathbf{s}\circ \mathbf{t}:=[s_1 t_1,s_2 t_2,\ldots ,s_K t_K]^T\) denotes the Hadamard product for any two vectors \(\mathbf{s}, \mathbf{t} \in {\mathbb R}^K\). Thus, the so-called geometrically admissible measure \(\varLambda \) can be defined as

Let

and define

Clearly, the constraints in \({\mathscr {Y}}\) can be replaced by the canonical transformation \({V}(\varLambda (\mathbf{y}))\) and the primal problem \(({\mathscr {P}}_c)\) can be equivalently written in the standard canonical form [13]

By the fact that \({V}(\varvec{\xi })\) is convex, lower, semi-continuous on \( {\mathscr {E}}\), its sub-differential leads to the canonical dual variable \( \varvec{\varsigma }= ( \varvec{\sigma }, \varvec{\tau }, \varvec{\mu }) \in \partial {V}(\varvec{\xi }) \in {\mathscr {E}}^* = {\mathbb R}^{m + n + {K}}\), and its Fenchel super-conjugate (cf. Rockafellar [30])

is also convex, l.s.c. on \({\mathscr {E}}^*\). By convex analysis, the following generalized canonical duality relations

hold on \({\mathscr {E}}\times {\mathscr {E}}^*\), where

are effective domains of \({V}\) and \({V}^\sharp \), respectively. The last equality in (32) is equivalent to the following KKT complementarity conditions:

Clearly, the condition \(\varvec{\mu }\ne 0\) leads to the integer condition \(\varvec{\rho }= \{ y_i(y_i -1) \} = 0 \in {\mathbb R}^K\). Let

Thus, on \({\mathbb R}^K \times {\mathscr {E}}^*_a\), the total complementary function \(\varXi \) associated with \(\varPi (\mathbf{y}) \) can be written as

The criticality condition \(\nabla _{\mathbf{y}} \varXi (\mathbf{y}, \varvec{\varsigma }) = 0\) leads to the canonical equilibrium equation

Let \({\mathscr {S}}_a \subset {\mathscr {E}}^*_a\) be a canonical dual space:

Then on \({\mathscr {S}}_a\), the canonical dual function can be finally formulated as

Theorem 3

(Complementary-Dual Principle). If \(\varvec{\bar{\varsigma }}= (\varvec{\bar{\sigma }}, \bar{\varvec{\tau }}, \bar{\varvec{\mu }}) \) is a KKT point of \(\varPi ^d(\varvec{\varsigma })\) on \({\mathscr {S}}_a\), then the vector

is a KKT point of Problem \(({\mathscr {P}})\) and

Proof

By introducing the Lagrange multiplier vectors \( \varvec{\xi }= \{ \varvec{\varepsilon }, \varvec{\delta }, \varvec{\rho }\} \in {\mathscr {E}}_a\) to relax the inequality constraintsFootnote 1 in \({\mathscr {E}}^*_a\), the Lagrangian function associated with the dual function \(\varPi ^d(\varvec{\sigma }, \varvec{\tau },\varvec{\mu }) \) becomes

Then, in terms of \(\mathbf{y}= {G}^{-1} (\varvec{\mu }) \mathbf{F}(\varvec{\sigma }, \varvec{\tau }, \varvec{\mu }) \), the criticality condition \(\nabla _{\varvec{\varsigma }} L(\varvec{\varsigma }, \varvec{\xi }) = 0\) leads to

as well as the KKT conditions

They can be written as:

This proves that if \((\varvec{\bar{\sigma }}, \bar{\varvec{\tau }}, \bar{\varvec{\mu }}) \) is a KKT point of \(\varPi ^d(\varvec{\varsigma })\), then the vector

is a KKT point of Problem \(({\mathscr {P}})\).

Again, by the complementary conditions (41)–(43) and (39), we have

Therefore, the theorem is proved. \(\square \)

Theorem 3 shows that the strong duality (40) holds for all KKT points of the primal and dual problems. In continuum mechanics, this theorem solved a 50-year-old problem and is known as the Gao principle [27]. In nonconvex analysis, this theorem can be used for solving a large class of fully nonlinear partial differential equations.

Remark 1.

As we have demonstrated that by the generalized canonical duality (32), all KKT conditions can be recovered for both equality and inequality constraints. Generally speaking, the nonzero Lagrange multiplier condition for the linear equality constraint is usually ignored in optimization textbooks. But it can not be ignored for nonlinear constraints. It is proved recently [26] that the popular augmented Lagrange multiplier method can be used mainly for linear constrained problems. Since the inequality constraint \(\varvec{\mu }\ne 0 \) produces a nonconvex feasible set \({\mathscr {E}}^*_a\), this constraint can be replaced by either \(\varvec{\mu }< 0\) or \(\varvec{\mu }> 0 \). But the condition \(\varvec{\mu }< 0\) is corresponding to \(\mathbf{y}\circ (\mathbf{y}-\mathbf{e}_{{K}}) \ge 0\), this leads to a nonconvex open feasible set for the primal problem. By the fact that the integer constraints \(y_i (y_i- 1) = 0\) are actually a special case (boundary) of the boxed constraints \( 0 \le y_i \le 1\), which is corresponding to \(\mathbf{y}\circ (\mathbf{y}-\mathbf{e}_{{K}}) \le 0\), we should have \(\varvec{\mu }> 0\) (see [8] and [12, 16]). In this case, the KKT condition (43) should be replaced by

Therefore, as long as \(\varvec{\mu }\ne 0\) is satisfied, the complementarity condition in (47) leads to the integer condition \(\mathbf{y}\circ (\mathbf{y}-\mathbf{e}_{{K}}) = 0\). Similarly, the inequality \(\varvec{\tau }\ne 0 \) can be replaced by \(\varvec{\tau }> 0\).

By this remark, we can introduce a convex subset of the dual feasible space \({\mathscr {S}}_a\):

Then the canonical dual problem can be eventually proposed as the following

It is easy to check that \( \varPi ^d(\varvec{\varsigma })\) is concave on the convex open set \({\mathscr {S}}^+_a\). Therefore, if \( {\mathscr {S}}^+_a\) is not empty, this canonical dual problem can be solved easily by convex minimization techniques.

Theorem 4

Assume that \(\varvec{\bar{\varsigma }}= (\varvec{\bar{\sigma }},\bar{\varvec{\tau }}, \bar{\varvec{\mu }})\) is a KKT point of \(\varPi ^d(\varvec{\varsigma })\) and \(\bar{\mathbf{y}}= \mathbf{G}^{-1} (\bar{\varvec{\mu }}) \mathbf{F}(\varvec{\bar{\varsigma }})\). If \( \varvec{\bar{\varsigma }}\in {\mathscr {S}}_a^+\), then \(\bar{\mathbf{y}}\) is a global minimizer of \(\varPi (\mathbf{y})\) and \(\varvec{\bar{\varsigma }}\) is a global maximizer of \(\varPi ^d(\varvec{\varsigma })\) with

Proof

It is easy to check that the total complementary function \(\varXi (\mathbf{y}, \varvec{\varsigma })\) is a saddle function on the open set \({\mathbb R}^K \times {\mathscr {S}}^+_a\), i.e., convex (quadratic) in \(\mathbf{y}\in {\mathbb R}^K\) and concave (linear) in \(\varvec{\varsigma }\in {\mathscr {S}}^+_a\). Therefore, if \((\bar{\mathbf{y}},\varvec{\bar{\varsigma }})\) is a critical point of \(\varXi (\mathbf{y}, \varvec{\varsigma })\), we must have

Note that

Thus, it follows from (51) that

This completes the proof. \(\square \)

Remark 2.

By the fact that \({\mathscr {S}}^+_a\) is an open convex set, if the problem \(({\mathscr {P}})\) has multiple global minimizers, then its canonical dual solutions could be located on the boundary of \({\mathscr {S}}^+_a\) as illustrated in Sect. 3 and in [12, 31]. In order to solve this problem, we let

Then on this closed convex domain, the relaxed concave maximization problem

has at least one solution \(\varvec{\bar{\varsigma }}= (\varvec{\bar{\sigma }}, \bar{\varvec{\tau }}, \bar{\varvec{\mu }})\). If the corresponding \(\bar{\mathbf{y}}= \mathbf{G}^{-1} (\bar{\varvec{\mu }}) \mathbf{F}(\varvec{\bar{\varsigma }})\) is feasible, then \(\bar{\mathbf{y}}\) is a global minimizer of the primal problem \(({\mathscr {P}})\). If \(\mathbf{G}(\bar{\varvec{\mu }})\) is singular, than \(\mathbf{G}^{-1} (\bar{\varvec{\mu }})\) can be replaced by the Moore–Penrose generalized inverse \(\mathbf{G}^{\dagger }\) (see [31]). Otherwise, the relaxed canonical dual \(({\mathscr {P}}^\sharp )\) provides a lower bound approach to the primal problem \(({\mathscr {P}})\), i.e.,

This is one of the main advantages of the canonical duality theory.

5 Canonical Perturbation Method

In fact, Problem \(({\mathscr {P}}^d)\) can be rewritten as a convex minimization problem:

If the primal problem has a unique global minimal solution, this canonical dual problem may have a unique critical point in \({\mathscr {S}}^+_a\) which can be obtained easily by well-developed nonlinear minimization techniques. Otherwise, the canonical dual function \(\varPi ^d(\varvec{\varsigma })\) may have critical point \(\varvec{\bar{\varsigma }}\) located on the boundary of \({\mathscr {S}}_a^+\), where the matrix \(\mathbf{G}(\varvec{\mu }) \) is singular. In order to handle this issue, \(({\mathscr {P}}^d)\) can be relaxed to a semi-definite programming problem:

where the parameter g is actually the Gao-Strang pure complementary gap function [19], and \(\mathbf{G}^{\dagger }\) represents the Moore–Penrose generalized inverse of \(\mathbf{G}\). Since \(\varvec{\tau }\) is a Lagrange multiplier for the linear equality \(H\mathbf{y}= \mathbf{e}_n\), the condition \(\varvec{\tau }\ne 0\) can be ignored in this section as long as the final solution \(\mathbf{y}\) is feasible.

Lemma 1

(Schur Complementary Lemma). Let

If \(B \succ 0\), then A is positive (semi) definite if and only if the matrix \(D-CB^{-1} C^T \) is positive (semi) definite. If \(B\succeq 0\), then, A is positive semi-definite if and only if the matrix \(D-CB^{-1} C^T\) is positive semi-definite and \((I - B B^{-1}) C=0\).

According to Lemma 1, (53) is equivalent to

Thus, the canonical dual problem \(({\mathscr {P}}^d)\) can be further relaxed to the following stardard semi-definite problem (SDP):

Although the SDP relaxation can be used theoretically to solve the canonical dual problem for the case that \(\varPi ^d\) has critical points on the boundary \(\partial {\mathscr {S}}_a^+\), in practice, the matrix \(\mathbf{G}(\varvec{\mu })\) will be ill-conditioning when the dual solution approaches to \(\partial {\mathscr {S}}_a^+\). In order to solve this type of challenging problems, a canonical perturbation method has been suggested [16, 32]. Let

where, \(\{\delta _k\}\) is a bounded sequence of positive real numbers, \(\{\mathbf{y}_k \} \in {\mathbb R}^K\) is a set of given vectors, \(\mathbf{G}_{\delta _k}(\varvec{\mu }) = \mathbf{G}(\varvec{\mu })+\delta _{k} I\), \(\mathbf{F}_{\delta _k}^T(\varvec{\varsigma }) = \mathbf{F}^T(\varvec{\varsigma }) +\delta _{k} \mathbf{y}_k\). Let

Clearly, we have \({\mathscr {S}}_a^+ \subset {\mathscr {S}}_{\delta _k}^+ \). Therefore, the perturbed canonical dual problem can be expressed as

Based on this perturbed problem, the following canonical primal-dual algorithm can be proposed for solving the nonconvex problem \(({\mathscr {P}})\).

Algorithm 1

(Canonical Primal-Dual Algorithm)

Given initial data \(\delta _0 > 0, \;\; \mathbf{y}_0 \in {\mathbb R}^K\), and error allowance \(\varepsilon > 0\), let \(k = 0\).

-

1.

Solve the perturbed canonical dual problem \(({\mathscr {P}}^d_{\delta _k})\) to obtain \(\varvec{\varsigma }_k \in \mathcal {S}^{+}_{\delta _k}\).

-

2.

Compute \(\widetilde{\mathbf{y}}_{k+1} = [\mathbf{G}_{\delta _k}( \varvec{\varsigma }_k)]^\dag \mathbf{F}_{\delta _k}(\varvec{\varsigma }_k)\) and let

\( \mathbf{y}_{k+1} = \mathbf{y}_k + \beta _k (\widetilde{\mathbf{y}}_{k+1} - \mathbf{y}_k), \;\; \beta _k \in [0,1] .\)

-

3.

If \( | P(\mathbf{y}_{k+1} - P(\mathbf{y}_{k}) | \le \varepsilon \), then stop, \(\mathbf{y}_{k+1}\) is the optimal solution. Otherwise, let \(k = k + 1\), go back to step 1.

In this algorithm, \(\{\beta _k\} \in [ 0, 1]\) are given parameters, which change the search directions. Clearly, if \( \beta _k = 1 \), we have \(\mathbf{y}_{k+1} = \widetilde{\mathbf{y}}_{k+1}\).

The key step in this algorithm is to solve the perturbed canonical dual problem \(({\mathscr {P}}_{\delta _k}^d)\), which is equivalent to

This problem can be solved by a well-known software package named SeDuMi [36].

6 Numerical Experience

All data and computational results presented in this section are produced by Matlab. In order to save space and fit the matrix in the paper, we round our these results up to two decimals.

Example 1. 5-dimensional problem.

Consider Problem \(({\mathscr {P}}_a)\) with \(\mathbf{x}{=}[x_1,\cdots ,x_5]^T\), while \(x_i \in \{ 2,3,5 \} \), \(i{=}1, \cdots , 5\),

Under the transformation (3), this problem is transformed into the 0–1 programming Problem \(({\mathscr {P}})\), where

The canonical dual problem can be stated as follows:

By solving this dual problem with the sequential quadratic programming method in the optimization Toolbox within the Matlab environment, we obtain

and

It is clear that \(\varvec{\bar{\varsigma }}= (\varvec{\bar{\sigma }},\bar{\varvec{\tau }},\bar{\varvec{\mu }}) \in \mathcal {S}_a^{+}\). Thus, from Theorem 4,

is the global minimizer of Problem \(({\mathscr {P}})\) with \(\varPi ^d(\varvec{\bar{\varsigma }})=-227.87=\varPi (\bar{\mathbf{y}})\). The solution to the original primal problem can be calculated by using the transformation

to give

with \(P(\bar{\mathbf{x}})=-227.87\).

Example 2. 10-dimensional problem. Consider Problem \(({\mathscr {P}}_a)\), with \(\mathbf{x}=[x_1, \cdots , x_{10}]^T \), while \(x_i \in \{1,2,4,7,9\},\;i=1,\cdots ,10\),

By solving the canonical dual problem of Problem \(({\mathscr {P}}_a)\), we obtain

and

It is clear that \(\varvec{\bar{\varsigma }}= (\varvec{\bar{\sigma }},\bar{\varvec{\tau }},\bar{\varvec{\mu }}) \in \mathcal {S}_a^{+}\). Therefore,

is the global minimizer of the problem \(({\mathscr {P}})\) with \(\varPi ^d(\varvec{\bar{\varsigma }})=45.54=\varPi (\bar{\mathbf{y}})\). The solution to the original primal problem is

with \({P}(\bar{\mathbf{x}})=45.54\).

Example 3. Relatively large size problems.

Consider Problem \(({\mathscr {P}}_a)\) with \(n=20\), 50, 100, 200, and 300. Let these five problems be referred to as Problem (1), \(\cdots \), Problem (5), respectively. Their coefficients are generated randomly with uniform distribution. For each problem, \(q_{ij} \in (0,1)\), \( a_{ij} \in (0,1)\), for \(i=1, \cdots , n\); \(j=1, \cdots , n\), and \(c_i \in (0,1)\), \(x_i \in \{1,2,3,4,5 \}\), for \(i=1, \cdots n\). Without loss of generality, we ensure that the constructed \({Q}\) is a symmetric matrix. Otherwise, we let \({Q}= \frac{{Q}+{Q}^T }{2}\). Furthermore, let \({Q}\) be diagonally dominated. For each \(x_i\), its lower bound is \(l_i=1\), and its upper bound is \(u_i=5\). Let \(l=[l_1, \cdots , l_n]^T\) and \(u=[u_1,\cdots ,u_n]^T \). The right-hand sides of the linear constraints are chosen such that the feasibility of the test problem is satisfied. More specifically, we set \(\mathbf{b}=\sum _{j} a_{ij} l_j + 0.5\cdot (\sum _j a_{ij}u_j-\sum _j a_{ij} l_j)\).

We then construct the canonical problem of each of the five problems. It is solved by using the sequential quadratic programming method with active set strategy from the Optimization Toolbox within the Matlab environment. The specifications of the personal notebook computer used are: Window 7 Enterprise, Intel(R), Core(TM)(2.50 GHZ). Table 1 presents the numerical results, where m is number of linear constraints in Problem \(I({\mathscr {P}}_a)\).

From Table 1, we see that the algorithm based on the canonical dual method can solve large scale problems with reasonable computational time. Furthermore, for each of the five problems, the solution obtained is a global optimal solution. For the case of \(n=300\), the equivalent problem in the form of Problem \(({\mathscr {P}}_b)\) has 1500 variables. For such a problem, there are \(2^{1500}\) possible combinations.

7 Conclusion

We have presented a canonical duality approach for solving a general quadratic discrete value selection problem with linear constraints. Our results show that this NP-hard problem can be converted to a continuous concave dual maximization problem over a convex space without duality gap. For certain given data, if this canonical dual has a KKT point in the dual feasible space \({\mathscr {S}}^+_a\), the problem can be solved easily by well-developed convex optimization methods. Otherwise, a canonical perturbation method is proposed, which can be used to deal with challenging cases when the primal problem has multiple global minimizers. Several examples, including some relatively large scale ones, were solved effectively by using the method proposed.

Remanning open problems include how to solve the canonical dual problem \(({\mathscr {P}}^d)\) more efficiently instead of using the SDP approximation. Also, for the given data \({Q}, \mathbf{c}, \mathbf{A}, \mathbf{b}\), the existence condition for the canonical dual problem having KKT point in \({\mathscr {S}}^+_a\) is fundamentally important for understanding NP-hard problems . If the canonical dual \(({\mathscr {P}}^d)\) has no KKT point in the closed set \( {{\mathscr {S}}}^+_c = {\mathscr {S}}^+_a \cup \partial {\mathscr {S}}^+_a\), the primal problem is equivalent to the following canonical dual problem (see Eq. (67) in [16])

i.e., to find the minimal stationary value of \(\varPi ^d\) on \({\mathscr {S}}_a\). Since the feasible set \({\mathscr {S}}_a\) is nonconvex, to solve this canonical dual problem is very difficult. Therefore, it is a conjecture that the primal problem \(({\mathscr {P}})\) could be NP-hard if its canonical dual \(({\mathscr {P}}^d)\) has no KKT point in the closed set \( \mathcal {S}_a^{+} \) [12]. In this case, one alternative approach for solving \(({\mathscr {P}})\) is the canonical dual relaxation \(({\mathscr {P}}^\sharp )\). Although the relaxed problem \(({\mathscr {P}}^\sharp )\) is convex, by Remark 2 we know that there exists a duality gap between the primal problem \(({\mathscr {P}})\). It turns out that the associated SDP method provides only a lower bound approach for solving the primal problem. Further researches are needed to know how big is this duality gap, how much does this relaxation lose, and how to solve the nonconvex canonical dual problem (56).

Notes

- 1.

The inequality \(\det \mathbf{G}(\varvec{\varsigma }) \ne 0\) is not a constraint since the Lagrange multiplier for this inequality is identical zero.

References

Arora, J.S., Huang, M.W., Hsieh, C.C.: Methods for optimization of nonlinear problems with discrete variables: a review. Struct. Optim. 8, 69–85 (1994)

Balas, E., Ceria, S., Cornuéjols, G.: A lift-and-project cutting plane algorithm for mixed 0–1 programs. Math. Program. 58, 295–324 (1993)

Belmiloudi, A.: Stabilization, Optimal and Robust Control: Theory and Applications in Biological and Physical Science. Springer, London (2008)

Bertsimas, D., Shioda, R.: Classification and regression via integer optimization. Oper. Res. 55(2), 252–271 (2007)

Chen, D.S., Batson, R.G., Dang, Y.: Applied Integer Programming: Modeling and Solution. Wiley, New Jersey (2010)

Ciarlet, P.G.: Linear and Nonlinear Functional Analysis with Applications. SIAM, Philadelphia (2013)

Marsden, J.E., Hughes, T.J.R.: Mathematical Foundations of Elasticity. Prentice-Hall, Upper Saddle River (1983)

Fang, S.C., Gao, D.Y., Sheu, R.L., Wu, S.Y.: Canonical dual approach to solving 0–1 quadratic programming problems. J. Ind. Manag. Optim. 4(4), 125–142 (2008)

Floudas, C.A.: Nonlinear and Mixed-Integer Optimization: Theory Methods and Applications. Kluwer Academic Publishers, Dordrecht (2000)

Gao, D.Y.: Duality Principles in Nonconvex Systems: Theory, Methods and Applications, p. 454. Kluwer Academic Publishers, Dordrecht (2000)

Gao, D.Y.: Nonconvex semi-linear problems and canonical duality solutions, In: Advances in Mechanics and Mathematics, vol. II, pp. 261–312. Kluwer Academic Publishers, Dordrecht (2003)

Gao, D.Y.: Solutions and optimality criteria to box constrained nonconvex minimization problem. J. Ind. Manag. Optim. 3(2), 293–304 (2007)

Gao, D.Y.: Canonical duality theory: unified understanding and generalized solution for global optimization problems. Comput. Chem. Eng. 33, 1964–1972 (2009)

Gao, D.Y., Ogden, R.W.: Multiple solutions to non-convex variational problems with implications for phase transitions and numerical computation. Q. J. Mech. Appl. Math 61(4), 497–522 (2008)

Gao, D.Y., Ruan, N.: Solutions and optimality criteria for nonconvex quadratic-exponential minimization problem. Math. Method. Oper. Res. 67, 479–496 (2008)

Gao, D.Y., Ruan, N.: Solutions to quadratic minimization problems with box and integer constraints. J. Glob. Optim. 47, 463–484 (2010)

Gao, D.Y., Ruan, N., Pardalos, P.M.: Canonical dual solutions to sum of fourth-order polynomials minimization problems with applications to sensor network localization. In: Boginski, V.L., Commander, C.W., Pardalos, P.M., Ye, Y.Y. (eds.) Sensors: Theory, Algorithms and Applications, vol. 61, pp. 37–54. Springer, Berlin (2012)

Gao, D.Y., Ruan, N., Sherali, H.D.: Solutions and optimality criteria for nonconvex constrained global optimization problems. J. Glob. Optim. 45, 473–497 (2009)

Gao, D.Y., Sherali, H.D.: Canonical duality: connection between nonconvex mechanics and global optimization. In: Advances in Application Mathematics and Global Optimization, pp. 249–316. Springer, Berlin (2009)

Gao, D.Y., Watson, L.T., Easterling, D.R., Thacker, W.I., Billups, S.C.: Solving the canonical dual of box- and integer-constrained nonconvex quadratic programs via a deterministic direct search algorithm. Optim. Methods Softw. 28(2), 313–326 (2013)

Ghaddar, B., Vera, J.C., Anjos, M.: Second-order cone relaxations for binary quadratic polynomial programs. SIAM J. Optim. 21, 391–414 (2011)

Holland, J.H.: Adaptation in Natural and Artificial System. The University of Michigan Press, Ann, Arbor, MI (1975)

Huang, M.W., Arora, J.S.: Optimal design with discrete variables: Some numerical experiments. INT. J. Numer. Meth. Eng. 40, 165–188 (1997)

Karlof, J.K.: Integer Programming: Theory and Practice. CRC Press, Boca Raton (2006)

Kincaid, R.K., Padula, S.L.: Minimizing distortion and internal forces in truss structures by simulated annealing. In: Proceedings of the 31st AIAA SDM Conference, Long Beach, CA, pp. 327–333 (1990)

Latorrie, V., Gao, D.Y.: Canonical duality for solving general nonconvex constrained problems. Optim. Lett. 10(8), 1–17 (2015)

Li, S.F., Gupta, A.: On dual configuration forces. J. Elast. 84, 13–31 (2006)

Loh, H.T., Papalambros, P.Y.: Computational implementation and tests of a sequential linearization approach for solving mixed-discrete nonlinear design optimization. J. Mech. Des. ASME 113, 335–345 (1991)

Marsten, R.E., Morin, T.L.: A hybrid approach to discrete mathematical programming. Math. Program. 14, 21–40 (1978)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Ruan, N., Gao, D.Y.: Global optimal solutions to a general sensor network localization problem. Perform. Eval. 75–76, 1–16 (2014)

Ruan, N., Gao, D.Y., Jiao, Y.: Canonical dual least square method for solving general nonlinear systems of equations. Comput. Optim. Appl. 47, 335–347 (2010)

Sandgren, E.: Nonlinear integer and discreter programming in mechanical design optimizatin. J. Mech. Des. ASME. 112, 223–229 (1990)

Santos, H.A.F.A., Moitinho De Almeida, J.P.: Dual extremum principles for geometrically exact finite strain beams. Int/ J. Nonlinear Mech. Elsevier 46(1), 151 (2010)

Sherali, H.D., Smith, J.C.: An improved linearization strategy for zero-one quadratic programming problems. Optim. Lett. 1(1), 33–47 (2007)

Sturn, J.F.: Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cone. Optim. Meth. Softw. 11, 625–653 (1999)

Thanedar, P.B., Vanderplaats, G.N.: A survey of discrete variable optimization for structural design. J. Struct. Eng. ASCE 121, 301–306 (1994)

Wang, S., Teo, K.L., Lee, H.W.J.: A new approach to nonlinear mixed discrete programming problems. Eng. Optim. 30(3), 249–262 (1998)

Wang, Z.B., Fang, S.C., Gao, D.Y., Xing, W.X.: Global extremal conditions for multi-integer quadratic programming. J. Ind. Manag. Optim. 4(2), 213–225 (2008)

Wang, Z.B., Fang, S.C., Gao, D.Y., Xing, W.X.: Canonical dual approach to solving the maximum cut problem. J. Glob. Optim. 54(2), 341–351 (2012)

Zecchin, A.C., Simpson, A.R., Maier, H.R., Nixon, J.B.: Parametric study for an ant algorithm applied to water distribution system optimization. IEEE Trans. Evol. Comput. 9(2), 175–191 (2005)

Acknowledgements

The research is supported by US Air Force Office of Scientific Research under grants FA2386-16-1-4082 and FA9550-17-1-0151.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Ruan, N., Gao, D.Y. (2017). Global Optimal Solution to Quadratic Discrete Programming Problem with Inequality Constraints. In: Gao, D., Latorre, V., Ruan, N. (eds) Canonical Duality Theory. Advances in Mechanics and Mathematics, vol 37. Springer, Cham. https://doi.org/10.1007/978-3-319-58017-3_16

Download citation

DOI: https://doi.org/10.1007/978-3-319-58017-3_16

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-58016-6

Online ISBN: 978-3-319-58017-3

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)