Abstract

We review recent advances in the quadratic convex reformulation (QCR) approach, which is employed to derive efficient equivalent reformulations for mixed-integer quadratically constrained quadratic programming (MIQCQP) problems. Although MIQCQP problems can be directly plugged into and solved by standard MIQP solvers that are based on branch-and-bound algorithms, doing so is inefficient because the continuous relaxation of the standard MIQCQP formulation is very loose. The QCR approach is a systematic way to derive tight equivalent reformulations. We explore the QCR technique on subclasses of MIQCQP problems with simpler structures first and then generalize it step by step so that it can be applied to general MIQCQP problems. We also cover the recent extension of QCR to semi-continuous quadratic programming problems.

Keywords

- Quadratic programming

- Quadratic convex reformulation

- Recent advances

- Semi-continuous quadratic programming

1 Introduction

Mixed-integer quadratically constrained quadratic programming (MIQCQP) problems are mathematical programming problems with continuous and discrete variables and quadratic functions in the objective function and constraints. MIQCQP is a natural way to formulate nonlinear problems in which the system structure (discrete) and the parameters (continuous) must be optimized simultaneously.

MIQCQPs have been used in various applications, including the process industry and the financial, engineering, management science and operations research sectors. Examples include the unit commitment problem (Frangioni and Gentile 2006; Frangioni et al. 2011), the Markowitz mean-variance model with practical constraints (Mitra et al. 2007), the chaotic mapping of complete multipartite graphs (Fu et al. 2001), the material cutting problem (Cui 2005), and the capacity planning problem (Hua and Banerjee 2000). More MIQCQP applications can be found in Grossmann and Sahinidis (2002). The MIQCQP problem is in general NP-hard. It also contains many NP-hard problems as special cases, such as the binary quadratic programming problem, the integer quadratic programming problem, and the semi-continuous quadratic programming problem. All these problems are very difficult to solve and yet have many useful real-life applications, such as the max-cut problem (Rendl et al. 2010), the portfolio lot sizing problem (Li et al. 2006), and the cardinality constrained portfolio selection and control problems (Gao and Li 2011, 2013b; Zheng et al. 2014; Gao and Li 2013a). The needs in such diverse areas have motivated research and development in solving MIQCQP problems, which become more challenging as problem sizes grow in the “big data” era. Various methods to find local or global optimal solutions and good lower bounds for different classes of MIQCQP problems can be found in Li and Sun (2006), which is also an excellent survey of nonlinear integer programming.

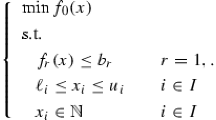

The general form of an MIQCQP problem is:

where we assume that, after fixing the values of \(x_{i}\), \(i \in I\), the remaining problem is convex. The standard continuous relaxation of problem (P) resulting from removing the integrality constraint \(x_{i} \in \mathbb{Z}\) is a convex problem. Thus, problem (P) can be directly plugged into and solved by many off-the-shelf solvers such as CPLEX and Gurobi, which use branch-and-bound schemes. The major issue is that the bound from the standard continuous relaxation is usually very loose, resulting in a large search tree in the branch-and-bound process.

One remedy is to find equivalent reformulations that have a tighter continuous relaxation. In this chapter, we review a general technique, the quadratic convex reformulation (QCR), that is used to find good equivalent reformulations for problem (P). When a better reformulation is solved by CPLEX or Gurobi, the computation time needed can be significantly reduced.

The QCR approach focuses on finding a tighter MIQCQP formulation that is equivalent to the original problem (P). It has the following characteristics:

- (a) Quadratic functions that vanish in the feasible region can be added to the objective function and the constraints.

- (b) These added quadratic functions can be characterized by a set of parameters.

- (c) A convex problem is solved to get the best parameters such that the continuous relaxation of the reformulation is convex and at the same time provides a lower bound that is as tight as possible.

The process of QCR can be demonstrated by a simple example. The solid curve of Fig. 4.1 is the original objective function and the two dots are the feasible solutions. We can find a new objective curve, the dashed curve, that passes through the corresponding objective points of the feasible solutions and at the same time provides a better lower bound from its continuous relaxation. It is essential that the two curves coincide on the feasible region. The curvature of the dashed curve is smaller than that of the solid curve. That is why the QCR process is also called a “flattening” process in Plateau (2006) and Ahlatçıoğlu et al. (2012).
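The flattening idea can be checked numerically on a one-variable toy problem. The sketch below is only an illustration under our own assumptions: the function f(x) = 4x² − 3x, the helper names and the grid over d are hypothetical and are not taken from Fig. 4.1. It adds the term −d(x² − x), which vanishes at x = 0 and x = 1, and compares the lower bounds obtained from the continuous relaxation over [0, 1].

```python
def relaxed_min(a, b):
    """Minimum of a*x**2 + b*x over 0 <= x <= 1, assuming a >= 0 (convex case)."""
    if a > 0:
        x = min(1.0, max(0.0, -b / (2.0 * a)))  # clip the unconstrained minimizer
        return a * x * x + b * x
    return min(0.0, b)  # linear case: the minimum sits at an endpoint


def f(x):
    # Hypothetical original objective (the "solid curve").
    return 4 * x * x - 3 * x


def f_d(x, d):
    # f(x) - d*(x^2 - x): the added term vanishes at x = 0 and x = 1.
    return (4 - d) * x * x + (d - 3) * x


# Both curves agree on the feasible set {0, 1}.
agree = all(f(x) == f_d(x, 3.0) for x in (0, 1))

binary_min = min(f(0), f(1))
original_bound = relaxed_min(4.0, -3.0)

# Crude grid search over d in [0, 4]; d <= 4 keeps f_d convex.
grid = [0.5 * k for k in range(9)]
best_bound, best_d = max((relaxed_min(4.0 - d, d - 3.0), d) for d in grid)

print(agree, binary_min, original_bound, best_bound, best_d)
```

In this toy instance the original relaxation gives the bound −0.5625, while a flattened curve with d ≥ 3 already attains the binary optimum 0. In higher dimensions the grid search is replaced by the SDP problems discussed in the following sections.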

We will explore the QCR technique on subclasses of problem (P) with simpler structures first and then generalize it step by step such that it can be applied to the general form of problem (P). In Sect. 4.2, we review QCR for binary quadratic programming problems. Two schemes are covered, with or without additional variables. In Sect. 4.3, we review QCR for linear equality constrained binary quadratic programming problems. In Sect. 4.4, we show step by step how we can apply QCR to the general form of problem (P). In Sect. 4.5, we extend QCR to semi-continuous quadratic programming problems. We conclude the review in Sect. 4.6.

Notation

In the remaining sections, we denote by v(⋅) the optimal value of problem (⋅), and by \(\mathbb{R}_{+}^{n}\) the nonnegative orthant of \(\mathbb{R}^{n}\). For any \(a \in \mathbb{R}^{n}\), we denote by \(\text{Diag}(a) = \text{Diag}(a_{1},\ldots,a_{n})\) the diagonal matrix with \(a_{i}\) being its ith diagonal element. We denote by e the all-one vector and by \(\mathbb{S}^{n}\) the set of n × n real symmetric matrices.

2 QCR for Binary Quadratic Programming

QCR was first applied to the following binary quadratic programming problem,

There are two main schemes, one with no additional variables and the other with additional variables.

2.1 QCR with No Additional Variables

The QCR scheme with no additional variables was pioneered by Hammer and Rubin (1970) and later improved by Plateau (2006) and Billionnet et al. (2008, 2009). Li et al. (2012) explored the geometry of QCR from another angle.

For any \(d \in \mathbb{R}^{n}\), the following problem is equivalent to problem (BQP):

By relaxing the binary constraint to a continuous constraint and noting that \(0 \leq x_{i} \leq 1\) is equivalent to \(x_{i}^{2} - x_{i} \leq 0\), we can write the continuous relaxation of (BQP(d)), denoted \((\overline{\text{BQP}}(d))\), as

We want to choose d such that the continuous relaxation of (BQP(d)) is a convex problem and the lower bound from this relaxation is as tight as possible. This can be done by solving the following problem,

Note that problem \((\overline{\text{BQP}}(d))\) is convex if and only if \(Q_{0} - \text{Diag}(d) \succeq 0\).
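The convexity condition and the equivalence on binary points can be verified by brute force on a small instance. The sketch below assumes that (BQP) minimizes \(x^{T}Q_{0}x + c_{0}^{T}x\) over \(x \in \{0,1\}^{n}\) and that the perturbed objective is \(x^{T}(Q_{0} - \text{Diag}(d))x + (c_{0} + d)^{T}x\), which is consistent with the convexity condition stated above; the data and the helper names are hypothetical.

```python
from itertools import product
from math import sqrt


def min_eig_2x2(M):
    """Smallest eigenvalue of a symmetric 2x2 matrix [[a, b], [b, c]]."""
    (a, b), (_, c) = M
    return (a + c) / 2.0 - sqrt(((a - c) / 2.0) ** 2 + b * b)


def quad(Q, c, x):
    """Evaluate x^T Q x + c^T x."""
    n = len(x)
    return (sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
            + sum(c[i] * x[i] for i in range(n)))


# Hypothetical data: Q0 is indefinite, so the plain relaxation is nonconvex.
Q0 = [[0.0, 1.0], [1.0, 0.0]]
c0 = [-1.0, -1.0]
d = [-1.0, -1.0]

# Perturbed data: subtract d_i * (x_i^2 - x_i), which is zero on binary points.
Qd = [[Q0[i][j] - (d[i] if i == j else 0.0) for j in range(2)] for i in range(2)]
cd = [c0[i] + d[i] for i in range(2)]

same_on_binary = all(
    quad(Q0, c0, x) == quad(Qd, cd, x) for x in product((0, 1), repeat=2)
)

print(same_on_binary, min_eig_2x2(Q0), min_eig_2x2(Qd))
```

Here the smallest eigenvalue moves from −1 for \(Q_{0}\) to 0 for \(Q_{0} - \text{Diag}(d)\), so this particular d yields a convex relaxation while leaving the objective unchanged at every binary point.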

Theorem 1 (Billionnet et al. 2009)

Problem (BQP_MAX_d) is equivalent to the following semi-definite programming (SDP) problem,

Proof

The Lagrangian dual of \((\overline{\text{BQP}}(d))\) is

which is equivalent to

for any \(\tau \in \mathbb{R}\). By noting

we obtain that \((\overline{\text{LBQP}}(d))\) is equivalent to

Note that the constraints of \((\overline{\text{BQP}}(d))\) satisfy the Slater condition since \((0.5,\ldots,0.5)^{T}\) is an interior point. If \(Q_{0} - \text{Diag}(d) \succeq 0\), then problem \((\overline{\text{BQP}}(d))\) is convex and from the no duality gap theory of convex programming (see, e.g., Proposition 6.5.6 in Bertsekas et al. 2003), we have \(\text{v}(\overline{\text{BQP}}(d)) = \text{v}(\overline{\text{LBQP}}(d))\). Thus (BQP_MAX_d) is equivalent to

which is further equivalent to problem (BQP_DSDP_d) by eliminating the variable α. □

In fact, by rewriting the binary constraint \(x \in \{0,1\}^{n}\) as \(x_{i}^{2} - x_{i} = 0\), i = 1, …, n, one can check that problem (BQP_DSDP_d) is the Lagrangian dual of problem (BQP).

By conic duality, the dual of problem (BQP_DSDP_d) is equivalent to the following SDP relaxation of problem (BQP), which is well known as Shor’s relaxation (Shor 1987),

2.2 QCR with Additional Variables

The QCR scheme with additional variables for binary quadratic programming problems can be found in Billionnet et al. (2012, 2013, 2015).

If we introduce new variables, the reformulation can be further strengthened. For any \(S \in \mathbb{S}^{n}\), the following problem is equivalent to (BQP),

By relaxing \(x \in \{0,1\}^{n}\) to \(0 \leq x_{i} \leq 1\), i = 1, …, n, we obtain the continuous relaxation of (BQP(S)) as follows:

which is equivalent to

where the constraint (4.1) includes the case of i = j. Note that the constraint Y ≤ 1 is implicit in the above problem, since \(Y_{ii} = x_{i}\) and \(Y_{ii} \geq x_{i} + x_{i} - 1\) together imply \(Y_{ii} \leq 1\), and thus \(Y_{ij} \leq x_{i} = Y_{ii} \leq 1\). The constraint \(0 \leq x_{i} \leq 1\) is also implicit in the above problem since \(x_{i} = Y_{ii}\).
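The role of the additional variables \(Y_{ij}\) can be illustrated with a short check. We assume here that the constraint (4.1) consists of the standard linearization inequalities \(Y_{ij} \leq x_{i}\), \(Y_{ij} \leq x_{j}\), \(Y_{ij} \geq x_{i} + x_{j} - 1\) and \(Y_{ij} \geq 0\); this is an assumption suggested by the inequalities quoted above rather than a statement of the exact formulation. Under this assumption, the sketch computes the interval of admissible values of \(Y_{ij}\) for given \(x_{i}, x_{j}\); the function name is hypothetical.

```python
from itertools import product


def y_interval(xi, xj):
    """Range of Y_ij allowed by the assumed linearization inequalities."""
    lower = max(0.0, xi + xj - 1.0)  # Y_ij >= 0 and Y_ij >= x_i + x_j - 1
    upper = min(xi, xj)              # Y_ij <= x_i and Y_ij <= x_j
    return lower, upper


# At binary points the interval collapses to the single value x_i * x_j.
binary_exact = all(
    y_interval(xi, xj) == (xi * xj, xi * xj)
    for xi, xj in product((0.0, 1.0), repeat=2)
)

# At a fractional point the interval is nondegenerate.
fractional = y_interval(0.5, 0.5)

print(binary_exact, fractional)
```

At binary points the inequalities force \(Y_{ij} = x_{i}x_{j}\), which is why the reformulation remains equivalent to (BQP); at fractional points they only bound \(Y_{ij}\), which is where the relaxation comes from.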

We want to choose S such that \((\overline{\text{BQP}}(S))\) is a convex problem and the lower bound from this relaxation is as tight as possible. This can be done by solving the following problem:

Theorem 2 (Billionnet et al. 2013)

Problem (BQP_MAX_S) is equivalent to the following semi-definite programming (SDP) problem,

where e is a column vector with all entries being 1, and \(\mathbb{N}^{n}\) represents the set of n × n nonnegative matrices.

Proof

The Lagrangian dual of \((\overline{\text{BQP}}(S))\) is as follows:

Note that the constraints of \((\overline{\text{BQP}}(S))\) satisfy the Slater condition. If \(Q_{0} - S \succeq 0\), then problem \((\overline{\text{BQP}}(S))\) is convex and from the no duality gap theory of convex programming, we have \(\text{v}(\overline{\text{BQP}}(S)) = \text{v}(\overline{\text{LBQP}}(S))\). Thus (BQP_MAX_S) is equivalent to

It is obvious that the above problem is further equivalent to (BQP_DSDP_S). □

The conic dual of problem (BQP_DSDP_S) is given as follows:

which is an SDP relaxation of problem (BQP) that is tighter than Shor’s relaxation.

3 QCR for Linear Equality Constrained Binary Quadratic Programming

In this section, we extend QCR to the following binary quadratic programming problem with linear equality constraints,

where A is an m × n matrix and \(b \in \mathbb{R}^{m}\). We consider only the scheme with no additional variables, which was proposed by Plateau (2006) and Billionnet et al. (2008, 2009).

For any \(d \in \mathbb{R}^{n},\ s \in \mathbb{R}^{m(m+1)/2}\), the following problem is equivalent to problem (EBQP):

By relaxing the binary constraint to a continuous constraint and noting that \(0 \leq x_{i} \leq 1\) is equivalent to \(x_{i}^{2} - x_{i} \leq 0\), we can represent the continuous relaxation of (EBQP(d, s)) as

We want to choose d and s such that \((\overline{\text{EBQP}}(d,s))\) is a convex problem and the lower bound from this relaxation is as tight as possible. This can be done by solving the following problem:

Using proofs similar to those in Sect. 4.2, we can show that (EBQP_MAX_ds) is equivalent to the following SDP problem,

The formulation (EBQP(d, s)) is parameterized by d and s. The dimension of s is m(m + 1)∕2, so the number of parameters grows quadratically with the number of linear equalities. In fact, we can achieve an equally tight reformulation with a single scalar parameter in place of s.

For any \(d \in \mathbb{R}^{n},\ w \in \mathbb{R}\), the following problem is equivalent to (EBQP):

We want to choose d and w such that the continuous relaxation of (EBQP(d, w)) is a convex problem and the lower bound from this relaxation is as tight as possible. This can be done by solving the following problem:

Similar to the last section, we have the following theorem.

Theorem 3 (Billionnet et al. 2012)

Problem (EBQP_MAX_dw) is equivalent to the following SDP problem:

The conic dual of problem (EBQP_DSDP_dw) is given as follows:

which is also an SDP relaxation of (EBQP).

It is shown in Faye and Roupin (2007) that problem (EBQP_PSDP_dw) has the same optimal value as the SDP problem (EBQP_PSDP_ds). Thus the reformulation (EBQP(d, w)) with fewer parameters is as good as the reformulation (EBQP(d, s)).
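To see how a single scalar parameter can exploit the equality constraints, consider a toy instance. The sketch below assumes that the reformulated objective adds a term of the form \(w\|Ax - b\|^{2}\), which vanishes whenever Ax = b; the exact form and sign convention used in (EBQP(d, w)) are not reproduced in this text, so this is only an illustration, and the data and helper names are hypothetical.

```python
from itertools import product
from math import sqrt

# Hypothetical instance with one equality constraint x1 + x2 = 1.
A = [1.0, 1.0]
b = 1.0
Q0 = [[0.0, -1.0], [-1.0, 0.0]]  # indefinite
c0 = [1.0, 2.0]
w = 1.0


def objective(x):
    """x^T Q0 x + c0^T x."""
    return (sum(Q0[i][j] * x[i] * x[j] for i in range(2) for j in range(2))
            + sum(c0[i] * x[i] for i in range(2)))


def penalized(x):
    """Objective plus w * (A x - b)^2, which is zero on the feasible set."""
    residual = sum(A[i] * x[i] for i in range(2)) - b
    return objective(x) + w * residual * residual


def min_eig_2x2(M):
    (a, off), (_, c) = M
    return (a + c) / 2.0 - sqrt(((a - c) / 2.0) ** 2 + off * off)


feasible = [x for x in product((0, 1), repeat=2)
            if sum(A[i] * x[i] for i in range(2)) == b]
same_on_feasible = all(objective(x) == penalized(x) for x in feasible)

# Quadratic part of the penalized objective: Q0 + w * A^T A.
Qw = [[Q0[i][j] + w * A[i] * A[j] for j in range(2)] for i in range(2)]

print(feasible, same_on_feasible, min_eig_2x2(Q0), min_eig_2x2(Qw))
```

In this instance the penalty alone turns the indefinite \(Q_{0}\) into the identity matrix, so the relaxation becomes convex even with d = 0, while the objective values at the feasible binary points (1, 0) and (0, 1) are unchanged.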

4 Generalization of QCR to MIQCQP

In this section we extend QCR to general MIQCQP problems step by step using the techniques derived in Sects. 4.2 and 4.3.

4.1 QCR for Binary Quadratically Constrained Quadratic Programming

We first consider binary quadratic programming problems with quadratic constraints:

For any \(d_{0},d_{1},\ldots,d_{m} \in \mathbb{R}^{n}\), the following problem is equivalent to problem (BQCQP):

For any \(S_{0},S_{1},\ldots,S_{m} \in \mathbb{S}^{n}\), the following problem is equivalent to (BQCQP),

Using the same methods as in Sects. 4.2 and 4.3, one can derive SDP problems to solve for the best parameters d for problem (BQCQP(d)) and S for problem (BQCQP(S)). Note that in this case we also need to maintain the convexity in the constraints.

4.2 QCR for Mixed-Binary Quadratic Programming

In this section we consider the following problem with continuous and binary variables,

where we assume that, after fixing the values of \(x_{i}\), \(i \in I\), the remaining problem is convex.

For any \(s_{ij} \in \mathbb{R},\ (i,j) \in I \times \{ 1,\ldots,n\}\), the following problem is equivalent to (MBQP),

Using the same methods as in Sects. 4.2 and 4.3, one can derive SDP problems to solve for the best parameters s for problem (MBQP(s)).

4.3 QCR for MIQCQP

The final step in extending QCR to the general form of problem (P) is to employ binary expansion of the integer variables. Each variable \(x_{i} \in \mathbb{Z},\ 0 \leq x_{i} \leq u_{i},\) can be replaced by its unique binary decomposition:

Then we can apply all the results in previous sections to the “binarized” problem.
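A minimal sketch of the binary expansion step is given below. It assumes the usual powers-of-two expansion \(x_{i} = \sum_{k} 2^{k} t_{ik}\) with \(t_{ik} \in \{0,1\}\); the function names are hypothetical.

```python
def num_bits(u):
    """Number of binary variables needed to represent integers in [0, u]."""
    return max(1, u.bit_length())


def to_bits(x, u):
    """Binary digits (least significant first) of an integer x with 0 <= x <= u."""
    if not 0 <= x <= u:
        raise ValueError("x must lie in [0, u]")
    return [(x >> k) & 1 for k in range(num_bits(u))]


def from_bits(bits):
    """Recover the integer value sum_k 2^k * t_k."""
    return sum(t << k for k, t in enumerate(bits))


u = 10
round_trip = all(from_bits(to_bits(x, u)) == x for x in range(u + 1))

# With 4 bits the patterns encode 0..15, so the bound x <= u must be kept
# as an explicit constraint in the binarized problem.
largest_encodable = from_bits([1] * num_bits(u))

print(num_bits(u), to_bits(5, u), round_trip, largest_encodable)
```

Each integer variable with upper bound \(u_{i}\) is thus replaced by roughly \(\log_{2} u_{i}\) binary variables, which is the source of the growth in problem size discussed in the next subsection.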

4.4 Compact QCR for MIQCQP

Using simple binary expansion as in Sect. 4.4.3 could blow up the problem size very quickly. This is especially true for problems driven by a massive amount of “big data”. Billionnet et al. (2012, 2013, 2015) proposed a relatively compact formulation. Consider the following integer quadratically constrained quadratic programming problem,

For any \(S_{0},S_{1},\ldots,S_{m} \in \mathbb{S}^{n}\), the following problem is equivalent to (IQCQP),

The method for extending this formulation to mixed-integer problems can be found in Billionnet et al. (2015).

4.5 With or Without Additional Variables

In Sect. 4.2, we presented two schemes, one with additional variables and the other without additional variables. In fact, all the reformulations we considered can have the corresponding two schemes. A natural question is: which scheme is better? The scheme with additional variables has tighter reformulations but involves more variables. The trade-off could be problem-specific. Billionnet et al. (2013) showed that the scheme without additional variables could be more efficient in terms of overall computation time for general problem (P).

5 QCR for Semi-Continuous Quadratic Programming

The QCR in previous sections exploits the structures of binary variables and linear equality constraints to derive tighter reformulations. In this section, we look at another structure involving semi-continuous variables.

When modeling real-world optimization problems, managerial and technological considerations often require a decision variable to exceed a certain threshold whenever it is nonzero. Such variables are termed semi-continuous variables. Mathematically, semi-continuous variables can be defined as \(x_{i} \in \{0\} \cup [a_{i},b_{i}]\), where \(a_{i} < b_{i}\) for i = 1, …, n. Using binary variables, semi-continuous variables can be expressed by a set of mixed-integer 0-1 linear constraints:
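A common way to write such constraints, assumed here because the display is not reproduced in this text, is \(a_{i}y_{i} \leq x_{i} \leq b_{i}y_{i}\) with \(y_{i} \in \{0,1\}\). The sketch below checks on a few sample values that this 0-1 description agrees with the set definition; the function names and the sample data are hypothetical.

```python
def is_semicontinuous(x, a, b):
    """Direct definition: x lies in {0} united with [a, b]."""
    return x == 0 or a <= x <= b


def has_binary_certificate(x, a, b):
    """True if some y in {0, 1} satisfies a*y <= x <= b*y."""
    return any(a * y <= x <= b * y for y in (0, 1))


a, b = 1.0, 4.0
samples = [0.0, 0.5, 1.0, 2.5, 4.0, 4.5, -1.0]

agree = all(
    is_semicontinuous(x, a, b) == has_binary_certificate(x, a, b)
    for x in samples
)
admissible = [x for x in samples if has_binary_certificate(x, a, b)]

print(agree, admissible)
```

Setting y = 0 forces x = 0, while y = 1 restricts x to [a, b], so the binary variable simply records whether the variable is switched on.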

Let us consider the following semi-continuous quadratic programming problem,

Wu et al. (2016) proposed the following equivalent reformulation,

for any \(u,v \in \mathbb{R}^{n}\). They showed that the quadratic function

is the most general quadratic function that can be added to the objective function.

We want to choose u, v such that \((\overline{\text{SQP}}(u,v))\) is a convex problem and the lower bound from this relaxation is as tight as possible. This can be done by solving the following problem:

Following the approaches in Sects. 4.2 and 4.3, we can derive an SDP problem to solve for the best parameters u, v for problem (SQP(u, v)).

Theorem 4 (Wu et al. 2016)

Problem (SQP_MAX_uv) is equivalent to the following SDP problem:

where

6 Concluding Remark

The quadratic convex reformulation (QCR) approach for solving mixed-integer quadratically constrained quadratic programming (MIQCQP) problems is very effective. Its goal is to find a tight and efficient equivalent reformulation, by adding quadratic functions that vanish in the feasible region to the objective function and the constraints. We have reviewed recent advances in the QCR approach. By exploring the QCR technique on subclasses of MIQCQP problems with simpler structures first, we are able to generalize the approach step by step such that it can be applied to general MIQCQP problems. We have also covered the recent extension of QCR on semi-continuous quadratic programming problems. In a broad picture, the QCR approach provides a very effective solution framework for solving MIQCQP problems.

As the problem size of the NP-hard MIQCQP problems increases in the “big data” era, the QCR approach is likely to become increasingly important. This is because finding the best reformulation in the QCR approach reduces to an SDP problem, which is a convex problem that can be solved in polynomial time. Armed with the optimized reformulation, better approximations and heuristics can be developed.

In the future, the QCR approach can be further generalized according to different practical structures in the objective function and the constraints. The integration of the QCR approach with other solution techniques for MIQCQP problems is also an interesting research direction.

References

A. Ahlatçıoğlu, M. Bussieck, M. Esen, M. Guignard, J. Jagla, A. Meeraus, Combining QCR and CHR for convex quadratic pure 0–1 programming problems with linear constraints. Ann. Oper. Res. 199 (1), 33–49 (2012)

D. Bertsekas, A. Nedić, A. Ozdaglar, Convex Analysis and Optimization (Athena Scientific, Belmont, 2003)

A. Billionnet, S. Elloumi, M. Plateau, Quadratic 0–1 programming: tightening linear or quadratic convex reformulation by use of relaxations. RAIRO Oper. Res. 42 (02), 103–121 (2008)

A. Billionnet, S. Elloumi, M. Plateau, Improving the performance of standard solvers for quadratic 0-1 programs by a tight convex reformulation: the QCR method. Discret. Appl. Math. 157 (6), 1185–1197 (2009)

A. Billionnet, S. Elloumi, A. Lambert, Extending the QCR method to general mixed-integer programs. Math. Program. 131 (1–2), 381–401 (2012)

A. Billionnet, S. Elloumi, A. Lambert, An efficient compact quadratic convex reformulation for general integer quadratic programs. Comput. Optim. Appl. 54 (1), 141–162 (2013)

A. Billionnet, S. Elloumi, A. Lambert, Exact quadratic convex reformulations of mixed-integer quadratically constrained problems. Math. Program. 158 (1), 235–266 (2015)

Y. Cui, Dynamic programming algorithms for the optimal cutting of equal rectangles. Appl. Math. Model. 29 (11), 1040–1053 (2005)

A. Faye, F. Roupin, Partial Lagrangian relaxation for general quadratic programming. 4OR 5 (1), 75–88 (2007)

A. Frangioni, C. Gentile, Perspective cuts for a class of convex 0–1 mixed integer programs. Math. Program. 106, 225–236 (2006)

A. Frangioni, C. Gentile, E. Grande, A. Pacifici, Projected perspective reformulations with applications in design problems. Oper. Res. 59, 1225–1232 (2011)

H.L. Fu, C.L. Shiue, X. Cheng, D.Z. Du, J.M. Kim, Quadratic integer programming with application to the chaotic mappings of complete multipartite graphs. J. Optim. Theory Appl. 110 (3), 545–556 (2001)

J. Gao, D. Li, Cardinality constrained linear-quadratic optimal control. IEEE Trans. Autom. Control 56, 1936–1941 (2011)

J. Gao, D. Li, Optimal cardinality constrained portfolio selection. Oper. Res. 61, 745–761 (2013)

J. Gao, D. Li, A polynomial case of the cardinality-constrained quadratic optimization problem. J. Glob. Optim. 56, 1441–1455 (2013)

I. Grossmann, N. Sahinidis, Special issue on mixed integer programming and its application to engineering. Part I/II. Optim. Eng. 136–141 (2002)

P. Hammer, A. Rubin, Some remarks on quadratic programming with 0-1 variables. RAIRO Oper. Res. (Recherche Opérationnelle) 4 (V3), 67–79 (1970)

Z. Hua, P. Banerjee, Aggregate line capacity design for PWB assembly systems. Int. J. Prod. Res. 38 (11), 2417–2441 (2000)

D. Li, X. Sun, Nonlinear integer programming, vol. 84 (Springer, New York, 2006)

D. Li, X. Sun, J. Wang, Optimal lot solution to cardinality constrained mean-variance formulation for portfolio selection. Math. Financ. 16, 83–101 (2006)

D. Li, X. Sun, C. Liu, An exact solution method for unconstrained quadratic 0-1 programming: a geometric approach. J. Glob. Optim. 52 (4), 797–829 (2012)

G. Mitra, F. Ellison, A. Scowcroft, Quadratic programming for portfolio planning: insights into algorithmic and computational issues. Part II: processing of portfolio planning models with discrete constraints. J. Asset Manage. 8, 249–258 (2007)

M. Plateau, Reformulations quadratiques convexes pour la programmation quadratique en variables 0-1. Ph.D. Thesis, Conservatoire National des Arts et Métiers (2006)

F. Rendl, G. Rinaldi, A. Wiegele, Solving max-cut to optimality by intersecting semidefinite and polyhedral relaxations. Math. Program. 121 (2), 307–335 (2010)

N. Shor, Quadratic optimization problems. Sov. J. Comput. Syst. Sci. 25, 1–11 (1987)

B. Wu, X. Sun, D. Li, X. Zheng, Quadratic convex reformulations for semi-continuous quadratic programming. Working Paper, The Chinese University of Hong Kong (2016)

X. Zheng, X. Sun, D. Li, Improving the performance of MIQP solvers for quadratic programs with cardinality and minimum threshold constraints: a semidefinite program approach. INFORMS J. Comput. 26 (4), 690–703 (2014)

Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Wu, B., Jiang, R. (2017). Quadratic Convex Reformulations for Integer and Mixed-Integer Quadratic Programs. In: Choi, TM., Gao, J., Lambert, J., Ng, CK., Wang, J. (eds) Optimization and Control for Systems in the Big-Data Era. International Series in Operations Research & Management Science, vol 252. Springer, Cham. https://doi.org/10.1007/978-3-319-53518-0_4

DOI: https://doi.org/10.1007/978-3-319-53518-0_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-53516-6

Online ISBN: 978-3-319-53518-0

eBook Packages: Business and Management (R0)