Abstract

One of the most important tasks that humans face is communication in complex, noisy acoustic environments. In this chapter, the focus is on populations of children and adult listeners who suffer from hearing loss and are fitted with cochlear implants (CIs) and/or hearing aids (HAs) in order to hear. The clinical trend is to provide patients with the ability to hear in both ears. This trend to stimulate patients in both ears has stemmed from decades of research with normal-hearing (NH) listeners, demonstrating the importance of binaural and spatial cues for segregating multiple sound sources. There are important effects due to the type of stimuli used, testing parameters, and auditory task utilized. The review of research in hearing impaired populations notes auditory cues that are potentially available to users of CIs and HAs. In addition, there is discussion of limitations resulting from the ways that devices handle auditory cues, auditory deprivation, and other factors that are inherently problematic for these patients.

10.1 Introduction

One of the most important tasks that humans face is communication in complex, noisy acoustic environments. As the many chapters in this book focus on how normal-hearing (NH) listeners deal with the “cocktail party problem,” here the focus is on particular populations of listeners who suffer from hearing loss and are fitted with cochlear implants (CIs) and/or hearing aids (HAs) in order to hear. The clinical trend is to provide patients with the ability to hear in both ears and has stemmed from decades of research with NH listeners, demonstrating the importance of binaural and spatial cues for segregating multiple sound sources. There are important effects due to the type of stimuli used, testing parameters, and auditory task utilized. Although much of the research was originally conducted with adults, recently different investigators have adapted testing methods appropriate for children. Those studies are thus able to gauge the impact of hearing loss and the potential benefits of early intervention on the development of the ability to segregate speech from noise. The review of research in hearing impaired populations notes auditory cues that are potentially available to users of CIs and HAs. In addition, there is discussion of limitations due to the ways that devices handle auditory cues, auditory deprivation, and other factors that are inherently problematic for these patients.

The overall goal of clinically motivated bilateral stimulation is to provide patients with the auditory information necessary for sound localization and for functioning in cocktail party or other complex auditory environments. When adults with hearing loss are concerned, there is emphasis on minimizing social isolation and maximizing the ability to orient in the environment without exerting undue effort. In the case of children, although these aforementioned goals are also relevant, there are concerns regarding success in learning environments that are notoriously noisy, and significant discussion regarding the need to preserve hearing in both ears because future improved stimulation approaches will be most ideally realized in people whose auditory system has been stimulated successfully in both ears.

In the forthcoming pages, results from studies in adults and children are described, focusing on their ability to hear target speech in the presence of “other” sounds. In the literature those “other” sounds have been referred to, sometimes interchangeably, as maskers, interferers, or competitors. Here the term interferers is used because the context of the experiments is that of assessing the interference that background sounds have on the ability of hearing impaired individuals to communicate. It has long been known that spatial separation between target speech and interferers can lead to improved speech understanding. A common metric in the literature that is used here is “spatial release from masking” (SRM), the measured advantage gained from the spatial separation of targets and interferers; it can be quantified as change in percent correct of speech understanding under conditions of spatial coincidence versus spatial separation, or as change in speech reception thresholds (SRTs) under those conditions. The SRM can be relatively small when target and interferers have already been segregated by other cues, such as differing voice pitches. However, the SRM is particularly large when the interferer is similar to the target (e.g., two same-sex talkers with approximately the same voice pitch) and few other segregation cues are available. In such conditions, the listener must rely on spatial cues to segregate the target from the interferer. SRM is also particularly large for NH listeners when the interferer consists of multiple talkers as would occur in a realistic complex auditory environment (Bronkhorst 2000; Hawley et al. 2004; Jones and Litovsky 2011). For example, SRM can be as high as a 12-dB difference in SRTs under binaural conditions, especially when multiple interferers are present and the interferers and target speech are composed of talkers that can be confused with one another. 
SRM can be as low as a 1- to 2-dB difference in SRT under conditions when binaural hearing is not available. An important note regarding SRM and “real world” listening conditions is that the advantage of spatial separation is reduced in the presence of reverberation, whereby the binaural cues are smeared and thus the locations of the target and interferers are not easily distinguishable (Lavandier and Culling 2007; Lee and Shinn-Cunningham 2008).

In this chapter, results from studies in adults with CIs and with HAs are reviewed first, followed by results from children with CIs and with HAs.

10.2 Adults at the Cocktail Party

10.2.1 Factors that Limit Performance

Listening in cocktail parties is generally more of a challenge for listeners who suffer from hearing loss than for listeners with an intact auditory system. The limitations experienced by hearing impaired individuals generally can be subdivided into two main categories. One set of factors is the biological nature of the auditory system in a patient who has had sensorineural hearing loss, suffered from auditory deprivation, and has been stimulated with various combinations of acoustic and/or electric hearing. A second set of factors is the nature of the device(s) that provide hearing to the patient, whether electric or acoustic in nature. Although CIs and HAs aim to provide sound in ways that mimic natural acoustic hearing as much as possible, the reality is that these devices have limitations, and those limitations are likely to play a role in the patients’ ability to function in complex listening situations.

10.2.2 Physiological Factors that Limit Performance in Hearing Impaired Individuals

Hearing impairment can arise from a number of different factors, some of which are hereditary, some that are acquired (such as ototoxicity, noise exposure, etc.), and others that have unknown causes. In some patients, these factors can result in hearing loss that is single sided or asymmetric across the ears. In other patients, the hearing loss can be more symmetric. It is important to consider the extent of hearing loss and change over time, as many patients undergo progressive, continued loss of hearing over months or years. For each patient, access to useful auditory information will be affected by access to sound during development and by the health of the auditory system. Finally, the extent to which binaural cues are available to the patient is one major factor that will likely determine their success with performing spatial hearing tasks. Research to date has attempted to identify the exact level within the auditory system at which the availability of binaural cues is most important, but ongoing work is needed to address this important issue.

For patients who use CIs, additional factors need to be considered. Owing to their diverse and complex hearing histories, CI users are generally a more variable group than NH listeners. Longer periods of auditory deprivation between the onset of deafness and implantation can lead to atrophy, and possibly poor ability of the auditory neurons to process information provided by auditory input. The condition of the auditory neural pathway can be affected by several other factors as well, including age, etiology of deafness, and experience with HAs or other amplification. Another factor that applies to both CI users and other hearing impaired populations is that poor innervation can result in decreased audibility and frequency selectivity, and can manifest as spectral dead regions, or “holes in hearing” (e.g., Moore and Alcántara 2001). These holes can lead to difficulty understanding certain speech sounds, a need for increased stimulation levels, and discrepancies in frequency information between the ears (Shannon et al. 2002). Neural survival is difficult to identify and even harder to control for in CI users.

The delicate nature of CI surgery can introduce further variability and complications that affect binaural processing. The insertion depth of the electrode into the cochlea is known to be variable (Gstoettner et al. 1999) and can be difficult to ascertain. Because frequencies are mapped along the length of the cochlea, with low frequencies near the apex, shallow insertion depths can truncate middle or low frequency information. This has been shown to reduce speech recognition (Başkent and Shannon 2004). In the case of bilateral cochlear implants (BICIs), differing insertion depths between the ears can lead to an interaural mismatch in perceived frequencies. Using CI simulations, this has been shown to negatively affect binaural benefit with speech recognition (Siciliano et al. 2010) and to degrade the reliability of binaural cues such as interaural time differences (ITDs) and interaural level differences (ILDs) (Kan et al. 2013, 2015). In addition, the distance between the electrode array and the modiolus (central pillar of the cochlea) is not uniform along the cochlea or identical between the ears. For electrodes that are located farther from the modiolus, high levels of stimulation are sometimes needed to elicit auditory sensation. These high stimulation levels excite a wider range of neurons, causing a broader spread of excitation along the length of the cochlea; again, using simulations of CIs, this has been shown to reduce frequency selectivity and word recognition (Bingabr et al. 2008) and may be detrimental to binaural processing abilities.

10.2.3 Devices

10.2.3.1 Cochlear Implants

For patients with a severe to profound hearing loss, the multichannel CI is becoming the standard of care for providing access to sound. For children, CIs have been particularly successful at providing access to acquisition of spoken language and oral-auditory communication. The CI is designed to stimulate the auditory nerve and to bypass the damaged cochlear hair cell mechanism. A full review of the CI speech processor and the internal components that are surgically implanted into the patient is beyond the scope of this chapter (see Loizou 1999; Zeng et al. 2011). However, the important points are summarized as follows. In a CI sound processor, a bank of bandpass filters separates the incoming signal into a small number of frequency bands (ranging from 12 to 22), from which the envelope of each band is extracted. These envelopes are used to modulate the amplitudes of electrical pulse trains that are presented to electrodes at frequency-specific cochlear loci. In general, uniform pulse rates across the channels have been used in speech processing strategies, although some recent advances have led to mixed-rate approaches (Hochmair et al. 2006; Churchill et al. 2014) whose success is yet to be determined. By their basic design, current speech-coding strategies in clinical CI processors eliminate the temporal fine structure in the signal and focus on transmitting information provided by the time-varying envelope at each bandpassed channel. The result is loss of spectral resolution and temporal fine-structure cues, both of which are important for binaural hearing. In addition, CIs typically work independently of each other and act as unsynchronized monaural signal processors. This independent processing can distort the transmission of binaural cues, which are beneficial for sound localization and speech understanding in noise.
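
The envelope-based processing described above can be illustrated with a minimal sketch. It is not the algorithm of any clinical processor: the brick-wall FFT filters, the log-spaced band edges, the 200-Hz smoothing, and all function names (`bandpass_fft`, `envelope`, `channel_envelopes`) are assumptions chosen for brevity. The sketch stops at the channel envelopes; in a real device these would go on to modulate electrical pulse trains.

```python
import numpy as np

def bandpass_fft(signal, fs, lo_hz, hi_hz):
    """Crude brick-wall bandpass via the FFT (illustrative, not a clinical filter)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[(freqs < lo_hz) | (freqs >= hi_hz)] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))

def envelope(band, fs, cutoff_hz=200.0):
    """Envelope by full-wave rectification followed by a moving-average smoother.

    Rectifying and smoothing discards the temporal fine structure of the band.
    """
    win = max(1, int(round(fs / cutoff_hz)))
    kernel = np.ones(win) / win
    return np.convolve(np.abs(band), kernel, mode="same")

def channel_envelopes(signal, fs, n_channels=12, f_lo=200.0, f_hi=7000.0):
    """Split the signal into log-spaced bands and return one envelope per band.

    The band edges (200 to 7000 Hz) are hypothetical values for illustration.
    """
    edges = np.geomspace(f_lo, f_hi, n_channels + 1)
    return np.stack([
        envelope(bandpass_fft(signal, fs, lo, hi), fs)
        for lo, hi in zip(edges[:-1], edges[1:])
    ])
```

For a pure tone, only the channel whose band contains the tone frequency carries a substantial envelope. Because each ear's processor would run such a chain independently, nothing in this sketch preserves interaural timing, which is consistent with the limitation noted in the text.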

Several factors could limit the amount of SRM achieved by BICI listeners. First, the envelope encoding employed by most CI speech coding strategies removes usable fine-structure information (Loizou 2006), which limits the ability of CIs to convey the potent low-frequency ITDs (Wightman and Kistler 1992; Macpherson and Middlebrooks 2002) that are likely to be important for SRM (Ihlefeld and Litovsky 2012). Second, even if the fine-structure cues were available, the fact that CIs do not have obligatory synchronization of the stimulation between the ears results in poor or improper encoding of ITD cues. Third, it is difficult to represent binaural cues with fidelity for complex stimuli such as those that occur in natural everyday situations. Speech stimuli are dynamic, with ongoing changes in spectral and temporal information, rendering the encoding of binaural cues for speech sounds extremely difficult. An additional issue is that cochlear spread with monopolar stimulation, which is nearly 5 mm (Nelson et al. 2008), is likely to have further detrimental effects on binaural encoding of complex stimuli. Recent work has shown that when multiple binaural channels receive carefully controlled ITDs, CI users are able to extract this information with little interference across electrodes. The effectiveness of this approach for improving SRM remains to be better understood. The modulations in speech envelopes may also distort binaural cues (Goupell et al. 2013; Goupell 2015; Goupell and Litovsky 2015); amplitude modulations affect the loudness of stimuli, and the range of perceived loudness is not necessarily similar at all the electrodes across the electrode array within each ear or across the ears. Currently, CI processors and mapping consider only threshold and comfortable levels, and apply a generic compression function for all electrodes. As a modulated stimulus, such as speech, varies in instantaneous amplitude, distorted ILDs are produced. 
In summary, binaural cues presented through clinical processors are likely distorted by multiple mechanisms, which could in turn reduce perceived separation between targets and interferers in situations in which BICI listeners might otherwise benefit from SRM.

10.2.3.2 Hearing Aids

For patients with some usable residual hearing, the standard of care has been the prescription of HAs. The purpose of an HA is to partially compensate for the loss in sensitivity due to cochlear damage by the amplification of select frequencies. For listening in noisy situations, the amount of amplification that can be provided by HAs is typically limited by feedback issues, patient comfort, and audible dynamic range. Most modern HAs are digital, which means that the amplification stage is done through digital signal processing rather than analog electronic circuits.

Up to the 1990s, most HAs worked as linear amplifiers. However, loudness recruitment and the reduced dynamic range of patients with hearing impairment limited the usefulness of these HAs. Automatic gain control (AGC) systems, or compression, are now used in HAs to reduce the range of incoming sound levels before amplification to better match the dynamic range of the patient. At low input levels, the gain (ratio between the output and input levels) applied to the incoming signal is independent of input level. At high input levels, the gain decreases with increasing input level; that is, the input signal is compressed. For a more comprehensive overview of compression systems, see Kates and Arehart (2005). The use of compression in HAs has generally provided positive results compared to linear amplification. However, different implementations of compression can have different consequences on performance, and there is no consensus on the best way to implement compression in HAs (Souza 2002).
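
The input-output behavior described above can be sketched as a static compression rule expressed in decibels, where gain is the difference between output and input level. The knee point, linear gain, and compression ratio below are hypothetical values, and real AGC systems also have attack and release dynamics that this sketch ignores.

```python
def agc_gain_db(input_db, knee_db=50.0, linear_gain_db=30.0, ratio=3.0):
    """Gain in dB for a given input level under simple static compression.

    All default parameter values are illustrative assumptions.
    """
    if input_db <= knee_db:
        # Below the knee, gain is independent of input level.
        return linear_gain_db
    # Above the knee, output grows by 1/ratio dB per input dB, so gain falls.
    return linear_gain_db - (input_db - knee_db) * (1.0 - 1.0 / ratio)

def output_db(input_db, **kwargs):
    """Output level in dB: input level plus the level-dependent gain."""
    return input_db + agc_gain_db(input_db, **kwargs)
```

With these assumed settings, a 30-dB increase in input above the knee produces only a 10-dB increase in output, which is how compression fits a wide range of input levels into a reduced dynamic range.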

10.3 Adults with Cochlear Implants

10.3.1 Availability of Spatial Cues

When listening to speech in quiet environments, adults with CIs can perform relatively well. However, listening to speech in a quiet environment is highly unrealistic, especially for listeners who spend much of their day communicating in environments with multiple auditory sources. Anecdotal reports, as well as research findings, clearly demonstrate that listening in noisy environments can be challenging for CI users even if they perceive themselves to be functioning very well with their devices.

In the context of controlled research environments, research has been aimed at simulating aspects of realistic auditory environments. The difficulty experienced by listeners in extracting meaningful information from sources of interest results in reduced access to information in the target auditory source. Auditory interference is often attributed to either the energy in the interferers or the information that the interferers carry (Culling and Stone, Chap. 3; Kidd and Colburn, Chap. 4). The former is thought to occur at the level of the peripheral auditory system when the target and interfering stimuli overlap in the spectral and temporal domains. The latter is thought to occur more centrally within the auditory system and is due to auditory and nonauditory mechanisms. The definitions and auditory mechanisms involved in these phenomena are more controversial within the field of psychoacoustics, but informational effects are often attributed to uncertainty of which stimulus to attend to and/or similarity between the target and interfering stimuli (Durlach et al. 2003; Watson 2005).

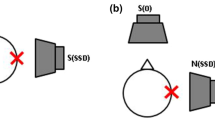

One way to improve the perceptual clarity of a target signal and to provide subjects with greater access to the content of the speech is to separate the target spatially from the interfering sources. Figure 10.1 shows three configurations, where the target is always at 0° in front, and the interfering sounds are either also in front or on the side. This figure is intended to provide a summary of the auditory cues that are typically available for source segregation in these listening situations. To quantify the magnitude of SRM, performance is measured (e.g., percent correct speech understanding) in conditions with the target and interferer either spatially separated or co-located. A positive SRM, for example, would be indicated by greater percent correct in the separated condition than the co-located condition. SRM can also be measured by comparing SRTs for the co-located versus separated conditions, and in that case positive SRM would be reflected by lower SRTs in the separated condition.
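
The two ways of quantifying SRM described above amount to simple differences. The sketch below uses hypothetical function names, and the sign conventions follow the text: positive SRM means better performance with spatial separation.

```python
def srm_from_srt(srt_colocated_db, srt_separated_db):
    """SRM in dB from speech reception thresholds.

    Lower SRTs are better, so a lower SRT in the separated condition
    yields a positive SRM.
    """
    return srt_colocated_db - srt_separated_db

def srm_from_percent_correct(pc_colocated, pc_separated):
    """SRM in percentage points from percent-correct scores.

    Higher scores are better, so a higher score in the separated
    condition yields a positive SRM.
    """
    return pc_separated - pc_colocated
```

For example, made-up SRTs of -2 dB (co-located) and -8 dB (separated) would correspond to an SRM of 6 dB.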

SRM relies on effects due to differences in the locations of the interferers relative to the location of the target, while holding constant unilateral or bilateral listening conditions. In contrast, other related effects that have been investigated result from comparing performance between unilateral and bilateral listening conditions. Figure 10.2 shows these three effects, widely known as the head shadow, binaural squelch, and binaural summation. The head shadow effect results from monaural improvement in signal-to-noise ratio (SNR) of the target speech. A measured improvement in speech understanding is due to the fact that the head casts an acoustic shadow on the interferer at the ear that is farther (on the opposite side of the head) from the interferer. In Fig. 10.2 (top), the left ear is shadowed, meaning that the intensity of the interferer is attenuated by the head before reaching the left ear. For this condition, the target speech in the left ear has a better SNR than that in the right ear. The second effect, known as squelch (Fig. 10.2, middle), refers to the advantage of adding an ear with a poorer SNR compared with conditions in which listening occurs only with an ear that has a good SNR. Binaural squelch is considered to be positive if performance is better in the binaural condition than in the monaural condition, despite having added an ear with a poor SNR. The third effect, binaural summation (Fig. 10.2, bottom), results from the auditory system receiving redundant information from both ears, and is effectively demonstrated when SRTs are lower when both ears are activated compared to when only one ear is activated. These effects will be considered in the following sections of the chapter in relation to the research conducted with hearing impaired individuals.
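
As a rough sketch of how these three effects could be computed from SRTs, the code below assumes four hypothetical listening conditions: one ear or both ears, each with the interferer either in front (co-located with the target) or on the side opposite the ear used in the monaural condition. The condition names and the specific subtractions are assumptions for illustration; individual studies define the comparisons in slightly different ways.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SRTs:
    """SRTs in dB for four hypothetical conditions (lower is better)."""
    monaural_front: float  # one ear, interferer co-located in front
    monaural_side: float   # same ear, interferer on the far side (ear is shadowed)
    binaural_front: float  # both ears, interferer in front
    binaural_side: float   # both ears, interferer on the side

def head_shadow(s: SRTs) -> float:
    """Monaural benefit from moving the interferer to the far side of the head."""
    return s.monaural_front - s.monaural_side

def squelch(s: SRTs) -> float:
    """Benefit of adding the ear with the poorer SNR to the better-SNR ear."""
    return s.monaural_side - s.binaural_side

def summation(s: SRTs) -> float:
    """Benefit of two ears over one when target and interferer are co-located."""
    return s.monaural_front - s.binaural_front
```

A usage example with made-up values (not data from any study): `SRTs(0.0, -6.0, -1.5, -7.0)` gives a head shadow of 6 dB, squelch of 1 dB, and summation of 1.5 dB. Positive values indicate a benefit under each definition.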

10.3.2 Binaural Capabilities of Adult BICI Users

The main goal of BICIs is to provide CI users with access to spatial cues. One way to evaluate success of BICIs is to determine whether patients who listen with two CIs gain benefit on tasks that measure SRM. Numerous studies have shown that head shadow and summation occur in BICI users. However, compared with NH listeners, there is a diminished amount of squelch and of unmasking due to binaural processing per se. Although this chapter focuses on SRM, it is worthwhile to pay attention to binaural capabilities in the subject population of interest, because diminished capacities in binaural sensitivity are likely to be related to reduction in unmasking of speech that occurs when binaural cues are readily available.

Much of the research on binaural capabilities of adults with BICIs has been performed with BICI listeners who have developed a typical auditory system because they were born with typical hearing and lost hearing after acquisition of language. One assumption that could be made is that these listeners have a fully intact and functioning central auditory system and that the problems of encoding the auditory stimulus are limited to the periphery. This is in contrast to prelingually deafened BICI listeners, in whom it is unclear if the correct development of the binaural system has occurred (Litovsky et al. 2010).

10.3.3 Sound Localization

A prerequisite for achieving SRM might be to perceive the multiple sound sources at different locations. This perception is produced by the ITDs and ILDs in the horizontal plane, and location-specific spectral cues in the vertical plane (Middlebrooks and Green 1990). When using clinical processors, adult BICI listeners can perceive different locations in the horizontal plane, and generally localize better when listening through two CIs versus one CI (Litovsky et al. 2012). In general, performance is better than chance when listening either in quiet (van Hoesel and Tyler 2003; Seeber and Fastl 2008) or in background noise (Kerber and Seeber 2012; Litovsky et al. 2012), though it seems that BICI listeners are relying more on ILDs than ITDs to localize sounds (Seeber and Fastl 2008; Aronoff et al. 2010). This is in contrast to NH listeners who weight ITDs more heavily than ILDs for sound localization (Macpherson and Middlebrooks 2002). Although BICI listeners do not appear to rely on ITDs for sound localization with their clinical processors, ITD sensitivity has been demonstrated in BICI listeners when stimulation is tightly controlled and presented using synchronized research processors (e.g., see Litovsky et al. 2012; Kan and Litovsky 2015 for a review). One important control is that ITD sensitivity is best when the electrodes that are activated in the two ears are perceptually matched by place of stimulation. The goal of using interaurally pitch-matched pairs of electrodes is to activate neurons with similar frequency sensitivity so that there may be a greater chance of mimicking the natural manner in which binaural processing occurs at the level of the brainstem of NH mammals. 
However, with monopolar stimulation, which produces substantial spread of excitation along the basilar membrane, BICI listeners appear to be able to tolerate as much as 3 mm of mismatch in stimulation between the right and left ears before showing significant decreases in binaural sensitivity (Poon et al. 2009; Kan et al. 2013, 2015; Goupell 2015). In contrast, ILDs seem even more robust than ITDs to interaural place-of-stimulation mismatch (Kan et al. 2013). Studies have shown that when interaurally pitch-matched pairs of electrodes are stimulated, ITD sensitivity varies with the rate of electrical stimulation. ITD sensitivity is typically best (discrimination thresholds are approximately 100–500 µs) at low stimulation rates (<300 pulses per second [pps]) and tends to be lost at stimulation rates above 900 pps. However, ITD sensitivity is also observed when low modulation rates are imposed on high-rate carriers (van Hoesel et al. 2009; Noel and Eddington 2013). The ITD thresholds reported in many BICI users are considerably greater (i.e., worse) than the range of 20–200 µs observed in NH listeners when tested with low-frequency stimulation or with high-rate carriers that are amplitude modulated (Bernstein and Trahiotis 2002); however, note that several BICI users can achieve ITD thresholds in this range, as low as about 40–50 µs (Bernstein and Trahiotis 2002; Kan and Litovsky 2015; Laback et al. 2015).
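
To make the notion of an ITD in synchronized electrical stimulation concrete, the following sketch generates pulse onset times for a constant-rate pulse train in each ear, with the right ear delayed by a fixed ITD. The function name and the sign convention (positive ITD means the right ear lags) are assumptions; no research-processor interface is implied.

```python
import numpy as np

def pulse_times_with_itd(rate_pps, duration_s, itd_us):
    """Pulse onset times in seconds for the left and right ears.

    A positive ITD (in microseconds) delays every right-ear pulse
    relative to its left-ear counterpart.
    """
    n_pulses = int(round(rate_pps * duration_s))
    left = np.arange(n_pulses) / rate_pps
    right = left + itd_us * 1e-6
    return left, right
```

At an assumed rate of 100 pps, successive pulses are 10 ms apart, so an ITD of 500 µs is a small fraction of the inter-pulse interval. Delivering such an offset reliably requires that the two devices share a clock, which unsynchronized clinical processors do not guarantee.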

10.3.4 Binaural Masking Level Differences

Another binaural prerequisite to achieve SRM in BICI listeners is binaural unmasking of a tone in noise, otherwise known as a binaural masking level difference (BMLD). This form of unmasking is similar to SRM, but experiments are performed with simpler signals. A BMLD is the degree of unmasking that is measured when detecting a tone in noise with dichotic stimuli (e.g., noise in phase, tone out of phase = N0Sπ) compared to diotic stimuli (e.g., noise in phase, tone in phase = N0S0). Several studies have shown that BICI listeners can achieve BMLDs up to about 10 dB using single-electrode direct stimulation when the amplitude modulations are compressed as in a speech processing strategy (Goupell and Litovsky 2015), and that BMLDs can be quite large if amplitudes are not compressed as in a speech processing strategy (Long et al. 2006). Lu et al. (2011) took the paradigm a step further by also measuring BMLDs for multielectrode stimulation, which is more akin to the stimulation needed to represent speech signals. Figure 10.3 shows a BMLD of approximately 9 dB for single-electrode stimulation; in that study there was a reduction of the effect size to approximately 2 dB when multielectrode stimulation was used (not shown in this figure). These findings suggested that spread of excitation along the cochlea in monopolar stimulation, which is known to produce masking and interference, also results in degraded binaural unmasking. Using auditory evoked potentials to examine this issue, the authors found that conditions with larger channel interaction correlated with psychophysical reduction in the BMLD. The studies on BMLDs offer insights into binaural mechanisms in BICI users. The fact that BMLDs can be elicited suggests that the availability of carefully controlled binaural cues could play an important role in the extent to which patients demonstrate SRM. 
In cases in which BMLDs in BICI users are poorer than those observed in NH listeners, insights can be gained into the limitations that BICI users face. These limitations include factors such as neural degeneration and poor precision of binaural stimuli in the context of monopolar stimulation.
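
The N0S0 and N0Sπ configurations can be sketched for acoustic stimuli as follows. This is only an illustration of the stimulus definitions: the BICI studies cited above used direct electrical stimulation, and the function names and parameters here are hypothetical.

```python
import numpy as np

def tone_in_noise(fs, duration_s, tone_hz, tone_amp, tone_phase_right, rng):
    """Left and right signals with identical noise (N0) and a tone in each ear.

    tone_phase_right = 0 gives the diotic N0S0 stimulus; tone_phase_right = pi
    inverts the tone in the right ear, giving the dichotic N0Spi stimulus.
    """
    t = np.arange(int(round(fs * duration_s))) / fs
    noise = rng.standard_normal(t.size)  # same noise waveform in both ears
    tone_left = tone_amp * np.sin(2 * np.pi * tone_hz * t)
    tone_right = tone_amp * np.sin(2 * np.pi * tone_hz * t + tone_phase_right)
    return noise + tone_left, noise + tone_right

def bmld_db(threshold_n0s0_db, threshold_n0spi_db):
    """BMLD in dB: how much lower the detection threshold is for N0Spi."""
    return threshold_n0s0_db - threshold_n0spi_db
```

In the N0Sπ case, subtracting the two ear signals cancels the noise and leaves twice the tone, which gives an intuition for why an interaural phase difference in the tone can aid detection. With made-up thresholds of -5 dB (N0S0) and -14 dB (N0Sπ), `bmld_db` returns 9 dB.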

Data are plotted for conditions with diotic stimuli (dark fills) or dichotic stimuli (white fills). In the former, both the noise and the tone are in phase across the right and left ears, hence referred to as N0S0. In the latter, noise in the right and left ears is in phase, while the tone in the right and left ears is out of phase, hence referred to as N0Sπ. (Replotted with permission from Lu et al. 2011.)

10.3.5 SRM in BICI Users

From a historical perspective, there has been increasing interest in examining SRM in CI users. The first study, by van Hoesel et al. (1993), tested only co-located conditions in one bilateral CI listener using unsynchronized clinical processors. After 12 months of use, bilateral presentation produced about 10–15% of a binaural summation advantage (see Fig. 10.2). Although it was a case study, the data suggested that the approach was valuable and numerous studies thereafter addressed similar questions. For instance, Buss et al. (2008) tested 26 postlingually deafened BICI users with a short duration of deafness before implantation, and with nearly all patients undergoing simultaneous bilateral implantation. Target and interferer were either co-located or spatially separated by 90°. Testing was repeated at 1, 3, 6, and 12 months after activation of the CIs. Significant head shadow and binaural summation benefits were observed at 6 and 12 months after CI activation. Binaural squelch became more apparent only 1 year after activation (Eapen et al. 2009). In this study, performance was also measured as change in percent correct. Litovsky et al. (2009) tested a very similar population of 37 postlingually deafened BICI users, and measured change in performance as change in SRT. Within 6 months after activation of the CIs, the largest and most robust bilateral benefit was due to the head shadow effect, averaging approximately 6 dB improvement in SRT. Benefits due to binaural summation and squelch were found in a small group of patients, where effect sizes were more modest, 1–2 dB change in SRT. These and numerous other studies have generally shown that the largest benefit of having BICIs is accounted for by the head shadow, or being able to attend to an ear at which the target has a good signal-to-noise ratio.

In addition to the studies using clinical processors, a few studies have investigated SRM using more controlled stimulation approaches that attempted to provide ITDs directly to the CI processors. These approaches were motivated by the idea that maximizing control over the ITDs presented to the BICIs would improve speech perception through increased SRM, mainly through the squelch mechanism. van Hoesel et al. (2008) imposed spatial separation by presenting the noise from the front and the target speech at one ear. One of the main purposes of this experiment was to compare three different types of speech coding strategies, two that were envelope based and one that explicitly encoded temporal fine-structure information. None of the speech coding strategies produced a significant binaural unmasking of speech. There are many possible reasons why squelch was not observed in this study. For one, the fine-structure ITDs were slightly different across electrode pairs, following that of the individual channel, and each channel had a high rate carrier, which could have blurred the perceived spatial locations of targets and interferers. Recent research from Churchill et al. (2014) showed a larger benefit of sound localization and ITD discrimination when coherent fine-structure ITDs are presented on multiple electrodes redundantly, particularly when the low-frequency channels have lower rate stimulation. It is possible that with this newer type of fine-structure encoding, squelch would be achieved. Another problem with the van Hoesel et al. (2008) study is that they had only four subjects, who may have had long durations of deafness and were sequentially implanted, as compared to the 26 subjects with short duration of deafness and simultaneous implantations studied by Buss et al. (2008).

Another approach to tightening control over the binaural stimuli presented to BICI users was that of Loizou et al. (2009). In that study, a single binaural digital signal processor was used to present stimuli to both the right and left CIs. Stimuli were convolved through head-related transfer functions (HRTFs), and hence ITDs and ILDs were preserved at the level of the processor receiving the signals. This study was designed so as to replicate a previous study in NH listeners by Hawley et al. (2004) and compared results from BICI users directly with those of the NH listeners. One goal of this study was to examine whether BICI users experience informational masking similar to that seen in NH listeners; evidence of informational masking in these studies would be a larger amount of SRM with speech versus noise interferers. Figure 10.4 shows summary data from the Loizou et al. (2009) study only when speech interferers were used. Unlike NH listeners, in BICI users the SRM was small, around 2–4 dB, regardless of condition. In only a few cases the noise and speech interferers produced different amounts of spatial advantage. In the NH data SRM was much larger, especially with speech interferers compared to noise interferers. This finding has been interpreted as evidence that NH listeners experience informational masking with speech maskers due to target/interferer similarity. Thus, when the target and interferers are co-located the ability to understand the target is especially poor, and spatial separation renders spatial cues particularly important. Finally, NH listeners showed effects due to both binaural and monaural spatial unmasking. In contrast, and as can be seen in Fig. 10.4, BICI users generally show monaural effects with weak or absent binaural unmasking effects. In summary, even with attempted presentation of binaural cues at the input to the processors, squelch was not observed. 
This is probably due to the fact that the ITDs and ILDs present in the signals were not deliberately sent to particular pairs of electrodes. Thus, ITDs and ILDs were likely to have been disrupted by the CI processors at the level of stimulation in the cochlear arrays.

Fig. 10.4 SRM data are shown for subjects with normal hearing (NH) and bilateral cochlear implants (BICIs). Values are shown for the total amount of SRM; amount accounted for by binaural processing; and the remainder, which is attributed to monaural processing. (Replotted with permission from Loizou et al. 2009.)

Binaural unmasking of speech in BICI listeners was tested more recently by Bernstein et al. (2016) in an attempt to resolve many of the issues regarding the role of binaural cues in SRM. Conditions included a target presented monaurally, with an interferer presented either monaurally (to the same ear) or diotically (to both ears), thus attempting to produce the largest spatial separation with an effectively infinite ILD. Note that no head shadow occurs in such a paradigm; thus the effects observed are most likely related to the binaural squelch effect. A range of interferers was tested: noise and one or two talkers. The target and interferers came from the same corpus, known to produce high informational masking, and performance was measured at a range of levels that varied the target-to-masker (interferer) ratios (TMRs). Results from eight postlingually deafened BICI listeners showed 5 dB of squelch in the one-talker interferer condition, which is much larger than that in the previous studies, possibly because of the particular methodology that sought to maximize binaural unmasking of speech. Another interesting result from this study is that the amount of unmasking was larger for lower TMRs; many previous studies measured only the 50% SRT and therefore may have missed larger squelch effects. Relatively smaller amounts of unmasking were observed for noise or two talkers; in NH listeners there is typically larger SRM for more interfering talkers. Interestingly, one BICI listener who had an early onset of deafness consistently showed interference (i.e., negative binaural unmasking) from adding the second ear. It may not be a coincidence that this listener was in the group that may not have a properly developed binaural system.

By way of highlighting some of the main effects, Fig. 10.5A shows summary data from this study, as well as from Loizou et al. (2009) and two other studies that measured the three effects (squelch, summation, and head shadow) as improvement in SRT. As can be seen from Fig. 10.5A, head shadow is the major contributor to the SRM improvement. Summation and squelch are about equal in their contribution to SRM.
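To make the idea of computing the three effects "as improvement in SRT" concrete, the following is a minimal sketch. The condition labels and the exact pairings of conditions are assumptions chosen for illustration; individual studies define and measure these contrasts somewhat differently, and the function name is hypothetical.

```python
def binaural_effects(srt_db):
    """Return head shadow, summation, and squelch (in dB) from four SRTs.

    Keys of srt_db (hypothetical labels, not taken from any one study):
      'mono_front'  one ear only, interferer co-located with the frontal target
      'mono_side'   same ear, interferer moved to the far side of the head
      'bi_front'    both ears, interferer co-located with the frontal target
      'bi_side'     both ears, interferer at the same side position
    Lower SRTs mean better performance, so each effect is
    "harder condition minus easier condition".
    """
    return {
        # Benefit of moving the interferer away from the listening ear.
        "head_shadow": srt_db["mono_front"] - srt_db["mono_side"],
        # Benefit of adding a second ear when the sources are co-located.
        "summation": srt_db["mono_front"] - srt_db["bi_front"],
        # Benefit of adding the ear with the poorer SNR when sources are separated.
        "squelch": srt_db["mono_side"] - srt_db["bi_side"],
    }


# Illustrative (made-up) SRTs in dB, not data from any study.
example = {"mono_front": 0.0, "mono_side": -5.0, "bi_front": -1.5, "bi_side": -6.0}
effects = binaural_effects(example)
```

With these made-up values the head shadow term is the largest of the three, which mirrors the qualitative pattern described for Fig. 10.5A.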

10.3.6 Simulating Aspects of CI Processing for Testing in Normal-Hearing Listeners

Research to date provides a window into the successes and shortcomings of the currently available CI technology, and underscores difficulties that CI users experience, especially when listening to speech in noisy environments. These studies do carry some inherent limitations, however, including high intersubject variability and lack of control over many aspects of signal processing. These limitations are somewhat overcome by the use of multichannel vocoders, which enable the manipulation and testing of CI signal processing algorithms in NH listeners. The application of this approach to NH listeners is particularly useful because of the presumed unimpaired peripheral auditory systems in these listeners. NH listeners also have less variance across the population, making them ideal subjects for studies that attempt to exclude individual variability as a factor. In addition, factors such as the effect of processor synchronization, spread of excitation along the cochlea with monopolar stimulation, and matched place of stimulation across the ears can be simulated and individually investigated under ideal conditions.

Multichannel vocoders mimic the same preprocessing steps as in CI processing; that is, the acoustic input is divided into a number of frequency bands, and the envelope in each band is extracted for modulation of a carrier. The carriers can be sine tones or bandpass noise, depending on what aspects of CI electrical stimulation the vocoder is attempting to mimic. These modulated carriers are then recombined and presented to a NH listener via headphones or loudspeakers. Vocoders have been used to investigate many aspects of cochlear implant performance including the effect of mismatched frequency input between the ears on binaural processing (Siciliano et al. 2010; van Besouw et al. 2013). To date, few research studies have used vocoders as a tool to investigate the SRM performance gap between NH and BICI users. Garadat et al. (2009) used vocoders to investigate whether BICI users’ poor performance in binaural hearing tasks was an effect of the lack of fine structure ITD cues. They tested this hypothesis using two conditions. In the first, they processed stimuli through a vocoder and then convolved the vocoded stimuli through HRTFs. This order of processing replaced the fine structure with sine tones, but allowed for fine-structure ITD to remain in the carrier signal. In the second condition, the order of processing was reversed, eliminating fine structure ITDs and more accurately simulating CI processing. Listeners averaged 8–10 dB SRM in conditions with more degraded spectral cues. In addition, performance was not significantly different between orders of processing, which the authors interpreted to indicate that the removal of temporal fine structure cues is not a key factor in binaural unmasking. Freyman et al. (2008) also found that vocoder processing, with fine structure removed, allowed for SRM when stimuli were delivered via loudspeakers, as long as adequate informational masking was present.
The authors interpreted the findings to indicate that, should there be ample coordination between the CIs, the signal processing would preserve adequate spatial information for SRM. Thus, poor performance from BICI users is likely due to other factors. One such factor was investigated by Garadat et al. (2010), who simulated spectral holes that extended to 6 or 10 mm along the cochlea, at either the basal, middle, or apical regions of the cochlea. The results of this study attest to the relative frailty of the binaural system and the importance of various portions of the frequency spectrum for binaural processing.
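The vocoder steps described above (band splitting, envelope extraction, carrier modulation, recombination) can be sketched in a few lines. This is an illustrative sine-carrier vocoder, not the processing used in any of the cited studies: the second-order band-pass filter, the 50-Hz envelope smoothing, the band edges, and all function names are assumptions chosen to keep the example short.

```python
import math


def bandpass(signal, fs, f_lo, f_hi):
    """Second-order band-pass biquad (0 dB peak gain) between f_lo and f_hi."""
    f0 = math.sqrt(f_lo * f_hi)              # geometric centre frequency
    q = f0 / (f_hi - f_lo)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    b0, b2 = alpha, -alpha                   # b1 is zero for this filter type
    a0, a1, a2 = 1.0 + alpha, -2.0 * math.cos(w0), 1.0 - alpha
    out, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for x in signal:
        y = (b0 * x + b2 * x2 - a1 * y1 - a2 * y2) / a0
        out.append(y)
        x2, x1, y2, y1 = x1, x, y1, y
    return out


def envelope(signal, fs, cutoff_hz=50.0):
    """Full-wave rectification followed by a one-pole low-pass filter."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / fs)
    env, y = [], 0.0
    for x in signal:
        y = (1.0 - a) * abs(x) + a * y
        env.append(y)
    return env


def sine_vocoder(signal, fs, band_edges_hz):
    """Replace the fine structure in each band with a sine tone at the band centre."""
    out = [0.0] * len(signal)
    for f_lo, f_hi in zip(band_edges_hz[:-1], band_edges_hz[1:]):
        env = envelope(bandpass(signal, fs, f_lo, f_hi), fs)
        fc = math.sqrt(f_lo * f_hi)
        for n, e in enumerate(env):
            out[n] += e * math.sin(2.0 * math.pi * fc * n / fs)
    return out


# Example: vocode 100 ms of a 1-kHz tone with three (hypothetical) bands.
fs = 16000
tone = [math.sin(2.0 * math.pi * 1000.0 * n / fs) for n in range(1600)]
vocoded = sine_vocoder(tone, fs, [200.0, 500.0, 1200.0, 3000.0])
```

Replacing the sine carrier with band-limited noise would give a noise vocoder. Applying HRTF filtering before or after `sine_vocoder` corresponds to the two orders of processing compared by Garadat et al. (2009).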

Bernstein et al. (2016) directly compared BICI listener performance to NH listeners presented with eight-channel noise vocoded stimuli. They found very similar trends between the two groups, demonstrating that such a comparison is useful in understanding expectations for the BICI listeners. However, there was much more squelch in the NH listeners than in the BICI listeners, which might be explained by the myriad of factors already outlined, such as deficiencies at the electrode–neural interface, differences in insertion depth, and so forth.

It should be acknowledged that there are limitations to the use of vocoders. To deliver spatialized stimuli to NH subjects, stimuli must be processed using HRTFs, and it is not well understood if the effects of vocoder processing on HRTF cues are similar to those that occur with CIs in actual three-dimensional space. In addition, acoustic stimulation with processed signals is fundamentally different from the direct electrical stimulation that occurs with CIs, and the systematic differences between acoustic and electrical stimulation are not well understood.

10.4 Adults with HAs

Similar to CIs, the effectiveness of HAs in helping speech understanding in noisy situations is affected by an interplay of individual differences, acoustical factors, and technological issues. However, the elements surrounding these factors are largely different between CIs and HAs. Whereas research in CIs has focused on the transmission of appropriate acoustic cues by electric hearing, HA research and development has been concerned with audibility. In general, satisfaction with modern HAs has increased (Bertoli et al. 2009; Kaplan-Neeman et al. 2012). Speech understanding has improved, in part due to improved SNR before presentation.

10.4.1 Unilateral Versus Bilateral Fitting

One of the important clinical questions when considering HAs is whether one or two HAs should be prescribed. For the case of a unilateral hearing impairment, the choice of aiding the poorer ear may appear to be the obvious choice. However, in the case of a bilateral hearing impairment, the choice is not as obvious, especially if the impairment is asymmetric in the two ears and the patient can afford only one HA. If one has to choose a single ear, which of the two ears should be amplified is an area of debate. In some listening conditions aiding the poorer ear generally leads to improved hearing ability and is preferred by a majority of patients (Swan and Gatehouse 1987; Swan et al. 1987), while in other listening situations, aiding the better ear was found to be more beneficial (Henkin et al. 2007). Even when two HAs are prescribed, the literature does not show a consistent benefit. Henkin et al. (2007) reported bilateral interference in speech understanding in about two-thirds of their subjects. In contrast, Kobler and Rosenhall (2002) reported significant improvement when two HAs were used compared to an aid in the better ear. These conflicting results typically arise from differences in test setups and the amount of hearing impairment, which highlights the fact that the benefit of bilateral over unilateral HAs is situation dependent, and typically greater in more demanding environments (Noble and Gatehouse 2006). Despite conflicting results, there appears to be a general trend that the advantage of bilateral fitting for speech intelligibility increases with increasing degree of hearing loss (Mencher and Davis 2006; Dillon 2012), though this may not necessarily be predictive of HA user preferences (Cox et al. 2011).

10.4.2 Bilateral Benefits

In theory, prescription of bilateral HAs should provide improvements for both sound localization and speech-in-noise performance. Both these abilities are important in a cocktail party–like situation. As previously described, sound localization ability helps with distinguishing the locations of talkers from one another in a private conversation, and from among the background chatter of the cocktail party. Being able to understand speech in the noisy environment is important for being able to carry on a conversation.

The effect of hearing impairment on sound localization ability is related to the audibility of cues important for sound localization. For high-frequency hearing loss, common among those with hearing impairment, there is a decrease in vertical localization ability and an increase in front–back confusions, because high-frequency cues are important in helping discriminate these locations. In addition, for a sound located on the horizontal plane the ability to use ILDs for localization is also reduced. The ability to use low-frequency ITDs appears to be only mildly affected, and deteriorates only when low-frequency hearing loss exceeds about 50 dB. Bilateral aids increase the audibility of sounds in both ears, such that ITD and ILD cues can be heard and compared by the auditory system. Thus, the benefit of bilateral aids will likely be more significant for those with moderate to severe losses, compared to those with a mild hearing loss. Front–back confusions and vertical localization ability typically remain the same regardless of amount of hearing loss. This may be because the high-frequency cues necessary for the restoration of these abilities are not sufficiently audible, or because the microphone location of the HA is not in a location that maximally captures the spectral cues important for front–back and vertical location discrimination. Byrne and Noble (1998) and Dillon (2012) provide excellent reviews on the effects of hearing impairment on sound localization ability and how HAs can provide benefit.

For speech-in-noise understanding, there are a number of binaural benefits arising from being able to hear with both ears, particularly in a cocktail party–like situation in which the target and maskers are spatially separated. These include binaural squelch and summation. Together with the head shadow effect, these benefits provide a spatial release from masking. In essence, the provision of bilateral HAs is to increase sensitivity to sounds at both ears in the hope that the same binaural advantages that are available in the NH listeners can occur, though not necessarily to the same degree. Hence, those with greater hearing loss are more likely to show a benefit from bilateral HAs (Dillon 2012). Bilateral HAs are also more likely to provide a benefit when the listening situation is more complex, such as in cocktail parties, though benefits of SRM have typically been much less than those observed in NH listeners (Festen and Plomp 1986; Marrone et al. 2008). However, the use of bilateral HAs has been shown to lead to improved social and emotional benefits, along with a reduction in listening effort (Noble and Gatehouse 2006; Noble 2010).

10.4.3 Technological Advances

A known limitation faced by HA users (whether unilateral or bilateral) is whether the target signal can be heard above the noise. Hence, much of the progress in HA technology has focused on improving the SNR of the target signal before presentation to the HA user. This has included the introduction of directional microphone technology, noise reduction signal processing algorithms, and wireless links between HAs to provide bilateral processing capabilities.

Directional microphones attempt to improve the SNR of a target signal by exploiting the fact that target and noise signals are typically spatially separated. The directivity of a microphone can be changed such that maximum amplification is provided to a target signal located in a known direction (usually in front of the listener), and sounds from other directions are given much less amplification. This is usually achieved by forming a directional beam by combining the signals from two or more microphones. Comprehensive overviews of the different ways an array of microphone signals can be combined to shape the directivity of a microphone can be found in Chung (2004) and Dillon (2012). In laboratory test settings, SNR benefits from directional microphones range from 2.5 to 14 dB, depending on test configuration (Bentler et al. 2004; Dittberner and Bentler 2007; Hornsby and Ricketts 2007). However, the benefit reported in real-life situations is much smaller than laboratory results would suggest (Cord et al. 2002), because environmental acoustics and the dynamics of the sound scene are more complex in real-world listening than under laboratory conditions.
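As a rough illustration of how combining two microphone signals can shape directivity, the sketch below computes the free-field response of a two-microphone delay-and-subtract array. It is a textbook idealization, not the design of any particular HA: the 12-mm port spacing, the choice of internal delay (which yields a cardioid pattern), and the function name are assumptions, and head and ear effects are ignored.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def directional_response(theta_deg, freq_hz, spacing_m=0.012, internal_delay_s=None):
    """Magnitude response of a delay-and-subtract pair to a plane wave.

    theta_deg is the arrival angle (0 = front, 180 = rear). The rear
    microphone signal is delayed by internal_delay_s and subtracted from
    the front microphone signal.
    """
    if internal_delay_s is None:
        # Internal delay equal to the acoustic travel time between ports
        # places the null directly behind the listener (cardioid).
        internal_delay_s = spacing_m / SPEED_OF_SOUND
    external_delay_s = spacing_m * math.cos(math.radians(theta_deg)) / SPEED_OF_SOUND
    omega = 2.0 * math.pi * freq_hz
    return abs(1.0 - cmath.exp(-1j * omega * (internal_delay_s + external_delay_s)))


front = directional_response(0.0, 1000.0)
side = directional_response(90.0, 1000.0)
rear = directional_response(180.0, 1000.0)
```

In this idealized case sound from the front is passed, sound from the side is attenuated relative to the front, and sound from directly behind is cancelled. Reverberation and moving sources are not modeled, which is one way to see why laboratory benefits may not carry over to everyday listening.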

In contrast to directional microphones, noise reduction algorithms attempt to exploit the time–frequency separation between target and noise signals. The aim of noise reduction algorithms is to identify which components of the incoming signal are from the target and provide greater amplification to these components, compared to the noise components. This is not an easy task and the different HA manufacturers each have their own algorithms for noise reduction. An overview of noise reduction algorithms can be found in Chung (2004) and Dillon (2012). In general, noise reduction algorithms may not necessarily improve speech intelligibility, but the HA user will find the background noise less troublesome (Dillon 2012).
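The idea of giving more gain to time-frequency components dominated by the target can be illustrated with a generic Wiener-style gain rule. Commercial HA algorithms are proprietary and differ from this; the sketch below assumes that a noise power estimate is already available for each band, and the function name and the 12-dB attenuation limit are hypothetical.

```python
def wiener_gains(signal_plus_noise_power, noise_power, max_attenuation_db=12.0):
    """Per-band amplitude gains from a Wiener-style rule, gain = SNR / (1 + SNR).

    Both inputs are lists of band powers for one short time frame. The
    gain is limited so that no band is attenuated by more than
    max_attenuation_db, a common way to limit audible artifacts.
    """
    floor = 10.0 ** (-max_attenuation_db / 20.0)
    gains = []
    for total, noise in zip(signal_plus_noise_power, noise_power):
        if noise <= 0.0:
            gains.append(1.0)          # no noise estimated: leave the band untouched
            continue
        snr = max(total - noise, 0.0) / noise   # estimated target-to-noise power ratio
        gains.append(max(snr / (1.0 + snr), floor))
    return gains


# Three bands: target-dominated, noise-only, and a band with no noise estimate.
gains = wiener_gains([10.0, 1.0, 4.0], [1.0, 1.0, 0.0])
```

Bands where the target dominates keep most of their gain, while noise-dominated bands are turned down to the attenuation floor. Because target and noise in the same band are scaled together, the within-band SNR is unchanged, which is consistent with the observation that such processing may reduce annoyance without necessarily improving intelligibility.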

A more recent advance has been the development of wireless communication between the left and right HAs of a bilaterally fit HA user (Edwards 2007). Currently, the wireless link is typically used for linked volume control and a few other basic functions. However, connectivity between HAs opens up the opportunity for linked bilateral processing, such as sharing of computation cycles to increase computational speed and power (Edwards 2007), implementation of more advanced directional microphone and noise reduction algorithms by combining the microphone inputs from both ears (e.g., Luts et al. 2010; Kokkinakis and Loizou 2010), and the linking of compression systems in both ears to improve speech intelligibility (Wiggins and Seeber 2013).
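One way to see why linking the compression systems might matter is to compare the ILD at the output of two independent compressors with that of a linked pair. The sketch below uses a simple static compressor; the threshold, the 3:1 ratio, and the linking rule (both ears use the larger of the two gain reductions) are assumptions for illustration and are not taken from Wiggins and Seeber (2013).

```python
def compression_gain_db(level_db, threshold_db=50.0, ratio=3.0):
    """Static compressor: gain (dB, zero or negative) for a given input level."""
    if level_db <= threshold_db:
        return 0.0
    return -(level_db - threshold_db) * (1.0 - 1.0 / ratio)


def output_ild_db(left_db, right_db, linked):
    """Interaural level difference (left minus right) after compression."""
    gain_left = compression_gain_db(left_db)
    gain_right = compression_gain_db(right_db)
    if linked:
        # Assumed linking rule: both ears apply the larger gain reduction.
        gain_left = gain_right = min(gain_left, gain_right)
    return (left_db + gain_left) - (right_db + gain_right)


# A source on the left: 70 dB at the left ear, 61 dB at the right (9-dB ILD).
ild_independent = output_ild_db(70.0, 61.0, linked=False)
ild_linked = output_ild_db(70.0, 61.0, linked=True)
```

With independent compression the louder ear is attenuated more, so the 9-dB input ILD shrinks to about 3 dB at the output (the input ILD divided by the compression ratio). With the linked rule the same gain is applied to both ears and the full 9-dB ILD is preserved.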

10.5 Introduction to Pediatric Studies

For the majority of children who currently receive CIs, the first time they are exposed to sound will be at their CI activation. The CI device provides children who are deaf with hearing via electrical stimulation to the auditory nerve, ultimately giving them the opportunity to develop spoken language and use speech as their primary mode of communication.

As discussed above in Sect. 10.1, SRM can provide an assessment of the extent to which listeners who are fitted with two CIs are able to use spatial cues for segregation of target speech from background interferers. SRM in CI users is clearly observed when listeners can rely on monaural head shadow cues. However, if listeners must rely on binaural cues, SRM is small. One interesting question is whether auditory history has an impact on SRM in ways that would demarcate between children and adults. Studies reviewed in Sect. 10.5.2 were aimed at understanding if children who are generally congenitally deaf and implanted at a young age are able to utilize spatial cues for source segregation in ways that adults cannot.

10.5.1 Studies in Children with BICIs

Children who are diagnosed with a profound bilateral sensorineural hearing impairment, and do not benefit from a HA, can receive either a unilateral CI or BICIs. However, the standard of care in most countries is to provide children with bilateral CIs. The goal from a clinical perspective is to provide children with improved speech understanding in noise and access to spatial hearing, and to stimulate both the right and left auditory pathways. While a unilateral CI can clearly lead to the development of relatively good speech understanding in quiet, bilateral implantation has clear benefits for the spatial unmasking of speech that has been described above. For children, these benefits are also framed in the context of auditory models and theories on plasticity, which argue for better results with stimulation of the neural pathways early in life. Despite the many advances and large body of evidence to support the benefit of having two CIs versus one, there continues to be a gap in performance when listening to speech in noise between children with BICIs and their NH peers.

In a number of studies conducted by Litovsky and colleagues, children with BICIs or with a CI and a HA (bimodal hearing) were studied using similar approaches to evaluating SRM as those used with adults. The first study showed that children with bimodal hearing demonstrated small SRM or “negative SRM” (performance was worse with interferers on the side compared with in the front) (Litovsky et al. 2006). By comparison, children with BICIs showed SRM that was small but consistent. One possible reason for weak SRM is that the children had been bilaterally implanted at an older age (early to mid-childhood), and their auditory system may not have adapted well to spatial cues through the CIs. In a subsequent study (Misurelli and Litovsky 2012), SRM was investigated in children whose activation with BICIs occurred at younger ages. Results from conditions with interferers positioned to one side of the head (asymmetrical configuration) showed SRM to be small but consistent (see Fig. 10.6, left). In that study, a novel condition was added with “symmetrical” interferers, intended to reduce the available benefit of the head shadow as well as create a more realistic listening environment where children have to rely mostly on binaural cues to segregate the auditory sources. Results from the symmetrical condition (Fig. 10.6, right) showed that, on average, children with BICIs demonstrated little to no SRM, and in some cases SRM was negative or “anti-SRM” was seen, similar to the finding in the children with bimodal hearing (Litovsky et al. 2006). These findings suggest that children with BICIs do benefit from having two CIs, and that the benefit arises largely due to the head shadow effect. Figure 10.5B shows data from two studies (Mok et al. 2007; Van Deun et al. 2010) that estimated the three effects summarized for adults in Fig. 10.5A—head shadow, summation, and squelch—also computed from differences in SRTs. 
The children’s data, similarly to those from adults, show largest effects from head shadow, and similar, small effects for summation and squelch.

Fig. 10.6 Mean (± SD) SRM data are shown for children and adults with normal hearing and with bilateral cochlear implants. In this study, interferers were placed either asymmetrically around the head (at 90° to one side; see left side of graph) or symmetrically (at 90° to the right and left; see right side of graph). Statistically significant differences between groups are shown in brackets with an asterisk on top. (Replotted with permission from Misurelli and Litovsky 2012.)

It has been shown that spatial cues are more useful for NH children in source segregation tasks in which there are large amounts of perceived informational masking. When the target and interfering stimuli comprise speech or speech-like stimuli (such as time-reversed speech), children with NH demonstrate robust SRM (Johnstone and Litovsky 2006). The authors interpreted the findings to suggest that similarity between the target and interferers produced informational masking; thus the use of spatial cues for segregation was enhanced. In a recent study (Misurelli and Litovsky 2015), the effect of informational masking was investigated by comparing SRM with target and interferers that were either same sex (both target and interferers male) or different sex (male target, female interferers). Children with BICIs did not demonstrate a significant benefit from spatial cues in conditions that were designed to create more informational masking. The reasons for small SRM found in children with BICIs are poorly understood. Future work could provide insights into this issue by determining whether access to binaural cues through synchronized CI devices will be useful. Other factors may also be important, including the fact that the BICI pediatric population is not exposed to binaural cues during development, in the years when neural circuits that mediate SRM are undergoing maturation.

In an attempt to understand whether maturation of auditory abilities leads to better SRM, longitudinal SRM data were collected with BICI pediatric users (Litovsky and Misurelli 2016). Children participated in the same task that was used in the aforementioned studies; however, testing was repeated over a 2–4-year period at annual intervals. The goal of this testing was to determine whether SRM undergoes changes, possibly increasing in magnitude, as each child gained bilateral experience and acquired additional context for listening in noisy situations. The children were instructed to identify a target spondee (i.e., a two-syllable word with equal stress on both syllables) in the presence of competing speech. During testing, SRTs were measured in a front condition with the target and the interferers co-located at 0° azimuth, in an asymmetrical condition with the target at 0° and the two-talker interferers spatially separated 90° to the side of the first implant, and in a symmetrical condition with the target at 0° and one interferer at 90° to the right and one at 90° to the left. SRM was calculated by subtracting the SRTs in either the asymmetrical or the symmetrical condition from the SRTs in the front condition. For the majority of children SRM did not improve as children gained bilateral experience, suggesting that the limitations of the CI devices are the primary factors that contribute to the gap in performance on spatial unmasking, rather than the amount of bilateral experience with the CI devices.
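The SRM calculation described above is a simple difference of SRTs. A minimal sketch follows; the function name and the SRT values are hypothetical and are not data from the study.

```python
def spatial_release_from_masking(srt_front_db, srt_separated_db):
    """SRM in dB: SRT in the co-located (front) condition minus the SRT in a
    spatially separated condition. Positive values mean that separating the
    interferers from the target improved performance (a lower SRT)."""
    return srt_front_db - srt_separated_db


# Illustrative SRTs in dB for one listener (made-up values).
srt_front = -2.0
srt_asymmetrical = -6.0
srt_symmetrical = -1.0

srm_asymmetrical = spatial_release_from_masking(srt_front, srt_asymmetrical)
srm_symmetrical = spatial_release_from_masking(srt_front, srt_symmetrical)
```

In this made-up case the asymmetrical configuration yields a positive SRM, whereas the symmetrical configuration yields a negative value, the pattern the chapter refers to as negative SRM or "anti-SRM".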

10.5.2 Sequential Versus Simultaneous BICIs

Although a gap in performance remains between children with NH and with BICIs, there is evidence (Litovsky et al. 2006; Peters et al. 2007) to suggest that children who have BICIs perform better than children with a unilateral CI on speech-in-noise tasks. As a result, many children currently undergo CI surgery around 1 year of age with the intention of bilateral implantation. Successful surgical implantation of BICI devices in very young children has led to the question of whether there is physiological and functional benefit to receiving two CIs within the same surgical procedure (simultaneously) versus receiving two CIs with a delay in between (sequentially). It is evident that extended periods of unilateral auditory deprivation can negatively affect auditory development and outcomes of CI users.

For children receiving their BICIs sequentially, it appears that it is best to implant the second ear as early as possible, and that performance on speech-in-noise tasks with the second CI improves as the users gain experience listening with that device (Peters et al. 2007). Further, in children with sequentially implanted BICIs, more SRM is demonstrated when the interferers are directed toward the side of the second CI than when they are directed toward the first CI (Litovsky et al. 2006; Van Deun et al. 2010; Chadha et al. 2011). This suggests that the dominant CI is the side that was activated first (and is also the side with which the child has the most listening experience). However, even when interferers are directed toward the side of the first CI, thereby establishing a more difficult listening environment than with the interferers directed toward the second CI, some BICI users as young as 5 years of age do show SRM (Misurelli and Litovsky 2012). In children who receive their CIs sequentially, though, the amount of SRM demonstrated with interferers directed toward the first CI (dominant) is variable.

Neurophysiological changes in the auditory brainstem and cortex can help to indicate neuronal survival and reorganization after the CI is activated. Nonbehavioral measures of responses to changes in sound can be obtained using electrically evoked responses from the auditory nerve (electrically evoked compound action potential [ECAP]) and brainstem (electrically evoked auditory brainstem response [EABR]). These studies have shown greater success, and specifically better speech perception abilities, for children who receive BICIs with only a small delay between the first and second CI (Gordon and Papsin 2009). A recent study showed that if a child receives the second CI within 1.5 years of the first CI, symmetrical neurological development of the auditory brainstem and cortex is more likely to occur. Functionally, this can be associated with better speech perception, especially in noise (Gordon et al. 2013).

A recent psychoacoustic study measured performance on a speech-in-noise task comparing children with sequential and simultaneous BICIs, and revealed that the simultaneously implanted group had significantly better performance when listening to speech in noise (Chadha et al. 2011). More research is needed regarding the functional and cortical benefits of simultaneous implantation versus sequential implantation with a minimal delay in time between the first and the second implant. There is currently not enough evidence to suggest a specific age limit, or time limit between CIs, in which a child would no longer benefit from bilateral implantation (Litovsky and Gordon 2016).

10.5.3 Children with HAs

As with the decision to implant children at an early age, the decision surrounding the prescription of HAs for children is motivated primarily by developmental concerns. For children born with, or who acquire, hearing impairment, early diagnosis is seen as essential and the prescription of HAs is considered of high importance for the child to have a typical educational and social development (Dillon 2012).

Although the importance of having a typical development for a child with hearing impairment cannot be denied for social, educational, and economic reasons, the data supporting whether unilateral or bilateral HAs should be prescribed is mixed, and recommendations are influenced primarily by the particular outcome measure that is considered important. Dillon (2012) provides a detailed summary of the literature concerning the impact of a unilateral loss on different outcome measures. He argues that although the effect of unilateral loss on a child’s language and educational development is mixed, an effect is still prevalent in every study, and it is likely that having a hearing impairment, especially with an increasing loss, will make it more difficult for a child to easily acquire language and social skill. However, whether the aiding of the ear with hearing impairment is important is left open for discussion. Although amplification of the poorer ear may increase the possibility of bilateral benefits, it may also introduce binaural interference that may have a negative impact on development. It may seem, however, that early fitting of an HA may provide sound localization benefits for children with a unilateral hearing impairment (Johnstone et al. 2010).

For children with a bilateral loss, binaural amplification may not provide much additional benefit in terms of language development and understanding over unilateral amplification (Grimes et al. 1981), and children who use HAs do not seem to demonstrate SRM (Ching et al. 2011). However, the provision of HAs may be of importance for promoting near-typical development of the binaural auditory system. Neural development occurs most rapidly during the first few years of life, and having access to binaural auditory input may be important for promoting near-normal development of the binaural auditory system (Gordon et al. 2011). Having access to binaural acoustic stimulation early in life may have an impact on the future success of cochlear implantation (Litovsky et al. 2010, 2012).

Finally, a growing population of children has been diagnosed with auditory neuropathy spectrum disorder (ANSD). A possible deficit with ANSD is in the temporal domain: perception of low- to mid-frequency sounds. A common treatment option for severe impairment in ANSD is unilateral cochlear implantation, and because the degree of impairment is unrelated to degree of hearing loss by audiometric thresholds, this population may have significant acoustic sensitivity in the unimplanted contralateral ear. Runge et al. (2011) recently tested a group of children with ANSD using the test protocols that Litovsky and colleagues have used, to investigate the effects of acute amplification. Results showed that children with ANSD who are experienced CI users tend to benefit from contralateral amplification, particularly if their performance with the CI is only moderate. This study opened up many questions, including the long-term impact of contralateral HA use in real-world situations. However, the one take-home message regarding the “cocktail party effects” in children with complex hearing issues is that electric + acoustic hearing may provide benefits that need to be closely tracked and evaluated.

10.5.4 Variability and Effects of Executive Function

Even when all demographic factors are accounted for (e.g., age at implantation, age at deafness, CI device type, etiology of hearing loss), children with CIs still demonstrate variability in outcomes (Geers et al. 2003; Pisoni and Cleary 2003). The large amount of variability is a hallmark of CI research and presents a challenge to clinicians trying to counsel expected outcomes to parents who are considering CIs for their children.

Executive function is fundamental for development of language processing and functioning in complex environments. Previous work has shown that deficits in executive function (i.e., working and short-term memory, attention, processing speed) are associated with poor performance in noisy environments. Although executive function is not a direct measure of the ability to hear speech in complex auditory environments, it is clear that individuals must be able to extract the target signal, retain attention to the target, and manipulate incoming linguistic information when in complex environments. A gap in performance on measures of short-term and working memory exists for children with CIs and with NH, such that children with CIs generally perform lower on these measures than NH age-matched children (Cleary et al. 2001; Pisoni and Cleary 2003). Deficiency in specific aspects of executive function could negatively impact a child’s ability to understand speech successfully in noisy environments, and therefore performance on these measures may help to predict some of the variability that is demonstrated in BICI groups. More work is necessary to define which specific aspects of executive function are related to performance on spatial hearing tasks.

10.5.5 Future Directions and Clinical Applications

It is well demonstrated that children with two CIs perform better on tasks of spatial hearing than children with one CI. Some recent evidence has shown that children with BICIs who are implanted simultaneously, or with a minimal interimplant delay, may have a greater advantage in noisy environments than those with a long delay between the first and second CI. The gap that exists between BICI and NH listeners in the ability to segregate the target talker in cocktail party environments most likely reflects the limitations of the current clinically available CI devices. Improvements in speech processing strategies and device synchronization must be made for children with BICIs to function more similarly to their NH peers in multisource acoustic environments. For BICI users, the lack of synchronization between the two CI devices greatly reduces, or even eliminates, access to the binaural cues that have been shown to benefit NH listeners in noisy environments. The development and implementation of enhanced speech processing strategies that take advantage of fine-structure spectral information in the acoustic signal would likely provide children with access to cues to aid in speech understanding in both quiet and noise.

The information presented in this section suggests that additional research and clinical advances are needed to narrow the gap in performance when listening to speech in noise between children with BICIs and children with NH. Until clinically available CI devices allow the user to receive more fine-tuned signals, more like those an NH listener receives, the gap in performance between the two groups will remain. In the interim, it is important to increase the signal-to-noise ratio in noisy classroom settings where children are expected to learn.

10.6 Conclusions

Mammals have evolved with two ears positioned symmetrically about the head, and the auditory cues arising from the brain’s analysis of interaural differences in the signals play an important role in sound localization and speech understanding in noise. Contributions of auditory experience and nonsensory processes are highly important but less well understood. This chapter reviewed studies that focus on populations of listeners who suffer from hearing loss and who are fitted with HAs and/or CIs in order to hear. Although fitting of dual devices is the clinical standard of care, the ability of the patient to benefit from stimulation in the two ears varies greatly. The common metric in the literature that was described here is spatial release from masking (SRM). A simplified version of SRM was described in the original study on the cocktail party by Cherry (1953), but the paradigm used in that study involved separation of sources across the two ears, rather than in space. Later studies simulated realistic listening environments and captured more detailed knowledge about the many factors that augment or diminish unmasking of speech in noisy backgrounds. What is common to HA and BICI users is the set of limitations thought to contribute to the gap in performance between NH and hearing impaired populations. Several factors seem to play a role in these limitations: the evidence suggests that the devices do not analyze and present spatial cues to the auditory system with fidelity, and that patient histories related to auditory deprivation and diminished neural health are inherently problematic for these patients. Several studies provide evidence to suggest that, should there be ample coordination between the CIs in the right and left ears, the signal processing would preserve adequate spatial information for SRM.
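As a brief illustration of the SRM metric summarized above, the sketch below uses one common way of quantifying it: the difference between the speech reception threshold (SRT) measured with target and maskers co-located and the SRT measured with the maskers spatially separated from the target. The function name and the SRT values are hypothetical and chosen only for illustration; they are not data from any study reviewed in this chapter.

```python
def spatial_release_from_masking(srt_colocated_db, srt_separated_db):
    """Return SRM in dB, assuming SRM is quantified as the SRT with
    co-located maskers minus the SRT with spatially separated maskers.

    A lower SRT means better performance, so a positive SRM indicates
    a benefit from spatial separation.
    """
    return srt_colocated_db - srt_separated_db


# Hypothetical SRTs in dB SNR (illustrative values only, not measured data).
srm_db = spatial_release_from_masking(srt_colocated_db=-2.0, srt_separated_db=-8.0)
print(f"SRM = {srm_db:.1f} dB")
```

Under this convention, an SRM near zero would indicate that the listener gains little from spatial separation of target and maskers, and a negative value would indicate poorer performance in the separated condition.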

In children the outcomes are not much different from those in adults, possibly because the limitations of the devices that are used today create the same lack of access to important spatial unmasking cues. It is possible, however, that training the brain to utilize cues that are subtle or somewhat inconsistent will yield positive results, and this is fertile ground for future work.

References

Aronoff, J. M., Yoon, Y. S., Freed, D. J., Vermiglio, A. J., et al. (2010). The use of interaural time and level difference cues by bilateral cochlear implant users. The Journal of the Acoustical Society of America, 127(3), EL87–EL92.

Başkent, D., & Shannon, R. V. (2004). Frequency-place compression and expansion in cochlear implant listeners. The Journal of the Acoustical Society of America, 116(5), 3130–3140.

Bentler, R. A., Egge, J. L., Tubbs, J. L., Dittberner, A. B., & Flamme, G. A. (2004). Quantification of directional benefit across different polar response patterns. Journal of the American Academy of Audiology, 15(9), 649–659.

Bernstein, J., Goupell, M. J., Schuchman, G. I., Rivera, A. L., & Brungart, D. S. (2016). Having two ears facilitates the perceptual separation of concurrent talkers for bilateral and single-sided deaf cochlear implantees. Ear and Hearing, 37(3), 289–302.

Bernstein, L. R., & Trahiotis, C. (2002). Enhancing sensitivity to interaural delays at high frequencies by using “transposed stimuli.” The Journal of the Acoustical Society of America, 112(3 Pt. 1), 1026–1036.

Bertoli, S., Staehelin, K., Zemp, E., Schindler, C., et al. (2009). Survey on hearing aid use and satisfaction in Switzerland and their determinants. International Journal of Audiology, 48(4), 183–195.

Bingabr, M., Espinoza-Varas, B., & Loizou, P. C. (2008). Simulating the effect of spread of excitation in cochlear implants. Hearing Research, 241(1–2), 73–79.

Bronkhorst, A. W. (2000). The cocktail party phenomenon: A review of research on speech intelligibility in multiple-talker conditions. Acta Acustica united with Acustica, 86(1), 117–128.

Buss, E., Pillsbury, H. C., Buchman, C. A., Pillsbury, C. H., et al. (2008). Multicenter U.S. bilateral MED-EL cochlear implantation study: speech perception over the first year of use. Ear and Hearing, 29(1), 20–32.

Byrne, D., & Noble, W. (1998). Optimizing sound localization with hearing aids. Trends in Amplification, 3(2), 51–73.

Chadha, N. K., Papsin, B. C., Jiwani, S., & Gordon, K. A. (2011). Speech detection in noise and spatial unmasking in children with simultaneous versus sequential bilateral cochlear implants. Otology & Neurotology, 32(7), 1057–1064.

Cherry, E. C. (1953). Some experiments on the recognition of speech, with one and with two ears. The Journal of the Acoustical Society of America, 25, 975–979.

Ching, T. Y., van Wanrooy, E., Dillon, H., & Carter, L. (2011). Spatial release from masking in normal-hearing children and children who use hearing aids. The Journal of the Acoustical Society of America, 129(1), 368–375.

Chung, K. (2004). Challenges and recent developments in hearing aids. Part I. Speech understanding in noise, microphone technologies and noise reduction algorithms. Trends in Amplification, 8(3), 83–124.

Churchill, T. H., Kan, A., Goupell, M. J., & Litovsky, R. Y. (2014). Spatial hearing benefits demonstrated with presentation of acoustic temporal fine structure cues in bilateral cochlear implant listeners. The Journal of the Acoustical Society of America, 136(3), 1246–1256.

Cleary, M., Pisoni, D. B., & Geers, A. E. (2001). Some measures of verbal and spatial working memory in eight- and nine-year-old hearing-impaired children with cochlear implants. Ear and Hearing, 22(5), 395–411.

Cord, M. T., Surr, R. K., Walden, B. E., & Olson, L. (2002). Performance of directional microphone hearing aids in everyday life. Journal of the American Academy of Audiology, 13(6), 295–307.

Cox, R. M., Schwartz, K. S., Noe, C. M., & Alexander, G. C. (2011). Preference for one or two hearing aids among adult patients. Ear and Hearing, 32(2), 181–197.

Dillon, H. (2012). Hearing aids. New York: Thieme.

Dittberner, A. B., & Bentler, R. A. (2007). Predictive measures of directional benefit. Part 1: Estimating the directivity index on a manikin. Ear and Hearing, 28(1), 26–45.

Durlach, N. I., Mason, C. R., Shinn-Cunningham, B. G., Arbogast, T. L., et al. (2003). Informational masking: Counteracting the effects of stimulus uncertainty by decreasing target-masker similarity. The Journal of the Acoustical Society of America, 114(1), 368–379.

Eapen, R. J., Buss, E., Adunka, M. C., Pillsbury, H. C., 3rd, & Buchman, C. A. (2009). Hearing-in-noise benefits after bilateral simultaneous cochlear implantation continue to improve 4 years after implantation. Otology & Neurotology, 30(2), 153–159.

Edwards, B. (2007). The future of hearing aid technology. Trends in Amplification, 11(1), 31–46.

Festen, J. M., & Plomp, R. (1986). Speech-reception threshold in noise with one and two hearing aids. The Journal of the Acoustical Society of America, 79(2), 465–471.

Freyman, R. L., Balakrishnan, U., & Helfer, K. S. (2008). Spatial release from masking with noise-vocoded speech. The Journal of the Acoustical Society of America, 124(3), 1627–1637.

Garadat, S. N., Litovsky, R. Y., Yu, G., & Zeng, F.-G. (2009). Role of binaural hearing in speech intelligibility and spatial release from masking using vocoded speech. The Journal of the Acoustical Society of America, 126(5), 2522–2535.

Garadat, S. N., Litovsky, R. Y., Yu, G., & Zeng, F.-G. (2010). Effects of simulated spectral holes on speech intelligibility and spatial release from masking under binaural and monaural listening. The Journal of the Acoustical Society of America, 127(2), 977–989.

Geers, A., Brenner, C., & Davidson, L. (2003). Factors associated with development of speech perception skills in children implanted by age five. Ear and Hearing, 24(1 Suppl.), 24S–35S.

Gordon, K. A., Jiwani, S., & Papsin, B. C. (2013). Benefits and detriments of unilateral cochlear implant use on bilateral auditory development in children who are deaf. Frontiers in Psychology, 4, 719.

Gordon, K. A., & Papsin, B. C. (2009). Benefits of short interimplant delays in children receiving bilateral cochlear implants. Otology & Neurotology, 30(3), 319–331.

Gordon, K. A., Wong, D. D. E., Valero, J., Jewell, S. F., et al. (2011). Use it or lose it? Lessons learned from the developing brains of children who are deaf and use cochlear implants to hear. Brain Topography, 24(3–4), 204–219.

Goupell, M. J. (2015). Interaural envelope correlation change discrimination in bilateral cochlear implantees: Effects of mismatch, centering, and onset of deafness. The Journal of the Acoustical Society of America, 137(3), 1282–1297.

Goupell, M. J., Kan, A., & Litovsky, R. Y. (2013). Mapping procedures can produce non-centered auditory images in bilateral cochlear implantees. The Journal of the Acoustical Society of America, 133(2), EL101–EL107.

Goupell, M. J., & Litovsky, R. Y. (2015). Sensitivity to interaural envelope correlation changes in bilateral cochlear-implant users. The Journal of the Acoustical Society of America, 137(1), 335–349.

Grimes, A. M., Mueller, H. G., & Malley, J. D. (1981). Examination of binaural amplification in children. Ear and Hearing, 2(5), 208–210.

Gstoettner, W., Franz, P., Hamzavi, J., Plenk, H., Jr., et al. (1999). Intracochlear position of cochlear implant electrodes. Acta Oto-Laryngologica, 119(2), 229–233.

Hawley, M. L., Litovsky, R. Y., & Culling, J. F. (2004). The benefit of binaural hearing in a cocktail party: effect of location and type of interferer. The Journal of the Acoustical Society of America, 115(2), 833–843.

Henkin, Y., Waldman, A., & Kishon-Rabin, L. (2007). The benefits of bilateral versus unilateral amplification for the elderly: Are two always better than one? Journal of Basic and Clinical Physiology and Pharmacology, 18(3), 201–216.

Hochmair, I., Nopp, P., Jolly, C., Schmidt, M., et al. (2006). MED-EL cochlear implants: State of the art and a glimpse into the future. Trends in Amplification, 10(4), 201–219.

Hornsby, B. W., & Ricketts, T. A. (2007). Effects of noise source configuration on directional benefit using symmetric and asymmetric directional hearing aid fittings. Ear and Hearing, 28(2), 177–186.

Ihlefeld, A., & Litovsky, R. Y. (2012). Interaural level differences do not suffice for restoring spatial release from masking in simulated cochlear implant listening. PLoS ONE, 7(9), e45296.