Abstract

Hearing assistance and restoration devices such as hearing aids and cochlear implants were originally designed for unilateral use to improve speech communications. However, the demands of understanding a conversation in noisy situations have led to these devices being increasingly prescribed bilaterally, in the hope that hearing-impaired listeners might be able to access the benefits of binaural hearing enjoyed by normal-hearing listeners. Although bilateral hearing with devices has led to some improvements compared with unilateral use, there is still a gap in performance between listening with assistive devices and normal hearing. This chapter first covers a brief history of hearing aids and cochlear implants to explain how some of the design choices motivated by the desire to improve unilateral speech communications have affected access to binaural cues. Research investigating binaural hearing abilities with current hearing aids and cochlear implants is reviewed, along with a discussion of the factors that affect bilateral hearing with devices.

Keywords

- Amplification

- Beamforming

- Bilateral

- Cochlear implants

- Compression

- Listening assistive devices

- Hearing aids

- Hearing assistance

- Hearing in noise

- Hearing restoration

- Sound processing

- Speech communication

- Unilateral

13.1 Hearing with Devices

13.1.1 Amplification of Sound with Hearing Aids

Hearing aids have gone through several major transformations. Acoustic devices (e.g., ear trumpets, hearing thrones), which used resonance to increase sound level, were first introduced in the seventeenth century. After electronic hearing aids were introduced in 1898, a series of advancements led to significant improvements (Ricketts et al. 2019). Specifically, the amount of amplification possible and the amplified frequency range (audible frequency bandwidth) were increased while at the same time distortion and instrument size were reduced. These advancements were largely made possible by implementing transistors (1952) and electret microphones (1961) into hearing aid design as well as the development and miniaturization of the balanced armature driver. Throughout much of this history, from ear trumpets through body-style hearing aids, sound was usually received at a single location (a hearing aid case or external microphone) and then routed to one or both ears. Until about the 1950s, essentially all electric hearing aids were body-worn devices. Specifically, body aids were relatively large and included a boxlike case containing the microphone and amplification stages. A receiver worn in the ear was attached to the case by a cord. These cases could be worn in a variety of positions, including on a cord around the neck, in a shirt pocket, or attached to another part of the user’s clothing.

The first use of ear-level amplification was behind-the-ear-style (BTE; see Table 13.1 for a list of abbreviations) hearing aids, which are still in use today. In modern BTE hearing aids, incoming sound is directed to the microphone through opening(s) in the top of the case (microphone ports). Within the hearing aid case, the input is then transduced into a digital form for processing. After processing and amplification, the signals are transduced back into an acoustic form and the output of the receiver is routed from the case through an attached earhook that is used primarily for retention (keeping the device secure on the ear). The sound is then directed to the ear through tubing and a custom-made earmold (typically vented) that terminates in the ear canal. As advances in electronic circuitry continued, manufacturers were able to further reduce the size of the hearing aid, thereby introducing custom in-the-ear (ITE) and in-the-canal (ITC) style devices in the 1960s. In these instruments, all electronics are contained within a plastic case that is small enough to fit in the concha bowl and ear canal. Typically, an ear shell is manufactured using accurate impressions of the patient’s ear so that the fit is customized to the individual. The microphone port(s) is in a faceplate, which is attached to the top of the ear shell and is typically the only part of the device that is visible when the hearing aid is placed correctly in the ear. Further miniaturization led to the introduction of the completely-in-canal (CIC) style that is fitted entirely inside the ear canal. Because the faceplate and microphone port openings are typically recessed slightly inside the ear canal in CIC instruments, this is the position in which sound is received.

The introduction and increasing popularity of mini-BTE products have greatly reduced the use of custom products. As the name suggests, these hearing aids are significantly smaller than traditional BTE styles, but the case still fits behind the ear. Like the traditional BTE style, the microphone receives sound on the top of the case. In addition to a smaller case, either a very thin tube is used to transmit amplified sound to the ear or a thin plastic-covered wire runs from the hearing aid case to an external receiver placed in the ear canal. This latter style, commonly referred to as a receiver-in-canal (RIC; Fig. 13.1), is currently the most popular hearing aid style, accounting for about 66% of all hearing aids sold (Ricketts et al. 2019). Although current mini-BTE hearing aids can be fitted with custom “eartips,” they also differ from other styles in that they are most often fitted with one of several sizes and configurations of noncustom eartips. The BTE form factor has 80% of the market (mostly mini-BTE styles). The small case and thin tube or wire of the mini-BTE style offer excellent cosmetics on the ear, whereas the use of noncustom eartips precludes the need for an ear impression, thereby reducing patient visits and improving efficiency.

Although diotic presentation (same sound presented to both ears) occurred even in the era of ear trumpets, it was not until ear-level devices became popular that amplified sound, sampled at the location of each ear, was delivered to the two ears individually (bilateral amplification). Even then, it took some time before research identifying the bilateral benefits informed clinical practice. Up to the early 1970s, it was still common for audiologists to recommend a single hearing aid. Indeed, some professionals argued that dispensing bilateral hearing aids was simply an attempt to double profit. A 1975 ruling by the Federal Trade Commission supported this belief by requiring professionals who wished to dispense bilateral amplification to disclose that there were no benefits associated with a second hearing aid. The importance for children to be fitted bilaterally, however, was beginning to be widely accepted about this time. However, it was not until the 1990s that the majority of people in the United States were fitted bilaterally. About 80% of the US fittings were bilateral in 2018.

It is important to distinguish the terms “unilateral and bilateral,” which are used to describe wearing devices on one or both ears, from the terms “monaural and binaural,” which are used to describe hearing with one or both ears. As described in this chapter, devices and/or processing can distort naturally occurring binaural cues. Furthermore, in the case of hearing aids, it is common for some binaural information to be audible when an individual is fitted with only one instrument (unilaterally). Even in cases where processing attempts to restore binaural cues, considerable distortion often remains. Therefore, unlike much of the rest of this book, this chapter focuses on bilateral versus unilateral performance with devices and, when appropriate, compares these outcomes with binaural hearing.

13.1.2 Recovery of Hearing with Cochlear Implants

Although hearing aids attempt to improve the access to sound for patients with some usable acoustic hearing, the motivation behind the creation of cochlear implants was to restore speech understanding to profoundly hearing-impaired patients. Cochlear implants bypass many of the peripheral components of the auditory system (outer ear, tympanic membrane, middle ear, and cochlea) and provide a sense of hearing through electrical stimulation of the auditory nerves near the cochlea. There are two main components to a cochlear implant: (1) an external sound processor and (2) an array of electrodes surgically implanted into the cochlea structure.

The feasibility of using electric stimulation to provide hearing to deaf patients was first demonstrated by Djourno and Eyriès in 1957. By implanting induction coils into a bilaterally deaf patient, a sense of audition was achieved whereby the patient was able to hear some environmental sounds and several words but was unable to understand speech. In the following two decades, further experiments were sparsely conducted around the globe with electrically stimulated hearing but with mixed success (see Eisen 2006; Wilson 2019 for detailed historical reviews). The legitimacy of cochlear implants as a possible device for restoring hearing came in 1975 with a study sponsored by the National Institutes of Health. Thirteen patients were implanted with a single-channel device and underwent extensive psychoacoustic, audiological, and vestibular testing. The study report concluded that single-channel devices could not support speech understanding but aided in lipreading and speech production and enhanced the quality of living for patients (Bilger et al. 1977).

A major turning point for cochlear implants came in the 1980s, when open-set speech recognition was reported with multichannel devices (Clark et al. 1981). These devices used an array of electrodes to stimulate the auditory nerve fibers at different places in the cochlea to take advantage of the frequency-to-place mapping of the cochlea. The incoming signal was divided into different channels by passing it through a series of band-pass filters, and the output of each filter was sent to a different electrode along the array (see Fig. 13.2A). The electrodes located from the base to the apex were stimulated with high- to low-frequency information, respectively. Subsequent reports showed significant improvements in speech understanding performance of multichannel over single-channel devices (Gantz et al. 1988; Cohen et al. 1993).
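The channel-to-electrode allocation described above can be illustrated with a minimal sketch. It assumes logarithmically spaced band edges, a 200–8000 Hz analysis range, and an electrode numbering in which electrode 1 is the most apical contact; these are illustrative assumptions only, because actual filter spacing, frequency range, and electrode numbering differ across manufacturers and fittings. The function names are hypothetical.

```python
def band_edges(n_channels, f_low=200.0, f_high=8000.0):
    """Return n_channels + 1 logarithmically spaced band edges in Hz.

    Log spacing and the default frequency range are assumptions made
    for illustration, not a description of any clinical processor.
    """
    ratio = f_high / f_low
    return [f_low * ratio ** (i / n_channels) for i in range(n_channels + 1)]


def channel_map(n_channels, f_low=200.0, f_high=8000.0):
    """Pair each analysis band with an electrode index.

    Electrode 1 is taken to be the most apical contact (hypothetical
    numbering), so it receives the lowest-frequency band; the highest
    electrode number is the most basal and receives the highest band.
    """
    edges = band_edges(n_channels, f_low, f_high)
    return [(electrode, edges[electrode - 1], edges[electrode])
            for electrode in range(1, n_channels + 1)]


def electrode_for_frequency(freq_hz, mapping):
    """Return the electrode whose band contains freq_hz, or None."""
    for electrode, lo, hi in mapping:
        if lo <= freq_hz < hi:
            return electrode
    return None


# Four channels spanning 200-3200 Hz give one band per octave.
mapping = channel_map(4, f_low=200.0, f_high=3200.0)
for electrode, lo, hi in mapping:
    print(f"electrode {electrode}: {lo:.0f}-{hi:.0f} Hz")
```

In this sketch a 1000-Hz component falls in the third band and would be routed to the third electrode from the apex, whereas components outside the analysis range are not assigned to any electrode.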

Unilateral use of multichannel cochlear implants has enabled many patients to recover usable speech understanding (>80% correct) without lipreading in quiet situations (Firszt et al. 2004; Wilson and Dorman 2007). However, there is a large variability in outcomes among the implanted population, and understanding speech in noise with only one cochlear implant is still very challenging. To improve outcomes in understanding speech in noisy environments, bilateral implantation has become more common since the early 2000s (Peters et al. 2010).

13.2 Bilateral Hearing with Devices

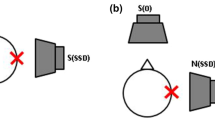

Several studies have demonstrated significantly better outcomes when listening with two devices rather than using one. This advantage, commonly termed bilateral benefit, has been demonstrated in a number of different domains, including speech recognition, listening effort, spatial/localization abilities, and sound quality. With regard to speech recognition, bilateral benefits may result from the head shadow effect, binaural squelch, and binaural redundancy (Fig. 13.3; see also Culling and Lavandier, Chap. 8). The head shadow effect results from a physical improvement in the signal-to-noise ratio (SNR) and is essentially a monaural phenomenon. That is, improved performance due to a reduction in head shadow effects is primarily associated with conditions in which the SNR is different at the two ears. In contrast to the head shadow effect, diotic summation (also known as binaural redundancy) and binaural squelch are considered to be binaural effects that are based on complex neural processing (Hawley et al. 1999, 2004). Binaural squelch may occur when the interaural spectral or temporal differences of the target speech signal are different from those of the background noise (as occurs, e.g., when the target speech signal and background noise come from different spatial positions in the horizontal plane). Binaural auditory processing in this situation can result in an effective improvement in the SNR relative to the actual SNR measured at either ear (Zurek 1993). In contrast, diotic summation refers to the advantage that results from having redundant (Ching et al. 2006) or complementary (Kokkinakis and Pak 2014) information at the two ears and can lead to improved speech recognition in quiet as well as in the presence of noise. These effects can combine to improve speech recognition by 3 dB or more when considering one versus two ears. However, less benefit has typically been observed in hearing-impaired listeners.
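Because the head shadow effect is a purely physical change in the SNR, it can be illustrated with simple arithmetic. The sketch below assumes speech from the front (equal power at both ears) and noise from the right, with the head attenuating the noise power at the left ear by a factor of four. The power values and function names are hypothetical and chosen only to make the calculation concrete; they are not measured data.

```python
import math


def snr_db(speech_power, noise_power):
    """Signal-to-noise ratio in dB from linear power values."""
    return 10.0 * math.log10(speech_power / noise_power)


def head_shadow_advantage_db(snr_left_db, snr_right_db, aided_ear="right"):
    """SNR gain from access to the better ear versus one fixed ear.

    This captures only the monaural head shadow component; binaural
    squelch and redundancy depend on neural processing and are not
    modeled here.
    """
    unilateral = snr_right_db if aided_ear == "right" else snr_left_db
    return max(snr_left_db, snr_right_db) - unilateral


# Illustrative (not measured) linear power values.
speech = 1.0        # speech from the front: same power at both ears
noise_right = 1.0   # noise source on the right side
noise_left = 0.25   # head attenuates the noise reaching the left ear

snr_left = snr_db(speech, noise_left)
snr_right = snr_db(speech, noise_right)
advantage = head_shadow_advantage_db(snr_left, snr_right, aided_ear="right")
print(f"left {snr_left:.1f} dB, right {snr_right:.1f} dB, "
      f"advantage {advantage:.1f} dB")
```

Under these assumed values, a listener with a device only on the right ear would gain about 6 dB of SNR from a second device on the left, whereas a listener whose single device was already on the left would gain nothing from head shadow alone.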

13.2.1 Bilateral Benefits with Hearing Aids

As described by Hartmann (Chap. 2), listeners with normal hearing use binaural cues to assist in localization, particularly for localization in the horizontal plane. External sounds may reach the two ears of a listener at slightly different times or levels depending on the angle of arrival. For example, if a loudspeaker is placed on the right side of the head (90° azimuth), sound will reach the right ear before the left ear (because the right ear is closer to the sound), and some of the sound will reach the right ear at a higher level than that at the left ear (because the head will block some of the high-frequency energy as the sound travels around to the left ear). These time and level differences are referred to as interaural time differences (ITDs) and interaural level differences (ILDs), respectively, and they provide crucial information for localizing sounds in the horizontal plane. Specifically, an ITD provides an important cue for the localization of lower frequency signals (<1500 Hz) and an ILD provides an important cue for the localization of higher frequency signals (>2000 Hz). These interaural differences are used in conjunction with monaural high-frequency spectral information (>5000 Hz), which is used for front-back and vertical resolution (Slattery and Middlebrooks 1994; Blauert 1997). The low-frequency cues are especially important to listeners with high-frequency hearing loss for whom the high-frequency ILDs and monaural spectral cues may be inaudible (Neher et al. 2009; Jones and Litovsky 2011).
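The dependence of the ITD on the angle of arrival can be sketched with the classic spherical-head (Woodworth) approximation, which is not part of this chapter but is a commonly used textbook model. The head radius (8.75 cm) and speed of sound (343 m/s) are assumed typical values, the formula is applied only for azimuths between 0° and 90°, and the function names are hypothetical. The ILD helper simply expresses a ratio of sound pressures at the two ears in decibels; it does not model the frequency-dependent head shadow.

```python
import math


def woodworth_itd(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate ITD in seconds for a rigid spherical head.

    Uses ITD = (r / c) * (theta + sin(theta)), with theta the azimuth
    in radians. Valid here for 0 to 90 degrees only.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("this sketch covers azimuths from 0 to 90 degrees")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))


def ild_db(near_ear_rms, far_ear_rms):
    """ILD in dB from RMS sound pressures at the near and far ears."""
    return 20.0 * math.log10(near_ear_rms / far_ear_rms)


for azimuth in (0, 30, 60, 90):
    itd_us = woodworth_itd(azimuth) * 1e6
    print(f"{azimuth:2d} deg: ITD ~ {itd_us:.0f} microseconds")
```

With these assumed parameters, the model gives an ITD of zero for a source straight ahead and roughly 650 μs for a source at 90° azimuth, which indicates the range of timing differences that a device must preserve for the low-frequency cue to remain usable.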

Although hearing aids can improve audibility, they generally do not improve localization for listeners with hearing loss (Köbler and Rosenhall 2002; Van den Bogaert et al. 2006). In addition, one of the hallmarks of aided localization is high intersubject variability. Some listeners exhibit aided localization that is quite poor, whereas a small percentage achieve performance in the normal range. Average aided-localization performance, however, is typically significantly poorer than that found in listeners with normal hearing (Van den Bogaert et al. 2011). Figure 13.4 provides a comparison of localization performance between listening with binaural hearing and listening bilaterally with hearing aids. Although performance remains outside the normal range on average, bilateral fittings generally allow for better localization than unilateral fittings, as indicated by subjective reports of improved localization (Köbler et al. 2001; Boymans et al. 2009) and laboratory tests of horizontal auditory localization (Byrne et al. 1992; Boymans et al. 2008). For example, Köbler and Rosenhall (2002) tested experienced hearing aid users with their personal hearing aids. They presented speech from one of eight loudspeakers, and the other seven loudspeakers presented background noise with the same long-term spectrum as the speech signal at a +4 dB SNR overall. Listeners were instructed to identify the loudspeaker with the speech signal and also to repeat the speech presented. Results with bilateral devices indicated that the localization ability improved approximately 10 percentage points compared with unilateral fittings and was returned to unaided performance levels.

For many listeners with bilateral hearing loss, there is some residual audibility for speech in the unaided ear. This audibility provides the potential for at least some binaural speech recognition benefits when fitted with unilateral amplification. However, adding a second hearing aid can still further improve speech recognition in specific listening situations, even when the SNR is similar at the two ears. Because there is the potential to take advantage of both binaural squelch and binaural redundancy, it is perhaps not surprising that consistent bilateral benefits for speech recognition are most often reported in studies that included speech and noise sources that were spatially separated (Hawkins and Yacullo 1984; Freyaldenhoven et al. 2006). For example, bilateral benefits of approximately 3 dB have been reported in hearing aid users by several investigators who used a speech source in front of the listener and uncorrelated noise sources surrounding or to the sides of the listener (Ricketts 2000a; Boymans et al. 2008). When speech and noise are colocated (i.e., the potential for binaural redundancy as shown in Fig. 13.3), a smaller percentage of listeners exhibit benefits. For example, Walden and Walden (2005) reported that only 11% of hearing aid listeners demonstrated significant bilateral benefits for sentences presented in colocated noise. One study has demonstrated that a bilateral hearing aid fitting can actually be worse than a unilateral hearing aid fitting in specific listening conditions (Henkin et al. 2007). In that study, speech was presented from a loudspeaker in front of the listener and noise was presented from behind. Even when speech and noise are spatially separated, significant bilateral benefits are not always found. Indeed, several investigators have reported similar speech recognition performance for unilateral and bilateral hearing aid fittings (Hedgecock and Sheets 1958; Punch et al. 1991). Potential factors contributing to the variability in speech recognition outcomes are described in Sects. 13.3.4 through 13.3.6.

Another domain in which bilateral hearing with hearing aids may be beneficial is “listening effort,” which is often described as the cognitive resources required for understanding speech (Fraser et al. 2010; Sugawara and Nikaido 2014). Listening effort has been shown to increase in adverse or complex listening situations (Murphy et al. 2000; Picou et al. 2013) and can be improved by hearing aids and the activation of some types of advanced sound processing (Sarampalis et al. 2009; Picou et al. 2017). Although factors that improve speech recognition also generally decrease the listening effort, speech recognition and listening effort are likely distinct constructs (Strand et al. 2018). Therefore, it is possible that even if the bilateral benefits for speech recognition are limited, bilateral hearing aid use could decrease the listening effort. Indeed, several researchers have reported subjective benefits of bilateral fittings on listening effort (Noble and Gatehouse 2006; Most et al. 2012), which can be present even when speech recognition is at the ceiling (Rennies and Kidd 2018). However, the potential bilateral benefits for objective listening effort have not yet been demonstrated.

Subjective bilateral benefits have been consistently reported in laboratory studies within the dimensions of sound quality, including clarity (i.e., clear versus muffled), loudness (i.e., soft versus loud), and balance (i.e., equal level at both ears versus unequal levels) (Balfour and Hawkins 1992; Naidoo and Hawkins 1997). Subjective preferences also generally favor bilateral fittings in real-world trials (Boymans et al. 2008; Cox et al. 2011). For example, the majority of respondents reported better speech recognition and overall sound quality with bilateral hearing aids in a survey of experienced hearing aid users (Köbler et al. 2001). In addition, most respondents reported that bilateral hearing aid use was beneficial when attending a lecture, in group conversations, while listening to music, and while watching television. Loudness is one sound-quality dimension for which bilateral fittings are sometimes not favored and, instead, are rated less comfortable than unilateral fittings or are rated too loud (Boymans et al. 2008; Cox et al. 2011).

Despite tendencies for higher subjective ratings when using two hearing aids compared with using just one, the number of patients who ultimately choose to be fit bilaterally is not consistently high. Researchers who have examined whether listeners prefer one versus two hearing aids have reported that preference for two hearing aids ranges from approximately 30–55% in field studies (Erdman and Sedge 1981; Vaughan-Jones et al. 1993) to approximately 70–95% in retrospective studies (Boymans et al. 2009; Bertoli et al. 2010). When listeners are fitted with their preferred fitting type (unilateral or bilateral), hearing aid outcomes have generally been shown to be similar on indices of use, satisfaction, benefit, and residual handicap (Walden and Walden 2004; Boymans et al. 2009), although some studies have shown improved outcomes on these dimensions in bilateral hearing aid users (Bertoli et al. 2010; Cox et al. 2011). These mixed preferences and outcomes are perhaps not surprising given the mixed benefits measured in the laboratory.

13.2.2 Bilateral Benefits with Cochlear Implants

Around 2000, when bilateral benefits were being observed with two hearing aids, some investigators began exploring whether bilateral implantation would improve outcomes (Tyler et al. 2002; van Hoesel 2004). Experiments comparing performance between unilateral and bilateral cochlear implantation have generally shown improved speech understanding in noise (Litovsky et al. 2009; Dunn et al. 2010) and greater sound localization abilities (Grantham et al. 2007; Dorman et al. 2016). Inspired by the results of bilateral implantation, some researchers have begun implanting the deaf ear of patients with some residual hearing or even normal hearing in the contralateral ear. For some of these patients, it is possible to wear a hearing aid in the impaired ear. The combination of electric and acoustic hearing in these bimodal hearing listeners has also provided some advantages in speech understanding in noise (Mok et al. 2006; Kokkinakis and Pak 2014) and sound localization abilities (Ching et al. 2004; Firszt et al. 2018). Furthermore, since around 2008, a growing number of single-sided deaf patients have been provided with a cochlear implant in the deaf ear as a treatment for tinnitus (Van de Heyning et al. 2008; Arts et al. 2012). Although it is unclear why cochlear implantation alleviates debilitating tinnitus, the addition of a cochlear implant appears to improve sound localization abilities to a degree similar to that in bilateral cochlear implant users (Dillon et al. 2017; Litovsky et al. 2018), but the benefits for listening to speech in noise are less comparable (Bernstein et al. 2017; Döge et al. 2017).

Although bilateral listening with cochlear implants has improved outcomes, there is still a gap in performance between normal-hearing listeners and cochlear implant users. When listening to speech in noise, the benefit of adding a cochlear implant is usually due to the benefit of an acoustic head shadow (e.g., Litovsky et al. 2009; Gartrell et al. 2014). However, when trying to understand speech in the presence of noise or multiple talkers that surround the listener, the benefits of listening bilaterally with a cochlear implant can be quite small (see Fig. 13.5).

For sound localization, the gap in performance between normal-hearing listeners and cochlear implant users is due to a reliance on ILDs for locating sounds (see Fig. 13.4). This is in contrast to normal-hearing listeners who predominantly rely on ITDs for the precise localization of sounds (Wightman and Kistler 1992; Macpherson and Middlebrooks 2002). However, at least for bilateral cochlear implant users, the reliance on ILDs for sound localization (Grantham et al. 2007; Aronoff et al. 2010) is not due to a lack of sensitivity to ITDs with electrical stimulation. Psychophysical studies conducted using specialized research processors have found that bilateral cochlear implant users are sensitive to ITDs presented via electrical pulses (see Kan and Litovsky 2015; Laback et al. 2015 for detailed reviews). These studies have shown that bilateral cochlear implant users are sensitive to ITDs at low pulse rates (van Hoesel et al. 2009; Litovsky et al. 2012), in amplitude-modulated high-rate pulse trains (Noel and Eddington 2013; Ihlefeld et al. 2014), or in aperiodic high-rate pulse trains (Laback and Majdak 2008; Srinivasan et al. 2018). However, sensitivity to ITDs is typically poorer than that in normal-hearing listeners. The median ITD just-noticeable difference for normal-hearing listeners presented with pure tones (frequency range of 500–1000 Hz) is around 11.5 μs, whereas in bilateral cochlear implant users, the median just-noticeable difference is around 144 μs for low-rate (≤100 pulses per second [pps]) electrical pulse trains (Laback et al. 2015). Although ITD sensitivity with electrical stimulation has been observed in the laboratory, there are many factors that hinder access to usable ITDs when listening with modern cochlear implant processors. These factors are discussed in Sect. 13.3.

13.3 Factors Affecting Binaural Hearing with Devices

A hallmark of bilateral hearing with devices is the substantial variability in outcomes across and within studies. The reasons for the variability may be attributed to numerous factors including sound acquisition and delivery, signal processing, and individual variations in the degree of hearing loss and contralateral interference for speech. Although specific details of the design and signal processing of hearing aids and cochlear implants are far beyond the scope of this chapter, it is important to understand how these devices can intentionally or unintentionally modify signals resulting in changes to acoustic cues important for binaural processing. For the interested reader, detailed explanations of the design of hearing aids can be found in Kates (2008), Lyons (2010), and Ricketts et al. (2019) and of cochlear implants in Zeng et al. (2008).

13.3.1 Sound Acquisition and Delivery

In hearing devices, the acquisition of sound in the listener’s environment is typically accomplished using a microphone. The electronic signal at the output of the microphone is intended to mimic the pressure changes of sounds in the environment at the microphone location. The electret or microelectromechanical system (MEMS) microphones used in modern devices are characterized by a relatively flat and broad frequency response. Because modern microphones are relatively transparent acoustically (i.e., flat-frequency response and nearly zero-added delay or distortion), distortion of natural binaural cues in sound acquisition is mainly related to the microphone position, which varies by style as described in Sect. 13.1.1. It follows that the nearer the microphones are to the natural position of sound acquisition (the tympanic membranes), the less distortion of the natural binaural cues.

Like hearing aids, the first ear-level cochlear implant processors were BTE styles with all the signal-processing hardware contained within the external processor. A transcutaneous radio frequency link, typically located above and behind the pinna, delivers power and communication of stimulus information to the implanted electrode array. The link is held in place by a magnet implanted just under the skin. Although BTE styles are still common, cochlear implant manufacturers have made a shift toward providing button-shaped processors that are magnetically held in place on the external side of the radio frequency link. These button-shaped processors shift the microphone location from behind the ear to a location that is above and behind the pinna, although the consequences of this change in location are currently unknown.

The specific location of the microphone port affects not only the frequency response of sounds arriving from specific angles but also the relative sensitivity to sound as a function of the angle of arrival. Changes in angular sensitivity to sound can have a direct effect on the SNR that a listener experiences in realistic listening environments. The relative sensitivity for sounds arriving from the front versus all other angles of arrival is quantified by the directivity index (DI). In environments in which the listener is surrounded by noise and speech arrives from the front, changes in speech recognition in noise performance are linearly related to changes in the DI after correcting for audibility (Ricketts et al. 2005). It is notable that many of the studies with hearing aids that demonstrated a lack of bilateral benefits for speech recognition are older (e.g., Jerger and Dirks 1961; Punch et al. 1991). As a result, all of these studies used traditional BTE hearing aids with omnidirectional microphones, often placed in a suboptimal location. Specifically, the microphone ports were often on the back or even the bottom of the BTE case. This location can result in a negative DI because the hearing aid will be more sensitive to sounds from behind than from the front because the pinna acts to provide some attenuation for higher frequency sounds arriving from in front of the listener (Ricketts et al. 2019). Therefore, although these BTE devices improved audibility, they also decreased the SNR compared with unaided listening. Audibility could be improved by a single hearing aid of this type. However, the addition of a second hearing aid does not improve audibility and further decreases the SNR. Modern hearing aids do not use this suboptimal location and often also include directional microphones or advanced microphone array technologies, which may account for the increased consistency of bilateral speech recognition benefits measured in newer studies.
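The DI can be illustrated numerically as the ratio, in decibels, of the sensitivity to sound from the front to the sensitivity averaged over all directions. The minimal sketch below assumes idealized sensitivity patterns that are symmetric about the front-back axis and measured in a free field; real hearing aid patterns on the head are frequency dependent and not axisymmetric. The function names and the "rear-biased" pattern are hypothetical and are included only to show how a microphone that is more sensitive to the rear yields a negative DI.

```python
import math


def directivity_index_db(pattern, n_steps=10000):
    """DI in dB for an axisymmetric amplitude pattern.

    pattern(theta) gives amplitude sensitivity at angle theta (radians)
    from the front. The mean power over the sphere is
    0.5 * integral of pattern(theta)**2 * sin(theta) from 0 to pi,
    evaluated here with the midpoint rule.
    """
    d_theta = math.pi / n_steps
    integral = 0.0
    for i in range(n_steps):
        theta = (i + 0.5) * d_theta
        integral += pattern(theta) ** 2 * math.sin(theta) * d_theta
    mean_power = integral / 2.0
    on_axis_power = pattern(0.0) ** 2
    return 10.0 * math.log10(on_axis_power / mean_power)


def omnidirectional(theta):
    """Equal sensitivity in all directions."""
    return 1.0


def cardioid(theta):
    """Idealized front-facing cardioid with a null directly behind."""
    return 0.5 + 0.5 * math.cos(theta)


def rear_biased(theta):
    """Hypothetical pattern twice as sensitive to the rear as the front."""
    return 0.75 - 0.25 * math.cos(theta)


for name, pattern in (("omnidirectional", omnidirectional),
                      ("cardioid", cardioid),
                      ("rear-biased", rear_biased)):
    print(f"{name}: DI = {directivity_index_db(pattern):.2f} dB")
```

Under these assumptions, the omnidirectional pattern gives a DI of 0 dB, the idealized cardioid gives about 4.8 dB, and the rear-biased pattern gives a negative DI, consistent with the reduction in SNR described above for poorly placed microphone ports.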

Unfortunately, current hearing aids have not addressed another issue with BTE microphone port placement. Specifically, because the BTE microphone location is above the pinna, any advantages related to pinna diffraction effects (e.g., monaural spectral cues) will be eliminated. However, access to these cues for many listeners is likely limited regardless of hearing aid style. The amount of gain and subsequent audible bandwidth is limited by the hearing aid receiver. Audible bandwidth depends on many factors, including the power needed and the magnitude of hearing loss in the lowest and highest frequencies. Typical receivers are able to deliver amplified low frequencies down to 100–300 Hz. However, listeners with normal or near normal low-frequency hearing may be able to access even the lowest frequencies, unamplified, as long as there is adequate venting. In the high frequencies, even receivers that are considered “wideband” typically have a limited output available above 7–8 kHz. For listeners with severe-to-profound hearing loss, high-frequency audibility is often limited to only 4–5 kHz or lower. Instead of a receiver, the output stage may include a vibrating oscillator that stimulates the cochlea through bone conduction or a variety of other specialized transducers, including those associated with middle ear implants. In some cases, the bandwidth of these oscillators is considerably smaller than that delivered by traditional receivers, although the audible bandwidth is equivalent or even broader in some devices.

Limiting bandwidth reduces access to monaural spectral cues, which may be one reason why hearing aid wearers generally exhibit poorer than normal localization in the vertical plane, as described in Sect. 13.2.1. Access to extended high frequencies (from 5 to approximately 10 kHz in modern instruments) has also been shown to improve speech recognition for spatially separated speech in noise (Levy et al. 2015). Although benefits were larger in listeners with normal hearing (1.3–3.0 dB), they were still present in listeners with impaired hearing (0.5–1.3 dB). Therefore, improving access to this extended high-frequency information in hearing aid wearers still has the potential to slightly improve binaural outcomes.

In cochlear implants, one manufacturer (Advanced Bionics) provides the option of having an adapter that allows the microphone input to be located close to the entrance of the ear canal. A small handful of studies have shown some benefits for speech understanding by having the microphone located in this position compared with that behind the ear (Gifford and Revit 2010; Kolberg et al. 2015). However, the benefits of having microphones in the ears may still be limited. Jones et al. (2016) investigated the impact of microphone position on horizontal-plane sound localization in the frontal hemisphere in cochlear implant users. By measuring individualized head-related transfer functions (HRTFs) of bilateral cochlear implant users for microphones positioned in the ear, behind the ear, and on the shoulders and then using the HRTFs to generate virtual stimuli (Carlile 1996), they found no significant improvement in localization performance with in-the-ear microphones. In addition, frequencies above 8 kHz are typically not available with cochlear implants, which would limit their ability to use high-frequency pinna-related spectral cues for front-back discrimination and vertical plane localization (Majdak et al. 2011). However, even if high-frequency spectral cues were available, it is likely that current spread would limit the sensitivity to spectral profile differences (Goupell et al. 2008).

A second factor related to sound acquisition that is important for hearing aids, but not for cochlear implants, is venting. All hearing aids or earmolds allow for at least a small amount of sound to leak out of and into the residual ear canal space. The space that allows sound to pass around the borders of the hearing aid/earmold in the concha and ear canal is referred to as a slit leak. Venting simply refers to the intentional process of increasing the amount of this leakage, usually by creating an additional sound channel. Because venting provides a pathway for external sounds to leak into the ear, these sounds may be audible in listeners with normal or near normal hearing in some frequency ranges. This may lead to audible natural binaural cues that could improve binaural outcomes. However, there is also the potential for incongruent cues between the same sound being accessed naturally and a delayed version of the sound after hearing aid processing and amplification, as described in Sect. 13.3.2.1. Access to natural acoustic sounds by hearing aid wearers will depend on the sound level, the magnitude of venting, and the degree of residual hearing. Most commonly, it will occur in hearing aid wearers that have little or no low-frequency hearing loss because they are also typically fitted with large vents.

13.3.2 Signal Processing

The goal of signal processing in hearing aids and cochlear implants has been to provide understandable speech information. However, the two device types are very different because of the mode of signal delivery to the patients. In hearing aids, signal processing aims to provide amplification at frequencies where a patient has difficulty hearing. In contrast, a cochlear implant has to convert the acoustic signal into an electrical code that the brain can understand as speech. Hence, there are different issues associated with how signal processing affects binaural hearing abilities with each device.

13.3.2.1 Hearing Aids

In analog hearing aids, the continuously varying voltage at the microphone output is filtered, amplified, and delivered to the receiver coil where it is transduced back into acoustic sound pressure. This process has a low latency, but signal modifications are quite limited. However, nearly all modern hearing aids are digital and the electrical output of the microphone is converted to a string of representative numbers. The process of obtaining a digital representation of sound through sampling and quantization is called analog-to-digital (A/D) conversion. Sampling is the process of measuring the signal amplitude at discrete points in time. Quantifying amplitude (or change in amplitude) through the assignment of numeric values at discrete sample intervals is a process referred to as quantization. Simple-to-complex signal modifications can then be achieved by applying mathematical functions to this digital representation of the input sound. In addition, the incoming signal can be analyzed and different functions can be applied for different inputs. Groups of mathematical functions with a combined goal are commonly referred to as digital algorithms or just algorithms. Together, these algorithms when applied to digital representations of sound are referred to as digital signal processing (DSP) or just processing. After applying the desired DSP, the digital signal is converted back into analog form for delivery to the hearing aid wearer.
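The two steps of A/D conversion described above can be sketched in a few lines. This is a minimal illustration only: the 16-kHz sampling rate, the 16-bit word length, the test tone, and the function names `sample_sine` and `quantize` are assumptions chosen for the example and do not describe any particular hearing aid.

```python
import math

def sample_sine(freq_hz, fs_hz, n_samples, amplitude=1.0):
    """Sampling: measure the signal amplitude at discrete points in time."""
    return [amplitude * math.sin(2.0 * math.pi * freq_hz * n / fs_hz)
            for n in range(n_samples)]

def quantize(sample, n_bits, full_scale=1.0):
    """Quantization: assign each sample the nearest of 2**n_bits uniform levels."""
    step = 2.0 * full_scale / (2 ** n_bits)      # size of one quantization step
    code = round(sample / step)                  # nearest integer code
    lowest, highest = -(2 ** (n_bits - 1)), 2 ** (n_bits - 1) - 1
    code = max(lowest, min(highest, code))       # clip to the available codes
    return code * step

# Assumed example: a 1-kHz tone sampled at 16 kHz and stored with 16 bits.
analog = sample_sine(1000.0, 16000.0, 160, amplitude=0.9)
digital = [quantize(v, 16) for v in analog]
worst_error = max(abs(a - d) for a, d in zip(analog, digital))
print(f"largest quantization error: {worst_error:.2e}")
```

As long as the signal is not clipped, the quantization error never exceeds half a step, so each additional bit halves the worst-case error.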

Because patients do not typically have the same magnitude of hearing loss at all frequencies, it is often of interest to apply different processing in different frequency regions. Frequency-specific analysis and processing is typically completed for gain processing, digital noise reduction (DNR), wind noise reduction, and activation and control of many other special features. Consequently, it is typically necessary to break up the output of the A/D converter into frequency ranges that are then analyzed in a variety of ways (e.g., amplitude, phase, changes compared with previous samples) to make processing decisions (see Ricketts et al. 2019 for further details).

Overall, the goal is for an accurate digital representation of sound, real-time signal analysis, and processing with limited delay, all while ensuring an adequate battery life (e.g., an 18+ hour listening day in the case of rechargeable systems). To achieve these goals, a variety of complex and, in some cases, proprietary DSP methods are used by manufacturers, but they are well beyond the scope of this chapter. Although modern hearing aids do many things very well, a continued challenge is delay. More accurate digital representation of the sound, greater numbers of algorithms, and more complex algorithms all increase processing time. It is important to clarify, however, that the amount of time that the sound is delayed by the hearing aid, referred to as total delay, is dependent on a number of factors in addition to processing speed. For example, both A/D and D/A conversions require some processing time. The type of filtering also has an effect on the delay. Specifically, the delay may be frequency independent (essentially the same delay at all frequencies as is the case for finite impulse response filters) or frequency dependent (typically more delay in the low frequencies falling to less delay in the higher frequencies as is the case for infinite impulse response filters). Furthermore, although more filters in a filter bank will provide a higher frequency resolution for processing, it will also increase the delay. Processing and/or analysis after obtaining a group of samples (e.g., block processing) is also necessary for some algorithms. However, although increasing the number of samples in a block is often desirable (particularly for improving the accuracy of analysis), it will also increase the delay. From 2005 to 2008, the total delay measured for some digital hearing aids was as large as 11–12 ms (unpublished data). Total delays are now typically between 2 and 8 ms (Alexander 2016).
Delays as short as 5–6 ms may be noticed by listeners when fitted with hearing aids using highly vented eartips due, in part, to incongruent cues across natural acoustic and hearing aid-processed sound pathways (Stone and Moore 2005; Stone et al. 2008). Therefore, keeping group delay to a minimum is also a design concern when manufacturers introduce new algorithms.
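To make the contributions to total delay concrete, the following sketch adds up three of the factors named above. It is a simplified budget under stated assumptions, not a model of any commercial device: the filter is assumed to be a linear-phase finite impulse response filter (whose delay is (N − 1)/2 samples at every frequency), and the tap count, block size, sampling rate, and converter delay are hypothetical values.

```python
def fir_group_delay_ms(n_taps, fs_hz):
    """Delay of a linear-phase FIR filter: (n_taps - 1) / 2 samples at all frequencies."""
    return 1000.0 * (n_taps - 1) / (2.0 * fs_hz)

def block_delay_ms(block_size, fs_hz):
    """Time needed to collect one block of samples before it can be analyzed."""
    return 1000.0 * block_size / fs_hz

def total_delay_ms(n_taps, block_size, fs_hz, converter_ms=0.0):
    """Sum of filter, block-buffering, and (assumed) A/D plus D/A conversion delays."""
    return (fir_group_delay_ms(n_taps, fs_hz)
            + block_delay_ms(block_size, fs_hz)
            + converter_ms)

# Hypothetical settings: 129-tap filter, 32-sample blocks, 16-kHz sampling rate.
print(total_delay_ms(n_taps=129, block_size=32, fs_hz=16000.0, converter_ms=0.5))
```

With these assumed values the filter contributes 4 ms and the block buffer 2 ms, so doubling either the filter length or the block size quickly moves the total toward the 5–6 ms range at which delays may become noticeable with highly vented eartips.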

The potential for incongruent cues in listeners with relatively normal low-frequency hearing was mentioned in Sect. 13.3.1. Specifically, because these individuals are often fitted with significant venting, there is no effective processing delay in the unamplified low frequencies but considerable processing delay in the amplified higher frequencies. A similar frequency-specific difference in delay is present in commercial digital hearing aids in which the delay differs greatly between the low and high frequencies. Although one might assume that this could be problematic, it is not, because within any given frequency region the delay is common to both ears and therefore cancels when the two ears are compared. That is, even though there is an overall delay in the high frequencies, the magnitude of the ITD remains accurate as long as the same delay is present in both hearing aids. Therefore, it is perhaps not surprising that research to date has not found a performance detriment related to incongruent processing delay across frequencies (Byrne et al. 1996).
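The arithmetic behind this point can be written out directly. The sketch below uses hypothetical arrival times and delays, and the sign convention (right minus left) and the function name `aided_itd_us` are assumptions made for the example.

```python
def aided_itd_us(arrival_left_us, arrival_right_us, delay_left_us, delay_right_us):
    """ITD (right minus left, in microseconds) after each device adds its own delay."""
    return (arrival_right_us + delay_right_us) - (arrival_left_us + delay_left_us)

# Hypothetical source on the left: the sound reaches the right ear 300 us later.
natural = aided_itd_us(0, 300, 0, 0)

# Vented low frequencies: no processing delay at either ear.
low_band = aided_itd_us(0, 300, 0, 0)

# Amplified high frequencies: the same 5-ms delay in both hearing aids.
high_band = aided_itd_us(0, 300, 5000, 5000)

# Mismatched devices: a 200-us difference in delay shifts the ITD by 200 us.
mismatched = aided_itd_us(0, 300, 5000, 5200)

print(natural, low_band, high_band, mismatched)
```

The delay differs by 5 ms between the low and high bands, yet the ITD within each band is unchanged; only a delay difference between the two devices alters it.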

The majority of probe microphone verification systems used for fitting hearing aids implement two or three popular and validated prescriptive methods for the assignment of level- and frequency-specific gain in hearing aids. The current versions of these methods are the National Acoustic Laboratories-Nonlinear v2 (NAL-NL2; Keidser et al. 2011); the Desired Sensation Level v5 (DSLv5; Scollie et al. 2005); and the CAMFIT 2 (Moore et al. 2010). The primary goals of these three prescriptive gain methods relate to optimizing audibility and speech recognition for a wide range of speech inputs, avoiding loudness discomfort, and providing good sound quality. Although hearing thresholds increase with sensorineural hearing loss, there is not a concomitant increase in the thresholds of discomfort because of loudness recruitment (Hellman and Meiselman 1993). Recruitment refers to the abnormally rapid growth in the perceived loudness with increases in the sound level above the hearing threshold that is associated with damage to the outer hair cells of the cochlea. As a result, sensorineural hearing loss results in a decrease in a listener’s residual dynamic range (the range from hearing threshold to threshold of discomfort). To offset the decrease in the residual dynamic range, modern prescriptive gain procedures prescribe decreasing gain with increasing signal input level. These level-dependent gain changes are typically achieved using compression. Compression reduces the range of levels at the output of the hearing aid relative to the range of levels at its input. When combining gain and compression processing in a hearing aid, the resulting amplification is referred to as nonlinear gain. When applied appropriately, nonlinear gain can effectively provide audibility for even soft speech, ensure average speech inputs are comfortable, and, at the same time, prevent loudness discomfort for many listeners with sensorineural hearing loss.
Nearly every hearing aid today uses at least one type of compression, and most use at least two different types (Ricketts et al. 2019). One common scheme is to apply multichannel wide dynamic-range compression (WDRC) in combination with compression limiting. Compression limiting is designed to greatly reduce gain when hearing aid output would otherwise exceed a criterion level that is very high (e.g., 115 dB sound pressure level [SPL]). That is, the goal is to ensure that high-level inputs do not exceed the patient’s threshold of discomfort. In contrast, WDRC uses frequency-specific activation threshold levels (knee points). For input levels below the knee point, the gain is constant regardless of input; for input levels above the knee point, the gain is decreased with increasing input. In modern hearing aids, compression knee points are commonly lower than most speech input levels (e.g., 35–55 dB SPL). This ensures that most speech inputs (soft to loud) are compressed. When input signals are above the kneepoint in any given channel, any change in level will result in a change in gain based on a predefined ratio (compression ratio) and timescale. For example, if an input increases by 10 dB, a compression ratio of 2:1 will result in a change in output of only 5 dB. The timescale is defined by an “attack time” (the time it takes to decrease gain in response to an increase in signal level) and a “release time” (the time it takes to increase gain in response to a decrease in signal level). Compression attack and release times are defined electroacoustically, which involves nearly instantaneously increasing and then decreasing frequency-specific input signal levels from 55 to 90 back to 55 dB SPL and measuring the time it takes to reach stable output values within ±3 dB (American National Standards Institute [ANSI] 2014). Modern hearing aids have attack times varying from <1 ms to a few seconds. Release times exhibit an even greater range, from <30 ms to >5 s. 
Compression attack and release times are sometimes described relative to the length of the speech segment that is effectively compressed (e.g., phonemic compression, syllabic compression). In contrast, slow-acting compression will not compress the dynamic range of the speech input for a single talker. Instead, the range of average output levels over longer time windows is reduced. Compression is often referred to as automatic gain control because the gain of the hearing aid changes automatically as the input level changes. Even hearing aid models that do not use true compression still typically apply alternative methods to decrease gain with increasing input level.
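The static behavior of WDRC combined with compression limiting can be sketched as a simple input-output rule. The sketch below is illustrative only: the knee point, linear gain, compression ratio, and limiting level are assumed example values for a single channel, limiting is modeled as a hard ceiling, and attack and release times are ignored entirely.

```python
def wdrc_gain_db(input_db, knee_db=45.0, linear_gain_db=30.0, ratio=2.0):
    """Gain of one channel: constant below the knee point, decreasing above it."""
    if input_db <= knee_db:
        return linear_gain_db
    # Above the knee point, each 1-dB rise in input adds only 1/ratio dB of output.
    return linear_gain_db - (input_db - knee_db) * (1.0 - 1.0 / ratio)

def wdrc_output_db(input_db, **wdrc_params):
    """Output level after WDRC alone."""
    return input_db + wdrc_gain_db(input_db, **wdrc_params)

def limited_output_db(input_db, limit_db=115.0, **wdrc_params):
    """WDRC followed by an (assumed hard) output limit in dB SPL."""
    return min(wdrc_output_db(input_db, **wdrc_params), limit_db)

for level in (30.0, 40.0, 60.0, 70.0):
    print(level, wdrc_output_db(level))
```

With the assumed 2:1 ratio, raising the input from 60 to 70 dB SPL raises the output by only 5 dB, whereas the same 10-dB step below the knee point (30 to 40 dB SPL) is passed through unchanged.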

Binaural listening increases the perceived sound loudness compared with listening monaurally, particularly for sound levels above threshold (e.g., Hall and Harvey 1985). Interestingly, two of the popular prescriptive methods apply slightly different corrections for unilateral versus bilateral fittings to account for this suprathreshold loudness summation for speech. Specifically, DSLv5 includes an optional bilateral correction that reduces speech targets by 3 dB. Conversely, NAL-NL2 has a bilateral gain correction that increases with input level. It is 2 dB at low-input levels (40 dB SPL and below) and increases to 6 dB at high-input levels for symmetrical losses. Smaller corrections are used for asymmetrical losses.

Because compression distorts ILDs, it has the potential to limit binaural outcomes, particularly in complex environments in which ITD information may be distorted more than ILD information (see Zahorik, Chap. 9). Importantly, there are data suggesting that listeners will weigh either ILD or ITD cues more heavily depending on which cue is more accessible (e.g., Bibee and Stecker 2016). Because WDRC applies gain based on the input intensity in multiple channels, changes to the ILD are level dependent. Moreover, because all popular prescriptive gain procedures prescribe higher compression ratios in those frequency regions where an individual’s thresholds are poorer (typically in the higher frequencies), typical ILDs will also be disrupted in a frequency-specific fashion. Similarly, DNR can affect the frequency- and level-specific ILD for nonspeech sounds. DNR generally acts to filter or reduce the gain when the frequency-specific input is deemed to be noise based on acoustic analyses. In most hearing aids, the magnitude of gain reduction will increase with increasing level and decreasing estimated SNR. Although the data to date suggest that compression, DNR, and unilateral beamforming have no more than a limited effect on binaural outcomes in experienced hearing aid wearers (Keidser et al. 2006), much of this work was completed in relatively simple environments. It may therefore be of interest to examine the bilateral benefits in more complex listening environments.
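The level and frequency dependence of this ILD distortion follows directly from the compression rule. The sketch below assumes two hearing aids that compress independently with identical, hypothetical settings in a single channel and ignores time constants; the function names and all numeric values are chosen for illustration only.

```python
def compressed_output_db(input_db, knee_db=45.0, linear_gain_db=30.0, ratio=2.0):
    """Static output level of one compression channel (time constants ignored)."""
    if input_db <= knee_db:
        return input_db + linear_gain_db
    return knee_db + linear_gain_db + (input_db - knee_db) / ratio

def aided_ild_db(near_db, far_db, **params):
    """ILD after each ear is compressed independently with the same settings."""
    return (compressed_output_db(near_db, **params)
            - compressed_output_db(far_db, **params))

# A 10-dB acoustic ILD presented at three overall levels (dB SPL at near, far ear).
print(aided_ild_db(40.0, 30.0))             # both ears below the knee point
print(aided_ild_db(50.0, 40.0))             # only the near ear is compressed
print(aided_ild_db(70.0, 60.0))             # both ears are compressed
print(aided_ild_db(70.0, 60.0, ratio=3.0))  # higher ratio, as in a poorer-threshold region
```

Under these assumptions the same 10-dB acoustic ILD is delivered as 10, 7.5, 5, or about 3.3 dB, depending only on overall level and on the compression ratio prescribed for that frequency region.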

13.3.2.2 Cochlear Implants

Multichannel stimulation in cochlear implants presents a few interesting issues that can affect binaural hearing outcomes. Early multichannel implants used analog electric currents to provide speech understanding. These currents were presented on all channels simultaneously and led to interactions between channels because of summation of the electric fields of individual electrodes (White et al. 1984). These interactions cause cross talk between channels such that auditory nerve fibers in one location of the cochlea may respond to stimulation from multiple electrodes at once, thereby distorting the spectral representation of the acoustic signal. To overcome channel interaction, interleaved pulsatile stimulation was introduced. This approach, named continuous interleaved sampling (CIS), stimulated only one electrode at any time with a biphasic electric pulse (Wilson et al. 1991a). The amplitude of each pulse is derived from the envelope of the signal for that channel (see Fig. 13.2B). Studies comparing analog stimulation and CIS showed improvements in open-set speech recognition in most patients with the latter approach (Wilson et al. 1991b; Loizou et al. 2003). Hence, CIS-like approaches have become the common approach for delivering electrical stimulation with cochlear implants. Modern sound-coding strategies typically use high stimulation rates (≥900 pps/channel) based on research showing that the electrical stimulation rate should be at least four times the highest envelope frequency that is to be presented for envelope pitches to be unaffected by the carrier pitch (McKay et al. 1994) and that pulse rates around 1000 pps were typically better (although not always) for speech perception compared with lower rates (Kiefer et al. 2000; Loizou et al. 2000).
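Two pieces of arithmetic in this description can be sketched briefly: the rule relating stimulation rate to the highest envelope frequency, and the way interleaving assigns each electrode its own time slot. The sketch assumes equally spaced slots and a fixed channel order, which real strategies need not use; the function names and the 12-channel example are hypothetical.

```python
def max_envelope_freq_hz(rate_pps):
    """Highest envelope frequency supported if the rate must be at least 4x that frequency."""
    return rate_pps / 4.0

def interleaved_schedule(n_channels, rate_pps, n_cycles=1):
    """Pulse onset times (microseconds) with only one electrode active per time slot."""
    period_us = 1e6 / rate_pps           # time between pulses on the same channel
    slot_us = period_us / n_channels     # assumed equal share of each stimulation cycle
    return [(cycle * period_us + channel * slot_us, channel)
            for cycle in range(n_cycles)
            for channel in range(n_channels)]

print(max_envelope_freq_hz(900.0))
for onset_us, channel in interleaved_schedule(12, 900.0)[:3]:
    print(f"channel {channel}: pulse at {onset_us:.1f} us")
```

Under these assumptions, 900 pps per channel supports envelope frequencies up to 225 Hz, and with 12 channels each biphasic pulse must fit within a slot of roughly 93 µs.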

With multichannel stimulation, an important question is how many electrode channels are necessary to convey speech understanding with fidelity. The channel vocoder (Dudley 1939) has been an instrumental tool for answering this question in listeners with normal hearing. Like CIS, the channel vocoder band-pass filters an incoming acoustic signal into a number of channels and extracts the envelope of each channel (Loizou 2006). However, rather than using the amplitudes of the envelope to modulate electrical pulses, the envelope could be used to modulate sine tones or band-pass filtered noise so that they can be presented to listeners with normal hearing (Shannon et al. 1995). With the channel vocoder, the effect of varying the number of channels on speech understanding has been studied in normal-hearing listeners, with results showing that three to four channels are needed to achieve reasonable speech understanding in quiet and at least eight channels are needed for speech understanding in noise (Loizou et al. 1999; Shannon et al. 2004). Similar findings have also been reported in cochlear implant users (Friesen et al. 2001; Croghan et al. 2017).
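The envelope-extraction and carrier-modulation steps of a channel vocoder can be sketched for a single channel. This is a minimal illustration under several assumptions: the input is treated as if it were already the output of one band-pass analysis filter, the envelope is obtained by full-wave rectification followed by a one-pole low-pass filter (one of several possible methods), and the 50-Hz cutoff, sampling rate, and test signal are hypothetical.

```python
import math

def envelope(signal, fs_hz, cutoff_hz=50.0):
    """Full-wave rectify, then smooth with a one-pole low-pass filter."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / fs_hz)
    smoothed, state = [], 0.0
    for sample in signal:
        state += alpha * (abs(sample) - state)
        smoothed.append(state)
    return smoothed

def vocode_channel(signal, fs_hz, carrier_hz):
    """Replace the fine structure of one channel with a sine-tone carrier."""
    env = envelope(signal, fs_hz)
    return [e * math.sin(2.0 * math.pi * carrier_hz * n / fs_hz)
            for n, e in enumerate(env)]

# Assumed test signal: a 1-kHz tone whose amplitude is modulated at 4 Hz.
fs = 16000.0
band = [(1.0 + 0.9 * math.sin(2.0 * math.pi * 4.0 * n / fs))
        * math.sin(2.0 * math.pi * 1000.0 * n / fs) for n in range(4000)]
vocoded = vocode_channel(band, fs, carrier_hz=1200.0)
print(len(vocoded))
```

The output keeps the slow 4-Hz amplitude fluctuation of the input but carries it on a different tone, which is the sense in which a vocoder preserves the envelope while discarding the original temporal fine structure. A full vocoder would repeat these steps in each band-pass channel and sum the results.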

Depending on the manufacturer, modern cochlear implants use between 12 and 22 intracochlear electrodes for encoding sounds into electrical stimulation. Although CIS is the common approach for delivering electrical stimulation, each cochlear implant manufacturer uses a slightly different sound-coding strategy to convert acoustic signals into electrical stimulation. The differences in strategy arise from the different choices made in the design of the internal implant as well as which features of the acoustic signal to encode with electrical pulses. Details of the different sound-coding strategies used by each manufacturer can be found in Verhaert et al. (2012), Wouters et al. (2015), and Zeng et al. (2015). Although each sound-coding strategy is different, many patients can recover usable speech understanding (>80% correct) without lipreading in quiet situations, irrespective of which manufacturer’s device is being used. However, there can be a large variability in outcomes within the users of the same manufacturer’s device (Firszt et al. 2004; Lazard et al. 2012).

The method by which acoustic information is converted into electrical stimulation arguably plays an important role in determining binaural hearing performance with cochlear implants because binaural cues in the acoustic signal need to be encoded in the electrical stimulation for the brain to be able to access them. However, modern cochlear implants have not been designed for encoding binaural cues because the original motivation for cochlear implants was for restoring speech understanding unilaterally. Recall that the CIS method used in modern cochlear implant devices modulates electrical pulses by the signal envelope extracted from band-pass-filtered channels. Although the signal envelope is the minimum amount of information needed for speech understanding, the acoustic signal also contains temporal fine structure (TFS) information that has been shown to be important for the lateralization of ITDs (Smith et al. 2002; Dietz et al. 2013). The importance of TFS for sound localization was shown by Jones et al. (2014). By replacing the original TFS with different acoustic carriers using a channel vocoder, Jones et al. showed that sound localization performance in normal-hearing listeners became like that of bilateral cochlear implant users. However, even though TFS ITDs do not appear to be encoded in a way that is accessible by cochlear implant users, envelope ITDs should (in theory) still be encoded to some degree of fidelity (Kan et al. 2018). However, independent unilateral processing at the two ears can lead to envelope ITDs varying dynamically and unreliably (van Hoesel 2004; Litovsky et al. 2012).

Attempts to improve binaural hearing with cochlear implants have focused on encoding TFS ITDs using individual electrical pulses presented at low stimulation rates on the apical channels (van Hoesel and Tyler 2003; Arnoldner et al. 2007). This approach follows the assumption that normal-hearing listeners are most sensitive to ITDs at low frequencies (Wightman and Kistler 1992; Macpherson and Middlebrooks 2002), and hence TFS ITDs should be provided in the apical channels of the cochlear implant. However, this assumption may not be necessary in cochlear implant users, largely because ITD sensitivity can be measured throughout the length of the electrode array, with no place of best ITD sensitivity among bilateral cochlear implant users (van Hoesel et al. 2009; Litovsky et al. 2012). A few studies have shown that ITD information presented throughout the length of the electrode array can promote good ITD sensitivity with multielectrode stimulation (Kan et al. 2015a; Thakkar et al. 2018).

Bilateral implantation implies that an electrode array needs to be surgically inserted into each ear. Unfortunately, it is extremely difficult to place electrodes in the two ears at precisely the same insertion depth. Differences in insertion depth can be a problem because cochlear implants were designed to take advantage of the tonotopic organization of the cochlea, and insertion depth differences will likely lead to an interaural place-of-stimulation mismatch (IPM) for electrodes of the same number in the two ears. Unfortunately, current cochlear implant signal processing and audiological practices do not necessarily account for IPM because each sound processor is programmed independently for each ear. Hence, the range of frequencies assigned to the same numbered electrode can stimulate different cochlear locations in each ear (see Fig. 13.6). The impact of IPM on binaural hearing abilities in bilateral cochlear implant users has been studied using specialized research processors. IPM has been shown to decrease binaural sensitivity, although the resulting impact on ITD and ILD sensitivities may differ. In general, ITD thresholds double with approximately 3 mm of IPM, whereas ILD sensitivity remains much more consistent for an IPM up to about 6 mm (Poon et al. 2009; Kan et al. 2015b). However, much of this work was conducted without loudness roving and a monaural confound may exist when the impact of the IPM on the ILD was measured. Although the impact of an IPM on spatial unmasking has not been directly measured in cochlear implant users, studies in normal-hearing listeners using vocoders have shown that IPM reduces the binaural benefits for speech understanding in noise (Yoon et al. 2011, 2013; Goupell et al. 2018b; see also Best, Goupell, and Colburn, Chap. 7).

Schematic of an interaural place-of-stimulation mismatch (IPM) in bilateral cochlear implant users. The figure shows an unrolled cochlea with an inserted electrode array. For a sound in a particular frequency range (e.g., 250 Hz; red boxes), a difference in electrode insertion depth will lead to a different place of stimulation in the two ears

Although bilateral cochlear implant users appear to solely rely on ILDs for sound localization when using clinical sound processors (Grantham et al. 2008; Aronoff et al. 2010), the fidelity with which ILDs are transmitted through the sound processor is likely compromised. This is because the available dynamic range in electric hearing is much smaller than that of naturally occurring sounds (Zeng and Shannon 1994) and the acoustic signal needs to be compressed to be encoded. Generally, the amount of compression applied to speech input levels in cochlear implants is much greater than that in hearing aids. After cochlear implant processing, ILDs of 15–17 dB can be reduced to 3–4 dB (Dorman et al. 2014), which may explain the poorer sound localization performance in bilateral cochlear implant users.

13.3.3 Beamforming Technology

To improve the SNR, beamforming technology was introduced in hearing aids and cochlear implants. Beamforming technologies are designed to have greater sensitivity for sounds arriving from in front of the listener, with relatively lower sensitivity for sounds arriving from behind and/or the side. Angle-specific sensitivity is commonly displayed via polar plots for easier visualization (e.g., Ricketts et al. 2019). The angle-specific sensitivity of these devices increases the effective SNR in environments for which the listener is facing the talker of interest and surrounded by noise (Ricketts 2000b). Consequently, in such environments, speech recognition is significantly improved by beamforming (Ricketts 2000a; Ricketts et al. 2005). Unilateral beamformers (directional microphones) and bilateral beamformers (higher order microphone arrays) have been shown to distort ILDs and ITDs and, in turn, affect sound localization. With regard to ITDs, unilateral beamformers generally have little-to-no effect; however, some bilateral beamformers can result in a severe distortion. For example, one commercial hearing aid provided an ITD corresponding to 0° azimuth regardless of the actual angle of arrival (Picou et al. 2014). With regard to ILDs, the intentional differences in sensitivity as a function of angle distort ILDs in both unilateral and bilateral beamformers (Fig. 13.7). In this example, adapted from the data presented by Picou et al. (2014), ILD distortion (i.e., a reduction in ILD compared to the unaided condition) for the unilateral beamformers is generally concentrated at more lateral angles. This is due to the fact that the angular sensitivities of the two unilateral beamformers are most different near 90°. In contrast, the specific bilateral beamformer investigated in that study distorted ILDs for all angles greater than 30°.

The relative effects of commercially available examples of unilateral and bilateral beamformers on interaural level differences (ILDs) compared with those ILDs measured in the unaided ear. (Data from Picou et al. 2014)

A few studies have found a decreased localization performance in the horizontal plane with unilateral beamformers (Keidser et al. 2006; Van den Bogaert et al. 2006). Consistent with the pattern of distortion of the ILDs, localization was disrupted near 90° but not distorted for sounds nearer to the midline. Even with this distortion, overall sound localization performance can be better using unilateral beamformers than omnidirectional processing due to a reduction in front-back confusions. Specifically, because beamformers are generally designed to provide the greatest reduction of level for sounds arriving from behind, they exaggerate the normal level differences between front-arriving and rear-arriving sounds. This exaggeration of the front-to-back level difference can reduce the number of front-back confusions compared with omnidirectional microphone settings (Carette et al. 2014).
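The exaggerated front-to-back level difference can be illustrated with the textbook first-order directional pattern, in which sensitivity varies with angle as α + (1 − α)cos θ. This is an idealized free-field sketch only: it ignores the head and pinna, it is not the pattern of any commercial beamformer, and the parameter values and the −120 dB floor are assumptions made so the example runs.

```python
import math

def first_order_sensitivity_db(angle_deg, alpha=0.5):
    """Sensitivity relative to the front (0 deg) for a first-order pattern.

    alpha = 1.0 is omnidirectional, 0.5 a cardioid, 0.25 a hypercardioid.
    """
    response = abs(alpha + (1.0 - alpha) * math.cos(math.radians(angle_deg)))
    return 20.0 * math.log10(max(response, 1e-6))   # floor at -120 dB to avoid log(0)

def front_to_back_db(alpha):
    """Level difference between sounds arriving from 0 and 180 degrees."""
    return first_order_sensitivity_db(0.0, alpha) - first_order_sensitivity_db(180.0, alpha)

for name, alpha in (("omnidirectional", 1.0), ("hypercardioid", 0.25)):
    print(name, round(front_to_back_db(alpha), 1))
```

In this idealized case the omnidirectional setting provides no front-to-back level difference of its own, whereas the hypercardioid attenuates rear-arriving sound by about 6 dB, adding a level cue that can help distinguish front from back.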

Bilateral beamformers can present a more severe distortion to interaural difference cues. In general, bilateral beamforming uses the output from all four microphones in bilaterally fitted hearing aids to generate a single output with a higher DI, which is then routed to both hearing aids simultaneously. With this configuration and limited venting, the resulting diotic presentation could eliminate all interaural differences with clear negative consequences (perceptually, all sounds would emanate from the center of the head). As a result, all modern commercial implementations of bilateral beamformers attempt to preserve or restore some of the naturally occurring interaural differences. In one technique, the frequency region over which the bilateral beamformer provides a diotic signal is band limited to the higher frequencies and a dichotic signal is presented in the lower frequencies via traditional directional processing. In a second and more common technique, the ITDs or ILDs are estimated at the input to the two hearing aids and partially reintroduced after beamformer processing through convolution with an average head-related transform, either in the low frequencies in some research designs (Best et al. 2017) or over a wider frequency range (Picou et al. 2014). In this second, commercially available example, the ILD is reintroduced across all frequencies (rather than just in the high frequencies as would occur in listeners with normal hearing). This provides a constant ILD across all frequencies and is done to offset the distortion to the ITD for low-frequency band limited sounds. Despite these corrections, bilateral beamformers still distort ILDs and ITDs (Brown et al. 2016) and the associated localization performance, particularly in the absence of visual cues (Van den Bogaert et al. 2008; Picou et al. 2014). 
However, at least one study has demonstrated that a relatively simple localization task (closed-set speaker identification with four possible sources in a background of cafeteria noise) was not significantly disrupted by a commercial bilateral beamformer in comparison to a unilateral beamformer if visual cues were also present. In addition, there was no significant preference difference between these two types of microphone processing during a short (approximately 20-minute) trial during which patients were asked to switch between processing types while walking around a noisy hospital (Picou et al. 2014).

13.3.4 Attempts to Limit Interaural Distortion

There is some evidence suggesting that providing some bilateral control over the previously independent changes in hearing aid gain may be beneficial. For example, a study has shown that some systems that control interaural phase across bilaterally fitted hearing aids can result in a higher proportion of individuals with normal or near normal localization (Drennan et al. 2005). There are also limited data suggesting that directional processing should be activated or deactivated simultaneously for both devices. For example, one study examined the effect of nonsynchronized microphones on localization for 12 hearing-impaired listeners (Keidser et al. 2006). Results showed that left/right localization error was largest when an omnidirectional microphone mode was used on one side and a directional mode was used on the other. If the microphone settings matched, the localization error decreased by approximately 40%. There has also been at least one study examining the potential benefits of bilateral control that provided simultaneous changes in directional processing and the DNR in comparison to two hearing aids operating independently (Smith et al. 2008). In a crossover design, this study evaluated 20 participants using hearing aids in the real world via the Speech, Spatial, and Qualities of Hearing Scale. The outcomes revealed a trend for the bilaterally linked condition to be rated higher in 12 of 14 questions related to speech understanding and in 14 of 17 questions related to the localization or spatial qualities of sound. Despite these trends, however, significant preference for the bilaterally controlled condition only occurred for one item: “You are sitting around a table or at a meeting with several people. You can see everyone. Can you tell where any person is as soon as they start speaking?”

In addition to bilateral control, there have been efforts to enhance binaural cues, with the goal of improving localization and speech recognition performance in listeners with impaired spatial abilities. Unfortunately, these studies have generally found little or no benefit from “binaural enhancement.” For example, artificially exaggerating pinna cues as a way to limit front-back reversals and improve localization in the vertical plane in listeners with impaired hearing has been shown to have limited benefits, and the average minimum audible angle performance remained significantly worse than that exhibited by listeners with normal hearing (Rønne et al. 2016). Despite the complexity of some of these processing algorithms, exaggerating interaural differences does not appear to be particularly beneficial for hearing aid users with reduced sensitivity to these same cues.

13.3.5 Patient Factors

Hearing abilities may not always be symmetrical across the two ears. Hearing loss in each ear of an individual can arise from the same or different factors, ranging from hereditary to acquired (such as through noise exposure and ototoxicity) to other unknown causes. Even if the factor(s) causing hearing loss in both ears are the same, the severity and progression of loss can still differ. With hearing loss, there can also be physiological differences. Within each ear, poor innervation of spiral ganglion cells can lead to decreased audibility and frequency selectivity, and prolonged periods without hearing can lead to a decrease in spiral ganglion cells (Moore 2007). If the extent of loss is different in each ear, this can lead to hearing asymmetries, which can affect binaural hearing abilities. As hearing loss increases, signals in an unaided ear become less audible, and hence binaural hearing benefits will be smaller (e.g., Durlach et al. 1981). Therefore, without bilateral amplification, signals may not be audible in both ears, limiting access to binaural cues. Consequently, it is not surprising that several investigators have reported greater and more consistent bilateral benefits for listeners with greater degrees of hearing loss on measures of speech recognition (Festen and Plomp 1986; McArdle et al. 2012), localization (Byrne et al. 1992), preference (Chung and Stephens 1986), and subjective ratings of benefit (Noble 2006; Boymans et al. 2008). van Schoonhoven et al. (2016) demonstrated that the magnitude of bilateral benefits from hearing aids generally increases with increasing hearing loss in multiple domains.

In cochlear implant users, there is increasing evidence that the early onset of hearing loss can affect binaural hearing outcomes. Early profound loss of hearing may lead to a less developed binaural hearing system that appears to affect sensitivity to ITDs with electrical stimulation (Litovsky et al. 2010). Laback et al. (2015) conducted a survey of the literature that measured ITD sensitivity with research processors and low-rate stimulation (≤100 pps). Their review showed that patients who lost their hearing earlier in life were more likely to have poorer ITD sensitivity. The impact of the early onset of deafness is more pronounced in bilaterally implanted children (Litovsky and Gordon 2016). Children who have had no experience with acoustic hearing and use bilateral cochlear implants for listening were more likely to have no measurable ITD sensitivity. In contrast, all bilateral cochlear implant users appear to be able to use ILDs to distinguish sounds on the left from those on the right at a constant presentation level (Gordon et al. 2014; Ehlers et al. 2017).

13.3.6 Contralateral Interference for Speech

“Contralateral interference for speech” refers to a dichotic deficit wherein speech recognition performance with two ears is measurably worse than monaural performance in a situation where the reverse would be expected. That is, the additional auditory information from the second ear interferes with performance. Past literature has commonly referred to this phenomenon using the less descriptive and more general term “binaural interference.” Although the prevalence of contralateral interference for speech in the general population may be small (between 5 and 18%; Allen et al. 2000; Mussoi and Bentler 2017), several authors have reported that contralateral interference for speech can be a predictor for unsuccessful bilateral hearing aid use (Jerger et al. 1993; Köbler et al. 2010). Contralateral interference for speech has also been reported in bilateral cochlear implant users (Goupell et al. 2016, 2018a).

13.4 Summary

Hearing devices have become relatively effective in aiding, and even restoring, speech communication in quiet situations for hearing-impaired patients. In comparison to unilateral use, bilateral fitting of devices can improve speech recognition in noisy situations and sound localization. These benefits seem to also occur for patients who receive a cochlear implant in one ear while still having acoustic hearing in the other. However, despite these gains, a gap in performance still exists between device users and normal-hearing listeners. In this chapter, many of the factors that may be contributing to this gap were described, but there is still much work to be done to improve binaural hearing when listening with devices. Of immediate interest are the relative effects and trade-offs of processing that distorts binaural cues (e.g., advanced beamforming technologies), particularly in complex reverberant listening situations for which the relative importance of ILDs and ITDs is less well understood. Another area of interest is the potential trade-offs for technologies when considering a target talker versus talkers in other locations (i.e., overhearing and group conversations) as a function of individual listener differences. Furthermore, a better understanding of how to encode ITDs is needed for cochlear implants.

References

Alexander J (2016) Hearing aid delay and current drain in modern digital devices. Can Audiol 3

Allen RL, Schwab BM, Cranford JL, Carpenter MD (2000) Investigation of binaural interference in normal-hearing and hearing-impaired adults. J Am Acad Audiol 11:494–500

ANSI (2014) Specification of hearing aid characteristics (ANSI S3.22-2014). New York

Arnoldner C, Riss D, Brunner M et al (2007) Speech and music perception with the new fine structure speech coding strategy: preliminary results. Acta Otolaryngol 127:1298–1303. https://doi.org/10.1080/00016480701275261

Aronoff JM, Yoon Y, Freed DJ et al (2010) The use of interaural time and level difference cues by bilateral cochlear implant users. J Acoust Soc Am 127:EL87–EL92. https://doi.org/10.1121/1.3298451

Arts RAGJ, George ELJ, Stokroos RJ, Vermeire K (2012) Review: cochlear implants as a treatment of tinnitus in single-sided deafness. Curr Opin Otolaryngol Head Neck Surg 20:398–403. https://doi.org/10.1097/MOO.0b013e3283577b66

Balfour PB, Hawkins DB (1992) A comparison of sound quality judgments for monaural and binaural hearing aid processed stimuli. Ear Hear 13:331–339. https://doi.org/10.1097/00003446-199210000-00010

Bernstein JGW, Schuchman GI, Rivera AL (2017) Head shadow and binaural squelch for unilaterally deaf cochlear implantees. Otol Neurotol 38:e195–e202. https://doi.org/10.1097/MAO.0000000000001469

Bertoli S, Bodmer D, Probst R (2010) Survey on hearing aid outcome in Switzerland: associations with type of fitting (bilateral/unilateral), level of hearing aid signal processing, and hearing loss. Int J Audiol 49:333–346. https://doi.org/10.3109/14992020903473431

Best V, Roverud E, Mason CR, Kidd G (2017) Examination of a hybrid beamformer that preserves auditory spatial cues. J Acoust Soc Am 142:EL369. https://doi.org/10.1121/1.5007279

Bibee JM, Stecker GC (2016) Spectrotemporal weighting of binaural cues: effects of a diotic interferer on discrimination of dynamic interaural differences. J Acoust Soc Am 140:2584–2592. https://doi.org/10.1121/1.4964708

Bilger RC, Black FO, Hopkinson NT, Myers EN (1977) Implanted auditory prosthesis: an evaluation of subjects presently fitted with cochlear implants. Trans Am Acad Ophthalmol Otolaryngol 84:677–682

Blauert J (1997) Spatial hearing, 2nd edn. The MIT Press, Cambridge, MA

Boymans M, Goverts ST, Kramer SE et al (2008) A prospective multi-centre study of the benefits of bilateral hearing aids. Ear Hear 29:930–941

Boymans M, Goverts ST, Kramer SE et al (2009) Candidacy for bilateral hearing aids: a retrospective multicenter study. J Speech Lang Hear Res 52:130–140. https://doi.org/10.1044/1092-4388(2008/07-0120)

Brown AD, Rodriguez FA, Portnuff CDF et al (2016) Time-varying distortions of binaural information by bilateral hearing aids. Trends Hear 20:233121651666830. https://doi.org/10.1177/2331216516668303

Byrne D, Noble W, Glauerdt B (1996) Effects of earmold type on ability to locate sounds when wearing hearing aids. Ear Hear 17:218–228. https://doi.org/10.1097/00003446-199606000-00005

Byrne D, Noble W, LePage B (1992) Effects of long-term bilateral and unilateral fitting of different hearing aid types on the ability to locate sounds. J Am Acad Audiol 3:369–382

Carette E, Van den Bogaert T, Laureyns M, Wouters J (2014) Left-right and front-back spatial hearing with multiple directional microphone configurations in modern hearing aids. J Am Acad Audiol 25:791–803. https://doi.org/10.3766/jaaa.25.9.2

Carlile S (1996) Virtual auditory space: generation and applications. Springer, Berlin, Heidelberg

Ching TYC, Incerti P, Hill M (2004) Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear Hear 25:9–21. https://doi.org/10.1097/01.AUD.0000111261.84611.C8

Ching TYC, Incerti P, Hill M, van Wanrooy E (2006) An overview of binaural advantages for children and adults who use binaural/bimodal hearing devices. Audiol Neurootol 11:6–11. https://doi.org/10.1159/000095607

Chung SM, Stephens SD (1986) Factors influencing binaural hearing aid use. Br J Audiol 20:129–140. https://doi.org/10.3109/03005368609079006

Clark GM, Tong YC, Martin LF, Busby PA (1981) A multiple-channel cochlear implant. An evaluation using an open-set word test. Acta Otolaryngol 91:173–175

Cohen NL, Waltzman SB, Fisher SG (1993) A prospective, randomized study of cochlear implants. N Engl J Med 328:233–237. https://doi.org/10.1056/NEJM199301283280403

Cox RM, Schwartz KS, Noe CM, Alexander GC (2011) Preference for one or two hearing aids among adult patients. Ear Hear 32:181–197. https://doi.org/10.1097/AUD.0b013e3181f8bf6c

Croghan NBH, Duran SI, Smith ZM (2017) Re-examining the relationship between number of cochlear implant channels and maximal speech intelligibility. J Acoust Soc Am 142:EL537–EL543. https://doi.org/10.1121/1.5016044

Dietz M, Marquardt T, Salminen NH, McAlpine D (2013) Emphasis of spatial cues in the temporal fine structure during the rising segments of amplitude-modulated sounds. Proc Natl Acad Sci 110:15151–15156. https://doi.org/10.1073/pnas.1309712110

Dillon MT, Buss E, Anderson ML et al (2017) Cochlear implantation in cases of unilateral hearing loss. Ear Hear 38:611–619. https://doi.org/10.1097/AUD.0000000000000430

Döge J, Baumann U, Weissgerber T, Rader T (2017) Single-sided deafness: impact of cochlear implantation on speech perception in complex noise and on auditory localization accuracy. Otol Neurotol 38:e563–e569. https://doi.org/10.1097/MAO.0000000000001520

Dorman MF, Loiselle L, Stohl J et al (2014) Interaural level differences and sound source localization for bilateral cochlear implant patients. Ear Hear 35:633–640. https://doi.org/10.1097/AUD.0000000000000057

Dorman MF, Loiselle LH, Cook SJ et al (2016) Sound source localization by normal-hearing listeners, hearing-impaired listeners and cochlear implant listeners. Audiol Neurootol 21:127–131. https://doi.org/10.1159/000444740

Drennan WR, Gatehouse S, Howell P et al (2005) Localization and speech-identification ability of hearing-impaired listeners using phase-preserving amplification. Ear Hear 26:461–472. https://doi.org/10.1097/01.aud.0000179690.30137.21

Dudley HW (1939) The vocoder. Bell Labs Rec:122–126

Dunn CC, Noble W, Tyler RS et al (2010) Bilateral and unilateral cochlear implant users compared on speech perception in noise. Ear Hear 31:296–298. https://doi.org/10.1097/AUD.0b013e3181c12383

Durlach NI, Thompson CL, Colburn HS (1981) Binaural interaction in impaired listeners: a review of past research. Int J Audiol 20:181–211. https://doi.org/10.3109/00206098109072694

Ehlers E, Goupell MJ, Zheng Y et al (2017) Binaural sensitivity in children who use bilateral cochlear implants. J Acoust Soc Am 141:4264. https://doi.org/10.1121/1.4983824

Eisen MD (2006) History of the cochlear implant. In: Cochlear implant. Thieme Medical Publishers Inc, New York, pp 1–10

Erdman SA, Sedge RK (1981) Subjective comparisons of binaural versus monaural amplification. Ear Hear 2:225–229. https://doi.org/10.1097/00003446-198109000-00009

Festen JM, Plomp R (1986) Speech-reception threshold in noise with one and two hearing aids. J Acoust Soc Am 79:465–471

Firszt JB, Holden LK, Skinner MW et al (2004) Recognition of speech presented at soft to loud levels by adult cochlear implant recipients of three cochlear implant systems. Ear Hear 25:375–387. https://doi.org/10.1097/01.AUD.0000134552.22205.EE

Firszt JB, Reeder RM, Holden LK, Dwyer NY (2018) Results in adult cochlear implant recipients with varied asymmetric hearing. Ear Hear 39:845–862. https://doi.org/10.1097/AUD.0000000000000548

Fraser S, Gagne J-P, Alepins M, Dubois P (2010) Evaluating the effort expended to understand speech in noise using a dual-task paradigm: the effects of providing visual speech cues. J Speech Lang Hear Res 53:18. https://doi.org/10.1044/1092-4388(2009/08-0140)

Freyaldenhoven MC, Plyler PN, Thelin JW, Burchfield SB (2006) Acceptance of noise with monaural and binaural amplification. J Am Acad Audiol 17:659–666. https://doi.org/10.3766/jaaa.17.9.5