Abstract

In this paper, an adaptive backstepping controller using a combined direct and indirect \( \sigma \)-modification adaptation law is proposed for a class of parametric strict-feedback nonlinear systems, based on the dynamic surface control (DSC) technique. The proposed \( \sigma \)-modification parameter adaptation law combines a direct (tracking-error-based) update law with an indirect (identification-error-based) update law. First, the \( x \)-swapping identifier with a gradient-type update law is presented for a class of parametric strict-feedback nonlinear systems. Next, the main steps of the controller design are described. Using a Lyapunov stability analysis, all signals of the closed-loop system are shown to remain bounded, and the tracking error can be made arbitrarily small. Finally, simulation results for a single-link flexible-joint robot manipulator illustrate the tracking performance of the proposed adaptive control scheme.


Keywords

- Backstepping control

- Direct and indirect adaptive control

- Adaptive dynamic surface control

- Lyapunov stability

- Flexible joint manipulators

1 Introduction

Backstepping is a powerful method for synthesizing adaptive controllers for nonlinear systems with linearly parameterized uncertainties [1], that is, uncertainties that are linear in the unknown constant parameters [2]. Adaptive backstepping techniques have proved particularly useful for controlling parametric strict-feedback nonlinear systems [3], for which they achieve boundedness of the closed-loop states and convergence of the tracking error to zero. However, the original adaptive backstepping design can lead to overparametrization and requires differentiating the adaptation laws [3]; this significant drawback can be eliminated by introducing tuning functions [4]. Several adaptive approaches for nonlinear systems in parametric lower-triangular form were also presented in [5].

Although backstepping has become one of the most popular design methods for a large class of single-input single-output (SISO) nonlinear systems [3, 5], the traditional technique suffers from the problem of "explosion of complexity" [2, 6, 7]: the complexity of the controller grows drastically as the system order increases [1]. This problem is caused by the repeated differentiation of certain nonlinear functions, such as the virtual controls [2, 6, 7]. In [6], a procedure called dynamic surface control (DSC) was presented to deal with this problem in the non-adaptive case for a class of strict-feedback nonlinear systems. DSC eliminates the problem by passing the synthetic virtual control input at each step of the traditional backstepping approach through a first-order filter [1, 6–8]. In [2], the authors extended this technique to adaptive control, yielding adaptive dynamic surface control.

The methodology proposed in this paper extends the ideas presented in [9–11] for adaptive backstepping design. It combines direct and indirect \( \sigma \)-modification adaptation mechanisms for the adaptive backstepping control of parametric strict-feedback nonlinear systems. Specifically, the tracking-error-based parameter adaptation law of direct adaptive backstepping control with DSC [2, 11] is combined with the identification-error-based parameter adaptation law of indirect adaptive backstepping control [5, 11–14]. The combined adaptive law is introduced to achieve better parameter estimation and hence better tracking performance. The stability of the closed-loop system is analyzed using Lyapunov stability theory.

This paper is organized as follows. Section 2 presents the identifier based on \( x \)-swapping. The combined direct/indirect adaptive backstepping control with DSC is presented in Sect. 3. The stability analysis of the closed-loop system is given in Sect. 4. In Sect. 5, a numerical example of a single-link flexible-joint robot manipulator demonstrates the effectiveness of the proposed approach. Conclusions are drawn in Sect. 6.

2 Identification Based x-Swapping

The goal of a swapping filter is to transform a dynamic parametric model into a static form, so that standard parameter estimation algorithms can be used. The term swapping refers to exchanging the order of the transfer function describing the dynamics and the time-varying parameter error \( \tilde{\theta } \) [14]. Two types of swapping schemes are presented in [5, 12–14]: the \( z \)-swapping-based identifier, derived from the tracking error model, and the \( x \)-swapping-based identifier, derived from the state dynamics [14]. Both identifiers can be combined with either gradient or least-squares update laws; in this paper, we use the gradient update law. To illustrate the \( x \)-swapping-based identifier, we consider the following nonlinear system in the parametric \( x \)-model form [5, 12–14]

where \( \theta_{i} \in \mathbb{R}^{p_{i}} \) is an unknown constant parameter vector.
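For reference, the parametric \( x \)-model is commonly written state by state as below [5]. The functions \( f_{i} \) and \( F_{i} \) are assumed known and smooth; this notation is an assumption used for illustration rather than a copy of the model above.

```latex
\dot{x}_{i} = f_{i}(x,u) + F_{i}^{T}(x,u)\,\theta_{i}, \qquad
F_{i}(x,u) \in \mathbb{R}^{p_{i}}, \qquad i = 1,\ldots,n.
```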

Then, we introduce the following two filters

where \( i = 1, \ldots, n \), and \( A_{i}(x,t) \) is negative definite for each \( x \) and continuous in \( t \). We define the estimation error vector as

with \( \hat{\theta }_{i} \) the estimate of \( \theta_{i} \) and let

Then, we obtain

The error signal \( \tilde{e}_{i} \) satisfies
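Assuming the state-by-state model \( \dot{x}_{i} = f_{i}(x,u) + F_{i}^{T}(x,u)\theta_{i} \) and the standard \( x \)-swapping filters of [5] (an assumption about the exact form of the filters above), the swapping step can be checked directly: the error \( \tilde{e}_{i} \) obeys a linear equation that does not involve \( \theta_{i} \), so it is nonincreasing in magnitude because \( A_{i} < 0 \), and it decays exponentially with the choice of \( A_{i} \) made next.

```latex
\begin{aligned}
\dot{\Omega}_{i}^{T} &= A_{i}(x,t)\,\Omega_{i}^{T} + F_{i}^{T}(x,u), \qquad
\dot{\Omega}_{0i} = A_{i}(x,t)\,(\Omega_{0i} + x_{i}) - f_{i}(x,u),\\
\tilde{e}_{i} &= x_{i} + \Omega_{0i} - \Omega_{i}^{T}\theta_{i},\\
\dot{\tilde{e}}_{i} &= \big(f_{i} + F_{i}^{T}\theta_{i}\big)
  + A_{i}(\Omega_{0i} + x_{i}) - f_{i}
  - \big(A_{i}\Omega_{i}^{T} + F_{i}^{T}\big)\theta_{i}
  = A_{i}(x,t)\,\tilde{e}_{i},\\
e_{i} &= x_{i} + \Omega_{0i} - \Omega_{i}^{T}\hat{\theta}_{i}
  = \Omega_{i}^{T}\tilde{\theta}_{i} + \tilde{e}_{i}.
\end{aligned}
```

The last line is the static parametric model in \( \tilde{\theta}_{i} \) that the gradient law exploits.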

To guarantee boundedness of \( \Omega _{i} \) when \( F_{i} \left( {x,u} \right) \) grows unbounded, a particular choice of \( A_{i} \left( {x,t} \right) \) is made [5, 14]

where \( \lambda_{i} > 0 \) and \( A_{0i} \) is an arbitrary constant matrix satisfying

The update law for \( \hat{\theta }_{i} \) employs the estimation error \( e_{i} \) and the filtered regressor \( \Omega _{i} \). The gradient update law is given by

where \( i = 1, \ldots, n \).
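A minimal numerical sketch of the \( x \)-swapping identifier with a normalized gradient law, assuming the scalar forms \( A = A_{0} - \lambda F^{2}P \) (with \( A_{0} = -a_{0} \) and \( P = 1/(2a_{0}) \)) and \( \dot{\hat{\theta}} = \gamma\,\Omega e/(1 + \nu\Omega^{2}) \). The plant \( \dot{x} = -x + u + u\,\theta \) with \( u = \sin t \), the value \( \theta = 2 \), and the helper name `run_x_swapping` are hypothetical choices, made only so that the filtered regressor is persistently exciting.

```python
import math


def run_x_swapping(theta=2.0, gamma=10.0, lam=0.1, nu=0.1, a0=1.0,
                   dt=1e-3, t_end=20.0):
    """Scalar x-swapping identifier with a normalized gradient update law.

    Hypothetical plant (not from the paper): x' = f(x, u) + F(x, u) * theta,
    with f = -x + u, F = u and input u = sin(t).
    """
    p = 1.0 / (2.0 * a0)                  # solves P*A0 + A0*P = -1 for A0 = -a0
    x, om0, om, theta_hat = 0.5, 0.0, 0.0, 0.0
    t = 0.0
    for _ in range(int(round(t_end / dt))):
        u = math.sin(t)
        f, F = -x + u, u
        A = -a0 - lam * F * F * p         # assumed choice A = A0 - lam * F^T F * P
        e = x + om0 - om * theta_hat      # estimation error e = x + Omega0 - Omega*theta_hat
        dtheta = gamma * om * e / (1.0 + nu * om * om)
        # explicit Euler step of plant, both filters and the update law
        # (the right-hand side uses the values from the start of the step)
        x, om0, om, theta_hat = (
            x + dt * (f + F * theta),
            om0 + dt * (A * (om0 + x) - f),
            om + dt * (A * om + F),
            theta_hat + dt * dtheta,
        )
        t += dt
    return theta_hat


theta_hat = run_x_swapping()
print(f"theta_hat = {theta_hat:.4f}")
```

Because the plant and both filters are stepped with the same explicit Euler rule, the discrete swapping error obeys \( \tilde{e}_{k+1} = (1 + A\,\Delta t)\,\tilde{e}_{k} \), mirroring \( \dot{\tilde{e}} = A\tilde{e} \), so the estimate is not biased by the discretization.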

To establish the identifier properties, let \( \left[ {0,t_{f} } \right) \) be the maximal interval of existence of solutions of (1), the \( x \)-swapping filters (3) and (4), and the gradient update law (11). Then for \( \nu_{i} \ge 0 \) the following properties hold [5, 12–14]

- (i) \( \tilde{\theta}_{i} \in L_{\infty}[0,t_{f}) \);

- (ii) \( e_{i} \in L_{2}[0,t_{f}) \cap L_{\infty}[0,t_{f}) \);

- (iii) \( \dot{\hat{\theta}}_{i} \in L_{2}[0,t_{f}) \cap L_{\infty}[0,t_{f}) \).

We consider the following Lyapunov function

Along the solutions of (8) and (11), the derivative of the Lyapunov function (12) is

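Since \( \dot{V}_{i} \) is negative semi-definite, \( V_{i} \) is nonincreasing, and hence \( \tilde{\theta}_{i} \in L_{\infty}[0,t_{f}) \). From \( e_{i} = \Omega_{i}^{T}\tilde{\theta}_{i} + \tilde{e}_{i} \) and the boundedness of \( \Omega_{i} \), it follows that \( e_{i} \in L_{\infty}[0,t_{f}) \); since \( V_{i} \) is also bounded from below, integrating \( \dot{V}_{i} \) over \( [0,t_{f}) \) yields \( e_{i} \in L_{2}[0,t_{f}) \). Because the update law (11) is driven by \( \Omega_{i} e_{i} \) and \( \dot{\tilde{\theta}}_{i} = -\dot{\hat{\theta}}_{i} \), one concludes that \( \dot{\tilde{\theta}}_{i} \in L_{2}[0,t_{f}) \cap L_{\infty}[0,t_{f}) \).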
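The mechanism can be made concrete in a simplified setting. Assume \( \tilde{e}_{i} \equiv 0 \), take the gradient law in the normalized form \( \dot{\hat{\theta}}_{i} = \Gamma_{i}\Omega_{i}e_{i}/(1 + \nu_{i}\lvert\Omega_{i}\rvert^{2}) \) with \( \Gamma_{i} = \Gamma_{i}^{T} > 0 \) (an assumed form of (11)), and use the reduced function \( V_{i} = \frac{1}{2}\tilde{\theta}_{i}^{T}\Gamma_{i}^{-1}\tilde{\theta}_{i} \) (a simplified version of (12), without the \( \tilde{e}_{i} \) term):

```latex
\begin{aligned}
e_{i} &= \Omega_{i}^{T}\tilde{\theta}_{i}, \qquad
\dot{\tilde{\theta}}_{i} = -\dot{\hat{\theta}}_{i}
  = -\frac{\Gamma_{i}\Omega_{i}e_{i}}{1+\nu_{i}\lvert\Omega_{i}\rvert^{2}},\\
\dot{V}_{i} &= \tilde{\theta}_{i}^{T}\Gamma_{i}^{-1}\dot{\tilde{\theta}}_{i}
  = -\frac{\tilde{\theta}_{i}^{T}\Omega_{i}e_{i}}{1+\nu_{i}\lvert\Omega_{i}\rvert^{2}}
  = -\frac{e_{i}^{2}}{1+\nu_{i}\lvert\Omega_{i}\rvert^{2}} \le 0,\\
\int_{0}^{t_{f}}\frac{e_{i}^{2}}{1+\nu_{i}\lvert\Omega_{i}\rvert^{2}}\,dt
  &= V_{i}(0) - V_{i}(t_{f}) \le V_{i}(0).
\end{aligned}
```

Since \( \Omega_{i} \) is bounded, the denominator is bounded, so the last line gives \( e_{i} \in L_{2} \), while \( V_{i}(t) \le V_{i}(0) \) gives \( \tilde{\theta}_{i} \in L_{\infty} \).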

3 Direct/Indirect Adaptive Backstepping Control with DSC

In direct/indirect adaptive backstepping control with DSC, the control law and the parameter estimation are not separated. In this paper, the parameter update law for \( \hat{\theta}_{i} \) combines a gradient-type update law based on the \( x \)-swapping identifier with a tracking-error-based update law. The control objective is asymptotic tracking of a reference signal \( y_{r} \) by \( x_{1} \). The reference signal \( y_{r} \) and its derivatives \( \dot{y}_{r}, \ldots, y_{r}^{(n)} \) are assumed to be piecewise continuous and bounded. In the following, we describe the main steps of the controller design for the nonlinear system in parametric strict-feedback form

where \( x = [x_{1}, x_{2}, \ldots, x_{n}]^{T} \in \mathbb{R}^{n} \) and \( u \in \mathbb{R} \) are the state vector and the input of the system, respectively, \( \theta_{i} \in \mathbb{R}^{p_{i}} \) are unknown constant parameter vectors, and \( \bar{x}_{i} = [x_{1}, x_{2}, \ldots, x_{i}]^{T} \). The nonlinear functions \( \varphi_{i}(\bar{x}_{i}): \mathbb{R}^{i} \to \mathbb{R}^{p_{i}} \) are known.
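For reference, a sketch of the parametric strict-feedback form usually considered in adaptive DSC [2], written in the notation above; the unit input gains and the exact layout are assumptions rather than a copy of the displayed system.

```latex
\begin{aligned}
\dot{x}_{i} &= x_{i+1} + \varphi_{i}^{T}(\bar{x}_{i})\,\theta_{i}, \qquad i = 1,\ldots,n-1,\\
\dot{x}_{n} &= u + \varphi_{n}^{T}(\bar{x}_{n})\,\theta_{n},\\
y &= x_{1}.
\end{aligned}
```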

Step \( 1\left( {i = 1} \right) \)

The first surface is defined by \( S_{1} = x_{1} - x_{1d} \), where \( x_{1d} = y_{r} \), and its time derivative is given by

We choose \( \bar{x}_{2} \) to drive \( S_{1} \) towards zero with

We pass \( \bar{x}_{2} \) through a first-order filter, with time constant \( \tau_{2} \), to obtain \( x_{2d} \)
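A minimal sketch of the first-order filter, assuming the usual DSC form \( \tau_{2}\dot{x}_{2d} + x_{2d} = \bar{x}_{2} \) with \( x_{2d}(0) = \bar{x}_{2}(0) \) [2, 6]. The derivative \( \dot{x}_{2d} = (\bar{x}_{2} - x_{2d})/\tau_{2} \) is available algebraically, so \( \bar{x}_{2} \) never has to be differentiated analytically. The test input \( \bar{x}_{2} = \sin t \) and the helper name `filter_demo` are hypothetical.

```python
import math


def filter_demo(tau=0.009, dt=1e-4, t_end=5.0):
    """First-order DSC filter tau * xd' + xd = xbar, driven by xbar(t) = sin(t).

    Returns the worst filtering error |xd - xbar| and the worst error of the
    filtered derivative (xbar - xd) / tau against the true derivative cos(t),
    both measured after the first second (i.e. after the initial transient).
    """
    xd = math.sin(0.0)                  # initialise the filter at xbar(0)
    worst_lag, worst_deriv = 0.0, 0.0
    t = 0.0
    for _ in range(int(round(t_end / dt))):
        xbar = math.sin(t)
        xd_dot = (xbar - xd) / tau      # derivative obtained without differentiating xbar
        if t >= 1.0:
            worst_lag = max(worst_lag, abs(xd - xbar))
            worst_deriv = max(worst_deriv, abs(xd_dot - math.cos(t)))
        xd += dt * xd_dot               # explicit Euler step of the filter
        t += dt
    return worst_lag, worst_deriv


lag, deriv_err = filter_demo()
print(f"max |xd - xbar| = {lag:.4f}, max derivative error = {deriv_err:.4f}")
```

For a sinusoid of unit frequency, both errors are of the order of \( \tau_{2} \), which is why a small time constant keeps the boundary-layer error small.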

Step \( i\left( {i = 2, \cdots ,n - 1} \right) \)

The \( i^{th} \) surface is defined by \( S_{i} = x_{i} - x_{id} \), and its time derivative is given by

We choose \( \bar{x}_{i + 1} \) to drive \( S_{i} \) towards zero with

We pass \( \bar{x}_{i + 1} \) through a first-order filter, with time constant \( \tau_{i + 1} \), to obtain \( x_{(i+1)d} \)

Step \( n \)

The \( n^{th} \) surface is defined by \( S_{n} = x_{n} - x_{nd} \), and its time derivative is given by

We choose the control input \( u \) to drive \( S_{n} \) towards zero with

The update laws (direct part) for the parameter estimates are given by [2, 11]

where \( \bar{\Gamma}_{i} > 0 \) \( (i = 1, \ldots, n) \) are design parameters that set the convergence rate of the parameter estimates.
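The design steps and the direct update law can be sketched on a hypothetical second-order example (not from the paper): \( \dot{x}_{1} = x_{2} + x_{1}\theta_{1} \), \( \dot{x}_{2} = u + \sin(x_{2})\,\theta_{2} \), tracking \( y_{r} = \sin t \). The sketch assumes the virtual control \( \bar{x}_{i+1} = \dot{x}_{id} - K_{i}S_{i} - \varphi_{i}^{T}\hat{\theta}_{i} \), the control \( u = \dot{x}_{nd} - K_{n}S_{n} - \varphi_{n}^{T}\hat{\theta}_{n} \) and the direct law \( \dot{\hat{\theta}}_{i} = \bar{\Gamma}_{i}\varphi_{i}S_{i} \), a common adaptive DSC form in the spirit of [2]; the laws displayed above may differ in detail. The function name `dsc_second_order` and all numerical values are hypothetical.

```python
import math


def dsc_second_order(theta=(0.5, 1.0), K=(5.0, 5.0), gbar=(10.0, 10.0),
                     tau2=0.01, dt=1e-3, t_end=20.0):
    """Adaptive DSC with direct update laws on a hypothetical plant:
        x1' = x2 + phi1 * theta1,  phi1 = x1
        x2' = u  + phi2 * theta2,  phi2 = sin(x2)
    tracking y_r(t) = sin(t). Returns a list of (t, S1) samples.
    """
    x1, x2 = 0.0, 0.0
    th1, th2 = 0.0, 0.0                  # parameter estimates theta_hat_1, theta_hat_2
    x2d = None                           # first-order filter state x_2d
    t, log = 0.0, []
    for _ in range(int(round(t_end / dt))):
        yr, dyr = math.sin(t), math.cos(t)
        phi1, phi2 = x1, math.sin(x2)
        # Step 1: surface S1, virtual control xbar2, filter derivative
        S1 = x1 - yr
        xbar2 = dyr - K[0] * S1 - phi1 * th1
        if x2d is None:
            x2d = xbar2                  # start with zero boundary-layer error
        dx2d = (xbar2 - x2d) / tau2
        # Step n = 2: surface S2 and actual control u
        S2 = x2 - x2d
        u = dx2d - K[1] * S2 - phi2 * th2
        # direct (tracking-error-based) update laws
        dth1 = gbar[0] * phi1 * S1
        dth2 = gbar[1] * phi2 * S2
        # explicit Euler integration of plant, filter and estimates
        dx1 = x2 + phi1 * theta[0]
        dx2 = u + phi2 * theta[1]
        x1, x2 = x1 + dt * dx1, x2 + dt * dx2
        x2d += dt * dx2d
        th1, th2 = th1 + dt * dth1, th2 + dt * dth2
        t += dt
        log.append((t, S1))
    return log


log = dsc_second_order()
tail = max(abs(s) for t, s in log if t > 15.0)
print(f"max |S1| over the last 5 s: {tail:.4f}")
```

Only \( \dot{x}_{2d} \), obtained from the filter, enters the control law; no analytic derivative of \( \bar{x}_{2} \) is needed, which is the point of DSC.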

Let us introduce the following two filters

where \( i = 1, \ldots, n \), and

where \( \lambda_{i} > 0 \) and \( A_{0i} \) is an arbitrary constant matrix satisfying

The gradient update law (indirect part) is given by [5, 11–14]

where \( i = 1, \ldots, n \) and \( e_{i} = x_{i} + \Omega_{0i} - \Omega_{i}^{T}\hat{\theta}_{i} \in \mathbb{R} \).

Now we propose the following combined direct and indirect \( \sigma \)-modification adaptation law [11]

where \( i = 1, \ldots, n \), \( \sigma_{i} \) is a small positive constant, \( \bar{\Gamma}_{i} \) is a positive definite constant matrix, and \( \bar{\theta}_{i} \) is computed with the gradient method as follows

where \( \Gamma_{i} \) is a positive definite constant matrix and \( e_{i} = x_{i} + \Omega_{0i} - \Omega_{i}^{T}\bar{\theta}_{i} \in \mathbb{R} \).
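A form of the combined law consistent with the stability argument of Sect. 4 (which uses \( e_{\theta_{i}} = \theta_{i} - \bar{\theta}_{i} \)) is \( \dot{\hat{\theta}}_{i} = \bar{\Gamma}_{i}[\varphi_{i}S_{i} - \sigma_{i}(\hat{\theta}_{i} - \bar{\theta}_{i})] \). The sketch below assumes this form in the scalar case; the function name `combined_rate` and the test values are hypothetical. The direct term is driven by the surface \( S_{i} \), while the \( \sigma \)-term pulls \( \hat{\theta}_{i} \) towards the identifier estimate \( \bar{\theta}_{i} \).

```python
def combined_rate(theta_hat, theta_bar, phi, S, gbar=15.0, sigma=0.5):
    """Assumed combined direct/indirect sigma-modification law (scalar case):
    theta_hat' = gbar * (phi * S - sigma * (theta_hat - theta_bar)).
    """
    return gbar * (phi * S - sigma * (theta_hat - theta_bar))


# With zero tracking error (S = 0) the estimate relaxes exponentially towards
# the identifier estimate theta_bar, at rate gbar * sigma (here 7.5 1/s).
theta_hat, theta_bar, dt = 0.0, 5.0, 1e-3
for _ in range(1000):                   # 1 s of explicit Euler integration
    theta_hat += dt * combined_rate(theta_hat, theta_bar, phi=0.3, S=0.0)
print(f"theta_hat after 1 s: {theta_hat:.4f}")
```

In this form, the identifier acts as a moving "anchor" for the \( \sigma \)-modification, instead of the fixed prior value used in classical \( \sigma \)-modification.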

4 Stability Analysis

We define the boundary layer error as [2, 11, 15]

and the parameter estimate errors as

Then the closed-loop dynamics can be expressed in terms of the surfaces \( S_{i} \), the boundary layer errors \( y_{i} \), and the parameter estimate errors \( \tilde{\theta }_{i} \).

The dynamics of the surfaces are expressed, for \( i = 1 \), as

For \( i = 2, \cdots ,n - 1 \)

For \( i = n \)

The dynamics of the boundary layer errors \( y_{i} \) are expressed, for \( i = 2 \), as

For \( i = 3, \cdots ,n \)
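Assuming the sign convention \( y_{i} = x_{id} - \bar{x}_{i} \) \( (i = 2, \ldots, n) \) and the filter \( \tau_{i}\dot{x}_{id} + x_{id} = \bar{x}_{i} \), the boundary-layer dynamics follow in one line, and the surface equations pick up the filter error through \( x_{i+1} = S_{i+1} + y_{i+1} + \bar{x}_{i+1} \):

```latex
\begin{aligned}
\dot{x}_{id} &= \frac{\bar{x}_{i} - x_{id}}{\tau_{i}} = -\frac{y_{i}}{\tau_{i}}, \qquad
\dot{y}_{i} = \dot{x}_{id} - \dot{\bar{x}}_{i} = -\frac{y_{i}}{\tau_{i}} - \dot{\bar{x}}_{i},\\
x_{i+1} &= S_{i+1} + x_{(i+1)d} = S_{i+1} + y_{i+1} + \bar{x}_{i+1}.
\end{aligned}
```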

Let us consider the following Lyapunov function

where

Then, one has

We assume that \( \lvert y_{i}\dot{\bar{x}}_{i}\rvert \le M_{1i}y_{i}^{2} + M_{2i}S_{i}^{2} + \delta_{i}^{2} \), where \( M_{1i} \) and \( M_{2i} \) are positive constants and \( \delta_{i} \) are bounded functions. Then we can write
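For the boundary-layer terms, the assumption can be used as follows, taking the dynamics \( \dot{y}_{i} = -y_{i}/\tau_{i} - \dot{\bar{x}}_{i} \) under the sign convention \( y_{i} = x_{id} - \bar{x}_{i} \) (an assumption). This sketch covers one term of the Lyapunov derivative, not the full expression, and shows where the condition \( 1/\tau_{i} > 1/2 + M_{1i} \) comes from:

```latex
y_{i}\dot{y}_{i} = -\frac{y_{i}^{2}}{\tau_{i}} - y_{i}\dot{\bar{x}}_{i}
\le -\frac{y_{i}^{2}}{\tau_{i}} + \lvert y_{i}\dot{\bar{x}}_{i}\rvert
\le -\Big(\frac{1}{\tau_{i}} - M_{1i}\Big)y_{i}^{2} + M_{2i}S_{i}^{2} + \delta_{i}^{2}.
```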

By property (i) of the gradient identifier in Sect. 2, applied to the estimate \( \bar{\theta}_{i} \), the identification error \( e_{\theta_{i}} = \theta_{i} - \bar{\theta}_{i} \) is bounded. Hence \( \bar{\theta}_{i} = \theta_{i} - e_{\theta_{i}} \), while the parameter estimate error is \( \tilde{\theta}_{i} = \theta_{i} - \hat{\theta}_{i} \).
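Assuming the combined law has the form \( \dot{\hat{\theta}}_{i} = \bar{\Gamma}_{i}[\varphi_{i}S_{i} - \sigma_{i}(\hat{\theta}_{i} - \bar{\theta}_{i})] \) (an assumption, since the law is not displayed in this text), its \( \sigma \)-term enters \( -\tilde{\theta}_{i}^{T}\bar{\Gamma}_{i}^{-1}\dot{\hat{\theta}}_{i} \) as \( \sigma_{i}\tilde{\theta}_{i}^{T}(\hat{\theta}_{i} - \bar{\theta}_{i}) \). By Young's inequality, the bounded identification error \( e_{\theta_{i}} \) then only contributes a bounded term:

```latex
\begin{aligned}
\hat{\theta}_{i} - \bar{\theta}_{i} &= (\theta_{i} - \tilde{\theta}_{i}) - (\theta_{i} - e_{\theta_{i}})
  = e_{\theta_{i}} - \tilde{\theta}_{i},\\
\sigma_{i}\tilde{\theta}_{i}^{T}(\hat{\theta}_{i} - \bar{\theta}_{i})
  &= -\sigma_{i}\lvert\tilde{\theta}_{i}\rvert^{2} + \sigma_{i}\tilde{\theta}_{i}^{T}e_{\theta_{i}}
  \le -\frac{\sigma_{i}}{2}\lvert\tilde{\theta}_{i}\rvert^{2} + \frac{\sigma_{i}}{2}\lvert e_{\theta_{i}}\rvert^{2}.
\end{aligned}
```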

If \( \delta_{i} \in L_{\infty} \), \( e_{\theta_{i}} \in L_{\infty} \), \( K_{1} > 1 \), \( K_{i} > M_{2i} + \frac{3}{2} \), \( K_{n} > M_{2n} + \frac{1}{2} \) and \( \frac{1}{\tau_{i}} > \frac{1}{2} + M_{1i} \), then all the signals \( S_{i} \), \( y_{i} \) and \( \tilde{\theta}_{i} \) are bounded. Moreover, the surfaces \( S_{i} \) can be made arbitrarily small by adjusting the design parameters \( K_{i} \).
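The sufficient gain conditions above can be checked mechanically. The sketch assumes the index ranges \( K_{i} > M_{2i} + 3/2 \) for \( i = 2, \ldots, n-1 \) and \( 1/\tau_{i} > 1/2 + M_{1i} \) for \( i = 2, \ldots, n \); the helper name `violated_gain_conditions` and the bound constants \( M_{1i} = M_{2i} = 1 \) used in the example are hypothetical.

```python
def violated_gain_conditions(K, tau, M1, M2):
    """Return the sufficient gain conditions (as strings) that are NOT met.

    K      : [K_1, ..., K_n]
    tau    : {i: tau_i} for i = 2..n (filter time constants)
    M1, M2 : {i: M_1i} and {i: M_2i} for i = 2..n (bound constants)
    Assumed index ranges: K_i > M_2i + 3/2 for i = 2..n-1, tau_i for i = 2..n.
    """
    n = len(K)
    bad = []
    if not K[0] > 1.0:
        bad.append("K_1 > 1")
    for i in range(2, n):
        if not K[i - 1] > M2[i] + 1.5:
            bad.append(f"K_{i} > M_2{i} + 3/2")
    if not K[n - 1] > M2[n] + 0.5:
        bad.append(f"K_{n} > M_2{n} + 1/2")
    for i in range(2, n + 1):
        if not 1.0 / tau[i] > 0.5 + M1[i]:
            bad.append(f"1/tau_{i} > 1/2 + M_1{i}")
    return bad


# Gains of Sect. 5 with hypothetical bound constants M_1i = M_2i = 1.
K = [1.0, 80.0, 10.0, 100.0]
tau = {2: 0.009, 3: 0.009, 4: 0.009}
M = {2: 1.0, 3: 1.0, 4: 1.0}
print(violated_gain_conditions(K, tau, M, M))
```

The check is strict, so a gain lying exactly on a boundary, such as \( K_{1} = 1 \) in Sect. 5, is reported as not satisfying \( K_{1} > 1 \); the conditions are sufficient, not necessary.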

5 Numerical Example

Consider the single-link flexible-joint robot shown in Fig. 1. Its dynamic model is given as follows [16, 17]

where \( u \) is the input torque, \( J_{1} \) and \( J_{2} \) are the inertias of the link and the motor, respectively, \( M \) is the link mass, \( g \) is the gravitational acceleration, \( L \) is the link length, \( K \) is the joint stiffness, and \( q_{1} \) and \( q_{2} \) are the angular positions of the link and the motor shaft, respectively.

Defining the state variables as \( x_{1} = q_{1} \), \( x_{2} = \dot{q}_{1} \), \( x_{3} = q_{2} \) and \( x_{4} = \dot{q}_{2} \), the dynamic model becomes

with

The single-link flexible-joint robot model used in this paper is given by (49), with the parameter values listed in Table 1 [17].
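A minimal sketch of the state equations, assuming the standard single-link flexible-joint model \( J_{1}\ddot{q}_{1} + MgL\sin q_{1} + K(q_{1} - q_{2}) = 0 \), \( J_{2}\ddot{q}_{2} - K(q_{1} - q_{2}) = u \). This form is consistent with the initial condition \( x_{3}(0) = 0.1 + \frac{MgL}{K}\sin(0.1) \) selected below, for which the spring torque balances gravity on the link. The numerical values of \( J_{1} \), \( J_{2} \), \( MgL \) and \( K \) are hypothetical placeholders, not the Table 1 values, and the function name `flexible_joint` is illustrative.

```python
import math

# Hypothetical parameter values (placeholders, NOT the values of Table 1).
J1, J2, MGL, K_S = 1.0, 1.0, 5.0, 40.0


def flexible_joint(x, u):
    """State derivative of the assumed single-link flexible-joint model.

    x = [q1, q1_dot, q2, q2_dot]; u is the motor torque.
    """
    x1, x2, x3, x4 = x
    return [
        x2,
        -(MGL / J1) * math.sin(x1) - (K_S / J1) * (x1 - x3),
        x4,
        (K_S / J2) * (x1 - x3) + u / J2,
    ]


# Initial state of Sect. 5: the link is at rest at q1 = 0.1, and the motor
# shaft is offset so that the spring torque balances gravity. Holding the motor
# still as well requires the torque u = MgL * sin(0.1).
x0 = [0.1, 0.0, 0.1 + MGL / K_S * math.sin(0.1), 0.0]
print(flexible_joint(x0, MGL * math.sin(0.1)))
```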

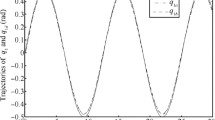

For the numerical simulation, the unknown parameter \( \theta_{1} \) of the system is selected as \( \theta_{1} = MgL \). Our objective is to force the output of the system to follow the reference trajectory given by: \( y_{d} = 0.1\sin \left( t \right) \).

We choose \( \bar{x}_{2} \), \( \bar{x}_{3} \) and \( \bar{x}_{4} \) to drive \( S_{1} \), \( S_{2} \) and \( S_{3} \) towards zero with

We choose the control \( u \) to drive \( S_{4} \) towards zero with
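Under the standard flexible-joint model (an assumption, with \( \theta_{1} = MgL \) unknown and \( J_{1} \), \( J_{2} \), \( K \) known), one choice of virtual controls and control input that follows Steps 1 to \( n \) is sketched below. It is derived here for illustration and may differ in detail from the laws used in the paper. Here \( x_{1d} = y_{d} \), \( \dot{x}_{id} = (\bar{x}_{i} - x_{id})/\tau_{i} \), and the resulting surface dynamics are shown in the last line.

```latex
\begin{aligned}
\bar{x}_{2} &= \dot{y}_{d} - K_{1}S_{1}, \qquad
\bar{x}_{3} = x_{1} + \frac{J_{1}}{K}\big(\dot{x}_{2d} - K_{2}S_{2}\big) + \frac{\hat{\theta}_{1}}{K}\sin x_{1},\\
\bar{x}_{4} &= \dot{x}_{3d} - K_{3}S_{3}, \qquad
u = J_{2}\big(\dot{x}_{4d} - K_{4}S_{4}\big) - K\,(x_{1} - x_{3}),\\
\dot{S}_{2} &= -K_{2}S_{2} - \frac{\sin x_{1}}{J_{1}}\,\tilde{\theta}_{1} + \frac{K}{J_{1}}\big(x_{3} - \bar{x}_{3}\big), \qquad
\dot{S}_{4} = -K_{4}S_{4}.
\end{aligned}
```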

For the swapping-based identifier we use the following filters

The combined direct and indirect \( \sigma \)-modification adaptation law is given by

where \( \bar{\theta} \) is computed with the gradient method as

where \( e = x_{2} + \Omega_{0} - \Omega^{T}\bar{\theta} \).
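For this example, the swapping identifier acts on the \( x_{2} \) equation, which under the standard flexible-joint model (an assumption) reads \( \dot{x}_{2} = f + F\theta_{1} \) with \( f = -\frac{K}{J_{1}}(x_{1} - x_{3}) \) and \( F = -\frac{\sin x_{1}}{J_{1}} \). A sketch of the scalar filters and laws, assuming \( A = A_{0} - \lambda F^{2}P \) with a scalar \( A_{0} < 0 \) and \( P = -1/(2A_{0}) \), and a combined law of the \( \sigma \)-modification form \( \dot{\hat{\theta}}_{1} = \bar{\Gamma}[\varphi S_{2} - \sigma(\hat{\theta}_{1} - \bar{\theta})] \) with regressor \( \varphi = F \) (also an assumption):

```latex
\begin{aligned}
\dot{\Omega} &= A\,\Omega + F, \qquad \dot{\Omega}_{0} = A\,(\Omega_{0} + x_{2}) - f,\\
\dot{\bar{\theta}} &= \Gamma\,\frac{\Omega\,e}{1 + \nu\,\Omega^{2}}, \qquad e = x_{2} + \Omega_{0} - \Omega\,\bar{\theta},\\
\dot{\hat{\theta}}_{1} &= \bar{\Gamma}\Big(-\frac{\sin x_{1}}{J_{1}}\,S_{2} - \sigma\,(\hat{\theta}_{1} - \bar{\theta})\Big).
\end{aligned}
```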

The selected initial conditions are \( x(0) = \left[ 0.1,\ 0,\ 0.1 + \frac{MgL}{K}\sin(0.1),\ 0 \right]^{T} \), \( \hat{\theta}_{1}(0) = \bar{\theta}(0) = 0 \) and \( \Omega_{0}(0) = \Omega^{T}(0) = 0 \). The design parameters are selected as follows: \( K_{1} = 1 \), \( K_{2} = 80 \), \( K_{3} = 10 \), \( K_{4} = 100 \), \( \bar{\Gamma} = 15 \), \( \sigma = 0.5 \), \( \Gamma = 20 \), \( \tau_{2} = \tau_{3} = \tau_{4} = 0.009 \) and \( \lambda = \nu = 0.1 \).
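The example can be sketched as a closed-loop simulation that uses the design parameters and initial conditions listed above. Everything not stated in the text is an assumption: the standard flexible-joint model, the placeholder values \( J_{1} = J_{2} = 1 \), \( MgL = 5 \), \( K = 40 \) (not the Table 1 values), the scalar \( A_{0} = -1 \), the virtual controls derived from Steps 1 to \( n \) for this model, the combined law \( \dot{\hat{\theta}}_{1} = \bar{\Gamma}[\varphi S_{2} - \sigma(\hat{\theta}_{1} - \bar{\theta})] \) with \( \varphi = -\sin x_{1}/J_{1} \), explicit Euler integration with a step of \( 10^{-4} \) s, and filter states initialized at the virtual controls at \( t = 0 \).

```python
import math

# Hypothetical plant values (placeholders, NOT the Table 1 values).
J1, J2, MGL, K_S = 1.0, 1.0, 5.0, 40.0
A0 = -1.0                     # hypothetical scalar A_0 < 0 for the swapping filters
P = -1.0 / (2.0 * A0)         # solves P*A0 + A0*P = -1


def simulate(t_end=10.0, dt=1e-4):
    K1, K2, K3, K4 = 1.0, 80.0, 10.0, 100.0
    gbar, sigma, gamma, tau, lam, nu = 15.0, 0.5, 20.0, 0.009, 0.1, 0.1
    x1, x2, x3, x4 = 0.1, 0.0, 0.1 + MGL / K_S * math.sin(0.1), 0.0
    th_hat = th_bar = om0 = om = 0.0
    x2d = x3d = x4d = None            # DSC filter states, set at t = 0
    t, log = 0.0, []
    for _ in range(int(round(t_end / dt))):
        yd, dyd = 0.1 * math.sin(t), 0.1 * math.cos(t)
        phi = -math.sin(x1) / J1      # regressor of theta_1 = MgL in the x2 equation
        # Step 1
        S1 = x1 - yd
        xb2 = dyd - K1 * S1
        x2d = xb2 if x2d is None else x2d
        dx2d = (xb2 - x2d) / tau
        # Step 2 (theta_1 enters here)
        S2 = x2 - x2d
        xb3 = x1 + (J1 / K_S) * (dx2d - K2 * S2 - phi * th_hat)
        x3d = xb3 if x3d is None else x3d
        dx3d = (xb3 - x3d) / tau
        # Step 3
        S3 = x3 - x3d
        xb4 = dx3d - K3 * S3
        x4d = xb4 if x4d is None else x4d
        dx4d = (xb4 - x4d) / tau
        # Step 4: actual control torque
        S4 = x4 - x4d
        u = J2 * (dx4d - K4 * S4) - K_S * (x1 - x3)
        # x-swapping identifier on x2' = f + phi * theta_1
        f = -(K_S / J1) * (x1 - x3)
        A = A0 - lam * phi * phi * P
        e = x2 + om0 - om * th_bar
        dth_bar = gamma * om * e / (1.0 + nu * om * om)
        dth_hat = gbar * (phi * S2 - sigma * (th_hat - th_bar))  # assumed combined law
        # plant derivatives (assumed standard flexible-joint model)
        dx = (x2, f + phi * MGL, x4, (K_S / J2) * (x1 - x3) + u / J2)
        dom0, dom = A * (om0 + x2) - f, A * om + phi
        # explicit Euler step for all states
        x1, x2, x3, x4 = (x1 + dt * dx[0], x2 + dt * dx[1],
                          x3 + dt * dx[2], x4 + dt * dx[3])
        x2d, x3d, x4d = x2d + dt * dx2d, x3d + dt * dx3d, x4d + dt * dx4d
        om0, om = om0 + dt * dom0, om + dt * dom
        th_hat, th_bar = th_hat + dt * dth_hat, th_bar + dt * dth_bar
        t += dt
        log.append((t, S1, th_hat))
    return log


log = simulate()
late = [abs(s1) for t, s1, _ in log if t > 8.0]
print(f"max |S1| for t > 8 s: {max(late):.2e}, final theta_hat: {log[-1][2]:.3f}")
```

Only the tracking behaviour is checked here; how quickly \( \hat{\theta}_{1} \) approaches \( \theta_{1} \) depends on the excitation provided by the regressor \( \sin x_{1}/J_{1} \) and on the placeholder parameter values.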

Numerical simulation results are shown in Figs. 2–11. Figures 2–5 show the actual and desired trajectories of the angular positions and velocities of the link and the motor shaft. Figures 6–9 show the trajectories of the surfaces, Fig. 10 shows the control input \( u \), and Fig. 11 shows the estimated parameter \( \hat{\theta}_{1} \). These results show that the actual trajectories converge towards the desired trajectories, that the errors converge to zero, and that the estimated parameter \( \hat{\theta}_{1} \) converges towards \( \theta_{1} \), indicating that the proposed method achieves good tracking performance.

6 Conclusion

In this paper, an adaptive backstepping controller using a combined direct and indirect \( \sigma \)-modification adaptation law, based on a gradient update law, has been designed with the DSC technique for a class of parametric strict-feedback nonlinear systems. The proposed approach eliminates the explosion of complexity of the traditional backstepping approach. The stability analysis shows that uniform ultimate boundedness of all signals in the closed-loop system is guaranteed and that the tracking error can be made arbitrarily small by adjusting the control design parameters. Numerical simulation results demonstrate the effectiveness of the proposed approach.

References

1. Sun, G., Wang, D., Li, X., Peng, Z.: A DSC approach to adaptive neural network tracking control for pure-feedback nonlinear systems. Appl. Math. Comput. 219(11), 6224–6235 (2013)

2. Yip, P.P., Hedrick, J.K.: Adaptive dynamic surface control: a simplified algorithm for adaptive backstepping control of nonlinear systems. Int. J. Control 71(5), 959–979 (1998)

3. Kanellakopoulos, I., Kokotovic, P.V., Morse, A.S.: Systematic design of adaptive controllers for feedback linearizable systems. IEEE Trans. Autom. Control 36(11), 1241–1253 (1991)

4. Krstic, M., Kanellakopoulos, I., Kokotovic, P.V.: Adaptive nonlinear control without overparametrization. Syst. Control Lett. 19(3), 177–185 (1992)

5. Krstic, M., Kanellakopoulos, I., Kokotovic, P.V.: Nonlinear and Adaptive Control Design. Wiley-Interscience, New York (1995)

6. Swaroop, D., Gerdes, J.C., Yip, P.P., Hedrick, J.K.: Dynamic surface control of nonlinear systems. In: Proceedings of the American Control Conference, Albuquerque, New Mexico, pp. 3028–3034 (1997)

7. Swaroop, D., Hedrick, J.K., Yip, P.P., Gerdes, J.C.: Dynamic surface control for a class of nonlinear systems. IEEE Trans. Autom. Control 45(10), 1893–1899 (2000)

8. Zhang, T.P., Ge, S.S.: Adaptive dynamic surface control of nonlinear systems with unknown dead zone in pure feedback form. Automatica 44(7), 1895–1903 (2008)

9. Soukkou, Y., Labiod, S.: Adaptive backstepping control using combined direct and indirect adaptation based gradient update law for a single-link flexible-joint robot. In: 5th International Conference on Electrical Engineering and Automatic Control, Setif, Algeria, 24–26 November 2013

10. Soukkou, Y., Labiod, S.: Adaptive backstepping control using combined direct and indirect adaptation based least squares update law for a single-link flexible-joint robot. In: Proceedings of the 3rd International Conference on Industrial Engineering and Manufacturing, Batna, Algeria, pp. 272–279, 11–13 May 2014

11. Soukkou, Y.: Commande adaptative par backstepping d’une classe des systèmes non linéaires incertains. Magister Thesis, University of Jijel (2014)

12. Krstic, M., Kokotovic, P.V.: Adaptive nonlinear control with nonlinear swapping. In: Proceedings of the 32nd Conference on Decision and Control, San Antonio, Texas (1993)

13. Krstic, M., Kokotovic, P.V.: Adaptive nonlinear design with controller-identifier separation and swapping. IEEE Trans. Autom. Control 40(3), 426–440 (1995)

14. Oort, E.R.V.: Adaptive backstepping control and safety analysis for modern fighter aircraft. Ph.D. Thesis, Delft University of Technology, Netherlands (2011)

15. Hou, M.Z., Duan, G.R.: Robust adaptive dynamic surface control of uncertain nonlinear systems. Int. J. Control Autom. Syst. 9(1), 161–168 (2011)

16. Li, Y., Tong, S., Li, T.: Fuzzy adaptive dynamic surface control for a single link flexible joint robot. Nonlinear Dyn. 70(3), 2035–2048 (2012)

17. Nicosia, S., Tomei, P.: A tracking controller for flexible joint robots using only link position feedback. IEEE Trans. Autom. Control 40(5), 885–890 (1995)


Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Soukkou, Y., Labiod, S. (2017). Adaptive Backstepping Control Using Combined Direct and Indirect \( \sigma \)–Modification Adaptation. In: Chadli, M., Bououden, S., Zelinka, I. (eds) Recent Advances in Electrical Engineering and Control Applications. ICEECA 2016. Lecture Notes in Electrical Engineering, vol 411. Springer, Cham. https://doi.org/10.1007/978-3-319-48929-2_2


DOI: https://doi.org/10.1007/978-3-319-48929-2_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-48928-5

Online ISBN: 978-3-319-48929-2

eBook Packages: Engineering, Engineering (R0)