Abstract

Many problems across several industries can be solved with combinatorial optimization. Among them, the Set Covering Problem (SCP) is one of the most representative: it is used in various branches of engineering and science to find a set of solutions that satisfies the needs expressed in the constraints at the lowest possible cost. This paper presents an algorithm inspired by binary black holes (BBH) to solve known SCP instances from the OR-Library. The algorithm reproduces the behavior of black holes, using various operators to reach good solutions.

1 Introduction

The SCP is one of the 21 classic NP-hard problems and represents a variety of optimization strategies in various fields. Since its formulation in the 1970s it has been used, for example, to minimize material loss in the metallurgical industry [1], to prepare crews for urban transportation planning [2], to improve the safety and robustness of data networks [3], to focus public policies [4] and in structural calculations for construction [5]. The problem was introduced in 1972 by Karp [6] and is used to optimize the location of elements that provide spatial coverage, such as community services, telecommunications antennas and others.

The present work applies a strategy based on a binary algorithm inspired by black holes to solve the SCP, developing operators that implement an analog of some characteristics of these celestial bodies in order to support the behavior of the algorithm and improve the search for the optimum. This type of algorithm was first presented by Abdolreza Hatamlou in September 2012 [7], and some later publications deal with applications and improvements. This paper details the methodology, the operators developed, the experimental results and the execution parameters, and offers some brief conclusions about them, for both the original version and the proposed improvements.

The following section explains the Set Covering Problem (SCP) in detail and briefly describes black holes and their behavior. Then, Sect. 3 reviews the structure and behavior of the algorithm. Sections 4 and 5 present the experimental results and the conclusions drawn from them.

2 Conceptual Context

This section describes the concepts needed to understand the SCP and the basic nature of black holes, both of which are necessary to understand the subsequent coupling of the two.

2.1 SCP Explanation and Detail

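Consider a binary matrix A of m rows and n columns (\(a_{ij}\)) and a vector C (\(c_j\)) of n columns containing the cost assigned to each column. The SCP can then be defined as:

\[ \min z = \sum_{j=1}^{n} c_j x_j \]

subject to

\[ \sum_{j=1}^{n} a_{ij} x_j \ge 1 \quad \forall i \in \{1, \ldots, m\} \]

where \(x_j \in \{0, 1\}\) takes the value 1 if column j is part of the solution and 0 otherwise.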

This ensures that each row is covered by at least one column and that there is a cost associated with it [8].
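As a minimal sketch of this definition, the following Python snippet checks the coverage constraint and evaluates the cost of a candidate solution. The function names `is_feasible` and `total_cost` and the toy instance of 3 rows and 3 columns are illustrative assumptions, not part of the original work.

```python
from typing import List


def is_feasible(a: List[List[int]], x: List[int]) -> bool:
    """Return True if every row of `a` is covered by a column selected in `x`."""
    return all(
        any(a_ij == 1 and x_j == 1 for a_ij, x_j in zip(row, x))
        for row in a
    )


def total_cost(c: List[float], x: List[int]) -> float:
    """Sum the costs c_j of the columns selected in x (those with x_j = 1)."""
    return sum(c_j * x_j for c_j, x_j in zip(c, x))


# Hypothetical toy instance: 3 rows, 3 columns.
A = [
    [1, 0, 1],
    [0, 1, 1],
    [1, 1, 0],
]
C = [2, 3, 4]

print(is_feasible(A, [1, 1, 0]), total_cost(C, [1, 1, 0]))  # True 5
print(is_feasible(A, [0, 0, 1]), total_cost(C, [0, 0, 1]))  # False 4
```

The second candidate is cheaper but leaves the third row uncovered, so it is not a valid solution of the SCP.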

In practical terms, the SCP can be explained by the following example:

Imagine a floor where work is required on a series of pipes (represented in red) that run under 20 tiles, and where as few tiles as possible should be lifted. The situation is shown in the following diagram (Fig. 1).

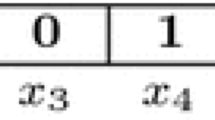

We define a variable \(X_{ij}\) that represents each tile by its coordinates in row i and column j. It takes the value 1 if the tile must be lifted and 0 otherwise, that is, \(X_{ij} \in \{0, 1\}\) with \(i \in \{1, \ldots, 5\}\) and \(j \in \{a, b, c, d\}\).

We define the “objective function” as:

\[ \min z = X_{1a} + X_{2a} + X_{3a} + X_{4a} + X_{5a} + X_{1b} + X_{2b} + X_{3b} + X_{4b} + X_{5b} + X_{1c} + X_{2c} + X_{3c} + X_{4c} + X_{5c} + X_{1d} + X_{2d} + X_{3d} + X_{4d} + X_{5d} \]

The following system represents the constraints of the problem (Table 1):

Each constraint corresponds to the tiles lying on top of one pipe, and only one tile per pipe needs to be lifted. The solution of the system is shown in Table 2.

In the end, only 5 tiles need to be lifted (Fig. 2):

This type of strategy has been widely used in aerospace turbine design [9], timetabling design [10], probabilistic queuing [11], geographic analysis [12], services location [13], scheduling [14] and many others [15].

A variety of “bio-inspired” algorithms mimic the behavior of living beings [16] to solve such problems, while others are inspired by elements of nature [15], by culture or by other sources.

2.2 Black Holes

Black holes result from the collapse of the mass of a large star which, after passing through several intermediate stages, is transformed into a body so dense that its immense gravity bends the surrounding space. They are called “black holes” because not even light escapes their attraction, which makes them undetectable in the visible spectrum. They are also known as “singularities”, since traditional physics loses its meaning inside them. Because of their immense gravity, they tend to be orbited by other stars in binary or multiple systems, gradually consuming part of the mass of the bodies in their orbit [17] (Fig. 3).

When a star or any other body approaches the black hole and crosses what is called the “event horizon”, it collapses into its interior and is completely absorbed without any possibility of escape, since all its mass and energy become part of the singularity (Fig. 4). This is because at that point the escape velocity equals the speed of light [17].

On the other hand, black holes also generate a type of radiation called “Hawking radiation”, in honor of its discoverer. This radiation has a quantum origin and implies a transfer of energy from the event horizon of the black hole to its immediate surroundings, causing a slight loss of mass of the dark body and an additional emission of energy towards nearby objects [18].

3 Algorithm

The algorithm presented by Hatamlou [7] approaches the search for solutions by building a set of stars called the “universe”, using a population-based scheme similar to those of genetic algorithms or particle swarm techniques. It proposes rotating the universe around the star with the best fitness, i.e., the one with the lowest value of a defined function called the “objective function”. The rotation is applied by a rotation operator that moves all stars in each iteration of the algorithm and determines in each cycle whether there is a new black hole to replace the previous one. This operation is repeated until the stopping criterion is met, and the last black hole found is the final solution proposed. Occasionally, a star may cross the limit defined by the radius of the event horizon [17]. In this case, the star collapses into the black hole and is removed from the universe, its place being taken by a new star. This stimulates the exploration of the solution space. The following is the proposed flow and the corresponding operators according to the initial version of the method (Fig. 5):
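The flow just described can be sketched as a generic loop in Python. This is only an outline under stated assumptions: the operators are passed in as functions (`rotate`, `collapses`, `new_star`), all of these names and signatures are hypothetical, and the stopping criterion is simplified to a fixed number of iterations.

```python
from typing import Callable, List

Star = List[int]


def black_hole_search(
    universe: List[Star],
    fitness: Callable[[Star], float],
    rotate: Callable[[Star, Star], Star],
    collapses: Callable[[float, float, List[float]], bool],
    new_star: Callable[[], Star],
    iterations: int,
) -> Star:
    """Skeleton of the loop; lower fitness is better."""
    stars = [list(s) for s in universe]
    # The black hole is the star with the lowest fitness.
    bh = min(range(len(stars)), key=lambda i: fitness(stars[i]))
    for _ in range(iterations):
        # Rotation: every star except the black hole moves towards it.
        stars = [
            s if i == bh else rotate(s, stars[bh])
            for i, s in enumerate(stars)
        ]
        # A star that is now strictly better becomes the new black hole.
        best = min(range(len(stars)), key=lambda i: fitness(stars[i]))
        if fitness(stars[best]) < fitness(stars[bh]):
            bh = best
        # Collapse: stars inside the event horizon are replaced by new ones.
        fits = [fitness(s) for s in stars]
        stars = [
            new_star() if i != bh and collapses(fits[bh], f_i, fits) else s
            for i, (s, f_i) in enumerate(zip(stars, fits))
        ]
    # The last black hole found is the proposed solution.
    return stars[bh]
```

Passing the operators as arguments keeps the skeleton independent of how rotation, collapse and star creation are actually implemented.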

3.1 Big Bang

This is the initial random creation of the universe. It corresponds to the creation of a fixed number of feasible binary vectors, i.e., vectors that comply with all the constraints defined in the problem.
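A possible way to obtain such a universe is sketched below in Python. The text does not state how feasibility is guaranteed at creation time, so the fix-up step (switching on a random covering column for each uncovered row) is an assumption, as are the name `big_bang` and its parameters.

```python
import random
from typing import List


def big_bang(a: List[List[int]], n_stars: int, rng: random.Random) -> List[List[int]]:
    """Create `n_stars` random feasible binary vectors for coverage matrix `a`.

    Assumes every row of `a` can be covered by at least one column.
    """
    n_cols = len(a[0])
    universe = []
    for _ in range(n_stars):
        star = [rng.randint(0, 1) for _ in range(n_cols)]
        for row in a:
            covered = any(a_ij and x_j for a_ij, x_j in zip(row, star))
            if not covered:
                # Assumption: an uncovered row is fixed by switching on
                # one randomly chosen column that covers it.
                candidates = [j for j, a_ij in enumerate(row) if a_ij]
                star[rng.choice(candidates)] = 1
        universe.append(star)
    return universe


# Hypothetical toy coverage matrix with 3 rows and 3 columns.
A = [[1, 0, 1], [0, 1, 1], [1, 1, 0]]
universe = big_bang(A, n_stars=5, rng=random.Random(0))
```

Because columns are only ever switched on, a row that is already covered stays covered, so each star is feasible once every row has been visited.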

3.2 Fitness Evaluation

For each star \(x_i\), the fitness is calculated by evaluating the objective function according to the initial definition of the problem. In other terms:

\[ f(x_i) = \sum_{j=1}^{n} c_j x_{ij} \]

where \(x_{ij}\) is the j-th binary component of star \(x_i\).

Recall that \(c_j\) is the cost of column j in the cost vector. In other words, the fitness of a star is the sum of the costs of the columns selected by that star. The black hole is the star with the lowest fitness among all existing stars at the time of the evaluation.
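The evaluation and the selection of the black hole can be illustrated with a short Python sketch. The names `fitness` and `find_black_hole`, the cost vector and the three stars are hypothetical, and ties are resolved in favor of the first star found, which is an assumption.

```python
from typing import List, Sequence


def fitness(star: Sequence[int], costs: Sequence[float]) -> float:
    """Sum of the costs of the columns selected by the star."""
    return sum(c_j * x_j for c_j, x_j in zip(costs, star))


def find_black_hole(universe: List[Sequence[int]], costs: Sequence[float]) -> int:
    """Index of the star with the lowest fitness (first one on ties)."""
    return min(range(len(universe)), key=lambda i: fitness(universe[i], costs))


costs = [2, 3, 4, 1]
universe = [
    [1, 1, 0, 0],  # fitness 2 + 3 = 5
    [0, 0, 1, 1],  # fitness 4 + 1 = 5
    [1, 0, 0, 1],  # fitness 2 + 1 = 3
]
print(find_black_hole(universe, costs))  # 2
```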

3.3 Rotation Operator

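The rotation operation is applied to the whole universe of stars \(x_i\) at iteration t, with the exception of the black hole, which is fixed in its position. Following Hatamlou [7], the operation sets the new position at t+1 as follows:

\[ x_i(t+1) = x_i(t) + \mathit{random} \times \left( x_{BH} - x_i(t) \right), \quad i = 1, 2, \ldots, N \]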

where random \(\in \) {0,1} changes in each iteration, \(x_i(t)\) and \(x_i(t+1)\) are the positions of star \(x_i\) at iterations t and t+1 respectively, \(x_{BH}\) is the location of the black hole in the search space and N is the number of stars (candidate solutions) that make up the universe. Note that the only exception to the rotation is the star designated as the black hole, which retains its position.
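For binary stars the moved position has to be mapped back to 0 or 1. The Python sketch below shows one possible way to do this and should not be read as the binarization actually used in this work: it assumes a random factor drawn uniformly from [0, 1) once per star, and it treats each moved component as the probability of setting that bit to 1. The name `rotate` and its parameters are hypothetical.

```python
import random
from typing import List


def rotate(star: List[int], black_hole: List[int], rng: random.Random) -> List[int]:
    """Move a binary star towards the black hole and binarize the result."""
    r = rng.random()  # assumed: one random factor per star and iteration
    new_star = []
    for x_j, bh_j in zip(star, black_hole):
        moved = x_j + r * (bh_j - x_j)  # continuous value in [0, 1]
        # Assumed binarization: `moved` is the probability of a 1.
        new_star.append(1 if rng.random() < moved else 0)
    return new_star


rng = random.Random(7)
black_hole = [1, 0, 1, 1, 0]
star = [1, 1, 0, 1, 0]
print(rotate(star, black_hole, rng))
```

With this choice, bits on which the star and the black hole already agree never change, and the remaining bits switch to the black hole's value with probability equal to the random factor.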

3.4 Collapse into the Black Hole

When a star comes within a distance of the black hole called the event horizon, it is captured and permanently absorbed by the black hole, and is replaced by a new randomly generated star. In other words, a star is considered to collapse when it crosses the Schwarzschild radius (R), defined following Hatamlou [7] as:

\[ R = \frac{f_{BH}}{\sum_{i=1}^{N} f_i} \]

where \(f_{BH}\) is the value of the fitness of the black hole and \(f_{i}\) is the ith star fitness. N is the number of stars in the universe.
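As a small worked example, the radius can be computed in Python as the fitness of the black hole divided by the sum of the fitness values of all stars. Whether the sum includes the black hole itself is not stated here, so including it is an assumption, as are the function name and the sample values.

```python
from typing import Sequence


def event_horizon_radius(fitnesses: Sequence[float]) -> float:
    """Radius R = f_BH / sum of all f_i, where f_BH is the lowest fitness.

    Assumes strictly positive fitness values and that the black hole is
    counted in the sum.
    """
    f_bh = min(fitnesses)
    return f_bh / sum(fitnesses)


# Hypothetical fitness values of four stars; the black hole has fitness 10.
print(event_horizon_radius([10, 12, 15, 13]))  # 0.2
```

Under this reading R never exceeds 1/N, because the black hole has the lowest fitness of the N stars.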

3.5 Implementation

The algorithm was implemented with an I-CASE tool, generating Java programs and using a relational database as a repository for the input data and the information gathered during executions. The parameters finally selected result both from the needs of the original design of the algorithm and from improvements made as a product of the tests performed. In particular, we attempted to improve the exploration capacity of the metaheuristic [19]. The findings are contrasted with tables of known optimal values [8] in order to estimate quantitatively the effectiveness of the presented metaheuristic.

The process begins with the random generation of a population of binary vectors (stars) in a step that we call the “big bang”. With a universe of m stars formed by vectors of n binary digits, the algorithm must identify the star with the best fitness value, i.e., the one for which the objective function yields the lowest result. The next step is to rotate the other stars around the detected black hole until another star presents a better fitness and takes its place.

The number of stars generated remains fixed during the iterations, even though many vectors (stars) are replaced by one of the operators. The binarization method that achieved the best results was adopted as the standard for the subsequent benchmarks.

3.6 Feasibility and Unfeasibility

A star is feasible if it meets each of the constraints defined by the matrix A. Where infeasibility was detected, we opted to repair the vector so that it complies with the constraints. We implemented a repair function in two phases, ADD and DROP, as a way to optimize the vector in terms of coverage and cost. The first phase adds to the vector the column that provides the missing coverage at the lowest cost, while the second removes those columns that only add cost and provide no coverage.
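The two phases can be sketched in Python as follows. The order in which rows and columns are visited is not specified above, so visiting rows in index order and trying to drop the most expensive columns first are assumptions. The name `repair` and the toy instance are hypothetical.

```python
from typing import List


def repair(a: List[List[int]], c: List[float], x: List[int]) -> List[int]:
    """Return a feasible copy of `x` using an ADD phase and a DROP phase.

    Assumes every row of `a` can be covered by at least one column.
    """
    m, n = len(a), len(a[0])
    x = list(x)

    def covered(i: int) -> bool:
        return any(a[i][j] and x[j] for j in range(n))

    # ADD: for each uncovered row, switch on its cheapest covering column.
    for i in range(m):
        if not covered(i):
            candidates = [j for j in range(n) if a[i][j]]
            x[min(candidates, key=lambda j: c[j])] = 1

    # DROP: remove columns whose rows are all covered by other columns,
    # trying the most expensive columns first (assumed order).
    for j in sorted(range(n), key=lambda j: c[j], reverse=True):
        if x[j]:
            x[j] = 0
            if not all(covered(i) for i in range(m)):
                x[j] = 1  # the column was needed, restore it
    return x


A = [[1, 0, 1], [0, 1, 1], [1, 1, 0]]
C = [2, 3, 4]
print(repair(A, C, [0, 0, 1]))  # [1, 0, 1]
print(repair(A, C, [1, 1, 1]))  # [1, 1, 0]
```

In the first call the ADD phase covers the third row with the cheapest available column. In the second call the DROP phase removes the most expensive column because the other two already cover every row.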

3.7 Event Horizon

One of the main difficulties in implementing this operator is that the authors do not specify it precisely, referring to the determination of vector distances or to some other method. However, in a 2015 publication, Farahmandian and Hatamlou [20] propose determining the distance of a star \(x_i\) to the black hole through their fitness values and comparing it with the radius R. That is, a star \(x_i\) collapses if the absolute value of the difference between the fitness of the black hole and its own fitness is less than the radius R:

\[ \left| f_{BH} - f_i \right| < R \]
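The collapse test can be sketched in Python as shown below. The radius is received as a parameter, and the factory `new_star`, which stands for the random generation of a replacement star, is a hypothetical name. Exempting the black hole itself from the test is an assumption.

```python
from typing import Callable, List, Sequence


def collapse(
    universe: List[List[int]],
    fitnesses: Sequence[float],
    radius: float,
    new_star: Callable[[], List[int]],
) -> List[List[int]]:
    """Replace every star whose fitness lies within `radius` of the black hole's."""
    bh = min(range(len(universe)), key=lambda i: fitnesses[i])
    f_bh = fitnesses[bh]
    result = []
    for i, (star, f_i) in enumerate(zip(universe, fitnesses)):
        if i != bh and abs(f_bh - f_i) < radius:
            result.append(new_star())  # absorbed and replaced
        else:
            result.append(star)
    return result


# Hypothetical values: the second star is within the radius, the third is not.
universe = [[1, 0], [0, 1], [1, 1]]
print(collapse(universe, [3.0, 3.1, 5.0], radius=0.5, new_star=lambda: [0, 0]))
# [[1, 0], [0, 0], [1, 1]]
```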

4 Experimental Results

The original algorithm was tested by running benchmark sets 4, 5, 6, A, B, C, D, NRE, NRF, NRG and NRH from the OR-Library [21]. Each of these data sets was run 30 times with the same parameters [22], with the following results:

Here \(Z_{BKS}\) is the known optimum for the instance, \(Z_{min}\) is the minimum value found, \(Z_{max}\) is the maximum value found, \(Z_{avg}\) is the average value and \(Z_{RPD}\) is the percentage of deviation from the optimum (Table 3).
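The deviation can be computed as in the following Python sketch. It assumes the usual definition of relative percentage deviation, taken between the minimum value found and the known optimum, since the exact formula is not given here. The function name and the sample values are hypothetical.

```python
def rpd(z_bks: float, z_min: float) -> float:
    """Relative percentage deviation of z_min from the known optimum z_bks."""
    return 100.0 * (z_min - z_bks) / z_bks


# Hypothetical values: known optimum 512, best value found 520.
print(round(rpd(512, 520), 2))  # 1.56
```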

5 Analysis and Conclusions

Comparing the experimental results with the best reported in the literature [24], we can see that the results are acceptably close to the best known fitness for benchmarks 5 and 6, and far from it for the final ones. Series 4 and 5 are worth noting, since they reached the optimum in a couple of instances. The first benchmarks show deviations between 0 % and 61.54 %, while the latter reach a deviation of 3,301.59 %. In both cases the algorithm was executed more than 30 times, with 20,000 iterations each. Rapid initial convergence is achieved, with very significant improvements in the early iterations. Later improvements are much more gradual and require the execution of the operators that stimulate exploration, such as collapse and Hawking radiation. This suggests that the algorithm tends to fall into local optima, from which it cannot escape without the help of exploration components. To illustrate these trends, some benchmark performance plots are presented (Fig. 6):

Although the benchmarks with poor results show significant percentage deviations from the known optima, in absolute terms the differences are small considering the values from which the algorithm started iterating. These tests probably require a greater number of iterations to improve their results, since the values clearly indicate a consistent downward trend, the number of variables is higher and the difference between the optimum and the starting values is wider. An interesting element of the analysis is that the gap between the best and the worst outcome is small and relatively constant in practically all benchmarks, indicating that the algorithm tends continuously towards an improvement of results and that the minimums are not just a product of fortunate random values. The following chart illustrates this (Fig. 7):

On the other hand, it is also important to note that in those initial tests in which the stochastic component was greater than the one finally postulated as optimal, the algorithm performed worse, finding much higher optima, probably because of its inability to exploit areas with better potential solutions. For this reason, the parameters that define the roulette for decision-making use quite small ranges, so that the random component remains moderate. Other notable elements are the large differences in the results obtained with different transfer and binarization methods, some of which simply conspired against acceptable results. Various possibilities, already described, were explored to find a satisfactory combination (Fig. 8).

Some lines of research that may be of interest for improving the results are the development of a better way to determine the concept of distance, with criteria better tailored to the nature of the algorithm, as well as a more sophisticated mutation method for those stars subjected to Hawking radiation. Additionally, some authors extend the rotation operator with additional elements such as the mass and electric charge of black holes [25], which was not considered in this work because of the scarce existing documentation.

References

Vasko, F., Wolf, F., Stott, K.: Optimal selection of ingot sizes via set covering. Oper. Res. 35(3), 346–353 (1987)

Desrochers, M., Soumis, F.: A column generation approach to the urban transit crew scheduling problem. Transp. Sci. 23(1), 1–13 (1989)

Bellmore, M., Ratliff, H.D.: Optimal defense of multi-commodity networks. Manage. Sci. 18(4–part–i), B-174 (1971)

Garfinkel, R.S., Nemhauser, G.L.: Optimal political districting by implicit enumeration techniques. Manage. Sci. 347, 267–276 (2015)

Amini, F., Ghaderi, P.: Hybridization of harmony search and ant colony optimization for optimal locating of structural dampers. Appl. Soft Comput. 13(5), 2272–2280 (2013)

Karp, R.: Reducibility among combinatorial problems (1972). http://www.cs.berkeley.edu/~luca/cs172/karp.pdf

Hatamlou, A.: Black hole: a new heuristic optimization approach for data clustering. Inf. Sci. 222, 175–184 (2013)

Ataim, P.: Resolución del problema de set-covering usando un algoritmo genético (2005)

Wang, B.S.: Caro and Crawford, “Multi-objective robust optimization using a postoptimality sensitivity analysis technique: application to a wind turbine design”. J. Mech. Design 137(1), 11 (2015)

Crawford, B., Soto, R., Johnson, F., Paredes, F.: A timetabling applied case solved with ant colony optimization. In: Silhavy, R., Senkerik, R., Oplatkova, Z.K., Prokopova, Z., Silhavy, P. (eds.) Artificial Intelligence Perspectives and Applications. AISC, vol. 347, pp. 267–276. Springer, Heidelberg (2015)

Marianov, R.: The queuing probabilistic location set covering problem and some extensions. Department of Electrical Engineering, Pontificia Universidad Católica de Chile (2002)

ReVelle, C., Toregas, C., Falkson, L.: Applications of the location set-covering problem. Geog. Anal. 8(1), 65–76 (1976)

Walker, W.: Using the set-covering problem to assign fire companies to fire houses. The New York City-Rand Institute (1974)

Crawford, B., Soto, R., Johnson, F., Misra, S., Paredes, F.: The use of metaheuristics to software project scheduling problem. In: Murgante, B., Misra, S., Rocha, A.M.A.C., Torre, C., Rocha, J.G., Falcão, M.I., Taniar, D., Apduhan, B.O., Gervasi, O. (eds.) ICCSA 2014, Part V. LNCS, vol. 8583, pp. 215–226. Springer, Heidelberg (2014)

Soto, R., Crawford, B., Galleguillos, C., Barraza, J., Lizama, S., Muñoz, A., Vilches, J., Misra, S., Paredes, F.: Comparing cuckoo search, bee colony, firefly optimization, and electromagnetism-like algorithms for solving the set covering problem. In: Gervasi, O., Murgante, B., Misra, S., Gavrilova, M.L., Rocha, A.M.A.C., Torre, C., Taniar, D., Apduhan, B.O. (eds.) ICCSA 2015. LNCS, vol. 9155, pp. 187–202. Springer, Heidelberg (2015)

Crawford, B., Soto, R., Peña, C., Riquelme-Leiva, M., Torres-Rojas, C., Misra, S., Johnson, F., Paredes, F.: A comparison of three recent nature-inspired metaheuristics for the set covering problem. In: Gervasi, O., Murgante, B., Misra, S., Gavrilova, M.L., Rocha, A.M.A.C., Torre, C., Taniar, D., Apduhan, B.O. (eds.) ICCSA 2015. LNCS, vol. 9158, pp. 431–443. Springer, Heidelberg (2015)

Hawking, S.: Agujeros negros y pequeños universos. Planeta (1994)

Hawking, S., Jackson, M.: A brief history of time. Dove Audio (1993)

Crawford, B., Soto, R., Riquelme-Leiva, M., Peña, C., Torres-Rojas, C., Johnson, F., Paredes, F.: Modified binary firefly algorithms with different transfer functions for solving set covering problems. In: Silhavy, R., Senkerik, R., Oplatkova, Z.K., Prokopova, Z., Silhavy, P. (eds.) Software Engineering in Intelligent Systems. AISC, vol. 349, pp. 307–315. Springer, Heidelberg (2015)

Farahmandian, M., Hatamlou, A.: Solving optimization problems using black hole algorithm. J. Adv. Comput. Sci. Technol. 4(1), 68–74 (2015)

Beasley, J.: Or-library (1990). http://people.brunel.ac.uk/~mastjjb/jeb/orlib/scpinfo.html

Beasley, J.E.: An algorithm for set covering problem. Euro. J. Oper. Res. 31(1), 85–93 (1987)

Gervasi, O., Murgante, B., Misra, S., Gavrilova, M.L., Rocha, A.M.A.C., Torre, C., Taniar, D., Apduhan, B.O. (eds.): ICCSA 2015. LNCS, vol. 9155. Springer, Heidelberg (2015)

Beasley, J.E.: A Lagrangian heuristic for set-covering problems. Naval Res. Logistics 1(37), 151–164 (1990)

Nemati, M., Salimi, R., Bazrkar, N.: Black holes algorithm: a swarm algorithm inspired of black holes for optimization problems. IAES Int. J. Artif. Intell. (IJ-AI) 2(3), 143–150 (2013)

Acknowledgements

Broderick Crawford is supported by Grant CONICYT / FONDECYT / REGULAR / 1140897. Ricardo Soto is supported by Grant CONICYT / FONDECYT / REGULAR / 1160455. Sebastián Mansilla, Álvaro Gómez and Juan Salas are supported by Postgraduate Grant Pontificia Universidad Católica de Valparaiso 2015 (INF-PUCV 2015).

© 2016 Springer International Publishing Switzerland

Rubio, Á.G. et al. (2016). Solving the Set Covering Problem with a Binary Black Hole Inspired Algorithm. In: Gervasi, O., et al. Computational Science and Its Applications – ICCSA 2016. ICCSA 2016. Lecture Notes in Computer Science(), vol 9786. Springer, Cham. https://doi.org/10.1007/978-3-319-42085-1_16

DOI: https://doi.org/10.1007/978-3-319-42085-1_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-42084-4

Online ISBN: 978-3-319-42085-1
