Abstract

Optical Long Baseline Interferometry provides unrivalled angular resolution on bright and compact astrophysical sources. The link between the observables (interferometric phase and contrast) and the image of the source is a Fourier transform, first expressed by van Cittert and Zernike. Depending on the source size and the amount of information collected, the analysis of these Fourier components allows a measurement of the typical source size, a parametric modelling of its spatial structures, or a model-independent image reconstruction. In the past decades, optical long baseline interferometry has provided fundamental measurements for astronomy (e.g. Cepheid distances, surface-brightness relations) as well as iconic results such as the first images of stellar surfaces other than the Sun. Optical long baseline interferometers exist in both the Northern and Southern hemispheres and are open to the astronomical community with a modern level of support. In this chapter we introduce the fundamental principles of optical interferometry and the currently available facilities.

Keywords

- Spatial Frequency

- Optical Path Difference

- Stellar Surface

- High Angular Resolution

- Baseline Interferometry

4.1 Linking the Object to the Interference Fringes

The purpose of Optical Long Baseline Interferometry (OLBI) is to overcome the diffraction limit of classical single-aperture telescopes by coherently combining the light coming from several small apertures separated by large distances. The link between the interference fringes (what an interferometer measures) and the object image (what the astronomer is interested in) can be expressed mathematically by modelling three simple, progressive steps:

- interference fringes from a single emitter,

- linear relation between a small displacement of the emitter on the sky and the displacement of the fringes,

- integration over many non-coherent emitters to build an extended source.

At the end of these three steps, we obtain the Van Cittert–Zernike theorem.

Fig. 4.1 A schematic representation of a two-telescope optical interferometer observing an extended source. At the output of the interferometer, the fringe patterns created by the individual emitters are slightly displaced with respect to each other. This results in a reduced fringe contrast when integrating over all the emitters that compose the source. The mathematical relationship between the source angular brightness distribution and the resulting fringe contrast is given by the so-called Van Cittert–Zernike theorem

4.1.1 Interference of a Single Emitter

The light travels through the two arms of the interferometer (Fig. 4.1). The wave from one telescope is delayed by the projection of the baseline \(\mathbf{B}\) in the direction of observation \(\mathbf{S}\). The beam of the second telescope is delayed artificially by the so-called delay lines by a variable quantity δ.

The intensity at the recombined output of the interferometer is given by the superposition of these fields:

The two waves interfere because they originate from the same emitter. We suppose here that the temporal coherence of the light is infinite, which corresponds to a hypothetical monochromatic wave (first hypothesis). It is beyond the scope of this chapter to explore the behaviour of polychromatic light. The flux measured at the output of the interferometer can therefore be written as:

The measured intensity depends on the optical path difference (OPD), hereafter \(\varDelta =\mathbf{ B} \cdot \mathbf{ S}-\delta\). Depending on the OPD, the interference is constructive (Δ = 0) or destructive (Δ = λ∕2). Equation 4.4 describes the so-called fringe pattern. Here I rewrite this cosine pattern as the real part of the OPD modulation and introduce the total photometry \(I =\langle AA^{\ast}\rangle\), which simplifies the notation that follows:
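The relations referred to in this section can be written out explicitly. The following is a reconstruction under the monochromatic hypothesis rather than a verbatim copy of the numbered equations: the common amplitude \(A\) on both arms and the omission of the common time-dependent factor are notational assumptions, and \(I_{\mathrm{out}}\) is a label introduced here for the output flux.

\[
E_{1} = A\,\exp \left(\frac{2i\pi }{\lambda }\,\mathbf{B} \cdot \mathbf{S}\right),\qquad E_{2} = A\,\exp \left(\frac{2i\pi }{\lambda }\,\delta \right)
\]

\[
I_{\mathrm{out}} =\langle (E_{1} + E_{2})(E_{1} + E_{2})^{\ast}\rangle = 2\langle AA^{\ast}\rangle + 2\langle AA^{\ast}\rangle \cos \left(\frac{2\pi }{\lambda }\,(\mathbf{B} \cdot \mathbf{S}-\delta )\right)
\]

\[
I_{\mathrm{out}}(\varDelta ) = 2I\left[1 +\mathrm{Re}\left\{\exp \left(\frac{2i\pi \varDelta }{\lambda }\right)\right\}\right],\qquad I =\langle AA^{\ast}\rangle
\]

The cosine term equals +1 at Δ = 0 and −1 at Δ = λ∕2, which recovers the constructive and destructive cases quoted above.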

4.1.2 Linearity Between the Emitter and the Fringes Displacements

The next step is to understand how the fringe pattern changes when the emitter is slightly displaced in the plane of the sky. We assume that the emitter displacement \(\mathbf{s}\) is a small angle, \(\vert \mathbf{s}\vert \ll 1\) rad (the source is compact on the sky; second hypothesis), and that the internal delay δ is unchanged. The fringe pattern is then displaced at the recombination point such that:

The fringe displacement is proportional to the emitter angular displacement and to the baseline projected onto the plane of the sky. One can think of the whole interferometer as a giant Young's double-slit experiment, which is another way to introduce this mathematical formalism. The key concept is that the fringe displacement is proportional to the angular displacement of the emitter:

where the proportionality factor \(\mathbf{f} =\mathbf{ B}_{\perp }/\lambda\) is often called the spatial frequency. We will now see why.
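The two relations of this section can be sketched as follows, using the OPD \(\varDelta\) defined earlier. Writing the displaced direction as \(\mathbf{S} +\mathbf{ s}\) is an assumption about notation, and the result holds to first order in \(\vert \mathbf{s}\vert\).

\[
\varDelta (\mathbf{s}) =\mathbf{ B} \cdot (\mathbf{S} +\mathbf{ s}) -\delta =\varDelta +\mathbf{ B} \cdot \mathbf{ s} =\varDelta +\mathbf{ B}_{\perp }\cdot \mathbf{ s}
\]

\[
\frac{2\pi }{\lambda }\,\varDelta (\mathbf{s}) = \frac{2\pi }{\lambda }\,\varDelta + 2\pi \,\mathbf{f} \cdot \mathbf{ s}
\]

The last step of the first line uses the fact that a small displacement \(\mathbf{s}\) on the sky is perpendicular to \(\mathbf{S}\), so only the projected baseline contributes. An emitter displaced by \(\lambda /\vert \mathbf{B}_{\perp }\vert\) along the baseline direction shifts the fringes by exactly one period. For a hypothetical projected baseline of 100 m at λ = 1.65 μm, this angle is about 3.4 mas.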

4.1.3 Integration Over Many Emitters

The final step is to consider an extended target as a collection of emitters. We therefore integrate the previous equation over all angles within the target image. To do so, we suppose that the individual emitters have zero mutual coherence (third hypothesis). This is correct if the source has no spatial coherence, which is always true for astronomical objects (no spatially resolved optical sources equivalent to masers in the radio have been observed so far) [Note 1]. The integration gives:
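A reconstruction of this integral, consistent with the definitions that follow, is given here. The label \(I_{\mathrm{out}}\) for the output flux, the sign of the exponent in the Fourier kernel, and the normalisation of \(I(\mathbf{s})\) as the mean output flux contributed by the emitter at \(\mathbf{s}\) are assumed conventions:

\[
I_{\mathrm{out}}(\varDelta ) =\int I(\mathbf{s})\left[1 +\mathrm{Re}\left\{e^{2i\pi \varDelta /\lambda }\,e^{2i\pi \mathbf{f}\cdot \mathbf{s}}\right\}\right]\mathrm{d}\mathbf{s} = I_{0}\left[1 +\mathrm{Re}\left\{V (\mathbf{f})\,e^{2i\pi \varDelta /\lambda }\right\}\right]
\]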

with \(I_{0} =\int I(\mathbf{s})\mathrm{d}\mathbf{s}\) the total flux of the source and \(V (\mathbf{f}) =\mathrm{ FT}\{I(\mathbf{s})\}/I_{0}\) its normalized Fourier transform at the spatial frequency \(\mathbf{f}\). Thus, once integrated over all the emitters of the source, the new contrast of the fringe pattern is the Fourier component of the angular brightness distribution of the source, at the spatial frequency \(\mathbf{f}\) defined by the projected baseline. This is the so-called Van Cittert–Zernike theorem.
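The theorem can be checked numerically on a toy source. The sketch below, in Python with only the standard library, sums the fringe patterns of a few mutually incoherent emitters along one dimension and compares the resulting fringe contrast with the modulus of the normalised Fourier component. The emitter fluxes and positions, the 100 m projected baseline and the 1.65 μm wavelength are hypothetical values chosen for illustration, and the sign of the Fourier exponent is an assumed convention.

```python
import cmath
import math

MAS = math.pi / 180.0 / 3600.0 / 1000.0  # one milliarcsecond in radians


def fringe_pattern(opd_phases, emitters, f):
    """Sum the fringe patterns of mutually incoherent emitters.

    opd_phases : values of 2*pi*OPD/lambda (rad) scanned by the delay line
    emitters   : list of (flux, position along the baseline direction in rad)
    f          : spatial frequency B_perp / lambda (rad^-1)
    """
    return [
        sum(flux * (1.0 + math.cos(phi + 2.0 * math.pi * f * s))
            for flux, s in emitters)
        for phi in opd_phases
    ]


def complex_visibility(emitters, f):
    """Normalised Fourier component of the emitter distribution at f."""
    total = sum(flux for flux, _ in emitters)
    return sum(flux * cmath.exp(2j * math.pi * f * s)
               for flux, s in emitters) / total


def measured_contrast(pattern):
    """Fringe contrast (Imax - Imin) / (Imax + Imin)."""
    return (max(pattern) - min(pattern)) / (max(pattern) + min(pattern))


# Hypothetical 1D source: three emitters spread over a few mas.
emitters = [(1.0, -1.0 * MAS), (0.6, 0.4 * MAS), (0.3, 1.5 * MAS)]
f = 100.0 / 1.65e-6  # B_perp = 100 m, lambda = 1.65 micron
phases = [2.0 * math.pi * k / 2000 for k in range(2000)]

contrast = measured_contrast(fringe_pattern(phases, emitters, f))
vis = complex_visibility(emitters, f)
print(f"fringe contrast = {contrast:.4f}, |V(f)| = {abs(vis):.4f}")
```

The two printed numbers should agree to within the sampling of the OPD scan, whatever emitters are chosen.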

Fig. 4.2 The three observing regimes of optical interferometry with a modern facility. The middle panels show an illustrative target visibility versus the radial spatial frequency (black line), overlaid with some observations (red stars). The right panels show the corresponding uv-sampling of the spatial frequencies

4.2 Interpreting Interferometric Observations

To summarise the foundation of the technique: by modulating the OPD, we form fringes whose contrast and phase define the measured complex visibility \(V (\mathbf{f})\), the latter being the 2D Fourier Transform of the angular brightness distribution \(I(\mathbf{s})\) of the source at the spatial frequency \(\mathbf{f} =\mathbf{ B}_{\perp }/\lambda\). How does this work in practice when measuring the properties of an astrophysical target?

As illustrated in Fig. 4.2, one can define three regimes: the source is partially resolved, the source is resolved with a sparse uv-sampling, and the source is resolved with a good uv-sampling (many \(\mathbf{f}\) observed).

4.2.1 Partially Resolved: Diameter Measurements

Measuring diameters was the first use of astronomical optical interferometry, by Michelson and Pease in 1921 [1]. The authors measured the apparent diameter of Betelgeuse, which is arguably the largest star in the sky. Measuring diameters is still one of the major applications of the technique. Its most important contributions to astrophysics are closely related to the distance ladder:

- The calibration of surface brightness relations for stars (link between color and intrinsic size, so intrinsic luminosity).

- The calibration of the Cepheid distance scale via a direct measurement of the size of their pulsation, with the Baade-Wesselink method.

The last 15 years have seen a renewed interest in stellar physics, in particular in the characterisation of stellar surface processes, to better interpret the discovery and characterisation of exoplanets. Of particular interest is the prospect of synergy between asteroseismology and OLBI for precise diameter measurements.

But let’s illustrate this section with a completely different, and more dynamic, example. Figure 4.3 shows the visibility versus spatial frequency for different dates after the explosion of Nova Delphini in 2013. All the visibility measurements are represented on the same scale. With time, the visibility drops more and more rapidly versus spatial frequency, indicating that the nova is growing in size. The basic ingredient is that the Fourier Transform of a disc with uniform brightness is the well-known Airy function. If this simple model is applicable to the observed object, it becomes possible to estimate the diameter even with only one visibility measurement. One can therefore provide an independent estimate of the diameter every night, with perhaps less than 1 h of 2-telescope observing time.
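The single-measurement diameter estimate can be sketched in a few lines. The example below assumes the usual uniform-disc profile \(V = 2J_{1}(x)/x\) with \(x =\pi \theta B/\lambda\), evaluates \(J_{1}\) from Bessel's integral so that only the Python standard library is needed, and inverts the profile by bisection on its first lobe. The function names and the numerical values (1 mas disc, 300 m baseline, 1.65 μm) are hypothetical and chosen for illustration only.

```python
import math

MAS = math.pi / 180.0 / 3600.0 / 1000.0  # one milliarcsecond in radians
FIRST_ZERO_J1 = 3.8317                   # approximate first zero of J1


def bessel_j1(x, n=200):
    """J1(x) from Bessel's integral (1/pi) * int_0^pi cos(t - x sin t) dt,
    evaluated with the trapezoidal rule (no SciPy needed)."""
    h = math.pi / n
    g = lambda t: math.cos(t - x * math.sin(t))
    total = 0.5 * (g(0.0) + g(math.pi)) + sum(g(k * h) for k in range(1, n))
    return total * h / math.pi


def disc_profile(x):
    """2 J1(x) / x, the visibility of a uniform disc."""
    return 1.0 if x == 0.0 else 2.0 * bessel_j1(x) / x


def uniform_disc_visibility(diameter_rad, baseline_m, wavelength_m):
    return disc_profile(math.pi * diameter_rad * baseline_m / wavelength_m)


def diameter_from_visibility(vis, baseline_m, wavelength_m):
    """Invert one visibility (0 < vis < 1) by bisection on the first lobe,
    where the profile decreases monotonically from 1 to 0."""
    lo, hi = 0.0, FIRST_ZERO_J1
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if disc_profile(mid) > vis:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi) * wavelength_m / (math.pi * baseline_m)


# Hypothetical observation: 1 mas disc, 300 m baseline, H band (1.65 micron).
vis = uniform_disc_visibility(1.0 * MAS, 300.0, 1.65e-6)
diameter = diameter_from_visibility(vis, 300.0, 1.65e-6)
print(f"V = {vis:.3f}, recovered diameter = {diameter / MAS:.3f} mas")
```

The inversion is only unambiguous on the first lobe: a visibility measured beyond the first zero is compatible with several diameters, which is one reason why the estimate relies on the simple model being applicable.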

The authors monitored the measured size as a function of time after the explosion. They revealed that the size increased by more than a factor of ten in 40 days. This very nice observational result was published in Nature [2]. Combining the expansion in apparent size with radial velocities, the authors determined the distance of the explosion to be 4.5 kpc.
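The arithmetic behind such a distance estimate is short enough to sketch. The example below assumes a spherical expansion, so that the velocity measured along the line of sight also applies in the plane of the sky; the distance is then the linear expansion velocity divided by the angular growth rate of the radius. The function name and the input values are illustrative round numbers, not values quoted in the text.

```python
import math

MAS = math.pi / 180.0 / 3600.0 / 1000.0  # one milliarcsecond in radians
KM_PER_PARSEC = 3.0857e13                # kilometres in one parsec (rounded)
SECONDS_PER_DAY = 86400.0


def expansion_distance_kpc(velocity_km_s, diameter_rate_mas_per_day):
    """Distance at which a shell expanding at velocity_km_s shows the given
    growth rate of its angular diameter (spherical expansion assumed)."""
    radius_rate_rad_s = 0.5 * diameter_rate_mas_per_day * MAS / SECONDS_PER_DAY
    distance_km = velocity_km_s / radius_rate_rad_s
    return distance_km / KM_PER_PARSEC / 1000.0


# Illustrative round numbers, not values quoted in the text.
distance = expansion_distance_kpc(velocity_km_s=600.0,
                                  diameter_rate_mas_per_day=0.15)
print(f"distance = {distance:.2f} kpc")
```

With these inputs the result is a few kiloparsecs, the same order as the distance quoted above. The estimate scales linearly with the assumed velocity, so any departure from spherical symmetry translates directly into a distance error.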

Fig. 4.3 Sub-sample of the visibility curves versus spatial frequency observed on Nova Delphini 2013 with the CHARA array. The time in days since the explosion is reported in the upper-right corners. The red lines are the best fits with a uniform disc model (Airy function). The best-fit diameters range from 0.5 mas at day 1 up to 10 mas at day 40

Of course we don’t want to measure only diameters. A technique dedicated to High Angular Resolution should say something about more complex geometries. To do so, one needs to resolve the source sufficiently and to gather a sufficient number of measurements. This is still predominantly done through a parametric analysis.

4.2.2 Parametric Analysis

Binaries are typical objects that are best studied with parametric modelling. The study of multiple systems is the second major application of optical interferometry. Recent binary surveys include more than 100 targets observed within 15 nights (see [3] and [4]). Figure 4.4 is an example of a difficult but successful parametric analysis: the interacting binary SS Lep. Clearly, the complex visibility curves cannot be reproduced with a single uniform disc (Airy function).
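A minimal sketch of what a parametric analysis involves is given below for the simplest case, two unresolved stars. It is not the model used for SS Lep, which also includes a resolved giant and a shell. The squared visibilities of a hypothetical binary are simulated on six made-up baselines, and the companion position is recovered by a brute-force grid search with the flux ratio held fixed. All names, baselines and parameter values are assumptions made for illustration.

```python
import cmath
import math

MAS = math.pi / 180.0 / 3600.0 / 1000.0  # one milliarcsecond in radians
WAVELENGTH = 1.65e-6                     # hypothetical H-band wavelength (m)


def binary_visibility(u, v, flux_ratio, d_alpha, d_delta):
    """Complex visibility of two unresolved stars, primary at the origin.
    u, v in rad^-1; companion offset (d_alpha, d_delta) in rad."""
    phase = 2.0 * math.pi * (u * d_alpha + v * d_delta)
    return (1.0 + flux_ratio * cmath.exp(1j * phase)) / (1.0 + flux_ratio)


def chi2(flux_ratio, d_alpha, d_delta, data):
    """Sum of squared residuals on the squared visibilities (unit errors)."""
    return sum(
        (abs(binary_visibility(u, v, flux_ratio, d_alpha, d_delta)) ** 2 - v2) ** 2
        for u, v, v2 in data
    )


# Hypothetical projected baselines (m) and "true" binary used to fake the data.
baselines_m = [(30, 10), (55, -40), (80, 25), (-20, 95), (100, 60), (10, -70)]
truth = (0.3, 4.0 * MAS, -2.0 * MAS)
data = []
for bx, by in baselines_m:
    u, v = bx / WAVELENGTH, by / WAVELENGTH
    data.append((u, v, abs(binary_visibility(u, v, *truth)) ** 2))

# Grid search on the companion position with the flux ratio held fixed.
# Squared visibilities cannot tell a separation from its opposite, so only
# the half-plane d_alpha >= 0 is scanned.
grid = [
    (i * 0.5 * MAS, j * 0.5 * MAS)
    for i in range(0, 13)
    for j in range(-12, 13)
]
best = min(grid, key=lambda p: chi2(0.3, p[0], p[1], data))
print(f"best offset = ({best[0] / MAS:.1f}, {best[1] / MAS:.1f}) mas")
```

Real analyses fit all parameters simultaneously, weight the residuals by the measurement errors, and use closure phases to lift the 180° ambiguity; the grid search here only illustrates the principle of comparing a geometric model with the sampled Fourier components.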

Fig. 4.4 Sample of observations (top) of the interacting binary SS Lep with the VLTI. Each subplot typically represents a few hours of observation with four telescopes. The red lines are the best-fit binary model, whose primary component (M giant) is marginally resolved, plus a surrounding shell. The recovered orbital motion and the SED decomposition are shown in the lower panels

Past photometry and spectroscopy had already revealed the components of this system: a main-sequence A star (A V), an evolved M giant (M III), and a cool shell dominating at infrared wavelengths. A simple model of an orbiting M III + A V pair and a surrounding shell was able to reproduce the full complexity of the interferometric observations at any time. This model provides: (a) the stellar diameters, (b) the orbital motion and thus the individual masses, and (c) the individual Spectral Energy Distributions (SED). Altogether, the authors found that the giant does not fill its Roche lobe, and they had to propose an alternative scenario to explain the mass transfer. These results were published in [5].

Fig. 4.5 Observations of a young binary whose parameters are similar to those of SS Lep (Fig. 4.4). The uv-sampling is very good, but it is still impossible to build a geometrical model that captures the spatial complexity. Thus the fitted parameters are model-dependent and cannot be used quantitatively

It is critical to understand that optical interferometry could deliver all this new information only because the underlying model was adequate. This is well illustrated in Fig. 4.5, which shows observations of a binary with PIONIER at the VLTI. This young binary has parameters similar to those of SS Lep, but with a very complex circumbinary disc, possibly with accretion streamers. This target was observed extensively, as seen in the well-sampled uv-plane. But even with these high-quality observations, neither the binary orbit nor its circumstellar environment can be constrained. The geometry is too complex and changes rapidly.

In the data we obviously see the binary motion and the contribution of the shell, but so far no geometric model able to reproduce the observations quantitatively has been found. Therefore there is no scenario that can reconcile all the data. This example shows that, for the parametric analysis to work, the model needs to represent all the (true) complexity of the target up to the dynamic range corresponding to the accuracy of the data (typically ≈ 15 in current instruments). The consequence is that one needs to gather enough observations in the uv-plane to match the model complexity.

The availability of moderate (≈ 100) or high (≈ 1000) spectral resolution opens completely new observables for the parametric analysis technique. The most common is the differential visibility between the continuum and a spectral line. The main interest of differential quantities is that they are often much more robust against calibration issues. The differential quantities are generally linked to differences in size or to subtle astrometric shifts. For interferometers with the highest spectral resolution, this technique provides interesting clues on the dynamics of the emitting or absorbing material at the highest angular resolution. Many results of modern interferometry have been obtained with such an analysis [9–11].
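For a source that is only marginally resolved, the link between phase and astrometric shift can be made explicit. The following first-order expansion is a sketch that assumes the Fourier sign convention \(V (\mathbf{f}) =\int I(\mathbf{s})\,e^{2i\pi \mathbf{f}\cdot \mathbf{s}}\mathrm{d}\mathbf{s}/I_{0}\) and \(\vert \mathbf{f} \cdot \mathbf{ s}\vert \ll 1\) over the whole source; the photocentre \(\mathbf{p}\) and the subscripts "line" and "cont" are notation introduced here:

\[
V (\mathbf{f}) \simeq 1 + 2i\pi \,\mathbf{f} \cdot \mathbf{ p},\qquad \mathbf{p} = \frac{1}{I_{0}}\int \mathbf{s}\,I(\mathbf{s})\,\mathrm{d}\mathbf{s}\qquad \Rightarrow \qquad \phi (\mathbf{f}) \simeq 2\pi \,\mathbf{f} \cdot \mathbf{ p}
\]

\[
\phi _{\mathrm{line}} -\phi _{\mathrm{cont}} \simeq 2\pi \,\mathbf{f} \cdot (\mathbf{p}_{\mathrm{line}} -\mathbf{ p}_{\mathrm{cont}})
\]

As an order of magnitude, with a hypothetical 100 m projected baseline at λ = 2.2 μm, a differential phase of 1° corresponds to a photocentre shift of \(\lambda /(360\,B_{\perp }) \approx 13\) μas along the baseline direction, far below the nominal angular resolution \(\lambda /B_{\perp }\approx 4.5\) mas.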

4.2.3 Aperture Synthesis Imaging

Of course, the ultimate goal of any high angular resolution technique is to unveil unpredicted morphologies in a model-independent way. This requires image reconstruction, or aperture synthesis imaging, from the interferometric data. The technique boils down to reconstructing the image that would have been observed by a gigantic telescope with a diameter similar to the size of the array, but with a sparse uv-sampling. To invert the sparsely populated Fourier Transform, it is necessary to add some regularisation that prescribes how the missing spatial frequencies should be interpolated. The result may therefore depend on the choice of regularisation. Accordingly, to perform unambiguous image reconstruction, one needs a good sampling of the baselines in terms of length and orientation. The reader is referred to the chapter by Baron (this book) for a thorough description of the image reconstruction techniques.
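The role of the regularisation can be summarised in one generic expression. This is the form in which the problem is commonly posed, given here as a sketch; the symbols \(\mathbf{x}\) (pixel fluxes of the image), \(\mu\) (regularisation weight), \(R\) (regularisation function) and the observed values \(V _{k}^{\mathrm{obs}}\) with errors \(\sigma _{k}\) are introduced for illustration and do not come from the chapter:

\[
\hat{\mathbf{x}} =\mathop{\mathrm{arg\,min}}\limits_{\mathbf{x}\geq 0}\left[\chi ^{2}(\mathbf{x}) +\mu \,R(\mathbf{x})\right],\qquad \chi ^{2}(\mathbf{x}) =\sum _{k}\frac{\left\vert V _{k}^{\mathrm{obs}} - V (\mathbf{f}_{k};\mathbf{x})\right\vert ^{2}}{\sigma _{k}^{2}}
\]

The \(\chi ^{2}\) term only constrains the image at the sampled spatial frequencies \(\mathbf{f}_{k}\); the term \(\mu R\) decides between all the images that fit the data equally well, which is why different choices of \(R\) can lead to different details. In practice the data term is usually built from squared visibilities and closure phases rather than from complex visibilities.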

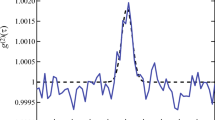

Figure 4.6 shows a dense uv-coverage obtained at the VLTI from several nights of observations of the Mira-type star R Car, which involved relocating the telescopes between nights. These observations were taken during technical time in order to demonstrate the imaging power of the VLTI on real data. The observed visibilities follow the overall trend for a disc (Airy function), but with major discrepancies.

Six image reconstructions from this dataset are shown in the bottom panels, obtained with different software packages using different regularisations. Clearly, the basic structures are consistently recovered in all reconstructions: a star with spots and an envelope. This is a superb result. But the subtle details are not fully consistent between reconstructions. Again, this reflects the fact that the object complexity at the dynamic range of the observation is still larger than the number of measurements.

Figure 4.7 presents another iconic example: the image reconstruction of Altair by Monnier et al. [6]. This result is in apparent contradiction with my previous statement. The authors gathered only a limited uv-sampling, and still they were able to reconstruct an impressive model-independent image. The key is that the intrinsic complexity of the object is manageable. Unlike R Car, Altair has no circumstellar envelope. Thus the authors forced the algorithm, through the use of a carefully defined regularisation and prior, to put all the reconstructed flux inside an ellipse corresponding to the stellar photosphere. The shape of this ellipse was determined simultaneously with the reconstruction. This considerably reduces the number of degrees of freedom and allows a better convergence.

Fig. 4.7 Left: coverage of the spatial frequencies (uv-plane) gathered on Altair by Monnier et al. [6] with the MIRC/CHARA interferometer. Right: corresponding image reconstruction. This truly remarkable image compares directly with the prediction of the von Zeipel theory for fast-rotating stars

Lastly, the availability of spectral resolution opens completely new ways to implement the aperture synthesis imaging approach. A large variety of algorithms have been proposed and applied to real observations [12–14].

4.3 Instrumentation Suite

Several facilities exist, but the two most productive and most open are CHARA and the VLTI (Fig. 4.8). Interestingly, CHARA is located in the Northern hemisphere while the VLTI is located in the Southern hemisphere. Both are supported by modern tools to prepare, calibrate, reduce and analyse observations.

4.3.1 Observing Facilities

The Center for High Angular Resolution Astronomy (CHARA, from Georgia State University) [Note 2] is composed of six 1 m telescopes located in a fixed array with a maximum baseline of 330 m. See [7] for recent results. This facility is equipped with various instruments, whose overall performance can be summarised as follows:

- MIRC: combines up to 6 telescopes (15 baselines), Hmag ≈ 5, spatial resolution ≈ 0.7 mas, with spectral resolution R ≈ 40–400. There is an ongoing effort to increase sensitivity and expand it to the K band.

- CLASSIC, CLIMB, FLUOR: combine 2 to 3 telescopes (1 to 3 baselines), Kmag ≈ 8, spatial resolution ≈ 1 mas, with spectral resolution R ≈ 5. There is an ongoing effort to increase the number of telescopes.

- VEGA, PAVO: combine 2 to 3 telescopes (1 to 3 baselines), Vmag ≈ 7.5, spatial resolution ≈ 0.3 mas, with spectral resolution R ≈ 100–10,000. There is an ongoing effort to increase the number of telescopes and sensitivity.

The Very Large Telescope Interferometer (VLTI, from the European Southern Observatory) [Note 3] is composed of four 1.8 m Auxiliary Telescopes in a relocatable array with a maximum baseline of 150 m. See [8] for a review of operations. This facility is equipped with various instruments, whose overall performance can be summarised as follows:

- 4 telescopes (6 baselines), Hmag ≈ 8, spatial resolution ≈ 1.5 mas, with spectral resolution R ≈ 40 (PIONIER).

- 4 telescopes (6 baselines), Kmag ≈ 7, spatial resolution ≈ 2.5 mas, with spectral resolution R ≈ 5–10,000 (AMBER, GRAVITY).

- 4 telescopes (6 baselines), L, M, N ≈ 6, spatial resolution ≈ 5–10 mas, with spectral resolution R ≈ 50–10,000 (MATISSE in 2018).

The VLTI can also combine the four 8 m Unit Telescopes of the Paranal observatory, but only a few nights per month. Using these telescopes, the limiting magnitudes are increased by about +2 mag.
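Two of the numbers quoted in the lists above follow from simple relations, sketched here in Python: the number of baselines of an N-telescope array, N(N − 1)∕2, and the angular fringe period λ∕B at the longest baseline. The 1.65 μm value adopted for the H band is an assumption, and the function names are hypothetical. The quoted spatial resolutions are of the same order as λ∕B, but the exact figure depends on the adopted criterion (for instance λ∕B or λ∕2B), which the lists do not state.

```python
import math

RAD_TO_MAS = 180.0 / math.pi * 3600.0 * 1000.0  # radians to milliarcseconds


def n_baselines(n_telescopes):
    """Number of distinct telescope pairs."""
    return n_telescopes * (n_telescopes - 1) // 2


def fringe_spacing_mas(wavelength_m, baseline_m):
    """Angular fringe period lambda / B, in milliarcseconds."""
    return wavelength_m / baseline_m * RAD_TO_MAS


for name, n_tel, max_baseline_m in [("CHARA", 6, 330.0), ("VLTI", 4, 150.0)]:
    spacing = fringe_spacing_mas(1.65e-6, max_baseline_m)  # H band, assumed 1.65 micron
    print(f"{name}: {n_baselines(n_tel)} baselines, lambda/B = {spacing:.2f} mas")
```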

Other facilities are currently in operation (NPOI [Note 4], SUSI [Note 5], LBTI [Note 6]) or in development (MROI [Note 7]). The interferometric mode of the Keck telescopes, the so-called Keck Interferometer, has now been discontinued, as have many other facilities or prototypes (PTI [Note 8], IOTA [Note 9], GI2T, COAST [Note 10], ...) that produced early science in the past decades and pioneered the technologies used today. More information is available in the OLBI publication database at http://jmmc.fr/bibdb hosted by the Jean-Marie Mariotti Centre. The community is now exploring the design of a next-generation optical array dedicated to planet formation studies: the Planet Formation Imager (PFI). Alternative approaches, such as specific smaller arrays, may emerge in the future with the goal of addressing well-defined science cases.

4.3.2 Support and Observing Tools

Regarding the level of support, the OLBI community developed a strong sense of international collaboration and sharing early on. The best example is the OIFITS format used to store reduced interferometric measurements. Its first version was defined in 2005 [15] and an evolution is being proposed by the community. This format is now widely used by all the above-mentioned facilities, which allows data to be easily shared and analysed. Another example is the set of tools developed by the Jean-Marie Mariotti Centre (JMMC) to prepare [Note 11], calibrate [Note 12], reduce [Note 13] and analyse [Note 14] observations with efficient and user-friendly interfaces. The latest effort from JMMC is to set up a global database of existing optical interferometric observations, with easy access to reduced products [Note 15].

4.4 Conclusions

Optical long baseline interferometry started at the beginning of the twentieth century but is only now entering a mature phase. This technique samples the Fourier Transform of the angular brightness distribution at spatial frequencies inaccessible with classical telescopes, even considering the Extremely Large Telescope. As such, OLBI provides unrivalled spatial resolution.

For years, measuring stellar diameters or detecting and monitoring binaries were the main applications of the technique. Large surveys of hundreds of stars have now been achieved. Recently, parametric analysis of sources of manageable complexity has brought completely new insight into interesting systems, such as interacting binaries, discs around young or evolved stars, stellar surface structures, AGNs, etc. However, unknown complexity remains very hard to handle. To go further, one has to do true aperture synthesis with an array of a large number of telescopes. Recent years have provided an increasing number of impressive and iconic results, such as the first images of stellar surfaces other than the Sun.

Notes

- 1.

An illustrative example of a coherent source is a hypothetical object made of two fibres fed by the same laser source. The two individual emitters of this “object” do have strong mutual coherence.

- 2.

- 3.

- 4.

- 5.

- 6.

- 7.

- 8.

- 9.

- 10.

- 11.

Aspro2 : http://www.jmmc.fr/aspro

- 12.

SearchCal : http://www.jmmc.fr/searchcal

- 13.

PIONIER data reduction: http://www.jmmc.fr/pndrs

- 14.

LITpro http://www.jmmc.fr/

- 15.

OiDb: http://www.jmmc.fr/oidb

References

1. Michelson, A.A., Pease, F.G.: Measurement of the diameter of alpha Orionis with the interferometer. ApJ 53, 249 (1921)

2. Schaefer, G.H., Brummelaar, T.T., Gies, D.R., et al.: The expanding fireball of Nova Delphini 2013. Nature 515, 234 (2014)

3. Sana, H., Le Bouquin, J.-B., Lacour, S., et al.: Southern massive stars at high angular resolution: observational campaign and companion detection. ApJS 215, 15 (2014)

4. Marion, L., Absil, O., Ertel, S., et al.: Searching for faint companions with VLTI/PIONIER. II. 92 main sequence stars from the Exozodi survey. A&A 570, A127 (2014)

5. Blind, N., Boffin, H.M.J., Berger, J.-P., et al.: An incisive look at the symbiotic star SS Leporis. Milli-arcsecond imaging with PIONIER/VLTI. A&A 536, A55 (2011)

6. Monnier, J.D., Zhao, M., Pedretti, E., et al.: Imaging the surface of Altair. Science 317, 342 (2007)

7. ten Brummelaar, T.A., Huber, D., von Braun, K., et al.: Some recent results from the CHARA array. ASPC 487, 389 (2014)

8. Merand, A., Abuter, R., Aller-Carpentier, E., et al.: VLTI status update: a decade of operations and beyond. SPIE 9146 (2014)

9. Weigelt, G., Kraus, S., Driebe, T., et al.: Near-infrared interferometry of η Carinae with spectral resolutions of 1500 and 12000 using AMBER/VLTI. A&A 464, 87 (2007)

10. Malbet, F., Benisty, M., de Wit, W.-J., et al.: Disk and wind interaction in the young stellar object MWC 297 spatially resolved with AMBER/VLTI. A&A 464, 43 (2007)

11. Le Bouquin, J.-B., Absil, O., Benisty, M., et al.: The spin-orbit alignment of the Fomalhaut planetary system probed by optical long baseline interferometry. A&A 498, L41 (2009)

12. Mourard, D., Monnier, J.D., Meilland, A., et al.: Spectral and spatial imaging of the Be+sdO binary Φ Persei. A&A 577, A51 (2015)

13. Millour, F., Meilland, A., Chesneau, O., et al.: Imaging the spinning gas and dust in the disc around the supergiant A[e] star HD 62623. A&A 526, A107 (2011)

14. Kluska, J., Malbet, F., Berger, J.-P., et al.: SPARCO: a semi-parametric approach for image reconstruction of chromatic objects. Application to young stellar objects. A&A 564, A80 (2014)

15. Pauls, T.A., Young, J.S., Cotton, W.D., Monnier, J.D.: A data exchange standard for optical (visible/IR) interferometry. PASP 117, 1255 (2005)

Acknowledgements

The participation of JBLB to the workshop that led to this book was supported by OSUG@2020 ANR-10-LABX-56.

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Le Bouquin, JB. (2016). Optical Long Baseline Interferometry. In: Boffin, H., Hussain, G., Berger, JP., Schmidtobreick, L. (eds) Astronomy at High Angular Resolution. Astrophysics and Space Science Library, vol 439. Springer, Cham. https://doi.org/10.1007/978-3-319-39739-9_4

DOI: https://doi.org/10.1007/978-3-319-39739-9_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39737-5

Online ISBN: 978-3-319-39739-9

eBook Packages: Physics and Astronomy (R0)