Abstract

A wide variety of applied problems of statistical hypothesis testing can be treated under a general setup of the linear models which includes analysis of variance. In this study, a new method is presented to test linear hypothesis using a fuzzy test statistic produced by a set of confidence intervals with non-equal tails. Also, a fuzzy significance level is used to evaluate the linear hypothesis. The method can be used to improve linear hypothesis testing when there is a sensitively in accepting or rejecting the null hypothesis. Also, as a simple case of linear hypothesis testing, one-way analysis of variance based on fuzzy test statistic and fuzzy significance level is investigated. Numerical examples are provided for illustration.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Analysis of variance

- Confidence interval

- Fuzzy critical value

- Fuzzy test statistic

- Fuzzy significance level

- Linear hypothesis

- Linear model

1 Introduction and Background

Analysis of Variance (ANOVA) is a common and popular method in the analysis of experimental designs. It includes important cases such as one-way and two-way ANOVA, and one-way and two-way analysis of covariance, and it has many useful applications in industry, agriculture and social sciences [8, 12, 13]. Various aspects of this topic have been considered in a fuzzy environment. One-way and two-way ANOVA using fuzzy unbiased estimators for variance parameter are discussed based on arithmetic operations on intervals by Buckley [3]. Wu [16] presented one-way ANOVA based on several notations of the \(\alpha\)-cuts of fuzzy random variables, optimistic and pessimistic degrees and solving an optimization problem. An approach for one-way ANOVA has been carried out by Nourbakhsh et al. [10] for fuzzy data in which Zadeh’s extension principle [9, 17] plays a key role for the applied computing operations. A statistical technique for testing the fuzzy hypothesis of one-way ANOVA is proposed by Kalpanapriya et al. [7] using the levels of pessimistic and optimistic of the triangular fuzzy data.

Linear hypothesis testing is an extension of analysis of variance. It can test hypotheses about the unknown parameters of the linear model, such as testing the equality of the means of several random variables [12]. Sometimes the observed value of test statistic is close to the related quantiles of statistical distributions, so there is uncertainty in accepting or rejecting the null hypothesis \(H_{0}\). In this paper, a method is presented for linear hypothesis testing using a fuzzy test statistic and a fuzzy significance level. Moreover, the method can be used for modelling this uncertainty using fuzzy sets theory.

A method for testing statistical hypotheses in a fuzzy environment was introduced by Buckley [2, 3]. It considers a fuzzy test statistic and fuzzy critical values produced using confidence intervals with equal tails and arithmetic operations on intervals. In Buckley’s method the fuzzy estimates are developed as fuzzy numbers, and their membership functions have been derived by Falsafain et al. [5]. In [2] the non-fuzzy hypotheses are tested, and in [14] and [1] the presented approach in [2] is generalized to the case where the statistical hypotheses and the observed data are also fuzzy. When dealing with non-symmetric statistical distributions, using confidence intervals with equal tails results in producing a fuzzy estimate where the membership degree for the unbiased point estimate of the required parameter is not equal to one [4]. While we expect that the unbiased point estimate has the highest importance in the fuzzy estimate, i.e. its membership degree should be equal to one. Solutions to overcome this problem using the confidence intervals with non-equal tails are provided by Buckley [3], and Falsafain and Taheri [4]. It has been shown that the solution presented by Falsafain and Taheri [4] is reduced to Buckley’s method when dealing with symmetric statistical distributions. Moreover, it is possible to obtain the membership functions of the corrected fuzzy estimates. Therefore, we use this solution in this paper.

In order to discuss linear hypothesis testing based on fuzzy test statistic and fuzzy significance level , we first recall some basic concepts of fuzzy sets theory in Sect. 2. Section 3 contains a brief review of linear model and linear hypothesis. In Sect. 4, fuzzy test statistic and fuzzy critical value are discussed and decision rules are presented. Also, one-way ANOVA as a special case of linear hypothesis testing is discussed in Sect. 5. Two numerical examples are provided in Sect. 6 to show that our approach could perform quite well in practice. A conclusion is provided in Sect. 7.

2 Preliminaries

Some concepts of fuzzy sets theory, which will be referred to throughout this paper, are discussed in this section. Let \(U\) be a universal set and \(F\left( U \right) = \left\{ {\tilde{A}|\tilde{A}:U \to \left[ {0,1} \right]} \right\}\). Any \(\tilde{A} \in F(U)\) is called a fuzzy set on \(U\). The \(\alpha\)-cuts of \(\tilde{A}\) is the crisp set \(\tilde{A}_{\alpha } = \left\{ {u \in U|\tilde{A}\left( u \right) \ge \alpha } \right\}\), for \(0 < \alpha \le 1\). Moreover, \(\tilde{A}_{0}\) is separately defined [2] as the closure of the union of all the \(\tilde{A}_{\alpha }\), for \(0 < \alpha \le 1\). The value \(\tilde{A}\left( u \right)\) is interpreted as the membership degree of a point \(u\). \(\tilde{A} \in F({\mathbb{R}})\) is called a fuzzy number, under the following conditions:

-

1.

There is a unique \(r_{0} \in {\mathbb{R}}\) with \(\tilde{A}\left( {r_{0} } \right) = 1\),

-

2.

The \(\alpha\)-cuts of \(\tilde{A}\) are closed and bounded intervals on \({\mathbb{R}}\) for any \(0 \le \alpha \le 1\),

where \({\mathbb{R}}\) is the set of all real numbers. In other words for every fuzzy number \(\tilde{A}\) we have \(\tilde{A}_{\alpha } = \left[ {a_{1} \left( \alpha \right),a_{2} \left( \alpha \right)} \right]\) for all \(\alpha \in \left[ {0, 1} \right]\) which are the closed, bounded, intervals and their bounds are as functions of \(\alpha\).

To continue discussions, we need to clarify the concept of an unbiased fuzzy estimator , using the following definition. Similar to conventional statistics, a fuzzy estimator is a rule for calculating a fuzzy estimate of an unknown parameter based on observed data. Thus the rule and its result (the fuzzy estimate) are distinguished.

Definition 2.1

A fuzzy number \(\tilde{\theta }\) is an unbiased fuzzy estimator for parameter \(\theta\) from a statistical distribution if:

-

1.

The \(\alpha\)-cuts of \(\tilde{\theta }\) are \(\left( {1 - \alpha } \right)100{\% }\) confidence intervals for \(\theta\), with \(\alpha \in \left[ {0.01, 1} \right]\) and \(\tilde{\theta }_{\alpha } = \tilde{\theta }_{0.01}\) for \(\alpha \in [0, 0.01)\).

-

2.

If \(\hat{\theta }\) is an unbiased point estimator for \(\theta\) then \(\tilde{\theta }\left( {\hat{\theta }} \right) = 1\).

An explicit and unique membership function is given for a fuzzy estimate by the following theorem.

Theorem 2.1 [5]

Suppose that \(X_{1} ,X_{2} , \ldots ,X_{n}\) is a random sample of size \(n\) from a distribution with unknown parameter \(\theta\). If, based on observations \(x_{1} ,x_{2} , \ldots ,x_{n}\), we consider \(\tilde{A}_{\alpha } = \left[ {\theta_{1} \left( \alpha \right),\theta_{2} \left( \alpha \right)} \right]\) as a \(\left( {1 - \alpha } \right)\) 100 % confidence interval for \(\theta\) , then the fuzzy estimate of \(\theta\) is a fuzzy set with the following unique membership function:

To end this section, we give an introduction to interval arithmetic. Let \(I = \left[ {a,b} \right]\) and \(J = \left[ {c,d} \right]\) be two closed intervals. Then based on the interval arithmetic, we have

and

where zero does not belong to \(J = \left[ {c,d} \right]\) in the last case.

3 Linear Hypothesis Testing

In this section we give a brief review of linear hypothesis testing, for more details see [12, 13]. The concepts of linear model and linear hypothesis are given in Definition 3.1. The process of linear hypothesis testing is presented in Theorem 3.1.

Definition 3.1

Let \(\varvec{ Y} = \left( {Y_{1} Y_{2} \ldots Y_{n} } \right)^{\prime}\) be a random column vector and \({\mathbf{X}}\) be a \(n \times k\) matrix of full rank \(k < n\) and known constants \(x_{ij} , i = 1,2, \ldots ,n;j = 1,2, \ldots ,k\). It is said that the distribution of \(\varvec{ Y}\) satisfies a linear model if \(\varvec{ }E\left( \varvec{Y} \right) = {\mathbf{X}}\varvec{\beta}\), where \(\varvec{ \beta } = \left( {\beta_{1} \beta_{2} \ldots \beta_{k} } \right)'\) is vector of unknown (scalar) parameters \(\beta_{1} , \beta_{2} , \ldots ,\beta_{k}\), where \(\beta_{j} \in {\mathbb{R}}\) for \(j = 1,2, \ldots ,k\). It is convenient to write \(\varvec{ Y} = {\mathbf{X}}\varvec{\beta}+ \boldsymbol{\epsilon}\), where \(\varvec{ }\boldsymbol{\epsilon} = \left( {\boldsymbol{\epsilon}_{1} \boldsymbol{\epsilon}_{2} \ldots \boldsymbol{\epsilon}_{n} } \right)^{\prime}\) is a vector of non-observable independent normal random variables with common variance \(\sigma^{2}\) and \(E\left( {\boldsymbol{\epsilon}_{j} } \right) = 0\); \(j = 1,2, \ldots ,n\). Relation \(\varvec{ Y} = {\mathbf{X}}\varvec{\beta}+ \boldsymbol{\epsilon}\) is known as a linear model. The linear hypothesis concerns \(\varvec{ \beta }\), such that \(\varvec{\beta}\) satisfies \(\varvec{ }H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\), where \({\mathbf{H}}\) is a known \(r \times k\) matrix of full rank \(r \le k\).

Theorem 3.1

Consider the linear model \(\varvec{ Y} = {\mathbf{X}}\varvec{\beta}+ \boldsymbol{\epsilon}\). The generalized likelihood ratio (GLR) test for testing the linear hypothesis \(\varvec{ }H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\) is to reject \(\varvec{ }H_{0}\) at significance level \(\varvec{ }\gamma\) if \(\varvec{ }F \ge F_{1 - \gamma ,r,n - k}\) , where \(\varvec{ }P_{{H_{0} }} \left( {F < F_{1 - \gamma ,r,n - k} } \right) = 1 - \gamma\) and \(\varvec{ }F\) is the random variable given by

where,

and

\(\hat{\varvec{\beta }}\) is the maximum likelihood estimator (MLE) of \(\varvec{\beta}\) and \({\hat{\hat{\varvec{\beta}}}}\) is the MLE of \(\varvec{\beta}\) under \(\varvec{ }H_{0}\). Moreover, under \(\varvec{ }H_{0}\) the random variable \(\varvec{ }F\) has the \(\varvec{ }F\) -distribution with \(\varvec{ }r\) and \(\varvec{ }\left( {n - k} \right)\) degrees of freedom.

Note 3.1 As the result of Theorem 3.1, it can be shown that the pivotal quantity \(SS/\sigma^{2}\) has the distribution \(\chi^{2}\) with \(\varvec{ }\left( {n - k} \right)\) degrees of freedom and \(SS^{*} /\sigma^{2}\) has the distribution \(\chi^{2}\) with \(\varvec{ }r\) degrees of freedom, under the null hypothesis \(\varvec{ }H_{0}\). So both of these pivotal quantities can be used to produce confidence intervals for \(\sigma^{2}\). It is clear that the statistics \(SS/\left( {n - k} \right)\) and \(SS^{*} /r\) (under \(\varvec{ }H_{0}\)) are the unbiased point estimators for the unknown parameter \(\sigma^{2}\).

4 Linear Hypothesis Testing Based on Fuzzy Test Statistic

4.1 Testing at Precise Significance Level

In this section, taking into account Buckley’s method in [2] and its modifications in [4], we consider testing the linear hypothesis based on a fuzzy test statistic and a fuzzy significance level . Because we could obtain a fuzzy test statistics to evaluate the linear hypothesis, we give several theorems sequentially. Also, we obtain a fuzzy critical value using \(\alpha\)-cuts of a considered fuzzy significance level. Next we make two decision rules to the cases where the critical value is either crisp or fuzzy. In the rest of this paper, the symbols \(\chi_{\xi , \upsilon }^{2}\) and \(F_{{\xi ,\upsilon_{1} ,\upsilon_{2} }}\) will be used to represent the \(\xi\)’th quantile of the distribution \(\chi^{2}\) with \(\upsilon\) degrees of freedom and the \(\xi\)th quantile of the distribution \(F\) with \(\upsilon_{1}\) and \(\upsilon_{2}\) degrees of freedom, respectively.

Theorem 4.1.1

In a linear model consider \(SS/\left( {n - k} \right)\) as an unbiased point estimator for parameter \(\sigma^{2}\). Then an unbiased fuzzy estimator for \(\sigma^{2}\) is \(\widetilde{{\sigma^{2} }}\) with the following \(\alpha\)-cuts

in which \(p'\) is obtained from the relation \(\chi_{{p^{\prime},(n - k)}}^{2} = n - k\).

Proof

Based on the pivotal quantity \(SS/\sigma^{2}\), a \(\left( {1 - \alpha } \right)\) 100 % confidence interval for \(\sigma^{2}\) is \(\left[ {SS/\chi_{{1 - \alpha + \alpha p, \left( {n - k} \right)}}^{2} ,SS/\chi_{{\alpha p, \left( {n - k} \right)}}^{2} } \right]\) for any \(0 < \alpha < 1\) and \(0 < p < 1\). When \(\alpha = 1\) and \(p = p '\), satisfying \(\chi_{{p^{ '} , \left( {n - k} \right)}}^{2} = n - k\), this interval becomes the point \(SS/\left( {n - k} \right)\) which is unbiased point estimator for \(\sigma^{2}\). Now fixing \(p = p '\) and varying \(\alpha\) from 0.01 to 1 we obtain nested intervals which are the \(\alpha {\text{ - cuts}}\) of a fuzzy number, say \(\widetilde{{\sigma^{2} }}\). Finally, \((\widetilde{{\sigma^{2} }})_{\alpha } = (\widetilde{{\sigma^{2} }})_{0.01}\) for \(0 \le \alpha < 0.01\). So, we have the unbiased fuzzy estimator \(\widetilde{{\sigma^{2} }}\) for \(\sigma^{2}\).\(\square\)

Lemma 4.1.1

The membership function of fuzzy estimator \(\widetilde{{\sigma^{2} }}\) in Theorem 4.1.1 is as follows:

where \(G\) is the cumulative distribution function of the \(\chi^{2}\) variable with \(\varvec{ }\left( {n - k} \right)\) degrees of freedom.

Proof

By Theorem 4.1.1, we have \(\theta_{1} \left( \alpha \right) = SS/\chi_{{1 - \alpha + \alpha p^{ '} , \left( {n - k} \right)}}^{2}\) for \(0.01 \le \alpha \le 1\). Hence, \(\theta_{1}^{ - 1} \left( u \right) = \left[ {1 - G\left( {ss/u} \right)} \right]/\left( {1 - p^{ '} } \right)\). Also \(\theta_{2} \left( \alpha \right) = SS/\chi_{{\alpha p^{ '} , \left( {n - k} \right)}}^{2}\), therefore \(\left[ {{-}\theta_{2} } \right]^{ - 1} \left( { - u} \right) = \left[ {G\left( {ss/u} \right)} \right]/p^{ '}\) for \(0.01 \le \alpha \le 1\). Based on Theorem 2.1 \(\widetilde{{\sigma^{2} }}\left( u \right) = { \hbox{min} }\{ \theta_{1}^{ - 1} \left( u \right),\) \(\left[ { - \theta_{2} } \right]^{ - 1} \left( { - u} \right), 1\}\). So, the proof follows.\(\square\)

Theorem 4.1.2

Consider \(SS^{*} /r\) as an unbiased point estimator for parameter \(\sigma^{2}\) under the null hypothesis \(\varvec{ }H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\). Then, an unbiased fuzzy estimator for \(\sigma^{2}\) is \(\widetilde{{\sigma_{{H_{0} }}^{2} }}\) with \(\alpha\) -cuts \((\widetilde{{\sigma_{{H_{0} }}^{2} }})_{\alpha }\) , where

in which \(p''\) is obtained from the relation \(\chi_{{p^{''} , r}}^{2} = r\).

Proof

Consider the pivotal quantity \(SS^{*} /\sigma^{2}\). Now the proof is similar to that of Theorem 4.1.1.\(\square\)

Notice that, similar to Lemma 4.1.1, one can derive the membership function of fuzzy estimator \(\widetilde{{\sigma_{{H_{0} }}^{2} }}\), but under \(\varvec{ }H_{0} :{\mathbf{H}}\varvec{\beta}= 0\).

Lemma 4.1.2

The membership function of fuzzy estimator \(\widetilde{{\sigma_{{H_{0} }}^{2} }}\) in Theorem 4.1.2 is as follows:

where \(G\) is the cumulative distribution function of the \(\chi^{2}\) variable with \(\varvec{ }r\) degrees of freedom.

Proof

By Theorem 4.1.2, the proof is similar to that of Lemma 4.1.1. \(\square\)

Remark 4.1.1

Theorems 4.1.1 and 4.1.2 define unbiased fuzzy estimators for \(\sigma^{2}\) under null hypothesis \(H_{0}\). Moreover, Lemmas 4.1.1 and 4.1.2 provide the membership functions of these two estimators.

Theorem 4.1.3

The fuzzy test statistic for testing \(\varvec{ }H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\) is \(\varvec{ }\tilde{F}\) with \(\alpha\)-cuts

where

and

Proof

Using the equality \(\tilde{F}_{\alpha } = (\tilde{\sigma }_{{H_{0} }}^{2} )_{\alpha } /(\tilde{\sigma }^{2} )_{\alpha }\) and interval arithmetic, the fuzzy test statistic follows from Buckley’s method. \(\square\)

Decision rule 4.1.1

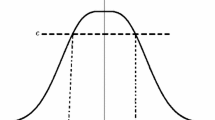

After observing the data and crisp significance level \(\gamma\), a typical method for rejecting or accepting the null hypothesis \(H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\) can be made as follows. First we calculate the ratio \(A_{\text{R}} /\left( {A_{\text{R}} + A_{\text{L}} } \right)\), where \(A_{\text{R}}\) (\(A_{\text{L}}\)) is area under the graph of the fuzzy test statistic \(\tilde{F}\), but to the right (left) of the vertical line through \(F_{1 - \gamma ,r,n - k}\) (see Fig. 1). Note that Fig. 1 just illustrates the sketch of \(A_{\text{R}}\) and \(A_{\text{L}}\) since the sides of \(\tilde{F}\) are curves, not straight line segments. Next we choose a value for the credit level \(\varphi\) from \(\left( {0,1} \right]\), [1]. Finally, our decision rule at significance level \(\gamma\) is:

-

1.

if \(A_{\text{R}} /\left( {A_{\text{R}} + A_{\text{L}} } \right) \ge \varphi\), then reject the hypothesis \(H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\),

-

2.

if \(A_{\text{R}} /\left( {A_{\text{R}} + A_{\text{L}} } \right) < \varphi\), then accept \(H_{0}\).

Remark 4.1.2

The presented decision rule 4.1.1 is reasonable since one can see that, by choosing any \(\alpha \in \left[ {0,1} \right]\) and any \(F \in \tilde{F}_{\alpha }\), this \(F\) is some value of the test statistic corresponding to this \(\alpha\) which relates back to confidence intervals for \(\sigma^{2}\). Therefore, if point \(\left( {F,\alpha } \right)\) is in the region \(A_{\text{R}}\) then \(H_{0}\) is rejected because \(F \ge F_{1 - \gamma ,r,n - k}\), and if point \(\left( {F,\alpha } \right)\) is in the region \(A_{\text{L}}\) then \(H_{0}\) is accepted since \(F < F_{1 - \gamma ,r,n - k}\).

Remark 4.1.3

In Decision rule 4.1.1, \(\varphi\) and \(\gamma\) are criterions which control possibilistic and probabilistic errors, respectively. Indeed they unify the concepts of randomness and fuzziness. The selected value of \(\varphi\) is more or less subjective and depends on the decision maker desire.

4.2 Testing at Fuzzy Significance Level

The approach for accepting or rejecting the null hypothesis in Subsection 4.1 is on the basis of comparing the observed fuzzy test statistic \(\tilde{F}\) with the crisp critical value \(F_{1 - \gamma ,r,n - k}\) at a crisp significance level \(\gamma\). In practice it is more natural to consider the significance level as a fuzzy set since the test statistic is fuzzy. In fact, a fuzzy significance level is considered as a fuzzy number on \(\left( {0,1} \right)\), [6, 15]. Subsequently, we define a fuzzy significance level as a fuzzy number. We obtain a fuzzy critical value to evaluate the linear hypothesis using \(\alpha\)-cuts of the defined fuzzy significance level. Finally, we provide a decision rule to decide whether to reject or accept the null hypothesis \(H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\).

Definition 4.2.1

A fuzzy significance level is a fuzzy number with the following \(\alpha\)-cuts

where \(0 <\upgamma_{1} \le\upgamma \le\upgamma_{2} < 1\).

Theorem 4.2.1

In linear hypothesis testing based on fuzzy test statistics at the introduced fuzzy significance level in Definition 4.2.1 , the critical value is a fuzzy number with the following \(\alpha\)-cuts

Proof

The proof follows by substituting \(\alpha\)-cuts of the fuzzy significance level \(\tilde{\gamma }\) for crisp one \(\gamma\) in the crisp critical level and by using the interval arithmetic. \(\square\)

Example 4.2.1

Consider a linear hypothesis testing at the significance level \(\gamma = 0.05\) where \(n = 25\), \(r = 3\) and \(k = 4\). Assume that the significance level is a fuzzy number with the following \(\alpha\)-cuts

Then based on Theorem 4.2.1 the fuzzy critical value is a fuzzy number with \(\alpha\)-cuts \(\left( {\widetilde{cv}} \right)_{\alpha }\) as follows

Figure 2 shows the fuzzy numbers \(\tilde{\gamma }\) and \(\widetilde{cv}\).

Decision rule 4.2.1

After observing the data, the final decision rule is derived by comparing two fuzzy numbers \(\widetilde{cv}\) and \(\tilde{F}\). Here a way is provided to decide whether to reject or accept the null hypothesis \(H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\). First we calculate the ratio \(A_{\text{R}} /\left( {A_{\text{R}} + A_{\text{L}} } \right)\), where \(A_{\text{R}}\) and \(A_{\text{L}}\) are depicted in Fig. 3. Note that Fig. 3 just illustrates the sketch of \(A_{\text{R}}\) and \(A_{\text{L}}\) since the sides of \(\tilde{F}\) and \(\widetilde{cv}\) are curves, not straight line segments. Next we choose a value for the credit level \(\varphi\) from \(\left( {0,1} \right]\). Finally, our decision rule at significance level \(\gamma\) is as follows:

-

1.

if \(A_{\text{R}} /\left( {A_{\text{R}} + A_{\text{L}} } \right) \ge \varphi\), then reject the hypothesis \(H_{0} :{\mathbf{H}}\varvec{\beta}= {\mathbf{0}}\),

-

2.

if \(A_{\text{R}} /\left( {A_{\text{R}} + A_{\text{L}} } \right) < \varphi\), then accept \(H_{0}\).

Remark 4.2.1

Decision rule 4.2.1 is reasonable since one can see that, by choosing any \(\alpha \in \left[ {0,1} \right]\), any \(F \in \tilde{F}_{\alpha }\) and any \(F_{1 - \gamma ,r,n - k} \in \left( {\widetilde{cv}} \right)_{\alpha }\), \(F_{1 - \gamma ,r,n - k}\) and \(F\) are some values of the test statistic and the critical level corresponding to this \(\alpha\) which relates back to confidence intervals for \(\sigma^{2}\) and \(\alpha\)-cuts for the fuzzy significance level, respectively. Therefore, if point \(\left( {F,\alpha } \right)\) is in the region \(A_{\text{R}}\) then we reject \(H_{0}\) because \(F \ge { \hbox{max} }\{ F_{1 - \gamma ,r,n - k} :F_{1 - \gamma ,r,n - k} \in \left( {\widetilde{cv}} \right)_{\alpha } \}\), if point \(\left( {F,\alpha } \right)\) is in the region \(A_{\text{L}}\) then we decide to accept \(H_{0}\) since \(F < { \hbox{min} }\{ F_{1 - \gamma ,r,n - k} :F_{1 - \gamma ,r,n - k} \in \left( {\widetilde{cv}} \right)_{\alpha } \}\), and finally if point \(\left( {F,\alpha } \right)\) is not in the region \(A_{\text{L}}\) or \(A_{\text{R}}\) then we do not make any decision on \(H_{0}\). To this end, we have not shared point \(\left( {F,\alpha } \right)\) in the final decision in Decision rule 4.2.1.

Remark 4.2.2

While, Buckley [2, 3] and Taheri et al. [1, 14] consider the problem of testing hypothesis based on a fuzzy test statistic and a crisp significance level, we assume that the significance level is fuzzy. Our method is, therefore, more convenient in real world studies.

5 One-Way ANOVA: A Simple Case of Linear Hypothesis Testing

In this section, one-way ANOVA is considered taking into account the method of linear hypothesis testing based on the fuzzy test statistic and fuzzy significance level. However, we must mention that the procedure proposed in this article is still applicable for any case of linear hypothesis testing. We now give a brief review of one-way ANOVA. For more detail refer to [8, 12]. Consider the linear model

where \(\epsilon_{ij}\)’s have a normal distribution with an unknown variance \(\sigma^{2}\), zero mean and \(\mu_{i}\)’s are unknown parameters. We are interested in testing the linear hypothesis \(H_{0} :\mu_{1} = \mu_{2} = \cdots = \mu_{k}\). To simplify the discussion we use the following notations

where \(\bar{Y}_{i.} = \sum\nolimits_{j = 1}^{{n_{i} }} {Y_{ij} /n_{i} }\) and \(\bar{Y}_{..} = \sum\nolimits_{i = 1}^{k} {\sum\nolimits_{j = 1}^{{n_{i} }} {Y_{ij} /n} }\). By replacing \(\varvec{\beta}= \left( {\mu_{1} \mu_{2} \ldots \mu_{k} } \right)^{'}\) in Theorem 3.1, it can be shown that \(SS = SSE\),\(SS^{*} = SSTr\),\(r = \left( {k - 1} \right)\), \(F = F1\) and the null hypothesis \(H_{0} :\mu_{1} = \mu_{2} = \cdots = \mu_{k}\) is rejected if the observed value of \(F1\) statistic is greater than or equal to \(F_{1 - \gamma ,k - 1,n - k}\). The case described above is referred to as a one-way analysis of variance which is a very simple case of linear hypothesis testing. One-way ANOVA has many applications in agricultural and engineering sciences.

6 Illustrative Examples

Example 6.1

An experiment is conducted to determine if there is a difference in the breaking strength of a monofilament fibre produced by four different machines for a textile company. Also it is known that all fibres are of equal thickness. A random sample is selected from each machine. The fibre strength \(y\) for each specimen is shown in Table 1. The one-way ANOVA model is \(Y_{ij} = \mu_{i} + \epsilon_{ij}\), \(j = 1,2, \ldots ,n_{i}\); \(i = 1,2,3,4\). We are going to test the null hypothesis \(H_{0} :\mu_{1} = \mu_{2} = \mu_{3} = \mu_{4}\). All computations are done by R software [11].

In the traditional statistics point of view and based on Theorem 3.1, we have \(F1 = 2.789\) and \(F_{0.95,3,32} = 2.901\). Therefore we accept \(H_{0}\) at the crisp significance level \(\gamma = 0.05\) because \(F1 < F_{0.95,3.32}\). In other words, there is not any difference at significance level \(0.05\) in the breaking strength of a monofilament fibre produced by four machines. In follows, consider two situations to understand the need of presenting fuzzy-decision-based approach:

-

1.

Let us only change \(y_{11} = 37\), in Table 1, to \(y_{11} = 36\). Now we have \(F1 = 2.907\) and \(F_{0.95,3,32} = 2.901\). So we reject \(H_{0} :\mu_{1} = \mu_{2} = \mu_{3} = \mu_{4}\), for \(\gamma = 0.05\) because \(F1 \ge F_{0.95,3,32}\) (i.e. there is a difference in the breaking strength of a monofilament fibre produced by four machines).

-

2.

Reconsider the observations of the experiment in Table 1, if we change the crisp significance level \(\gamma = 0.05\) to \(\gamma = 0.06\) then the null hypothesis \(H_{0} :\mu_{1} = \mu_{2} = \mu_{3} = \mu_{4}\) is rejected since \(F1 = 2.789\) is greater than \(F_{0.94,3,32} = 2.732\).

Therefore, we are not sure whether to accept or reject \(H_{0}\), since the values of \(F_{1 - \gamma ,k - 1,n - k}\) and \(F1\) are close to each other, based on data in Table 1 and \(\gamma = 0.05\). We overcome the sensitivity of this test by using linear hypothesis testing based on a fuzzy test statistic and a fuzzy significance level. In this example we have \(SS = SSE = 97.419\), \(SS^{*} = SSTr = 25.469\), \(r = \left( {k - 1} \right) = 3\), \(F = F1 = 2.789\) and \(n = 36\). Based on Theorem 4.1.1 a fuzzy estimate for \(\sigma^{2}\) is a fuzzy number with \(\alpha\)-cuts

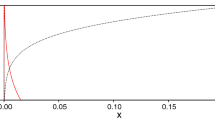

where \(p^{\prime} = 0.533\) is obtained from the relation \(\chi_{{p^{'} , 32}}^{2} = 32\). By Lemma 4.1.1, the membership function of this fuzzy estimate can be given by

where \(G\) is the cumulative distribution function of a \(\chi^{2}\) variable with \(\varvec{ }32\) degrees of freedom, as depicted in Fig. 4. Also, under the null hypothesis \(H_{0} :\mu_{1} = \mu_{2} = \mu_{3} = \mu_{4}\) an unbiased fuzzy estimate for \(\sigma^{2}\) based on Theorem 4.1.2 is a fuzzy number with the following \(\alpha {\text{ - cuts}}\):

and \(p^{{\prime}{\prime}} = 0.608\) is obtained from the relation \(\chi_{{p^{''} ,3}}^{2} = 3\). Now by Theorem 4.1.3, the observed value of the fuzzy test statistic \(\varvec{ }\tilde{F}\) is a fuzzy number with the following \(\alpha\)-cuts (see Fig. 5):

By using Definition 4.2.1 we consider the fuzzy significance level as a fuzzy number with the following \(\alpha\)-cuts:

and by Theorem 4.2.1 one can obtain \(\alpha\)-cuts of the fuzzy critical value as follows:

The graphs of the fuzzy numbers \(\widetilde{cv}\) and \(\tilde{F}\) are shown in Fig. 5. Finally, by Decision rule 4.2.1, since \(A_{\text{R}} /\left( {A_{\text{R}} + A_{\text{L}} } \right) = 0.8971\) where \(A_{\text{R}} = 9.7892\) and \(A_{\text{L}} = 1.1230\), the null hypothesis \(H_{0} :\mu_{1} = \mu_{2} = \mu_{3} = \mu_{4}\) is rejected for every credit level \(\varphi \in \left( {0,0.8971} \right]\). In fact it is possible for us to reject \(H_{0}\) for a high level of credit, since high ratio of observed values of the test statistic lead to reject \(H_{0}\).

Example 6.2

The quantity of oxygen dissolved in water is used as a measure of water pollution. Samples are taken at four locations in a lake and the quantity of dissolved oxygen is recorded in [12] as in Table 2 (lower reading corresponds to greater pollution). We would like to see that whether the data indicate a significant difference in the average amount of dissolved oxygen for the four location based on a fuzzy test statistic and the crisp significance level \(\gamma = 0.05\). The one-way ANOVA model is \(Y_{ij} = \mu_{i} + \epsilon_{ij}\), \(j = 1,2, \ldots ,n_{i}\); \(i = 1,2,3,4\).

In this example we have \(SS = SSE = 4.267\), \(SS^{*} = SSTr = 0.718\), \(r = \left( {k - 1} \right) = 3\), \(F = F1 = 0.897\), \(n = 20\) and \(F_{0.95,3,16} = 3.239\). By Lemma 4.1.1, the membership function of the fuzzy estimate for \(\sigma^{2}\) is given as follows:

where \(G\) is the cumulative distribution function of a \(\chi^{2}\) variable with \(\varvec{ }16\) degrees of freedom, as depicted in Fig. 6.

By Theorem 4.1.3, the observed value of the fuzzy test statistic \(\varvec{ }\tilde{F}\) is a fuzzy number with the following \(\alpha\)-cuts:

The graph of the fuzzy test statistic \(\tilde{F}\) is shown in Fig. 7. Finally, by Decision rule 4.1.1, since \(A_{\text{R}} /\left( {A_{\text{R}} + A_{\text{L}} } \right) = 0.578\) where \(A_{\text{R}} = 2.467\) and \(A_{\text{L}} = 1.797\), the null hypothe-sis \(H_{0} :\mu_{1} = \mu_{2} = \mu_{3} = \mu_{4}\) is accepted for every credit level \(\varphi \in \left( {0.578,1} \right]\). In other words, there is not any difference at significance level \(0.05\) in the average amount of dissolved oxygen for the four location for every credit level \(\varphi \in \left( {0.578,1} \right]\).

7 Conclusions

We have applied fuzzy techniques to linear hypothesis testing in this paper. Basically, in this method a set of \(\left( {1 - \alpha } \right)\) 100 % confidence intervals, for all \(0.01 \le \alpha \le 1\), are employed to produce the notion of the fuzzy test statistic. Also the concept of fuzzy critical value is derived based on \(\alpha\)-cuts of a defined fuzzy significance level. Then, decision rules are provided based on these notions. Employing all the confidence intervals from the 99 % to the 0 % rather than only a single confidence interval results in using far more information in data for the statistical inference. Moreover, this method improves the statistical hypotheses testing when there is an uncertainty in accepting or rejecting the hypotheses. This issue is clarified by practical examples. As a simple case of the linear hypothesis testing, one-way analysis of variance based on fuzzy test statistic and fuzzy significance level is discussed. Nevertheless, as a matter of fact, the proposed method in this article is still applicable to other cases of linear hypothesis testing. An interesting topic for future research is the study of the proposed method on the linear hypothesis testing when the hypotheses are fuzzy rather than crisp. Also, one can consider this problem based on fuzzy data with crisp/fuzzy parameters.

References

Arefi, M., Taheri, S.M.: Testing fuzzy hypotheses using fuzzy data based on fuzzy test statistic. J. Uncertain Syst. 5, 45–61 (2011)

Buckley, J.J.: Fuzzy statistics: hypothesis testing. Soft. Comput. 9, 512–518 (2005)

Buckley, J.J.: Fuzzy Probability and Statistics. Springer, Berlin Heidelberg (2006)

Falsafain, A., Taheri, S.M.: On Buckley’s approach to fuzzy estimation. Soft. Comput. 15, 345–349 (2011)

Falsafain, A., Taheri, S.M., Mashinchi, M.: Fuzzy estimation of parameter in statistical model. Int. J. Comput. Math. Sci. 2, 79–85 (2008)

Holena, M.: Fuzzy Hypothesis testing in a framework of fuzzy logic. Int. J. Intell. Syst. 24, 529–539 (2009)

Kalpanapriya, D., Pandian, P.: Int. J. Mod. Eng. Res. 2, 2951–2956 (2012)

Montgomery, D.C.: Design and Analysis of Experiments, 3rd edn. Wiley, New York (1991)

Nguyen, H.T., Walker, E.A.: A First Course in Fuzzy Logic, 3rd ed. Paris: Chapman Hall/CRC (2005)

Nourbakhsh, M.R., Parchami, A., Mashinchi, M.: Analysis of variance based on fuzzy observations. Int. J. Syst. Sci. 44(4), 714–726 (2013)

Rizzo, M.L.: Statistical Computing with R. Chapman Hall/CRC, Paris (2008)

Rohatgi, V.K., Ehsanes Saleh, A.K.M.: An Introduction to Probability and Statistics, 2nd edn. Wiley, New York (2001)

Searl, S.R.: Linear Models. Wiley, New York (1971)

Taheri, S.M., Arefi, M.: Testing fuzzy hypotheses based on fuzzy test statistic. Soft. Comput. 13, 617–625 (2009)

Taheri, S.M., Hesamian, G.: Goodman-Kruskal measure of assocition for fuzzy-categorized variables. Kybernetika 47(110), 122 (2011)

Wu, H.C.: Analysis of variance for fuzzy data. Int. J. Syst. Sci. 38, 235–246 (2007)

Zadeh, L.A.: Fuzzy sets. Inf. Control 8, 338–359 (1965)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Jiryaei, A., Mashinchi, M. (2016). Linear Hypothesis Testing Based on Unbiased Fuzzy Estimators and Fuzzy Significance Level. In: Kahraman, C., Kabak, Ö. (eds) Fuzzy Statistical Decision-Making. Studies in Fuzziness and Soft Computing, vol 343. Springer, Cham. https://doi.org/10.1007/978-3-319-39014-7_16

Download citation

DOI: https://doi.org/10.1007/978-3-319-39014-7_16

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39012-3

Online ISBN: 978-3-319-39014-7

eBook Packages: EngineeringEngineering (R0)