Abstract

We survey results on no-gap second-order optimality conditions (both necessary and sufficient) in the Calculus of Variations and Optimal Control that were obtained in the monographs Milyutin and Osmolovskii (Calculus of Variations and Optimal Control. Translations of Mathematical Monographs. American Mathematical Society, Providence, 1998) and Osmolovskii and Maurer (Applications to Regular and Bang-Bang Control: Second-Order Necessary and Sufficient Optimality Conditions in Calculus of Variations and Optimal Control. SIAM Series Design and Control, vol. DC 24. SIAM Publications, Philadelphia, 2012), and we discuss their further development. First, we formulate such conditions for broken extremals in the simplest problem of the Calculus of Variations. Then we consider them for discontinuous controls in optimal control problems with endpoint and mixed state-control constraints, posed on a variable time interval. Further, we discuss such conditions for bang-bang controls in optimal control problems where the control appears linearly in the Pontryagin-Hamilton function and the control constraints are given in the form of a convex polyhedron. Bang-bang controls induce an optimization problem with respect to the switching times of the control, the so-called Induced Optimization Problem. We show that the second-order sufficient condition for the Induced Optimization Problem, together with the so-called strict bang-bang property, ensures second-order sufficient conditions for the bang-bang control problem. Finally, we discuss optimal control problems with mixed control-state constraints and control appearing linearly. Taking the mixed constraint as a new control variable, we convert such problems into bang-bang control problems. The numerical verification of second-order conditions is illustrated on three examples.



1 Introduction

We survey some of the main results presented in the recent monograph of the authors [36] (SIAM, 2012), together with some results obtained in the earlier monograph of Milyutin and Osmolovskii [28] (AMS, 1998). We discuss further developments of these results and give various applications.

Our main goal is to present and discuss no-gap second-order necessary and sufficient conditions in control problems with bang-bang controls. In [28], it was shown how quadratic conditions for the general problem of the Calculus of Variations with a regular mixed equality constraint g(t, x, u) = 0 can be used to obtain quadratic (necessary and sufficient) conditions in optimal control problems in which the control variable enters linearly and the control constraint is given in the form of a convex polyhedron. Milyutin and Osmolovskii [28] were the first to use the fact that the set ex U of vertices of a polyhedron U can be described by a nondegenerate relation g(u) = 0 on an open set \(\mathcal{Q}\) consisting of disjoint open neighborhoods of the vertices. This allowed us to derive quadratic necessary conditions for bang-bang controls. Further, [28] showed that, in problems in which the control enters linearly, a sufficient condition for a minimum on ex U guarantees a minimum on its convex hull U. In this way, quadratic sufficient conditions for bang-bang controls were obtained in Osmolovskii and Maurer [36]. This property, which is not discussed further in the present paper, is the main link between the second-order optimality conditions for broken extremals in the Calculus of Variations and those for bang-bang controls in optimal control.
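As a concrete illustration of the vertex description, take U = [−1, 1]^m. This box and the particular g below are our own choice; the construction in [28] applies to general polyhedra. The vertex set ex U = {−1, 1}^m is exactly the zero set of \(g(u) = (u_{1}^{2} - 1,\ldots,u_{m}^{2} - 1)\). On the union \(\mathcal{Q}\) of disjoint open cubes of half-width r < 1 around the vertices, the Jacobian \(g_{u}(u) = \mathrm{diag}(2u_{1},\ldots,2u_{m})\) is nonsingular. A small Python check:

```python
from itertools import product


def box_vertices(m):
    """ex U for the box U = [-1, 1]^m: all 2^m sign vectors."""
    return [tuple(v) for v in product((-1.0, 1.0), repeat=m)]


def g(u):
    """g_i(u) = u_i^2 - 1; its zero set in R^m is exactly ex U."""
    return [ui * ui - 1.0 for ui in u]


def g_u_diagonal(u):
    """Diagonal of the Jacobian g_u(u) = diag(2 u_1, ..., 2 u_m)."""
    return [2.0 * ui for ui in u]


def in_Q(u, r=0.5):
    """Membership in Q, the union of open cubes of half-width r < 1 around
    the vertices. Distinct vertices differ by 2 in some coordinate, so the
    cubes are disjoint, and |u_i| > 1 - r > 0 on Q, so g_u is nonsingular."""
    return all(abs(abs(ui) - 1.0) < r for ui in u)


if __name__ == "__main__":
    for v in box_vertices(2):
        print(v, g(v), g_u_diagonal(v))   # g(v) = [0.0, 0.0] at every vertex
```

On each cube, one could equally well take g(u) = u − v for the vertex v of that cube. The quadratic formula is used here only because a single expression covers all cubes.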

The paper is organized as follows. In Sect. 2, we formulate no-gap second-order conditions for broken extremals in the simplest problem of the Calculus of Variations. In Sect. 3, we consider such conditions for discontinuous controls in optimal control problems on a fixed time interval with endpoint constraints of equality and inequality type and mixed state-control constraints of equality type. In Sect. 4, we present an extension of the results of Sect. 3 to problems on a variable time interval. In Sect. 5, we discuss no-gap conditions for bang-bang controls. Bang-bang controls induce an optimization problem with respect to the switching times of the control that we call the Induced Optimization Problem (IOP). We have shown in our monograph [36] that the classical second-order sufficient condition for the IOP, together with the so-called strict bang-bang property, ensures second-order sufficient conditions for the bang-bang control problem. We discuss such conditions in Sect. 6.

In the next two sections, the theoretical results are illustrated by numerical examples. Namely, in Sect. 7, we study the optimal control of the chemotherapy of HIV, where the L²-type objective of [18], which is quadratic in the control, is replaced by a more realistic L¹-objective. In Sect. 8, we consider time-optimal controls in two models of two-link robots; cf. [36]. Finally, in Sect. 9, we discuss optimal control problems with running mixed control-state constraints and control appearing linearly. Taking the mixed constraint as a new control variable, we convert such problems into bang-bang control problems. We use this transformation to study extremals in the optimal control problem for the Rayleigh equation.

2 Second-Order Optimality Conditions for Broken Extremals in the Simplest Problem in the Calculus of Variations

2.1 The Simplest Problem in the Calculus of Variations

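Let a closed interval \([t_{0},t_{f}]\), two points \(a,b \in \mathbb{R}^{n}\), an open set \(\mathcal{Q}\subset \mathbb{R}^{2n+1}\), and a function \(L: \mathcal{Q}\mapsto \mathbb{R}\) of class \(C^{2}\) be given. The simplest problem of the Calculus of Variations has the form

$$\displaystyle{\mathcal{J}(x):=\int _{t_{0}}^{t_{f}}L(t,x(t),\dot{x}(t))\,dt \rightarrow \min,}$$ (1)

$$\displaystyle{x(t_{0}) = a,\quad x(t_{f}) = b,\quad (t,x(t),\dot{x}(t)) \in \mathcal{Q}.}$$ (2)
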
We consider this problem in the space \(W^{1,\infty }\) of Lipschitz continuous functions. The last condition in (2) is assumed to hold almost everywhere. A weak minimum is defined as a local minimum in the space \(W^{1,\infty }\). We say that a function \(x \in W^{1,\infty }([t_{0},t_{f}], \mathbb{R}^{d(x)})\) is admissible if x satisfies (2) and, moreover, there exists a compact set \(\mathcal{C}\subset \mathcal{Q}\) such that \((t,x(t),\dot{x}(t)) \in \mathcal{C}\) a.e. in [t 0, t f ]. Set \(u:=\dot{ x}\) and w = (x, u). We call u the control.

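Let an admissible function \(x^{0}(t)\) be an extremal in the sense that it satisfies the Euler equation

$$\displaystyle{-\frac{d}{dt}L_{u}(t,x^{0}(t),\dot{x}^{0}(t)) + L_{x}(t,x^{0}(t),\dot{x}^{0}(t)) = 0.}$$ (3)
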
Here and in the sequel, partial derivatives are denoted by subscripts. Set \(u^{0}:=\dot{x}^{0}\) and \(w^{0}(t):=(x^{0}(t),u^{0}(t))\).

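Let

$$\displaystyle{\mathcal{W}_{2}:= W^{1,2}([t_{0},t_{f}],\mathbb{R}^{n}) \times L^{2}([t_{0},t_{f}],\mathbb{R}^{n}),}$$

where \(W^{1,2}\) is the space of absolutely continuous functions with square integrable derivative and \(L^{2}\) is the space of square integrable functions. In the space \(\mathcal{W}_{2}\), let us define the subspace

$$\displaystyle{\mathcal{K}:=\{\bar{w} = (\bar{x},\bar{u}) \in \mathcal{W}_{2}:\ \dot{\bar{x}} =\bar{ u},\ \bar{x}(t_{0}) =\bar{ x}(t_{f}) = 0\}}$$

and the quadratic form

$$\displaystyle{\varOmega (\bar{w}):=\int _{t_{0}}^{t_{f}}\langle L_{ww}(t,x^{0}(t),\dot{x}^{0}(t))\bar{w}(t),\bar{w}(t)\rangle \,dt.}$$
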
The following theorem is well known.

Theorem 1.

(a) If the extremal \(x^{0}\) is a weak minimum, then \(\;\varOmega (\bar{w}) \geq 0\) on \(\mathcal{K}\).

(b) If \(\,\varOmega (\bar{w})\) is positive definite on \(\mathcal{K}\), then the extremal \(x^{0}\) is a (strict) weak minimum.

As is known, the quadratic conditions in Theorem 1 can be tested via the Jacobi conditions or via bounded solutions to an associated Riccati equation.
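For a scalar state, the Jacobi test can be sketched numerically. This is a minimal illustration under our own assumptions: n = 1, \(L_{xu} = 0\), and \(L_{uu} > 0\) along \(x^{0}\). The function name and the fixed-step RK4 scheme are our choices, not part of [28] or [36]. The Jacobi field h solves \((L_{uu}h')' = L_{xx}h\), \(h(t_{0}) = 0\), \(h'(t_{0}) = 1\). Under these assumptions, \(\varOmega\) is positive definite on \(\mathcal{K}\) exactly when h has no zero (conjugate point) in \((t_{0},t_{f}]\). For the hypothetical integrand \(L = (\dot{x}^{2} - x^{2})/2\), one has \(L_{uu} = 1\), \(L_{xx} = -1\) and \(h(t) = \sin (t - t_{0})\), so the first conjugate point is \(t_{0}+\pi\).

```python
def first_conjugate_point(a, c, t0, tf, n=20000):
    """First zero in (t0, tf] of the Jacobi field h, or None if there is none.

    Solves (a(t) h')' = c(t) h, h(t0) = 0, h'(t0) = 1, where along the
    extremal a(t) = L_uu > 0 and c(t) = L_xx (scalar case, L_xu = 0 assumed).
    """
    def rhs(t, y):
        h, p = y                    # p = a(t) * h'(t)
        return (p / a(t), c(t) * h)

    dt = (tf - t0) / n
    t, y = t0, (0.0, a(t0))         # h(t0) = 0 and h'(t0) = 1
    for i in range(n):
        # one classical RK4 step for the first-order system (h, p)
        k1 = rhs(t, y)
        k2 = rhs(t + dt / 2, (y[0] + dt / 2 * k1[0], y[1] + dt / 2 * k1[1]))
        k3 = rhs(t + dt / 2, (y[0] + dt / 2 * k2[0], y[1] + dt / 2 * k2[1]))
        k4 = rhs(t + dt, (y[0] + dt * k3[0], y[1] + dt * k3[1]))
        y_new = (y[0] + dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
                 y[1] + dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))
        # skip the first step, where h(t0) = 0 holds by construction
        if i > 0 and y[0] * y_new[0] <= 0.0 and y[0] != y_new[0]:
            # sign change of h: locate the zero by linear interpolation
            return t + dt * y[0] / (y[0] - y_new[0])
        t, y = t + dt, y_new
    return None
```

For example, `first_conjugate_point(lambda t: 1.0, lambda t: -1.0, 0.0, 4.0)` returns a value close to π. The same call with `tf = 3.0` returns `None`, so for this integrand \(\varOmega\) is positive definite on \(\mathcal{K}\) over [0, 3].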

For a broken extremal, the quadratic form has to be stated in a different way that allows for the formulation of no-gap necessary and sufficient second-order conditions. We will formulate these conditions and discuss their extensions to different classes of optimal control problems, including bang-bang control problems and problems with mixed constraints and control appearing linearly.

2.2 Second-Order Optimality Conditions for Broken Extremals

Let again x 0(t) be an extremal in the simplest problem (1), (2), and let \(u^{0}(t) =\dot{ x}^{0}(t)\) be the corresponding control. Assume now that the control u 0(t) is piecewise continuous with one discontinuity point t ∗ ∈ (t 0, t f ). Hence, x 0(t) is a broken extremal with a corner at t ∗. We say that t ∗ is an L-point of the function u 0(t) if there exist \(\varepsilon > 0\) and C > 0 such that \(\vert u^{0}(t) - u^{0}(t_{{\ast}}-)\vert \leq C\vert t - t_{{\ast}}\vert \) for all \(t \in (t_{{\ast}}-\varepsilon,t_{{\ast}})\) and \(\vert u^{0}(t) - u^{0}(t_{{\ast}}+)\vert \leq C\vert t - t_{{\ast}}\vert \) for all \(t \in (t_{{\ast}},t_{{\ast}}+\varepsilon )\). Henceforth, we assume that t ∗ is an L-point of the function u 0(t). The following question naturally arises: which quadratic form corresponds to a broken extremal?

Let us change the definition of a weak local minimum as follows. Set \(\varTheta:=\{ t_{{\ast}}\}\) and define a notion of a \(\varTheta\)-weak minimum. Assuming additionally that the control u 0(t) is left-continuous at t ∗, denote by cl u 0(⋅ ) the closure of the graph of u 0(t). Denote by V a neighborhood of the compact set cl u 0(⋅ ).

Definition 1.

We say that \(x^{0}\) is a point of a \(\varTheta\)-weak minimum (or an extended weak minimum) if there exists a neighborhood V of the compact set cl u 0(⋅ ) such that \(\mathcal{J} (x) \geq \mathcal{J} (x^{0})\) for all admissible x(t) such that \((t,u(t)) \in V\) a.e., where \(u(t) =\dot{ x}(t)\).

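Clearly, we have the following chain of implications among minima:

$$\displaystyle{\mbox{strong minimum} \Longrightarrow \varTheta \mbox{-weak minimum} \Longrightarrow \mbox{weak minimum}.}$$
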
Let us formulate optimality conditions for a \(\varTheta\)-weak minimum. To this end, we introduce the Pontryagin function (Hamiltonian)

$$\displaystyle{H(t,x,u,\lambda ):=\lambda u + L(t,x,u),}$$

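where \(\lambda\) is a row vector of dimension n. Defining \(\lambda (t):= -L_{u}(t,x^{0}(t),u^{0}(t))\), we have, in view of the Euler equation (3),

$$\displaystyle{-\dot{\lambda }(t) = H_{x}(t,x^{0}(t),u^{0}(t),\lambda (t)),\qquad H_{u}(t,x^{0}(t),u^{0}(t),\lambda (t)) = 0.}$$
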
Denote by \([\lambda ]\) the jump of the function \(\lambda (t)\) at the point t ∗, i.e., \([\lambda ] =\lambda ^{+} -\lambda ^{-}\), where \(\lambda ^{-} =\lambda (t_{{\ast}}-)\) and \(\lambda ^{+} =\lambda (t_{{\ast}}+)\). Let [H] stand for the jump of the function \(H(t):= H(t,x^{0}(t),u^{0}(t),\lambda (t))\) at the same point. The equalities

$$\displaystyle{[\lambda ] = 0,\qquad [H] = 0}$$

constitute the Weierstrass–Erdmann conditions. They are known as necessary conditions for a strong minimum, but they are also necessary for a \(\varTheta\)-weak minimum. We add one more necessary condition for the \(\varTheta\)-weak minimum:

$$\displaystyle{D(H):= -L_{x}^{+}\dot{x}^{0-} + L_{x}^{-}\dot{x}^{0+} - [L_{t}] \geq 0,}$$

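where \(\dot{x}^{0-} =\dot{ x}^{0}(t_{{\ast}}-)\), \(L_{x}^{-} = L_{x}(t_{{\ast}},x^{0}(t_{{\ast}}-),u^{0}(t_{{\ast}}-))\), \([L_{t}] = L_{t}^{+} - L_{t}^{-}\), etc. Clearly,

$$\displaystyle{D(H) =\dot{\lambda }^{+}\dot{x}^{0-} -\dot{\lambda }^{-}\dot{x}^{0+} + [\dot{\lambda }_{0}],}$$
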
where \(\lambda _{0}(t) = -H(t)\) (recall that \(\frac{d} {dt}H(t) = H_{t}(t)\) a.e.). Moreover, it can be shown that D(H) is equal to the negative derivative of the function

$$\displaystyle{(\varDelta H)(t):= H(t,x^{0}(t),u^{0}(t_{{\ast}}+),\lambda (t)) - H(t,x^{0}(t),u^{0}(t_{{\ast}}-),\lambda (t))}$$

at t ∗. This derivative has been proved to exist, and hence it can also be calculated as the one-sided derivative \(\frac{d} {dt}(\varDelta H)(t_{{\ast}}-)\) or \(\frac{d} {dt}(\varDelta H)(t_{{\ast}}+)\).
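The corner conditions can be checked directly on a hypothetical integrand of our own choosing, \(L(t,x,u) = (u^{2} - 1)^{2} + cx\) with a constant c, at a corner where \(u^{0}\) jumps from −1 to +1. Here \(L_{u} = 4u(u^{2} - 1)\) vanishes at u = ±1, so \([\lambda ] = 0\) and \([H] = 0\). The remaining quantity is \(D(H) = -L_{x}^{+}\dot{x}^{0-} + L_{x}^{-}\dot{x}^{0+} - [L_{t}] = 2c\). Away from the corner, the Euler equation gives \(L_{u}(t,x^{0}(t),u^{0}(t)) = c(t - t_{{\ast}})\). This equation is locally solvable for u near −1 and near +1, since \(\partial L_{u}/\partial u = 8\) there, so such corners do occur on extremals. The sketch below (helper name ours) evaluates these quantities for scalar x and u.

```python
def corner_data(L, L_u, L_x, L_t, t, x, u_minus, u_plus):
    """Return ([lambda], [H], D(H)) at a corner, for scalar x and u.

    Uses lambda = -L_u, H = lambda*u + L, and
    D(H) = -L_x^+ u^- + L_x^- u^+ - [L_t], with u^-/u^+ the one-sided slopes.
    """
    lam_m, lam_p = -L_u(t, x, u_minus), -L_u(t, x, u_plus)
    H_m = lam_m * u_minus + L(t, x, u_minus)
    H_p = lam_p * u_plus + L(t, x, u_plus)
    D = (-L_x(t, x, u_plus) * u_minus + L_x(t, x, u_minus) * u_plus
         - (L_t(t, x, u_plus) - L_t(t, x, u_minus)))
    return lam_p - lam_m, H_p - H_m, D


# Hypothetical integrand L = (u^2 - 1)^2 + c*x, corner from u = -1 to u = +1.
c = 0.5


def L(t, x, u):
    return (u * u - 1.0) ** 2 + c * x


def L_u(t, x, u):
    return 4.0 * u * (u * u - 1.0)


def L_x(t, x, u):
    return c


def L_t(t, x, u):
    return 0.0


if __name__ == "__main__":
    print(corner_data(L, L_u, L_x, L_t, 0.0, 0.3, -1.0, 1.0))
```

With c = 0.5, the sketch returns \([\lambda ] = [H] = 0\) and D(H) = 1 > 0. The jump function gives the same value: here \((\varDelta H)(t) = 2\lambda (t) = -2c(t - t_{{\ast}})\), whose negative derivative is 2c. For c < 0, the necessary condition D(H) ≥ 0 fails, so an extremal with such a corner cannot be a \(\varTheta\)-weak minimum.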

Now, let us formulate second-order optimality conditions for a \(\varTheta\)-weak minimum. Denote by \(P_{\varTheta }W^{1,2}\) the Hilbert space of piecewise continuous functions \(\bar{x}(t)\), absolutely continuous on each of the two intervals [t 0, t ∗) and (t ∗, t f ], and such that their first derivative is square integrable. Any \(\bar{x} \in P_{\varTheta }W^{1,2}\) can have a nonzero jump \([\bar{x}] =\bar{ x}(t_{{\ast}} + 0) -\bar{ x}(t_{{\ast}}- 0)\) at the point t ∗. Let \(\bar{t}\) be a numerical parameter. Denote by \(Z_{2}(\varTheta )\) the space of triples \(\bar{z} = (\bar{t},\bar{x},\bar{u})\) such that \(\bar{t} \in \mathbb{R}\), \(\bar{x}(\cdot ) \in P_{\varTheta }W^{1,2}\), \(\bar{u}(\cdot ) \in L^{2},\) i.e.,

$$\displaystyle{Z_{2}(\varTheta ):= \mathbb{R} \times P_{\varTheta }W^{1,2}([t_{0},t_{f}],\mathbb{R}^{n}) \times L^{2}([t_{0},t_{f}],\mathbb{R}^{n}).}$$

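In this space, define the quadratic form

$$\displaystyle{\varOmega _{\varTheta }(\bar{z}):= D(H)\bar{t}^{2} - 2[L_{x}]\bar{x}_{\mathrm{av}}\bar{t} +\int _{t_{0}}^{t_{f}}\langle L_{ww}(t,w^{0}(t))\bar{w}(t),\bar{w}(t)\rangle \,dt,}$$

where [L x ] is the jump of the function L x (t, w 0(t)) at the point t ∗, \(\bar{w} = (\bar{x},\bar{u})\), and

$$\displaystyle{\bar{x}_{\mathrm{av}}:= \frac{1}{2}\big(\bar{x}(t_{{\ast}}-) +\bar{ x}(t_{{\ast}}+)\big).}$$

Set

$$\displaystyle{\mathcal{K}_{\varTheta }:=\{\bar{z} = (\bar{t},\bar{x},\bar{u}) \in Z_{2}(\varTheta ):\ \dot{\bar{x}} =\bar{ u}\ \mbox{ a.e.},\ \bar{x}(t_{0}) =\bar{ x}(t_{f}) = 0,\ [\bar{x}] = -[\dot{x}^{0}]\bar{t}\}.}$$
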
Theorem 2.

(a) If x 0 is a \(\varTheta\) -weak minimum, then \(\;\varOmega _{\varTheta }(\bar{z}) \geq 0\) on \(\mathcal{K}_{\varTheta }\) . (b) If \(\varOmega _{\varTheta }(\bar{z})\) is positive definite on \(\mathcal{K}_{\varTheta }\) , then x 0 is a (strict) \(\varTheta\) -weak minimum.

The proof of this theorem is given in [28]. Let us note that in [28], instead of \(\bar{t}\), we used a numerical parameter \(\bar{\xi }\) such that \(\bar{t} = -\bar{\xi }\). This remark also applies to the subsequent presentation.

3 Second-Order Optimality Conditions for Discontinuous Controls in the General Problem of the Calculus of Variations on a Fixed Time Interval

3.1 The General Problem in the Calculus of Variations on a Fixed Time Interval

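Now consider the following optimal control problem in Mayer form on a fixed time interval \([t_{0},t_{f}]\). It is required to find a pair of functions w(t) = (x(t), u(t)), t ∈ [t 0, t f ], minimizing the functional

$$\displaystyle{\mathcal{J}(w):= J(x(t_{0}),x(t_{f})) \rightarrow \min }$$

subject to the constraints

$$\displaystyle{F(x(t_{0}),x(t_{f})) \leq 0,\quad K(x(t_{0}),x(t_{f})) = 0,\quad (x(t_{0}),x(t_{f})) \in \mathcal{P},}$$

$$\displaystyle{\dot{x} = f(t,x,u),\quad h(t,x,u) = 0,\quad (t,x,u) \in \mathcal{Q},}$$

where \(\mathcal{P}\) and \(\mathcal{Q}\) are open sets, and x, u, F, K, f, and h are vector-functions.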

We assume that J, F, and K are defined and twice continuously differentiable on \(\mathcal{P}\), and f and h are defined and twice continuously differentiable on \(\mathcal{Q}\). It is also assumed that the gradients with respect to the control h iu (t, x, u), \(i = 1,\ldots,d(h)\) are linearly independent at each point \((t,x,u) \in \mathcal{Q}\) such that h(t, x, u) = 0 (the regularity assumption for the equality constraint h(t, x, u) = 0). Here h i are the components of the vector function h and d(h) is the dimension of this function.

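This problem is considered in the space

$$\displaystyle{\mathcal{W}:= W^{1,\infty }([t_{0},t_{f}],\mathbb{R}^{n}) \times L^{\infty }([t_{0},t_{f}],\mathbb{R}^{m}),}$$

where n = d(x), m = d(u). Define a norm in this space as the sum of the norms:

$$\displaystyle{\|w\|:=\| x\|_{1,\infty } +\| u\|_{\infty },\qquad \|x\|_{1,\infty }:= \vert x(t_{0})\vert +\|\dot{ x}\|_{\infty }.}$$
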
A weak minimum is defined as a local minimum in the space \(\mathcal{W}\). We say that w = (x, u) is an admissible pair if it belongs to \(\mathcal{W}\), satisfies the constraints of the problem, and, moreover, there exists a compact set \(\mathcal{C}\subset \mathcal{Q}\) such that \((t,x(t),u(t)) \in \mathcal{C}\) for a.a. t ∈ [t 0, t f ].

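It is well known that an optimal control problem with a functional in Bolza form,

$$\displaystyle{\mathcal{J}(w) = J(x(t_{0}),x(t_{f})) +\int _{t_{0}}^{t_{f}}f_{0}(t,x(t),u(t))\,dt,}$$

can be converted to Mayer form by introducing the ODE \(\,\dot{y} = f_{0}(t,x,u),\,y(t_{0}) = 0\) and minimizing \(J(x(t_{0}),x(t_{f})) + y(t_{f})\).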
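The conversion can be sketched in a few lines of Python. The state is augmented by the extra component y, and the Bolza integral is read off as \(y(t_{f})\). This is a hypothetical helper for illustration only: the function names and the fixed-step RK4 integrator with an open-loop control are our assumptions.

```python
import math


def mayer_from_bolza(f, f0):
    """Augmented dynamics for z = (x, y): x' = f(t, x, u), y' = f0(t, x, u)."""
    def F(t, z, u):
        x = z[:-1]                          # original state; z[-1] is the new state y
        return list(f(t, x, u)) + [f0(t, x, u)]
    return F


def rk4_open_loop(F, z0, control, t0, tf, n=1000):
    """Integrate z' = F(t, z, control(t)) with classical RK4 and return z(tf)."""
    dt = (tf - t0) / n
    t, z = t0, list(z0)
    for _ in range(n):
        k1 = F(t, z, control(t))
        k2 = F(t + dt / 2, [zi + dt / 2 * ki for zi, ki in zip(z, k1)], control(t + dt / 2))
        k3 = F(t + dt / 2, [zi + dt / 2 * ki for zi, ki in zip(z, k2)], control(t + dt / 2))
        k4 = F(t + dt, [zi + dt * ki for zi, ki in zip(z, k3)], control(t + dt))
        z = [zi + dt / 6 * (a + 2 * b + 2 * c + d)
             for zi, a, b, c, d in zip(z, k1, k2, k3, k4)]
        t += dt
    return z


if __name__ == "__main__":
    # x' = u with u(t) = cos t and x(0) = 0; running cost f0 = x^2 + u^2
    F = mayer_from_bolza(lambda t, x, u: [u], lambda t, x, u: x[0] ** 2 + u ** 2)
    x_T, y_T = rk4_open_loop(F, [0.0, 0.0], math.cos, 0.0, 2.0)
    print(y_T)  # the Bolza integral over [0, 2]
```

In the demo, x(t) = sin t and \(f_{0} = x^{2} + u^{2} \equiv 1\) along this pair, so y(2) is approximately 2.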

3.2 First-Order Necessary Conditions

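Let w 0 = (x 0, u 0) be an admissible pair. We introduce the Pontryagin function (or the Hamiltonian)

$$\displaystyle{H(t,x,u,\lambda ):=\lambda f(t,x,u)}$$

and the augmented Pontryagin function (or the augmented Hamiltonian)

$$\displaystyle{H^{a}(t,x,u,\lambda,\nu ):= H(t,x,u,\lambda ) +\nu h(t,x,u),}$$

where \(\lambda\) and ν are row vectors of dimensions d(x) = n and d(h), respectively. For brevity we set \(x_{0}:= x(t_{0})\), \(x_{f}:= x(t_{f})\), and \(\eta:= (x_{0},x_{f})\).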

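Denote by \(\mathbb{R}^{n{\ast}}\) the space of n-dimensional row vectors. Define the endpoint Lagrange function

$$\displaystyle{l(\eta,\alpha _{0},\alpha,\beta ):=\alpha _{0}J(\eta ) +\alpha F(\eta ) +\beta K(\eta ),}$$

where \(\alpha _{0} \in \mathbb{R},\quad \alpha \in (\mathbb{R}^{d(F)})^{{\ast}},\quad \beta \in (\mathbb{R}^{d(K)})^{{\ast}}.\) Introduce a tuple of Lagrange multipliers

$$\displaystyle{\mu = (\alpha _{0},\alpha,\beta,\lambda (\cdot ),\nu (\cdot ))}$$

such that \(\lambda (\cdot ): [t_{0},t_{f}] \rightarrow \mathbb{R}^{n{\ast}}\) is absolutely continuous and \(\nu (\cdot ): [t_{0},t_{f}] \rightarrow (\mathbb{R}^{d(h)})^{{\ast}}\) is measurable and bounded. Denote by \(\varLambda _{0}\) the set of the tuples μ satisfying the following conditions at the point w 0:

$$\displaystyle{\alpha _{0} \geq 0,\quad \alpha \geq 0,\quad \alpha F(\eta ^{0}) = 0,\quad \alpha _{0} +\sum _{i}\alpha _{i} +\sum _{j}\vert \beta _{j}\vert = 1,}$$

$$\displaystyle{-\dot{\lambda } = H_{x}^{a},\quad \lambda (t_{0}) = -l_{x_{0}},\quad \lambda (t_{f}) = l_{x_{f}},\quad H_{u}^{a} = 0,}$$
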
where η 0 = (x 0(t 0), x 0(t f )), the derivatives \(l_{x_{0}}\) and \(l_{x_{f}}\) are at (η 0, α 0, α, β) and the derivatives H x a, H u a are at \((t,x^{0}(t),u^{0}(t),\lambda (t),\nu (t))\), t ∈ [t 0, t f ]. By α i and β j we denote the components of the row vectors α and β, respectively.

Theorem 3.

If w 0 is a weak local minimum, then \(\varLambda _{0}\) is nonempty. Moreover, \(\varLambda _{0}\) is a finite dimensional compact set, and the projector \((\alpha _{0},\alpha,\beta,\lambda (\cdot ),\nu (\cdot )) \rightarrow (\alpha _{0},\alpha,\beta )\) is injective on \(\varLambda _{0}\) .

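The condition \(\varLambda _{0}\neq \emptyset\) is called the local Pontryagin minimum principle, or the Euler–Lagrange equation. Let M 0 be the set of all \(\mu = (\alpha _{0},\alpha,\beta,\lambda (\cdot ),\nu (\cdot )) \in \varLambda _{0}\) satisfying the minimum condition for a.a. t ∈ [t 0, t f ]:

$$\displaystyle{H(t,x^{0}(t),u,\lambda (t)) \geq H(t,x^{0}(t),u^{0}(t),\lambda (t))\quad \mbox{ for all }u \in U(t,x^{0}(t)),}$$

where

$$\displaystyle{U(t,x):=\{ u \in \mathbb{R}^{m}:\ h(t,x,u) = 0,\ (t,x,u) \in \mathcal{Q}\}.}$$
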
The condition M 0 ≠ ∅ is called the (integral) Pontryagin minimum principle, which is a necessary condition for the so-called Pontryagin minimum.

Definition 2 (A.A. Milyutin).

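The pair w 0 affords a Pontryagin minimum if for any compact set \(\mathcal{C}\subset \mathcal{Q}\) there exists \(\varepsilon > 0\) such that \(\mathcal{J} (w) \geq \mathcal{J} (w^{0})\) for all admissible pairs \(w(t) =\big (x(t),u(t)\big)\) satisfying the conditions

$$\displaystyle{\max _{[t_{0},t_{f}]}\vert x(t) - x^{0}(t)\vert <\varepsilon,\quad \int _{t_{0}}^{t_{f}}\vert u(t) - u^{0}(t)\vert \,dt <\varepsilon,\quad (t,x(t),u(t)) \in \mathcal{C}\ \mbox{ a.e. on }[t_{0},t_{f}].}$$
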
3.3 Second-Order Necessary Conditions

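Set

$$\displaystyle{\mathcal{W}_{2}:= W^{1,2}([t_{0},t_{f}],\mathbb{R}^{n}) \times L^{2}([t_{0},t_{f}],\mathbb{R}^{m}).}$$

Let \(\mathcal{K}\) be the set of all \(\bar{w} = (\bar{x},\bar{u}) \in \mathcal{W}_{2}\) satisfying the following conditions:

$$\displaystyle{J'(\eta ^{0})\bar{\eta }\leq 0,\quad F_{i}'(\eta ^{0})\bar{\eta }\leq 0\ \ (i \in I_{F}(\eta ^{0})),\quad K'(\eta ^{0})\bar{\eta }= 0,}$$

$$\displaystyle{\dot{\bar{x}}(t) = f_{w}(t,w^{0}(t))\bar{w}(t),\quad h_{w}(t,w^{0}(t))\bar{w}(t) = 0\ \mbox{ a.e. on }[t_{0},t_{f}],}$$
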
where \(\bar{\eta }= (\bar{x}(t_{0}),\bar{x}(t_{f}))\), \(I_{F}(\eta ^{0}):=\{ i: F_{i}(\eta ^{0}) = 0\}\) is the set of active indices. Obviously, \(\mathcal{K}\) is a convex cone in the Hilbert space \(\mathcal{W}_{2}\). We call it the critical cone.

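Let us introduce a quadratic form in \(\mathcal{W}_{2}\). For \(\mu \in \varLambda _{0}\) and \(\bar{w} = (\bar{x},\bar{u}) \in \mathcal{W}_{2}\), we set

$$\displaystyle{\varOmega (\mu,\bar{w}):=\langle l_{\eta \eta }^{\mu }(\eta ^{0})\bar{\eta },\bar{\eta }\rangle +\int _{t_{0}}^{t_{f}}\langle H_{ww}^{a\mu }(t)\bar{w}(t),\bar{w}(t)\rangle \,dt,}$$
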
where l η η μ(η 0) = l η η (η 0, α 0, α, β), \(H_{ww}^{a\mu }(t) = H_{ww}^{a}(t,x^{0}(t),u^{0}(t),\lambda (t),\nu (t))\), and \(\bar{\eta }= (\bar{x}(t_{0}),\bar{x}(t_{f}))\).

Theorem 4.

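If w 0 is a weak minimum, then the set \(\varLambda _{0}\) is nonempty and

$$\displaystyle{\max _{\mu \in \varLambda _{0}}\varOmega (\mu,\bar{w}) \geq 0\quad \mbox{ for all }\bar{w} \in \mathcal{K}.}$$
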
The necessary condition for a Pontryagin minimum differs from this condition only by replacing the set \(\varLambda _{0}\) by the set M 0.

Theorem 5.

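If w 0 is a Pontryagin minimum, then the set M 0 is nonempty and

$$\displaystyle{\max _{\mu \in M_{0}}\varOmega (\mu,\bar{w}) \geq 0\quad \mbox{ for all }\bar{w} \in \mathcal{K}.}$$
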
We now assume that the control u 0 is a piecewise continuous function on [t 0, t f ] with the set of discontinuity points \(\varTheta =\{ t_{1},\ldots,t_{s}\}\), \(t_{0} < t_{1} <\ldots < t_{s} < t_{f}.\) We also assume that each \(t_{k} \in \varTheta\) is an L-point of the function u 0 (see the definition in Sect. 2.2). In this case, the regularity assumption for h implies that, for any \(\mu = (\alpha _{0},\alpha,\beta,\lambda (\cdot ),\nu (\cdot )) \in \varLambda _{0}\), ν(t) has the same properties as u 0(t): the function ν(t) is piecewise continuous, and each of its points of discontinuity is an L-point that belongs to \(\varTheta\). By virtue of the adjoint equation \(\dot{\lambda }= -H_{x}^{a}\), the same is true for the derivative \(\dot{\lambda }(t)\) of the adjoint variable \(\lambda\). Now the second-order necessary conditions can be refined as follows.

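For μ ∈ M 0, set

$$\displaystyle{D^{k}(H^{a\mu }):=\dot{\lambda }^{k+}\dot{x}^{0k-} -\dot{\lambda }^{k-}\dot{x}^{0k+} - [H_{t}^{a\mu }]^{k},\qquad k = 1,\ldots,s,}$$
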
where [H t a μ]k is the jump of the derivative \(H_{t}^{a}(t,x^{0}(t),u^{0}(t),\lambda (t),\nu (t))\) at the point t k , and \(\dot{\lambda }^{k-}:=\dot{\lambda } (t_{k}-)\), \(\dot{\lambda }^{k+}:=\dot{\lambda } (t_{k}+)\), etc. Note that \(H_{t}^{a} = -\dot{\lambda }_{0}\), where \(\lambda _{0}(t) = -H(t)\), and hence \(-[H_{t}^{a}]^{k} = [\dot{\lambda }_{0}]^{k}\). Sometimes we omit the superscript μ in the notation D k(H a μ).

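We can calculate D k(H a) using another method. Namely, D k(H a) can be calculated as the negative derivative of the “jump of H a” at the point t k . Introduce the function

$$\displaystyle{(\varDelta _{k}H^{a})(t):= H^{a}(t,x^{0}(t),u^{0k+},\lambda (t),\nu ^{k+}) - H^{a}(t,x^{0}(t),u^{0k-},\lambda (t),\nu ^{k-}),}$$

where \(u^{0k\pm }:= u^{0}(t_{k}\pm )\) and \(\nu ^{k\pm }:=\nu (t_{k}\pm )\). It can be shown that the function (Δ k H a)(t) is continuously differentiable at the point \(t_{k} \in \varTheta\), and its derivative at this point coincides with − D k(H a). Therefore, we can obtain the value of D k(H a) by calculating the left or right limit of the derivatives of the function (Δ k H a)(t) at the point t k :

$$\displaystyle{D^{k}(H^{a}) = -\frac{d}{dt}(\varDelta _{k}H^{a})(t_{k}-) = -\frac{d}{dt}(\varDelta _{k}H^{a})(t_{k}+).}$$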
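The two ways of computing \(D^{k}\) can be compared on a hypothetical example of our own: the double integrator \(\dot{x}_{1} = x_{2}\), \(\dot{x}_{2} = u\) with \(H = \lambda _{1}x_{2} +\lambda _{2}u\), \(\lambda _{1} \equiv 1\), \(\lambda _{2}(t) = 1 - t\). With these multipliers, u switches from −1 to +1 at \(t_{k} = 1\). Writing \(\dot{\lambda }= -H_{x}\) and \(\dot{x} = f\), the jump formula reads \(D^{k} = -H_{x}^{k+}f^{k-} + H_{x}^{k-}f^{k+} - [H_{t}]^{k}\). Suppose the vertex set {−1, 1} is encoded by h(u) = u² − 1 = 0. Then the term νh depends on neither t nor x and vanishes at u = ±1, so it affects neither computation. The sketch compares the jump formula with a central difference of the jump function.

```python
def dot(a, b):
    """Euclidean inner product of two equal-length sequences."""
    return sum(ai * bi for ai, bi in zip(a, b))


def dk_formula(Hx_minus, Hx_plus, f_minus, f_plus, Ht_jump=0.0):
    """D^k = -H_x^{k+} f^{k-} + H_x^{k-} f^{k+} - [H_t]^k."""
    return -dot(Hx_plus, f_minus) + dot(Hx_minus, f_plus) - Ht_jump


def dk_from_jump(delta_H, tk, h=1e-5):
    """D^k = -(d/dt)(Delta_k H)(t_k), approximated by a central difference;
    this is legitimate because Delta_k H is C^1 at t_k."""
    return -(delta_H(tk + h) - delta_H(tk - h)) / (2.0 * h)


# Hypothetical double-integrator data at the switch tk = 1 (u: -1 -> +1).
tk = 1.0
x2_at_tk = 0.5                      # placeholder value of x2(tk); it cancels out
u_minus, u_plus = -1.0, 1.0


def lam2(t):
    """Second adjoint component; the switching function of this example."""
    return 1.0 - t


Hx = (0.0, 1.0)                     # H_x = (0, lambda_1): the same on both sides
f_minus = (x2_at_tk, u_minus)       # x_dot just before the switch
f_plus = (x2_at_tk, u_plus)         # x_dot just after the switch


def delta_H(t):
    """Delta_k H(t) = H(u+) - H(u-); the lambda_1 * x2 terms cancel."""
    return lam2(t) * (u_plus - u_minus)


if __name__ == "__main__":
    print(dk_formula(Hx, Hx, f_minus, f_plus))   # jump formula
    print(dk_from_jump(delta_H, tk))              # central difference
```

Both evaluations give \(D^{k} = 2 > 0\), in agreement with the inequality \(D^{k}(H^{a\mu }) \geq 0\) stated next.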

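For any μ ∈ M 0, it can be shown that D k(H a μ) ≥ 0, k = 1, …, s. Set

$$\displaystyle{Z_{2}(\varTheta ):= \mathbb{R}^{s} \times P_{\varTheta }W^{1,2}([t_{0},t_{f}],\mathbb{R}^{n}) \times L^{2}([t_{0},t_{f}],\mathbb{R}^{m}),}$$

where \(P_{\varTheta }W^{1,2}([t_{0},t_{f}], \mathbb{R}^{n})\) is the Hilbert space of piecewise continuous functions \(\bar{x}(t)\), absolutely continuous on each interval of the set \([t_{0},t_{f}]\setminus \varTheta\), whose first derivatives are square integrable. Define a quadratic form in \(Z_{2}(\varTheta )\) as follows:

$$\displaystyle{\varOmega _{\varTheta }(\mu,\bar{z}):=\langle l_{\eta \eta }^{\mu }(\eta ^{0})\bar{\eta },\bar{\eta }\rangle +\sum _{k=1}^{s}\big(D^{k}(H^{a\mu })\bar{t}_{k}^{2} + 2[\dot{\lambda }]^{k}\bar{x}_{\mathrm{av}}^{k}\bar{t}_{k}\big) +\int _{t_{0}}^{t_{f}}\langle H_{ww}^{a\mu }(t)\bar{w}(t),\bar{w}(t)\rangle \,dt,}$$
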
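where \(\bar{z} = (\bar{\theta },\bar{x},\bar{u})\), \(\bar{\theta }= (\bar{t}_{1},\ldots,\bar{t}_{s})\), \(\bar{\eta }= (\bar{x}(t_{0}),\bar{x}(t_{f}))\), \(\bar{x}_{\mathrm{av}}^{k} = \frac{1} {2}(\bar{x}(t_{k}-) +\bar{ x}(t_{k}+))\), \(\bar{w} = (\bar{x},\bar{u})\). Define the critical cone \(\mathcal{K}_{\varTheta }\) in the same space by the relations

$$\displaystyle{J'(\eta ^{0})\bar{\eta }\leq 0,\quad F_{i}'(\eta ^{0})\bar{\eta }\leq 0\ \ (i \in I_{F}(\eta ^{0})),\quad K'(\eta ^{0})\bar{\eta }= 0,}$$

$$\displaystyle{\dot{\bar{x}}(t) = f_{w}(t,w^{0}(t))\bar{w}(t),\quad h_{w}(t,w^{0}(t))\bar{w}(t) = 0\ \mbox{ a.e.},\quad [\bar{x}]^{k} = -[\dot{x}^{0}]^{k}\bar{t}_{k},\ k = 1,\ldots,s.}$$
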
Theorem 6.

If w 0 is a Pontryagin minimum, then the following Condition \(\mathcal{A}_{\varTheta }\) holds: the set M 0 is nonempty and

$$\displaystyle{\max _{\mu \in M_{0}}\varOmega _{\varTheta }(\mu,\bar{z}) \geq 0\quad \mbox{ for all }\bar{z} \in \mathcal{K}_{\varTheta }.}$$

Let us give another possible representation for the terms \(\big(D^{k}(H^{a\mu })\bar{t}_{k}^{2} + 2[\dot{\lambda }]^{k}\bar{x}_{\mathrm{av}}^{k}\bar{t}_{k}\big)\) of the quadratic form \(\varOmega _{\varTheta }(\mu,\bar{z})\) on the critical cone \(\mathcal{K}_{\varTheta }\).

Lemma 1.

Let μ ∈ M 0 and \(\bar{z} = (\bar{\theta },\bar{x},\bar{u}) \in \mathcal{K}_{\varTheta }\) . Then, for any k = 1,…,s, the following formula holds

Proof.

Everywhere in this proof we will omit the subscript and superscript k. Taking into account that

we obtain

3.4 Second-Order Sufficient Conditions

Here, we formulate sufficient optimality conditions, but only in the case of a discontinuous control u 0. Let again u 0 be a piecewise continuous function with the set of discontinuity points \(\varTheta\), and let each \(t_{k} \in \varTheta\) be an L-point. A natural strengthening of the necessary Condition \(\mathcal{A}_{\varTheta }\) in Theorem 6 turns out to be sufficient not only for a Pontryagin minimum, but also for the so-called bounded strong minimum, which is defined below.

Definition 3.

The component x i of the state vector x is called unessential if the functions f and h do not depend on x i and the functions J, F, and K are affine in x i (t 0) and x i (t f ). Let \(\underline{x}\) denote the vector composed of the essential components of the vector x.

For instance, the integral functional \(\mathcal{J} =\int _{ t_{0}}^{t_{f}}f_{ 0}(t,x,u)\,dt\) can be brought to the endpoint form \(\mathcal{J} = y(t_{f}) - y(t_{0}),\mbox{ where }\dot{y} = f_{0}(t,x,u).\) Clearly, y is an unessential component.

Definition 4.

An admissible pair w 0 affords a bounded strong minimum if for any compact set \(\mathcal{C}\subset \mathcal{Q}\) there exists \(\varepsilon > 0\) such that \(\mathcal{J} (w) \geq \mathcal{J} (w^{0})\) for all admissible pairs \(w(t) =\big (x(t),u(t)\big)\) satisfying the conditions \(\vert x(t_{0}) - x^{0}(t_{0})\vert <\varepsilon,\,\) \(\max _{[t_{0},t_{f}]}\vert \underline{x}(t) -\underline{ x}^{0}(t)\vert <\varepsilon \,\) and \(\,(t,x(t),u(t)) \in \mathcal{C}\mbox{ a.e. on }[t_{0},t_{f}]\).

Definition 5.

An admissible pair w 0 affords a strong minimum if there exists \(\varepsilon > 0\) such that \(\mathcal{J} (w) \geq \mathcal{J} (w^{0})\) for all admissible pairs \(w(t) =\big (x(t),u(t)\big)\) satisfying the conditions \(\vert x(t_{0}) - x^{0}(t_{0})\vert <\varepsilon \,\) and \(\;\max _{[t_{0},t_{f}]}\vert \underline{x}(t) -\underline{ x}^{0}(t)\vert <\varepsilon\).

The following assertion follows from the definitions.

Lemma 2.

If there exists a compact set \(\mathcal{C}\subset \mathcal{Q}\) such that \(\{(t,x,u) \in \mathcal{Q}: h(t,x,u) = 0\} \subset \mathcal{C}\) , then the bounded strong minimum is equivalent to the strong minimum.

Let us formulate sufficient conditions for a bounded strong minimum. For μ ∈ M 0, we introduce the following conditions of the strict minimum principle:

(a) \(\;H(t,x^{0}(t),u,\lambda (t)) > H(t,x^{0}(t),u^{0}(t),\lambda (t))\,\) for all \(t \in [t_{0},t_{f}]\setminus \varTheta\), \(u \in U(t,x^{0}(t))\), \(u \neq u^{0}(t)\);

(b) \(\;H(t_{k},x^{0}(t_{k}),u,\lambda (t_{k})) > H^{k}\) for all \(t_{k} \in \varTheta\), \(u \in U(t_{k},x^{0}(t_{k}))\), \(u \neq u^{0}(t_{k}-)\), \(u \neq u^{0}(t_{k}+)\), where

\(H^{k}:= H(t_{k},x^{0}(t_{k}),u^{0}(t_{k}-),\lambda (t_{k})) = H(t_{k},x^{0}(t_{k}),u^{0}(t_{k}+),\lambda (t_{k})).\)

We denote by M 0 + the set of all μ ∈ M 0 satisfying conditions (a) and (b).

For μ ∈ M 0 we also introduce the strengthened Legendre–Clebsch conditions:

(i) for each \(t \in [t_{0},t_{f}]\setminus \varTheta\), the quadratic form

$$\displaystyle{\langle H_{uu}^{a}(t,x^{0}(t),u^{0}(t),\lambda (t),\nu (t))u,u\rangle }$$

is positive definite on the subspace of vectors \(u \in \mathbb{R}^{m}\) such that

$$\displaystyle{h_{u}(t,x^{0}(t),u^{0}(t))u = 0;}$$

(ii) for each \(t_{k} \in \varTheta\), the quadratic form

$$\displaystyle{\langle H_{uu}^{a}(t_{k},x^{0}(t_{ k}),u^{0}(t_{ k}-),\lambda (t_{k}),\nu (t_{k}-))u,u\rangle }$$

is positive definite on the subspace of vectors \(u \in \mathbb{R}^{m}\) such that

$$\displaystyle{h_{u}(t_{k},x^{0}(t_{ k}),u^{0}(t_{ k}-))u = 0;}$$

(iii) the condition obtained from (ii) by replacing (t k −) everywhere by (t k +).

Note that for each μ ∈ M 0 the non-strengthened Legendre–Clebsch conditions hold, i.e., the same quadratic forms are nonnegative on the corresponding subspaces.

We denote by \(\mathrm{Leg}_{+}(M_{0}^{+})\) the set of all μ ∈ M 0 + satisfying the strengthened Legendre–Clebsch conditions (i)–(iii) and also the condition

(iv) \(D^{k}(H^{a\mu }) > 0\;\mbox{ for all }\,k = 1,\ldots,s.\)

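Let us introduce the functional

$$\displaystyle{\bar{\gamma }(\bar{z}):=\sum _{k=1}^{s}\bar{t}_{k}^{2} + \vert \bar{x}(t_{0})\vert ^{2} +\int _{t_{0}}^{t_{f}}\vert \bar{u}(t)\vert ^{2}\,dt,}$$

where \(\bar{z} = (\bar{\theta },\bar{x},\bar{u})\) and \(\,\bar{\theta }= (\bar{t}_{1},\ldots,\bar{t}_{s})\).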

Theorem 7.

For the pair w 0 , assume that the following Condition \(\mathcal{B}_{\varTheta }\) holds: the set \(\,\mathrm{Leg}_{+}(M_{0}^{+})\) is nonempty and there exist a nonempty compact set \(M \subset \mathrm{ Leg}_{+}(M_{0}^{+})\) and a number C > 0 such that

$$\displaystyle{\max _{\mu \in M}\varOmega _{\varTheta }(\mu,\bar{z}) \geq C\bar{\gamma }(\bar{z})}$$

for all \(\bar{z} \in \mathcal{K}_{\varTheta }\) . Then the pair w 0 affords a (strict) bounded strong minimum.

The sufficient condition \(\mathcal{B}_{\varTheta }\) guarantees a certain growth condition for the cost which will be presented below. We define now the concept of the order function Γ(t,u).

Assuming that the function u 0(t) is left-continuous, denote by cl u 0(⋅ ) the closure (in \(\mathbb{R}^{m+1}\)) of its graph. Denote by cl u 0(t k−1, t k ) the closure in \(\mathbb{R}^{m+1}\) of the graph of the restriction of u 0(t) to the interval (t k−1, t k ), \(k = 1,\ldots,s + 1\), where \(t_{s+1} = t_{f}\). Then

$$\displaystyle{\mathrm{cl}\,u^{0}(\cdot ) =\bigcup \limits _{k=1}^{s+1}\mathrm{cl}\,u^{0}(t_{k-1},t_{k}).}$$

Denote by \(\mathcal{V}_{k}\), \(k = 1,\ldots,s + 1,\) a system of non-overlapping neighborhoods of the compact sets cl u 0(t k−1, t k ). Let \(\mathcal{V} =\bigcup \limits _{ k=1}^{s+1}\mathcal{V}_{k}.\)

Definition 6.

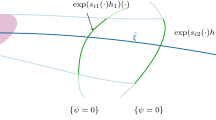

The function \(\varGamma (t,u): \mathbb{R}^{1+m} \rightarrow \mathbb{R}\) is said to be an order function if there exist disjoint neighborhoods \(\mathcal{V}_{k}\) of the compact sets cl u 0(t k−1, t k ) such that the following five conditions hold (Fig. 1):

(1) \(\varGamma (t,u) = \vert u - u^{0}(t)\vert ^{2}\) if \((t,u) \in \mathcal{V}_{k}\), t ∈ (t k−1, t k ), \(k = 1,\ldots,s + 1\);

(2) \(\varGamma (t,u) = 2\vert t - t_{k}\vert + \vert u - u^{0k-}\vert ^{2}\) if \((t,u) \in \mathcal{V}_{k}\), t > t k , k = 1, …, s;

(3) \(\varGamma (t,u) = 2\vert t - t_{k}\vert + \vert u - u^{0k+}\vert ^{2}\) if \((t,u) \in \mathcal{V}_{k+1}\), t < t k , k = 1, …, s;

(4) Γ(t, u) > 0 if \((t,u)\notin \mathcal{V}\);

(5) Γ(t, u) is Lipschitz continuous on each compact set in \(\mathbb{R}^{1+m}\).
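Consider a single switching time and a piecewise-constant \(u^{0}\), equal to \(u^{0k-}\) before \(t_{k}\) and to \(u^{0k+}\) after it. One possible order function in this case is the minimum of the two expressions in items (2) and (3). This is our own construction, not one taken from [31]:

\(\varGamma (t,u) = \min \{\vert u - u^{0k-}\vert ^{2} + 2(t - t_{k})_{+},\ \vert u - u^{0k+}\vert ^{2} + 2(t_{k} - t)_{+}\}\).

This function vanishes exactly on the closure of the graph of \(u^{0}\). It is locally Lipschitz as a minimum of locally Lipschitz functions. It coincides with the expressions in items (1)–(3) provided the neighborhoods \(\mathcal{V}_{k}\) are taken small enough.

```python
def order_function(t, u, tk, u_minus, u_plus):
    """A candidate order function Gamma(t, u) near a single switch at tk.

    Hypothetical construction for a piecewise-constant u0 with
    u0 = u_minus before tk and u0 = u_plus after tk (u given as a sequence).
    """
    d_minus = sum((a - b) ** 2 for a, b in zip(u, u_minus))  # |u - u0(tk-)|^2
    d_plus = sum((a - b) ** 2 for a, b in zip(u, u_plus))    # |u - u0(tk+)|^2
    # left arc: exact for t < tk, penalized by 2|t - tk| for t > tk (item (2))
    left = d_minus + 2.0 * max(t - tk, 0.0)
    # right arc: exact for t > tk, penalized by 2|t - tk| for t < tk (item (3))
    right = d_plus + 2.0 * max(tk - t, 0.0)
    # a minimum of locally Lipschitz functions is locally Lipschitz (item (5))
    return min(left, right)
```

For example, with \(t_{k} = 1\), \(u^{0k-} = -1\), \(u^{0k+} = 1\), the point (t, u) = (1.1, −0.9) lies just past the switch near the left arc. There the function returns \(2\cdot 0.1 + 0.1^{2} = 0.21\), as prescribed by item (2).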

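For δ w(t) = (δ x(t), δ u(t)) in \(\mathcal{W}\) we set

$$\displaystyle{\gamma (\delta w):=\max _{[t_{0},t_{f}]}\vert \delta x(t)\vert ^{2} +\int _{t_{0}}^{t_{f}}\varGamma (t,u^{0}(t) +\delta u(t))\,dt.}$$

We call γ the higher order. This higher order corresponds to a typical minimum in the case of a discontinuous control, and the order function Γ(t, u) corresponds to a typical Hamiltonian in this case.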

Note that the order \(\int _{t_{0}}^{t_{f}}\varGamma (t,u^{0}(t) +\delta u(t))\,dt\) is much finer (smaller) than the functional \(\int _{t_{0}}^{t_{f}}\vert \delta u(t)\vert ^{2}\,dt\). On the other hand, it can be proved that, on each compact set (in \(\mathbb{R}^{m}\)), the following lower bound holds for \(\int _{t_{0}}^{t_{f}}\varGamma (t,u^{0}(t) +\delta u(t))\,dt\):

$$\displaystyle{\int _{t_{0}}^{t_{f}}\varGamma (t,u^{0}(t) +\delta u(t))\,dt \geq C\Big(\int _{t_{0}}^{t_{f}}\vert \delta u(t)\vert \,dt\Big)^{2},}$$

where C > 0 depends only on the compact set.

Define the violation function at the point w 0:

where η 0 = (x 0(t 0), x 0(t f )), δ η = (δ x(t 0), δ x(t f )), \(\delta f = f(t,w^{0} +\delta w) - f(t,w^{0})\), δ w = (δ x, δ u), \(\|.\|_{1}\) is the norm in the space L 1 of integrable functions, and \(a_{+}:=\max \{ a,0\}\) for \(a \in \mathbb{R}\).

Definition 7.

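We say that a bounded strong γ-sufficiency holds at the point w 0 if there exists C > 0 such that for any compact set \(\mathcal{C}\subset \mathcal{Q}\) there exists \(\varepsilon > 0\) such that the inequality V (δ w) ≥ C γ(δ w) holds for all \(\delta w = (\delta x,\delta u) \in \mathcal{W}\) satisfying the conditions

$$\displaystyle{\vert \delta x(t_{0})\vert <\varepsilon,\quad \max _{[t_{0},t_{f}]}\vert \delta \underline{x}(t)\vert <\varepsilon,\quad (t,x^{0}(t) +\delta x(t),u^{0}(t) +\delta u(t)) \in \mathcal{C}\ \mbox{ a.e. on }[t_{0},t_{f}].}$$ (9)
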
Obviously, a bounded strong γ-sufficiency implies a (strict) bounded strong minimum. Moreover, if the point w 0 +δ w is admissible, then, obviously, \(V (\delta w) = (J(w^{0} +\delta w) - J(w^{0}))_{+}\). Therefore, a bounded strong γ-sufficiency implies the following:

γ-growth condition for the cost: there exists C > 0 such that for any compact set \(\mathcal{C}\subset \mathcal{Q}\) there exists \(\varepsilon > 0\) such that

$$\displaystyle{J(w^{0} +\delta w) - J(w^{0}) \geq C\gamma (\delta w)}$$

for all \(\delta w = (\delta x,\delta u) \in \mathcal{W}\,\) satisfying (9) and such that (w 0 +δ w) is an admissible pair.

Theorem 8.

The sufficient condition \(\mathcal{B}_{\varTheta }\) in Theorem 7 is equivalent to the bounded strong γ-sufficiency.

Theorems 6–8 were proved in [31]. Generalizations of these theorems to optimal control problems with regular mixed inequality state-control constraints were recently published in [33, 34]. An extension of the results of this section to problems on a variable time interval was obtained in [32].

4 The General Problem in the Calculus of Variations on a Variable Time Interval

4.1 Statement of the Problem

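Here, quadratic optimality conditions, both necessary and sufficient, are presented for the following canonical problem on a variable time interval. Let \(\mathcal{T}\) denote a process (x(t), u(t)∣t ∈ [t 0, t f ]), where the state variable x(⋅ ) is a Lipschitz continuous function and the control variable u(⋅ ) is a bounded measurable function on a time interval Δ = [t 0, t f ]. The interval Δ is not fixed. For each process \(\mathcal{T}\), we denote here by

$$\displaystyle{\eta:= (t_{0},x(t_{0}),t_{f},x(t_{f}))}$$

the vector of the endpoints of the time-state variable (t, x). It is required to find \(\mathcal{T}\) minimizing the functional

$$\displaystyle{\mathcal{J}(\mathcal{T}):= J(t_{0},x(t_{0}),t_{f},x(t_{f})) \rightarrow \min }$$ (10)

subject to the constraints

$$\displaystyle{F(\eta ) \leq 0,\quad K(\eta ) = 0,\quad \eta \in \mathcal{P},}$$ (11)

$$\displaystyle{\dot{x}(t) = f(t,x(t),u(t)),\quad h(t,x(t),u(t)) = 0,\quad (t,x(t),u(t)) \in \mathcal{Q},}$$ (12)

where \(\mathcal{P}\) and \(\mathcal{Q}\) are open sets, and x, u, F, K, f, and h are vector-functions.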

We assume that the functions J, F, and K are defined and twice continuously differentiable on \(\mathcal{P}\), and the functions f and h are defined and twice continuously differentiable on \(\mathcal{Q}\). It is also assumed that the gradients with respect to the control h iu (t, x, u), \(i = 1,\ldots,d(h)\) are linearly independent at each point \((t,x,u) \in \mathcal{Q}\) such that h(t, x, u) = 0. Here d(h) is the dimension of the vector h.

4.2 First-Order Necessary Conditions

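We say that a function u(t) is piecewise Lipschitz-continuous if it is piecewise continuous and satisfies the Lipschitz condition on each interval of continuity. Let

$$\displaystyle{\mathcal{T}= (x(t),u(t)\mid t \in [t_{0},t_{f}])}$$

be a fixed admissible process such that the control u(⋅ ) is a piecewise Lipschitz-continuous function on the interval Δ with the set of discontinuity points

$$\displaystyle{\varTheta =\{ t_{1},\ldots,t_{s}\},\qquad t_{0} < t_{1} <\ldots < t_{s} < t_{f}.}$$
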
In order to make the notations simpler we do not use here such symbols and indices as zero, hat or asterisk to distinguish the process \(\mathcal{T}\) from others.

Let us formulate the first-order necessary condition for optimality of the process \(\mathcal{T}\). We introduce the Pontryagin function H (Hamiltonian), the augmented Pontryagin function H a, and the endpoint Lagrange function l as in Sect. 3.2, but now η = (t 0, x 0, t f , x f ). Also, we introduce a tuple of Lagrange multipliers

$$\displaystyle{\mu = (\alpha _{0},\alpha,\beta,\lambda (\cdot ),\lambda _{0}(\cdot ),\nu (\cdot ))}$$

such that \(\lambda (\cdot ):\varDelta \rightarrow (\mathbb{R}^{d(x)})^{{\ast}}\) and \(\lambda _{0}(\cdot ):\varDelta \rightarrow \mathbb{R}^{1}\) are piecewise smooth functions, continuously differentiable on each interval of the set \(\varDelta \setminus \varTheta\), and \(\nu (\cdot ):\varDelta \rightarrow (\mathbb{R}^{d(h)})^{{\ast}}\) is a piecewise continuous function and Lipschitz continuous on each interval of the set \(\varDelta \setminus \varTheta\).

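Denote by M 0 the set of the normed tuples μ satisfying the conditions of the minimum principle for the process \(\mathcal{T}\):

$$\displaystyle{\alpha _{0} \geq 0,\quad \alpha \geq 0,\quad \alpha F(\eta ) = 0,\quad \alpha _{0} +\sum _{i}\alpha _{i} +\sum _{j}\vert \beta _{j}\vert = 1,}$$

$$\displaystyle{-\dot{\lambda } = H_{x}^{a},\quad -\dot{\lambda }_{0} = H_{t}^{a},\quad H_{u}^{a} = 0,\quad t \in \varDelta \setminus \varTheta,}$$

$$\displaystyle{\lambda (t_{0}) = -l_{x_{0}},\quad \lambda (t_{f}) = l_{x_{f}},\quad \lambda _{0}(t_{0}) = -l_{t_{0}},\quad \lambda _{0}(t_{f}) = l_{t_{f}},}$$

$$\displaystyle{\min _{u\in U(t,x(t))}H(t,x(t),u,\lambda (t)) = H(t,x(t),u(t),\lambda (t)) = -\lambda _{0}(t),\quad t \in \varDelta \setminus \varTheta,}$$
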
where \(U(t,x) = \{u \in \mathbb{R}^{d(u)} \mid h(t,x,u) = 0,\ (t,x,u) \in \mathcal{Q}\}\). The derivatives \(l_{x_{0}}\) and \(l_{x_{f}}\) are taken at \((\eta,\alpha_{0},\alpha,\beta)\), where \(\eta = (t_{0},x(t_{0}),t_{f},x(t_{f}))\), and the derivatives \(H_{x}^{a}\), \(H_{u}^{a}\), and \(H_{t}^{a}\) are taken at \((t,x(t),u(t),\lambda (t),\nu (t))\), where \(t \in \varDelta \setminus \varTheta\). (The condition \(H_{u}^{a} = 0\) follows from the other conditions in this definition and could therefore be omitted; however, we need it later.)

Let us give the definition of Pontryagin minimum in problem (10)–(12) on a variable interval [t 0, t f ].

Definition 8.

The process \(\mathcal{T}\) affords a Pontryagin minimum if for each compact set \(\mathcal{C}\subset \mathcal{Q}\) there exists \(\varepsilon > 0\) such that \(\mathcal{J} (\tilde{\mathcal{T}} ) \geq \mathcal{J} (\mathcal{T} )\) for all admissible processes \(\tilde{\mathcal{T}}= (\tilde{x}(t),\tilde{u}(t)\mid t \in [\tilde{t}_{0},\tilde{t}_{f}])\) satisfying the conditions

(a) \(\vert \tilde{t}_{0} - t_{0}\vert <\varepsilon,\quad\) \(\vert \tilde{t}_{f} - t_{f}\vert <\varepsilon,\)

(b) \(\max \limits _{\tilde{\varDelta }\cap \varDelta }\vert \tilde{x}(t) - x(t)\vert <\varepsilon,\quad\) where \(\tilde{\varDelta }= [\tilde{t}_{0},\tilde{t}_{f}]\),

(c) \(\int \limits _{\tilde{\varDelta }\cap \varDelta }\vert \tilde{u}(t) - u(t)\vert \,dt <\varepsilon\),

(d) \((t,\tilde{x}(t),\tilde{u}(t)) \in \mathcal{C}\quad \mbox{ a.e. on }\tilde{\varDelta }.\)

The condition \(M_{0} \neq \emptyset\) is equivalent to Pontryagin's minimum principle. It is the first-order necessary condition for a Pontryagin minimum of the process \(\mathcal{T}\). Thus, the following theorem holds.

Theorem 9.

If the process \(\mathcal{T}\) affords a Pontryagin minimum, then the set \(M_{0}\) is nonempty.

Assume that the set \(M_{0}\) is nonempty. Using its definition and the full-rank condition for the matrix \(h_{u}\) on the surface h = 0, one can easily prove the following statement:

Proposition 1.

The set \(M_{0}\) is a finite-dimensional compact set, and the mapping \(\mu \mapsto (\alpha _{0},\alpha,\beta)\) is injective on \(M_{0}\).

As in Sect. 3, for each \(\mu \in M_{0}\) and \(t_{k} \in \varTheta\), we define \(D^{k}(H^{a\mu})\) by relation (7). Then, for each \(\mu \in M_{0}\), the following inequalities hold: \(D^{k}(H^{a\mu}) \geq 0\), k = 1, …, s.

4.3 Second-Order Necessary Conditions

Let us formulate a quadratic necessary condition for a Pontryagin minimum for the process \(\mathcal{T}\) as in (13). First, for this process, we introduce a Hilbert space \(\mathcal{Z}_{2}(\varTheta )\) and the critical cone \(\mathcal{K}\subset \mathcal{Z}_{2}(\varTheta )\). Again, we denote by \(P_{\varTheta }W^{1,2}(\varDelta, \mathbb{R}^{d(x)})\) the Hilbert space of piecewise continuous functions \(\bar{x}(\cdot ):\varDelta \rightarrow \mathbb{R}^{d(x)},\) absolutely continuous on each interval of the set \(\varDelta \setminus \varTheta\) and such that their first derivative is square integrable. We set

where

Thus,

Moreover, for given \(\bar{z}\) we set

By \(I_{F}(\eta ) =\{ i \in \{ 1,\ldots,d(F)\}\mid F_{i}(\eta ) = 0\}\) we denote the set of active indices of the constraints F i ≤ 0. Let \(\mathcal{K}_{\varTheta }\) be the set of all \(\bar{z} \in \mathcal{Z}_{2}(\varTheta )\) satisfying the following conditions:

Clearly, \(\mathcal{K}_{\varTheta }\) is a convex cone in the Hilbert space \(\mathcal{Z}_{2}(\varTheta )\). We call it the critical cone. If the interval Δ is fixed, then we set \(\eta:= (x_{0},x_{f}) = (x(t_{0}),x(t_{f}))\), and in the definition of \(\mathcal{K}_{\varTheta }\) we have \(\bar{t}_{0} =\bar{ t}_{f} = 0\), \(\bar{\bar{x}}_{0} = \bar{x}_{0}\), \(\bar{\bar{x}}_{f} = \bar{x}_{f}\), and \(\bar{\bar{\eta }}= \bar{\eta }:= (\bar{x}_{0},\bar{x}_{f})\).

Let us introduce a quadratic form on \(\mathcal{Z}_{2}(\varTheta )\). For μ ∈ M 0 and \(\bar{z} \in \mathcal{K}_{\varTheta }\), we set

where \(l_{\eta \eta }^{\mu } = l_{\eta \eta }(\eta,\alpha _{0},\alpha,\beta ),\quad H_{ww}^{a\mu } = H_{ww}^{a}(t,x(t),u(t),\lambda (t),\nu (t)).\) We now formulate the main quadratic necessary condition for a Pontryagin minimum in the problem on a variable time interval.

Theorem 10.

If the process \(\mathcal{T}\) yields a Pontryagin minimum, then the following Condition \(\mathcal{A}_{\varTheta }\) holds: the set \(M_{0}\) is nonempty and

Using (8), we can represent the quadratic form \(\varOmega _{\varTheta }\) on \(\mathcal{K}_{\varTheta }\) as follows:

4.4 Second-Order Sufficient Conditions

Let us give the definition of a bounded strong minimum in problem (10)–(12) on a variable interval \([t_{0},t_{f}]\). Let again \(\underline{x}\) denote the vector composed of all essential components of the vector x (cf. Definition 3).

Definition 9.

The process \(\mathcal{T}\) affords a bounded strong minimum if for each compact set \(\mathcal{C}\subset \mathcal{Q}\) there exists \(\varepsilon > 0\) such that \(\mathcal{J} (\tilde{\mathcal{T}} ) \geq \mathcal{J} (\mathcal{T} )\) for all admissible processes \(\tilde{\mathcal{T}}= (\tilde{x}(t),\tilde{u}(t)\mid t \in [\tilde{t}_{0},\tilde{t}_{f}])\) satisfying the conditions

(a) \(\vert \tilde{t}_{0} - t_{0}\vert <\varepsilon,\quad\) \(\vert \tilde{t}_{f} - t_{f}\vert <\varepsilon,\quad\) \(\vert \tilde{x}(\tilde{t}_{0}) - x(t_{0})\vert <\varepsilon\),

(b) \(\max \limits _{\tilde{\varDelta }\cap \varDelta }\vert \underline{\tilde{x}}(t) -\underline{ x}(t)\vert <\varepsilon,\quad\) where \(\tilde{\varDelta }= [\tilde{t}_{0},\tilde{t}_{f}]\),

(c) \((t,\tilde{x}(t),\tilde{u}(t)) \in \mathcal{C}\quad \mbox{ a.e. on }\tilde{\varDelta }.\)

The strict bounded strong minimum is defined in a similar way, with the nonstrict inequality \(\mathcal{J} (\tilde{\mathcal{T}} ) \geq \mathcal{J} (\mathcal{T} )\) replaced by the strict one and the process \(\tilde{\mathcal{T}}\) required to be different from \(\mathcal{T}\).

Let us formulate a sufficient optimality Condition \(\mathcal{B}_{\varTheta }\), which is a natural strengthening of the necessary Condition \(\mathcal{A}_{\varTheta }\). The condition \(\mathcal{B}_{\varTheta }\) is sufficient not only for a Pontryagin minimum, but also for a strict bounded strong minimum.

Theorem 11.

For the process \(\mathcal{T}\) , assume that the following Condition \(\mathcal{B}_{\varTheta }\) holds: the set \(\mathrm{Leg}_{+}(M_{0}^{+})\) is nonempty and there exist a nonempty compact set \(M \subset \mathrm{ Leg}_{+}(M_{0}^{+})\) and a number C > 0 such that

for all \(\bar{z} \in \mathcal{K}_{\varTheta }\) . Then the process \(\mathcal{T}\) affords a strict bounded strong minimum.

Here the set \(\mathrm{Leg}_{+}(M_{0}^{+})\) has the same definition as in Sect. 3.4.

5 Second-Order Optimality Conditions for Bang-Bang Controls

5.1 Optimal Control Problems with Control Appearing Linearly

Let again \(\mathcal{T}\) denote a process \((x(t),u(t)\mid t \in [t_{0},t_{f}])\), where the time interval \(\varDelta = [t_{0},t_{f}]\) is not fixed. As above, we set

We will refer to the following control problem (22)–(24) as the basic control problem:

subject to the constraints

Here \(x \in \mathbb{R}^{n}\), \(u \in \mathbb{R}^{m}\), F, K, and f are vector functions, g is an n × m matrix function with column vector functions \(g_{1}(t,x),\ldots,g_{m}(t,x)\), \(\mathcal{P}\subset \mathbb{R}^{2n+2}\) and \(\mathcal{Q}\subset \mathbb{R}^{n+1}\) are open sets, and \(U \subset \mathbb{R}^{m}\) is a convex polyhedron. The functions J, F, and K are assumed to be twice continuously differentiable on \(\mathcal{P}\), and the functions f and g are assumed to be twice continuously differentiable on \(\mathcal{Q}\).

A process \(\mathcal{T} = (x(t),u(t)\mid t \in [t_{0},t_{f}])\) is said to be admissible if x(⋅ ) is absolutely continuous, u(⋅ ) is measurable and bounded, and the pair of functions (x(t), u(t)) on the interval \(\varDelta = [t_{0},t_{f}]\) with the endpoints \(\eta = (t_{0},x(t_{0}),t_{f},x(t_{f}))\) satisfies the constraints (23), (24).

Let us give the definition of Pontryagin minimum for the basic problem.

Definition 10.

The process \(\widehat{\mathcal{T}} = (\hat{x}(t),\hat{u}(t)\mid t \in [\hat{t}_{0},\hat{t}_{f}])\) affords a Pontryagin minimum in the basic problem if there exists \(\varepsilon > 0\) such that \(\mathcal{J} (\mathcal{T} ) \geq \mathcal{J} (\widehat{\mathcal{T}})\) for all admissible processes \(\mathcal{T} = (x(t),u(t)\mid t \in [t_{0},t_{f}])\) satisfying

where \(\varDelta = [t_{0},t_{f}]\), \(\widehat{\varDelta }= [\hat{t}_{0},\hat{t}_{f}]\).

Note that, for a fixed time interval Δ, a Pontryagin minimum corresponds to an \(L^{1}\)-local minimum with respect to the control variable.

5.2 Necessary Optimality Conditions: The Minimum Principle of Pontryagin et al.

Let \(\mathcal{T} = (x(t),u(t)\mid t \in [t_{0},t_{f}])\) be a fixed admissible process such that the control u(⋅ ) is a piecewise constant function on the interval \(\varDelta = [t_{0},t_{f}]\). Denote by \(\varTheta =\{ t_{1},\ldots,t_{s}\}\), \(t_{0} < t_{1} <\ldots < t_{s} < t_{f}\), the finite set of all discontinuity points (jump points) of the control u(t). Then \(\dot{x}(t)\) is a piecewise continuous function whose discontinuity points belong to \(\varTheta\), and hence x(t) is a piecewise smooth function on Δ.

Let us formulate the Pontryagin minimum principle, which is the first-order necessary condition for optimality of the process \(\mathcal{T}\). The Pontryagin function has the form

where \(\lambda\) is a row vector of dimension \(d(\lambda ) = d(x) = n\), while x, u, f, F, and K are column vectors. The factor of the control u in the Pontryagin function is the switching vector function, a row vector of dimension d(u) = m. Set

The endpoint Lagrange function is

where α and β are row vectors with \(d(\alpha ) = d(F),\,d(\beta ) = d(K)\), and \(\alpha _{0}\) is a number. By

we denote a tuple of Lagrange multipliers such that \(\lambda (\cdot ):\varDelta \rightarrow \mathbb{R}^{n{\ast}}\) and \(\lambda _{0}(\cdot ):\varDelta \rightarrow \mathbb{R}\) are continuous on Δ and continuously differentiable on each interval of the set \(\varDelta \setminus \varTheta\).

Let \(M_{0}\) be the set of the normed collections μ satisfying the conditions of the minimum principle for the process \(\mathcal{T}\):

The derivatives \(l_{x_{0}}\) and \(l_{x_{f}}\) are taken at the point \((\alpha _{0},\alpha,\beta,\eta)\), and the derivatives \(H_{x}\), \(H_{t}\) are evaluated at the point \((t,x(t),u(t),\lambda (t))\). We use the abbreviation (t) to indicate all arguments \((t,x(t),u(t),\lambda (t)),\) \(t \in \varDelta \setminus \varTheta\).

Theorem 12.

If the process \(\mathcal{T}\) affords a Pontryagin minimum, then the set \(M_{0}\) is nonempty. The set \(M_{0}\) is a finite-dimensional compact set, and the projection \(\mu \mapsto (\alpha _{0},\alpha,\beta)\) is injective on \(M_{0}\).

In view of this theorem, we can identify each tuple \(\mu \in M_{0}\) with its projection \((\alpha _{0},\alpha,\beta)\). In what follows we set \(\mu = (\alpha _{0},\alpha,\beta)\). For each \(\mu \in M_{0}\) and \(t_{k} \in \varTheta\), we again define the quantity \(D^{k}(H^{\mu})\). Set

For each \(\mu \in M_{0}\), the following equalities hold:

Consequently, for each \(\mu \in M_{0}\), the function \((\Delta _{k}H)(t)\) has a derivative at the point \(t_{k} \in \varTheta\). Set

Then, for each \(\mu \in M_{0}\), the minimum condition (30) implies the inequalities:

As we know, the value \(D^{k}(H^{\mu})\) can be written in the form

where \(H_{x}^{k-}\) and \(H_{x}^{k+}\) are the left-hand and right-hand values of the function \(H_{x}(t):= H_{x}(t,x(t),u(t),\lambda (t))\) at \(t_{k}\), respectively, \([H_{t}]^{k}\) is the jump of the function \(H_{t}(t)\) at \(t_{k}\), etc. It also follows from the above representation that we have

where the values on the right-hand side agree for the derivative \(\dot{\sigma }(t_{k}+)\) from the right and the derivative \(\dot{\sigma }(t_{k}-)\) from the left. In the case of a scalar control u, the total derivative \(\sigma _{t} +\sigma _{x}\dot{x} +\sigma _{\lambda }\dot{\lambda }\) does not contain the control variable explicitly and hence the derivative \(\dot{\sigma }(t)\) is continuous at t k .
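The quantity \(D^{k}(H)\) can be estimated numerically once the switching function is available along the extremal. The sketch below assumes a scalar control-affine problem with \((\Delta _{k}H)(t) = H(t,x(t),u^{k+},\lambda (t)) - H(t,x(t),u^{k-},\lambda (t)) = \sigma (t)[u]^{k}\) and \(D^{k}(H) = -\frac{d}{dt}(\Delta _{k}H)(t_{k})\), so that \(D^{k}(H) = -\dot{\sigma }(t_{k})[u]^{k}\). The callable `sigma` and the function name `jump_D` are hypothetical, and \(\dot{\sigma }(t_{k})\) is replaced by a central difference.

```python
def jump_D(sigma, t_k, u_minus, u_plus, h=1e-6):
    """Approximate D^k(H) = -sigma_dot(t_k) * [u]^k for a scalar control.

    sigma   : callable t -> sigma(t), the switching function (hypothetical input)
    t_k     : switching time
    u_minus : u(t_k-), u_plus : u(t_k+), so that [u]^k = u_plus - u_minus
    h       : step of the central difference used for sigma_dot(t_k)
    """
    sigma_dot = (sigma(t_k + h) - sigma(t_k - h)) / (2.0 * h)
    return -sigma_dot * (u_plus - u_minus)


# sigma(t) = t - 1 changes sign from negative to positive at t_k = 1, so a
# control minimizing sigma * u over U = [0, 1] jumps from u = 1 to u = 0 there.
D = jump_D(lambda t: t - 1.0, 1.0, u_minus=1.0, u_plus=0.0)
print(D)  # close to 1.0, consistent with D^k(H) >= 0
```

A strictly positive value, as in this toy case, is what condition (b) of the sufficient conditions below asks for.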

Definition 11.

For a given extremal process \(\mathcal{T} =\{\, (x(t),u(t))\mid t \in \varDelta \,\}\) with a piecewise constant control u(t) we say that u(t) is a strict bang-bang control if there exists \(\mu = (\alpha _{0},\alpha,\beta,\lambda,\lambda _{0}) \in M_{0}\) such that

where \(\,[u(t-),u(t+)]\) denotes the line segment spanned by the vectors u(t−) and u(t+) in \(\mathbb{R}^{d(u)}\) and \(\sigma (t):=\sigma (t,x(t),\lambda (t)) =\lambda (t)g(t,x(t))\).

Note that \([u(t-),u(t+)]\) is a singleton {u(t)} at each continuity point of the control u(t) with u(t) being a vertex of the polyhedron U. Only at the points \(t_{k} \in \varTheta\) does the line segment \([u^{k-},u^{k+}]\) coincide with an edge of the polyhedron.

It is instructive to evaluate the condition (35) in greater detail when the control set is the hypercube

Let \(S_{i} =\{ t_{k,i},\,k = 1,\ldots,k_{i}\},\;k_{i} \geq 0,\) be the set of switching times of the i-th control component \(u_{i}(t)\), and let \(\sigma _{i}(t) =\lambda (t)g_{i}(t,x(t))\) be the switching function for \(u_{i}\), i = 1, …, d(u). Then the set of all switching times is given by

and the condition (35) for a strict bang-bang control requires that

Hence, the i-th control component is determined by the control law
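Since the control enters H only through the term \(\sigma (t)u\), the minimum over a hypercube decouples into one scalar minimization per component. The sketch below assumes the hypercube is written as \(\{u : u_{\min,i} \leq u_{i} \leq u_{\max,i}\}\) (the bound names are hypothetical); it returns \(u_{\min,i}\) where \(\sigma _{i}(t) > 0\) and \(u_{\max,i}\) where \(\sigma _{i}(t) < 0\), and marks zeros of \(\sigma _{i}\) as undetermined, since there the minimum principle alone does not fix the control.

```python
import numpy as np


def box_bang_bang(sigma, u_min, u_max, tol=0.0):
    """Minimize sigma @ u over the box u_min <= u <= u_max componentwise.

    sigma : values sigma_i(t) of the switching functions, shape (m,)
    Returns u_i = u_min_i if sigma_i > tol and u_i = u_max_i if sigma_i < -tol;
    components with |sigma_i| <= tol (switching or possibly singular) are NaN.
    """
    sigma = np.asarray(sigma, dtype=float)
    lo = np.broadcast_to(np.asarray(u_min, dtype=float), sigma.shape)
    hi = np.broadcast_to(np.asarray(u_max, dtype=float), sigma.shape)
    return np.where(sigma > tol, lo, np.where(sigma < -tol, hi, np.nan))


u = box_bang_bang([0.5, -2.0, 0.0], u_min=[-1.0, 0.0, 0.0], u_max=[1.0, 1.0, 1.0])
# u == [-1.0, 1.0, nan]
```

For a strict bang-bang control the NaN case occurs only at the isolated switching times in \(S_{i}\).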

Remark 1.

There exist examples where condition (a) in (37) is slightly violated, since \(\sigma _{i}(t_{f}) = 0\) holds for certain control components \(u_{i}\); cf. the Rayleigh problem in Sect. 9.2 and the collision avoidance problem in Maurer et al. [27]. In this case, we additionally require that \(\dot{\sigma }_{i}(t_{f})\not =0\) holds to compensate for the condition \(\sigma _{i}(t_{f}) = 0\). This property is fulfilled for the Rayleigh problem in Sect. 9.2 and the control problem in [27].

5.3 Second-Order Necessary Optimality Conditions

Here, we formulate quadratic necessary optimality conditions for a Pontryagin minimum for a given bang-bang control. (Their strengthening yields quadratic sufficient conditions for a strong minimum.) These quadratic conditions are based on the properties of a quadratic form on the critical cone.

Let again \(\mathcal{T} = (x(t),u(t)\mid t \in [t_{0},t_{f}])\) be a fixed admissible process such that the control u(⋅ ) is a piecewise constant function on the interval Δ = [t 0, t f ], and let \(\varTheta =\{ t_{1},\ldots,t_{s}\}\), \(t_{0} < t_{1} <\ldots < t_{s} < t_{f}\), be the set of discontinuity points of the control u(t). For the process \(\mathcal{T}\), we introduce the space \(\mathcal{Z}(\varTheta )\) and the critical cone \(\mathcal{K}_{\varTheta }\subset \mathcal{Z}(\varTheta )\) as follows. Denote by \(P_{\varTheta }C^{1}(\varDelta, \mathbb{R}^{d(x)})\) the space of piecewise continuous functions \(\bar{x}(\cdot ):\varDelta \rightarrow \mathbb{R}^{d(x)}\) that are continuously differentiable on each interval of the set \(\varDelta \setminus \varTheta\). For each \(\bar{x} \in P_{\varTheta }C^{1}(\varDelta, \mathbb{R}^{d(x)})\) and for \(t_{k} \in \varTheta\) we set \(\bar{x}^{k-} =\bar{ x}(t_{k}-)\), \(\bar{x}^{k+} =\bar{ x}(t_{k}+)\) and \([\bar{x}]^{k} =\bar{ x}^{k+} -\bar{ x}^{k-}.\) Now set

where \(\bar{t}_{0},\bar{t}_{f} \in \mathbb{R}^{1}\), \(\bar{\theta }= (\bar{t}_{1},\ldots,\bar{t}_{s}) \in \mathbb{R}^{s}\), \(\bar{x} \in P_{\varTheta }C^{1}(\varDelta, \mathbb{R}^{d(x)})\). Thus,

For each \(\bar{z}\) we set

The vector \(\bar{\bar{\eta }}\) is considered as a column vector. Note that \(\bar{t}_{0} = 0\) (respectively, \(\bar{t}_{f} = 0\)) for a fixed initial time \(t_{0}\) (respectively, final time \(t_{f}\)). Let

be the set of indices of all active endpoint inequalities \(F_{i} \leq 0\) at the point \(\eta = (t_{0},x(t_{0}),t_{f},x(t_{f}))\). Denote by \(\mathcal{K}_{\varTheta }\) the set of all \(\bar{z} \in \mathcal{Z}(\varTheta )\) satisfying the following conditions:

It is obvious that \(\mathcal{K}_{\varTheta }\) is a convex, finite-dimensional, and finite-faced cone in the space \(\mathcal{Z}(\varTheta )\). We call it the critical cone. Each element \(\bar{z} \in \mathcal{K}_{\varTheta }\) is uniquely defined by the numbers \(\bar{t}_{0}\), \(\bar{t}_{f}\), the vector \(\bar{\theta }\) and the initial value \(\bar{x}(t_{0})\) of the function \(\bar{x}(t)\). Two important properties of the critical cone are formulated in the next two propositions.

Proposition 2.

For any \(\mu \in M_{0}\) and \(\bar{z} \in \mathcal{K}_{\varTheta }\), we have

Proposition 3.

Suppose that there exists \(\mu \in M_{0}\) with \(\alpha _{0} > 0\). Then, adding the equalities \(\alpha _{i}F_{i}'(\eta )\bar{\bar{\eta }} = 0\) \(\forall i \in I_{F}(\eta )\) to the system (40)–(42) defining \(\mathcal{K}_{\varTheta }\), one can omit the inequality \(J'(\eta )\bar{\bar{\eta }} \leq 0\) in that system without affecting \(\mathcal{K}_{\varTheta }\).

Thus, \(\mathcal{K}_{\varTheta }\) is defined by conditions (41), (42) and by the condition \(\bar{\bar{\eta }}\in \mathcal{K}_{\varTheta }^{e}\), where \(\mathcal{K}_{\varTheta }^{e}\) is the cone in \(\mathbb{R}^{2d(x)+2}\) given by (40). But if there exists \(\mu \in M_{0}\) with \(\alpha _{0} > 0\), then we can put

If, in addition, \(\alpha _{i} > 0\) holds for all \(i \in I_{F}(\eta )\), then \(\mathcal{K}_{\varTheta }^{e}\) is a subspace of \(\mathbb{R}^{2d(x)+2}\).

Let us introduce a quadratic form on the critical cone \(\mathcal{K}_{\varTheta }\) defined by the conditions (40)–(42). For each \(\mu \in M_{0}\) and \(\bar{z} \in \mathcal{K}_{\varTheta }\), we set

where \(l_{\eta \eta }^{\mu } = l_{\eta \eta }(\eta,\alpha _{0},\alpha,\beta ),\quad H_{xx}^{\mu } = H_{xx}(t,x(t),u(t),\lambda (t))\) and \(\bar{\bar{\eta }}\) was defined in (39). Note that for a problem on a fixed time interval [t 0, t f ] we have \(\bar{t}_{0} =\bar{ t}_{f} = 0\). The following theorem gives the main second-order necessary condition of optimality.

Theorem 13.

If the process \(\mathcal{T}\) affords a Pontryagin minimum, then the following Condition \(\mathcal{A}_{\varTheta }\) holds: the set \(M_{0}\) is nonempty and \(\max _{\mu \in M_{0}}\,\varOmega _{ \varTheta }(\mu,\bar{z}) \geq 0\) for all \(\bar{z} \in \mathcal{K}_{\varTheta }.\)

Using (8), we can also represent the quadratic form \(\varOmega _{\varTheta }\) as follows:

5.4 Second-Order Sufficient Optimality Conditions (SSC)

The state variable \(x_{i}\) is called unessential if the function f does not depend on \(x_{i}\) and the functions F, J, K are affine in \(x_{i0}:= x_{i}(t_{0})\) and \(x_{if}:= x_{i}(t_{f})\). Let \(\underline{x}\) denote the vector of all essential components of the state vector x. Let us define a strong minimum in the basic problem.

Definition 12.

The process \(\mathcal{T}\) affords a strong minimum if there exists \(\varepsilon > 0\) such that \(\mathcal{J} (\tilde{\mathcal{T}} ) \geq \mathcal{J} (\mathcal{T} )\) for all admissible processes \(\tilde{\mathcal{T}}= (\tilde{x}(t),\tilde{u}(t)\mid t \in [\tilde{t}_{0},\tilde{t}_{f}])\) satisfying the conditions

(a) \(\vert \tilde{t}_{0} - t_{0}\vert <\varepsilon,\quad\) \(\vert \tilde{t}_{f} - t_{f}\vert <\varepsilon,\quad\) \(\vert \tilde{x}(\tilde{t}_{0}) - x(t_{0})\vert <\varepsilon\),

(b) \(\max \limits _{\tilde{\varDelta }\cap \varDelta }\vert \underline{\tilde{x}}(t) -\underline{ x}(t)\vert <\varepsilon,\quad\) where \(\tilde{\varDelta }= [\tilde{t}_{0},\tilde{t}_{f}]\).

The strict strong minimum is defined in a similar way, with the non-strict inequality \(\mathcal{J} (\tilde{\mathcal{T}} ) \geq \mathcal{J} (\mathcal{T} )\) replaced by the strict one and the process \(\tilde{\mathcal{T}}\) required to be different from \(\mathcal{T}\).

A natural strengthening of the necessary Condition \(\mathcal{A}_{\varTheta }\) of Theorem 13 turns out to be a sufficient optimality condition not only for a Pontryagin minimum, but also for a strong minimum.

Theorem 14.

Let the following Condition \(\mathcal{B}_{\varTheta }\) be fulfilled for the process \(\mathcal{T}\):

(a) u(t) is a strict bang-bang control (i.e., there exists \(\mu \in M_{0}\) such that condition (35) holds),

(b) there exists \(\mu \in M_{0}\) such that \(D^{k}(H^{\mu}) > 0\), k = 1,…,s,

(c) \(\max \limits _{\mu \in M_{0}}\,\varOmega _{\varTheta }(\mu,\bar{z}) > 0\;\;\mbox{ for all }\;\bar{z} \in \mathcal{K}_{\varTheta }\setminus \{0\}.\)

Then \(\mathcal{T}\) is a strict strong minimum.

Note that condition (c) is automatically fulfilled if \(\mathcal{K}_{\varTheta } =\{ 0\}\), which gives a first-order sufficient condition for a strong minimum in the problem. Also note that condition (c) is automatically fulfilled if there exists \(\mu \in M_{0}\) such that

Sufficient conditions for inequality (46) were obtained in [21] and [22] (see also [36], Section 6.3). Clearly, there is no gap between the necessary condition \(\mathcal{A}_{\varTheta }\) of Theorem 13 and the sufficient condition \(\mathcal{B}_{\varTheta }\) of Theorem 14.

6 Induced Optimization Problem for Bang-Bang Controls and the Verification of SSC

We continue our discussion of bang-bang controls. Second-order sufficient optimality conditions for bang-bang controls have been derived in the literature in two different forms. The first form was discussed in the last section. The second one is due to Agrachev et al. [1], who first reduce the bang-bang control problem to a finite-dimensional optimization problem and then show that the well-known sufficient optimality conditions for this optimization problem, supplemented by the strict bang-bang property, furnish sufficient conditions for the bang-bang control problem. The bang-bang control problem considered in this section is more general than that in [1]. Following [35], we state the equivalence of both forms of second-order conditions for this problem.

6.1 Formulation of the Induced Optimization Problem and Necessary Optimality Conditions

Let \(\widehat{\mathcal{T}} = (\widehat{x}(t),\widehat{u}(t)\mid t \in [\widehat{t}_{0},\widehat{t}_{f}])\) be an admissible process for the basic control problem (22)–(24). We denote by ex U the set of vertices of the polyhedron U. Assume that \(\widehat{u}(t)\) is a bang-bang control in \(\widehat{\varDelta }= [\hat{t}_{0},\hat{t}_{f}]\) taking values in the set ex U,

where \(\hat{t}_{s+1} =\hat{ t}_{f}\). Thus, \(\widehat{\varTheta }=\{\hat{ t}_{1},\ldots,\hat{t}_{s}\}\) is the set of switching points of the control \(\hat{u}(\cdot )\) with \(\hat{t}_{k} <\hat{ t}_{k+1}\) for k = 0, 1, …, s. Assume now that the set \(M_{0}\) of multipliers is nonempty for the process \(\widehat{\mathcal{T}}\). Put

Then \(\hat{\theta }\in \mathbb{R}^{s}\), \(\hat{\zeta }\in \mathbb{R}^{2} \times \mathbb{R}^{n} \times \mathbb{R}^{s}\), where n = d(x).

Take a small neighborhood \(\mathcal{V}\) of the point \(\hat{\zeta }\) in \(\mathbb{R}^{2} \times \mathbb{R}^{n} \times \mathbb{R}^{s}\), and let

where \(\theta = (t_{1},\ldots,t_{s})\) satisfies \(t_{0} < t_{1} < t_{2} < \ldots < t_{s} < t_{f}\). Define the function \(u(t;t_{0},t_{f},\theta )\) by the condition (Fig. 2)

where \(t_{s+1} = t_{f}\). The values \(u(t_{k};t_{0},t_{f},\theta )\), k = 1, …, s, may be chosen in U arbitrarily. For definiteness, define them by the condition of continuity of the control from the left: \(u(t_{k};t_{0},t_{f},\theta ) = u(t_{k}-;t_{0},t_{f},\theta )\), k = 1, …, s.
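A minimal sketch of the piecewise constant control \(u(t;t_{0},t_{f},\theta )\), assuming the vertex values of the s + 1 arcs are passed as a list (the names `piecewise_control` and `vertices` are hypothetical). At each switching time \(t_{k}\) it returns the value of the arc ending at \(t_{k}\), which is the left-continuity convention just adopted.

```python
import bisect


def piecewise_control(t, t0, tf, theta, vertices):
    """Evaluate u(t; t0, tf, theta) for a bang-bang control.

    theta    : increasing switching times t_1 < ... < t_s inside (t0, tf)
    vertices : s+1 vertex values u^1, ..., u^{s+1}; u^k is used on (t_{k-1}, t_k)
    At t = t_k the left value u^k is returned (continuity from the left).
    """
    if not t0 <= t <= tf:
        raise ValueError("t outside [t0, tf]")
    if len(vertices) != len(theta) + 1:
        raise ValueError("need s+1 vertices for s switching times")
    # bisect_left counts the switching times strictly smaller than t; for
    # t == t_k this selects the arc ending at t_k, as left continuity requires.
    k = bisect.bisect_left(theta, t)
    return vertices[k]


u_at_switch = piecewise_control(1.0, 0.0, 3.0, [1.0, 2.0], [1.0, -1.0, 1.0])  # left value 1.0
```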

Let \(x(t;t_{0},t_{f},x_{0},\theta )\) be the solution of the initial value problem (IVP)

For each \(\zeta \in \mathcal{V}\) this solution exists if the neighborhood \(\mathcal{V}\) of the point \(\hat{\zeta }\) is sufficiently small. We obviously have

Consider now the following finite-dimensional optimization problem in the space \(\mathbb{R}^{2} \times \mathbb{R}^{n} \times \mathbb{R}^{s}\) of the variables \(\zeta = (t_{0},t_{f},x_{0},\theta )\):

We call (50) the Induced Optimization Problem (IOP), or simply the Induced Problem; it is an extension of the IOP introduced in [1]. The following assertion is almost obvious.

Theorem 15.

Let the process \(\widehat{\mathcal{T}}\) be a Pontryagin local minimum for the basic control problem (22)–(24) . Then the point \(\hat{\zeta }\) is a local minimum of the IOP (50) , and hence it satisfies first and second-order necessary conditions for this problem.
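Evaluating the IOP at a point \(\zeta = (t_{0},t_{f},x_{0},\theta )\) amounts to integrating the IVP arc by arc with the constant vertex on each arc. The sketch below assumes the cost of (50) is the endpoint functional J evaluated at \((t_{0},x_{0},t_{f},x(t_{f};t_{0},t_{f},x_{0},\theta ))\); the callables `f`, `g`, `J` and the helper names are hypothetical, and SciPy's `solve_ivp` is used with tight tolerances. The double-integrator data at the end are only a toy test case.

```python
import numpy as np
from scipy.integrate import solve_ivp


def induced_state(f, g, t0, tf, x0, theta, vertices):
    """Return x(tf; t0, tf, x0, theta) for the bang-bang control defined by theta.

    f(t, x) -> shape (n,), g(t, x) -> shape (n, m): hypothetical user callables
    theta    : switching times t_1 < ... < t_s in (t0, tf)
    vertices : s+1 vertices u^1, ..., u^{s+1} of U, one per arc
    """
    grid = [t0, *theta, tf]
    if len(vertices) != len(grid) - 1:
        raise ValueError("need one vertex per arc")
    if any(b <= a for a, b in zip(grid, grid[1:])):
        raise ValueError("require t0 < t_1 < ... < t_s < tf")
    x = np.asarray(x0, dtype=float)
    for (a, b), uk in zip(zip(grid, grid[1:]), vertices):
        uk = np.atleast_1d(np.asarray(uk, dtype=float))

        def rhs(t, y, uk=uk):
            # control-affine dynamics with the constant vertex u^k on this arc
            return f(t, y) + g(t, y) @ uk

        sol = solve_ivp(rhs, (a, b), x, rtol=1e-10, atol=1e-12)
        x = sol.y[:, -1]  # state at the switching time starts the next arc
    return x


def iop_cost(J, f, g, t0, tf, x0, theta, vertices):
    """Endpoint cost J(t0, x0, tf, x(tf; zeta)) of the induced problem."""
    xf = induced_state(f, g, t0, tf, x0, theta, vertices)
    return J(t0, np.asarray(x0, dtype=float), tf, xf)


# Toy case: double integrator x1' = x2, x2' = u with one switch at t_1 = 1.
f = lambda t, x: np.array([x[1], 0.0])
g = lambda t, x: np.array([[0.0], [1.0]])
xf = induced_state(f, g, 0.0, 2.0, [0.0, 0.0], [1.0], [1.0, -1.0])
cost = iop_cost(lambda t0, x0, tf, x_end: tf, f, g, 0.0, 2.0, [0.0, 0.0], [1.0], [1.0, -1.0])
```

Because the control is frozen at vertices, J becomes an ordinary function of finitely many variables, which is what makes standard NLP theory applicable.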

6.2 Second-Order Optimality Conditions for Bang-Bang Controls in Terms of the Induced Optimization Problem

We now clarify the relationship between the second-order conditions for the Induced Optimization Problem (50) at the point \(\hat{\zeta }\) and those in the basic bang-bang control problem (22)–(24) for the process \(\widehat{\mathcal{T}}\). It turns out that there is a one-to-one correspondence between Lagrange multipliers in these problems and a one-to-one correspondence between elements of the critical cones. Moreover, for corresponding Lagrange multipliers, the quadratic forms in these problems take equal values on the corresponding elements of the critical cones. This allows us to express the necessary and sufficient quadratic optimality conditions for a bang-bang control, formulated in Theorems 13 and 14, in terms of the IOP (50). Thus we are able to establish the equivalence between our quadratic sufficient conditions and those due to Agrachev et al. [1].

Let \(\widehat{\mathcal{T}} = (\hat{x}(t),\hat{u}(t)\mid t \in [\hat{t}_{0},\hat{t}_{f}])\) be an admissible process in the basic problem with the properties assumed in Sect. 5.2 and let \(\hat{\zeta }= (\hat{t}_{0},\hat{t}_{f},\hat{x}_{0},\hat{\theta })\) be the corresponding admissible point in the IOP. The Lagrange function for the Induced Optimization Problem (50) is

where \(\mu = (\alpha _{0},\alpha,\beta ),\,\zeta = (t_{0},t_{f},x_{0},\theta ),\,\theta = (t_{1},\ldots,t_{s})\). We denote by \(\mathcal{K}_{0}\) the critical cone at the point \(\hat{\zeta }\) in the IOP. Thus, \(\mathcal{K}_{0}\) is the set of collections \(\bar{\zeta }= (\bar{t}_{0},\bar{t}_{f},\bar{x}_{0},\bar{\theta })\) such that

where \(I =\{ i\mid \mathcal{F}_{i}(\hat{\zeta }) = 0\}\) is the set of indices of the inequality constraints active at the point \(\hat{\zeta }\). For \(\mu \in M_{0}\), the quadratic form of the Induced Optimization Problem is \(\langle \mathcal{L}_{\zeta \zeta }(\mu,\hat{\zeta })\bar{\zeta },\bar{\zeta }\rangle\).

Let us formulate now second-order optimality conditions for the basic control problem in terms of the IOP.

Theorem 16 (Second-Order Necessary Conditions).

If the process \(\widehat{\mathcal{T}}\) affords a Pontryagin minimum in the basic problem, then the following Condition \(\mathcal{A}_{0}\) holds: the set \(M_{0}\) is nonempty and

Theorem 17 (Second-Order Sufficient Conditions).

Let the following Condition \(\mathcal{B}_{0}\) be fulfilled for an admissible process \(\widehat{\mathcal{T}}\) in the basic problem:

(a) \(\hat{u}(t)\) is a strict bang-bang control with finitely many switching times \(\hat{t}_{k}\), k = 1,…,s (hence, the set \(M_{0}\) is nonempty and condition (35) holds for some \(\mu \in M_{0}\)),

(b) there exists \(\mu \in M_{0}\) such that \(D^{k}(H^{\mu}) > 0\), k = 1,…,s,

(c) \(\max \limits _{\mu \in M_{0}}\,\langle \mathcal{L}_{\zeta \zeta }(\mu,\hat{\zeta })\bar{\zeta },\bar{\zeta }\rangle > 0\;\;\mbox{ for all }\;\bar{\zeta } \in \mathcal{K}_{0}\setminus \{0\}.\)

Then \(\widehat{\mathcal{T}}\) is a strict strong minimum in the basic problem.

Theorem 17 is a generalization of sufficient optimality conditions for bang-bang controls obtained in Agrachev et al. [1]. Detailed proofs of Theorems 16 and 17 are given in [35] and in our book [36].

Remark 2.

Noble and Schättler [29] and Schättler and Ledzewicz [38] develop sufficient optimality conditions using methods of geometric optimal control. There is some evidence that their sufficient conditions are closely related to the SSC in our work [35, 36]. However, a formal proof of the equivalence of both types of sufficient conditions has not yet been worked out.

6.3 Numerical Methods for Solving the Induced Optimization Problem

The arc-parametrization method developed in [16, 26] provides an efficient method for solving the IOP. To explain this method, let us, for simplicity, consider the basic control problem with fixed initial time \(t_{0} = 0\), fixed initial condition \(x(0) = x_{0}\), and no inequality constraints \(\mathcal{F}(\zeta ) \leq 0\). For this problem, we slightly change the notation and replace the resulting optimization vector \(\zeta = (t_{f},t_{1},\ldots,t_{s})\) by the vector \(z = (t_{1},\ldots,t_{s},t_{s+1}),\,t_{s+1} = t_{f}.\) Instead of directly optimizing the switching times \(t_{k}\), k = 1, …, s, we determine the arc lengths (arc durations)

of bang-bang arcs. Hence, the optimization variable \(z = (t_{1},\ldots,t_{s},t_{s+1})^{{\ast}}\) is replaced by the optimization variable

The variables z and \(\xi\) are related by a linear transformation involving the regular \((s + 1) \times (s + 1)\)-matrix R:
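With \(t_{0} = 0\), the arc durations are \(\xi _{k} = t_{k} - t_{k-1}\), k = 1, …, s + 1. Assuming the convention \(\xi = Rz\) (the direction of the map is our choice for illustration), R is the lower bidiagonal matrix with ones on the diagonal and −1 on the subdiagonal. Its determinant is 1, so it is regular, and its inverse is the lower triangular matrix of ones, i.e., cumulative summation. A minimal NumPy sketch; the function name `arc_matrix` is hypothetical:

```python
import numpy as np


def arc_matrix(s):
    """(s+1) x (s+1) matrix R with xi = R @ z, where z = (t_1, ..., t_{s+1}),
    xi_k = t_k - t_{k-1}, and t_0 = 0 (assumed convention)."""
    n = s + 1
    return np.eye(n) - np.eye(n, k=-1)  # 1 on the diagonal, -1 below it


z = np.array([1.0, 2.5, 4.0])  # t_1, t_2, t_3 = t_f  (s = 2)
R = arc_matrix(2)
xi = R @ z                     # arc durations [1.0, 1.5, 1.5]
z_back = np.cumsum(xi)         # inverse map: switching times from durations
```

In particular \(t_{f} = \sum _{k}\xi _{k}\), so a fixed or free final time becomes a linear condition on \(\xi\).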

In the arc-parametrization method, the time interval [t k−1, t k ] is mapped to the fixed interval

by the linear transformation

where

Identifying

in the relevant intervals, we obtain the ODE system

By concatenating the solutions in the intervals I k we get the continuous solution \(x(t) = x(t;\xi )\) in the normalized interval [0, 1]. When expressed via the new optimization variable \(\xi\), the Induced Optimization Problem (IOP) in (50) is equivalent to the following optimization problem \((\widetilde{\text{IOP}})\) with \(t_{f} =\sum \limits _{ k=1}^{s+1}\xi _{k}\):
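The time transformation can be sketched as follows for an autonomous control-affine system. We assume, for illustration, that arc k (counted from 0 in the code) is mapped to \(I_{k} = [k/(s+1),(k+1)/(s+1)]\), so that \(dt/d\tau = (s+1)\xi _{k}\) and the state satisfies \(dx/d\tau = (s+1)\xi _{k}\,(f(x) + g(x)u^{k})\) on \(I_{k}\). The exact normalization in [16, 26] may differ; the callables `f`, `g` and the function name are hypothetical.

```python
import numpy as np
from scipy.integrate import solve_ivp


def arc_parametrized_state(f, g, x0, xi, vertices):
    """Terminal state x(1; xi) of the arc-parametrized system on [0, 1].

    Assumed normalization: with s+1 arcs, arc k (0-based) occupies
    I_k = [k/(s+1), (k+1)/(s+1)] and dx/dtau = (s+1) * xi_k * (f(x) + g(x) u^k).
    f(x) -> shape (n,), g(x) -> shape (n, m) are hypothetical callables.
    """
    n_arcs = len(xi)  # s + 1
    if len(vertices) != n_arcs:
        raise ValueError("need one vertex per arc")
    if any(d <= 0 for d in xi):
        raise ValueError("arc durations must be positive")
    x = np.asarray(x0, dtype=float)
    for k, (xi_k, uk) in enumerate(zip(xi, vertices)):
        uk = np.atleast_1d(np.asarray(uk, dtype=float))

        def rhs(tau, y, xi_k=xi_k, uk=uk):
            # chain rule: dt/dtau = (s+1) * xi_k on the normalized subinterval I_k
            return n_arcs * xi_k * (f(y) + g(y) @ uk)

        tau_span = (k / n_arcs, (k + 1) / n_arcs)
        sol = solve_ivp(rhs, tau_span, x, rtol=1e-10, atol=1e-12)
        x = sol.y[:, -1]  # continuity of x across the arc junction
    return x


xi = np.array([1.0, 1.0])  # arc durations, t_f = xi.sum() = 2
x1 = arc_parametrized_state(lambda x: np.array([x[1], 0.0]),
                            lambda x: np.array([[0.0], [1.0]]),
                            [0.0, 0.0], xi, [1.0, -1.0])
```

The switching times no longer appear as integration limits; they enter only through the factors \(\xi _{k}\) in the right-hand side, which is what allows an NLP solver to differentiate with respect to them smoothly.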

The Lagrangian function is given by

Using the linear transformation (55), it can easily be seen that the SSCs for the Induced Optimization Problems (IOP) and \((\widetilde{\text{IOP}})\) are equivalent; cf. similar arguments in [26]. To solve \((\widetilde{\text{IOP}})\), we use a suitable adaptation of the control package NUDOCCCS in Büskens [4, 5]. We can then take advantage of the fact that NUDOCCCS also provides the Jacobian of the terminal constraints and the Hessian of the Lagrangian, which are needed to check the second-order condition in Theorem 17.

In practice, we shall verify the positive definiteness condition (c) in Theorem 17 in a stronger form. We assume that the multiplier can be chosen as μ = (1, β) and that the following regularity condition holds:

Let N be the \(n_{\xi } \times (n_{\xi } - d(K))\) matrix, \(n_{\xi } = n + s + 1\) (where n = d(x)), with full column rank \(n_{\xi } - d(K)\), whose columns span the kernel of \(\widetilde{\mathcal{G}}_{\xi }(\hat{\xi })\). Then condition (c) in Theorem 17,

is equivalent to the condition that the projected Hessian is positive definite [6],
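Once the Hessian of the Lagrangian with \(\mu = (1,\beta )\) and the Jacobian \(\widetilde{\mathcal{G}}_{\xi }(\hat{\xi })\) are available (for example, from the NLP solver), the test reduces to linear algebra. The sketch below takes N as an orthonormal basis of the kernel computed with `scipy.linalg.null_space`, which is one admissible choice of N, and checks the smallest eigenvalue of \(N^{{\ast}}\widetilde{\mathcal{L}}_{\xi \xi }N\). The function name and tolerance are hypothetical.

```python
import numpy as np
from scipy.linalg import null_space


def projected_hessian_is_pd(hess_L, jac_G, tol=1e-10):
    """Check positive definiteness of N^T L_xixi N, where the columns of N span ker G_xi.

    hess_L : Hessian of the Lagrangian w.r.t. xi at xi_hat (multiplier mu = (1, beta))
    jac_G  : Jacobian of the terminal equality constraints w.r.t. xi (full row rank)
    Returns (flag, eigenvalues of the projected Hessian).
    """
    N = null_space(np.atleast_2d(jac_G))  # orthonormal basis of the kernel
    if N.shape[1] == 0:
        # trivial kernel: no nonzero critical direction, so the test holds vacuously
        return True, np.empty(0)
    P = N.T @ np.asarray(hess_L, dtype=float) @ N
    P = 0.5 * (P + P.T)  # symmetrize against round-off
    eigs = np.linalg.eigvalsh(P)
    return bool(eigs.min() > tol), eigs


# diag(1, -1) is indefinite, but on ker [0, 1] = span{e_1} it is positive definite.
ok, eigs = projected_hessian_is_pd(np.diag([1.0, -1.0]), np.array([[0.0, 1.0]]))
```

The example illustrates why the projection matters: positivity is required only on the kernel of the constraint Jacobian, not on the whole space.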

7 Numerical Example with Fixed Final Time: Optimal Control of the Chemotherapy of HIV

The treatment of patients infected with the human immunodeficiency virus (HIV) is still of great concern today (Kirschner et al. [18]). The problem of determining optimal chemotherapies has been treated in Kirschner et al. [18] in the framework of optimal control theory. The optimal control model is based on a simple dynamic model in Perelson et al. [37], which simulates the interaction of the immune system with HIV. Kirschner et al. [18] use a cost functional of \(L^{2}\)-type that is quadratic in the control. It has been argued in Schättler et al. [39] that in a biological context it is more appropriate to consider cost functionals of \(L^{1}\)-type, which are linear in the control variable. Therefore, in this section, we study an objective of \(L^{1}\)-type, in which the quadratic control term is replaced by a linear one. The state and control variables have the following meaning:

- T(t): concentration of uninfected CD4+ T cells,
- \(T^{\ast}(t)\): concentration of latently infected CD4+ T cells,
- \(T^{\ast\ast}(t)\): concentration of actively infected CD4+ T cells,
- V(t): concentration of free infectious virus particles,
- u(t): control, rate of chemotherapy.

The treatment starts at \(t_{0} = 0\) and terminates at the fixed final time \(t_{f} = 500\) (days). Thus, the control process is considered on the interval \([0,t_{f}]\). The dynamics of the populations are (omitting the time argument):

The control constraint is given by

where u(t) = 1 represents maximal chemotherapy, while u(t) = 0 means that no chemotherapy is administered. Note that Kirschner et al. [18] consider the control variable \(v = 1 - u\). It is convenient to write the ODE (65) as the control affine system (24),

with obvious definitions of the vector functions f(x) and g(x). As in [18], we consider two sets of initial conditions, which depend on the time at which the treatment starts after the infection. The following initial conditions are interpolated from [18] and have already been used in [15].

Initial conditions after 800 days:

Initial conditions after 1000 days:

The parameters and constants are taken from [18] and are listed in Table 1.

Kirschner et al. [18] consider the following objective of L 2-type which is quadratic in the control variable:

Recall that the state variable is defined as \(x:= (T,T^{{\ast}},T^{{\ast}{\ast}},V ) \in \mathbb{R}^{4}\). The optimal control that minimizes (70) subject to the constraints (65)–(69) is a continuous function, since the associated Hamiltonian \(H(x,\lambda,u)\) has a unique minimum with respect to u and the strict Legendre–Clebsch condition \(H_{uu} = 2B > 0\) holds. For the two sets of initial conditions (68) and (69), the numerical discretization and NLP approach using AMPL [12] and IPOPT [42] yields the optimal controls shown in Fig. 3, which were also obtained in Hannemann [15].

Hannemann [15] showed that second-order sufficient conditions (SSC) are satisfied for the controls displayed in Fig. 3, since the associated matrix Riccati equation has a bounded solution. Riccati equations are discussed in [19, 24] and in our book [36], Chap. 4.

Instead of the \(L^2\)-functional (70), we now consider the following functional of \(L^1\)-type:

The Hamiltonian or Pontryagin function for this control problem is given by

where \(\lambda = (\lambda _{T},\lambda _{T^{{\ast}}},\lambda _{T^{{\ast}{\ast}}},\lambda _{V }) \in \mathbb{R}^{4}\) denotes the adjoint variable. The adjoint equation and transversality condition are given by
\[\dot{\lambda} = -H_{x}(x,\lambda,u), \qquad \lambda(t_f) = 0,\]

since the terminal state \(x(t_f)\) is free and the objective (71) does not contain a Mayer term. We do not write out the components of the adjoint equation \(\dot{\lambda }= -H_{x}(x,\lambda,u)\) explicitly, since they are not needed in the sequel. The adjoint variables can be computed from the Lagrange multipliers of the associated Induced Optimization Problem (IOP). The switching function is given by
\[\sigma(t) = H_{u}(x(t),\lambda(t),u(t)) = B + \lambda(t)\,g(x(t)).\]

The minimization of the Hamiltonian with respect to u yields the switching condition
\[u(t) = \begin{cases} 1 & \text{if } \sigma(t) < 0,\\ 0 & \text{if } \sigma(t) > 0.\end{cases}\]

The control has a singular arc on an interval \([t_{1},t_{2}] \subset [0,t_f]\) if \(\sigma (t) = 0\) holds on \([t_1, t_2]\). However, we do not discuss singular controls further, because for the data in Table 1 we never found singular arcs. Indeed, the optimal control for the \(L^1\)-functional (71) is the following bang-bang control with only one switch at \(t_1\):
\[u(t) = \begin{cases} 1 & \text{for } 0 \le t < t_1,\\ 0 & \text{for } t_1 \le t \le t_f.\end{cases}\]

The terminal arc \(u(t) = 0\) results from the terminal value \(\sigma (t_{f}) = B > 0\) of the switching function and the minimum condition (74). Hence, the IOP has only the scalar optimization variable \(t_1\), and the objective (71) reduces to a function \(J_1(t_1) = J_1(x,u)\). The arc-parametrization method [26, 36] and the code NUDOCCCS [4] yield the following numerical results, where state variables are listed with 8 digits and adjoint variables with 6 digits.

The state and control variables and the switching function are displayed in Fig. 4. To verify that the second-order sufficient conditions (SSC) are satisfied for the computed extremal solution, we have to check the conditions of Theorem 17. The strict bang-bang property is satisfied, since we infer from Fig. 4 (bottom, right) that the switching function satisfies
\[\sigma(t) < 0 \ \text{ for } 0 \le t < t_1, \qquad \dot{\sigma}(t_1) > 0, \qquad \sigma(t) > 0 \ \text{ for } t_1 < t \le t_f.\]

To verify the positive definiteness in condition (63), we note that the Hessian is simply the second derivative of the objective \(J_1(t_1)\) evaluated at the optimal switching time \(t_1 = 161.695711\), for which we find

Hence, the extremal solution (76) displayed in Fig. 4 provides a strict strong minimum.
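The reduction of the control problem to a scalar Induced Optimization Problem in \(t_1\) can be illustrated on a toy problem. The sketch below is not the HIV model (its dynamics and the data of Table 1 are not reproduced here) and is not the arc-parametrization method or NUDOCCCS. It assumes the hypothetical scalar system \(\dot{x} = -x + u\), \(x(0) = 0\), \(0 \le u \le 1\), with \(t_f = 2\), the objective \(\int_0^{t_f} (Bu - x)\,dt\) with \(B = 0.5\), and the same control structure as above: \(u = 1\) on \([0, t_1)\) and \(u = 0\) on \([t_1, t_f]\). The reduced objective \(J(t_1)\) is evaluated by integrating both arcs with the classical Runge–Kutta scheme, minimized by golden-section search, and its second derivative, which plays the role of the \(1 \times 1\) Hessian in condition (63), is estimated by a central difference.

```python
import math

# Hypothetical toy problem, NOT the HIV model of this section:
#   x' = -x + u,  x(0) = 0,  0 <= u <= 1,
#   minimize J = integral_0^TF (B*u - x) dt,
# with u = 1 on [0, t1) and u = 0 on [t1, TF] (one switch, as above).
B = 0.5
TF = 2.0
N_STEPS = 1000  # fixed RK4 step count per arc keeps J(t1) smooth in t1


def rhs(z, u):
    """Right-hand side for the augmented state z = (x, accumulated cost)."""
    x = z[0]
    return (-x + u, B * u - x)


def integrate_arc(z, u, duration):
    """Classical RK4 over one arc with constant control u."""
    if duration <= 0.0:
        return z
    h = duration / N_STEPS
    for _ in range(N_STEPS):
        k1 = rhs(z, u)
        k2 = rhs((z[0] + 0.5 * h * k1[0], z[1] + 0.5 * h * k1[1]), u)
        k3 = rhs((z[0] + 0.5 * h * k2[0], z[1] + 0.5 * h * k2[1]), u)
        k4 = rhs((z[0] + h * k3[0], z[1] + h * k3[1]), u)
        z = (z[0] + h * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0]) / 6.0,
             z[1] + h * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1]) / 6.0)
    return z


def reduced_objective(t1):
    """J(t1): objective of the induced optimization problem."""
    z = integrate_arc((0.0, 0.0), 1.0, t1)   # first arc, u = 1
    z = integrate_arc(z, 0.0, TF - t1)       # terminal arc, u = 0
    return z[1]


def golden_section_min(f, a, b, tol=1e-8):
    """Minimize a unimodal function of one variable on [a, b]."""
    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = b - invphi * (b - a), a + invphi * (b - a)
    fc, fd = f(c), f(d)
    while b - a > tol:
        if fc < fd:              # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - invphi * (b - a)
            fc = f(c)
        else:                    # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + invphi * (b - a)
            fd = f(d)
    return 0.5 * (a + b)


t1_opt = golden_section_min(reduced_objective, 0.0, TF)
h = 1e-3
# Central-difference estimate of J''(t1), the 1 x 1 Hessian of the IOP.
d2J = (reduced_objective(t1_opt + h) - 2.0 * reduced_objective(t1_opt)
       + reduced_objective(t1_opt - h)) / h**2
print(f"t1 = {t1_opt:.6f}, J''(t1) = {d2J:.6f}")
```

For this toy problem the reduced objective is available in closed form, \(J(t_1) = (B-1)t_1 + e^{-(t_f - t_1)} - e^{-t_f}\), so the minimizer is \(t_1 = t_f + \ln(1 - B) = 2 - \ln 2\) and \(J''(t_1) = 1 - B = 0.5 > 0\); the numerical values should agree with these to several digits. Note also that \(J'(t_1) = B - 1 + e^{t_1 - t_f}\) coincides with the toy problem's switching function evaluated at \(t_1\).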

Fig. 4. Optimal solution for initial conditions (68): treatment starts after 800 days. Top row: (left) uninfected CD4+ T cells, (right) latently infected CD4+ \(T^{\ast}\) cells. Middle row: (left) actively infected CD4+ \(T^{\ast\ast}\) cells, (right) infectious virus particles V. Bottom row: (left) bang-bang control u, (right) bang-bang control u and (scaled) switching function \(\sigma\) in (73) satisfying the switching condition (74)

Now we try to improve the optimal terminal value \(T(t_f) = 983.493\) of the uninfected CD4+ T cells. For that purpose we prescribe a higher terminal value and minimize the functional \(J_1(x,u)\) subject to the boundary condition
\[T(t_f) = 995.\]

The arc-parametrization method [26, 36] and the control package NUDOCCCS [4] furnish the results

Figure 5 displays the state and control variables and the switching function. Figure 5 (bottom, right) shows that the strict bang-bang property (77) is satisfied. Condition (c) in Theorem 17 holds because the critical cone \(\mathcal{K}_{0} =\{ 0\}\) consists of the zero element only. Therefore, the extremal displayed in Fig. 5 provides a strict strong minimum.
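The strict bang-bang property is read off here from plots of the switching function. A grid-based numerical check of the same property can be sketched as follows; it is supporting evidence on a sampled grid, not a proof. The switching function used is hypothetical and not computed from the HIV model: \(\sigma(t) = B - 1 + e^{t - t_f}\) with \(B = 0.5\), \(t_f = 2\), which is what the adjoint equation with \(\lambda(t_f) = 0\) gives for the toy system \(\dot{x} = -x + u\) with running cost \(Bu - x\). The code applies the switching condition for \(0 \le u \le 1\), locates sign changes of \(\sigma\) by bisection, and estimates \(\dot{\sigma}\) at each switch by a central difference.

```python
import math

# Hypothetical switching function, NOT computed from the HIV model:
# for x' = -x + u, running cost B*u - x and lambda(TF) = 0 one obtains
# lambda(t) = exp(t - TF) - 1 and sigma(t) = B - 1 + exp(t - TF).
B, TF = 0.5, 2.0


def sigma(t):
    return B - 1.0 + math.exp(t - TF)


def control_from_sigma(s):
    """Switching condition for 0 <= u <= 1 (minimization of H)."""
    if s < 0.0:
        return 1.0
    if s > 0.0:
        return 0.0
    return None  # sigma = 0: u is not determined by the minimum condition


def switches_with_slopes(sig, t0, tf, n=2001, h=1e-6):
    """Find sign changes of sig on a uniform grid, refine each by bisection,
    and estimate the slope sig'(t_k) by a central difference."""
    ts = [t0 + (tf - t0) * k / (n - 1) for k in range(n)]
    vals = [sig(t) for t in ts]
    found = []
    for a, b, fa, fb in zip(ts, ts[1:], vals, vals[1:]):
        if fa * fb < 0.0:
            lo, hi = a, b
            for _ in range(60):
                mid = 0.5 * (lo + hi)
                if sig(lo) * sig(mid) <= 0.0:
                    hi = mid
                else:
                    lo = mid
            tk = 0.5 * (lo + hi)
            slope = (sig(tk + h) - sig(tk - h)) / (2.0 * h)
            found.append((tk, slope))
    return found


switches = switches_with_slopes(sigma, 0.0, TF)
# Grid-based evidence (not a proof) of the strict bang-bang property:
# sigma changes sign only at isolated points, with a nonzero slope there.
strict = all(abs(slope) > 1e-8 for _, slope in switches)
print(switches, strict)
```

For this toy function there is a single switch at \(t_1 = 2 - \ln 2\) with \(\dot{\sigma}(t_1) = 0.5 > 0\), the control is 1 before it and 0 after it, and \(\sigma(t_f) = B > 0\), mirroring the structure of the HIV solution.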

Fig. 5. Optimal solution for initial conditions (68): treatment starts after 800 days and terminal condition T(t f ) = 995. Top row: (left) uninfected CD4+ T cells, (right) latently infected CD4+ \(T^{\ast}\) cells. Middle row: (left) actively infected CD4+ \(T^{\ast\ast}\) cells, (right) infectious virus particles V. Bottom row: (left) bang-bang control u, (right) bang-bang control u and scaled switching function \(\sigma\) in (73) satisfying the switching condition (74)

Finally, we study the optimal solution for the initial values (69), when the treatment starts after 1000 days and, again, the boundary condition T(t f ) = 995 is prescribed. The arc-parametrization method in [26, 36] and the control package NUDOCCCS yield the results

Figure 6 depicts the state and control variables and the switching function. The SSC in Theorem 17 are satisfied, since the strict bang-bang property (77) holds and condition (63) holds in view of \(\mathcal{K}_{0} =\{ 0\}\). Therefore, the extremal (80) provides a strict strong minimum.

Fig. 6. Optimal solution for initial conditions (69): treatment starts after 1000 days and terminal condition T(t f ) = 995. Top row: (left) uninfected CD4+ T cells, (right) latently infected CD4+ \(T^{\ast}\) cells. Middle row: (left) actively infected CD4+ \(T^{\ast\ast}\) cells, (right) infectious virus particles V. Bottom row: (left) bang-bang control u, (right) bang-bang control u and scaled switching function \(\sigma\) in (73) satisfying the switching condition (74)

8 Numerical Example with Free Final Time: Time-Optimal Control of Two-Link Robots

In this section, we review the results in our book [36] on the time-optimal control of two-link robots, a problem that has been addressed in various articles; cf., e.g., [9, 13, 14, 30]. In these papers, optimal control policies are determined solely on the basis of first-order necessary conditions, since sufficient conditions were not available. We show that SSC hold for both types of robots considered in [9, 14, 30].

First, we study the robot model considered in Chernousko et al. [9]. Göllmann [14] has shown that the optimal control candidate presented in [9] is not optimal, since the sign conditions of the switching functions do not comply with the Minimum Principle. Figure 7 displays the two-link robot schematically. The state variables are the angles \(q_1\) and \(q_2\). The parameters \(I_1\) and \(I_2\) are the moments of inertia of the upper arm \(\overline{OQ}\) and the lower arm \(\overline{QP}\) with respect to the points O and Q, respectively. Further, let \(m_2\) be the mass of the lower arm, \(L_{1} = \vert \overline{OQ}\vert \) the length of the upper arm, and \(L = \vert \overline{QC}\vert \) the distance between the second link Q and the center of gravity C of the lower arm. With the abbreviations

the dynamics of the two-link robot can be described by the ODE system

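where \(\omega_1\) and \(\omega_2\) are the angular velocities. The torques \(u_1\) and \(u_2\) in the two links represent the two control variables. Therefore, the state variable and control variable are given by
\[x = (q_1, q_2, \omega_1, \omega_2) \in \mathbb{R}^{4}, \qquad u = (u_1, u_2) \in \mathbb{R}^{2}.\]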

The control problem consists in steering the robot from a given initial position to a terminal position in minimal final time t f ,

The control components are bounded by

The Pontryagin function (Hamiltonian) is

The adjoint equations are rather complicated and are not given here explicitly. The switching functions are

For the parameter values

Göllmann [14] has found the following control structure with four bang-bang arcs,

This control structure differs substantially from the one in Chernousko et al. [9], which violates the switching conditions. Clearly, the bang-bang control (87) satisfies the assumption that only one control component switches at a time. Since the initial point \((q_1(0), q_2(0), \omega_1(0), \omega_2(0))\) is specified, the optimization variable in the IOP (61) is

Using the code NUDOCCCS we compute the following arc durations and switching times

Numerical values for the adjoint functions are also provided by the code NUDOCCCS, e.g., the initial values

Figure 8 shows that the switching functions \(\sigma _{1}\) and \(\sigma _{2}\) comply with the minimum condition and that the strict bang-bang property (35) and the inequalities \(D^k(H) > 0\), k = 1, 2, 3, are satisfied:

For the terminal conditions (83) we obtain the Jacobian

This square matrix has full rank in view of

which means that the critical cone consists of the zero element only, so that the positive definiteness condition (63) holds trivially. Thus we have verified first-order sufficient conditions, showing that the extremal solution given by (87)–(89) provides a strict strong minimum.
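The argument above only needs the rank of the Jacobian. A minimal numerical sketch with NumPy follows; the matrices are hypothetical placeholders, since the numerical Jacobian is not reproduced here. It relies on the fact that, for equality terminal constraints, the critical cone of the IOP lies in the null space of the constraint Jacobian, so full column rank (in particular a square nonsingular Jacobian, as in this example) forces the critical cone to be \(\{0\}\).

```python
import numpy as np


def critical_cone_is_trivial(jacobian, tol=None):
    """Return True if the constraint Jacobian has full column rank.

    For equality terminal constraints the critical cone of the induced
    optimization problem lies in the null space of this Jacobian, so full
    column rank forces the cone to be {0} and condition (63) holds vacuously.
    """
    G = np.atleast_2d(np.asarray(jacobian, dtype=float))
    return bool(np.linalg.matrix_rank(G, tol=tol) == G.shape[1])


# Hypothetical 4 x 4 Jacobian (four terminal conditions, four IOP variables).
G_square = np.array([[1.0, 2.0, 0.0, 0.0],
                     [0.0, 1.0, 3.0, 0.0],
                     [0.0, 0.0, 1.0, 4.0],
                     [5.0, 0.0, 0.0, 1.0]])
print(np.linalg.det(G_square), critical_cone_is_trivial(G_square))
```

A wide Jacobian, such as a \((4 \times 5)\)-matrix, always has a nontrivial null space, so this shortcut fails and the Hessian of the Lagrangian has to be tested on that null space.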

In the model treated above, some parameters, such as the mass of the upper arm and the mass of a load at the end of the lower arm, appear only implicitly in the system equations. The mass \(m_1\) of the upper arm is included in the moment of inertia \(I_1\), and the mass M of a load at the point P can be added to the mass \(m_2\), in which case the point C and therefore the length L have to be adjusted. The length \(L_2\) of the lower arm is incorporated in the parameter L.

The second robot model that we are going to discuss is taken from Geering et al. [13] and Oberle [30]. Here, every physical parameter enters the system equations explicitly. The dynamic system is as follows:

where we have used the abbreviations

Here, I 3 denotes the moment of inertia of the load with respect to the point P and ω 2 is now the angular velocity of the angle q 1 + q 2. For simplicity, we set I 3 = 0. Again, the torques u 1 and u 2 in the two links are used as control variables by which the robot is steered from a given initial position to a non-fixed end position in minimal final time t f ,

where (x 1(t), x 2(t)) are the Cartesian coordinates of the point P,

The initial point (x 1(0), x 2(0)) = (2, 0) is fixed. Both control components are bounded,

The Hamilton–Pontryagin function is given by

The switching functions are computed as

For the parameter values

we will show that the optimal control has the following structure with five bang-bang arcs with \(0 = t_{0} < t_{1} < t_{2} < t_{3} < t_{4} < t_{5} = t_{f}\) (Fig. 9):

Since the initial point \((q_1(0), q_2(0), \omega_1(0), \omega_2(0))\) is specified, the optimization variable in the optimization problem (50), respectively (61), is

The code NUDOCCCS yields the arc durations and switching times

as well as the initial values of the adjoint variables,

The strict bang-bang property (35) and the inequalities \(D^k(H) > 0\), k = 1, 2, 3, hold in view of

For the terminal conditions in (92), the Jacobian in the optimization problem is computed as the (4 × 5)-matrix

which has full rank. The Hessian of the Lagrangian is given by

This yields the projected Hessian (64) as the positive number

Hence, all conditions in Theorem 17 are satisfied and thus the extremal (97)–(99) yields a strict strong minimum.
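When the Jacobian has more columns than rows, as the \((4 \times 5)\)-matrix above, the critical cone is no longer trivial and the Hessian of the Lagrangian has to be tested on the null space of the Jacobian. The sketch below assumes that the projected Hessian (64) is formed as \(Z^{T} H Z\), where the columns of Z are an orthonormal basis of that null space obtained from a singular value decomposition; the matrices are hypothetical, not the numerical values computed above. With a one-dimensional null space the result is a single number whose sign does not depend on how the basis vector is normalized, although its magnitude does.

```python
import numpy as np


def null_space_basis(G, rtol=1e-12):
    """Orthonormal basis (as columns) of the null space of G, via the SVD."""
    G = np.atleast_2d(np.asarray(G, dtype=float))
    _, s, vt = np.linalg.svd(G)  # full_matrices=True, so vt is n x n
    rank = int(np.sum(s > rtol * s.max())) if s.size else 0
    return vt[rank:].T


def projected_hessian(G, H):
    """Reduced Hessian Z^T H Z, with Z spanning the null space of G."""
    Z = null_space_basis(G)
    return Z.T @ np.asarray(H, dtype=float) @ Z


def is_positive_definite(P):
    """Positive definiteness test; vacuously True for an empty (0 x 0) matrix."""
    if P.size == 0:
        return True
    return bool(np.linalg.eigvalsh(0.5 * (P + P.T)).min() > 0.0)


# Hypothetical (4 x 5) Jacobian with full row rank and a one-dimensional
# null space spanned by (1, -1, 1, -1, 1).
G = np.array([[1.0, 1.0, 0.0, 0.0, 0.0],
              [0.0, 1.0, 1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 1.0, 0.0],
              [0.0, 0.0, 0.0, 1.0, 1.0]])
H = np.diag([1.0, 2.0, 3.0, 4.0, 5.0])  # hypothetical Hessian of the Lagrangian
P = projected_hessian(G, H)
print(P, is_positive_definite(P))
```

Here the projected Hessian is the \(1 \times 1\) matrix with entry 3. The Hessian itself need not be positive definite: only its restriction to the null space of the Jacobian matters. For a square nonsingular Jacobian the null space is trivial and the test holds vacuously.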

It is interesting to note that there exists a second local minimum with the same terminal time \(t_f = 3.88941\). Although the control also has five bang-bang arcs, the control structure is substantially different from that in (97),

where 0 < t 1 < t 2 < t 3 < t 4 < t 5 = t f . NUDOCCCS determines the switching times

for which the strict bang-bang property (35) holds and \(D^k(H) > 0\) for k = 1, 2, 3, 4. Moreover, computations show that \(\operatorname{rank}(\widetilde{\mathcal{G}}_{\xi }(\hat{\xi })) = 4\) and that the projected Hessian of the Lagrangian (64) is the positive number

It is remarkable that this value is identical to the value of the projected Hessian for the first local minimum. Therefore, all conditions in Theorem 17 also hold for the second solution, and thus the extremal (100), (101) is a strict strong minimum. The phenomenon of multiple local solutions, all with the same minimal time \(t_f\), has also been observed by Betts [3], Example 6.8 (Reorientation of a rigid body).

9 Optimal Control Problems with Mixed Control-State Constraints and Control Appearing Linearly

To the best of our knowledge, second-order sufficient optimality conditions (SSC) for optimal control problems with mixed control-state constraints have only been studied for the class of regular controls, where the strict Legendre–Clebsch condition holds. Such control problems have not yet been considered when the control variable appears linearly in the system dynamics and in the mixed control-state constraint. For a two-sided control-state constraint we will show that the constraining function itself can be taken as a new control variable, whereby the original control problem is transformed into a classical control problem in which the new control appears linearly and is subject to simple bounds. Hence, optimal controls for the transformed control problem are concatenations of bang-bang and singular arcs. The material in this section is based on our paper [23].
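The idea of the transformation can be sketched in the simplest setting. Purely for illustration, assume a scalar control, control-affine dynamics, and a two-sided mixed constraint that is affine in u with a coefficient \(c_1(x)\) that never vanishes; the functions \(c_0\), \(c_1\) and the bounds a, b are hypothetical notation, and the precise assumptions in [23] may differ:
\[
\dot{x} = f(x) + g(x)\,u, \qquad a \le c_0(x) + c_1(x)\,u \le b, \qquad c_1(x) \neq 0 .
\]
Setting \(v := c_0(x) + c_1(x)\,u\) gives \(u = (v - c_0(x))/c_1(x)\), and hence
\[
\dot{x} = \Big(f(x) - \frac{c_0(x)}{c_1(x)}\,g(x)\Big) + \frac{g(x)}{c_1(x)}\,v, \qquad a \le v \le b .
\]
A cost that is linear in u remains linear in v, so the transformed problem has a single control v that appears linearly and is subject only to the simple bounds \(a \le v \le b\).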

9.1 Statement of the Problem and Transformed Control Problem