Abstract

Among the different techniques for texture modelling and recognition, local binary patterns (LBP) and their variants have received much interest in recent years thanks to their low computational cost and high discrimination power. We propose a new texture description approach, whose principle is to extend the LBP representation from the local grey level to the regional distribution level. The region is represented by a predefined structuring element, while the distribution is approximated using the first two statistical moments. Experimental results on four large texture databases (Outex, KTH-TIPS 2b, CUReT and UIUC) show that our approach significantly improves the performance of texture representation and classification with respect to comparable methods.



1 Introduction

Texture analysis is an active research topic in computer vision and image processing. It plays a significant role in many applications, such as medical image analysis, remote sensing, document vectorisation and content-based image retrieval. Texture classification has therefore received considerable attention over the last two decades, and many novel methods have been proposed [1–15].

The representation of texture features is a key factor in the performance of texture classification systems. Numerous powerful descriptors have been proposed recently: modified SIFT (scale invariant feature transform) and intensity-domain SPIN images [4], MR8 [7], rotation invariant basic image features (BIF) [11], and (sorted) random projections over small patches [15]. Most earlier works focused on filter banks and the statistical distributions of their responses. Popular descriptors in these approaches include Gabor filters [16], MR8 [7], Leung and Malik's filters [17], and wavelets [18]. Filter bank approaches are attractive because they are expressive and flexible, but they can be computationally expensive and application-dependent. Varma and Zisserman [7] have shown that local intensities or differences in a small patch can perform better than filter banks with large spatial support.

Local Binary Patterns (LBP) emerged more than a decade ago, when Ojala et al. [3] showed that simple relations in small pixel neighbourhoods can represent texture with high discrimination. They used a binary code to represent the signs of the differences between the value of a pixel and those of its neighbours. Since then, thanks to their computational efficiency and good texture characterisation performance, LBPs have been applied in many computer vision applications, and a large number of LBP variants [8, 9, 14, 19] have been introduced. These variants remedy several limitations of the basic LBP, including its small spatial support, the loss of local textural information, and its sensitivity to rotation and noise. Instead of using the central pixel as threshold, several authors used the median [20] or the mean [21, 22] value of the neighbouring pixels. Similarly, Liao et al. [23] computed LBP on the mean values of local blocks. Local variance was used as a complementary contrast measure [3, 12]. Gabor filters [24] are widely used to capture more global information. Guo et al. [9] included both the magnitudes of the local differences and the pixel intensity itself to improve the discrimination capability. Dominant LBPs (DLBP) [8] were proposed to exploit the most frequent patterns instead of the uniform patterns. Zhao et al. [25] combined LBP with a covariance matrix to improve performance.

In this paper, we propose a new, efficient scheme to exploit variance information. Typical methods either consider the joint distribution of LBP codes and a local contrast measure (LBP/VAR) [3] or integrate variance information directly into the LBP model [12]. Unlike them, we capture the local relationships within the images of local mean and local variance. We show that this approach exploits the complementary contrast information more efficiently. Our descriptor enhances the expressiveness of the classic LBP texture representation while providing high discrimination, as we show in a comparative evaluation on several classic texture data sets. It has limited computational complexity. It needs neither multiscale processing nor complementary magnitude information (CLBP_M) to obtain state-of-the-art results compared with CLBP [9], CLBC [14], CRLBP [22] and NI/RD/CI [26].

The remainder of the paper is organised as follows. Section 2 presents in more detail the works most directly related to our method. Section 3 elaborates the proposed approach. Experimental results are presented in Sect. 4, and conclusions are drawn in Sect. 5.

2 Related Work

2.1 Rotation Invariant Uniform LBP

Ojala et al. [27] assumed that texture locally has two complementary aspects: a spatial structure and its contrast. LBP was therefore proposed as a binary version of the texture unit to represent the spatial structure. The original version works on a block of 3\(\,\times \,\)3 pixels. The neighbours in the block are thresholded by the value of the centre pixel, and the resulting 8-bit code labels the centre pixel. Hence there are \(2^8=256\) possible labels describing the spatial relation of the centre pixel with its neighbours. These labels define local textural patterns.

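The generalised LBP descriptor, proposed by Ojala et al. [3], encodes the spatial relations in images. Let \(f\) be a discrete image, modelled as a mapping from \(\mathbb {Z}^2\) to \(\mathbb {R}\). The original LBP encoding of \(f\) is defined as the following mapping from \(\mathbb {Z}^2\) to \(\{0,1\}^P\):

$$\begin{aligned} \mathrm {LBP}_{P,R}(f)(\mathbf {z}) = \big ( s(f(\mathbf {y}_0) - f(\mathbf {z})), \ldots , s(f(\mathbf {y}_{P-1}) - f(\mathbf {z})) \big ), \quad s(x) = \begin{cases} 1 & \text {if } x \ge 0\\ 0 & \text {otherwise} \end{cases} \end{aligned} \tag{1}$$

Here \(\mathbf {y}_p\) (\(0 \le p < P\)) are the \(P\) neighbours of \(\mathbf {z}\). Their values are measured (or interpolated) at \(P\) evenly spaced points on the circle of radius \(R\) centred on \(\mathbf {z}\).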
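To make Eq. (1) concrete, here is a minimal Python sketch that samples the \(P\) neighbours on the circle with bilinear interpolation and returns the bit vector. It is an illustration under our own assumptions, not the authors' implementation. The image is assumed to be a list of rows. Neighbour 0 is placed to the right of \(\mathbf {z}\), and the sampling proceeds counter-clockwise. Border handling is left to the caller.

```python
import math


def s(x):
    """Sign function of Eq. (1): 1 if x >= 0, else 0."""
    return 1 if x >= 0 else 0


def sample_circle(img, x, y, P, R):
    """Values f(y_p), 0 <= p < P, on the circle of radius R centred on (x, y).

    img is a list of rows (img[row][col]); (x, y) must lie at least R pixels
    (rounded up) from the border. Off-grid points are bilinearly interpolated.
    """
    h, w = len(img), len(img[0])
    values = []
    for p in range(P):
        angle = 2.0 * math.pi * p / P
        # Round away floating-point noise so on-grid samples land exactly on pixels.
        cx = round(x + R * math.cos(angle), 9)
        cy = round(y - R * math.sin(angle), 9)  # rows grow downwards
        x0, y0 = math.floor(cx), math.floor(cy)
        dx, dy = cx - x0, cy - y0
        x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
        # Bilinear interpolation written as two linear blends (exact on flat areas).
        top = img[y0][x0] + (img[y0][x1] - img[y0][x0]) * dx
        bottom = img[y1][x0] + (img[y1][x1] - img[y1][x0]) * dx
        values.append(top + (bottom - top) * dy)
    return values


def lbp_bits(img, x, y, P=8, R=1.0):
    """LBP_{P,R}(f)(z) of Eq. (1) as a list of P bits, for z = (x, y)."""
    centre = img[y][x]
    return [s(v - centre) for v in sample_circle(img, x, y, P, R)]


ramp = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(lbp_bits(ramp, 1, 1))  # [1, 0, 0, 0, 0, 1, 1, 1]
```

On this linear ramp, bilinear interpolation is exact, so each sampled value minus the centre equals \(\cos a - 3\sin a\). This makes the printed bits easy to check by hand.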

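The uniformity measure of an LBP is defined as follows:

$$\begin{aligned} U(\mathrm {LBP}_{P,R}) = \sum _{p=1}^{P} \left| \mathrm {LBP}_{P,R}^{p} - \mathrm {LBP}_{P,R}^{p-1} \right| \end{aligned} \tag{2}$$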

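where \(\mathrm {LBP}_{P,R}^p\) is the \(p\)-th bit of \(\mathrm {LBP}_{P,R}\), and \(\mathrm {LBP}_{P,R}^P = \mathrm {LBP}_{P,R}^0\). An LBP is called uniform if \(U(\mathrm {LBP}_{P,R}) \le 2\). Ojala et al. observed that, on natural texture images, most patterns are uniform. Finally, the rotation invariant uniform LBP is defined as follows:

$$\begin{aligned} \mathrm {LBP}_{P,R}^{riu2} = \begin{cases} \sum _{p=0}^{P-1} \mathrm {LBP}_{P,R}^{p} & \text {if } U(\mathrm {LBP}_{P,R}) \le 2\\ P+1 & \text {otherwise} \end{cases} \end{aligned} \tag{3}$$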

LBP\(^{riu2}\) proved [3] to be a very efficient local texture descriptor and has since been used intensively in texture classification. Uniform patterns are considered more reliable and statistically more significant. Furthermore, merging all non-uniform patterns into a single label considerably reduces the length of the descriptor, as does merging all rotations of a uniform pattern. This leaves only \(P+2\) distinct values of \(\mathrm {LBP}_{P,R}^{riu2}\), compared to \(2^P\) distinct values of \(\mathrm {LBP}_{P,R}\).
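The following Python sketch implements Eqs. (2) and (3) on a bit list. It enumerates all \(2^P\) patterns for \(P = 8\) to confirm the \(P+2\) label count stated above. The function names are illustrative only.

```python
def uniformity(bits):
    """U(LBP_{P,R}) of Eq. (2): number of 0/1 transitions around the circle."""
    P = len(bits)
    # bits[p % P] realises the convention LBP^P = LBP^0
    return sum(abs(bits[p % P] - bits[p - 1]) for p in range(1, P + 1))


def lbp_riu2(bits):
    """LBP^{riu2}_{P,R} of Eq. (3): number of ones if uniform, P + 1 otherwise."""
    P = len(bits)
    return sum(bits) if uniformity(bits) <= 2 else P + 1


# Enumerate all 2^P patterns for P = 8 to check the label count.
P = 8
all_patterns = [[(code >> p) & 1 for p in range(P)] for code in range(2 ** P)]
labels = {lbp_riu2(b) for b in all_patterns}
print(len(labels))  # 10, i.e. P + 2 labels instead of 256 raw codes
```

Labels \(0, \ldots , P\) count the ones of a uniform pattern, which makes them rotation invariant. The single label \(P+1\) collects every non-uniform pattern.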

2.2 Complementary Information

Local Contrast. Aside from the classic LBP, several authors have exploited complementary information to improve the performance of texture classification. In the first work on LBP [27], a local contrast measure, computed on each \(3 \times 3\) block, was used in combination with the LBP codes. It was later replaced in [3] by the local variance (VAR), measured on the same circular spatial support as the LBP:

$$\begin{aligned} \mathrm {VAR}_{P,R} = \frac{1}{P}\sum _{p=0}^{P-1} (g_p - \mu )^2 \end{aligned} \tag{4}$$

where \(g_p = f(\mathbf {y}_p)\) and \(\mu =\frac{1}{P}\displaystyle \sum _{p=0}^{P-1}g_p\).

Because the variance is continuous-valued, it must be quantized to build a joint distribution of LBP and local variance (LBP/VAR). The efficiency of the quantization depends on the number of bins and on the cut values between bins, so a training stage is applied to determine these parameters. In [12], Guo et al. proposed an alternative that avoids this stage by incorporating the variance measure (VAR) into the LBP model. There, VAR is used as an adaptive weight that adjusts the contribution of each LBP code to the histogram.
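The two strategies above can be contrasted in a short Python sketch. The first part computes VAR (Eq. (4)), learns cut values on training data and quantizes VAR for LBP/VAR. The second part builds an LBPV-style histogram in which each pixel adds its VAR, rather than 1, to the bin of its LBP code. Choosing cut values that make the bins equally populated is an assumption here (one common choice). All function names are hypothetical.

```python
import bisect


def local_var(neigh):
    """VAR_{P,R} of Eq. (4): variance of the P sampled values g_p."""
    mu = sum(neigh) / len(neigh)
    return sum((g - mu) ** 2 for g in neigh) / len(neigh)


def learn_cuts(train_vars, n_bins):
    """Training stage (assumed strategy): cut values giving equally populated bins."""
    v = sorted(train_vars)
    return [v[len(v) * k // n_bins] for k in range(1, n_bins)]


def quantize(var, cuts):
    """Bin index in [0, len(cuts)] of a continuous VAR value, for LBP/VAR."""
    return bisect.bisect_right(cuts, var)


def lbpv_histogram(codes, variances, n_codes):
    """LBPV-style histogram: each pixel adds its VAR to its code's bin, not 1."""
    hist = [0.0] * n_codes
    for code, var in zip(codes, variances):
        hist[code] += var
    return hist


cuts = learn_cuts(list(range(100)), 4)
print(cuts, quantize(10, cuts))  # [25, 50, 75] 0
```

The LBPV variant needs no cut values at all, which is exactly the training stage it avoids.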

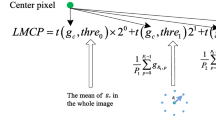

Magnitude Information. Guo et al. [9] presented a state-of-the-art variant that regards the local differences as two complementary components. These are the signs, \(s_p = s(f(\mathbf {y}_p) - f(\mathbf {z}))\), and the magnitudes, \(m_p=|f(\mathbf {y}_p) - f(\mathbf {z})|\). They proposed two operators, called CLBP-Sign (\(CLBP\_S\)) and CLBP-Magnitude (\(CLBP\_M\)), to code these two components. The first operator is identical to the LBP. The second one, which captures the local variation of the magnitudes, is defined as follows:

$$\begin{aligned} \mathrm {CLBP\_M}_{P,R}(\mathbf {z}) = \sum _{p=0}^{P-1} t(m_p, \tilde{m})\, 2^p, \quad t(x,c) = \begin{cases} 1 & \text {if } x \ge c\\ 0 & \text {otherwise} \end{cases} \end{aligned} \tag{5}$$

where \(\tilde{m}\) is the mean value of \(m_p\) over the whole image. In addition, Guo et al. observed that the local grey value itself carries important information. They therefore defined the operator CLBP-Center (CLBP\(\_C\)) as follows:

$$\begin{aligned} \mathrm {CLBP\_C}_{P,R}(\mathbf {z}) = t(f(\mathbf {z}), \tilde{f}) \end{aligned} \tag{6}$$

where \(\tilde{f}\) is set as the mean grey level of the whole image. Because these operators are complementary, combining them significantly improves texture classification, and this variant is now considered a reference LBP method.
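As an illustration of Eqs. (5) and (6), this Python sketch computes the CLBP_S, CLBP_M and CLBP_C codes for a whole image. It assumes the neighbour values have already been sampled, so each pixel is given as a hypothetical (centre, neighbours) pair. It approximates \(\tilde{f}\) by the mean of the processed centre pixels. The codes are the plain \(2^P\)-valued versions, not their rotation invariant forms.

```python
def clbp_codes(neighbourhoods):
    """CLBP_S, CLBP_M and CLBP_C for every pixel of one image.

    neighbourhoods: list of (centre value, [g_0, ..., g_{P-1}]) pairs, one per pixel.
    Returns a list of (S code, M code, C bit) tuples (plain 2^P-valued codes).
    """
    # Global thresholds: mean magnitude m~ and mean grey level f~ over the image.
    mags = [abs(g - c) for c, neigh in neighbourhoods for g in neigh]
    m_tilde = sum(mags) / len(mags)
    f_tilde = sum(c for c, _ in neighbourhoods) / len(neighbourhoods)
    codes = []
    for c, neigh in neighbourhoods:
        s_code = sum((1 if g - c >= 0 else 0) << p for p, g in enumerate(neigh))
        m_code = sum((1 if abs(g - c) >= m_tilde else 0) << p
                     for p, g in enumerate(neigh))
        c_bit = 1 if c >= f_tilde else 0
        codes.append((s_code, m_code, c_bit))
    return codes


print(clbp_codes([(5, [6, 5, 1, 9]), (1, [1, 2, 1, 1])]))  # [(11, 12, 1), (15, 0, 0)]
```

Both thresholds are global, so CLBP_M and CLBP_C can only be computed once the whole image has been scanned.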

3 Texture Representation Using Statistical Binary Patterns

The Statistical Binary Pattern (SBP) representation aims to enhance the expressiveness and discrimination power of LBPs for texture modelling and recognition, while reducing their sensitivity to insignificant variations (e.g. noise). The principle is to apply the rotation invariant uniform LBP to a set of images formed by local statistical moments computed over a spatial support. The resulting codes form the Statistical Binary Patterns (SBP), and a texture is then represented by the joint distribution of its SBPs. Classification can then be performed with a nearest neighbour criterion using a classical histogram metric such as \(\chi ^2\). We now detail these steps.

3.1 Moment Images

A real-valued 2D discrete image \(f\) is modelled as a mapping from \(\mathbb {Z}^2\) to \(\mathbb {R}\). The spatial support used to calculate the local moments is modelled as \(B \subset \mathbb {Z}^2\), such that \(O \in B\), where \(O\) is the origin of \(\mathbb {Z}^2\).

The \(r\)-order moment image (see Note 1) associated to \(f\) and \(B\) is also a mapping from \(\mathbb {Z}^2\) to \(\mathbb {R}\), defined as:

$$\begin{aligned} m^{r}_{(f,B)}(\mathbf {z}) = \frac{1}{|B|}\sum _{\mathbf {b} \in B} f(\mathbf {z}+\mathbf {b})^{r} \end{aligned} \tag{7}$$

where \(|B|\) is the cardinality of \(B\). Accordingly, the \(r\)-order centred moment image (\(r > 1\)) is defined as:

$$\begin{aligned} \mu ^{r}_{(f,B)}(\mathbf {z}) = \frac{1}{|B|}\sum _{\mathbf {b} \in B} \left( f(\mathbf {z}+\mathbf {b}) - m^{1}_{(f,B)}(\mathbf {z}) \right) ^{r} \end{aligned} \tag{8}$$

From now on, we shall also use the notation \(m_r\) and \(\mu _r\) for the \(r\)-order moment and the \(r\)-order centred moment, respectively.
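The two moment images used later, \(m_1\) and \(\mu _2\), can be sketched in Python as below. The sketch assumes \(B\) is given as a list of integer offsets containing the origin. It also assumes a replicated border, so pixels near the edge reuse the nearest valid pixel; the paper does not specify its boundary handling. The cross-shaped \(B\) in the usage lines is purely hypothetical.

```python
def moment_images(img, offsets):
    """Local mean m_1 (Eq. 7, r = 1) and centred variance mu_2 (Eq. 8, r = 2).

    img: list of rows; offsets: the structuring element B as integer (dx, dy)
    pairs, which must contain the origin (0, 0). Border pixels reuse the
    nearest valid pixel (replicated border), a boundary choice assumed here.
    """
    h, w = len(img), len(img[0])
    n = len(offsets)  # |B|
    m1 = [[0.0] * w for _ in range(h)]
    mu2 = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                    for dx, dy in offsets]
            mean = sum(vals) / n
            m1[y][x] = mean
            mu2[y][x] = sum((v - mean) ** 2 for v in vals) / n
    return m1, mu2


cross = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]  # hypothetical small B
img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
m1, mu2 = moment_images(img, cross)
print(m1[1][1], mu2[1][1])  # 5.0 4.0
```

\(\mu _2\) is computed around the local mean \(m_1(\mathbf {z})\), as in Eq. (8), so a flat region yields a zero variance image regardless of its grey level.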

3.2 Statistical Binary Patterns

Let \(R\) and \(P\) denote respectively the radius of the neighbourhood circle and the number of values sampled on the circle. For each moment image \(M\), one statistical binary pattern is formed as follows:

- one \((P+2)\)-valued pattern corresponding to the rotation invariant uniform LBP coding of \(M\):

$$\begin{aligned} \mathrm {SBP}_{P,R}(M)(\mathbf {z}) = \mathrm {LBP}_{P,R}^{riu2}(M)(\mathbf {z}) \end{aligned} \tag{9}$$

- one binary value corresponding to the comparison of the centre value with the mean value of \(M\):

$$\begin{aligned} \mathrm {SBP}_{C}(M)(\mathbf {z}) = s(M(\mathbf {z}) - \tilde{M}) \end{aligned} \tag{10}$$

where \(s\) denotes the sign function defined in Sect. 2.1, and \(\tilde{M}\) is the mean value of the moment \(M\) over the whole image. \(\mathrm {SBP}_{P,R}(M)\) thus represents the structure of the moment \(M\) with respect to a local reference (the centre pixel). \(\mathrm {SBP}_{C}(M)\) complements this information with the value of the centre pixel relative to a global reference (\(\tilde{M}\)). As a result of this first step, a \(2(P+2)\)-valued scalar descriptor is computed for every pixel of each moment image.
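A per-pixel SBP can be sketched in Python as follows. It computes the riu2 code of the moment image (Eq. (9)) and the centre bit (Eq. (10)), then packs both into a single value in \([0, 2(P+2))\). The paper does not state how the two parts are packed. The formula `sbp_c * (P + 2) + sbp_pr` is our hypothetical choice, and the neighbour values are assumed to be sampled beforehand.

```python
def sbp_value(centre, neigh, m_tilde):
    """SBP of one pixel of a moment image M, packed into one of 2(P + 2) values.

    centre: M(z); neigh: the P values of M sampled on the circle (P, R);
    m_tilde: mean of M over the whole image.
    """
    P = len(neigh)
    bits = [1 if g - centre >= 0 else 0 for g in neigh]
    u = sum(abs(bits[p % P] - bits[p - 1]) for p in range(1, P + 1))
    sbp_pr = sum(bits) if u <= 2 else P + 1        # Eq. (9): LBP^{riu2}_{P,R}(M)(z)
    sbp_c = 1 if centre - m_tilde >= 0 else 0      # Eq. (10): s(M(z) - M~)
    # Hypothetical packing: the paper does not say how the two parts are combined.
    return sbp_c * (P + 2) + sbp_pr


print(sbp_value(5, [6, 6, 1, 1], 3))  # 8: uniform code 2, centre above the mean
```

Any bijective packing would give the same histogram up to a permutation of bins, so the choice only affects bin ordering.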

3.3 Texture Descriptor

Principles. Let \(\{M_i\}_{1 \le i \le n_M}\) be the set of \(n_M\) computed moment images. \(\mathrm {SBP}^{\{M_i\}}\) is defined as a vector-valued image with \(n_M\) components. For every \(\mathbf {z} \in \mathbb {Z}^2\) and every \(i\), the component \(\mathrm {SBP}^{\{M_i\}}(\mathbf {z})_i\) is the SBP value of \(M_i\) at \(\mathbf {z}\), one of the \(2(P+2)\) values from \(0\) to \(2(P+2)-1\).

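If the image \(f\) contains a texture, the descriptor associated to \(f\) is the histogram of the values of \(\mathrm {SBP}^{\{M_i\}}\). The joint histogram \(H\) is defined as follows:

$$\begin{aligned} H(\mathbf {v}) = \mathrm {card}\left\{ \mathbf {z} : \mathrm {SBP}^{\{M_i\}}(\mathbf {z}) = \mathbf {v} \right\} , \quad \mathbf {v} \in \{0, \ldots , 2(P+2)-1\}^{n_M} \end{aligned} \tag{11}$$

where \(\mathbf {z}\) ranges over the pixels of \(f\).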

The texture descriptor is formed by \(H\) and its length is \(\left[ 2(P+2) \right] ^{n_M}\).
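The joint histogram can be sketched in Python by turning each pixel's vector \((v_1, \ldots , v_{n_M})\) into a mixed-radix index of a flat array. The array has \([2(P+2)]^{n_M}\) bins, matching the length above. Input SBP images are assumed to be lists of rows holding values in \([0, 2(P+2))\). The function name is hypothetical.

```python
def joint_histogram(sbp_images, P):
    """Joint histogram H of Eq. (11), flattened to length (2(P + 2)) ** n_M.

    sbp_images: n_M images (lists of rows) of SBP values in [0, 2(P + 2)).
    """
    k = 2 * (P + 2)                  # number of values per moment image
    hist = [0] * (k ** len(sbp_images))
    rows, cols = len(sbp_images[0]), len(sbp_images[0][0])
    for y in range(rows):
        for x in range(cols):
            index = 0
            for image in sbp_images:  # mixed-radix index of (v_1, ..., v_nM)
                index = index * k + image[y][x]
            hist[index] += 1
    return hist


# SBP^{m1 mu2}: n_M = 2, so the length is 4(P + 2)^2.
for P in (8, 16, 24):
    print(P, len(joint_histogram([[[0]], [[0]]], P)))
# 8 400
# 16 1296
# 24 2704
```

For \(n_M = 2\), the descriptor length \(4(P+2)^2\) grows quadratically with \(P\). With \(P = 24\) it already reaches 2704 bins, which suggests why only two moment orders are combined.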

Implementation.

In this paper, we focus on SBP\(_{P,R}^{m_1\mu _2}\), i.e. the SBP patterns obtained with the mean \(m_1\) and the variance \(\mu _2\). With two moment orders, the size of the joint histogram in the texture descriptor remains reasonable. Figure 1 illustrates the calculation of the texture descriptor using the \(m_1\) and \(\mu _2\) images. The local spatial structure of each image is captured using LBP\(^{riu2}_{P,R}\). In addition, two binary images are computed by thresholding the moment images with respect to their average values. For each moment, the local pattern may then take \(2(P+2)\) distinct values. Finally, the joint histogram of the two local descriptors is used as the texture feature and is denoted SBP\(^{m_1\mu _2}\). The feature vector length is therefore \(4(P+2)^2\). As can be seen in Fig. 1, apart from the peaks corresponding to the non-uniform bins, the local structures of the 2D histogram clearly highlight the correlation between the uniform patterns of the two LBP images.

Fig. 1. Texture representation based on a combination of the first two moments. In the LBP images, red pixels correspond to non-uniform patterns. The structuring element used here is \(\{(1,5),(2,8)\}\) (see Sect. 3.3) while LBP\(_{24,3}^{riu2}\) is applied.

Properties.

Several remarks can be made on the properties of SBP\(^{m_1\mu _2}\) and its relation with existing works.

- Robustness to noise: \(m_1\) and \(\mu _2\) act as a pre-processing step that reduces small local variations, and thus enhances the significance of the binary pattern with respect to the raw images.

- Rotation invariance: isotropic structuring elements should be used in order to keep the rotation invariance property of the local descriptor.

- Information richness: LBPs on moment images capture less local information, and the two orders of moments provide complementary information on the spatial structure.

There are links between SBP\(^{m_1\mu _2}\) and the CLBP descriptors of Guo et al. [9] (see Sect. 2). First, the binary images used in SBP correspond to the CLBP_C operator. Second, the respective roles of \(m_1\) and \(\mu _2\) are somewhat similar to those of the CLBP_S and CLBP_M operators. However, in CLBP and its variants CLBC [14] and CRLBP [22], the magnitude component (CLBP_M) is rather contrast information complementing CLBP_S. In contrast, SBP\(^{\mu _2}\) represents the local structure of a contrast map and can be considered independently of SBP\(^{m_1}\).

Parameter Settings.

We now describe the parameters that can be adjusted in the framework and the settings we chose to evaluate. Two main parameters have to be set when calculating the SBP:

- the spatial support \(B\) for calculating the local moments, also referred to as the structuring element;

- the spatial support \(\{P,R\}\) for calculating the LBP.

Although these two parameters are relatively independent, \(B\) must be large enough to be statistically relevant. At the same time, its size should be smaller than or equal to the typical period of the texture. The support \(\{P,R\}\), on the other hand, is meant to be very local, so as to represent the micro-structures of the (moment) images.

As mentioned earlier, we use isotropic structuring elements to preserve rotation invariance. To be compliant with the LBP representation, we define the structuring elements as unions of discrete circles: \(B = \{\{P_i,R_i\}\}_{i \in I}\), such that \((P_i)_i\) (resp. \((R_i)_i\)) is an increasing sequence of neighbour numbers (resp. radii). As an example, Fig. 2 shows the filtered images using a structuring element \(B = \{(1,4), (2,8)\}\).
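A structuring element built as a union of discrete circles can be sketched in Python as below. Two assumptions are involved. First, we read each pair as (radius, number of points); the text's notation \(\{P_i,R_i\}\) leaves the order ambiguous, so this reading is our assumption. Second, circle points are rounded to the nearest pixel, whereas the authors may interpolate instead. The origin is always included, since \(O \in B\) is required.

```python
import math


def circle_union_offsets(circles):
    """Integer offsets of B = {O} united with discrete circles.

    circles: list of (radius, n_points) pairs (hypothetical parameter order).
    Each circle point is rounded to the nearest pixel; duplicates are merged.
    """
    offsets = {(0, 0)}  # B must contain the origin O
    for radius, n_points in circles:
        for k in range(n_points):
            angle = 2 * math.pi * k / n_points
            offsets.add((round(radius * math.cos(angle)),
                         round(-radius * math.sin(angle))))
    return sorted(offsets)


print(circle_union_offsets([(1, 4)]))
# [(-1, 0), (0, -1), (0, 0), (0, 1), (1, 0)]
print(len(circle_union_offsets([(1, 4), (2, 8)])))  # 13
```

The resulting offsets are symmetric under \(\mathbf {b} \mapsto -\mathbf {b}\), a cheap sanity check of approximate isotropy. Such a list could serve as \(B\) when computing \(m_1\) and \(\mu _2\).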

3.4 Texture Classification

Given two texture images characterised by their respective histograms (descriptors) \(F\) and \(G\), their texture dissimilarity is calculated using the classical \(\chi ^2\) distance between distributions:

$$\begin{aligned} \chi ^2(F,G) = \sum _{i=1}^{d} \frac{(F_i - G_i)^2}{F_i + G_i} \end{aligned} \tag{12}$$

where \(d\) is the number of bins (the dimension of the descriptors). Our classification is then based on a nearest neighbour criterion. Every texture class with label \(\lambda \) is characterised by a prototype descriptor \(K_{\lambda }\), with \(\lambda \in \Lambda \). For an unknown texture image \(f\), its descriptor \(D_f\) is calculated, and the texture label is attributed as follows:

$$\begin{aligned} \hat{\lambda }(f) = \mathop {\mathrm {arg\,min}}_{\lambda \in \Lambda } \chi ^2(D_f, K_{\lambda }) \end{aligned} \tag{13}$$
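The distance and the decision rule described above can be sketched in a few lines of Python. Skipping bins where \(F_i + G_i = 0\) avoids a division by zero; this guard is our own assumption. The toy prototypes are illustrative values, not data from the paper.

```python
def chi2(F, G):
    """Chi-square distance between histograms; empty bins (F_i + G_i = 0) contribute 0."""
    return sum((f - g) ** 2 / (f + g) for f, g in zip(F, G) if f + g > 0)


def classify(D_f, prototypes):
    """Nearest-neighbour rule: the label whose prototype K is closest to D_f.

    prototypes: dict mapping each label (lambda) to its descriptor K_lambda.
    """
    return min(prototypes, key=lambda label: chi2(D_f, prototypes[label]))


prototypes = {"a": [1, 0, 0], "b": [0, 1, 1]}  # toy descriptors
print(classify([0.9, 0.1, 0.0], prototypes))  # a
```

The same `classify` works unchanged whether each class keeps one prototype or each training image is stored under its own key.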

4 Experiments

We present hereafter a comparative evaluation of our proposed descriptor.

4.1 Databases and Experimental Protocols

The effectiveness of the proposed method is assessed by a series of experiments on four large and representative databases: Outex [28], CUReT (Columbia-Utrecht Reflection and Texture) [29], UIUC [4] and KTH-TIPS2b [30].

The Outex database (examples are shown in Fig. 5) contains textural images captured from a wide variety of real materials. We consider the two commonly used test suites, Outex_TC_00010 (TC10) and Outex_TC_00012 (TC12), containing 24 classes of textures which were collected under three different illuminations (“horizon”, “inca”, and “t184”) and nine different rotation angles (0\(^{\circ }\), 5\(^{\circ }\), 10\(^{\circ }\), 15\(^{\circ }\), 30\(^{\circ }\), 45\(^{\circ }\), 60\(^{\circ }\), 75\(^{\circ }\) and 90\(^{\circ }\)). Each class contains 20 non-overlapping 128 \(\times \) 128 texture samples.

The CUReT database contains 61 texture classes, each having 205 images acquired at different viewpoints and illumination orientations. There are 118 images shot from a viewing angle of less than 60\(^{\circ }\). From these 118 images, as in [7, 9], we selected 92 images, from which a sufficiently large region could be cropped (200 \(\times \) 200) across all texture classes. All the cropped regions are converted to grey scale (examples are shown in Fig. 3).

The UIUC texture database includes 25 classes with 40 images in each class. The resolution of each image is 640 \(\times \) 480. The database contains materials imaged under significant viewpoint variations (examples are shown in Fig. 4).

The KTH-TIPS2b database contains images of 11 materials. Each material has 4 physical samples imaged at 9 different scales, 3 viewing angles and 4 illuminants, producing 432 images per class (see [30] for more detailed information). Figure 6 shows an example of each of the 11 materials. All the images are cropped to 200 \(\times \) 200 pixels and converted to grey scale. This database is considered more challenging than the previous version, KTH-TIPS. In addition, it is more complete than KTH-TIPS2a, where several samples have only 72 images.

4.2 Results on the Outex Database

The two common test suites TC10 and TC12 are used to evaluate our method. Following [28], the 24 \(\times \) 20 samples with illumination condition “inca” and rotation angle 0\(^{\circ }\) were adopted as the training data. Table 1 reports the experimental results of \(\mathrm {SBP^{m_1\mu _2}_{P,R}}\) in comparison with different methods.

From Table 1, we can draw several findings:

- The proposed descriptor substantially improves the results on both test suites TC10 and TC12 in comparison with state-of-the-art methods, in all configurations and with all structuring elements. Compared with the most significant LBP-based variant (CLBP_S/M/C), the average improvement over the three experiments (TC10, TC12t and TC12h) can reach 3.5 % in all configurations of \((P,R)\).

- Structuring elements with a single circular neighbourhood give good results on test suite TC10.

- Structuring elements with two circular neighbourhoods give good results on both test suites TC10 and TC12. This may be because their multiscale structure makes the descriptors more robust to the illumination changes addressed in test suite TC12.

From now on, the structuring element \(\{(1,5),(2,8)\}\) will be chosen by default due to its good results.

4.3 Results on the CUReT Database

Following [4, 9], to obtain statistically significant experimental results, \(N\) training images were randomly chosen from each class, while the remaining \(92-N\) images per class were used as the test set. Table 2 shows the results of our method on the CUReT database, from which we make the following remarks:

- \(SBP\) works well in all configurations of \((P,R)\).

- \(SBP\) is comparable to VZ_MR8 and outperforms the other LBP-based descriptors.
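The random per-class split used for CUReT (and, with 40 images per class, for UIUC) can be sketched in Python as follows. The function name and the dictionary layout of the data are hypothetical. Reported accuracies would typically be averaged over several such random draws, but the number of draws is not stated in this section.

```python
import random


def random_split(images_per_class, n_train, seed=None):
    """Draw n_train training images per class at random; the rest form the test set.

    images_per_class: dict mapping a class label to its list of images.
    Returns (train, test) as lists of (image, label) pairs.
    """
    rng = random.Random(seed)
    train, test = [], []
    for label, images in images_per_class.items():
        chosen = set(rng.sample(range(len(images)), n_train))
        for i, image in enumerate(images):
            (train if i in chosen else test).append((image, label))
    return train, test


# CUReT-like layout: 61 classes of 92 images; e.g. N = 46 leaves 92 - N = 46 test images.
data = {c: list(range(92)) for c in range(61)}
train, test = random_split(data, 46, seed=0)
print(len(train), len(test))  # 2806 2806
```

Sampling indices rather than images keeps the split valid even when two images in a class compare equal.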

4.4 Results on the UIUC Database

As in [4], to eliminate the dependence of the results on the particular training images used, \(N\) training images were randomly chosen from each class, while the remaining \(40-N\) images per class were used as the test set. Table 3 shows the results obtained by our approach on the UIUC dataset in comparison with other state-of-the-art methods. As can be seen from this table, our proposed descriptor clearly outperforms CLBP.

4.5 Results on the KTH-TIPS 2b Database

At the time of writing, KTH-TIPS 2b can be considered the most challenging dataset for texture recognition. For the experiments on this dataset, we follow the training and testing scheme used in [30]. We train on one, two or three samples, and testing is always conducted on unseen samples only. Table 4 details our results on this dataset.

5 Conclusions and Discussions

We have presented a new approach for texture representation called Statistical Binary Patterns (SBP). Its principle is to extend the LBP representation from the local grey level to the regional distribution level. In this work, we used a single region, represented by a predefined structuring element, and limited the representation of the distribution to the first two statistical moments. While this representation is sufficient for unimodal distributions such as the Gaussian, it is not suited to more complex distributions. Investigating higher order moments in SBP would therefore be highly desirable. The proposed approach has been evaluated on several large databases to validate its interest. It should be noted that our approach does not use the complementary magnitude information (CLBP_M), which is the principal factor boosting classification performance in many other descriptors in the literature. Our method also opens several perspectives that will be addressed in future work:

- Can our descriptor be applied efficiently in other domains of computer vision, such as face recognition or dynamic texture?

- Can performance be further improved by integrating other complementary information, such as magnitude (CLBP_M) or a multi-scale approach, or by combining SBP with other LBP variants?

Notes

1. Note that a moment image corresponds to a local filter defined by a statistical moment, and should not be confused with the concept of “image moment”.

References

[1] Cula, O.G., Dana, K.J.: Compact representation of bidirectional texture functions. In: CVPR, vol. 1, pp. 1041–1047 (2001)

[2] Zhang, J., Tan, T.: Brief review of invariant texture analysis methods. Pattern Recognit. 35, 735–747 (2002)

[3] Ojala, T., Pietikäinen, M., Mäenpää, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. PAMI 24, 971–987 (2002)

[4] Lazebnik, S., Schmid, C., Ponce, J.: A sparse texture representation using local affine regions. IEEE Trans. PAMI 27, 1265–1278 (2005)

[5] Varma, M., Zisserman, A.: A statistical approach to texture classification from single images. Int. J. Comput. Vis. 62, 61–81 (2005)

[6] Permuter, H., Francos, J., Jermyn, I.: A study of Gaussian mixture models of color and texture features for image classification and segmentation. Pattern Recognit. 39, 695–706 (2006)

[7] Varma, M., Zisserman, A.: A statistical approach to material classification using image patch exemplars. IEEE Trans. PAMI 31, 2032–2047 (2009)

[8] Liao, S., Law, M.W.K., Chung, A.C.S.: Dominant local binary patterns for texture classification. IEEE Trans. Image Process. 18, 1107–1118 (2009)

[9] Guo, Z., Zhang, L., Zhang, D.: A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 19, 1657–1663 (2010)

[10] Chen, J., Shan, S., He, C., Zhao, G., Pietikäinen, M., Chen, X., Gao, W.: WLD: a robust local image descriptor. IEEE Trans. PAMI 32, 1705–1720 (2010)

[11] Crosier, M., Griffin, L.D.: Using basic image features for texture classification. Int. J. Comput. Vis. 88, 447–460 (2010)

[12] Guo, Z., Zhang, L., Zhang, D.: Rotation invariant texture classification using LBP variance (LBPV) with global matching. Pattern Recognit. 43(3), 706–719 (2010)

[13] Puig, D., Garcia, M.A., Melendez, J.: Application-independent feature selection for texture classification. Pattern Recognit. 43, 3282–3297 (2010)

[14] Zhao, Y., Huang, D.S., Jia, W.: Completed local binary count for rotation invariant texture classification. IEEE Trans. Image Process. 21, 4492–4497 (2012)

[15] Liu, L., Fieguth, P.W., Clausi, D.A., Kuang, G.: Sorted random projections for robust rotation-invariant texture classification. Pattern Recognit. 45, 2405–2418 (2012)

[16] Manjunath, B.S., Ma, W.Y.: Texture features for browsing and retrieval of image data. IEEE Trans. PAMI 18, 837–842 (1996)

[17] Leung, T.K., Malik, J.: Representing and recognizing the visual appearance of materials using three-dimensional textons. Int. J. Comput. Vis. 43, 29–44 (2001)

[18] Sifre, L., Mallat, S.: Rotation, scaling and deformation invariant scattering for texture discrimination. In: CVPR, pp. 1233–1240 (2013)

[19] Liu, L., Zhao, L., Long, Y., Kuang, G., Fieguth, P.: Extended local binary patterns for texture classification. Image Vis. Comput. 30, 86–99 (2012)

[20] Hafiane, A., Seetharaman, G., Zavidovique, B.: Median binary pattern for textures classification. In: Kamel, M.S., Campilho, A. (eds.) ICIAR 2007. LNCS, vol. 4633, pp. 387–398. Springer, Heidelberg (2007)

[21] Jin, H., Liu, Q., Lu, H., Tong, X.: Face detection using improved LBP under Bayesian framework. In: ICIG, pp. 306–309 (2004)

[22] Zhao, Y., Jia, W., Hu, R.X., Min, H.: Completed robust local binary pattern for texture classification. Neurocomputing 106, 68–76 (2013)

[23] Liao, S.C., Zhu, X.X., Lei, Z., Zhang, L., Li, S.Z.: Learning multi-scale block local binary patterns for face recognition. In: Lee, S.-W., Li, S.Z. (eds.) ICB 2007. LNCS, vol. 4642, pp. 828–837. Springer, Heidelberg (2007)

[24] Zhang, W., Shan, S., Gao, W., Chen, X., Zhang, H.: Local Gabor binary pattern histogram sequence (LGBPHS): a novel non-statistical model for face representation and recognition. In: ICCV, pp. 786–791 (2005)

[25] Zhao, G., Pietikäinen, M., Chen, X.: Combining LBP difference and feature correlation for texture description. IEEE Trans. Image Process. 23, 2557–2568 (2014)

[26] Liu, L., Zhao, L., Long, Y., Kuang, G., Fieguth, P.W.: Extended local binary patterns for texture classification. Image Vis. Comput. 30, 86–99 (2012)

[27] Ojala, T., Pietikäinen, M., Harwood, D.: A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 29(1), 51–59 (1996)

[28] Ojala, T., Mäenpää, T., Pietikäinen, M., Viertola, J., Kyllönen, J., Huovinen, S.: Outex - new framework for empirical evaluation of texture analysis algorithms. In: ICPR, pp. 701–706 (2002)

[29] Dana, K.J., van Ginneken, B., Nayar, S.K., Koenderink, J.J.: Reflectance and texture of real-world surfaces. ACM Trans. Graph. 18, 1–34 (1999)

[30] Caputo, B., Hayman, E., Fritz, M., Eklundh, J.O.: Classifying materials in the real world. Image Vis. Comput. 28, 150–163 (2010)

[31] Tan, X., Triggs, B.: Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans. Image Process. 19, 1635–1650 (2010)

[32] Guo, Y., Zhao, G., Pietikäinen, M.: Discriminative features for texture description. Pattern Recognit. 45, 3834–3843 (2012)


Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Nguyen, T.P., Manzanera, A. (2015). Incorporating Two First Order Moments into LBP-Based Operator for Texture Categorization. In: Jawahar, C., Shan, S. (eds) Computer Vision - ACCV 2014 Workshops. ACCV 2014. Lecture Notes in Computer Science(), vol 9008. Springer, Cham. https://doi.org/10.1007/978-3-319-16628-5_38


DOI: https://doi.org/10.1007/978-3-319-16628-5_38


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-16627-8

Online ISBN: 978-3-319-16628-5

eBook Packages: Computer Science, Computer Science (R0)