Abstract

We give an overview of Volker Mehrmann’s work on structured perturbation theory of eigenvalues. In particular, we review his contributions on perturbations of structured pencils arising in control theory and of Hamiltonian matrices. We also give a brief outline of his work on structured rank one perturbations.

Keywords

- Volker Mehrmann

- Hamiltonian Matrices

- Hamiltonian Schur Form

- Structured Eigenvalue Problems

- Mixed Signal Characteristics

1 Introduction

The core research interests of Volker Mehrmann include mathematical modelling of real world processes and the design of numerically stable solutions of associated problems. He has almost four decades of research experience in these areas, and perturbation analysis of the associated challenging eigenvalue problems forms an integral part of his research. Often the challenges posed by these eigenvalue problems are due to the fact that the associated matrices have a special structure leading to symmetries in the distribution of their eigenvalues. For example, the solution of continuous time linear quadratic optimal control problems and the vibration analysis of machines, buildings and vehicles lead to generalized eigenvalue problems where the coefficient matrices of the matrix pencil have a structure that alternates between Hermitian and skew-Hermitian. Due to this, their eigenvalues occur in pairs \((\lambda,-\bar{\lambda })\) when the matrices are complex and quadruples \((\lambda,\bar{\lambda },-\lambda,-\bar{\lambda })\) when they are real. In either case, the eigenvalues are symmetrically placed with respect to the imaginary axis. This is referred to as Hamiltonian spectral symmetry as it is also typically displayed by eigenvalues of Hamiltonian matrices. Note that a matrix A of even size, say 2n, is said to be Hamiltonian if (JA)∗ = JA where \(J = \left [\begin{array}{cc} 0 &I_{n} \\ - I_{n}& 0 \end{array} \right ]\). On the other hand, discrete time linear quadratic optimal control problems lead to generalized eigenvalue problems associated with matrix pencils of the form A − zA ∗ where A ∗ denotes the complex conjugate transpose of the matrix A [15, 46, 51, 63]. For these pencils, the eigenvalues occur in pairs \((\lambda,1/\bar{\lambda })\) in the complex case and in quadruples \((\lambda,1/\lambda,\bar{\lambda },1/\bar{\lambda })\) in the real case, which implies that the eigenvalues are symmetrically placed with respect to the unit circle. This symmetry is referred to as symplectic spectral symmetry as it is typically possessed by symplectic matrices. Note that a matrix S of size 2n × 2n is said to be symplectic if S ∗ JS = J.
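The two spectral symmetries described above can be checked numerically. The following is a minimal sketch, assuming NumPy; the helper names (`J_matrix`, `random_hamiltonian`, `closed_under`) are illustrative and not taken from the cited works. The symplectic test matrix is obtained from a Hamiltonian one by a Cayley transform, which is one convenient construction among several.

```python
import numpy as np

def J_matrix(n):
    """Return J = [[0, I_n], [-I_n, 0]]."""
    I, Z = np.eye(n), np.zeros((n, n))
    return np.block([[Z, I], [-I, Z]])

def random_hamiltonian(n, rng):
    """Random complex A with (JA)^* = JA, built as A = J^T M with M Hermitian."""
    M = rng.standard_normal((2 * n, 2 * n)) + 1j * rng.standard_normal((2 * n, 2 * n))
    M = (M + M.conj().T) / 2
    return J_matrix(n).T @ M

def closed_under(eigs, mapping, tol=1e-8):
    """True if mapping(lam) is numerically an eigenvalue for every lam in eigs."""
    return all(np.min(np.abs(eigs - mapping(lam))) < tol for lam in eigs)

rng = np.random.default_rng(0)
n = 3
J = J_matrix(n)
A = random_hamiltonian(n, rng)
A *= 0.5 / np.linalg.norm(A, 2)      # scaling keeps A Hamiltonian and I - A well conditioned
I = np.eye(2 * n)
S = np.linalg.solve(I - A, I + A)    # Cayley transform of a Hamiltonian matrix

hamiltonian_ok = np.allclose((J @ A).conj().T, J @ A)   # (JA)^* = JA
symplectic_ok = np.allclose(S.conj().T @ J @ S, J)      # S^* J S = J
# Hamiltonian symmetry: lam -> -conj(lam); symplectic symmetry: lam -> 1/conj(lam)
ham_symmetry = closed_under(np.linalg.eigvals(A), lambda lam: -np.conj(lam))
symp_symmetry = closed_under(np.linalg.eigvals(S), lambda lam: 1 / np.conj(lam))
print(hamiltonian_ok, symplectic_ok, ham_symmetry, symp_symmetry)
```

The tolerance in `closed_under` is a pragmatic choice for well conditioned test data; for eigenvalues close to the imaginary axis or the unit circle a more careful pairing test would be needed.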

It is now well established that solutions of structured eigenvalue problems by algorithms that preserve the structure are more efficient because they often need less storage space and time than algorithms that do not do so. Moreover, eigenvalues computed by stable structure preserving algorithms also reflect the spectral symmetry associated with the structure, which is important in applications. For more on this, as well as Volker Mehrmann’s contributions to structure preserving algorithms, we refer to Chap. 1 by A. Bunse-Gerstner and H. Faßbender.

Perturbation analysis of eigenvalue problems involves finding the sensitivity of eigenvalues and eigenvectors of matrices, matrix pencils and polynomials with respect to perturbations and is essential for many applications, important among which is the design of stable and accurate algorithms. Typically, this is measured by the condition number which gives the rate of change of these quantities under perturbations to the data. For example, the condition number of a simple eigenvalue λ of an n × n matrix A is defined by

$$\displaystyle{\kappa (\lambda ) =\lim _{\epsilon \rightarrow 0}\sup \left \{\frac{\vert \hat{\lambda }-\lambda \vert } {\epsilon }:\ \hat{\lambda }\in \varLambda (A+\varDelta ),\ \epsilon > 0,\ \|\varDelta \|\leq \epsilon \right \},}$$

where Λ(A +Δ) denotes the spectrum of A +Δ. Given any \(\mu \in \mathbb{C}\) and \(x \in \mathbb{C}^{n}\setminus \{0\}\), perturbation analysis is also concerned with computing backward errors η(μ) and η(μ, x). They measure minimal perturbations \(\varDelta \in \mathbb{C}^{n\times n}\) (with respect to a chosen norm) to A such that μ is an eigenvalue of A +Δ in the first instance and an eigenvalue of A +Δ with corresponding eigenvector x in the second instance. In particular, if η(μ, x) is sufficiently small for eigenvalue-eigenvector pairs computed by an algorithm, then the algorithm is (backward) stable. Moreover, η(μ, x) ×κ(μ) is an approximate upper bound on the (forward) error in the computed pair (μ, x).
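For the spectral norm and unstructured perturbations, these quantities have well known closed forms: the condition number of a simple eigenvalue with right and left eigenvectors x and y is ‖x‖‖y‖∕|y ∗ x|, the backward error η(μ) is the smallest singular value of A −μI, and η(μ, x) is the relative residual ‖Ax −μx‖∕‖x‖. The sketch below, assuming NumPy, evaluates them on a small non-normal example; the function names are illustrative and the routine for κ assumes that all eigenvalues of A are simple.

```python
import numpy as np

def eigenvalue_condition_number(A, target):
    """kappa = ||x|| ||y|| / |y^* x| for the eigenvalue of A nearest to `target`.

    Assumes all eigenvalues of A are simple, so the eigenvector matrix is invertible.
    """
    lam, X = np.linalg.eig(A)
    Yh = np.linalg.inv(X)                 # rows are left eigenvectors y^*, with y_k^* x_k = 1
    k = int(np.argmin(np.abs(lam - target)))
    x, yh = X[:, k], Yh[k, :]
    return np.linalg.norm(x) * np.linalg.norm(yh) / abs(yh @ x)

def backward_error_eigenvalue(A, mu):
    """eta(mu): 2-norm distance from A to the nearest matrix having mu as an eigenvalue."""
    n = A.shape[0]
    return np.linalg.svd(A - mu * np.eye(n), compute_uv=False)[-1]

def backward_error_pair(A, mu, x):
    """eta(mu, x): smallest 2-norm perturbation making (mu, x) an exact eigenpair."""
    return np.linalg.norm(A @ x - mu * x) / np.linalg.norm(x)

A = np.array([[1.0, 100.0],
              [0.0, 2.0]])
kappa = eigenvalue_condition_number(A, 1.0)
mu, x = 1.001, np.array([1.0, 0.0])       # a slightly inexact eigenpair of A
eta_mu = backward_error_eigenvalue(A, mu)
eta_pair = backward_error_pair(A, mu, x)
print(kappa, eta_mu, eta_pair, eta_pair * kappa)
```

In this example the eigenvalue 1 is rather sensitive (κ is about 100), and η(μ) is much smaller than η(μ, x), since prescribing the eigenvector as well is the more restrictive requirement.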

In the case of structured eigenvalue problems, the perturbation analysis of eigenvalues and eigenvectors with respect to structure preserving perturbations is important for the stability analysis of structure preserving algorithms and other applications like understanding the behaviour of dynamical systems associated with such problems. This involves finding the structured condition numbers of eigenvalues and eigenvectors and structured backward errors of approximate eigenvalues and eigenvalue-eigenvector pairs. For instance, if \(A \in \mathbf{S} \subset \mathbb{C}^{n\times n}\), then the structured condition number of any simple eigenvalue λ of A with respect to structure preserving perturbations is defined by

$$\displaystyle{\kappa ^{\mathbf{S}}(\lambda ) =\lim _{\epsilon \rightarrow 0}\sup \left \{\frac{\vert \hat{\lambda }-\lambda \vert } {\epsilon }:\ \hat{\lambda }\in \varLambda (A+\varDelta ),\ A+\varDelta \in \mathbf{S},\ \epsilon > 0,\ \|\varDelta \|\leq \epsilon \right \}.}$$

Similarly, the structured backward error \(\eta ^{\mathbf{S}}(\mu )\) (resp., \(\eta ^{\mathbf{S}}(\mu,x)\)) is a measure of the minimum structure preserving perturbation to \(A \in \mathbf{S}\) such that \(\mu \in \mathbb{C}\) (resp. \((\mu,x) \in \mathbb{C} \times \mathbb{C}^{n}\)) is an eigenvalue (resp. eigenvalue-eigenvector pair) for the perturbed problem. Evidently, \(\kappa ^{\mathbf{S}}(\lambda ) \leq \kappa (\lambda )\) and \(\eta ^{\mathbf{S}}(\mu ) \geq \eta (\mu )\). Very often, the difference between condition numbers with respect to arbitrary and structure preserving perturbations is small for most eigenvalues of structured problems. In fact, they are equal for purely imaginary eigenvalues of problems with Hamiltonian spectral symmetry and eigenvalues on the unit circle of problems with symplectic spectral symmetry [2, 14, 33, 36, 55]. Such eigenvalues are called critical eigenvalues as they result in a breakdown in the eigenvalue pairing. The same also holds for structured and conventional backward errors of approximate critical eigenvalues of many structured eigenvalue problems [1, 14].

However, the conventional perturbation analysis via condition numbers and backward errors does not always capture the full effect of structure preserving perturbations on the movement of the eigenvalues. This is particularly true of certain critical eigenvalues whose structured condition numbers do not always indicate the significant difference in their directions of motion under structure preserving and arbitrary perturbations [5, 48]. It is important to understand these differences in many applications. For instance, the appearance of critical eigenvalues may lead to a breakdown in the spectral symmetry, resulting in loss of uniqueness of deflating subspaces associated with the non-critical eigenvalues and leading to challenges in numerical computation [24, 46, 52–54]. They also result in undesirable physical phenomena like loss of passivity [5, 8, 27]. Therefore, given a structured eigenvalue problem without any critical eigenvalues, it is important to find the distance to a nearest problem with critical eigenvalues. Similarly, if the structured eigenvalue problem already has critical eigenvalues, then it is important to investigate the distance to a nearest problem with the same structure which has no such eigenvalues. Finding these distances poses significant challenges due to the fact that critical eigenvalues are often associated with additional attributes called sign characteristics which restrict their movement under structure preserving perturbations. These specific ‘distance problems’ come within the purview of structured perturbation analysis and are highly relevant to practical problems. Volker Mehrmann is one of the early researchers to realise the significance of structured perturbation analysis to tackle these issues.

It is difficult to give a complete overview of Volker Mehrmann’s wide body of work in eigenvalue perturbation theory [3–5, 10, 11, 13, 15, 31, 34, 35, 41–45, 48]. For instance, one of his early papers in this area is [10] where, along with co-authors Benner and Xu, he extends some of the classical results of the perturbation theory for eigenvalues, eigenvectors and deflating subspaces of matrices and matrix pencils to a formal product \(A_{1}^{s_{1}}A_{2}^{s_{2}}\cdots A_{p}^{s_{p}}\) of p square matrices A 1, A 2, …, A p where s 1, s 2, …, s p ∈ {−1, 1}. With co-authors Konstantinov and Petkov, he investigates the effect of perturbations on defective matrices in [35]. In [13] he looks into structure preserving algorithms for solving Hamiltonian and skew-Hamiltonian eigenvalue problems and compares the conditioning of eigenvalues and invariant subspaces with respect to structure preserving and arbitrary perturbations. In fact, the structure preserving perturbation theory of the Hamiltonian and the skew-Hamiltonian eigenvalue problem has been a recurrent theme of his research [5, 34, 48]. He has also been deeply interested in structure preserving perturbation analysis of the structured eigenvalue problems that arise in control theory and their role in the design of efficient, robust and accurate methods for solving problems of computational control [11, 15, 31]. The computation of Lagrangian invariant subspaces of symplectic matrices arises in many applications and this can be difficult, especially when the matrix has eigenvalues very close to the unit circle. With co-authors Mehl, Ran and Rodman, he investigates the perturbation theory of such subspaces in [41]. With the same co-authors, he has also investigated the effect of low rank structure preserving perturbations on different structured eigenvalue problems in a series of papers [42–45]. 
Volker Mehrmann has also undertaken the sensitivity and backward error analysis of several structured polynomial eigenvalue problems with co-author Ahmad in [3, 4].

In this article we give a brief overview of Volker Mehrmann’s contributions in three specific topics of structured perturbation theory. In Sect. 8.2, we describe his work with co-author Bora on structure preserving linear perturbation of some structured matrix pencils that occur in several problems of control theory. In Sect. 8.3, we describe his work with Xu, Alam, Bora, Karow and Moro on the effect of structure preserving perturbations on purely imaginary eigenvalues of Hamiltonian and skew-Hamiltonian matrices. Finally, in Sect. 8.4 we give a brief overview of Volker Mehrmann’s research on structure preserving rank one perturbations of several structured matrices and matrix pencils with co-authors Mehl, Ran and Rodman.

2 Structured Perturbation Analysis of Eigenvalue Problems Arising in Control Theory

One of the early papers of Volker Mehrmann in the area of structured perturbation analysis is [15] where he investigates the effect of structure preserving linear perturbations on matrix pencils that typically arise in robust and optimal control theory. Such control problems typically involve constant coefficient dynamical systems of the form

where \(x(\tau ) \in \mathbb{C}^{n}\) is the state, x 0 is an initial vector, \(u(\tau ) \in \mathbb{C}^{m}\) is the control input of the system and the matrices \(E,A \in \mathbb{C}^{n,n}\), \(B \in \mathbb{C}^{n,m}\) are constant. The objective of linear quadratic optimal control is to find a control law u(τ) such that the closed loop system is asymptotically stable and the performance criterion

is minimized, where \(Q = Q^{{\ast}}\in \mathbb{C}^{n,n}\), \(R = R^{{\ast}}\in \mathbb{C}^{m,m}\) and \(S \in \mathbb{C}^{n,m}\). Application of the maximum principle [46, 51] leads to the problem of finding a stable solution to the two-point boundary value problem of Euler-Lagrange equations

leading to the structured matrix pencil

in the continuous time case. Note that H c and N c are Hermitian and skew-Hermitian respectively, due to which the eigenvalues of the pencil occur in pairs \((\lambda,-\bar{\lambda })\) if the matrices are complex and in quadruples \((\lambda,\bar{\lambda },-\lambda,-\bar{\lambda })\) when the matrices are real. In fact, for the given pencil it is well known that if E is invertible, then under the usual control theoretic assumptions [46, 66, 67], it has exactly n eigenvalues on the left half plane, n eigenvalues on the right half plane and m infinite eigenvalues. One of the main concerns in the perturbation analysis of these pencils is to find structure preserving perturbations that result in eigenvalues on the imaginary axis. In [15], the authors considered the pencils H c −λ N c which had no purely imaginary eigenvalues or infinite eigenvalues (i.e. the block E is invertible) and investigated the effect of structure preserving linear perturbations of the form \(H_{c} -\lambda N_{c} + t(\varDelta H_{c} -\lambda \varDelta N_{c})\) where

are fixed matrices such that Δ Q and Δ R are symmetric, E +Δ E is invertible and t is a parameter that varies over \(\mathbb{R}\). The aim of the analysis was to find the smallest value(s) of the parameter t such that the perturbed pencil has an eigenvalue on the imaginary axis, in which case there is a loss of spectral symmetry and of uniqueness of the deflating subspace associated with the eigenvalues in the left half plane.
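The structure of the continuous time pencil can be explored numerically. The sketch below, assuming NumPy, uses the block layout H c = [0 A B; A ∗ Q S; B ∗ S ∗ R] and N c = [0 E 0; −E ∗ 0 0; 0 0 0] that is common in the descriptor-system literature. This layout is an assumption here, since the displayed formulas are not reproduced above, and the data (E = I, Q = I, R = I, S = 0, random A and B) are hypothetical. Eigenvalues are computed through μ = 1∕λ, which requires H c to be invertible.

```python
import numpy as np

def control_pencil(E, A, B, Q, S, R):
    """Assumed block form of the pencil H_c - lambda N_c (size 2n + m)."""
    n, m = B.shape
    Znn, Znm, Zmm = np.zeros((n, n)), np.zeros((n, m)), np.zeros((m, m))
    H = np.block([[Znn, A, B],
                  [A.conj().T, Q, S],
                  [B.conj().T, S.conj().T, R]])
    N = np.block([[Znn, E, Znm],
                  [-E.conj().T, Znn, Znm],
                  [Znm.T, Znm.T, Zmm]])
    return H, N

def pencil_eigenvalues(H, N, tol=1e-8):
    """Finite eigenvalues of H - lambda N and the number of infinite ones.

    H v = lambda N v is rewritten as inv(H) N v = mu v with mu = 1/lambda,
    so mu = 0 corresponds to an infinite eigenvalue. Requires H invertible.
    """
    mu = np.linalg.eigvals(np.linalg.solve(H, N))
    is_inf = np.abs(mu) <= tol
    return 1.0 / mu[~is_inf], int(np.sum(is_inf))

rng = np.random.default_rng(1)
n, m = 3, 2
E, Q, R = np.eye(n), np.eye(n), np.eye(m)
A, B, S = rng.standard_normal((n, n)), rng.standard_normal((n, m)), np.zeros((n, m))

H, N = control_pencil(E, A, B, Q, S, R)
structure_ok = np.allclose(H, H.conj().T) and np.allclose(N, -N.conj().T)
finite, n_infinite = pencil_eigenvalues(H, N)
n_left = int(np.sum(finite.real < 0))
n_right = int(np.sum(finite.real > 0))
# Hamiltonian spectral symmetry: every finite lambda is matched by -conj(lambda)
paired = all(np.min(np.abs(finite + np.conj(lam))) < 1e-6 for lam in finite)
print(structure_ok, n_left, n_right, n_infinite, paired)
```

For this choice of weights one expects n eigenvalues in each open half plane and m infinite eigenvalues, in line with the count stated above for invertible E.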

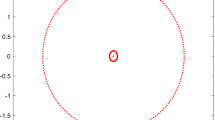

The discrete-time analogue to the linear quadratic control problem leads to slightly different matrix pencils of the form [46, 47]

The eigenvalues of H d −λ N d occur in pairs \((\lambda,1/\bar{\lambda })\) when the pencil is complex and in quadruples \((\lambda,1/\bar{\lambda },\bar{\lambda },1/\lambda )\) when the pencil is real. For such pencils, the critical eigenvalues are the ones on the unit circle. With the assumption that H d −λ N d has no critical eigenvalues, [15] investigates the smallest value of the parameter t such that the perturbed pencils \(H_{d} + t\varDelta H_{d} -\lambda (N_{d} + t\varDelta N_{d})\) have an eigenvalue on the unit circle, where

are fixed matrices that preserve the structure of H d and N d respectively. Note that in this case, the loss of spectral symmetry can lead to non-uniqueness of the deflating subspace associated with eigenvalues inside the unit circle.

Analogous investigations were also made in [15] for a slightly different set of structured matrix pencils that were motivated by problems of H ∞ control. The method of γ-iteration suggested in [12] for robust control problems arising in frequency domain [26, 68] results in pencils of the form

in the continuous time case and in pencils of the form

in the discrete time case where in each case,

is an indefinite Hermitian matrix. Each of the structured pencils varies with the positive parameter t (playing the role of the parameter γ in the γ-iteration), while the other coefficients are constant in t. Here too, the key question investigated was the smallest value(s) of the parameter t for which the pencils \(\hat{H}_{c}(t) -\lambda \hat{ N}_{c}\) and \(\hat{H}_{d}(t) -\lambda \hat{ N}_{d}\) have critical eigenvalues.

The authors developed a general framework for dealing with the structured perturbation problems under consideration in [15] and derived necessary and sufficient conditions for the perturbed matrix pencils to have critical eigenvalues. The following was one of the key results.

Theorem 1

Consider a matrix pencil H c −λN c as given in (8.4) . Let the matrices ΔH c and ΔN c be as in (8.5) and

Let V (t,γ) be any orthonormal basis of the kernel of P(t,γ), and let W(t,γ) be the range of P(t,γ) ∗ .

Then for given real numbers t ≠ 0 and γ, the purely imaginary number iγ is an eigenvalue of the matrix pencil \((H_{c} + t\varDelta H_{c},N_{c} + t\varDelta N_{c})\) if and only if

In particular, it was observed that if the cost function is chosen in such a way that the matrices Q, R and S are free of perturbation, then the pencil \(H_{c} + t\varDelta H_{c} -\lambda (N_{c} + t\varDelta N_{c})\) has a purely imaginary eigenvalue i γ if and only if

Moreover, if the matrix \(\left [\begin{array}{cc} Q & S\\ S^{{\ast} }&R \end{array} \right ]\) associated with the cost function is nonsingular and the kernel of P(t, γ) is an invariant subspace of the matrix, then i γ is not an eigenvalue of \(H_{c} + t\varDelta H_{c} -\lambda (N_{c} + t\varDelta N_{c})\). Similar results were also obtained for the other structures.

3 Perturbation Theory for Hamiltonian Matrices

Eigenvalue problems associated with Hamiltonian matrices play a central role in computing various important quantities that arise in diverse control theory problems like robust control, gyroscopic systems and passivation of linear systems. For example, optimal H ∞ control problems involve Hamiltonian matrices of the form

where \(F,G_{1},G_{2},H \in \mathbb{R}^{n,n}\) are such that G 1, G 2 and H are symmetric positive semi-definite and γ is a positive parameter [26, 38, 68]. In such cases, it is important to identify the smallest value of γ for which all the eigenvalues of \(\mathcal{H}(\gamma )\) are purely imaginary. The stabilization of linear second order gyroscopic systems [37, 63] requires computing the smallest real value of δ such that all the eigenvalues of the quadratic eigenvalue problem \((\lambda ^{2}I +\lambda (2\delta G) - K)x = 0\) are purely imaginary. Here G is a non-singular skew-Hermitian matrix and K is a Hermitian positive definite matrix. This is equivalent to finding the smallest value of δ such that all the eigenvalues of the Hamiltonian matrix \(\mathcal{H}(\delta ) = \left [\begin{array}{cc} -\delta G&K +\delta ^{2}G^{2} \\ I_{n} & -\delta G \end{array} \right ]\) are purely imaginary. Finally Hamiltonian matrices also arise in the context of passivation of non-passive dynamical systems. Consider a linear time invariant control system described by

where \(A \in \mathbb{F}^{n,n},B \in \mathbb{F}^{n,m},C \in \mathbb{F}^{m,n}\), and \(D \in \mathbb{F}^{m,m}\) are real or complex matrices such that all the eigenvalues of A are on the open left half plane, i.e., the system is asymptotically stable and D has full column rank. The system is said to be passive if there exists a non-negative scalar valued function Θ such that the dissipation inequality

holds for all t 1 ≥ t 0, i.e., the system absorbs supply energy. This is equivalent to checking whether the Hamiltonian matrix

has any purely imaginary eigenvalues. A non-passive system is converted to a passive one by making small perturbations to the matrices A, B, C, D [19, 25, 27] such that the eigenvalues of the corresponding perturbed Hamiltonian matrix move off the imaginary axis.
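The gyroscopic stabilization problem mentioned above lends itself to a small numerical experiment. The sketch below, assuming NumPy, builds the Hamiltonian matrix \(\mathcal{H}(\delta )\) for a hypothetical 2 × 2 example (G the standard real skew-symmetric matrix, K = diag(1, 4)) and locates the smallest stabilizing δ by bisection. The bisection assumes that the property "all eigenvalues are purely imaginary" switches from false to true exactly once on the search interval, which holds for this example but is not guaranteed in general. For this data the characteristic equation reduces to a quadratic in λ², from which the threshold (√1 + √4)∕2 = 1.5 can be derived by hand.

```python
import numpy as np

def gyroscopic_hamiltonian(G, K, delta):
    """H(delta) = [[-delta G, K + delta^2 G^2], [I, -delta G]]."""
    n = G.shape[0]
    return np.block([[-delta * G, K + delta**2 * (G @ G)],
                     [np.eye(n), -delta * G]])

def all_purely_imaginary(H, tol=1e-6):
    """Numerical test: every eigenvalue has real part below tol in modulus."""
    return bool(np.max(np.abs(np.linalg.eigvals(H).real)) < tol)

def smallest_stabilizing_delta(G, K, lo=0.0, hi=5.0, iters=60):
    """Bisection; assumes the test fails at lo, holds at hi, and switches once."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if all_purely_imaginary(gyroscopic_hamiltonian(G, K, mid)):
            hi = mid
        else:
            lo = mid
    return hi

def qep_residual(G, K, delta):
    """Largest sigma_min(lam^2 I + 2 delta lam G - K) over eigenvalues lam of H(delta)."""
    n = G.shape[0]
    lams = np.linalg.eigvals(gyroscopic_hamiltonian(G, K, delta))
    return max(np.linalg.svd(lam**2 * np.eye(n) + 2 * delta * lam * G - K,
                             compute_uv=False)[-1] for lam in lams)

G = np.array([[0.0, 1.0], [-1.0, 0.0]])   # nonsingular, skew-symmetric
K = np.diag([1.0, 4.0])                   # symmetric positive definite
delta_star = smallest_stabilizing_delta(G, K)
residual = qep_residual(G, K, 2.0)        # eigenvalues of H(2) solve the quadratic problem
print(delta_star, residual)
```

The residual check confirms, for one value of δ, that the eigenvalues of \(\mathcal{H}(\delta )\) coincide with those of the quadratic eigenvalue problem. Near the threshold the matrix has a nearly defective eigenvalue, so the tolerance in `all_purely_imaginary` should not be chosen too small.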

Many linear quadratic optimal control problems require the computation of the invariant subspace of a Hamiltonian matrix \(H \in \mathbb{F}^{2n,2n}\) associated with eigenvalues in the left half plane. Structure preserving algorithms are required for the efficient computation of such subspaces and the aim of such algorithms is to transform H via eigenvalue and structure preserving transformations to the Hamiltonian Schur form \(\varSigma:= \left [\begin{array}{cc} T & R\\ 0 & - T^{{\ast}} \end{array} \right ]\) where \(R \in \mathbb{F}^{n,n}\) is Hermitian and \(T \in \mathbb{F}^{n,n}\) is upper triangular if \(\mathbb{F} = \mathbb{C}\) and quasi-upper triangular if \(\mathbb{F} = \mathbb{R}\). It is well known that there exists a unitary symplectic matrix U (which is orthogonal if \(\mathbb{F} = \mathbb{R}\)) such that U ∗ HU = Σ if H has no purely imaginary eigenvalue [50], although Mehrmann, along with co-authors Lin and Xu, has shown in [40] that Hamiltonian matrices with purely imaginary eigenvalues also have a Hamiltonian Schur form under special circumstances.

Due to these motivations, a significant part of Volker Mehrmann’s research in structured perturbation theory focuses on Hamiltonian matrices and especially on the effect of Hamiltonian perturbations on purely imaginary eigenvalues. One of his early contributions in this area is [34] co-authored with Konstantinov and Petkov, in which the importance of the Hamiltonian Schur form motivates the authors to perform a perturbation analysis of the form under Hamiltonian perturbations. In this work, the authors also introduce a Hamiltonian block Schur form which is seen to be relatively less sensitive to perturbations.

Volker Mehrmann’s first significant contribution to the perturbation analysis of purely imaginary eigenvalues of Hamiltonian matrices is a joint work [48] with Hongguo Xu. Here Xu and Mehrmann describe the perturbation theory for purely imaginary eigenvalues of Hamiltonian matrices with respect to Hamiltonian and non-Hamiltonian perturbations. It was observed that when a Hamiltonian matrix \(\mathcal{H}\) is perturbed by a small Hamiltonian matrix, whether a purely imaginary eigenvalue of \(\mathcal{H}\) will stay on the imaginary axis or move away from it is determined by the inertia of a Hermitian matrix associated with that eigenvalue.

More precisely, let \(i\alpha,\alpha \in \mathbb{R}\), be a purely imaginary eigenvalue of \(\mathcal{H}\) and let \(X \in \mathbb{C}^{2n,p}\) be a full column rank matrix. Suppose that the columns of X span the right invariant subspace \(\ker (\mathcal{H}- i\alpha I)^{2n}\) associated with i α so that

$$\displaystyle{\mathcal{H}X = X\mathcal{R}\quad \mbox{ and }\quad \varLambda (\mathcal{R}) =\{ i\alpha \}\qquad (8.10)}$$

for some square matrix \(\mathcal{R}\). Here and in the sequel Λ(M) denotes the spectrum of the matrix M. Since \(\mathcal{H}\) is Hamiltonian, i.e. \(J\mathcal{H}\) is Hermitian, and J ∗ = −J, relation (8.10) implies that

$$\displaystyle{X^{{\ast}}J\mathcal{H} = -\mathcal{R}^{{\ast}}X^{{\ast}}J.\qquad (8.11)}$$

Since \(\varLambda (-\mathcal{R}^{{\ast}}) =\{ i\alpha \}\), it follows that the columns of the full column rank matrix J ∗ X span the left invariant subspace associated with i α. Hence, (J ∗ X)∗ X = X ∗ JX is nonsingular and the matrix

$$\displaystyle{Z_{\alpha } = iX^{{\ast}}JX\qquad (8.12)}$$

is Hermitian and nonsingular. The inertia of Z α plays the central role in the main structured perturbation theory result of [48] for the spectral norm \(\|\cdot \|_{2}\).

Theorem 2 ([48])

Consider a Hamiltonian matrix \(\mathcal{H}\in \mathbb{F}^{2n,2n}\) with a purely imaginary eigenvalue iα of algebraic multiplicity p. Suppose that \(X \in \mathbb{F}^{2n,p}\) satisfies Rank X = p and (8.10) , and that Z α as defined in (8.12) is congruent to \(\left [\begin{array}{cc} I_{\pi }& 0\\ 0 & -I_{\mu } \end{array} \right ]\) (with \(\pi +\mu = p\) ).

If \(\mathcal{E}\) is Hamiltonian and \(\|\mathcal{E}\|_{2}\) is sufficiently small, then \(\mathcal{H} + \mathcal{E}\) has p eigenvalues λ 1 ,…,λ p (counting multiplicity) in the neighborhood of iα, among which at least |π −μ| eigenvalues are purely imaginary. In particular, we have the following cases.

1. If Z α is definite, i.e. either π = 0 or μ = 0, then all λ 1 ,…,λ p are purely imaginary with equal algebraic and geometric multiplicity, and satisfy

$$\displaystyle{\lambda _{j} = i(\alpha +\delta _{j}) + O(\|\mathcal{E}\|_{2}^{2}),}$$

where δ 1 ,…,δ p are the real eigenvalues of the pencil \(\lambda Z_{\alpha } - X^{{\ast}}(J\mathcal{E})X\) .

2. If there exists a Jordan block associated with iα of size larger than 2, then generically for a given \(\mathcal{E}\) some eigenvalues among λ 1 ,…,λ p will no longer be purely imaginary. If there exists a Jordan block associated with iα of size 2, then for any ε > 0, there always exists a Hamiltonian perturbation matrix \(\mathcal{E}\) with \(\|\mathcal{E}\|_{2} =\epsilon\) such that some eigenvalues among λ 1 ,…,λ p will have nonzero real part.

3. If iα has equal algebraic and geometric multiplicity and Z α is indefinite, then for any ε > 0, there always exists a Hamiltonian perturbation matrix \(\mathcal{E}\) with \(\|\mathcal{E}\|_{2} =\epsilon\) such that some eigenvalues among λ 1 ,…,λ p have nonzero real part.

The above theorem has implications for the problem of passivation of dynamical systems as mentioned before, where the goal is to find a smallest Hamiltonian perturbation to a certain Hamiltonian matrix associated with the system such that the perturbed matrix has no purely imaginary eigenvalues. Indeed, Theorem 2 states that a purely imaginary eigenvalue i α can be (partly) removed from the imaginary axis by an arbitrarily small Hamiltonian perturbation if and only if the associated matrix Z α in (8.12) is indefinite. The latter implies that i α has algebraic multiplicity at least 2. This results in the following Theorem in [48].

Theorem 3 ([48])

Suppose that \(\mathcal{H}\in \mathbb{C}^{2n,2n}\) is Hamiltonian and all its eigenvalues are purely imaginary. Let \(\mathbb{H}_{2n}\) be the set of all 2n × 2n Hamiltonian matrices and let \(\mathbb{S}\) be the set of Hamiltonian matrices defined by

Let

If every eigenvalue of \(\mathcal{H}\) has equal algebraic and geometric multiplicity and the corresponding matrix Z α as in (8.12) is definite, then for any Hamiltonian matrix \(\mathcal{E}\) with \(\|\mathcal{E}\|_{2} \leq \epsilon _{0}\) , \(\mathcal{H} + \mathcal{E}\) has only purely imaginary eigenvalues. For any ε > ε 0 , there always exists a Hamiltonian matrix \(\mathcal{E}\) with \(\|\mathcal{E}\|_{2} =\epsilon\) such that \(\mathcal{H} + \mathcal{E}\) has an eigenvalue with non zero real part.
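The dichotomy between a definite and an indefinite matrix Z α can be observed on small examples. The sketch below, assuming NumPy, takes Z α = iX ∗ JX with X an orthonormal basis of \(\ker (\mathcal{H}- i\alpha I)\), which is adequate when i α is semisimple, and uses two hypothetical 4 × 4 Hamiltonian matrices of the form [0 D; −D 0] with a double eigenvalue i. Hamiltonian perturbations are generated as \(\mathcal{E} = J^{T}\varDelta M\) with Δ M Hermitian, so that \(\|\mathcal{E}\|_{2} =\|\varDelta M\|_{2}\). The particular coupling used in the indefinite case is chosen by hand and is not claimed to be the construction of [48].

```python
import numpy as np

def J_matrix(n):
    I, Z = np.eye(n), np.zeros((n, n))
    return np.block([[Z, I], [-I, Z]])

def sign_matrix(H, alpha, tol=1e-8):
    """Z_alpha = i X^* J X, X an orthonormal basis of ker(H - i alpha I).

    Only valid when i*alpha is a semisimple eigenvalue of H (assumption).
    """
    m = H.shape[0]
    _, s, Vh = np.linalg.svd(H - 1j * alpha * np.eye(m))
    X = Vh.conj().T[:, s < tol]            # right singular vectors for zero singular values
    return 1j * X.conj().T @ J_matrix(m // 2) @ X

def inertia(Z, tol=1e-10):
    """(number of positive, number of negative) eigenvalues of a Hermitian matrix."""
    w = np.linalg.eigvalsh(Z)
    return int(np.sum(w > tol)), int(np.sum(w < -tol))

def block_hamiltonian(D):
    """Hamiltonian matrix [[0, D], [-D, 0]] for real symmetric D."""
    Z = np.zeros_like(D)
    return np.block([[Z, D], [-D, Z]])

J = J_matrix(2)
eps = 1e-3
rng = np.random.default_rng(3)

H_def = block_hamiltonian(np.eye(2))              # eigenvalue i, both blocks of equal sign
H_mix = block_hamiltonian(np.diag([1.0, -1.0]))   # eigenvalue i, blocks of opposite sign
inertia_definite = inertia(sign_matrix(H_def, 1.0))
inertia_mixed = inertia(sign_matrix(H_mix, 1.0))

# Definite case: a random Hamiltonian perturbation of norm eps
dM = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
dM = (dM + dM.conj().T) / 2
dM *= eps / np.linalg.norm(dM, 2)
max_re_definite = np.max(np.abs(np.linalg.eigvals(H_def + J.T @ dM).real))

# Indefinite case: a hand-picked Hamiltonian perturbation of norm eps coupling the two blocks
dM_mix = np.zeros((4, 4))
dM_mix[0, 1] = dM_mix[1, 0] = eps
max_re_mixed = np.max(np.abs(np.linalg.eigvals(H_mix + J.T @ dM_mix).real))

print(inertia_definite, max_re_definite)   # eigenvalues remain on the imaginary axis
print(inertia_mixed, max_re_mixed)         # real parts of size about eps / 2 appear
```

In the indefinite case the squared eigenvalues of the perturbed matrix are −1 ± iε, so the eigenvalues leave the imaginary axis at first order in ε, whereas in the definite case they stay on it up to rounding errors.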

Mehrmann and Xu also consider skew-Hamiltonian matrices in [48]. These are 2n × 2n matrices \(\mathcal{K}\) such that \(J\mathcal{K}\) is skew-Hermitian. Clearly, if \(\mathcal{K}\) is skew-Hamiltonian, then \(i\mathcal{K}\) is Hamiltonian so that the critical eigenvalues of \(\mathcal{K}\) are its real eigenvalues. Therefore the effect of skew-Hamiltonian perturbations on the real eigenvalues of a complex skew-Hamiltonian matrix is expected to be the same as the effect of Hamiltonian perturbations on the purely imaginary eigenvalues of a complex Hamiltonian matrix. However, the situation is different if the skew-Hamiltonian matrix \(\mathcal{K}\) is real. In such cases, the canonical form of \(\mathcal{K}\) is given by the following result.

Theorem 4 ([23, 62])

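For any skew-Hamiltonian matrix \(\mathcal{K}\in \mathbb{R}^{2n,2n}\) , there exists a real symplectic matrix \(\mathcal{S}\) such that

$$\displaystyle{\mathcal{S}^{-1}\mathcal{K}\mathcal{S} = \left [\begin{array}{cc} K & 0 \\ 0 &K^{T} \end{array} \right ],}$$

where \(K \in \mathbb{R}^{n,n}\) is in real Jordan canonical form.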

The above result shows that each Jordan block associated with a real eigenvalue of \(\mathcal{K}\) occurs twice and consequently every real eigenvalue is of even algebraic multiplicity. The following result in [48] summarizes the effect of structure preserving perturbations on the real eigenvalues of a real skew-Hamiltonian matrix.

Theorem 5 ([48])

Consider the skew-Hamiltonian matrix \(\mathcal{K}\in \mathbb{R}^{2n,2n}\) with a real eigenvalue α of algebraic multiplicity 2p.

1. If p = 1, then for any skew-Hamiltonian matrix \(\mathcal{E}\in \mathbb{R}^{2n,2n}\) with sufficiently small \(\|\mathcal{E}\|_{2}\) , \(\mathcal{K} + \mathcal{E}\) has a real eigenvalue λ close to α with algebraic and geometric multiplicity 2, which has the form

$$\displaystyle{\lambda =\alpha +\eta + O(\|\mathcal{E}\|_{2}^{2}),}$$

where η is the real double eigenvalue of the 2 × 2 matrix pencil \(\lambda X^{T}\mathit{JX} - X^{T}(J\mathcal{E})X\) , and X is a full column rank matrix so that the columns of X span the right eigenspace \(\ker (\mathcal{K}-\alpha I)\) associated with α.

2. If α is associated with a Jordan block of size larger than 2, then generically for a given \(\mathcal{E}\) some eigenvalues of \(\mathcal{K} + \mathcal{E}\) will no longer be real. If there exists a Jordan block of size 2 associated with α, then for every ε > 0, there always exists \(\mathcal{E}\) with \(\|\mathcal{E}\|_{2} =\epsilon\) such that some eigenvalues of \(\mathcal{K} + \mathcal{E}\) are not real.

3. If the algebraic and geometric multiplicities of α are equal and are greater than 2, then for any ε > 0, there always exists \(\mathcal{E}\) with \(\|\mathcal{E}\|_{2} =\epsilon\) such that some eigenvalues of \(\mathcal{K} + \mathcal{E}\) are not real.
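The first case of the theorem can be illustrated numerically. The sketch below, assuming NumPy, uses the fact that a real matrix of the block form [A G; Q A T ] with G and Q skew-symmetric is skew-Hamiltonian. The unperturbed matrix (Q = 0, A = diag(1, 3)) and the random perturbation are hypothetical test data. Both real eigenvalues have algebraic multiplicity 2, so p = 1, and they are expected to remain real and double under a small real skew-Hamiltonian perturbation.

```python
import numpy as np

def J_matrix(n):
    I, Z = np.eye(n), np.zeros((n, n))
    return np.block([[Z, I], [-I, Z]])

def random_skew(n, rng):
    """Random real skew-symmetric n x n matrix."""
    W = rng.standard_normal((n, n))
    return W - W.T

def skew_hamiltonian(A, G, Q):
    """Real skew-Hamiltonian matrix [[A, G], [Q, A^T]] with G, Q skew-symmetric."""
    return np.block([[A, G], [Q, A.T]])

rng = np.random.default_rng(2)
n, eps = 2, 1e-3
J = J_matrix(n)

# Unperturbed matrix: block triangular, so its eigenvalues are 1, 1, 3, 3
K = skew_hamiltonian(np.diag([1.0, 3.0]), random_skew(n, rng), np.zeros((n, n)))

# Random real skew-Hamiltonian perturbation of spectral norm eps
E = skew_hamiltonian(rng.standard_normal((n, n)), random_skew(n, rng), random_skew(n, rng))
E *= eps / np.linalg.norm(E, 2)

P = K + E
structure_ok = np.allclose((J @ P).T, -(J @ P))   # J(K + E) is skew-symmetric
eigs = np.sort_complex(np.linalg.eigvals(P))      # sorted by real part: two near 1, two near 3
max_imag = np.max(np.abs(eigs.imag))
pair_gap = max(abs(eigs[0] - eigs[1]), abs(eigs[2] - eigs[3]))
print(structure_ok, eigs, max_imag, pair_gap)
```

A generic unstructured perturbation of the same size would instead be expected to split each double eigenvalue by an amount of order ε.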

Mehrmann and Xu use Theorems 2 and 4 in [48] to analyse the properties of the symplectic URV algorithm that computes the eigenvalues of a Hamiltonian matrix in a structure preserving way. One of the main conclusions of this analysis is that if a Hamiltonian matrix \(\mathcal{H}\) has a simple non-zero purely imaginary eigenvalue say i α, then the URV algorithm computes a purely imaginary eigenvalue say, \(i\hat{\alpha }\) close to i α. In such a case, they also find a relationship between i α and \(i\hat{\alpha }\) that holds asymptotically. However, they show that if i α is multiple or zero, then the computed eigenvalue obtained from the URV algorithm may not be purely imaginary.

The structured perturbation analysis of purely imaginary eigenvalues of Hamiltonian matrices in [48] was further extended by Volker Mehrmann along with co-authors Alam, Bora, Karow and Moro in [5]. This analysis was used to find explicit Hamiltonian perturbations to Hamiltonian matrices that move eigenvalues away from the imaginary axis. In the same work, the authors also provide a numerical algorithm for finding an upper bound to the minimal Hamiltonian perturbation that moves all eigenvalues of a Hamiltonian matrix outside a vertical strip containing the imaginary axis. Alternative algorithms for this task have been given in [17, 27] and [28]. These works were motivated by the problem of passivation of dynamical systems mentioned previously.

One of the main aims of the analysis in [5] is to identify situations under which arbitrarily small Hamiltonian perturbations to a Hamiltonian matrix move its purely imaginary eigenvalues away from the imaginary axis with further restriction that the perturbations are real if the original matrix is real. Motivated by the importance of the Hermitian matrix Z α introduced via (8.12) in [48], the authors make certain definitions in [5] to set the background for the analysis. Accordingly, any two vectors x and y from \(\mathbb{F}^{2n}\) are said to be J-orthogonal if x ∗ Jy = 0. Subspaces \(\mathcal{X},\ \mathcal{Y}\subseteq \mathbb{F}^{2n}\) are said to be J-orthogonal if x ∗ Jy = 0 for all \(x \in \mathcal{X},\ y \in \mathcal{Y}\). A subspace \(\mathcal{X} \subseteq \mathbb{F}^{2n}\) is said to be J-neutral if x ∗ Jx = 0 for all \(x \in \mathcal{X}\). The subspace \(\mathcal{X}\) is said to be J-nondegenerate if for any \(x \in \mathcal{X}\setminus \{0\}\) there exists \(y \in \mathcal{X}\) such that x ∗ Jy ≠ 0. The subspaces of \(\mathbb{F}^{2n}\) invariant with respect to \(\mathcal{H}\) were then investigated with respect to these properties and the following was one of the major results in this respect.

Theorem 6

Let \(\mathcal{H}\in \mathbb{F}^{2n,2n}\) be Hamiltonian. Let \(i\alpha _{1},\ldots,i\alpha _{p} \in i\mathbb{R}\) be the purely imaginary eigenvalues of \(\mathcal{H}\) and let \(\lambda _{1},\ldots,\lambda _{q} \in \mathbb{C}\) be the eigenvalues of \(\mathcal{H}\) with negative real part. Then the \(\mathcal{H}\) -invariant subspaces \(\mathrm{ker}(\mathcal{H}- i\alpha _{k}\,I)^{2n}\) and \(\mathrm{ker}(\mathcal{H}-\lambda _{j}\,I)^{2n} \oplus \mathrm{ker}(\mathcal{H} + \overline{\lambda }_{j}\,I)^{2n}\) are pairwise J-orthogonal. All these subspaces are J-nondegenerate. The subspaces

are J-neutral.

An important result of [5] is the following.

Theorem 7

Suppose that \(\mathcal{H}\in \mathbb{F}^{2n,2n}\) is Hamiltonian and \(\lambda \in \mathbb{C}\) is an eigenvalue of \(\mathcal{H}\) such that \(\ker (\mathcal{H}-\lambda I)^{2n}\) contains a J-neutral invariant subspace of dimension d. Let δ 1 ,…,δ d be arbitrary complex numbers such that \(\max _{k}\vert \delta _{k}\vert <\epsilon\). Then there exists a Hamiltonian perturbation \(\mathcal{E}\) such that \(\|\mathcal{E}\|_{2} = O(\epsilon )\) and \(\mathcal{H} + \mathcal{E}\) has eigenvalues \(\lambda +\delta _{k},k = 1,\ldots,d\) . The matrix \(\mathcal{E}\) can be chosen to be real if \(\mathcal{H}\) is real.

This together with Theorem 6 implies that eigenvalues of \(\mathcal{H}\) with nonzero real parts can be moved in any direction in the complex plane by an arbitrarily small Hamiltonian perturbation. However, such a result does not hold for the purely imaginary eigenvalues, as the following argument shows. Suppose that a purely imaginary eigenvalue \(i\alpha\) is perturbed to an eigenvalue \(i\alpha +\delta\) with \(\mathrm{Re}\,\delta \neq 0\) by a Hamiltonian perturbation \(\mathcal{E}_{\delta }\). Then the associated eigenvector \(v_{\delta }\) of the Hamiltonian matrix \(\mathcal{H} + \mathcal{E}_{\delta }\) is J-neutral by Theorem 6. By continuity it follows that \(i\alpha\) must have an associated J-neutral eigenvector \(v \in \ker (\mathcal{H}- i\alpha \,I)\). This in turn implies that the associated matrix \(Z_{\alpha }\) is indefinite and so, in particular, the eigenvalue \(i\alpha\) must have multiplicity at least 2. In view of this, the following definition was introduced in [5].

Definition 1

Let \(\mathcal{H}\in \mathbb{F}^{2n,2n}\) be Hamiltonian and \(i\alpha\), \(\alpha \in \mathbb{R}\) (with the additional assumption that \(\alpha \neq 0\) if \(\mathcal{H}\) is real) be an eigenvalue of \(\mathcal{H}\). Then \(i\alpha\) is of positive, negative or mixed sign characteristic if the matrix \(Z_{\alpha }\) given by (8.12) is positive definite, negative definite or indefinite, respectively.

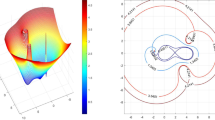

This definition clearly implies that only purely imaginary eigenvalues of \(\mathcal{H}\) that are of mixed sign characteristic possess J-neutral eigenvectors. So, the key to moving purely imaginary eigenvalues of \(\mathcal{H}\) away from the imaginary axis is to first generate an eigenvalue of mixed sign characteristic via a Hamiltonian perturbation. It was established in [5] that this can be achieved only by merging two imaginary eigenvalues of opposite sign characteristic. The analysis involved in this process uses the concepts of Hamiltonian backward error and Hamiltonian pseudospectra.

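The Hamiltonian backward error associated with a complex number \(\lambda \in \mathbb{C}\) is defined by

$$\displaystyle{\eta ^{\mathrm{Ham}}(\lambda,\mathcal{H}):=\inf \{\|\mathcal{E}\|:\ \mathcal{E}\in \mathbb{F}^{2n,2n}\ \mbox{ Hamiltonian},\ \lambda \in \varLambda (\mathcal{H} + \mathcal{E})\}.\qquad (8.14)}$$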

Note that in general \(\eta ^{\mathrm{Ham}}(\lambda,\mathcal{H})\) is different for \(\mathbb{F} = \mathbb{C}\) and for \(\mathbb{F} = \mathbb{R}\). We use the notation \(\eta _{F}^{\mathrm{Ham}}(\lambda,\mathcal{H})\) and \(\eta _{2}^{\mathrm{Ham}}(\lambda,\mathcal{H})\), when the norm in (8.14) is the Frobenius norm and the spectral norm, respectively. The complex Hamiltonian backward error for nonimaginary λ is discussed in the theorem below, see [5, 32].

Theorem 8

Let \(\mathcal{H}\in \mathbb{C}^{2n,2n}\) be a Hamiltonian matrix, and let \(\lambda \in \mathbb{C}\) be such that Re λ ≠ 0. Then we have

In particular, we have \(\eta _{2}^{\mathrm{Ham}}(\lambda,\mathcal{H}) \leq \eta _{F}^{\mathrm{Ham}}(\lambda,\mathcal{H}) \leq \sqrt{2}\,\eta _{2}^{\mathrm{Ham}}(\lambda,\mathcal{H})\) .

Suppose that the minima in (8.15), and (8.16) are attained for \(u \in \mathbb{C}^{2n}\) and \(v \in \mathbb{C}^{2n}\) , respectively. Let

and

where \(w:= J(\mathcal{H}-\lambda \, I)v/\|(\mathcal{H}-\lambda \, I)v\|_{2}\) . Then

A minimizer v of the right hand side of (8.16) can be found via the following method. For \(t \in \mathbb{R}\) let \(F(t) = (\mathcal{H}-\lambda \, I)^{{\ast}}(\mathcal{H}-\lambda \, I) + i\,t\,J\) . Let \(t_{0} = \mathrm{arg}\,\max _{t\in \mathbb{R}}\lambda _{\min }(F(t))\) . Then there exists a normalized eigenvector v to the minimum eigenvalue of F(t 0 ) such that v ∗ Jv = 0. Thus,

The proposition below from [5] deals with the Hamiltonian backward error for the case that λ = i ω is purely imaginary. In this case an optimal perturbation can also be constructed as a real matrix if \(\mathcal{H}\) is real. In the sequel M + denotes the Moore-Penrose generalized inverse of M.

Proposition 1

Let \(\mathcal{H}\in \mathbb{F}^{2n,2n}\) be Hamiltonian and \(\omega \in \mathbb{R}\) . Let v be a normalized eigenvector of the Hermitian matrix \(J(\mathcal{H}- i\omega I)\) corresponding to an eigenvalue \(\lambda \in \mathbb{R}\) . Then |λ| is a singular value of the Hamiltonian matrix \(\mathcal{H}- i\omega I\) and v is an associated right singular vector.

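Further, the matrices

$$\displaystyle{\mathcal{E}:=\lambda Jvv^{{\ast}},\qquad \mathcal{K}:=\lambda J[v\;\overline{v}][v\;\overline{v}]^{+}}$$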

are Hamiltonian, \(\mathcal{K}\) is real and we have \((\mathcal{H} + \mathcal{E})v = (\mathcal{H} + \mathcal{K})v = i\omega v\) . Furthermore, \(\|\mathcal{E}\|_{F} =\| \mathcal{E}\|_{2}\; =\;\| \mathcal{K}\|_{2}\; =\; \vert \lambda \vert \mbox{ and }\|\mathcal{K}\|_{F} \leq \sqrt{2}\,\vert \lambda \vert.\)

Moreover, suppose that λ is an eigenvalue of \(J(\mathcal{H}- i\omega I)\) of smallest absolute value and let \(\sigma _{\min }(\mathcal{H}- i\omega I)\) be the smallest singular value of \(\mathcal{H}- i\omega I\) . Then \(\vert \lambda \vert =\sigma _{\min }(\mathcal{H}- i\omega I)\) and we have

The above result shows that the real and complex Hamiltonian backward errors for purely imaginary numbers \(i\omega,\,\omega \in \mathbb{R}\) can be easily computed and in fact they are equal with respect to the 2-norm. Moreover, they depend on the eigenvalue of smallest magnitude of the Hermitian matrix \(J(\mathcal{H}- i\omega I)\) and the corresponding eigenvector v is such that v and Jv are respectively the right and left eigenvectors corresponding to i ω as an eigenvalue of the minimally perturbed Hamiltonian matrix \(\mathcal{H} +\varDelta \mathcal{H}\) where \(\varDelta \mathcal{H} = \mathcal{E}\) if \(\mathcal{H}\) is complex and \(\varDelta \mathcal{H} = \mathcal{K}\) when \(\mathcal{H}\) is real. The authors also introduce the eigenvalue curves \(\lambda _{\min }(\omega ),\omega \in \mathbb{R}\) in [5] satisfying

and show that the pair (λ min(ω), v(ω)) where \(J(\mathcal{H}- i\omega I)v(\omega ) =\lambda _{\min }(\omega )v(\omega )\) is a piecewise analytic function of ω. Moreover,

for all but finitely many points \(\omega _{1},\omega _{2},\ldots,\omega _{p} \in \mathbb{R}\) at which there is loss of analyticity. These facts are used to show that i ω is an eigenvalue of \(\mathcal{H} +\varDelta \mathcal{H}\) with a J-neutral eigenvector if and only if it is a local extremum of λ min(ω). Hamiltonian ε-pseudospectra of \(\mathcal{H}\) are then introduced into the analysis in [5] to show that the local extrema of λ min(ω) are the points of coalescence of certain components of the Hamiltonian pseudospectra.
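The statement of Proposition 1 that the eigenvalue of smallest magnitude of \(J(\mathcal{H}- i\omega I)\) equals, in absolute value, the smallest singular value of \(\mathcal{H}- i\omega I\) can be checked numerically. The following is a minimal sketch in Python with NumPy, not the procedure of [5]; the 2 × 2 complex Hamiltonian test matrix, the value of ω and the helper name `lambda_min_pair` are illustrative assumptions.

```python
import numpy as np

def lambda_min_pair(H, J, omega):
    """Eigenvalue of smallest magnitude of J(H - i*omega*I) and a unit eigenvector."""
    n = H.shape[0]
    M = J @ (H - 1j * omega * np.eye(n))   # Hermitian whenever H is Hamiltonian
    w, V = np.linalg.eigh(M)               # real eigenvalues, orthonormal eigenvectors
    k = int(np.argmin(np.abs(w)))
    return w[k], V[:, k]

# Hypothetical test data: J @ H is Hermitian, so H is Hamiltonian (eigenvalues i and 3i).
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
H = 2j * np.eye(2) + J
omega = 1.4

lam, v = lambda_min_pair(H, J, omega)
shifted = H - 1j * omega * np.eye(2)
sigma_min = np.linalg.svd(shifted, compute_uv=False)[-1]
residual = np.linalg.norm(shifted @ v)     # equals |lam| if v is a right singular vector

print(lam, sigma_min, residual)
```

Sampling `lambda_min_pair` over a grid of ω values gives a discrete picture of the eigenvalue curve \(\lambda _{\min }(\omega )\) discussed above.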

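Given any \(A \in \mathbb{C}^{n,n}\) and ε ≥ 0, the ε-pseudospectrum of A is defined as

$$\displaystyle{\varLambda _{\epsilon }(A; \mathbb{F}):=\bigcup _{E\in \mathbb{F}^{n,n},\ \|E\|_{2}\leq \epsilon }\varLambda (A + E).}$$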

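It is well-known [64] that in the complex case when \(\mathbb{F} = \mathbb{C}\), we have

$$\displaystyle{\varLambda _{\epsilon }(A; \mathbb{C}) =\{ z \in \mathbb{C}:\ \sigma _{\min }(A - zI) \leq \epsilon \},}$$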

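where \(\sigma _{\min }(\cdot )\) denotes the minimum singular value. The Hamiltonian ε-pseudospectrum is defined by

$$\displaystyle{\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H}; \mathbb{F}):=\bigcup \{\varLambda (\mathcal{H} + \mathcal{E}):\ \mathcal{E}\in \mathbb{F}^{2n,2n}\ \mbox{ Hamiltonian},\ \|\mathcal{E}\|_{2} \leq \epsilon \}.}$$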

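It is obvious that

$$\displaystyle{\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H}; \mathbb{F}) =\{ z \in \mathbb{C}:\ \eta _{2}^{\mathrm{Ham}}(z,\mathcal{H}) \leq \epsilon \},}$$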

where \(\eta _{2}^{\mathrm{Ham}}(z,\mathcal{H})\) is the Hamiltonian backward error as defined in (8.14) with respect to the spectral norm. The Hamiltonian pseudospectrum is in general different for \(\mathbb{F} = \mathbb{C}\) and for \(\mathbb{F} = \mathbb{R}\). However, the real and complex Hamiltonian pseudospectra coincide on the imaginary axis due to Proposition 1.

Corollary 1

Let \(\mathcal{H}\in \mathbb{C}^{2n,2n}\) be Hamiltonian. Consider the pseudospectra \(\varLambda _{\epsilon }(\mathcal{H}; \mathbb{F})\) and \(\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H}; \mathbb{F})\) . Then,

Analogous results for other perturbation structures have been obtained in [55].
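Since, by Proposition 1, the Hamiltonian backward error of a purely imaginary number \(i\omega\) in the spectral norm equals \(\sigma _{\min }(\mathcal{H}- i\omega I)\), the part of the Hamiltonian ε-pseudospectrum on the imaginary axis can be sampled with singular value computations alone. The sketch below is only an illustration under that observation; the test matrix, the grid and the function name `axis_intervals` are assumptions and not taken from [5].

```python
import numpy as np

def axis_intervals(H, eps, omegas):
    """Sampled intervals of omega with sigma_min(H - i*omega*I) <= eps."""
    n = H.shape[0]
    intervals, start, prev = [], None, None
    for w in omegas:
        smin = np.linalg.svd(H - 1j * w * np.eye(n), compute_uv=False)[-1]
        if smin <= eps:
            if start is None:
                start = w                   # a new component begins here
        elif start is not None:
            intervals.append((float(start), float(prev)))
            start = None
        prev = w
    if start is not None:                   # close a component that reaches the grid end
        intervals.append((float(start), float(prev)))
    return intervals

# Hypothetical complex Hamiltonian matrix with eigenvalues i and 3i.
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
H = 2j * np.eye(2) + J
grid = np.linspace(0.0, 4.0, 401)

separate = axis_intervals(H, 0.5, grid)     # two components around omega = 1 and omega = 3
merged = axis_intervals(H, 1.05, grid)      # the components have coalesced
print(separate, merged)
```

For this example \(\sigma _{\min }(\mathcal{H}- i\omega I) =\min (\vert 1-\omega \vert,\vert 3-\omega \vert )\), so the two components on the axis meet at ω = 2 once ε reaches 1.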

Definition 1 introduced in [5] for purely imaginary eigenvalues of a Hamiltonian matrix is then extended to the components of the Hamiltonian pseudospectra \(\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H}, \mathbb{F})\) as follows.

Definition 2

Let \(\mathcal{H}\in \mathbb{F}^{2n,2n}\). A connected component \(\mathcal{C}_{\epsilon }(\mathcal{H})\) of \(\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H}, \mathbb{F})\) is said to have positive (resp., negative) sign characteristic if for all Hamiltonian perturbations \(\mathcal{E}\) with \(\|\mathcal{E}\|_{2} \leq \epsilon\) each eigenvalue of \(\mathcal{H} + \mathcal{E}\) that is contained in \(\mathcal{C}_{\epsilon }(\mathcal{H})\) has positive (resp., negative) sign characteristic.

In view of the above definition, if a component \(\mathcal{C}_{\epsilon }(\mathcal{H})\) of \(\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H}, \mathbb{F})\) has positive (resp., negative) sign characteristic, then it is necessarily a subset of the imaginary axis, \(\mathcal{C}_{\epsilon }(\mathcal{H}) \subset i\mathbb{R}\), and all eigenvalues of \(\mathcal{H}\) that are contained in \(\mathcal{C}_{\epsilon }(\mathcal{H})\) have positive (resp., negative) sign characteristic. In fact, an important result in [5] is that the sign characteristic of \(\mathcal{C}_{\epsilon }(\mathcal{H})\) is completely determined by the sign characteristic of the eigenvalues of \(\mathcal{H}\) that are contained in \(\mathcal{C}_{\epsilon }(\mathcal{H})\).

Theorem 9

Let \(\mathcal{H}\in \mathbb{F}^{2n,2n}\) and let \(\mathcal{C}_{\epsilon }(\mathcal{H})\) be a connected component of \(\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H}, \mathbb{F})\) . For a Hamiltonian matrix \(\mathcal{E}\in \mathbb{F}^{2n,2n}\) with \(\|\mathcal{E}\|_{2} \leq \epsilon\) , let \(X_{\mathcal{E}}\) be a full column rank matrix whose columns form a basis of the direct sum of the generalized eigenspaces \(\mathrm{ker}(\mathcal{H} + \mathcal{E}-\lambda I)^{2n}\) , \(\lambda \in \mathcal{C}_{\epsilon }(\mathcal{H}) \cap \varLambda (\mathcal{H} + \mathcal{E})\) . Set \(Z_{\mathcal{E}}:= -iX_{\mathcal{E}}^{{\ast}}\mathit{JX}_{\mathcal{E}}\) . Then the following conditions are equivalent.

(a) The component \(\mathcal{C}_{\epsilon }(\mathcal{H})\) has positive (resp., negative) sign characteristic.

(b) All eigenvalues of \(\mathcal{H}\) that are contained in \(\mathcal{C}_{\epsilon }(\mathcal{H})\) have positive (resp., negative) sign characteristic.

(c) The matrix \(Z_{0}\) associated with \(\mathcal{E} = 0\) is positive (resp., negative) definite.

(d) The matrix \(Z_{\mathcal{E}}\) is positive (resp., negative) definite for all Hamiltonian matrices \(\mathcal{E}\) with \(\|\mathcal{E}\|_{2} \leq \epsilon\).

Apart from characterising the sign characteristic of components of \(\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H})\) in terms of the sign characteristic of the eigenvalues of \(\mathcal{H}\) contained in them, Theorem 9 implies that the only way to produce minimal Hamiltonian perturbations to \(\mathcal{H}\) such that the perturbed matrices have purely imaginary eigenvalues with J-neutral eigenvectors is to allow components of \(\varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H})\) of different sign characteristic to coalesce. Also, in view of the analysis of the eigenvalue curves \(\omega \mapsto \lambda _{\min }(J(\mathcal{H}- i\omega I))\), it follows that the corresponding points of coalescence are precisely the local extrema of these curves.
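For a simple purely imaginary eigenvalue with eigenvector x, the matrix \(Z_{\mathcal{E}} = -iX_{\mathcal{E}}^{{\ast}}\mathit{JX}_{\mathcal{E}}\) of Theorem 9 (with \(\mathcal{E} = 0\)) reduces to the real scalar \(-ix^{{\ast}}Jx\), whose sign is the sign characteristic. The following sketch computes it with NumPy. It assumes that all purely imaginary eigenvalues are simple (a multiple eigenvalue would require the full matrix Z), and the test matrix and the name `sign_characteristics` are illustrative choices.

```python
import numpy as np

def sign_characteristics(H, J, tol=1e-10):
    """List (alpha, sign) for each simple purely imaginary eigenvalue i*alpha of H."""
    evals, evecs = np.linalg.eig(H)
    out = []
    for lam, x in zip(evals, evecs.T):
        if abs(lam.real) > tol:
            continue                            # not on the imaginary axis
        z = (-1j * (x.conj() @ J @ x)).real     # -i x^* J x is real since J is skew-Hermitian
        sign = int(np.sign(z)) if abs(z) > tol else 0   # 0 flags a J-neutral eigenvector
        out.append((float(lam.imag), sign))
    return sorted(out)

# Hypothetical complex Hamiltonian matrix: J @ H is Hermitian, eigenvalues i and 3i.
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
H = 2j * np.eye(2) + J

result = sign_characteristics(H, J)
print(result)
```

In this example the two eigenvalues have opposite sign characteristics, which is the configuration in which coalescence on the imaginary axis can produce an eigenvalue of mixed sign characteristic.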

If all the eigenvalues of a Hamiltonian matrix \(\mathcal{H}\) are purely imaginary and of either positive or negative sign characteristic, [5] provides a procedure for constructing a minimal 2-norm Hamiltonian perturbation \(\varDelta \mathcal{H}\) based on Theorem 9, that causes at least one component of the Hamiltonian ε-pseudospectrum of \(\mathcal{H}\) to be of mixed sign characteristic. This component is formed from the coalescence of components of positive and negative sign characteristic of the pseudospectrum and the perturbation \(\varDelta \mathcal{H}\) induces a point of coalescence of the components (which is purely imaginary) as an eigenvalue of \(\mathcal{H} +\varDelta \mathcal{H}\) with a J-neutral eigenvector. Therefore, any further arbitrarily small Hamiltonian perturbation \(\mathcal{E}\) results in a non-imaginary eigenvalue for the matrix \(\mathcal{H} +\varDelta \mathcal{H} + \mathcal{E}\). The details of this process are given below. The following theorem from [5] is a refinement of Theorem 3.

Theorem 10

Let \(\mathcal{H}\in \mathbb{F}^{2n,2n}\) be a Hamiltonian matrix whose eigenvalues are all purely imaginary, and let \(f(\omega ) =\sigma _{\min }(\mathcal{H}- i\omega I),\,\omega \in \mathbb{R}\) . Define

Furthermore, let ε 0 be defined as in (8.13). Then the following assertions hold.

(i) If at least one eigenvalue of \(\mathcal{H}\) has mixed sign characteristic then \(\epsilon _{0} = R_{\mathbb{F}}(\mathcal{H}) =\rho _{\mathbb{F}}(\mathcal{H}) = 0\).

(ii) Suppose that each eigenvalue of \(\mathcal{H}\) has either positive or negative sign characteristic. Let

$$\displaystyle{i\mathcal{I}_{1},\ldots,i\mathcal{I}_{q} \subset i\mathbb{R}}$$

denote the closed intervals on the imaginary axis whose end points are adjacent eigenvalues of \(\mathcal{H}\) with opposite sign characteristics. Then we have

$$\displaystyle{\epsilon _{0} = R_{\mathbb{F}}(\mathcal{H}) =\rho _{\mathbb{F}}(\mathcal{H}) =\min _{1\leq k\leq q}\max _{\omega \in \mathcal{I}_{k}}f(\omega ).}$$

(iii) Consider an interval \(\mathcal{I}\in \{\mathcal{I}_{1},\ldots,\mathcal{I}_{q}\}\) satisfying

$$\displaystyle{\min _{1\leq k\leq q}\max _{\omega \in \mathcal{I}_{k}}f(\omega ) =\max _{\omega \in \mathcal{I}}f(\omega ) = f(\omega _{0}),\quad \omega _{0} \in \mathcal{I}.}$$

Suppose that \(i\mathcal{I}\) is given by \(i\mathcal{I} = [i\alpha,\,i\beta ]\). Then the function f is strictly increasing in \([\alpha,\omega _{0}]\) and strictly decreasing in \([\omega _{0},\beta ]\). For \(\epsilon <\epsilon _{0}\), we have \(i\omega _{0}\notin \varLambda _{\epsilon }^{\mathrm{Ham}}(\mathcal{H}, \mathbb{F})\), \(\mathcal{C}_{\epsilon }(\mathcal{H},i\alpha ) \cap \mathcal{C}_{\epsilon }(\mathcal{H},i\beta ) = \varnothing \) and \(i\omega _{0} \in \mathcal{C}_{\epsilon _{0}}(\mathcal{H},i\alpha ) = \mathcal{C}_{\epsilon _{0}}(\mathcal{H},i\beta ) = \mathcal{C}_{\epsilon _{0}}(\mathcal{H},i\alpha ) \cup \mathcal{C}_{\epsilon _{0}}(\mathcal{H},i\beta )\), where \(\mathcal{C}_{\epsilon _{0}}(\mathcal{H},i\alpha )\) and \(\mathcal{C}_{\epsilon _{0}}(\mathcal{H},i\beta )\) are the components of \(\varLambda _{\epsilon _{0}}^{\mathrm{Ham}}(\mathcal{H}, \mathbb{F})\) containing \(i\alpha\) and \(i\beta\), respectively. Moreover, if \(i\alpha\) has positive sign characteristic and \(i\beta\) has negative sign characteristic, then the eigenvalue curves \(\lambda _{\min }(\omega )\) (satisfying (8.19)) are such that \(\lambda _{\min }(\omega ) = f(\omega )\) for all \(\omega \in [\alpha,\beta ]\). On the other hand, if \(i\alpha\) has negative sign characteristic and \(i\beta\) has positive sign characteristic then \(\lambda _{\min }(\omega ) = -f(\omega )\) for all \(\omega \in [\alpha,\beta ]\). In both cases there exists a J-neutral normalized eigenvector \(v_{0}\) of \(J(\mathcal{H}- i\omega _{0}I)\) corresponding to the eigenvalue \(\lambda _{\min }(\omega _{0})\).

(iv) For the J-neutral normalized eigenvector \(v_{0}\) mentioned in part (iii), consider the matrices

$$\displaystyle\begin{array}{rcl} \begin{array}{rcl} \mathcal{E}^{0} &:=&\lambda _{\min }(\omega _{0})Jv_{0}v_{0}^{{\ast}}, \\ \mathcal{K}^{0} &:=&\lambda _{\min }(\omega _{0})J[v_{0}\;\overline{v_{0}}][v_{0},\overline{v_{0}}]^{+}, \\ \mathcal{E}_{\mu }&:=&\mu v_{0}v_{0}^{{\ast}} +\bar{\mu } Jv_{0}v_{0}^{{\ast}}J, \\ \mathcal{K}_{\mu }&:=&[\mu v_{0},\overline{\mu v_{0}}][v_{0},\overline{v_{0}}]^{+} + J([v_{0},\overline{v_{0}}]^{+})^{{\ast}}[\mu v,\overline{\mu v_{0}}]^{{\ast}}J[\mu v_{0},\overline{\mu v_{0}}] \\ & & + J[v_{0},\overline{v_{0}}][v_{0},\overline{v_{0}}]^{+}J[v_{0},\overline{v_{0}}]^{+},\qquad \mu \in \mathbb{C}.\end{array} & & {}\\ \end{array}$$

Then \(\mathcal{E}^{0}\) is Hamiltonian, \(\mathcal{K}^{0}\) is real and Hamiltonian, \((\mathcal{H} + \mathcal{E}^{0})v_{0} = (\mathcal{H} + \mathcal{K}^{0})v_{0} = i\omega _{0}v_{0}\) and \(\|\mathcal{E}^{0}\|_{2} =\| \mathcal{K}^{0}\|_{2} = f(\omega _{0})\). For any \(\mu \in \mathbb{C}\) the matrix \(\mathcal{E}_{\mu }\) is Hamiltonian, and \((\mathcal{H} + \mathcal{E}^{0} + \mathcal{E}_{\mu })v_{0} = (i\omega _{0}+\mu )v_{0}\). If \(\omega _{0} = 0\) and \(\mathcal{H}\) is real then \(v_{0}\) can be chosen as a real vector. Then \(\mathcal{E}^{0} + \mathcal{E}_{\mu }\) is a real matrix for all \(\mu \in \mathbb{R}\). If \(\omega _{0}\neq 0\) and \(\mathcal{H}\) is real then for any \(\mu \in \mathbb{C}\), \(\mathcal{K}_{\mu }\) is a real Hamiltonian matrix satisfying \((\mathcal{H} + \mathcal{K}^{0} + \mathcal{K}_{\mu })v_{0} = (i\omega _{0}+\mu )v_{0}\).
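The complex perturbations \(\mathcal{E}^{0}\) and \(\mathcal{E}_{\mu }\) of part (iv) can be formed directly from their definitions. The sketch below does this for a small example and checks the stated properties numerically. The matrix, the point \(\omega _{0} = 2\), the value \(\lambda _{\min }(\omega _{0}) = -1\) and the J-neutral vector \(v_{0} = e_{1}\) were worked out by hand for this hypothetical example; the code does not search for \(\omega _{0}\) and is not the algorithm of [5].

```python
import numpy as np

def coalescing_perturbations(J, lam_min, v0, mu):
    """Form E^0 = lam_min * J v0 v0^* and E_mu = mu v0 v0^* + conj(mu) J v0 v0^* J."""
    v = v0.reshape(-1, 1)
    P = v @ v.conj().T                       # rank-one projector v0 v0^*
    E0 = lam_min * (J @ P)
    Emu = mu * P + np.conj(mu) * (J @ P @ J)
    return E0, Emu

def is_hamiltonian(E, J, tol=1e-12):
    """E is Hamiltonian iff J E is Hermitian."""
    JE = J @ E
    return np.allclose(JE, JE.conj().T, atol=tol)

# Hypothetical example: eigenvalues i (negative) and 3i (positive sign characteristic).
J = np.array([[0.0, 1.0], [-1.0, 0.0]])
H = 2j * np.eye(2) + J
omega0, lam_min = 2.0, -1.0                  # f(omega0) = sigma_min(H - 2i I) = 1
v0 = np.array([1.0, 0.0], dtype=complex)     # J-neutral: v0^* J v0 = 0
mu = 0.1                                     # size of the final small shift

E0, Emu = coalescing_perturbations(J, lam_min, v0, mu)
eigs = np.linalg.eigvals(H + E0 + Emu)       # eigenvalues 2i + 0.1 and 2i - 0.1
print(np.linalg.norm(E0, 2), eigs)
```

Here \(\mathcal{H} + \mathcal{E}^{0}\) has the double eigenvalue 2i with a J-neutral eigenvector, and the additional perturbation \(\mathcal{E}_{\mu }\) of norm \(\vert \mu \vert\) moves the pair off the imaginary axis.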

Theorem 10 is the basis for the construction of an algorithm in [5] that produces a Hamiltonian perturbation to any Hamiltonian matrix \(\mathcal{H}\) such that either all the eigenvalues of the perturbed Hamiltonian matrix lie outside an infinite strip containing the imaginary axis, or any further arbitrarily small Hamiltonian perturbation results in a matrix with no purely imaginary eigenvalues. This is done by repeated application of the perturbations specified by Theorem 10 to the portion of the Hamiltonian Schur form of \(\mathcal{H}\) that corresponds to the purely imaginary eigenvalues of positive and negative sign characteristic. Each application brings at least one pair of purely imaginary eigenvalues together on the imaginary axis to form eigenvalue(s) of mixed sign characteristic of the perturbed Hamiltonian matrix. Once this happens, the Hamiltonian Schur form of the perturbed matrix can again be utilised to construct subsequent perturbations that affect only the portion of the matrix corresponding to purely imaginary eigenvalues of positive and negative sign characteristic. When the sum of all such perturbations is considered, all the purely imaginary eigenvalues of the resulting Hamiltonian matrix are of mixed sign characteristic and there exist Hamiltonian perturbations of arbitrarily small norm that can remove all of them from the imaginary axis. These final perturbations can also be designed in such a way that all the eigenvalues of the perturbed Hamiltonian matrix are outside a pre-specified infinite strip containing the imaginary axis. The procedure leads to an upper bound on the minimal Hamiltonian perturbations that achieve the desired objective.

As mentioned earlier, critical eigenvalues of other structured matrices and matrix pencils are also associated with sign characteristics, and many applications require the removal of such eigenvalues. For example, critical eigenvalues of Hermitian pencils \(L(z) = A - zB\) are the ones on the real line or at infinity. If such pencils are also definite, then the norm of a minimal Hermitian perturbation that results in at least one eigenvalue with nonzero imaginary part, measured in the norm \(\vert \!\vert \!\vert L\vert \!\vert \!\vert:= \sqrt{\|A\|_{2 }^{2 } +\| B\|_{2 }^{2}}\) (where \(\|\cdot \|_{2}\) denotes the spectral norm), is the Crawford number of the pencil [30, 39, 58]. The Crawford number was first introduced in [20], although the name was coined only in [57]. However, the quantity was considered as early as the 1960s in the work of Olga Taussky [61], and its theory and computation have generated a lot of interest since then [9, 18, 21, 22, 29, 30, 57–60, 65]. Recently, definite pencils have been characterised in terms of the distribution of their real eigenvalues with respect to their sign characteristic [6, 7]. This has allowed the extension of the techniques used in [5] to construct a minimal Hermitian perturbation \(\varDelta L(z) =\varDelta _{1} - z\varDelta _{2}\) (with respect to the norm \(\vert \!\vert \!\vert \cdot \vert \!\vert \!\vert\)) to a definite pencil L(z) that results in a real or infinite eigenvalue of mixed sign characteristic for the perturbed pencil \((L +\varDelta L)(z)\). The Crawford number of L(z) is equal to \(\vert \!\vert \!\vert \varDelta L\vert \!\vert \!\vert\), as it can be shown that there exists a further Hermitian perturbation \(\tilde{L}(z) =\tilde{\varDelta } _{1} - z\tilde{\varDelta }_{2}\) that can be chosen to be arbitrarily small such that the Hermitian pencil \((L +\varDelta L +\tilde{ L})(z)\) has a pair of eigenvalues with nonzero imaginary parts.
The challenge in these cases is to formulate suitable definitions of the sign characteristic of eigenvalues at infinity that consider the effect of continuously changing Hermitian perturbations on these very important attributes of real or infinite eigenvalues. This work has been done in [56] and [16]. In fact, the work done in [56] also provides answers to analogous problems for Hermitian matrix polynomials with real eigenvalues, and it extends to matrix polynomials whose coefficient matrices are all skew-Hermitian or alternately Hermitian and skew-Hermitian.

4 Structured Rank One Perturbations

Motivated by applications in control theory, Volker Mehrmann along with co-authors Mehl, Ran and Rodman investigated the effect of structured rank one perturbations on the Jordan form of a matrix [42–45]. We mention here two of the main results. In the following, a matrix \(A \in \mathbb{C}^{n,n}\) is said to be selfadjoint with respect to the Hermitian matrix \(H \in \mathbb{C}^{n,n}\) if \(A^{{\ast}}H = HA\). Also, a subset of a vector space V over \(\mathbb{R}\) is said to be generic if its complement is contained in a proper algebraic subset of V. A Jordan block of size n associated with the eigenvalue λ is denoted by \(\mathcal{J}_{n}(\lambda )\). The symbol ⊕ denotes the direct sum.

Theorem 11

Let \(H \in \mathbb{C}^{n\times n}\) be Hermitian and invertible, let \(A \in \mathbb{C}^{n\times n}\) be H-selfadjoint, and let \(\lambda \in \mathbb{C}\) . If A has the Jordan canonical form

where n 1 > ⋯ > n m and where \(\varLambda (\tilde{A}) \subseteq \mathbb{C}\setminus \{\lambda \}\) and if \(B \in \mathbb{C}^{n\times n}\) is a rank one perturbation of the form B = uu ∗ H, then generically (with respect to 2n independent real variables that represent the real and imaginary components of u) the matrix A + B has the Jordan canonical form

where \(\tilde{\mathcal{J}}\) contains all the Jordan blocks of A + B associated with eigenvalues different from λ.

The theorem states that under a generic H-selfadjoint rank one perturbation precisely one of the largest Jordan blocks to each eigenvalue of the perturbed H-selfadjoint matrix A splits into distinct eigenvalues while the other Jordan blocks remain unchanged. An analogous result holds for unstructured perturbations. In view of these facts the following result on Hamiltonian perturbations is surprising.

Theorem 12

Let \(J \in \mathbb{C}^{n\times n}\) be skew-symmetric and invertible, let \(A \in \mathbb{C}^{n\times n}\) be J-Hamiltonian (with respect to transposition, i.e. \(A^{T}J = -\mathit{JA}\) ) with pairwise distinct eigenvalues \(\lambda _{1},\lambda _{2},\cdots \,,\lambda _{p},\lambda _{p+1} = 0\) and let B be a rank one perturbation of the form \(B = uu^{T}J \in \mathbb{C}^{n\times n}\) .

For every \(\lambda _{j}\), \(j = 1,2,\ldots,p + 1\), let \(n_{1,j} > n_{2,j} >\ldots > n_{m_{j},j}\) be the sizes of Jordan blocks in the Jordan form of A associated with the eigenvalue \(\lambda _{j}\), and let there be exactly \(\ell_{k,j}\) Jordan blocks of size \(n_{k,j}\) associated with \(\lambda _{j}\) in the Jordan form of A, for \(k = 1,2,\ldots,m_{j}\).

(i) If \(n_{1,p+1}\) is even (in particular, if A is invertible), then generically with respect to the components of u, the matrix A + B has the Jordan canonical form

$$\displaystyle\begin{array}{rcl} \bigoplus _{j=1}^{p+1}& & \Big(\left (\mathcal{J}_{ n_{1,j}}(\lambda _{j})^{\oplus \,\ell_{1,j}-1}\right ) \oplus \left (\mathcal{J}_{ n_{2,j}}(\lambda _{j})^{\oplus \,\ell_{2,j} }\right ) {}\\ \oplus &&\left.\cdots \oplus \left (\mathcal{J}_{n_{m_{ j},j}}(\lambda _{j})^{\oplus \,\ell_{m_{j},j} }\right )\right ) \oplus \tilde{\mathcal{J}}, {}\\ \end{array}$$

where \(\tilde{\mathcal{J}}\) contains all the Jordan blocks of A + B associated with eigenvalues different from any of \(\lambda _{1},\ldots,\lambda _{p+1}\).

(ii) If \(n_{1,p+1}\) is odd (in this case \(\ell_{1,p+1}\) is even), then generically with respect to the components of u, the matrix A + B has the Jordan canonical form

$$\displaystyle\begin{array}{rcl} \bigoplus _{j=1}^{p}& & \left (\left (\mathcal{J}_{ n_{1,j}}(\lambda _{j})^{\oplus \,\ell_{1,j}-1}\right ) \oplus \left (\mathcal{J}_{ n_{2,j}}(\lambda _{j})^{\oplus \,\ell_{2,j} }\right ) \oplus \cdots \oplus \left (\mathcal{J}_{n_{m_{ j},j}}(\lambda _{j})^{\oplus \,\ell_{m_{j},j} }\right )\right ) {}\\ \oplus &&\left (\mathcal{J}_{n_{1,p+1}}(0)^{\oplus \,\ell_{1,p+1}-2}\right ) \oplus \left (\mathcal{J}_{ n_{2,p+1}}(0)^{\oplus \,\ell_{2,p+1} }\right ) {}\\ \oplus &&\cdots \oplus \left (\mathcal{J}_{n_{m_{ p+1},p+1}}(0)^{\oplus \,\ell_{m_{p+1},p+1} }\right ) \oplus \mathcal{J}_{n_{1,p+1}+1}(0) \oplus \tilde{\mathcal{J}}, {}\\ \end{array}$$

(iii) In either case (i) or (ii), generically the part \(\tilde{\mathcal{J}}\) has simple eigenvalues.

The surprising fact here is that concerning the change of Jordan structure, the largest Jordan blocks of odd size, say n, corresponding to the eigenvalue 0 of a J-Hamiltonian matrix are exceptional. Under a generic structured rank one perturbation two of them are replaced by one block corresponding to the eigenvalue 0 of size n + 1 and some 1 × 1 Jordan blocks belonging to other eigenvalues.
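The exceptional behaviour at the eigenvalue 0 is already visible in the simplest case A = 0, which is trivially J-Hamiltonian and has only Jordan blocks of size 1 at 0. Since \(u^{T}Ju = 0\) for every skew-symmetric J, the perturbation \(B = uu^{T}J\) satisfies \(B^{2} = 0\), so A + B has exactly one Jordan block of size 2 at 0. The sketch below confirms this by rank computations. The 4 × 4 data, the vector u, the rank tolerance and the helper name are assumptions made for illustration, and the rank-based block count is a standard device rather than the method of [42–45].

```python
import numpy as np

def blocks_of_size_at_least(M, kmax, tol=1e-10):
    """counts[k-1] = number of Jordan blocks of size >= k at eigenvalue 0,
    computed as rank(M^(k-1)) - rank(M^k) for k = 1, ..., kmax."""
    ranks = [np.linalg.matrix_rank(np.linalg.matrix_power(M, k), tol=tol)
             for k in range(kmax + 1)]
    return [int(ranks[k - 1] - ranks[k]) for k in range(1, kmax + 1)]

n = 4
Z, I2 = np.zeros((2, 2)), np.eye(2)
J = np.block([[Z, I2], [-I2, Z]])            # skew-symmetric and invertible
A = np.zeros((n, n), dtype=complex)          # J-Hamiltonian: A^T J = -J A holds trivially
u = np.array([1 + 2j, -1, 0.5j, 3])          # arbitrary nonzero complex vector
B = np.outer(u, u) @ J                       # u u^T J with plain (non-conjugate) transpose

before = blocks_of_size_at_least(A, 3)       # four blocks of size 1
after = blocks_of_size_at_least(A + B, 3)    # two blocks of size 1 and one of size 2
print(before, after)
```

This matches case (ii) of Theorem 12 with \(n_{1,p+1} = 1\) and \(\ell_{1,p+1} = 4\): two of the 1 × 1 blocks are replaced by a single block of size 2, and the part \(\tilde{\mathcal{J}}\) is empty.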

In the paper series [42–45] the authors inspect changes of the Jordan canonical form under structured rank one perturbations for various other classes of matrices with symmetries also.

5 Concluding Remarks

In this article we have given a brief overview of Volker Mehrmann’s contributions on eigenvalue perturbations for pencils occurring in control theory, Hamiltonian matrices and for structured rank one matrix perturbations. We believe that Theorem 10 can be extended to cover perturbations of the pencil (8.4) or, more generally, to some other structured matrix pencils and polynomials.

References

Adhikari, B., Alam, R.: Structured backward errors and pseudospectra of structured matrix pencils. SIAM J. Matrix Anal. Appl. 31, 331–359 (2009)

Adhikari, B., Alam, R., Kressner, D.: Structured eigenvalue condition numbers and linearizations for matrix polynomials. Linear Algebra. Appl. 435, 2193–2221 (2011)

Ahmad, Sk.S., Mehrmann, V.: Perturbation analysis for complex symmetric, skew symmetric even and odd matrix polynomials. Electron. Trans. Numer. Anal. 38, 275–302 (2011)

Ahmad, Sk.S., Mehrmann, V.: Backward errors for Hermitian, skew-Hermitian, H-even and H-odd matrix polynomials. Linear Multilinear Algebra 61, 1244–1266 (2013)

Alam, R., Bora, S., Karow, M., Mehrmann, V., Moro, J.: Perturbation theory for Hamiltonian matrices and the distance to bounded realness. SIAM J. Matrix Anal. Appl. 32, 484–514 (2011)

Al-Ammari, M.: Analysis of structured polynomial eigenvalue problems. PhD thesis, School of Mathematics, University of Manchester, Manchester (2011)

Al-Ammari, M., Tisseur, F.: Hermitian matrix polynomials with real eigenvalues of definite type. Part I: Classification. Linear Algebra Appl. 436, 3954–3973 (2012)

Antoulas, T: Approximation of Large-Scale Dynamical Systems. SIAM, Philadelphia (2005)

Ballantine, C.S.: Numerical range of a matrix: some effective criteria. Linear Algebra Appl. 19, 117–188 (1978)

Benner, P., Mehrmann, V., Xu, H.: Perturbation analysis for the eigenvalue problem of a formal product of matrices. BIT Numer. Math. 42, 1–43 (2002)

Benner, P., Kressner, D., Mehrmann, V.: Structure preservation: a challenge in computational control. Future Gener. Comput. Syst. 19, 1243–1252 (2003)

Benner, P., Byers, R., Mehrmann, V., Xu, H.: Robust method for robust control. Preprint 2004–2006, Institut für Mathematik, TU Berlin (2004)

Benner, P., Kressner, D., Mehrmann, V.: Skew-Hamiltonian and Hamiltonian eigenvalue problems: theory, algorithms and applications. In: Drmac, Z., Marusic, M., Tutek, Z. (eds.) Proceedings of the Conference on Applied Mathematics and Scientific Computing, Brijuni, pp. 3–39. Springer (2005). [ISBN:1-4020-3196-3]

Bora, S.: Structured eigenvalue condition number and backward error of a class of polynomial eigenvalue problems. SIAM J. Matrix Anal. Appl. 31, 900–917 (2009)

Bora, S., Mehrmann, V.: Linear perturbation theory for structured matrix pencils arising in control theory. SIAM J. Matrix Anal. Appl. 28, 148–191 (2006)

Bora, S., Srivastava, R.: Distance problems for Hermitian matrix pencils with eigenvalues of definite type (submitted)

Brüll, T., Schröder, C.: Dissipativity enforcement via perturbation of para-Hermitian pencils. IEEE Trans. Circuit. Syst. I Regular Paper 60, 164–177 (2012)

Cheng, S.H., Higham, N.J.: The nearest definite pair for the Hermitian generalized eigenvalue problem. Linear Algebra Appl. 302/303, 63–76 (1999). Special issue dedicated to Hans Schneider (Madison, 1998)

Coelho, C.P., Phillips, J.R., Silveira, L.M.: Robust rational function approximation algorithm for model generation. In: Proceedings of the 36th ACM/IEEE Design Automation Conference (DAC), New Orleans, pp. 207–212 (1999)

Crawford, C.R.: The numerical solution of the generalised eigenvalue problem. Ph.D. thesis, University of Michigan, Ann Arbor (1970)

Crawford, C.R.: Algorithm 646: PDFIND: a routine to find a positive definite linear combination of two real symmetric matrices. ACM Trans. Math. Softw. 12, 278–282 (1986)

Crawford, C.R., Moon, Y.S.: Finding a positive definite linear combination of two Hermitian matrices. Linear Algebra Appl. 51, 37–48 (1983)

Faßbender, H., Mackey, D.S., Mackey, N., Xu, H.: Hamiltonian square roots of skew-Hamiltonian matrices. Linear Algebra Appl. 287, 125–159 (1999)

Freiling, G., Mehrmann, V., Xu, H.: Existence, uniqueness and parametrization of Lagrangian invariant subspaces. SIAM J. Matrix Anal. Appl. 23, 1045–1069 (2002)

Freund, R.W., Jarre, F.: An extension of the positive real lemma to descriptor systems. Optim. Methods Softw. 19, 69–87 (2004)

Green, M., Limebeer, D.J.N.: Linear Robust Control. Prentice-Hall, Englewood Cliffs (1995)

Grivet-Talocia, S.: Passivity enforcement via perturbation of Hamiltonian matrices. IEEE Trans. Circuits Syst. 51, 1755–1769 (2004)

Guglielmi, N., Kressner, D., Lubich, C.: Low rank differential equations for Hamiltonian matrix nearness problems. Oberwolfach preprints, OWP 2013-01 (2013)

Guo, C.-H., Higham, N.J., Tisseur, F.: An improved arc algorithm for detecting definite Hermitian pairs. SIAM J. Matrix Anal. Appl. 31, 1131–1151 (2009)

Higham, N.J., Tisseur, F., Van Dooren, P.: Detecting a definite Hermitian pair and a hyperbolic or elliptic quadratic eigenvalue problem, and associated nearness problems. Linear Algebra Appl. 351/352, 455–474 (2002). Fourth special issue on linear systems and control

Higham, N.J., Konstantinov, M.M., Mehrmann, V., Petkov, P.Hr.: The sensitivity of computational control problems. Control Syst. Mag. 24, 28–43 (2004)

Karow, M.: μ-values and spectral value sets for linear perturbation classes defined by a scalar product. SIAM J. Matrix Anal. Appl. 32, 845–865 (2011)

Karow, M., Kressner, D., Tisseur, F.: Structured eigenvalue condition numbers. SIAM J. Matrix Anal. Appl. 28, 1052–1068 (2006)

Konstantinov, M.M., Mehrmann, V., Petkov, P.Hr.: Perturbation analysis for the Hamiltonian Schur form. SIAM J. Matrix Anal. Appl. 23, 387–424 (2002)

Konstantinov, M.M., Mehrmann, V., Petkov, P.Hr.: Perturbed spectra of defective matrices. J. Appl. Math. 3, 115–140 (2003)

Kressner, D., Peláez, M.J., Moro, J.: Structured Hölder condition numbers for multiple eigenvalues. SIAM J. Matrix Anal. Appl. 31, 175–201 (2009)

Lancaster, P.: Strongly stable gyroscopic systems. Electron. J. Linear Algebra 5, 53–67 (1999)

Lancaster, P., Rodman, L.: The Algebraic Riccati Equation. Oxford University Press, Oxford (1995)

Li, C.-K., Mathias, R.: Distances from a Hermitian pair to diagonalizable and nondiagonalizable Hermitian pairs. SIAM J. Matrix Anal. Appl. 28, 301–305 (2006) (electronic)

Lin, W.-W., Mehrmann, V., Xu, H.: Canonical forms for Hamiltonian and symplectic matrices and pencils. Linear Algebra Appl. 301–303, 469–533 (1999)

Mehl, C., Mehrmann, V., Ran, A., Rodman, L.: Perturbation analysis of Lagrangian invariant subspaces of symplectic matrices. Linear Multilinear Algebra 57, 141–185 (2009)

Mehl, C., Mehrmann, V., Ran, A.C.M., Rodman, L.: Eigenvalue perturbation theory of classes of structured matrices under generic structured rank one perturbations. Linear Algebra Appl. 435, 687–716 (2011)

Mehl, C., Mehrmann, V., Ran, A.C.M., Rodman, L.: Perturbation theory of selfadjoint matrices and sign characteristics under generic structured rank one perturbations. Linear Algebra Appl. 436, 4027–4042 (2012)

Mehl, C., Mehrmann, V., Ran, A.C.M., Rodman, L.: Jordan forms of real and complex matrices under rank one perturbations. Oper. Matrices 7, 381–398 (2013)

Mehl, C., Mehrmann, V., Ran, A.C.M., Rodman, L.: Eigenvalue perturbation theory of symplectic, orthogonal, and unitary matrices under generic structured rank one perturbations. BIT 54, 219–255 (2014)

Mehrmann, V.: The Autonomous Linear Quadratic Control Problem, Theory and Numerical Solution. Lecture Notes in Control and Information Sciences, vol. 163. Springer, Heidelberg (1991)

Mehrmann, V.: A step towards a unified treatment of continuous and discrete time control problems. Linear Algebra Appl. 241–243, 749–779 (1996)

Mehrmann, V., Xu, H.: Perturbation of purely imaginary eigenvalues of Hamiltonian matrices under structured perturbations. Electron. J. Linear Algebra 17, 234–257 (2008)

Overton, M., Van Dooren, P.: On computing the complex passivity radius. In: Proceedings of the 44th IEEE Conference on Decision and Control, Seville, pp. 7960–7964 (2005)

Paige, C., Van Loan, C.: A Schur decomposition for Hamiltonian matrices. Linear Algebra Appl. 14, 11–32 (1981)

Pontryagin, L.S., Boltyanskii, V., Gamkrelidze, R., Mishchenko, E.: The Mathematical Theory of Optimal Processes. Interscience, New York (1962)

Ran, A.C.M., Rodman, L.: Stability of invariant maximal semidefinite subspaces. Linear Algebra Appl. 62, 51–86 (1984)

Ran, A.C.M., Rodman, L.: Stability of invariant Lagrangian subspaces I. In: Gohberg, I. (ed.) Operator Theory: Advances and Applications, vol. 32, pp. 181–218. Birkhäuser, Basel (1988)

Ran, A.C.M., Rodman, L.: Stability of invariant Lagrangian subspaces II. In: Dym, H., Goldberg, S., Kaashoek, M.A., Lancaster, P. (eds.) Operator Theory: Advances and Applications, vol. 40, pp. 391–425. Birkhäuser, Basel (1989)

Rump, S.M.: Eigenvalues, pseudospectrum and structured perturbations. Linear Algebra Appl. 413, 567–593 (2006)

Srivastava, R.: Distance problems for Hermitian matrix polynomials – an ε-pseudospectra based approach. PhD thesis, Department of Mathematics, IIT Guwahati (2012)

Stewart, G.W.: Perturbation bounds for the definite generalized eigenvalue problem. Linear Algebra Appl. 23, 69–85 (1979)

Stewart, G.W., Sun, J.-G.: Matrix Perturbation Theory. Computer Science and Scientific Computing. Academic, Boston (1990)

Sun, J.-G.: A note on Stewart’s theorem for definite matrix pairs. Linear Algebra Appl. 48, 331–339 (1982)

Sun, J.-G.: The perturbation bounds for eigenspaces of a definite matrix-pair. Numer. Math. 41, 321–343 (1983)

Taussky, O.: Positive-definite matrices. In: Inequalities (Proceedings of a Symposium, Wright-Patterson Air Force Base, Ohio, 1965), pp. 309–319. Academic, New York (1967)

Thompson, R.C.: Pencils of complex and real symmetric and skew matrices. Linear Algebra Appl. 147, 323–371 (1991)

Tisseur, F., Meerbergen, K.: The quadratic eigenvalue problem. SIAM Rev. 43, 235–286 (2001)

Trefethen, L.N., Embree, M.: Spectra and Pseudospectra: The Behavior of Nonnormal Matrices and Operators. Princeton University Press, Princeton (2005)

Uhlig, F.: On computing the generalized Crawford number of a matrix. Linear Algebra Appl. 438, 1923–1935 (2013)

Van Dooren, P.: The generalized eigenstructure problem in linear system theory. IEEE Trans. Autom. Control AC-26, 111–129 (1981)

Van Dooren, P.: A generalized eigenvalue approach for solving Riccati equations. SIAM J. Sci. Stat. Comput. 2, 121–135 (1981)

Zhou, K., Doyle, J.C., Glover, K.: Robust and Optimal Control. Prentice-Hall, Upper Saddle River (1995)

Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Bora, S., Karow, M. (2015). Structured Eigenvalue Perturbation Theory. In: Benner, P., Bollhöfer, M., Kressner, D., Mehl, C., Stykel, T. (eds) Numerical Algebra, Matrix Theory, Differential-Algebraic Equations and Control Theory. Springer, Cham. https://doi.org/10.1007/978-3-319-15260-8_8

DOI: https://doi.org/10.1007/978-3-319-15260-8_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-15259-2

Online ISBN: 978-3-319-15260-8

eBook Packages: Mathematics and Statistics (R0)