(a) Background

Leonhard Euler (1707–1783), among others in the eighteenth century, was adept at manipulating divergent series, though usually without careful justification (cf. Tucciarone [487], Barbeau and Leah [26], and Varadarajan [493]). Note, however, Hardy’s conclusion:

\(\ldots\) it is a mistake to think of Euler as a “loose” mathematician.

As with singular perturbations, the ideas behind asymptotic approximations were not well understood until about 1900. They were presumably unknown to Prandtl in Munich and Hanover. For a classical treatment of infinite series, see, e.g., Rainville [404].

See Olver [360] for an example of a semiconvergent or convergently beginning series. They were defined as follows in P.-S. Laplace’s Analytic Theory of Probabilities (whose third edition of 1820 is now available online as part of his complete work):

The series converge fast for the first few terms, but the convergence worsens, and then comes to an end, turning into a divergence. This does not hinder the use of the series if one uses only the first few terms, for which convergence is rather fast. The residual of the series, which is usually neglected, is small in comparison to the preceding terms.

(This translation is from Andrianov and Manevitch [10].)

Throughout most of the nineteenth century, a strong reaction against divergent series, led by the analyst Cauchy, nearly banned their use (especially in France). (Augustin-Louis Cauchy (1789–1857) was a professor at École Polytechnique from 1815 to 1830. Afterwards, he held other positions, sometimes in exile, because his conservative religious and political stances made him refuse to take a loyalty oath.) Note, however, Cauchy [73]. In 1828, the Norwegian Niels Abel (1802–1829) wrote:

Divergent series are the invention of the devil, and it is shameful to base on them any demonstrations whatsoever. By using them, one may draw any conclusion he pleases and that is why these series have produced so many fallacies and so many paradoxes.

Verhulst [500] has a similar quote from d’Alembert. Kline [255] includes considerable material regarding divergent series. In particular, he points out that Abel continued

That most of the things are correct in spite of that is extraordinarily surprising. I am trying to find a reason for this; it is an exceedingly interesting question.

Further, Kline quotes the logician Augustus De Morgan of University College London as follows:

We must admit that many series are such as we cannot at present safely use, except as a means of discovery, the results of which are to be subsequently verified and the most determined rejector of all divergent series doubtless makes use of them in his closet \(\ldots\)

Finally, the practical British engineer Oliver Heaviside wrote

The series is divergent; therefore, we may be able to do something with it.

In his Electromagnetic Theory of 1899, Heaviside also wrote

It is not easy to get up any enthusiasm after it has been artificially cooled by the rigorists \(\ldots\) There will have to be a theory of divergent series.

Attitudes and developments no doubt somewhat reflect the alternations in French and European politics during those turbulent times. Abel summability of power series was originated by Euler, but it is usually named after Abel. Roy [427] reports that Abel called the technique

saying

In 1886, however, Henri Poincaré (1854–1912) and Thomas Joannes Stieltjes (1856–1894), simultaneously and independently, provided the valuable definition of an asymptotic approximation and illustrated its use and practicality. Their papers were, respectively, published in Acta Math. and Ann. Sci. École Norm. Sup. (cf. Poincaré [393] and Stieltjes [475]). The latter was Stieltjes’ dissertation. Stieltjes called the series semi-convergent. Poincaré (a professor at the Sorbonne and École Polytechnique) had studied with Hermite, who was in close contact with Stieltjes, primarily through letters (cf. Baillaud and Bourget [20]). Like most others, we tend to emphasize the special significance of Poincaré because of his important later work on celestial mechanics, basic to the two-timing methods we will later consider (see the centennial biographies, Gray [182] and Verhulst [502]). Stieltjes was Dutch, but successfully spent the last 9 years of his short life in France.

In contrast to convergent series, a couple of terms in an asymptotic series, in practice, often provide a good approximation. This is especially true for singular perturbation problems, as we shall find. McHugh [310] and Schissel [435] connect the topic to the classical ordinary differential equations literature. One’s first contact with asymptotic series may be for linear differential equations with irregular singular points (cf. Ford [151], Coddington and Levinson [91], or Wasow [513]). An example is provided by the differential equation

which has the formal power series solution

convergent only at x = 0. The same series arises (as we will find) in expanding the exponential integral, while the series

formally satisfies

The most popular book on asymptotic expansions may be Erdélyi [141]. (My copy cost $1.35.) The Dover paperback (now an e-book) was based on Caltech lectures from 1954 and was originally issued as a report to the U.S. Office of Naval Research. The material is still valuable, including operations on asymptotic series, asymptotics of integrals, singularities of differential equations, and differential equations with a large parameter. Arthur Erdélyi (1908–1977) came to Caltech in 1947 to edit the five-volume Bateman Manuscript Project (based on formulas rumored to be in Harry Bateman’s shoebox collection; Bateman was a prolific faculty member at Caltech from 1917 to 1946). Erdélyi remained in Pasadena until 1964, when he returned to the University of Edinburgh to take the Regius chair that had been held by his hero, E. T. (later Sir Edmund) Whittaker, from 1912 to 1946. (Whittaker wrote A Course of Modern Analysis in 1902 and was coauthor with G. N. Watson of subsequent editions from 1915 (cf. [521]).) Influenced by the work feverishly underway among engineers at GALCIT, Erdélyi and some math graduate students also got involved in studying singular perturbations (one was the author’s own thesis advisor, Gordon Latta, whose 1951 thesis [281] had H. F. Bohnenblust as advisor). Erdélyi’s Asymptotic Expansions was much influenced by E. T. Copson’s Admiralty Computing Service report of 1946, commercially published by Cambridge University Press in 1965 [98], and by the work of Rudolf Langer, Thomas Cherry, and others in the 1930s regarding turning points. Much more applied mathematical activity involving asymptotics took place in Pasadena after Caltech started its applied math department about 1962, initially centered around Donald Cohen, Julian Cole, Herbert Keller, Heinz-Otto Kreiss, Paco Lagerstrom, Philip Saffman, and Gerald Whitham, among others contributing to practical applied asymptotics. Their students have meanwhile been most influential in the field.

The historical background to Cauchy’s own work on divergent series is well explained by the French mathematician Émile Borel (1871–1956) in Borel ([52], originally from 1928). Borel wrote:

The essential point which emerges from this hasty review of Cauchy’s work on divergent series is that the great geometer never lost sight of this matter and constantly searched this proposition, which he called “a little difficult,” that a divergent series does not have a sum. Cauchy’s immediate successors, on the contrary, accepted the proposition with neither extenuation nor restriction. They remembered the theory only as applied in Stirling’s formula, but the possibility of practical use of that divergent series seemed to be a totally isolated curiosity of no importance from the point of view of general ideas which one could try to develop on the subject of analysis.

Andrianov and Manevitch [10], among others, report that Borel traveled to Stockholm to confer with Gösta Mittag-Leffler, after realizing that his summation method of 1899 gave the “right” answer for many classical divergent series. Placing his hand on the collected works of his teacher Weierstrass, Mittag-Leffler said, in Latin,

Nonetheless, Borel had won the first prize in the 1898 Paris Academy competition “Investigation of the Leading Role of Divergent Series in Analysis.” See Costin [101] for an update on Borel summability.

Another important early book [196] on divergent series is by G. H. Hardy (1877–1947), a leading British pure mathematician and a professor successively at Oxford and Cambridge. It was published posthumously in 1949 with a preface by his colleague J. E. Littlewood saying:

about the present title, now colourless, there hung an aroma of paradox and audacity.

Hardy’s introductory chapter is especially readable, filled with interesting and significant historical remarks. The book has neither the anti-applied slant nor the personal reticence (cf. Hardy [195], Littlewood [296], or Leavitt [282]) often linked to Hardy. Overall, the monograph is quite technically sophisticated, as is his related Orders of Infinity [194].

Olver’s Asymptotics and Special Functions [360] includes a rigorous, but very readable, coverage of asymptotics, with a computational slant toward error bounds. (British-born and educated, Olver came to the United States in 1961.) At age 85, Frank Olver (1924–2013) was the mathematics editor of the 2010 NIST Digital Library of Mathematical Functions [361] and of the associated Handbook, the web-based successor to Abramowitz and Stegun [2] (which originated at the U.S. National Bureau of Standards, the predecessor of the National Institute of Standards and Technology, and which may have been the most popular math book since Euclid). The DLMF demonstrates that asymptotics is fundamental to understanding the behavior of special functions, which remain highly relevant in this computer age.

Among many other mathematics books deserving attention by those wishing to learn asymptotics are Dingle [123], Bleistein and Handelsman [45], Bender and Orszag [36], Murray [338], van den Berg [40], Wong [525], Ramis [405], Sternin and Shatalov [473], Jones [229], Costin [101], Beals and Wong [33], Paris [386], and Paulsen [387]. Readers will appreciate their individual uniqueness and may develop their own personal favorites.

(b) Asymptotic Expansions

In the following, we will write

(i)

$$\displaystyle{ f(x) \sim \phi (x)\ \ \ \mbox{ as}\ \ \ x \rightarrow \infty }\tag{2.1}$$

if \(\frac{f(x)} {\phi (x)}\) tends to unity. (We will say that f is asymptotic to ϕ as x > 0 becomes unbounded.)

(ii)

$$\displaystyle{ f(x) = o(\phi (x))\ \ \ \mbox{ as}\ \ \ x \rightarrow \infty }\tag{2.2}$$

if \(\frac{f(x)} {\phi (x)} \rightarrow 0\) (alternatively, one can write \(f \ll \phi\)), and

(iii)

$$\displaystyle{ f(x) = O(\phi (x))\ \ \ \mbox{ as}\ \ \ x \rightarrow \infty }\tag{2.3}$$

if \(\frac{f(x)} {\phi (x)}\) is bounded.

We often call these relations asymptotic equality and the little o and big O Landau (or Bachmann–Landau) order symbols, after the number theorists Paul Bachmann, who introduced the O symbol in 1894, and Edmund Landau, who introduced the o symbol in 1909. (Olver [360] calls the O symbol a fig leaf, since the implied bound (which would be very useful when known) isn’t provided.) Warning: We need to be especially careful when the comparison function ϕ has zeros as \(x \rightarrow \infty \). The symbol tilde \(\sim \) is used to distinguish asymptotic equality from ordinary equality.

As our basic definition, we will use (after Olver): A necessary and sufficient condition that f(z) possess an asymptotic (power series) expansion

is that for each nonnegative integer n

as \(z \rightarrow \infty \) in R, uniformly with respect to the allowed phase (i.e., argument) of z. The coefficients \(a_{j}\) are uniquely determined (as for convergent series). They’re not always the Taylor series coefficients, however. Also note that the limit point ∞ can be replaced by any other point and that (2.5) can be interpreted to be a recurrence relation for the coefficients \(a_{n}\) of (2.4).

An important case, often arising in applications, occurs when the asymptotic expansion with respect to \(\frac{1} {z}\) depends on a second parameter, say θ. When the second parameter takes on (or tends to) a critical value \(\theta_{c}\), the expansion may become invalid. The asymptotic expansion is then said to be nonuniform with respect to θ.

Convergence factors are sometimes introduced to “make” divergent series converge. Likewise, the Borel–Ritt theorem is often invoked to provide a holomorphic sum to a divergent series (cf. Wasow [513]).

We also note, less centrally, that Martin Kruskal [266] perceptively introduced the term asymptotology as the art of handling applied mathematical systems in limiting cases, formulating seven underlying “principles” to be adhered to (cf. the original paper and Ramnath [406]). (They are simplification, recursion, interpolation, wild behavior, annihilation, maximum balance, and mathematical nonsense.)

A very useful elementary technique to obtain asymptotic approximations is the common method of integration by parts. We illustrate the technique by considering the exponential integral

with integration taken along any path in the complex plane, cut on the positive real axis, with \(\vert z\vert\) large. Repeated integration by parts gives

etc., so for any integer n > 0, we obtain

for the (scaled) remainder

If we define the region R by the conditions \(\mbox{ Re}z < 0\) and \(\vert \arg (-z)\vert <\pi\), so that \(\vert e^{t-z}\vert \leq 1\) there, we find that

i.e., the error after using the first n + 1 terms in the power series for \(ze^{-z}\mathrm{Ei}(z)\) is less in magnitude than the first neglected term in the series when \(z \rightarrow \infty \) in the sector of the left half plane. Thereby, as expected, the series expansion is asymptotic there. (Recall an analogous error bound, and the related pincer principle, for real power series whose terms have alternating signs.)

For n = 1,

with \(\vert e_{1}(z)\vert \leq \frac{2} {\vert z\vert ^{2}}\) in the open sector R. For z fixed, the error is bounded. Moreover, we can nicely approximate Ei(z) there by using the first two terms

of the sum if we simply let \(\vert z\vert\) be sufficiently large. This is in sharp contrast to using a convergent expansion in powers of \(\frac{1} {z}\), where we would typically need to let the number n of terms used become large in order to get a good approximation for any given z within the domain of convergence.

More surprising is the idea of optimal truncation (cf. White [519] and Paulsen [387]). A calculus exercise shows that for any given z, the absolute values of successive terms (i.e., our error bound) in the expansion (2.7) reach a minimum, after which they increase without bound. (Numerical tables for this example are available in a number of the sources cited.) This minimum occurs when \(n \sim \vert z\vert\), so if this asymptotic series is truncated just before then, the remainder will satisfy

when we use Stirling’s approximation

for \((\vert z\vert + 1)!\) (cf., e.g., Olver [360]). The latter series diverges for all x, but gives the remarkably good approximation 5.9989 for the rather small x = 4.
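As a quick check of the 5.9989 figure, the sketch below evaluates the first two terms of Stirling’s series for \(\varGamma(x)\), namely \(\sqrt{2\pi/x}\,(x/e)^{x}\left(1 + \frac{1}{12x}\right)\), at x = 4, where \(\varGamma(4) = 3! = 6\). Reading the quoted value as this two-term truncation is our assumption. The helper name `stirling_gamma` is ours, and `math.gamma` serves only as the reference value.

```python
import math


def stirling_gamma(x, terms=2):
    """Truncated Stirling series for Gamma(x), intended for large positive x.

    terms=1 keeps sqrt(2*pi/x) * (x/e)**x; terms=2 multiplies by the first
    correction factor (1 + 1/(12x)).
    """
    leading = math.sqrt(2.0 * math.pi / x) * (x / math.e) ** x
    if terms == 1:
        return leading
    return leading * (1.0 + 1.0 / (12.0 * x))


for x in (4.0, 8.0, 16.0):
    exact = math.gamma(x)
    one, two = stirling_gamma(x, 1), stirling_gamma(x, 2)
    print(f"x={x:4.0f}  Gamma={exact:.6g}  1 term={one:.6g}  2 terms={two:.6g}  "
          f"rel.err(2 terms)={abs(two - exact) / exact:.1e}")
```

At x = 4 the two-term value is about 5.99897, against the exact value 6. The relative error then shrinks roughly like \(1/(288x^{2})\), the size of the next omitted correction.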

Spencer [470] states

Surely the most beautiful formula in all of mathematics is Stirling’s formula \(\ldots\) How do the two most important fundamental constants, e and \(\pi\), find their way into an asymptotic formula for the product of integers?

Equation (2.11) seems actually to be due to both de Moivre and Stirling (cf. Roy [427]). This error bound for Ei(z) is, indeed, asymptotically smaller in magnitude as \(\vert z\vert \rightarrow \infty \) than any term in the divergent series! Thus, this bound is naturally said to display asymptotics beyond all orders .
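The error bound and the optimal-truncation rule can be checked numerically on the negative real axis z = −x. There, \(ze^{-z}\mathrm{Ei}(z) = xe^{x}E_{1}(x)\), and the series becomes \(\sum_{k}(-1)^{k}k!/x^{k}\). The sketch below is ours. It evaluates \(E_{1}\) from its convergent Maclaurin series, which is adequate for moderate x such as x = 10, compares each truncation with the first omitted term, and locates the best truncation. The helper names `e1` and `truncated` are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def e1(x, kmax=120):
    """E1(x) = -Ei(-x) for x > 0, from its convergent Maclaurin series.

    E1(x) = -gamma - ln x + sum_{k>=1} (-1)^(k+1) x^k / (k * k!).
    Adequate for moderate x; cancellation in the sum grows with x.
    """
    total, term = 0.0, 1.0
    for k in range(1, kmax + 1):
        term *= x / k                      # term = x**k / k!
        total += (-1) ** (k + 1) * term / k
    return -EULER_GAMMA - math.log(x) + total


def truncated(x, n):
    """First n terms of the divergent series sum_k (-1)^k k! / x^k."""
    return sum((-1) ** k * math.factorial(k) / x ** k for k in range(n))


x = 10.0
target = x * math.exp(x) * e1(x)            # z e^{-z} Ei(z) evaluated at z = -x
rows = []
for n in range(1, 26):
    error = abs(target - truncated(x, n))
    first_omitted = math.factorial(n) / x ** n
    rows.append((n, error, first_omitted))

n_opt, err_opt, _ = min(rows, key=lambda r: r[1])
print(f"x e^x E1(x) = {target:.10f}")
print(f"best truncation: n = {n_opt}, error = {err_opt:.2e}, e^(-x) = {math.exp(-x):.2e}")
```

For x = 10, the smallest error occurs near n ≈ 10 and is only a few times \(e^{-10}\), consistent with the rule n ∼ |z|. Every truncation error stays below the first omitted term, including those for large n, where the terms themselves have started to grow.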

Paris [386] points out that similar exponential improvements via optimal truncation can often be achieved. He cites the following theorem of Fritz Ursell [489]:

Suppose f(t) is analytic for \(\vert t\vert < R\) with the Maclaurin expansion

there and suppose that

for \(r \leq t < \infty \) and positive constants K and β. Then (using the greatest integer function [ ]), he obtains

as \(x \rightarrow \infty \). Thus, the Maclaurin coefficients of f(t) (about t = 0) provide the asymptotic series coefficients for its Laplace transform (about x = ∞) (because the kernel \(e^{-xt}\) greatly discounts other t values).

We shouldn’t extrapolate too far from the example Ei(z) or Ursell’s theorem. The often-made suggestion to truncate when the smallest error is attained is not always appropriate. (Convergent series, indeed, attain their smallest error (zero) after an infinite number of terms.) However, we point out that considerable recent progress has resulted using exponential asymptotics, by reexpanding the remainder repeatedly and truncating the asymptotic expansions optimally each time (cf. Olde Daalhuis [358] and Boyd [55, 56, 57]). Boyd tries to explain the divergence of the formal regular power series expansion

as an asymptotic solution of

by using the representation

of the solution as an inverse Fourier transform (cf. Boyd [56]) with F being the transform of f. A critical point is the finite radius of convergence of the power series for \(\frac{1} {1+\epsilon ^{2}k^{2}}\). Boyd seems to be the first of many authors to quote Gian-Carlo Rota [421]:

One remarkable fact of applied mathematics is the ubiquitous appearance of divergent series , hypocritically renamed asymptotic expansions. Isn’t it a scandal that we teach convergent series to our sophomores and do not tell them that few, if any, of the series they meet will converge? The challenge of explaining what an asymptotic expansion is ranks among the outstanding taboo problems of mathematics.

In addition to asymptotic power series to approximate a given function, it will often be helpful to use more general asymptotic expansions

Here, the \(a_{n}\)s are constants and we will suppose that \(\{\phi_{n}\}\) is an asymptotic sequence of monotonic functions (or scale) satisfying

generalizing the powers. We will again let the symbol tilde \((\sim )\) denote asymptotic equality

where for any integer N > 0

Often, it will be helpful to limit N and to restrict ε to appropriate complex sectors (about, perhaps, the positive real half axis). In the special case of an asymptotic power series, we simply have \(\phi_{n}(\epsilon) = \epsilon^{n}\). Note that the coefficients in (2.14) are uniquely determined since

To multiply asymptotic expansions (2.14), it is convenient if the sequence satisfies

for all pairs n and m. (Determining an appropriate asymptotic sequence \(\{\phi_{n}\}\) to use for a given f arising in, say, some application may not be simple, however. In response, Murdock [335] suggests a method of undetermined gauges.) When we let the \(a_{n}\)s depend on ε, the series (2.14) is called a generalized asymptotic expansion. Its coefficients \(a_{n}(\epsilon)\) are then no longer unique. Such expansions are, nonetheless, commonly used, here and elsewhere.

Some authors further write

whenever

for every N.

An important scale is the Hardy field of “logarithmico-exponential” functions, consisting of those functions obtained from ε by adding, multiplying, exponentiating, and taking a logarithm a finite number of times.

We note the important fact that a convergent series is asymptotic. This follows since the terms \(a_{k}z^{k}\) of a convergent power series or analytic function

ultimately behave like a geometric series, i.e. they satisfy

for some bound A, all large k and \(\vert z\vert \leq r\) for some r > 0. For \(\vert z\vert < \frac{r} {2}\), this implies that the remainder for any n satisfies

so the convergent power series for f for \(\vert z\vert \leq r\) is indeed asymptotic as \(z \rightarrow 0\). More simply, recall Taylor series with remainder.

A general technique to obtain asymptotic expansions for integrals is again termwise integration. Consider, for example, the Laplace transform

for λ > 0 and x large. Since \(\frac{1} {1+t} =\sum _{ s=0}^{n-1}(-t)^{s} + \frac{(-t)^{n}} {1+t}\), we obtain

in terms of Euler’s gamma (or factorial) function

(cf. Olver et al. [361]) for the remainder

Since \(\vert r_{n}(x)\vert \leq \frac{\varGamma (n+\lambda )} {x^{n+\lambda }}\),

Again, even though the Maclaurin series for \(\frac{1} {1+t}\) only converges for 0 ≤ t < 1, its coefficients determine the asymptotics for I(x) as \(x \rightarrow \infty \). (Readers should understand such typical arguments.) Generalizations of this procedure to integrals

often are labeled Laplace’s method (or Watson’s lemma). A real variables approach to obtain such results is found in Olver [360], while a complex variables approach is presented in Wong [525]. More general techniques for the asymptotic evaluation of integrals include the stationary phase and saddle point methods (called Edgeworth expansions in statistics).
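To see the termwise-integration argument at work, assume, as the bound on \(r_{n}\) indicates, that the integral is \(I(x) = \int_{0}^{\infty}e^{-xt}t^{\lambda-1}(1+t)^{-1}\,dt\), and take λ = 1/2 for convenience (our choice). The substitution t = u² turns I(x) into \(2\int_{0}^{\infty}e^{-xu^{2}}(1+u^{2})^{-1}\,du = \pi e^{x}\operatorname{erfc}(\sqrt{x})\), a closed form that Python’s `math.erfc` evaluates directly. The sketch compares this value with the partial sums \(\sum_{s<n}(-1)^{s}\varGamma(s+\lambda)/x^{s+\lambda}\) and with the bound \(\varGamma(n+\lambda)/x^{n+\lambda}\). The function names are hypothetical.

```python
import math

LAM = 0.5  # the exponent lambda; 1/2 is an illustrative choice


def laplace_integral(x):
    """I(x) = int_0^inf e^{-xt} t^{-1/2} / (1 + t) dt for lambda = 1/2.

    With t = u**2 this equals 2 * int_0^inf e^{-x u^2} / (1 + u^2) du,
    which is pi * e^x * erfc(sqrt(x)).
    """
    return math.pi * math.exp(x) * math.erfc(math.sqrt(x))


def watson_partial_sum(x, n, lam=LAM):
    """Termwise integration of 1/(1+t) = sum_{s<n} (-t)^s + remainder."""
    return sum((-1) ** s * math.gamma(s + lam) / x ** (s + lam) for s in range(n))


def remainder_bound(x, n, lam=LAM):
    """The bound Gamma(n + lam) / x^(n + lam) on |r_n(x)|."""
    return math.gamma(n + lam) / x ** (n + lam)


for x in (5.0, 10.0, 20.0):
    exact = laplace_integral(x)
    for n in (1, 2, 4, 8):
        err = abs(exact - watson_partial_sum(x, n))
        print(f"x={x:4.0f} n={n}  error={err:.2e}  bound={remainder_bound(x, n):.2e}")
```

Only the Maclaurin coefficients of \(\frac{1}{1+t}\) enter the partial sums, even though that series converges only for t < 1. The agreement improves as x grows, as the argument in the text predicts.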

(c) The WKB Method

The WKB method (cf. Olver [360], Schissel [436], Miller [318], Cheng [83], Wong [526], and Paulsen [387]) concerns asymptotic solutions of the scalar linear homogeneous second-order differential equation

when the real parameter \(\lambda \rightarrow \infty \) and f is bounded. Introducing the logarithmic derivative

or equivalently setting

converts the given linear second-order differential equation (2.20) to the nonlinear first-order generalized Riccati equation

(since y ′ = uy and \(y^{{\prime\prime}} = (u^{{\prime}} + u^{2})y\)) which, generally, can’t be solved directly. (This is not the simple version solved by Count Riccati (or Johann Bernoulli) (cf. Roy [427]).) We will further suppose that the expansion

is known and valid on an interval α < x < β as \(\lambda \rightarrow \infty \). Then, we will seek a (formal) asymptotic solution

of (2.23) with corresponding series for \(u^{2}\) and \(u^{\prime}\). Equating coefficients of \(\lambda^{2}\) and \(\lambda^{2-n}\) in the differential equation, we will successively need

and

Thus, we will take

and

In particular,

implies two linearly independent WKB approximations

and

for (2.20). The algebraic prefactor comes from the first term of \(u_{1}\). See Keller and Lewis [245] for connections to geometrical optics and Keller [244] regarding the related Born and Rytov approximations. Note, further, that one consequence of the leading term approximation, important in quantum mechanics, is the so-called adiabatic invariance (cf. Arnold et al. [12] and Ou and Wong [385]). Knowing these linearly independent approximate solutions also allows us to solve the nonhomogeneous equation, i.e., to determine an asymptotic Green’s function (cf. Stakgold [472]).
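Here is a sketch of the bookkeeping behind these formulas. It assumes, as the relations \(y^{\prime} = uy\) and \(y^{\prime\prime} = (u^{\prime} + u^{2})y\) indicate, that (2.20) has the form \(y^{\prime\prime} =\lambda ^{2}f(x,\lambda )y\), with \(f \sim \sum _{n\geq 0}f_{n}(x)\lambda ^{-n}\) and \(u \sim \sum _{n\geq 0}u_{n}(x)\lambda ^{1-n}\). Matching the coefficients of \(\lambda ^{2}\) and \(\lambda ^{2-n}\) in \(u^{\prime} + u^{2} =\lambda ^{2}f\) gives

$$\displaystyle{ u_{0}^{2} = f_{0},\qquad 2u_{0}u_{n} = f_{n} - u_{n-1}^{\prime}-\sum _{j=1}^{n-1}u_{j}u_{n-j}\quad (n \geq 1), }$$

so that, with \(u_{0} = \pm \sqrt{f_{0}}\),

$$\displaystyle{ u_{1} = -\frac{f_{0}^{\prime}}{4f_{0}} \pm \frac{f_{1}}{2\sqrt{f_{0}}}. }$$

Because \(\int ^{x}\left (-\frac{f_{0}^{\prime}}{4f_{0}}\right )ds = -\frac{1}{4}\ln f_{0}\) up to a constant (for \(f_{0} > 0\), say), integrating \(u = y^{\prime}/y\) yields

$$\displaystyle{ y(x) = f_{0}^{-1/4}(x)\exp \left [\pm \int ^{x}\left (\lambda \sqrt{f_{0}(s)} + \frac{f_{1}(s)}{2\sqrt{f_{0}(s)}}\right )ds\right ]\left (1 + o(1)\right ), }$$

which displays the algebraic prefactor \(f_{0}^{-1/4}\). This is a consistency sketch under the stated assumption; the text’s own displayed formulas may be normalized differently.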

As defined above, the o(1) symbol in (2.28–2.29) indicates an expression that goes to zero as \(\lambda \rightarrow \infty \). Its approximate form would be determined by \(u_{2}\). Miller [318] proves the validity of the WKB approximation using a contraction mapping argument, while Olver [360] bounds the error involved in terms of the total variation of a natural control function. Note the singularities of y that result at any turning points where \(f_{0}\) has a zero. Also note that the solutions (2.28–2.29) change from being exponential to oscillatory (or vice versa) as such points are crossed with \(f_{0}\) changing sign.
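A numerical sanity check of the leading-order WKB approximation in the oscillatory case can be sketched as follows. The test problem \(y^{\prime\prime} +\lambda ^{2}(1 + x)y = 0\) on [0, 1] is our own illustrative choice. It corresponds to \(f = -(1+x) < 0\) and has no turning point there. The solver is a hand-written classical Runge–Kutta scheme. The initial data are matched to the value and slope of \(q^{-1/4}\cos (\lambda S)\) at x = 0, with q = 1 + x and \(S(x) = \int _{0}^{x}\sqrt{q(s)}\,ds\).

```python
import math


def q(x):
    """Illustrative coefficient in y'' + lam^2 q(x) y = 0, with q > 0 (oscillatory)."""
    return 1.0 + x


def phase(x):
    """S(x) = int_0^x sqrt(q(s)) ds, in closed form for q = 1 + x."""
    return (2.0 / 3.0) * ((1.0 + x) ** 1.5 - 1.0)


def wkb(x, lam):
    """Leading-order WKB approximation q^{-1/4} cos(lam S(x))."""
    return q(x) ** -0.25 * math.cos(lam * phase(x))


def rk4_solution(lam, n_steps=4000, x_end=1.0):
    """Classical RK4 for y'' = -lam^2 q(x) y with y(0) = 1, y'(0) = -1/4.

    These initial values equal the value and slope of wkb(x, lam) at x = 0.
    """
    h = x_end / n_steps

    def rhs(x, y, v):
        return v, -lam ** 2 * q(x) * y

    x, y, v = 0.0, 1.0, -0.25
    xs, ys = [x], [y]
    for i in range(n_steps):
        k1y, k1v = rhs(x, y, v)
        k2y, k2v = rhs(x + h / 2, y + h / 2 * k1y, v + h / 2 * k1v)
        k3y, k3v = rhs(x + h / 2, y + h / 2 * k2y, v + h / 2 * k2v)
        k4y, k4v = rhs(x + h, y + h * k3y, v + h * k3v)
        y += h / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
        v += h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        x = (i + 1) * h
        xs.append(x)
        ys.append(y)
    return xs, ys


def max_wkb_error(lam):
    """Largest |numerical solution - WKB approximation| over the RK4 grid."""
    xs, ys = rk4_solution(lam)
    return max(abs(y - wkb(x, lam)) for x, y in zip(xs, ys))


for lam in (10.0, 20.0, 40.0):
    print(f"lambda = {lam:4.0f}   max |y - y_WKB| on [0, 1] = {max_wkb_error(lam):.2e}")
```

The maximal discrepancy should fall off roughly like 1/λ, in line with the o(1) factor. Near a zero of q, no such uniform behavior can be expected.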

As an alternative to (2.28), we could directly seek asymptotic solutions of (2.20) in the form

for a complex-valued asymptotic power series A(x, λ) whose terms could be successively found using an undetermined coefficients scheme. Thus

must satisfy the differential equation. Because

we will need A to satisfy

as a power series

Murray [338] works out a variety of WKB examples quite explicitly.

Olver points out that the separate results of the physicists Wentzel, Kramers, and Brillouin in 1926 and those of Jeffreys in 1924 were actually obtained independently by Joseph Liouville and George Green in 1837. Carlini had even treated a special case involving Bessel functions in 1817. See Heading [200] and Fröman and Fröman [164] for further history. Nonetheless, the WKB(J) label seems to persist. (William Thomson, later Lord Kelvin, visited Paris in 1845 after his Cambridge graduation and introduced Jacques Sturm and Liouville to the work [183] of Green, a recently deceased former miller from Nottingham, memorialized in 1993 with a plaque in Westminster Abbey near the tomb of Newton and plaques to Kelvin, Maxwell, and Faraday (cf. Cannell [69]). Green’s mill is now restored as a science center.) As late as 1953, Sir Harold Jeffreys called WKB

First, note that the WKB results provide existence and uniqueness theorems for the singularly perturbed linear ODE

when ε > 0 is small, a and b are smooth, a(x) ≠ 0, and Dirichlet boundary conditions are applied at two endpoints, say α and β. Also note that the Sturm transformation

requires w to satisfy

for

The transformation (2.33) holds for all ε, but we will be especially concerned with the more challenging situation that ε is small but positive. Multiplying (2.34) by w and integrating by parts, supposing homogeneous boundary conditions w(α) = w(β) = 0, implies that

since the boundary terms \(\epsilon w\frac{dw} {dx}\) at α and β then vanish. Thus, \(w(x) \equiv 0\) must hold when

and the given two-point problem for y then has a unique solution (just let w be the difference between any two of them). Note that the sign condition (2.35) on f is satisfied if either

(i) a(x) ≠ 0 and ε > 0 is small,

or

(ii) \(2b \leq a^{\prime}\) and ε > 0.
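The computation behind the sign condition can be sketched as follows. It assumes, consistently with the expansions for A and B below, that (2.32) reads \(\epsilon y^{\prime\prime} + a(x)y^{\prime} + b(x)y = 0\). It also assumes that the Sturm transformation (2.33) is \(y = e^{-\frac{1}{2\epsilon }\int ^{x}a(s)\,ds}\,w\). Substituting and cancelling the exponential factor gives

$$\displaystyle{ \epsilon w^{\prime\prime} + f(x,\epsilon )w = 0,\qquad f(x,\epsilon ) = b(x) -\frac{a^{\prime}(x)}{2} -\frac{a^{2}(x)}{4\epsilon }. }$$

Multiplying by w and integrating over [α, β] with w(α) = w(β) = 0 then yields

$$\displaystyle{ \epsilon \int _{\alpha }^{\beta }\left (w^{\prime}\right )^{2}dx =\int _{\alpha }^{\beta }f(x,\epsilon )\,w^{2}\,dx. }$$

If f ≤ 0, the left side is nonnegative and the right side is nonpositive, so \(w^{\prime}\equiv 0\) and hence w ≡ 0. With this form of f, condition (ii) makes f ≤ 0 for every ε > 0. Under condition (i), the term \(-a^{2}/(4\epsilon )\) dominates once ε is small.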

Uniqueness conditions for more general Sturm–Liouville boundary value problems can be found in Courant and Hilbert [103] and Zettl [532].

Existence of the solutions y of (2.32) follows from using the two linearly independent real WKB solutions, which take the form

for asymptotic series A and B. (Note that this A is not the complex amplitude A used in the WKB solution (2.30).) In particular, the exponential factor in (2.33) is cancelled or doubled in the corresponding solutions (2.36).

The resulting outer solution A(x, ε) of (2.32) must satisfy

as a real power series in ε, so its leading term must satisfy

i.e.

Likewise \(\left (Be^{-\frac{1} {\epsilon } \int ^{x}a(s)\,ds }\right )^{{\prime}} = \left (B^{{\prime}}-\frac{Ba} {\epsilon } \right )e^{-\frac{1} {\epsilon } \int ^{x}a(s)\,ds }\) and \(\left (Be^{-\frac{1} {\epsilon } \int ^{x}a(s)\,ds }\right )^{{\prime\prime}}\) \(= \left (B^{{\prime\prime}}-\frac{2} {\epsilon } B^{{\prime}}a -\frac{1} {\epsilon } Ba^{{\prime}} + \frac{B} {\epsilon ^{2}} a^{2}\right )e^{-\frac{1} {\epsilon } \int ^{x}a(s)\,ds }\), so the differential equation for y requires that

Its leading term \(B_{0}\) must satisfy \(aB_{0}^{{\prime}} + (a^{{\prime}}- b)B_{0} = 0\), so

and the general solution of (2.32) on x ≥ x 0 takes the form

when a(x) > 0. If bounded values y(x 0) and \(\epsilon y^{{\prime}}(x_{0})\) are prescribed, we will need

and

so

and

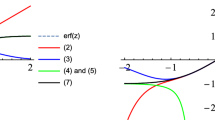

Having these two linearly independent solutions (2.36) to the linear differential equation (2.32) will allow us to asymptotically solve many boundary value problems for it and its nonhomogeneous analog. The errors made using such approximations are asymptotically negligible, like \(e^{-Cx/\epsilon}\) for some C > 0 and x > 0, and so smaller than \(O(\epsilon^{n})\) for any n > 0. See Fig. 2.1 and Howls [219]. Note that the solution (2.39) will feature an initial layer of nonuniform convergence. The asymptotic solutions of (2.32) for a(x) ≠ 0 will be more satisfactory throughout boundary layer regions than those traditionally found by matched expansions, as we shall later demonstrate. When such restrictions as f(x, ε) ≤ 0 in (2.34) don’t hold, and for nonlinear generalizations, we must be prepared for either multiple solutions to such two-point problems or none at all.
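The two-solution construction can be tried on a constant-coefficient case where everything is explicit. In the sketch below, a = b = 1 and the Dirichlet data y(0) = y(1) = 1 are illustrative assumptions. Then \(A_{0} = Ce^{-bx/a}\) solves \(aA_{0}^{\prime} + bA_{0} = 0\), and \(B_{0} = De^{bx/a}\) solves \(aB_{0}^{\prime} + (a^{\prime} - b)B_{0} = 0\). The leading approximation \(A_{0}(x) + B_{0}(x)e^{-ax/\epsilon }\) is compared with the exact solution built from the two roots of \(\epsilon r^{2} + ar + b = 0\). The function names are ours.

```python
import math

# Illustrative constant data (assumptions): eps*y'' + a*y' + b*y = 0 on [0, 1].
A_COEF, B_COEF = 1.0, 1.0      # a > 0, so the layer sits at x = 0
ALPHA, BETA = 1.0, 1.0         # y(0) = ALPHA, y(1) = BETA


def exact(x, eps):
    """Exact solution built from the roots of eps*r^2 + a*r + b = 0."""
    disc = math.sqrt(A_COEF ** 2 - 4.0 * eps * B_COEF)
    r_slow = -2.0 * B_COEF / (A_COEF + disc)   # -> -b/a as eps -> 0 (cancellation-free form)
    r_fast = (-A_COEF - disc) / (2.0 * eps)    # about -a/eps
    e_slow, e_fast = math.exp(r_slow), math.exp(r_fast)
    # Solve c1 + c2 = ALPHA and c1*e_slow + c2*e_fast = BETA.
    c1 = (BETA - ALPHA * e_fast) / (e_slow - e_fast)
    c2 = ALPHA - c1
    return c1 * math.exp(r_slow * x) + c2 * math.exp(r_fast * x)


def two_solution_approx(x, eps):
    """Leading terms A0(x) + B0(x) exp(-a x / eps).

    C and D come from the boundary data, neglecting the exponentially
    small layer term at x = 1.
    """
    c = BETA * math.exp(B_COEF / A_COEF)   # A0(1) = BETA
    d = ALPHA - c                           # A0(0) + B0(0) = ALPHA
    a0 = c * math.exp(-B_COEF * x / A_COEF)
    b0 = d * math.exp(B_COEF * x / A_COEF)
    return a0 + b0 * math.exp(-A_COEF * x / eps)


def max_error(eps, n=4001):
    """Largest discrepancy on a uniform grid over [0, 1], layer included."""
    xs = [i / (n - 1) for i in range(n)]
    return max(abs(exact(x, eps) - two_solution_approx(x, eps)) for x in xs)


for eps in (0.1, 0.01, 0.001):
    print(f"eps = {eps:<6}  max error on [0, 1] = {max_error(eps):.2e}")
```

The maximal error drops roughly in proportion to ε, uniformly across the initial layer at x = 0. This uniformity is the point of carrying the factor \(B_{0}(x)e^{-ax/\epsilon }\) explicitly rather than matching separate inner and outer expansions.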

To illustrate typical behavior near a (simple) turning point , consider the equation

for a smooth h(x) > 0. Oscillatory behavior for x < 0 and exponential behavior for x > 0 are provided by the WKB solutions. Locally, i.e. near the turning point x = 0, we naturally use the Airy equation

as a comparison equation. Its linearly independent solutions are the Airy functions Ai(t) and Bi(t) (cf. Olver et al. [361]). Their asymptotic behavior as \(t \rightarrow \pm \infty \) is well known, e.g.,

The connections to the WKB solutions follow the Langer transformation

for constants \(C_{1}\) and \(C_{2}\),

and the corresponding limits as \(t = \frac{S(x)} {\epsilon ^{2/3}} \rightarrow \pm \infty \) (cf. Wasow [515] for details of the so-called connection problem). Expansions can also be found for multiple and even coalescing turning points. Fowkes [152] solves the problem using multiple scale methods.
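The Airy-function asymptotics can be checked in a few lines. The sketch evaluates Ai(t) from its convergent Maclaurin series, using \(\mathrm{Ai}(0) = 1/(3^{2/3}\varGamma (2/3))\) and \(\mathrm{Ai}^{\prime}(0) = -1/(3^{1/3}\varGamma (1/3))\). That series is reliable only for moderate t, because of cancellation for large positive t. The result is compared with the standard leading behavior \(\mathrm{Ai}(t) \sim e^{-\zeta }/(2\sqrt{\pi }\,t^{1/4})\), \(\zeta = \frac{2}{3}t^{3/2}\), optionally with the first correction factor \(1 - \frac{5}{72\zeta }\). The function names are ours.

```python
import math

# Ai(0) and Ai'(0) in terms of the gamma function.
AI0 = 1.0 / (3.0 ** (2.0 / 3.0) * math.gamma(2.0 / 3.0))
AIP0 = -1.0 / (3.0 ** (1.0 / 3.0) * math.gamma(1.0 / 3.0))


def airy_ai_series(t, kmax=60):
    """Ai(t) = Ai(0) f(t) + Ai'(0) g(t) from the convergent Maclaurin series.

    f = 1 + t^3/(2*3) + t^6/(2*3*5*6) + ...,  g = t + t^4/(3*4) + t^7/(3*4*6*7) + ...
    Cancellation between the two sums limits this to moderate t > 0.
    """
    f_term, g_term = 1.0, t
    f_sum, g_sum = f_term, g_term
    t3 = t ** 3
    for k in range(kmax):
        f_term *= t3 / ((3 * k + 2) * (3 * k + 3))
        g_term *= t3 / ((3 * k + 3) * (3 * k + 4))
        f_sum += f_term
        g_sum += g_term
    return AI0 * f_sum + AIP0 * g_sum


def airy_ai_asymptotic(t, terms=1):
    """e^{-zeta} / (2 sqrt(pi) t^{1/4}), zeta = (2/3) t^{3/2}, valid as t -> +infinity."""
    zeta = 2.0 / 3.0 * t ** 1.5
    lead = math.exp(-zeta) / (2.0 * math.sqrt(math.pi) * t ** 0.25)
    if terms == 1:
        return lead
    return lead * (1.0 - 5.0 / (72.0 * zeta))   # first correction term


for t in (1.0, 2.0, 3.0, 4.0, 5.0):
    exact = airy_ai_series(t)
    print(f"t={t:.0f}  Ai={exact:.6e}  ratio(1 term)={airy_ai_asymptotic(t) / exact:.5f}  "
          f"ratio(2 terms)={airy_ai_asymptotic(t, 2) / exact:.5f}")
```

Already at t = 5 the one-term formula is within about 1% of Ai(t), and the correction factor improves this markedly. For the connection problem, the analogous oscillatory formulas as t → −∞ are what link the two sides of the turning point.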

More generally, it’s often valuable to realize the equivalence of the Riccati differential equation

and second-order linear homogeneous differential equations. The transformation

in (2.45) implies that w will satisfy the linear equation

Many times, its solutions can be provided in terms of special functions, thereby giving solutions y of the Riccati equation (2.45) as well through (2.46). On the other hand, if we can guess (or otherwise ascertain) a differentiable solution y of the Riccati equation, it determines a nontrivial solution w of the linear equation (2.47) and, by reduction of order, the general solution. Other transformations for linear equations are given in Kamke [232] and Fedoryuk [145].
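As a hedged illustration, write the Riccati equation as \(y^{\prime} = q_{0}(x) + q_{1}(x)y + q_{2}(x)y^{2}\). This normalization is ours; (2.45) may be written differently. The substitution \(y = -w^{\prime}/(q_{2}w)\) then gives \(w^{\prime\prime} - (q_{1} + q_{2}^{\prime}/q_{2})w^{\prime} + q_{0}q_{2}w = 0\). We take the example \(y^{\prime} = x + y^{2}\), y(0) = 0, chosen because its linearization \(w^{\prime\prime} + xw = 0\) is of Airy type. The sketch integrates both forms with the same Runge–Kutta scheme and compares y with \(-w^{\prime}/w\).

```python
def rk4(rhs, x0, state, x_end, n_steps):
    """Classical RK4 for a first-order system; returns a list of (x, state)."""
    h = (x_end - x0) / n_steps
    x, state = x0, list(state)
    path = [(x, state)]
    for i in range(n_steps):
        k1 = rhs(x, state)
        k2 = rhs(x + h / 2, [s + h / 2 * k for s, k in zip(state, k1)])
        k3 = rhs(x + h / 2, [s + h / 2 * k for s, k in zip(state, k2)])
        k4 = rhs(x + h, [s + h * k for s, k in zip(state, k3)])
        state = [s + h / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4)]
        x = x0 + (i + 1) * h
        path.append((x, state))
    return path


def riccati_rhs(x, s):
    # y' = q0 + q1*y + q2*y^2 with q0 = x, q1 = 0, q2 = 1
    return [x + s[0] ** 2]


def linear_rhs(x, s):
    # w'' - (q1 + q2'/q2) w' + q0*q2*w = 0 reduces to w'' = -x*w; state = [w, w']
    return [s[1], -x * s[0]]


X_END, N = 1.2, 1200
direct = rk4(riccati_rhs, 0.0, [0.0], X_END, N)
# w(0) = 1, w'(0) = 0 makes y(0) = -w'(0)/w(0) = 0 match the Riccati data.
linear = rk4(linear_rhs, 0.0, [1.0, 0.0], X_END, N)
max_gap = max(abs(d[1][0] - (-l[1][1] / l[1][0])) for d, l in zip(direct, linear))
print(f"y(1.2) = {direct[-1][1][0]:.10f}, max |y - (-w'/w)| = {max_gap:.1e}")
```

The two computations agree to roundoff on this interval, where w stays positive. Past the first zero of w, the Riccati solution blows up, which the linear formulation exposes directly.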

Note, in particular, that the second-order linear equation

can be converted to

by setting

and that the latter equation can be solved asymptotically by taking

or, equivalently, by setting

for a power series C (cf. Bender and Orszag [36]), which can be sought termwise with respect to ε.

(d) The Regular Perturbation Procedure

In the following, we shall consider it natural and straightforward (even central to singular perturbations) to use a regular perturbation method to find power series solutions to nonlinear vector initial value problems

based on knowing a smooth vector solution \(x_{0}(t)\) to the limiting nonlinear problem

on some bounded interval 0 ≤ t ≤ T. Assuming sufficient smoothness of f and c and the series expansions

with smooth coefficients \(f_{j}\) and

we shall let

Expanding about ε = 0,

and equating successive coefficients of powers of ε in (2.52), we naturally require

etc. These linear equations for \(x_{j}\) with j ≥ 1 can be successively and uniquely solved using the nonsingular fundamental matrix Φ for the linearized homogeneous system

for the Jacobian

and the identity matrix I (cf. Brauer and Nohel [59]). When A is constant, Φ is the matrix exponential \(e^{At}\). Recall the variation of constants (parameters) method to solve the linear vector initial value problem

Set

for an unspecified vector w. First note that the unique Φ can be found by iterating in the integral equation

corresponding to (2.57). This yields the approximations

etc. (sometimes called the matrizant), which converge. Differentiating y, we get \(\dot{y} =\dot{\varPhi } w +\varPhi \dot{ w} = A\varPhi w + b(t)\), so we will need

Integrating, we uniquely obtain \(w(t) = y(0) +\int _{ 0}^{t}\varPhi ^{-1}(s)b(s)\,ds\) (since Φ will be, at least locally, nonsingular). Thus,

as can be readily checked.
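For constant A, the matrizant iteration can be carried out exactly on matrix polynomials in t. Starting from \(\varPhi _{0} = I\), the k-th iterate of \(\varPhi (t) = I +\int _{0}^{t}A\varPhi (s)\,ds\) is the Taylor partial sum \(\sum _{j\leq k}(At)^{j}/j!\) of \(e^{At}\). The sketch below uses plain Python and 2 × 2 matrices; the rotation generator A and the forcing b are our illustrative choices. It builds these iterates and then checks the variation-of-constants formula \(y(t) =\varPhi (t)\left [y(0) +\int _{0}^{t}\varPhi ^{-1}(s)b(s)\,ds\right ]\), using \(\varPhi ^{-1}(s) = e^{-As}\) and Simpson’s rule.

```python
import math

A = [[0.0, 1.0], [-1.0, 0.0]]          # y1' = y2, y2' = -y1 (illustrative)
IDENTITY = [[1.0, 0.0], [0.0, 1.0]]


def matmul(p, q):
    return [[sum(p[i][k] * q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def matvec(p, v):
    return [p[0][0] * v[0] + p[0][1] * v[1], p[1][0] * v[0] + p[1][1] * v[1]]


def picard_coefficients(iterations):
    """Coefficients C_j (of t^j) of the Picard iterate Phi_k, k = iterations.

    Phi_{k+1}(t) = I + int_0^t A Phi_k(s) ds, integrated termwise:
    the t^{j+1} coefficient of Phi_{k+1} is A C_j / (j + 1).
    """
    coeffs = [IDENTITY]
    for _ in range(iterations):
        coeffs = [IDENTITY] + [
            [[entry / (j + 1) for entry in row] for row in matmul(A, c)]
            for j, c in enumerate(coeffs)
        ]
    return coeffs


def evaluate(coeffs, t):
    """Evaluate the matrix polynomial sum_j C_j t^j."""
    phi = [[0.0, 0.0], [0.0, 0.0]]
    for j, c in enumerate(coeffs):
        for r in range(2):
            for s in range(2):
                phi[r][s] += c[r][s] * t ** j
    return phi


def variation_of_constants(t, y0, b, coeffs, n=100):
    """y(t) = Phi(t)[y0 + int_0^t Phi(-s) b(s) ds] via composite Simpson (n even)."""
    h = t / n
    acc = [0.0, 0.0]
    for i in range(n + 1):
        s = i * h
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        v = matvec(evaluate(coeffs, -s), b(s))
        acc = [a + weight * vi for a, vi in zip(acc, v)]
    w = [y0[k] + h / 3 * acc[k] for k in range(2)]
    return matvec(evaluate(coeffs, t), w)


coeffs = picard_coefficients(20)
phi1 = evaluate(coeffs, 1.0)
print(phi1, [[math.cos(1.0), math.sin(1.0)], [-math.sin(1.0), math.cos(1.0)]])
# Forcing b = (0, 1) with y(0) = 0 has the exact solution (1 - cos t, sin t).
y1 = variation_of_constants(1.0, [0.0, 0.0], lambda s: [0.0, 1.0], coeffs)
print(y1, [1.0 - math.cos(1.0), math.sin(1.0)])
```

For nonconstant A(t), the same iteration applies, but the integrals must then be done numerically at each step.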

Knowing the solution \(x_{0}(t)\) (of (2.53)) for ε = 0 and the resulting Φ (cf. (2.57)), we next obtain

and, then, in turn

etc. We expect the series (2.54) for x to converge uniformly for ε small and bounded t. This justifies the regular perturbation technique, which we will henceforth apply routinely. When the assumptions don’t apply, the asymptotic solution may not simply be a power series in ε. Puiseux expansions in fractional powers of ε (cf. [17]) are a possibility. As we will ultimately find, however, we cannot expect to blindly use these approximate solutions on intervals where t becomes unbounded. A proof on finite intervals is given in de Jager and Jiang [224]. See Smith [466] for the celebrated example of Einstein’s equation for the motion of Mercury about the sun.
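A scalar example shows the procedure and its error behavior; it is ours, chosen because its exact solution is elementary. For \(\dot{x} = -x +\epsilon x^{2}\), x(0) = 1, the limiting solution is \(x_{0} = e^{-t}\). The Jacobian is −1, so \(\varPhi (t) = e^{-t}\). The first-order equation \(\dot{x}_{1} = -x_{1} + x_{0}^{2}\), \(x_{1}(0) = 0\), then gives \(x_{1} = e^{-t} - e^{-2t}\) by variation of constants. The exact solution of this Bernoulli equation is \(x = 1/(\epsilon + (1-\epsilon )e^{t})\).

```python
import math


def exact(t, eps):
    """x' = -x + eps*x^2, x(0) = 1 is a Bernoulli equation; 1/x solves a linear ODE."""
    return 1.0 / (eps + (1.0 - eps) * math.exp(t))


def x0(t):
    """Limiting problem (eps = 0): x0' = -x0, x0(0) = 1."""
    return math.exp(-t)


def x1(t):
    """First correction: x1' = -x1 + x0^2, x1(0) = 0.

    The Jacobian is -1, so Phi(t) = e^{-t}, and variation of constants gives
    x1(t) = e^{-t} * int_0^t e^{s} e^{-2s} ds = e^{-t} - e^{-2t}.
    """
    return math.exp(-t) - math.exp(-2.0 * t)


def max_errors(eps, t_end=5.0, n=1001):
    """Max errors on [0, t_end] of the one-term and two-term approximations."""
    ts = [t_end * i / (n - 1) for i in range(n)]
    one_term = max(abs(exact(t, eps) - x0(t)) for t in ts)
    two_term = max(abs(exact(t, eps) - (x0(t) + eps * x1(t))) for t in ts)
    return one_term, two_term


for eps in (0.1, 0.05, 0.025):
    e1, e2 = max_errors(eps)
    print(f"eps = {eps:<6} error(x0) = {e1:.2e}   error(x0 + eps*x1) = {e2:.2e}")
```

Halving ε roughly halves the one-term error and quarters the two-term error, the expected O(ε) and O(ε²) behavior. Here the expansion happens to stay accurate as t grows, because the solution decays. In general, as noted above, no such uniformity should be expected.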

More generally, one might also use such regular perturbation (i.e., power series) methods to solve operator equations

for small ε, justified by applying the implicit function theorem under appropriate conditions (cf. Miller [318]) to get an analytic solution u(x, ε) (cf. Baumgärtel [32] and Krantz and Parks [262]). Also, see Kato [243] and Avrachenkov et al. [17] regarding linear operators.
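A scalar example, chosen here only for illustration, shows the mechanism. For \(F(u,\epsilon) = u - 1 - \epsilon u^2\), we have \(F(1,0) = 0\) and \(F_u(1,0) = 1 \neq 0\), so the implicit function theorem gives an analytic root \(u(\epsilon)\) near 1. Substituting \(u = \sum_n a_n\epsilon^n\) and equating powers of ε gives \(a_0 = 1\) and \(a_n = \sum_{i+j=n-1} a_i a_j\), which are the Catalan numbers. The sketch compares the partial sum with the exact root \(u = (1 - \sqrt{1-4\epsilon})/(2\epsilon)\).

```python
import math


def series_coefficients(n_terms):
    """Coefficients a_n of u(eps) = sum a_n eps^n solving u = 1 + eps*u**2."""
    a = [1]
    for n in range(1, n_terms):
        a.append(sum(a[i] * a[n - 1 - i] for i in range(n)))
    return a


def exact_root(eps):
    # Root of eps*u**2 - u + 1 = 0 that tends to 1 as eps -> 0 (needs 0 < eps < 1/4).
    return (1 - math.sqrt(1 - 4 * eps)) / (2 * eps)


coeffs = series_coefficients(11)
print(coeffs[:6])  # [1, 1, 2, 5, 14, 42]
eps = 0.05
partial = sum(c * eps**n for n, c in enumerate(coeffs))
print(partial, exact_root(eps))
```

The series converges for \(|\epsilon| < 1/4\). At \(\epsilon = 1/4\) the root is u = 2, where \(F_u = 1 - 2\epsilon u\) vanishes and the implicit function theorem no longer applies.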

A classic example involves the zeroes of the Wilkinson polynomial

(cf. Wilkinson [523], Bender and Orszag [36], and Moler [324]). Its roots are extremely sensitive to perturbations of the coefficients, and small perturbations can push some of them off the real axis. Corresponding to k = 15, the first correction is of the order \(5 \times 10^{10}\,\epsilon\), showing the extreme sensitivity of that root to the perturbation.
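The sensitivity is easy to observe numerically. Because the displayed polynomial is not reproduced here, the sketch assumes Wilkinson's original experiment. That is the polynomial \(\prod_{k=1}^{20}(x - k)\) with its \(x^{19}\) coefficient changed from −210 to \(-210 - 2^{-23}\). The sketch relies on NumPy's `numpy.poly`, which builds coefficients from roots, and `numpy.roots`, which finds roots via a companion matrix.

```python
import numpy as np

# Coefficients of prod_{k=1}^{20} (x - k), highest degree first.
coeffs = np.poly(np.arange(1, 21))
perturbed = coeffs.copy()
perturbed[1] -= 2.0**-23  # x^19 coefficient: -210 -> -210 - 2^-23

roots = np.roots(perturbed)
# Sort by real part for readability; several roots leave the real axis.
roots = roots[np.argsort(roots.real)]
for r in roots:
    print(f"{r.real:10.5f} {r.imag:+10.5f}i")

max_imag = np.max(np.abs(roots.imag))
print("largest imaginary part:", max_imag)
```

A relative change of less than \(10^{-9}\) in a single coefficient moves several roots off the real axis, with imaginary parts of order one.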

To end the chapter, we want to emphasize that we have severely restricted the topics covered, keeping in mind our limited later needs. More generally, the use of iteration methods to obtain asymptotic expansions is often very efficient, as is the use of convergence acceleration methods (cf. Weniger [518]), among many other computational techniques. We recommend Barenblatt’s unique development of intermediate asymptotics ([27] and [28]), which relates and extends basic concepts from dimensional analysis, self-similarity, and scaling. Consulting the extensive literature cited is highly recommended! Bosley [53] even provides a technique for numerically verifying asymptotic expansions.

Among recent texts, Zeytounian [533] attempts to model viscous, compressible, heat-conducting, Newtonian, baroclinic, and nonadiabatic fluid flow using

the art of modeling assisted, rationally, by the spirit of asymptotics.

Motivation for such rational asymptotic modeling is found in the autobiography Zeytounian [534].

Example 1

Gobbi and Spigler [171] consider the scalar, singularly perturbed, linear two-point boundary value problem

Since the auxiliary equation \(\epsilon^2\lambda^2 - 1 = 0\) has the roots \(\lambda = \pm 1/\epsilon\), providing the complementary solutions \(e^{-x/\epsilon}\) and \(e^{-(1-x)/\epsilon}\), we can use variation of parameters to find the general solution

of the nonhomogeneous differential equation (2.63). The boundary conditions imply that

up to asymptotically negligible amounts. Since \(\varGamma (\frac{1} {2}) = \sqrt{\pi }\),

Within (0, 1), the first two terms are asymptotically negligible, while the primary contributions to the two integrals come from near t = x. Indeed,

and the second integral has the same limit. Thus, as expected,

Because the asymptotic solution is symmetric about x = 1∕2 and the outer limit is unbounded at the endpoints, further analysis is necessary to determine the asymptotic behavior in the endpoint boundary layers. Computations for ε = 0.01 and 0.0025 show spikes near 0 and 1 with \(O(1/\sqrt{\epsilon })\) maxima, consistent with the analysis.
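The localization near t = x can be checked numerically. Because the equation itself is not reproduced here, the sketch assumes it has the form \(\epsilon^2 y'' - y = -f(x)\). For that form, variation of parameters gives the particular solution \(\frac{1}{2\epsilon}\int_0^1 e^{-|x-t|/\epsilon} f(t)\,dt\). The sketch uses the smooth, hypothetical \(f(t) = \cos t\) and splits the integral at t = x. It shows each half approaching f(x)/2 at an interior point as ε decreases.

```python
import math


def simpson(g, a, b, n=2000):
    """Composite Simpson's rule on [a, b] with n (even) panels."""
    h = (b - a) / n
    total = g(a) + g(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(a + k * h)
    return total * h / 3


def halves(x, eps, f=math.cos):
    """The two pieces of (1/(2 eps)) int_0^1 exp(-|x - t|/eps) f(t) dt, split at t = x."""
    left = simpson(lambda t: math.exp(-(x - t) / eps) * f(t), 0.0, x) / (2 * eps)
    right = simpson(lambda t: math.exp(-(t - x) / eps) * f(t), x, 1.0) / (2 * eps)
    return left, right


x = 0.5
for eps in (0.05, 0.01, 0.0025):
    left, right = halves(x, eps)
    print(eps, left, right, left + right, math.cos(x))
```

Each half carries an \(O(\epsilon)\) error of opposite sign. Their sum therefore approaches f(x) faster, with an \(O(\epsilon^2)\) error.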

Example 2

(cf. Kapila [234]). Because \(\dot{y} > 0\) for 0 < y < 1, we know that the solution y increases monotonically from ε toward the rest point y = 1 as \(t \rightarrow \infty \). The exact solution can be obtained by separating variables since integrating \(\left (\frac{1} {y} + \frac{1} {1-y} + \frac{1} {y^{2}} \right )dy = dt\) implies that

This implicit result shows, e.g., that

while

and

Thus, the ultimate explosion is long delayed when ε is small.
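The separated form corresponds to the equation \(\dot{y} = y^2(1-y)\), \(y(0) = \epsilon\), because \(\frac{1}{y} + \frac{1}{1-y} + \frac{1}{y^2} = \frac{1}{y^2(1-y)}\). Integrating from y = ε gives the implicit solution \(t = \frac{1}{\epsilon} - \frac{1}{y} + \ln\frac{y}{1-y} - \ln\frac{\epsilon}{1-\epsilon}\). The sketch evaluates the time at which y reaches 1/2, which grows like 1/ε. That level is a convenient ignition marker chosen here rather than taken from the text.

```python
import math


def time_to_reach(y, eps):
    """Exact time at which the solution of y' = y**2 (1 - y), y(0) = eps, reaches y."""
    return (1 / eps - 1 / y) + math.log(y / (1 - y)) - math.log(eps / (1 - eps))


for eps in (0.1, 0.01, 0.001):
    t_half = time_to_reach(0.5, eps)
    print(eps, t_half, eps * t_half)  # eps * t_half tends to 1 as eps -> 0
```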

For bounded values of t, the preignition solution can be represented by a small regular perturbation expansion

satisfying

and

etc. termwise. Thus

This breaks down as the slow time \(\tau \equiv \epsilon t\) grows. Indeed, the explosion takes place at about

as can be verified numerically for, say, ε = 1∕10. One might say that a boundary layer (nonuniform convergence) occurs as \(t \rightarrow \infty \).
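Both statements can be checked for ε = 1/10. The sketch integrates \(\dot{y} = y^2(1-y)\), \(y(0) = \epsilon\), the equation implied by the separated form above, using the classical fourth-order Runge-Kutta method. It reports when y first reaches 1/2 and compares the numerical solution with the three-term preignition expansion \(y \approx \epsilon + \epsilon^2 t + \epsilon^3(t^2 - t)\). That expansion is our own derivation, obtained by substituting \(y = \epsilon Y\) and solving termwise, since the text's displayed terms are not reproduced here. The step size and the sample times are also our choices.

```python
import math


def rhs(y):
    return y * y * (1 - y)


def rk4_path(eps, t_end, dt=1e-3):
    """Classical RK4 for y' = y**2 (1 - y), y(0) = eps; returns lists of t and y."""
    ts, ys = [0.0], [eps]
    y = eps
    n_steps = int(round(t_end / dt))
    for k in range(n_steps):
        k1 = rhs(y)
        k2 = rhs(y + 0.5 * dt * k1)
        k3 = rhs(y + 0.5 * dt * k2)
        k4 = rhs(y + dt * k3)
        y += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        ts.append((k + 1) * dt)
        ys.append(y)
    return ts, ys


def regular_expansion(t, eps):
    # Three-term preignition expansion y ~ eps + eps^2 t + eps^3 (t^2 - t).
    return eps + eps**2 * t + eps**3 * (t * t - t)


def crossing_time(ts, ys, level):
    # First time the numerical solution reaches `level` (linear interpolation).
    for i in range(1, len(ys)):
        if ys[i] >= level:
            frac = (level - ys[i - 1]) / (ys[i] - ys[i - 1])
            return ts[i - 1] + frac * (ts[i] - ts[i - 1])
    return None


eps = 0.1
ts, ys = rk4_path(eps, t_end=15.0)
for t_check in (1.0, 5.0, 10.0):
    i = int(round(t_check / 1e-3))
    print(t_check, ys[i], regular_expansion(t_check, eps))

t_half_exact = 1 / eps - 2 + math.log((1 - eps) / eps)
print(crossing_time(ts, ys, 0.5), t_half_exact)
```

The expansion is accurate at t = 1 but badly off by t = 10, where τ = εt = 1. This matches the breakdown described in the text.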

Readers should be aware that one of the most successful texts presenting asymptotic methods has been Bender and Orszag [36], reprinted by Springer in 1999. Developed at MIT to teach the ubiquitous course in advanced mathematical methods for scientists and engineers, it features easy, intermediate, difficult, and theoretical sections, corresponding exercises, and quotes from Sherlock Holmes at the beginning of each chapter. Paulsen [387] is a well-written new textbook that seeks to simplify Bender and Orszag and make its subject more accessible.

To illustrate the centrality of asymptotics, we quote Dvortsina [128] regarding the prominent Soviet physicist I. M. Lifshitz (co-author of many outstanding texts with Nobel prizewinner Lev Landau):

Everyone who knew Lifshitz remembers well that every time he began a discussion of any work he asked first of all: “What small parameter did you choose?” He meant to say that in the majority of problems solved by theoretical physics the smallness of some quantity is always used.

The author hopes that, after reading this chapter and perhaps trying the exercises, you no longer think asymptotic approximations are some sort of mystical construction. They’re down to earth!

Exercises

1. (Awrejcewicz and Krysko [18]) Show that

$$\displaystyle\begin{array}{rcl} \sin 2\epsilon & \sim & 2\epsilon -\frac{4} {3}\epsilon ^{3} + \frac{4} {15}\epsilon ^{5} +\ldots {}\\ & \sim & 2\tan \epsilon - 2\tan ^{3}\epsilon + 2\tan ^{5}\epsilon +\ldots {}\\ & \sim & 2\ln (1+\epsilon )+\ln (1+\epsilon ^{2})-2\ln (1 +\epsilon ^{3})+\ln (1 +\epsilon ^{4})-\frac{2} {15}\ln (1+\epsilon ^{5})+\ldots {}\\ & \sim & 6\left ( \frac{\epsilon } {3 + 2\epsilon ^{2}}\right ) -\frac{756} {5} \left ( \frac{\epsilon } {3 + 2\epsilon ^{2}}\right )^{5} +\ldots. {}\\ \end{array}$$

2. (Awrejcewicz and Krysko [18]) Consider

$$\displaystyle{f(x,\epsilon ) = \sqrt{x+\epsilon }.}$$

Note that \(f(0,\epsilon ) = \sqrt{\epsilon }\). Show that

$$\displaystyle{f(x,\epsilon ) = \sqrt{x}\left (1 + \frac{\epsilon } {2x} - \frac{\epsilon ^{2}} {8x^{2}} + \frac{\epsilon ^{3}} {16x^{3}}-\ldots \right )}$$

when \(\frac{\epsilon }{x} \rightarrow 0\), so the expansion is nonuniform: it breaks down near x = 0, where \(x = O(\epsilon)\).

3.

a. Find a power series expansion for the solution of the initial value problem

$$\displaystyle{y^{{\prime}} = 1 + y^{2},\ \ y(0) =\epsilon.}$$

b. Find the exact solution and determine the first four terms of its power series about ε = 0.

4. Find the first three terms of two power series solutions

$$\displaystyle{u(x,\epsilon ) = u_{0}(x) +\epsilon u_{1}(x) +\epsilon ^{2}u_{ 2}(x)+\ldots }$$

of the nonlinear differential equation

$$\displaystyle{\epsilon u^{{\prime\prime}} = u^{2} - u +\epsilon x.}$$

5.

a. Find a regular perturbation solution to the initial value problem

$$\displaystyle{y^{{\prime}} = 1 + y^{2} +\epsilon y,\ \ \ y(0) =\epsilon.}$$

Where does it become singular?

b. Solve the linear equation

$$\displaystyle{w^{{\prime\prime}}-\epsilon w^{{\prime}} + w = 0}$$

with w(0) = 1 and w′(0) = −ε, and determine

$$\displaystyle{y = -\frac{w'} {w}.}$$

Where is it singular?

6. (cf. Hoppensteadt [213]) Consider the initial value problem

$$\displaystyle{\dot{x} = -x^{3} +\epsilon x,\ \ \ x(0) = 1,\ \ t \geq 0.}$$

a. Find the first two terms of the regular perturbation expansion.

b. Find the exact solution and its limit as \(t \rightarrow \infty \).

c. Explain the breakdown of the regular perturbation expansion.

7. (Hsieh and Sibuya [220]) Consider the two-point problem

$$\displaystyle{y^{{\prime\prime}} =\epsilon \sin \left ( \frac{x} {100 - y^{2}}\right ),-1 \leq x \leq 1,\ \ \ y(-1) = y(1) = 0.}$$

Obtain the solution y = ϕ(x, c, ε) by “shooting,” i.e., by solving the initial value problem

$$\displaystyle{y^{{\prime\prime}} =\epsilon \sin \left ( \frac{x} {100 - y^{2}}\right ),\ \ y(-1) = 0,\ \ y^{{\prime}}(-1) = c(\epsilon )}$$

for an appropriate c(ε). Determine the first two terms in the power series for y(x, ε) and c(ε). Observe the extensive and effective use of the shooting method in Hastings and McLeod [198].

8. A typical ODE exercise is to compute the terms of the power series solution to the initial value problem

$$\displaystyle{y^{{\prime}} = x^{2} + y^{2},\ \ \ y(0) = 2.}$$

a. Set

$$\displaystyle{y(x) =\sum _{ n=0}^{\infty }C_{ n}x^{n}}$$

and show that \(C_0 = 2\), \(C_1 = 4\), \(C_2 = 8\), \(C_{3 } = \frac{49} {3}\), and \(C_{n+1} = \frac{1} {n+1}\sum _{p=0}^{n}C_{ p}C_{n-p}\), n ≥ 3.

b. Convert the equation to the Weber equation

$$\displaystyle{w^{{\prime\prime}} + x^{2}w = 0}$$

by using the representation \(w = e^{-\int_0^x y(s)\,ds}\).

c. Find the power series for w about x = 0, checking that \(y = -\frac{w^{{\prime}}} {w}\). The radius of convergence for w is infinite. Note that w can be expressed in terms of parabolic cylinder (or Weber) functions.

9. (Kevorkian and Cole [249]) Consider the initial value problem

$$\displaystyle{u^{{\prime\prime}} + u =\epsilon f(x)u}$$

$$\displaystyle{u(0) = 0,\ \ u^{{\prime}}(0) = 1,\ \ x \geq 0.}$$

Show that a necessary condition for the regular perturbation expansion of the solution to be uniformly valid on x ≥ 0 is that \(\int_0^x f(s)\,ds\) be bounded there.
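A numerical sanity check for Exercise 1: if each right-hand side agrees with sin 2ε through the ε⁵ term, then (sin 2ε − approximation)/ε⁵ must tend to zero with ε. The sketch uses the coefficients that the ε⁵ balance requires, namely +2 tan⁵ε in the second form and −(2/15) ln(1 + ε⁵) in the third. A wrong ε⁵ coefficient would leave this ratio near a nonzero constant.

```python
import math


def forms(e):
    """Truncated right-hand sides of Exercise 1, evaluated at eps = e."""
    T = math.tan(e)
    u = e / (3 + 2 * e * e)
    return {
        "power": 2 * e - 4 / 3 * e**3 + 4 / 15 * e**5,
        "tan": 2 * T - 2 * T**3 + 2 * T**5,
        "log": (2 * math.log1p(e) + math.log1p(e**2) - 2 * math.log1p(e**3)
                + math.log1p(e**4) - 2 / 15 * math.log1p(e**5)),
        "rational": 6 * u - 756 / 5 * u**5,
    }


def scaled_errors(e):
    """(sin 2e - approximation) / e**5 for each form; should tend to 0 as e -> 0."""
    target = math.sin(2 * e)
    return {name: (target - value) / e**5 for name, value in forms(e).items()}


for e in (0.1, 0.03, 0.01):
    print(e, scaled_errors(e))
```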

References

M. Abramowitz, I.A. Stegun (eds.), Handbook of Mathematical Functions, vol. 55 of National Bureau of Standards Applied Mathematics Series (U.S. Government Printing Office, Washington, DC, 1964). Reprinted by Dover, New York

I.V. Andrianov, L.I. Manevitch, Asymptotology: Ideas, Methods, and Applications (Kluwer, Dordrecht, 2002)

V.I. Arnold, V.V. Kozlov, A.I. Neishtadt, Mathematical Aspects of Classical and Celestial Mechanics, 2nd edn. (Springer, Berlin, 1997)

K.E. Avrachenkov, J.A. Filar, P.G. Howlett, Analytic Perturbation Theory and Its Applications (SIAM, Philadelphia, 2013)

J. Awrejcewicz, V.A. Krysko, Introduction to Asymptotic Methods (Chapman and Hall/CRC, Boca Raton, FL, 2006)

B. Baillaud, H. Bourget (eds.), Correspondance d’Hermite et de Stieltjes (Gauthier-Villars, Paris, 1905)

E.J. Barbeau, P.J. Leah, Euler’s 1760 paper on divergent series. Historia Math. 3, 141–160 (1976)

G.I. Barenblatt, Scaling, Self-Similarity, and Intermediate Asymptotics (Cambridge University Press, Cambridge, 1996)

G.I. Barenblatt, Scaling (Cambridge University Press, Cambridge, 2003)

H. Baumgärtel, Analytic Perturbation Theory for Matrices and Operators (Birkhäuser, Basel, 1985)

R. Beals, R. Wong, Special Functions: A Graduate Text (Cambridge University Press, Cambridge, 2010)

C.M. Bender, S.A. Orszag, Advanced Mathematical Methods for Scientists and Engineers (McGraw-Hill, New York, 1978)

I.P. van den Berg, Nonstandard Asymptotic Analysis, vol. 1249 of Lecture Notes in Math. (Springer, Heidelberg, 1987)

N. Bleistein, R.A. Handelsman, Asymptotic Expansions of Integrals (Holt, Rinehart and Winston, New York, 1975)

E. Borel, Lectures on Divergent Series, 2nd edn. (U.S. Energy Research and Development Administration, Washington, DC, 1975). Translated by C. L. Critchfield and A. Vakar

D.L. Bosley, A technique for the numerical verification of asymptotic expansions. SIAM Rev. 38, 128–135 (1996)

J.P. Boyd, Weakly Nonlocal Solitary Waves and Beyond-All-Orders Asymptotics (Kluwer, Dordrecht, 1998)

J.P. Boyd, The devil’s invention: Asymptotic, superasymptotic, and hyperasymptotic series. Acta Appl. Math. 56, 1–98 (1999)

J.P. Boyd, Hyperasymptotics and the linear boundary layer problem: Why asymptotic series diverge. SIAM Rev. 47, 553–575 (2005)

F. Brauer, J. Nohel, The Qualitative Theory of Ordinary Differential Equations: An Introduction (Benjamin, New York, 1969)

D.M. Cannell, George Green: Mathematician and Physicist, 1793–1843: The Background to his Life and Work (Athlone Press, London, 1993)

A.-L. Cauchy, Sur l’emploi légitime des séries divergentes. Comp. Rend. 17, 370–376 (1843)

H. Cheng, Advanced Analytic Methods in Applied Mathematics, Science, and Engineering (LuBan Press, Boston, 2007)

E.A. Coddington, N. Levinson, Theory of Ordinary Differential Equations (McGraw-Hill, New York, 1955)

E.T. Copson, Asymptotic Expansions (Cambridge University Press, Cambridge, 1965)

O. Costin, Asymptotics and Borel Summability (CRC Press, Boca Raton, FL, 2009)

R. Courant, D. Hilbert, Methods of Mathematical Physics, vol. 1 (Interscience, New York, 1953)

R.B. Dingle, Asymptotic Expansions: Their Derivation and Interpretation (Academic Press, London, 1973)

N.S. Dvortsina, Ilia Mikhailovich Lifshitz, 1917–1982 (Nauka, Moscow, 1989). In Russian

A. Erdélyi, Asymptotic Expansions (Dover, New York, 1956)

M.V. Fedoryuk, Asymptotic Analysis (Springer, New York, 1994)

W.B. Ford, Studies in Divergent Series and Summability (Macmillan, New York, 1916)

N.D. Fowkes, A singular perturbation method. Part I. Quart. Appl. Math. XXVI, 57–69 (1968)

N. Fröman, P.O. Fröman, Physical Problems Solved by the Phase-Integral Method (Cambridge University Press, Cambridge, 2002)

R. Gobbi, R. Spigler, Comparing Shannon to autocorrelation-based wavelets for solving singularly perturbed boundary value problems. BIT 52(1), 21–43 (2012)

J. Gray, Henri Poincaré: A Scientific Biography (Princeton University Press, Princeton, NJ, 2012)

G. Green, An Essay on the Applications of Mathematical Analysis to the Theories of Electricity and Magnetism. Printed for the author by T. Wheelhouse, Nottingham, 1828

G.H. Hardy, Orders of Infinity: the “Infinitärcalcül” of Paul DuBois-Reymond (Cambridge University Press, Cambridge, 1910)

G.H. Hardy, A Mathematician’s Apology (Cambridge University Press, Cambridge, 1940)

G.H. Hardy, Divergent Series (Oxford University Press, Oxford, 1949)

S.P. Hastings, J.B. McLeod, Classical Methods in Ordinary Differential Equations: with Applications to Boundary Value Problems (Amer. Math. Soc., Providence, 2012)

J. Heading, An Introduction to the Phase-Integral Methods (Methuen, London, 1962)

F.C. Hoppensteadt, Analysis and Simulation of Chaotic Systems, 2nd edn. (Springer, New York, 2000)

C.J. Howls, Exponential asymptotics and boundary value problems: keeping both sides happy at all orders. Proc. R. Soc. Lond. A 466, 2771–2794 (2010)

P.-F. Hsieh, Y. Sibuya, Basic Theory of Ordinary Differential Equations (Springer, New York, 1999)

E.M. de Jager, Jiang Furu, The Theory of Singular Perturbations (Elsevier, Amsterdam, 1996)

D.S. Jones, Introduction to Asymptotics: A Treatment using Nonstandard Analysis (World Scientific, Singapore, 1997)

E. Kamke, Differentialgleichungen Lösungsmethoden und Lösungen (Becker and Erler, Leipzig, 1944)

A.K. Kapila, Asymptotic Treatment of Chemically Reacting Systems (Pitman, London, 1983)

T. Kato, Perturbation Theory for Linear Operators (Springer, Berlin, 1976)

J.B. Keller, Accuracy and validity of the Born and Rytov approximations. J. Opt. Soc. Am. 59(8), 1003–1004 (1969)

J.B. Keller, R.M. Lewis, Asymptotic Methods for Partial Differential Equations: The Reduced Wave Equation and Maxwell’s Equation, vol. 1 of Surveys in Applied Mathematics, ed. by J.B. Keller, G. Papanicolaou, D.W. McLaughlin (Plenum, New York, 1995), pp. 1–82

J. Kevorkian, J.D. Cole, Multiple Scale and Singular Perturbation Methods (Springer, New York, 1996)

M. Kline, Mathematical Thought from Ancient to Modern Times (Oxford University Press, New York, 1972)

S.G. Krantz, H.R. Parks, The Implicit Function Theorem: History, Theory, and Applications (Birkhäuser, Boston, 2002)

M.D. Kruskal, Asymptotology, in Mathematical Models in the Physical Sciences, ed. by S. Drobot (Prentice-Hall, Englewood Cliffs, NJ, 1963), pp. 17–48

G.E. Latta, Singular perturbation problems. PhD thesis, California Institute of Technology, Pasadena, CA, 1951

D. Leavitt, The Indian Clerk: A Novel (Bloomsbury, New York, 2007)

J.E. Littlewood, A Mathematician’s Miscellany (Methuen, London, 1953)

J.A.M. McHugh, An historical survey of ordinary differential equations with a large parameter and turning points. Arch. Hist. Exact Sci. 7, 277–324 (1971)

P.D. Miller, Applied Asymptotic Analysis (Amer. Math. Soc., Providence, RI, 2006)

C. Moler, Wilkinson’s polynomials, MATLAB CENTRAL-Cleve’s corner: Cleve Moler on Mathematics and Computing (March 4, 2013)

J.A. Murdock, Perturbations: Theory and Methods (Wiley-Interscience, New York, 1991)

J.D. Murray, Asymptotic Analysis (Springer, New York, 1984)

A.B. Olde Daalhuis, Exponential asymptotics, in Orthogonal Polynomials and Special Functions: Leuven 2002, vol. 1817 of Lecture Notes in Mathematics, ed. by E. Koelink, W. Van Assche (Springer, Berlin, 2003), pp. 211–244

F.W.J. Olver, Asymptotics and Special Functions (Academic Press, New York, 1974)

F.W.J. Olver, D.W. Lozier, R.F. Boisvert, C.W. Clark, Handbook of Mathematical Functions (Cambridge University Press, Cambridge, 2010)

C.-H. Ou, R. Wong, Exponential asymptotics and adiabatic invariance of a simple oscillator. C. R. Acad. Sci. Paris Ser. I 343(7), 457–462 (2006)

R.B. Paris, Hadamard Expansions and Hyperasymptotic Evaluation: An Extension of the Method of Steepest Descents (Cambridge University Press, Cambridge, 2011)

W. Paulsen, Asymptotic Analysis and Perturbation Theory (CRC Press, Boca Raton, FL, 2014)

H. Poincaré, Sur les intégrales irrégulières des équations lineaires. Acta Math. 8, 295–344 (1886)

E.D. Rainville, Infinite Series (Macmillan, New York, 1967)

J.-P. Ramis, Séries divergentes et théories asymptotiques. In Panoramas et Synthèses, vol. 121 (Soc. Math. France, Paris, 1993), pp. 1–74

R.V. Ramnath, Multiple Scales Theory and Aerospace Applications (AIAA Education Series, Renton, VA, 2010)

G.C. Rota, Indiscrete Thoughts (Birkhäuser, Boston, 1997)

R. Roy, Sources in the Development of Mathematics: Series and Products from the Fifteenth to the Twenty-first Century (Cambridge University Press, Cambridge, 2011)

A. Schissel, The development of asymptotic solutions of linear ordinary differential equations, 1817–1920. Arch. Hist. Exact Sci. 16, 307–378 (1977)

A. Schissel, The initial development of the WKB solutions of linear second-order ordinary differential equations and their use in the connection problem. Historia Math. 4, 183–204 (1977)

D.R. Smith, Singular-Perturbation Theory: An Introduction with Applications (Cambridge University Press, Cambridge, 1985)

J. Spencer (with L. Florescu), Asymptopia (American Mathematical Society, Providence, 2014)

I. Stakgold, Green’s Functions and Boundary Value Problems (Wiley-Interscience, New York, 1979)

B. Yu. Sternin, V.E. Shatalov, Borel-Laplace Transform and Asymptotic Theory: Introduction to Resurgent Analysis (CRC Press, Boca Raton, FL, 1996)

T.J. Stieltjes, Recherches sur quelques séries semi-convergentes. Ann. de L’Éc. Norm. Sup. 3, 201–258 (1886)

J. Tucciarone, The development of the theory of summable divergent series from 1880 to 1925. Arch. Hist. Exact Sci. 10, 1–40 (1973)

F. Ursell, Integrals with a large parameter. A strong form of Watson’s lemma, in Elasticity, Mathematical Methods, and Applications, ed. by G. Eason, R.W. Ogden (Ellis Horwood, Chichester, 1991)

V.S. Varadarajan, Euler Through Time: A New Look at Old Themes (Amer. Math. Soc., Providence, RI, 2006)

F. Verhulst, Methods and Applications of Singular Perturbations: Boundary Layers and Multiple Timescale Dynamics (Springer, New York, 2005)

F. Verhulst, Henri Poincaré, Impatient Genius (Springer, New York, 2012)

W. Wasow, Asymptotic Expansions for Ordinary Differential Equations (Wiley-Interscience, New York, 1965)

W. Wasow, Linear Turning Point Theory (Springer, New York, 1985)

E.J. Weniger, Nonlinear sequence transformations for the acceleration and the summation of divergent series. Comp. Phys. Rep. 10, 189–371 (1989)

R.B. White, Asymptotic Analysis of Differential Equations (Imperial College Press, London, 2010)

E.T. Whittaker, G.N. Watson, A Course of Modern Analysis, 4th edn. (Cambridge University Press, Cambridge, 1952)

J.H. Wilkinson, The evaluation of zeroes of ill-conditioned polynomials: Part 1. Num. Math. 1, 150–166 (1959)

R. Wong, Asymptotic Approximation of Integrals (Academic Press, New York, 1989)

R. Wong, A panoramic view of asymptotics, in Foundations of Computational Mathematics, Hong Kong 2008, ed. by F. Cucker, A. Pinkus, M.J. Todd (Cambridge University Press, Cambridge, 2009), pp. 190–235

A. Zettl, Sturm-Liouville Theory (Amer. Math. Soc., Providence, RI, 2005)

R.Kh. Zeytounian, Navier-Stokes-Fourier Equations: A Rational Asymptotic Modeling Point of View (Springer, Heidelberg, 2012)

R.Kh. Zeytounian, Five Decades of Tackling Models for Stiff Fluid Dynamics Problems: A Scientific Autobiography (Springer, Berlin, 2014)


Copyright information

© 2014 Springer International Publishing Switzerland

About this chapter

Cite this chapter

O’Malley, R.E. (2014). Asymptotic Approximations. In: Historical Developments in Singular Perturbations. Springer, Cham. https://doi.org/10.1007/978-3-319-11924-3_2


DOI: https://doi.org/10.1007/978-3-319-11924-3_2


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-11923-6

Online ISBN: 978-3-319-11924-3

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)