Abstract

A reduced-order strategy based on the reduced basis (RB) method is developed for the efficient numerical solution of statistical inverse problems governed by PDEs in domains of varying shape. Usual discretization techniques are infeasible in this context, due to the prohibitive cost entailed by the repeated evaluation of PDEs and related output quantities of interest. A suitable reduced-order model is introduced to reduce computational costs and complexity. Furthermore, when dealing with inverse identification of shape features, a reduced shape representation allows to tackle the geometrical complexity. We address both challenges by considering a reduced framework built upon the RB method for parametrized PDEs and a parametric radial basis functions approach for shape representation. We present some results dealing with blood flows modelled by Navier-Stokes equations.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

In a parametrized context, given a mathematical model of a system the forward problem consists in evaluating some outputs of interest (depending on the PDE solution) for specified parameter inputs. Whenever some parameters are uncertain, we aim at inferring their values (and/or distributions) from indirect observations by solving an inverse problem: given an observed output, can we deduce the value of the parameters that resulted in this output? Parameter identification can be performed in two ways, either in a deterministic or in a statistical framework. In the former case, we solve an optimization problem by minimizing (in the least-square sense) the discrepancy between the output quantities predicted by the PDE model and observations: this leads to a single-point estimate in the parameter space, provided the optimization problem is feasible. In the latter case, we quantify the relative likelihood of the parameters, which are consistent with the observed output. Following a Bayesian approach, this results in the posterior probability density function, which includes information both on prior knowledge on parameters distribution and on the model used to compute the PDE-based outputs. Inverse problems governed by PDEs entail several computational challenges for current discretization techniques, such as the finite element method. When the parameters to be identified are related with the shape of the domain, the problem is even more complicated. In this framework, computational costs arise from three distinct sources: (i) numerical approximation of the state system (usually a nonlinear system of PDEs); (ii) handling domains of arbitrary shapes; (iii) sampling high-dimensional parameter spaces or performing numerical optimization procedures.

In this paper, we address these challenges by developing a reduced framework based on both state and parameter reduction, in order to devise a low-dimensional, computationally inexpensive but accurate model that predicts outputs of a high-fidelity, computationally expensive model.

The reduction in state is obtained through a reduced basis (RB) approximation [7]: thanks to a suitable offline/online stratagem, online PDE evaluations for any value of input parameters are completely independent of the expensive offline computation and storage of the basis functions. On the other hand, when input parameters are related to geometrical features, we rely on low-dimensional but flexible shape parametrizations, able to represent wide families of complex shapes by means of a handful of input parameters.

2 Inverse Problems Governed by PDEs

We introduce a compact description of general inverse problems governed by parametrized PDEs. We denote by \(\boldsymbol{\mu }\in \mathcal{D}\subset \mathbb{R}^{P}\) the finite-dimensional vector of parameters to be identified, and consider an input-output map \(\boldsymbol{\mu }\mapsto \boldsymbol{y}(\boldsymbol{\mu })\) from parameters to observations that is given by two discretized PDEs (taken here linear for notational simplicity):

State variables and observed outputs are denoted by \(\boldsymbol{u}_{N} \in \mathbb{R}^{N}\) and \(\boldsymbol{y}_{N} \in \mathbb{R}^{M}\), respectively. By subscript N we signify the dimension of the state space, dim\((\boldsymbol{u}_{N}) = N\), which in the case of Finite Element (FE) discretizations is typically very large, whereas the dimension of the parameter space, dim\((\boldsymbol{\mu }) = P\), and of the observation space, dim\((\boldsymbol{y}_{N}) = M\) can be different and typically P, M ≪ N. In our case, \(\boldsymbol{\mu }\) is related to the shape of the domain \(\varOmega =\varOmega (\boldsymbol{\mu })\) where the state problem is posed.

Whereas the forward problem is to evaluate \(\boldsymbol{y}(\boldsymbol{\mu })\) given \(\boldsymbol{\mu }\), the inverse problem can be formulated as follows [3, 5]: given an observation \(\boldsymbol{y}^{{\ast}} =\boldsymbol{ y}+\boldsymbol{\varepsilon }\) with (additive) noise \(\boldsymbol{\varepsilon }\), find the parameter \(\boldsymbol{\mu }^{{\ast}}\) that satisfies \(\mathbf{y}^{{\ast}} = C_{N}(\boldsymbol{\mu }^{{\ast}})\boldsymbol{u}_{N}(\boldsymbol{\mu }^{{\ast}})\). This problem is often ill-posed in one of three basic ways: (i) the solution \(\boldsymbol{\mu }^{{\ast}}\) does not exist (e.g. due to M > P and the presence of noise); (ii) the solution \(\boldsymbol{\mu }^{{\ast}}\) is not unique (e.g. due to M < P or data degeneracy); or (iii) the solution \(\boldsymbol{\mu }^{{\ast}}\) does not depend continuously on \(\boldsymbol{y}^{{\ast}}\). An example of an inverse problem that is ill-posed in the third sense is the Calderón problem of determining the conductivity field inside an object based on the observation of a Dirichlet-to-Neumann or Neumann-to-Dirichlet map on a subsection of the boundary.

2.1 A Deterministic Approach

In order to treat ill-posed inverse problems, the classical approaches [1] are largely based on solving regularized least-squares (RLS) problems of the type:

The first term minimizes the discrepancy between the observation \(\boldsymbol{y}^{{\ast}}\) and the model prediction \(\boldsymbol{y}_{N}(\boldsymbol{\mu })\) given by (1). The second term convexifies the problem and assures a unique estimator \(\boldsymbol{\mu }_{\mathrm{RLS}}^{{\ast}}\) is recovered. This approach is also sometimes called variational data assimilation. The choice of the norm \(\|\boldsymbol{\mu }\|_{r}:= \sqrt{\boldsymbol{\mu }^{T } R\boldsymbol{\mu }}\), the regularization parameter α > 0, and the prior value \(\boldsymbol{\mu }_{\mathrm{prior}}\) play an important role in the quality of the estimator \(\boldsymbol{\mu }_{\mathrm{RLS}}^{{\ast}}\).

2.2 A Bayesian Approach

Under the assumption of independent and identically distributed (i.i.d.) noise, \(\boldsymbol{\varepsilon }\sim \mathcal{N}(0,\sigma ^{2}I)\), and Gaussian parameter distribution, \(\mu \sim \mathcal{N}(\bar{\mu },\varSigma )\), it is easy to show that the maximum a posteriori (MAP) estimator

obtained by maximizing the conditional probability density function

coincides with the Tykhonov-regularized least-squares estimator, that is to say \(\boldsymbol{\mu }_{\mathrm{RLS}}^{{\ast}} =\boldsymbol{\mu }_{ \mathrm{MAP}}^{{\ast}}\), as long as we choose \(\boldsymbol{\mu }_{\mathrm{prior}} =\bar{\boldsymbol{\mu }}\), \(\alpha =\sigma ^{2}\), and R = Σ −1 in (2). In fact, the estimator given by (3) is an example of a wider class of statistical estimators called Bayesian estimators. The benefit of using statistical methods for solving inverse problems is that one is able to characterize the variance of the prediction \(\boldsymbol{\mu }^{{\ast}}\) due to measurement and model errors more precisely than from the single-point estimates obtained by solving (2).

Bayesian estimators are a subset of statistical estimators that are widely used to solve ill-posed inverse problems. The basic principle of Bayesian inference is that the conditional distribution of the unknown parameters \(\boldsymbol{\mu }\) given an observation \(\boldsymbol{y}^{{\ast}}\) can be approximated by

obtained by using the Bayes’ formula on a prior distribution \(\pi _{\boldsymbol{\mu },\mathrm{prior}}(\boldsymbol{\mu })\) for the unknown parameters. The prior \(\pi _{\boldsymbol{\mu },\mathrm{prior}}\) encapsulates our prior knowledge (structure, regularity, locality, etc.) about the distribution of the uncertain parameters and should be carefully selected based on problem-specific considerations – we do not treat this point in this work since selecting an informative prior is a challenging problem all by itself. The conditional distribution \(\pi _{\boldsymbol{y}^{{\ast}}\:\vert \boldsymbol{\mu }}\), which in the case of additive noise can be expressed as

is called the likelihood function. The posterior distribution \(\pi _{\boldsymbol{\mu },\mathrm{post}}\) can then be used to compute various estimators for \(\boldsymbol{\mu }^{{\ast}}\) and to provide conditional statistics such as covariances for these estimators. The advantage of the Bayesian approach compared to more classical methods is that a prior that carries sufficient information about the true underlying structure of the parameters often provides more meaningful estimates and regularizes the inverse problem in a more natural way than relying on abstract regularization terms, as in (2), that might not have any interpretation.

Statistical methods used to solve an inverse problem can be computationally much more expensive than the deterministic approach due to the necessity of performing sampling in high-dimensional spaces in order to compute sample statistics [3, 5]. This cost is exacerbated by the fact that each evaluation requires the solution of the forward problem in the form of a (potentially large-scale) discrete PDE. To this end we introduce a reduced order model to speed up the computations entailed by statistical inversion.

3 Computational and Geometrical Reduction

We now present a brief description of the two main blocks on which the reduced order model relies: reduced basis method for parametrized PDEs and radial basis functions for low-dimensional shape parametrization. Further methodological aspects and details and can be found e.g. in [6].

The reduced basis method provides an efficient way to compute an approximation \(\mathbf{u}_{n}(\boldsymbol{\mu })\) of the solution \(\mathbf{u}_{N}(\boldsymbol{\mu })\) (as well as an approximation \(\boldsymbol{y}_{n}(\boldsymbol{\mu })\) of the output \(\boldsymbol{y}_{N}(\boldsymbol{\mu })\)) through a Galerkin projection onto a reduced subspace made up of well-chosen full-order solutions (also called snapshots), corresponding to a set of parameter values \(S_{n} =\{\boldsymbol{\mu } ^{1},\ldots,\boldsymbol{\mu }^{n}\}\) selected by means of a greedy algorithm [7]. Let us denote by \(Z_{n} \in \mathbb{R}^{N\times n}\) the matrix

obtained by aligning the snapshot vectors (a Gram-Schmidt orthonormalization procedure has to be considered after each basis is added to the reduced space, but for the sake of simplicity we consider the same notation). We denote by \(n \ll N\) the dimension of the reduced state space. Then, the reduced-order solution is given by a linear combination \(Z_{n}\mathbf{u}_{n}(\boldsymbol{\mu })\) of the snapshots, being \(\boldsymbol{u}_{n} \in \mathbb{R}^{n}\) the solution of the following problem:

where

To get very fast input/output evaluations, RB methods rely on the assumption of affine parametric dependence in \(A_{N}(\boldsymbol{\mu })\) and \(\boldsymbol{f}_{N}(\boldsymbol{\mu })\), i.e. on the possibility to express \(A_{N}(\boldsymbol{\mu }) =\sum _{ q=1}^{Q_{A}}\varTheta _{A}(\boldsymbol{\mu })A_{N}^{q}\) and \(\boldsymbol{f}_{N}(\boldsymbol{\mu }) =\sum _{ q=1}^{Q_{\boldsymbol{f}}}\varTheta _{ \boldsymbol{f}}(\boldsymbol{\mu })\boldsymbol{f}_{N}^{q}\), so that the expensive \(\boldsymbol{\mu }\)-independent quantities can be evaluated and stored just once. This is a property inherited by the PDE model, which can be eventually recovered at the discretization stage [7].

Once the reduced model is built in the offline stage, it can be exploited at the online stage to speed up the solution of the optimization problem (2) in the deterministic case or (3) in the Bayesian case. The corresponding reduced-order version of the former reads as follows:

whereas in the case of a statistical inverse problem we obtain:

being

In this way, state reduction allows to speed up both numerical optimization schemes or sampling algorithms required e.g. to compute statistical estimates based on the posterior distribution.

Concerning parameter space reduction, here we consider a low-dimensional parametrization based on Radial Basis Functions (RBF), an interpolatory technique which allows to define shape deformations through a set of control points (which can be freely chosen, according to the family of deformations to be described), i.e. a linear combination of affine and radial, nonaffine terms; see e.g. [6] for more insights. In this way, parameter space reduction is afforded by selecting only a small set of \(P \approx \mathcal{O}(10)\) control points at a preceding stage – state reduction through the RB method is built for a problem where shape parametrization has already been performed. A RB paradigm for simultaneous state and parameter reduction has been introduced in [5] in order to tackle the case of distributed parametric fields (instead of parameter vectors), and represents a possible extension of our current framework.

4 Application and Results

We now apply the reduced framework of the previous section to the solution of an inverse problem arising in modeling of blood flows. Since a strong mutual interaction exists between haemodynamic factors and vessels geometry, improving the understanding of the interplay between flows and geometries may be useful not only for the sake of design of better prosthetic devices [4], but also to characterize pathological risks, such as in the case of narrowing or thickening of an arterial vessel [3]. Typical portions of cardiovascular network where lesions and pathologies may develop are made up by curved vessels and bifurcations; an important segment where vessel diseases are often clinically observed is the human carotid artery [2, 6], which supplies blood to the head.Footnote 1

Let us consider a steady, incompressible Navier-Stokes model to describe blood flows in a two-dimensional carotid bifurcation (see Fig. 1):

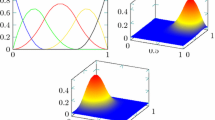

Left: shape representation of a stenosed carotid artery bifurcation through RBF parametrization. Right: velocity profiles (cm/s) in four different carotid bifurcations parametrized with respect to the diameters \(d_{c} = d_{c}(\mu _{1},\mu _{2})\) of the CCA at the bifurcation and \(d_{b} = d_{b}(\mu _{3},\mu _{4})\) of the mid-sinus level of the ICA

being \((\boldsymbol{v},p)\) the velocity and the pressure of the fluid, respectively, and ν > 0 its kinematic viscosity. In view of studying computationally expensive inverse problems, which entail the repeated simulation of these flow equations, we cannot afford at the moment the solution of PDE models involving more complex features, such as flow unsteadiness and arterial wall deformability – computational costs would be too prohibitive.

In this context, a typical forward problem is the evaluation of flow indices related with geometry variation that assess/measure the occlusion risk. Typical examples are given by vorticity, shear rates, wall shear stresses. On the other hand, we might be interested in recovering some geometrical features by observing some physical index related to flow variables. In particular, the inverse problem we want to solve is the following: is it possible to identify the entity of the occlusions (i.e. the diameters d c of the CCA at the bifurcation and d b of the mid-sinus level of the ICA, respectively) from the observation of the mean pressure drop

between the internal carotid outflow Γ out and the inflow Γ in ?

To exploit the reduced framework presented in Sect. 3, we represent local shape deformations through a RBF parametrization built over p = 4 control points (represented as the bullets in Fig. 1), located in one of the branches and close to the bifurcation. In this case, Gaussian RBFs have been used in order to describe local but moderate deformations representing possible stenoses, being \(\boldsymbol{\mu }\in \mathcal{D} = [-0.25,0.25]^{4}\) the vector of the displacements of the control point in the horizontal direction; see [6] for more details.

By applying the RB method to the parametrized Navier-Stokes problem (8) we reduce the dimension of the state space from \(N \approx 26,000\) (\(\mathbb{P}_{2}/\mathbb{P}_{1}\) FE discretization) to n = 45. Four examples of computed RB solutions are reported in Fig. 1. We remark the strong sensitivity of the flow with respect to varying diameters \(d_{c} = d_{c}(\mu _{1},\mu _{2})\) of the CCA at the bifurcation and \(d_{b} = d_{b}(\mu _{3},\mu _{4})\) of the mid-sinus level of the ICA, respectively. See e.g. [3, 6] for more insights on RB methodology for nonlinear Navier-Stokes equations.

Thus, we can take advantage of both the deterministic and the Bayesian framework to solve this inverse identification problem, by considering surrogate measurements of the mean pressure drop.

In the first case, we demonstrate the solution of the deterministic inverse problem for two different observed values of the pressure drop, s ∗ = −1, 400 and s ∗ = −2, 200, by assuming 5 % relative additive noise in the measurements. The results of the inverse identification problem are given in Fig. 2 for 100 realization of random noise in both cases: each point in the graph corresponds to the recovered diameters (d c , d b ) given a noisy observation. We observe that in the case s ∗ = −1, 400 recovered values of the diameters are more smeared out, since locally the pressure drop surface is almost flat, but result is close in values to the considered observation.

Thus, in the former case s ∗ = −1, 400 the inverse problem is worse conditioned than in the latter s ∗ = −2, 200, where the recovered values (d c , d b ) lie in a smaller region of the space. However, the solution of a single optimization problem is more feasible in the former case compared to the latter: solving 100 optimization problems took about 14 h in the former and about 25. 6 h in the latter case, respectively. We remark that solving 100 inverse problems of this type through a full-order discretization technique would have been infeasible on a standard workstation. Thus, even in presence of small noises, the result of a deterministic inverse problem may be very sensitive – just when one diameter is known, the second one can be recovered. This is due to the fact that several geometrical configurations – in terms of diameters (d c , d b ) – may correspond to the same output observation.

Following instead the Bayesian approach, we are able to characterize a set of configurations, rather than a single configuration: this is done by providing the joint probability distribution function for the (uncertain) diameters \((d_{c},d_{b})\) encapsulating the noise related to measurements, as discussed in Sect. 2.2. Let us denote by \(\mathbf{d} = (d_{c},d_{b})^{T} \in \mathbb{R}^{2}\) the vector of the two diameters and assume that the prior distribution is \(\pi _{\mathbf{d},\mathrm{prior}}(\boldsymbol{\mu }) \sim \mathcal{N}(\mathbf{d}_{M},\varSigma _{M})\), being \(\mathbf{d}_{M} \in \mathbb{R}^{2}\) the (prior) mean and \(\varSigma _{M} \in \mathbb{R}^{2\times 2}\) the (prior) covariance matrix, encapsulating a possible prior knowledge on the diameters distribution (e.g. from observations of previous shape configurations). By supposing that also the measurements of the pressure drop are expressed by i.i.d. Gaussian variables, such that \(\pi _{\mathrm{noise}}(\boldsymbol{y}^{{\ast}}-\boldsymbol{ y}_{n}(\mathbf{d})) \sim \mathcal{N}(0,\sigma ^{2})\), we can compute the explicit form of the posterior probability density \(\pi _{\mathbf{d},\mathrm{post}}(\mathbf{d}\vert \boldsymbol{y}^{{\ast}})\).

Thus, provided some preliminary information on plausible values of the diameters, the observation of a (large) sample of outputs allows to characterize a set of plausible configurations as the ones maximizing the posterior probability density \(\pi _{\mathbf{d},\mathrm{post}}(\mathbf{d}\vert \boldsymbol{y}^{{\ast}})\). In particular, we consider two different realizations of prior normal distributions, obtained by choosing the mean d M = (0. 803, 0. 684)T as given by the diameters corresponding to the reference carotid configuration, and

i.e., we assume that the two diameters are a priori independent (\(\varSigma _{M_{1}}\) case) or correlated (\(\varSigma _{M_{2}}\) case), respectively. The two prior distributions, as well as the resulting posterior distribution obtained for two different observed values s ∗ = −1, 400 and s ∗ = −2, 200 of the pressure drop are reported in Fig. 3. In the case at hand, we do not rely on the Metropolis-Hastings algorithm for the evaluation of the posterior distribution, since its expression can be computed explicitly. Thus, by computing a sample of 1, 600 values of pressure drops on a uniform 40 × 40 grid on the (d c , d b ) space, we obtain the posterior densities \(\pi _{\mathbf{d},\mathrm{post}}(\mathbf{d}\vert \boldsymbol{y}^{{\ast}})\) represented in Fig. 3 in about 0. 1 h, since any online evaluation of the reduced Navier-Stokes problem takes about 2. 5 s.

Top: two different choices of the prior distribution on diameters \(\mathbf{d} = (d_{c},d_{b})^{T}\); left: \(\pi _{\mathbf{d},\mathrm{prior}} \sim \mathcal{N}(\mathbf{d}_{M},\varSigma _{M_{1}})\), right: \(\pi _{\mathbf{d},\mathrm{prior}} \sim \mathcal{N}(\mathbf{d}_{M},\varSigma _{M_{2}})\). Center and bottom: results of the Bayesian inverse problems (left: \(\pi _{\mathbf{d},\mathrm{prior}} \sim \mathcal{N}(\mathbf{d}_{M},\varSigma _{M_{1}})\), right: \(\pi _{\mathbf{d},\mathrm{prior}} \sim \mathcal{N}(\mathbf{d}_{M},\varSigma _{M_{2}})\)) and observed pressure drop s ∗ = −1, 400 (second row) and s ∗ = −2, 200 (third row)

Notes

- 1.

The common carotid artery (CCA) bifurcates in the lower neck into two branches, the internal and the external carotid arteries (ICA and ECA, respectively). Stenoses, that is the narrowing of the inner portion of an artery, manifest quite often in the ICA.

References

Kaipio, J., Somersalo, E.: Statistical and Computational Inverse Problems. Applied Mathematical Sciences, vol. 160. Springer, New York (2005)

Kolachalama, V., Bressloff, N., Nair, P.: Mining data from hemodynamic simulations via Bayesian emulation. Biomed. Eng. Online 6(1), 47 (2007)

Lassila, T., Manzoni, A., Quarteroni, A., Rozza, G.: A reduced computational and geometrical framework for inverse problems in haemodynamics. Technical report MATHICSE 12.2011. 29(7), 741–776 (2013). http://mathicse.epfl.ch/

Lassila, T., Manzoni, A., Quarteroni, A., Rozza, G.: Boundary control and shape optimization for the robust design of bypass anastomoses under uncertainty. ESAIM: Math. Mod. Numer. Anal. 47(4), 1107–1131 (2013). http://dx.doi.org/10.1051/m2an/2012059

Lieberman, C., Willcox, K., Ghattas, O.: Parameter and state model reduction for large-scale statistical inverse problems. SIAM J. Sci. Comput. 32(5), 2523–2542 (2010)

Manzoni, A., Quarteroni, A., Rozza, G.: Model reduction techniques for fast blood flow simulation in parametrized geometries. Int. J. Numer. Methods Biomed. Eng. 28(6–7), 604–625 (2012)

Quarteroni, A., Rozza, G., Manzoni, A.: Certified reduced basis approximation for parametrized partial differential equations in industrial applications. J. Math. Ind. 1, 3 (2011)

Acknowledgements

This work was partially funded by the European Research Council Advanced Grant “Mathcard, Mathematical Modelling and Simulation of the Cardiovascular System” (Project ERC-2008-AdG 227058), and by the Swiss National Science Foundation (Projects 122136 and 135444).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer International Publishing Switzerland

About this paper

Cite this paper

Manzoni, A., Lassila, T., Quarteroni, A., Rozza, G. (2014). A Reduced-Order Strategy for Solving Inverse Bayesian Shape Identification Problems in Physiological Flows. In: Bock, H., Hoang, X., Rannacher, R., Schlöder, J. (eds) Modeling, Simulation and Optimization of Complex Processes - HPSC 2012. Springer, Cham. https://doi.org/10.1007/978-3-319-09063-4_12

Download citation

DOI: https://doi.org/10.1007/978-3-319-09063-4_12

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-09062-7

Online ISBN: 978-3-319-09063-4

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)