Abstract

We give a basic and self-contained introduction to the mathematical description of electrical circuits that contain resistances, capacitances, inductances, voltage, and current sources. Methods for the modeling of circuits by differential–algebraic equations are presented. The second part of this paper is devoted to an analysis of these equations.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Electrical circuits

- Modelling

- Differential–algebraic equations

- Modified nodal analysis

- Modified loop analysis

- Graph theory

- Maxwell’s equations

2.1 Introduction

It is in fact not difficult to convince scientists and nonscientists of the importance of electrical circuits; they are nearly everywhere! To mention only a few, electrical circuits are essential components of power supply networks, automobiles, television sets, cell phones, coffee machines, and laptop computers (the latter two items have been heavily involved in the writing process of this article). This gives a hint to their large economical and social impact to the today’s society.

When electrical circuits are designed for specific purposes, there are, in principle, two ways to verify their serviceability, namely the “construct-trial-and-error approach” and the “simulation approach.” Whereas the first method is typically cost-intensive and may be harmful to the environment, simulation can be done a priori on a computer and gives reliable impressions on the dynamic circuit behavior even before it is physically constructed. The fundament of simulation is the mathematical model. That is, a set of equations containing the involved physical quantities (these are typically voltages and currents along the components) is formulated, which is later on solved numerically. The purpose of this article is a detailed and self-contained introduction to mathematical modeling of the rather simple but nevertheless important class of time-invariant nonlinear RLC circuits. These are analog circuits containing voltage and current sources as well as resistances, capacitances, and inductances. The physical properties of the latter three components will be assumed to be independent of time, but they will be allowed to be nonlinear. Under some additional, physically meaningful, assumptions on the components, we will further depict and discuss several interesting mathematical features of circuit models and give back-interpretation to physics.

Apart from the high practical relevance, the mathematical treatment of electrical circuits is interesting and challenging especially due to the fact that various different mathematical disciplines are involved and combined, such as graph theory, ordinary and partial differential equations, differential–algebraic equations, vector analysis, and numerical analysis.

This article is organized as follows: In Sect. 2.3, we introduce the physical quantities that are involved in circuit theory. Based on the fact that every electrical phenomenon is ultimately caused by electromagnetic field effects, we present their mathematical model (namely Maxwell’s equations) and define the physical variables voltage, current, and energy by means of electric and magnetic field and their interaction. We particularly highlight model simplifications that are typically made for RLC circuits. Section 2.4 is then devoted to the famous Kirchhoff laws, which can be mathematically inferred from the findings of the preceding section. It will be shown that graph theory is a powerful tool to formulate these equations and analyze their properties. Thereafter, in Sect. 2.5, we successively focus on mathematical description of sources, resistances, inductances, and capacitances. The relation between voltage and current along these components and their energetic behavior is discussed. Kirchhoff and component relations are combined in Sect. 2.6 to formulate the overall circuit model. This leads to the modeling techniques of modified nodal analysis and modified loop analysis. Both methods lead to differential–algebraic equations (DAEs), whose fundamentals are briefly presented as well. Special emphasis is placed on mathematical properties of DAE models of RLC circuits.

2.2 Nomenclature

Throughout this article we use the following notation.

\(\mathbb{N}\) | set of natural numbers |

\(\mathbb{R}\) | set of real numbers |

\(\mathbb{R}^{n,m}\) | the set of real n×m |

I n | identity matrix of size n×n |

\(M^{\mathrm{T}}\in\mathbb{R}^{m,n}\), \(x^{\mathrm{T}}\in\mathbb{R}^{1,n}\) | transpose of the matrix \(M\in\mathbb{R}^{n,m}\) and the vector \(x\in\mathbb{R} ^{n}\) |

\(\operatorname{im}M\), kerM | image and kernel of a matrix M, resp. |

M>(≥)0, | the square real matrix M is symmetric positive (semi)definite |

∥x∥ | \(= \sqrt{x^{\mathrm{T}} x}\), the Euclidean norm of \(x \in \mathbb{R}^{n}\) |

\(\mathcal{V}^{\bot}\) | orthogonal space of \(\mathcal{V} \subset\mathbb{R}^{n}\) |

\(\operatorname{sign}(\cdot)\) | sign function, i.e., \(\operatorname{sign}:\mathbb{R}\rightarrow \mathbb{R}\) with \(\operatorname{sign}(x)=1\) if x>0, \(\operatorname{sign}(0)=0\), and \(\operatorname{sign}(x)=-1\) if x<0 |

t | time variable \((\in\mathbb{R})\) |

ξ | space variable \((\in\mathbb{R}^{3})\) |

ξ x , ξ y , ξ z | components of the space variable \(\xi\in\mathbb{R}^{3}\) |

e x , e y , e z | canonical unit vectors in \(\mathbb{R}^{3}\) |

ν(ξ) | positively oriented tangential unit vector of a curve \(\mathcal {S}\subset\mathbb{R}^{3}\) in \(\xi\in\mathcal{S}\) |

n(ξ) | positively oriented normal unit vector of an oriented surface \(\mathcal{A}\subset\mathbb{R}^{3}\) in \(\xi\in\mathcal{A}\) |

u×v | vector product of \(u,v\in\mathbb{R}^{3}\) |

\(\operatorname{grad}f(t,\xi)\) | gradient of the scalar-valued function f with respect to the spatial variable |

\(\operatorname{div}f(t,\xi)\), \(\operatorname{curl}f(t,\xi )\) | divergence and, respectively, curl of an \(\mathbb{R}^{3}\)-valued function f with respect to the spatial variable |

∂Ω (\(\partial\mathcal{A}\)) | boundary of a set \(\varOmega\subset\mathbb{R}^{3}\) (surface \(\mathcal {A}\subset\mathbb{R}^{3}\)) |

\(\int_{\mathcal{S}}f(\xi)\,ds(\xi)\) (\(\oint_{\mathcal{S}}f(\xi)\,ds(\xi) \)) | integral of a scalar-valued function f over a (closed) curve \(\mathcal{A}\subset\mathbb{R}^{3}\) |

\(\iint_{\mathcal{A}}f(\xi)\,dS(\xi) \) ( | integral of a scalar-valued function f over a (closed) surface \(\mathcal{A}\subset\mathbb{R}^{3}\) |

∭ Ω f(ξ) dV(ξ) | integral of a scalar-valued function f over a domain \(\varOmega \subset\mathbb{R}^{3}\) |

The following abbreviations will be furthermore used:

2.3 Fundamentals of Electrodynamics

We present some basics of classical electrodynamics. A fundamental role is played by Maxwell’s equations. The concepts of voltage and current will be derived from these fundamental concepts and laws. The derivations will be done by using tools from vector calculus, such as the Gauss and Stokes theorems. Note that, in this section (as well as in Sect. 2.5, where the component relations will be derived), we will not present all derivations with full mathematical precision. For an exact presentation of smoothness properties on the involved surfaces, boundaries, curves, and functions to guarantee the applicability of the Gauss theorem and the Stokes theorem and interchanging the order of integration (and differentiation), we refer to textbooks on vector calculus, such as [1, 31, 37].

2.3.1 The Electromagnetic Field

The following physical quantities are involved in an electromagnetic field.

The current density and flux and field intensities are \(\mathbb{R}^{3}\)-valued functions depending on time \(t\in I\subset\mathbb{R}\) and spatial coordinate ξ∈Ω, whereas the electric charge density \(\rho:I\times \varOmega\rightarrow\mathbb{R}\) is scalar-valued. The interval I expresses the time period, and \(\varOmega\subset\mathbb{R}^{3}\) is the spatial domain in which the electromagnetic field evolves. The dependencies of the above physical variables are expressed by Maxwell’s equations [40, 57], which read

Further algebraic relations between electromagnetic variables are involved. These are called constitutive relations and are material-dependent. That is, they express the properties of the medium in which electromagnetic waves evolve. Typical constitutive relations are

for some functions \(f_{e},f_{m},g:\mathbb{R}^{3}\times\varOmega\rightarrow \mathbb{R}^{3}\). In the following, we collect some assumptions on f e , f m , and g made in this article. Their practical interpretation is subject of subsequent parts of this article.

Assumption 3.1

(Constitutive relations)

-

(a)

There exists some function \(V_{e}:\mathbb{R}^{3}\times\varOmega \rightarrow \mathbb{R}\) (electric energy density) with V e (D,ξ)>0 and V e (0,ξ)=0 for all ξ∈Ω, \(D\in\mathbb{R}^{3}\), which is differentiable with respect to D and satisfies

$$ \frac{\partial}{\partial D}V_e^{\mathrm{T}}(D,\xi )=f_e(D, \xi)\quad \text{for all}\ D\in\mathbb{R}^3,\xi\in\varOmega . $$(3) -

(b)

There exists some function \(V_{m}:\mathbb{R}^{3}\times\varOmega \rightarrow \mathbb{R}\) (magnetic energy density) with V m (B,ξ)>0 and V m (0,ξ)=0 for all ξ∈Ω, \(B\in\mathbb{R}^{3}\), which is differentiable with respect to B and satisfies

$$ \frac{\partial}{\partial B}V_m^{\mathrm{T}}(B,\xi )=f_m(B, \xi)\quad \text{for all}\ B\in\mathbb{R}^3,\xi\in\varOmega . $$(4) -

(c)

E T g(E,ξ)≥0 for all \(E\in\mathbb{R}^{3}\), ξ∈Ω.

If f e and f m are linear, assumptions (a) and (b) reduce to

for some symmetric and matrix-valued functions \(M_{e},M_{m}:\varOmega \rightarrow\mathbb{R}^{3,3}\) such that M e (ξ)>0 and M m (ξ)>0 for all ξ∈Ω. The functional relations between field intensities, displacement, and flux intensity then read

A remarkable special case is isotropy. That is, M e and M m are pointwise scalar multiples of the unit matrix, that is,

for positive functions \(\varepsilon ,\mu:\varOmega\rightarrow\mathbb{R}\). In this case, electromagnetic waves propagate with velocity c(ξ)=(ε(ξ)⋅μ(ξ))−1/2 through ξ∈Ω. In vacuum, we have

Consequently, the quantity

is the speed of light [30, 34].

As we will see soon, the function g has the physical interpretation of an energy dissipation rate. That is, it expresses energy transfer to thermodynamic domain. In the linear case, this function reads

where \(G:\varOmega\rightarrow\mathbb{R}^{3,3}\) is a matrix-valued function with the property that G(ξ)+G T(ξ)≥0 for all ξ∈Ω. In perfectly isolating media (such as the vacuum), the electric current density vanishes; the dissipation rate consequently vanishes there.

Assuming that f e , f m , and g fulfill Assumptions 3.1, we define the electric energy at time t∈I as the spatial integral of the electric energy density over Ω at time t. Consequently, the magnetic energy is the spatial integral of the magnetic energy density over Ω at time t, and the electromagnetic energy at time t is the sum over these two quantities, that is,

We are now going to derive an energy balance for the electromagnetic field: First, we see, by using elementary vector calculus, that the temporal derivative of the total energy density fulfills

The fundamental theorem of calculus and the Gauss theorem then implies the energy balance

A consequence of the above finding is that energy transfer is done by dissipation and via the outflow of the Poynting vector field \(E\times H:I\times\varOmega\rightarrow\mathbb{R}^{3}\).

The electromagnetic field is not uniquely determined by Maxwell’s equations. Besides imposing suitable initial conditions on electric displacement and magnetic flux, that is,

To fully describe the electromagnetic field, we further have to impose physically (and mathematically) reasonable boundary conditions [40]. These are typically zero conditions if \(\varOmega=\mathbb{R}^{3}\) (that is, lim∥ξ∥→∞ E(t,ξ)=lim∥ξ∥→∞ H(t,ξ)=0) or, in case of bounded domain Ω with smooth boundary, tangential or normal conditions on electrical or magnetic field, such as, for instance,

2.3.2 Currents and Voltages

Here we introduce the physical quantities that are crucial for circuit analysis.

Definition 3.2

(Electrical current)

Let \(\varOmega\subset\mathbb{R}^{3}\) describe a medium in which an electromagnetic field evolves. Let \(\mathcal{A}s\subset\varOmega\) be an oriented surface. Then the current through \(\mathcal{A}\) is defined by the surface integral of the current density, that is,

Remark 3.3

(Orientation of the surface)

Reversing the orientation of the surface means changing the sign of the current. The indication of the direction of a current is therefore a matter of the orientation of the surface.

Remark 3.4

(Electrical current in the case of absent charges/stationary case)

Let \(\varOmega\subset\mathbb{R}^{3}\) be a domain, and \(\mathcal {A}\subset \varOmega\) be a surface. If the medium does not contain any electric charges (i.e., ρ≡0), then we obtain from Maxwell’s equations that the current through A is

Elementary calculus implies that \(\operatorname{curl}H\) is divergence free, that is,

The absence of electric charges moreover gives rise to

We consider two case scenarios:

-

(a)

\(\varOmega\in\mathbb{R}^{3}\) is star-shaped. Poincaré’s lemma [1] and the divergence-freeness of the electric displacement implies the existence of an electric vector potential \(F:I\times \varOmega\rightarrow\mathbb{R}^{3}\) such that

$$D(t,\xi)=\operatorname{curl}F(t,\xi). $$The Stokes theorem then implies that the current through \(\mathcal{A}\) reads

$$\begin{aligned} i(t)&=\iint_{\mathcal{A}}n^{\mathrm{T}}(\xi)\cdot\operatorname {curl}H(t, \xi)\,dS(\xi)-\frac{d}{dt}\iint_{\mathcal{A}}n^{\mathrm {T}}(\xi) \cdot \operatorname{curl}F(t,\xi)\,dS(\xi) \\ &=\oint_{\partial\mathcal{A}}\nu^{\mathrm{T}}(\xi)\cdot H(t,\xi )\,ds(\xi)- \frac{d}{dt}\oint_{\partial\mathcal{A}}\nu^{\mathrm {T}}(\xi) \cdot F(t,\xi)\,ds( \xi). \end{aligned}$$Consequently, the current through the surface \({\mathcal{A}}\) is solely depending on the behavior of the electromagnetic field on the boundary \(\partial\mathcal{A}\). In other words, if \(\partial\mathcal {A}_{1}=\partial\mathcal{A}_{2}\) for \(\mathcal{A}_{1},\mathcal {A}_{2}\subset\varOmega\), then the current through \(\mathcal{A}_{1}\) equals the current through \(\mathcal{A}_{2}\).

Note that the condition that \(\varOmega\subset\mathbb{R}^{3}\) is star-shaped can be relaxed to the second de Rham cohomology of Ω being trivial, that is, \(H^{2}_{\mathrm{dR}}(\varOmega)\tilde{=}\{0\}\) [1]. This is again a purely topological condition on Ω, that is, a continuous and continuously invertible deformation of Ω does not influence the de Rham cohomology.

It can be furthermore seen that the above findings are true as well if the topological condition on Ω, together with the absence of electric charges, is replaced with the physical assumption that the electric displacement is stationary, that is, \(\frac{\partial}{\partial t}D\equiv0\). This follows by

$$\begin{aligned} i(t)&=\iint_{ \mathcal{A}}n^{\mathrm{T}}(\xi)\cdot j(t,\xi)\, dS(\xi) \\ &=\iint_{\mathcal{A}}n^{\mathrm{T}}(\xi)\cdot\operatorname {curl}H(t,\xi) \,dS(\xi)-\iint_{\partial\mathcal{A}}n^{\mathrm {T}}(\xi)\cdot \underbrace{ \frac{\partial}{\partial t}D(t,\xi)}_{=0}\,dS(\xi ) \\ &=\iint_{\partial\mathcal{A}}\nu^{\mathrm{T}}(\xi)\cdot H(t,\xi )\,dS( \xi). \end{aligned}$$(9)Now consider a wire as presented in Fig. 1, which is assumed to be surrounded by a perfect isolator (that is, the n T(ξ)j(ξ)=0 at the boundary of the wire). Let \(\mathcal{A}\) be a cross-sectional area across the wire. If the wire does not contain any charges or the electric field inside the wire is stationary, an application of the above argumentation implies that the current of a wire is well-defined in the sense that it does not depend on the particular choice of a cross-sectional area. This enables to speak about the current through a wire.

-

(b)

Now assume that \(\mathcal{V}\subset\varOmega\) is a domain with sufficiently smooth boundary and consider the current though \(\partial \mathcal{V}\). Applying the Gauss theorem, we obtain that, under the assumption ρ≡0, the integral of the outward component of the current density vanishes for any closed surface, that is,

Further note that, again, under the alternative assumption that the field of electric displacement is stationary, the surface integral of the current density over ∂Ω vanishes as well (compare (9)).

In each of the above two cases, we have

Now we focus on a conductor node as presented in Fig. 2 and assume that no charges are present or that the electric field inside the conductor node is stationary. Again assuming that all wires are surrounded by perfect isolators, we can choose a domain \(\varOmega\subset\mathbb{R}^{3}\) such that, for k=1,…,N, the boundary ∂Ω intersects with the kth wire to the cross-sectional area \(\mathcal{A}_{k}\). Define the number s k ∈{1,−1} to be positive if \(\mathcal{A}_{k}\) has the same orientation of ∂Ω (that is, i k (t) is an outflowing current) and s k =−1 otherwise (that is, i k (t) is an inflowing current). Then, by making use of the assumption that the current density is trivial outside the wires we obtain

where i k is the current of the kth wire. This is known as Kirchhoff’s current law.

Theorem 3.5

(Kirchhoff’s current law (KCL))

Assume that a conductor node is given that is surrounded by a perfect isolator. Further assume that the electric field is stationary or the node does not contain any charges. Then the sum of inflowing currents equals to the sum of inflowing currents.

Next, we introduce the concept of electric voltage.

Definition 3.6

(Electrical voltage)

Let \(\varOmega\subset\mathbb{R}^{3}\) describe a medium in which an electromagnetic field evolves. Let \(\mathcal{S}\subset\varOmega\) be a path (see Fig. 3). Then the voltage along \(\mathcal{S}\) is defined by the path integral

Remark 3.7

(Orientation of the path)

The sign of the voltage is again a matter of the orientation of the path. That is, a change of the orientation of \(\mathcal{S}\) results in replacing u(t) be −u(t) (compare Remark 3.3).

Remark 3.8

(Electrical current in the stationary case)

If the field of magnetic flux intensity is stationary (\(\frac{\partial }{\partial t}B\equiv0\)), then the Maxwell equations give rise to \(\operatorname{curl}E\equiv0\). Moreover, assuming that the spatial domain in which the stationary electromagnetic field evolves is simply connected [31], the electric field intensity is a gradient field, that is,

for some differentiable scalar-valued function Φ, which we call an electric potential. For a path S s ⊂Ω from ξ 0 to ξ 1, we have

In particular, the voltage along S s is solely depending on the initial and end point of S s . This enables to speak about the voltage between the points ξ 0 and ξ 1.

Note that the electric potential is unique up to addition of a function independent on the spatial coordinate ξ. It can therefore be made unique by imposing the additional relation Φ(t,ξ g )=0 for some prescribed position ξ g ∈Ω. In electrical engineering, this is called grounding of ξ g (see Fig. 4).

Now we take a closer look at a loop of conductors (see Fig. 5) in which the field of magnetic flux is assumed to be stationary:

For k=1,…,N, assume that \(\mathcal{S}_{k}\) is a path in the kth conductor connecting its nodes. Assume that the field of magnetic flux intensity is stationary and let u k (t) be the voltage between the initial and terminal point of \(\mathcal{S}_{k}\). Define the number s k ∈{1,−1} to be positive if \(\mathcal{S}_{k}\) is in the direction of the loop and s k =−1 otherwise. Taking a surface \(\mathcal{A}\subset\varOmega\) that is surrounded by the path

we can apply the Stokes theorem to see that

Theorem 3.9

(Kirchhoff’s voltage law (KVL))

In an electromagnetic field in which the magnetic flux is stationary, each conductor loop fulfills that the sum of voltages in direction of the loop equals the sum of voltages in the opposite direction to the loop.

In the following, we will make some further considerations concerning energy and power transfer in stationary electromagnetic fields (\(\frac{\partial}{\partial t}D\equiv \frac{\partial}{\partial t}B\equiv0\)) evolving in simply connected domains. Assuming that we have some electrical device in the domain \(\varOmega\subset\mathbb{R}^{3}\) that is physically closed in the sense that no current leaves the device (i.e., n T(ξ)j(t,ξ)=0 for all ξ∈∂Ω), an application of the multiplication rule

and the Gauss theorem lead to

In other words, the spatial L 2-inner product [17] between j(t 1,⋅) and the field E(t 1,⋅) vanishes for all times t 1,t 2 in which the stationary electrical field evolves.

Theorem 3.10

(Tellegen’s law for stationary electromagnetic fields)

Let a stationary electromagnetic field inside the simply connected domain \(\varOmega\subset\mathbb{R}^{3}\) be given, and assume that no electrical current leaves Ω. Then for all times t 1,t 2 in which the field evolves, the current density field j(t 1,⋅) and the electrical field density field E(t,⋅) are orthogonal in the L 2-sense.

The concluding considerations in this section are concerned with energy inside conductors in which stationary electromagnetic fields evolve. Consider an electrical wire as displayed in Fig. 3. Assume that \(\mathcal{S}\) is a path connecting the incidence nodes ξ 0,ξ 1. Furthermore, for each ξ∈S, let \(\mathcal {A}_{\xi}\) be a cross-sectional area containing ξ and assume the additional property that the spatial domain of the wire Ω is the disjoint union of the surfaces \(\mathcal{A}_{\xi}\), that is,

The KCL implies that the current through \(\mathcal{A}_{\xi}\) does not depend on \(\xi\in\mathcal{S}\). Now making the (physically reasonable) assumptions that the voltage is spatially constant in each cross-sectional area \(\mathcal{A}_{\xi}\) and using the Gauss theorem and the multiplication rule, we obtain

From this we see that the following holds for the product between the voltage along and the current through the wire:

In other words, the product between u(t) and i(t) therefore coincides with the outflow of the Poynting vector field of the wire, whence the integral

is the energy consumed by the wire.

2.3.3 Notes and References

-

(i)

The constitutive relations with properties as in Assumptions 3.1 directly constitute an energy balance via (5a), (5b). Further types of constitutive relations can be found in [30].

-

(ii)

The existence of global (weak, classical) solutions of Maxwell’s equations in the general nonlinear case seems to be not fully worked out so far. A functional analytic approach to the linear case is, with boundary conditions sightly different from (7), in [66].

2.4 Kirchhoff’s Laws and Graph Theory

In this part, we will approach the systematic description of Kirchhoff’s laws inside a conductor network. To achieve this aim, we will regard an electrical circuit as a graph. Each branch of the circuit connects two nodes. To each branch of the circuit we assign a direction, which is not a physical restriction but rather a definition of the positive direction of the corresponding voltage and current. This definition is arbitrary, but it has to be however done in advance (compare Remarks 3.3 and 3.7). We assume that the voltage and current of each branch are equally directed. This is known as a load reference-arrow system [34]. This allows us to speak about an initial node and a terminal node of a branch.

Such a collection of branches can, in an abstract way, be formulated as a directed graph (see Fig. 6).

2.4.1 Graphs and Matrices

We present some mathematical fundamentals of directed graphs.

Definition 4.1

(Graph concepts)

A directed graph (or graph for short) is a triple \(\mathcal {G}=(V,E,\varphi)\) consisting of a node set V and a branch set E together with an incidence map

If φ(e)=(v 1,v 2), we call e to be directed from v 1 to v 2; v 1 is called the initial node, and v 2 the terminal node of e. Two graphs \(\mathcal {G}_{a}=(V_{a},E_{a},\varphi_{a})\) and \(\mathcal{G}_{b}=(V_{b},E_{b},\varphi_{b})\) are called isomorphic if there exist bijective mappings ι E :E a →E b and ι V :V a →V b , such that \(\varphi_{a,1}=\iota_{V}^{-1}\circ\varphi_{b,1}\circ\iota_{E}\) and \(\varphi_{a,2}=\iota_{V}^{-1}\circ\varphi_{b,2}\circ\iota_{E}\).

Let V′⊂V, and let E′ be a set of branches fulfilling

Further, let φ| E′ be the restriction of φ to E′. Then the triple \(\mathcal{K}:=(V',E',\varphi| _{E'})\) is called a subgraph of \(\mathcal{G}\). In the case where E′=E| V′, we call \(\mathcal{K}\) the induced subgraph on V′. If V′=V, then \(\mathcal{K}\) is called a spanning subgraph. A proper subgraph is that with E≠E′.

\(\mathcal{G}\) is called finite if both the node and the branch set are finite.

For each branch e, define an additional branch −e, which is directed from the terminal to the initial node of e, that is, φ(−e)=(φ 2(e),φ 1(e)) for e∈E. Now define the set \(\tilde{E}=\{e,-e : e\in E\}\). A tuple \(w= (w_{1},\ldots,w_{r} )\in\tilde{E}^{r}\) where

is called a path from \(v_{k_{0}}\) to \(v_{k_{r}}\); w is called an elementary path if \(v_{k_{1}},\ldots,v_{k_{r}}\) are distinct. A loop is an elementary path with \(v_{k_{0}}=v_{k_{r}}\). A self-loop is a loop consisting of only one branch. Two nodes v,v′ are called connected if there exists a path from v to v′. The graph itself is called connected if any two nodes are connected. A subgraph \(\mathcal{K}:=(V',E',\varphi| _{E'})\) is called connected component if it is connected and \(\mathcal{K}^{c}:=(V\setminus V',E\setminus E',\varphi| _{E\setminus E'})\) is a subgraph.

A tree is a minimally connected (spanning sub)graph, that is, it is connected without having any connected proper spanning subgraph.

For a spanning subgraph \(\mathcal{K}=(V,E',\varphi| _{E'})\), we define the complementary spanning subgraph by \(\mathcal{G}-\mathcal{K}:=(V,E\setminus E',\varphi| _{E\setminus E'})\). The complementary spanning subgraph of a tree is called a cotree. A spanning subgraph \(\mathcal{K}\) is called a cutset if its branch set is nonempty, \(\mathcal{G}-\mathcal{K}\) is a disconnected graph, and additionally, \(\mathcal{G}-\mathcal{K'}\) is connected for any proper spanning subgraph \(\mathcal{K'}\) of \(\mathcal{K}\).

We can set up special matrices associated to a finite graph. These will be useful to describe Kirchoff’s laws.

Definition 4.2

Let a finite graph \(\mathcal{G}=(V,E,\varphi)\) with n branches E={e 1,…,e n } and m nodes V={v 1,…,v m } be given. Assume that the graph does not contain any self-loops. The all-node incidence matrix of \(\mathcal{G}\) is defined by \(A_{0}=(a_{jk})\in\mathbb{R}^{m,n}\), where

Let L={l 1,…,l b } be the set of loops of \(\mathcal{G}\). Then the all-loop matrix \(B_{0}=(b_{jk})\in\mathbb{R}^{l,n}\) is defined by

2.4.2 Kirchhoff’s Laws: A Systematic Description

Let \(A_{0}\in\mathbb{R}^{m,n}\) be the all-node incidence matrix of a graph \(\mathcal{G}=(V,E,\varphi)\) with n branches E={e 1,…,e n } and m nodes V={v 1,…,v m } and no self-loops. The jth row of A 0 is, by definition, at the kth position, equal to 1 if the kth branch leaves the jth node. On the other hand, this entry equals to −1 if the kth branch enters the jth node. If the kth node is involved in the jth node, then this entry vanishes. Hence, defining i k (t) to be the current through the kth branch in the direction to its terminal node and defining the vector

the kth row vector \(a_{k}\in\mathbb{R}^{1,n}\) gives rise to Kirchhoff’s current law of the kth node via a k i(t)=0. Consequently, the collection of all Kirchhoff laws reads, in compact form,

For k∈{1,…,n}, let u k (t) be the voltage between the initial and terminal nodes of the kth branch, and define the vector

By the same argumentation as before, the construction of the all-loop matrix gives rise to

Since any column of A 0 contains exactly two nonzero entries, namely 1 and −1, we have

This give rise to the fact that the KCL system A 0 i(t)=0 contains redundant equations. Such redundancies occur more than ever in the KVL B 0 u=0.

Remark 4.3

(Self-loops in electrical circuits)

Kirchhoff’s voltage law immediately yields that the voltage along a branch with equal incidence nodes vanishes. Kirchhoff’s current law further implies that the current from a self-loop flows into the corresponding node and also flows out of this node. A consequence is that self-loops are physically neutral: Their removal does not influence the behavior of the remaining circuit. The assumption of their absence is therefore no loss of generality.

The next aim is to determine a set of (linearly) independent equations out of the so far constructed equations. To achieve this, we present several connections between some properties of the graph and its matrices A 0, B 0. We generalize the results in [7] to directed graphs. As a first observation, we may reorder the branches and nodes of \(\mathcal{G}=(V,E,\varphi)\) into according to connected components such that we end up with

where A 0,i and B 0,i are, respectively, the all-node incidence matrix and all-loop matrix of the ith connected component.

A spanning subgraph \(\mathcal{K}\) of the finite graph \(\mathcal{G}\) has an all-node incidence matrix \(A_{\mathcal{K}}\), which is constructed by deleting rows of A 0 corresponding to the branches of the complementary spanning subgraph \(\mathcal{G}-\mathcal{K}\). By a suitable reordering of the branches, the incidence matrix has a partition

Theorem 4.4

Let a finite graph \(\mathcal{G}=(V,E,\varphi)\) with n branches E={e 1,…,e n } and m nodes V={v 1,…,v m } and no self-loops. Let \({A}_{0}\in\mathbb{R}^{m,n}\) be the all-node incidence matrix of \(\mathcal{G}\). Then

-

(a)

\(\operatorname{rank}{A}_{0}=m-k\).

-

(b)

\(\mathcal{G}\) contains a cutset if and only if \(\operatorname {rank}{A}_{0}=m-1\).

-

(c)

\(\mathcal{G}\) is a tree if and only if \(A_{0}\in\mathbb {R}^{m,m-1}\) and kerA 0={0}.

-

(d)

\(\mathcal{G}\) contains loops if and only if kerA 0={0}.

Proof

-

(a)

Since all-loop incidence matrices of nonconnected graphs allow a representation (18), the general result can be directly inferred if we prove the statement for the case where \(\mathcal{G}\) is connected. Assume that A 0 is the incidence matrix of a connected graph, and assume that \(A_{0}^{\mathrm{T}}x=0\) for some \(x\in\mathbb{R}^{m}\). Utilizing (17), we need to show that all entries of x are equal for showing that \(\operatorname{rank}{A}_{0}=m-1\). By a suitable reordering of the rows of A 0 we may assume that the first k entries of x are nonzero, whereas the last m−k entries are zero, that is, \(x=[x_{1}^{\mathrm{T}}\, 0]^{\mathrm{T}}\), where all entries of x 1 are nonzero. By a further reordering of the columns we may assume that A 0 is of the form

$$A_0= \begin{bmatrix}A_{11}&0\\A_{21}&A_{22} \end{bmatrix} , $$where each column vector of A 11 is not the zero vector. This gives \(A_{11}^{\mathrm{T}}x_{1}=0\).

Now take an arbitrary column vector a 21,i of A 21. Since each column vector of A 0 has exactly two nonzero entries, a 21,i either has no, one, or two nonzero entries. The latter case implies that the ith column vector of A 11 is the zero vector, which contradicts the construction of A 21. If a 21,i has exactly one nonzero entry at the jth position, the relation x 1 A 11=0 gives rise to the fact that the jth entry of x 1 vanishes. Since this is a contradiction, the whole matrix A 21 vanishes. Therefore, the all-node incidence matrix is block-diagonal. This however implies that none of the last m−k nodes is connected to the first k nodes, which is a contradiction to \(\mathcal{G}\) being connected.

-

(b)

This result follows from (a) by using the fact that a graph contains cutsets if and only if it is connected.

-

(c)

By definition, \(\mathcal{G}\) is a tree if and only if it is connected and the deletion of an arbitrary branch results in a disconnected graph. By (a) this means that the deletion of an arbitrary column A 0 results in a matrix with rank smaller than m−1. This is equivalent to the columns of A 0 being linearly independent and spanning an (n−1)-dimensional space, in other words, \(\operatorname {rank}A_{0}=m-1\) and kerA 0={0}.

-

(d)

Assume that the kernel of A 0 is trivial. Seeking for a contradiction, assume that \(\mathcal{G}\) contains a loop l. Define the vector \(b_{l}=[b_{l1},\ldots,b_{ln}]\in\mathbb {R}^{1,n}\setminus\{ 0\}\) with

$$b_{lk}= \begin{cases} 1 &\text{if branch}\ k\ \mbox{belongs to}\ l\ \mbox{and has the same orientation,}\\ -1 &\text{if branch}\ k\ \mbox{belongs to}\ l\ \mbox{and has the contrary orientation,}\\ 0 &\text{otherwise.} \end{cases} $$Let a 1…,a n be the column vectors of A 0. Then, by construction of b l , each row of the matrix

$$ \begin{bmatrix}b_{l1}a_1&\ldots&b_{ln}a_n \end{bmatrix} $$contains exactly one entry 1 and one entry −1 and zeros elsewhere. This implies \(A_{0}b_{l}^{\mathrm{T}}=0\).

Conversely, assume that \(\mathcal{G}\) contains no loops. By separately considering the connected components and the consequent structure (18) of A 0, it is again no loss of generality to assume that \(\mathcal{G}\) is connected. Let e be a branch of \(\mathcal{G}\), and let \(\mathcal{K}\) be the spanning subgraph whose only branch is e. Then \(\mathcal{G}-\mathcal {K}\) results in a disconnected graph (otherwise, (e,e l1,…,e lv ) would be a loop, where (e l1,…,e lv ) is an elementary path in \(\mathcal{G}-\mathcal{K}\) from the terminal node to the initial node of e). This however implies that the deletion of an arbitrary column of A 0 results in a matrix with rank smaller than n−1, which means that the columns of A 0 are linearly independent, that is, kerA 0={0}. □

Since, by the dimension formula, \(\dim\ker A_{0}^{\mathrm{T}}=k\), we can infer from (14) and (17) that \(\ker A_{0}^{\mathrm{T}}=\operatorname{span}\{c_{1},\ldots,c_{k}\}\), where

Furthermore, using the argumentation of the first part in the proof of (d), we obtain that

We will show that the row vectors of B 0 even generate the kernel of A 0.

Based on a spanning subgraph \(\mathcal{K}\) of \(\mathcal{G}\), we may, by a suitable reordering of columns, perform a partition of the loop matrix according to the branches of \(\mathcal{K}\) and \(\mathcal {G}-\mathcal{K}\), that is,

If a subgraph \(\mathcal{T}\) is a tree, then any branch e in \(\mathcal{G}-\mathcal{T}\) defines a loop in \(\mathcal{G}\) via (e,e l1,…,e lv ), where (e l1,…,e lv ) is an elementary path in \(\mathcal{T}\) from the terminal node to the initial node of e. Consequently, we may reorder the rows of \(B_{\mathcal {T}}\) and \(B_{\mathcal{G}-\mathcal{T}}\) to obtain the form

Such a representation will be crucial for the proof of the following result.

Theorem 4.5

Let \(\mathcal{G}=(V,E,\varphi)\) be a finite graph with no self-loops, n branches E={e 1,…,e n }, and m nodes V={v 1,…,v m }, and let the all-node incidence matrix \({A}_{0}\in\mathbb {R}^{m,n}\) and b loops {l 1,…,l b } be given. Furthermore, let k be the number of connected components of \(\mathcal{G}\). Then

-

(a)

\(\operatorname{im}{B}_{0}^{\mathrm{T}}=\ker A_{0}\);

-

(b)

\(\operatorname{rank}{B}_{0}=n-m+k\).

Proof

The relation \(\operatorname{im}{B}_{0}^{\mathrm{T}}\subset\ker A_{0}\) follows from (21). Therefore, the overall result follows if we prove that \(\operatorname{rank}{B}_{0}\geq n-m+k\). Again, by separately considering the connected components and using the block-diagonal representations (18), the overall result immediately follows if we prove the case k=1. Assuming that \(\mathcal{G}\) is connected, we consider a tree \(\mathcal{T}\) in \(\mathcal{G}\). Then we may assume that the all-loop matrix is of the form \(B_{0}= [B_{0\mathcal{T}}\ B_{0\mathcal{G}-\mathcal{T}}] \) with submatrices as is (23). However, since the latter submatrix has full column rank and n−m+1 columns, we have

which proves the desired result. □

Statement (a) implies that the orthogonal spaces of \(\operatorname {im}{B}_{0}^{\mathrm{T}}\) and kerA 0 coincide as well. Therefore,

To simplify verbalization, we arrange that, by referring to connectedness, the incidence matrix, loop matrix, etc. of an electrical circuit, we mean the corresponding notions and concepts for the graph describing the electrical circuit.

It is a reasonable assumption that an electrical circuit is connected; otherwise, since the connected components do not physically interact, they can be considered separately.

Since the rows of A 0 sum up to the zero row vector, one might delete an arbitrary row of A 0 to obtain a matrix A having the same rank as A 0. We call A the incidence matrix of \(\mathcal{G}\). The property \(\operatorname{rank}A_{0}=\operatorname{rank}A\) implies \(\operatorname{im}A_{0}^{\mathrm{T}}=\operatorname{im}A^{\mathrm {T}}\). Consequently, the following holds.

Theorem 4.6

(Kirchhoff’s current law for electrical circuits)

Let a connected electrical circuit with n branches and m nodes and no self-loops be given. Let \(A\in\mathbb{R}^{m-1,n}\), and let, for j=1,…,n, i j (t) be the current in branch e j in the direction of initial to terminal node of e j . Let \(i(t)\in\mathbb {R}^{n}\) be defined as in (13). Then for all times t,

We can furthermore construct the loop matrix \(B\in\mathbb{R}^{n-m+1,n}\) by picking n−m+1 linearly independent rows of B 0. This implies \(\operatorname{im}B_{0}^{\mathrm{T}}=\operatorname{im}B^{\mathrm {T}}\), and we can formulate Kirchhoff’s voltage law as follows.

Theorem 4.7

(Kirchhoff’s voltage law for electrical circuits)

Let a connected electrical circuit with n branches and m nodes be given. Let \(B\in\mathbb{R}^{n-m+1,n}\), and let, for j=1,…,n, u j (t) be the voltage in branch e j between the initial and terminal node of e j . Let \(u(t)\in\mathbb{R}^{n}\) be defined as in (15). Then for all times t,

A constructive procedure for determining the loop matrix B can be obtained from the findings in front of Theorem 4.5: Having a tree \(\mathcal{T}\) in the graph \(\mathcal{G}\) describing an electrical circuit, the loop matrix can be determined by

where the jth row of \(B_{\mathcal{T}}\) contains the information on the path in \(\mathcal{T}\) between the initial and terminal nodes of the (m−1+j)th branch of \(\mathcal{G}\).

The formulations (24) and (25) of Kirchhoff’s laws give rise to the fact that a connected circuit includes n=(m−1)+(n−m+1) linearly independent Kirchhoff equations. Using Theorem 4.5 and \(\operatorname{im}A_{0}^{\mathrm {T}}=\operatorname{im}A^{\mathrm{T}}\), \(\operatorname {im}B_{0}^{\mathrm{T}}=\operatorname{im}B^{\mathrm{T}}\), we further have

Kirchhoff’s voltage law may therefore be rewritten as \(u(t)\in \operatorname{im}A^{\mathrm{T}}\). Equivalently, there exists some \(\phi(t)\in\mathbb{R}^{m-1}\) such that

The vector ϕ(t) is called the node potential. Its ith component expresses the voltage between the ith node and the node corresponding to the deleted row of A 0. This relation can therefore be interpreted as a lumped version of (11). The node potential of the deleted row is set to zero, whence the deletion of a row of A 0 can therefore be interpreted as grounding (compare Sect. 2.3).

Equivalently, Kirchhoff’s current law may be reformulated in the way that there exists a loop current \(\iota(t)\in\mathbb {R}^{n-m+1}\) such that

The so far developed graph theoretical results give rise to a lumped version of Theorem 3.10.

Theorem 4.8

(Tellegen’s law for electrical circuits)

With the assumption and notation of Theorems 4.6 and 4.7, for all times t 1,t 2, the vectors i(t 1) and u(t 2) are orthogonal in the Euclidean sense, that is,

Proof

For the incidence matrix A of the graph describing the electrical circuit, let \(\varPhi(t_{2})\in\mathbb{R}^{m-1}\) be the corresponding vector of node potentials at time t 2. Then

□

2.4.3 Auxiliary Results on Graph Matrices

This section closes with some further results on the connection between properties of subgraphs and linear algebraic properties of the corresponding submatrices of incidence and loop matrices. Corresponding for undirected graphs can be found in [7]. First, we declare some manners of speaking.

Definition 4.9

Let \(\mathcal{G}\) be a graph, and let \(\mathcal{K}\) be a spanning subgraph.

-

(i)

\(\mathcal{L}\) is called a \(\mathcal{K}\)-cutset if \(\mathcal{L}\) is a cutset of \(\mathcal{G}\) and a spanning subgraph of \(\mathcal{K}\).

-

(ii)

l is called a \(\mathcal{K}\)-loop if l is a loop and all branches of l are contained in \(\mathcal{K}\).

Lemma 4.10

Let \(\mathcal{G}\) be a connected graph with n branches and m nodes, no self-loops, an incidence matrix \(A\in\mathbb{R}^{m-1,n}\), and a loop matrix \(B\in\mathbb{R}^{n-m+1,n}\). Further, let \(\mathcal{K}\) be a spanning subgraph. Assume that the branches of \(\mathcal{G}\) are sorted so that

-

(a)

The following three assertions are equivalent:

-

(i)

\(\mathcal{G}\) does not contain \(\mathcal{K}\)-cutsets;

-

(ii)

\(\ker A_{\mathcal{G}-\mathcal{K}}^{\mathrm{T}}=\{0\}\);

-

(iii)

\(\ker B_{\mathcal{K}}=\{0\}\).

-

(i)

-

(b)

The following three assertions are equivalent:

-

(i)

\(\mathcal{G}\) does not contain \(\mathcal{K}\)-loops;

-

(ii)

\(\ker A_{\mathcal{K}}=\{0\}\);

-

(iii)

\(\ker B_{\mathcal{G}-\mathcal{K}}^{\mathrm{T}}=\{0\}\).

-

(i)

Proof

-

(a)

The equivalence of (i) and (ii) follows from Theorem 4.4 (b). To show that (ii) implies (iii), assume that \(B_{\mathcal{K}}x=0\). Then

$$\begin{pmatrix}x\\0 \end{pmatrix} \in\ker \begin{bmatrix}B_{\mathcal{K}}&B_{\mathcal{G}-\mathcal{K}} \end{bmatrix} =\operatorname{im} \begin{bmatrix}A_{\mathcal{K}}^{\mathrm{T}}\\ A_{\mathcal{G}-\mathcal {K}}^{\mathrm{T}} \end{bmatrix} , $$that is, there exists \(y\in\mathbb{R}^{m-1}\) such that

$$\begin{pmatrix}x\\0 \end{pmatrix} = \begin{bmatrix}A_{\mathcal{K}}^{\mathrm{T}}\\ A_{\mathcal{G}-\mathcal {K}}^{\mathrm{T}} \end{bmatrix} y. $$In particular, we have \(A_{\mathcal{G}-\mathcal{K}}^{\mathrm {T}}y=0\), whence, by assumption (ii), y=0. Thus, \(x=A_{\mathcal {K}}^{\mathrm{T}}y=0\).

To prove that (iii) is sufficient for (ii), we can perform the same argumentation by interchanging the roles of \(A_{\mathcal{G}-\mathcal {K}}^{\mathrm{T}}\) and \(B_{\mathcal{K}}\).

-

(b)

The equivalence of (i) and (ii) follows from Theorem 4.4 (d). The equivalence of (ii) and (iii) can be proven analogously to part (a) (by interchanging the roles of \(\mathcal{K}\) and \(\mathcal {G}-\mathcal{K}\) and of the loop and incidence matrices). □

The subsequent two auxiliary results are concerned with properties of subgraphs of subgraphs and gives some equivalent characterizations in terms of properties of their incidence and loop matrices.

Lemma 4.11

Let \(\mathcal{G}\) be a connected graph with n branches and m nodes, no self-loops, an incidence matrix \(A\in\mathbb{R}^{n-1,m}\), and a loop matrix \(B\in\mathbb{R}^{n-m+1,n}\). Further, let \(\mathcal{K}\) be a spanning subgraph of \(\mathcal{G}\), and let \(\mathcal{L}\) be a spanning subgraph of \(\mathcal{K}\). Assume that the branches of \(\mathcal{G}\) are sorted so that

and define

Then the following four assertions are equivalent:

-

(i)

\(\mathcal{G}\) does not contain \(\mathcal{K}\)-loops except for \(\mathcal{L}\)-loops;

-

(ii)

$$\ker A_{\mathcal{K}}=\ker A_{\mathcal{L}}\times\{0\}. $$

-

(iii)

For a matrix \(Z_{\mathcal{L}}\) with \(\operatorname {im}Z_{\mathcal{L}}=\ker A^{\mathrm{T}}_{\mathcal{L}}\),

$$\ker Z_{\mathcal{L}}^{\mathrm{T}}A_{\mathcal{K}-\mathcal{L}}=\{0\}. $$ -

(iv)

$$\ker B_{\mathcal{G}-\mathcal{L}}^{\mathrm{T}}=\ker B_{\mathcal {K}-\mathcal{L}}^{\mathrm{T}}. $$

-

(v)

For a matrix \(Y_{\mathcal{G}-\mathcal{K}}\) with \(\operatorname {im}Y_{\mathcal{G}-\mathcal{K}}=\ker B^{\mathrm{T}}_{\mathcal {G}-\mathcal{K}}\),

$$Y_{\mathcal{K}-\mathcal{L}}^{\mathrm{T}}B_{\mathcal{G}-\mathcal{K}}=0. $$

Proof

To show that (i) implies (ii), let \(\tilde{B}_{\mathcal{K}}\) be a loop matrix of the graph \(\mathcal{K}\) (note that, in general, \(\tilde{B}_{\mathcal{K}}\) and \({B}_{\mathcal {K}}\) do not coincide). The assumption that all \(\mathcal{K}\)-loops are actually \({\mathcal{L}}\)-loops implies that \(\tilde{B}_{\mathcal{K}}\) is structured as

Since \(\operatorname{im}\tilde{B}_{\mathcal{K}}=\ker A_{\mathcal {K}}\), we have \(\ker A_{\mathcal{K}}=\operatorname{im}\tilde {B}_{\mathcal{L}}^{\mathrm{T}}\times\{0\}\). This further implies that \(\operatorname{im}\tilde{B}_{\mathcal {L}}^{\mathrm{T}}=\ker A_{\mathcal{L}}\) or, in other words, (b) holds.

Now we show that (ii) is sufficient for (i). Let l be a loop in \(\mathcal{K}\). Assume that \(\mathcal{K}\) has n K branches and \(\mathcal{L}\) has n L branches. Define the vector \(b_{l}=[b_{l1},\ldots,b_{ln_{K}}]\in\mathbb{R}^{1,m}\setminus\{0\}\) with

Then (ii) gives rise to \(b_{ln_{L}+1}=\dots=b_{n_{K}}=0\), whence the branches of \(\mathcal{K}-\mathcal{L}\) are not involved in l, that is, l is actually an \(\mathcal{L}\)-loop.

Aiming to show that (iii) holds, assume (ii). Let \(x\in\ker Z_{\mathcal{L}}^{\mathrm{T}}A_{\mathcal{K}-\mathcal{L}}\). Then

Thus, there exists a real vector y such that

This gives rise to

and, consequently, x vanishes.

For the converse implication, it suffices to show that (c) implies \(\ker A_{\mathcal{K}}\subset\ker A_{\mathcal{L}}\times\{0\}\) (the reverse inclusion holds in any case). Assume that

that is, \(A_{\mathcal{L}}y+A_{\mathcal{K}-\mathcal{L}}x=0\). Multiplying this equation from the left by \(Z_{\mathcal{L}}^{\mathrm{T}}\), we obtain \(x\in\ker Z_{\mathcal{L}}^{\mathrm{T}}A_{\mathcal{K}-\mathcal {L}}=\{0\}\), that is, x=0 and \(A_{\mathcal{L}}y=0\). Hence,

The following proof concerns the sufficiency of (ii) for (iv): It suffices to show that (ii) implies

since the converse inclusion holds in any case. Assume that \(B_{\mathcal{G}-\mathcal{L}}^{\mathrm{T}}x=0\). Then

whence \(B_{\mathcal{K}-\mathcal{L}}^{\mathrm{T}}x\).

Conversely, assume that (iv) holds and let

Then

that is, there exists a real vector z such that \(y=B_{\mathcal {L}}^{\mathrm{T}}z\), \(x=B_{\mathcal{K}-\mathcal{L}}^{\mathrm{T}}z\) and \(B_{\mathcal{G}-\mathcal{K}}^{\mathrm{T}}z=0\). The latter implies that \(x=B_{\mathcal{K}-\mathcal{L}}^{\mathrm{T}}z=0\), that is, (b) holds.

It remains to show that (iv) and (v) are equivalent. Assume that (iv) holds. Then

whence

Finally, assume that \(Y_{\mathcal{K}-\mathcal{L}}^{\mathrm {T}}B_{\mathcal{G}-\mathcal{K}}=0\) and let \(B_{\mathcal{G}-\mathcal {K}}^{\mathrm{T}}x=0\). Then \(x\in\operatorname{im}Y_{\mathcal{K}-\mathcal{L}}\), that is, there exists a real vector y such that \(x=Y_{\mathcal{K}-\mathcal {L}}y\). This implies

So far, we have shown that \(Y_{\mathcal{K}-\mathcal{L}}^{\mathrm {T}}B_{\mathcal{G}-\mathcal{K}}=0\) implies \(\ker B_{\mathcal{G}-\mathcal{K}}^{\mathrm{T}}\subset\ker B_{\mathcal{G}-\mathcal{L}}^{\mathrm{T}}\). Since the other inclusion holds in any case (\(B_{\mathcal{G}-\mathcal{K}}^{\mathrm{T}}\) is a submatrix of \(B_{\mathcal{G}-\mathcal{L}}^{\mathrm{T}}\)), the overall result has been proven. □

Lemma 4.12

Let \(\mathcal{G}\) be a connected graph with n branches and m nodes, no self-loops, an incidence matrix \(A\in\mathbb{R}^{m-1,n}\), and a loop matrix \(B\in\mathbb{R}^{n-m+1,n}\). Further, let \(\mathcal{K}\) be a spanning subgraph of \(\mathcal{G}\), and let \(\mathcal{L}\) be a spanning subgraph of \(\mathcal{L}\). Assume that the branches of \(\mathcal{G}\) are sorted so that

Then the following four assertions are equivalent:

-

(i)

\(\mathcal{G}\) does not contain \(\mathcal{K}\)-cutsets except for \(\mathcal{L}\)-cutsets;

-

(ii)

The initial and terminal nodes of each branch of \(\mathcal {K}-\mathcal{L}\) are connected by a path in \({\mathcal{G}-\mathcal{K}}\).

-

(iii)

$$\ker A_{\mathcal{G}-\mathcal{K}}^{\mathrm{T}}=\ker A_{\mathcal {G}-\mathcal{L}}^{\mathrm{T}}. $$

-

(iv)

For a matrix \(Z_{\mathcal{G}-\mathcal{K}}\) with \(\operatorname {im}Z_{\mathcal{G}-\mathcal{K}}=\ker A^{\mathrm{T}}_{\mathcal {G}-\mathcal{K}}\),

$$Z_{\mathcal{K}-\mathcal{L}}^{\mathrm{T}}A_{\mathcal{G}-\mathcal{K}}=0. $$ -

(v)

$$\ker B_{\mathcal{K}}=\ker B_{\mathcal{L}}\times\{0\}. $$

-

(vi)

For a matrix \(Y_{\mathcal{L}}\) with \(\operatorname {im}Y_{\mathcal{L}}=\ker B^{\mathrm{T}}_{\mathcal{L}}\),

$$\ker Y_{\mathcal{L}}^{\mathrm{T}}B_{\mathcal{K}-\mathcal{L}}=\{0\}. $$

Proof

By interchanging the roles of loop and incidence matrices, the proof of equivalence of the assertions (c)–(f) is totally analogous to the proof of equivalence of (ii)–(v) in Lemma 4.11. Hence, it suffices to show that (i), (ii), and (iii) are equivalent:

First, we show that (i) implies (iii): As a first observation, note that since \(A_{\mathcal{K}-\mathcal{L}}\) is a submatrix of \(A_{\mathcal{K}}\), (iii) is equivalent to \(\operatorname{im}A_{\mathcal{K}-\mathcal{L}}\subset\operatorname {im}A_{\mathcal{G}-\mathcal{K}}\). Now seeking for a contradiction, assume that (iii) is not fulfilled. Then, by the preliminary consideration, there exists a column vector a 1 of \(A_{\mathcal{K}-\mathcal{L}}\) with \(a_{1}\notin\operatorname{im}A_{\mathcal{G}-\mathcal{K}}\). Now, for k as large as possible, successively construct column vectors \(\tilde {a}_{1},\ldots,\tilde{a}_{k}\) of \(A_{\mathcal{K}}\) with the property that

Let a 2,…,a j be the set of column vectors of \(A_{\mathcal{K}}\) that have not been chosen by the previous procedure. Since the overall incidence matrix A has full row rank, the construction of \(\tilde{a}_{1},\ldots,\tilde{a}_{k}\) leads to

Now construct the spanning graph \(\mathcal{C}\) by taking the branches a 1,…,a j . Due to (29), \(\mathcal{G}-\mathcal{C}\) is disconnected. Furthermore, \(\mathcal{C}\) contains a branch of \(\mathcal{K}-\mathcal {L}\), namely the one corresponding to the column vector a 1. Since, furthermore, (30) implies that the addition of any branch of \(\mathcal{C}\) to \(\mathcal{G}-\mathcal{C}\) results is a connected graph, we have constructed a cutset in \(\mathcal{K}\) that contains branches of \(\mathcal{K}-\mathcal{L}\).

The next step is to show that (iii) is sufficient for (ii): Assume that the nodes are sorted by connected components in \({\mathcal{G}-\mathcal{K}}\), that is,

Then the matrices \(A_{\mathcal{G}-\mathcal{K},i}\ i=1,\ldots,n\), are all-node incidence matrices of the connected components (except for the component i g connected to the grounding node; then \(A_{\mathcal{G}-\mathcal{K},i_{g}}\) is an incidence matrix). Seeking for a contradiction, assume that e is a branch in \(\mathcal{K}-\mathcal{L}\) whose incidence nodes are not connected by a path in \(\mathcal{G}-\mathcal{K}\). Then a k has not more than two nonzero entries, and one of the following two cases holds:

-

(a)

If e is connected to the grounding node, then a k is the multiple of a unit vector corresponding to a position not belonging to the grounded component, whence \(a_{k}\notin A_{\mathcal{G}-\mathcal{K}}\).

-

(b)

If e connects two nongrounded nodes, then a k has two nonzero entries, which are located at rows corresponding to two different matrices \(A_{\mathcal{G}-\mathcal{K},i}\) and \(A_{\mathcal{G}-\mathcal{K},j}\) in \(A_{\mathcal{G}-\mathcal{K}}\). This again implies \(a_{k}\notin A_{\mathcal{G}-\mathcal{K}}\). This is again a contradiction to (iii).

For the overall statement, it suffices to prove that (ii) implies (i). Let \(\mathcal{C}\) be a cutset of \(\mathcal{G}\) that is contained in \(\mathcal{K}\) and assume that e is a branch of \(\mathcal{C}\) that is contained in \(\mathcal{K}-\mathcal{L}\). Since there exists some path in \(\mathcal {G}-\mathcal{K}\) that connects the incidence nodes of e, the addition of e to \(\mathcal{G}-\mathcal{C}\) (which is a supergraph of \(\mathcal {G}-\mathcal{K}\)) does not connect two different connected components. The resulting graph is therefore still disconnected, which is a contradiction to \(\mathcal {C}\) being a cutset of \(\mathcal{G}\). □

2.4.4 Notes and References

-

(i)

The representation of the Kirchhoff laws by means of incidence and loop matrices is also called nodal analysis and mesh analysis, respectively [16, 19, 32].

-

(ii)

The part in Proposition 4.10 about incidence matrices and subgraphs has also been shown in [22]; the parts in Lemmas 4.11 and 4.12 about incidence matrices and subgraphs have also been shown in [22]. The parts on loop matrices is novel.

-

(iii)

The correspondence between subgraph properties and linear algebraic properties of the corresponding incidence and loop matrices is an interesting feature. It can be seen from (20) that the kernel of a transposed incidence matrix can be computed by a determination of the connected components of a graph. As well, we can infer from (23) and the preceding argumentation that loop matrices can be determined by a simple determination of a tree. Conversely, the computation of the kernel of an incidence matrix leads to the determination of the loops in a (sub)graph. It is further shown in [9, 28] that a matrix \(Z_{\mathcal{L}}^{\mathrm{T}}A_{\mathcal{K}-\mathcal{L}}\) (see Lemma 4.11) has an interpretation as an incidence matrix of the graph, which is constructed from \(\mathcal{K}-\mathcal{L}\) by merging those nodes that are connected by a path in \(\mathcal{L}\). The determination of its nullspace thus again leads a graph theoretical problem.

Note that to determine nullspaces, graph computations are by far preferable to linear algebraic method. Efficient algorithms for the aforementioned problems can be found in [18]. Note that the aforementioned graph theoretical features have been used in [20, 21] to analyze special properties of circuit models.

2.5 Circuit Components: Sources, Resistances, Capacitances, Inductances

We have seen in the previous section that, for a connected electrical circuit with n branches and m nodes, the Kirchhoff laws lead to n=(m−1)+(n−m+1) linearly independent algebraic equations for the voltages and currents. Since, altogether, voltages and currents are 2n variables, mathematical intuition gives rise to the fact that n further relations are missing to completely describe the circuit. The behavior of a circuit does, indeed, not only depend of interconnectivity, the so-called network topology, but also on the type of electrical components located on the branches. These can, for instance, be sources, resistances, capacitances, and inductances. These will either (such as in case of a source) prescribe the voltage or the current, or they form a relation between voltage and current of a certain branch. In this section, we will collect these relations for the aforementioned components.

2.5.1 Sources

Sources describe physical interaction of an electrical circuit with the environment. Voltage sources are elements where the voltage  is prescribed. In current sources, the current \(i_{\mathcal {I}}(\cdot):I\rightarrow\mathbb{R}\) is given beforehand. The symbols of voltage and current sources are presented in Figs. 7 and 8.

is prescribed. In current sources, the current \(i_{\mathcal {I}}(\cdot):I\rightarrow\mathbb{R}\) is given beforehand. The symbols of voltage and current sources are presented in Figs. 7 and 8.

We will see in Sect. 2.6 that the physical variables  (and therefore also energy flow through sources) are determined by the overall electrical circuit. Some further assumptions on the prescribed functions

(and therefore also energy flow through sources) are determined by the overall electrical circuit. Some further assumptions on the prescribed functions  (such as, e.g., smoothness) will also depend on the connectivity of the overall circuit; this will as well be a subject of Sect. 2.6.

(such as, e.g., smoothness) will also depend on the connectivity of the overall circuit; this will as well be a subject of Sect. 2.6.

2.5.2 Resistances

We make the following ansatz for a resistance: Consider a conductor material in the cylindric spatial domain (see Fig. 9)

with length ℓ and radius r.

For ξ x ∈[0,ℓ], we define the cross-sectional area by

To deduce the relation between the resistive voltage and current from Maxwell’s equations, we make the following assumptions.

Assumption 5.1

(The electromagnetic field inside resistances)

-

(a)

The electromagnetic field inside the conductor material is stationary, that is,

$$\frac{\partial}{\partial t}D\equiv\frac{\partial}{\partial t}B\equiv0. $$ -

(b)

Ω does not contain any electric charges.

-

(c)

For all ξ x ∈[0,ℓ], the voltage between two arbitrary points of \(\mathcal{A}_{\xi_{x}}\) vanishes.

-

(d)

The conductance function \(g:\mathbb{R}^{3}\times\varOmega\to \mathbb{R}^{3}\) has the following properties:

-

(i)

g is continuously differentiable.

-

(ii)

g is homogeneous, that is, g(E,ξ 1)=g(E,ξ 2) for all \(E\in\mathbb{R}^{3}\) and ξ 1,ξ 2∈Ω.

-

(iii)

g is strictly incremental, that is, (E 1−E 2)T g(E 1−E 2,ξ)>0 for all distinct \(E_{1},E_{2}\in\mathbb{R}^{3}\) and ξ∈Ω.

-

(iv)

g is isotropic, that is, g(E,ξ) and E are linearly dependent for all \(E\in\mathbb{R}^{3}\) and ξ∈Ω.

-

(i)

Using the definition of the voltage (10), property (c) implies that the electric field intensity is directed according to the conductor, that is, E(t,ξ)=e(t,ξ)⋅e x , where e x is the canonical unit vector in the x-direction, and e(⋅,⋅) is some scalar-valued function. Homogeneity and isotropy, smoothness, and the incrementation property of the conductance function then imply that

for some strictly increasing and differentiable function \({g_{x}}:\mathbb{R} \rightarrow\mathbb{R}\) with g x (0)=0. Further, by using (9) we can infer from the stationarity of the electromagnetic field that the field of electric current density is divergence-free, that is, \(\operatorname{div}j(\cdot,\cdot)\equiv0\). Consequently, g x (e(t,ξ)) is spatially constant. The strict monotonicity of g x then implies that e(t,ξ) is spatially constant, whence we can set up

for some scalar-valued function e only depending on time t (see Fig. 12).

Consider now the straight path \(\mathcal{S}\) between (0,0,0) and (ℓ,0,0). The normal of this path fulfills n(ξ)=e x for all \(\xi\in\mathcal{S}\). As a consequence, the voltage reads

Consider the cross-sectional area \(\mathcal{A}_{0}\) (compare (33)). The normal of \(\mathcal {A}_{0}\) fulfills n(ξ)=e x for all \(\xi\in\mathcal{A}_{0}\). Then obtain for the voltage u(t) between the ends of the conductor and the current i(t) through the conductor that

As a consequence, we obtain the algebraic relation

where \(g:\mathbb{R}\rightarrow\mathbb{R}\) is a strictly increasing and differentiable function with g(0)=0. The symbol of a resistance is presented in Fig. 10.

Remark 5.2

(Linear resistance)

Note that in the case where the friction function is furthermore linear (i.e., g(E(t,ξ),ξ)=c g ⋅E(t,ξ)), the resistance relation (35) becomes

where

is the so-called conductance value of the linear resistance.

Equivalently, we can write

where

Remark 5.3

(Resistance, energy balance)

The energy balance of a general resistance that is operated in the time interval [t 0,t f ]

where the latter inequality holds since the integrand is positive. A resistance is therefore an energy-dissipating element, that is, it consumes energy.

Note that, in the linear case, the energy balance simplifies to

2.5.3 Capacitances

We make the following ansatz for a capacitance: Consider again an electromagnetic medium in a cylindric spatial domain \(\varOmega\subset\mathbb{R}^{3}\) as in (32) with length ℓ and radius r (see also Fig. 9). To deduce the relation between capacitive voltage and current from Maxwell’s equations, we make the following assumptions.

Assumption 5.4

(The electromagnetic field inside capacitances)

-

(a)

The magnetic flux intensity inside the medium is stationary, that is,

$$\frac{\partial}{\partial t}B\equiv0. $$ -

(b)

The medium is a perfect isolator, that is, j(⋅,ξ)≡0 for all ξ∈Ω.

-

(c)

In the lateral area

$$\mathcal{A}_{\mathrm{lat}}=[0,\ell]\times\bigl\{ (\xi_y, \xi_z):\xi _y^2+\xi_z^2= r^2\bigr\} \subset\partial\varOmega $$of the cylindric domain Ω, the magnetic field intensity is directed orthogonally to \(\mathcal{A}_{\mathrm{lat}}\). In other words, for all \(\xi\in\mathcal{A}_{\mathrm{lat}}\) and all times t, the positively oriented normal n(ξ) and H(t,ξ) are linearly dependent.

-

(d)

There is no explicit algebraic relation between the electric current density and the electric field intensity.

-

(e)

Ω does not contain any electric charges.

-

(f)

For all ξ x ∈[0,ℓ], the voltage between two arbitrary points of \(\mathcal{A}_{\xi_{x}}\) (compare (33)) vanishes.

-

(g)

The function \(f_{e}:\mathbb{R}^{3}\times\varOmega\to\mathbb {R}^{3}\) has the following properties:

-

(i)

f e is continuously differentiable.

-

(ii)

f e is homogeneous, that is, f e (D,ξ 1)=f e (D,ξ 2) for all \(D\in\mathbb{R}^{3}\) and ξ 1,ξ 2∈Ω.

-

(iii)

The function \(f_{e}(\cdot,\xi):\mathbb{R}^{3}\rightarrow\mathbb {R}^{3}\) is invertible for some (and hence any) ξ∈Ω.

-

(iv)

f e is isotropic, that is, f e (D,ξ) and D are linearly dependent for all \(D\in\mathbb{R}^{3}\) and ξ∈Ω.

-

(i)

Using the definition of the voltage (10), property (c) implies that the electric field intensity is directed according to the conductor, that is, E(t,ξ)=e(t,ξ)⋅e x for some scalar-valued function e(⋅ ,⋅). Isotropy, homogeneity, and the invertibility of f e then implies that the electrical displacement is as well directed along the conductor, whence

for some differentiable and invertible function \({q_{x}}:\mathbb {R}\rightarrow \mathbb{R}\). Further, by using that, by the absence of electric charges, the field of electric displacement is divergence-free, we obtain that it is even spatially constant. Consequently, the electric field intensity is as well spatially constant, and we can set up

for some scalar-valued function e(⋅) only depending on time.

Using that the magnetic field is stationary, we can, as for resistances, infer that the electrical field is spatially constant, that is,

for some scalar-valued function e(⋅) only depending on time, and we can use the argumentation in as in (34) to see that the voltage reads

Assume that the current i(⋅) is applied to the capacitor. The current density inside Ω is additively composed of the current density induced by the applied current j appl(⋅,⋅) and the current density j ind(⋅,⋅) induced by the electric field. Since the medium in Ω is an isolator, the current density inside Ω vanishes. Consequently, for all times t and all ξ∈Ω,

The definition of the current yields

The definition of the cross-sectional area \(\mathcal{A}_{0}\) and the lateral surface \(\mathcal{A}_{\mathrm{lat}}\) yields \(\partial \mathcal{A}_{0}\subset\mathcal{A}_{\mathrm{lat}}\). By Maxwell’s equations, Stokes theorem, stationarity of the magnetic flux intensity, and the assumption that the tangential component magnetic field intensity vanishes in the lateral surface, we obtain

That is, we obtain the dynamic relation

for some function \(q:\mathbb{R}\rightarrow\mathbb{R}\). Note that the quantity q(u) has the physical dimension of electric charge, whence q(⋅) is called a charge function. It is sometimes spoken about the charge q(u(t)) of the capacitance. Note that q(u(t)) is a virtual quantity. Especially, there is no direct relation between the charge of a capacitance and the electric charge (density) as introduced in Sect. 2.3. The symbol of a capacitance is presented in Fig. 11.

Remark 5.5

(Linear capacitance)

Note that, in the case where the constitutive relation is furthermore linear (i.e., f e (D(t,ξ),ξ)=c c ⋅D(t,ξ)), the capacitance relation (35) becomes

where

is the so-called capacitance value of the linear capacitance.

Remark 5.6

(Capacitance, energy balance)

Isotropy and homogeneity of f e and the construction of the function q x further implies that the electric energy density fulfills

Hence, the function \(q_{x}:\mathbb{R}\rightarrow\mathbb{R}\) is invertible with

where

In particular, this function fulfills V e,x (0)=0 and V e,x (q)>0 for all \(q\in\mathbb{R}\setminus\{0\}\).

The construction of the capacitance function and assumption (3) on f e implies that \(q:\mathbb{R}\rightarrow\mathbb {R}\) is invertible with

Moreover,  and

and  for all

for all  .

.

Now we consider the energy balance of a capacitance that is operated in the time interval [t 0,t f ]

Consequently, the function  has the physical interpretation of an energy storage function. A capacitance is therefore a reactive element, that is, it stores energy.

has the physical interpretation of an energy storage function. A capacitance is therefore a reactive element, that is, it stores energy.

Note that, in the linear case, the storage function simplifies to

whence the energy balance then reads

Remark 5.7

(Capacitances and differentiation rules)

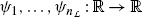

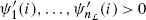

The previous assumptions imply that the function \(q:\mathbb {R}\rightarrow\mathbb{R}\) is differentiable. By the chain rule, (38) can be rewritten as

where

Monotonicity of q further implies that  is a pointwise positive function.

is a pointwise positive function.

By the differentiation rule for inverse functions, we obtain

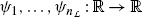

2.5.4 Inductances

It will turn out in this part that inductances are components that store magnetic energy. We will see that there are certain analogies to capacitances if one replaces electric by accordant magnetic physical quantities. The mode of action of an inductance can be explained by a conductor loop. We further make the (simplifying) assumption that the conductor with domain Ω forms a circle that is interrupted by an isolator of width zero (see Fig. 12). Assume that the circle radius is given by r, where the radius is here defined to be the distance from the circle midpoint to any conductor midpoint. Further, let l h be the conductor width.

To deduce the relation between inductive voltage and current from Maxwell’s equations, we make the following assumptions.

Assumption 5.8

(The electromagnetic field inside capacitances)

-

(a)

The electric displacement inside the medium Ω is stationary, that is,

$$\frac{\partial}{\partial t}D\equiv0. $$ -

(b)

The medium is a perfect conductor, that is, E(⋅,ξ)≡0 for all ξ∈Ω.

-

(c)

There is no explicit algebraic relation between the electric current density and the electric field intensity.

-

(d)

Ω does not contain any electric charges.

-

(e)

The function \(f_{m}:\mathbb{R}^{3}\times\varOmega\to\mathbb {R}^{3}\) has the following properties:

-

(i)

f m is continuously differentiable.

-

(ii)

f m is homogeneous, that is, f m (B,ξ 1)=f m (B,ξ 2) for all \(B\in\mathbb{R}^{3}\) and ξ 1,ξ 2∈Ω.

-

(iii)

The function \(f_{m}(\cdot,\xi):\mathbb{R}^{3}\rightarrow\mathbb {R}^{3}\) is invertible for some (and hence any) ξ∈Ω.

-

(iv)

f m is isotropic, that is, f m (B,ξ) and B are linearly dependent for all \(B\in\mathbb{R}^{3}\) and ξ∈Ω.

-

(i)

Let ξ=ξ x e x +ξ y e y +ξ z e z , and let \(h_{s}:\mathbb {R}\rightarrow \mathbb{R}\) be a differentiable function such that

and

We make the following ansatz for the magnetic flux intensity:

where h(⋅) is a scalar-valued function defined on a temporal domain in which the process evolves (see Fig. 12).

Using the definition of the current (8), Maxwell’s equations, property (c), and the stationarity of the electric field yields

Assume that the voltage u(⋅) is applied to the inductor. The electric field intensity inside the conductor is additively composed of the field intensity induced by the applied voltage E appl(⋅ ,⋅) and the electric field intensity E ind(⋅ ,⋅) induced by the magnetic field. Since the wire is a perfect conductor, the electric field intensity vanishes inside the wire. Consequently, for all times t and all \(\xi\in\mathbb{R}^{3}\) with

we have

Let \(A\subset\mathbb{R}^{3}\) be a circular area that is surrounded by the midline of the wire, that is,

Isotropy, homogeneity, and the invertibility of f m then implies that the magnetic flux is as well directed orthogonally to A, that is,

for some differentiable function \(\psi_{x}:\mathbb{R}\rightarrow \mathbb{R}\).

By Maxwell’s equations, Stokes theorem, the definition of the voltage, and a transformation to polar coordinates we obtain

That is, we obtain the dynamic relation

for some function \(\psi:\mathbb{R}\rightarrow\mathbb{R}\), which is called a magnetic flux function. The symbol of an inductance is presented in Fig. 13.

Remark 5.9

(Linear inductance)

Note that, in the case where the constitutive relation is furthermore linear (i.e., f m (B(t,ξ),ξ)=c i ⋅H(t,ξ)), the inductance relation (35) becomes

where

is the so-called inductance value of the linear inductance.

Remark 5.10

(Inductance, energy balance)

Isotropy and homogeneity of f m and the construction of the function ψ x further implies that the magnetic energy density fulfills

Hence, the function \(\psi_{x}:\mathbb{R}\rightarrow\mathbb{R}\) is invertible with

where

In particular, this function fulfills V m,x (0)=0 and V m,x (h)>0 for all \(h\in\mathbb{R}\setminus\{0\}\). The latter, together with the continuous differentiability of f m (⋅,ξ) and \(f_{m}^{-1}(\cdot,\xi)\), implies that the derivatives of both the function \(\psi_{x}^{-1}\) and ψ x are positive and, furthermore, ψ x (0)=0. Thus, the function \(\psi :\mathbb{R} \rightarrow\mathbb{R}\) is differentiable with

Consequently, ψ possesses a continuously differentiable and strictly increasing inverse function \(\psi^{-1}:\mathbb{R}\rightarrow \mathbb{R}\) with \(\operatorname{sign}\psi^{-1}(p)=\operatorname{sign}(p)\) for all \(p\in\mathbb{R}\). Now consider the function

The construction of  implies that

implies that  and

and  for all

for all  and, furthermore,

and, furthermore,

Now we consider the energy balance of an inductance that is operated in the time interval [t 0,t f ]

Consequently, the function  has the physical interpretation of an energy storage function. An inductance is therefore again a reactive element.

has the physical interpretation of an energy storage function. An inductance is therefore again a reactive element.

In the linear case, the storage function simplifies to

whence the energy balance then reads

Remark 5.11

(Inductances and differentiation rules)

The previous assumptions imply that the function \(\psi:\mathbb {R}\rightarrow \mathbb{R}\) is differentiable. By the chain rule, (42) can be rewritten as

where

The monotonicity of ψ further implies that the function  is pointwise positive.

is pointwise positive.

By the differentiation rule for inverse functions we obtain

2.5.5 Some Notes on Diodes

Resistances, capacitances, and inductances are typical components of analogue electrical circuits. The fundamental role in electronic engineering is however taken by semiconductor devices, such as diodes and transistors (see also Notes and References). A fine modeling of such components has to be done by partial differential equations (see, e.g., [36]).

In contrast to the previous sections, we are not going to model these components on the basis of the fundamental laws of the electromagnetic field. We are rather presenting a less accurate but often reliable ansatz to the description of their behavior by equivalent RCL circuits. As a showcase, we are considering diodes. The symbol of a diode is presented in Fig. 14.

An ideal diode is a component that allows the current to flow in one specified direction while blocking currents with opposite sign. A mathematical lax formulation of this property is

A mathematically more precise description is given the specification of the behavior

Since the product of voltage and current of an ideal diode always vanishes, this component behaves energetically neutral.

It is clear that such a behavior is not technically realizable. It can be nevertheless be approximated by a component consisting of a semiconductor crystal with two regions, each with a different doping. Such a configuration is called an np-junction [55].

The most simple ansatz for the modeling of a nonideal diode is by replacing it by a resistance with highly nonsymmetric conductance behavior, such as, for instance, the Shockley diode equation [55]

where i S >0 and u p >0 are material-dependent quantities. Note that the behavior of an ideal diode is the more approached, the bigger is u p .

A refinement of this model also includes capacitive effects. This can be done by adding some (small) capacitance in parallel to the resistance model of the diode [61].

2.5.6 Notes and References

-

(i)

In [16, 19, 32, 34, 60], component relations have also been derived. These however go with an a priori definition of capacitive charge and magnetic flux as physical quantities. In contrast to this, our approach is based on Maxwell’s equations with additional assumptions.

-

(ii)

Note that, apart from sources, resistances, and capacitances, there are various further components that occur in electrical circuits. Such components could, for instance, be controlled sources [22] (i.e., sources with voltage or current explicitly depending on some other physical quantity), semi-conductors [12, 36] (such as diodes and transistors), MEM devices [48, 53, 54], or transmission lines [42].

2.6 Circuit Models and Differential–Algebraic Equations

2.6.1 Circuit Equations in Compact Form

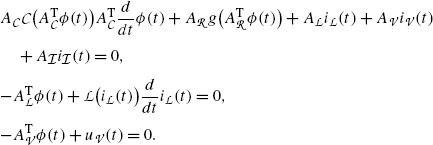

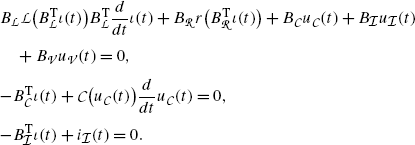

Having collected all relevant equations describing an electrical circuit, we are now ready to set up and analyze the overall model. Let a connected electrical circuit with n branches be given; let the vectors \(i(t),u(t)\in\mathbb{R}^{n}\) be defined as in (13) and (15), that is, their components are containing voltages and current of the respective branches. We further assume that the branches are ordered by the type of component, that is,

where

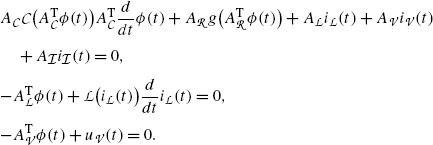

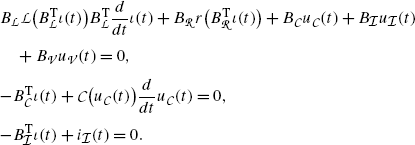

The component relations then read, in compact form,

for

where the scalar functions \(g_{i},q_{i},\psi_{i}:\mathbb{R}\rightarrow \mathbb{R}\) are respectively representing the behavior of the ith resistance, capacitance, and inductance. The assumptions of Sect. 2.5 imply that g(0)=0, and for all  ,

,

Further, since  and

and  , the functions

, the functions  and

and  possess inverses fulfilling

possess inverses fulfilling

where

In particular,  ,

,  , and

, and

Using the chain rule, the component relations of the reactive elements read (see Remarks 5.7 and 5.11)

where

In particular, the monotonicity of the scalar charge and flux functions implies that the ranges of the functions  and

and  are contained in the set of diagonal and positive definite matrices.

are contained in the set of diagonal and positive definite matrices.

The incidence and loop matrices can, as well, be partitioned according to the subdivision of i(t) and u(t) in (46), that is,

Kirchhoff’s laws can now be represented in two alternative ways, namely the incidence-based formulation (see (24) and (26))

or the loop-based formulation (see (25) and (27))

Having in mind that the functions  and \(i_{\mathcal {I}}(\cdot)\) are prescribed, the overall circuit is described by the resistance law

and \(i_{\mathcal {I}}(\cdot)\) are prescribed, the overall circuit is described by the resistance law  , the differential equations (49a) for the reactive elements, and the Kirchhoff laws either in the form (50) or (51). This altogether leads to a coupled system of equations of pure algebraic nature (such as the Kirchhoff laws and the component relations for resistances) together with a set of differential equations (such as the component relations for reactive elements). This type of systems is, in general, referred to as differential–algebraic equations. A more rigorous definition and some general facts on type is presented in Sect. 2.6.2. Since many of the above-formulated equations are explicit in one variable, several relations can be inserted into one another to obtain a system of smaller size. In the following, we discuss two possibilities: