Abstract

We propose a modified parallel-in-time — parareal — multi-level time integration method that, in contrast to previously proposed methods, employs a coarse solver based on a reduced model, built from the information obtained from the fine solver at each iteration. This approach is demonstrated to offer two substantial advantages: it accelerates convergence of the original parareal method for similar problems and the reduced basis stabilizes the parareal method for purely advective problems where instabilities are known to arise. When combined with empirical interpolation methods (EIM), we develop this approach to solve both linear and nonlinear problems and highlight the minimal changes required to utilize this algorithm to accelerate existing implementations. We illustrate the advantages through algorithmic design, through analysis of stability, convergence, and computational complexity, and through several numerical examples.

7.1 Introduction

With the number of computational cores on large scale computing platforms increasing, the demands on scalability of computational methods likewise increase, due partly to an increasing imbalance between the cost of memory access, communication and arithmetic capabilities. Among other things, traditional domain decomposition methods tend to stagnate in scaling as the number of cores increases and the computational cost is overwhelmed by other tasks. This suggests a need to consider the development of computational techniques that better balance these constraints and allow for the acceleration of large scale computational challenges.

A recent development in this direction is the parareal method, introduced in [16], which provides a strategy for ‘parallel-in-time’ computations and offers the potential for an increased level of parallelism. Relying on combining a computationally inexpensive but inaccurate solver with an accurate and expensive but parallel solver, the parareal method utilizes an iterative, predictor-corrector procedure that allows the expensive solver to run across many processors in parallel. Under suitable conditions, the parareal iteration converges after a small number of iterations to the serial solution [3]. During the last decade, the parareal method has been applied successfully to a number of applications (cf. [17, 19]), demonstrating its potential, accuracy, and robustness.

As a central and serial component, the coarse solver can strongly impact the efficiency and stability of the parareal algorithm. For example, if an explicit scheme is used in both the coarse and the fine stage of the algorithm, the efficiency of the parareal algorithm is limited by the upper bound on the time step size [19]. One can naturally also consider a different temporal integration approach such as an implicit approach, although the cost of this can be considerable and often requires the development of a new solver. An attractive alternative is to use a simplified physics model as the coarse solver [2, 17, 18], thereby ignoring small-scale phenomena but potentially impacting the accuracy. The success of such an approach is typically problem specific.

While the choice of the coarse solver clearly impacts accuracy and overall efficiency, the stability of the parareal method is considerably more subtle. For parabolic and diffusion dominated problems, stability is well understood and observed in many applications [12]. However, for hyperbolic and convection dominated problems, the question of stability is considerably more complex and generally remains open [3,8, 22]. In [8], the authors propose to regularly project the solution onto an energy manifold approximated by the fine solution. The performance of this projection method was demonstrated for the linear wave equation and the nonlinear Burgers’ equation. As an alternative, the Krylov subspace parareal method builds a new coarse solver by reusing all information from the corresponding fine solver at previous iterations. The stability of this approach was demonstrated for linear problems in structural dynamics [10] and a linear 2-D acoustic-advection system [21]. However, the Krylov subspace parareal method appears to be limited to linear problems.

The approach of combining the reduced basis method [20] with the parareal method for parabolic equations was initiated in [13] in which it is demonstrated that a coarse solver based on an existing reduced model offers better accuracy and reduces the number of iterations in the examples considered. However, that work offers no discussion on the construction of the reduced model, nor was there any attempt to analyze the stability and convergence of the method.

Inspired by [13, 21], we propose a modified parareal method, referred to as the reduced basis parareal method in which the Krylov subspace is replaced by a subspace spanned by a set of reduced bases, constructed on-the-fly from the fine solver. This method inherits most advantages of the Krylov subspace parareal method and is observed to retain stability and convergence for linear wave problems. We demonstrate that this approach accelerates the convergence in situations where the original parareal already converges. However, it also overcomes several known challenges: (i) it deals with nonlinear problems by incorporating methodologies from the reduced basis methods; and (ii) the traditional coarse propagator is needed only once at the very beginning of the algorithm to generate an initial reduced basis. This allows for the time step restrictions to be relaxed as compared to the coarse solver of the original parareal method. The main difference between our method and [13] lies in the reduced approximation space and the construction of reduced bases. The reduced model, playing the role of the coarse solver, is updated for each iteration while the reduced model in [13] is built only once during an initial offline process. Among other advantages, this allows the proposed method to adapt the dimension of the reduced approximation space based on the regularity of the solution, while in [13] the reduced model remains fixed and must be developed using some other approach.

The remainder of this paper is organized as follows. We first review the original parareal method in Sect. 7.2.1 and the Krylov subspace parareal method in Sect. 7.2.2. This sets the stage for Sect. 7.2.3, where we introduce the reduced basis parareal method and discuss different strategies to develop reduced models for problems with nonlinear terms. Section 7.3 offers some analysis of the stability, convergence, and complexity of the reduced basis parareal method and Sect. 7.4 demonstrates the feasibility and performance of the reduced basis parareal method through various linear and nonlinear numerical examples. We conclude the paper in Sect. 7.5.

7.2 Parareal Algorithms

To set the stage for the general discussion, let us first discuss the original and the Krylov subspace parareal methods in Sect. 7.2.1 and Sect. 7.2.2, respectively. We shall highlight issues related to stability and computational complexity to motivate the reduced basis parareal method, introduced in Sect. 7.2.3.

7.2.1 The original parareal method

Consider the following initial value problem:
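Consistent with the description that follows (a linear part \(A\) and a nonlinear part \(N\) of the operator \(L\)), the problem can be written in the generic form below. The initial state \(u_0\) and the interval \([0, T]\) are taken from the notation used later in this section; the exact layout is an assumption.

```latex
\begin{equation}
  \frac{\partial u}{\partial t} = L(u) := A u + N(u),
  \qquad t \in (0, T], \qquad u(0) = u_0 .
  \tag{7.1}
\end{equation}
```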

where \(u \in \mathbb{R}^N\) is the unknown solution, \(L\) is an operator, possibly arising from the spatial discretization of a PDE, with \(A\) being the linear part of \(L\) and \(N\) the nonlinear part.

In the following, we denote by \(F_{\delta t}\) the accurate but expensive fine time integrator, using a constant time step size \(\delta t\). Furthermore, \(G_{\Delta t}\) is the inaccurate but fast coarse time integrator using a larger time step size \(\Delta t\). Generally, it is assumed that \(\Delta t \gg \delta t\).

The original parareal method is designed to solve (7.1) in a parallel-in-time fashion to accelerate the computation. First, \([0, T]\) is decomposed into \(N_c\) coarse time intervals or elements:

Assume that

which implies that \(T = N_c N_f \delta t\). Denote by \(F_{\delta t}(u, t_{i+1}, t_i)\) the accurate numerical solution integrated from \(t_i\) to \(t_{i+1}\) using \(F_{\delta t}\) with the initial condition \(u\) and the constant time step size \(\delta t\), and similarly for \(G_{\Delta t}(u, t_{i+1}, t_i)\). Denote also by \(u_n = F_{\delta t}(u_0, T, 0)\) the numerical solution generated using only the fine integrator. With the above notation, the original parareal method is given in Algorithm 7.1.

Now assume that the k-th iterated approximation \(u_n^k\) is known. The parareal approach proceeds to the (k + 1)-th iteration as
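In the notation above, the correction step of the standard parareal iteration from the literature [16] can be sketched as follows. The index conventions are an assumption chosen to match the surrounding text.

```latex
\begin{equation*}
  u^{k+1}_{n+1}
    = G_{\Delta t}\bigl(u^{k+1}_{n}, t_{n+1}, t_n\bigr)
    + F_{\delta t}\bigl(u^{k}_{n}, t_{n+1}, t_n\bigr)
    - G_{\Delta t}\bigl(u^{k}_{n}, t_{n+1}, t_n\bigr),
  \qquad n = 0, \ldots, N_c - 1 .
\end{equation*}
```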

It is easy to see that \(F_{\delta t}(u_n^k, t_{n+1}, t_n)\) can be computed in parallel across all temporal elements. If we take the limit \(k \to \infty\) and assume that the limit of \(\{u_n^k\}\) exists, we obtain [16]:

In order to achieve a reasonable efficiency, the number of iterations, \(N_{it}\), should be much smaller than \(N_c\).
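The predictor-corrector structure described above can be sketched in a few lines of Python. This is a minimal illustration, not the chapter's Algorithm 7.1: the function names (`fine`, `coarse`, `parareal`) are hypothetical, both propagators use forward Euler, and the list comprehension marked "parallel" is executed serially here.

```python
import numpy as np

def fine(u, t0, t1, f, n_fine):
    """Fine propagator: n_fine forward Euler steps from t0 to t1."""
    dt = (t1 - t0) / n_fine
    for j in range(n_fine):
        u = u + dt * f(t0 + j * dt, u)
    return u

def coarse(u, t0, t1, f):
    """Coarse propagator: a single forward Euler step from t0 to t1."""
    return u + (t1 - t0) * f(t0, u)

def parareal(u0, T, f, n_coarse, n_fine, n_iter):
    """Return the parareal iterate at the coarse time points after n_iter corrections."""
    t = np.linspace(0.0, T, n_coarse + 1)
    # Iteration 0: serial sweep with the coarse propagator only.
    u = [np.asarray(u0, dtype=float)]
    for n in range(n_coarse):
        u.append(coarse(u[n], t[n], t[n + 1], f))
    for _ in range(n_iter):
        # Fine and old coarse values depend only on the previous iterate,
        # so this step is parallel across the coarse intervals.
        F = [fine(u[n], t[n], t[n + 1], f, n_fine) for n in range(n_coarse)]
        G_old = [coarse(u[n], t[n], t[n + 1], f) for n in range(n_coarse)]
        # Serial correction sweep.
        new = [u[0]]
        for n in range(n_coarse):
            new.append(coarse(new[n], t[n], t[n + 1], f) + F[n] - G_old[n])
        u = new
    return np.array(u)
```

After k corrections the first k coarse intervals reproduce the serial fine solution, so the iteration is only useful in practice when far fewer than \(N_c\) corrections are needed.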

To demonstrate the performance of the original parareal method, let us consider a few numerical examples, beginning with the viscous Burgers’ equation:

where \(T = 2\) and \(\nu = 10^{-1}\). A 2π-periodic boundary condition is used. The spatial discretization is a \(P_1\) discontinuous Galerkin (DG) method with 100 elements [15] and the time integrator is a first-order forward Euler method. We use the following parameters in the parareal integration

Figure 7.1 illustrates the L ∞-error of the parareal solution at T = 2 against the number of iterations. Notice that for this nonlinear problem the algorithm converges after only four iterations, illustrating the potential for an expected acceleration in a parallel environment.

As a second example, we consider the Kuramoto-Sivashinsky equation [25]:

with final time T = 40 and periodic boundary conditions.

As a spatial discretization we use a Fourier collocation method with 128 points [14] and an IMEX scheme [1] as a time integrator, treating the linear terms implicitly and the nonlinear term explicitly. The parameters in the parareal method are taken as

Figure 7.2 (left) shows the time evolution of the chaotic solution to the Kuramoto-Sivashinsky equation with a Gaussian initial condition. In Fig. 7.2 (right), we show the L ∞-error at T = 40 against the number of iterations. In this case, we take the solution computed by the fine solver as the exact solution. It is clear that the parareal solution converges, albeit at a slower rate. It should also be noted that Δt/δt = 100, indicating the potential for a substantial acceleration.

As a last and less encouraging example, we consider the 1-D advection equation

with a final time T = 10, a = 2π and a 2π-periodic boundary condition. We use a DG method of order 32 and 2 elements in space [15], a singly diagonally implicit fourth-order Runge-Kutta scheme in time (a five-stage fourth-order scheme, cf. S54b in [23]), and the parareal parameters:

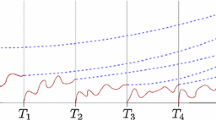

Figure 7.3 shows the L ∞-error at T = 10 against the number of iterations. The instability of the original parareal method is apparent, as has also been observed by others [3, 8, 22].

7.2.2 The Krylov Subspace Parareal Method

We notice in Algorithm 7.1 that only \(\{ u_{{f_{i + 1}}}^k\} _{i = 0}^{{N_c} - 1}\) is used in the advancement of the solution to k + 1. To fix the stability issue, [10] proposed to improve the coarse solver by reusing information computed at all previous iterations and applied this idea to linear hyperbolic problems in structural dynamics. Recently, a similar idea was successfully applied to linear hyperbolic systems [21].

The basic idea of the Krylov subspace parareal method is to project \(u_i^{k+1}\) onto a subspace spanned by all numerical solutions integrated by the fine solver at previous iterations. Denote the subspace as

The corresponding orthogonal basis set \(\{s_1, \ldots, s_r\}\) is constructed through a QR factorization.

Denote by \(\mathbb{P}^k\) the \(L^2\)-orthogonal projection onto \(S^k\). The previous coarse solver \(G_{\Delta t}\) is now replaced by \(K_{\Delta t}\) as:

For a linear problem, \(F_{\delta t}(\mathbb{P}^k u, t_{i+1}, t_i)\) can be computed efficiently as

where \(F_{\delta t}(s_j, t_{i+1}, t_i)\) are computed and stored once the \(s_j\)'s are available. Since this approach essentially produces an approximation to the fine solver, the new coarse solver is expected to be more accurate than the old coarse solver. It was shown in [11] that as the dimension of \(S^k\) increases, \({\mathbb{P}^k} \to \mathbb{I}\) and \(K_{\Delta t} \to F_{\delta t}\), thus achieving convergence. The algorithm outline is presented in Algorithm 7.2.
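The reuse of stored fine solves for a linear propagator can be illustrated as follows. This is a sketch under stated assumptions: the helper names are hypothetical, the fine propagator is any linear map passed in as a callable, and the snapshot columns are assumed linearly independent so that a plain QR factorization yields a basis of their span.

```python
import numpy as np

def build_basis(snapshots):
    """Orthonormal basis s_1..s_r of span(snapshots) via QR (columns = snapshots)."""
    Q, _ = np.linalg.qr(snapshots)
    return Q

def propagate_basis(S, fine):
    """Apply the fine propagator once to every basis vector and store the results."""
    return np.column_stack([fine(S[:, j]) for j in range(S.shape[1])])

def fine_of_projection(u, S, FS):
    """Evaluate F(P u) for a linear F without calling F again.

    P u = sum_j (s_j . u) s_j, hence F(P u) = sum_j (s_j . u) F(s_j).
    """
    coeffs = S.T @ u
    return FS @ coeffs
```

The cost of `fine_of_projection` is two matrix-vector products with N-by-r matrices, which is where the storage of both the basis and its propagated image comes from.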

To demonstrate the performance of the Krylov subspace parareal method, we use it to solve the linear advection equation, (7.10). In Fig. 7.4 (left) we show the L ∞-error at T = 10 against the number of iterations. It is clear that the Krylov subspace parareal method stabilizes the parareal solver for this problem.

Two observations are worth making. First, the Krylov subspace parareal method needs to store all the values of \(S^k\) and \(F(S^k)\). As k increases, this induces a memory requirement scaling as \(O(k N_c N)\), which may become a bottleneck as illustrated in Fig. 7.4 (right). Second, the efficiency of the coarse solver depends critically on the assumption of linearity of the operator and it is not clear how to extend this framework to nonlinear problems. These constraints appear to limit the practicality of the method.

7.2.3 The reduced basis parareal method

Let us first recall a few properties of reduced basis methods that will subsequently serve as key elements of the proposed reduced basis parareal method.

7.2.3.1 Reduced Basis Methods

We are generally interested in solving the nonlinear ODE (7.1). The dimension of this system can be very large, e.g., when the problem originates from a method-of-lines discretization of a nonlinear PDE and high accuracy requires a large number of degrees of freedom, N. It is therefore tempting to seek an approximate model that enhances the computational efficiency without significantly impacting the accuracy.

A general representation of a reduced model in matrix-form is

where the r columns of the matrix \(V_r\) span a linear space (the reduced basis) and \(\tilde u(t) \in \mathbb{R}^r\) are the coefficients of the reduced model. Projecting the ODE system (7.1) onto \(V_r\), we recover the reduced system:

Assuming that V r is orthonormal, this simplifies as

One is now left with specifying how to choose a good subspace, V r , to adequately represent the dynamic behavior of the solution and develop a strategy for how to recover the coefficients for the reduced model in an efficient manner. There are several ways to address this question, most often based on the construction of V r through snapshots of the solution.

Proper orthogonal decomposition. The proper orthogonal decomposition (POD) [5, 6] is perhaps the most widely used approach to generate a reduced basis from a collection of snapshots. In this case, we assume we have a collection of N s snapshots

where each \(u_i\) is a vector of length N; this N can be large as it reflects the number of degrees of freedom in the system. The POD basis, denoted by \(\{\phi_i\}_{i=1}^{r} \subset \mathbb{R}^N\), is chosen as the set of orthonormal vectors that solve the minimization problem:

The solution to this minimization problem is found through the singular value decomposition (SVD) of U:

where \(V \in \mathbb{R}^{N \times r}\) and \(W \in {\mathbb{R}^{{N_s} \times r}}\) are the left and right singular vectors, respectively, and V is the sought-after basis. The entries of the diagonal matrix \(\Sigma\) provide a measure of the relative energy of each of the orthogonal vectors in the basis.

Once the basis is available, we can increase the computational efficiency for solving (7.17) by precomputing \(V_r^T A V_r\) of size \(r \times r\). However, the computational complexity of the nonlinear term remains dependent on N and, hence, potentially costly.
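A POD basis with an energy-based truncation, together with the precomputed reduced operator, might be computed as in the sketch below. The function names and the precise truncation rule (relative energy of the discarded squared singular values not exceeding `tol`) are assumptions made for illustration.

```python
import numpy as np

def pod_basis(U, tol):
    """Return (V_r, sigma): leading left singular vectors of the snapshot matrix U.

    r is the smallest number of modes such that the relative energy of the
    discarded modes, sum_{j>r} sigma_j^2 / sum_j sigma_j^2, is at most tol.
    """
    V, sigma, _ = np.linalg.svd(U, full_matrices=False)
    energy = sigma**2
    # discarded[i] = relative energy lost when keeping the first i + 1 modes
    tail = np.cumsum(energy[::-1])[::-1]
    discarded = np.append(tail[1:], 0.0) / energy.sum()
    r = int(np.argmax(discarded <= tol)) + 1
    return V[:, :r], sigma

def reduced_operator(A, V_r):
    """Precompute the r-by-r matrix V_r^T A V_r used by the reduced linear model."""
    return V_r.T @ A @ V_r
```

Summing the discarded energies directly, rather than subtracting a cumulative sum from one, keeps the truncation test meaningful for tolerances close to machine precision.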

Discrete Empirical Interpolation. To address this, [7] proposed an approach, originating in previous work on empirical interpolation methods [4] but limited to the case of an existing discrete basis set. In this approach \(N(V_r \tilde u(t))\) is represented by \(\tilde N(t) \in \mathbb{R}^N\), which is subsequently approximated as

Here \(V_p = [v_1, \ldots, v_m]\) is an orthogonal POD basis set based on snapshots of \(N(t)\). To recover \(c(t)\), we seek a solution to an overdetermined system. However, rather than employing an expensive least-squares method, we extract m equations from the original set of snapshots. Denote

where \({e_{{p_1}}} = {[0, \ldots ,0,1,0, \ldots ,0]^T} \in {\mathbb{R}^N}\) (the 1 appears only in the \(p_1\)-th position of the vector). If \(P^T V_p\) is nonsingular, \(c(t)\) can be uniquely determined by

resulting in a final approximation of Ñ(t) as

The interpolation index \(p_i\) is selected iteratively by minimizing the largest magnitude of the residual \(r = u_k - V_{p,k}\, c\). The procedure, sometimes referred to as discrete empirical interpolation, is outlined in Algorithm 7.3.
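The index selection can be sketched with the widely used greedy DEIM procedure, in which each new index is placed where the residual of the current interpolant is largest in magnitude. This is an illustrative version and not necessarily identical to Algorithm 7.3; the function names are hypothetical and the columns of `Vp` are assumed linearly independent.

```python
import numpy as np

def deim_indices(Vp):
    """Greedy selection of interpolation indices p_1..p_m for the basis Vp (N x m)."""
    m = Vp.shape[1]
    p = [int(np.argmax(np.abs(Vp[:, 0])))]
    for k in range(1, m):
        # Interpolate the k-th basis vector with the first k vectors at the chosen rows.
        c = np.linalg.solve(Vp[p, :k], Vp[p, k])
        r = Vp[:, k] - Vp[:, :k] @ c      # residual vanishes at the rows already chosen
        p.append(int(np.argmax(np.abs(r))))
    return np.array(p)

def deim_approx(Vp, p, g_at_p):
    """Approximate a vector g from its entries at rows p: Vp (P^T Vp)^{-1} P^T g."""
    return Vp @ np.linalg.solve(Vp[p, :], g_at_p)
```

Only the m entries `g[p]` of the nonlinear term are needed online, which removes the dependence on N from the evaluation of the nonlinearity when it can be evaluated componentwise.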

With the above approximation, we can now express the reduced system as

Full Empirical Interpolation. Pursuing the above approach further, one is left wondering whether we can use a basis other than the computationally expensive POD basis, and whether we can choose the interpolation positions based on other guidelines. Addressing these questions leads us to propose a full empirical interpolation method.

It is well known that the original empirical interpolation method is commonly used to separate the dependence on parameters and spatial variables [4], and that the method chooses ‘optimal’ interpolation points in a certain sense. We propose to consider time as a parameter, and use the empirical interpolation to construct the reduced bases \(V_{E,k}\) of u and the reduced bases \(V_{pE,k}\) of the nonlinear term, i.e.,

The resulting reduced model can be written as

The essential difference between the models based on discrete empirical interpolation and the full empirical interpolation approach is found in the way in which one constructs the reduced basis set. In the former case, the importance of the basis elements is guided by the SVD and the relative size of the singular values, resulting in a potentially substantial cost. The latter case is based on the interpolation error and the basis is constructed in a fully greedy fashion. A detailed comparative study of the performance between the two approaches is ongoing and will be presented in a forthcoming paper.
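A greedy empirical interpolation over a set of time snapshots, with time playing the role of the parameter, might look as follows. This is a generic EIM sketch under stated assumptions (hypothetical function name, snapshots stored as columns, maximum-norm interpolation error as the stopping criterion) and is not claimed to reproduce the authors' implementation.

```python
import numpy as np

def eim(U, tol, max_basis=None):
    """Greedy EIM on the snapshot matrix U (N x N_s, one snapshot per column).

    Returns the basis Q (N x m) and the interpolation rows p (length m) such that
    every snapshot is interpolated by Q (Q[p, :])^{-1} U[p, :] to within tol in
    the maximum norm, or until max_basis functions have been selected.
    """
    N, Ns = U.shape
    max_basis = min(N, Ns) if max_basis is None else max_basis
    Q = np.zeros((N, 0))
    p = []
    R = U.astype(float)                      # residual of the current interpolant
    while Q.shape[1] < max_basis:
        errs = np.max(np.abs(R), axis=0)     # worst-case error per snapshot
        j = int(np.argmax(errs))             # worst-approximated snapshot
        if errs[j] <= tol:
            break
        i = int(np.argmax(np.abs(R[:, j])))  # new interpolation point
        Q = np.column_stack([Q, R[:, j] / R[i, j]])
        p.append(i)
        R = U - Q @ np.linalg.solve(Q[p, :], U[p, :])
    return Q, np.array(p, dtype=int)
```

No SVD is required: each step costs one pass over the residual matrix and one small triangular-structured solve, since `Q[p, :]` is lower triangular with unit diagonal by construction.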

7.2.3.2 The Reduced Basis Parareal Method

Let us now introduce the new reduced basis parareal method. Our first observation is that the first term in (7.13) can be dropped under the assumption that the projection error vanishes asymptotically. Hence, for linear problems, we can replace \(K_{\Delta t}\) by \({\hat K_{\Delta t}}\) as

This is essentially an approximation to the fine time integrator with an admissible truncation error. Keeping in mind that \(F_{\delta t}\) is an expensive operation, we seek to reduce the dimension of \(S^k\) to achieve a better efficiency. If the solution to the ODE is sufficiently regular, it is reasonable to seek an r-dimensional subspace, \(S_r^k\) (the reduced basis space), of the original space \(S^k\). Now redefine \(\mathbb{P}_r^k\) to be the orthogonal projection of u onto \(S_r^k\). Then (7.26) becomes

which is essentially an approximation to the fine time integrator using the reduced model.

Consequently, our reduced basis parareal method for linear problems is as follows:

Depending on the construction of the reduced model, we refer to it as the POD parareal method or the EIM parareal method.

Algorithm 7.4 describes the basic steps of the reduced basis parareal method for linear problems. It follows a procedure similar to Algorithm 7.2, but requires less memory for storing the bases. Notice that for linear problems, the coarse solver is needed only for initializing the algorithm. After this first step, the fine solver produces all the information needed for the reduced model, and the algorithm no longer depends on the coarse solver.
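The steps just described can be put together in a compact sketch for a linear problem. Several choices here are assumptions rather than the chapter's Algorithm 7.4: the snapshots are the coarse-point values of all iterates so far, the basis is built by POD with an energy tolerance, the fine and coarse propagators over one coarse interval are plain matrices, and the test problem (decoupled rotations with only three active blocks) is constructed so that a six-dimensional reduced space captures the solution.

```python
import numpy as np

def pod_basis(snapshots, tol):
    """POD basis keeping the fewest modes whose discarded relative energy is <= tol."""
    V, sigma, _ = np.linalg.svd(snapshots, full_matrices=False)
    energy = sigma**2
    tail = np.cumsum(energy[::-1])[::-1]
    discarded = np.append(tail[1:], 0.0) / energy.sum()
    return V[:, : int(np.argmax(discarded <= tol)) + 1]

def rb_parareal_linear(u0, fine, coarse, n_coarse, n_iter, tol=1e-12):
    """Reduced basis parareal sketch for a linear problem."""
    # The traditional coarse solver is used only for this initial sweep.
    u = [np.asarray(u0, dtype=float)]
    for i in range(n_coarse):
        u.append(coarse(u[i]))
    snapshots = list(u)
    for _ in range(n_iter):
        Fu = [fine(u[i]) for i in range(n_coarse)]               # parallel in time
        S = pod_basis(np.column_stack(snapshots), tol)           # reduced basis
        FS = np.column_stack([fine(S[:, j]) for j in range(S.shape[1])])  # parallel
        K = lambda v: FS @ (S.T @ v)                             # F applied to the projection
        new = [u[0]]
        for i in range(n_coarse):                                # serial correction sweep
            new.append(K(new[i]) + Fu[i] - K(u[i]))
        u = new
        snapshots.extend(u[1:])                                  # keep all past snapshots
    return np.array(u)

# Usage on a purely oscillatory (advection-like) linear system u' = A u.
N, n_coarse, n_fine, Dt = 60, 20, 50, 0.5
A = np.zeros((N, N))
for b in range(N // 2):
    A[2 * b, 2 * b + 1], A[2 * b + 1, 2 * b] = -(1.0 + b), 1.0 + b
I = np.eye(N)
dt = Dt / n_fine
step = np.linalg.solve(I - 0.5 * dt * A, I + 0.5 * dt * A)       # implicit midpoint step
Phi_f = np.linalg.matrix_power(step, n_fine)                     # fine propagator over Dt
Phi_c = np.linalg.inv(I - Dt * A)                                # backward Euler over Dt
u0 = np.zeros(N)
u0[[0, 2, 4]] = 1.0                                              # three active rotation blocks

serial = [u0]
for i in range(n_coarse):
    serial.append(Phi_f @ serial[i])
serial = np.array(serial)

u_rb = rb_parareal_linear(u0, lambda v: Phi_f @ v, lambda v: Phi_c @ v, n_coarse, 1)
err_rb = np.max(np.abs(u_rb - serial))
```

In this constructed case the reduced space contains the whole trajectory, so `err_rb` is expected to be at round-off level after a single correction; for general problems the attainable accuracy depends on the basis tolerance.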

For nonlinear problems, the relationship

does not generally hold, even if \(\mathbb{P}^k u \to u\). Therefore, the Krylov subspace parareal method is not applicable. Fortunately, the knowledge of the development of reduced models using empirical interpolation offers insight into dealing with nonlinear problems, as mentioned in Sect. 7.2.3.1. We construct the coarse time integrator as follows:

where \(F^r_{\delta t}\) is the reduced model constructed by POD or EIM as described in the previous section. Consequently, our reduced basis parareal method for nonlinear problems becomes

As long as there exists a suitable reduced model for the problem, we can evaluate \({\hat K_{\Delta t}}\) efficiently while maintaining an accuracy commensurate with the fine solver. The reduced basis parareal method for nonlinear problems is outlined in Algorithm 7.5.

7.3 Analysis of the Reduced Basis Parareal Method

In the following we provide some analysis of the reduced basis parareal method to understand its stability, convergence and overall computational complexity. Throughout, we assume that there exists a reduced model for the continuous problem.

7.3.1 Stability analysis

We first consider the linear case. Define the projection error:

where r is the dimension of the reduced space. We assume a projection error

and define:

It is reasonable to assume that the fine propagator is \(L^2\)-stable, i.e., there exists a nonnegative constant \(C_F\), independent of the discretization parameters, such that

Theorem 7.1 (Stability for the linear case) Under the assumption of (7.33) and (7.35), the reduced basis parareal method is stable for (7.1) with N ≡ 0, i.e., for each i and k,

where \(C_L\) is a constant depending only on \(C_{p,r}\), \(C_F\), and \(u_0\).

Proof Using the triangle inequality, linearity of the operator, and assumption (7.35), we obtain

Then, by the discrete Gronwall’s lemma [9] and (7.33), we recover

This completes the proof.
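For reference, one common elementary form of the discrete Gronwall inequality is recalled below; the version used in [9] may be stated differently, and the symbols \(a_n\), \(\alpha\), \(\beta\) are introduced here only for illustration.

```latex
% Assume a_n >= 0 and a_{n+1} <= (1 + \alpha) a_n + \beta with \alpha > 0, \beta >= 0.
\begin{equation*}
  a_n \;\le\; (1+\alpha)^n a_0 + \beta\,\frac{(1+\alpha)^n - 1}{\alpha}
      \;\le\; e^{\alpha n}\Bigl(a_0 + \frac{\beta}{\alpha}\Bigr),
  \qquad n \ge 0 .
\end{equation*}
```

The first bound follows by unrolling the recursion and summing the geometric series; the second uses \(1 + \alpha \le e^{\alpha}\).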

Note that if there exists a small integer M (indicating a compact reduced approximation space) such that

then we recover the same stability property as that of the fine solver:

For the nonlinear case, we further assume that there exists a nonnegative constant C r , independent of the discretization parameters, such that,

where q k i is the L 2-difference between the fine propagator and the reduced model using the same initial condition v at t i . As before, we assume

Theorem 7.2 (Stability for the nonlinear case) Under assumptions (7.35), (7.43) and (7.44), the reduced basis parareal method is stable for (7.1) in the sense that for each i and k

where C ⋆ = max{C F ,C r } and C N is a constant depending only on C p,r , C F , C r, and u 0.

Proof Using the triangle inequality and assumptions (7.35) and (7.43), we have

Next, by the discrete Gronwall’s lemma and (7.44), we derive

This completes the proof.

7.3.2 Convergence analysis

To show convergence for the linear case, we first assume that there exists a nonnegative constant C F , such that,

We define

and assume that

Theorem 7.3 (Convergence for the linear case) Under assumption (7.33), (7.42), (7.51), (7.53) and N ≡ 0 in (7.1), the reduced basis parareal solution converges to u i+1 for each i.

Proof Using the reduced basis parareal formula and the linearity of the operator, we obtain

By the triangle inequality and assumption (7.51), we recover

Finally by the discrete Gronwall’s lemma, (7.33) and (7.53), we obtain

which approaches zero as r increases. This completes the proof.

For the nonlinear case, we must also assume that there exists a nonnegative constant C r , such that,

where q k i and p k i represent the L 2-difference between the fine operator and the reduced solver using the same initial condition u k i and u i . As before, we assume that

Theorem 7.4 (Convergence of the nonlinear case) Under assumptions (7.42), (7.43), (7.44), (7.66) and (7.67), the reduced basis parareal solution of (7.1) converges to u i+1 for each i.

Proof Using the reduced basis parareal formula, we obtain

By the triangle inequality and assumptions (7.66) and (7.66), we have

Then, by the discrete Gronwall’s lemma, (7.44) and (7.67) we recover

which approaches zero as r increases under assumption (7.42).

For the above analysis it is worth emphasizing two points:

- The accuracy of the new parareal algorithm is \(O(\varepsilon)\), since \(C_{p,r}\) depends on \(\varepsilon\) as a measure of the quality of the reduced model. We shall confirm this point by the numerical tests in Sect. 7.4.

- Theorems 7.3 and 7.4 indicate that if there exists a good reduced approximation space for the problem, the new parareal algorithm converges in one iteration.

7.3.3 Complexity Analysis

Let us finally discuss the computational complexity of the reduced basis parareal method. Recall that the dimension of the reduced space is r and that of the fine solution is N. This is assumed to be the same for the coarse and fine solvers although this may not be a requirement in general. The compression ratio is \(R = r/N\). Following the notation of [21], \(\tau_{QR}(k)\) and \(\tau_{RB}(k)\) (representing \(\tau_{SVD}(k)\), \(\tau_{EIM}(k)\), or \(\tau_{DEIM}(k)\) in different scenarios) denote the computing times required by the corresponding operations at the k-th iteration, and \(\tau_c\) and \(\tau_f\) are the times required by the coarse and fine solvers, respectively. \(N_t = N_c N_f\) is the total number of time steps in one iteration, with \(N_c\) being the number of coarse time intervals and \(N_f\) the number of fine time steps on each coarse time interval. \(N_p\) is the number of processors.

In [21], the speedup is estimated as

In the reduced basis parareal method, \(\tau_c = R^2 \tau_f\), since the complexity of the computation of the right-hand side of the system is \(O(r^2)\). In addition, \(\tau_{QR}\) becomes \(\tau_{SVD}\) or \(\tau_{EIM}\). With this in mind, the speedup can be estimated as

Next, we examine the first two terms in the denominators of (7.74) and (7.75).

- In the first term, \(\tau_c/\tau_f\) takes the role of \(R^2\). Hence, we can achieve a comparable performance if \(R \approx \sqrt{\tau_c/\tau_f}\), i.e., if the underlying PDE solution can be represented by a reduced basis set of size \(O\left(\sqrt{\tau_c/\tau_f}\, N\right)\). Suppose that \(\sqrt{\tau_c/\tau_f} = \sqrt{1/20} \approx 0.22\). This requires that \(R < 1/4\), which is a reasonable compression ratio for many problems. In addition, it is possible to use a reduced basis approximation to achieve a better performance for cases where CFL conditions lead to restrictions for the coarse solver.

- For the second term, \(\tau_{SVD} \approx \tau_{QR} \approx O(N N_{it}^2 N_c^2)\), while \(\tau_{EIM} \approx O(r^3/2\, N_{it} N_c + r N N_{it} N_c)\). Therefore, \(\tau_{SVD}/\tau_{EIM} \approx O(2 N_{it} N_c/(R r^2))\). As \(N_c\) increases, \(\tau_{EIM}\) becomes smaller. In addition, EIM has very good parallel efficiency and requires less memory during the computation.

Also note that \(N_{it}\) would typically be different for the reduced basis parareal method and the original parareal method. If a reduced space exists, the modified algorithm usually converges within a few iterations, hence accelerating the overall convergence significantly.
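The break-even compression ratio from the first term can be checked with a few lines of arithmetic. The helper names below are hypothetical, and the cost ratio of 1/20 is the illustrative value used in the text.

```python
import math

def break_even_compression(tau_c, tau_f):
    """Compression ratio R at which R^2 equals the coarse-to-fine cost ratio."""
    return math.sqrt(tau_c / tau_f)

def max_basis_size(tau_c, tau_f, N):
    """Largest reduced dimension r = R * N that is no more expensive than the coarse solver."""
    return math.floor(break_even_compression(tau_c, tau_f) * N)

R = break_even_compression(1.0, 20.0)   # cost ratio tau_c / tau_f = 1/20
r_max = max_basis_size(1.0, 20.0, 1000) # for a fine model with N = 1000 unknowns
```

For a fine model with 1000 degrees of freedom and this cost ratio, the reduced model matches the cost of the coarse solver with a little over 200 basis functions.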

7.4 Numerical Results

In the following, we demonstrate the feasibility and efficiency of the reduced basis parareal method for both linear and nonlinear problems. We generally use the solution obtained from the fine time integrator as the exact solution.

7.4.1 The Linear Advection Equation

We begin by considering the performance of the reduced basis parareal method and illustrate that it is stable for the 1-D linear advection equation (7.10). The spatial and temporal discretizations are the same as used in Sect. 7.2 and parameters in (7.11) are used.

In Fig. 7.5 (left), we show the \(L^\infty\)-error at T = 10 against the number of iterations for the original parareal method, the POD parareal method, and the EIM parareal method. The accuracy of the fine time integrator at T = 10 is \(4 \times 10^{-13}\). The original parareal method is clearly unstable, while the other two remain stable. The very rapid convergence of the reduced basis parareal method reflects that the accuracy of the reduced model is very high for this simple test case. As we will see for more complex nonlinear problems, this behavior does not carry over to general problems unless a high-accuracy reduced model is available.

Fig. 7.5 Results obtained using the original parareal method, the POD parareal method, and the EIM parareal method for the 1-D advection equation. On the left we show the \(L^\infty\)-error at T = 10 against the number of iterations, while the right shows the number of bases used to satisfy the tolerance \(\varepsilon = 10^{-13}\) in the POD and EIM parareal methods across the iterations

In Fig. 7.5 (right), we show the number of bases used to satisfy the tolerance ε in the POD parareal method and the EIM parareal method. Here ε in the POD context is defined as the relative energy in the truncated modes and in the EIM context it is the interpolation error. In both cases, the tolerance in the basis selection using POD or EIM is set to \(10^{-13}\). We note that the EIM parareal method achieves higher accuracy but requires more memory to store the bases. This suggests that one can explore a tradeoff between accuracy and efficiency for a particular application.

Remark 7.1 It should be noted that if only snapshots from the previous iteration are used in the EIM basis construction, the scheme becomes unstable. However, when including all snapshots collected up to the previous iteration level, stability is restored.

Figure 7.6 (upper left) shows the convergence behavior of the EIM parareal algorithm with different tolerances (\(\varepsilon = 10^{-k}\), \(k = 2, 4, 6, 8, 10, 12\)). The convergence stagnates at a certain level and instability may set in after further iterations. There are two reasons for this: (1) as ε becomes small, the reduced bases may become linearly dependent, leading to a bad condition number of the related matrices that may impact stability; (2) the newly evolved reduced bases \({S_{{f_i}}}\) for the fine solution may not be within S anymore. To resolve this problem, we first perform a reorthogonalization of the reduced bases to obtain a new space \(\tilde S\) and then project the newly evolved solution \({\hat K_{\Delta t}}(u_i^{k + 1},{t_{i + 1}},{t_i})\) back to \(\tilde S\). In Fig. 7.6 (lower left) we show the convergence results following this approach. Most importantly, stability is restored. Furthermore, the dependence of the final accuracy on ε is clear. These results are consistent with Theorem 7.3, stating that the parareal solution converges to the serial solution integrated by the fine solver as long as the subspace S saturates in terms of accuracy. In practice, one can choose ε such that the accuracy of the parareal solution and the serial fine solution are comparable.
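The two-step remedy (reorthogonalize, then project back) can be sketched as below. The text does not specify which orthogonalization procedure is used; a Householder QR factorization is assumed here, and the function names are hypothetical.

```python
import numpy as np

def reorthogonalize(S):
    """Return an orthonormal basis for the columns of S (possibly ill-conditioned)."""
    Q, _ = np.linalg.qr(S)
    return Q

def project(Q, v):
    """L2-orthogonal projection of v onto the span of the orthonormal columns of Q."""
    return Q @ (Q.T @ v)
```

With an orthonormal basis the projection involves no linear solve, so the poor conditioning of the original, nearly dependent basis no longer enters the coarse update.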

Fig. 7.6 The performance of the EIM parareal method for the 1-D advection equation against the tolerance used in the design of the reduced basis. On the upper left we show the \(L^\infty\)-error at T = 10 against the number of iterations as the tolerance ε decreases and on the upper right the number of bases used to satisfy the tolerance as ε decreases, where \(\varepsilon = 10^{-k}\), \(k = 2, 4, 6, 8, 10, 12\). On the lower left and right, we show the corresponding convergence results and the number of bases with the reorthogonalization procedure of the evolved basis

7.4.2 The second order wave equation

To further evaluate the stability of the new parareal algorithm, we consider the second-order wave equation from [8]:

where T = 10 and c = 5 and a 2π-periodic boundary condition is used. The initial conditions are set as

and

and set p = 4. In the following we use a Fourier spectral discretization with 33 modes in space [14] and the velocity Verlet algorithm in time [24]. The following parameters are used in the parareal algorithm:

The tolerance for POD is set to \(10^{-11}\).
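The fine discretization used in this example (Fourier spectral in space, velocity Verlet in time) can be sketched as follows for \(u_{tt} = c^2 u_{xx}\) on a 2π-periodic domain. The function names are hypothetical and the initial data in the usage below are a simple sine wave chosen for illustration, not the initial conditions of the test case.

```python
import numpy as np

def spectral_uxx(u):
    """Second derivative of a 2*pi-periodic grid function via the FFT."""
    n = u.size
    k = np.fft.fftfreq(n, d=1.0 / n)          # integer wavenumbers 0, 1, ..., -1
    return np.real(np.fft.ifft(-(k**2) * np.fft.fft(u)))

def wave_verlet(u, v, c, dt, n_steps):
    """Advance (u, u_t) = (u, v) by n_steps velocity Verlet steps of size dt."""
    u, v = u.copy(), v.copy()
    a = c**2 * spectral_uxx(u)
    for _ in range(n_steps):
        v_half = v + 0.5 * dt * a             # half kick
        u = u + dt * v_half                   # drift
        a = c**2 * spectral_uxx(u)            # acceleration at the new position
        v = v_half + 0.5 * dt * a             # half kick
    return u, v

# Usage: standing wave sin(x) cos(c t) on 33 grid points with c = 5.
n = 33
x = 2.0 * np.pi * np.arange(n) / n
u_end, v_end = wave_verlet(np.sin(x), np.zeros(n), c=5.0, dt=1e-3, n_steps=1000)
```

The scheme is explicit, so the step size must satisfy the usual stability bound set by the largest resolved wavenumber; with 33 points and c = 5 the step size used above is well inside that bound.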

In Fig. 7.7 (left), we show the \(L^\infty\)-error at T = 10 against the number of iterations for the original parareal method and the POD parareal method. The original parareal method is clearly unstable, while the POD parareal method remains stable and converges in one iteration. This confirms our analysis: if the reduced model is accurate enough, the reduced basis parareal method should converge in one iteration. In Fig. 7.7 (right), we show the number of bases needed to satisfy the tolerance ε in the POD parareal method.

Fig. 7.7. Results obtained using the original parareal method and the POD parareal method for the 1-D second-order wave equation. On the left we show the \(L^\infty\)-error at T = 10 against the number of iterations, while the right shows the number of bases needed to satisfy the tolerance \(\varepsilon = 10^{-11}\) in the POD parareal method across the iterations.

7.4.3 Nonlinear Equations

Let us also apply the reduced basis parareal method to examples with nonlinear PDEs. We recall that the Krylov based approach is not applicable in this case.

7.4.3.1 Viscous Burgers’ Equation

We first consider the viscous Burgers’ equation (7.6), with the same spatial and temporal discretization and the same parameters as in (7.7). To build the reduced basis, we set the tolerance for POD and EIM to be \(10^{-15}\) and \(10^{-10}\), respectively.

In Fig. 7.8 (left), we show the \(L^\infty\)-error at T = 2 against the number of iterations for the original parareal method, the POD parareal method, and the EIM parareal method. Note that in this case the reduced basis parareal method performs worse than the original parareal method, because the reduced model does not adequately capture the information of the fine solver. Recall that in the nonlinear case we have to deal with two approximations: one for the state variables and one for the nonlinear term. For the POD parareal algorithm, we choose the number of reduced bases based on the tolerance for the state variable u; alternatively, we can choose the dimension of the reduced approximation space based on the tolerance for the nonlinear term. The latter approach shows better convergence behavior in Fig. 7.8 (left, parareal-podmodified). It is apparent that the quality of the reduced model directly impacts the convergence.

We emphasize that although the reduced basis parareal method converges more slowly than the original parareal method, it is less expensive, as discussed in Sect. 7.2.3.1.

7.4.3.2 Kuramoto-Sivashinsky Equation

Next we consider the Kuramoto-Sivashinsky equation (7.8). The same spatial and temporal discretization and the same parameters as in (7.9) are used. To build the reduced basis, we set the tolerance for POD and EIM to be \(10^{-13}\) and \(10^{-8}\), respectively.

In Fig. 7.9 we show the \(L^\infty\)-error at T = 40 against the number of iterations for the original parareal method, the POD parareal method, the modified POD parareal method, and the EIM parareal method. It is clear that the reduced basis parareal method converges faster than the original parareal method. This is likely caused by the solution of the problem being smooth enough to ensure that there exists a compact reduced model. Moreover, to meet the prescribed tolerances, the number of degrees of freedom in the reduced basis parareal methods is roughly one-third that of the original parareal method.

Fig. 7.9. We compare the performance of the original parareal method, the POD parareal method, and the EIM parareal method for the 1-D Kuramoto-Sivashinsky equation. On the left we show the \(L^\infty\)-error at T = 40 against the number of iterations, while the right shows the number of bases used against the number of iterations.

7.4.3.3 Allen-Cahn Equation: Nonlinear Source

As a third nonlinear example we consider the 1-D Allen-Cahn equation:

where T = 2 and \(v = 2, 1, 10^{-1}, 10^{-2}\). A periodic boundary condition is assumed. We use a \(P^1\) DG method with 100 elements in space [15] and a forward Euler scheme in time. The following parameters are used in the parareal algorithm:

We set the tolerance for POD and EIM to be \(10^{-12}\) and \(10^{-8}\), respectively.

In Fig. 7.10 (left), we show the \(L^\infty\)-error at T = 2 against the number of iterations for the POD parareal method for different values of v. It is clear that for larger values of v, the solution converges faster and fewer elements in the reduced basis are needed. This is expected since a larger v indicates a smoother and more localized solution, which is presumed to allow for an efficient representation in a lower-dimensional space. Similar results are obtained by an EIM-based parareal approach and are not reproduced here.

7.4.3.4 KdV Equation: Nonlinear Flux

As a last example we consider the KdV equation (taken from [26]):

where T = 2 and \(v = 10^{-3}\), and we assume a periodic boundary condition. The equation conserves energy, much like the linear wave equation, but the nonlinearity induces a more complex behavior with the generation of propagating waves. In the parareal algorithm we use:

We use a first-order local discontinuous Galerkin (LDG) method with 100 elements in space [15, 26] and an IMEX scheme in time [1], with the linear terms treated implicitly and the nonlinear term explicitly. We set the tolerance for POD and EIM to be \(10^{-13}\) and \(10^{-8}\), respectively.
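The implicit-explicit splitting can be illustrated with the simplest member of the IMEX family, a first-order forward-backward Euler step for \(u_t = Lu + N(u)\): \((I - \Delta t\, L)\,u^{n+1} = u^n + \Delta t\, N(u^n)\). This is only a sketch under assumptions: the particular IMEX scheme used in the chapter is not specified here, and the test problem below (\(u' = -u + u^2\), with a known exact solution) is hypothetical and unrelated to the LDG discretization of the KdV equation.

```python
import numpy as np

def imex_euler(u0, L, nonlinear, dt, n_steps):
    """First-order IMEX Euler for u' = L u + nonlinear(u).

    The linear term is treated implicitly (backward Euler) and the
    nonlinear term explicitly (forward Euler).
    """
    A = np.eye(len(u0)) - dt * L       # implicit operator, fixed over time
    u = np.array(u0, dtype=float)
    for _ in range(n_steps):
        u = np.linalg.solve(A, u + dt * nonlinear(u))
    return u

# Hypothetical test problem: u' = -u + u^2 componentwise with u(0) = 1/2,
# whose exact solution is u(t) = 1 / (1 + exp(t)).
L = -np.eye(3)
u_final = imex_euler(np.full(3, 0.5), L, lambda u: u ** 2,
                     dt=1e-3, n_steps=1000)
exact = 1.0 / (1.0 + np.exp(1.0))
imex_error = np.max(np.abs(u_final - exact))
```

Treating the stiff linear part implicitly removes its time-step restriction, while the explicit nonlinear part avoids a nonlinear solve at each step.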

In Fig. 7.11 (left) we show the \(L^\infty\)-error at T = 2 against the number of iterations for the original parareal method, the POD parareal method, and the EIM parareal method. While the POD parareal method does not work well in this case, the EIM parareal method shows remarkable performance, i.e., it converges much faster than the original parareal method. Note that even if the tolerance for the POD is smaller than that of the EIM, this does not guarantee that the reduced model error of the POD approach is smaller. There are two reasons: 1) the tolerance has a different meaning in the context of the POD and the EIM; 2) in the convergence proof of (7.71), the constants \(C_r\), \(C_{p,r}\) depend on the details of the reduced approximation and the dimension of the reduced approximation space, which impact the final approximation error.
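To illustrate how an interpolation-based approximation of the nonlinear term works, the sketch below implements the greedy point selection of the discrete empirical interpolation method of [7]. It is a stand-in under assumptions: the chapter's EIM construction and its tolerance-based stopping rule may differ, and the function names `deim_indices` and `deim_approx` as well as the random test basis are hypothetical.

```python
import numpy as np

def deim_indices(U):
    """Greedy DEIM interpolation indices for the columns of U (n x m)."""
    m = U.shape[1]
    idx = [int(np.argmax(np.abs(U[:, 0])))]
    for j in range(1, m):
        # Interpolate column j with the previous columns at the chosen points
        coeffs = np.linalg.solve(U[idx, :j], U[idx, j])
        residual = U[:, j] - U[:, :j] @ coeffs
        # The next point is where the interpolation error is largest
        idx.append(int(np.argmax(np.abs(residual))))
    return np.array(idx)

def deim_approx(U, idx, f_at_idx):
    """Reconstruct a vector from its values at the interpolation points."""
    return U @ np.linalg.solve(U[idx, :], f_at_idx)

# Hypothetical basis for the nonlinear term: 5 orthonormal vectors in R^40.
rng = np.random.default_rng(1)
U, _ = np.linalg.qr(rng.standard_normal((40, 5)))
idx = deim_indices(U)

# A vector in span(U) is recovered from only len(idx) of its entries.
f = U @ rng.standard_normal(5)
f_rec = deim_approx(U, idx, f[idx])
```

The nonlinear term then only needs to be evaluated at the selected indices, which is what makes the reduced model inexpensive. The interpolation error for vectors outside the span of the basis is governed by different quantities than the POD truncation error, in line with the remark above that the two tolerances are not directly comparable.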

7.5 Conclusions

In this paper, we propose an approach to produce and use a reduced basis method to replace the coarse solver in the parareal algorithm. We demonstrate that, compared with the original parareal method, this new reduced basis parareal method has improved stability characteristics and efficiency, provided that the solution can be represented well by a reduced model. The analysis of the method is confirmed by the computational results, e.g., the accuracy of the parareal method is determined by the accuracy of the fine solver and of the reduced model used to replace the coarse solver. Unlike the Krylov subspace parareal method, this approach can be extended to include both linear and nonlinear problems, while requiring less storage and computing resources. The robustness and versatility of the method have been demonstrated through a number of different problems, setting the stage for the evaluation on more complex problems.

Acknowledgements The authors acknowledge partial support by OSD/AFOSR FA9550-09-1-0613 and AFOSR FA9550-12-1-0463.

References

1. Ascher, U.M., Ruuth, S.J., Wetton, B.T.R.: Implicit-explicit methods for time-dependent partial differential equations. SIAM J. Numer. Anal. 32(3), 797–823 (1995)

2. Baffico, L., Bernard, S., Maday, Y., Turinici, G., Zérah, G.: Parallel-in-time molecular-dynamics simulations. Physical Review E 66(5) (2002)

3. Bal, G.: On the Convergence and the Stability of the Parareal Algorithm to Solve Partial Differential Equations. In: Domain decomposition methods in science and engineering, pp. 425–432. Lecture Notes in Computational Science and Engineering, Vol. 40. Springer-Verlag, Berlin Heidelberg (2005)

4. Barrault, M., Maday, Y., Nguyen, N., Patera, A.: An empirical interpolation method: application to efficient reduced-basis discretization of partial differential equations. Comptes Rendus Mathematique 339(9), 667 (2004)

5. Cavar, D., Meyer, K.E.: LES of turbulent jet in cross flow: Part 2 POD analysis and identification of coherent structures. Inter. J. Heat Fluid Flow 36, 35–46 (2012)

6. Chatterjee, A.: An introduction to the proper orthogonal decomposition. Current Science-Bangalore 78(7), 808 (2000)

7. Chaturantabut, S., Sorensen, D.: Nonlinear model reduction via discrete empirical interpolation. SIAM Journal on Scientific Computing 32(5), 2737 (2010)

8. Dai, X., Maday, Y.: Stable parareal in time method for first and second order hyperbolic system. arXiv preprint arXiv:1201.1064 (2012)

9. Emmerich, E.: Discrete versions of Gronwall’s lemma and their application to the numerical analysis of parabolic problems, 1st ed. TU, Fachbereich 3, Berlin (1999)

10. Farhat, C., Cortial, J., Dastillung, C., Bavestrello, H.: Time-parallel implicit integrators for the near-real-time prediction of linear structural dynamic responses. International journal for numerical methods in engineering 67(5), 697 (2006)

11. Gander, M., Petcu, M.: Analysis of a Krylov subspace enhanced parareal algorithm for linear problems. In: ESAIM: Proceedings, vol. 25, pp. 114–129 (2008)

12. Gander, M., Vandewalle, S.: Analysis of the parareal time-parallel time-integration method. SIAM Journal on Scientific Computing 29(2), 556 (2007)

13. He, L.: The reduced basis technique as a coarse solver for parareal in time simulations. J. Comput. Math 28, 676 (2010)

14. Hesthaven, J.S., Gottlieb, S., Gottlieb, D.: Spectral Methods for Time-Dependent Problems. Cambridge University Press, Cambridge, UK (2007)

15. Hesthaven, J.S., Warburton, T.: Nodal Discontinuous Galerkin Methods: Algorithms, Analysis, and Applications. Springer-Verlag, New York (2008)

16. Lions, J., Maday, Y., Turinici, G.: A “parareal” in time discretization of PDE’s. Comptes Rendus de l’Academie des Sciences Series I Mathematics 332(7), 661 (2001)

17. Maday, Y., Turinici, G.: Parallel in time algorithms for quantum control: Parareal time discretization scheme. International journal of quantum chemistry 93(3), 223 (2003)

18. Maday, Y.: Parareal in time algorithm for kinetic systems based on model reduction. High-dimensional partial differential equations in science and engineering 41, 183

19. Nielsen, A.S.: Feasibility study of the parareal algorithm. MSc thesis, Technical University of Denmark (2012)

20. Rozza, G., Huynh, D., Patera, A.T.: Reduced basis approximation and a posteriori error estimation for affinely parametrized elliptic coercive partial differential equations. Archives of Computational Methods in Engineering 15(3), 229 (2008)

21. Ruprecht, D., Krause, R.: Explicit parallel-in-time integration of a linear acoustic-advection system. Computers & Fluids 59, 72 (2012)

22. Staff, G., Rønquist, E.: Stability of the parareal algorithm. Domain decomposition methods in science and engineering pp. 449–456 (2005)

23. Skvortsov, L.M.: Diagonally implicit Runge-Kutta methods for stiff problems. Computational Mathematics and Mathematical Physics 46(12), 2110 (2006). DOI 10.1134/S0965542506120098. http://www.springerlink.com/index/10.1134/S0965542506120098

24. Verlet, L.: Computer “experiments” on classical fluids. I. Thermodynamical properties of Lennard-Jones molecules. Physical Review 159(1), 98 (1967)

25. Xu, Y., Shu, C.: Local discontinuous Galerkin methods for the Kuramoto-Sivashinsky equations and the Ito-type coupled KdV equations. Comp. Methods Appl. Mech. Engin. 195(25), 3430–3447 (2006)

26. Yan, J., Shu, C.: A local discontinuous Galerkin method for KdV type equations. SIAM J. Num. Anal. 40(2), 769–791 (2002)

© 2014 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Chen, F., Hesthaven, J.S., Zhu, X. (2014). On the Use of Reduced Basis Methods to Accelerate and Stabilize the Parareal Method. In: Quarteroni, A., Rozza, G. (eds) Reduced Order Methods for Modeling and Computational Reduction. MS&A - Modeling, Simulation and Applications, vol 9. Springer, Cham. https://doi.org/10.1007/978-3-319-02090-7_7

DOI: https://doi.org/10.1007/978-3-319-02090-7_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-02089-1

Online ISBN: 978-3-319-02090-7

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)