Abstract

In this case study, we compare two methods for model reduction of parametrized systems, namely, Reduced-Basis and Loewner rational interpolation.

While having the same goal of constructing reduced-order models for large-scale parameter-dependent systems, the two methods follow fundamentally different approaches. On the one hand, the well-known Reduced-Basis method takes a time-domain approach, using offline snapshots of the full-order system combined with a rigorous error bound. On the other hand, the recently introduced Loewner matrix framework takes a frequency-domain approach that constructs rational interpolants of transfer function measurements, and has the flexibility of allowing different reduced orders for each of the frequency and parameter variables.

We apply the two methods to a parametrized partial differential equation modeling the transient temperature evolution near the surface of a cylinder immersed in fluid. Then, we compare the resulting reduced-order models with the full-order finite element system by running both time- and frequency-domain simulations.

Keywords

- Loewner Matrix

- Full-order System

- Reduced Order Model

- Reduced Basis Approach

- Transfer Function Measurements

2.1 Introduction

The growing need for highly accurate modeling of physical phenomena often leads to large-scale dynamical systems. For example, accurate simulations involving partial differential equations require taking fine spatial discretizations that, in turn, lead to dynamical systems of large dimensions. Hence, high accuracy comes at a steep price. Simulating such large-scale systems is a prohibitively expensive task that requires long simulation times and large data storage.

Model reduction seeks to overcome these obstacles by constructing models of low dimension that have short simulation times, require low data storage, but still accurately capture the behavior of the large-scale system.

In the case of systems that do not depend on parameters, reduced-order models can be obtained using an extensive array of model reduction methods [1]. For instance, we can follow SVD-based approaches such as the proper orthogonal decomposition (POD) [27] for non-linear systems and Balanced Truncation [8] for linear systems. Alternatively, we can follow rational interpolation approaches such as (iterative) Rational Krylov [9, 11]. These methods are well understood and known to give accurate reduced-order models in various practical applications [1,5]. However, in the case of systems that depend on parameters, there is a limited choice of available model reduction methods. The main obstacle is the fact that, in the presence of parameters, approaches like Balanced Truncation or iterative Rational Krylov are difficult to generalize.

Nevertheless, in recent years, a number of efficient methods have emerged to form the so-called Reduced-Basis framework for parametrized model reduction [13, 14, 18, 22–24]. Reduced-Basis methods extend the POD approach to the case of parametrized systems by relying on an offline space that contains snapshots of state trajectories of the full-order system. An error bound is used to iteratively enrich this space and extract a reduced basis that yields accurate reduced-order models.

More recently, the rational interpolation approach has also been generalized to the case of parametrized systems [3]. Here, we apply this recent approach to construct reduced-order models that interpolate transfer function measurements of the full-order system. The key of the rational interpolation approach is the Loewner matrix, which allows the flexibility of choosing different reduced orders for each of the frequency and parameter variables. The reduced-order models are efficiently computed using a rational barycentric formula together with the null space of a generalized two-variable Loewner matrix.

In this case study, we compare the Reduced-Basis approach and the Loewner matrix approach, i.e., we compare a time-domain, POD-based method with a frequency-domain, rational interpolation method. In Sect. 2.2, we review the two methods, showcasing their common traits and differences. Then, in Sect. 2.3, we present a numerical example involving a parametrized partial differential equation modeling the transient temperature evolution near the surface of a cylinder immersed in fluid. After applying the Reduced-Basis and Loewner frameworks, we compare the resulting reduced-order models in both time- and frequency-domain simulations.

2.2 Parametrized Model Reduction

We begin with a short introduction to model reduction of parametrized systems, followed by an overview of the two reduction methods compared in this study.

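We define a parametrized linear dynamical system of order n in terms of state-space equations that depend on parameters \(p \in \mathbb{R}^d\):

$$E(p)\,\dot x(t) = A(p)\,x(t) + B(p)\,u(t),\qquad y(t) = C(p)\,x(t) + D(p)\,u(t),$$(2.1)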

where \(x(t) \in \mathbb{R}^n\) denotes the system’s state, \(u(t) \in \mathbb{R}\) the input, \(y(t) \in \mathbb{R}\) the output, and \(E(p), A(p) \in \mathbb{R}^{n \times n}\), \(B(p), C^T(p) \in \mathbb{R}^n\), \(D(p) \in \mathbb{R}\) are the parameter-dependent system matrices. Notice that the system’s state x(t) and output y(t) also depend on the parameters p as the system evolves in time; however, for notational simplicity, we only depict their dependence on time t.

Model order reduction methods seek models of order k

with \(\hat E(p), \hat A(p) \in \mathbb{R}^{k \times k}\), \(\hat B(p), \hat C^T(p) \in \mathbb{R}^k\), such that

- the new state \(\hat x(t)\) has reduced dimension k ≪ n;

- the reduced-order model (2.2) accurately captures the behavior of the full-order system (2.1), by introducing a small time-domain approximation error ∣y(t) − ŷ(t)∣, or a small frequency-domain approximation error ∣H(s,p) − Ĥ(s,p)∣, where

$$H\left( {s,p} \right) = C\left( p \right){\left( {sE\left( p \right) - A\left( p \right)} \right)^{ - 1}}B\left( p \right) + D\left( p \right)$$(2.3)

denotes the system’s transfer function.

We now review the two methods that approach the model reduction problem from different perspectives, but, as we shall ultimately see, both lead to accurate reduced-order models.

2.2.1 Reduced-Basis Approach

Since their introduction in [18, 23], Reduced-Basis methods have become a reliable tool for obtaining accurate parametrized reduced-order models. Here, we summarize the Reduced-Basis approach along the lines of the presentation given in [14].

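Reduced-Basis methods construct the reduced state \(\hat x(t)\) by means of a judiciously chosen Petrov-Galerkin projection \(VW^T\). The reduced-order model (2.2) is obtained by projecting the system matrices:

$$\hat E(p) = W^T E(p) V,\quad \hat A(p) = W^T A(p) V,\quad \hat B(p) = W^T B(p),\quad \hat C(p) = C(p) V,$$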

with initial conditions \(\hat x(0) = {W^T}x(0),\) and the reduced state defined as

However, before discussing how to choose the projection \(VW^T\) in a Reduced-Basis setting, we outline an error analysis [14] that is valid for any general projection with \(W^T V = I_k\). Consider the error introduced by the projection framework when approximating the full-order state:

Next, we derive a bound for this error that can be efficiently computed for different values of the parameters p and time t. Towards this end, we define the residual vector

that depends only on the reduced state and the input, and satisfies by construction the orthogonality condition \(W^T R(t, p) = 0\).

Then, it is easily checked that the error satisfies the following evolution equation

In most practical applications, the matrix E(p) is invertible for all parameter values inside a domain of interest, and, therefore, we can define \(\tilde A(p) = E(p)^{-1} A(p)\) and \(\tilde R(t,p) = E(p)^{-1} R(t,p)\) to obtain

which has the solution

Then, it immediately follows that the output error can be bounded by

and, assuming that we can bound the matrix exponential of the full-order system \(\|C e^{t\tilde A(p)}\| \le C_1(p)\), the error bound becomes

Since, for fixed p, the residual \(\tilde R(t,p)\) depends on the reduced state \(\hat x(t)\) and not on the original state x(t), the bound can be efficiently evaluated at different values of time t, by using numerical quadrature [16] to compute the integral.
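Written out, the chain of steps in this error analysis reads as follows. This is a sketch reconstructed from the surrounding definitions, not a transcription of the original displays. The symbol \(\delta(t,p)\) for the state error, the notation \(\exp(\cdot)\) for the matrix exponential, and the assumption that the output error equals \(C(p)\,\delta(t,p)\) are choices made here for illustration.

$$
\begin{aligned}
\delta(t,p) &= x(t) - V\hat x(t),\\
R(t,p) &= A(p)V\hat x(t) + B(p)u(t) - E(p)V\dot{\hat x}(t),\\
E(p)\,\dot\delta(t,p) &= A(p)\,\delta(t,p) + R(t,p),\\
\dot\delta(t,p) &= \tilde A(p)\,\delta(t,p) + \tilde R(t,p),\\
\delta(t,p) &= \exp\!\big(t\tilde A(p)\big)\,\delta(0,p) + \int_0^t \exp\!\big((t-\tau)\tilde A(p)\big)\,\tilde R(\tau,p)\,d\tau,\\
|y(t)-\hat y(t)| &\le C_1(p)\left(\|\delta(0,p)\| + \int_0^t \|\tilde R(\tau,p)\|\,d\tau\right).
\end{aligned}
$$

The third line follows by subtracting \(E(p)V\dot{\hat x}(t)\) from the full-order state equation and adding and subtracting \(A(p)V\hat x(t)\). The last line uses the assumed bound \(\|C\exp(t\tilde A(p))\| \le C_1(p)\) for every t in the simulation interval.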

In practical applications, the bound given in (2.4) can be improved by using a norm \(\|\cdot\|_G\) tailored for the specific application, namely, \(\|\cdot\|_G\) is the vector norm induced by a problem-specific symmetric positive definite matrix G, \(\|z\|_G^2 = z^T G z\). In addition, the bound can be further improved by considering the so-called dual problem. For details concerning the dual problem, such as the additional computational cost involved, we direct the reader to the discussion given in [12, 22].

The error bound shown in (2.4), or its tighter dual, represents a key result for the offline stage of Reduced-Basis methods. In this stage, we compute the most computationally intensive quantities needed in the Reduced-Basis approach, and this stage may take a long time to complete. Nevertheless, the benefits of the offline computational effort become clear in the online stage, when we perform fast simulations of the reduced-order model, with simulation times independent of the full order n.

In the offline stage, we compute the right-hand term \(C_1(p)\) in (2.4), i.e., we bound the matrix exponential of the full-order system (2.1). In a large-scale setting, this task has its own well-known challenges, and it often requires great computational effort [20, 21]. Then, we compute the so-called offline space

which is a collection of full-order state snapshots obtained for a user-selected time grid \(t_i\), i = 1 : N, and parameter grid \(p_j\), j = 1 : M. Computing the offline space requires solving the large-scale equations (2.1), leading to significant computational effort. In practice, the snapshots are obtained by discretizing the time t and then employing an Euler scheme [16].
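The snapshot computation described above can be sketched in a few lines. This is a minimal illustration, assuming a backward (implicit) Euler step, a uniform time grid, and dense NumPy matrices; the text only states that an Euler scheme is used. The function names `euler_snapshots` and `offline_space` and the callable `A_of_p` are hypothetical.

```python
import numpy as np

def euler_snapshots(E, A, B, u, t_grid, x0):
    """Backward-Euler snapshots of E x'(t) = A x(t) + B u(t) on a uniform time grid."""
    dt = t_grid[1] - t_grid[0]
    lhs = E - dt * A                      # constant for a fixed parameter value
    x = np.array(x0, dtype=float)
    snaps = [x.copy()]
    for t_next in t_grid[1:]:
        # Solve (E - dt*A) x_{k+1} = E x_k + dt * B u(t_{k+1})
        x = np.linalg.solve(lhs, E @ x + dt * B * u(t_next))
        snaps.append(x.copy())
    return np.column_stack(snaps)         # n x N block of snapshots

def offline_space(E, A_of_p, B, u, t_grid, p_grid, x0):
    """Place the snapshot blocks for all parameter samples side by side."""
    return np.hstack([euler_snapshots(E, A_of_p(p), B, u, t_grid, x0)
                      for p in p_grid])
```

For a state of dimension n, a time grid with N points and M parameter samples, the result has n rows and N·M columns. In a large-scale setting the dense solve would normally be replaced by a sparse factorization that is reused across time steps.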

The next step in the offline stage is to extract a reduced basis V from the offline space X, i.e., to compute the projection matrix \(V \in \mathbb{R}^{n \times k}\) such that column span V ⊂ column span X. In short, there are various ways of choosing an appropriate V, such as using a combination of POD, greedy algorithms and adaptive approaches [12, 13, 22, 24]. The main idea behind these iterative approaches is to start with an initial reduced basis \(V = V_0\), then evaluate the error bound (2.4) and search for additional basis components \(V_1\) to obtain an enriched reduced basis \(V = [V_0, V_1]\) that in turn gives a new lower error bound. In this case study, the Reduced-Basis model shown in Sect. 2.3 is obtained using the greedy scheme presented in [22].
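As a simple point of comparison for the basis extraction step, the sketch below uses a plain POD (truncated SVD) of the snapshot matrix. It is not the greedy scheme of [22]. It assumes a Galerkin projection with W = V, and the function names `pod_basis` and `galerkin_reduce` are hypothetical.

```python
import numpy as np

def pod_basis(X, k):
    """Orthonormal n x k basis of the dominant left singular subspace of X."""
    U, sigma, _ = np.linalg.svd(X, full_matrices=False)
    return U[:, :k], sigma

def galerkin_reduce(E, A, B, C, V):
    """Project with W = V, so that W^T V = I_k when V has orthonormal columns."""
    W = V
    return W.T @ E @ V, W.T @ A @ V, W.T @ B, C @ V
```

When k does not exceed the rank of X, the columns of V lie in the column span of X, as required above. The decay of `sigma` gives a first indication of how large k needs to be.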

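Once the reduced basis V is computed, we can obtain the reduced-order model. It is assumed that the parameter dependence of the full-order system (2.1) is separable into sums of constant matrices weighted by scalar functions of the parameters:

$$E(p) = \sum_i \varepsilon_i(p)\,E_i,\quad A(p) = \sum_i \alpha_i(p)\,A_i,\quad B(p) = \sum_i \beta_i(p)\,B_i,\quad C(p) = \sum_i \gamma_i(p)\,C_i.$$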

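Then, the reduced-order parameter-dependent matrices result from projecting the constant matrices \({\hat E_i} = {W^T}{E_i}V,\quad {\hat A_i} = {W^T}{A_i}V,\quad {\hat B_i} = {W^T}{B_i},\quad {\hat C_i} = {C_i}V,\) namely

$$\hat E(p) = \sum_i \varepsilon_i(p)\,\hat E_i,\quad \hat A(p) = \sum_i \alpha_i(p)\,\hat A_i,\quad \hat B(p) = \sum_i \beta_i(p)\,\hat B_i,\quad \hat C(p) = \sum_i \gamma_i(p)\,\hat C_i.$$(2.6)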

The final step in Reduced-Basis methods is the online stage, where the pre-computed reduced-order model from the offline stage is used for fast simulations of the output ŷ(t) for different input signals u(t) and parameter values p. The reduced matrices (2.6) can be evaluated for different p in real time, since this operation only requires evaluation of scalar functions εi(p), αi(p), βi(p) and γi(p). Then, the output ŷ(t) is computed using an Euler scheme involving only the reduced-order matrices, resulting in an overall computational complexity of the online phase that is independent of the full-order n.
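The offline/online split described above can be illustrated for the A matrix alone. The sketch assumes the separable form A(p) = Σ α_i(p) A_i with constant matrices A_i. The helper names `offline_project` and `online_assemble` are hypothetical and are not taken from the software package of [22].

```python
import numpy as np

def offline_project(A_terms, V, W):
    """Offline: project every constant matrix A_i once (cost depends on n)."""
    return [W.T @ A_i @ V for A_i in A_terms]

def online_assemble(alpha_funcs, A_hat_terms, p):
    """Online: A_hat(p) = sum_i alpha_i(p) * A_hat_i (cost depends only on k)."""
    return sum(a(p) * A_hat_i for a, A_hat_i in zip(alpha_funcs, A_hat_terms))
```

The same pattern applies to E(p), B(p) and C(p). Because the online step touches only k × k matrices and scalar functions, a new parameter value never requires access to the full-order matrices.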

2.2.2 Loewner Matrix Approach

Next, we construct parametrized reduced-order models using a two-variable rational interpolation approach. The discussion summarizes the recent results in [3], where a Loewner matrix framework was introduced for constructing rational interpolants for frequency-domain measurements of systems with one parameter p.

The Loewner approach starts from measurements of the full-order parametrized transfer function (2.3):

$$\varphi_{i,j} = H(s_i, p_j),$$(2.7)

i = 1 : N, j = 1 : M, and constructs a two-variable rational function Ĥ(s, p) that interpolates these measurements, \(\hat H(s_i, p_j) = \varphi_{i,j}\).

In the Loewner framework, the order of the reduced model Ĥ(s, p) is a pair (k, q), where k is the reduced order in the frequency variable s, and q is the reduced order in the parameter variable p, with k not necessarily equal to q. Therefore, we can choose different orders for s and p, resulting in greater flexibility and a better understanding of the structure of the underlying interpolant Ĥ(s, p).

The first step consists in identifying the reduced order (k,q) directly from the given measurements \(\varphi_{i,j}\), by computing the ranks of appropriate one-variable Loewner matrices [2, 19]. Hence, consider the pairs \((x_i, f_i)\), i = 1 : T, which we partition in any two disjoint sets

such that r + ℓ = T. Then, the one-variable Loewner matrix \(\mathbb{L}\) associated with \((x_i, f_i)\) and the partitioning in (2.8) is defined as

Using this definition, we introduce the following one-variable Loewner matrices associated with the two-variable measurements given in (2.7):

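where the index \(p_j\) (\(s_i\)) indicates that \(\mathbb{L}_{p_j}\) (\(\mathbb{L}_{s_i}\)) corresponds to measurements given by constant \(p = p_j\) (\(s = s_i\)). Then, the ranks of these Loewner matrices give the order (k,q) of the underlying interpolant Ĥ(s, p):

$$k = \max_j \operatorname{rank} \mathbb{L}_{p_j},\qquad q = \max_i \operatorname{rank} \mathbb{L}_{s_i}.$$(2.10)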

Next, we construct the rational interpolant Ĥ(s, p) of order (k,q) by computing the null space of an appropriate two-variable Loewner matrix \(\mathbb{L}_{2D}\). Towards this end, we partition the frequency and parameter grids (2.7) into any disjoint sets

using the following notation for the corresponding partitioned measurements

namely \(\Phi_{11}\) contains \(w_{i,j} := H(\lambda_i, \pi_j)\) for i = 1 : n′, j = 1 : m′, while \(\Phi_{22}\) contains \(v_{i,j} := H(\mu_i, \nu_j)\), for i = 1 : (N − n′), j = 1 : (M − m′).

Then, from this partitioning, we define the two-variable Loewner matrix

of dimension (N − n′)(M − m′) × (n′m′) and with indices \(e,\hat e,f,\hat f\) having the following Kronecker structure

for \(1_{n'} \in \mathbb{R}^{1 \times n'}\) a row vector with all entries equal to 1.

The main feature of the Loewner matrix \({\mathbb{L}_{2D}}\) is that its rank encodes the order (k, q) of the underlying rational interpolant Ĥ(s, p), and, furthermore, Ĥ(s, p) can be easily constructed from its null space.

Theorem 2.1 (Two-variable rational interpolation [3]) If (k,q) is given by (2.10) and n′ > k, m′ > q, then the two-variable Loewner matrix (2.13) is singular, with

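In addition, if we set (n′, m′) = (k + 1, q + 1) in (2.11), then the rational function Ĥ(s, p) of order (k, q) that interpolates all given measurements \(\varphi_{i,j}\) has the form

$$\hat H(s,p) = \frac{\displaystyle\sum_{i=1}^{k+1}\sum_{j=1}^{q+1} \frac{c_{i,j}\,w_{i,j}}{(s-\lambda_i)(p-\pi_j)}}{\displaystyle\sum_{i=1}^{k+1}\sum_{j=1}^{q+1} \frac{c_{i,j}}{(s-\lambda_i)(p-\pi_j)}},$$(2.14)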

with \(c = [c_{1,1}, c_{1,2}, \ldots, c_{2,1}, c_{2,2}, \ldots, c_{k+1,q+1}]\) in the null space of \(\mathbb{L}_{2D}\), i.e., \(\mathbb{L}_{2D}c = 0\).

Notice that Ĥ(s, p) is given in terms of a rational barycentric formula that depends on the two variables s and p, and is, in fact, a generalization of the one-variable rational barycentric formula [2, 4]. It is easily checked that if we multiply both the numerator and denominator in (2.14) with \(\prod_{i=1}^{k+1} \prod_{j=1}^{q+1} (s - \lambda_i)(p - \pi_j)\), then, after simplification, we obtain two polynomials having the highest degree in s equal to k and the highest degree in p equal to q. Hence, Ĥ(s, p) is a two-variable rational function of order (k, q).

The barycentric formula allows us to write down the interpolant in terms of the two-variable Lagrange basis \((s - \lambda_i)(p - \pi_j)\), i = 1 : n′, j = 1 : m′, which is formed directly from the partitioned frequency and parameter grids in (2.11). The Kronecker structure of the Lagrange basis dictates the Kronecker structure of the denominator in each entry of \({\mathbb{L}_{2D}}\). As a result, the rank of \({\mathbb{L}_{2D}}\) is not fixed, but it depends on the order (k,q) of the underlying interpolant and on the dimensions (n′,m′) of the partitioning. To obtain Ĥ(s, p) of order (k,q), we choose (n′,m′) = (k + 1, q + 1).

Furthermore, the barycentric formula in (2.14) cannot be directly evaluated at the grid points \(\lambda_i\) and \(\pi_j\) as it requires dividing by zero. However, just like in the case of evaluating a one-variable barycentric formula [4], we use the convention that \(\hat H(\lambda_i, \pi_j) = c_{i,j} w_{i,j} / c_{i,j} = w_{i,j}\). Therefore, Ĥ(s, p) interpolates the measurements \(w_{i,j}\) contained in \(\Phi_{11}\) by construction. Then, we force interpolation of the remaining measurements \(\Phi_{12}, \Phi_{21}, \Phi_{22}\) by computing the barycentric coefficients c such that \({\mathbb{L}_{2D}}c = 0\).
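The construction can be tried on a toy function of order (1, 1). The sketch below is an illustration under stated assumptions. The entries of the two-variable Loewner matrix are assumed to be (v_{i,j} − w_{k,l}) / ((μ_i − λ_k)(ν_j − π_l)), with one row per pair (μ_i, ν_j) and one column per pair (λ_k, π_l). The barycentric form is assumed to be the ratio Σ c w / ((s − λ)(p − π)) over Σ c / ((s − λ)(p − π)). All function names and the test function H are hypothetical.

```python
import numpy as np

def loewner_2d(lam, pi, W, mu, nu, V):
    """Two-variable Loewner matrix: rows index (mu_i, nu_j), columns index (lam_k, pi_l).

    Assumed entry: (V[i, j] - W[k, l]) / ((mu_i - lam_k) * (nu_j - pi_l)).
    """
    num = V[:, :, None, None] - W[None, None, :, :]
    den = ((mu[:, None, None, None] - lam[None, None, :, None])
           * (nu[None, :, None, None] - pi[None, None, None, :]))
    return (num / den).reshape(mu.size * nu.size, lam.size * pi.size)

def barycentric_coefficients(L2D, shape):
    """Right singular vector of the smallest singular value, reshaped to c[k, l]."""
    _, _, Vh = np.linalg.svd(L2D)
    return Vh[-1].conj().reshape(shape)

def barycentric_eval(s, p, lam, pi, c, W):
    """Ratio of barycentric sums; at a node pair (lam_k, pi_l) return W[k, l]."""
    k, l = np.flatnonzero(lam == s), np.flatnonzero(pi == p)
    if k.size and l.size:
        return W[k[0], l[0]]
    # Not valid when exactly one of s, p coincides with a node.
    basis = 1.0 / np.outer(s - lam, p - pi)
    return np.sum(c * W * basis) / np.sum(c * basis)

def H(s, p):
    """Toy transfer function of order (k, q) = (1, 1)."""
    return 1.0 / (s + p + 1.0)

# n' = k + 1 = 2 and m' = q + 1 = 2 nodes; the remaining points fill the rows.
lam, pi = np.array([0.5, 2.0]), np.array([1.0, 3.0])
mu, nu = np.array([1.0, 3.0, 4.0]), np.array([0.5, 2.0, 4.0])
W = H(lam[:, None], pi[None, :])     # measurements at the nodes (Phi_11)
V = H(mu[:, None], nu[None, :])      # measurements off the nodes (Phi_22)
L2D = loewner_2d(lam, pi, W, mu, nu, V)
c = barycentric_coefficients(L2D, W.shape)
```

Calling `barycentric_eval(0.7, 1.7, lam, pi, c, W)` should then agree with `H(0.7, 1.7)` up to rounding, even though that point is not part of the data. When the matrix is only close to singular, the same singular vector gives coefficients with a small but nonzero residual.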

In practice, it is possible to obtain models of even lower order (k,q) than the one given by (2.10). Choosing \(k < \max \operatorname{rank} \mathbb{L}_{p_j}\) and \(q < \max \operatorname{rank} \mathbb{L}_{s_i}\) results in a Loewner matrix \({\mathbb{L}_{2D}}\) that is full rank. However, if \({\mathbb{L}_{2D}}\) is close to being singular, we can still compute barycentric coefficients such that \({\mathbb{L}_{2D}}c \approx 0\). In this case, the coefficients c give a rational function Ĥ(s, p) (2.14) that interpolates \(\Phi_{11}\) by construction and approximates the entries in \(\Phi_{12}, \Phi_{21}, \Phi_{22}\) with small error, i.e., we get a two-variable rational approximant instead of an interpolant.

Finally, notice that equation (2.14) gives Ĥ(s, p) in transfer function form as a ratio of barycentric sums. Therefore, evaluating Ĥ(s, p) for a particular frequency s and parameter p, can be efficiently implemented using only O(kq) operations. Nevertheless, in practical applications, we also need to have Ĥ(s, p) expressed in terms of state-space matrices, as in (2.2). Next, we present two simple state-space realizations for Ĥ(s, p).

Lemma 2.1 (State-space realization) The rational barycentric form Ĥ(s, p) in equation (2.14) has the following state-space realization

with the system matrices defined as

and the convention that \(\beta_i(\pi_j) = c_{i,j} w_{i,j}\) and \(\alpha_i(\pi_j) = c_{i,j}\).

The proof of this result relies on exploiting the non-zero structure of the matrices together with a cofactor expansion to show that det (sÊ - Â(p)) equals the denominator in (2.14). For simplicity, the full details are omitted here.

The above state-space realization uses system matrices of dimension k + 1 and has no D(p) term. The parameter dependencies are present only in the Ĉ(p) and Â(p) matrices, and take the form of barycentric sums involving the parameter p. In contrast to standard state-space realizations that use a companion matrix Â(p) and coefficients \(\alpha_i(p)\) that are polynomials in p [1], the realization given in (2.15) is better suited for practical implementations, since the coefficients \(\alpha_i(p)\) do not contain powers of p.

Furthermore, we can also avoid barycentric sums by using the following result.

Lemma 2.2 (State-space realization [3]) The rational barycentric form Ĥ(s, p) in equation (2.14) has the following state-space realization

with the system matrices defined as

Unlike the realization of Lemma 2.1, the parameter p enters only linearly in the resolvent Θ(s, p) = sÊ − Â(p). However, having such a simple parameter dependence results in a realization of dimension k + 2(q + 1).

We also remark that the existence of state-space realizations with linear dependence in p and minimal dimension k is still an open problem [6,7,17,25]. Such minimal realizations are known only for the special case of Ĥ(s, p) having a separable denominator, i.e., the denominator can be factored as the product of two one-variable polynomials in s and p [10]. Nevertheless, the realizations provided in this section are useful in practical applications, since their dimensions are close to the minimal dimension k in a reduced-order setting.

2.2.3 Discussion

Next, we discuss the common traits and differences between the two methods. We begin with the computational effort required for each. Notice that the most computationally intensive part of the Reduced-Basis approach is the offline stage. Its computational cost depends on the number of operations needed for obtaining the snapshots and on the algorithm used for assembling the reduced basis. For the Loewner approach, the computational effort consists in computing the full-order transfer function measurements, the reduced order (k,q) and the null space of \(\mathbb{L}_{2D}\). In practice, computing (k,q) does not require the ranks of all Loewner matrices \(\mathbb{L}_{p_j}\) and \(\mathbb{L}_{s_i}\); in fact, the ranks of only a few of these matrices usually give a good indication for appropriate values of (k,q). The most computationally intensive part is computing the full-order measurements \(H(s_i, p_j)\), since it involves the full-order matrices and (2.3). In most practical applications, the resolvent \(sE(p) - A(p)\) has sparse structure; hence, we can use sparse linear system solvers [26] in (2.3) to efficiently compute the measurements.
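The measurement step amounts to one linear solve per grid point. The following sketch assumes a single-input, single-output system with dense NumPy matrices and a parameter that enters only through A(p). The names `transfer_function`, `sample_measurements` and `A_of_p` are hypothetical. For sparse full-order matrices, the dense solve could be replaced by a sparse solver such as `scipy.sparse.linalg.spsolve`.

```python
import numpy as np

def transfer_function(s, E, A, B, C, D=0.0):
    """H(s) = C (sE - A)^{-1} B + D, using a linear solve instead of an inverse."""
    return C @ np.linalg.solve(s * E - A, B) + D

def sample_measurements(s_grid, p_grid, E, A_of_p, B, C):
    """phi[i, j] = H(s_i, p_j) on the tensor grid of frequencies and parameters."""
    phi = np.empty((len(s_grid), len(p_grid)), dtype=complex)
    for j, p in enumerate(p_grid):
        A = A_of_p(p)                      # assemble A(p) once per parameter
        for i, s in enumerate(s_grid):
            phi[i, j] = transfer_function(s, E, A, B, C)
    return phi
```

Each column of `phi` collects the samples for one parameter value and each row the samples for one frequency, which is the data layout used by the one-variable Loewner matrices.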

The use of explicit transfer function measurements H(s i , p j ) has another advantage. Suppose we do not have a model of the full-order system (2.1), but we only have access to its transfer function measurements; for instance, suppose we use a device to take frequency response measurements of a system. Then, we can still obtain a reduced-order model by applying the Loewner approach; i.e., we identify a reduced-order model directly from the available measurements.

We also remark that the results given in [3] developed the Loewner approach for the case of systems that depend on a scalar parameter p, unlike the Reduced-Basis approach, which can accommodate a vector of parameters p. However, since the publication of [3], the authors of this case study have generalized the Loewner approach to a vector of parameters p. A detailed discussion of this case is scheduled for publication [15].

Perhaps the most obvious difference between the two methods is the possibility of choosing different reduced-orders for s and p in the Loewner approach. This is a direct consequence of using the two-variable Lagrange basis, and, in practice, it can prove useful to differentiate between s and p, since some systems have an inherently low order dependence on the parameter p. This feature is discussed in detail in the example given in Sect. 2.3.

The common trait of the two methods is the fact that they both offer ways of efficiently evaluating the reduced-order models for different values of p. The Reduced-Basis approach achieves this in the online stage using equation (2.6), while the Loewner approach uses the rational barycentric formula (2.14).

Finally, after these theoretical remarks, we are ready to see how these methods compare in a practical application. In the next section, we give such an example.

2.3 Numerical Experiments

In this section, we compare the Reduced-Basis approach and the Loewner rational interpolation approach through a numerical example treating a parameter-dependent partial differential equation. This parametrized system models the transient evolution of the temperature field near the surface of a cylinder immersed in fluid. For details on deriving the state-space matrices (2.1) using a finite element spatial discretization, we direct the reader to the book [22] and its software package.

The parameter dependence is present only in the A matrix as

with the parameter p ∈ [0.1, 100] representing the Peclet number. The dimension of the full-order state-space matrices (2.1) is n = 878, and the output matrix C is highly sparse with dimension 919 × 878, as it maps the n = 878 system states to the 919 nodes in the spatial discretization.

2.3.1 The Reduced-Order Models

First, we obtain a Reduced-Basis model (2.6) of order k = 11. This particular reduced-order model is already available as part of the software package included with [22]. The reduced basis V is computed using a greedy approach and an offline space (2.5) generated from a parameter grid p ∈ [0.1, 100] and a time grid t ∈ [0, 1] having time step Δt = 0.01. We denote the Reduced-Basis model with \(\Sigma_{RB}\).

Next, to obtain the Loewner model (2.15), we consider a frequency grid of N = 50 frequencies \(s_i\) logarithmically spaced in \([10^{-2}, 10^{2}]\), and a parameter grid of M = 50 parameters \(p_j\) logarithmically spaced in [0.1, 100]. We then compute the associated transfer function measurements \(\varphi_{i,j} = H(s_i, p_j)\).

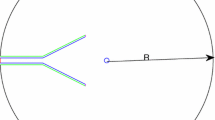

The crucial step of the Loewner approach is to determine the reduced order (k,q), with k representing the order in the frequency variable s, and q the order in the parameter variable p. Therefore, in Fig. 2.1, we plot the singular values of the one-variable Loewner matrices \({\mathbb{L}_{{p_j}}}\) and \({\mathbb{L}_{{s_i}}}\), and, from (2.10), the maximum rank of \({\mathbb{L}_{{p_j}}}\) gives k and the maximum rank of \({\mathbb{L}_{{s_i}}}\) gives q. Then, by Theorem 2.1, the two-variable Loewner matrix \({\mathbb{L}_{2D}}\) is singular and the barycentric coefficients c in its null space, \({\mathbb{L}_{2D}}c = 0\), give a model Ĥ(s, p) (2.14) that interpolates all given measurements \(\varphi_{i,j}\).
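The rank test behind this step can be sketched for a single one-variable data set. The code assumes the standard Loewner matrix entries (v_i − w_j) / (μ_i − λ_j), splits the sample points alternately into the two sets, and counts singular values above a relative tolerance. The splitting rule, the tolerance and the function names are assumptions made for illustration.

```python
import numpy as np

def loewner_matrix(mu, v, lam, w):
    """L[i, j] = (v_i - w_j) / (mu_i - lam_j) for two disjoint point sets."""
    mu, v, lam, w = map(np.asarray, (mu, v, lam, w))
    return (v[:, None] - w[None, :]) / (mu[:, None] - lam[None, :])

def numerical_rank(L, rel_tol=1e-10):
    """Number of singular values above rel_tol times the largest one."""
    sigma = np.linalg.svd(L, compute_uv=False)
    return int(np.sum(sigma > rel_tol * sigma[0])), sigma

# Samples of a rational function of degree 2.
x = np.arange(1.0, 9.0)
f = 1.0 / (x + 1.0) + 1.0 / (x + 2.0)
L = loewner_matrix(x[1::2], f[1::2], x[0::2], f[0::2])
rank, sigma = numerical_rank(L)
```

Here the 4 × 4 Loewner matrix has numerical rank 2, matching the degree of the sampled function. With noisy or full-order data, the singular values usually decay gradually, so the cut-off becomes a modelling choice, as in the selection of (k, q) below the maximal ranks.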

However, for the purpose of comparing the Loewner model with the Reduced-Basis model, we select k and q lower than the ranks of \({\mathbb{L}_{{p_j}}}\) and \({\mathbb{L}_{{s_i}}}\), namely, we take k = 11, the same value as for the Reduced-Basis model. In addition, we take q = 7 to showcase that the order in p can be chosen to be different from the order in s.

As a result of this choice of (k, q) = (11, 7), the two-variable Loewner matrix \({\mathbb{L}_{2D}}\) is not singular. However, its smallest singular value is equal to \(3 \cdot 10^{-8}\), i.e., \({\mathbb{L}_{2D}}\) is close to being singular, and we can still compute barycentric coefficients c such that \({\mathbb{L}_{2D}}c \approx 0\). Thus, Ĥ(s, p) in (2.14) approximates the given measurements \(\varphi_{i,j}\), instead of interpolating them. The final step consists in forming a state-space realization using either (2.15) or (2.16). We denote the Loewner model with \(\Sigma_{\mathbb{L}}\).

2.3.2 Comparison of the Reduced-Order Models

We now compare \(\Sigma_{RB}\), the Reduced-Basis model, and \(\Sigma_{\mathbb{L}}\), the Loewner model. Before presenting their time- and frequency-domain behavior, we briefly discuss their reduced orders.

Notice that the parameter dependence (2.17) of the full-order system has a rational form, present only in the A(p) matrix; therefore, the resolvent \((sE - A(p))^{-1} \in \mathbb{C}^{878 \times 878}\) is also rational in both s and p. Hence, the system’s transfer function H(s, p) (2.3) is a two-variable rational function with the highest degree in s equal to 878 and highest degree in p equal to 878, i.e., H(s, p) has order (878, 878).

Since the projection framework (2.6) preserves the structure of the parameter dependence, the Reduced-Basis model \(\Sigma_{RB}\) is also rational in both s and p, and has order (11, 11), given by the dimension of the reduced-order system matrices (2.6). On the other hand, \(\Sigma_{\mathbb{L}}\) has the flexibility of differentiating between the orders of s and p. Therefore, we have selected a lower order for the parameter p, resulting in a Loewner model \(\Sigma_{\mathbb{L}}\) of order (11, 7).

Next, we compare the frequency-domain behavior of \(\Sigma_{RB}\) and \(\Sigma_{\mathbb{L}}\). In Fig. 2.2, we plot the frequency response of the two models for 4 different values of the parameter p ∈ {0.1, 1, 10, 100}. The models have one input and 919 outputs, with frequency responses \(\hat H_{RB}(j\omega_i, p),\ \hat H_{\mathbb{L}}(j\omega_i, p) \in \mathbb{C}^{919 \times 1}\). To get a single line plot for each parameter value, we show the average of each frequency response.

On the one hand, Fig. 2.2 shows that \(\Sigma_{RB}\) provides a loose approximation of the full-order system frequency-domain behavior. This was to be expected, since the Reduced-Basis method is tailored for approximation of time-domain snapshots. On the other hand, \(\Sigma_{\mathbb{L}}\) accurately matches the full-order system, since the Loewner approach is a bespoke frequency-domain method. Nevertheless, for this particular example of a parametrized partial differential equation, the frequency-domain behavior has secondary importance. Our primary goal is to accurately match the time-domain transient behavior using reduced-order models.

Therefore, we now simulate the transient behavior of the temperature field when the system is excited by the input u(t) = 10t for t ∈ [0, 1]. Figure 2.3 shows the temperature field around the cylinder at final time t = 1 when the simulation is run for the parameter value p = 0.1. Because of the problem’s symmetry, we plot only half of the rectangular domain and half of the cylinder.

As expected, the Reduced-Basis approach gives an accurate approximation of the temperature field, with the relative error ∣y(t) − ŷ(t)∣/∣y(t)∣ below \(10^{-2}\). In addition, the lower half of Fig. 2.3 shows that the Loewner approach produces similar levels of accuracy.

Therefore, through this numerical example, we have seen that, although they approach the problem from different perspectives, both methods produce accurate reduced-order models.

2.4 Conclusions

Motivated by the ever-increasing need for accurate, low-dimensional models of parameter-dependent systems, this case study is one of the first efforts to compare different approaches for parametrized model reduction. More precisely, we compared the well-known Reduced-Basis approach with the recently introduced Loewner matrix approach for rational interpolation.

We saw that the main difference between the two is the fact that Reduced-Basis uses time-domain snapshots, while the Loewner approach uses frequency-domain transfer function measurements. Furthermore, the key feature of Reduced-Basis is an error bound, while for the Loewner approach it is the possibility of choosing different reduced orders for the frequency and parameter variables.

Although different in their approach, both methods proved successful at computing accurate reduced-order models in a numerical example involving a parametrized partial differential equation.

References

Antoulas, A.C.: Approximation of large-scale dynamical systems. Advances in Design and Control, DC-06. SIAM, Philadelphia (2005, second printing: Summer 2008)

Antoulas, A.C., Anderson, B.D.O.: On the scalar rational interpolation problem. IMA J. of Mathematical Control and Information 3, 61–88 (1986)

Antoulas, A.C., Ionita, A.C., Lefteriu, S.: On two-variable rational interpolation. Linear Algebra and its Applications 436(8), 2889–2915 (2012). DOI 10.1016/j.laa.2011.07.017

Berrut, J.P., Trefethen, L.N.: Barycentric Lagrange Interpolation. SIAM Review 46(3), 501–517 (2004). DOI 10.1137/S0036144502417715

Chahlaoui, Y., Van Dooren, P.: A collection of benchmark examples for model reduction of linear time invariant dynamical systems. SLICOT Working Note 2002–2 (2002)

Eising, R.: Realization and stabilization of 2-d systems. Automatic Control, IEEE Transactions on 23(5), 793–799 (1978). DOI 10.1109/TAC.1978.1101861

Fornasini, E., Marchesini, G.: Doubly-indexed dynamical systems: State-space models and structural properties. Theory of Computing Systems 12, 59–72 (1978). DOI 10.1007/BF01776566

Glover, K.: All optimal Hankel-norm approximations of linear multivariable systems and their L∞-error bounds. International Journal of Control 39(6), 1115–1193 (1984). DOI 10.1080/00207178408933239

Grimme, E.J.: Krylov projection methods for model reduction. Ph.D. Thesis, ECE Dept., U. of Illinois, Urbana-Champaign, IL, USA (1997)

Gu, G., Aravena, J.L., Zhou, K.: On minimal realization of 2-D systems. IEEE Transactions on Circuits and Systems 38(10), 1228–1233 (1991). DOI 10.1109/31.97545

Gugercin, S., Antoulas, A.C., Beattie, C.: ℋ2 Model Reduction for Large-Scale Linear Dynamical Systems. SIAM Journal on Matrix Analysis and Applications 30(2), 609–638 (2008). DOI 10.1137/060666123

Haasdonk, B.: Reduzierte-Basis-Methoden. Preprint 2011/004 — Institute of Applied Analysis and Numerical Simulation, University of Stuttgart, Stuttgart (2011)

Haasdonk, B., Ohlberger, M.: Reduced basis method for finite volume approximations of parametrized linear evolution equations. ESAIM: Mathematical Modelling and Numerical Analysis 42(02), 277–302 (2008). DOI 10.1051/m2an:2008001

Haasdonk, B., Ohlberger, M.: Efficient reduced models and a posteriori error estimation for parametrized dynamical systems by offline/online decomposition. Mathematical and Computer Modelling of Dynamical Systems 17(2), 145–161 (2011). DOI 10.1080/13873954.2010.514703

Ionita, A.C., Antoulas, A.C.: Data-driven parametrized model reduction in the Loewner framework. Submitted to SIAM Journal on Scientific Computing (2013)

Kahaner, D., Moler, C., Nash, S.: Numerical Methods and Software. Prentice Hall, NJ, USA (1989)

Kung, S.Y., Levy, B.C., Morf, M., Kailath, T.: New results in 2-D systems theory, part II: 2-D state-space models-Realization and the notions of controllability, observability, and minimality. Proceedings of the IEEE 65(6), 945–961 (1977). DOI 10.1109/PROC.1977.10592

Machiels, L., Maday, Y., Oliveira, I.B., Patera, A.T., Rovas, D.V.: Output bounds for reduced-basis approximations of symmetric positive definite eigenvalue problems. Comptes Rendus de l’Académie des Sciences, Series I, Mathematics 331(2), 153–158 (2000). DOI 10.1016/S0764-4442(00)00270-6

Mayo, A.J., Antoulas, A.C.: A framework for the solution of the generalized realization problem. Linear Algebra and its Applications 425(2–3), 634–662 (2007). DOI 10.1016/j.laa.2007.03.008

Moler, C., Van Loan, C.: Nineteen Dubious Ways to Compute the Exponential of a Matrix. SIAM Review 20(4), 801–836 (1978). DOI 10.1137/1020098

Moler, C., Van Loan, C.: Nineteen Dubious Ways to Compute the Exponential of a Matrix, Twenty-Five Years Later. SIAM Review 45(1), 3–49 (2003). DOI 10.1137/S00361445024180

Patera, A.T., Rozza, G.: Reduced Basis Approximation and A Posteriori Error Estimation for Parametrized Partial Differential Equations. MIT Pappalardo Graduate Monographs in Mechanical Engineering. Cambridge, MA (2007). Available from http://augustine.mit.edu/methodology/methodology_book.htm

Prudhomme, C., Rovas, D.V., Veroy, K., Machiels, L., Maday, Y., Patera, A.T., Turinici, G.: Reliable Real-Time Solution of Parametrized Partial Differential Equations: Reduced-Basis Output Bound Methods. Journal of Fluids Engineering 124(1), 70 (2002). DOI 10.1115/1.1448332

Quarteroni, A., Rozza, G., Manzoni, A.: Certified reduced basis approximation for parametrized partial differential equations and applications. Journal of Mathematics in Industry 1(1), 3 (2011). DOI 10.1186/2190-5983-1-3

Roesser, R.: A discrete state-space model for linear image processing. Automatic Control, IEEE Transactions on 20(1), 1–10 (1975). DOI 10.1109/TAC.1975.1100844

Saad, Y.: Iterative Methods for Sparse Linear Systems, 2nd Ed. SIAM (2003)

Volkwein, S.: Proper orthogonal decomposition and singular value decomposition. Technical Report SFB-153, Institut für Mathematik, Universität Graz (1999). Appeared also as part of the author’s Habilitationsschrift, Institut für Mathematik, Universität Graz, Graz (2001)

© 2014 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Ionita, A.C., Antoulas, A.C. (2014). Case Study: Parametrized Reduction Using Reduced-Basis and the Loewner Framework. In: Quarteroni, A., Rozza, G. (eds) Reduced Order Methods for Modeling and Computational Reduction. MS&A - Modeling, Simulation and Applications, vol 9. Springer, Cham. https://doi.org/10.1007/978-3-319-02090-7_2

DOI: https://doi.org/10.1007/978-3-319-02090-7_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-02089-1

Online ISBN: 978-3-319-02090-7

eBook Packages: Mathematics and Statistics (R0)