Abstract

We define a heavy-tailed distribution as a distribution with infinite mathematical expectation. For such distributions the standard statistical tools, the sample mean and the sample standard deviation, are highly unstable. Some examples illustrating this conclusion are presented. We discuss relations between the maximum event \( M_{\max}^{(n)} = \max(x_{1},\ldots,x_{n}) \) and the total sum \( S_{n}=x_{1} + \cdots + x_{n} \) for heavy-tailed distributions. The asymptotic proportionality of \( M_{\max}^{(n)} \) and \( S_n \) is derived for heavy-tailed Pareto distributions (Eq. (1.18)).



Our study focuses on damages from disasters whose distributions can be described by power-like laws with heavy tails. Disasters of this type typically occur over a very broad range of scales, and the rare largest events can cause losses comparable with the total loss due to all the other (smaller) events. Such disasters are the most unexpected ones, and they frequently entail huge losses. One of the most important results of our study is the conclusion that the “maximum possible earthquake” parameter \( M_{\max} \), frequently used in seismic risk assessment, is unstable. Instead of focusing on the unstable parameter \( M_{\max} \), we suggest a stable and convenient characteristic \( M_{\max}(\tau) \), defined as the maximum size of a given phenomenon that can be recorded over a future time interval τ. The random value \( M_{\max}(\tau) \) can be described by its distribution function or, equivalently, by its quantiles \( Q_q(\tau) \), which, in contrast to \( M_{\max} \), are stable, robust characteristics. Moreover, if τ → ∞, then \( M_{\max}(\tau) \to M_{\max} \) with probability one.

The method for calculating \( Q_q(\tau) \) is described below. In particular, we can estimate \( Q_q(\tau) \) for, say, q = 90, 95 and 99%, as well as the median (q = 50%), for any desired time interval τ. Our method provides an alternative and robust way to parameterize the rightmost tail of the frequency-size relation. The final goal of our data processing is a family of quantiles \( Q_q(\tau) \) corresponding to the damage (fatalities in a disaster, economic damage, insurance losses, etc.) that will not be exceeded in the next τ years with a prescribed probability q. This characteristic seems to be particularly useful, for instance, in the insurance business.
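As an illustration only, here is a minimal sketch of how \( Q_q(\tau) \) can be computed under simplifying assumptions of our own; the method actually used in this monograph is described later and need not coincide with it. We assume that events form a stationary Poisson process with rate λ per year (the model adopted later in this chapter) and that event sizes are independent Pareto variables with a lower threshold h and exponent β. The number of events exceeding x in τ years is then Poisson with mean \( \lambda\tau (h/x)^{\beta} \), so \( P\{M_{\max}(\tau) \le x\} = \exp(-\lambda\tau (h/x)^{\beta}) \), which can be inverted in closed form. The function name `pareto_poisson_quantile` and all parameter values are hypothetical.

```python
import math


def pareto_poisson_quantile(q, tau, lam, h, beta):
    """Quantile Q_q(tau) of the largest event in a future interval of tau years.

    Hypothetical model (an assumption of this sketch): event times form a
    stationary Poisson process with rate `lam` per year, and event sizes are
    i.i.d. Pareto with P(X > x) = (h / x)**beta for x >= h. The number of
    events exceeding x in tau years is Poisson with mean
    lam * tau * (h / x)**beta, hence P(M(tau) <= x) = exp(-lam * tau * (h / x)**beta).
    """
    if not 0.0 < q < 1.0:
        raise ValueError("q must lie strictly between 0 and 1")
    expected_events = lam * tau
    # With probability exp(-lam*tau) no event occurs at all; quantile levels
    # below that do not correspond to any event size x >= h.
    if q < math.exp(-expected_events):
        raise ValueError("q is below the probability of observing no events")
    # Solve exp(-lam * tau * (h / x)**beta) = q for x.
    return h * (expected_events / -math.log(q)) ** (1.0 / beta)


if __name__ == "__main__":
    # Hypothetical parameters: 5 events per year above h = 1, beta = 0.7
    for tau in (10, 50):
        row = [pareto_poisson_quantile(q, tau, lam=5.0, h=1.0, beta=0.7)
               for q in (0.5, 0.9, 0.95, 0.99)]
        print(tau, [round(v, 1) for v in row])
```

Under this model the quantile scales as \( (\lambda\tau)^{1/\beta} \), so for β < 1 it grows faster than linearly with the length of the time interval.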

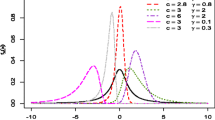

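It is well known that the distributions of some parameters of natural processes (earthquake energy, economic damage and casualties from natural disasters, and others) are often modeled by power-like laws, such as the Pareto distribution. With a lower threshold h > 0 and exponent β > 0, the Pareto distribution function and probability density are given as follows:

\[ F(x) = 1 - \left(\frac{h}{x}\right)^{\beta}, \qquad f(x) = \frac{\beta h^{\beta}}{x^{\beta+1}}, \qquad x \ge h. \qquad (1.1) \]
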
If the exponent β of such a distribution does not exceed unity, β ≤ 1, then the mathematical expectation of the corresponding random variable is infinite. In this case the standard statistical tools, such as the sample mean and the sample standard deviation, are highly unstable. Such distributions are often called distributions with heavy tails. It should be noted that there is no commonly accepted definition of a heavy-tailed distribution. Here we apply this term to distributions F(x) whose mathematical expectation is infinite:

\[ E|x| = \int_{-\infty}^{\infty} |x| \, dF(x) = \infty. \qquad (1.2) \]

Accordingly, the opposite term, light tail, designates distributions with finite expectation.

However, there is a wide class of distributions whose expectation is finite but whose higher statistical moments are infinite. Such distributions might be called “heavy-tailed” as well.
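For the Pareto law, written with a lower threshold h and exponent β (the parameterization assumed in this derivation), the boundary between these cases can be checked directly. The moment of order k is

\[ E X^{k} = \int_{h}^{\infty} x^{k} \, \frac{\beta h^{\beta}}{x^{\beta+1}} \, dx = \beta h^{\beta} \int_{h}^{\infty} x^{k-\beta-1} \, dx = \begin{cases} \dfrac{\beta h^{k}}{\beta - k}, & k < \beta, \\[1ex] \infty, & k \ge \beta. \end{cases} \]

With k = 1 the expectation is infinite exactly when β ≤ 1, the heavy-tail case in the sense of (1.2); for 1 < β ≤ 2 the mean is finite but the second moment, and hence the variance, is infinite, which is an example of the wider class just mentioned.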

Alternative definitions of the terms “heavy tail” and “light tail” also exist; see Reiss and Thomas (1997) and Embrechts et al. (1997).

The Pareto law is a classical example of a heavy-tailed distribution provided that β ≤ 1. In that case its expectation is infinite, so the Law of Large Numbers is inapplicable, and the sample mean and the sample standard deviation are unstable. In contrast with the more common case of distributions with finite expectation, increasing the sample size does not improve the accuracy of the sample mean. The large statistical scatter of the sample mean (see, e.g., Osipov 2002) makes this widely used statistical characteristic inadequate for data sets with heavy-tailed distributions. Moreover, its use in such situations may lead to serious errors and incorrect conclusions. We now illustrate this statement.
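The instability of the sample mean is easy to reproduce in a short simulation. The sketch below is our own illustration with hypothetical parameters. It draws Pareto samples by inverse transform, \( X = h\,U^{-1/\beta} \) with U uniform on (0, 1], and reports the sample mean for increasing sample sizes in several independent replicates. For β = 3 (finite mean 1.5h and finite variance) the means settle down as n grows; for β = 0.7 (infinite expectation) they keep drifting upward and differ widely between replicates.

```python
import numpy as np


def pareto_sample(rng, n, beta, h=1.0):
    """Draw n Pareto variates with P(X > x) = (h / x)**beta, x >= h (inverse transform)."""
    u = 1.0 - rng.random(n)          # uniform on (0, 1], so u**(-1/beta) is finite
    return h * u ** (-1.0 / beta)


def sample_means(beta, sizes, replicates=5, seed=0):
    """Sample means for each size in `sizes`; one row per independent replicate."""
    rng = np.random.default_rng(seed)
    return np.array([[pareto_sample(rng, n, beta).mean() for n in sizes]
                     for _ in range(replicates)])


if __name__ == "__main__":
    sizes = [100, 1_000, 10_000, 100_000]
    for beta in (3.0, 0.7):          # finite vs. infinite expectation
        print(f"beta = {beta}")
        print(np.round(sample_means(beta, sizes), 1))
```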

The total number of fatalities from typhoons, hurricanes and floods worldwide from 1947 to 1960 is about 900,000 (the deadliest flood, with 1,300,000 fatalities, occurred in China in 1931). The mean annual fatality (arithmetic mean) for 1947–1960 was \( \hat{X}_{ann} = 64{,}300 \). The mean annual fatality for 1962–1992 is \( \hat{X}_{ann} = 36{,}000 \). These sample means differ significantly and give no indication of the probability of a super-catastrophic event such as the 1931 flood in China.

Let us consider another example: the total number of earthquake fatalities worldwide from 1947 to 1970 was about 151,000 (the deadliest earthquake, with a death toll of 240,000, occurred in China in 1976). The mean annual fatality rate (arithmetic mean) for 1947–1970 is \( \hat{X}_{ann} = 6{,}300 \). The mean annual fatality rate for 1962–1992 is \( \hat{X}_{ann} = 18{,}600 \). Here again, the two sample means give a highly uncertain characterization of the annual fatality, which is typical of a data set with a heavy-tailed distribution.
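The annual means quoted for the earlier periods are consistent with the totals when both end years are counted (14 years for 1947–1960, 24 years for 1947–1970). A quick check, with a helper name of our own:

```python
def annual_mean(total, first_year, last_year):
    """Arithmetic mean per year, counting both end years of the period."""
    return total / (last_year - first_year + 1)


print(round(annual_mean(900_000, 1947, 1960)))  # 64286, i.e. about 64,300
print(round(annual_mean(151_000, 1947, 1970)))  # 6292, i.e. about 6,300
```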

Figure 1.1 shows the non-normalized complementary sample distribution function for (a) annual casualties, (b) the number of victims in a single event, and (c) annual economic losses. The data are taken from the website of the US Geological Survey (http://www.neic.cr.usgs.gov/neis/eqlists). The Pareto law fits to the middle range and to the range of extreme events are shown by dotted lines, with β = 0.77 ± 0.11 (a), β = 0.73 ± 0.11 (b), and β = 0.65 ± 0.16 (c). Since all three fitted exponents are below unity, even at the upper ends of the quoted error bars, all three distributions in Fig. 1.1 qualify as heavy-tailed.

Fig. 1.1 Non-normalized complementary sample distribution function of worldwide losses from earthquakes: (a) annual casualties; (b) casualties per individual event; (c) annual economic losses. The power-law functions fitting the tails are shown by dotted lines, with β = 0.77 ± 0.11 (a); β = 0.73 ± 0.11 (b); β = 0.65 ± 0.16 (c)

Below we present some new approaches to reliable statistical estimation in situations where the standard statistical tools fail.

In the statistical analysis of data with heavy-tailed distributions, an important role is played by the maximum observed event \( M_{\max}^{(n)} = \max(x_{1},\ldots,x_{n}) \). It can be shown that for a data set with a heavy-tailed distribution (in the sense of definition (1.2)), the maximum event \( M_{\max}^{(n)} \) is commensurable with the total sum \( S_{n}=x_{1} + \cdots + x_{n} \), i.e., \( M_{\max}^{(n)} \) and \( S_n \) are of the same order. For example, for the Pareto distribution with exponent β < 1 one has:

In contrast, for a data set with a light-tailed distribution (finite expectation, \( E|x| < \infty \)), the contribution of \( M_{\max}^{(n)} \) to the total sum \( S_n \) decreases with the sample size n:

If a sample follows the Pareto distribution with exponent β, then \( M_{\max}^{(n)} \) grows with the sample size as \( n^{1/\beta} \). (We emphasize that this nonlinear growth of \( M_{\max}^{(n)} \) with the sample size n or, equivalently, with the observation time span τ can be incorrectly interpreted as evidence of non-stationarity in time (Pisarenko and Rodkin 2010). In many cases, the widespread belief that the rate of losses from natural disasters clearly tends to increase with time stems precisely from this misinterpretation of an apparent non-stationarity.)
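The contrast between heavy and light tails in the relation between \( M_{\max}^{(n)} \) and \( S_n \) can be seen in a small Monte Carlo experiment. The sketch below is our own illustration with hypothetical parameters, using exponential sizes as a stand-in for a light tail. It averages \( S_n / M_{\max}^{(n)} \) over independent samples. For Pareto sizes with β = 0.5 the average stays bounded as n grows (a heuristic calculation gives the limit 1/(1 − β) = 2), whereas for exponential sizes it grows roughly like n / log n.

```python
import numpy as np


def pareto(rng, n, beta):
    """Pareto sizes with unit threshold: P(X > x) = x**(-beta) for x >= 1."""
    return (1.0 - rng.random(n)) ** (-1.0 / beta)


def exponential(rng, n):
    """Light-tailed comparison: exponential sizes with unit mean."""
    return rng.exponential(1.0, n)


def heavy_sampler(rng, n):
    """Pareto sizes with beta = 0.5 < 1 (infinite expectation)."""
    return pareto(rng, n, 0.5)


def mean_sum_to_max(sampler, n, replicates=200, seed=0):
    """Monte Carlo average of S_n / M_max^(n) over independent samples of size n."""
    rng = np.random.default_rng(seed)
    ratios = []
    for _ in range(replicates):
        x = sampler(rng, n)
        ratios.append(x.sum() / x.max())
    return float(np.mean(ratios))


if __name__ == "__main__":
    for n in (100, 1_000, 10_000):
        heavy = mean_sum_to_max(heavy_sampler, n)
        light = mean_sum_to_max(exponential, n)
        print(f"n = {n:>6}: Pareto(beta=0.5) {heavy:6.2f}   exponential {light:8.1f}")
```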

The treatment of heavy-tailed data is often facilitated by taking logarithms of the original values. Switching to logarithms (possible only when the original values are positive) almost always ensures that all statistical moments exist, so that the Law of Large Numbers and the Central Limit Theorem apply to sums of logarithms. We advocate the logarithmic transform of the original data as one of the main tools for avoiding complications in the processing of heavy-tailed data sets. As we shall see later, the logarithmic transform turned out to be efficient in all the cases considered except one (the data set on flood fatalities in the USA from 1995 to 2011).

It should be remarked that if X has the Pareto distribution, then log X, measured from the logarithm of the lower threshold, has exactly an exponential distribution with rate β. We shall use this fact below.
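The exponential property yields a simple estimate of β from data above a known threshold h. In the sketch below (our own; the threshold choice is left to the user and the function name is hypothetical), the values \( y_i = \log(x_i/h) \) are treated as an exponential sample. The maximum-likelihood estimate is therefore \( \hat\beta = n / \sum_i y_i \), with approximate standard error \( \hat\beta/\sqrt{n} \). This standard estimator is closely related to the Hill estimator; we do not claim it is the fitting procedure used for Fig. 1.1.

```python
import numpy as np


def pareto_beta_mle(x, h):
    """MLE of the Pareto exponent beta from the observations x >= h.

    Uses the fact that log(X / h) is exponential with rate beta.
    Returns (beta_hat, approximate standard error beta_hat / sqrt(n)).
    """
    x = np.asarray(x, dtype=float)
    x = x[x >= h]                    # keep only values at or above the threshold
    y = np.log(x / h)                # exponential sample with rate beta
    n = y.size
    beta_hat = n / y.sum()
    return beta_hat, beta_hat / np.sqrt(n)


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    beta_true, h = 0.7, 1.0          # hypothetical values
    sample = h * (1.0 - rng.random(5_000)) ** (-1.0 / beta_true)
    est, se = pareto_beta_mle(sample, h)
    print(f"beta_hat = {est:.3f} +/- {se:.3f}")
    # The logarithms are well behaved: their sample mean is close to 1/beta
    print(f"mean of log(x/h) = {np.log(sample / h).mean():.3f}, 1/beta = {1 / beta_true:.3f}")
```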

Another statistical tool that helps to overcome the difficulties of processing heavy-tailed data is order statistics: the sample median, sample quantiles, the interquartile range, etc. Order statistics can also be used to construct confidence intervals. The main statistical tool suggested in this short monograph for describing the distribution tail, the family of quantiles \( Q_q(\tau) \), is in fact a continuous analog of the sample order statistics. This explains its robustness and stability.
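As an example of how order statistics give confidence intervals without any assumption about the tail, the sketch below implements a standard distribution-free construction (not taken from this chapter). It finds the symmetric pair of order statistics \( x_{(j)} \le x_{(n+1-j)} \) that covers the population median with probability at least \( 1-\alpha \). The coverage equals \( P\{j \le K \le n-j\} \) with K binomial(n, 1/2), so it holds for any continuous distribution, however heavy its tail. Function names are our own.

```python
from math import comb


def median_ci_ranks(n, alpha=0.05):
    """Largest j such that [x_(j), x_(n+1-j)] covers the median with prob >= 1 - alpha.

    Coverage = P(j <= K <= n - j), K ~ Binomial(n, 1/2), valid for continuous
    distributions. Returns (j, coverage) with 1-based ranks, or None if n is too small.
    """
    best = None
    for j in range(1, n // 2 + 1):
        coverage = sum(comb(n, k) for k in range(j, n - j + 1)) / 2 ** n
        if coverage >= 1 - alpha:
            best = (j, coverage)
        else:
            break                    # coverage only decreases as j grows
    return best


def median_ci(sample, alpha=0.05):
    """Distribution-free confidence interval for the median from order statistics."""
    x = sorted(sample)
    ranks = median_ci_ranks(len(x), alpha)
    if ranks is None:
        raise ValueError("sample too small for the requested confidence level")
    j, _ = ranks
    return x[j - 1], x[len(x) - j]   # x_(j) and x_(n+1-j) in 1-based notation
```

For n = 20 and α = 0.05 the interval runs from the 6th to the 15th smallest observation, with coverage of about 0.959.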

In our approach we model the sequence of disaster occurrences by the well-known Poisson point process; see Embrechts et al. (1997). The disaster effects can be different: fatalities and economic losses due to natural catastrophes, seismic moments of earthquakes, ground acceleration at a fixed point, etc. These effects are “marks” assigned to the occurrence times of the Poisson point process, so the whole construction is called a marked point process. In our applications we usually model the catalog by a stationary Poisson process with intensity λ events per year (the special section “Non-stationarity of natural processes, ‘operational time’ method” is devoted to non-stationary Poisson processes). The random number of events of a stationary process in T years is a Poisson random variable with mean λT. Let us denote the maximum event in this time period by \( M_T \) and the total sum by \( \Sigma_T \). We now derive some relations between \( M_T \) and \( \Sigma_T \). It is well known (see, e.g., Gumbel 1958) that for distributions with a light, exponential tail, \( M_T \) increases as the logarithm of T:

where c is some constant, whereas \( \Sigma_T \) increases linearly with T, in accordance with the Central Limit Theorem:

where ζ is a standard normal random variable, b is the expectation of a single event (here we assume b > 0), and σ is the standard deviation (std) of a single event. We consider the ratio R(T) of the total sum to the maximum event:

\[ R(T) = \frac{\Sigma_T}{M_T}. \]

It follows from (1.5), (1.6) that

Hence, for light-tailed distributions the ratio R(T) increases linearly with T if we disregard the slowly varying factor log(λT). Quite a different behavior of R(T) appears for heavy-tailed distributions: R(T) grows much more slowly and can even have a finite expectation (cf. (1.3)). In other words, in this case the total and the maximum event become comparable, i.e., the total sum is determined to a large extent by the single maximum event.
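For a concrete light-tailed case, consider exponential event sizes with mean b and, as a simplification of the Poisson setting, a fixed number n of events. The maximum of n independent exponential variables has expectation b times the n-th harmonic number, so

\[ E\,M^{(n)} = b \sum_{k=1}^{n} \frac{1}{k} = b\left(\log n + C + o(1)\right), \qquad E\,S_n = nb, \qquad \frac{E\,S_n}{E\,M^{(n)}} = \frac{n}{\log n + C + o(1)}, \]

where C is the Euler constant. The ratio of expectations thus grows almost linearly in n, in line with the behavior of R(T) described above; for n = 1000 it is already about 134.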

To illustrate this assertion, we derive lower and upper bounds for \( E\log\Sigma_T \) in the case of the Pareto distribution. Since \( \Sigma_T = R(T)\,M_T \), taking logarithms and their expectations we get

\[ E\log\Sigma_T = E\log R(T) + E\log M_T. \qquad (1.8) \]

Using Jensen’s inequality for the concave logarithm function, we get:

\[ E\log R(T) \le \log E\,R(T). \qquad (1.9) \]

As shown in Pisarenko (1998),

ER(T) =

where γ(α; t) is the incomplete gamma function. The function ER(T) is tabulated in Table 1.1.
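Values of the kind listed in Table 1.1 can be cross-checked by Monte Carlo. The sketch below is our own illustration, not the computation of Pisarenko (1998). It draws a Poisson number of events with mean λT and Pareto sizes with unit threshold (R(T) is scale-free, so the threshold does not matter), then averages R(T). Realizations with no events are skipped because R(T) is undefined for them. This convention is our assumption and matters only when λT is small.

```python
import numpy as np


def expected_ratio_mc(beta, lam_t, replicates=20_000, seed=0):
    """Monte Carlo estimate of E R(T), where R(T) = Sigma_T / M_T.

    Assumptions of this sketch: the number of events in T years is
    Poisson(lam_t); sizes are i.i.d. Pareto with unit threshold;
    realizations with no events are skipped.
    """
    rng = np.random.default_rng(seed)
    ratios = []
    for _ in range(replicates):
        n = rng.poisson(lam_t)
        if n == 0:
            continue                 # R(T) is undefined without events
        x = (1.0 - rng.random(n)) ** (-1.0 / beta)
        ratios.append(x.sum() / x.max())
    if not ratios:
        raise ValueError("no realization contained an event; increase lam_t or replicates")
    return float(np.mean(ratios))


if __name__ == "__main__":
    for beta in (0.5, 0.8, 1.5):
        row = [expected_ratio_mc(beta, lt, replicates=2_000) for lt in (10, 100, 1_000)]
        print(f"beta = {beta}: E R(T) ~ {np.round(row, 2)} for lambda*T = 10, 100, 1000")
```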

If λT → ∞, then the incomplete gamma function γ(1/β; λT) in (1.10) tends to the complete gamma function Γ(1/β). An explicit expression for \( E\log M_T \) can also be derived (Pisarenko 1998):

where C is the Euler constant (C = 0.577…), and Ei(−λT) is the exponential integral

\[ \mathrm{Ei}(-x) = -\int_{x}^{\infty} \frac{e^{-t}}{t} \, dt, \qquad x > 0. \qquad (1.12) \]

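The appearance of the Euler constant in the expression for \( E\log M_T \) has a simple explanation in the fixed-sample case. If X is Pareto with threshold h and exponent β, then \( \log(X/h) \) is exponential with rate β, so \( \log(M^{(n)}/h) \) is the maximum of n independent exponential variables. Its expectation is a harmonic sum; this sketch does not reproduce the Poisson-averaged formula of Pisarenko (1998):

\[ E\log\frac{M^{(n)}}{h} = \frac{1}{\beta}\sum_{k=1}^{n}\frac{1}{k} = \frac{1}{\beta}\left(\log n + C\right) + O\!\left(\frac{1}{n}\right). \]

With n replaced by the mean number of events λT, this gives the leading term \( (1/\beta)\log(\lambda T) \) discussed at the end of this section.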
Combining Eqs. (1.8)–(1.12), we derive an upper bound on \( E\log\Sigma_T \):

If λT ≫ 1, then (1.13) can be simplified. Keeping only the terms that grow with λT, we get:

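Now we derive a lower bound for \( E\log\Sigma_T \). If β > 1, the expectation of a single event is finite, and by virtue of the Law of Large Numbers \( \frac{1}{n}\Sigma_T \) tends to this expectation (n being the number of events in time T), so we get: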

If β < 1, we simply drop the first term on the right-hand side of (1.8), which is nonnegative because \( \Sigma_T \ge M_T \), and get:

Relations (1.15) and (1.16) can be combined into a single relation that holds for any β, up to terms of lower order:

From (1.14) and (1.17) it follows that, asymptotically as \( \lambda T \to \infty \) and up to terms of lower order, for any β:

Thus, for β < 1 the random quantities \( \log\Sigma_T \) and \( \log M_T \) are comparable in value, and both grow as \( (1/\beta)\log(\lambda T) \). This can be interpreted as a nonlinear growth of \( \Sigma_T \) and \( M_T \) at the same rate as \( (\lambda T)^{1/\beta} \), since their logarithms are asymptotically proportional. We recall that both random quantities have infinite expectations if β < 1. If β > 1, then \( \Sigma_T \) increases linearly with T (cf. (1.6)), whereas \( M_T \) increases more slowly, as \( T^{1/\beta} \). Relation (1.18) holds for any probability density that decreases asymptotically as a power law.
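These asymptotic statements can be checked numerically. The Monte Carlo sketch below is our own; it uses Pareto sizes with unit threshold and skips realizations with no events, both assumptions of the sketch. It estimates \( E\log\Sigma_T \) and \( E\log M_T \) and divides both by \( \log(\lambda T) \). For β < 1 both normalized values should approach 1/β. For β > 1 the first should approach 1 and the second 1/β, so the sum pulls away from the maximum. Convergence is slow because the neglected terms are of order \( 1/\log(\lambda T) \).

```python
import numpy as np


def log_growth_rates(beta, lam_t, replicates=2_000, seed=0):
    """Return (E log Sigma_T, E log M_T), each divided by log(lam_t).

    Assumptions of this sketch: Poisson(lam_t) events, i.i.d. Pareto sizes
    with unit threshold, realizations without events skipped.
    """
    rng = np.random.default_rng(seed)
    log_sums, log_maxima = [], []
    for _ in range(replicates):
        n = rng.poisson(lam_t)
        if n == 0:
            continue
        x = (1.0 - rng.random(n)) ** (-1.0 / beta)
        log_sums.append(np.log(x.sum()))
        log_maxima.append(np.log(x.max()))
    scale = np.log(lam_t)
    return float(np.mean(log_sums) / scale), float(np.mean(log_maxima) / scale)


if __name__ == "__main__":
    for beta in (0.5, 2.0):
        for lam_t in (1e2, 1e4):
            s, m = log_growth_rates(beta, lam_t, replicates=500)
            print(f"beta={beta}, lambda*T={lam_t:g}: "
                  f"log-sum rate {s:.2f}, log-max rate {m:.2f}, 1/beta = {1 / beta:.2f}")
```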

References

Embrechts P, Klueppelberg C, Mikosch T (1997) Modelling extremal events. Springer, Berlin

Gumbel EJ (1958) Statistics of extremes. Columbia University Press, New York

Osipov VI (2002) Natural hazards control. Vestnik RAN 8:678–686 (in Russian)

Pisarenko VF (1998) Non-linear growth of cumulative flood losses with time. Hydrol Process 12:461–470

Pisarenko VF, Rodkin MV (2010) Heavy-tailed distributions in disaster analysis. Springer, Dordrecht

Reiss RD, Thomas M (1997) Statistical analysis of extreme values. Birkhäuser, Basel


Copyright information

© 2014 The Author(s)

About this chapter

Cite this chapter

Pisarenko, V.F., Rodkin, M.V. (2014). Heavy-Tailed Distributions and Their Properties. In: Statistical Analysis of Natural Disasters and Related Losses. SpringerBriefs in Earth Sciences. Springer, Cham. https://doi.org/10.1007/978-3-319-01454-8_1


DOI: https://doi.org/10.1007/978-3-319-01454-8_1


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-01453-1

Online ISBN: 978-3-319-01454-8

eBook Packages: Earth and Environmental Science, Earth and Environmental Science (R0)