Abstract

Industry 4.0 is reshaping manufacturing by seamlessly integrating data acquisition, analysis, and modeling, creating intelligent and interconnected production ecosystems. Driven by cyber-physical systems, the Internet of Things (IoT), and advanced analytics, it enables real-time monitoring, predictive maintenance, adaptable production, and enhanced customization. By combining data from sensors, machines, and human inputs, Industry 4.0 provides holistic insights, resulting in higher efficiency and optimized resource allocation. Deep Learning (DL), a crucial facet of artificial intelligence, plays a pivotal role in this transformation. This article covers DL fundamentals and five prominent architectures, namely Autoencoders, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Generative Adversarial Networks (GANs), and Deep Reinforcement Learning, discussing their functions and applications. It also explains the key DL components that determine network efficacy: neurons, layers, activation functions, weights, biases, loss functions, and optimizers. The piece outlines Industry 4.0’s principles: interoperability, virtualization, decentralization, real-time capability, service orientation, and modularity. It highlights DL’s diverse applications within Industry 4.0 domains, including predictive maintenance, quality control, resource optimization, logistics, process enhancement, energy efficiency, and personalized production. Despite its transformative potential, implementing DL in manufacturing poses challenges: data quality and quantity, model interpretability, computational demands, and scalability. The article anticipates future trends, emphasizing explainable AI, federated learning, edge computing, and collaborative robotics. In conclusion, DL’s integration with Industry 4.0 heralds a major paradigm shift in manufacturing, fostering adaptive, efficient, and data-driven production ecosystems.
Despite these challenges, the article envisions a future in which Industry 4.0, empowered by DL’s capabilities, ushers in a new era of production excellence, transparency, and collaboration.




1 Introduction

Industry 4.0, recognized as a significant advancement in the manufacturing sector, revolves around the use of data and models in industrial contexts through data acquisition, analysis, and application [8]. Unlike its predecessors, Industry 4.0 places a distinct emphasis on combining cyber-physical systems, the Internet of Things (IoT), and sophisticated data analytics. This integration creates intelligent, interconnected manufacturing ecosystems and enables real-time monitoring, predictive maintenance, flexible production, and enhanced customization. By harnessing data from various sources within the manufacturing process, including sensors, machines, and human inputs, Industry 4.0 provides a holistic view of operations, leading to improved efficiency, reduced downtime, and optimized resource allocation. The significance of Industry 4.0 lies in its potential to revolutionize manufacturing by ushering in a new era of agility, adaptability, and efficiency. It addresses the limitations of traditional manufacturing, in which manual data collection, isolated processes, and reactive approaches predominated. With Industry 4.0, manufacturers can transition towards proactive, data-driven strategies that allow them to respond swiftly to changing market demands, reduce waste, and enhance overall productivity. Roughly two and a half centuries after the onset of the first industrial revolution, we are now on the cusp of the fourth. Central to Industry 4.0 is the fusion of digitalization and integration within manufacturing and logistics, facilitated by the internet and “smart” objects. Given the increasing complexity of modern industrial challenges, intelligent systems are imperative, and deep learning, a branch of machine learning within Artificial Intelligence (AI), has emerged as a key player. While the field of Deep Learning is expansive and continually evolving, this discussion centers on its most prominent techniques.
The methods covered include Convolutional Neural Networks, Autoencoders, Recurrent Neural Networks, Deep Reinforcement Learning, and Generative Adversarial Networks. These methods collectively represent a powerful toolkit for driving the industrial landscape towards the possibilities of the fourth industrial revolution.

2 Fundamentals of Deep Learning

2.1 Overview of Deep Learning Neural Networks

Deep learning is a vast field that offers some of the most promising methods in AI. Of its many techniques, this section focuses on the most prominent ones [5].

1. Convolutional Neural Networks (CNNs): CNNs have demonstrated significant prowess in image-related tasks [10]. Their capabilities shine particularly in image classification, object detection, semantic segmentation, and human pose estimation. Techniques such as the Rectified Linear Unit (ReLU) nonlinearity, dropout, and data augmentation have led to notable improvements in the performance of CNN models.

2. Autoencoders (AEs): Autoencoders are designed for representation learning. They consist of an encoder that compresses the input into a compact set of features and a decoder that reconstructs the input from those features. Autoencoders find applications in dimensionality reduction, anomaly detection, data denoising, and information retrieval.

3. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM): RNNs maintain a memory of past states, which makes them suitable for sequential data. LSTMs, a type of RNN, address the problem of learning long-term dependencies and are widely used for sequence tasks such as language modelling and speech recognition.

4. Deep Reinforcement Learning (RL): Deep RL agents learn by interacting with an environment to maximize cumulative reward, using deep neural networks to represent their policies or value functions. Applications in Industry 4.0 include robotics, optimization, control, and monitoring tasks.

5. Generative Adversarial Networks (GANs): GANs consist of a generator and a discriminator that are trained against each other, so that the generator learns to create data that is hard to distinguish from real data. GANs find applications in image-to-image translation, text-to-image synthesis, video generation, 3D object generation, music composition, and medical imaging.

These deep learning neural networks collectively offer a diverse range of capabilities, driving innovation and progress in Industry 4.0 and many other fields.
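To make the encoder/decoder idea and its use for anomaly detection concrete, the following is a minimal sketch, not a production model. It assumes two correlated sensor readings recorded during normal operation; the tiny linear autoencoder (two inputs, one latent unit, no activation function), the hand-picked initial weights, and the function names are hypothetical simplifications. Readings that the trained model reconstructs poorly are candidates for anomalies.

```python
import random


def train_linear_autoencoder(data, lr=0.05, epochs=200):
    """Train a tiny linear autoencoder (2 inputs -> 1 latent unit -> 2 outputs) with SGD.

    Encoder: z = enc . x; decoder: x_hat = z * dec.
    Returns the encoder weights, decoder weights, and the mean loss of each epoch.
    """
    enc = [0.5, 0.1]  # hand-picked starting values keep the sketch deterministic
    dec = [0.1, 0.5]
    history = []
    for _ in range(epochs):
        total = 0.0
        for x in data:
            z = enc[0] * x[0] + enc[1] * x[1]          # encode: compress to one value
            x_hat = [z * dec[0], z * dec[1]]           # decode: reconstruct the input
            err = [x_hat[i] - x[i] for i in range(2)]  # reconstruction residual
            total += err[0] ** 2 + err[1] ** 2
            # Gradients of the squared reconstruction error (computed before any update).
            grad_dec = [2 * err[i] * z for i in range(2)]
            dz = 2 * (err[0] * dec[0] + err[1] * dec[1])
            grad_enc = [dz * x[j] for j in range(2)]
            for i in range(2):
                dec[i] -= lr * grad_dec[i]
                enc[i] -= lr * grad_enc[i]
        history.append(total / len(data))
    return enc, dec, history


def reconstruction_error(x, enc, dec):
    """Squared error between an input and its reconstruction (the anomaly score)."""
    z = enc[0] * x[0] + enc[1] * x[1]
    return sum((z * dec[i] - x[i]) ** 2 for i in range(2))


# Hypothetical "healthy" data: two sensors that move together, plus small noise.
rng = random.Random(0)
normal_data = []
for _ in range(50):
    t = rng.uniform(-1.0, 1.0)
    normal_data.append([t, t + rng.gauss(0.0, 0.05)])

enc, dec, history = train_linear_autoencoder(normal_data)
print(reconstruction_error([0.5, 0.5], enc, dec))   # consistent with training data: small
print(reconstruction_error([1.0, -1.0], enc, dec))  # sensors disagree: large, flag it
```

In practice, autoencoders are deeper and non-linear, are built with a framework such as PyTorch or TensorFlow, and the alarm threshold on the reconstruction error would be chosen from validation data.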

2.2 The Foundations of Deep Learning

Looking more closely at the field, the key components of a neural network are neurons, layers, activation functions, weights, biases, loss functions, and optimizers. A schematic view of a deep learning model is given in Fig. 1.

1. Neurons: The neuron is the basic unit of a neural network. Neurons are interconnected and work together to process information. Each neuron receives a number of inputs, each multiplied by a corresponding weight. The weighted inputs are summed, a bias is added, and the result is passed through an activation function to produce the neuron’s output.

2. Layers: Neurons are arranged into distinct layers. The input layer, the first tier, ingests the raw data, and the output layer, the final tier, produces the network’s output. Between them, one or more hidden layers transform the data.

3. Activation functions: Activation functions introduce non-linearity into neural networks by making a neuron’s output a non-linear function of its inputs. This matters because, without it, any stack of layers would collapse into a single linear (affine) transformation; non-linearity is what allows neural networks to capture intricate relationships between input and output data. Figure 2 shows some widely used activation functions.

4. Weights: The weights in a neural network are attached to the connections between neurons and determine how much influence each input has on a neuron’s output. During training, the weights are adjusted to minimize the discrepancy between predicted and actual outputs.

5. Bias: The bias is a learnable parameter added to the weighted sum of a neuron’s inputs before the activation function is applied. It shifts the point at which the activation function responds, so the neuron can produce a useful output even when its weighted inputs sum to zero.

6. Loss function: The loss function measures the discrepancy between the predicted and the actual output. It guides training, because it is the quantity that the network’s parameters are adjusted to reduce.

7. Optimizer: The optimizer is the algorithm, for example stochastic gradient descent (SGD) or Adam, that updates the weights and biases of the neural network to minimize the loss function.
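The components listed above fit together as follows in a single neuron. The sketch below is a minimal, framework-free illustration under simplifying assumptions (one neuron, sigmoid activation, squared-error loss, plain gradient descent with a hypothetical learning rate of 0.5); real networks apply the same steps to whole layers using automatic differentiation in libraries such as PyTorch or TensorFlow. The input values, weights, and target are made up for illustration.

```python
import math


def sigmoid(z):
    """Squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Rectified linear unit: passes positive values, zeroes out negative ones."""
    return max(0.0, z)


def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus the bias, passed through an activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def mse(predictions, targets):
    """Mean squared error loss."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def sgd_step(inputs, target, weights, bias, lr=0.5):
    """One plain gradient-descent update for a single sigmoid neuron under squared error."""
    y = neuron_output(inputs, weights, bias)
    # Chain rule: d(y - t)^2/dz = 2 (y - t) * y (1 - y) when y = sigmoid(z).
    delta = 2 * (y - target) * y * (1 - y)
    new_weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * delta  # dz/d(bias) = 1
    return new_weights, new_bias


x, target = [1.0, 0.5], 1.0
w, b = [0.2, -0.4], 0.0
loss_before = mse([neuron_output(x, w, b)], [target])  # output 0.5, loss 0.25
w, b = sgd_step(x, target, w, b)
loss_after = mse([neuron_output(x, w, b)], [target])   # smaller than before
print(loss_before, loss_after)
```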

The performance of a neural network can be significantly influenced by critical hyperparameters, including the number of layers, the number of neurons in each layer, the choice of activation functions, and the learning rate of the optimizer. These hyperparameters are typically determined through iterative experimentation and refinement, and their optimal values vary with the specific problem and dataset. As a result, a thorough exploration of different configurations is often necessary to attain the best performance for a given task.
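A common way to carry out this exploration is an exhaustive grid search over candidate values, scoring each configuration on held-out validation data. The sketch below is illustrative only: a one-variable linear model trained by gradient descent stands in for a neural network, and the grid, data, and function names are hypothetical. Libraries such as scikit-learn provide a ready-made version (`GridSearchCV`).

```python
import itertools


def train_and_validate(lr, epochs, train, val):
    """Fit y = w*x + b by full-batch gradient descent; return the validation MSE."""
    w, b = 0.0, 0.0
    n = len(train)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in train) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in train) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return sum((w * x + b - y) ** 2 for x, y in val) / len(val)


def grid_search(grid, train, val):
    """Score every combination of hyperparameter values; return the best config and all scores."""
    names = list(grid)
    scores = {}
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        scores[values] = train_and_validate(train=train, val=val, **config)
    best_values = min(scores, key=scores.get)  # lowest validation error wins
    return dict(zip(names, best_values)), scores


# Hypothetical data generated from y = 2x + 1.
train = [(x, 2 * x + 1) for x in (0.0, 0.25, 0.5, 0.75, 1.0)]
val = [(x, 2 * x + 1) for x in (0.1, 0.6, 0.9)]
grid = {"lr": [0.01, 0.1, 0.5], "epochs": [10, 100]}
best, scores = grid_search(grid, train, val)
print(best)
```

Grid search is simple but its cost grows multiplicatively with every added hyperparameter, which is why random search or Bayesian optimization is often preferred for larger networks.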

3 Industry 4.0: The Fourth Industrial Revolution

Industry 4.0 is the name given to the current trend of automation and data exchange in manufacturing technologies [2]. It is characterized by the use of cyber-physical systems (CPS), the Internet of Things (IoT), cloud computing, and Artificial Intelligence (AI) [14]. These technologies are converging to create a more connected, intelligent, and efficient manufacturing environment. The core principles of Industry 4.0, shown in Fig. 3, are:

- Interoperability: The ability of different systems and devices to communicate and exchange data.
- Virtualization: The creation of a virtual representation of the physical world.
- Decentralization: The distribution of control and decision-making to the edge of the network.
- Real-time capability: The ability to collect, analyze, and act on data in real time.
- Service orientation: The provision of services as a way to interact with and manage systems.
- Modularity: The ability to easily add, remove, or replace components.

Automation, the IoT, and data-driven decision-making are three key drivers of the fourth industrial revolution, also known as Industry 4.0. They are converging to create a more connected, intelligent, and efficient manufacturing environment. By automating tasks, collecting data, and using that data to make decisions, manufacturers can improve their productivity, quality, and profitability.

Automation is the use of machines and software to perform tasks that would otherwise be done by humans. In manufacturing, automation can take over tasks such as welding, painting, and assembly, improving efficiency and productivity and reducing the risk of human error.

The Internet of Things (IoT) is a network of physical objects embedded with sensors, software, and network connectivity that enable them to collect and exchange data. In manufacturing, the IoT can be used to collect data from machines, sensors, and other devices. This data can be used to monitor equipment performance, identify potential problems, and improve efficiency. Beyond manufacturing, the IoT is used in healthcare, for example in remote patient monitoring systems and fitness trackers [3].

Data-driven decision-making is the practice of basing decisions on the analysis of collected data rather than on intuition alone. In manufacturing, it can be used to optimize production processes, improve quality, and reduce costs. For example, data can be used to identify the most efficient way to produce a product, or to predict when a machine is likely to fail.

4 Deep Learning Techniques and Architectures

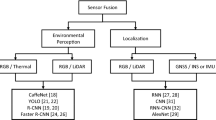

Continuing from Sect. 2, several deep learning techniques and architectures, including CNNs, RNNs, and GANs, are listed in Fig. 4 and discussed below [5]. Table 1 compares CNNs, RNNs, and GANs.

- Convolutional Neural Networks (CNNs) are a category of deep learning algorithms widely applied to tasks such as image classification, object detection, and segmentation. These networks draw inspiration from the human visual cortex and learn to identify the image features relevant to a given task. Structurally, a CNN consists of a sequence of specialized layers, each with a distinct role [1]. The first, the convolutional layer, applies a convolution operation to the input image, extracting attributes such as edges and textures. The output of the convolutional layer is then passed to a pooling layer, which downsamples the feature maps to reduce their dimensions and help mitigate overfitting.

Next, the output of the pooling layer is passed through a sequence of fully connected layers, which learn to classify the features extracted by the convolutional layers. Finally, an output layer produces the predicted class label for the input image. CNNs have proven highly effective across image processing tasks such as object classification, object detection, and image segmentation, and their scope extends beyond images to domains such as natural language processing and speech recognition.

- Recurrent Neural Networks (RNNs) are a class of deep learning algorithms prominently used for sequence tasks in language and speech, such as speech recognition and machine translation [15]. RNNs can model sequential data such as text and speech.

An RNN processes a sequence one element at a time while maintaining a hidden state that acts as memory. At each step, the hidden state from the previous step is fed back into the network together with the current input, forming a loop. This recurrence allows the RNN to capture dependencies between different elements of the sequence; in a typical language-modelling setup, the RNN predicts the next element of the sequence. The RNN is trained by minimizing the error between predicted and actual outputs. RNNs have proven effective in many natural language processing tasks, including speech recognition, language translation, and text generation, and are also used in other domains such as robotics and financial forecasting.

- Generative Adversarial Networks (GANs) are a category of deep learning algorithms primarily used for image generation. The architecture comprises two neural networks, the generator and the discriminator [5]. The generator creates new images, while the discriminator learns to distinguish real images from generated ones [18].

The generator is trained to produce images that are as realistic as possible, while the discriminator is trained to tell genuine images from fabricated ones. A distinctive aspect of GANs is this adversarial training: the two networks compete, with the generator trying to fool the discriminator and the discriminator trying to separate real from synthetic images.

GANs have proven effective at generating lifelike images, including depictions of objects and realistic faces of people who do not exist. Beyond image generation, GANs are also applied in domains such as text and music generation.

More generally, GANs are generative models that can be adapted to produce outputs of many different types. Table 2 lists different types of GANs according to the function they perform.
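The adversarial game described above is usually written, in the standard GAN formulation, as a minimax objective. Here $D(x)$ is the discriminator’s estimated probability that $x$ is real, $G(z)$ is the sample the generator produces from random noise $z$, $p_{\text{data}}$ is the data distribution, and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator maximizes $V$ by assigning high probability to real samples and low probability to generated ones, while the generator minimizes it by making $D(G(z))$ large. In practice, the generator is often trained to maximize $\log D(G(z))$ instead, which gives stronger gradients early in training, when the discriminator easily rejects generated samples.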

These are just three of the many popular deep learning architectures. Other popular architectures include deep belief networks (DBNs), stacked autoencoders, and capsule networks. Deep learning is a rapidly evolving field, and new architectures are being developed all the time.

Deep learning is applied across many industries; the following list shows how it addresses specific Industry 4.0 challenges:

1. Predictive maintenance: Deep learning can be used to predict when machines are likely to fail. This helps prevent unplanned downtime and improves the efficiency of the manufacturing process.

2. Quality control: Deep learning can be used to identify defects in products. This improves product quality and reduces the number of products that must be scrapped.

3. Resource optimization: Deep learning can be used to optimize the use of resources such as energy and materials. This reduces costs and lessens the environmental impact of the manufacturing process.

4. Logistics: Deep learning can be used to optimize manufacturing logistics, such as transportation and warehousing. This reduces costs and improves the efficiency of the supply chain.
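Predictive maintenance with deep learning is often framed as supervised learning on windows of sensor history. A minimal sketch of that preprocessing step follows, assuming a single sensor series sampled at regular intervals and known failure time steps; the function name and the `window` and `horizon` parameters are hypothetical. Each window is labeled 1 if a failure occurs within the next `horizon` steps, and the resulting pairs could then be fed to a sequence model such as an RNN or LSTM.

```python
def make_windows(readings, failure_steps, window=3, horizon=2):
    """Turn a sensor series into (features, label) pairs for failure prediction.

    label = 1 if a failure occurs within `horizon` steps after the window ends, else 0.
    """
    failures = set(failure_steps)
    samples = []
    for end in range(window, len(readings) + 1):
        features = readings[end - window:end]  # the last `window` readings before step `end`
        upcoming = range(end, end + horizon)   # the next `horizon` time steps
        label = int(any(step in failures for step in upcoming))
        samples.append((features, label))
    return samples


# Hypothetical vibration readings; the machine fails at time step 6.
vibration = [0.1, 0.2, 0.2, 0.3, 0.5, 0.9, 1.4, 0.1]
for features, label in make_windows(vibration, failure_steps=[6]):
    print(features, label)  # labels in order: 0, 0, 1, 1, 0, 0
```

In a real pipeline, windows that contain the failure itself or the subsequent repair period would typically be excluded, and the window length and horizon would be chosen from the maintenance lead time actually needed.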

5 Data Acquisition and Preprocessing

Deep learning applications depend fundamentally on data, so an ample supply of data is imperative for both effective training and successful operation. The quality and quantity of that data strongly influence the performance of deep learning models. Data matters for several reasons. First, it is the basis of model training: by learning from extensive sets of labeled data, the model discerns intricate patterns and learns to make predictions. Second, data is essential for evaluating model performance: dedicated test data is used to measure how well the model generalizes to new, unseen data.

Furthermore, data contributes to model refinement, either through the introduction of additional data or through calibration of model parameters, which improves overall model performance. Data quality is critical because poor-quality data prevents the model from learning properly and leads to inaccurate predictions. The accuracy of data labels is equally crucial: mislabeled data can steer the model toward incorrect patterns and undermine its predictive capability. Quantity matters as well, since deep learning models typically require large datasets; insufficient data hampers learning and compromises predictive accuracy.
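Measuring generalization requires keeping some data out of training. A minimal hold-out split is sketched below; the function name, the 20% test fraction, and the fixed seed are illustrative assumptions (scikit-learn offers an equivalent `train_test_split`). For time-ordered sensor data, a chronological split (train on earlier data, test on later data) is usually safer than random shuffling, which can leak information from the future into training.

```python
import random


def holdout_split(samples, test_fraction=0.2, seed=42):
    """Shuffle a copy of the samples and hold out a fraction as an unseen test set."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


train_set, test_set = holdout_split(range(10))
print(len(train_set), len(test_set))  # 8 2
```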

Three characteristics of data are particularly important in deep learning applications, since they shape both model development and predictive outcomes:

- Data quality: The quality of the data is essential for the performance of deep learning models. The data should be clean, accurate, and representative of the problem the model is trying to solve.
- Data quantity: The quantity of the data is also important. Deep learning models need a lot of data to train properly; with too little data, the model cannot learn properly or make accurate predictions.
- Data preprocessing: Data preprocessing is the process of cleaning and preparing the data for training. It includes tasks such as removing noise, correcting errors, and transforming the data into a format the model can use.

In deep learning applications, data quality, quantity, and preprocessing are addressed with specific techniques. Data cleaning removes noise and outliers, which can hinder model training, from the dataset. Data normalization, in the form of z-score standardization, transforms each feature to have a mean of 0 and a standard deviation of 1 [6], which puts features on comparable scales and typically makes training better behaved. Data augmentation generates new training examples from existing ones through transformations such as rotation or cropping, making the model more robust to variations in the data [16]. Finally, feature selection identifies the most informative data attributes, often using statistical tests or machine learning algorithms, which reduces noise and refines model performance. Together, these techniques strengthen the data foundation of deep learning models and enable more accurate outcomes.
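The z-score standardization mentioned above can be sketched in a few lines. This is an illustrative sketch with hypothetical function names and data; the design choice it shows is that the mean and standard deviation are computed on the training data only and then reused for validation, test, and production data, so that no information from unseen data leaks into preprocessing.

```python
import statistics


def fit_standardizer(train_column):
    """Compute the mean and population standard deviation of one feature (training data only)."""
    mean = statistics.fmean(train_column)
    std = statistics.pstdev(train_column)
    return mean, (std if std > 0 else 1.0)  # guard against constant features


def standardize(column, mean, std):
    """Rescale values to z-scores: (value - mean) / std."""
    return [(v - mean) / std for v in column]


train_temps = [20.0, 22.0, 24.0, 26.0, 28.0]  # hypothetical temperature readings
mean, std = fit_standardizer(train_temps)
print(standardize(train_temps, mean, std))  # zero mean, unit standard deviation
print(standardize([30.0], mean, std))       # new readings reuse the training statistics
```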

6 Process Optimization and Energy Efficiency

Deep learning optimizes manufacturing processes through its ability to extract insights, recognize patterns, and make predictions from complex data [8]. By applying advanced neural networks to the data streams generated along the production chain, manufacturers can improve efficiency, quality, and productivity and make better-informed operational decisions.

In the realm of quality control and defect detection, Convolutional Neural Networks (CNNs) have emerged as powerful tools. These networks are adept at scrutinizing visual data, such as images of products or materials, to identify imperfections, anomalies, or deviations from the desired standard. By employing CNNs along assembly lines, manufacturers can detect issues in real time and promptly initiate corrective measures, ensuring that only products meeting stringent quality criteria proceed further in the production process.
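The core CNN operations behind this kind of inspection (convolution, ReLU, and pooling) can be illustrated without a framework. In the sketch below, the 3x3 kernel is hand-set to respond to thin vertical lines, standing in for a scratch detector; in a real CNN the kernel values are learned from labeled images, and the tiny 6x6 "images" and all function names are hypothetical. As in most deep learning libraries, the "convolution" is implemented as cross-correlation (the kernel is not flipped).

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation, stride 1) of a grayscale image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu_map(feature_map):
    """Apply ReLU element-wise."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2x2(feature_map):
    """2x2 max pooling with stride 2 (a trailing odd row or column is dropped)."""
    return [
        [
            max(feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1])
            for j in range(0, len(feature_map[0]) - 1, 2)
        ]
        for i in range(0, len(feature_map) - 1, 2)
    ]


# Hand-set kernel that responds strongly to a thin bright vertical line.
line_kernel = [[-1, 2, -1],
               [-1, 2, -1],
               [-1, 2, -1]]

scratched = [[0, 1, 0, 0, 0, 0] for _ in range(6)]  # bright vertical scratch in column 1
clean = [[0] * 6 for _ in range(6)]                 # defect-free surface

for name, img in (("scratched", scratched), ("clean", clean)):
    pooled = max_pool2x2(relu_map(conv2d(img, line_kernel)))
    print(name, pooled)  # scratched -> [[6, 0.0], [6, 0.0]], clean -> all zeros
```

A non-zero pooled response signals the scratch and also preserves its rough location (the left half of the surface), which is the information later fully connected layers would use for classification.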

Predictive maintenance, another pivotal application, leverages Recurrent Neural Networks (RNNs) to foresee equipment failures. By analyzing data from sensors embedded in machinery, RNNs can predict potential breakdowns before they occur. This proactive approach enables maintenance teams to schedule timely interventions, minimizing downtime and preventing costly production disruptions. Process optimization is yet another domain transformed by deep learning. The technology’s aptitude for deciphering intricate patterns within extensive datasets enables manufacturers to fine-tune parameters influencing production. Deep learning algorithms can analyze factors like temperature, pressure, and material composition, leading to refined processes, reduced waste, and enhanced operational efficiency.
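The recurrence that lets an RNN use sensor history can be sketched as follows. This is a minimal illustration, not a trained predictive-maintenance model: it uses a single hidden unit, a tanh activation, and hand-picked, hypothetical weights. Each new hidden state combines the current reading with the previous state, so the final state summarizes the whole sequence and, in a full model, would feed an output layer that estimates failure risk.

```python
import math


def rnn_forward(sequence, w_x, w_h, b):
    """Run a one-unit Elman RNN over a sequence of scalar readings.

    Returns the hidden state after each time step.
    """
    h = 0.0  # initial hidden state: the network's "memory"
    states = []
    for x in sequence:
        # New state depends on the current input AND the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights; the same readings in a different order give a different final state.
recent_spike = rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)
early_spike = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print(recent_spike[-1], early_spike[-1])  # about 0.762 vs. about 0.180
```

The fading influence of the early spike illustrates why plain RNNs struggle with long-term dependencies, which LSTMs address with gating.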

Supply chain management is further optimized by deep learning’s predictive capabilities. Recurrent Neural Networks excel in forecasting demand by analyzing historical trends, market shifts, and other pertinent data points. Manufacturers can align production schedules and resource allocation more accurately, thereby reducing excess inventory and streamlining operations. Moreover, deep learning contributes to energy efficiency efforts. By scrutinizing energy consumption patterns, models can propose strategies for optimizing energy usage. This might involve scheduling energy-intensive tasks during periods of lower demand or recommending adjustments to equipment settings to minimize energy consumption.
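Once a model has forecast energy prices or demand for the coming hours, turning that forecast into a schedule can be as simple as the sketch below. It is a hypothetical simplification, not a method from this paper: every task is assumed to take exactly one hour, each hour can host one task, and all tasks must run. Under these assumptions, giving the most energy-intensive tasks the cheapest hours minimizes total cost (by the rearrangement inequality); real schedulers must also respect precedence, deadlines, and machine constraints.

```python
def schedule_tasks(task_energy, hourly_price):
    """Assign one-hour tasks to hours so that the largest loads get the cheapest hours.

    task_energy: {task name: energy use in kWh}
    hourly_price: {hour of day: forecast price per kWh}, e.g. from a forecasting model
    Returns ({task name: hour}, total energy cost).
    """
    if len(task_energy) > len(hourly_price):
        raise ValueError("more tasks than available hours")
    tasks = sorted(task_energy, key=task_energy.get, reverse=True)  # biggest load first
    hours = sorted(hourly_price, key=hourly_price.get)              # cheapest hour first
    plan = dict(zip(tasks, hours))
    cost = sum(task_energy[t] * hourly_price[h] for t, h in plan.items())
    return plan, cost


# Hypothetical tasks and forecast prices.
tasks = {"heat_treatment": 50.0, "milling": 20.0, "inspection": 5.0}
forecast = {6: 0.30, 14: 0.25, 22: 0.12, 2: 0.10}
plan, cost = schedule_tasks(tasks, forecast)
print(plan)            # {'heat_treatment': 2, 'milling': 22, 'inspection': 14}
print(round(cost, 2))  # 8.65
```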

With demand for customized products increasing, deep learning helps fulfill individual preferences efficiently. By analyzing customer data and production constraints, models can suggest configurations that match customer requirements while maintaining production efficiency. Deep learning can also provide deeper insight into the root causes of quality issues or process failures: by analyzing data across production stages, models can reveal correlations and patterns associated with problems, supporting continuous improvement initiatives.

7 Challenges and Limitations

The implementation of deep learning in manufacturing processes is accompanied by notable challenges and limitations. Acquiring sufficient and accurate data, a prerequisite for effective model training, remains a hurdle, particularly for rare events or intricate processes. The opacity of deep learning models, due to their complex architectures, raises concerns about interpretability, which is critical for regulatory compliance and troubleshooting. High computational demands for training hinder accessibility, particularly for smaller manufacturers, while ensuring model generalization and scalability across diverse environments proves difficult. Data security and privacy concerns arise when proprietary manufacturing data is shared for model development. Additionally, real-time processing requirements must be balanced against model inference time, and vigilance is needed to prevent biased decisions originating from biased training data. Ultimately, managing these challenges while achieving a positive return on investment remains pivotal for the successful integration of deep learning in manufacturing.

8 Future Trends

In the forthcoming landscape of manufacturing, the application of deep learning is projected to usher in a series of transformative trends that promise to redefine industry practices. A notable trend on the horizon is the advancement of explainable AI, a response to the increasing complexity of deep learning models. This trajectory emphasizes the development of techniques that provide transparent insights into the decision-making processes of these models. By unraveling the rationale behind predictions, explainable AI aims to cultivate trust among stakeholders, enabling them to comprehend and endorse the reasoning driving these AI-driven outcomes. This will be crucial in sectors where accountability, regulatory compliance, and user confidence are paramount.
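Explainable AI covers many techniques; one simple, model-agnostic example is permutation feature importance, sketched below. It is named here as an illustration, not as a method prescribed by this paper: shuffle one input feature at a time and measure how much the model’s error increases, since a feature whose shuffling barely changes the error contributes little to the predictions. The model, feature meanings, and data are hypothetical; scikit-learn provides a full implementation as `sklearn.inspection.permutation_importance`.

```python
import random


def permutation_importance(predict, rows, targets, n_features, n_repeats=5, seed=0):
    """Mean increase in MSE when each feature column is shuffled (larger = more important)."""
    rng = random.Random(seed)

    def mse(data):
        return sum((predict(r) - t) ** 2 for r, t in zip(data, targets)) / len(targets)

    baseline = mse(rows)
    importances = []
    for j in range(n_features):
        increase = 0.0
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increase += mse(permuted) - baseline
        importances.append(increase / n_repeats)
    return importances


# Hypothetical "model": failure score driven by vibration (feature 0), ignoring humidity (feature 1).
def model(row):
    return 3.0 * row[0]


rows = [[0.1 * i, 0.5 + 0.05 * i] for i in range(10)]
targets = [model(r) for r in rows]
print(permutation_importance(model, rows, targets, n_features=2))  # feature 1 scores 0.0
```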

Concerns surrounding data privacy and security have fueled the rise of federated learning. This innovative approach allows models to be collaboratively trained across multiple devices or locations while keeping the underlying data decentralized. In the manufacturing context, where proprietary data is sensitive, federated learning stands as a solution to drive collective learning while safeguarding data privacy. This trend is poised to reshape how manufacturers harness the power of deep learning while respecting data confidentiality.
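The aggregation step at the heart of federated learning can be sketched with the Federated Averaging (FedAvg) rule: each site trains the model on its own data and sends back only the resulting parameters, and a coordinating server averages them, weighting each site by the size of its local dataset. The sketch covers only this server-side averaging; local training, communication, and secure aggregation are omitted, and the function name and numbers are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg aggregation: average parameter vectors, weighted by local dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client model")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[k] * size for weights, size in zip(client_weights, client_sizes)) / total
        for k in range(n_params)
    ]


# Three hypothetical plants; raw production data never leaves each site, only these parameters do.
plant_models = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
plant_samples = [100, 100, 200]
print(federated_average(plant_models, plant_samples))  # [3.5, 4.5]
```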

The synergy between deep learning and edge computing is set to play a pivotal role in manufacturing’s future. With the growing capabilities of edge devices, the deployment of deep learning models directly at the data source is becoming increasingly viable. This paradigm shift enables real-time analysis and decision-making at the edge, circumventing the need to transmit massive data volumes to central servers. The outcome is reduced latency, enhanced operational efficiency, and the potential to react swiftly to critical events, underscoring the transformative potential of edge-driven deep learning applications in manufacturing.

Integration with the Internet of Things (IoT) is another key trend that will reshape manufacturing. Deep learning models integrated with IoT devices will bring about highly accurate predictive maintenance and optimization capabilities. Sensors embedded within manufacturing equipment will continuously feed data to these models, enabling early identification of potential issues and averting costly downtimes. This seamless connection between devices and AI models is poised to elevate manufacturing efficiency to new heights, offering an intelligent and proactive approach to maintenance and resource utilization.

Furthermore, deep learning’s impact on collaborative robotics (cobots) is set to expand. Future developments in machine learning algorithms will facilitate more profound insights into human behavior, enabling cobots to interact more safely and efficiently with human counterparts on the factory floor. Enhanced human-machine collaboration will foster an environment where automation and human expertise harmonize, optimizing productivity and safety in manufacturing processes.

An overarching theme that is expected to define the future of deep learning in manufacturing is cross-disciplinary collaboration. AI experts, domain specialists, and manufacturers will increasingly join forces to tailor deep learning solutions to the unique challenges of the industry. This multidisciplinary approach promises to fuel innovation, driving the industry towards smarter, adaptive manufacturing systems that address challenges and seize opportunities across diverse sectors.

As these future trends unfold, the manufacturing landscape is poised to witness a profound transformation driven by the capabilities of deep learning. From transparency and privacy considerations to real-time edge analysis, IoT integration, and collaborative robotics, deep learning is on the brink of revolutionizing manufacturing practices in ways that were once only imagined. Through careful navigation of these trends, industries stand to gain a competitive edge and unlock new avenues of growth and efficiency in the Industry 4.0 era.

9 Conclusion

In the dynamic landscape of Industry 4.0, the amalgamation of data-driven technologies has paved the way for transformative shifts in manufacturing. Deep Learning, as a cornerstone of this revolution, has illuminated avenues that were once only conceivable in the realm of science fiction. The fusion of cyber-physical systems, IoT, and advanced analytics has ignited a paradigm shift from traditional manufacturing methodologies towards a future brimming with agility, adaptability, and efficiency. As we delve into the depths of deep learning techniques and architectures, it becomes evident that these methods hold the power to unravel complexities, discern patterns, and predict outcomes in ways that were previously unattainable.

Convolutional Neural Networks have empowered the identification of defects and quality deviations, opening the door to real-time intervention and elevated product excellence. Recurrent Neural Networks, with their sequential analysis prowess, have unlocked the realm of predictive maintenance, minimizing downtimes and optimizing resource allocation. Generative Adversarial Networks have transcended the boundaries of imagination, enabling the generation of synthetic data that parallels reality. These profound advancements, bolstered by the principles of data quality, quantity, and preprocessing, are propelling the industry towards a new era of production excellence.

However, these leaps are not without their challenges. Data remains both the fuel and the bottleneck of deep learning applications, necessitating a delicate balance between quality, quantity, and privacy concerns. The complexity of deep learning models raises interpretability issues, requiring innovative approaches to ensure transparent decision-making. Moreover, the computational demands and scalability concerns must be addressed to democratize the benefits of this technology across the manufacturing spectrum. As we forge ahead, the synthesis of innovative solutions and collaborative endeavors is essential to surmount these obstacles and reap the rewards of deep learning integration.

The future holds promises that extend beyond the horizon. Explainable AI is poised to infuse transparency into the decision-making processes, fostering trust and accountability. Federated learning is set to revolutionize data privacy by enabling collective learning while preserving sensitive information. Edge computing, in tandem with IoT integration, is propelling us towards real-time insights at the source, rendering processes swift and responsive. Collaborative robotics is poised for safer, more efficient interactions, where human expertise and automation harmonize seamlessly. Cross-disciplinary collaboration, the cornerstone of innovation, will orchestrate the rise of smarter, adaptive manufacturing systems, creating a future where technology is harnessed to its fullest potential.

As we stand at the confluence of human ingenuity and technological capability, the journey towards a deeply integrated Industry 4.0 is underway. Through perseverance, collaboration, and a commitment to overcoming its challenges, the fusion of deep learning and manufacturing promises a future that is not only intelligent and efficient but also profoundly transformative. The fourth industrial revolution is not a distant concept; it is unfolding now, driven by deep learning and the wide range of possibilities it opens up for manufacturing.

References

Albawi, S., Mohammed, T.A., Al-Zawi, S.: Understanding of a convolutional neural network. In: 2017 International Conference on Engineering and Technology (ICET), pp. 1–6 (2017). https://doi.org/10.1109/ICEngTechnol.2017.8308186

Bali, V., Bhatnagar, V., Aggarwal, D., Bali, S., Diván, M.J.: Cyber-Physical, IoT, and Autonomous Systems in Industry 4.0. CRC Press, Boca Raton (2021)

Bhat, O., Gokhale, P., Bhat, S.: Introduction to IoT. Int. Adv. Res. J. Sci. Eng. Technol. 5(1), 41–44 (2018). https://doi.org/10.17148/IARJSET.2018.517

Zhang, H., et al.: StackGAN: text to photo-realistic image synthesis with stacked generative adversarial networks. In: 2017 IEEE International Conference on Computer Vision (ICCV), pp. 5908–5916. IEEE (2017)

Hernavs, J., Ficko, M., Berus, L., Rudolf, R., Klančnik, S.: Deep learning in industry 4.0 - brief. J. Prod. Eng. 21(2), 1–5 (2018). https://doi.org/10.24867/JPE-2018-02-001

Jamal, P., Ali, M., Faraj, R.H.: Data normalization and standardization: a technical report. In: Machine Learning Technical Reports, pp. 1–6. Machine Learning Lab, Koya University (2014). https://doi.org/10.13140/RG.2.2.28948.04489

Yang, L.-C., Chou, S.-Y., Yang, Y.-H.: MidiNet: a convolutional generative adversarial network for symbolic-domain music generation. In: Proceedings of the 18th International Society for Music Information Retrieval Conference (ISMIR) (2017)

May, M.C., Neidhöfer, J., Körner, T., Schäfer, L., Lanza, G.: Applying natural language processing in manufacturing. In: Procedia CIRP, pp. 184–189. Elsevier B.V. (2022). https://doi.org/10.1016/j.procir.2022.10.071

Menon, A., Mehrotra, K., Mohan, C.K., Ranka, S.: Characterization of a class of sigmoid functions with applications to neural networks. Neural Netw. 9(5), 819–835 (1996). https://doi.org/10.1016/0893-6080(95)00107-7

O’Shea, K., Nash, R.: An introduction to convolutional neural networks (2015). https://doi.org/10.48550/arXiv.1511.08458

Schlegl, T., Seeböck, P., Waldstein, S.M., Schmidt-Erfurth, U., Langs, G.: Unsupervised anomaly detection with generative adversarial networks to guide marker discovery. In: Niethammer, M., et al. (eds.) IPMI 2017. LNCS, vol. 10265, pp. 146–157. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-59050-9_12

Shah, P., Sekhar, R., Kulkarni, A., Siarry, P.: Metaheuristic Algorithms in Industry 4.0. Advances in Metaheuristics. CRC Press (2021). https://books.google.co.in/books?id=7jc8EAAAQBAJ

Sharma, S., Sharma, S., Athaiya, A.: Activation functions in neural networks. Int. J. Eng. Appl. Sci. Technol. 4, 310–316 (2020). https://www.ijeast.com

Sony, M., Naik, S.: Key ingredients for evaluating industry 4.0 readiness for organizations: a literature review. Benchmark. Int. J. 27(7), 2213–2232 (2020). https://doi.org/10.1108/BIJ-09-2018-0284

Tarwani, K., Edem, S.: Survey on recurrent neural network in natural language processing. Int. J. Eng. Trends Technol. 48(6), 301–304 (2017). https://doi.org/10.14445/22315381/IJETT-V48P253

Van Dyk, D.A., Meng, X.L.: The art of data augmentation. J. Comput. Graph. Stat. 10(1), 1–50 (2001). https://doi.org/10.1198/10618600152418584

Vondrick, C., Pirsiavash, H., Torralba, A.: Generating videos with scene dynamics. In: Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 29. Curran Associates, Inc. (2016)

Wang, K., Gou, C., Duan, Y., Lin, Y., Zheng, X., Wang, F.Y.: Generative adversarial networks: introduction and outlook. IEEE/CAA J. Automatica Sinica 4(4), 588–598 (2017). https://doi.org/10.1109/JAS.2017.7510583

Wu, J., Zhang, C., Xue, T., Freeman, B., Tenenbaum, J.: Learning a probabilistic latent space of object shapes via 3D generative-adversarial modeling. In: Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R. (eds.) Advances in Neural Information Processing Systems, vol. 29. Curran Associates, Inc. (2016)

Zhu, J.Y., Park, T., Isola, P., Efros, A.A.: Unpaired image-to-image translation using cycle-consistent adversarial networks. In: 2017 IEEE International Conference on Computer Vision (ICCV), pp. 2242–2251. IEEE (2017)

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

Cite this paper

Agrawal, K., Nargund, N. (2024). Deep Learning in Industry 4.0: Transforming Manufacturing Through Data-Driven Innovation. In: Devismes, S., Mandal, P.S., Saradhi, V.V., Prasad, B., Molla, A.R., Sharma, G. (eds) Distributed Computing and Intelligent Technology. ICDCIT 2024. Lecture Notes in Computer Science, vol 14501. Springer, Cham. https://doi.org/10.1007/978-3-031-50583-6_15

DOI: https://doi.org/10.1007/978-3-031-50583-6_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-50582-9

Online ISBN: 978-3-031-50583-6

eBook Packages: Computer Science, Computer Science (R0)