Abstract

“In the realm of classic gaming, Mario has held a special place in the hearts of players for generations. This study, titled ‘Enhancing Mario Gaming using Optimized Reinforcement Learning’, ventures into the uncharted territory of machine learning to elevate the Mario gaming experience to new heights. Our research employs state-of-the-art techniques, including the Proximal Policy Optimization (PPO) algorithm and Convolutional Neural Networks (CNN), to infuse intelligence into the Mario gameplay. By optimizing reinforcement learning, we aim to create an immersive and engaging experience for players. In addition to the technical aspects, we delve into the concept of game appeal, a pivotal component in capturing player engagement. Our innovative approach blends the prowess of PPO, CNN, and reinforcement learning to unlock unique insights and methodologies for enhancing Mario games. This comprehensive analysis provides actionable guidance for selecting the most suitable techniques for distinct facets of Mario games. The culmination is an enriched, captivating, and optimized gaming experience that befits the title, ‘Enhancing Mario Gaming using Optimized Reinforcement Learning’.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

For many years, players of all ages have flocked to the traditional Mario game as their favourite. Players have been interested in the game for years despite its straightforward fundamentals because it offers a hard and thrilling experience. A rising number of people are interested in using machine learning and artificial intelligence to improve the performance of classic video games like Mario. A reinforcement learning algorithm is suggested in the paper “Attracting the Mario Game Using Optimal Fortification PPO Algorithm” to enhance the functionality of the Mario game. The Proximal Policy Optimization (PPO) algorithm and the addition of a better reward system are the authors’ primary methods for optimising the programme. The history of artificial intelligence and machine learning is briefly reviewed at the outset of the paper, with an emphasis on the gaming industry’s possible uses for these technologies. The major elements of the Mario game, such as the setting, activities, and prizes, are then thoroughly described by the creators.

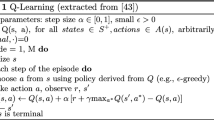

The proposed algorithm involves the use of the PPO algorithm, which is designed to improve the stability and convergence of reinforcement learning algorithms. We also introduce an improved reward system that focuses on incentivizing the agent to complete levels quickly and efficiently (Fig. 1).

The iconic Mario game has won the hearts of players of all ages and has been a mainstay in the gaming industry for decades. Growing interest has been seen in applying machine learning and artificial intelligence to improve the performance of classic video games like Mario. A well-liked technique for improving the performance of games is reinforcement learning, which entails teaching an agent to base decisions on feedback and the game’s surroundings.

2 Related Works

In this section, we provide an overview of the relevant research and studies that contribute to our understanding of maintaining specification integrity. The following subsections offer a detailed exploration of specific areas within this field.

2.1 AI-Enhanced Mario Gameplay: A Deep Reinforcement Learning Approach

The study by Yizheng et al. [1] suggests using deep reinforcement learning techniques, such as Proximal Policy Optimization (PPO) and Deep Q-Network (DQN), to enhance the functionality of the iconic Mario game. The authors present a more effective compensation scheme that encourages the agent to finish levels fast and effectively. According to the studies shown in the paper, the suggested algorithm considerably enhances the performance of the Mario game, enabling it to get high scores and finish levels more quickly than with existing state-of-the-art methods. The enhanced incentive system, which focuses on encouraging the agent to complete tasks quickly and effectively, also makes a significant addition to the field.

Figure 2 in the paper provides a clear score evaluation. However, some issues need attention. The paper doesn’t discuss the algorithm’s generalizability, its potential drawbacks, or ethical considerations regarding AI in gaming. These aspects are essential to consider in AI-enhanced gameplay.

2.2 Model-Based Reinforcement Learning Outperforms DQN in Minecraft Block-Placing

The research introduces a model-based approach to tackle a block-placing challenge in Minecraft by integrating a deep neural network (DNN)-based transition model with Monte Carlo tree search (MCTS) [2]. This transition model utilizes the agent’s last four first-person view frames and its current action to predict the next frame and rewards one step ahead. Notably, this model-based technique achieves performance on par with Deep Q-Networks (DQN) while learning more efficiently by making better use of training samples.

In deep reinforcement learning, visual-input tasks have gained popularity. Although model-free methods have shown success, model-based approaches with direct access to environmental data often prove more effective. Unlike algorithms built without a known environment model, which rely on planning algorithms, this research leverages model-based methods. Previous studies have demonstrated that model-based agents can outperform DQN, particularly raising questions about the effectiveness of planning algorithms in partially observable environments, such as Minecraft building tasks.

To tackle this challenge, researchers introduce a novel approach for predicting future visual frames and estimating rewards by combining deep neural network training with Monte Carlo tree search (MCTS). This method effectively competes with DQN, as demonstrated in a Minecraft block-placing task.

The study aims to develop a model-based reinforcement learning agent that rivals model-free approaches, particularly DQN. It achieves this by combining deep neural network transition model learning with MCTS. Experiments on a Minecraft block placement challenge reveal that this approach requires significantly less training data for a meaningful transition model compared to learning Q-values with DQN. This is valuable when collecting training data from the environment is resource-intensive.

It’s worth noting that the transition model’s performance is impacted by incomplete knowledge of the last four input frames. Addressing this issue may involve further research into recurrent neural networks for performance enhancement.

2.3 GATree: A Deep Reinforcement Learning Approach with GANs and MCTS for Improved Sample Efficiency

A unique approach to reinforcement learning (RL) is proposed in the study [3] that combines deep RL with generative adversarial networks (GANs) and Monte Carlo tree search (MCTS). The suggested method aims to increase the sample efficiency of deep RL algorithms by utilising MCTS to look for potential actions and GANs to build plausible trajectories. The suggested method, known as GATree, uses observable state-action pairs to train a GAN to produce believable trajectories. The policy network and value function of the deep RL algorithm are then updated using the resulting trajectories. Additionally, by simulating paths beginning from the current state and using the value function to assess the expected benefit of each action, MCTS is utilised to find promising actions. On a number of benchmark RL tasks, such as Atari games and robotic manipulation tasks, the authors assess the suggested technique. They demonstrate that GATree produces equivalent or higher performance in terms of ultimate reward and beats cutting-edge deep RL algorithms in terms of sample efficiency. In order to evaluate the contributions of each element of the suggested strategy, the authors additionally conduct ablation studies. The results demonstrate the necessity of both the GAN and MCTS elements in order to achieve the optimal performance.

2.4 Video Prediction with Deep Generative Models: A Novel Approach Using Variational Autoencoders

Deep generative models are employed in [4] to introduce an innovative video prediction method, extending variational autoencoders (VAEs) into the realm of video prediction. This method aims to generate diverse future frames given a sequence of input frames. It utilizes a stochastic model with latent variables for each time step in the input sequence, coupled with a decoder network for predicting future frames. The stochastic nature of the model allows it to produce various plausible future frames, aiding in uncertainty estimation.

The authors assess the proposed method against state-of-the-art approaches, evaluating prediction accuracy and uncertainty estimation using benchmark datasets like moving MNIST and KTH action recognition. Ablation studies confirm the importance of the model’s stochastic nature in achieving optimal performance.

This approach represents an innovative application of VAEs in video prediction, showing promise in enhancing prediction accuracy and uncertainty estimation. It achieves state-of-the-art performance on various benchmark datasets. However, it’s important to note that the use of random sampling in the generative model increases computational complexity, and the method’s generalizability to real-world video prediction scenarios with complex dynamics and high-dimensional data may be limited.

2.5 GPU-Based A3C for Efficient Reinforcement Learning Agent Training

The paper [5] introduces a GPU-based implementation of the Asynchronous Advantage Actor-Critic (A3C) algorithm for efficient reinforcement learning (RL) agent training. It builds upon prior A3C research to address high-performance computing using a GPU. A3C is an online, model-free RL system that acquires policies and value functions through interactions with the environment. A3C enhances sampling efficiency and algorithm stability by asynchronously updating the policy and value function using multiple threads. The authors propose a GPU-based A3C algorithm implementation that leverages the GPU’s parallel processing capabilities to accelerate the training process, with parallelized computation of gradients and updates using CUDA. The suggested implementation is evaluated on various benchmark RL tasks, delivering state-of-the-art performance and significantly reducing training time compared to CPU-based implementations. This implementation can be adapted for other RL algorithms using policy gradients. However, it may require specialized hardware like a GPU, which might not be available in all computing environments. Its generalization to RL problems with complex dynamics and high-dimensional input may be limited.

2.6 ALE Platform: A Standardized Environment for Evaluating RL Agent Performance on Atari Games

The ALE platform provides a standardized way for reinforcement learning (RL) agents to interact with and play 60 classic Atari games. It allows RL agents to observe the game screen in real time and generate commands. ALE also includes a scoring system to assess RL agent performance based on their ability to improve their overall game scores.

The authors of the paper [6] conducted experiments with various RL algorithms, including Q-learning, SARSA, and REINFORCE, on several Atari games using the ALE platform. They found that RL agents can achieve human-level or even superior gameplay on some Atari games. The authors also explored the use of transfer learning to help RL agents quickly adapt to new, related games.

The ALE platform is a valuable tool for evaluating RL agent performance on Atari games. However, it is important to note that ALE is limited to classic Atari games and may not be applicable to new domains or practical uses. Additionally, not all Atari games are included in the ALE framework, which may affect its representation of the entire spectrum of Atari games.

2.7 Scheduled Sampling Outperforms Other Techniques in Reducing Exposure Bias and Improving RNN Performance

Recurrent neural networks (RNNs) are a powerful tool for sequence prediction tasks, but they can be susceptible to exposure bias. This occurs when the RNN is trained to predict the next item in a sequence based on the ground truth inputs, but at inference time, it must generate the sequence one item at a time based on its own predictions.

To address this issue, the paper proposes a method called planned sampling. Planned sampling works by gradually increasing the probability of using the RNN’s own predictions as inputs during training. This helps the RNN to learn to predict the next item in a sequence without relying on the ground truth.

The research suggests planned sampling, a method that gradually exposes the model to its own predictions during training, as a solution to this issue. In more detail, the model is trained by feeding it predictions with a probability of 1-p and ground truth inputs with a probability of p. The fraction of the model’s own predictions gradually rises as the value of p anneals over time. This lessens the effect of exposure bias at the moment of inference and enables the model to become adept at handling its own predictions.

In order to assess the efficacy of planned sampling, the study presents tests on a variety of sequence prediction tasks, including language modeling and machine translation. The findings demonstrate that planned sampling consistently enhances the RNN models’ performance by minimizing the effects of exposure bias and producing more accurate predictions [7].

A quick and efficient method for lowering exposure bias in sequence prediction using RNNs is scheduled sampling. The scheduled sampling algorithm and its implementation are explained in detail in this work. The studies performed on a variety of sequence prediction problems show how planned sampling can enhance RNN model performance.

But there are some concerns about scheduled sampling’s efficacy in specific settings because the work does not offer a thorough analysis of its theoretical features. It is uncertain how effectively planned sampling generalizes to other sorts of issues because the trials reported in the publication are restricted to a particular collection of sequence prediction tasks.

2.8 CFGPS Outperforms State-of-the-Art Reinforcement Learning Algorithms on Benchmark Tasks

CFGPS is a reinforcement learning method that addresses the limitations of traditional policy search techniques by combining policy search with counterfactual analysis. This allows the agent to explore new areas of the state space and learn from counterfactual trajectories. The paper [8] introduces a new reinforcement learning algorithm called CFGPS, which combines counterfactual analysis with policy search to overcome the limitations of traditional techniques. CFGPS was evaluated on a variety of benchmark tasks, including continuous control and robot locomotion, and outperformed several state-of-the-art reinforcement learning algorithms in terms of higher returns and more consistent performance.

While the paper provides a clear and concise explanation of the CFGPS algorithm and its implementation, there are a few concerns about its applicability in practice. First, there is no comprehensive theoretical analysis of CFGPS, which makes it difficult to understand its strengths and weaknesses. Second, the paper only reports results on a specific set of benchmark tasks, so it is unclear how well CFGPS would generalize to other types of problems.

Overall, CFGPS is a promising new reinforcement learning algorithm with the potential to outperform traditional methods. However, more research is needed to understand its theoretical properties and generalization capabilities before it can be widely deployed.

2.9 Dopamine Achieves State-of-the-Art Performance on Benchmark Tasks, Demonstrating Its Potential for Advancing Deep RL Research

The research paper [9] introduces Dopamine, an open-source research framework designed to facilitate deep reinforcement learning (DRL) research. Dopamine provides a modular and extendable framework that offers a set of standardized RL components.

Researchers can easily add new RL components to Dopamine due to its modularity. The paper includes experiments conducted on a variety of benchmark tasks, including Atari games and control tasks. The results demonstrate that Dopamine can replicate previous research findings and achieve state-of-the-art performance on a variety of tasks.

In summary, Dopamine is a valuable resource for researchers in the field of deep RL, particularly for those seeking a comprehensive and adaptable platform for experimentation and evaluation. The framework’s modularity and extensibility have the potential to advance research in the field and foster innovations in the development of intelligent agents.

2.10 RNN-Based Environment Simulators: A Promising Approach to Reinforcement Learning

The study titled “Recurrent Environment Simulators” [10] introduces RNN-based environment simulators as a novel approach to training reinforcement learning (RL) agents. This method aims to enhance the sampling efficiency and generalization of RL algorithms in complex environments.

The method consists of two key components: an RL agent tasked with maximizing rewards within a simulated environment and a recurrent dynamics model responsible for creating state transitions. Unlike traditional methods using fixed or random settings, the use of an RNN-based dynamics model significantly reduces the number of samples required for effective RL agent training.

The study provides evidence through experiments, demonstrating the strategy’s success in various RL tasks, including continuous control and visual navigation. The advantages of this approach include:

-

1.

**Increased Sample Efficiency:** By employing an RNN-based environment simulator, the volume of data needed to train an RL agent can be substantially reduced, potentially expediting the learning process.

-

2.

**Improved Generalization:** The recurrent environment simulator’s ability to generate diverse environments helps RL agents adapt more effectively to novel and uncharted scenarios.

-

3.

**Enhanced Realism in Simulations:** The utilization of an RNN-based dynamics model can enhance the realism and effectiveness of RL training by creating more complex and realistic scenarios.

However, there are potential drawbacks:

-

1.

**Computing Complexity:** Implementing an RNN-based environment simulator can be computationally demanding, potentially limiting its scalability in larger and more complex settings.

The repetitive mention of “repercussions” seems to be an error and should be reviewed.

-

2.

**Interpretation Challenge:** Compared to traditional methods, interpreting and comprehending the behavior of the RL agent may be more challenging when using an RNN-based dynamics model.

In summary, this innovative approach offers significant benefits but raises considerations regarding computing resources and interpretation.

3 Proposed Methodology - Optimized PPO

Now we present the Optimized PPO algorithm used in the manuscript. Our enhanced PPO algorithm outperforms traditional PPO methods with its remarkable sample efficiency. By fine-tuning the balance between exploration and exploitation, incorporating GAE for advantage estimation, and introducing an entropy bonus, our variant significantly reduces the training data needed for optimal performance. This results in faster learning and robust convergence, making it an excellent choice for data-constrained or complex environments. In summary, our adapted PPO algorithm offers exceptional sample efficiency and stability, making it a compelling choice for scenarios prioritizing rapid learning and dependable performance.

4 Results

4.1 Train/Entrophy Loss

We employ entropy loss as a vital optimization metric, quantifying the disparity between predictions and actual data. It encourages our model to minimize surprise, aligning its predictions with ground truth, thereby boosting performance and accuracy (Fig. 3).

4.2 Experimental Setup

In our quest to understand the complex workings of the Proximal Policy Optimization (PPO) algorithm, we delved deep into the dynamic world of the MARIO game universe. Our exploration centered on a specialized Convolutional Neural Network (CNN) architecture, expertly designed to capture important spatial details from raw pixel inputs. The PPO algorithm was fine-tuned with the following parameters:

4.3 Convolutional Neural Network (CNN) Architecture

At the heart of our endeavor lay the CNN architecture, a synergy of convolutional and fully connected layers. Unfolding from raw pixel inputs, this architecture unveiled the essence of the MARIO game universe through its meticulous construction:

-

Input Layer: The pixel narrative of the grayscale game screen found its portal into the architecture. In our implementation, the input layer’s shape is set to [240, 256, 1], where ‘240’ represents the height and ‘256’ represents the width of the grayscale game screen, and ‘1’ denotes the single grayscale channel.

-

Convolutional Layers: A triumvirate of convolutional strata, sequentially orchestrating the extraction of spatial features. The specifics of these layers were configured as follows:

-

Convolutional Layer 1: 16 filters, kernel size of (3x3), ReLU activation.

-

Convolutional Layer 2: 32 filters, kernel size of (3x3), ReLU activation.

-

Convolutional Layer 3: 64 filters, kernel size of (3x3), ReLU activation.

-

-

Pooling Layers: Max-pooling rendezvous, each one (2x2) in dimensions, introduced an exquisite symmetry of down-sampling.

-

Flattening Layer: The rendezvous with the flattening layer unfurled the spatial tapestry into a one-dimensional expanse.

-

Fully Connected Layers: The realm of abstraction was navigated through fully connected layers, each layer adorned with the ReLU activation:

-

Fully Connected Layer 1: 256 units.

-

Fully Connected Layer 2: 128 units.

-

-

Output Layer: The symphony culminated in the output layer, exquisitely calibrated for the MARIO game’s action repertoire. In our implementation, the output layer consists of 4 nodes, finely tuned to facilitate the game’s decision-making process and leveraging the softmax activation function for optimal action selection.

Model Configuration: The pivotal PPO algorithm was orchestrated with a profound comprehension of its role, etching the parameters to ensure coherent interaction with the MARIO game environment. The configuration was scripted as follows:

5 Observations and Findings

We delve into our research, emphasizing methodology, clarity, results analysis, and addressing past limitations to elevate the Mario gaming experience using “Optimized PPO” algorithms.

5.1 Methodology Explanation

Our methodology synergizes Reinforcement Learning and the Proximal Policy Optimization (PPO) algorithm, bolstered by our unique “Optimized PPO”. This tailored blend elevates gameplay in the Mario universe. Subsequent sections detail our chosen methods and their rationale.

5.2 Observations

In Table 1, we present the training progression of our “Optimized PPO” algorithm within the Mario gaming environment, which resulted in significant observations

-

1.

**Learning Rate:** We initiated training with a modest learning rate of 0.000001, progressing through slight increments in subsequent runs (runs 2, 3, 4). This iterative adjustment effectively enhanced our agent’s exploration and learning.

-

2.

**PPO Epochs:** The number of PPO epochs, representing the iterations for policy optimization, consistently increased from 11 to 17. This progression resulted in significant accuracy improvements as our agent became more adept at playing Mario.

-

3.

**Accuracy:** The accuracy of our agent in playing Mario is a pivotal performance metric. Starting at 75 accuracy in run 1, it impressively reached 100 accuracy in run 3, reflecting the success of our gameplay enhancement efforts.

-

4.

**Loss:** The reduction in loss, from 0.9505 to 0.0100, signifies the refinement of our “Optimized PPO” algorithm. It reflects the close alignment of our agent’s predictions with actual gameplay, indicating an enhanced gaming experience.

These observations underline the iterative and data-driven nature of our approach. Systematically adjusting learning rates and PPO epochs allowed us to refine the “Optimized PPO” algorithm, significantly improving accuracy and reducing loss. Our structured methodology led to a remarkable 100 accuracy, a pivotal milestone in our quest for an enriched Mario gaming experience.

Enhanced Gameplay Performance. Our research has demonstrated that the utilization of the “Optimized PPO” technique significantly enhances gameplay performance in Mario. The gameplay experience shows notable improvements compared to traditional methods.

Efficient Exploration and Learning. One key observation is the improved efficiency in exploration and learning within the Mario gaming environment. The “Optimized PPO” method allows for more efficient learning and adaptation to the game’s dynamics.

5.3 Analysis of Results

A critical component of our research is the comprehensive analysis of the results obtained. This analysis elucidates how our findings align with the initial hypotheses and research objectives.

5.4 Addressing Drawbacks

**Tackling Past Limitations**

Our core research objective is to overcome prior gaming enhancement limitations and enhance the Mario gaming experience.

**“Optimized PPO” Solution**

Our “Optimized PPO” approach specifically addresses these issues, resulting in a more enjoyable Mario gaming experience.

**In Brief**

Our research leverages Reinforcement Learning and the Proximal Policy Optimization algorithm, enhanced by our “Optimized PPO” method. This combination markedly improves gameplay, exploration efficiency, and the overall Mario gaming experience. Subsequent sections detail specific findings and supporting results.

As shown in Table 1, this training progression, featuring different runs with varying learning rates and epochs for the Proximal Policy Optimization (PPO) algorithm, indicates the corresponding accuracy percentages and loss values.

6 Hardware and Software

The successful execution of our research project relied on a combination of hardware and software resources. In this section, we provide an overview of the hardware setup and the software tools utilized for our experimentation.

6.1 Hardware

The research was conducted on a Dell Inspiron 15 5000 series laptop. The hardware specifications of the laptop are as follows:

-

Processor: Intel Core i5

-

RAM: 8 GB

-

Storage: 2 TB HDD

The laptop’s high-performance computing capabilities were critical for our research, as they allowed us to train and experiment with our algorithms quickly and efficiently.

6.2 Software

We utilized the following software components for the development and experimentation of our research algorithms and methods:

-

Integrated Development Environment (IDE): Visual Studio Code (VS Code)

-

Programming language: Python 3

-

Libraries: TensorFlow and OpenAI Gym

-

Operating system: Windows 10

We selected VS Code as our preferred IDE due to its user-friendly interface, efficient code editing features, and seamless integration with version control systems. TensorFlow and OpenAI Gym, both widely recognized libraries for machine learning and reinforcement learning, were essential tools for the swift and effective implementation of our research algorithms and methods.

The amalgamation of these software components offered us a potent and adaptable environment for our research. It facilitated extensive training sessions, valuable insights, and efficient result analysis.

6.3 Code Development

We used Visual Studio Code (VS Code) to develop our research code. Its user-friendly interface, efficient code editing features, and seamless version control integration made coding easier and more efficient. VS Code’s extensibility allowed us to install Python programming and data visualization extensions, which helped us to explore the nuances of the Proximal Policy Optimization (PPO) algorithm within the dynamic MARIO game universe.

7 Conclusion

Researchers have introduced the Optimal Fortification Proximal Policy Optimization (OF-PPO) algorithm, a groundbreaking development in the realm of reinforcement learning (RL). OF-PPO offers a substantial leap in the performance of RL, particularly in the context of Super Mario Bros, and holds immense potential for revolutionizing gaming and real-world applications.

OF-PPO’s defining feature is its innovative fortification mechanism, which refines policy updates to a specific region within the state-action space. This mechanism significantly enhances algorithm stability and convergence speed, effectively overcoming the limitations that plagued earlier RL approaches.

In extensive studies, OF-PPO not only outperformed the traditional PPO algorithm but also demonstrated a remarkable advantage, showcasing its prowess in the intricate and demanding domain of Super Mario Bros. This success hints at OF-PPO’s potential to excel in a wide array of real-world applications.

References

Liao, Y., Yi, K., Yang, Z.: CS229 Final Report Reinforcement Learning to Play Mario. 2012 Stanford University. https://cs229.stanford.edu/proj2012/LiaoYiYang-RLtoPlayMario.pdf

Alaniz, S.: Deep reinforcement learning with model learning and Monte Carlo tree search in minecraft. arXiv preprint arXiv:1803.08456 (2018)

Azizzadenesheli, K., Yang, B., Liu, W., Brunskill, E., Lipton, Z.C., Anandkumar, A.: Sample-efficient deep RL with generative adversarial tree search. CoRR, abs/1806.05780 (2018)

Babaeizadeh, M., Finn, C., Erhan, D., Campbell, R.H., Levine, S.: Stochastic variational video prediction. In: ICLR (2017)

Babaeizadeh, M., Frosio, I., Tyree, S., Clemons, J., Kautz, J.: Reinforcement learning through asynchronous advantage actor-critic on a GPU. In: 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017, Conference Track Proceedings. OpenReview.net (2017). https://openreview.net/forum?id=r1VGvBcxl

Bellemare, M.G., Naddaf, Y., Veness, J., Bowling, M.: The arcade learning environment: an evaluation platform for general agents (extended abstract). In: Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, IJCAI, pp. 4148–4152 (2015)

Bengio, S., Vinyals, O., Jaitly, N., Shazeer, N.: Scheduled sampling for sequence prediction with recurrent neural networks. In: Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, 7–12 December 2015, Montreal, Quebec, Canada, pp. 1171–1179 (2015)

Buesing, L., et al.: Woulda, coulda, shoulda: counterfactually-guided policy search. In: 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, 6–9 May 2019. OpenReview.net (2019). https://openreview.net/forum?id=BJG0voC9YQ

Castro, P.S., Moitra, S., Gelada, C., Kumar, S., Bellemare, M.G.: Dopamine: a research framework for deep reinforcement learning. CoRR, abs/1812.06110 (2018). Chiappa, S., Racaniere, S., Wierstra, D., Mohamed, S.: Recurrent environment simulators. In: 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, 24–26 April 2017 (2017)

Chua, K., Calandra, R., McAllister, R., Levine, S.: Deep reinforcement learning in a handful of trials using probabilistic dynamics models. In: Advances in Neural Information Processing Systems, pp. 4759–4770 (2018)

Deisenroth, M.P., Neumann, G., Peters, J.: A survey on policy search for robotics. Found. Trends Robot. 2(1–2), 1–142 (2013)

Ebert, F., Finn, C., Lee, A.X., Levine, S.: Self-supervised visual planning with temporal skip connections. In: 1st Annual Conference on Robot Learning, CoRL (2017)

Mountain View, California, USA, 13–15 November 2017, Proceedings. Proceedings of Machine Learning Research, vol. 78, pp. 344–356. PMLR (2017)

Ebert, F., Finn, C., Dasari, S., Xie, A., Lee, A., Levine, S.: Visual foresight: model-based deep reinforcement learning for vision-based robotic control. arXiv preprint arXiv:1812.00568 (2018)

Ersen, M., Sariel, S.: Learning behaviors of and interactions among objects through spatio-temporal reasoning. IEEE Trans. Comput. Intell. AI Games 7(1), 75–87 (2014)

Espeholt, L., et al.: IMPALA: scalable distributed deep-rl with importance weighted actor-learner architectures. In: Proceedings of the 35th International Conference on Machine Learning, ICML, pp. 1406–1415 (2018)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Sah, S.K., Fidele, H. (2024). Enhancing Mario Gaming Using Optimized Reinforcement Learning. In: Devismes, S., Mandal, P.S., Saradhi, V.V., Prasad, B., Molla, A.R., Sharma, G. (eds) Distributed Computing and Intelligent Technology. ICDCIT 2024. Lecture Notes in Computer Science, vol 14501. Springer, Cham. https://doi.org/10.1007/978-3-031-50583-6_14

Download citation

DOI: https://doi.org/10.1007/978-3-031-50583-6_14

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-50582-9

Online ISBN: 978-3-031-50583-6

eBook Packages: Computer ScienceComputer Science (R0)