Abstract

Extremely Lossy Functions (ELFs) are families of functions that, depending on the choice during key generation, either operate in injective mode or instead have only a polynomial image size. The choice of the mode is indistinguishable to an outsider. ELFs were introduced by Zhandry (Crypto 2016) and have been shown to be very useful in replacing random oracles in a number of applications.

One open question is to determine the minimal assumption needed to instantiate ELFs. While all constructions of ELFs depend on some form of exponentially-secure public-key primitive, it was conjectured that exponentially-secure secret-key primitives, such as one-way functions, hash functions or one-way product functions, might be sufficient to build ELFs. In this work we answer this conjecture mostly in the negative: We show that no primitive that can be derived from a random oracle (which includes all secret-key primitives mentioned above) is enough to construct even moderately lossy functions in a black-box manner. However, we also show that (extremely) lossy functions themselves do not imply public-key cryptography, leaving open the option to build ELFs from some intermediate primitive between the classical categories of secret-key and public-key cryptography. (The full version can be found at https://eprint.iacr.org/2023/1403.)

1 Introduction

Extremely lossy functions, or ELFs for short, are collections of functions that support two modes: the injective mode, in which each image has exactly one preimage, and the lossy mode, in which the function merely has a polynomial image size. The mode is defined by a seed or public key \(\textsf{pk}\) which parameterizes the function. The key \(\textsf{pk}\) itself should not reveal whether it describes the injective mode or the lossy mode. If the lossy mode does not result in a polynomially-sized image, but the function still compresses by at least a factor of 2, we speak of a (moderately) lossy function (LF).

Extremely lossy functions were introduced by Zhandry [31, 32] to replace the use of the random oracle model in some cases. The random oracle model (ROM) [4] introduces a truly random function to which all parties have access. This random function turned out to be useful in modeling hash functions for security proofs of real-world protocols. However, such a truly random function clearly does not exist in reality, and it has been shown that no hash function can replace such an oracle without some protocols becoming insecure [11]. Therefore, a long line of research aims to replace the random oracle by a different modeling of hash functions, e.g., by the notion of correlation intractability [11] or by Universal Computational Extractors (UCEs) [3]. However, all these attempts seem to have their own problems: Current constructions of correlation intractability require extremely strong assumptions [10], while for UCEs, it is not quite clear which versions are instantiable [5, 9]. Extremely lossy functions, in turn, can be built from relatively standard assumptions.

Indeed, it turns out that extremely lossy functions are useful in removing the need for a random oracle in many applications: Zhandry shows that they can be used to generically boost selective security to adaptive security in signatures and identity-based encryption, to construct a hash function that is output intractable, to obtain point obfuscation in the presence of auxiliary information, and many more [31, 32]. Agrikola, Couteau and Hofheinz [1] show that ELFs can be used to construct probabilistic indistinguishability obfuscation from only polynomially-secure indistinguishability obfuscation. In 2022, Murphy, O’Neill and Zaheri [23] used ELFs to give full instantiations of the OAEP and Fujisaki-Okamoto transforms. Recently, Brzuska et al. [8] improved on the instantiation of the Fujisaki-Okamoto transform and instantiated the hash-then-evaluate paradigm for pseudorandom functions using ELFs.

While maybe not as popular as their extreme counterpart, moderately lossy functions have their own applications as well: Braverman, Hassidim and Kalai [7] build leakage-resistant pseudo-entropy functions from lossy functions, and Dodis, Vaikuntanathan and Wichs [12] use lossy functions to construct extractor-dependent extractors with auxiliary information.

1.1 Our Contributions

One important open question for extremely lossy functions, as well as for moderately lossy functions, is the minimal assumption needed to build them. The constructions presented by Zhandry are based on the exponential security of the decisional Diffie-Hellman problem, but he conjectures that public-key cryptography should not be necessary and suggests, as future work, trying to construct ELFs from exponentially-secure symmetric primitives (as Zhandry also shows in his work, polynomial security assumptions are unlikely to be enough for ELFs). Holmgren and Lombardi [17] wondered whether their definition of one-way product functions might suffice to construct ELFs.

For moderately lossy functions, the picture is quite similar: While all current constructions require (polynomially-secure) public-key cryptography, it is generally assumed that public-key cryptography should not be necessary for them and that private-key assumptions should suffice (see, e.g., [28]).

In this work, we answer the questions about building (extremely) lossy functions from symmetric-key primitives mostly in the negative: There exists no fully black-box construction of extremely lossy functions, or even moderately lossy functions, from a large number of primitives, including exponentially-secure one-way functions, exponentially-secure collision resistant hash functions or one-way product functions. Indeed, any primitive that exists unconditionally relative to a random oracle is not enough. We will call this family of primitives Oraclecrypt, in reference to the famous naming convention by Impagliazzo [19], in which Minicrypt refers to the family of primitives that can be built from one-way functions in a black-box way.

Note that most of the previous reductions and impossibility results, such as the renowned result about the impossibility of building key exchange protocols from black-box one-wayness [20], are in fact already cast in the Oraclecrypt world. We only use this term to emphasize that we also rule out primitives that are usually not included in Minicrypt, like collision resistant hash functions [30].

On the other hand, we show that public-key primitives might not strictly be needed to construct ELFs or moderately lossy functions. Specifically, we show that no fully black-box construction of key agreement is possible from (moderately) lossy functions, and extend this result to prevent any fully black-box construction even from extremely lossy functions (for a slightly weaker setting, though). This puts the primitives lossy functions and extremely lossy functions into the intermediate area between the two classes Oraclecrypt and Public-Key Cryptography.

Finally, we discuss the relationship of lossy functions to hard-on-average problems in SZK, the class of problems that have a statistical zero-knowledge proof. We see hard-on-average SZK as a promising minimal assumption to build lossy functions from – indeed, it is already known that hard-on-average SZK problems follow from lossy functions with sufficient lossiness. While we leave open the question of building such a construction for future work, we give a lower bound for hard-on-average SZK problems that might be of independent interest, showing that hard-on-average SZK problems cannot be built from any Oraclecrypt primitive in a fully black-box way. While this is already known for some primitives in Oraclecrypt [6], these results do not generalize to all Oraclecrypt primitives as our proof does.

Note that all our impossibility results only rule out black-box constructions, leaving the possibility of future non-black-box constructions. However, while there is a growing number of non-black-box constructions in the area of cryptography, the overwhelming majority of constructions are still black-box constructions. Further, as all mentioned primitives like exponentially-secure one-way functions, extremely lossy functions or key agreement might exist unconditionally, ruling out black-box constructions is the best we can hope for to show that a construction probably does not exist.

1.2 Our Techniques

Our separation of Oraclecrypt primitives and extremely/moderately lossy functions is based on the famous oracle separation by Impagliazzo and Rudich [20]: We first introduce a strong oracle that makes sure no complexity-based cryptography exists unconditionally, and then add an independent random oracle that allows for specific cryptographic primitives (specifically, all Oraclecrypt primitives) to exist again. We then show that relative to these oracles, (extremely) lossy functions do not exist by constructing a distinguisher between the injective and lossy mode for any candidate construction. A key ingredient here is that we can identify the heavy queries in a lossy function with high probability with just polynomially many queries to the random oracle, a common technique used for example in the work by Bitansky and Degwekar [6]. Finally, we use the two-oracle technique by Hsiao and Reyzin [18] to fix a set of oracles. We note that our proof technique is similar to a technique in the work by Pietrzak, Rosen and Segev to show that the lossiness of lossy functions cannot be increased well in a black-box way [27]. Our separation result for SZK, showing that primitives in Oraclecrypt may not suffice to derive hard problems in SZK, follows a similar line of reasoning.

Our separation between lossy functions and key agreement is once more based on the work by Impagliazzo and Rudich [20], but this time using their specific result for key agreement protocols. Similar to the techniques in [14], we try to compile out the lossy function so that we can then apply the Impagliazzo-Rudich adversary: We first show that one can build (extremely) lossy function oracles relative to a random oracle (where the lossy function itself is efficiently computable via oracle calls, but internally makes an exponential number of random oracle evaluations). The heart of our separation is then a simulation lemma showing that any efficient game relative to our (extremely) lossy function oracle can be simulated efficiently and sufficiently closely given only access to a random oracle. Here, sufficiently close means an inverse polynomial gap between the two cases, where the polynomial can be set arbitrarily. Given this, we can apply the key agreement separation result of Impagliazzo and Rudich [20], with a careful argument that the simulation gap does not interfere with their separation.

1.3 Related Work

Lossy Trapdoor Functions. Lossy trapdoor functions were defined by Peikert and Waters in [25, 26] who exclusively considered such functions to have a trapdoor in injective mode. Whenever we talk about lossy functions in this work, we refer to the moderate version of extremely lossy functions which does not necessarily have a trapdoor. The term extremely lossy function (ELFs) is used as before to capture strongly compressing lossy functions, once more without requiring a trapdoor for the injective case.

Targeted Lossy Functions. Targeted lossy functions were introduced by Quach, Waters and Wichs [28] and are a relaxed version of lossy functions in which the lossiness only applies to a small set of specified inputs. The motivation of the authors is the lack of progress in creating lossy functions from assumptions other than public-key cryptography. Targeted lossy functions, however, can be built from Minicrypt assumptions and, as the authors show, already suffice for many applications, such as constructing extractor-dependent extractors with auxiliary information and pseudo-entropy functions. Our work very much supports this line of research, as it shows that any further progress in creating lossy functions from Minicrypt/Oraclecrypt assumptions is unlikely (barring some construction using non-black-box techniques) and underlines the need for such a relaxation of lossy functions if one wants to build them from Minicrypt assumptions.

Amplification of Lossy Functions. Pietrzak, Rosen and Segev [27] show that it is impossible to improve the relative lossiness of a lossy function in a black-box way by more than a logarithmic amount. This translates into another obstacle in building ELFs, even when having access to a moderately lossy function. Note that this result strengthens our result, as we show that even moderately lossy functions cannot be built from anything in Oraclecrypt.

2 Preliminaries

This is a shortened version of the preliminaries, omitting some standard definitions. The full version [13] of the paper contains the complete preliminaries.

2.1 Lossy Functions

A lossy function can be either injective or compressing, depending on the mode the public key \(\textsf{pk}\) has been generated with. The desired mode (\(\textsf{inj}\) or \(\textsf{loss}\)) is passed as argument to a (randomized) key generating algorithm \(\textsf{Gen}\), together with the security parameter \(1^\lambda \). We sometimes write \(\textsf{pk}_{\textsf{inj}}\) or \(\textsf{pk}_{\textsf{loss}}\) to emphasize that the public key has been generated in either mode, and also \(\textsf{Gen}_\textsf{inj}(\cdot )=\textsf{Gen}(\cdot ,\textsf{inj})\) as well as \(\textsf{Gen}_\textsf{loss}(\cdot )=\textsf{Gen}(\cdot ,\textsf{loss})\) to explicitly refer to key generation in injective and lossy mode, respectively. The type of key is indistinguishable to outsiders. This holds even though the adversary can evaluate the function via deterministic algorithm \(\textsf{Eval}\) under this key, taking \(1^\lambda \), a key \(\textsf{pk}\) and a value x of input length \(\text {in}(\lambda )\) as input, and returning an image \(f_\textsf{pk}(x)\) of an implicitly defined function f. We usually assume that \(1^\lambda \) is included in \(\textsf{pk}\) and thus omit \(1^\lambda \) for \(\textsf{Eval}\)’s input.

In the literature, one can find two slightly different definitions of lossy function. One, which we call the strict variant, requires that for any key generated in injective or lossy mode, the corresponding function is perfectly injective or lossy. In the non-strict variant this only has to hold with overwhelming probability over the choice of the key \(\textsf{pk}\). We define both variants together:

Definition 1 (Lossy Functions)

An \(\omega \)-lossy function consists of two efficient algorithms \((\textsf{Gen},\textsf{Eval})\) of which \(\textsf{Gen}\) is probabilistic and \(\textsf{Eval}\) is deterministic and it holds that:

(a) For \(\textsf{pk}_{\textsf{inj}}\leftarrow \textsf{Gen}_\textsf{inj}(1^\lambda )\), the function \(\textsf{Eval}(\textsf{pk}_{\textsf{inj}},\cdot ):\{0,1\}^{\text {in}(\lambda )}\rightarrow \{0,1\}^*\) is injective with overwhelming probability over the choice of \(\textsf{pk}_{\textsf{inj}}\).

(b) For \(\textsf{pk}_{\textsf{loss}}\leftarrow \textsf{Gen}_\textsf{loss}(1^\lambda )\), the function \(\textsf{Eval}(\textsf{pk}_{\textsf{loss}},\cdot ):\{0,1\}^{\text {in}(\lambda )}\rightarrow \{0,1\}^*\) is \(\omega \)-compressing, i.e., \(\left| \{\textsf{Eval}(\textsf{pk}_{\textsf{loss}},\{0,1\}^{\text {in}(\lambda )})\}\right| \le 2^{\text {in}(\lambda )-\omega }\), with overwhelming probability over the choice of \(\textsf{pk}_{\textsf{loss}}\).

(c) The random variables \(\textsf{Gen}_\textsf{inj}\) and \(\textsf{Gen}_\textsf{loss}\) are computationally indistinguishable.

We call the function strict if properties (a) and (b) hold with probability 1.
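To make the two modes of Definition 1 concrete, the following Python sketch builds a deliberately insecure toy family and counts image sizes by brute force. The names `gen`, `eval_fn`, `image_size` and the parameters `IN_BITS` and `OMEGA` are illustrative assumptions, not part of the paper; in particular, the toy key reveals its mode, so property (c) does not hold and only properties (a) and (b) are illustrated.

```python
import secrets

IN_BITS = 10  # toy input length, standing in for in(lambda)
OMEGA = 3     # toy lossiness parameter omega


def gen(mode: str) -> dict:
    """Toy key generation. The key stores how many bits to drop in the clear,
    so the mode is trivially visible: this illustrates image sizes only."""
    if mode not in ("inj", "loss"):
        raise ValueError("mode must be 'inj' or 'loss'")
    return {"mask": secrets.randbits(IN_BITS), "drop": 0 if mode == "inj" else OMEGA}


def eval_fn(pk: dict, x: int) -> int:
    """XOR with the mask is a bijection on IN_BITS-bit strings; shifting right
    by `drop` bits then merges 2**drop inputs into each output."""
    return (x ^ pk["mask"]) >> pk["drop"]


def image_size(pk: dict) -> int:
    """Brute-force image size over the whole (tiny) domain."""
    return len({eval_fn(pk, x) for x in range(2 ** IN_BITS)})


if __name__ == "__main__":
    print("injective image size:", image_size(gen("inj")))
    print("lossy image size:    ", image_size(gen("loss")))
```

In this toy, the injective key yields an image of size \(2^{\text {in}(\lambda )}\) and the lossy key an image of size exactly \(2^{\text {in}(\lambda )-\omega }\), matching the bounds in (a) and (b).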

Extremely lossy functions need a more fine-grained approach where the key generation algorithm takes an integer r between 1 and \(2^{\text {in}(\lambda )}\) instead of \(\textsf{inj}\) or \(\textsf{loss}\). This integer determines the image size, with \(r=2^{\text {in}(\lambda )}\) asking for an injective function. As we want to have functions with a sufficiently high lossiness that the image size is polynomial, say, \(p(\lambda )\), we cannot demand indistinguishability against every polynomial-time adversary. This is so because an adversary making \(p(\lambda )+1\) many random (but distinct) queries to the evaluating function will find a collision in case that \(\textsf{pk}\) was lossy, while no collision will be found for an injective key. Instead, we define the minimal r such that \(\textsf{Gen}(1^\lambda ,2^{\text {in}(\lambda )})\) and \(\textsf{Gen}(1^\lambda ,r)\) are indistinguishable based on the runtime and desired advantage of the adversary:

Definition 2 (Extremely Lossy Function)

An extremely lossy function consists of two efficient algorithms \((\textsf{Gen},\textsf{Eval})\) of which \(\textsf{Gen}\) is probabilistic and \(\textsf{Eval}\) is deterministic and it holds that:

(a) For \(r=2^{\text {in}(\lambda )}\) and \(\textsf{pk}\leftarrow \textsf{Gen}(1^\lambda ,r)\), the function \(\textsf{Eval}(\textsf{pk},\cdot ):\{0,1\}^{\text {in}(\lambda )}\rightarrow \{0,1\}^*\) is injective with overwhelming probability.

(b) For \(r<2^{\text {in}(\lambda )}\) and \(\textsf{pk}\leftarrow \textsf{Gen}(1^\lambda ,r)\), the function \(\textsf{Eval}(\textsf{pk},\cdot ):\{0,1\}^{\text {in}(\lambda )}\rightarrow \{0,1\}^*\) has an image size of at most r with overwhelming probability.

(c) For any polynomials p and d there exists a polynomial q such that for any adversary \(\mathcal {A}\) with a runtime bounded by \(p(\lambda )\) and any \(r\in [q(\lambda ), 2^{\text {in}(\lambda )}]\), algorithm \(\mathcal {A}\) distinguishes \(\textsf{Gen}(1^\lambda ,2^{\text {in}(\lambda )})\) from \(\textsf{Gen}(1^\lambda , r)\) with advantage at most \(\frac{1}{d(\lambda )}\).
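The reason property (c) must let the image-size bound depend on the adversary's runtime is the collision-finding attack described before the definition. A minimal Python sketch of that attack follows; the function `collision_distinguisher` and the stand-in functions `toy_injective` and `toy_lossy` are hypothetical names chosen for illustration, and the toy functions are not secure constructions.

```python
import random


def collision_distinguisher(evaluate, in_bits: int, p: int) -> str:
    """Query `evaluate` on p + 1 distinct random inputs.
    If the image has at most p elements, the pigeonhole principle forces a
    collision; an injective function never collides on distinct inputs."""
    inputs = random.sample(range(2 ** in_bits), p + 1)  # distinct by construction
    seen = set()
    for x in inputs:
        y = evaluate(x)
        if y in seen:
            return "loss"
        seen.add(y)
    return "inj"


def toy_injective(x: int) -> int:
    # Stand-in for Eval under an injective key.
    return x


def toy_lossy(x: int) -> int:
    # Stand-in for Eval under a lossy key with image size 16.
    return x % 16


if __name__ == "__main__":
    print(collision_distinguisher(toy_injective, in_bits=12, p=16))
    print(collision_distinguisher(toy_lossy, in_bits=12, p=16))
```

An adversary restricted to runtime \(p(\lambda )\) cannot run this attack against an image of size \(q(\lambda )\) much larger than its query budget, which is why q is chosen after p and d in the definition.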

Note that extremely lossy functions do indeed imply the definition of (moderately) lossy functions (as long as the lossiness-parameter \(\omega \) still leaves an exponential-sized image size in the lossy mode):

Lemma 1

Let \((\textsf{Gen},\textsf{Eval})\) be an extremely lossy function. Then \((\textsf{Gen},\textsf{Eval})\) is also a (moderately) lossy function with lossiness parameter \(\omega =0.9\lambda \).

The proof for this lemma can be found in the full version [13].

2.2 Oraclecrypt

In his seminal work [19], Impagliazzo introduced five possible worlds we might be living in, including two in which computational cryptography exists: Minicrypt, in which one-way functions exist, but public-key cryptography does not, and Cryptomania, in which public-key cryptography exists as well. In reference to this classification, cryptographic primitives that can be built from one-way functions in a black-box way are often called Minicrypt primitives.

In this work, we are interested in the set of all primitives that exist relative to a truly random function. This of course includes all Minicrypt primitives, as one-way functions exist relative to a truly random function (with high probability), but it also includes a number of other primitives, like collision-resistant hash functions and exponentially-secure one-way functions, for which we don’t know that they exist relative to a one-way function, or even have a black-box impossibility result. In reference to the set of Minicrypt primitives, we will call all primitives existing relative to a truly random function Oraclecrypt primitives.

Definition 3 (Oraclecrypt)

We say that a cryptographic primitive is an Oraclecrypt primitive if there exists an implementation relative to a truly random function oracle (except for a measure-zero set of random oracles).

We will now show that by this definition, indeed, many symmetric primitives are Oraclecrypt primitives:

Lemma 2

The following primitives are Oraclecrypt primitives:

- Exponentially-secure one-way functions,

- Exponentially-secure collision resistant hash functions,

- One-way product functions.

We moved the proof for this lemma to the full version [13].

3 On the Impossibility of Building (E)LFs in Oraclecrypt

In this chapter, we will show that we cannot build lossy functions from a number of symmetric primitives, including (exponentially-secure) one-way functions, collision-resistant hash functions and one-way product functions, in a black-box way. Indeed, we will show that any primitive in Oraclecrypt is not enough to build lossy functions. As extremely lossy functions imply (moderately) lossy functions, this result applies to them as well.

Note that for exponentially-secure one-way functions, this was already known for lossy functions that are sufficiently lossy: Lossy functions with sufficient lossiness imply collision-resistant hash functions, and Simon’s result [30] separates these from (exponentially-secure) one-way functions. However, this does not apply for lossy functions with e.g. only a constant number of bits of lossiness.

Theorem 1

There exists no fully black-box construction of lossy functions from any Oraclecrypt primitive, including exponentially-secure one-way functions, collision resistant hash functions, and one-way product functions.

Our proof for this Theorem follows a proof idea by Pietrzak, Rosen and Segev [27], which they used to show that lossy functions cannot be amplified well, i.e., one cannot build a lossy function which is very compressing in the lossy mode from a lossy function that is only slightly compressing in the lossy mode. Conceptually, we show an oracle separation between lossy functions and Oraclecrypt: For this, we will start by introducing two oracles, a random oracle and a modified \(\textrm{PSPACE}\) oracle. We will then, for a candidate construction of a lossy function based on the random oracle and a public key \(\textsf{pk}\), approximate the heavy queries asked by \(\textsf{Eval}(\textsf{pk},\cdot )\) to the random oracle. Next, we show that this approximation of the set of heavy queries is actually enough for us to approximate the image size of \(\textsf{Eval}(\textsf{pk},\cdot )\) (using our modified \(\textrm{PSPACE}\) oracle) and therefore gives an efficient way to distinguish lossy keys from injective keys. Finally, we have to fix a set of oracles (instead of arguing with a distribution of oracles) and then use the two-oracle technique [18] to show the theorem. Due to the use of the two-oracle technique, we only get an impossibility result for fully black-box constructions (see [18] and [2] for a discussion of different types of black-box constructions).

3.1 Introducing the Oracles

A common oracle to use in an oracle separation in cryptography is the \(\textrm{PSPACE}\) oracle, as relative to this oracle, all non-information theoretic cryptography is broken. As we do not know which (or whether any) cryptographic primitives exist unconditionally, this is a good way to level the playing field. However, in our case, \(\textrm{PSPACE}\) is not quite enough. In our proof, we want to calculate the image size of a function relative to a (newly chosen) random oracle. It is not possible to simulate this oracle by lazy-sampling, though, as to calculate the image size of a function, we might have to save an exponentially large set of queries, which is not possible in \(\textrm{PSPACE}\). Therefore, we give the \(\textrm{PSPACE}\) oracle access to its own random oracle \(\mathcal {O}^\prime : \{0,1\}^{\lambda } \rightarrow \{0,1\}^{\lambda }\) and will give every adversary access to \(\textrm{PSPACE}^{\mathcal {O}^\prime }\).
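The storage problem with lazy sampling can be seen in a small Python sketch. The class `LazyRandomOracle` and the toy parameters are assumptions made for illustration only: the oracle draws an answer the first time a point is queried and must remember it forever, so computing the image size of a function that queries the oracle on every input forces the table to grow to the full domain size.

```python
import secrets


class LazyRandomOracle:
    """Random function sampled on demand: each new query gets a fresh random
    answer, which is stored so that repeated queries stay consistent."""

    def __init__(self, out_bits: int):
        self.out_bits = out_bits
        self.table = {}  # every distinct query ever asked ends up here

    def query(self, x: int) -> int:
        if x not in self.table:
            self.table[x] = secrets.randbits(self.out_bits)
        return self.table[x]


def image_size_via_lazy_oracle(in_bits: int, out_bits: int):
    """Image size of the toy function f(x) = O(x), computed by exhaustive
    evaluation. Returns the image size and the number of stored answers."""
    oracle = LazyRandomOracle(out_bits)
    image = {oracle.query(x) for x in range(2 ** in_bits)}
    return len(image), len(oracle.table)


if __name__ == "__main__":
    size, stored = image_size_via_lazy_oracle(in_bits=10, out_bits=10)
    print("image size:", size, "stored oracle answers:", stored)
```

For input length \(\lambda \) the table would hold \(2^\lambda \) entries, which is the exponential storage that a polynomial-space machine cannot afford and that a fixed oracle \(\mathcal {O}^\prime \) avoids.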

The second oracle is a random oracle \(\mathcal {O}: \{0,1\}^\lambda \rightarrow \{0,1\}^\lambda \). Now, we know that a number of primitives exist relative to a random function, including exponentially-secure one-way functions, collision-resistant hash functions and even more complicated primitives like one-way product functions. Further, they still exist if we give the adversary access to \(\textrm{PSPACE}^{\mathcal {O}^\prime }\), too, as \(\mathcal {O}^\prime \) is independent from \(\mathcal {O}\) and \(\textrm{PSPACE}^{\mathcal {O}^\prime }\) does not have direct access to \(\mathcal {O}\).

We will now show that every candidate construction of a lossy function with access to \(\mathcal {O}\) can be broken by an adversary \(\mathcal {A}^{\mathcal {O},\textrm{PSPACE}^{\mathcal {O}^\prime }}\). Note that we do not give the construction access to \(\textrm{PSPACE}^{\mathcal {O}^\prime }\)—this is necessary, as \(\mathcal {O}^\prime \) should look like a randomly sampled oracle to the construction. However, giving the construction access to \(\textrm{PSPACE}^{\mathcal {O}^\prime }\) would enable the construction to behave differently for this specific oracle \(\mathcal {O}^\prime \). Not giving the construction access to the oracle is fine, however, as we are using the two-oracle technique.

Our proof for Theorem 1 will now work in two steps. First, we will show that with overwhelming probability over independently sampled \(\mathcal {O}\) and \(\mathcal {O}^\prime \), no lossy functions exist relative to \(\mathcal {O}\) and \(\textrm{PSPACE}^{\mathcal {O}^\prime }\). However, for an oracle separation, we need one fixed oracle. Therefore, as a second step (Sect. 3.4), we will use standard techniques to select one set of oracles relative to which any of our Oraclecrypt primitives exist, but lossy functions do not.

For the first step, we now define what a lossy function with access to both oracles looks like:

Definition 4 (Lossy functions with Oracle Access)

A family of functions \(\textsf{Eval}^\mathcal {O}(\textsf{pk},\cdot ): \{0,1\}^{\text {in}(\lambda )} \rightarrow \{0,1\}^*\) with public key \(\textsf{pk}\) and access to the oracle \(\mathcal {O}\) is called \(\omega \)-lossy if there exist two PPT algorithms \(\mathsf {Gen_{inj}^{}}\) and \(\mathsf {Gen_{loss}^{}}\) such that for all \(\lambda \in \mathbb {N}\),

(a) For all \(\textsf{pk}\) in \([\mathsf {Gen_{inj}^{\mathcal {O}}}(1^\lambda )]\cup [\mathsf {Gen_{loss}^{\mathcal {O}}}(1^\lambda )]\), \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) is computable in polynomial time in \(\lambda \),

(b) For \(\textsf{pk}\leftarrow \mathsf {Gen_{inj}^{\mathcal {O}}}(1^\lambda )\), \(\textsf{Eval}^\mathcal {O}(\textsf{pk},\cdot )\) is injective with overwhelming probability (over the choice of \(\textsf{pk}\) as well as the random oracle \(\mathcal {O}\)),

(c) For \(\textsf{pk}\leftarrow \mathsf {Gen_{loss}^{\mathcal {O}}}(1^\lambda )\), \(\textsf{Eval}^\mathcal {O}(\textsf{pk},\cdot )\) is \(\omega \)-compressing with overwhelming probability (over the choice of \(\textsf{pk}\) as well as the random oracle \(\mathcal {O}\)),

(d) The random variables \(\mathsf {Gen_{inj}^{\mathcal {O}}}\) and \(\mathsf {Gen_{loss}^{\mathcal {O}}}\) are computationally indistinguishable for any polynomial-time adversary \(\mathcal {A}^{\mathcal {O},\textrm{PSPACE}^{\mathcal {O}^\prime }}\) with access to both \(\mathcal {O}\) and \(\textrm{PSPACE}^{\mathcal {O}^\prime }\).

3.2 Approximating the Set of Heavy Queries

In the next two subsections, we will construct an adversary \(\mathcal {A}^{\mathcal {O},\textrm{PSPACE}^{\mathcal {O}^\prime }}\) against lossy functions with access to the random oracle \(\mathcal {O}\) as described in Definition 4.

Let \((\textsf{Gen}^\mathcal {O},\textsf{Eval}^\mathcal {O})\) be some candidate implementation of a lossy function relative to the oracle \(\mathcal {O}\). Further, let \(\textsf{pk}\leftarrow \mathsf {Gen_{?}^{\mathcal {O}}}\) be some public key generated by either \(\mathsf {Gen_{inj}^{}}\) or \(\mathsf {Gen_{loss}^{}}\). Looking at the queries asked by the lossy function to \(\mathcal {O}\), we can divide them into two parts: The queries asked during the generation of the key \(\textsf{pk}\), and the queries asked during the execution of \(\textsf{Eval}^\mathcal {O}(\textsf{pk},\cdot )\). We will denote the queries asked during the generation of \(\textsf{pk}\) by the set \(Q_G\). As the generation algorithm has to be efficient, \(Q_G\) has polynomial size. Let \(k_G\) be the maximal number of queries asked by any of the two generators. Further, denote by \(k_f\) the maximum number of queries of \(\textsf{Eval}^\mathcal {O}(\textsf{pk},x)\) for any \(\textsf{pk}\) and x—again, \(k_f\) is polynomial. Finally, let \(k = \max \left\{ k_G,k_f\right\} \).

The set of all queries made by \(\textsf{Eval}(\textsf{pk},\cdot )\) for a fixed key \(\textsf{pk}\) might be of exponential size, as the function might ask different queries for each input x. However, we are able to shrink the size of the relevant subset significantly if we concentrate on heavy queries, that is, queries that appear for a significant fraction of all inputs x:

Definition 5 (Heavy Queries)

Let k be the maximum number of \(\mathcal {O}\)-queries made by the generator \(\mathsf {Gen_{?}^{\mathcal {O}}}\), or the maximum number of queries of \(\textsf{Eval}(\textsf{pk},\cdot )\) over all inputs \(x\in \{0,1\}^{\text {in}(\lambda )}\), whichever is higher. Fix some key \(\textsf{pk}\) and a random oracle \(\mathcal {O}\). We call a query q to \(\mathcal {O}\) heavy if, for at least a \(\frac{1}{10k}\)-fraction of \(x\in \{0,1\}^{\text {in}(\lambda )}\), the evaluation \(\textsf{Eval}(\textsf{pk},x)\) queries \(\mathcal {O}\) about q at some point. We denote by \(Q_H\) the set of all heavy queries (for \(\textsf{pk},\mathcal {O}\)).
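A short counting argument, using only the quantities from Definition 5, gives an explicit bound on \(|Q_H|\). Count the pairs \((x,q)\) such that \(\textsf{Eval}(\textsf{pk},x)\) queries \(\mathcal {O}\) about q. Each of the \(2^{\text {in}(\lambda )}\) inputs contributes at most k pairs, while each heavy query contributes at least \(\frac{2^{\text {in}(\lambda )}}{10k}\) pairs, so

\[ |Q_H|\cdot \frac{2^{\text {in}(\lambda )}}{10k} \;\le \; \left| \{(x,q) : \textsf{Eval}(\textsf{pk},x) \text { queries } q\}\right| \;\le \; k\cdot 2^{\text {in}(\lambda )} \quad \Longrightarrow \quad |Q_H| \le 10k^2. \]

Since k is polynomial in \(\lambda \), so is \(10k^2\).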

The set of heavy queries is polynomial, as \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) only queries the oracle a polynomial number of times and each heavy query has to appear in a polynomial fraction of all x. Further, we will show that the adversary \(\mathcal {A}^{\mathcal {O},\textrm{PSPACE}^{\mathcal {O}^\prime }}\) is able to approximate the set of heavy queries, and that this approximation is actually enough to decide whether \(\textsf{pk}\) was generated in injective or in lossy mode. We will start with a few key observations that help us prove this statement.

The first one is that the generator, as it is an efficiently-computable function, will only query \(\mathcal {O}\) at polynomially-many positions, and these polynomially-many queries already define whether the function is injective or lossy:

Observation 1

Let \(Q_G\) denote the queries by the generator. For a random \(\textsf{pk}\leftarrow \mathsf {Gen_{inj}^{\mathcal {O}}}\) generated in injective mode and a random \(\mathcal {O}^\prime \) that is consistent with \(Q_G\), the image size of \(\textsf{Eval}^{\mathcal {O}^\prime }(\textsf{pk},\cdot )\) is \(2^\lambda \) (except with a negligible probability over the choice of \(\textsf{pk}\) and \(\mathcal {O}^\prime \)). Similarly, for a random \(\textsf{pk}\leftarrow \mathsf {Gen_{loss}^{\mathcal {O}}}\) generated in lossy mode and a random \(\mathcal {O}^\prime \) that is consistent with \(Q_G\), the image size of \(\textsf{Eval}^{\mathcal {O}^\prime }(\textsf{pk},\cdot )\) is at most \(2^{\lambda -1}\) (except with a negligible probability over the choice of \(\textsf{pk}\) and \(\mathcal {O}^\prime \)).

This follows directly from the definition: As \(\mathsf {Gen_{?}^{\mathcal {O}}}\) has no information about \(\mathcal {O}\) except the queries \(Q_G\), properties (2) and (3) of Definition 1 have to hold for every random oracle that is consistent with \(\mathcal {O}\) on \(Q_G\). We will use this multiple times in the proof to argue that queries to \(\mathcal {O}\) that are not in \(Q_G\) are, essentially, useless randomness for the construction, as the construction has to work with almost any possible answer returned by these queries.

An adversary would naturally like to learn the queries \(Q_G\). There is no way to capture them in general, though. Here, we need our second key observation. Lossiness is very much a global property: to switch a function from lossy to injective, at least half of all inputs x to \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},x)\) must produce a different result, and vice versa. However, as we learned from the first observation, whether \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) is lossy or injective depends only on \(Q_G\). Therefore, some queries in \(Q_G\) must be used over and over again for different inputs x, and will therefore appear in the heavy set \(Q_H\). Further, due to the heaviness of these queries, the adversary is indeed able to learn them!

Our proof works alongside these two observations: First, we show in Lemma 3 that for any candidate lossy function, an adversary is able to compute a set \(\hat{Q}_H\) of the interesting heavy queries. Afterwards, we show in Lemma 5 that we can use \(\hat{Q}_H\) to decide whether \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) is lossy or injective, breaking the indistinguishability property of the lossy function.

Lemma 3

Let \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) be a (non-strict) lossy function and \(\textsf{pk}\leftarrow \mathsf {Gen_{?}^{\mathcal {O}}}(1^\lambda )\) for oracle \(\mathcal {O}\). Then we can compute in probabilistic polynomial-time (in \(\lambda \)) a set \(\hat{Q}_H\) which contains all heavy queries of \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) for \(\textsf{pk},\mathcal {O}\) with overwhelming probability.

Proof

To find the heavy queries we will execute \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},x)\) for t random inputs x and record all queries to \(\mathcal {O}\) in \(\hat{Q}_H\). We will now argue that, with high probability, \(\hat{Q}_H\) contains all heavy queries.

First, recall that a query is heavy if it appears for at least an \(\varepsilon \)-fraction of inputs to \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) for \(\varepsilon =\frac{1}{10k}\). Therefore, the probability for any specific heavy query \(q_{\textsf {heavy}}\) to not appear in \(\hat{Q}_H\) after the t evaluations can be bounded by

$$\Pr \left[ q_{\textsf {heavy}}\notin \hat{Q}_H\right] \le (1-\varepsilon )^t.$$

Furthermore, there exist at most \(\frac{k}{\varepsilon }\) heavy queries, because each heavy query accounts for at least \(\varepsilon \cdot 2^{\text {in}(\lambda )}\) of the at most \(k\cdot 2^{\text {in}(\lambda )}\) possible queries of \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},x)\) when iterating over all x. Therefore, by a union bound, the probability that some heavy query \(q_{\textsf {heavy}}\) is not included in \(\hat{Q}_H\) is at most

$$\Pr \left[ \exists \, q_{\textsf {heavy}}\notin \hat{Q}_H\right] \le \frac{k}{\varepsilon }\cdot (1-\varepsilon )^t.$$

Choosing \(t= 10k\lambda \) we get

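$$\frac{k}{\varepsilon }\cdot (1-\varepsilon )^t = 10k^2\cdot \left( 1-\frac{1}{10k}\right) ^{10k\lambda } \le 10k^2\cdot e^{-\lambda },$$

which is negligible. Therefore, with all but negligible probability, all heavy queries are included in \(\hat{Q}_H\). \(\square \)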
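The sampling procedure from this proof can be sketched as follows. The toy `eval_queries` is again a hypothetical stand-in for the query pattern of \(\textsf{Eval}\), and `miss_probability_bound` simply evaluates the union bound \(\frac{k}{\varepsilon }(1-\varepsilon )^t\) for \(t=10k\lambda \); neither is taken from the paper.

```python
import math
import random

def eval_queries(pk: int, x: int) -> list:
    """Hypothetical query pattern of Eval(pk, x), for illustration only."""
    return [b"key:%d" % pk, b"par:%d" % (x & 1), b"in:%d" % x]

def sample_heavy_superset(pk: int, n_bits: int, k: int, lam: int, rng) -> set:
    """Run Eval on t = 10*k*lam random inputs and record every query seen."""
    t = 10 * k * lam
    q_hat = set()
    for _ in range(t):
        x = rng.randrange(2 ** n_bits)
        q_hat.update(eval_queries(pk, x))
    return q_hat

def miss_probability_bound(k: int, lam: int) -> float:
    """Union bound (k/eps) * (1 - eps)^t with eps = 1/(10k), t = 10*k*lam."""
    eps = 1.0 / (10 * k)
    t = 10 * k * lam
    return (k / eps) * (1.0 - eps) ** t

rng = random.Random(0)
q_hat = sample_heavy_superset(pk=7, n_bits=8, k=3, lam=20, rng=rng)
```

The recorded set is in general a strict superset of the heavy set, since it also contains whatever non-heavy queries happened to be asked on the sampled inputs.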

3.3 Distinguishing Lossiness from Injectivity

We next make the transition from oracle \(\mathcal {O}\) to our \(\textrm{PSPACE}\)-augmenting oracle \(\mathcal {O}^\prime \). According to the previous subsection, we can compute (a superset \(\hat{Q}_H\) of) the heavy queries efficiently. Then we can fix the answers of oracle \(\mathcal {O}\) on such frequently asked queries in \(\hat{Q}_H\), but otherwise use the independent oracle \(\mathcal {O}^\prime \) instead. Denote this partly-set oracle by \(\mathcal {O}^{\prime }_{|\hat{Q}_H}\). Then the distinguisher for injective and lossy keys, given some \(\textsf{pk}\), can compute the image size \(\#im(\textsf{Eval}^{\mathcal {O}^{\prime }_{|\hat{Q}_H}}(\textsf{pk},\cdot ))\) with the help of its \(\textrm{PSPACE}^{\mathcal {O}^\prime }\) oracle and thus also obtains a good approximation of the image size under the actual oracle \(\mathcal {O}\). This will be done in Lemma 5.

We still have to show that the non-heavy queries do not invalidate the above approach. It suffices to show that the image sizes for oracle \(\mathcal {R}:=\mathcal {O}^{\prime }_{|\hat{Q}_H}\) and for oracle \(\mathcal {R}^\prime :=\mathcal {O}^{\prime }_{|\hat{Q}_H\cup Q_G}\), where we also fix the key generator’s non-heavy queries to values from \(\mathcal {O}\), cannot differ significantly; this is the statement of Lemma 4. Put differently, missing the generator’s non-heavy queries from \(Q_G\) in \(\hat{Q}_H\) only slightly affects the image size of \(\textsf{Eval}^{\mathcal {O}^{\prime }_{|\hat{Q}_H}}(\textsf{pk},\cdot )\), and we can proceed with our approach of considering only heavy queries.

Lemma 4

Let \(\textsf{pk}\leftarrow \mathsf {Gen_{?}^{\mathcal {R}}}(1^\lambda )\) and \({Q}_G^{\textsf {nonh}}=\{q_1,\dots ,q_{k^\prime }\}\) be the \(k^\prime \) generator’s queries to \(\mathcal {R}\) in \(Q_G\) when computing \(\textsf{pk}\) that are not heavy for \(\textsf{pk},\mathcal {R}\). Then, for any oracle \(\mathcal {R}^\prime \) that is identical to \(\mathcal {R}\) everywhere except for the queries in \({Q}_G^{\textsf {nonh}}\), i.e., \(\mathcal {R}(q)=\mathcal {R}^\prime (q)\) for any \(q\notin {Q}_G^{\textsf {nonh}}\), the image sizes of \(\textsf{Eval}^{\mathcal {R}}(\textsf{pk},\cdot )\) and \(\textsf{Eval}^{\mathcal {R}^\prime }(\textsf{pk},\cdot )\) differ by at most \(\frac{2^{\text {in}(\lambda )}}{10}\).

Proof

As the queries in \({Q}_G^{\textsf {nonh}}\) are non-heavy, every \(q_i\in {Q}_G^{\textsf {nonh}}\) is queried for at most \(\frac{2^{\text {in}(\lambda )}}{10k}\) inputs x to \(\textsf{Eval}^{\mathcal {R}}(\textsf{pk},\cdot )\) when evaluating the function. Therefore, any change in the oracle \(\mathcal {R}\) at \(q_i\in {Q}_G^{\textsf {nonh}}\) affects the output of \(\textsf{Eval}^{\mathcal {R}}(\textsf{pk},\cdot )\) for at most \(\frac{2^{\text {in}(\lambda )}}{10k}\) inputs. Hence, when considering the oracle \(\mathcal {R}^\prime \), which differs from \(\mathcal {R}\) only on the \(k^\prime \) queries from \({Q}_G^{\textsf {nonh}}\), moving from \(\mathcal {R}\) to \(\mathcal {R}^\prime \) for evaluating \(\textsf{Eval}^{\mathcal {R}}(\textsf{pk},\cdot )\) changes the output for at most \(\frac{k^\prime 2^{\text {in}(\lambda )}}{10k}\) inputs x. In other words, letting \(\varDelta _f\) denote the set of all x such that \(\textsf{Eval}^{\mathcal {R}}(\textsf{pk},x)\) queries some \(q\in {Q}_G^{\textsf {nonh}}\) during the evaluation, we know that

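$$|\varDelta _f| \le \frac{k^\prime \cdot 2^{\text {in}(\lambda )}}{10k}$$

and

$$\textsf{Eval}^{\mathcal {R}}(\textsf{pk},x)=\textsf{Eval}^{\mathcal {R}^\prime }(\textsf{pk},x)\quad \text {for all } x\notin \varDelta _f.$$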

We are interested in the difference of the two image sizes of \(\textsf{Eval}^{\mathcal {R}}(\textsf{pk},\cdot )\) and \(\textsf{Eval}^{\mathcal {R}^\prime }(\textsf{pk},\cdot )\). Each \(x\in \varDelta _f\) may add or subtract an image in the difference, depending on whether the modified output \(\textsf{Eval}^{\mathcal {R}^\prime }(\textsf{pk},x)\) introduces a new image or redirects the only image \(\textsf{Eval}^{\mathcal {R}}(\textsf{pk},x)\) to an already existing one. Therefore, the difference between the image sizes is at most

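$$\left| \#im(\textsf{Eval}^{\mathcal {R}}(\textsf{pk},\cdot ))-\#im(\textsf{Eval}^{\mathcal {R}^\prime }(\textsf{pk},\cdot ))\right| \le |\varDelta _f| \le \frac{k^\prime \cdot 2^{\text {in}(\lambda )}}{10k} \le \frac{2^{\text {in}(\lambda )}}{10},$$

where the last inequality is due to \(k^\prime \le k\). \(\square \)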
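The counting argument of Lemma 4 can be checked on a small example. In the sketch below, the oracle (SHA-256 truncated to 32 bits), the evaluation procedure `eval_fn`, and the re-programmed points are all hypothetical choices made for illustration. The code re-programs two non-heavy queries and compares the change in image size with \(|\varDelta _f|\).

```python
import hashlib

def base_oracle(q: bytes) -> int:
    """Toy stand-in for the oracle R (SHA-256 truncated to 32 bits)."""
    return int.from_bytes(hashlib.sha256(q).digest()[:4], "big")

def patch(oracle, overrides: dict):
    """Oracle R' that agrees with `oracle` except on the keys of `overrides`."""
    return lambda q: overrides[q] if q in overrides else oracle(q)

def eval_fn(oracle, pk: int, x: int):
    """Hypothetical Eval: returns (output, list of oracle queries made)."""
    q_key, q_in = b"key:%d" % pk, b"in:%d" % (x >> 1)
    return oracle(q_key) ^ oracle(q_in) ^ (x & 1), [q_key, q_in]

def image_size(oracle, pk: int, n_bits: int) -> int:
    return len({eval_fn(oracle, pk, x)[0] for x in range(2 ** n_bits)})

def affected_inputs(oracle, pk: int, n_bits: int, changed: set) -> set:
    """Delta_f: inputs whose evaluation under `oracle` touches a changed query."""
    return {x for x in range(2 ** n_bits)
            if any(q in changed for q in eval_fn(oracle, pk, x)[1])}

pk, n_bits = 7, 8
overrides = {b"in:5": 0, b"in:9": 0}   # re-program two non-heavy queries
R, R_prime = base_oracle, patch(base_oracle, overrides)
delta_f = affected_inputs(R, pk, n_bits, set(overrides))
gap = abs(image_size(R, pk, n_bits) - image_size(R_prime, pk, n_bits))
```

Since the two functions agree outside `delta_f`, the gap between the image sizes can never exceed `len(delta_f)`, whatever values the oracle happens to return.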

Lemma 5

Given \(\hat{Q}_H\supseteq Q_H\), we can decide correctly whether \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) is lossy or injective with overwhelming probability.

Proof

As described in Sect. 3.1, we give the adversary, who has to distinguish a lossy key from an injective key, access to \(\textrm{PSPACE}^{\mathcal {O}^\prime }\), where \(\mathcal {O}^\prime \) is another random oracle sampled independently of \(\mathcal {O}\). This is necessary for the adversary, as we want to calculate the image size of \(\textsf{Eval}^{\mathcal {O}^\prime }(\textsf{pk},\cdot )\) relative to a random oracle \(\mathcal {O}^\prime \), and we cannot do this in \(\textrm{PSPACE}\) with lazy sampling.

We will consider the following adversary \(\mathcal {A}\): It defines an oracle \(\mathcal {O}^\prime _{|\hat{Q}_H}\) that is identical to \(\mathcal {O}^\prime \) for all queries \(q\not \in \hat{Q}_H\) and identical to \(\mathcal {O}\) for all queries \(q\in \hat{Q}_H\). Then, it calculates the image size

$$\#im\left( \textsf{Eval}^{\mathcal {O}^\prime _{|\hat{Q}_H}}(\textsf{pk},\cdot )\right) .$$

Note that this can be done efficiently using \(\textrm{PSPACE}^{\mathcal {O}^\prime }\) as well as polynomially many queries to \(\mathcal {O}\). If \(\#im(\textsf{Eval}^{\mathcal {O}^\prime _{|\hat{Q}_H}}(\textsf{pk},\cdot ))\) is bigger than \(\frac{3}{4} 2^{\text {in}(\lambda )}\), \(\mathcal {A}\) will guess that \(\textsf{Eval}^\mathcal {O}(\textsf{pk},\cdot )\) is injective, and lossy otherwise. For simplicity, we will assume from now on that \(\textsf{pk}\) was generated by \(\mathsf {Gen_{inj}^{}}\); the case where \(\textsf{pk}\) was generated by \(\mathsf {Gen_{loss}^{}}\) follows by a symmetric argument.

First, assume that all queries \(Q_G\) of the generator are included in \(\hat{Q}_H\). In this case, any \(\mathcal {O}^\prime \) that is consistent with \(\hat{Q}_H\) is also consistent with all the information \(\mathsf {Gen_{inj}^{}}\) has about \(\mathcal {O}\). However, this means that by definition, \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) has to be injective with overwhelming probability, and therefore, an adversary can easily check whether \(\textsf{pk}\) was created by \(\mathsf {Gen_{inj}^{}}\).

Otherwise, let \(q_1,\dots ,q_{k^\prime }\) be a set of queries in \(Q_G\) which are not included in \(\hat{Q}_H\). With overwhelming probability, this means that \(q_1,\dots ,q_{k^\prime }\) are all non-heavy. We now apply Lemma 4 for oracles \(\mathcal {R}:=\mathcal {O}^{\prime }_{|\hat{Q}_H}\) and \(\mathcal {R}^\prime :=\mathcal {O}^{\prime }_{|\hat{Q}_H\cup Q_G}\). These two oracles may only differ on the non-heavy queries in \(Q_G\), where \(\mathcal {R}\) coincides with \(\mathcal {O}^\prime \) and \(\mathcal {R}^\prime \) coincides with \(\mathcal {O}\); otherwise the oracles are identical. Lemma 4 tells us that this will change the image size by at most \(\frac{2^{\text {in}(\lambda )}}{10}\). Therefore, with overwhelming probability, the image size calculated by the distinguisher is bounded from below by

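$$2^{\text {in}(\lambda )}-\frac{2^{\text {in}(\lambda )}}{10}=\frac{9}{10}\cdot 2^{\text {in}(\lambda )}>\frac{3}{4}\cdot 2^{\text {in}(\lambda )},$$

and the distinguisher will therefore correctly decide that \(\textsf{Eval}^{\mathcal {O}}(\textsf{pk},\cdot )\) is in injective mode. \(\square \)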
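The overall attack can be sketched on a toy candidate. Everything in the block below is a hypothetical illustration rather than a construction from the paper: the oracles are modelled by domain-separated SHA-256, the candidate hides its mode behind one oracle bit, and brute-force enumeration over a tiny domain stands in for the \(\textrm{PSPACE}^{\mathcal {O}^\prime }\) oracle.

```python
import hashlib
import random

def make_oracle(tag: bytes):
    """Toy stand-in for a random oracle; different tags act as independent oracles."""
    def oracle(q: bytes) -> int:
        return int.from_bytes(hashlib.sha256(tag + b"|" + q).digest()[:4], "big")
    return oracle

def gen(oracle, mode: str, rng):
    """Hypothetical Gen: masks the mode bit with one oracle-derived bit."""
    s = rng.randrange(2 ** 16)
    mask = oracle(b"mask:%d" % s) & 1
    return (s, mask ^ (1 if mode == "loss" else 0))

def eval_fn(oracle, pk, x: int, log=None):
    """Hypothetical Eval: unmasks the mode, then merges input pairs if lossy."""
    s, c = pk
    q_mask = b"mask:%d" % s
    lossy = (oracle(q_mask) & 1) ^ c
    z = x >> 1 if lossy else x
    q_val = b"val:%d" % z
    if log is not None:
        log.update([q_mask, q_val])
    return (z, oracle(q_val))

def distinguish(O, O_prime, pk, n_bits: int, t: int, rng) -> str:
    q_hat = set()
    for _ in range(t):                       # Lemma 3: sample to collect heavy queries
        eval_fn(O, pk, rng.randrange(2 ** n_bits), log=q_hat)
    answers = {q: O(q) for q in q_hat}       # polynomially many queries to O
    patched = lambda q: answers[q] if q in answers else O_prime(q)
    # Brute force over the tiny domain stands in for the PSPACE oracle.
    image = {eval_fn(patched, pk, x) for x in range(2 ** n_bits)}
    return "inj" if len(image) > 0.75 * 2 ** n_bits else "loss"

O, O_prime = make_oracle(b"O"), make_oracle(b"O-prime")
rng = random.Random(0)
guesses = {mode: distinguish(O, O_prime, gen(O, mode, rng), 8, 50, rng)
           for mode in ("inj", "loss")}
```

The mask query is asked on every input and is therefore always contained in the sampled set, so the patched oracle recovers the correct mode even though all other answers come from the independent oracle.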

Theorem 2

Let \(\mathcal {O}\) and \(\mathcal {O}^\prime \) be two independent random oracles. Then, with overwhelming probability over the choice of the two random oracles, lossy functions do not exist relative to the oracles \(\mathcal {O}\) and \(\textrm{PSPACE}^{\mathcal {O}^\prime }\).

Proof

Given the key \(\textsf{pk}\), our distinguisher (with oracle access to random oracle \(\mathcal {O}\)) against the injective and lossy mode first runs the algorithm of Lemma 3 to efficiently construct a superset \(\hat{Q}_H\) of the heavy queries \(Q_H\) for \(\textsf{pk},\mathcal {O}\). This succeeds with overwhelming probability, and from now on we assume that indeed \(Q_H\subseteq \hat{Q}_H\). Then our algorithm continues by running the decision procedure of Lemma 5 to distinguish the cases. Using the \(\textrm{PSPACE}^{\mathcal {O}^\prime }\) oracle, the latter can also be carried out efficiently. \(\square \)

3.4 Fixing an Oracle

We have shown now (in Theorem 2) that no lossy function exists relative to a random oracle with overwhelming probability. However, to prove our main theorem, we have to show that there exists one fixed oracle relative to which one-way functions (or collision-resistant hash functions, or one-way product functions) exist, but lossy functions do not.

In Lemma 2, we have already shown that (exponentially-secure) one-way functions, collision-resistant hash functions and one-way product functions exist relative to a random oracle with high probability. In the next lemma, we will show that there exists a fixed oracle relative to which exponentially-secure one-way functions exist, but lossy functions do not. The proofs for existence of oracles relative to which exponentially-secure collision-resistant hash functions or one-way product functions, but no lossy functions exist follow similarly.

Lemma 6

There exists a fixed set of oracles \(\mathcal {O}\), \(\textrm{PSPACE}^{\mathcal {O}^\prime }\) such that relative to these oracles, one-way functions using \(\mathcal {O}\) exist, but no construction of lossy functions from \(\mathcal {O}\) exists.

Now, our main theorem of this section directly follows from this lemma (and its variants for the other primitives):

Theorem 1 (restated)

There exists no fully black-box construction of lossy functions from any Oraclecrypt primitive, including exponentially-secure one-way functions, collision resistant hash functions, and one-way product functions.

The proofs of Lemma 6 and Theorem 1 follow from standard techniques for fixing oracles and can be found in the full version [13].

4 On the Impossibility of Building Key Agreement Protocols from (Extremely) Lossy Functions

In the previous section we showed that lossy functions cannot be built from many symmetric primitives in a black-box way. This raises the question of whether lossy functions and extremely lossy functions might be inherently asymmetric primitives. In this section we provide evidence to the contrary, showing that key agreement cannot be built from lossy functions in a black-box way. For this, we adapt to our setting the proof by Impagliazzo and Rudich [20] showing that key agreement cannot be built from one-way functions. We extend this result to also hold for extremely lossy functions, but in a slightly weaker setting.

4.1 Lossy Function Oracle

We specify our lossy function oracle relative to a (random) permutation oracle \(\varPi \), and further sample (independently of \(\varPi \)) a second random permutation \(\varGamma \) as integral part of our lossy function oracle. The core idea of the oracle is to evaluate \(\mathsf {Eval^{\varGamma ,\varPi }}(\textsf{pk}_{\textsf{inj}},x)=\varPi (\textsf{pk}_{\textsf{inj}}\Vert ax+b)\) for the injective mode, but set \(\mathsf {Eval^{\varGamma ,\varPi }}(\textsf{pk}_{\textsf{loss}},x)=\varPi (\textsf{pk}_{\textsf{loss}}\Vert \textsf{setlsb}(ax+b))\) for the lossy mode, where a, b describe a pairwise independent hash permutation \(ax+b\) over the field \({{\,\textrm{GF}\,}}(2^{\mu })\) with \(a\ne 0\) and \(\textsf{setlsb}\) sets the least significant bit to 0. Then the lossy function is clearly two to one. The values a, b will be chosen during key generation and placed into the public key, but we need to hide them from the adversary in order to make the keys of the two modes indistinguishable. Else a distinguisher, given \(\textsf{pk}\), could check if \(\mathsf {Eval^{\varGamma ,\varPi }}(\textsf{pk},x)=\mathsf {Eval^{\varGamma ,\varPi }}(\textsf{pk},x')\) for appropriately computed \(x\ne x'\) with \(\textsf{setlsb}(ax+b)=\textsf{setlsb}(ax'+b)\). Therefore, we will use the secret permutation \(\varGamma \) to hide the values in the public key. We will denote the preimage of \(\textsf{pk}\) under \(\varGamma \) as pre-key.

Another feature of our construction is to ensure that the adversary cannot generate a lossy key \(\textsf{pk}_{\textsf{loss}}\) without calling \(\mathsf {Gen^{\varGamma ,\varPi }}\) in lossy mode, while allowing it to generate keys in injective mode. We accomplish this by having a value k in our public pre-key that is zero for lossy keys and may take any non-zero value for an injective public key. Therefore, with overwhelming probability, any key generated by the adversary without a call to the \(\mathsf {Gen^{\varGamma ,\varPi }}\) oracle will be an injective key.

We finally put both ideas together. For key generation we hide a, b and also the string k by creating \(\textsf{pk}\) as a commitment to the values, \(\textsf{pk}\leftarrow \varGamma (k\Vert a\Vert b\Vert z)\) for random z. To unify calls to \(\varGamma \) in regard of the security parameter \(\lambda \), we will choose all entries to have length roughly \(\lambda /5\) (see Footnote 2). When receiving \(\textsf{pk}\), the evaluation algorithm \(\mathsf {Eval^{\varGamma ,\varPi }}\) first recovers the preimage \(k\Vert a\Vert b\Vert z\) under \(\varGamma \), then checks if k signals injective or lossy mode, and then computes \(\varPi (a\Vert b\Vert ax+b)\) resp. \(\varPi (a\Vert b\Vert \textsf{setlsb}(ax+b))\) as the output.

Definition 6 (Lossy Function Oracle)

Let \(\varPi ,\varGamma \) be permutation oracles with \(\varPi ,\varGamma : \{0,1\}^\lambda \rightarrow \{0,1\}^\lambda \) for all \(\lambda \). Let \(\mu =\mu (\lambda )={\lfloor }(\lambda -2)/5{\rfloor }\) and \(\textsf{pad}=\textsf{pad}(\lambda )= \lambda -2-5\mu \) define the length that the rounding-off loses to \(\lambda -2\) in total (such that \(\textsf{pad}\in \{0,1,2,3,4\}\)). Define the lossy function \((\mathsf {Gen^{\varGamma ,\varPi }},\mathsf {Eval^{\varGamma ,\varPi }})\) with input length \(\text {in}(\lambda )=\mu (\lambda )\) relative to \(\varPi \) and \(\varGamma \) now as follows:

- Key Generation: Oracle \(\mathsf {Gen^{\varGamma ,\varPi }}\) on input \(1^\lambda \) and either mode \(\textsf{inj}\) or \(\textsf{loss}\) picks random \(b\in \{0,1\}^\mu \), \(z\in \{0,1\}^{2\mu +\textsf{pad}}\), and random \(a,k\in \{0,1\}^\mu \setminus \{0^\mu \}\). For mode \(\textsf{inj}\) the algorithm returns \(\varGamma (k \Vert a\Vert b\Vert z)\). For mode \(\textsf{loss}\) the algorithm returns \(\varGamma (0^\mu \Vert a\Vert b\Vert z)\) instead.

- Evaluation: On input \(\textsf{pk}\in \{0,1\}^\lambda \) and \(x\in \{0,1\}^\mu \) algorithm \(\mathsf {Eval^{\varGamma ,\varPi }}\) first recovers (via exhaustive search) the preimage \(k\Vert a\Vert b\Vert z\) of \(\textsf{pk}\) under \(\varGamma \) for \(k,a,b\in \{0,1\}^\mu \), \(z\in \{0,1\}^{2\mu +\textsf{pad}}\). Check that \(a\ne 0\) in the field \(\text {GF}(2^\mu )\). If this check fails, return \(\bot \). Otherwise, check if \(k=0^\mu \). If so, return \(\varPi (a \Vert b \Vert \textsf{setlsb}(ax+b))\), else return \(\varPi (a \Vert b \Vert ax+b)\).
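A toy instantiation of this oracle may help to see why the lossy mode is exactly two-to-one. The sketch below makes several simplifying assumptions that are not part of Definition 6: it fixes \(\mu =4\) with the irreducible polynomial \(x^4+x+1\), models \(\varGamma \) and \(\varPi \) by lazily sampled injective maps on tuples (class `LazyInjection`, a hypothetical helper), and rejects keys that were never generated instead of inverting \(\varGamma \) by exhaustive search.

```python
import random

MU = 4            # toy field GF(2^4)
IRRED = 0b10011   # x^4 + x + 1, irreducible over GF(2)

def gf_mul(a: int, b: int) -> int:
    """Multiplication in GF(2^MU) via shift-and-add with modular reduction."""
    r = 0
    while b:
        if b & 1:
            r ^= a
        b >>= 1
        a <<= 1
        if a >> MU:
            a ^= IRRED
    return r

def setlsb(v: int) -> int:
    """Set the least significant bit to 0."""
    return v & ~1

class LazyInjection:
    """Lazily sampled injective map to out_bits-bit integers, with inverse lookup."""
    def __init__(self, out_bits: int, rng):
        self.fwd, self.inv, self.out_bits, self.rng = {}, {}, out_bits, rng

    def __call__(self, x):
        if x not in self.fwd:
            y = self.rng.getrandbits(self.out_bits)
            while y in self.inv:              # resample to stay injective
                y = self.rng.getrandbits(self.out_bits)
            self.fwd[x], self.inv[y] = y, x
        return self.fwd[x]

class LossyFunctionOracle:
    def __init__(self, lam: int, rng):
        self.rng = rng
        self.mu = (lam - 2) // 5
        assert self.mu == MU, "this toy only supports mu = 4"
        self.pad = lam - 2 - 5 * self.mu
        self.gamma = LazyInjection(lam, rng)  # stand-in for Gamma
        self.pi = LazyInjection(lam, rng)     # stand-in for Pi

    def gen(self, mode: str) -> int:
        a = self.rng.randrange(1, 2 ** self.mu)          # a != 0
        b = self.rng.randrange(2 ** self.mu)
        k = self.rng.randrange(1, 2 ** self.mu) if mode == "inj" else 0
        z = self.rng.getrandbits(2 * self.mu + self.pad)
        return self.gamma((k, a, b, z))

    def eval(self, pk: int, x: int):
        pre = self.gamma.inv.get(pk)
        if pre is None:        # toy simplification: unknown keys are rejected
            return None
        k, a, b, _ = pre
        if a == 0:
            return None
        v = gf_mul(a, x) ^ b   # ax + b over GF(2^mu)
        return self.pi((a, b, setlsb(v) if k == 0 else v))

oracle = LossyFunctionOracle(22, random.Random(1))
pk_inj, pk_loss = oracle.gen("inj"), oracle.gen("loss")
inj_image = {oracle.eval(pk_inj, x) for x in range(2 ** MU)}
loss_image = {oracle.eval(pk_loss, x) for x in range(2 ** MU)}
```

Because \(x\mapsto ax+b\) is a permutation of the field for \(a\ne 0\), clearing the last bit merges the inputs into pairs, which halves the image.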

We now show that there exist permutations \(\varPi \) and \(\varGamma \) such that relative to \(\varPi \) and the lossy function oracle \((\mathsf {Gen^{\varGamma ,\varPi }},\mathsf {Eval^{\varGamma ,\varPi }})\), lossy functions exist, but key agreement does not. We will rely on the seminal result by Impagliazzo and Rudich [20] showing that no key agreement exists relative to a random permutation. Note that we do not give direct access to \(\varGamma \)—it will only be accessed by the lossy functions oracle and is considered an integral part of it.

The following lemma is the technical core of our results. It says that the partly exponential steps of the lossy-function oracles \(\mathsf {Gen^{\varGamma ,\varPi }}\) and \(\mathsf {Eval^{\varGamma ,\varPi }}\) in our construction can be simulated sufficiently closely and efficiently through a stateful algorithm \(\textsf{Wrap}\), given only oracle access to \(\varPi \), even if we filter out the mode for key generation calls. For this we define security experiments as efficient algorithms \(\textsf{Game}_{}\) with oracle access to an adversary \(\mathcal {A}\) and lossy function oracles \(\mathsf {Gen^{\varGamma ,\varPi }},\mathsf {Eval^{\varGamma ,\varPi }},\varPi \) and which produce some output, usually indicating if the adversary has won or not. We note that we can assume for simplicity that \(\mathcal {A}\) makes oracle queries to the lossy function oracles and \(\varPi \) via the game only. Algorithm \(\textsf{Wrap}\) will be black-box with respect to \(\mathcal {A}\) and \(\textsf{Game}_{}\) but needs to know the total number \(p(\lambda )\) of queries the adversary and the game make to the primitive and the quality level \(\alpha (\lambda )\) of the simulation upfront.

Lemma 7 (Simulation Lemma)

Let \(\textsf{Filter}\) be a deterministic algorithm which for calls \((1^\lambda ,\textsf{mode})\) to \(\mathsf {Gen^{\varGamma ,\varPi }}\) only outputs \(1^\lambda \) and leaves any input to calls to \(\mathsf {Eval^{\varGamma ,\varPi }}\) and to \(\varPi \) unchanged. For any polynomial \(p(\lambda )\) and any inverse polynomial \(\alpha (\lambda )\) there exists an efficient algorithm \(\textsf{Wrap}\) such that for any efficient algorithm \(\mathcal {A}\), any efficient experiment \(\textsf{Game}_{}\) making at most \(p(\lambda )\) calls to the oracle, the statistical distance between \(\textsf{Game}_{}^{\mathcal {A},(\mathsf {Gen^{\varGamma ,\varPi }},\mathsf {Eval^{\varGamma ,\varPi }},\varPi )}(1^\lambda )\) and \(\textsf{Game}_{}^{\mathcal {A},\textsf{Wrap}^{\mathsf {Gen^{\varGamma ,\varPi }},\varPi }\circ \textsf{Filter}}\) is at most \(\alpha (\lambda )\). Furthermore \(\textsf{Wrap}\) initially makes a polynomial number of oracle calls to \(\mathsf {Gen^{\varGamma ,\varPi }}\), but then makes at most two calls to \(\varPi \) for each query.

In fact, since \(\mathsf {Gen^{\varGamma ,\varPi }}\) is efficient relative to \(\varGamma \), and \(\textsf{Wrap}\) only makes calls to \(\mathsf {Gen^{\varGamma ,\varPi }}\) for all values up to a logarithmic length \(L_0\), we can also write \(\textsf{Wrap}^{\varGamma _{|L_0},\varPi }\) to denote the limited access to the \(\varGamma \)-oracle. We also note that the (local) state of \(\textsf{Wrap}\) only consists of such small preimage-image pairs of \(\varGamma \) and \(\varPi \) for such small values (but \(\textsf{Wrap}\) later calls \(\varPi \) also about longer inputs).

Proof

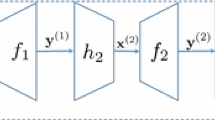

The proof strategy is to process queries of \(\textsf{Game}_{}\) and \(\mathcal {A}\) efficiently given only access to \(\varPi \), making changes to the oracle gradually, depending on the type of query. The changes will be actually implemented by our stateful algorithm \(\textsf{Wrap}\), and eventually we will add \(\textsf{Filter}\) at the end. To do so, we will perform a series of game hops where we change the behavior of the key generation and evaluation oracles. For each game \(\textsf{Game}_{1},\textsf{Game}_{2},\dots \) let \({\textsf{Game}_{i}(\lambda )}\) be the randomized output of the game with access to \(\mathcal {A}\). Let \(p(\lambda )\) denote the total number of oracle queries the game itself and \(\mathcal {A}\) make through the game, and let \(\textsf{Game}_{0}(\lambda )\) be the original attack of \(\mathcal {A}\) with the defined oracles. The final game will then immediately give our algorithm \(\textsf{Wrap}\) with the upstream \(\textsf{Filter}\). We give an overview of all the game hops in Fig. 2.

\(\textsf{Game}_{1}\). In the first game hop we let \(\textsf{Wrap}\) collect all information about very short queries (of length related to \(L_0\)) in a list and use this list to answer subsequent queries. Change the oracles as follows. Let

Then our current version of algorithm \(\textsf{Wrap}\), upon initialization, queries \(\varPi \) about all inputs of size at most \(2L_0\) and stores the list of queries and answers. The reason for using \(2L_0\) is that the evaluation algorithm takes as input a key of security parameter \(\lambda \) and some input of size \(\mu \approx \lambda /5\), such that we safely cover all evaluations for keys of security size \(\lambda \le L_0\).

Further, for any security parameter less than \(2L_0\), our algorithm queries \(\mathsf {Gen^{\varGamma ,\varPi }}\) a total of \(\lambda 2^{2 L_0}\) times; recall that we do not assume that parties have direct access to \(\varGamma \) but only via \(\mathsf {Gen^{\varGamma ,\varPi }}\). This way, for any valid key, we know that it was created at some point except with probability \((1-2^{-2L_0})^{\lambda 2^{2L_0}}\le 2^{-\lambda }\) and therefore the probability that any key was not generated is at most \(2^{L_0} 2^{-\lambda }\), which is negligible. Further, for every public key, it evaluates \(\mathsf {Eval^{\varGamma ,\varPi }}\) at \(x=0\) and uses the precomputed list for \(\varPi \) to invert, revealing the corresponding a and b. Note that all of this can be done in polynomial time.
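The per-key bound used in this step follows from the standard estimate \(1-y\le e^{-y}\). Writing \(N:=2^{2L_0}\) (a shorthand introduced here only for readability), the calculation reads:

$$\left( 1-\frac{1}{N}\right) ^{\lambda N} \le \left( e^{-1/N}\right) ^{\lambda N} = e^{-\lambda } \le 2^{-\lambda }.$$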

Any subsequent query to \(\mathsf {Gen^{\varGamma ,\varPi }}\) for security parameter at most \(L_0\), as well as to \(\mathsf {Eval^{\varGamma ,\varPi }}\) for public keys of size at most \(L_0\) (which corresponds to a key for security parameter at most \(L_0\)), as well as to \(\varPi \) for inputs of size at most \(2L_0\), is answered by looking up all necessary data in the list. If any data is missing, we will return \(\bot \). Note that as long as we do not return \(\bot \), this is only a syntactical change. As returning \(\bot \) happens at most with negligible probability over the randomness of \(\textsf{Wrap}\),

From now on we will implicitly assume that queries of short security length up to \(L_0\) are answered genuinely with the help of tables and do not mention this explicitly anymore.

\(\textsf{Game}_{2}\). In this game, we will stop using the lossy function oracles altogether, and instead introduce a global state for the \(\textsf{Wrap}\) algorithm. Note that this state will be shared between all parties having access to the oracles (via \(\textsf{Wrap}\)). Now, for every call to \(\mathsf {Gen^{\varGamma ,\varPi }}\), we do the following: If the key is created in injective mode, \(\textsf{Wrap}\) will sample random \(b\in \{0,1\}^\mu \) and random \(a,k\in \{0,1\}^\mu \setminus \{0^\mu \}\); if the key is created in lossy mode, it sets \(k=0^\mu \) instead. Further, it samples a public key \(\textsf{pk}\in \{0,1\}^\lambda \) uniformly at random, and sets the state \(\textsf{st}_\textsf{pk}\leftarrow (k,a,b)\). Finally it returns \(\textsf{pk}\). Any call to \(\mathsf {Eval^{\varGamma ,\varPi }}(\textsf{pk},x)\) will be handled as follows: First, \(\textsf{Wrap}\) checks whether a state for \(\textsf{pk}\) exists. If this is not the case, we generate random \(k,a,b\in \{0,1\}^\mu \) (with checking that \(a\ne 0\)) and save \(\textsf{st}_\textsf{pk}\leftarrow (k,a,b)\). Then, we read \((k,a,b)\leftarrow \textsf{st}_\textsf{pk}\) from the (possibly just initialized) state and return \(\varPi (a \Vert b \Vert ax+b)\).

What algorithm \(\textsf{Wrap}\) does here can be seen as emulating \(\varGamma \). However, there are two differences: We do not sample z, and we allow for collisions. The collisions can be of either of two types: Either we sample the same (random) public key \(\textsf{pk}=\textsf{pk}'\) but for different state values \((k,a,b)\ne (k',a',b')\), or we sample the same values \((k,a,b)=(k',a',b')\) but end up with different public keys \(\textsf{pk}\ne \textsf{pk}'\). In this case, an algorithm that finds such a collision of size at least \(\mu \) for \(\mu \ge L_0/5\) —smaller values are precomputed and still answered as before— could be able to distinguish the two games. Still, the two games are statistically close since such collisions happen with probability at most \(2^{-2L_0/5 + 1}\) for each pair of generated keys:

\(\textsf{Game}_{3}\). Next, instead of generating and saving a value k depending on the lossy or injective mode, we just save a label \(\textsf{inj}\) or \(\textsf{loss}\) for the mode the key was created for. Further, whenever \(\mathsf {Eval^{\varGamma ,\varPi }}(\textsf{pk},x)\) is called on a public key without saved state, i.e., if it has not been created via key generation, then we always label this key as injective.

The only way the adversary can notice this game hop is that a self-chosen public key, not determined by key generation, will now never be lossy (or will be invalid because \(a= 0\)). However, any adversarially chosen string of size at least \(5\mu \ge L_0\) would only describe a lossy key with probability at most \(\frac{1}{2^{\mu }-p(\lambda )}\) and yield an invalid \(a=0\) with the same probability. Hence, taking into account that the adversary learns at most \(p(\lambda )\) values about \(\varGamma \) through genuinely generated keys, and the adversary makes at most \(p(\lambda )\) queries, the statistical distance between the two games is small:

\(\textsf{Game}_{4}\). Now, we remove the label \(\textsf{inj}\) or \(\textsf{loss}\) again. \(\textsf{Wrap}\) will now, for any call to \(\textsf{Eval}\), calculate everything in injective mode.

There are two ways an adversary can distinguish between the two games: Either by inverting \(\varPi \), e.g., noting that the last bit in the preimage is not as expected, or by finding a pair \(x\ne x'\) for a lossy key \(\textsf{pk}_\textsf{loss}\) such that \(\textsf{Eval}(\textsf{pk}_\textsf{loss},x) = \textsf{Eval}(\textsf{pk}_\textsf{loss},x')\) in \(\textsf{Game}_{3}\). Inverting \(\varPi \) (or guessing a and b) only succeeds with probability \(\frac{2(p(\lambda )+1)}{2^\mu }\). For the probability of finding a collision, note that viewing the random permutation \(\varPi \) as being lazily sampled shows that the answers are chosen independently of the input (except for repeating previous answers), and especially of a, b for any lossy public key of the type considered here. Hence, we can imagine choosing a, b for any possible pair of inputs only after \(x,x'\) have been determined. But then the probability of creating a collision among the \(p(\lambda )^2\) many pairs for the same key is at most \(\frac{2p(\lambda )^2}{2^\mu }\) for \(\mu > L_0/5\). Therefore, the distance between these two games is bounded by

\(\textsf{Game}_{5}\). We split the random permutation \(\varPi \) to have two oracles. For \(\beta \in \{0,1\}\) and \(x\in \{0,1\}^{5\mu }\), we now define \(\varPi _\beta (x) = \varPi (\beta \Vert x)_{1\dots 5\mu -1}\), i.e., we add a prefix \(\beta \) and drop the last bit. We now replace any use of \(\varPi \) in \(\textsf{Wrap}\), including direct queries to \(\varPi \), by \(\varPi _1\).
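The splitting step can be illustrated on a small permutation. The sketch below follows the verbal description (prepend the bit \(\beta \), drop the last output bit); the table-based permutation, the helper names, and the toy size of 6 input bits are assumptions made for illustration.

```python
import random
from collections import Counter

def random_permutation(bits: int, rng) -> list:
    """A uniformly random permutation of {0, ..., 2^bits - 1} as a lookup table."""
    table = list(range(2 ** bits))
    rng.shuffle(table)
    return table

def split_oracle(pi_table: list, n_bits: int, beta: int):
    """Pi_beta(x): prefix the input with beta, then drop the last output bit."""
    def pi_beta(x: int) -> int:
        return pi_table[(beta << n_bits) | x] >> 1
    return pi_beta

N_BITS = 6
pi = random_permutation(N_BITS + 1, random.Random(0))
pi_0, pi_1 = split_oracle(pi, N_BITS, 0), split_oracle(pi, N_BITS, 1)

# How often each truncated output is hit across both oracles together.
hits = Counter(f(x) for f in (pi_0, pi_1) for x in range(2 ** N_BITS))
# How often each output is hit by pi_1 alone.
hits_1 = Counter(pi_1(x) for x in range(2 ** N_BITS))
```

Every truncated output is hit exactly twice across both oracles together, so each single oracle can collide on at most one pair per output. On this tiny domain such collisions are common; the proof relies on the domain being large enough for them to be hard to find.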

If \(\varPi _1\) were a permutation, this would be a perfect simulation. However, \(\varPi _1\) is not even injective anymore, but finding a collision is still very unlikely (as random functions are collision resistant). In particular, using once more that we only look at sufficiently large values, the statistical distance of the games is still small:

\(\textsf{Game}_{6}\). Next, we stop using the global state \(\textsf{st}\) for information about the values related to a public key (except for keys of security parameter at most \(L_0\)). The wrapper for \(\textsf{Gen}\) now only generates a uniformly random \(\textsf{pk}\) and returns it. For \(\textsf{Eval}\) calls, \(\textsf{Wrap}\) instead calculates \(a\Vert b\leftarrow \varPi _0(\textsf{pk})\) on the fly. Note that there is a small probability of \(2^{-L_0/5+1}\) of \(a=0\), yielding an invalid key. Except for this, since the adversary does not have access to \(\varPi _0\), this game otherwise looks completely identical to the adversary:

\(\textsf{Game}_{7}\). For our final game, we use \(\varPi _0\) to evaluate the lossy function:

Note that, as \(\mathcal {A}\) has no access to \(\varPi _0\), calls to \(\textsf{Eval}\) in \(\textsf{Game}_{7}\) are random for \(\mathcal {A}\). For \(\textsf{Game}_{6}\), calls to \(\textsf{Eval}\) look random as long as \(\mathcal {A}\) does not invert \(\varPi _1\), which happens at most with probability \(\frac{2(p(\lambda )+1)}{2^\mu }\). Therefore, the statistical distance between the two games is bounded by

In the final game the algorithm \(\textsf{Wrap}\) no longer needs to save any state related to large public keys, and it behaves identically for the lossy and injective generators. We can therefore safely add our algorithm \(\textsf{Filter}\), stripping off the mode before passing key generation requests to \(\textsf{Wrap}\). Summing up the statistical distances we obtain a maximal statistical distance of \(\frac{7}{8}\alpha (\lambda )\le \alpha (\lambda )\) between the original game and the one with our algorithms \(\textsf{Wrap}\) and \(\textsf{Filter}\). \(\square \)

We next argue that the simulation lemma allows us to conclude immediately that the function oracle in Definition 6 is indeed a lossy function:

Theorem 3

The function in Definition 6 is a lossy function for lossiness parameter 2.

The proof can be found in the full version [13].

4.2 Key Exchange

We next argue that, given our oracle-based lossy function from the previous section, one cannot build a secure key agreement protocol based only on this lossy function (even when also having access to \(\varPi \)). The line of reasoning follows the one in the renowned work by Impagliazzo and Rudich [20]. They show that one cannot build a secure key agreement protocol between Alice and Bob, given only a random permutation oracle \(\varPi \). To this end they argue that, if we can find \(\textsf{NP}\)-witnesses efficiently, say, if we have access to a \(\textrm{PSPACE}\) oracle, then the adversary with oracle access to \(\varPi \) can efficiently compute Alice's key given only a transcript of a protocol run between Alice and Bob (both having access to \(\varPi \)).

We use the same argument as in [20] here, noting that according to our Simulation Lemma 7 we could replace the lossy function oracle relative to \(\varPi \) by our algorithm \(\textsf{Wrap}^\varPi \). This, however, requires some care, especially as \(\textsf{Wrap}\) does not provide access to the original \(\varPi \).

We first define (weakly) secure key exchange protocols relative to some oracle (or a set of oracles) \(\mathcal {O}\). We assume that we have an interactive protocol \(\left\langle \text {Alice} ^\mathcal {O},\text {Bob} ^\mathcal {O}\right\rangle \) between two efficient parties, both having access to the oracle \(\mathcal {O}\). The interactive protocol execution for security parameter \(1^\lambda \) runs the interactive protocol between \(\text {Alice} ^\mathcal {O}(1^\lambda ;z_A)\) with randomness \(z_A\) and \(\text {Bob} ^\mathcal {O}(1^\lambda ;z_B)\) with randomness \(z_B\), and we define the output to be a triple \((k_A,T,k_B)\leftarrow \left\langle \text {Alice} ^\mathcal {O}(1^\lambda ;z_A),\text {Bob} ^\mathcal {O}(1^\lambda ;z_B)\right\rangle \), where \(k_A\) is the local key output by Alice, \(T\) is the transcript of communication between the two parties, and \(k_B\) is the local key output by Bob. When talking about probabilities over this output we refer to the random choice of randomness \(z_A\) and \(z_B\).
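To make the shape of a protocol execution concrete, here is a minimal sketch of a deliberately insecure toy protocol that produces a triple \((k_A,T,k_B)\). Everything in it is an assumption for illustration: SHA-256 merely stands in for the oracle \(\mathcal {O}\), and the names `oracle`, `sample_nonce`, `run_protocol` and `eavesdropper` are hypothetical.

```python
import hashlib
import random

def oracle(query: bytes) -> bytes:
    """Hypothetical stand-in for the oracle O (a placeholder, not a random oracle)."""
    return hashlib.sha256(query).digest()

def sample_nonce(lam: int, z: int) -> bytes:
    """Derive a party's message deterministically from its randomness z."""
    rng = random.Random(z)
    return bytes(rng.getrandbits(8) for _ in range(lam // 8))

def run_protocol(lam: int, z_a: int, z_b: int):
    """Run the toy protocol and return the triple (k_A, T, k_B)."""
    msg_a = sample_nonce(lam, z_a)       # Alice -> Bob
    msg_b = sample_nonce(lam, z_b)       # Bob -> Alice
    transcript = (msg_a, msg_b)          # T: everything sent over the wire
    k_a = oracle(msg_a + msg_b)          # Alice's local key
    k_b = oracle(msg_a + msg_b)          # Bob's local key
    return k_a, transcript, k_b

def eavesdropper(lam: int, transcript) -> bytes:
    """An adversary that sees only T and has access to the same oracle."""
    msg_a, msg_b = transcript
    return oracle(msg_a + msg_b)

k_a, T, k_b = run_protocol(128, z_a=1, z_b=2)
print("keys agree:", k_a == k_b)
print("eavesdropper recovers k_A:", eavesdropper(128, T) == k_a)
```

The toy protocol always produces matching keys, yet an eavesdropper recomputes the key from \(T\) alone, so agreement on its own says nothing about security. The probabilities in the definitions are taken over the choice of `z_a` and `z_b`.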

Note that we define completeness in a slightly non-standard way by allowing the protocol to create non-matching keys with a polynomial (but non-constant) probability, compared to the negligible probability the standard definition would allow. The main motivation for this definition is that it makes our proof easier, but as we will prove a negative result, this relaxed definition makes our result even stronger.

Definition 7

A key agreement protocol \(\left\langle \text {Alice},\text {Bob} \right\rangle \) relative to an oracle \(\mathcal {O}\) is

- complete if there exists an at least linear polynomial \(p(\lambda )\) such that for all large enough security parameters \(\lambda \):

- secure if for any efficient adversary \(\mathcal {A}\) the probability that

  is negligible.
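For orientation, the two conditions of Definition 7 can be written out as follows. This is a sketch inferred from the surrounding prose (keys differing with probability at most \(\frac{1}{p(\lambda )}\), and an adversary computing Alice's key from the transcript); the exact notation is an assumption and may differ from the full version [13].

```latex
% Completeness (assumed form): the keys differ with probability at most 1/p(lambda).
\Pr\left[\, k_A \ne k_B \;:\; (k_A,T,k_B)\leftarrow
  \left\langle \text{Alice}^{\mathcal{O}}(1^\lambda;z_A),
               \text{Bob}^{\mathcal{O}}(1^\lambda;z_B)\right\rangle \right]
  \;\le\; \frac{1}{p(\lambda)}

% Security (assumed form): the event whose probability must be negligible.
\Pr\left[\, \mathcal{A}^{\mathcal{O}}(1^\lambda,T) = k_A \;:\; (k_A,T,k_B)\leftarrow
  \left\langle \text{Alice}^{\mathcal{O}}(1^\lambda;z_A),
               \text{Bob}^{\mathcal{O}}(1^\lambda;z_B)\right\rangle \right]
```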

Theorem 4

There exist random oracles \(\varPi \) and \(\varGamma \) such that relative to \(\mathsf {Gen^{\varGamma ,\varPi }}\), \(\mathsf {Eval^{\varGamma ,\varPi }}\), \(\varPi \) and \(\textrm{PSPACE}\), the function oracle \((\mathsf {Gen^{\varGamma ,\varPi }},\mathsf {Eval^{\varGamma ,\varPi }})\) from Definition 6 is a lossy function, but no construction of secure key agreement from \(\mathsf {Gen^{\varGamma ,\varPi }}\), \(\mathsf {Eval^{\varGamma ,\varPi }}\) and \(\varPi \) exists.

From this theorem and using the two-oracle technique, the following corollary follows directly:

Corollary 1

There exists no fully black-box construction of a secure key agreement protocol from lossy functions.

Proof (Theorem 4)

Assume, to the contrary, that a secure key agreement exists relative to these oracles. We first note that it suffices to consider adversaries in the \(\textsf{Wrap}\)-based scenario. That is, \(\mathcal {A}\) obtains a transcript \(T\) generated by the execution of \(\text {Alice} ^{\textsf{Wrap}^{\varGamma ,\varPi }\circ \textsf{Filter}}(1^\lambda ;z_A)\) with \(\text {Bob} ^{\textsf{Wrap}^{\varGamma ,\varPi }\circ \textsf{Filter}}(1^\lambda ;z_B)\) where \(\textsf{Wrap}\) is initialized with randomness \(z_W\) and itself interacts with \(\varPi \). Note that \(\textsf{Wrap}^{\varPi }\circ \textsf{Filter}\) is efficiently computable and only requires local state (holding the oracle tables for small values), so we can interpret the wrapper as part of Alice and Bob without needing any additional communication between the two parties (see Fig. 3).

We now prove the following two statements about the key agreement protocol in the wrapped mode:

1. For non-constant \(\alpha (\lambda )\), the protocol \(\langle \text {Alice} ^{\textsf{Wrap}^{\varGamma ,\varPi }\circ \textsf{Filter}},\text {Bob} ^{\textsf{Wrap}^{\varGamma ,\varPi }\circ \textsf{Filter}}\rangle \) still fulfills the completeness property of the key agreement, i.e., at most with polynomial probability, the keys generated by \(\text {Alice} \) and \(\text {Bob} \) differ; and

2. there exists a successful adversary \(\mathcal {E}^{\textsf{Wrap}^{\varGamma ,\varPi }\circ \textsf{Filter},\textrm{PSPACE}}\) with additional \(\textrm{PSPACE}\) access, that, with at least polynomial probability, recovers the key from the transcript of \(\text {Alice} \) and \(\text {Bob} \).

If we show these two properties, we have derived a contradiction: If there exists a successful adversary against the wrapped version of the protocol, then this adversary must also be successful against the protocol with the original oracles with at most a negligible difference in the success probability – otherwise, this adversary could be used as a distinguisher between the original and the wrapped oracles, contradicting the Simulation Lemma 7.

Completeness. The first property holds by the Simulation Lemma: Assume there exists a protocol between \(\text {Alice} \) and \(\text {Bob} \) such that in the original game, the generated keys differ with probability at most \(\frac{1}{p(\lambda )}\), while in the case where we replace the access to the oracles by \(\textsf{Wrap}^{\varGamma ,\varPi }\circ \textsf{Filter}\) for some \(\alpha (\lambda )\), the keys differ with constant probability \(\frac{1}{c_\alpha }\). In such a case, we could, in a thought experiment, modify \(\text {Alice} \) and \(\text {Bob} \) to end their protocol by revealing their keys. A distinguisher could now tell from the transcripts whether the keys of the parties differ or match. Such a distinguisher would, however, be able to distinguish between the oracles and the wrapper with probability \(\frac{1}{c_\alpha }-\frac{1}{p(\lambda )}\), which is larger than \(\alpha (\lambda )\) for large enough security parameters, contradicting the Simulation Lemma.

Attack. For the second property, we will argue that the adversary by Impagliazzo and Rudich from their seminal work on key agreement from one-way functions [20] works in our case as well. For this, first note that the adversary has access to both \(\varPi _1\) (by \(\varPi \)-calls to \(\textsf{Wrap}\)) and \(\varPi _0\) (by \(\textsf{Eval}\)-calls to \(\textsf{Wrap}\)) and \(\textsf{Wrap}\) also makes the initial calls to \(\varGamma \). Combining \(\varGamma \), \(\varPi _0\) and \(\varPi _1\) into a single function, we can apply the Impagliazzo-Rudich adversary. Specifically, [20, Theorem 6.4] relates the agreement error, denoted \(\epsilon \) here, to the success probability approximately \(1-2\epsilon \) of breaking the key agreement protocol. Hence, let \(\epsilon (\lambda )\) be the at most polynomial error rate of the original key exchange protocol. We now choose \(\alpha (\lambda )\) sufficiently small such that \(\epsilon (\lambda ) + \alpha (\lambda )\) is an acceptable error rate for a key exchange, i.e., at most 1/4. Then this key exchange using the wrapped oracles is a valid key exchange using only our combined random oracle, and therefore, we can use the Impagliazzo-Rudich adversary to recover the key with non-negligible probability.
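As a quick sanity check on the parameters, the following worked inequality takes the approximate relation \(1-2\epsilon \) at face value and assumes that the wrapped protocol has agreement error at most \(\epsilon (\lambda )+\alpha (\lambda )\); it is a sketch of the arithmetic, not a restatement of [20, Theorem 6.4].

```latex
\epsilon(\lambda)+\alpha(\lambda)\;\le\;\tfrac{1}{4}
\quad\Longrightarrow\quad
1-2\bigl(\epsilon(\lambda)+\alpha(\lambda)\bigr)
\;\ge\; 1-2\cdot\tfrac{1}{4} \;=\; \tfrac{1}{2}.
```

Under these assumptions the adversary's success probability stays bounded away from zero, which is all the contradiction requires.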

Fixing the Oracles. Finally, we have to fix the random permutations \(\varPi \) and \(\varGamma \) such that the Simulation Lemma holds and the Impagliazzo-Rudich attack works. This happens again using standard techniques – see the full version [13] for a proof. \(\square \)

4.3 ELFs

We will show next that our result can also be extended to show that no fully black-box construction of key agreement from extremely lossy functions is possible. However, we are only able to show a slightly weaker result: In our separation, we only consider constructions that access the extremely lossy function on the same security parameter as used in the key agreement protocol. We call such constructions security-level-preserving. This leaves the theoretic possibility of building key agreement from extremely lossy functions of (significantly) smaller security parameters. At the same time it simplifies the proof of the Simulation Lemma for this case significantly since we can omit the step where \(\textsf{Wrap}\) samples \(\varGamma \) for all small inputs, and we can immediately work with the common negligible terms.

We start by defining an ELF oracle. In general, the oracle is quite similar to our lossy function oracle. Especially, we still distinguish between an injective and a lossy mode, and make sure that any key sampled without a call to the \(\mathsf {Gen^{\varGamma ,\varPi }_{ELF}}\) oracle will be injective with overwhelming probability. For the lossy mode, we now of course have to save the parameter r in the public key. Instead of using \(\textsf{setlsb}\) to lose one bit of information, we take the result of \(ax+b\) (calculated in \(GF(2^{\mu })\)) modulo r (calculated on the integers) to allow for the more fine-grained lossiness that is required by ELFs.

Definition 8 (Extremely Lossy Function Oracle)

Let \(\varPi ,\varGamma \) be permutation oracles with \(\varPi ,\varGamma : \{0,1\}^\lambda \rightarrow \{0,1\}^\lambda \) for all \(\lambda \). Let \(\mu =\mu (\lambda )={\lfloor }(\lambda -2)/5{\rfloor }\), and let \(\textsf{pad}=\textsf{pad}(\lambda )= \lambda -2-5\mu \) denote the number of bits that the rounding-off loses relative to \(\lambda -2\) in total (such that \(\textsf{pad}\in \{0,1,2,3,4\}\)). Define the extremely lossy function \((\mathsf {Gen^{\varGamma ,\varPi }_{ELF}},\mathsf {Eval^{\varGamma ,\varPi }_{ELF}})\) with input length \(\text {in}(\lambda )=\mu (\lambda )\) relative to \(\varGamma \) and \(\varPi \) now as follows:

- Key Generation: The oracle \(\mathsf {Gen^{\varGamma ,\varPi }_{ELF}}\), on input \(1^\lambda \) and mode r, picks random \(b\in \{0,1\}^\mu \), \(z\in \{0,1\}^{\mu +\textsf{pad}}\), and random \(a,k\in \{0,1\}^\mu \setminus \{0^\mu \}\). For mode \(r=2^{\text {in}(\lambda )}\) the algorithm returns \(\varGamma (k \Vert a\Vert b\Vert r\Vert z)\). For mode \(r<2^{\text {in}(\lambda )}\) the algorithm returns \(\varGamma (0^\mu \Vert a\Vert b\Vert r\Vert z)\) instead.

- Evaluation: On input \(\textsf{pk}\in \{0,1\}^\lambda \) and \(x\in \{0,1\}^\mu \), algorithm \(\mathsf {Eval^{\varGamma ,\varPi }_{ELF}}\) first recovers (via exhaustive search) the preimage \(k\Vert a\Vert b\Vert r \Vert z\) of \(\textsf{pk}\) under \(\varGamma \) for \(k,a,b,r\in \{0,1\}^\mu \), \(z\in \{0,1\}^{\mu +\textsf{pad}}\). It then checks that \(a\ne 0\) in the field \(\text {GF}(2^\mu )\). If any check fails, it returns \(\bot \). Otherwise, it checks whether \(k=0^\mu \). If so, it returns \(\varPi (a \Vert b \Vert (ax+b\mod r))\), else it returns \(\varPi (a \Vert b \Vert ax+b)\).
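The behaviour of the two modes can be checked on a toy instance. The sketch below is not the oracle of Definition 8 itself but a scaled-down model under several assumptions: \(\mu =3\) and \(\textsf{pad}=0\); \(\varGamma \) and \(\varPi \) are table-based permutations on \(5\mu \) and \(3\mu \) bits; an inverse table replaces the exhaustive search; the injective mode stores 0 in the r field because \(2^\mu \) does not fit into \(\mu \) bits; and a key with \(k=0\) and \(r=0\) is rejected. All function names are hypothetical.

```python
import random

MU = 3                        # toy input length in(lambda) = mu (assumption)
IRRED = 0b1011                # x^3 + x + 1, irreducible over GF(2)
MASK = (1 << MU) - 1

def gf_mul(a, b):
    """Multiply two elements of GF(2^MU) modulo IRRED."""
    res = 0
    while b:
        if b & 1:
            res ^= a
        b >>= 1
        a <<= 1
        if a & (1 << MU):
            a ^= IRRED
    return res

def make_permutation(n_bits, seed):
    rng = random.Random(seed)
    table = list(range(1 << n_bits))
    rng.shuffle(table)
    return table

GAMMA = make_permutation(5 * MU, seed=1)   # toy Gamma on k||a||b||r||z
GAMMA_INV = [0] * len(GAMMA)               # replaces the exhaustive search
for preimage, image in enumerate(GAMMA):
    GAMMA_INV[image] = preimage
PI = make_permutation(3 * MU, seed=2)      # toy Pi on a||b||y

def pack(k, a, b, r, z):
    value = 0
    for part in (k, a, b, r, z):
        value = (value << MU) | part
    return value

def unpack(value):
    parts = []
    for _ in range(5):
        parts.append(value & MASK)
        value >>= MU
    z, r, b, a, k = parts                  # extracted least significant first
    return k, a, b, r, z

def gen(r, rng):
    """Mode r == 2^MU is injective; 1 <= r < 2^MU is lossy."""
    a = rng.randrange(1, 1 << MU)          # a != 0
    b = rng.randrange(1 << MU)
    z = rng.randrange(1 << MU)
    if r == 1 << MU:
        k, r_field = rng.randrange(1, 1 << MU), 0   # toy encoding of r (assumption)
    elif 1 <= r < (1 << MU):
        k, r_field = 0, r
    else:
        raise ValueError("mode r out of range")
    return GAMMA[pack(k, a, b, r_field, z)]

def eval_elf(pk, x):
    k, a, b, r, _z = unpack(GAMMA_INV[pk])
    if a == 0 or (k == 0 and r == 0):      # second guard is a toy-only choice
        return None                        # models the bottom symbol
    y = gf_mul(a, x) ^ b                   # ax + b in GF(2^MU)
    if k == 0:
        y %= r                             # reduction over the integers
    return PI[(a << (2 * MU)) | (b << MU) | y]

def image_size(pk):
    return len({eval_elf(pk, x) for x in range(1 << MU)})

rng = random.Random(0)
print("injective image size:", image_size(gen(1 << MU, rng)))
print("lossy (r=3) image size:", image_size(gen(3, rng)))
```

Since \(x\mapsto ax+b\) is a bijection on \(\text {GF}(2^\mu )\) for \(a\ne 0\), the injective key reaches all \(2^\mu \) outputs, while the lossy key reaches exactly r of them in this toy setting.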

We can now formulate versions of Theorem 4 and Corollary 1 for the extremely lossy case.

Theorem 5

There exist random oracles \(\varPi \) and \(\varGamma \) such that relative to \(\mathsf {Gen^{\varGamma ,\varPi }_{ELF}}\), \(\mathsf {Eval^{\varGamma ,\varPi }_{ELF}}\), \(\varPi \) and \(\textrm{PSPACE}\), the extremely lossy function oracle \((\mathsf {Gen^{\varGamma ,\varPi }_{ELF}},\mathsf {Eval^{\varGamma ,\varPi }_{ELF}})\) from Definition 8 is indeed an ELF, but no security-level-preserving construction of secure key agreement from \(\mathsf {Gen^{\varGamma ,\varPi }_{ELF}}\),\(\mathsf {Eval^{\varGamma ,\varPi }_{ELF}}\) and \(\varPi \) exists.

Corollary 2

There exists no fully black-box security-level-preserving construction of a secure key agreement protocol from extremely lossy functions.

Proving Theorem 5 requires only minor modifications to the proof of Theorem 4. Indeed, the only real difference lies in a modified Simulation Lemma for ELFs, which we will formulate next, together with a proof sketch that explains where differences arise in the proof compared to the original Simulation Lemma. To stay as close to the previous proof as possible, we will continue to distinguish between an injective generator \(\mathsf {Gen_{inj}^{}}(1^\lambda )\) and a lossy generator \(\mathsf {Gen_{loss}^{}}(1^\lambda , r)\), where the latter also receives the parameter r.

Lemma 8 (Simulation Lemma (ELFs))