Abstract

Positional embedding is an effective means of injecting position information into sequential data to make the vanilla Transformer position-sensitive. Current Transformer-based models routinely use positional embedding for their position-sensitive modules while no efforts are paid to evaluating its effectiveness in specific problems. In this paper, we explore the impact of positional embedding on the vanilla Transformer and six Transformer-based variants. Since multivariate time series classification requires distinguishing the differences between time series sequences with different labels, it risks causing performance degradation to inject the same content-irrelevant position token into all sequences. Our experiments on 30 public multivariate time series classification datasets show positional embedding positively impacts the vanilla Transformer’s performance yet negatively impacts Transformer-based variants. Our findings reveal the varying effectiveness of positional embedding on different model architectures, highlighting the significance of using positional embedding cautiously in Transformer-based models.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Multivariate time series classification plays a critical role in various fields, such as gesture recognition [29], disease diagnosis [15], and brain-computer interfaces [6]. Recent years have witnessed Transformer-based methods making remarkable breakthroughs in numerous disciplines, such as natural language processing [19, 24], computer vision [1, 9], and visual-audio speech recognition [20, 22]. This success has inspired an increasing application of Transformer-based architectures [4, 16, 30] to multivariate time series classification. Besides, Transformer’s ability to perform parallel computation and leverage long-range dependencies in sequential data make it especially suitable for modeling time series data [26].

Since Transformer is position-insensitive, positional embedding was introduced to allow the model to learn the relative position of tokens. Positional embedding generally injects position information into sequence data [23]. It takes the form of sinusoidal functions of different frequencies, with each embedding dimension corresponding to a sinusoid whose wavelengths form a geometric progression. To date, positional embedding has been a routine for Transformer-based models that deal with multivariate time series classification problems.

Despite the widespread use, there have been debates [27, 31] around the necessity of positional embedding, and a comprehensive investigation of positional embedding’s effectiveness on various Transformer-based models is still to be developed. Firstly, Transformer-based models [14, 33] that contain position-sensitive modules (e.g., convolutional and recurrent layers) can automatically learn the position information, making positional embedding redundant to some extent. This point is supported by studies in other fields [18, 28] suggesting positional embedding may be unnecessary and replaced with position-sensitive layers. Secondly, positional embedding has inherent limitations that may potentially impair the classifier’s performance. Since positional embedding is hand-crafted, it may bring inductive bias that may adversely impact the model’s performance in some cases. While positional embedding injects the same position tokens into time series of different classes, it poses additional challenges to the classifier in figuring out the differences between sequences with different class labels.

In this paper, we explore the impact of positional embedding on various Transformer-based models to facilitate researchers and practitioners in making informed decisions on whether to incorporate positional embedding in their models for multivariate time series classification. In a nutshell, we make the following contributions in this paper:

-

We comprehensively review existing Transformer-based models that contain position-sensitive layers and summarize them into six types of Transformer-based variants.

-

We conduct extensive experiments on 30 multivariate time series classification datasets and evaluate the impact of positional embedding on the vanilla Transformer and Transformer-based variants.

-

Our results show that positional embedding positively impacts the performance of the vanilla Transformer while negatively influencing the performance of the Transformer-based variants in multivariate time series classification.

2 Background

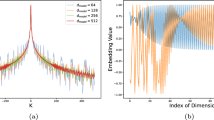

2.1 Positional Embedding

Positional embedding was first proposed for Transformer in [23], which uses fixed sine and cosine functions of different frequencies to represent the position information, as described below:

where pos and i are the position and the dimension indices, respectively. \(d_{model}\) is the dimensionality of the input time series, where each dimension of thepositional embedding corresponds to a sinusoid. For any fixed offset k, \(PE_{\text{ pos }+k}\) is represented as a linear function of \(PE_{\text {pos}}\); this enables the model to learn the relative positions easily. The positional embedding is then added to the time series as the input to Transformer.

Considering hand-crafted positional embedding is generally less expressive and adaptive [26], Time Series Transformer (TST) [32] enhances the vanilla Transformer [23] by implementing learnable positional embedding. Specifically, TST shares the same architecture as the vanilla Transformer, which stacks several basic blocks, each consisting of scaled dot-product multi-head attention and a feed-forward network (FFN) to leverage temporal data. But it differs in initializing the positional embedding using fixed values and then updating the embedding jointly with other model parameters through the training procedure.

2.2 Transformer-Based Variants

Current studies often incorporate convolutional or recurrent layers in the vanilla Transformer architecture in dealing with sequence-related tasks, including time series analysis. Figure 1 summarize these Transformer-based variants into six categories of representative methods, as detailed below.

-

Convolutional Embedding: Methods in this category, namely Informer [33], Tightly-Coupled Convolutional Transformer (TCCT) [21], and ETSformer [27], implement a convolutional layer to obtain convolutional embeddings, which map the raw input sequences to a latent space before feeding them to the transformer block.

-

Convolutional Attention: Instead of calculating the point-wise attention, LogTrans [13] and Long-short Transformer [34] use the convolutional layer to calculate the attention matrix (including queries, keys, and values) of segments to leverage the local temporal information.

-

Convolutional Feed-forward: Uni-TTS [17] and Conformer [8] implement a convolutional layer after the multi-head attention as the feed-forward layer (or part of the feed-forward layer) to capture local temporal correlations.

-

Recurrent Embedding: Temporal Fusion Transformer (TFT) [14] and the work in [3] use a recurrent layer to encode content-based order dependencies into the input sequence.

-

Recurrent Attention: Recurrent Memory Transformer [2], Block Recurrent Transformer [11], and R-Transformer [25] use a recurrent neural net to calculate the attention matrix, which harnesses the temporal information more effectively when compared with the point-wise attention.

-

Recurrent Feed-forward: Instead of point-wise feed-forward, TRANS-BLSTM [10] uses a recurrent layer after multi-head attention to harness non-linear temporal dependencies.

3 Problem Definition

A multivariate time series sequence can be described as: \(X=\left\{ x_1, x_2, \ldots x_T\right\} \), where \(x_i \in \mathbb {R}^N\), \(i \in \{1,2,\cdots ,T\}\), T is the maximum length of the sequence, and N is the number of variables. A dataset contains multiple (sequence, label) pairs and is denoted by \(D=\left\{ \left( X_1, y_1\right) ,\left( X_2, y_2\right) , \ldots ,\left( X_n, y_n\right) \}\right. \), where each \(y_k\) denotes a label, \(k\in \{1,2,\cdots ,n\}\). The objective of multivariate time series classification is to train a classifier to map the input sequences to probability distributions over the classes for the dataset D.

4 Methodology

We call TST [32] the basic model. To avoid having to compare all the related studies exhaustively, we design six Transformer-based variants based on the six types of techniques that are incorporated in the related studies (shown in Fig. 1), respectively. We further identify three configurable components of a Transformer architecture (shown in Fig. 2) as the input embedding layer (which projects the input time series into the latent space), the projection layer (which calculates the attention matrix), and the feed-forward layer (which leverages non-linear relationships). For each variant, we try different techniques (e.g., a Linear layer, a Convolutional layer, or a Gated Recurrent Unit) in each layer/component, as detailed in Table 1.

4.1 Basic Model

The basic model adopts linear layers in all three components. In this case, for each sample \(\textbf{x}_{\textbf{t}} \in \mathbb {R}^M: \textbf{X} \in \mathbb {R}^{M\times T}=\left[ \textbf{x}_1, \textbf{x}_2, \ldots , \textbf{x}_{\textbf{T}}\right] \), where T is the sequence length and M is the variable number. The input embedding can be described as:

where \(t=0, 1, ..., T\) is the time stamp index, \(W^x\in \mathbb {R}^{M \times d_k}\) and \(b^x\in \mathbb {R}^{d_k}\) are learnable parameters. The projection layer can be described as:

where \(W^Q\in \mathbb {R}^{d_k \times d_k}\), \(W^K\in \mathbb {R}^{d_k \times d_k}\), \(W^V\in \mathbb {R}^{d_k \times d_k}\), \(b^Q\in \mathbb {R}^{d_k}\), \(b^K\in \mathbb {R}^{d_k}\), and \(b^V\in \mathbb {R}^{d_k}\) are are learnable parameters. We use standard scaled Dot-Product attention proposed in the vanilla Transformer [23] for self-attention calculation:

The feed-forward layer can be described as:

where \(W_1\in \mathbb {R}^{d_k \times d_k}\), \(W_2\in \mathbb {R}^{d_k \times d_k}\), \(b_1\in \mathbb {R}^{d_k}\), and \(b_2\in \mathbb {R}^{d_k}\) are all leanable parameters.

4.2 Convolutional-Based Variants

We refer to the architectures that employ convolutional layers in any of the three components (input embedding layer, projection layer, or feed-forward layer) as convolutional-based variants. Here, we utilize a one-dimensional convolutional layer with a kernel size of 3. We also set the padding to 1 to preserve the lengths of representations. In the following, we illustrate our convolutional-based variants one by one.

Convolutional Embedding Variant replaces the linear layer with the convolution layer in the input embedding layer, which is formulated below:

where \(*\) is the convolutional operation, \(W^x\in \mathbb {R}^{M \times d_k \times P}\) and \(b^x\in \mathbb {R}^{M}\) are learnable parameters, and P is the kernel size.

Convolutional Attention Variant replaces the linear layer with the convolution layer in the projection layer, which is formulated below:

where \(W^Q\in \mathbb {R}^{d_k \times d_k \times P}\), \(W^K\in \mathbb {R}^{d_k \times d_k \times P}\), \(W^V\in \mathbb {R}^{d_k \times d_k \times P}\), \(b^Q\in \mathbb {R}^{d_k}\), \(b^K\in \mathbb {R}^{d_k}\), and \(b^V\in \mathbb {R}^{d_k}\) are learnable parameters.

Convolutional Feed-forward Variant formulated the linear layer with the convolution layer in the feed-forward layer, which is described below:

where \(W_1\in \mathbb {R}^{d_k \times d_k \times P}\), \(W_2\in \mathbb {R}^{d_k \times d_k \times P}\), \(b_1\in \mathbb {R}^{d_k}\), and \(b_2\in \mathbb {R}^{d_k}\) are the leanable parameters.

4.3 Recurrent-Based Variants

We name the architectures that use recurrent layers in any of the three components (input embedding layer, projection layer, or feed-forward layer) as recurrent-based variants. Here, we use Gate Recurrent Unit (GRU) [5] as the recurrent layer. In the following, we illustrate our recurrent-based Variants one by one.

Recurrent Embedding Variant replaces the linear layer with the GRU in the input embedding layer, which is formulated below:

where \(W_{i r}^x\in \mathbb {R}^{M \times d_k}\), \(W_{i z}^x\in \mathbb {R}^{M \times d_k }\), \(W_{i n}^x\in \mathbb {R}^{M \times d_k}\), \(W_{h r}^x\in \mathbb {R}^{d_k \times d_k}\), \(W_{h z}^x\in \mathbb {R}^{d_k \times d_k}\), \(W_{h n}^x\in \mathbb {R}^{d_k \times d_k}\), \(b_{i r}^x\in \mathbb {R}^{d_k}\), \(b_{h r}^x\in \mathbb {R}^{d_k}\), \(b_{i z}^x\in \mathbb {R}^{d_k}\), \(b_{h z}^x\in \mathbb {R}^{d_k}\), \(b_{i n}^x\in \mathbb {R}^{d_k}\), and \(b_{h n}^x\in \mathbb {R}^{d_k}\) are learnable parameters, \(\circ \) is the Hadamard product.

Recurrent Attention Variant replaces the linear layer with the GRU in the projection layer. Since the calculation processes of all the matrices are similar, for simplicity, we only present the calculation process of the query matrix Q in the projection layer below:

where \(W_{i r}^Q\in \mathbb {R}^{d_k \times d_k}\), \(W_{i z}^Q\in \mathbb {R}^{d_k \times d_k }\), \(W_{i n}^Q\in \mathbb {R}^{d_k \times d_k}\), \(W_{h r}^Q\in \mathbb {R}^{d_k \times d_k}\), \(W_{h z}^Q\in \mathbb {R}^{d_k \times d_k}\), \(W_{h n}^Q\in \mathbb {R}^{d_k \times d_k}\), \(b_{i r}^Q\in \mathbb {R}^{d_k}\), \(b_{h r}^Q\in \mathbb {R}^{d_k}\), \(b_{i z}^Q\in \mathbb {R}^{d_k}\), \(b_{h z}^Q\in \mathbb {R}^{d_k}\), \(b_{i n}^Q\in \mathbb {R}^{d_k}\), and \(b_{h n}^Q\in \mathbb {R}^{d_k}\) are learnable parameters.

Recurrent Feed-forward Variant replaces the linear layer with the GRU in the feed-forward layer, which is formulated below:

where \(W_{i r}\in \mathbb {R}^{d_k \times d_k}\), \(W_{i z}\in \mathbb {R}^{d_k \times d_k }\), \(W_{i n}\in \mathbb {R}^{d_k \times d_k}\), \(W_{h r}\in \mathbb {R}^{d_k \times d_k}\), \(W_{h z}\in \mathbb {R}^{d_k \times d_k}\), \(W_{h n}\in \mathbb {R}^{d_k \times d_k}\), \(b_{i r}\in \mathbb {R}^{d_k}\), \(b_{h r}\in \mathbb {R}^{d_k}\), \(b_{i z}\in \mathbb {R}^{d_k}\), \(b_{h z}\in \mathbb {R}^{d_k}\), \(b_{i n}\in \mathbb {R}^{d_k}\), and \(b_{h n}\in \mathbb {R}^{d_k}\) are learnable parameters, and O is the final output of the feed-forward layer.

5 Experiments

We empirically evaluate the impact of positional embedding on the performance of the basic model and transformer-based variants (illustrated in Sect. 4) for multivariate time series classification. We report our experimental configurations and discuss the results in the following subsections.

5.1 Datasets

We selected 30 public multivariate time series datasets from the UEA Time Series Classification Repository [7]. All datasets were pre-split into training and test setsFootnote 1. We normalized all datasets to zero mean and unit standard deviation and applied zero padding to ensure all the sequences in each dataset bear the same length.

5.2 Model Configuration and Evaluation Metrics

We trained the basic model and six variants for 500 epochs using the Adam optimizer [12] on all the datasets with and without the learnable positional embedding. Besides, we applied an adaptive learning rate, which was reduced by a factor of 10 after every 100 epochs, and employed dropout regularization to prevent overfitting. Table 2 summarizes our model configurations for each dataset.

We evaluate the models using two metrics: accuracy and macro F1-Score. To mitigate the effect of randomized parameter initialization, we repeated the training and test procedures five times and took the average as the final results.

5.3 Results and Analysis

Table 3 and Table 4 show the methods’ performance on the 30 datasets. The results show positional embedding positively impacts the basic model—with positional embedding, the basic model’s performance improves by 17.5% and 14.3% in accuracy and macro F1-Score, respectively. This reveals the significance of enabling the basic model to leverage the position information (e.g., via positional embedding) in solving the multivariate time series classification problem.

In contrast, positional embedding negatively impacts the performance of the Transformer-based variants. Without positional embedding, convolutional embedding (i.e., ConvEmbedding in Table 1) and recurrent embedding (i.e., RecEmbedding in Table 1) models outperformed all other variants, achieving the best accuracy of 56.21% and 56.17%, respectively, and the best macro F1-Scores of 0.528 and 0.5375, respectively. These two models differ from all other models in that their input embedding layers encode the position information when projecting the raw data to a latent space, making the position information accessible by subsequent layers for feature extraction and resulting in superior performance. Incorporating positional embedding decreased the average accuracy of the variants by 12.7% (convolutional embedding), 9.1% (convolutional attention), 18.6% (convolutional feed-forward), 22.1% (recurrent embedding), 21.5% (recurrent attention), and 15.7% (recurrent feed-forward), respectively. Results for the macro F1-Score show similar trends.

Since the convolutional and recurrent layers can inherently capture the position information from sequential data, it is natural to consider positional embedding redundant for Transformer-based variants. Besides, positional embedding risks introducing inductive bias and contaminating the original data. Specifically, positional embedding injects the same information into sequences of different classes, bringing new challenges to the classifiers; this may also contribute to performance degradation.

Further reflecting on the results, we suggest that positional embedding may not be necessary for Transformer-based variants that already contain position-sensitive modules. In particular, for time series classification tasks, while the classifier focuses on the differences between time series sequences across different classes, positional embedding is content-irrelevant, adding the same position information to all sequences regardless of their class labels. As position-sensitive modules generally consider content information when encoding the position information, redundant content-irrelevant positional embedding may lead the model towards capturing spurious correlations that potentially hinder the classifier’s performance.

6 Conclusion

Existing Transformer-based architectures generally contain position-sensitive layers while routinely incorporating positional embedding without comprehensively evaluating its effectiveness on multivariate time series classification. In this paper, we investigate the impact of positional embedding on the vanilla Transformer architecture and six types of Transformer-based variants in multivariate time series classification. Our experimental results on 30 public time series datasets show that positional embedding lifts the performance of the vanilla Transformer while adversely impacting the performance of Transformer-based variants on classification tasks. Our findings refute the necessity of incorporating positional embedding in Transformer-based architectures that already contain position-sensitive layers, such as convolutional or recurrent layers. We also advocate applying position-sensitive layers directly on the input for any Transformer-based architecture that considers using position-sensitive layers to gain better results in multivariate time series classification.

Notes

- 1.

Details about datasets and train-test split can be found at http://www.timeseriesclassification.com/dataset.php.

References

Arnab, A., Dehghani, M., Heigold, G., Sun, C., Lučić, M., Schmid, C.: Vivit: a video vision transformer. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 6836–6846 (2021)

Bulatov, A., Kuratov, Y., Burtsev, M.S.: Recurrent memory transformer. arXiv preprint arXiv:2207.06881 (2022)

Chen, K., Wang, R., Utiyama, M., Sumita, E.: Recurrent positional embedding for neural machine translation. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pp. 1361–1367 (2019)

Chen, Z., Chen, D., Zhang, X., Yuan, Z., Cheng, X.: Learning graph structures with transformer for multivariate time series anomaly detection in IoT. IEEE Internet Things J. 9, 9179–9189 (2021)

Cho, K., et al.: Learning phrase representations using rnn encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078 (2014)

Coyle, D., Prasad, G., McGinnity, T.M.: A time-series prediction approach for feature extraction in a brain-computer interface. IEEE Trans. Neural Syst. Rehabil. Eng. 13(4), 461–467 (2005)

Dau, H.A., et al.: Hexagon-ML: the UCR time series classification archive (2018)

Gulati, A., et al.: Conformer: convolution-augmented transformer for speech recognition. arXiv preprint arXiv:2005.08100 (2020)

Han, K., Wang, Y., Chen, H., Chen, X., Guo, J., Liu, Z., Tang, Y., Xiao, A., Xu, C., Xu, Y., et al.: A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 45(1), 87–110 (2022)

Huang, Z., Xu, P., Liang, D., Mishra, A., Xiang, B.: Trans-blstm: transformer with bidirectional LSTM for language understanding. arXiv preprint arXiv:2003.07000 (2020)

Hutchins, D., Schlag, I., Wu, Y., Dyer, E., Neyshabur, B.: Block-recurrent transformers. arXiv preprint arXiv:2203.07852 (2022)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Li, S., et al.: Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. Adv. Neural Inf. Process. Syst. 32 (2019)

Lim, B., Arık, S.Ö., Loeff, N., Pfister, T.: Temporal fusion transformers for interpretable multi-horizon time series forecasting. Int. J. Forecast. 37(4), 1748–1764 (2021)

Liu, M., Kim, Y.: Classification of heart diseases based on ecg signals using long short-term memory. In: 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pp. 2707–2710. IEEE (2018)

Liu, M., et al.: Gated transformer networks for multivariate time series classification. arXiv preprint arXiv:2103.14438 (2021)

Liu, Y., et al.: Delightfultts: the microsoft speech synthesis system for blizzard challenge 2021. arXiv preprint arXiv:2110.12612 (2021)

Pan, Z., Cai, J., Zhuang, B.: Fast vision transformers with hilo attention. arXiv preprint arXiv:2205.13213 (2022)

Raganato, A., Tiedemann, J.: An analysis of encoder representations in transformer-based machine translation. In: Proceedings of the 2018 EMNLP Workshop BlackboxNLP: Analyzing and Interpreting Neural Networks for NLP. The Association for Computational Linguistics (2018)

Serdyuk, D., Braga, O., Siohan, O.: Transformer-based video front-ends for audio-visual speech recognition, p. 15. arXiv preprint arXiv:2201.10439 (2022)

Shen, L., Wang, Y.: TCCT: tightly-coupled convolutional transformer on time series forecasting. Neurocomputing 480, 131–145 (2022)

Song, Q., Sun, B., Li, S.: Multimodal sparse transformer network for audio-visual speech recognition. IEEE Trans. Neural Netw. Learn. Syst. (2022)

Vaswani, A., et al.: Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017)

Wang, Q., et al.: Learning deep transformer models for machine translation. arXiv preprint arXiv:1906.01787 (2019)

Wang, Z., Ma, Y., Liu, Z., Tang, J.: R-transformer: recurrent neural network enhanced transformer. arXiv preprint arXiv:1907.05572 (2019)

Wen, Q., et al.: Transformers in time series: a survey. arXiv preprint arXiv:2202.07125 (2022)

Woo, G., Liu, C., Sahoo, D., Kumar, A., Hoi, S.: Etsformer: exponential smoothing transformers for time-series forecasting. arXiv e-prints arXiv:2202.01381 (2022)

Wu, H., et al.: CVT: introducing convolutions to vision transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 22–31 (2021)

Yang, C., Jiang, W., Guo, Z.: Time series data classification based on dual path CNN-RNN cascade network. IEEE Access 7, 155304–155312 (2019)

Yuan, Y., Lin, L.: Self-supervised pretraining of transformers for satellite image time series classification. IEEE J. Sel. Topics Appl. Earth Obs. Remote Sens. 14, 474–487 (2020)

Zeng, A., Chen, M., Zhang, L., Xu, Q.: Are transformers effective for time series forecasting? arXiv preprint arXiv:2205.13504 (2022)

Zerveas, G., Jayaraman, S., Patel, D., Bhamidipaty, A., Eickhoff, C.: A transformer-based framework for multivariate time series representation learning. In: Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pp. 2114–2124 (2021)

Zhou, H., et al.: Informer: beyond efficient transformer for long sequence time-series forecasting. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, pp. 11106–11115 (2021)

Zhu, C., et al.: Long-short transformer: efficient transformers for language and vision. Adv. Neural. Inf. Process. Syst. 34, 17723–17736 (2021)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Yang, C., Chen, Y., Li, Z., Wang, X. (2023). Exploring the Effectiveness of Positional Embedding on Transformer-Based Architectures for Multivariate Time Series Classification. In: Yang, X., et al. Advanced Data Mining and Applications. ADMA 2023. Lecture Notes in Computer Science(), vol 14176. Springer, Cham. https://doi.org/10.1007/978-3-031-46661-8_3

Download citation

DOI: https://doi.org/10.1007/978-3-031-46661-8_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-46660-1

Online ISBN: 978-3-031-46661-8

eBook Packages: Computer ScienceComputer Science (R0)