Abstract

Efficient and interpretable classification of time series is an essential data mining task with many real-world applications. Recently several dictionary- and shapelet-based time series classification methods have been proposed that employ contiguous subsequences of fixed length. We extend pattern mining to efficiently enumerate long variable-length sequential patterns with gaps. Additionally, we discover patterns at multiple resolutions thereby combining cohesive sequential patterns that vary in length, duration and resolution. For time series classification we construct an embedding based on sequential pattern occurrences and learn a linear model. The discovered patterns form the basis for interpretable insight into each class of time series. The pattern-based embedding for time series classification (PETSC) supports both univariate and multivariate time series datasets of varying length subject to noise or missing data. We experimentally validate that MR-PETSC performs significantly better than baseline interpretable methods such as DTW, BOP and SAX-VSM on univariate and multivariate time series. On univariate time series, our method performs comparably to many recent methods, including BOSS, cBOSS, S-BOSS, ProximityForest and ResNET, and is only narrowly outperformed by state-of-the-art methods such as HIVE-COTE, ROCKET, TS-CHIEF and InceptionTime. Moreover, on multivariate datasets PETSC performs comparably to the current state-of-the-art such as HIVE-COTE, ROCKET, CIF and ResNET, none of which are interpretable. PETSC scales to large datasets and the total time for training and making predictions on all 85 ‘bake off’ datasets in the UCR archive is under 3 h making it one of the fastest methods available. PETSC is particularly useful as it learns a linear model where each feature represents a sequential pattern in the time domain, which supports human oversight to ensure predictions are trustworthy and fair which is essential in financial, medical or bioinformatics applications.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Time series classification is an important real-world problem as currently technology enables the collection of huge volumes of temporal data from users and devices. Existing time series classification methods often make stringent assumptions on the format of the time series and require that time series start at the same time, are univariate, are of equal length or only contain continuous values. However, in the real word, there is more variation in time series. For instance, IoT devices collect sensor values and operating system events at the same time and exhibit missing data and irregular sampling. Making accurate predictions on this large variety of datasets remains a hard problem despite recent advances. This variety of time series is illustrated in Fig. 1.

In the active research area of dictionary- and shapelet-based time series classification, patterns are fixed-length continuous and contiguous subsequences referred to as shapelets, motifs or words. Shapelet-based methods use subsequences of the raw numeric time series with elastic distance measures (Ye and Keogh 2011; Hills et al. 2014; Lucas et al. 2019). Dictionary-based methods convert continuous time series data to a symbolic sequence using Symbolic Aggregate Approximation (SAX) after which fixed-length contiguous subsequences are extracted for classification (Lin et al. 2003, 2012; Senin and Malinchik 2013). Alternatively, time series are first transformed using Symbolic Fourier Approximation (SFA) instead of SAX resulting in the best performing dictionary-based time series classification method, Bag of SFA Symbols (BOSS) (Schäfer 2015). Because of its good performance on the ‘bake off’ datasets of the UCR/UEA Archive (Bagnall et al. 2017), many extensions to BOSS have been proposed recently, such as WEASEL, cBOSS, S-BOSS and TDE (Schäfer and Leser 2017; Middlehurst et al. 2019; Large et al. 2019; Middlehurst et al. 2020b). However, since these methods combine the predictions of hundreds of individual BOSS classifiers and rely on the hard to interpret Symbolic Fourier Approximation representation, the resulting predictions are not interpretable. Finally, nearest neighbours with Dynamic Time Warping (DTW) have proven to be a remarkably strong baseline on both univariate and multivariate datasets (Shokoohi-Yekta et al. 2017; Bagnall et al. 2017).

Many more methods have been proposed. Heterogeneous ensemble methods, such as COTE (Bagnall et al. 2015), HIVE-COTE (Lines et al. 2018) and TS-CHIEF (Shifaz et al. 2020) combine strong shapelet-, dictionary- and distance-based baseline methods. High accuracy is also achieved by recent methods based on deep learning architectures, such as ResNet (Wang et al. 2017) and InceptionTime (Fawaz et al. 2019, 2020). All of the above mentioned methods mostly focus on increasing the accuracy on the UCR/UEA time series benchmark, but they require significant resources to train and make predictions and often do not scale to very large datasets and take days or weeks to complete on all 85 ‘bake off’ datasets of the UCR archive (Dau et al. 2018). More recently, ROCKET (Dempster et al. 2020) achieves both high accuracy and efficiency, by completing on all 85 ‘bake off’ datasets under 2 h. Another interesting new development is MR-SEQL (Le Nguyen et al. 2019) which is also accurate and, excluding the SFA representation, offers an interpretable model.

For the related task of sequence (or event log) classification, many algorithms have been proposed based on frequent pattern mining (Fan et al. 2008; Cheng et al. 2008; Zhou et al. 2016). Given the wealth of algorithms for efficiently enumerating sequential patterns (Aggarwal and Han 2014; Zaki and Meira 2014) and event log classification, one might wonder why it is scarcely mentioned within continuous time series classification. We argue this is due to the following causes. First, time series are highly autocorrelated, causing many repetitive symbols afters discretisation. That is, autocorrelated time series often contain long repetitive subsequences, e.g. aaaaaaaaaa or bbbbbbbbb, that occur frequently and as a consequence, all shorter subsequences of such sequences are also frequent causing a large output of less discriminative sequential patterns. Second, in contrast to irregular event logs, the cohesion (in terms of time) of matching pattern symbols is more important for regularly sampled continuous time series. For instance, sequential pattern ab matches with window axxxxxxxxxb and we show that, without temporal constraints on matching, this foils accurate classification. Thirdly, since frequency is anti-monotonic, shorter patterns are more frequent than longer ones, causing a long list of patterns of length 1, 2 or 3, while in dictionary-based methods discriminative subsequences are much longer, i.e., a length of 10 is more usual.

The traditional design choice of dictionary- and shapelet-based time series classifiers is to limit patterns to a fixed length, duration and resolution. This makes sense given that the space of sequential patterns grows exponentially with the length of the pattern and the size of the alphabet. Our method, however, adopt a different approach. First, we consider patterns of varying length, which is important, for instance, if pattern aaa is discriminative for class A and pattern bbbccc for class B, limiting the candidate patterns to fixed length inhibits optimally separating instances of both classes. Second, unlike related work into varying length patterns for time series classification (Le Nguyen et al. 2017; Raza and Kramer 2020), we do not focus only on contiguous subsequences, but on sequential patterns where the duration of the sequential pattern occurrences varies as we allow for gaps between consecutive symbols when matching the pattern to a discretised subsequence, e.g., aaabbb matches with sequence aaaxbbb. Third, we consider different resolutions when creating the representations, which is determined by the choice of the sliding window parameter. If this is set to a relatively high value, this smooths each segment and captures longer patterns, e.g., trends or seasonal patterns. If the window is set to a relatively low value, we smooth less and discover shorter and more diverse local shapes. We argue that choosing is losing when committing to a fixed length, duration or resolution of patterns.

We propose PETSC, a new dictionary-based method that discovers candidate sequential patterns of varying length and duration. The major steps of PETSC are illustrated in Fig. 2. Our method first transforms the time series dataset using a sliding window and SAX. For discovery of sequential patterns we extend principled techniques from frequent, discriminative and top-k pattern mining literature to reduce the number of candidates dramatically (Fan et al. 2008; Fradkin and Mörchen 2015). Since the frequency of the prefix is higher than or equal to the frequency of any sequential pattern starting with this prefix, i.e., frequency is anti-monotonic, we use this to efficiently prune candidates. That is, we enumerate candidate sequential patterns efficiently based on prefix-projected pattern growth (Pei et al. 2004) with respect to a novel constraint on relative duration. We propose two mining algorithms that discover the top-k most frequent and top-k most discriminative sequential patterns directly.

Overview of the different steps in PETSC. First we transform each continuous time series to a symbolic sequence using SAX. Next we discover a set of varying-length frequent sequential patterns. Next we create an embedding based on matching pattern occurrences and finally train a linear model that assigns a different weight to each discovered pattern. An extension of PETSC mines discriminative patterns and another extension is based on near-matching patterns

After discovering k sequential patterns we create an embedding matrix where each value represents the frequency of a sequential pattern for a time series. For multivariate time series we repeat this process and concatenate embedding vectors from each dimension independently. Additionally, we create an ensemble method that discovers the top-k sequential patterns in a limited number of SAX representations at multiple resolutions. Finally, for classification we train a linear model using an elastic net trained on the pattern-based embedding.

In summary, we make the following key contributions:

-

We classify time series using a white-box linear model based on an embedding of varying length frequent sequential patterns. We show that this model is easy to interpret thereby supporting applications such as medical diagnosis or financial applications, where explainable predictions and a transparent model are essential for asserting fairness or building trust.

-

We scale to much larger datasets and are on par with ROCKET (Dempster et al. 2020) concerning runtime performance by taking only 2.7 h to process all 85 ‘bake off’ datasets (Bagnall et al. 2017). Moreover, our model is small and supports real-time applications, such as edge computation to handle the deluge of sensing data from IoT devices.

-

We outperform dictionary-based methods such as BOP and SAX-VSM even with default hyperparameters and are competitive with BOSS. We also outperform distance-based approaches on both univariate and multivariate datasets (Shokoohi-Yekta et al. 2017; Bagnall et al. 2018). While out method performs slightly worse in terms of accuracy than the state-of-the-art method ROCKET on average, we still outperform ROCKET on 32 out of 109 univariate datasets and 10 out of 26 multivariate datasets.

-

We explore and extend pattern mining research techniques to discover long, cohesive and discriminative sequential patterns in time series directly. Additionally, we present a novel temporal constraint on relative duration and an alternative to exact pattern matching to deal with discretisation errors.

-

We do not require stringent assumptions on the format of the time series and naturally handle univariate, multivariate and mixed-type multivariate time series subject to missing data or irregular sampling. This supports applications for classification of IoT devices that log both sensor values and discrete operating events.

The remainder of this paper is organised as follows. We present an overview of the related work in Sect. 2. In Sect. 3, we introduce the necessary preliminaries. In Sect. 4, we provide a detailed description of our method for sequential pattern mining, creating the pattern-based embedding and classification using a linear model. In Sect. 5 we present several optimisations that result in three additional variants of our algorithm. In Sect. 6, we present an experimental evaluation of our method and compare with state-of-the-art methods before presenting conclusions in Sect. 7.

2 Related work

In this section we present the relevant related work, starting off with various approaches to time series classification, before discussing the fields of frequent pattern mining, event log classification and explainability, which our work builds on.

2.1 Shapelet-based time series classification

Shapelet-based time series classification uses continuous subsequences to separate time series with different labels (Bagnall et al. 2017). Often Euclidean distance or an elastic distance measure, such as dynamic time warping, is used in combination with the nearest neighbour classifier (Ye and Keogh 2011) or other (binary and multiclass) algorithms such as decision trees (Hills et al. 2014). A disadvantage of shapelets is that the nearest neighbour search makes it relatively slow on larger datasets which has resulted in substantial work on optimisation (Rakthanmanon et al. 2012; Petitjean et al. 2014; Yeh et al. 2016).

2.2 Dictionary-based time series classification

For dictionary-based time series classification, Lin et al. (2012) proposed bag-of-patterns (BOP) where each time series is converted into segments using a sliding-window and then converted to discrete subsequences, or words, using SAX. Senin and Malinchik (2013) extended BOP in SAX-VSM thereby computing TF-IDF weights for each word and label. In both approaches, time series are assigned a label based on the nearest neighbour after transformation to a dictionary of words.

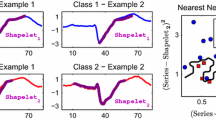

In Fig. 3 we show an illustrative example on a dataset provided by Lin et al. (2012) where we ran PETSC and highlight 2 sequential patterns with high support in each class. To make the visualisation clearer we removed overlapping pattern occurrences. In Fig. 4 we show another illustrative example and compare BOP with PETSC. Each time series of length 1000 is segmented with a sliding-window of length 50 and discretised using SAX into words of length 12 consisting of 4 symbols. Using BOP we create a dictionary that contains all unique subsequences of length 12. Using PETSC we mine the top-1000 most frequent sequential patterns with a length between 6 and 12 and a relative duration of 1.2, meaning that at most 2 gaps are allowed. We observe that if we compute the nearest neighbour using Euclidean distance on the raw time series this is not an instance with the same label. The nearest neighbour in the embedding space of both BOP and PETSC is much more reliable for this dataset, however, BOP identifies \(S_{4}\) as the nearest neighbour of \(S_{13}\), even though the two time series belong to different classes.

Illustrative example where we show 8 time series of different classes and highlight occurrences for the top-2 sequential patterns with the highest support in each class. First, we segment the time series using a sliding-window of length \(\varDelta t=120\) and discretise each segment using SAX with 4 symbols and a word length of 15. Next, we mine the top-200 frequent sequential patterns with a length between 5 and 15 and allow for 1 gap

Illustrative example where we show 16 times series of 8 different classes. In the second column, we show the corresponding PETSC embedding that represents the frequency of thousands of varying length sequential patterns. In the third column, we show the corresponding BOP embedding that represents the frequency of thousands of fixed-length sequences. In addition we connect each time series to its nearest neighbour in each column—if the line is green, the nearest neighbour is of the same class, and if the line is red, the nearest neighbour is of another class (Color figure online)

Schäfer (2015, 2016) proposes BOSS which first transforms time series using Symbolic Fourier Approximation (SFA) and then creates a dictionary of patterns, in this case (unordered) sets of SFA symbols. BOSS offers high accuracy and has been extended to be more efficient. BOSS achieves an accuracy on the UCR Benchmark (Dau et al. 2018) that is within a critical distance of deep-learning based approaches, such as ResNet (Wang et al. 2017) and ensemble methods such as Proximity Forests (Lucas et al. 2019).

2.3 Time series classification with variable length patterns

Most related to our method is SEQL (Le Nguyen et al. 2017) which mines varying length sequences, after transformation using SAX. SEQL tackles the large search space by employing greedy search thereby mining the most discriminative subsequences directly. The authors further extend their method and propose an ensemble MR-SEQL that combines multiple resolutions of SAX and SFA representations which results in an accuracy competitive with recent deep learning and heterogeneous ensemble techniques (Le Nguyen et al. 2019). Unlike MR-SEQL, PETSC does not depend on SFA and uses non-contiguous patterns instead of subsequences by allowing pattern occurrences with varying duration. Another difference is that MR-SEQL combines \(\sqrt{|S|}\) representations and linear models, while we propose a single linear model based on \(\log (|S|)\) representations. Another related method based on varying length SAX patterns is MiSTiCl (Raza and Kramer 2020). MiSTiCl tackles the large subspace of varying length patterns using frequent (contiguous) string mining and creates an embedding based on different SAX representations similar to SEQL. Key differences with PETSC are that we mine non-contiguous sequential patterns and use a linear model for classification where MiSTiCl uses a random forest for classification making it uninterpretable. Finally, using pattern mining we naturally handle a larger variety of time series data sources, such as univariate time series with missing values, irregular sampling and multivariate time series (possibly of mixed type). We experimentally compare with MR-SEQL and MiSTiCl in Sect. 6.

2.4 Frequent pattern mining

Within the pattern mining community, there has always been a focus on interpretable association rules, based on itemsets and sequential patterns (Agrawal et al. 1994), also referred to as parallel and serial episodes for sequential data (Mannila et al. 1997). Early algorithms focused on how to generate many frequent patterns of varying length efficiently (Pei et al. 2004; Chen et al. 2007). Others have investigated how to efficiently incorporate various constraints (Pei et al. 2007). Others have adapted methods to produce less redundant patterns, leading to various condensed representations, i.e., closed, maximal or non-derivable (conjunctive) patterns. Finally, there have been attempts to directly report patterns ranked on non-frequency based interestingness measures such as leverage (Petitjean et al. 2016) and cohesion (Cule et al. 2019), or based on minimal description length to produce a set of patterns that compresses the sequence best (Lam et al. 2014). A known problem with frequent patterns is that they are often not interesting, which is even worse within the context of time series. We circumvent these issues, by first computing sliding windows and using SAX, which includes a local z-normalisation of each window, which is important in limiting the diversity of patterns. Additionally, we demonstrate that setting constraints on minimal length and relative duration is very important. We propose a new sequential pattern mining algorithm in Sect. 4 that is related to prefix-projected pattern growth such as PrefixSpan (Pei et al. 2004), mining with temporal constraints such as PG (Pei et al. 2007), algorithms that mine the top-k most frequent sequential patterns directly such as TSK (Fournier-Viger et al. 2013) and algorithms that mine the top-k most cohesive sequential patterns directly such as QCSP (Feremans et al. 2018).

2.5 Event log classification

Frequent and discriminative pattern mining has been proposed for discrete sequence or event log classification (Fan et al. 2008; Cheng et al. 2008; Zhou et al. 2016). In Sect. 5, we propose a new sequential pattern algorithm that discovers the most discriminative patterns directly where we rank patterns on contrast, defined as the difference in relative support in time series with and without a certain label. We also investigate the use of sequential covering to remove redundant patterns. In sequential covering we mine the best discriminative pattern and then remove all windows covered by this pattern. We repeat this process iteratively until all time series windows are covered by at least one discriminative pattern. In other works, authors have also proposed to create a representation based on occurrences of sequential patterns before classification, such as in BIDE-DC (Fradkin and Mörchen 2015) and SQN2VEC (Nguyen et al. 2018). Similar to our proposed method, they consider redundancy between patterns, gap constraints and a one-versus-all strategy for dealing with multiple classes. A key difference, however, is that only discrete sequential datasets, typically with a small alphabet, are considered, thereby ignoring challenges specific to time series classification.

2.6 Explainability

In practice, end-users of algorithms prefer the output to be explainable. Deep learning algorithms are often seen as black box methods, and practitioners in many fields prefer to use more interpretable methods, even if they produce lower accuracy. Recently, methods have been developed within the deep learning community for visualising attribution, i.e., quantifying the contribution of each time series interval (Hsieh et al. 2021). Other general algorithms have been constructed to explain individual decisions post hoc for black-box models (Molnar 2020). Most notably, algorithms such as LIME (Ribeiro et al. 2016) and SHAP (Lundberg and Lee 2017) attempt to provide explanations for each individual classification on a point-by-point basis. This enables instance-based decision support, i.e., highlight which parts of a test instance contribute the most to the assigned class label. However, this is still a weaker form of interpretability, as it only allows to explain a decision for an individual instance.

An intrinsically interpretable model (Molnar 2020) consists of a linear model or decision tree and a set of interpretable features (or patterns). With this type of white-box model, we look at the model internals, such as the weights of the linear model or decision tree branches, to trust decisions. That is, using an interpretable model it is possible for human experts to inspect both the model and the features in order to trust decisions for any instance. Note that it is always possible to train a global surrogate model post hoc thereby mimicking the behaviour of a black-box model at the cost of slightly worse accuracy, however training an interpretable model directly is far more straightforward and certainly preferable if high accuracy is achieved.

Another important aspect of a good explanation is selectivity or complexity of the model (Molnar 2020). That is, having thousands of features in a linear model hinders interpretation, and having fewer features or sparse linear models is better for providing decision support towards human end-users interpreting the model. In general selecting the top-k features with the highest information gain (Peng et al. 2005) can be employed to reduce the number of features. We remark that pattern redundancy has been extensively studied by the pattern mining community (Han et al. 2011; Aggarwal and Han 2014) and we can filter closed or maximal sequential patterns or patterns that are non-discriminatory, i.e., having low contrast using sequential covering (see Sect. 5.2).

Within the context of time series classification, there also exist techniques that are reducing overlapping patterns, such as numerosity reduction (Lin et al. 2012). Another technique is shapelet clustering, where the authors propose to reduce the number of shapelets using a clustering of (equal-length) shapelets to increase model interpretation at the cost of a slight drop in accuracy (Hills et al. 2014). However, we remark that most existing methods for time series classification are difficult to interpret and the employed models are unsuitable for human interpretation. That is, by adopting an SFA representation, random forests, deep learning or an ensemble thereof, existing methods are extremely hard to interpret. A notable exception is MR-SEQL (Le Nguyen et al. 2019) since its model consists of a linear model of features. However, MR-SEQL depends on SFA and since SFA symbols represent a complex-valued function of frequency this hinders interpretability.

In this work we discuss both instance-based decision support (by visualising attribution) and learn an intrinsically interpretable model directly. Additionaly we present a use-case where we reduce the number of patterns to further improve explanations in Sect. 6.3.

3 Preliminaries

In this section, we introduce the necessary concepts and notations, before formally defining our problem setting.

3.1 Time series data

A time series is defined as a time-ordered sequence of measurements \(S=(\langle x_1, t_1\rangle , \ldots , \langle x_n, t_n\rangle )\), where each measurement has a timestamp \(t_k\) and \(\forall \ i,j \in \{1..n\}: i < j \Rightarrow t_i \le t_j\). For continuous time series \(x_k \in {\mathbb {R}}\). For discrete time series \(x_k \in \varOmega \) where \(\varOmega \) denotes the finite domain of event types. We remark that for sequential pattern mining we do not require a single event at each timestamp (i.e. \(t_i = t_j\)) nor that the sample rate is regular (i.e. \(\forall i: t_{i+1} - t_i\) is not constant). We use |S| to denote the length of the time series. If time series are of different length we define |S| to be the minimal length of any time series.

A univariate time series dataset consists of multiple labelled time series, i.e. \({\mathcal {S}}=\{S_i,y_i\}_{i=1}^{m}\). Each time series \(S_i\) may be of different length, however, in the univariate case we assume all measurements are of the same type. A class, or label, \(y_i \in {\mathcal {Y}}\) where \({\mathcal {Y}}\) denotes the finite domain of classes, which is either binary or multi-class if it consists of more than two classes.

In a multivariate time series dataset, each labelled instance is composed of several time series, or dimensions: \({\mathcal {S}}^{1,\ldots ,d} =\{ S_{i}^{1},\ldots S_{i}^{d},y_i\}_{i=1}^{m}\). That is, there are m labelled instances and each instance consists of d time series, \(S_{i}^{1},\ldots ,S_{i}^{d}\). Like in the univariate case, we assume that time series in each dimension d are of the same type, however, in different dimensions the types may vary. The sampling and length of each time series can also vary. In the remainder of this paper, we use the notation \({\mathcal {S}}^d\) (or \({\mathcal {S}}\)) to refer to all time series in a single dimension of a multivariate time series dataset. We remark that PETSC reduces a multivariate time series dataset to d univariate time series datasets for extracting a sequential pattern-based embedding.

3.2 Segmentation

A time series window \(S_k[t_a,t_b]\) is a contiguous subsequence of time series \(S_k\) and contains all measurements \(\{\langle x_i, t_i\rangle \in S_k \ | \ t_a \le t_i \le t_b\}\) in the univariate case. In this work, we use fixed-length sliding windows. This means choosing a fixed time interval \(\varDelta t\) (e.g. 5 s, 10 min or 1 h) and creating windows starting at \(t_1, \ldots t_n\). For a time series of length \(|S_i|\) there are \(|S_i| - \varDelta t +1\) windows assuming an increment of 1. We denote the resulting set of windows as \({\mathcal {S}}^s\). For multivariate datasets, we apply a sliding-window over each dimension independently. It is known that the accuracy of time series classification is heavily dependent on the value of \(\varDelta t\), since segments must be long enough to contain the distinctive features, but also short enough to be informativeFootnote 1 (Senin and Malinchik 2013; Le Nguyen et al. 2019). After transforming the time series data using a sliding-window, the dataset consists of many small segments and we ignore the order between segments. This enables us to mine local, phase-independent, patterns and is in contrast to interval-based time series classification methods. We remark that sequential pattern mining specific to intervals, i.e., by discovering and counting the frequency of sequential patterns in each interval separately, is not explored to date, but seems a logical direction for future work.

3.3 Symbolic aggregate approximation

After a sliding-window transformation, we reduce and discretise each continuous segment, or window, \(S^s[t_a,t_b]\) using SAX (Lin et al. 2003). SAX has two parameters: the word size w and the alphabet size \(\alpha \). First, we z-normalise each window resulting in values distributed with a mean of 0.0 and a standard deviation of 1.0. If the standard deviation is below a certain threshold, typically set to 0.01, we do not z-normalise to prevent that noise is amplified. Next, the length of each window is reduced by computing the Piecewise Aggregate Approximation (PAA), e.g., with a word size, or PAA window, of 8 we divide the normalised segment into 8 equally sized subsegments and store the mean value for each subsegment (Keogh et al. 2001). Finally, we convert each mean value to a letter (or digit) using a lookup table into \(\alpha \) equal-density regions assuming the Gaussian distribution. Figure 5 from Lin et al. (2012) illustrates the transformation of a single segment. We remark that parameters w and \(\alpha \) have a large influence on the final classification accuracy. Lin et al. (2012) propose to set \(\alpha \) to 3 or 4 for most datasets and w from 6 to 8. Since we mine variable length patterns, it makes sense to increase w, e.g., on some datasets setting w to 20 (and \(\alpha \) as high as 12) resulted in best accuracy as we will discuss in Sect. 6.

Illustration of the SAX transform where the normalised continuous time series segment with length \(|S|=128\) is transformed using PAA with a word size of \(w=8\) and discretised into \(\alpha =3\) equal-density regions into the discrete sequence baabccbc (Lin et al. 2012)

3.4 Sequential pattern mining

A sequential pattern X is a sequence of one or more items, denoted as \(X = ({s_1}, \ldots ,{s_l})\), where \({s_k} \in \varOmega \) and \(\varOmega \) is the finite domain of symbols. For continuous time series \(|\varOmega |=\alpha \) after transformation using SAX. A sequential pattern may contain repeating items and we allow gaps (or unmatched symbols) between items. A sequential pattern \(X=({s_1}, \ldots ,{s_l})\) covers a segment \(S_k^s[t_a,t_b]\) if:

The cover and support of a sequential pattern X in a segmented time series dataset \({\mathcal {S}}^{s}\) is defined as:

A sequential pattern is frequent if its support is higher than a user-defined threshold on minimal support, or min_sup. Note that frequency based on a sliding-window is somewhat harder to interpret in the original time series since a sequential pattern often covers multiple overlapping windows.Footnote 2 For example, given discretised time series \(S_k=xx\) we create the following windows using \(\varDelta t=5\): xxxab, xxabc, xabcx, abcxx and bcxxx. Subsequently, the support of sequential pattern \(X=(a,b,c)\) in this instance is 3. A sequential pattern X is not closed if there exists a sequential pattern Z, such that X is a subsequence of Z and \( support (X,{\mathcal {S}}) = support (Z, {\mathcal {S}})\), e.g., if (a, b) and (a, b, c) have the same support closed pattern mining would only keep (a, b, c). After segmentation and discretisation we can use any sequential pattern mining algorithm to efficiently mine all frequent sequential patterns in time series (Zaki and Meira 2014; Aggarwal and Han 2014). However, as discussed previously, existing pattern mining algorithms have issues concerning continuous time series.

3.5 Problem setting

Given a training dataset of time series \({\mathcal {S}}_{ train }\) (or \({\mathcal {S}}^{1\ldots d}_{ train }\)) that is either univariate, multivariate or mixed-type the task is to predict the correct class \({\hat{y}}_q \in {\mathcal {Y}}\) for each test time series instance in \({\mathcal {S}}_{ test }=\{S_q\}_{q=1}^t\) or \({\mathcal {S}}^{1\ldots d}_{ test } = \{S^1_q,\ldots ,S^d_q\}_{q=1}^t\) for multivariate time series. We evaluate PETSC on accuracy, execution time and interpretability and compare with state-of-the-art time series classification methods on univariate and multivariate benchmark from the UCR/UEA time series classification archive (Dau et al. 2018).

4 Pattern-based embedding for time series classification

In this section, we describe a method that constructs a pattern-based embedding for time series classification (PETSC). PETSC has four major steps. First, the time series is segmented and transformed using SAX. Second, a dictionary containing the top-k most frequent sequential patterns with respect to temporal constraints is mined from \({\mathcal {S}}\) (or in each dimension \({\mathcal {S}}^d\) for multivariate time series). Third, the sequential pattern dictionary is used to compute the frequency of each pattern thereby creating a pattern-based embedding. Fourth, we train a linear model with L1 and L2 regularisation to separate each class based on the pattern-based embedding. In Sect. 5, we present three possible variants of our base PETSC classifier which we experimentally evaluate in Sect. 6.

The main steps of our method are shown in Algorithm 1. For brevity, we show only the version that takes as input a univariate time series. For multivariate (or mixed-type) time series, we repeat lines 1-9 for each time series and then merge the resulting embeddings, before constructing a classifier in line 10.

4.1 Preprocessing

Preprocessing is the first step in petsc_train shown in Algorithm 1 (line 1-4). First, we segment each time series in \({\mathcal {S}}\) using a fixed-length sliding window of length \(\varDelta t\). After this transformation, we create equal length windows (in time). Note that these windows do not necessarily contain the same number of items if sampling is irregular. Next, each continuous window \(S^s[t_a,t_b]\) is z-normalised because time series have widely different amplitudes. Frequent sequential patterns are often limited in length, i.e., traditionally a length of 5 is quite high, therefore we reduce the length of each window using the Piecewise Aggregate Approximation (PAA), such that a sequential pattern covers a large part of a window. For example, a continuous time series of length 1000 is transformed to \(1000-120 + 1\) sliding windows of length 120 (\(\varDelta t = 2 min \)). Each continuous window of length 120 is then reduced to a length of 16 using PAA (\(w=16\)) and consequently, the mean amplitude is discretised to 4 symbols (\(\alpha =4\)).

We remark that for larger datasets we could increase the increment of the sliding window to a larger value than 1, i.e., with a value of 2 (or 10) we decrease the number of windows \(|{\mathcal {S}}^s| = |{\mathcal {S}}| \cdot (|S| - \varDelta t + 1)\) by a factor of 2 (or 10) thereby conserving memory and decreasing execution time. Increasing the increment would mean skipping some overlapping segments, which would clearly result in a considerable reduction in runtimes, but it would potentially come at a cost of some important patterns not being discovered. In preliminary experiments we found that increasing the increment has a large influence on runtimes and a limited negative effect on accuracy, but since our algorithm is already very fast, we decided to set the default value of the window increment to 1, and not to skip any segments.

4.2 Discover top-k frequent cohesive sequential patterns

To create a dictionary for time series classification we propose a method based on frequent pattern mining to efficiently discover sequential patterns. Many different algorithms have been proposed to discover sequential patterns. However, continuous time series have their own challenges such as autocorrelation, the relatively small alphabet after the SAX transform and the goal to discover long and cohesive patterns similar to subsequences found by related dictionary-based methods. We propose the following goals for discovering patterns in time series:

-

Mine sequential patterns above a specified minimum length.

-

Enumerate the top-k most frequent sequential patterns directly instead of fine-tuning the threshold on minimal support (min_sup parameter) which is resource consuming.

-

Only consider cohesive occurrences of sequential patterns which is known to be important in literature on episode mining (Zimmermann 2014; Cule et al. 2019).

Relative duration

Traditionally, temporal constraints bound the number of gaps or duration of a pattern regardless of the length of the pattern (Pei et al. 2007). We propose a constraint on the duration of a pattern occurrence relative to the length of the pattern (\( rdur \)). The cover and support of a sequential pattern X in a segmented time series dataset \({\mathcal {S}}^{s}\) with respect to \( rdur \) are defined as:

That is, X must cover a subsequence of a segment that is at most \( rdur \cdot |X|\) long. For instance, if \( rdur \) is 1.2 this implies that we allow a maximal duration of \(\lfloor 4\cdot 1.2 \rfloor = 4\) for patterns of length 4, 6 for patterns of length 5 and 24 for patterns of length 20.

Algorithm

The algorithm mine_freq_sp, shown in Algorithm 2, discovers longer, frequent and cohesive sequential patterns. We start by creating an empty priority queue that stores all sequential pattern candidates sorted on support (line 1). During recursion we maintain candidate sequential patterns \(X=(s_1,\ldots ,s_l)\) and construct sequential patterns Z of length \(l+1\) where X is the prefix and item \(s_{l+1}\) is from the set Y of (unvisited) symbols, or candidate items. For incrementally computing projections of supersequences Z we also maintain the projection of X on \({\mathcal {S}}^s\) denoted as \(P_X\). Next, we create a heap of patterns (line 2). Like the queue, we also use a priority queue data structure, but this time sorted on descending support. Initially, \( min\_sup \) is 0 (line 3). However, after k candidates are discovered, the current minimal support in the heap is used to prune future candidates (line 13) instead of a fixed value for \( min\_sup \). In the main loop, we first retrieve the most likely candidate sequential pattern, i.e., the one with the highest support, from the priority queue (line 5). Next, we check if the set of candidate items is empty (line 6) and we have a leaf in our search process. In this case, we add the current candidate pattern to the pattern heap if its support is higher than \( min\_sup \) and the length of the pattern is greater than or equal to \( min\_len \) (line 7-9). Since we want to return at most k sequential patterns, the pattern with the lowest support is removed (line 10) and \( min\_sup \) is updated (line 11). Remark that we do not assume a maximum length, but since we depend on SAX, the length of any sequential pattern is limited by w. If the set of candidate items is not empty, we first check if the current candidate prefix is frequent (line 13). Next, we create two nodes in the search tree: the current sequential pattern X without the first item \(s_{l+1}\) in the set of candidate items Y (line 16) and the sequential pattern \(Z=(s_1,\ldots ,s_l, s_{l+1})\) (Line 20).

Pseudo-projections

Crucial to performance of mine_freq_sp is how to compute the support with respect to \( rdur \) and limit the number of candidates. We compute support (and candidates) using incremental pseudo-projections. A projection of X on \({\mathcal {S}}^s\) is denoted by \(P_X\) and consists of all windows \(S^s[t_i,t_j]\) covered by X with respect to \( rdur \). The support for sequential pattern X is then computed as \(|P_X|\). In the projection data structure we maintain the index of each window and offsets \(\langle t_1, t_l \rangle \) to the first (\(s_1\)) and last symbol (\(s_l\)) of \(X=(s_1,\ldots ,s_l)\) in the covered windows. The projection \(P_Z\) of supersequences \(Z = (s_1, \ldots , s_{l}, s_{l+1})\) are computed incrementally by checking if \(s_{l+1}\) occurs in the suffix of each window \(S^s[t_i,t_j]\), i.e., starting after the last offset of \(s_l\) for each window in \(P_X\). For example, assume \(S_k=xx\) and we create the following windows using \(\varDelta t=5\): \(w_1=xxxab\), \(w_2=xxabc\), \(w_3=xabcx\), \(w_4=abcxx\) and \(w_5=bcxxx\). After projection on \(X=(a,b)\) we keep references to windows and store \(P_X=\{w_1: \langle 4,5\rangle , w_2: \langle 3,4\rangle , w_3: \langle 2,3\rangle , w_4:\langle 1,2\rangle \}\) in memory. For supersequence \(Z=(a,b,c)\) we construct \(P_Z=\{w_2: \langle 3,5\rangle , w_3: \langle 2,4\rangle , w_4:\langle 1,3\rangle \}\).

Restricting projections using relative duration

Since we only consider cohesive occurrences, we use the relative duration \( rdur \) to further restrict the projection by translating this constraint to a constraint on the absolute duration and a limit on the number of gaps.

Firstly, there is limit on the maximum duration:

As a consequence, we limit the search for \(s_{l+1}\) in each window in \(P_X\) with offsets \(\langle t_1,t_l \rangle \) and range \([t_i,t_j]\) to indexes \(t_k \in [t_l+1, t_1 + max\_duration ]\) which might be smaller than \([t_l+1, t_j]\).

Secondly, the maximal number of remaining gaps is given by:

As a consequence we limit the search in each suffix to indexes \(t_k \in [t_l+1, t_l + max\_rem\_gap + 1]\). For example, assume we have \(X=(a,b)\), \(Z=(a,b,c)\), \( max\_len =5\), \( rdur =1.2\) and \(w_1=abxxxxxxxc\) and \(w_2=axbxc\). Z covers both windows, however for \(w_1\) the duration would be 10, but \( max\_duration \) of 6 is preventing a match with respect to \( rdur \). For \(w_2\) the duration would be 5, however, \( max\_gap\_total =1\) and \( current\_gaps = (3 - 1 + 1) - 2 = 1\), so \( max\_rem\_gap =0\) and since c is at index 5 and not 4 this would also not be a match.

4.3 Constructing the pattern-based embedding

Having obtained a dictionary \({\mathcal {P}}\), i.e., a set of varying length patterns for the segmented time series \({\mathcal {S}}^s\), PETSC maps \({\mathcal {S}}^s\) to a pattern-based embedding. In the embedding space each time series \(S_i\) is represented by a vector of size \(|{\mathcal {P}}|\), where each value is associated with the support of pattern \(X_1,\ldots ,X_{k}\) in \(S_i\). This is shown in Algorithm 1 (line 6-9) where we first initialise the embedding matrix \({\mathcal {F}}\) to \(|{\mathcal {S}}| \times |{\mathcal {P}}|\) dimensions. Next, we compute the support of each pattern in each time series, computed as the number of sliding windows covered by the pattern with respect to the constraint imposed by \( rdur \). Note that by using the support as feature, we regard both the (cohesive) occurrence of a pattern and its frequency as important components for discriminating classes. For instance, a pattern might occur on average 10 times in each time series of class A, while occurring only once in class B.

Naïve algorithm

We implemented a naïve algorithm that computes the support of every pattern X in \({\mathcal {P}}\) in each time series \(S_i\) (in both the training and test database). The time complexity of constructing the pattern-based embedding of \({\mathcal {S}}\) is \({\mathcal {O}}(|{\mathcal {P}}| \cdot |{\mathcal {S}}|)\). Since the number of patterns \(|{\mathcal {P}}|\) is controlled by the hyper-parameter k the total runtime is still reasonable since k is limited to a few thousand in practice for high accuracy. However, we remark that this results in a runtime that is an order of magnitude worse than the discovery of the top-k most frequent sequential patterns and might impede real-time applications. For example, in preliminary experiments on UCR datasets we found that computing the embedding takes minutes while mining the patterns only takes seconds.

Efficient algorithm

We can improve the naïve algorithm for computing the embedding based on the observation that the embedding matrix produced by PETSC is sparse and the density is often less than \(1\%\) on many datasets, similar to related dictionary-based methods such as BOP or SAX-VSM. For efficiently computing the sparse embedding we use prefix-projected pattern growth to identify all segments matching the pattern X without the cost of matching the full pattern \(X=(s_1,\ldots ,s_l)\). First, we compute the projection \(P_{X_p}\) for each prefix \(X_{p}=(s_1,\ldots ,s_p)\) for p from 1 to l incrementally. The number of segments matching in each successive projection, \(P_{X_p}\), grows smaller (since support is anti-monotonic), thereby discarding segments that do not match prefix \((s_1,\ldots ,s_p)\) before considering them for \((s_1,\ldots ,s_p,s_{p+1})\). By matching the pattern X with respect to \( rdur \) using incremental prefix-projections and given that in practice most patterns cover only a few time series with respect to \( rdur \), most windows are discarded early on without the computational cost of matching the complete pattern. In preliminary experiments on UCR datasets we found that constructing the embedding with this algorithm took seconds where the naïve algorithm would take minutes.

4.4 Constructing the time series classifier

The final step of PETSC (line 10 of Algorithm 1) is to construct the model for classifying time series. In principle we could use any classification algorithm such as a random forest or k-nearest neighbours, but given that we are interested in interpretable results and because of its simplicity we adopt a simple linear model. For each label \(y \in {\mathcal {Y}}\) we learn a linear model that separates time series \(S_i\) based on its pattern-based embedding \(F_i\) (after normalisation):

The model coefficients \(W=(w_0,\ldots ,w_k)\) are learned by minimising the following loss function:

The loss function combines regression with L1 and L2 regularisation referred to as an elastic net (Zou and Hastie 2005). L1, or LASSO, regularisation is particularly useful since frequent pattern-based features that are not discriminative have a high likelihood of a zero coefficient making the model more condensed and improving overall interpretability. Meanwhile, combining it with L2, or ridge, regularisation overcomes limitations by adding a quadratic part to the penalty which makes the loss function convex and a unique minimum is guaranteed. We remark that for multi-class time series datasets we use a one-vs-all approach.

5 Optimisations

In this section, we discuss possible optimisations of the PETSC algorithm presented above. Concretely, we develop three additional variants of the algorithm. First, we propose MR-PETSC, an ensemble method that combines patterns discovered in different resolutions. Second, we propose PETSC-DISC, a method that mines the top-k most discriminative patterns directly. Third, we present PETSC-SOFT that relaxes the requirement of an exact match to deal with discretisation errors. Next we discuss the use of common transformations to the original time series before applying dictionary-based methods. We conclude this section with an analysis of the time and space complexity of PETSC and variants.

5.1 Ensemble of multiresolution frequent sequential patterns

A disadvantage of PETSC is that, like related dictionary-based methods, its accuracy is highly dependent on the hyper-parameter \(\varDelta t\). Additionally, PETSC ignores discriminative patterns at different resolutions. Both of these issues are addressed by the MR-PETSC variant, shown in Algorithm 3 and illustrated in Fig. 6. In this approach, we still mine patterns with the same constraints and the same parameters for the SAX representation, but we do this recursively starting with a single segment equal to the length of the time series (or the minimum length in varying length time series) (line 3) and recursively divide the sliding window by two until the segment length is lower than the SAX word size (line 4 and 12). At each resolution, we join the embedding vectors (line 5-11) and train a linear model on the final embedding vector where we combine pattern-based feature values from different resolutions (line 13). The overhead of MR-PETSC is limited since we create at most \( log (|S|)\) representations. For instance, if the original time series length is 128, we first create a single segment with \(\varDelta t = 128\), then \(\varDelta t\) is equal to 64, 32 and finally 16 (assuming w is 15). In the embedding vector we will have \(k \times 4\) pattern-based feature values from 4 different resolutions.

We remark that MR-PETSC produces high accuracy with default parameters, i.e., if we mine the top-200 sequential patterns with a minimum length of 5 and a constraint on relative duration of 1.1 on SAX strings consisting of 4 symbols (\(\alpha =4\)) and a fixed length of 15 (\(w=15\)). However, for optimal performance we tune the hyper-parameters. We experimentally validate the accuracy of this ensemble method in Sect. 6.

5.2 Direct mining of the most discriminative patterns

In PETSC we mine all frequent patterns and rely on the elastic net classifier to select patterns that are frequent in one class rather than over different classes. We now propose PETSC-DISC, an alternative mining algorithm that discovers the most discriminative sequential patterns directly. Given a segmented time series dataset \({\mathcal {S}}^s\), label \(\lambda \), time series windows with label \(\lambda \) (denoted by \({\mathcal {S}}^s_{\lambda ^{+}}\)) and any other label (denoted by \({\mathcal {S}}^s_{\lambda ^{-}}\)), we define discriminative support, or contrast, as

The algorithm for mining discriminative patterns directly is shown in Algorithm 4. A key difference with Algorithm 2 is that we employ heuristic search using \( contrast \) and visit candidates with high contrast first. However, since contrast is not anti-monotonic we cannot prune on contrast nor guarantee that after i iterations the current discovered top-k patterns have the highest contrast overall.

We start by creating an empty priority queue that contains all sequential pattern candidates sorted on contrast and a heap of discovered patterns sorted on descending contrast (line 1-2). Like before, during recursion we maintain candidate sequential patterns \(X=(s_1,\ldots ,s_l)\) and construct sequential patterns Z of length \(l+1\) where X is the prefix and item \(s_{l+1}\) is from the set Y. For efficiently computing contrast we maintain separate projections of X on time series instances with and without label \(\lambda \), i.e., computed on \({\mathcal {S}}^s_{\lambda ^{+}}\) and \({\mathcal {S}}^s_{\lambda ^{-}}\), and denoted as \(P_{X,\lambda ^{+}}\) and \(P_{X,\lambda ^{-}}\). In the main loop, we first retrieve the candidate sequential pattern with the highest contrast from the priority queue (line 5). If the set Y of candidate items is empty, we add the current candidate (if \(|X| \ge min\_len \)) to the pattern heap. As soon as k patterns have been found we remove the pattern with the lowest contrast from the heap (line 9-10). If the set of candidate items is not empty, we also remove discriminative patterns that are too infrequent overall, using the \( min\_sup \) parameter (line 13). Next, we create two nodes in the search tree: the current sequential pattern X without the first item \(s_{l+1}\) in the set of candidate items Y (line 16) and the sequential pattern \(Z=(s_1,\ldots ,s_l, s_{l+1})\) (line 21). Here, we compute incremental pseudo projections on both \({\mathcal {S}}_{\lambda ^{+}}\) and \({\mathcal {S}}_{\lambda ^{-}}\) (line 18-20). The main loop stops if the queue is empty, meaning we have enumerated all frequent sequential patterns. Alternatively, we stop after a fixed number of iterations i (line 4) or dynamically, i.e., when no new patterns with high contrast are discovered in the last k iterations. For multiclass datasets we run mine_discr_sp for each label and join all patterns resulting in a set of at most \(|{\mathcal {Y}}|\cdot k\) patterns. We then return the top-k patterns with the highest contrast for any label. We remark that we also experimented with sequential covering to remove redundant patterns (Cheng et al. 2008). However, in preliminary experiments, we found that the increase in accuracy was too marginal to justify the additional complexity and runtime of sequential covering.

5.3 Relaxing exact pattern matching

Several alternatives have been proposed to create an embedding based on sequences, shapelets or sequential patterns. Laxman et al. (2007) proposes to compute frequency based on non-overlapping occurrences for discrete patterns. Hills et al. (2014) propose to compute the minimal Euclidean distance between each contiguous (and continuous) pattern and continuous segment in the time series and Senin and Malinchik (2013) propose to compute class-specific weights using TF-IDF. From a quality perspective we argue that for matching discrete patterns small differences might be ignored, e.g., given the pattern \(X=(a,a,a,b,b,b,b)\) and the segment \(S_1=(a,a,a,b,b,b,c)\) the distance is small and the mismatch possibly due to noise. Especially as the discretisation error grows with long patterns, e.g., for a pattern of length 14 and an alphabet of size 3 the average rounding error accumulates to \(14 \cdot 1/(2 \cdot 3)\). Based on this observation, we propose to count near matching occurrences as an alternative to exact matching and propose another variant of PETSC, namely PETSC-SOFT. For a pattern X and segmented time series \({\mathcal {S}}^s\) we define the soft support as:

Here \(\frac{|X|}{2\cdot \alpha }\) returns the average rounding error and \( dist \) is the Euclidean distance.Footnote 3 For instance, in our previous example, with \(\tau = 1.0\), X would match segment \(S_1\) since \(dist(X,S_1) = 1\) is lower than the expected rounding error of \(7/(2\cdot 3) =1.166\). We introduce the parameter \(\tau \) to allow user-defined control for soft matching, e.g. given \(\alpha =4\) and \(\tau =1.0\), we would allow a distance of 0 for patterns of length 7, 1 for patterns of length 8 and 2 for patterns of length 16. For \(\tau \) equal to 2 or 3 matching is more relaxed. Remark that when using soft matching, we assume the relative duration to be 1.0, as the method’s complexity would severely increase otherwise. In Sect. 6 we experimentally validate \( support _{ soft }\).

5.4 Basic time series transformations

While the original authors of both BOP and SAX-VSM report more wins over 1-NN DTW on their selection of datasets, the opposite is reported by Bagnall et al. (2017) on the complete UCR benchmark. We remark that both methods have their own biases and that performance will vary depending on the type of dataset, akin to the No Free Lunch Theorem (Wolpert and Macready 1997). Nearest neighbour with DTW will miss correlation of discriminative subsequences at different locations. Inversely, BOP misses overall correlation of two time series by extracting only local phase-independent patterns. Consequently, ensemble methods that combine both approaches such as DTW-F (Kate 2016) result in an overall increase in accuracy. We conclude that applying the following basic time series transformations (Hyndman and Athanasopoulos 2018) on \(S_i=(x_1,\ldots ,x_n)\) may result in improved performance:

-

Derivative (or lag \(n=1\)): \({\hat{x}}_t = x_t - x_{t-1}\)

-

Second derivative (or lag \(n=2\)): \(x_t = {\hat{x}}_t - {\hat{x}}_{t-1}\)

-

Logarithm: \(x_t = \ln x_t\)

-

Logarithm of derivative: \(x_t = \ln {\hat{x}}_t\)

Especially, in the context of the UCR benchmark that contains many smaller datasets of synthetic and/or sine-like nature with a strong overall correlation, applying dictionary-based methods on both the original time series and after each transform might enhance the ability to discover local discriminative patterns. For instance, on the UCR Adiac dataset the error of DTW is 0.376 and the error of PETSC is 0.381. However, after transforming this dataset using the logarithm the error of PETSC drops to 0.350. However, to be fair to all methods, we did not apply any time series transformations on the data used in our experiments in Sect. 6.

5.5 Time and space complexity

Since PETSC mines sequential patterns on each dimension independently the execution time is linear in the number of dimensions d for multivariate time series. The total number of windows in the sequential database for mining in each dimension is dependent on the number of instances \(|{\mathcal {S}}|\), the time series length |S| and the sliding-window interval \(\varDelta t\), i.e. \(|{\mathcal {S}}^s| = |{\mathcal {S}}| \cdot (|S| - \varDelta t + 1)\). If we assume that top-k frequent cohesive sequential pattern mining is linear in \(|{\mathcal {S}}^s|\) the complexity of MR-PETSC for multivariate datasets is approximately \({\mathcal {O}}(d \cdot |{\mathcal {S}}| |S| \log (|S|))\).Footnote 4

For univariate time series PETSC is thus linear in both the training set size and time series length while MR-PETSC is quasilinear in the time series length since we consider at most log(|S|) SAX representations. Similar to MR-PETSC, MR-SEQL is also linear in the training set size and quasilinear in the time series length. However, for high accuracy MR-SEQL uses \(\sqrt{|S|}\) different SAX and SFA representations, resulting in a slighly worse complexity of \({\mathcal {O}} (d \cdot |{\mathcal {S}}| |S|^{\frac{3}{2}} log(|S|))\). Other scalable multivariate time series methods include Proximity Forests and TS-CHIEF that are based on an ensemble of decision trees are quasilinear in the training set size, but quadratic in the time series length. Finally, ROCKET is only linear in the time series length, i.e. the complexity on multivariate time series is given by \({\mathcal {O}} (d \cdot k |{\mathcal {S}}||S|)\). However the constant k that specifies the number of different kernels to train on is high, i.e. the suggested default is 10, 000.

From a space complexity perspective, the proposed pattern mining algorithms relies on a depth-first search strategy to enumerate candidate sequential patterns and as such has manageable memory requirements, i.e. the mining algorithm only has to keep at most \(max\_len\) candidate sequential patterns in memory, which is limited by w. Likewise the size of heap is limited by the maximal number of patterns k. Furthermore, by relying on incremental pseudo-projections of the sequential database, the space complexity is bounded by the representation of the sequential database itself which is linear in the training set size and linear in the time series length. The embedding data structure is linear in the training set size and in the number of patterns. Since the number of patterns k is limited to 1000 in practice and by adopting sparse data structures (since most pattern occurrences will be 0) this is efficient. Overall, for MR-PETSC, the space complexity is \({\mathcal {O}}(d \cdot k \cdot log(|S|) |{\mathcal {S}}|)\) since we concatenate \(|{\mathcal {S}}|\) embedding vectors of length k for each dimension d in different resolutions log(|S|) in memory.

For large multivariate datasets, we see that in practice out-of-memory errors can occur, since a space complexity of \({\mathcal {O}}(|{\mathcal {S}}|\cdot |S|)\) for the sequential database can be problematic for very large datasets. In this case, we suggest to limit memory consumption by selecting a larger for the window increment i. For instance, for EigenWorms \(|{\mathcal {S}}^s|=128 \cdot (17{,}984 - 20+1) \approx 2.2 \cdot 10^6\) for \(\varDelta t = 20\). By selecting a larger window increment (or stride) than 1, we can reduce the size of the sequential database by a constant factor. For instance, given an increment i equal to \(\varDelta t\) (i.e. non-overlapping sliding windows), the number of windows in each time series drops with |S| /i. For instance, for Eigenworms \(|{\mathcal {S}}^s|=128 \cdot (17{,}984) / 20 = 115{,}098\) for \(i = \varDelta t = 20\). For large multivariate datasets this can lead to a speed-up proportional to i, however the frequency of patterns is then computed only approximately, as we require overlapping sliding windows to compute the exact count of pattern occurrences.

6 Experiments

In this section, we compare PETSC and its variants with existing state-of-the-art methods.

6.1 Experimental setup

Datasets

We compare methods against univariate and multivariate datasets from the UCR/UEA benchmark (Dau et al. 2018). In Table 1 we show the details on a subset of 19 univariate datasets from the UCR archive (Senin and Malinchik 2013). In Table 2 we show the details on 19 multivariate datasets from the UCR archive (Bagnall et al. 2018). We selected multivariate time series where |S| was at least 30 and the total file size was not too large (i.e., \(<300\) MB). We also compare totals against the 85 ‘bake off’ univariate datasets (Bagnall et al. 2017; Dau et al. 2018), and 26 ‘bake off’ equal-length multivariate datasets (Bagnall et al. 2018; Ruiz et al. 2021). Time series are from different domains and applications, i.e., time series extracted from images (such as shapes of leaves), resulting from a spectrograph or medical devices (ECG/EEG) and various sensors, simulations, motion detection and human activity recognition (HAR).

State-of-the-art methods

We compare the accuracy of PETSC and its variants with base learner such as 1-NN with DTW and comparable interpretable dictionary-based methods such as BOP, SAX-VSM and MR-SEQL (Le Nguyen et al. 2019) for univariate datasets. We also compare with non-interpretable dictionary-based methods based on a Bag of SFA Symbols, such as BOSS (Schäfer 2015), cBOSS (Middlehurst et al. 2019), S-BOSS (Large et al. 2019) and TDE (Middlehurst et al. 2020b), methods based on deep learning such as ResNet (Wang et al. 2017) and InceptionTime (Fawaz et al. 2020) and heterogeneous ensemble methods such as Proximity Forests (Lucas et al. 2019), ROCKET (Dempster et al. 2020), HIVE-COTE (Lines et al. 2018) and TS-CHIEF (Shifaz et al. 2020).

For multivariate datasets we compare with base learner such as 1-nearest neighbour classification using either Euclidean distance (1-NN ED), dimension independent dynamic time warping (DTWI) and dimension dependent dynamic time warping (DTWD) (Shokoohi-Yekta et al. 2017). We also compare with ensembles of state-of-the-art univariate classifiers that train a separate classifier over each dimension independently, such as ROCKET, HIVE-COTE, ResNet, cBOSS, Shapelet Transform Classifier (STC) (Hills et al. 2014) and methods based on random forests using shapelet-based and other time series features, such as Time Series Forest (TSF) (Deng et al. 2013), Generalized Random Shapelet Forest (gRSC) (Karlsson et al. 2016) and Canonical Interval Forest (CIF) (Middlehurst et al. 2020a). In univariate and multivariate experiments we use the publicly reported results from the UCR/UEA benchmark and ‘bake off’ studies (Bagnall et al. 2017, 2018; Ruiz et al. 2021).

Parametrisation of methods

All dictionary-based methods have the same preprocessing steps which include setting an appropriate window size for segmentation (\(\varDelta t\)) and setting the word (w) and alphabet (\(\alpha \)) length for the SAX (or SFA) representation. BOP and SAX-VSM have no additional parameters. BOSS has an additional parameter that controls if normalisation should be applied. For mining patterns PETSC has three additional parameters, namely the number of patterns (k), minimum length of a pattern (\( min\_len \)) and a constraint on the duration (or cohesion) of pattern occurrences (\( rdur \)). PETSC-SOFT has an additional parameter \(\tau \) to control soft matching. PETSC-DISC has an additional \( min\_sup \) parameter to control the minimal support for discriminative patterns which we set to 3. For MR-PETSC we report the results with default parameters (i.e., \(w=15\), \(\alpha =4\), \(k=200\), \( min\_len =5\) and \( rdur =1.1\)) and varying parameters. For all PETSC variations we set the regularisation parameters \(\lambda _1\) and \(\lambda _2\) for linear regression to default values (i.e. . We remark that the implementation and experimental scripts for PETSC are implemented using Java and Python and are open source.Footnote 5

Hyper-parameter optimisation

For optimising preprocessing parameters \(\varDelta t\), w and \(\alpha \) we use random search on a validation set (Bergstra and Bengio 2012). That is, we iterate through a fixed number of randomly sampled parameter settings and keep the parameter setting with the best accuracy after 100 iterations. Here, \(\varDelta t\) is randomly sampled between \(10\%\) and \(100\%\) of the time series length. For SAX, w is between 5 and 30 and \(\alpha \) between 3 and 12. For all PETSC variants, k is between 500 and 2500, \( min\_len \) in \(\{0.1w, 0.2w, 0.3w, 0.4w\}\) and \( rdur \) in \(\{1.0,1.1,1.2,1.5\}\). For PETSC-SOFT, \(\tau \) is in \( \{1/2\alpha , \ 2/2\alpha ,\ 3/2\alpha \}\).

6.2 Effect of hyper-parameters on the accuracy of PETSC

We begin our experimental analysis by having a closer look at the effect various hyper-parameters have on the accuracy of our algorithm.

Window and SAX parameters

In this experiment we run PETSC on a grid with varying window, SAX word and alphabet length on Adiac and Beef. Figure 7 shows the test error for varying w and \(\alpha \) for PETSC on Adiac when \(\varDelta t\) is \(0.5 \cdot |S|\) and Beef when \(\varDelta t\) is \(0.1 \cdot |S|\). Default values for mining are \(k=1000, min\_len =0.3w\) and \( rdur =1.0\). First, we observe that accuracy deteriorates for small values of either w or \(\alpha \). Next, we observe that for Adiac and Beef the error is minimal for high values of \(\alpha \) and medium values for w, or the other way around with medium values for \(\alpha \) and high values for w, but slightly worse if both parameters are set to high values.

In Adiac the optimal parameters result in frequent patterns of length 5 to 19 consisting of 12 distinct symbols and cover almost half the time series (\(\varDelta t = 0.5 \cdot |S|\)), which is sensible since in Adiac time series are overall similar long sine-like waves, where subtle differences result in a different label. In Beef the optimal parameter for \(\varDelta t\) is 10% and for \(\varDelta t =0.25 \cdot |S|\) and \(\varDelta t=0.5 \cdot |S|\) the minimal error (for any combination of w and \(\alpha \)) increases with \(6\%\) and \(10\%\). This suggests that short patterns are far more discriminative for this dataset and explains the high accuracy of PETSC on this dataset.

Pattern mining parameters

In this experiment we run PETSC on a grid with varying k, minimum length and relative duration on Adiac and Beef. Figure 8 shows the test error for varying k and \( min\_len \) when \( rdur \) is 1.0 with optimal window and SAX parameters. First, we observe that setting k too low or \( min\_len \) too high (i.e. more than w/2) results in lower accuracy. Second, we observe that the error (for any combination of k and \( min\_len \)) increases with \(4\%\) and \(10\%\) with a relative duration of 2.0. We remark that it only took a couple of seconds to run PETSC for each parameter setting with a maximum of 30s for high values of \( rdur \) and k and low values of \(\varDelta t\).

Based on these and further experiments we conclude that:

-

The parameter \(\varDelta t\) is domain-specific and ranges from \(0.1 \cdot |S|\) to \(0.5\cdot |S|\) and has a severe impact on accuracy. For short time series (i.e. \(|S| < 50\)), we set \(\varDelta t = |S|\), but this invalidates the use-case of discovering local phase-independent patterns.

-

SAX parameters \(\alpha \) and w should be sampled together and have a severe impact on accuracy. Too low values might degrade performance, while setting both values high causes too much entropy.

-

The parameter \( rdur \) is domain-specific and has impact on accuracy. On Adiac and Beef a value of 1.0 works best, however, we see that on most datasets the error is lowest if \( rdur \) is 1.1 or 1.5. If \( rdur \) is set too high (i.e., 2.0 or more) this results in degraded performance.

-

For mining, k has less influence on accuracy but should be set sufficiently high, i.e., 1000 is a good default value. We experimented with k up to 5000, but this did not improve results. Finally, \( min\_len \) should be lower than w/2, but not lower than 3.

6.3 Explaining PETSC and MR-PETSC

In this section we first discuss how we can use our model for instance-based decision support by visualising attribution and how to visualise and learn from the intrinsically interpretable model. We also provide a use-case where we discuss techniques to reduce the number of patterns to further improve explanations.

6.3.1 Instance-based decision support

For interpretability, we can compute the influence, or attribution of each sequential pattern occurrence weighted by the coefficient of the linear model. We use this attribution to highlight regions of the time series that lead to predicting each label y or not. First, we determine for each sequential pattern \(X_k\) its occurrences in the segmented SAX representation of a time series \(S_i\). Next, we compute the inverse transform that maps each occurrence back to the original raw time series based on the window offset and the scaling factor \(\varDelta t/w\). Next, we compute the attribution of a pattern at each location in \(S_i\) which is \(w_k / support _{ rdur }(X_k)\) where \(w_k\) is the corresponding coefficient of pattern \(X_k\) in the linear model. We remark this only includes patterns that occur at least once in \(S_i\) and have a non-zero coefficient \(w_k\). For interpretability, we make sure the intercept of the linear model (\(w_0\)) is 0 and that the embedding vector is mean-centred, such that the weights multiplied by the feature values (feature effects) explain the contribution to the predicted outcome (Molnar 2020). The sum of the attribution of each pattern at each location indicates the relative importance of a location and the sign indicates if is a positive or negative for class y. For MR-PETSC the sum of attributions is composed of patterns at each resolution, but this does not impede interpretation compared to PETSC, since we still employ a single linear model of patterns. In Fig. 9 we show the first two time series with different label for the GunPoint, ECG200 and TwoPatterns dataset where we highlight discriminative regions by visualising the sum of pattern attributions at each location. In the visualisation, the colour and the thickness of the line are determined by the sign and the absolute value of the sum of attributions. We observe that for discriminating between the Gun-drawn and Point gestures in the GunPoint dataset, the small dip after the main movement is discriminative for the Point gesture which confirms existing work (Ye and Keogh 2011; Le Nguyen et al. 2019).

6.3.2 Global explanations

PETSC learns an intrinsic sparse interpretable model, consisting of k weights \(w_1, \ldots w_k\) of the linear model and the corresponding k sequential patterns. We can inspect this model, visually or otherwise, and provide decision support instance-based as explained previously, on a feature level, i.e., by rendering all occurrences of a single pattern, or globally, for instance by rendering the most discriminative patterns directly as shown in Fig. 3.

In Table 3 we show the top-5 patterns with the highest absolute weight \(w_k\) in each resolution on the Gunpoint dataset where MR-PETSC has an error of 0.04 (\(w=20\), \(\alpha =12\), \(k=250\), \( min\_len =6\) and \( rdur =1.0\)). We remark that there is redundancy between the patterns and we will discuss techniques to handle this in the next section. A positive weight and a large absolute value indicate that the first pattern is specific to class Gun, meaning the gesture of drawing a gun and holding it, instead of pointing a finger. Note that our model is sparse, which is beneficial for explanations (Molnar 2020), and that the first pattern only occurs in 8% of time series windows. We conclude that PETSC and MR-PETSC offer a transparent model (Molnar 2020) and enable human experts to inspect each pattern specific to class A or B and trust decisions for any possible instance.

6.3.3 Interpretation use-case

While the SAX representation is interpretable, as are the resulting sequential patterns, it is not obvious how to explain real-world use-cases, especially since MR-PETSC discovers thousands of patterns in multiple resolutions. Therefore we further illustrate this based on a use-case. We consider the BeetleFly dataset from the UCR/UEA benchmark. The BeetleFly dataset consists of 20 training images of either a beetle or fly transformed to a time series as illustrated in Fig. 10.

Top figure shows instances of the BeetleFly and BirdChicken datasets. Bottom figure illustrates the outline of a beetle represented as a time series where a subsequence is highlighted in blue (Hills et al. 2014). With optimised pre-processing parameters and after removing redundant patterns based on Jaccard similarity PETSC achieves an accuracy of 80% and the model consists of a single subsequence which can subsequently be highlighted in each image outline to facilitate trivial interpretation

Identifying optimal hyper-parameters

We used a random search to identify that the optimal SAX representation consists of 12 bins (\(\alpha =12\)) and words of size 30 (\(w=30\)). For pattern mining the optimal parameters are \(k=1000\), \(min\_len=8\) and \(rdur=1.0\). Note that using random search we identified multiple parameter settings at which the error on the validation set was 0.0. The total time for running MR-PETSC 100 times with random parameters was 10 min using 8 cores. Note that using our open-source code it is easy to identify optimal parameters using random search on a validation set (using multiple threads) and then run the method with the optimal parameters on the test set.

Accuracy

We find that MR-PETSC achieves state-of-the-art performance with a large relative increase over ROCKET on many datasets originating from the shapelet tranformation method (Hills et al. 2014), such as BeetleFly where the accuracy is \(100\%\). Shapelets and sequential patterns are both based on rotational-invariant subsequences, so it is not surprising that both approaches work well on similar datasets. The relative improvements achieved by MR-PETSC over ROCKET on such datasets is significant, i.e., BeetleFly (\(+13\%\)), Herring (\(+12\%\)), Lightning2 (\(+12\%\)), CinCECGTorso (\(+10\%\)), FaceFour (\(+7\%\)) and BirdChicken (\(+2\%\)).

Multi-resolution pattern visualisation

The benefit of our model is that at each resolution we have the sequence of symbols of each pattern. Moreover, we can match each sequential pattern to the time series and inspect the individual occurrences. In Fig. 11 we show 4 resolutions of the same time series of a fly and a beetle. With a window of 512 the window size is equal to the time series length. In the last subfigure each window has size 64 or 1/8 of the time series. Remark that by using the same SAX parameters on different windows results in more fine-grained patterns matching more closely a shorter piece of the time series. For visualisation purposes we normalise the entire time series instead of applying window-level normalisation.

Reducing the number of patterns

A problem is that by having 4 resolutions with 1000 patterns each, interpretation becomes difficult. We propose to reduce the number of varying length patterns in each resolution significantly by removing redundant patterns, thereby removing patterns while maintaining high accuracy. Pattern redundancy has been extensively studied in the pattern mining community (Han et al. 2011; Aggarwal and Han 2014). We chose to remove redundant patterns based on Jaccard similarity, defined as:

Next we create a non-redundant pattern set \({\mathcal {P}}'_{\gamma }\) such that no two patterns in the set have Jaccard similarity higher than \(\gamma \), that is we add \(X_j\) to \({\mathcal {P}}'_{\gamma }\) if and only if \(\not \exists X_i \in {\mathcal {P}}'_{\gamma }: i\ne j \wedge jaccard (X_i,X_j,{\mathcal {S}}^s) > \gamma .\) For instance, with \(\gamma =0.9\) we remove patterns where \(90\%\) of occurrences overlap. The Jaccard similarity is of particular interest because of the sliding window-based preprocessing which results in many patterns that overlap within a small delay, i.e., given the SAX sequences (a, a, a, b, b) and (a, a, b, b, b) we have frequent sequential patterns \(X_1 = (a,a)\), \(X_2 = (a,b)\), \(X_3=(b,b)\) and \(X_4 = (a,a,b,b)\) which all make each other redundant, and it is safe to remove all but one of them.