Abstract

Since neural networks play an increasingly important role in critical sectors, explaining network predictions has become a key research topic. Counterfactual explanations can help to understand why classifier models decide for particular class assignments and, moreover, how the respective input samples would have to be modified such that the class prediction changes. Previous approaches mainly focus on image and tabular data. In this work we propose SPARCE, a generative adversarial network (GAN) architecture that generates SPARse Counterfactual Explanations for multivariate time series. Our approach provides a custom sparsity layer and regularizes the counterfactual loss function in terms of similarity, sparsity, and smoothness of trajectories. We evaluate our approach on real-world human motion datasets as well as a synthetic time series interpretability benchmark. Although we make significantly sparser modifications than other approaches, we achieve comparable or better performance on all metrics. Moreover, we demonstrate that our approach predominantly modifies salient time steps and features, leaving non-salient inputs untouched.

Keywords

- Explainable Artificial Intelligence

- Counterfactual Explanations

- Multivariate Time Series

- Generative Adversarial Networks

- Long Short-Term Memories

1 Introduction

With the advent of machine learning for decision making in critical sectors like healthcare, predictive maintenance, or traffic, serious concerns have been raised about the trustworthiness of these algorithms. In recent years, the field of explainable artificial intelligence (XAI) has therefore gained increasing popularity. While manifold techniques for explaining tabular data and image classifiers have been proposed, temporal data has largely been neglected. In contrast to image data, time series interpretability poses manifold challenges, including the presence of distinct time and space dimensions and an increased difficulty of visualizing information in a meaningful way. Recent work has raised strong concerns about the adaptability of prevalent XAI methods to multivariate time series [7].

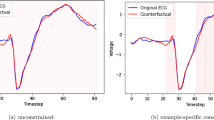

Fig. 1. Counterfactuals generated using a state-of-the-art approach [16] and our approach for a multivariate time series. Columns represent features and rows represent time steps. The curves to the right of the boxes depict the respective sequences for one of the center features.

Counterfactual Explanations. Derived from philosophical reasoning, counterfactual explanations try to find modifications to an input query so that the classification changes to a desired class [18]. Features of the input query can be mutable, i.e. the values can and may be modified, or immutable. A valid counterfactual should only modify mutable input features [8]. Meaningful counterfactual explanations can guide users towards a better understanding of decisions made by a system. If a classifier predicts a certain disease risk based on a patient’s medical record, it is helpful to understand not only what factors led to the decision, but also what factors would have to change and in which way to minimize the risk.

1.1 Objectives for Counterfactual Explanations

Precision, Similarity & Realism. A valuable counterfactual explanation is close to the original data point, looks plausible and realistic and suggests actionable modifications [2, 9]. The choice of distance functions to measure the actionability of a counterfactual has been a topic of discussion. The original approach by [18] iteratively minimizes the distance between the predicted class for the counterfactual and the target class (via \(\mathcal {L}_{2}\) norm) as well as the distance between query and counterfactual (via \(\mathcal {L}_{1}\) norm) using gradient descent. [2] additionally assess realism of the generated counterfactual by measuring how likely it is that the counterfactual stems from the observed data distribution.
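The iterative objective described for [18] can be illustrated with a minimal sketch. It assumes a toy logistic classifier with hypothetical weights `w` and bias `b`, a hypothetical trade-off factor `lam`, and plain subgradient descent started from the query itself; none of these choices are taken from [18] or from this paper.

```python
import math

def predict(x, w, b):
    """Toy logistic classifier (hypothetical stand-in for a trained model)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    """Subgradient of |v| (0 at v == 0)."""
    return (v > 0) - (v < 0)

def counterfactual_search(x_q, target, w, b, lam=10.0, lr=0.1, steps=100):
    """Minimise lam * (f(x_cf) - target)^2 + ||x_cf - x_q||_1 step by step."""
    x_cf = list(x_q)
    for _ in range(steps):
        f = predict(x_cf, w, b)
        # Chain rule through the sigmoid: d/dx (f - t)^2 = 2 (f - t) f (1 - f) w
        scale = lam * 2.0 * (f - target) * f * (1.0 - f)
        x_cf = [
            xc - lr * (scale * wi + sign(xc - xq))
            for xc, xq, wi in zip(x_cf, x_q, w)
        ]
    return x_cf

# Assumed toy setup: the query is classified as class 0, the target is class 1.
w, b = [1.0, -1.0], 0.0
x_q = [-1.0, 1.0]
x_cf = counterfactual_search(x_q, target=1.0, w=w, b=b)
print(predict(x_q, w, b), predict(x_cf, w, b))
```

In this toy setting the classification term pulls the counterfactual across the decision boundary, while the \(\mathcal {L}_{1}\) term stops it from drifting further from the query than necessary.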

Sparsity. [2] implement sparsity as the \(\mathcal {L}_{0}\) norm between query and counterfactual, which measures how many features were changed to go from the original data point to the counterfactual. [14] do not include sparsity in the loss function, but modify the generated counterfactuals post-hoc using a greedy algorithm that sets increasingly more features with smaller modifications to zero until the prediction changes. In contrast, [9] define a rigid threshold for sparsity stating that a good counterfactual for tabular data may only modify up to two features. Adapting this paradigm to time series, [3] only allow for modification of one single contiguous section of the time series. Others only ensure feature sparsity, while modifying all time steps of the sequence [1].
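The greedy post-hoc strategy can be sketched as follows. This is an illustration of the general idea only, not the exact procedure of [14]; the function name `greedy_sparsify` and the threshold classifier `predict_class` are hypothetical, and the sequence is assumed to be flattened into a single list.

```python
def greedy_sparsify(x_q, x_cf, predict_class, target):
    """Zero out residuals from smallest to largest magnitude for as long as
    the counterfactual is still classified as `target`."""
    delta = [c - q for q, c in zip(x_q, x_cf)]
    order = sorted(range(len(delta)), key=lambda i: abs(delta[i]))
    for i in order:
        kept = delta[i]
        delta[i] = 0.0
        candidate = [q + d for q, d in zip(x_q, delta)]
        if predict_class(candidate) != target:
            delta[i] = kept  # undo the step that flipped the prediction
            break
    return [q + d for q, d in zip(x_q, delta)]

# Hypothetical classifier: class 1 if the values sum to more than 1.0.
def predict_class(x):
    return 1 if sum(x) > 1.0 else 0

x_q = [0.0, 0.0, 0.0, 0.0]
x_cf = [0.1, 0.2, 1.5, 0.05]
sparse_cf = greedy_sparsify(x_q, x_cf, predict_class, target=1)
print(sparse_cf)
```

Here the three small modifications are reverted and only the single large one survives, because removing it would change the prediction back to the original class.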

Similarity vs. Sparsity. Figure 1 demonstrates why similarity alone does not guarantee actionability. The counterfactual generated by our approach makes sparse, but more substantial modifications, while the counterfactual generated using a state-of-the-art approach makes minor changes in all time steps and features. If solely regularized by the \(\mathcal {L}_{1}\) norm (i.e. the similarity constraint), the latter would be preferred. Taking the actionability of the counterfactual into account, one would most likely prefer the counterfactual generated by SPARCE despite the higher \(\mathcal {L}_{1}\) loss. As a consequence, sparsity plays a central role in our approach.

1.2 Generative Approaches

To generate more realistic and plausible counterfactuals, while overcoming high computational costs of iterative optimization methods, generative adversarial networks (GANs) have recently been introduced for the generation of counterfactual explanations [16, 17]. GANs have become popular for generating realistic looking fake images by training a generator to create fake samples that a discriminator would erroneously perceive as real samples [4]. GAN-based architectures for counterfactual search add a classifier to the standard GAN approach. In this way, the generator learns to produce realistic looking counterfactuals that change the classifier’s prediction to a target class.

While [16] only evaluate their model on image and tabular data, [17] also assess their approach on univariate time series. Both approaches use \(\mathcal {L}_{1}\) or \(\mathcal {L}_{2}\) norms as regularization terms that act on the generator’s loss function. Particularly for multivariate time series, this formulation is problematic, since it creates proximate, but not sparse counterfactuals. Indeed, sample counterfactuals generated by [17] modify every single time step of the query sequence. In some domains, this might be necessary. However, it is questionable whether such a counterfactual explanation would have any explanatory power. Besides, it is unclear whether these modifications could actually be acted upon in reality. Our approach is thus designed to create truly sparse counterfactual explanations for multivariate time series without compromising other important objectives of counterfactuals, including realism, similarity, and plausibility.

2 Method

Motivated by the insufficient adaptation of counterfactual approaches to multivariate time series, we propose SPARCE: a novel framework to efficiently generate SPARse Counterfactual Explanations for multivariate time series data. Our approach aims to change the class label of an original time series to a target class (precision). Generated counterfactuals should be within the distribution of the original data points (realism) and stay as close as possible to the query sequence (similarity). In contrast to related approaches for multivariate time series, we postulate that counterfactuals are time- and feature-sparse, i.e. that only a subset of features and time steps is modified (sparsity). Finally, for applications where time series evolve smoothly over time, we aim to modify the original data point in a temporally plausible manner (smoothness).

2.1 Generating Counterfactual Explanations

Basing our approach on a generator-discriminator architecture, we ensure realism of the generated samples. In line with [16] we define a modified generator \(\mathcal {G}\) which learns to generate residuals \(\mathbf {\delta }= \mathcal {G}(\textbf{x}_{q})\) from the input sample \(\textbf{x}_{q}\). In contrast to standard GANs, the generator does not use a random seed, but real samples as inputs. Thus, original samples are first divided into queries \(\textbf{x}_{q}\) and targets \(\textbf{x}_{t}\). Targets are samples labeled as the target class \(\textbf{c}_{t}\) and are used as real examples for the discriminator \(\mathcal {D}\). The query subset contains all other samples and is presented as inputs to the generator \(\mathcal {G}\). Residuals created by the generator are added to the query to produce a counterfactual (\(\textbf{x}_{cf}= \textbf{x}_{q}+ \mathbf {\delta }\)). A pre-trained classifier \(\mathcal {C}\) determines the class prediction for the generated counterfactual. At the same time, the counterfactual is presented to the discriminator as a fake sample. In combination with real target samples, the discriminator tries to distinguish between real and fake (i.e. generated) samples. The realism of the generated counterfactual examples increases as the generator learns to fool the discriminator. The classifier prevents the generator from producing zero-residuals, i.e. from learning the identity function (Fig. 2).

Fig. 2. Schematic illustration of our GAN-based approach for counterfactual search. Inputs are divided into query and target time series (displayed as heatmaps) according to the desired target class. A recurrent generator with sparsity activation generates residuals for each query. Residuals are added to the corresponding query to create a counterfactual explanation. A pretrained sequence classifier predicts the class label of the counterfactual. A recurrent discriminator tries to distinguish counterfactuals from real targets.

Generator. The generator is realized with a many-to-many sequence prediction model trained to generate modifications to a query sequence. To capture temporal dependencies in the input, different types of sequence models can be chosen, including long short-term memories (LSTMs), gated recurrent units, or temporal convolutional neural networks. Input and output of the generator are of the same shape. Loss functions for generator and discriminator derive from the minimax loss suggested by [4]. The generator maximizes the discriminator’s estimate that the counterfactual is real (Eq. 1). One important aspect of the generator is the subtractive dual ReLU [15] output in the sparsity layer. Instead of a single linear output, two contrastive outputs allow the network to produce positive and negative residuals while still making it easy to generate exact zero-residuals (\(\mathbf {\delta }= ReLU(\mathbf {\delta }_{pos}) - ReLU(\mathbf {\delta }_{neg})\)).
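The subtractive dual ReLU output can be written down in a few lines. The sketch below uses plain nested lists (time steps by features) instead of a deep learning framework; the pre-activation values `pos` and `neg` are made up for illustration.

```python
def relu(v):
    return v if v > 0.0 else 0.0

def sparsity_layer(pos, neg):
    """delta = ReLU(pos) - ReLU(neg), applied element-wise to two
    (time steps x features) pre-activation matrices."""
    return [
        [relu(p) - relu(n) for p, n in zip(pos_row, neg_row)]
        for pos_row, neg_row in zip(pos, neg)
    ]

# Illustrative pre-activations for 2 time steps and 2 features.
pos = [[0.7, -0.2], [-1.0, -0.3]]
neg = [[-0.5, 0.4], [-0.1, -2.0]]
delta = sparsity_layer(pos, neg)
print(delta)
```

Whenever both pre-activations of an entry are non-positive, the residual is exactly zero, which a single linear or tanh output would only reach for one precise input value.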

Immutable Features. In case of immutable features in the original dataset, the generator only produces residuals for all mutable features. In this specific case, the input to the generator is larger than its output. Generated residuals for the mutable features are then likewise added to the respective mutable features in the query sequence. All immutable features of the query instance remain untouched.
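A minimal sketch of this step, assuming the mutable features are identified by a hypothetical index list `mutable_idx` and that the generator emits one residual per mutable feature and time step:

```python
def apply_residuals(x_q, delta, mutable_idx):
    """Add residuals to the mutable columns of a query sequence.

    x_q:   list of time steps, each a list with all feature values
    delta: list of time steps, each a list with one residual per mutable feature
    """
    x_cf = [list(step) for step in x_q]  # copy, so the query stays intact
    for t, step_delta in enumerate(delta):
        for j, d in zip(mutable_idx, step_delta):
            x_cf[t][j] += d
    return x_cf

# Illustrative query with 2 time steps and 3 features; feature 1 is immutable.
x_q = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
delta = [[0.5, 0.0], [0.0, -1.0]]
x_cf = apply_residuals(x_q, delta, mutable_idx=[0, 2])
print(x_cf)
```

The immutable column is never written to, so it is guaranteed to be identical in query and counterfactual.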

Discriminator. The discriminator takes on the role of distinguishing between real samples (i.e. target samples from the original dataset) and fake samples (i.e. generated counterfactuals). It aims to maximize its estimate that the counterfactual is fake and the target is real (Eq. 7). It is implemented as a binary many-to-one sequence classification model with sigmoid activation that takes in a multivariate time series and produces a probability between 0 and 1, indicating whether the given sample looks like a real or fake sample. As the counterfactuals begin to look more realistic, the discriminator’s accuracy drops towards 50% (chance).
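For orientation, the standard minimax losses of [4] can be sketched on discriminator probabilities as below. This is the textbook formulation and only an assumption about what the referenced equations look like; the function names are hypothetical.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Negative minimax objective: real samples should score near 1,
    counterfactuals near 0. Inputs are discriminator probabilities."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_adversarial_loss(d_fake):
    """Minimax generator term: decreases as the discriminator rates
    counterfactuals as real."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)

# A discriminator at chance level assigns 0.5 to every sample.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))
```

A discriminator that outputs 0.5 everywhere incurs a loss of \(2 \ln 2\), which corresponds to the chance-level accuracy mentioned above.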

Classifier. Unlike vanilla GANs, a counterfactual GAN needs a third neural network, the classifier. In our approach the classifier is realized with a many-to-one sequence classification model. The classifier is pretrained on the original dataset and learns to classify the label of a sequence. In contrast to [16], our classifier does not only distinguish between samples which belong and samples that do not belong to the target class. Instead, we train a full classifier which learns to distinguish all classes in the original dataset. That said, our classifier can either be binary (with sigmoid activation) or multi-class (with softmax activation) in case of two or multiple original class labels, respectively. This property allows us to flexibly alter the desired target class for the generated counterfactuals without retraining the classifier. Moreover, our approach could also simultaneously be trained on all target classes. In this case, generating counterfactuals for different target classes would not require retraining of any network element of our approach.

Regularization. The combination of adversarial loss \(\mathcal {L}_{adv}\) and classification loss \(\mathcal {L}_{cls}\) ensures that the generated counterfactual changes the class label, while resembling a sample from the original data distribution. The classification loss between the predicted class for the counterfactual and the target class is derived from the cross-entropy loss (Eq. 2). In line with other counterfactual approaches, we apply the \(\mathcal {L}_{1}\) norm as a similarity regularization term \(\mathcal {L}_{sim}\) on the generator loss (Eq. 3). Importantly, we also use the \(\mathcal {L}_{0}\) norm as a real sparsity constraint \(\mathcal {L}_{sparse}\) which ensures that the number of modifications stays low (Eq. 4). It was shown that \(\mathcal {L}_{0}\) regularization effectively fosters sparse hidden state updates in RNNs [5]. To address the sequentiality of time series, we introduce another regularization term, the jerk constraint \(\mathcal {L}_{jerk}\). This term ensures that changes are evenly distributed over time by penalizing large differences between modifications in consecutive time steps (Eq. 5). Additional weighting factors \(\lambda _{1-5}\) allow each component of the generator loss to be switched on or off to meet the specific needs of individual datasets. A more fine-grained weighting with weighting factors between 0 and 1 enables a direct influence on the loss balance (Eq. 6).
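The three residual-based terms and their weighted combination can be sketched as follows. The sketch only evaluates the loss values on a residual matrix (time steps by features) and does not address how gradients are obtained. The use of absolute differences for the jerk term, the absence of normalization, and the ordering of the weights (adversarial, classification, similarity, sparsity, jerk) are assumptions made for illustration.

```python
def l1_similarity(delta):
    """L1 norm of the residuals."""
    return sum(abs(d) for row in delta for d in row)

def l0_sparsity(delta):
    """L0 norm: number of non-zero residuals."""
    return sum(1 for row in delta for d in row if d != 0.0)

def jerk(delta):
    """Sum of absolute differences between residuals of consecutive time steps."""
    return sum(
        abs(b - a)
        for prev, curr in zip(delta, delta[1:])
        for a, b in zip(prev, curr)
    )

def generator_loss(adv, cls, delta, lambdas=(1.0, 1.0, 1.0, 1.0, 1.0)):
    """Weighted sum of all five components; a weight of 0 switches a term off."""
    l_adv, l_cls, l_sim, l_sparse, l_jerk = lambdas
    return (l_adv * adv + l_cls * cls + l_sim * l1_similarity(delta)
            + l_sparse * l0_sparsity(delta) + l_jerk * jerk(delta))

# Illustrative residuals for 3 time steps and 2 features.
delta = [[0.0, 0.5], [0.0, -0.5], [0.0, 0.0]]
full = generator_loss(0.2, 0.3, delta)
no_sparse_jerk = generator_loss(0.2, 0.3, delta, lambdas=(1, 1, 1, 0, 0))
print(full, no_sparse_jerk)
```

Setting the last two weights to zero removes the sparsity and jerk terms while leaving the remaining components untouched.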

2.1.1 On the Sparsity of Generated Counterfactuals

One key difference of our model in comparison with other counterfactual approaches is the clear distinction between similarity and sparsity. The combination of the sparsity constraint \(\mathcal {L}_{sparse}\) and the sparsity layer as part of the generator architecture produces truly sparse counterfactuals with zero-residuals in a number of time steps and features. Importantly, we let the system inherently learn the trade-off between realism, precision, similarity, sparsity and smoothness during the training process. As a consequence, unlike other counterfactual approaches for time series, there is no need to define a fixed number of time steps and features that may be changed. Along the same lines, modifications are not restricted to one specific section of the series. Instead, we demonstrate that our approach identifies and modifies salient time steps and features while leaving most non-salient time steps and features untouched.

3 Experiments

Our approach is evaluated on three different multivariate time series datasets in comparison with three related counterfactual methods. The evaluated tasks comprise two movement datasets for multi-class classification and one synthetic time series interpretability benchmark for binary classification. Human motion datasets are anonymized and cannot be mapped back to individual subjects.

3.1 Datasets

MotionSense: The human motion dataset MotionSense [13] (Open Database License ODbL) provides multivariate time series collected by accelerometer and gyroscope sensors of a smartphone stored in a subject’s pocket as they perform different actions. Actions include walking downstairs, walking upstairs, sitting, standing, walking and jogging. For this work, we only used active movement sequences and thus excluded sitting and standing trials which yielded a total number of 11194 samples. Each time series was truncated to a length of 100 time steps. All twelve features describing attitude, gravity, rotation and user acceleration are treated as mutable.

Catching: The Catching dataset [11] (provided by personal permission) contains multivariate two-dimensional movement trajectories of healthy and pathological ball catching trials over 60 time steps. At each time step, 20 features capture the catcher’s arm position as well as the position of the ball. Each of the 1975 catching trials is assigned a label indicating the subject’s disease status: healthy control, patient with Autism Spectrum Disorder or patient with Spinocerebellar Ataxia. All features specifying the catcher’s body posture are defined as mutable features, while the two features describing the ball position are treated as immutable.

Moving Box: The synthetic Moving Box dataset was introduced to benchmark interpretability in time series predictions [7]. It portrays a wide range of temporal and spatial properties commonly found in multivariate time series. Each time series spans 50 time steps and 50 features of which only a subset is salient. Samples are assigned a binary label (0: negative class, 1: positive class) and have a defined start and end point of salient time steps and features per sample. In this dataset, all features are mutable. We used a representative subset containing 13950 samples with boxes of different sizes and at varying positions as well as a variety of generating time series processes.

3.2 Approaches

ICS: We loosely follow [18] for an implementation of an iterative counterfactual search algorithm. Each counterfactual is initialized with a random uniform distribution between the minimum and maximum values of the query sequence. The class of the generated counterfactual is predicted using a pretrained classifier. We use the \(\mathcal {L}_{2}\) distance to measure the classification loss and the unweighted \(\mathcal {L}_{1}\) norm to enforce similarity.

All following approaches are based on GANs combined with a pretrained classifier. To account for temporal dependencies in the data, generator and discriminator are implemented as bidirectional LSTMs [6]. The generator is a two-layer many-to-many bidirectional LSTM with 256 hidden neurons and dropout of 0.4. The discriminator is built up as a one-layer many-to-one bidirectional LSTM with 16 hidden neurons, sigmoid output activation and dropout of 0.4. For both networks, the final LSTM layer is followed by a fully-connected output layer.

GAN: This approach consists of a counterfactual LSTM-GAN producing complete counterfactuals based on query sequences. The fully-connected output layer of the generator is followed by a tanh activation. The generator loss is regularized using the \(\mathcal {L}_{1}\) norm to optimize the distance between counterfactual and query.

CounteRGAN: This approach is a time series specific implementation of [16] and implements an LSTM generator that produces residuals based on query sequences. All other aspects of the implementation are equal to the GAN approach.

SPARCE: Our approach likewise generates residuals instead of complete counterfactuals. In comparison to CounteRGAN, we additionally regularize the generator loss via sparsity and smoothness constraints (cf. Section 2.1). Moreover, we add weighting factors \(\lambda _{1-5}\) to enable the (de-)activation of single regularization constraints if required. Most importantly, the LSTM generator implemented in our approach does not conclude with a linear or tanh activation layer, but instead uses a custom sparsity layer of two interoperating ReLU activations (cf. Section 2.1).

3.3 Evaluation Metrics

Realism: In line with [19], we use t-distributed stochastic neighbor embedding (t-SNE) for a visual assessment of the in-distributionness of the generated counterfactuals [12]. We separately plot query and target samples of the original dataset along with the counterfactuals generated by each approach to determine whether the generated counterfactuals rather resemble queries or targets.

Precision: Classification error of generated counterfactuals is measured by the \(\mathcal {L}_{2}\) norm between the classifier’s prediction for a counterfactual sequence and the target class. The metric is indicated as the average distance across all test samples. The lower the metric, the higher the precision of the counterfactual approach. A precision value of 0.0 means that all generated counterfactuals were correctly classified as the target class.
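As a sketch, the metric can be computed from the classifier's class probabilities as shown below. Encoding the target class as a one-hot vector is an assumption; the function name is hypothetical.

```python
import math

def precision_error(predictions, target_class):
    """Mean L2 distance between predicted class probabilities and the
    one-hot encoded target class (lower is better)."""
    total = 0.0
    for probs in predictions:
        total += math.sqrt(sum(
            (p - (1.0 if k == target_class else 0.0)) ** 2
            for k, p in enumerate(probs)
        ))
    return total / len(predictions)

# Two counterfactuals, three classes, target class 1.
preds = [[0.0, 1.0, 0.0], [0.5, 0.5, 0.0]]
print(precision_error(preds, target_class=1))
```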

Similarity: The \(\mathcal {L}_{1}\) distance between each query and the corresponding counterfactual is used to assess similarity. The metric is averaged over all test samples and normalized using the number of time steps and features in the dataset. Lower values indicate higher mean proximity of the generated counterfactuals to the corresponding queries.

Sparsity: Generated counterfactuals of each approach are evaluated on the number of modified time steps and features to transform the query into the counterfactual using the \(\mathcal {L}_{0}\) norm between queries and corresponding counterfactual examples. Values are averaged and normalized in the same way as the similarity metric. Here lower values represent higher average sparsity, i.e. fewer modifications in the time and feature dimensions. In the case of immutable features in the dataset, the sparsity metric is only computed on all mutable features. As a consequence, the maximum sparsity value equals 1.0 indicating that all features in all time steps have been modified in each counterfactual.
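A minimal sketch of this metric, assuming all sequences have the same shape and the mutable features are given by a hypothetical index list `mutable_idx`:

```python
def sparsity_metric(queries, counterfactuals, mutable_idx):
    """Fraction of mutable (time step, feature) entries that were modified,
    taken over all samples. 0.0 = nothing changed, 1.0 = everything changed."""
    changed, total = 0, 0
    for x_q, x_cf in zip(queries, counterfactuals):
        for step_q, step_cf in zip(x_q, x_cf):
            for j in mutable_idx:
                if step_q[j] != step_cf[j]:
                    changed += 1
                total += 1
    return changed / total

# One sample with 2 time steps and 3 features; feature 2 is immutable.
queries = [[[1.0, 2.0, 9.0], [3.0, 4.0, 9.0]]]
counterfactuals = [[[1.0, 2.5, 9.0], [3.0, 4.0, 9.0]]]
print(sparsity_metric(queries, counterfactuals, mutable_idx=[0, 1]))
```

Because all sequences share one shape, dividing the total count of modified entries by the total count of mutable entries is equivalent to averaging the normalized per-sample values.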

Smoothness: This time series specific metric is assessed with the \(\mathcal {L}_{2}\) distance between modifications of consecutive time steps. High values indicate large differences between modifications in subsequent steps. Lower values represent modifications that are more smoothly distributed over the course of the sequence. This metric is likewise averaged across all samples and normalized using the number of time steps and features.

4 Results

4.1 Quantitative Evaluation

All results are reported on the held-out subsets for testing (20% of each dataset). Unless otherwise stated, results are averaged over five repetitions with random seeds. The target class for counterfactuals is healthy control for Catching, walking upstairs for MotionSense and class 1 for Moving Box. ICS is performed for 100 steps (\(\lambda _{init} = 1.0\), max. \(\lambda \) steps = 10). The loss is minimized with Adam [10] optimization (\(lr = 0.4, \beta _1 = 0.9, \beta _2 = 0.999\)). All GAN-based approaches are trained for 100 epochs in batches of 32 samples using Adam optimization (\(lr = 0.0002, \beta _1 = 0.5, \beta _2 = 0.999\)). For all quantitative metrics, lower values represent better performance. The best value for each metric is printed in bold numbers.

On the Catching dataset, GAN and CounteRGAN achieve a precision of 100%, closely followed by our approach (Table 1). SPARCE outperforms ICS, GAN and CounteRGAN on the similarity and sparsity of generated counterfactuals and shares the best smoothness value with the CounteRGAN approach. No tested approach besides ours generates sparse counterfactuals. This observation also holds for the MotionSense and Moving Box datasets. SPARCE reaches the best or second-best performance on each metric in spite of making considerably sparser modifications than the other approaches.

4.2 Realism

We qualitatively assess the in-distributionness of generated counterfactuals for the synthetic Moving Box dataset via t-SNE visualization (\(components = 2\), \(perplexity = 4.4\), \(iterations = 300\)). In Fig. 3, the first subplot illustrates the distribution of queries and targets in the original dataset. The remaining subplots additionally show the distribution of counterfactuals generated by the respective approaches. While counterfactuals generated by ICS lie partly within, but largely outside of, the original distribution, those generated by GAN form separate groups next to queries and targets. Since the task of counterfactual search is to modify a query sample so that it looks like a target, counterfactuals generated by CounteRGAN and SPARCE show the most promising distributions. Indeed, counterfactuals of both approaches modify queries in a way that the resulting sequences approximate and even overlap with target samples.

4.3 Saliency

Since salient features and time steps are known upfront for the synthetic Moving Box dataset, we compare their overlap with the features and time steps modified by each approach. A perfect counterfactual would only modify salient inputs. We first visually compare modifications for queries with boxes of different sizes and positions (Fig. 4). In all heatmaps, the x-axis represents the feature axis and time is on the y-axis. In the second column, the salient features and time steps corresponding to each query are shown in color. All remaining sub-figures demonstrate the modifications to the queries. White spaces are zero-residuals (i.e. sparse time steps and features without modifications). Darker colors indicate stronger modifications.

ICS largely fails to identify salient points in the input. All GAN-based methods detect the position of most salient inputs. However, GAN and CounteRGAN additionally modify non-salient inputs. In contrast, SPARCE modifies far fewer inputs overall and focuses on salient inputs. For this dataset, the performance of our approach can be further improved by switching off \(\lambda _{4,5}\), i.e. sparsity and jerk regularization. This shows that sparsity is primarily induced by the sparsity layer. It also demonstrates that the application of the jerk constraint depends on the problem. Here, a clear value increase marks the transition from non-salient to salient inputs. In human motion datasets, in contrast, smooth movements are natural and desired.

We furthermore assess the salience overlap in a quantitative manner via the receiver operating characteristic (ROC) curve in combination with the area under the curve (AUC) score. Higher AUC scores indicate better discrimination performance between salient and non-salient inputs. Figure 5 visualizes mean ROC curves over five repetitions for both target classes. In both cases, we see that our approach produces counterfactuals that show a substantially higher overlap with predefined salient inputs than other approaches. Visual and quantitative evaluation therefore demonstrates that our approach creates sparse counterfactual explanations and is also suitable for the identification of salient inputs in multivariate time series.
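The AUC score for such an overlap can be sketched with the rank-based definition: the probability that a randomly drawn salient entry receives a higher score than a randomly drawn non-salient entry. Using the absolute residual as the score is an assumption made for this illustration, and the function name is hypothetical.

```python
def saliency_auc(residuals, salient_mask):
    """AUC of |residual| as a score for separating salient (1) from
    non-salient (0) entries; ties count as one half."""
    scores = [abs(d) for row in residuals for d in row]
    labels = [m for row in salient_mask for m in row]
    pos = [s for s, m in zip(scores, labels) if m == 1]
    neg = [s for s, m in zip(scores, labels) if m == 0]
    if not pos or not neg:
        raise ValueError("need both salient and non-salient entries")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Illustrative 2 x 2 example: the second feature is salient in both time steps.
mask = [[0, 1], [0, 1]]
focused = [[0.0, 0.8], [0.0, 0.3]]   # modifies only salient entries
misplaced = [[0.2, 0.0], [0.0, 0.0]]  # modifies only a non-salient entry
print(saliency_auc(focused, mask), saliency_auc(misplaced, mask))
```

The pairwise comparison is quadratic in the number of entries, which is acceptable for a sketch but would be replaced by a sorting-based routine for full datasets.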

4.4 Geometric Plausibility

To assess geometric plausibility of the Catching dataset, we compute the Euclidean distances between body parts for the original dataset and the generated counterfactuals (Fig. 6). ICS is excluded from the figure, since the corresponding values lie outside of the displayed area. Counterfactuals generated by our approach most closely resemble the body-part distances found in the original data. This can indicate higher geometric plausibility of our generated counterfactuals. In order to fully inspect geometric plausibility, however, the angles at which the joints are positioned in relation to one another would also have to be examined.
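The distance computation itself is simple and can be sketched as below. The interleaved feature layout `[x0, y0, x1, y1, ...]` and the joint indices are hypothetical; the actual layout of the Catching dataset is not specified here.

```python
import math

def body_part_distances(sequence, joint_a, joint_b):
    """Euclidean distance between two 2D joints at every time step.

    sequence: list of time steps, each a flat list [x0, y0, x1, y1, ...]
    """
    distances = []
    for step in sequence:
        ax, ay = step[2 * joint_a], step[2 * joint_a + 1]
        bx, by = step[2 * joint_b], step[2 * joint_b + 1]
        distances.append(math.hypot(bx - ax, by - ay))
    return distances

# Two time steps with two joints each.
sequence = [[0.0, 0.0, 3.0, 4.0], [1.0, 1.0, 1.0, 2.0]]
print(body_part_distances(sequence, joint_a=0, joint_b=1))
```

Comparing the distribution of these distances between original samples and counterfactuals indicates whether limb lengths stay within a plausible range.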

5 Conclusions

We proposed SPARCE, a GAN architecture for generating time- and feature-sparse counterfactual explanations for multivariate time series. Our approach extends previous methods by a custom sparsity layer and additional loss regularization for sparsity and smoothness. In extensive experiments, we demonstrate that in spite of making substantially sparser modifications SPARCE achieves comparable or superior performance on common metrics for counterfactual search. Benchmarking our approach on a synthetic interpretability dataset, we show that it can also be used for feature attribution. The application to real-world human motion datasets demonstrates that our approach generates sparser and more plausible counterfactuals than related approaches.

The design of our approach allows for a flexible change of the desired target class, as well as an easy adaptation of the counterfactual value function catering to the needs of other applications. Future extensions can consider other applications (e.g. weather, stocks) and domain-specific regularization terms. In critical sectors such as healthcare, misinterpretation of systems can have severe consequences. XAI systems should thus always be validated by human experts. To enhance the understandability of generated counterfactuals, further work can investigate the visualization of explanations for end-users (e.g. in textual or visual form).

References

1. Ates, E., Aksar, B., Leung, V.J., Coskun, A.K.: Counterfactual explanations for multivariate time series. In: 2021 International Conference on Applied Artificial Intelligence (ICAPAI), pp. 1–8. IEEE (2021)

2. Dandl, S., Molnar, C., Binder, M., Bischl, B.: Multi-objective counterfactual explanations. In: Bäck, T., Preuss, M., Deutz, A., Wang, H., Doerr, C., Emmerich, M., Trautmann, H. (eds.) PPSN 2020. LNCS, vol. 12269, pp. 448–469. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58112-1_31

3. Delaney, E., Greene, D., Keane, M.T.: Instance-based counterfactual explanations for time series classification. In: Sánchez-Ruiz, A.A., Floyd, M.W. (eds.) ICCBR 2021. LNCS (LNAI), vol. 12877, pp. 32–47. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-86957-1_3

4. Goodfellow, I., et al.: Generative adversarial nets. In: Advances in Neural Information Processing Systems, vol. 27 (2014)

5. Gumbsch, C., Butz, M.V., Martius, G.: Sparsely changing latent states for prediction and planning in partially observable domains. In: Advances in Neural Information Processing Systems, vol. 34 (2021)

6. Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

7. Ismail, A.A., Gunady, M., Corrada Bravo, H., Feizi, S.: Benchmarking deep learning interpretability in time series predictions. Adv. Neural. Inf. Process. Syst. 33, 6441–6452 (2020)

8. Karimi, A.H., Schölkopf, B., Valera, I.: Algorithmic recourse: from counterfactual explanations to interventions. In: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, pp. 353–362 (2021)

9. Keane, M.T., Smyth, B.: Good counterfactuals and where to find them: a case-based technique for generating counterfactuals for explainable AI (XAI). In: Watson, I., Weber, R. (eds.) ICCBR 2020. LNCS (LNAI), vol. 12311, pp. 163–178. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58342-2_11

10. Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: International Conference on Learning Representations (2015)

11. Lang, J., Giese, M.A., Synofzik, M., Ilg, W., Otte, S.: Early recognition of ball catching success in clinical trials with RNN-based predictive classification. In: Farkaš, I., Masulli, P., Otte, S., Wermter, S. (eds.) ICANN 2021. LNCS, vol. 12894, pp. 444–456. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-86380-7_36

12. Van der Maaten, L., Hinton, G.: Visualizing data using t-SNE. J. Mach. Learn. Res. 9(11), 1–27 (2008)

13. Malekzadeh, M., Clegg, R.G., Cavallaro, A., Haddadi, H.: Mobile sensor data anonymization. In: Proceedings of the International Conference on Internet of Things Design and Implementation, pp. 49–58. IoTDI 2019, ACM, New York, NY, USA (2019)

14. Mothilal, R.K., Sharma, A., Tan, C.: Explaining machine learning classifiers through diverse counterfactual explanations. In: Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, pp. 607–617 (2020)

15. Nair, V., Hinton, G.E.: Rectified linear units improve restricted Boltzmann machines. In: ICML (2010)

16. Nemirovsky, D., Thiebaut, N., Xu, Y., Gupta, A.: CounteRGAN: generating realistic counterfactuals with residual generative adversarial nets. arXiv preprint arXiv:2009.05199 (2020)

17. Van Looveren, A., Klaise, J., Vacanti, G., Cobb, O.: Conditional generative models for counterfactual explanations. arXiv preprint arXiv:2101.10123 (2021)

18. Wachter, S., Mittelstadt, B., Russell, C.: Counterfactual explanations without opening the black box: automated decisions and the GDPR. Harv. JL & Tech. 31, 841 (2017)

19. Yoon, J., Jarrett, D., Van der Schaar, M.: Time-series generative adversarial networks. In: Advances in Neural Information Processing Systems, vol. 32 (2019)

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

Cite this paper

Lang, J., Giese, M.A., Ilg, W., Otte, S. (2023). Generating Sparse Counterfactual Explanations for Multivariate Time Series. In: Iliadis, L., Papaleonidas, A., Angelov, P., Jayne, C. (eds) Artificial Neural Networks and Machine Learning – ICANN 2023. ICANN 2023. Lecture Notes in Computer Science, vol 14259. Springer, Cham. https://doi.org/10.1007/978-3-031-44223-0_15

DOI: https://doi.org/10.1007/978-3-031-44223-0_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-44222-3

Online ISBN: 978-3-031-44223-0

eBook Packages: Computer Science, Computer Science (R0)