Abstract

Social media is a potential source of information that infers latent mental states through Natural Language Processing (NLP). While narrating real-life experiences, social media users convey their feeling of loneliness or isolated lifestyle, impacting their mental well-being. Existing literature on psychological theories points to loneliness as the major consequence of interpersonal risk factors, propounding the need to investigate loneliness as a major aspect of mental disturbance. We formulate lonesomeness detection in social media posts as an explainable binary classification problem, discovering the users at-risk, suggesting the need of resilience for early control. To the best of our knowledge, there is no existing explainable dataset, i.e., one with human-readable, annotated text spans, to facilitate further research and development in loneliness detection causing mental disturbance [9]. In this work, three experts: a senior clinical psychologist, a rehabilitation counselor, and a social NLP researcher define annotation schemes and perplexity guidelines to mark the presence or absence of lonesomeness, along with the marking of text-spans in original posts as explanation, in 3, 521 Reddit posts. We expect the public release of our dataset, LonXplain, and traditional classifiers as baselines via GitHub (https://github.com/drmuskangarg/lonesomeness_dataset).

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

According to the World Health Organization,Footnote 1 one in three older people feel lonely. Loneliness has a serious impact on older people’s physical and mental health, quality of life, and their longevity. According to the Loneliness and the Workplace: 2020 U.S. Report, three in five Americans (61%) report the feeling of loneliness, compared to more than half (54%) in 2018 [16]. Older adults are at high risk for morbidity and mortality due to prolonged isolation, especially during the COVID-19 [8] era. To this end, researchers demonstrate loneliness as a major concern for increased risk of depression, anxiety, and stress thereby affecting cognitive functioning, sleep quality, and overall well-being [7]. We define lonesomeness as an unpleasant emotional response to perceived isolation through mind and character, especially in terms of concerns for social lifestyle. The anonymous nature of Reddit social media platform provides an opportunity for its users to express their thoughts, concerns, and experiences with ease. We leverage Reddit to formulate a new annotation scheme and perplexity guidelines for constructing an explainable annotated dataset for Lonesomeness. We start with an example of how this information is reported in social media texts.

Example: Within the last month, I have lost my best friend and my grandmother, to whom I was very close with (

). This time last year, I was involved in an incident where I was

(we were not dating at the time), and it is bringing up some

for me. I am continuously mentioning about moving cities on my own. I am

now and have

!

In this example, a person is upset about losing all loved ones and coping with the memories of manipulative situations in their life. Red colored words depict explanations for lonesomeness and blue colored words represent the triggering circumstances.

Sociologists Weiss et al. in 1975 [22] introduce a theory of loneliness suggesting the need for six social needs to prevent loneliness in stressful situations: (i) attachment, (ii) social integration, (iii) nurturing, (iv) reassurance of worth, (v) sense of reliable alliance and (vi) guidance. Furthermore, Baumeister et al. [5] explains various indices of social isolation associated with suicide as living alone, and low social support across the lifespan. In recent investigations of attachment style, Nottage et al. [18] argue that loneliness mediates a positive association between attachment style and depressive symptoms. Loneliness results in disrupted work-life balance, emotional exhaustion, insomnia and depression [6]. With this background, the annotation guidelines are developed through the collaborative efforts of three experts (a clinical psychologist, a rehabilitation counselor and a social NLP researcher) for early detection of lonesomeness, which if left untreated, may cause chronic disease such as self harm or suicide risk.

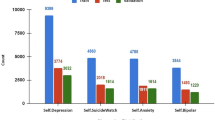

We examine potential indicators of mental disturbance in Reddit posts, aiming to discover users at risk through explainable lonesomeness annotations, as shown in Fig. 1. We first introduce the Reddit dataset for lonesomeness detection in social media posts reflecting mental disturbance. Our data annotation scheme is designed to facilitate the discovery of users with (potential) underlying tendencies toward self harm, including suicide, through loneliness detection. We construct this scheme using three clinical questionnaires: (i) UCLA Loneliness Scale [21], (ii) De Jong Gierveld Loneliness Scale [19], and (iii) Loneliness and Social Dissatisfaction scale [3].

The quantitative literature on loneliness and mental health has limited, openly available language resources due to the sensitive nature of the data. Examples are shown in Table 1. We aim to fill this gap by introducing a new dataset for lonesomeness classification with human-generated explanations and to make it publicly available on Github. Our contributions are summarized as follows:

-

We define an experts-driven annotation scheme for Lonesomeness detection.

-

We deploy the annotation scheme to construct and release LonXplain, a new dataset containing 3521 instances for early detection of textitlonesomeness that thwarts belongingness and potentially leads to self harm.

-

We deploy existing classifiers and investigate explainability through Local Interpretable Model-Agnostic Explanations (LIME), suggesting an initial step toward more responsible AI models.

2 Corpus Construction

We collect Reddit posts through The Python Reddit API Wrapper (PRAW) API, from 02 December 2021 to 06 January 2022 maintaining a consistent flow of 100 posts per day. The subreddits extracted for this dataset are those most widely used in the discussion forum for depression (r/depression) and suicide risk (r/SuicideWatch). We manually filter out irrelevant posts with empty strings and/or posts containing only URLs.

We further clean and preprocess the dataset and filter out the data samples (posts) longer than 300 words, to simplify the complexity of a given task. The length of a single sample in the original dataset varies from 1 to more than 4000 words, highlighting the need for bounding the length, thus inducing comparatively consistent data points for developing AI models. We define the experts-driven annotation scheme, train and employ three student annotators for data annotation and compute inter-annotator agreement to ensure the coherence and reliability of the annotations. We emphasize FAIR principles [23] while constructing and releasing LonXplain.

2.1 Annotation Scheme

Dunn introduces six dimensions of wellness (spiritual, social, intellectual, vocations, emotional, and physical), affecting users’ mental well-being. A key consequence of mental disturbance is a tendency toward the negative end of the scale for these dimensions, e.g., loneliness derives from negative values associated with the spiritual, social, and emotional dimensions. Further intensification may lead to thwarted belongingness [2], suicidal tendencies, and self harm.

Bringing together the two disiplines of Natural Language Processing (NLP) and clinical psychology, we adopt an annotation approach that leverages both the application of NLP to Reddit posts and clinicial questionnaires on loneliness detection, as two concrete baselines. The annotations are based on two research questions: (i) “RQ1: Does the text contain indicators of lonesomeness which alarms suicidal risk or self harm in a person?,” and (ii) “RQ2: What should be the extent to which annotators are supposed to read in-between-the-lines for marking the text-spans indicating the presence or absence of lonesomeness.”

Our experts access three clinical questionnaires used by mental health practitioner, to define lonesomeness annotation guidelines. The UCLA Loneliness Scale [21] that measures loneliness was adapted to distinguish among three dimensions of loneliness: social loneliness (the absence of a social network), emotional loneliness (the absence of a close and intimate relationship), and existential loneliness (the feeling of being disconnected from the larger world). The De Jong Gierveld Loneliness Scale [19] is a 6-item self-report questionnaire over 5-point Likert scale (ranging from 0 (not at all) to 4 (completely)) that assesses two dimensions of loneliness: emotional loneliness and social loneliness.

From Loneliness and Social Dissatisfaction scale [3] we use 10 out of 20 items, reflecting loneliness on a 5-point Likert scale, ranging from 1 (strongly disagree) to 5 (strongly agree). The experts annotate 40 data points using fine-grained guidelines seperately at 3 different places to avoid any influence. Furthermore, we find the possibility of dilemmas due to the psychology-driven subjective and complex nature of the task.

2.2 Perplexity Guidelines

We propose perplexity guidelines to simplify the task and facilitate future annotations. We observe following:

-

1.

Lonesomeness in the Past: A person with a history of loneliness may still be at risk of self harm or suicide. For instance,’I was so upset being lonely before Christmas and today I am celebrating New Year with friends’. We define rules to capture indicators of prior lonesomeness such as attending a celebration to fill the void associated with this negative emotion. With both negative and positive clauses in the example above, the NLP expert would deem this neutral, yet our clinical psychologist discerns the presence of lonesomeness, with both clauses contributing to its likelihood. This post is thus marked as presenting lonesomeness, an indicator that the author is potentially at risk.

-

2.

Ambiguity with Social Lonesomeness: Major societal events such as breakups, marriage, best friend related issues may be mentioned in different contexts, suggesting different perceptions. We formulate two annotation rules: (i) Any feeling of void/missing/regrets/or even mentioning such events with negative words is marked as the presence of lonesomeness. Example: ‘But I just miss her SO. much. It’s like she set the bar so high that all I can do is just stare at it.’, (ii) Mentions of fights/ quarrels/ general stories are marked with absence of lonesomeness. Example: ‘My husband and I just had a huge argument and he stormed out. I should be crying or stopping him or something. But I decided to take a handful of benzos instead.’.

2.3 Annotation Task

We employ three postgraduate students, trained by experts on manual annotations. Professional training and guidelines are supported by perplexity guidelines. After three successive trial sessions to annotate 40 samples in each round, we ensure their coherence and understanding of the task for further annotations.

Each data sample is annotated by three annotators in three different places to confirm the authenticity of the task. We restrict the annotations to 100 per day, to maintain the quality of the task and consistency. We further validate three annotated files using Fliess’ Kappa inter-observer agreement study to ensure reliability of the dataset, where kappa is calculated at \(71.83\%\), and carry out agreement studies for lonesomeness detection. We obtain final annotations based on a majority voting mechanism and experts’ opinions, resulting in LonXplain dataset. Furthermore, the explanations are annotated by a group of 3 experts to ensure the nature of LonXplain as psychology-grounded and NLP-driven. We deploy FAIR principle [23] by releasing LonXplain data in a public repository of Github, making it findable and accessible. The comma separated format contains <text, label, explanations> in English language, ensuring the interoperability and re-usability. We illustrate the samples of LonXplain in Table 2, with blue and red color indicating the presence of cause [10] and consequence [11], respectively. This task of early consequence detection, may prevent chronic disease such as depression and self-harm tendencies in the near future.

3 Data Analyses

Corpus construction is accompanied by fine-grained analyses for: (i) statistical information about the dataset and (ii) overlapping terms and syntactic similarity based on word cloud and keyword extraction. In this section, we further discuss the linguistic challenges with supporting psychological theories for LonXplain.

3.1 Statistical Information

LonXplain contains 3, 521 data points among which 54.71% are labeled as positive sample, depicting the presence of lonesomeness in a given text. We observe the statistics for number of words and sentences in both the Text and Explanation (see Table 3). The average number of Text words is 4 and the maximum number of words reported as explainable in text spans is 19, highlighting the need for identifying focused words for classification.

3.2 Overlapping Information

We investigate words that represent class 0: absence of lonesomeness and class 1: presence of lonesomeness in a given text. Words such as life, im, feel, and people indicate a very large syntactic overlap between these classes (see Fig. 2). However, a seemingly neutral (or even positive) word like friend indicates a discussion about interpersonal relations, which be an indicator of a negative mental state. We further obtain word clouds for explainable text-spans indicating label 1 in LonXplain. We find that words such as lonely, alone, friend, someone, talk may be indicators of the presence of lonesomeness.

For keyword extraction, we use KeyBERT, a pre-trained model that finds the sub-phrases in a data sample reflecting the semantics of the original text. BERT extracts document embeddings as a document-level representation and word embeddings for N-gram words/phrases [12]. Consider a top-20 word list for each of label 0 (K0) and label 1 (K1):

K0 : sleepiness, depresses, tiredness, relapses, fatigue, suffer, insomnia, suffers, stressed, sleepless, selfobsessed, stress, numbness, cry, diagnosis, sleepy, moodiness, depressants, stressors, fatigued.

K1 : dumped, hopelessness, introvert, breakup, hopeless, loneliness, heartbroken, dejected, introverted, psychopath, breakdown, graduation, breakdowns, overcome, depressant, solace, counseling, befriend, sociopaths, abandonment.

Although some important terms are missed by KeyBERT, e.g., homelessness and isolated, most of the terms in K1 indicate lonesomeness. Thus, KeyBERT plays a pivotal role in LonXplain, as an example of a context-aware AI classifier that lends itself to explainable output, and (more generally) responsible AI.

4 Experiments and Evaluation

We formulate the problem of lonesomeness detection as a binary class classification problem, define the performance evaluation metrics, and discuss the existing classifiers. Following this, we present the experimental setup and implement the existing classifiers for setting up baselines on LonXplain. The explainable AI method, LIME [17], is used to find text-spans responsible for decision making, highlighting the scope of improvement.

Problem Formulation. We define the task of identifying Lonesomeness L and its explanation E in a given document D. The ground-truth contains a tuple \(<D (text), L (bool), E (text)>\) for every data point in a comma separated format. A corpus of D documents where \(D=\{d_1, d_2, ..., d_n\}\) for n documents where n = 3,522 in LonXplain. We develop a binary classification model for every document \(D_i\) to classify it as \(L_i\).

4.1 Experimental Setup

Evaluation Metrics. We evaluate the performance of our experiments in terms of precision, recall and f-score. Accuracy is a simple and intuitive measure of overall performance that is easy to interpret. However, accuracy alone may not be a good measure of performance for imbalanced datasets, where one class (e.g., user’s lonely posts) is not equal to the other (e.g., user’s non-lonely posts). In such cases, a model that always predicts the majority class can achieve high accuracy, but will not be useful for detecting the minority class. In this work, our task is to identify lonesomeness and not to identify a user’s non-lonely posts. Thus, we consider Accuracy as an important metrics for evaluation.

Baselines. We compare results with linear classifiers using word embedding Word2Vec [15]. We further use GloVe [20] to obtain word embeddings and deploy them on two Recurrent Neural Networks (RNN): Long Short Term Memory (LSTM) and Gated Recurrent Unit (GRU) for performance evaluation.

-

1.

LSTM: Long Short-Term Memory networks (LSTM) take a sequence of data as an input and make predictions at individual time steps of the sequential data. We apply a LSTM model for classifying texts that indicate lonesomeness versus those that do not.

-

2.

GRU: Gated Recurrent Units (GRU) use connections between the sequence of nodes to resolve the vanishing gradient problem. Since the textual sequences in the LonXplain present a mixed context, GRU’s non-sequential nature offers improvement over LSTM.

We additionally built out versions of each approach above with bidirectional RNNs (BiLSTM, BiGRU, respectively), where the input sequence is processed in both forward and backward directions. This enrichment enables the above technologies to capture the past and future context of the input sequence—yielding a significant advantage over the standard RNN formalism.

Hyperparameters. We used grid-search optimization to derive the optimal parameters for each method. For consistency, we used the same experimental settings for all models with 10-fold cross-validation, reporting the average score. Varying length posts are padded and trained for 150 epochs with early stopping, and patience of 20 epochs. Thus, we set hyperparameters for our experiments with transformer-based models as H = 256, O = Adam, learning rate = 1\(\times 10^{-3}\), and batch size 128.

4.2 Experimental Results

Table 4 reports precision, recall and f-score for all classifiers, resulting in non-reliable Accuracy for real-time use. Word2vec achieved the lowest Accuracy of 0.64. We postulate this low performance because word2vec is unable to capture contextual information. GloVe + GRU, a state-of-the-art deep learning model, achieved the highest performance among all recurrent neural network models, counterparts with an F1-score and Accuracy of 0.77 and 0.78, respectively.

We further examine the explanations for Recurrent neural network models through Local Interpretable Model-Agnostic Explanations (LIME). LIME provide a human-understandable explanation of how the model arrived at its prediction of lonesomneness in a given text. We further use ROUGE-1 scores to validate the explanations obtained through LIME, over all positive samples in test data with explainable text-spans in ground-truth of LonXplain (see Table 5). We observe that all explanations are comparable and achieve high precision as compared to recall. In the near future, we plan to formulate better explainable approaches by incorporating clinical questionnaires in language models. Consider the following text T1:

T1: What bothers me is the soul crushing

, i haven’t had a

in years and I haven’t been

in what seems like forever. I spend all day in a

writing and hardly see

nor hair of another person besides my dad most of the time. I’m

done with it all to be

, I don’t really see any reason to

this.

BiGRU decides label 1 for T1 with 0.96 prediction probability, highlighting the text-spans: (i) focused by BiGRU for making decision (blue + red colored text), (ii) marked as explanations in the ground truth of LonXplain (red colored text), and (iii) missed text-spans by BiGRU (brown colored text).

5 Conclusion and Future Work

We present LonXplain, a new dataset for identifying lonesomeness through human-annotated extractive explanations from Reddit posts, consisting of 3,522 English Reddit posts labeled across binary labels. In future work, we plan to enhance the dataset with more samples and develop new models tailored explicitly to lonesomeness detection.

The implications of our work are the potential to improve public health surveillance and to support other health applications that would benefit from the detection of lonesomeness. Automatic detection of lonesomeness in the posts at early stage of mental health issues has the potential for preventing prospective chronic diseases. We define annotation guidelines based on three clinical questionnaires. If accommodated as external knowledge from a lexical resource, the outcome of our study has the potential to improve existing classifiers. We keep this idea as an open research direction.

References

Gaur, M., et al.: Iseeq: Information seeking question generation using dynamic meta-information retrieval and knowledge graphs (2022)

Ghosh, S., et al.: Am i no good? towards detecting perceived burdensomeness and thwarted belongingness from suicide notes. In: IJCAI (2022)

Asher, S., Wheeler, V.: Children’s loneliness and social dissatisfaction scale. Child Dev. 55(4), 1456–1464 (1984)

Badal, V.D., et al.: Do words matter? detecting social isolation and loneliness in older adults using natural language processing. Front. Psychiatry 12, 1932 (2021)

Baumeister, R.F., Leary, M.R.: The need to belong: Desire for interpersonal attachments as a fundamental human motivation. Interpers. Dev. 57–89 (2017)

Becker, W.J., Belkin, L.Y., Tuskey, S.E., Conroy, S.A.: Surviving remotely: how job control and loneliness during a forced shift to remote work impacted employee work behaviors and well-being. Hum. Resour. Manage. 61, 449–464 (2022)

Cacioppo, J.T., Hughes, M.E., Waite, L.J., Hawkley, L.C., Thisted, R.A.: Loneliness as a specific risk factor for depressive symptoms: cross-sectional and longitudinal analyses. Psychol. Aging 21(1), 140 (2006)

Donovan, N.J., Blazer, D.: Social isolation and loneliness in older adults: review and commentary of a national academies report. Am. J. Geriatr. Psychiatry 28(12), 1233–1244 (2020)

Garg, M.: Mental health analysis in social media posts: a survey. Arch. Comput. Methods Eng. 30, 1–24 (2023)

Garg, M., et al.: CAMS: an annotated corpus for causal analysis of mental health issues in social media posts. In: Language Resources Evaluation Conference (LREC) (2022)

Ghosh, S., Maurya, D.K., Ekbal, A., Bhattacharyya, P.: EM-PERSONA: emotion-assisted deep neural framework for personality subtyping from suicide notes. In: Proceedings of the 29th International Conference on Computational Linguistics, pp. 1098–1105 (2022)

Grootendorst, M.: Keybert: Minimal keyword extraction with bert. Zenodo (2020)

Kivran-Swaine, F., Ting, J., Brubaker, J., Teodoro, R., Naaman, M.: Understanding loneliness in social awareness streams: expressions and responses. In: Proceedings of the International AAAI Conference on Web and Social Media, vol. 8, pp. 256–265 (2014)

Mahoney, J., et al.: Feeling alone among 317 million others: disclosures of loneliness on twitter. Comput. Hum. Behav. 98, 20–30 (2019)

Mikolov, T., Sutskever, I., Chen, K., Corrado, G.S., Dean, J.: Distributed representations of words and phrases and their compositionality. In: Burges, C.J.C., Bottou, L., Welling, M., Ghahramani, Z., Weinberger, K.Q. (eds.) Advances in Neural Information Processing Systems, vol. 26, pp. 3111–3119. Curran Associates, Inc. (2013). http://papers.nips.cc/paper/5021-distributed-representations-of-words-and-phrases-and-their-compositionality.pdf

Nemecek, D.: Loneliness and the workplace: 2020 us report. Cigna, January (2020)

Nguyen, D.: Comparing automatic and human evaluation of local explanations for text classification. In: Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, vol. 1 (Long Papers), pp. 1069–1078 (2018)

Nottage, M.K., et al.: Loneliness mediates the association between insecure attachment and mental health among university students. Personality Individ. Differ. 185, 111233 (2022)

Penning, M.J., Liu, G., Chou, P.H.B.: Measuring loneliness among middle-aged and older adults: the UCLA and de Jong Gierveld loneliness scales. Soc. Indic. Res. 118, 1147–1166 (2014)

Pennington, J., Socher, R., Manning, C.: Glove: global vectors for word representation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 1532–1543 (2014)

Russell, D., Peplau, L.A., Cutrona, C.E.: The revised UCLA loneliness scale: concurrent and discriminant validity evidence. J. Pers. Soc. Psychol. 39(3), 472 (1980)

Weiss, R.: Loneliness: The Experience of Emotional and Social Isolation. MIT press, Cambridge (1975)

Wilkinson, M.D., et al.: The fair guiding principles for scientific data management and stewardship. Sci. Data 3(1), 1–9 (2016)

Zirikly, A., Dredze, M.: Explaining models of mental health via clinically grounded auxiliary tasks. In: CLPsych (2022)

Acknowledgement

We would like to sincerely thank the postgraduate student annotators, Ritika Bhardwaj, Astha Jain, and Amrit Chadha, for their dedicated work in the annotation process. We are grateful to Veena Krishnan, a senior clinical psychologist, and Ruchi Joshi, a rehabilitation counselor, for their unwavering support during the project. Furthermore, we would like to express our heartfelt appreciation to Prof. Sunghwan Sohn for consistently guiding and supporting us.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Ethics declarations

Ethical Considerations and Broader Impact

We emphasize that the sensitive nature of our work necessitates that we use publicly available Reddit posts in a purely observational manner. This research intends to improve public health surveillance and other health applications that automatically identify lonesomeness on Reddit. To adhere to privacy constraints, we do not disclose any personal information such as demographics, location, and personal details of social media user while making LonXplain publicly available [24]. The annotations scheme is carried out under the observation of a senior clinical psychologist, a rehabilitation counselor, and a social NLP expert. This research is purely observational and we do not claim any solution for clinical diagnosis at this stage [1]. Reddit posts might subject to biased demographics such as race, location and gender of a user. Therefore, we do not claim diversity in our dataset. Our dataset is susceptible to the prejudices and biases of our student annotators. There will be no ethical issues or legal impact with our dataset and is subject to IRB approval.

Rights and permissions

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Garg, M., Saxena, C., Samanta, D., Dorr, B.J. (2023). LonXplain: Lonesomeness as a Consequence of Mental Disturbance in Reddit Posts. In: Métais, E., Meziane, F., Sugumaran, V., Manning, W., Reiff-Marganiec, S. (eds) Natural Language Processing and Information Systems. NLDB 2023. Lecture Notes in Computer Science, vol 13913. Springer, Cham. https://doi.org/10.1007/978-3-031-35320-8_27

Download citation

DOI: https://doi.org/10.1007/978-3-031-35320-8_27

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-35319-2

Online ISBN: 978-3-031-35320-8

eBook Packages: Computer ScienceComputer Science (R0)

). This time last year, I was involved in an incident where I was

). This time last year, I was involved in an incident where I was  (we were not dating at the time), and it is bringing up some

(we were not dating at the time), and it is bringing up some  for me. I am continuously mentioning about moving cities on my own. I am

for me. I am continuously mentioning about moving cities on my own. I am  now and have

now and have  !

!

, i haven’t had a

, i haven’t had a  in years and I haven’t been

in years and I haven’t been  in what seems like forever. I spend all day in a

in what seems like forever. I spend all day in a

writing and hardly see

writing and hardly see  nor hair of another person besides my dad most of the time. I’m

nor hair of another person besides my dad most of the time. I’m  done with it all to be

done with it all to be  , I don’t really see any reason to

, I don’t really see any reason to  this.

this.