Abstract

Algorithms have penetrated every aspect of our lives. While promoting the development of the digital economy, algorithms have also brought many problems. China is one of the first countries to introduce laws and regulations on recommendation algorithms to safeguard users’ right to independent choice in the digital information environment. In this policy context of algorithmic governance, more empirical research is needed on algorithm awareness from the perspective of information users. This study focuses on Chinese rural users’ awareness of the content recommendation algorithm on Douyin, a short-video platform. We triangulated a survey and in-depth interviews to explore the factors behind different levels of content recommendation algorithm awareness, the link between such awareness and user experience, and users’ attitudes towards turning off the recommendation algorithm function, which China’s recent algorithm regulation requires digital platforms to provide to users. We constructed a multi-dimensional scale of algorithm awareness and identified two types of factors that account for users’ algorithm awareness: external and internal factors. We also propose suggestions and countermeasures to improve users’ algorithm awareness. The findings have implications for algorithm governance policymaking in China and beyond; in particular, they suggest regulatory directions for increasing the transparency of content recommendation algorithms and practical approaches to protecting users’ rights to shape what they consume on a daily basis.



Keywords

- Algorithm awareness

- Content recommendation algorithm awareness

- Recommendation algorithm

- Digital divides

- Douyin

- Short-video platform

1 Introduction

1.1 The Rise of Algorithmically Driven Content Recommendation Systems

In recent years, the rapid development of the Internet has accelerated the digitalization of various industries in China, and algorithms have become an important underlying architecture of Internet platforms. However, algorithms have also brought a series of problems, such as algorithmic black boxes, information “filter bubbles”, a lack of diverse social values, and invasions of user privacy. Regulators of digital platforms aim to strengthen their research and policymaking on algorithm auditing [1]. As a result, the management and governance of algorithms have received increasing media attention and public scrutiny.

Algorithm governance has become an important issue for many countries and international organizations. The G20 and IEEE have released guidelines on artificial intelligence and algorithm ethics, and the European Union has introduced regulatory frameworks such as the GDPR (General Data Protection Regulation), the DSA (Digital Services Act), and the DMA (Digital Markets Act). China has taken the lead with one of the world’s first pieces of legislation on recommendation algorithms, the Regulations on Recommendation Algorithm for Internet Information Services, which is considered a comprehensive and systematic regulation of algorithm services.

Algorithms are increasingly involved in users’ everyday information seeking, searching, and sharing. Short-video platforms, which recommend personalized short videos to users through automatic personalization algorithms, have become some of the most popular social media platforms among younger generations and are important sites for understanding the social impact of algorithmic recommendation technologies. As of December 2021, China had over 934 million short-video platform users [2]. Douyin is the largest short-video platform in China. As a short-video community platform for all ages, Douyin allows users to browse other people’s videos or make their own. Its total number of users has exceeded 800 million. Douyin users are highly engaged with the platform, and such high engagement on short-video platforms means that users have richer and more complex interactions with algorithms than users of other social media platforms. Algorithms play a key role in shaping information flows for Douyin users and strongly influence how users engage and interact with algorithmically driven content recommendation systems. This paper addresses the important question of how users perceive algorithms by focusing on Douyin users’ awareness of its content recommendation algorithm.

1.2 A New Digital Divide?

The changing media environment, increasingly shaped by algorithms acting as gatekeepers, may have different consequences for different social groups. While the divide between information-rich and information-poor users persists in how users adopt, adapt, and use information technologies, new forms of digital divide are emerging in how users engage and interact with algorithms in everyday contexts.

The concept of the digital divide focuses on inequalities in the access, adoption, and use of digital technologies. Secondary and tertiary digital divides (the adoption or use divide) shift the focus of digital divide research to gaps in Internet use skills and purposes between different groups [3, 4]. At the level of use, whether users are aware of algorithms, and how strongly, becomes an important differentiating factor: “people’s awareness and understanding of the systems that operate behind-the-scenes to bring content to users” [5] has been listed as one of the Internet skills [6].

In this study, we refer to this skill as algorithm awareness, which describes users’ level of awareness of the existence, function, and impact of algorithms on a digital platform, and whether or not they are able to interact with algorithms consciously and critically. Existing research suggests that algorithm awareness can improve other Internet skills and overall information skills. However, this digital advantage is distributed differently across the population [7]. Therefore, algorithm awareness can be seen as a new and reinforcing dimension of the secondary and tertiary digital divides [7].

While empirical studies of the Chinese rural Internet have depicted a widening digital divide in China [8,9,10], it is unclear whether digital divides in algorithm awareness also exist in China. Also missing from the literature is which socioeconomic or Internet use factors could account for variance in users’ levels of algorithm awareness.

This study combines quantitative and qualitative methods to examine awareness of the content recommendation algorithm among rural Douyin users. The findings will help to understand the dimensions, variance, and factors of algorithm awareness among Chinese short-video users. Three main research questions are raised: What factors influence algorithm awareness? Does algorithm awareness affect users’ platform experience? Does algorithm awareness affect users’ willingness to turn off features related to personalized recommendation algorithms? More importantly, by answering these questions, this study can help regulators, technology companies, and researchers explore how to build a better algorithmically driven media environment for Chinese Internet users and a future of algorithm governance that benefits both information-rich and information-poor populations.

2 Literature Review and Research Questions

2.1 Algorithm Awareness as an Important Digital Skill

Recent research has begun to focus on people’s understanding and adoption of algorithms, and “algorithms” appear increasingly often in public discourse and academic research on digital divides. Hargittai et al. highlighted inequalities in Internet use skills and digital literacy, arguing that group differences in Internet use skills are evident in most online activities, from the types of content people seek and consume to the content they produce and share [11]. Algorithmic skill can therefore be viewed as an Internet skill [5]; other empirical studies refer to algorithmic skills as algorithm awareness. Algorithm-based applications are embedded in users’ daily lives, and how users interact with algorithms constitutes a new digital divide in an increasingly complex digital environment [12].

Scholars have also conducted a series of empirical studies to measure and compare users’ algorithm awareness and algorithmic attitudes across digital platforms, most of them focusing on social media and news platforms. Surprisingly, studies have found that more than half of Facebook users are unaware of the existence of the algorithm that curates Facebook’s News Feed [13]. Other scholars studied users’ attitudes toward and perceptions of algorithmic news in mainland China and found that 67% of users were aware of recommendation algorithms when using news platforms but were unaware of the algorithmic rules [14]. This indicates a general lack of algorithm awareness and algorithmic knowledge among users of algorithmic platforms. Meanwhile, fewer studies have examined the algorithm awareness of video platform users than that of social media or digital news platform users.

Users’ algorithm awareness can be defined and measured, and some empirical studies on algorithmic cognition and attitudes have designed algorithmic attitude scales for users [7, 14], but few studies have measured algorithm awareness systematically. Zarouali et al. developed and validated the Algorithmic Media Content Awareness (AMCA) scale [15], which includes four dimensions of algorithm awareness: content filtering, automated decision-making, human-algorithm interplay, and ethical considerations. The scale, tested on Facebook, YouTube, and Netflix, is a reliable and valid tool for measuring users’ algorithm awareness. However, many different platforms use algorithms, and how best to measure algorithm awareness remains open to discussion.

2.2 Mechanisms for the Formation of Algorithm Awareness

What factors influence people’s algorithm awareness? Based on the knowledge gap hypothesis [16] and digital divide theory, we argue that information inequality is associated with socioeconomic disadvantages (e.g., low education and income levels), which implies that more resourceful and privileged social groups are better prepared to benefit from algorithms [17]. Researchers have found that differences in user attitudes toward algorithms are partly attributable to digital divides: users who spend more time online and who have higher levels of education and media literacy are more aware of algorithmic logics and of the potential risks of algorithms [14].

While mobile devices are considered leapfrogging technologies for Internet access in developing countries, users who depend solely on mobile devices to access the Internet may be more disadvantaged than users who also use PCs. Studies have shown that people who use PCs to access the Internet show higher levels of user engagement, content creation, and information search than those who use only mobile devices, implying that the gap in the knowledge and skills needed to use the Internet effectively grows with the proportion of people who are “mobile-only” [18]. Thus, the devices used to access the Internet may also affect algorithm awareness.

Moreover, the level of “digital literacy”, a key dimension of the secondary and tertiary digital divides, has been found to be related to Internet users’ capability to use digital technologies for capital-enhancing activities [19, 20]. Digital literacy is an important skill for everyday learning, working, and living in the digital era, and improving digital literacy is a key step toward bridging the digital divide in Internet use. Since algorithmic skills can be seen as a new, reinforcing dimension of Internet skills, this study adds digital literacy to the discussion of factors influencing algorithm awareness.

Based on the empirical studies above, this study aims to describe the current status and influencing factors of algorithm awareness among rural users of the short-video platform Douyin in China. Examining these factors will reveal variance in algorithm awareness across social groups and pinpoint which groups are more likely to be disadvantaged in terms of algorithm awareness. We propose the following research question and hypotheses based on the existing literature on digital divides and digital literacy.

- RQ1: What are the main factors that influence users’ algorithm awareness?

- H1: Socio-demographic factors are significantly and positively associated with users’ algorithm awareness.

- H2: Internet device access is related to users’ algorithm awareness.

- H3: Users’ digital literacy is significantly and positively related to users’ algorithm awareness.

Researchers have pointed out that algorithms can be more easily understood from the perspective of users [17]. From this perspective, we need to focus on users’ everyday experience and actual use of algorithms. A survey of Facebook users found that after experiencing and becoming aware of the presence of algorithms, users gained a stronger overall sense of control over Facebook’s algorithms, and that the extent to which users understand and experience algorithms may influence their attitudes toward using the platform [13]. Algorithm awareness guides users to envision, understand, and interact with algorithms [21]. Awareness of recommendation algorithms on online platforms may make users think more critically about the content they see, and may help them assess the platform carefully and decide how to interact with its algorithms [15]. An important insight from studies of users’ everyday experiences with algorithms is that algorithm awareness is a dynamic process that may change as users interact with the algorithmic platform. By understanding how users interact with platforms, researchers can also infer users’ level of algorithm awareness. Therefore, we are also interested in how users’ daily interactions with algorithms influence algorithm awareness. We propose the following hypotheses on user interactions with algorithms:

- H4: Users’ experience and intensity of platform use are significantly and positively related to users’ awareness of algorithms.

- H5: The interaction behavior between users and algorithms is related to users’ algorithm awareness.

2.3 Algorithm Awareness and User Experience

Algorithm awareness can further help users meet their personal needs in an algorithmic society. Studies have shown that understanding algorithmic systems can increase users’ motivation to use algorithmic platforms and their trust in algorithms [22]. The level of users’ understanding of recommendation algorithms positively influences their trust in and acceptance of the platform [23]. User satisfaction is the metric of greatest interest to most digital platforms, and studies have found that satisfaction increases when a platform can explain its recommendation results to the user [24]. Users have a cognitive need to understand algorithmic recommendation mechanisms, and there is a strong link between user experience and the transparency of the algorithms used by digital platforms [25]. Therefore, this study also examines the link between users’ platform experience and algorithm awareness through a second research question.

RQ2: Does algorithm awareness influence users’ experiences of the digital platform?

Nowadays, the collection, use, and storage of users’ personal data has become a precondition for using algorithmic services. Users should have the right to know what personal data they give up in return for the services they receive and to make an informed decision about whether to reveal personal data to digital platforms [26]. The permanent retention of data has led to digital surveillance and large-scale collection of sensitive personal data [27], and the legal community has responded to the risks of personal information collection by private companies [26]. The “right to be forgotten” was codified in the GDPR (Article 17, the right to erasure), which upholds the right of data subjects to demand that data controllers delete their personal information. In algorithmic governance, user rights are an important consideration, and algorithm awareness research should also address them.

We note that China’s algorithm regulation explicitly requires algorithmic service platforms to provide users with the option to turn off algorithmic recommendation services, a requirement intended to protect users’ rights and interests. Platforms such as Douyin have added a function that allows users to turn off personalized content recommendations and personalized ads, so that users can opt out of Douyin’s recommendation algorithm service with one click. However, it is unclear whether users are aware of this function or to what extent they feel empowered after using it. This study investigates users’ attitudes and practices regarding the algorithmic recommendation function from the perspective of their algorithm awareness, and therefore poses a third research question.

RQ3: Does algorithm awareness affect users’ willingness to withdraw from features related to personalized recommendation algorithms?

To date, algorithm awareness research has mainly focused on users’ algorithmic cognition and attitudes, user-algorithm interaction, and user rights in an algorithmic society; previous research has extensively examined traditional search engines, social networking sites, and news recommendation platforms, leaving popular platforms such as TikTok (Douyin in China) understudied. Meanwhile, although countries around the world are strengthening algorithmic governance to protect users’ rights when they engage with algorithmically shaped content, very few studies have discussed algorithmic governance in the context of algorithm awareness. We believe that understanding the level of users’ algorithm awareness will contribute significantly to the debate on algorithm governance.

Our research makes the following contributions. First, this study focuses on short-video platforms and investigates users’ algorithm awareness and the mechanisms that shape it by combining quantitative and qualitative approaches, contributing new findings about users’ everyday experiences on short-video platforms. Second, we explore the factors accounting for users’ levels of algorithm awareness, including socioeconomic factors, Internet use, and users’ engagement with digital platforms; understanding how algorithm awareness is shaped will contribute to empirical research on digital divides, algorithmic divides, and algorithm literacy. Finally, our research tests whether algorithm awareness is related to users’ sense of control over platform algorithms.

3 Methods

3.1 Quantitative Research Design

This study built an algorithm awareness framework and scale to measure users’ algorithm awareness. We based the scale on AMCA and adapted it to Douyin as an example of a short-video platform. We defined four dimensions of algorithm awareness for Douyin users, comprising a total of 14 questions (see Table 1).

The first dimension is users’ awareness of personalized recommendation algorithms and content filtering. Compared with AMCA, we added users’ self-assessment of their own degree of algorithm awareness to this dimension. The dimension refers to users’ awareness that the Douyin algorithm exists and that it personalizes recommendations and filters content. Algorithm awareness should be grounded in users’ knowledge of personalized content filtering, because this knowledge plays an important role in shaping users’ choice of platform and their behavior on it.

The second dimension is awareness of algorithmic automation. Because some AMCA items may be difficult for respondents to understand, we condensed and simplified them. Algorithms are designed to implement human judgments efficiently; hence, users’ awareness of this automated judgment or decision-making process is an important step in understanding how algorithms shape the online environment [15].

The third dimension is users’ awareness of human-algorithm interaction, which we refined based on the characteristics of short-video platforms. The algorithm collects user information and selects the content to present through logical operations, so the user’s behavior, the information the user provides, and the algorithm’s operations jointly produce the pushed content [28]. We argue that a sense of control, that is, the feeling that users can influence the algorithm’s output through their online behavior, is an important part of algorithm awareness. For example, Douyin users’ interests may change over time, and users who do not know how to give feedback to or interact with the algorithm may be unable to stop receiving content they are not interested in.

The fourth dimension is whether users are aware of the ethical issues related to the recommendation algorithm. Based on the literature review, we group the ethical and privacy issues of algorithms into three aspects: possible algorithmic bias and discrimination, algorithmic transparency from the users’ perspective, and personal information security. As discussed earlier, China’s algorithm governance policies require technology companies to provide switch-off buttons that let users opt out of content recommendation algorithms, and to protect users’ rights to algorithm transparency. Therefore, we added questions about user-initiated privacy settings as an extension to AMCA.

Scholars have not yet agreed on how to define and measure digital literacy. Some scholars have categorized online activities by type when discussing Internet skills and use in the secondary digital divide [29]; following this approach, we measured digital literacy by the type and frequency of users’ online activities.

To explore users’ awareness of the opt-out option required by China’s new algorithm governance policy, the questionnaire informed respondents that they could turn off Douyin’s algorithmic recommendation feature and showed the steps for doing so to those who were unaware of it. This part of the survey was placed after the measures of algorithm awareness and platform engagement. We aim to explore whether users’ algorithm awareness is associated with the likelihood of opting out of content recommendation algorithms. Table 2 shows the key variables included in the questionnaire.

This study combines a survey and interviews to explore users’ awareness of algorithms. The survey was used to collect quantitative data. The sample consisted of residents of X town, Du’an County, Hechi City, Guangxi Province; the main respondents were residents over 18 years old who grew up or live in this area. Before distributing the survey, we ran a pilot study with a smaller sample. We then distributed the final questionnaire using snowball sampling through two channels: WeChat and QQ. The questionnaire included filter questions and test questions: the filter questions distinguished Douyin users from non-users, and the test questions required respondents to select a specified Likert scale option, so that invalid responses could be identified and the quality of the survey data ensured.
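The screening step described above (a filter question separating Douyin users from non-users, plus test questions that instruct respondents to pick a specific Likert option) can be sketched as follows. This is a minimal illustration only: the `Response` record, the field names `uses_douyin` and `check_*`, and the expected answers are hypothetical and not taken from the actual questionnaire.

```python
from dataclasses import dataclass, field


@dataclass
class Response:
    # Hypothetical record for one questionnaire submission.
    respondent_id: str
    uses_douyin: bool                            # answer to the filter question
    checks: dict = field(default_factory=dict)   # test-question id -> chosen Likert option


# Hypothetical test questions: each instructs the respondent to choose a given option (1-5).
EXPECTED_CHECKS = {"check_1": 4, "check_2": 2}


def passes_checks(resp: Response, expected: dict = EXPECTED_CHECKS) -> bool:
    """A response is valid only if every test question has the instructed answer."""
    return all(resp.checks.get(q) == answer for q, answer in expected.items())


def screen(responses: list) -> tuple:
    """Drop invalid responses, then split the valid ones into Douyin users and non-users."""
    valid = [r for r in responses if passes_checks(r)]
    users = [r for r in valid if r.uses_douyin]
    non_users = [r for r in valid if not r.uses_douyin]
    return valid, users, non_users


if __name__ == "__main__":
    sample = [
        Response("a", True, {"check_1": 4, "check_2": 2}),
        Response("b", False, {"check_1": 4, "check_2": 2}),
        Response("c", True, {"check_1": 5, "check_2": 2}),  # fails check_1
    ]
    valid, users, non_users = screen(sample)
    print(len(valid), len(users), len(non_users))  # prints: 2 1 1
```

Treating a missing answer to a test question as a failure (rather than skipping it) is a deliberately strict design choice for the sketch; the paper does not state how unanswered test questions were handled.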

3.2 Qualitative Research Design

To gain a deeper understanding of the algorithm awareness of rural Douyin users, this study also designed an interview guide based on the research questions and existing empirical research. The interview covers three aspects of the Douyin algorithm: algorithm awareness, user interaction, and user feedback.

We selected interviewees from the respondents who had completed the survey questionnaire, ensuring that their demographic backgrounds were diverse. Interviewees were selected based on their levels of algorithmic literacy, their experience using Douyin, whether they chose to turn off the personalized recommendation algorithm function, and whether they use Douyin to create content. We interviewed six participants and transcribed the interviews for in-depth analysis.

4 Results

4.1 Descriptive Analysis

A total of 377 valid questionnaires were collected, of which 309 respondents (82%) had used Douyin. The overall Cronbach’s α of the questionnaire was 0.898, above the commonly used 0.8 benchmark and close to 0.9, indicating satisfactory reliability. The reliability of the subscales ranged from 0.807 to 0.885, indicating high reliability of the scale measurements.
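For readers unfamiliar with the reliability statistic, Cronbach’s α for k items is α = k/(k − 1) · (1 − Σσᵢ² / σ²_total), where σᵢ² is the variance of item i and σ²_total is the variance of respondents’ summed scores. The sketch below computes it from raw item scores in plain Python; it is a textbook implementation for illustration, not the software the authors used, and the input layout (one list of scores per item) is an assumption.

```python
from statistics import variance


def cronbach_alpha(items: list) -> float:
    """Cronbach's alpha for a list of item columns (each a list of scores, same respondents)."""
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items must have the same number of respondents")
    # Sum of the individual item variances (sample variances).
    item_var_sum = sum(variance(col) for col in items)
    # Each respondent's total score across all items.
    totals = [sum(col[j] for col in items) for j in range(n)]
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


if __name__ == "__main__":
    # Two items answered by four hypothetical respondents.
    print(round(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]), 3))  # 0.75
```

Using sample variances for both the items and the totals keeps the ratio consistent; population variances would give the same α.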

4.2 Exploratory Factor Analysis of Algorithm Awareness

To measure the algorithm awareness of Douyin users, respondents rated 14 statements about the Douyin algorithm. We examined the structure of the scale through exploratory factor analysis and adjusted the scale accordingly. Before the factor analysis, the Kaiser-Meyer-Olkin (KMO) measure and Bartlett’s test of sphericity were computed: the KMO value was 0.837 and Bartlett’s test was significant (p < 0.001), indicating that the algorithm awareness scale was suitable for factor analysis. We used principal component analysis for extraction and varimax rotation. Four factors had eigenvalues greater than 1 and together explained 61.348% of the total variance (see Table 3).
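The extraction and rotation steps above follow a generic procedure: principal components of the item correlation matrix, retention of components with eigenvalues greater than 1 (the Kaiser criterion), and orthogonal varimax rotation. A minimal numpy sketch of that generic procedure is given below; it is not the authors’ software, it omits the KMO and Bartlett tests, and the function names are illustrative.

```python
import numpy as np


def kaiser_pca(data: np.ndarray):
    """PCA on the item correlation matrix; keep components with eigenvalue > 1."""
    corr = np.corrcoef(data, rowvar=False)           # items are columns
    eigvals, eigvecs = np.linalg.eigh(corr)          # ascending order for symmetric matrices
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvals > 1.0                             # Kaiser criterion
    loadings = eigvecs[:, keep] * np.sqrt(eigvals[keep])
    explained = eigvals[keep].sum() / eigvals.sum()  # cumulative share of total variance
    return loadings, explained


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation using the standard SVD-based iteration."""
    p, k = loadings.shape
    rotation = np.eye(k)
    objective = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        u, s, vt = np.linalg.svd(
            loadings.T @ (rotated ** 3 - rotated @ np.diag((rotated ** 2).sum(axis=0)) / p)
        )
        rotation = u @ vt                            # stays orthogonal
        new_objective = s.sum()
        if new_objective < objective * (1 + tol):    # stop when the criterion stops improving
            break
        objective = new_objective
    return loadings @ rotation
```

Because varimax is an orthogonal rotation, it redistributes variance among the retained factors but leaves each item’s communality and the cumulative explained variance (61.348% here) unchanged.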

The results of the factor analysis differed somewhat from the expected framework, and we divided the algorithm awareness scale into four factors (see Fig. 1): (1) awareness of the personalized content filtering characteristics of algorithms; (2) awareness of ethical and privacy issues posed by algorithms, including concerns about personal data leakage, awareness of limiting algorithm permissions, and awareness that algorithms may exacerbate inequality and bias; (3) having heard of and understanding algorithms, including having heard of the Douyin algorithm and believing one understands how to influence personalized information; and (4) awareness of the automated nature of the algorithm, that is, that the algorithm pushes content automatically without human intervention.

The proportion of variance explained by each common factor was used as its weight to compute a new variable, the algorithm awareness composite score (AA_Score). This composite score serves as the main measure of users’ algorithm awareness: the higher the score, the higher the level of algorithm awareness. AA_Score is thus a variance-weighted combination of the four factor scores.
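The formula for AA_Score did not survive extraction. Based only on the description above, it presumably has the following general form, where $F_k$ is a respondent’s score on rotated factor $k$ and $\lambda_k$ is the percentage of variance that factor explains. This is a reconstruction, not the authors’ printed equation: the individual $\lambda_k$ values are reported in Table 3 and are not reproduced here, and normalizing by the cumulative 61.348% is an assumption.

```latex
\mathrm{AA\_Score} = \sum_{k=1}^{4} w_k F_k,
\qquad
w_k = \frac{\lambda_k}{\sum_{j=1}^{4} \lambda_j},
\qquad
\sum_{j=1}^{4} \lambda_j = 61.348\%
```

If the unnormalized weights $\lambda_k$ were used instead, every score is simply multiplied by the constant 0.61348, so respondents’ rankings and all correlations with AA_Score are unchanged.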

4.3 Results Analysis

RQ1: What are the Factors Influencing Algorithm Awareness? The correlation analysis shows that the socio-demographic variables significantly related to users’ algorithm awareness are marital status, occupation, education level, and age, so H1 holds. H2 concerns users’ Internet access: whether users use devices other than mobile phones is significantly related to algorithm awareness. H5 concerns users’ interactive behaviors with algorithms; among the 14 interactive behaviors, only following Douyin accounts is related to algorithm awareness. We interpret this as follows: Douyin is a content product, and users shape their personalized content by following accounts of interest. “Follow” is a way for users to express their liking of videos or creators and their expectation of similar content, and this form of participation is strongly user-initiated.

To explore H1 further, we ran multiple regressions with the algorithm awareness composite score as the dependent variable and demographic variables as the independent variables (see Table 4). Educational attainment has been argued to be an important variable explaining variance in algorithm awareness [17]. After educational attainment was added in Model 2, income remained a significant predictor of algorithm awareness and education was also significant, and the increase in R² indicated that educational attainment accounted for additional variance in algorithm awareness. Users’ overall algorithm awareness thus differed considerably by education: users with high school or bachelor’s degrees had higher algorithm awareness than those with middle school education or less.
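The Model 1 / Model 2 comparison above is a hierarchical regression: education enters as a second block, and the change in R² measures the variance it explains beyond the first block. A minimal numpy sketch of that comparison is shown below, assuming ordinary least squares with an intercept; the variable names and the dummy coding of education in the usage note are hypothetical, since Table 4 is not reproduced here.

```python
import numpy as np


def ols_r2(X: np.ndarray, y: np.ndarray):
    """Fit OLS with an intercept; return (R^2, adjusted R^2)."""
    n, p = X.shape
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    ss_res = resid @ resid
    ss_tot = ((y - y.mean()) ** 2).sum()
    r2 = 1 - ss_res / ss_tot
    # Adjusted R^2 penalizes the number of predictors p.
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - p - 1)
    return r2, adj_r2


def r2_change(X_base: np.ndarray, X_added: np.ndarray, y: np.ndarray) -> float:
    """Increase in R^2 when the X_added block enters after X_base (hierarchical step)."""
    r2_base, _ = ols_r2(X_base, y)
    r2_full, _ = ols_r2(np.column_stack([X_base, X_added]), y)
    return r2_full - r2_base
```

For example, with a hypothetical demographic matrix `demo` (Model 1) and education dummies `edu` coded against a “middle school or less” reference group, `r2_change(demo, edu, aa_score)` gives the R² gain from Model 1 to Model 2. Plain R² can never decrease when predictors are added, which is why the adjusted R² returned by `ols_r2` is the fairer comparison.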

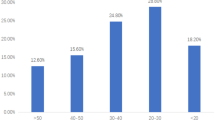

For digital literacy, the factor analysis showed that the digital literacy scale could be reduced to five factors (see Fig. 2). We then calculated an overall digital literacy score from the exploratory factor analysis results (see Table 5) to measure users’ overall level of digital literacy. The correlation analysis shows that digital literacy is significantly correlated with algorithm awareness: all digital literacy factors except online sales literacy are significantly correlated with algorithm awareness, so H3 holds.

The regression of the algorithm awareness composite score on the digital literacy factors (see Table 6) showed that adding the digital literacy factors increased the adjusted R², indicating that digital literacy explains additional variance in algorithm awareness. Specifically, smartphone application literacy (β = 0.330) and digital browsing learning literacy (β = 0.153) were significant positive predictors of the algorithm awareness composite score: the higher users’ literacy in these dimensions, the higher their algorithm awareness. Because most mobile applications use recommendation algorithms, these algorithms shape users’ everyday algorithmic environment, and users may implicitly sense and come to understand algorithms through their daily use of digital applications. Therefore, improving users’ digital literacy may help bridge the digital divide, positively influence algorithm awareness, and improve users’ knowledge of algorithms.
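The β values in this section are presumably standardized regression coefficients, which is what makes predictors measured on different scales comparable (for instance, smartphone application literacy at 0.330 versus digital browsing learning literacy at 0.153). A minimal numpy sketch, assuming that standardized OLS coefficients are what the tables report:

```python
import numpy as np


def standardized_betas(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Standardized regression coefficients: OLS on z-scored predictors and outcome."""
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    # No intercept column is needed because every variable has been centered.
    beta, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    return beta
```

Each coefficient is the expected change in the outcome, in standard deviations, for a one-standard-deviation increase in that predictor with the others held constant. Standardized coefficients do not depend on the units of the predictors, and with a single predictor the coefficient equals Pearson’s r.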

RQ2: Does Algorithm Awareness Have an Impact on Users’ Platform Experience? Answering this question first requires reducing the dimensionality of users’ Douyin usage experience scale. According to the factor analysis, the nine items of the scale can be reduced to two factors: factor 1, named video production and social interaction experience, and factor 2, named content and advertising experience.

Correlation tests showed that having heard of and understanding the Douyin algorithm and being aware of the algorithm’s automation features were related to users’ video production and social interaction experience, while being aware of the algorithm’s personalized content filtering features and of its ethical and privacy issues were significantly related to users’ content and advertising experience. The linear regression results (Table 7) showed that adjusted R² increased in Model 2 when the algorithm awareness factors were added, indicating that algorithm awareness is a significant factor in users’ experience of Douyin. Having heard of the algorithm (β = 0.1885) and being aware that the algorithm pushes content automatically (β = 0.137) were positively associated with video creation and social interaction experience, with the former having the stronger association; being aware that the algorithm personalizes recommended content (β = 0.351) and being aware of the algorithm’s ethical and privacy issues (β = 0.239) were positively associated with content and advertising experience, again with the former having the stronger association.

In our qualitative study, we found that algorithm awareness influenced users’ awareness of Douyin’s video posting aids and rules, which in turn shaped their experience of the video posting feature. When posting a video, Douyin users can add a topic in the form of “#+text”; adding a topic lets more users interested in the same topic see the video, bringing it more traffic. Users with low algorithm awareness may use hashtags without understanding the recommendation function they serve. One interviewee had added a hashtag when posting a video (see Fig. 3), but she said:

I don’t know what the ‘#’ means, let alone what it does. It seems like this is a quote? I saw it and clicked to add it.

RQ3: Does Algorithm Awareness Affect Users’ Willingness to Turn off Features Related to Personalized Recommendation Algorithms? Among respondents who use Douyin, half believe they know how to turn off the personalized content recommendation function. Overall, one-third of users chose to turn off the personalized content recommendation function, and two-thirds chose to turn off the personalized advertising recommendation function.

Based on the interviews, we suspect that some respondents did not actually know where the button to turn off algorithmic recommendations is located. One respondent who indicated in the questionnaire that he knew how to turn off the personalized content recommendation function stated:

The relevant buttons for these softwares are normally in the settings, I guess, but I haven’t gone through them.

Douyin currently does not place the button for turning off the personalized recommendation algorithm function in a conspicuous location: reaching it within the app takes at least four steps (see Fig. 4). Some respondents said that Douyin hides the button too deeply and that turning the function off is “too much trouble”.

RQ3 focused on the relationship between algorithm awareness and turning off algorithmic recommendations. The analysis showed that the overall algorithm awareness score was significantly correlated with whether users turned off personalized ad display. Whether users turned off the personalized content recommendation function was significantly correlated with Algorithm Awareness Factors 1 and 2, and whether they turned off the personalized ad recommendation function was significantly correlated with Algorithm Awareness Factor 2. Binary logistic regression results (see Table 8) further showed that the more aware users are of the algorithm’s content personalization, the more likely they are to keep the personalized content recommendation function on, and the more aware they are of the algorithm’s possible ethical and privacy issues, the more likely they are to turn it off.
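A binary logistic regression models the log-odds of an outcome, here whether a user turns off personalized content recommendation, as a linear function of the predictors; each coefficient b corresponds to an odds ratio exp(b). The sketch below is illustrative only: the predictor names and coefficient values are hypothetical, chosen merely to mirror the signs reported above (negative for awareness of content personalization, positive for awareness of ethical and privacy issues), and are not estimates from Table 8.

```python
import math


def turn_off_probability(intercept: float, coefs: dict[str, float],
                         x: dict[str, float]) -> float:
    """P(turn off = 1) = 1 / (1 + exp(-(b0 + sum_k b_k * x_k)))."""
    logit = intercept + sum(coefs[k] * x[k] for k in coefs)
    return 1.0 / (1.0 + math.exp(-logit))


def odds_ratios(coefs: dict[str, float]) -> dict[str, float]:
    """Multiplicative change in the odds of turning off per one-unit increase."""
    return {k: math.exp(b) for k, b in coefs.items()}


# Hypothetical coefficients: signs follow the text, magnitudes are invented.
COEFS = {"personalization_awareness": -0.5, "privacy_awareness": 0.6}
B0 = 0.0

baseline = {"personalization_awareness": 0.0, "privacy_awareness": 0.0}
more_privacy_aware = {"personalization_awareness": 0.0, "privacy_awareness": 1.0}

print(turn_off_probability(B0, COEFS, baseline))  # logit 0 gives probability 0.5
print(turn_off_probability(B0, COEFS, more_privacy_aware))
print(odds_ratios(COEFS))
```

An odds ratio above 1 (privacy awareness) raises the odds of turning the function off, while one below 1 (personalization awareness) lowers them, i.e. makes keeping the function more likely.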

The interviews also showed that users’ acceptance of personalized advertising is related to their willingness to shop online. In the digital economy, digitalization is key to connecting buyers and sellers. Douyin often induces users to click and buy by serving advertising videos selected by algorithms, and the combination of ads and personalized videos strongly affects user attention, engagement, and decision-making. Respondents who chose to turn off personalized ad displays often gave reasons such as “Prevent myself from shopping online because of ads” and “Lessen my desire to consume”.

5 Conclusion and Discussion

Algorithms have become embedded in almost every aspect of our daily lives, and technological development often cuts both ways. Amid heated debate over algorithm criticism and the policy push for algorithm governance, more empirical research on algorithm awareness from the users’ perspective is needed.

This study contributes to research on digital divides, algorithm literacy, and algorithm governance from the perspective of algorithm awareness. First, it supplements and extends digital divide theory by applying it to the digital environment of algorithmic recommendation, contributing to the empirical study of the social impact of algorithms. It focuses on the users of algorithms and selects the popular short-video platform Douyin to examine content recommendation algorithms. Second, it contributes a measure of algorithm awareness: the algorithm awareness scale we designed integrates key dimensions of content recommendation algorithms, including personalized filtering, automated features, and ethical and safety awareness. The scale shows good reliability and validity for measuring content recommendation algorithm awareness among Douyin users and provides a toolkit for studying the algorithm awareness of Chinese Internet users, especially short-video platform users. In the future, we aim to apply the scale in other empirical studies of recommendation algorithms, including but not limited to search engines, short-video platforms, and mobile news apps. Third, the study adds an important qualitative perspective on users’ experience of algorithms and their choices about algorithmic functions. We find that users’ algorithm awareness affects their platform experience and, together with other factors, shapes their decisions about recommendation algorithm functions, which opens a discussion of how platform algorithm governance policies are implemented.
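This section does not name the reliability statistic behind the scale, so the following is an assumption: internal consistency of multi-item scales is commonly reported as Cronbach’s α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), where k is the number of items, σᵢ² the variance of item i, and σ²_total the variance of respondents’ summed scores. A minimal standard-library sketch, using invented Likert responses rather than study data:

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items score matrix.

    responses[r][i] is respondent r's score on item i. Population variance is
    used for both items and totals, so the ratio matches the sample-variance form.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    items = list(zip(*responses))  # transpose to items x respondents
    item_var_sum = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - item_var_sum / total_var)


# Invented 5-point Likert answers: 5 respondents x 4 hypothetical awareness items.
toy = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 2],
    [1, 2, 1, 2],
]
print(round(cronbach_alpha(toy), 3))
```

In practice α would be computed per dimension of the scale (e.g. personalized filtering, automated features, ethical and safety awareness) as well as for the full instrument.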

5.1 The Mechanisms of User Variances in Algorithm Awareness

Existing literature has emphasized the importance of user awareness of algorithms. We identified several types of factors that explain variance in user awareness of algorithms. The first type is socio-demographic. We found that demographic variables such as age, marital status, education level, and occupation are significantly associated with algorithm awareness. Regression analysis showed that income and education were the main significant predictors of algorithm awareness, consistent with previous research on socioeconomic status and algorithmic knowledge [17] and illustrating the impact of income and education on the digital divide in the new technological environment: people with higher education and income have higher algorithm awareness. This gap may stem from the stratified, unequal distribution of resources associated with education and income; higher income and education give users greater advantages in accessing and processing relevant information and in exposure to algorithmic knowledge. The objective conditions and social experiences underlying this distinction are unconsciously internalized by users, producing differences in habits of thinking, feeling, and acting [30, 31] that are also reflected in their algorithm awareness.

Second, we found a significant relationship between the devices through which users access the Internet and their level of algorithm awareness. This echoes studies showing that mobile devices exacerbate digital inequality and that mobile Internet access cannot fully replace PC Internet access [18]. In today’s mobile-dominated Internet, those who receive poor-quality content on mobile algorithmic platforms and rely heavily on algorithmic push mechanisms face new challenges in online information seeking [18]. We found that this group is also disadvantaged in algorithm awareness; one way to help these groups improve their algorithm awareness is to provide digital literacy training and algorithm education across different devices. Our study also verified the correlation between digital literacy and algorithm awareness: the level of algorithm awareness was positively correlated with users’ Internet skills.
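The positive correlation between Internet skills and algorithm awareness reported above is the kind of result a Pearson correlation test produces. As a hedged illustration, the sketch below computes Pearson’s r and the associated t statistic, t = r·√((n − 2)/(1 − r²)), which is compared against a t distribution with n − 2 degrees of freedom. The skill and awareness scores are invented, and the study may have used a different correlation procedure.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two paired samples."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two paired samples of length >= 3")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, compared with a t distribution on n - 2 df."""
    if abs(r) >= 1:
        raise ValueError("t is undefined for |r| = 1")
    return r * math.sqrt((n - 2) / (1 - r ** 2))


# Invented scores for six hypothetical users (not study data).
skills = [2, 3, 3, 4, 5, 5]
awareness = [1.8, 2.5, 2.9, 3.1, 4.0, 4.4]
r = pearson_r(skills, awareness)
print(f"r = {r:.3f}, t = {t_statistic(r, len(skills)):.3f}")
```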

In summary, this study concludes that users’ algorithm awareness is influenced by both external and internal factors. Addressing the former requires platforms and education systems to do more to popularize algorithm knowledge, more media coverage and discussion of algorithms, and policies that inform users of their choices in an algorithmically shaped information environment. Meanwhile, as this research shows, user awareness of algorithms largely stems from education and resources shaped by socio-economic variables. Improving users’ algorithm awareness therefore also requires policymakers and technology companies to pay close attention to structural variance in how users perceive and understand technologies. Existing divides in the access, adoption, and usage of digital technology are still reflected in how users engage with algorithms.

Therefore, to raise the level of algorithm awareness and bridge the algorithm gap, we should consider two aspects. First, increase publicity and discussion of algorithms, making debates around algorithms a more prominent part of public discussion, and create a social environment that focuses on improving algorithm awareness among the public. Second, increase digital literacy training and algorithm education, emphasizing data thinking and risk perception when popularizing algorithm knowledge from the perspective of algorithm users [32], so as to improve people’s vigilance and critical thinking about algorithms [33].

In addition, we found that information diversity is an important element behind algorithm awareness. Diversity here refers not only to the diversity of platforms but also to the diversity of the information content users receive on a daily basis. As our findings suggest, an overly simplistic personalization algorithm can undermine the empowerment of information users by restricting the quality and quantity of information they receive from the platform. We therefore argue that, as content recommendation algorithms push personalized content to users, technology companies need to consider how best to inform users about a more diversified information diet and how to educate users about the technological functions available on digital platforms.

5.2 Strengthen the Transparency of Algorithms and Protect the Subjectivity of Users

The results of RQ2 suggest a significant relationship between user satisfaction and platform algorithm transparency. To a certain extent, algorithmic literacy reflects users’ awareness of the platform and their ability to use it: the higher users’ algorithm awareness, the better able they are to use the platform to meet their information needs. Our study found that users’ awareness of algorithmic personalized content filtering and of algorithmic ethical and privacy issues influenced their experience of using the platform. In addition, algorithm awareness directly affects users’ understanding and use of platform functions such as content production and publishing, which in turn affects their platform experience.

Improving users’ algorithm awareness is therefore a win-win for both users and the platform. Platforms that want more positive feedback from users should appropriately increase the transparency of their algorithms, explain their rules for sorting, pushing, and retrieval simply and clearly, and attend to algorithm transparency and interpretability to avoid adverse effects on users and prevent disputes and controversies. In the long run, algorithmic transparency is not only one way to improve users’ awareness of algorithms but also an unavoidable issue in algorithm regulation and algorithm optimization [34]. At the same time, algorithmic transparency should not stop at policy-mandated functions that remain hidden. We found that turning off the algorithm function in Douyin involves cumbersome steps, and users generally cannot easily find the button to do so. When the function that empowers users to turn off algorithmic recommendations is buried in a convoluted user interface, it violates the principle of transparent and open presentation and fails to give effect to algorithm users’ right to turn off algorithmic recommendations.

Algorithm platforms should ensure human intervention and users’ right to independent choice. Article 17 of the “Provisions” not only explicitly requires platforms to provide an option to turn off the recommendation algorithm service, but also provides that “the recommendation algorithm service provider should provide the user with the ability to select or delete the user tag for the recommendation algorithm service for their personal characteristics”. Given the varied purposes for which users use the platform, the diversity of their demands, and the differences in their algorithm awareness, we believe that, when implementing the regulations, platforms should also design a functional interface that lets users manage and delete their own tags. Algorithm platforms should further refine industry standards, ethical norms, and software practices for tag management in personalized recommendation algorithms, and actively seek a balance between user empowerment and user experience.
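The two user controls discussed above, an opt-out from personalized recommendation and the ability to view and delete profiling tags, can be illustrated with a toy data model. The sketch below is entirely hypothetical: the class, method, and video names are invented, ranking by tag overlap stands in for a real recommender, and it makes no claim about how Douyin implements these features.

```python
from dataclasses import dataclass, field


@dataclass
class Video:
    video_id: str
    tags: set[str]
    popularity: int


@dataclass
class UserProfile:
    """Hypothetical profile exposing an opt-out switch and user-editable tags."""
    tags: set[str] = field(default_factory=set)
    personalization_enabled: bool = True

    def list_tags(self) -> list[str]:
        return sorted(self.tags)

    def delete_tag(self, tag: str) -> None:
        self.tags.discard(tag)  # deleting an absent tag is a no-op

    def set_personalization(self, enabled: bool) -> None:
        self.personalization_enabled = enabled


def recommend(user: UserProfile, catalog: list[Video], k: int = 3) -> list[str]:
    """Rank by tag overlap, then popularity, when personalization is on;
    rank by popularity alone when the user has turned it off."""
    def score(video: Video) -> tuple[int, int]:
        overlap = len(video.tags & user.tags) if user.personalization_enabled else 0
        return (overlap, video.popularity)

    ranked = sorted(catalog, key=score, reverse=True)
    return [v.video_id for v in ranked[:k]]


catalog = [
    Video("farm_tips", {"agriculture"}, popularity=50),
    Video("live_shop", {"shopping"}, popularity=90),
    Video("local_news", {"news"}, popularity=70),
]
user = UserProfile(tags={"agriculture"})
print(recommend(user, catalog, k=2))  # personalized: the tag match ranks first
user.set_personalization(False)
print(recommend(user, catalog, k=2))  # opted out: popularity only
```

The design point the sketch makes is that both controls act on the same ranking path: deleting a tag removes it from scoring, and opting out bypasses the profile entirely, so neither control needs to be buried in a separate settings flow.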

Algorithm awareness research from the users’ perspective helps us identify which groups may be disadvantaged by the digital divide in a digital environment where algorithmic recommendations are pervasive, and understand whether the option to turn off algorithmic recommendations required by the policy is truly accessible to users and whether their rights and interests are truly protected. Much effort is still needed to raise the algorithm awareness of different social groups.

5.3 Limitations

This study has a number of limitations. In terms of data collection, the online questionnaire method yielded a sample with many undergraduates and people under 25, and incomplete data affected the analysis results to some extent. In terms of data analysis, more sophisticated statistical analyses could be applied to some of the results. In terms of interview design, the number of qualitative interview samples was limited. Follow-up research could conduct a return survey to track changes in users’ algorithm awareness.

References

1. Gorwa, R., Binns, R., Katzenbach, C.: Algorithmic content moderation: technical and political challenges in the automation of platform governance. Big Data Soc. 7(1), 2053951719897945 (2020)

2. CNNIC: Statistical report on Internet development in China 2022. China Internet Network Information Center (2022)

3. Hargittai, E.: Second-level digital divide: differences in people’s online skills. First Monday 7(4) (2002)

4. DiMaggio, P., et al.: Digital inequality: from unequal access to differentiated use. Soc. Inequal., 355–400 (2004)

5. Hargittai, E., et al.: Black box measures? How to study people’s algorithm skills. Inf. Commun. Soc. 23(5), 764–775 (2020)

6. Hargittai, E., Micheli, M.: Internet skills and why they matter. In: Society and the Internet: How Networks of Information and Communication Are Changing Our Lives, pp. 109–124. Oxford University Press, Oxford (2019)

7. Gran, A.-B., Booth, P., Bucher, T.: To be or not to be algorithm aware: a question of a new digital divide? Inf. Commun. Soc. 24(12), 1779–1796 (2021)

8. Yu, L.: How poor informationally are the information poor? J. Doc. 66(6), 906–933 (2010)

9. Yan, P., Schroeder, R.: Grassroots information divides in China: theorising everyday information practices in the Global South. Telemat. Inform. 63, 101665 (2021)

10. Yu, L., Zhou, W.: Information inequality in contemporary Chinese urban society: the results of a cluster analysis. J. Assoc. Inf. Sci. Technol. 67(9), 2246–2262 (2016)

11. Hargittai, E., Walejko, G.: The participation divide: content creation and sharing in the digital age. Inf. Commun. Soc. 11(2), 239–256 (2008)

12. Beer, D.: Power through the algorithm? Participatory web cultures and the technological unconscious. New Media Soc. 11(6), 985–1002 (2009)

13. Eslami, M., et al.: I always assumed that I wasn’t really that close to [her]: reasoning about invisible algorithms in news feeds. In: Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, pp. 153–162. Association for Computing Machinery (2015)

14. Huang, X.: A research on the attitudes of individuals towards recommendation algorithm: based on 1075 users of news apps applied recommendation algorithm in China. Editor. Friend 06, 63–68 (2019)

15. Zarouali, B., Boerman, S.C., de Vreese, C.H.: Is this recommended by an algorithm? The development and validation of the algorithmic media content awareness scale (AMCA-scale). Telemat. Inform. 62, 101607 (2021)

16. Tichenor, P.J., Donohue, G.A., Olien, C.N.: Mass media flow and differential growth in knowledge. Publ. Opin. Quart. 34(2), 159–170 (1970)

17. Cotter, K., Reisdorf, B.C.: Algorithmic knowledge gaps: a new dimension of (digital) inequality (2020)

18. Napoli, P.M., Obar, J.A.: The emerging mobile internet underclass: a critique of mobile internet access. Inf. Soc. 30(5), 323–334 (2014)

19. Eshet, Y.: Digital literacy: a conceptual framework for survival skills in the digital era. J. Educ. Multimedia Hypermedia 13(1), 93–106 (2004)

20. Zhu, H., Jiang, X.: A review of domestic digital literacy research. Libr. Work Study (08), 52–59 (2019)

21. Shin, D., Kee, K.F., Shin, E.Y.: Algorithm awareness: why user awareness is critical for personal privacy in the adoption of algorithmic platforms? Int. J. Inf. Manag. 65, 102494 (2022)

22. DeVito, M.A., Gergle, D., Birnholtz, J.: Algorithms ruin everything: #RIPTwitter, folk theories, and resistance to algorithmic change in social media. In: Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, Colorado, USA, pp. 3163–3174. Association for Computing Machinery (2017)

23. Bostandjiev, S., O’Donovan, J., Höllerer, T.: TasteWeights: a visual interactive hybrid recommender system. In: Proceedings of the Sixth ACM Conference on Recommender Systems (2012)

24. Herlocker, J.L., Konstan, J.A., Riedl, J.: Explaining collaborative filtering recommendations. In: Proceedings of the 2000 ACM Conference on Computer Supported Cooperative Work (2000)

25. Yang, G., She, J.: News visibility, user activeness and echo chamber effect on news algorithmic recommendation: a perspective of the interaction of algorithm and users. Journal. Res. (02), 102–118+123 (2020)

26. Peng, L.: Illusion, prisoner of algorithm, and transfer of rights: the new risks in the age of data and algorithm. J. Northwest Norm. Univ. (Soc. Sci.) 55(05), 20–29 (2018)

27. Mayer-Schönberger, V.: Delete: The Virtue of Forgetting in the Digital Age. Princeton University Press (2009)

28. Beer, D.: The social power of algorithms. Inf. Commun. Soc. 20(1), 1–13 (2017)

29. Van Deursen, A.J., Van Dijk, J.A.: The digital divide shifts to differences in usage. New Media Soc. 16(3), 507–526 (2014)

30. Bourdieu, P.: Distinction: A Social Critique of the Judgement of Taste. Routledge, London (2010)

31. Li, Q.: A brief analysis of Bourdieu’s field. Theory J. Yantai Univ. (Philos. Soc. Sci. Ed.) (02), 146–150 (2002)

32. Peng, L.: How to achieve “living with algorithms”: algorithmic literacy and its two main directions in the algorithmic society. Explor. Free Views (03), 13–15+2 (2021)

33. Peng, L.: The multiple factors that lead to the information cocoon and the path of “breaking the cocoon”. Press Circ. (01), 30–38+73 (2020)

34. Shen, W.: The myth of the principle of algorithmic transparency: a critique of the theory of algorithmic regulation. Global Law Rev. 41(06), 20–39 (2019)

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Wei, S., Yan, P. (2023). Measuring Users’ Awareness of Content Recommendation Algorithm: A Survey on Douyin Users in Rural China. In: Sserwanga, I., et al. Information for a Better World: Normality, Virtuality, Physicality, Inclusivity. iConference 2023. Lecture Notes in Computer Science, vol 13971. Springer, Cham. https://doi.org/10.1007/978-3-031-28035-1_15

DOI: https://doi.org/10.1007/978-3-031-28035-1_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-28034-4

Online ISBN: 978-3-031-28035-1

eBook Packages: Computer Science, Computer Science (R0)