Abstract

The idea of creating a direct link between the brain and a computer, once exotic and for many people inconceivable, is steadily becoming mainstream. Building on decades of academic research and fueled by successful demonstrations of brain-controlled devices, tech investors are now increasingly engaged in developing and patenting such technology. While early conceptions focused on assistive applications, e.g., to control a prosthesis or restore communication, new paradigms are being implemented to raise brain function to or above the norm. Moreover, so-called bidirectional brain/neural–computer interfaces could restore sensory capacities or suppress pathological brain activity in neuropsychiatric disorders. Merging this technology with artificial intelligence (AI) promises to broaden its applicability in various medical and non-medical settings. The rapid advancement of such AI-enhanced brain–computer interfaces (BCIs) beyond the medical field raises many concerns, including dual use, cybersecurity, and brain-hacking. This chapter provides an overview of the most innovative trends in current brain/neural–interface technology and elaborates on the associated technical and conceptual challenges ahead. While it is very difficult to anticipate which applications will evolve, we outline BCI technology’s most likely course of evolution and its implications for society.

Keywords

- Brain–computer interface

- Artificial intelligence

- Cybersecurity

- Adaptive neuromodulation

- Assistive technology

- Neurorestoration

- Hybridmind

1 Introduction

Mind-reading devices were conceptualized as early as the late nineteenth century and have since inspired numerous science fiction authors and filmmakers. After the invention of devices that turned the invisible, e.g., sound, into something measurable, it seemed plausible that thoughts, too, could be measured and made visible. Although the biological substrates of thought remained elusive, the effects of direct electric stimulation of the brain suggested that thoughts, memories, and emotions have an electric manifestation in the brain that could influence the electrical properties of the body. The first attempts to measure these influences with a galvanometer, e.g., for lie detection, seemed promising [1] but remained rather unspecific and inaccurate.

In the early twentieth century, Hans Berger, who by his own account was searching for the physical substrate of telepathy, invented electroencephalography (EEG), turning the brain’s invisible electric oscillations directly into curves and patterns traced on long strips of paper [2]. With such an apparatus, researchers found that brain responses evoked by external stimuli, e.g., a flash, tone, or touch, could allow inferences about mental states or cognitive processes. The finding that modulations of EEG activity, e.g., alpha oscillations (9–15 Hz), were state- or task-dependent and apparently confined to circumscribed brain regions (e.g., occipital alpha oscillations related to visual function or central alpha oscillations related to sensory and motor function) raised the expectation that it would be possible to decipher the mental processes underlying perception and action. After the initial enthusiasm, however, it turned out that mapping these EEG patterns to specific thoughts was exceptionally difficult. Nonetheless, and despite its variability and non-stationarity, EEG provided useful information about a person’s level of alertness and proved very helpful in characterizing brain disorders such as epilepsy [3].

With the advent of computers in the 1950s, it was hoped that the automatic interpretation of electric brain signals would lead to new insights into the workings of the brain. Thus, in 1961, the National Institutes of Health (NIH) funded the first computer facility for this purpose at the Brain Research Institute of the University of California, Los Angeles (UCLA) [4]. There, Thelma Estrin, a pioneer in biomedical engineering, established the first analog-to-digital converter to realize an online digital EEG computing system. Earlier, Grey Walter had developed the first automatic EEG frequency analyzer [5], which was later used to provide online feedback of brain activity. In this context, it was soon discovered that operant conditioning, i.e., increasing or decreasing the probability of a specific behavior depending on the presence or absence of a contingent reward or punishment, also applies to the behavior of brain signals [6]. Such operant conditioning of neural events (OCNE) soon attracted broad interest. It was demonstrated not only for widespread synchronized activity of neuronal cell ensembles in the sensorimotor cortex of cats [7] but also for single cells in the motor cortex of macaques [8].

Building on these developments, the first brain–computer interface (BCI) project aimed at direct brain–computer communication using EEG signals was started at UCLA in the early 1970s. This project was born of the conviction that EEG does not consist only of random noise but contains “concomitances of conscious and unconscious experiences” resulting in a complex mixture of neural events [9]. Building on well-characterized evoked responses, e.g., those in the visual domain, and on other phenomena such as OCNE or the contingent negative variation (CNV), the proposed BCI system was geared toward using both spontaneous EEG and specific evoked responses to establish a direct “brain-computer dialogue.”

Today, 50 years later, we may still not have achieved precise and accurate mind-reading as envisioned in the nineteenth century, but the feasibility, utility, and limits of BCI-enabled human–computer interaction have been increasingly well charted. Besides active or directed control of external devices [10], additional, largely unanticipated forms of BCI applications were established, e.g., BCIs designed to trigger neural recovery (also termed restorative BCIs) [11] or BCIs that convey information about the user’s state to improve the ergonomics of human–computer interaction (also termed passive BCIs) [12, 13].

Increasing digitalization of processes and interactions, ubiquitous computing, and wireless connectivity have paved the way for so-called immersive technologies [14] that are increasingly blurring the boundaries between the human mind and body on the one hand and digital tools on the other [15]. The ambitions and collective efforts to merge the human mind and machines have never been greater. But how could state-of-the-art BCIs contribute to this endeavor? Where are the limits on this path? And where should these limits be?

In this chapter, we aim to provide an overview of the most well-established BCI concepts and introduce current state-of-the-art medical and non-medical use cases that involve BCI technology. We then describe current efforts to extend the scope of BCI applications and map out the prospects of merging BCIs with artificial intelligence (AI) and other emerging technologies, such as quantum sensors. At the end of this chapter, we briefly outline the possible impact of future BCI technology on society and human self-conception.

2 Modes of Operation and Applications of Brain/Neural–Computer Interfaces

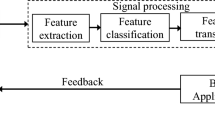

The original ambition of the first BCI project at UCLA was to establish electrical brain signals as carriers of information in human–computer interaction or for controlling external devices, such as prosthetics or even spaceships [9]. Implementing such a BCI requires three distinct components: (1) a brain signal recording unit that translates analog electric, magnetic, or metabolic measures into digital data streams, (2) a real-time signal processing unit that interprets the incoming data, and (3) an actuator, output generator, or any other device that uses this information in a purposeful way [16].
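As a minimal illustration of how the three components interact, the following Python sketch chains a simulated recording unit, a deliberately simple processing step, and a placeholder actuator. Everything in it is an assumption for illustration: the 250 Hz sampling rate, the synthetic 10 Hz signal, the power threshold, and all function and class names are hypothetical and do not describe any particular system.

```python
import numpy as np

FS = 250  # assumed sampling rate (Hz); not specified in the text


def record_chunk(rng, mu_amplitude, n_samples=FS):
    """(1) Recording unit (simulated): one second of a single EEG-like channel.

    A 10 Hz sine of the given amplitude stands in for the sensorimotor
    rhythm; Gaussian noise stands in for everything else.
    """
    t = np.arange(n_samples) / FS
    return mu_amplitude * np.sin(2 * np.pi * 10 * t) + 0.5 * rng.standard_normal(n_samples)


def process(chunk, power_threshold=0.5):
    """(2) Processing unit: turn a chunk of samples into a discrete command.

    Low rhythm power (desynchronization) is read as "move", high power as "rest".
    """
    power = np.mean(chunk ** 2)
    return "move" if power < power_threshold else "rest"


class Actuator:
    """(3) Actuator: a placeholder for a prosthesis, speller, or other device."""

    def __init__(self):
        self.executed = []

    def execute(self, command):
        self.executed.append(command)


def run_bci(mu_amplitudes, seed=0):
    """Pass one simulated chunk per amplitude through all three components."""
    rng = np.random.default_rng(seed)
    actuator = Actuator()
    for amplitude in mu_amplitudes:
        chunk = record_chunk(rng, amplitude)
        actuator.execute(process(chunk))
    return actuator.executed


print(run_bci([1.0, 0.2]))  # ['rest', 'move']
```

Keeping the three stages as separate functions mirrors the decomposition in the text: any one of them (e.g., a real amplifier driver instead of `record_chunk`) could be swapped without touching the others.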

While the first conceptions of BCIs were mainly designed to convey information (specifically control commands) from the brain to the computer, e.g., to restore movement or communication in paralysis [17], subsequent concepts extended from unidirectional toward bidirectional BCIs, i.e., systems that not only read out brain activity but also write into it, e.g., via stimulation of the central nervous system (CNS) [18, 19]. Here, the stimulator acts as a BCI output, creating a direct feedback loop between brain states and brain stimulation that does not involve or depend on the user’s sensory system.

However, since it is not entirely clear how information is represented and stored in the brain, the ability to transfer information into the brain using brain stimulation is still very limited. Thus, the notion of “read and write” may be misleading in the context of current bidirectional BCIs, to the extent that it implies an analogy between the brain and a hard disk or magnetic tape. In contrast to conventional computers that use a two-symbol system to represent information (i.e., zeros and ones), the symbol system underlying information processing and its physiological representation in the brain is not well understood [20, 21]. As long as this relationship remains an enigma, information transfer from the computer to the brain will be very limited.

Before outlining more specific BCI applications, the following section introduces the established modes of operation and categories of BCIs that influence the range and scope of applications across various possible use cases.

From the user’s perspective, three modes of operation can be distinguished: active, reactive, and passive. Active BCIs are designed for the voluntary control of external devices and typically translate brain activity linked to goal-directed behavior into control commands for tools that assist in achieving the intended goal [22, 23]. Reactive BCIs, in contrast, infer their output from the brain’s reaction to external sensory or direct stimulation [24,25,26]. Owing to the relatively high signal-to-noise ratio of evoked brain responses, such paradigms have achieved the highest information transfer rates (ITRs) among noninvasive BCIs, e.g., in speller tasks [27]. Finally, passive BCIs derive their output from automatic or spontaneous brain activity that serves as implicit input to support an ongoing task [12]. Depending on the level of interactivity, four categories of passive BCIs can be distinguished: (1) mental state assessment to replace or support other data (e.g., from questionnaires or behavioral observations) without direct feedback to the user [28], (2) online state assessment (e.g., of fatigue or mental workload) with feedback to the user (e.g., a warning light), (3) state assessment aimed at directly influencing the assessed state in a closed control loop (e.g., reducing the workload of an air traffic controller by limiting irrelevant sensory input in critical situations) [29, 30], and (4) automated adaptation, where the BCI system continuously learns and adapts to the user state [31]. In the last category, the system builds a model of aspects of the user’s affective or cognitive responses that serves as the basis for its autonomous behavior. In the case of the air traffic controller, for example, the system would have learned which situations are most likely to provoke mental overload and would automatically call for assistance ahead of time.
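Information transfer rate is commonly computed with the formula popularized by Wolpaw and colleagues: for N equally likely targets selected with accuracy P, each selection conveys B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)) bits, which is then scaled by the number of selections per minute. The sketch below implements that formula; its assumptions (equiprobable targets, errors spread evenly over the other targets) rarely hold exactly in practice, and the speller numbers in the usage line are hypothetical rather than taken from [27].

```python
import math


def wolpaw_bits_per_selection(n_classes, accuracy):
    """Bits conveyed by one selection, following Wolpaw's ITR definition.

    Assumes equiprobable targets and errors spread evenly over the
    remaining n_classes - 1 targets.
    """
    if n_classes < 2:
        raise ValueError("need at least two classes")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    n, p = n_classes, accuracy
    if p <= 1.0 / n:
        return 0.0  # common convention: no information at or below chance
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits


def itr_bits_per_minute(n_classes, accuracy, seconds_per_selection):
    """Scale bits per selection to bits per minute."""
    return wolpaw_bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection


# Hypothetical 6 x 6 speller: 36 symbols, 90 % accuracy, one selection every 5 s
print(round(itr_bits_per_minute(36, 0.9, 5.0), 1))  # 50.3
```

The formula makes the trade-off explicit: adding targets raises log2 N, but only pays off if accuracy does not drop too much, which is one reason why high-SNR evoked responses favor reactive paradigms.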

Besides these modes of operation, BCI systems are generally categorized according to the invasiveness or location of the brain signal recordings and according to their purpose. When brain signals are recorded from inside the skull, a system is referred to as an invasive or implantable BCI. Here, a variety of signals have been established for BCI applications, ranging from synaptic or local field potentials (LFP) recorded with electrocorticography (ECoG) [32] to action potential spike trains [33]. Noninvasive BCIs typically use brain signals recorded from the surface of, or at some distance from, the scalp. So far, mainly six types of brain signals have been used in noninvasive BCI applications. Four were established primarily for active BCIs: (1) slow cortical potentials (SCP) [34], (2) sensorimotor or mu rhythms (9–15 Hz) [35, 36], (3) blood-oxygen-level-dependent (BOLD) signals in functional magnetic resonance imaging (fMRI) [37, 38], and (4) concentration changes of oxy- and deoxyhemoglobin measured with near-infrared spectroscopy (NIRS) [39, 40]. Two other types were established primarily for reactive BCIs: (5) event-related potentials (ERPs) and (6) steady-state visual or auditory evoked potentials (SSVEP/SSAEP) [41, 42]. SCPs, i.e., slow potential drifts lasting from 500 ms to several seconds, were among the first signals used for noninvasive BCI control [43]. When related to motor activity, SCPs are termed motor-related cortical potentials (MRCP) and can be further subdivided into the self-initiated Bereitschaftspotential (BP) [44], also termed the readiness potential (RP), and the externally triggered contingent negative variation (CNV) [45]. Since the BP builds up as early as 2 s before a voluntary movement, it was mainly explored for controlling motor prosthetics or exoskeletons [46, 47]. BP-based BCIs were also used beyond such medical applications, e.g., as a research tool [48] or to detect emergency braking intention [49].
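Sensorimotor (mu) rhythms are typically exploited through their event-related desynchronization (ERD): band power drops during motor execution or imagery relative to a rest period. A common way to express this is the relative power change ERD% = (A − R) / R × 100, where R is band power in a reference interval and A in the activity interval. The sketch below computes it from a Hann-windowed periodogram using NumPy; the 9–15 Hz band follows the text, whereas the synthetic signals, sampling rate, and function names are illustrative assumptions.

```python
import numpy as np


def band_power(x, fs, band):
    """Mean Hann-windowed periodogram power of x within band = (low, high) Hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    spectrum = np.abs(np.fft.rfft(x * np.hanning(len(x)))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return spectrum[in_band].mean()


def erd_percent(reference, activity, fs, band=(9.0, 15.0)):
    """Relative band-power change from a reference (rest) to an activity period.

    Negative values indicate desynchronization (ERD), positive values
    synchronization (ERS).
    """
    r = band_power(reference, fs, band)
    a = band_power(activity, fs, band)
    return (a - r) / r * 100.0


# Synthetic example: the 11 Hz rhythm halves in amplitude during (imagined) movement
fs = 250
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(1)
rest = np.sin(2 * np.pi * 11 * t) + 0.1 * rng.standard_normal(t.size)
move = 0.5 * np.sin(2 * np.pi * 11 * t) + 0.1 * rng.standard_normal(t.size)
print(erd_percent(rest, move, fs))  # roughly -75, since power scales with amplitude squared
```

Real pipelines usually average such estimates over trials and channels over sensorimotor cortex; a single short segment, as here, only illustrates the computation.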

To increase classification accuracy, BCI control paradigms were designed that use pre-defined time windows during which the presence or absence of a particular signal feature is evaluated. Reactive BCIs use this approach by design because the external stimulus determines the time window in which brain responses are evaluated. Active BCIs can be operated either with such pre-defined time windows, which is called a synchronous mode of operation, or in a self-paced or self-initiated manner, which is called an asynchronous mode of operation [50]. The synchronous mode severely limits the applicability and practicality of active BCI control in daily life because users must wait for the pre-defined time windows before active control is possible. Moreover, since time windows are indicated by external stimuli or triggers, such a paradigm requires an intact (afferent) sensory domain as well as the user’s attention (like reactive BCIs). Thus, this type of BCI has mainly been established in the auditory [51] or visual [52] domain, since these two sensory domains are typically less affected in many neurological disorders (e.g., stroke, spinal cord injury, or motor neuron diseases). In contrast, the asynchronous mode allows self-initiated or self-paced BCI control, providing more autonomy, flexibility, and intuitiveness to the user. However, classification accuracies of self-paced BCIs are typically lower than those of BCIs operating in a synchronous mode.

To increase control accuracy, BCI paradigms were established that combine different types of brain signals, e.g., MRCP or ERP with mu rhythms [53, 54]. Such hybrid BCIs may also include error-related potentials (ErrPs), i.e., stimulus-triggered negative and positive EEG deflections related to error processing, reward prediction, and conscious error perception [55]. These signals can be used to correct false classifications or to improve classification algorithms. In addition, other biosignals, e.g., related to eye movements or peripheral muscle activity, were incorporated to improve the applicability of brain-controlled devices in real-life scenarios. When brain-controlled systems also infer mental states, including intentions, from such peripheral signals, they are typically referred to as brain/neural–computer or –machine interfaces [10, 56].
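One simple way ErrPs could feed back into a decoder is sketched below, assuming a two-class problem and a reliable ErrP detector. If an ErrP follows a decision, the other class is taken as the intended one. That corrected label can be used to undo the command, and the corresponding class mean of a nearest-class-mean classifier is nudged toward the current sample. The classifier type, learning rate, and names are assumptions for illustration; in practice ErrP detection is itself error-prone.

```python
import numpy as np


class ErrPAdaptiveClassifier:
    """Two-class nearest-class-mean classifier refined online with ErrP feedback."""

    def __init__(self, mean_class0, mean_class1, learning_rate=0.1):
        self.means = [np.asarray(mean_class0, dtype=float),
                      np.asarray(mean_class1, dtype=float)]
        self.learning_rate = learning_rate

    def predict(self, features):
        features = np.asarray(features, dtype=float)
        distances = [np.linalg.norm(features - m) for m in self.means]
        return int(np.argmin(distances))

    def update(self, features, predicted, errp_detected):
        """Infer the true label from the ErrP and move that class mean toward the sample.

        With only two classes, an ErrP after a decision implies that the other
        class was intended, so the returned label can also be used to undo
        the erroneous command.
        """
        features = np.asarray(features, dtype=float)
        label = 1 - predicted if errp_detected else predicted
        self.means[label] += self.learning_rate * (features - self.means[label])
        return label


clf = ErrPAdaptiveClassifier([0.0, 0.0], [1.0, 1.0])
sample = [0.4, 0.4]
prediction = clf.predict(sample)                                # 0 (closer to class 0)
corrected = clf.update(sample, prediction, errp_detected=True)  # 1
```

Only the mean of the (inferred) true class moves, so repeated ErrP-flagged errors gradually shift the decision boundary toward the user's actual signal distribution.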

Given that the physiological representation of the symbol system underlying information processing in the brain is largely unknown, how can the classification accuracies of BCIs be further improved?

A very important strategy to increase the classification accuracies of active BCIs is the operant conditioning of neural events (OCNE) introduced above [57]. These neural events are typically measures of electrical, magnetic, or metabolic brain activity and their derivatives.

The behavior of single cells or larger neuronal cell ensembles (e.g., increased firing rates or desynchronization/synchronization of sensorimotor EEG activity) is contingently rewarded (e.g., by delivering a banana-flavored pellet in the case of a monkey, or a monetary incentive in the case of humans) to increase the likelihood of its recurrence. The intactness of the cortical–basal ganglia–thalamic feedback loop was found to play an important role in OCNE [58], which might explain why people with brain lesions or neurodegenerative disorders struggle to acquire BCI control. Still, depending on the brain signal and its signal-to-noise ratio, BCI learning based on operant conditioning can require days to weeks of training in healthy volunteers, or even months in patients. Another strategy to increase BCI control accuracy is feedback learning. Although it does not rely on external reward, feedback learning depends on the user’s intrinsic motivation and on internal reward mechanisms ascribed to intact cortico-striatal circuitry, including the ventromedial prefrontal cortex (VMPFC) [59]. With this approach, more complex measures of brain activity, e.g., cortico-thalamic BOLD connectivity, could be trained [38]. With the advent of novel machine learning approaches, including convolutional neural networks (CNNs), new and more complex derivatives of brain activity have been proposed for BCI applications [60, 61]. The main challenges with these more complex derivatives are to ensure that meaningful brain activity, and not (systematic) signal artifacts, is decoded, and that the underlying algorithms are compatible with real-time operation.
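The contingency at the heart of OCNE can be expressed in a few lines: a reward is delivered whenever a neural feature crosses a criterion. In the hypothetical sketch below, the feature is, for example, sensorimotor band power, and the criterion is adapted trial by trial (a weighted up-down rule), tightening after each reward and relaxing after each miss. Because the expected threshold change is zero when P(reward) · (1 − target) = (1 − P(reward)) · target, the reward rate settles near the chosen target. This shaping rule and its parameters are illustrative assumptions, not a protocol from the cited studies.

```python
import numpy as np


def neurofeedback_session(features, target_reward_rate=0.6, step=0.05, threshold=1.0):
    """Deliver a contingent reward whenever the feature falls below threshold.

    The feature could be, e.g., sensorimotor band power, so that a reward
    follows desynchronization. After each trial the threshold is nudged:
    stricter after a reward, more lenient after a miss. The asymmetric step
    sizes make the reward rate settle near target_reward_rate.
    Returns the per-trial rewards and the final threshold.
    """
    rewards = []
    for value in features:
        rewarded = value < threshold
        rewards.append(rewarded)
        if rewarded:
            threshold -= step * (1.0 - target_reward_rate)
        else:
            threshold += step * target_reward_rate
    return rewards, threshold


# Simulated session: band-power values drawn uniformly between 0 and 2 (arbitrary units)
rng = np.random.default_rng(0)
rewards, final_threshold = neurofeedback_session(rng.uniform(0.0, 2.0, 5000))
print(np.mean(rewards), final_threshold)  # reward rate close to 0.6
```

Keeping the reward rate roughly constant is one way to avoid both frustration (too few rewards) and a loss of contingency (rewards regardless of brain activity) as the user's signal changes with training.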

In contrast to active BCIs, reactive BCIs based on evoked responses do not require any learning. This increases their applicability, e.g., in BCI naïve users, but comes with other disadvantages mentioned before (e.g., dependence on the sensory system and user attention, or possible distraction from and interference with other tasks).

An aspect of OCNE and feedback learning that was rather neglected in the early history of BCIs is the impact of BCI learning on physiological brain processes and, thus, on brain function. After automatic EEG frequency analyzers became available in the early 1960s, M. Barry Sterman discovered that operant conditioning of the sensorimotor rhythm (SMR) can reduce seizure frequency in cats exposed to seizure-inducing agents and in persons diagnosed with epilepsy [7]. Later, it was found that such conditioning of brain activity, commonly termed neurofeedback, can also improve symptoms of attention-deficit/hyperactivity disorder (ADHD) in children [62]. These first successes raised great hopes that EEG neurofeedback could improve symptoms of a multitude of brain disorders. While some positive effects, e.g., in ADHD, could be replicated in larger controlled and double-blinded studies [63, 64], others could not be confirmed. Although it became widely accepted that OCNE can influence brain function and behavior, the underlying mechanisms remained incompletely understood, and the specificity and effect size of neurofeedback seemed to depend on a variety of factors [65]. Thus, the initial enthusiasm gradually abated over the ensuing decades but was later revived in the context of BCI research.

While the first BCI paradigms used OCNE to improve BCI control, e.g., to operate a prosthesis, it was found that repeated use of such BCIs can also have restorative effects on the brain. For instance, repeated use of a BCI-controlled exoskeleton was shown to trigger motor recovery, even in chronic paralysis after stroke [35, 66] or cervical spinal cord injury [67]. Thus, assistive and restorative BCIs came to be distinguished based on the purpose of the application [11, 68]. In this sense, restorative BCIs build on the long research tradition of neurofeedback and, with the technological advances of the BCI field, may now elucidate some of the unknown mechanisms underlying neurofeedback. Although the first conceptions of BCIs mainly focused on external effects (i.e., a BCI conceptualized as a tool to act on the environment), it is becoming increasingly clear that any form of BCI interaction also affects the brain itself, to a larger (restorative BCIs) or lesser (reactive or passive BCIs) extent. This aspect is even more apparent in bidirectional BCIs that involve direct stimulation of the CNS.

Building on the described modes of operation, various medical and non-medical BCI applications have been realized so far. These include versatile control of multi-joint prosthetics, robotic arms, or functional electrical stimulation (FES) that assist in grasping and manipulating objects of daily living [69, 70]. By using a bidirectional interface to restore sensory capacity during prosthesis control, manipulation could be substantially improved [71]. Here, a simple linear relationship between the readings of external force sensors and the applied stimulation intensities could induce the feeling of touch. A combination of noninvasive EEG and electrooculography (EOG) signals enabled patients with complete hand paralysis after high spinal cord injury to eat and drink independently in a restaurant [10]. In this study, hand closing was initiated by EEG modulations, and hand opening was controlled by EOG. Such a paradigm was also successfully implemented for whole-arm exoskeleton control [72]. In the context of robot or exoskeleton control, error-related potentials (ErrPs) were also used to optimize brain control of robotic devices [73].
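The division of labor in the EEG/EOG paradigm can be sketched as a two-state controller: a sufficiently strong sensorimotor desynchronization closes the hand, and a large horizontal eye movement reopens it. The thresholds (−30 % ERD, 200 µV EOG), the per-step inputs, and the function name below are hypothetical placeholders; this is not the controller used in [10].

```python
def hybrid_grasp_controller(erd_percent, eog_uv, erd_threshold=-30.0, eog_threshold=200.0):
    """Return the hand-orthosis state ("open" or "closed") after each time step.

    erd_percent: per-step sensorimotor ERD values (negative = desynchronization)
    eog_uv: per-step horizontal EOG amplitude in microvolts
    """
    state, states = "open", []
    for erd, eog in zip(erd_percent, eog_uv):
        if state == "open" and erd < erd_threshold:
            state = "closed"  # EEG modulation initiates hand closing
        elif state == "closed" and abs(eog) > eog_threshold:
            state = "open"    # a deliberate eye movement opens the hand
        states.append(state)
    return states


print(hybrid_grasp_controller([0, -40, 0, 0, 0], [0, 0, 0, 250, 0]))
# ['open', 'closed', 'closed', 'open', 'open']
```

Assigning each transition to a different signal means that an eye movement while the hand is open, or a desynchronization while it is closed, has no effect, which limits the damage a single misclassified signal can do.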

Another important medical application aims at restoring communication. Early demonstrations of SCP-based systems allowed patients diagnosed with locked-in syndrome (LIS), i.e., the inability to speak or move, to spell full sentences by selecting letters on a display [34]. Other modalities, including ECoG and functional NIRS, were applied with variable success [74, 75]. The main limitation of these approaches was that BCI-enabled communication failed once patients entered a complete locked-in state (CLIS). The reason for this is still not well understood, but fragmentation of sleep patterns [76] and progressive neurodegenerative processes affecting cell metabolism may play a role. Since, by definition, LIS patients can still communicate with eye movements or subtle muscle twitches, the need to develop BCIs for this clinical population was controversial [77]. Nonetheless, because they provide an alternative communication channel, BCIs were positively received and regularly used by patients with LIS [78]. Only recently was successful communication in CLIS reported, using two implanted microelectrode arrays. By modulating neural firing rates based on auditory feedback, a 35-year-old patient diagnosed with amyotrophic lateral sclerosis (ALS), an often rapidly progressing neurodegenerative disorder, could sustain communication despite CLIS [79]. Interestingly, the communication rates the patient achieved with the implanted device were comparable to those of noninvasive BCIs used by healthy volunteers. Communication often dealt with requests related to body position, health status, food, personal care, and social activities. Since all previous attempts to restore communication in CLIS using noninvasive BCIs had failed, this first successful use case may increase the willingness of ALS patients to undergo implantation. However, the implantation is costly and not yet broadly available. Furthermore, using the system still requires a team of experts to maintain its functionality, and it is unclear whether health insurance will cover the associated costs. Nevertheless, the prospect of overcoming the profound communication impairment of CLIS marks an important milestone in the history of BCIs and provides important reasons to use this technology in the medical field.

Beyond these examples, the use of active BCIs in non-medical applications is still confined to research environments. While it has been shown that active BCIs can also be used to steer an airplane or drone [80, 81] or to play video games [82], acquiring the necessary BCI control is cumbersome and time-consuming. Although BCI learning itself could be part of a game, this would require BCI hardware to be inexpensive and accessible and BCI control to be sufficiently reliable. Despite extensive investment in technology development, this has not been achieved to date [83]. It can be anticipated, though, that inexpensive and accessible hardware will become available, facilitating the implementation of BCI technology in video games and other forms of entertainment. Reactive and passive BCIs in particular may find future applications beyond entertainment. For instance, reactive BCIs, e.g., based on SSVEP, are currently being explored in autonomous driving [84, 85]. Passive BCIs have mainly been tested in the context of the ergonomics of human–computer or human–machine interaction, but they may incorporate other bio- or neuroadaptive approaches that take peripheral measures such as muscle tone, dynamic posture, pupillometry, or skin conductance into account [86].
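SSVEP-based reactive BCIs exploit the fact that a stimulus flickering at a given frequency evokes EEG power at that frequency and its harmonics. A minimal detector, sketched below with NumPy, compares spectral power at each candidate frequency plus its harmonics and picks the largest. This is a simplified stand-in: canonical correlation analysis (CCA) over multiple channels is widely used instead, and the frequencies, sampling rate, and names here are illustrative assumptions.

```python
import numpy as np


def detect_ssvep(x, fs, stim_freqs, n_harmonics=2):
    """Return the stimulation frequency whose fundamental and harmonics carry
    the most spectral power in the single-channel segment x."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    scores = []
    for f in stim_freqs:
        # Sum power at the FFT bins nearest to f, 2f, ..., n_harmonics * f
        bins = [np.argmin(np.abs(freqs - h * f)) for h in range(1, n_harmonics + 1)]
        scores.append(power[bins].sum())
    return stim_freqs[int(np.argmax(scores))]


fs = 250
t = np.arange(2 * fs) / fs  # 2 s segment, 0.5 Hz frequency resolution
rng = np.random.default_rng(0)
eeg = np.sin(2 * np.pi * 12 * t) + 0.5 * np.sin(2 * np.pi * 24 * t) + rng.standard_normal(t.size)
print(detect_ssvep(eeg, fs, [8.0, 10.0, 12.0, 15.0]))  # 12.0
```

Because no user training is involved, only a choice of flicker frequencies, this kind of detector illustrates why reactive BCIs can be used by BCI-naïve users.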

While state assessment with feedback in passive BCIs resembles classical neurofeedback paradigms, the aim of such systems is mainly to improve human–computer interaction and not to normalize or alter brain states [12]. However, it can be argued that passive BCIs may also exert such an effect when used over longer periods of time. More systematic studies are needed to address this question.

3 Next-Generation Brain/Neural–Computer and Machine Interfaces

Current BCI technology mainly covers the motor domain (e.g., to re-establish movement and communication) and seeks to improve human–computer or human–machine interaction by integrating measures related to error processing, attention, cognitive workload, or fatigue. Next-generation brain/neural–computer interfaces (B/NCIs) will extend toward other domains, e.g., emotion regulation, memory formation, cognitive control, and perception. These brain functions are often affected across various brain disorders, such as depression, ADHD, obsessive-compulsive disorder, anxiety disorders, addiction, or dementia [87]. With the development and clinical application of these next-generation interfaces, it is hoped that the burden of brain disorders can be alleviated and that the relationship between brain functions and clinical symptoms can be better understood.

To establish such BCI systems, the so-called brain–behavior relationship, i.e., the link between specific individual neurophysiological measures and domain-specific brain functions, behaviors, or symptoms, must be revealed [88]. Despite tremendous progress in neuroscience over the last decades, this has not yet been achieved. Although various correlations between certain physiological brain measures and brain functions have been found, their causal relationships have remained largely unclear. Moreover, attempts to reveal these causal relationships often involved averaging physiological brain measures over tens to hundreds of trials (i.e., repetitions of experimental tests) to reduce variability and noise. Such averaging may, however, also remove information relevant for precisely mapping these measures to brain functions or behavioral outcome measures.
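The cost of averaging can be made concrete with a toy simulation. Below, each simulated trial contains the same response shape with an amplitude that varies from trial to trial and is, by assumption, linked to reaction time. Averaging suppresses the noise roughly by the square root of the number of trials, but the average is a single waveform and cannot express the amplitude–behavior relationship, which single-trial estimates still recover. All quantities are synthetic and hypothetical.

```python
import numpy as np


def averaging_demo(n_trials=200, n_samples=100, noise_sd=0.5, seed=0):
    """Simulate evoked responses whose amplitude varies with a behavioral measure.

    Returns (residual noise in the trial average, correlation between
    single-trial amplitude estimates and reaction time).
    """
    rng = np.random.default_rng(seed)
    template = np.hanning(n_samples)              # idealized response shape
    amplitude = rng.uniform(0.5, 1.5, n_trials)   # trial-to-trial variability
    # Hypothetical brain-behavior link: larger responses, faster reactions
    reaction_time = 0.6 - 0.2 * amplitude + 0.02 * rng.standard_normal(n_trials)
    trials = amplitude[:, None] * template + noise_sd * rng.standard_normal((n_trials, n_samples))

    average = trials.mean(axis=0)
    # Noise in the average shrinks roughly as noise_sd / sqrt(n_trials) ...
    residual_noise = np.std(average - amplitude.mean() * template)
    # ... but the average holds one amplitude, not n_trials of them.
    # Single-trial amplitudes (least-squares fit of the template) keep the link.
    single_trial_amplitude = trials @ template / (template @ template)
    r = np.corrcoef(single_trial_amplitude, reaction_time)[0, 1]
    return residual_noise, r


print(averaging_demo())  # small residual noise, strongly negative correlation
```

The single-trial estimates are noisier than the average, so in real data this trade-off between noise suppression and preserved variability has to be weighed for each question.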

A possible approach to overcome this challenge is to use brain stimulation that targets specific physiological measures, e.g., brain oscillations at certain frequencies, and to assess its impact on brain function and behavior with millisecond precision [89,90,91]. To achieve this, several technical challenges must be mastered: (1) physiological brain measures must be recorded and analyzed in real time, (2) stimulation must be delivered with high temporal and spatial precision, and (3) stimulation artifacts must be sufficiently eliminated to assess the online stimulation effects.

The first two points are equally necessary to establish bidirectional BCIs, and thus, share the same technological framework. Typically, implantable bidirectional BCIs that deliver electric stimulation to the brain do so at some distance from the recording electrodes to reduce interference of stimulation with BCI classification [71]. Currently, as previously mentioned, it is unclear which stimulation parameters are most effective to interact with the human brain. In this context, novel approaches have been introduced that use deep learning to derive effective stimulation patterns, e.g., for optic nerve stimulation to restore normal vision in disorders affecting the retina [92].

In the noninvasive field, a few important milestones toward bidirectional BCIs have been reached, e.g., in vivo assessment of brain oscillations during transcranial direct current stimulation using magnetoencephalography (MEG) [89] and, more recently, recovery of targeted brain oscillations during transcranial alternating current stimulation (tACS) using EEG [90]. MEG has the advantage that neuromagnetic activity passes through transcranial stimulation electrodes undistorted, so that brain activity immediately underneath the stimulation electrodes can be reconstructed. This is not the case for EEG. Moreover, because electric currents take variable paths through the skull, precise and focal stimulation with transcranial electric stimulation (tES) remains a challenge. Brain oscillations can also be targeted with transcranial magnetic stimulation (TMS) [93], but because of the magnitude of the artifacts associated with magnetic fields of 2–3 tesla, sufficient artifact elimination is difficult to achieve. Moreover, TMS commonly uses short magnetic pulses of 160–250 μs duration that do not resemble the targeted neural activity. Nevertheless, the successful implementation of the first EEG-based closed-loop TMS targeting brain oscillations with millisecond precision represented an important milestone on the path toward effective neurostimulation.
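Closed-loop stimulation targeting brain oscillations requires predicting when the ongoing oscillation will next reach a chosen phase. A minimal sketch, assuming the oscillation frequency is known and stable within a short, already band-limited window, fits a sinusoid by least squares and extrapolates to the next occurrence of the target phase (0 = peak, π = trough). Published systems typically rely on more elaborate causal filtering and forward prediction; the function name and parameters are hypothetical.

```python
import numpy as np


def predict_stim_delay(window, fs, freq, target_phase=0.0):
    """Seconds from the last sample until the oscillation reaches target_phase.

    Phase convention: x(t) = R * cos(phase(t)), so phase 0 is a peak and pi a trough.
    """
    window = np.asarray(window, dtype=float)
    t = np.arange(window.size) / fs
    omega = 2 * np.pi * freq
    # Least-squares fit: x(t) ~ a*cos(omega t) + b*sin(omega t) + c
    design = np.column_stack([np.cos(omega * t), np.sin(omega * t), np.ones_like(t)])
    (a, b, _), *_ = np.linalg.lstsq(design, window, rcond=None)
    # a*cos + b*sin = R*cos(omega t - theta) with theta = atan2(b, a)
    theta = np.arctan2(b, a)
    current_phase = omega * t[-1] - theta
    # Time until the phase advances to the next occurrence of target_phase
    return np.mod(target_phase - current_phase, 2 * np.pi) / omega


# Example: 0.5 s of a 10 Hz oscillation sampled at 1 kHz
fs, freq = 1000, 10.0
t = np.arange(500) / fs
alpha = np.cos(2 * np.pi * freq * t + 0.3)
delay = predict_stim_delay(alpha, fs, freq)  # seconds until the next peak
```

The returned delay always lies within one cycle (here below 100 ms), which is the time budget a real-time system has for triggering the stimulator at the intended phase.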

Recent advances in brain stimulation methods using temporal interference of electric [94] or magnetic [95] fields or ultrasound [96] may overcome some of the current limitations of noninvasive brain stimulation and neuromodulation by offering greater focality and penetration depth. Both temporal interference and ultrasound stimulation can reach deeper brain areas and provide higher focality than established methods like TMS or tES. However, the stimulation effects of these new methods and their underlying mechanisms have not yet been well explored, and since their stimulation artifacts have not been well characterized, it is unclear whether closed-loop operation is feasible. Consequently, these new stimulation methods have not yet been implemented in bidirectional BCIs, but they promise to further advance the field.

Another frontier in the development of next-generation B/NCIs relates to the precise recording of neural activity at high temporal and spatial resolution. In this context, new high-density microelectrodes have been developed [97]. However, these require implantation, with the risk of bleeding or infection [98]. Moreover, replacing or repairing implanted device components requires another surgical procedure. Other approaches include semi-invasive methods such as intravascular [99] or sub-scalp recordings to assess LFP or EEG. All these approaches will most likely remain in the experimental, medical domain for the foreseeable future because their cost–benefit ratio across users and applications has yet to be determined. It is unclear whether recording from more neurons will automatically result in higher decoding accuracy, in more precise assessment of brain states, or, using OCNE, in more degrees of freedom for active BCI control, or whether there are inherent boundaries for implantable BCIs [100]. To further advance our understanding of brain–behavior relationships and possibly elucidate the symbol system underlying information processing in the brain, however, these methods are of critical importance.

By analogy with BCI-triggered motor recovery after stroke, next-generation B/NCIs might be used only for a defined period or intermittently to facilitate or maintain recovery of brain function. Examples include recovery from depression or the maintenance of memory function in neurodegenerative disorders. This is another reason to assume that noninvasive BCIs will become more prevalent than implantable solutions. Nevertheless, in some cases recovery of brain function may not be possible, so continuous use of such a neuroprosthesis would be necessary. The main challenges of noninvasive recording are its susceptibility to noise and its lower spatial resolution compared with implanted electrodes or sensors [101]. Moreover, in EEG, brain signals are attenuated and distorted by the intervening tissues, which reduces signal quality, particularly in frequency bands above 25 Hz. Recently introduced quantum sensors promise to increase the precision of brain recordings. These sensors, e.g., optically pumped magnetometers (OPMs), measure neuromagnetic fields, which pass through the skull undistorted [102]. Given proper calibration, OPMs could reach much higher spatial resolution than any other established noninvasive neuroimaging tool, e.g., conventional helium-cooled magnetometry using superconducting quantum interference devices (SQUIDs). Moreover, they allow brain signals to be assessed at frequencies up to the kilohertz range, so they might make physiological information at higher frequencies, e.g., in the gamma range, more accessible. The main drawback of these highly sensitive magnetometers is that they require substantial shielding from environmental magnetic fields. Magnetometers based on synthetic diamonds or polymers that can be operated in the Earth's magnetic field may, however, overcome this limitation [103].

Taken together, important advances in sensor technology, stimulation techniques, real-time signal processing, and machine learning now allow brain–behavior relationships to be studied with unprecedented precision. Many questions remain unsolved, e.g., the link between brain functions and specific clinical symptoms, or the cause of recurring episodes of mental illness. Even so, the prospects for extending the scope of B/NCIs to domains such as emotion regulation, memory formation, cognitive control, or perception have never been better. In particular, combining BCI technology with other emerging technologies, such as AI and quantum computing, may help make feasible the new applications outlined in the next section.

4 Merging Brain/Neural–Computer Interfaces with Artificial Intelligence

For decades, machine learning has been used as part of BCI technology to improve the accuracy of brain signal classification [104]. In recent years, new machine learning-enabled tools and applications have been developed outside the BCI field, e.g., image or speech recognition using neural networks. These tools are subsumed under the term artificial intelligence (AI) and are now increasingly being merged with BCI technology [105]. Overall, the implementation of AI components is being explored in three main areas of new BCI applications: (1) improving the classification of brain/neural activity patterns, (2) identifying effective stimulation parameters for bidirectional BCIs, and (3) implementing shared control of brain/neural-controlled external devices, e.g., robots or exoskeletons.

The most established and widely used BCI algorithms rely on linear classification because of its robustness and low computational cost. It copes well with noise, signal outliers, and comparatively small amounts of training data. Kernel-based methods, e.g., support vector machines (SVMs), and other non-linear methods have also been implemented [106]. These methods can reach slightly higher classification accuracies, but at the cost of greater computation time and memory. This is less of a problem nowadays, given the broad availability of computational power and memory, so non-linear classifiers such as convolutional neural networks (CNNs) are increasingly being explored for BCI feature classification [107]. It should be emphasized, however, that non-linear methods require a good understanding of the data, because several parameters and network design decisions must be made in an informed way. For example, signal artifacts may unintentionally influence classification. Such artifacts can be related to heartbeat, pulse waves, breathing, voluntary movements, eye blinks, eye movements, or increased muscle tone, or can arise from the environment. Consequently, with non-linear methods it is more difficult to verify what kind of information (e.g., neural vs. artifactual sources) a particular model exploits, although concepts for explainable AI exist [108, 109]. In implantable BCIs this might be less of a challenge, but caution is still warranted. Nevertheless, neural networks may outperform other approaches under certain conditions and paradigms. With the advent of quantum sensors, non-linear classifiers might also prove particularly useful in noninvasive or minimally invasive BCIs.
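The following NumPy sketch makes the linear baseline concrete. It implements a two-class linear discriminant analysis (LDA) with covariance shrinkage. Shrinking the covariance toward a scaled identity matrix is one common way to keep the estimate stable when training trials are few. The fixed shrinkage value, the feature dimensions, and the synthetic Gaussian data are illustrative assumptions and are not taken from the studies cited here.

```python
import numpy as np


def fit_shrinkage_lda(X, y, gamma=0.1):
    """Fit a two-class linear discriminant with covariance shrinkage.

    X: (n_trials, n_features), e.g. log band-power features per channel
    y: (n_trials,) integer labels in {0, 1}
    gamma: shrinkage strength in [0, 1]; 0 gives the plain pooled covariance
    """
    X0, X1 = X[y == 0], X[y == 1]
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Pooled within-class covariance
    centered = np.vstack([X0 - mu0, X1 - mu1])
    cov = centered.T @ centered / (len(X) - 2)
    # Shrink toward a scaled identity to stabilize the estimate when there
    # are few trials relative to the number of features
    nu = np.trace(cov) / cov.shape[0]
    cov = (1.0 - gamma) * cov + gamma * nu * np.eye(cov.shape[0])
    w = np.linalg.solve(cov, mu1 - mu0)
    b = -w @ (mu0 + mu1) / 2.0
    return w, b


def predict(w, b, X):
    # Linear decision rule: class 1 if the projection exceeds the threshold
    return (X @ w + b > 0).astype(int)


# Synthetic example: 40 training trials per class, 16 features
rng = np.random.default_rng(0)
n, d, shift = 40, 16, 0.8


def make_trials(n_per_class):
    X = np.vstack([rng.normal(0.0, 1.0, (n_per_class, d)),
                   rng.normal(shift, 1.0, (n_per_class, d))])
    y = np.repeat([0, 1], n_per_class)
    return X, y


X_train, y_train = make_trials(n)
X_test, y_test = make_trials(200)
w, b = fit_shrinkage_lda(X_train, y_train, gamma=0.2)
test_accuracy = (predict(w, b, X_test) == y_test).mean()
```

Training reduces to a mean difference and a single linear solve, which illustrates the low computational cost mentioned above. A CNN trained on raw data would have many more parameters. It would also need artifact-aware validation to confirm that it relies on neural rather than artifactual features.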

The second area in which AI may play an increasing role in future BCI technology is the identification of effective stimulation parameters for interacting with the human brain. This would be a critical prerequisite for bidirectional BCIs and sensory neuroprostheses, i.e., systems that substitute for motor, sensory, or cognitive functions. For instance, by using a convolutional neural network (CNN) as a model of the ventral visual stream, optic nerve stimulation patterns could be derived to elicit static and dynamic visual scenes [92]. In other words, specific stimulation patterns were identified that could convey a certain visual image directly to the brain. This has so far been achieved only in silico and not in real time. Nevertheless, these results indicate that neural networks may play an important role in computer-to-brain communication and may also help clarify how information is represented in the brain [110]. Once it becomes feasible to convey rich sensory information to the brain, neuroadaptive algorithms could be implemented, e.g., to reduce or augment sensory information depending on the user's state. It is very likely, though, that this technology will first be used to restore compromised or lost sensory function in blind or deaf individuals, just as active BCIs were first used in paralysis. Besides improving the function of a sensory system, such technology could also provide information beyond accurate representations of the environment. It is unclear, however, to what degree this would be possible, given top-down regulation of sensory input [111], and whether it would interfere with normal perception of the environment. Since the brain can also generate quasi-sensory experience in the absence of input from the sensory systems (e.g., during dreaming), it is not entirely inconceivable that a bidirectional BCI could influence or induce sensory experience in such states.
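The idea of deriving stimulation patterns from a model of the sensory pathway can be illustrated with a deliberately simplified sketch. The cited work used a CNN model of the ventral visual stream. This sketch instead assumes a hypothetical linear surrogate model `W` that maps electrode amplitudes to predicted neural responses. It then uses projected gradient descent to find the admissible stimulation pattern whose predicted response best matches a target pattern. The amplitude bound, the dimensions, and the random model are placeholders for illustration only.

```python
import numpy as np


def optimize_stimulation(W, target, max_amp=1.0, n_steps=2000):
    """Projected gradient descent on 0.5 * ||W s - target||^2
    subject to 0 <= s <= max_amp (a hypothetical per-electrode limit).

    W: (n_units, n_electrodes) linear surrogate "encoding model" mapping
       electrode amplitudes to predicted neural responses.
    """
    # Step size 1/L, where L = largest eigenvalue of W^T W (the Lipschitz
    # constant of the gradient), which guarantees monotone descent
    step = 1.0 / np.linalg.norm(W, 2) ** 2
    s = np.zeros(W.shape[1])
    for _ in range(n_steps):
        grad = W.T @ (W @ s - target)
        s = np.clip(s - step * grad, 0.0, max_amp)  # project onto the box
    return s


rng = np.random.default_rng(1)
n_units, n_electrodes = 50, 20
W = rng.normal(0.0, 1.0 / np.sqrt(n_units), (n_units, n_electrodes))
# A desired response pattern that some admissible stimulation can produce
s_true = rng.uniform(0.0, 1.0, n_electrodes)
target = W @ s_true
s_opt = optimize_stimulation(W, target)
rel_error = np.linalg.norm(W @ s_opt - target) / np.linalg.norm(target)
```

With a differentiable non-linear model such as a CNN, the same loop would use that model's gradient in place of `W.T @ (W @ s - target)`. The box projection is where hypothetical safety limits on stimulation would enter.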

The third area in which merging B/NCI technology with AI has been explored and successfully demonstrated is the so-called shared control of external devices, e.g., robots or exoskeletons [72, 112]. Controlling a multi-joint robotic arm, or any other device or manipulator with many degrees of freedom, requires high ITRs that currently cannot be achieved with noninvasive means. The limits of OCNE-based BCI control in terms of precision, reliability, and robustness are still unclear and remain the subject of further exploration. Even implantable BCIs using several hundred electrodes are limited in their capacity to control such devices.
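The ITR constraint can be quantified with the widely used definition by Wolpaw and colleagues. It gives the bits per selection as B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1)), where N is the number of selectable classes and P is the classification accuracy. Dividing by the time per selection yields a rate. The sketch below shares that definition's assumptions of equiprobable targets and errors spread evenly across the remaining classes. The example values are illustrative.

```python
import math


def wolpaw_itr(n_classes, accuracy, seconds_per_selection):
    """Information transfer rate in bits/min (Wolpaw et al. definition).

    Assumes equiprobable targets and errors spread evenly over the
    remaining n_classes - 1 options.
    """
    n, p = n_classes, accuracy
    if n < 2 or not 0.0 <= p <= 1.0 or seconds_per_selection <= 0:
        raise ValueError("need n_classes >= 2, 0 <= accuracy <= 1, time > 0")
    if p <= 1.0 / n:
        return 0.0  # at or below chance, conventionally reported as 0
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits * 60.0 / seconds_per_selection


# Two classes, 90% accuracy, 4 s per selection
example = wolpaw_itr(2, 0.9, 4.0)  # about 7.97 bits/min
```

Under these assumptions, a two-class BCI with 90% accuracy and 4 s per selection yields about 8 bits/min. This illustrates why continuous control of a device with many degrees of freedom is out of reach for noninvasive BCIs alone.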

Thus, concepts of human–machine collaboration have been implemented in which the intended goal is inferred from brain activity, and the machine computes and executes the best way to achieve it. An important prerequisite for computing such a solution is a precise model of the environment. Various AI-enabled tools, such as 3D-object recognition, have been used successfully to create such a model, allowing, e.g., brain/neural control of a whole-arm exoskeleton [72]. For example, using a shared-control paradigm, users were able to grasp and manipulate a bottle, move it to the mouth, and drink [113].
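The following is a minimal sketch of the shared-control idea. The brain/neural decoder supplies only a noisy estimate of the intended reach direction. An object-recognition module supplies the environment model; here it is simply a hard-coded list of candidate objects. The machine infers the most probable goal and plans the movement itself. The likelihood form, the concentration parameter `kappa`, the straight-line planner, and all names are hypothetical simplifications, not the method of the cited studies.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    position: np.ndarray  # 3-D position, e.g. from an object-recognition module


def infer_goal(candidates, decoded_direction, hand_pos, kappa=4.0):
    """Posterior over candidate goals given a noisy decoded reach direction.

    Uses a von Mises-Fisher-style likelihood exp(kappa * cos(angle)); kappa is
    a hypothetical confidence parameter for the decoder. Uniform prior.
    """
    d = decoded_direction / np.linalg.norm(decoded_direction)
    log_lik = np.array([
        kappa * ((c.position - hand_pos) @ d) / np.linalg.norm(c.position - hand_pos)
        for c in candidates
    ])
    post = np.exp(log_lik - log_lik.max())  # subtract max for numerical stability
    return post / post.sum()


def plan_reach(hand_pos, goal_pos, n_waypoints=5):
    # Stand-in for the machine's motion planner: straight-line waypoints
    return np.linspace(hand_pos, goal_pos, n_waypoints)


hand = np.zeros(3)
scene = [Candidate("bottle", np.array([0.4, 0.1, 0.0])),
         Candidate("cup", np.array([0.0, 0.4, 0.0]))]
posterior = infer_goal(scene, decoded_direction=np.array([1.0, 0.3, 0.0]),
                       hand_pos=hand)
goal = scene[int(np.argmax(posterior))]
waypoints = plan_reach(hand, goal.position)
```

Because the goal is chosen from a small set of recognized objects, a rough directional signal suffices to select a target. The precise trajectory is left to the machine.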

To increase applicability and practicality, it is essential to implement a veto function in shared-control paradigms that include an AI-enabled autonomous machine [114]. Such a veto function is also critical for questions of accountability and liability. A BCI-triggered veto is subject to the same inaccuracies as all active BCI applications. Other biosignals that provide higher control accuracy, e.g., signals related to eye movements, are therefore currently used to trigger a veto [10, 115]. Regardless of the machine's precision or capabilities, it will always be the user's responsibility to decide in which contexts and situations to use shared-control systems.
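An eye-movement veto of this kind can be sketched as a threshold detector on a horizontal electrooculogram (EOG) channel. If the signal exceeds an amplitude threshold for a minimum duration, the machine halts. The threshold, duration, sampling rate, and synthetic signal are illustrative assumptions. A deployed system would require per-user calibration.

```python
import numpy as np


def detect_veto(heog_uv, fs, threshold_uv=200.0, min_duration_s=0.1):
    """Index of the first sample at which a veto fires, or None.

    A veto fires when the horizontal EOG amplitude stays above threshold_uv
    for at least min_duration_s (both values are hypothetical placeholders).
    """
    min_samples = max(1, int(round(min_duration_s * fs)))
    run = 0
    for i, above in enumerate(np.abs(heog_uv) > threshold_uv):
        run = run + 1 if above else 0  # length of the current supra-threshold run
        if run >= min_samples:
            return i
    return None


fs = 250  # sampling rate in Hz (assumed)
rng = np.random.default_rng(2)
heog = rng.normal(0.0, 20.0, 2 * fs)  # 2 s of background EOG in uV
heog[fs:fs + 75] += 300.0             # simulated deliberate eye movement: 1.0 s onset, 0.3 s long
veto_sample = detect_veto(heog, fs)   # the machine would halt at this sample
```

Requiring a minimum duration trades a short detection delay (here 0.1 s) for robustness against brief spikes that would otherwise trigger false vetoes.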

Beyond these three main areas, machine learning methods are also contributing to the advancement of neuroadaptive technologies. For instance, real-time analysis of brain responses has been used to create a continuously updated model of the user's expectations [116]. With such a model, a computer could learn to adapt to the user's mindset to optimize goal congruency. Importantly, the model of expectations can be derived without the user's awareness, i.e., implicitly [110]. Such subconsciously informed brain–computer interaction may increase applicability, but it also raises important neuroethical questions.
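A continuously updated, implicitly derived user model can be illustrated with a toy one-dimensional cursor task. Assume that a passive BCI classifies the user's brain response to each cursor move as approving or not, with a hypothetical accuracy of 0.7. The computer keeps a probability distribution over possible target positions and updates it by Bayes' rule after every move. It then steers toward the currently most probable target. The task, the classifier accuracy, and the simulated responses are all assumptions for illustration.

```python
import numpy as np


def update_belief(belief, old_pos, new_pos, positive, acc=0.7):
    """Bayesian update of the belief over candidate target positions.

    positive: output of a (hypothetical) passive-BCI classifier indicating
              that the user's brain response to the move was approving.
    acc: assumed classifier accuracy.
    """
    targets = np.arange(len(belief))
    approaches = np.abs(new_pos - targets) < np.abs(old_pos - targets)
    p_pos = np.where(approaches, acc, 1.0 - acc)  # P(positive | target)
    likelihood = p_pos if positive else 1.0 - p_pos
    post = belief * likelihood
    return post / post.sum()


def simulate(n_positions=11, target=7, n_moves=30, flip_prob=0.0, acc=0.7, seed=0):
    """Cursor steers toward the most probable target; responses are simulated."""
    rng = np.random.default_rng(seed)
    belief = np.full(n_positions, 1.0 / n_positions)
    cursor = 0
    for _ in range(n_moves):
        goal = int(np.argmax(belief))
        if goal != cursor:
            step = 1 if goal > cursor else -1
        else:  # probe a neighbor to gather more evidence
            step = 1 if cursor < n_positions - 1 else -1
        new = cursor + step
        truly_approaching = abs(new - target) < abs(cursor - target)
        # The simulated brain response is misclassified with probability flip_prob
        positive = truly_approaching != (rng.random() < flip_prob)
        belief = update_belief(belief, cursor, new, positive, acc)
        cursor = new
    return belief


noisy_belief = simulate(flip_prob=0.2, seed=3)
```

The user never issues an explicit command. The model is inferred entirely from responses to the computer's own actions, which is what raises the question of awareness discussed above.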

5 Neuroethical Perspective

The development of bidirectional BCIs that rely on AI methods and are coupled to the human brain marks an interesting new step in human–machine and human–computer interaction. It generates a hybrid cognitive system that runs on, or is fed by inputs from, both the organic hardware of the brain and the AI implementing the BCI. This creates, as some say, hybrid minds [117]. Surely, many technologies have deeply influenced the workings, and perhaps even the evolution, of the human mind. Philosophers subscribing to the Extended Mind Thesis may even go as far as saying that cognitive tools such as iPads, or even pen and paper, sometimes become part of the mind. In those cases, however, the interaction between the human brain and the extended cognitive system is less direct: information from the extended system reaches the brain via external sensory perception. BCIs, by contrast, afford a direct coupling at the physiological level. BCIs may also be more deeply integrated into the operation of the brain, and future applications may well restore or replace mental functions that the brain carries out today. The novelty thus lies in the direct, internal coupling of computers, AI, and the human brain and their resulting functional integration [118]. First instances of such hybrid minds are emerging, e.g., in people using adaptive DBS or closed-loop BCIs. In the near future, closed-loop applications that affect and regulate emotions or other mental functions are to be expected, raising a range of unanswered neuroethical questions.

Apart from safety and side effects, a central question concerns the user perspective: the experience of having (or being) a hybrid mind. Will users realize when part of their mental functioning is executed or influenced by a BCI, and how will that feel? A related question concerns the attitude people take toward their hybrid mind. There are indications that some DBS users, for instance, feel alienated from themselves and ascribe this to the influence of DBS on their mental life [119]. Others experience substantial changes in emotions and behavior, apparently resulting from DBS, but welcome those changes [120]. More broadly, there is an open debate about whether DBS causes personality changes, how frequently this might occur, and whether there are other explanations for the apparent changes [121]. The subjective experience of BCI users is thus an intriguing aspect to be explored, e.g., with phenomenological methods.

The blending of minds and machines raises further questions. Do BCIs become part of the person in a strong sense, or at least part of the person's body? Or do they remain tools, despite their functional and sometimes inseparable integration with the brain? Answers to these conceptual questions lead to a range of moral and legal questions. These range from responsibility for negative outcomes of the actions of hybrid minds to manufacturer liability, and from intellectual property in code that might become part of the mind to privacy. The range of practical problems is broad and already confronts society. Patients using retinal implants made by Second Sight have had trouble accessing information about the device, patient support, and replacement parts following the company's financial collapse [122]. Users may come to rely on these advanced devices not just for sensory or motor functions but perhaps also for mental functions. Should we therefore approach them as we would any ordinary medical device, or are different social and legal arrangements warranted?

The issues are complex and fascinating, and they will vary with the application in question. The tendency with these technologies is to focus on the immediate replacement, support, or enhancement of a human function. For example, the success of a motor BCI is largely a matter of how usable it is for the individual. However, some human functions are inherently relational, i.e., they involve at least two people. Communication neuroprostheses offer a good example. Communicating basic daily needs and wishes is a first and important objective for paralyzed people. However, these devices may come to support high-stakes communication, e.g., requesting or refusing medical care with potentially fatal consequences, or testifying in court. In that case, the listener's ability to judge the voluntariness and accuracy of what is communicated will be critical [123]. Incorporating layers of non-transparent machine learning into the decoders that translate neural signals into communication will greatly complicate this effort. The roles and needs of listeners must therefore be built into the technology to maximize its utility.

Perhaps the most important question is how far the blending of minds and machines can and should go. As discussed above, writing specific information to the brain, i.e., creating sensations, memories, and other mental states, is still far off but is in view as an eventual possibility. Such technologies need not be sophisticated or fully successful to be ethically important. Even a modest or partial restoration of lost sensory functions is evidently exciting and valuable. The ability to achieve it, however, would open up a host of other possibilities. These range from the non-therapeutic creation of desired sensations, with a possible risk of dependencies that interfere with daily functioning, to the infliction of undesired sensations or states (e.g., in interrogations). Together, this raises the perennial question of all technological invention: how to capture the benefits while limiting the risks and unethical applications. Are there ethical lines that should not be crossed, or should such technologies be developed but used only to alleviate severe disorders? These questions become more pressing as BCI technology advances and should ideally be answered through careful consideration of the ethical implications for individuals and society.

6 Summary and Conclusions

Innovative neurotechnologies are evolving rapidly, making possible new medical and non-medical applications that were not previously anticipated. However, implementing brain-controlled technology in everyday environments is challenging. Because control is of limited reliability and robustness, the most promising areas of application for active BCI systems remain in the medical field, e.g., restoration of movement and communication. Here, however, the availability of versatile, robust, and certified actuators, e.g., individually tailored prostheses or exoskeletons, represents an important bottleneck for further adoption. Reactive BCIs, in contrast, can achieve higher classification accuracies. They seem primarily attractive, however, in scenarios in which providing feedback to the computer by other means, e.g., voice, gestures, or touch, is either undesirable or not feasible. High classification accuracy is less critical for passive BCI systems, so such technology may be adopted faster in non-medical applications, e.g., to augment learning or to optimize the ergonomics of human–computer interaction.

The implementation of brain state-informed or closed-loop brain stimulation marks an important milestone in BCI technology because it allows interaction with ongoing brain activity independently of the sensory systems. Besides providing direct feedback to the brain during prosthesis control, it could also sustain communication in neurodegenerative disorders affecting the motor and sensory systems. Moreover, it could be used to suppress pathological brain activity to improve brain function. Importantly, combining neuromodulation with in vivo assessment of brain physiology could help elucidate brain–behavior relationships, e.g., in cognitive, emotional, and memory function. Here, machine learning could contribute to the development of artificial models of the brain that inform new and effective stimulation patterns. Combining BCIs with AI-enabled tools, e.g., 3D-object recognition or tracking, not only enhances the versatility and performance of brain-controlled systems but may also catalyze the emergence of new applications beyond the medical field. The possibility of merging biological and artificial cognitive systems gives rise to new entities referred to as hybrid minds. The feasibility of such entities, however, depends on understanding, and being able to directly manipulate, the mind's symbol system and its manifestation in the brain.

References

Peterson F, Jung CG. Psycho-physical investigations with the galvanometer and pneumograph in normal and insane individuals. Brain. 1907;30(2):153–218.

Berger H. Über das Elektrenkephalogramm des Menschen. Arch Psychiatr Nervenkr. 1929;87(1):527–70.

Gibbs FA, Davis H, Lennox WG. The electro-encephalogram in epilepsy and in conditions of impaired consciousness. Arch Neurol Psychiatr. 1935;34(6):1133–48.

Estrin T. The UCLA Brain Research Institute data processing laboratory. In: Proceedings of ACM conference on History of medical informatics. Bethesda, MD: Association for Computing Machinery; 1987. p. 75–83.

Walter WG. An automatic low frequency analyser. Electron Eng. 1943;16:9–13.

Kamiya J. Conditioned discrimination of the EEG alpha rhythm in humans. San Francisco, CA: Western Psychological Association; 1962.

Sterman MB, Wyrwicka W, Howe R. Behavioral and neurophysiological studies of the sensorimotor rhythm in the cat. Electroencephalogr Clin Neurophysiol. 1969;27(7):678–9.

Fetz EE. Operant conditioning of cortical unit activity. Science. 1969;163(3870):955–8.

Vidal JJ. Toward direct brain-computer communication. Annu Rev Biophys Bioeng. 1973;2(1):157–80.

Soekadar SR, et al. Hybrid EEG/EOG-based brain/neural hand exoskeleton restores fully independent daily living activities after quadriplegia. Sci Robot. 2016;1(1):eaag3296.

Soekadar SR, et al. Brain–machine interfaces in neurorehabilitation of stroke. Neurobiol Dis. 2015;83:172–9.

Zander TO, Kothe C. Towards passive brain-computer interfaces: applying brain-computer interface technology to human-machine systems in general. J Neural Eng. 2011;8(2):025005.

Blankertz B, et al. The Berlin brain-computer interface: non-medical uses of BCI technology. Front Neurosci. 2010;4:198.

Suh A, Prophet J. The state of immersive technology research: a literature analysis. Comput Hum Behav. 2018;86:77–90.

Lee LH, et al. All one needs to know about metaverse: a complete survey on technological singularity, virtual ecosystem, and research agenda. 2021.

Wolpaw JR. Brain-computer interfaces as new brain output pathways. J Physiol. 2007;579(3):613–9.

Soekadar SR, Haagen K, Birbaumer N. Brain-computer interfaces (BCI): restoration of movement and thought from neuroelectric and metabolic brain activity. In: Coordination: neural, behavioral and social dynamics. 2008. p. 229.

Jackson A, Zimmermann JB. Neural interfaces for the brain and spinal cord—restoring motor function. Nat Rev Neurol. 2012;8(12):690.

Esmaeilpour Z, et al. Temporal interference stimulation targets deep brain regions by modulating neural oscillations. Brain Stimul. 2021;14(1):55–65.

Barsalou LW. Perceptual symbol systems. Behav Brain Sci. 1999;22(4):577–609; discussion 610–60.

Reilly J, et al. Linking somatic and symbolic representation in semantic memory: the dynamic multilevel reactivation framework. Psychon Bull Rev. 2016;23(4):1002–14.

Wolpaw JR. Chapter 6—Brain–computer interfaces. In: Barnes MP, Good DC, editors. Handbook of clinical neurology. Amsterdam: Elsevier; 2013. p. 67–74.

Steinert S, et al. Doing things with thoughts: brain-computer interfaces and disembodied agency. Philos Technol. 2019;32(3):457–82.

Farwell LA, Donchin E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr Clin Neurophysiol. 1988;70(6):510–23.

Sellers EW, Donchin E. A P300-based brain-computer interface: initial tests by ALS patients. Clin Neurophysiol. 2006;117(3):538–48.

Müller-Putz GR, et al. Steady-state visual evoked potential (SSVEP)-based communication: impact of harmonic frequency components. J Neural Eng. 2005;2(4):123–30.

Chen X, et al. High-speed spelling with a noninvasive brain-computer interface. Proc Natl Acad Sci U S A. 2015;112(44):E6058–67.

Miklody D, Blankertz B. Cognitive workload of tugboat captains in realistic scenarios: adaptive spatial filtering for transfer between conditions. Front Hum Neurosci. 2022;16:818770.

Venthur B, et al. Novel applications of BCI technology: Psychophysiological optimization of working conditions in industry. In: 2010 IEEE International Conference on Systems, Man and Cybernetics. 2010.

Borghini G, et al. A multimodal and signals fusion approach for assessing the impact of stressful events on air traffic controllers. Sci Rep. 2020;10(1):8600.

Krol LR, Andreessen LM, Zander TO. Passive brain–computer interfaces: a perspective on increased interactivity. In: Brain–computer interfaces handbook: technological and theoretical advances. Boca Raton, FL: CRC Press; 2018. p. 69–86.

Leuthardt EC, et al. A brain-computer interface using electrocorticographic signals in humans. J Neural Eng. 2004;1(2):63–71.

Stavisky SD, et al. A high performing brain-machine interface driven by low-frequency local field potentials alone and together with spikes. J Neural Eng. 2015;12(3):036009.

Birbaumer N, et al. A spelling device for the paralysed. Nature. 1999;398(6725):297–8.

Ramos-Murguialday A, et al. Brain-machine interface in chronic stroke rehabilitation: a controlled study. Ann Neurol. 2013;74(1):100–8.

Soekadar SR, et al. ERD-based online brain-machine interfaces (BMI) in the context of neurorehabilitation: optimizing BMI learning and performance. IEEE Trans Neural Syst Rehabil Eng. 2011;19(5):542–9.

Sitaram R, et al. fMRI brain-computer interfaces. IEEE Signal Process Mag. 2007;25(1):95–106.

Liew SL, et al. Improving motor corticothalamic communication after stroke using real-time fMRI connectivity-based neurofeedback. Neurorehabil Neural Repair. 2016;30(7):671–5.

Naseer N, Hong KS. Classification of functional near-infrared spectroscopy signals corresponding to the right- and left-wrist motor imagery for development of a brain-computer interface. Neurosci Lett. 2013;553:84–9.

Soekadar SR, et al. Optical brain imaging and its application to neurofeedback. Neuroimage Clin. 2021;30:102577.

Wolpaw JR, et al. Brain-computer interfaces for communication and control. Clin Neurophysiol. 2002;113(6):767–91.

Chen X, et al. High-speed spelling with a noninvasive brain-computer interface. Proc Natl Acad Sci U S A. 2015;112(44):E6058–67.

Kübler A, et al. The thought translation device: a neurophysiological approach to communication in total motor paralysis. Exp Brain Res. 1999;124(2):223–32.

Kornhuber HH, Deecke L. Hirnpotentialänderungen beim Menschen vor und nach Willkürbewegungen, dargestellt mit Magnetbandspeicherung und Rückwärtsanalyse. Pflügers Arch. 1964;281(1):52.

Walter WG, et al. Contingent negative variation: an electric sign of sensori-motor association and expectancy in the human brain. Nature. 1964;203(4943):380–4.

Pereira J, et al. EEG neural correlates of goal-directed movement intention. NeuroImage. 2017;149:129–40.

Savić AM, et al. Online control of an assistive active glove by slow cortical signals in patients with amyotrophic lateral sclerosis. J Neural Eng. 2021;18(4):046085.

Schultze-Kraft M, et al. The point of no return in vetoing self-initiated movements. Proc Natl Acad Sci U S A. 2016;113(4):1080–5.

Haufe S, et al. Electrophysiology-based detection of emergency braking intention in real-world driving. J Neural Eng. 2014;11(5):056011.

de Almeida Ribeiro PR, et al. Controlling assistive machines in paralysis using brain waves and other biosignals. Adv Hum Comput Interact. 2013;2013:1–9.

Simon N, et al. An auditory multiclass brain-computer interface with natural stimuli: usability evaluation with healthy participants and a motor impaired end user. Front Hum Neurosci. 2014;8:1039.

Treder MS, Blankertz B. (C)overt attention and visual speller design in an ERP-based brain-computer interface. Behav Brain Funct. 2010;6(1):28.

Hong K-S, Khan MJ. Hybrid brain–computer interface techniques for improved classification accuracy and increased number of commands: a review. Front Neurorobot. 2017;11:35.

Müller-Putz G, et al. Towards noninvasive hybrid brain-computer interfaces: framework, practice, clinical application, and beyond. Proc IEEE. 2015;103(6):926–43.

Schmidt NM, Blankertz B, Treder MS. Online detection of error-related potentials boosts the performance of mental typewriters. BMC Neurosci. 2012;13(1):19.

Soekadar SR, et al. An EEG/EOG-based hybrid brain-neural computer interaction (BNCI) system to control an exoskeleton for the paralyzed hand. Biomed Tech (Berl). 2015;60(3):199–205.

Moritz CT, Perlmutter SI, Fetz EE. Direct control of paralysed muscles by cortical neurons. Nature. 2008;456(7222):639–42.

Hinterberger T, et al. Neuronal mechanisms underlying control of a brain-computer interface. Eur J Neurosci. 2005;21(11):3169–81.

Haber SN. Corticostriatal circuitry. Dialogues Clin Neurosci. 2016;18(1):7–21.

Schirrmeister RT, et al. Deep learning with convolutional neural networks for EEG decoding and visualization. Hum Brain Mapp. 2017;38(11):5391–420.

Zhang J, Yan C, Gong X. Deep convolutional neural network for decoding motor imagery based brain computer interface. In: 2017 IEEE International Conference on Signal Processing, Communications and Computing (ICSPCC). 2017.

Lubar JF, Shouse MN. EEG and behavioral changes in a hyperkinetic child concurrent with training of the sensorimotor rhythm (SMR): a preliminary report. Biofeedback Self Regul. 1976;1(3):293–306.

Strehl U, et al. Neurofeedback of slow cortical potentials in children with attention-deficit/hyperactivity disorder: a multicenter randomized trial controlling for unspecific effects. Front Hum Neurosci. 2017;11:135.

Enriquez-Geppert S, et al. Neurofeedback as a treatment intervention in ADHD: current evidence and practice. Curr Psychiatry Rep. 2019;21(6):46.

Ros T, et al. Consensus on the reporting and experimental design of clinical and cognitive-behavioural neurofeedback studies (CRED-nf checklist). Brain. 2020;143(6):1674–85.

Cervera MA, et al. Brain-computer interfaces for post-stroke motor rehabilitation: a meta-analysis. Ann Clin Transl Neurol. 2018;5(5):651–63.

Donati AR, et al. Long-term training with a brain-machine INTERFACE-based gait protocol induces partial neurological recovery in paraplegic patients. Sci Rep. 2016;6:30383.

Soekadar SR, Birbaumer N, Cohen LG. Brain-computer-interfaces in the rehabilitation of stroke and neurotrauma. 2011.

Hochberg LR, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485(7398):372–5.

Ajiboye AB, et al. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration. Lancet. 2017;389(10081):1821–30.

Flesher SN, et al. A brain-computer interface that evokes tactile sensations improves robotic arm control. Science. 2021;372(6544):831–6.

Crea S, et al. Feasibility and safety of shared EEG/EOG and vision-guided autonomous whole-arm exoskeleton control to perform activities of daily living. Sci Rep. 2018;8(1):10823.

Dornhege G, et al. Error-related EEG potentials in brain-computer interfaces. In: Toward brain-computer interfacing. Cambridge, MA: MIT Press; 2007. p. 291–301.

Birbaumer N, et al. Direct brain control and communication in paralysis. Brain Topogr. 2014;27(1):4–11.

Miller KJ, Hermes D, Staff NP. The current state of electrocorticography-based brain-computer interfaces. Neurosurg Focus. 2020;49(1):E2.

Soekadar SR, et al. Fragmentation of slow wave sleep after onset of complete locked-in state. J Clin Sleep Med. 2013;9(9):951–3.

Birbaumer N, et al. Ideomotor silence: the case of complete paralysis and brain-computer interfaces (BCI). Psychol Res. 2012;76(2):183–91.

Kübler A, Birbaumer N. Brain-computer interfaces and communication in paralysis: extinction of goal directed thinking in completely paralysed patients? Clin Neurophysiol. 2008;119(11):2658–66.

Chaudhary U, et al. Spelling interface using intracortical signals in a completely locked-in patient enabled via auditory neurofeedback training. Nat Commun. 2022;13(1):1236.

Kryger M, et al. Flight simulation using a brain-computer interface: a pilot, pilot study. Exp Neurol. 2017;287(Pt 4):473–8.

LaFleur K, et al. Quadcopter control in three-dimensional space using a noninvasive motor imagery-based brain-computer interface. J Neural Eng. 2013;10(4):046003.

Congedo M, et al. "Brain invaders": a prototype of an open-source P300-based video game working with the OpenViBE platform. In: BCI 2011, 5th International Brain-Computer Interface Conference. Graz, Austria. 2011.

Cattan G. The use of brain–computer interfaces in games is not ready for the general public. Front Computer Sci. 2021:20.

Wang M, et al. A wearable SSVEP-based BCI system for quadcopter control using head-mounted device. IEEE Access. 2018;6:26789–98.

Stawicki P, Gembler F, Volosyak I. Driving a semiautonomous mobile robotic car controlled by an SSVEP-based BCI. Comput Intell Neurosci. 2016;2016.

Arico P, et al. Passive BCI beyond the lab: current trends and future directions. Physiol Meas. 2018;39(8):08TR02.

Menon V. Large-scale brain networks and psychopathology: a unifying triple network model. Trends Cogn Sci. 2011;15(10):483–506.

Buzsáki G. The brain–cognitive behavior problem: a retrospective. eNeuro. 2020;7(4):ENEURO.0069-20.2020.

Soekadar SR, et al. In vivo assessment of human brain oscillations during application of transcranial electric currents. Nat Commun. 2013;4:2032.

Haslacher D, et al. Stimulation artifact source separation (SASS) for assessing electric brain oscillations during transcranial alternating current stimulation (tACS). NeuroImage. 2021;228:117571.

Garcia-Cossio E, et al. Simultaneous transcranial direct current stimulation (tDCS) and whole-head magnetoencephalography (MEG): assessing the impact of tDCS on slow cortical magnetic fields. NeuroImage. 2016;140:33–40.

Romeni S, Zoccolan D, Micera S. A machine learning framework to optimize optic nerve electrical stimulation for vision restoration. Patterns. 2021;2(7):100286.

Zrenner C, et al. Real-time EEG-defined excitability states determine efficacy of TMS-induced plasticity in human motor cortex. Brain Stimul. 2018;11(2):374–89.

Grossman P, Taylor EW. Toward understanding respiratory sinus arrhythmia: relations to cardiac vagal tone, evolution and biobehavioral functions. Biol Psychol. 2007;74(2):263–85.

Xin Z, et al. Magnetically induced temporal interference for focal and deep-brain stimulation. Front Hum Neurosci. 2021;15:693207.

Darmani G, et al. Non-invasive transcranial ultrasound stimulation for neuromodulation. Clin Neurophysiol. 2022;135:51–73.

Musk E, Neuralink. An integrated brain-machine interface platform with thousands of channels. J Med Internet Res. 2019;21(10):e16194.

Leuthardt EC, Moran DW, Mullen TR. Defining surgical terminology and risk for brain computer interface technologies. Front Neurosci. 2021;15:599549.

Watanabe H, et al. Intravascular neural interface with nanowire electrode. Electron Commun Jpn. 2009;92(7):29–37.

Baranauskas G. What limits the performance of current invasive brain machine interfaces? Front Syst Neurosci. 2014;8:68.

Zhang X, et al. Tiny noise, big mistakes: adversarial perturbations induce errors in brain–computer interface spellers. Natl Sci Rev. 2020;8(4):nwaa233.

Boto E, et al. Moving magnetoencephalography towards real-world applications with a wearable system. Nature. 2018;555(7698):657–61.

Webb JL, et al. Nanotesla sensitivity magnetic field sensing using a compact diamond nitrogen-vacancy magnetometer. Appl Phys Lett. 2019;114(23):231103.

Blankertz B, et al. The Berlin Brain-Computer Interface: Progress beyond communication and control. Front Neurosci. 2016;10:530.

Cao Z. A review of artificial intelligence for EEG-based brain–computer interfaces and applications. Brain Sci Adv. 2020;6(3):162–70.

Müller K-R, et al. Machine learning and applications for brain-computer interfacing. Berlin, Heidelberg: Springer; 2007.

Dai G, et al. HS-CNN: a CNN with hybrid convolution scale for EEG motor imagery classification. J Neural Eng. 2020;17(1):016025.

Samek W, et al. Explainable AI: interpreting, explaining and visualizing deep learning. Cham: Springer; 2019.

Goebel R, et al. Explainable AI: the new 42? In: Machine learning and knowledge extraction. Cham: Springer; 2018.

Nasr K, Haslacher D, Soekadar S. Advancing sensory neuroprosthetics using artificial brain networks. Patterns. 2021;2(7):100304.

Gilbert CD, Li W. Top-down influences on visual processing. Nat Rev Neurosci. 2013;14(5):350–63.

Downey JE, et al. Blending of brain-machine interface and vision-guided autonomous robotics improves neuroprosthetic arm performance during grasping. J Neuroeng Rehabil. 2016;13(1):28.

Nann M, et al. Restoring activities of daily living using an EEG/EOG-controlled semi-autonomous and mobile whole-arm exoskeleton in chronic stroke. IEEE Syst J. 2020;15(2):2314–21.

Clausen J, et al. Help, hope, and hype: ethical dimensions of neuroprosthetics. Science. 2017;356(6345):1338–9.

Nann M, et al. Feasibility and safety of bilateral hybrid EEG/EOG brain/neural-machine interaction. Front Hum Neurosci. 2020;14:580105.

Zander TO, et al. Neuroadaptive technology enables implicit cursor control based on medial prefrontal cortex activity. Proc Natl Acad Sci U S A. 2016;113(52):14898–903.

Soekadar SR, et al. On the verge of the hybrid mind. Morals Machines. 2021;1(1):30–43.

Bublitz C, Chandler J, Ienca M. Human–machine symbiosis and the hybrid mind: implications for ethics, law and human rights. In: Ienca M, et al., editors. Cambridge handbook of information technology, life sciences and human rights. Cambridge: Cambridge University Press; 2022.

Gilbert F, et al. I miss being me: phenomenological effects of deep brain stimulation. AJOB Neurosci. 2017;8(2):96–109.

Mosley PE, et al. 'Woe betides anybody who tries to turn me down.' A qualitative analysis of neuropsychiatric symptoms following subthalamic deep brain stimulation for Parkinson's disease. Neuroethics. 2021;14(1):47–63.

Gilbert F, Viaña JNM, Ineichen C. Deflating the “DBS causes personality changes” bubble. Neuroethics. 2021;14(1):1–17.

Strickland E, Harris M. Their bionic eyes are now obsolete and unsupported. IEEE Spectrum. 2022 [cited 2022 Mar 14]. https://spectrum.ieee.org/bionic-eye-obsolete

Chandler JA, et al. Brain–computer interfaces and communication disabilities: ethical, legal, and social aspects of decoding speech from the brain. Front Hum Neurosci. 2022;16:841035.

Acknowledgments

This chapter and the presented studies were supported by the ERA-NET NEURON project HYBRIDMIND (BMBF, 01GP2121A and -B), the European Research Council (ERC) under the projects NGBMI (759370) and TIMS (101081905), the Federal Ministry of Education and Research (BMBF) under the projects SSMART (01DR21025A), NEO (13GW0483C), QHMI (03ZU1110DD), and QSHIFT (01UX2211), and the Einstein Foundation Berlin (A-2019-558).


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Soekadar, S.R. et al. (2023). Future Developments in Brain/Neural–Computer Interface Technology. In: Dubljević, V., Coin, A. (eds) Policy, Identity, and Neurotechnology. Advances in Neuroethics. Springer, Cham. https://doi.org/10.1007/978-3-031-26801-4_5


DOI: https://doi.org/10.1007/978-3-031-26801-4_5


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-26800-7

Online ISBN: 978-3-031-26801-4

eBook Packages: Medicine; Medicine (R0)