Abstract

Electrooculography is a technique that detects and analyses eye movements based on the electrical potentials recorded by electrodes placed around the eyes. The recorded electrical signal is called the electrooculogram (EOG) and can be used as an alternative input for medical and human-computer interface systems. Implementing an eye movement-based system requires at least four main stages: signal denoising, feature extraction, signal classification and decision-making. The first stage after EOG signal acquisition is signal denoising, which suppresses the noise that the analogue filters could not remove. This stage must preserve the slope of the signal edges and the signal amplitudes, since both are needed to distinguish between different eye movements. After denoising, the second stage extracts the features of the EOG signal, based mainly on the detection of saccades, fixations and blinks. The next stage, called signal classification, is the automatic identification of eye movements; it is essential for generating accurate commands, especially in real-time applications. Classification is carried out mainly using combinations of artificial intelligence (AI) algorithms, which are the most suitable for adaptive systems that require real-time decision-making. In some applications, EOG modelling and compression are also applied as additional signal processing stages.




8.1 Introduction

8.1.1 EOG Fundamentals

The electrooculogram (EOG) is the electrical signal produced by the potential difference between the cornea (the positive pole) and the retina (the negative pole). This potential difference is measured by placing surface electrodes near the eyes, and the process of measuring the EOG is called electrooculography.

Eye movements can be classified as saccades, smooth pursuits, vergence movements or vestibulo-ocular movements, each of which can be either reflex or voluntary. Saccades are voluntary eye movements used in clinical studies to analyse eye movements. Smooth pursuits are also voluntary but are slower tracking movements that keep a moving stimulus on the fovea. Vergence and vestibulo-ocular movements have involuntary origins [1], so they are usually removed in EOG applications.

EOG-based human-computer interfaces (HCIs) offer disabled people a new means of communication and control, as eye movements can easily be identified in EOG signals. In recent years, HCI systems have been developed to control computer cursors, virtual keyboards, electric wheelchairs, games, hospital alarm systems, television sets, home automation applications and smartphones [2,3,4,5,6,7,8]. Diabetic retinopathy and refractive disorders such as hypermetropia and myopia can be diagnosed early from EOG results [9]. EOG also provides reliable information to identify sleep stages and detect anomalies [10, 11].

Figure 8.1 shows a block diagram of the main stages in the development of an EOG system. Developing EOG-based applications that can be widely used in the real world requires accurate hardware and efficient software to implement the tasks shown in Fig. 8.1, which are introduced in this chapter.

8.1.2 EOG Signal Measurement

Saccadic eye movements are the most interesting as they are voluntary and easily identifiable on the EOG. The most basic movements are up, down, right, and left. To distinguish between these eye movement classes, two pairs of bipolar electrodes and a reference electrode are positioned around the eyes as shown in Fig. 8.1.

The amplitude of EOG signals typically ranges from 50 to 3500 μV, and their frequency content lies between DC and about 50 Hz. Muscle noise is another issue: it is spread almost uniformly across the signal bandwidth, which makes it very difficult to remove completely. The amplitude of the differential signal recorded by a pair of electrodes is directly proportional to the angle of rotation of the eyes within a range of about ±30°, with a sensitivity on the order of 15 μV/° [12].
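As a worked example of this linear relationship, the following sketch converts a differential EOG amplitude into an approximate gaze angle using the nominal 15 μV/° sensitivity. The function name and the strict ±30° check are illustrative assumptions; a practical system would calibrate the sensitivity for each user and session rather than rely on the nominal value.

```python
# Nominal values quoted in the text (assumed, not calibrated).
SENSITIVITY_UV_PER_DEG = 15.0   # about 15 microvolts per degree of rotation
LINEAR_RANGE_DEG = 30.0         # linearity holds within roughly +/-30 degrees

def estimate_gaze_angle(amplitude_uv, sensitivity=SENSITIVITY_UV_PER_DEG):
    """Convert a differential EOG amplitude (microvolts) into a gaze angle (degrees).

    Raises ValueError when the estimate falls outside the approximately linear range.
    """
    angle = amplitude_uv / sensitivity
    if abs(angle) > LINEAR_RANGE_DEG:
        raise ValueError(f"{angle:.1f} deg is outside the linear range of +/-{LINEAR_RANGE_DEG} deg")
    return angle

print(estimate_gaze_angle(300.0))   # 20.0
print(estimate_gaze_angle(-150.0))  # -10.0
```

An amplitude of 600 μV would imply 40°, beyond the linear range, so the sketch rejects it instead of extrapolating.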

Voluntary and involuntary blinks produce spikes in EOG signals that must be detected because they can be mistaken for saccades. Figure 8.2 illustrates the EOG fundamentals: the eye is modelled as a dipole, and an electrooculogram shows two typical saccades whose amplitudes depend on the gaze angle.

Before any further analysis, a preprocessing step is necessary, mainly to reduce baseline drift, power-line interference and electromyographic potentials. Analogue filters are usually used for this task. Table 8.1 lists the most relevant commercial bioamplifiers used for experimental EOG measurements.

Alternatively, several publicly available datasets offer signals that are already preprocessed and ready to use. Table 8.2 lists some of the most widely used datasets in the literature, most of which contain sleep recordings.

8.2 EOG Signal Denoising

After hardware acquisition and preprocessing, EOG signals are still contaminated by several noise sources that can mask eye movements or mimic eye events. Additional digital denoising is needed to remove the unwanted spectral components that the analogue filters did not suppress, as well as other human biopotentials. Denoising must preserve the amplitudes and slopes of the EOG signals so that blinks and saccades can still be detected. Blinks deserve special attention: in some applications they are an additional feature, while in others they are artefacts that should be eliminated. In the latter case, blink regions are easy to remove because blinks rarely occur during saccades; they usually occur immediately before or after them. Another issue in EOG noise removal is crosstalk, that is, the interdependence between acquisition channels: many changes caused by eye movements and recorded on one channel also appear on the other EOG channels. The main strategy is to ignore the low-amplitude components.

Several methods have been proposed in the literature to attenuate or eliminate the effects of artefacts on EOG signals. Digital filters are typically employed to reduce muscle artefacts and to remove power-line noise and linear trends. Adaptive filters, such as Kalman and Wiener filters, are used to remove electrocardiographic and electromyographic artefacts whose frequencies overlap the EOG spectrum. Besides linear filtering, the median filter is very robust at removing high-frequency noise while preserving amplitudes and slopes, without introducing any shift in the signal [11].
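To illustrate why the median filter preserves saccade edges, the sketch below compares it with a moving-average filter on an idealised step (a saccade-like transition) corrupted by a single spike. Both function names are hypothetical, and shrinking the window at the signal borders is only one of several possible edge-handling choices.

```python
from statistics import median

def _window(signal, i, half):
    # Centred window, shrunk at the borders so the output has no shift.
    return signal[max(0, i - half): min(len(signal), i + half + 1)]

def median_filter(signal, window=5):
    """Sliding median filter with an odd, centred window."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    half = window // 2
    return [median(_window(signal, i, half)) for i in range(len(signal))]

def moving_average(signal, window=5):
    """Sliding mean over the same centred window, for comparison."""
    half = window // 2
    return [sum(w) / len(w) for w in (_window(signal, i, half) for i in range(len(signal)))]

# Idealised saccade: a 0 -> 100 uV step, plus one impulsive spike at index 4.
step = [0.0] * 10 + [100.0] * 10
step[4] = 80.0
print(median_filter(step))      # the clean step, spike removed, edge intact
print(moving_average(step)[9])  # 40.0: the edge is smeared over several samples
```

The median filter returns the clean step exactly, whereas the moving average both keeps a trace of the spike and spreads the edge, which would distort slope-based saccade detection.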

Regression methods learn the behaviour of the coloured noise that distorts the EOG signal, model it and subtract it. Because nearby samples are correlated, these methods use neighbouring noise samples to predict each given sample. However, regression models do not take the statistical distribution of the noise into account.

The wavelet transform (WT) is a powerful mathematical tool for noise removal. The WT decomposes a signal into scaled and shifted versions of a prototype function called the “mother wavelet”. From a signal processing point of view, wavelets act as bandpass filters. The WT provides a stable representation of transient phenomena and, for orthogonal wavelets, conserves energy. It therefore provides much more information about the signal than the Fourier transform, because it localises signal features in both time and frequency, acting as a mathematical microscope.

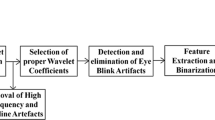

Figure 8.3 shows the general scheme of WT-based filtering. The decomposition module obtains the wavelet coefficients of the EOG signal at the output of the conditioning stage. The thresholding stage selects an appropriate threshold for the wavelet coefficients so that the coefficients with smaller magnitudes, which are assumed to correspond to noise and interference, are eliminated. Finally, the EOG signal is reconstructed from the remaining coefficients by applying the inverse of the wavelet transform used in the first module.

For perfect decomposition and reconstruction of the signal, the filters of the wavelet structure must have a finite number of coefficients (finite impulse response filters) and must be regular. It is also important that the filters have linear phase; this requirement rules out non-trivial orthogonal filters but allows biorthogonal ones. The Biorthogonal and Daubechies families are the most common choices for EOG signal processing [3, 24].
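The three modules of Fig. 8.3 (decomposition, thresholding, reconstruction) can be sketched in a few lines of NumPy. For brevity, this sketch uses the Haar wavelet (the first member of the Daubechies family) instead of a longer biorthogonal filter, and it applies soft thresholding with Donoho's universal threshold, estimating the noise level from the finest detail coefficients. These choices are assumptions for illustration, not the specific method of [3, 24].

```python
import numpy as np

def haar_dwt(x):
    """One level of the orthonormal Haar transform (len(x) must be even)."""
    x = np.asarray(x, dtype=float)
    approx = (x[0::2] + x[1::2]) / np.sqrt(2)
    detail = (x[0::2] - x[1::2]) / np.sqrt(2)
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse of haar_dwt: interleave the reconstructed even and odd samples."""
    x = np.empty(2 * len(approx))
    x[0::2] = (approx + detail) / np.sqrt(2)
    x[1::2] = (approx - detail) / np.sqrt(2)
    return x

def soft_threshold(c, t):
    """Shrink coefficients towards zero; those with |c| <= t become zero."""
    return np.sign(c) * np.maximum(np.abs(c) - t, 0.0)

def wavelet_denoise(x, levels=3):
    """Decompose, threshold the detail coefficients, reconstruct.
    len(x) must be divisible by 2**levels."""
    x = np.asarray(x, dtype=float)
    if len(x) % (2 ** levels):
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = x, []
    for _ in range(levels):                      # 1) decomposition
        approx, d = haar_dwt(approx)
        details.append(d)
    sigma = np.median(np.abs(details[0])) / 0.6745   # robust noise estimate (finest scale)
    t = sigma * np.sqrt(2 * np.log(len(x)))           # universal threshold
    details = [soft_threshold(d, t) for d in details] # 2) thresholding
    for d in reversed(details):                       # 3) reconstruction
        approx = haar_idwt(approx, d)
    return approx
```

Typical usage would be `denoised = wavelet_denoise(eog_segment, levels=3)`. A library such as PyWavelets provides biorthogonal and higher-order Daubechies filters, as well as boundary handling, for real recordings.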

8.3 Compression

In some EOG applications, such as sleep studies, the signals must be compressed because recordings can reach several gigabytes, and storing and transmitting this amount of data for remote health monitoring is costly. In these cases, compression is needed to transmit the signal through communication networks. The main purpose of compression is to reduce the data size while retaining the characteristic and useful features of the signal. One option is the turning point compression algorithm reported in [25], which halves the effective sampling rate while saving the turning points, that is, the peaks and valleys of the EOG signal. Figure 8.4 shows the original, filtered and compressed EOG signal obtained with this technique.
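The turning point idea can be sketched as follows: the signal is processed in pairs of samples, and exactly one sample of each pair is kept, the first one if the slope changes sign there (a peak or valley), otherwise the second. This follows the classic formulation of the algorithm; the function name is hypothetical and the exact variant used in [25] may differ.

```python
def turning_point_compress(x):
    """Keep one sample out of every pair, preferring turning points (peaks/valleys)."""
    if not x:
        return []
    out = [x[0]]
    x0 = x[0]                          # last saved sample
    for i in range(1, len(x) - 1, 2):
        x1, x2 = x[i], x[i + 1]
        s1 = (x1 > x0) - (x1 < x0)     # sign of the slope x0 -> x1
        s2 = (x2 > x1) - (x2 < x1)     # sign of the slope x1 -> x2
        kept = x1 if s1 * s2 < 0 else x2   # x1 is a turning point if the slope flips
        out.append(kept)
        x0 = kept
    return out

print(turning_point_compress([0, 2, 5, 3, 1, 2, 6, 4, 0]))  # [0, 5, 1, 6, 0]
```

Nine samples become five, yet the peaks (5 and 6) and the valley (1) survive, which is why the method preserves the saccade and blink morphology reasonably well.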

8.4 EOG Feature Processing

EOG feature processing consists of selecting how many and which signal features are most relevant for the application. The chosen features should be independent of each other to avoid collecting redundant data. Inspection of EOG signals shows that fixations have a stable slope, whereas saccades and blinks change quickly. Smooth eye movements and other features, such as average speed, range, variance and signal energy, show similar differences: fixations are the slowest eye movements and saccades are the fastest [26]. Processing informative, discriminative and independent features is a key step in preparing an appropriate set of values for a classifier.

8.4.1 Feature Extraction

Feature extraction derives, from the processed signals, a set of independent features that discriminate well between classes. The goal is to find a feature space in which the extracted features are more independent and the classes can be discriminated. Feature extraction techniques fall into the time domain, frequency domain, time-frequency domain and nonlinear domain [27].

8.4.1.1 Time-Domain Features

Time-domain features represent the morphological characteristics of a signal. They are easy to interpret and suitable for real-time applications. The most popular time-domain parameters are compiled in Table 8.3.

- Statistical features: mean, variance, standard deviation, skewness, kurtosis, median and the 25th and 75th percentiles of the signal.
- Hjorth features: the activity, mobility and complexity parameters, which measure, respectively, the variance of the time series, its mean frequency (the square root of the ratio between the variance of the first derivative and the variance of the signal) and the change in frequency.
- Zero-crossing rate: the number of times the signal crosses the baseline. This parameter is very sensitive to additive noise.

Other time-domain EOG features for eye movements are amplitude, latency, deviation, velocity, slope, peak polarity, and duration.
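The three feature families listed above can be computed with NumPy as in the sketch below. The function names are hypothetical, derivatives are approximated by first differences, kurtosis is the non-excess version (3 for a Gaussian), and the baseline for zero crossings is assumed to be 0 after baseline removal.

```python
import numpy as np

def statistical_features(x):
    """Mean, variance, standard deviation, skewness, kurtosis, median and quartiles."""
    x = np.asarray(x, dtype=float)
    mean, std = x.mean(), x.std()
    z = (x - mean) / std
    return {
        "mean": mean, "variance": x.var(), "std": std,
        "skewness": np.mean(z ** 3),
        "kurtosis": np.mean(z ** 4),   # non-excess kurtosis
        "median": np.median(x),
        "p25": np.percentile(x, 25), "p75": np.percentile(x, 75),
    }

def hjorth_parameters(x):
    """Hjorth activity, mobility and complexity of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    dx, ddx = np.diff(x), np.diff(x, n=2)
    activity = x.var()
    mobility = np.sqrt(dx.var() / activity)                    # ~ mean frequency (rad/sample)
    complexity = np.sqrt(ddx.var() / dx.var()) / mobility      # ~ change in frequency
    return activity, mobility, complexity

def zero_crossings(x, baseline=0.0):
    """Number of sign changes of (x - baseline) between consecutive samples."""
    s = np.sign(np.asarray(x, dtype=float) - baseline)
    return int(np.count_nonzero(s[1:] * s[:-1] < 0))
```

For a pure sinusoid, the complexity is close to 1 and the mobility approaches the angular frequency in radians per sample, which is a convenient sanity check.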

8.4.1.2 Frequency Domain Features

The main features in the frequency domain are energy, power ratio, spectral frequency, duration ratio and power spectral density (PSD). The most popular methods for estimating the PSD are autoregressive (AR), moving average (MA) and autoregressive moving average (ARMA) models. In [28], AR modelling was used to extract features from the EOG signal. These are called parametric methods because the spectrum is estimated from a model of the signal, and they are suitable for short signals with low SNR. In non-parametric methods, such as the periodogram and Welch's method, the PSD is calculated directly from the signal samples in each window. In [29], a non-parametric statistical analysis was performed using Welch's method; the resulting features were more discriminative because non-parametric methods are less sensitive to residual noise and motion artefacts than parametric and cumulant-based methods. Non-parametric methods based on the fast Fourier transform are also easy to implement. Another way to extract frequency-domain features is through higher-order spectra, which represent the frequency content of higher-order statistics of the signal.
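Welch's method averages the windowed periodograms of overlapping segments. The sketch below implements it directly with NumPy, assuming a Hann window, 50% overlap and an even segment length, and adds a hypothetical `band_power` helper that could be used to compute a power-ratio feature. `scipy.signal.welch` offers a production implementation with more options.

```python
import numpy as np

def welch_psd(x, fs, nperseg=256):
    """Welch PSD estimate: average of Hann-windowed periodograms of
    50%-overlapping segments. Returns (frequencies, one-sided PSD)."""
    x = np.asarray(x, dtype=float)
    if len(x) < nperseg or nperseg % 2:
        raise ValueError("need len(x) >= nperseg and an even nperseg")
    step = nperseg // 2
    window = np.hanning(nperseg)
    scale = fs * np.sum(window ** 2)          # density scaling
    psd = np.zeros(nperseg // 2 + 1)
    starts = range(0, len(x) - nperseg + 1, step)
    for start in starts:
        seg = x[start:start + nperseg]
        seg = seg - seg.mean()                # remove each segment's DC component
        psd += np.abs(np.fft.rfft(seg * window)) ** 2 / scale
    psd /= len(starts)
    psd[1:-1] *= 2                            # fold negative frequencies (keep DC and Nyquist once)
    return np.fft.rfftfreq(nperseg, d=1 / fs), psd

def band_power(freqs, psd, lo, hi):
    """Approximate power in [lo, hi) Hz by summing PSD bins."""
    mask = (freqs >= lo) & (freqs < hi)
    return np.sum(psd[mask]) * (freqs[1] - freqs[0])
```

A power-ratio feature could then be, for example, `band_power(f, p, 0.5, 5) / band_power(f, p, 0, fs / 2)`; the band limits here are placeholders, not values from the chapter.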

8.4.1.3 Time-Frequency Features

EOG signals are non-stationary, so their analysis benefits from joint time-frequency representations. Three main groups of techniques are available:

- Signal decomposition: the signal is decomposed into a series of basis functions. The most common methods are the short-time Fourier transform (STFT) and the WT [3]. The STFT is simple and well known, but the WT is the most widely used for EOG signals. Within the same period, the continuous wavelet transform yields more separable features and more redundant coefficients than the discrete wavelet transform.
- Energy distribution: the Choi–Williams and Wigner–Ville distributions are traditional non-linear time-frequency methods widely used to analyse non-stationary signals. The Hilbert–Huang transform is a more recent method for obtaining the instantaneous frequency characteristics of nonlinear and non-stationary signals such as EOGs [4].
- Modelling: some works use a Gaussian mixture model (GMM) to estimate the continuous probability density of the signal. The model parameters are estimated with the Expectation-Maximization (EM) algorithm so that the likelihood of the observations is maximised. Figure 8.5 depicts the GMM [8, 26, 30].

GMM structure, in which three parameters must be estimated separately for each Gaussian function: mean vector (μ), covariance matrix (∑) and weight (w). The weighted sum of these probabilities builds the output from the input observations x(t) = {x1, ..., xn}, where xn is the nth observation or feature vector.
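The EM estimation of the weights, means and variances can be sketched for the one-dimensional case, a simplification of the multivariate model of Fig. 8.5 in which each covariance matrix reduces to a single variance. The deterministic percentile-based initialisation, the fixed number of iterations and the function names are assumptions made for illustration.

```python
import numpy as np

def gaussian_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return np.exp(-((x - mean) ** 2) / (2 * var)) / np.sqrt(2 * np.pi * var)

def fit_gmm_1d(x, k=2, iters=200):
    """Fit a 1-D Gaussian mixture by expectation-maximization.
    Returns the weights w, means mu and variances var of the k components."""
    x = np.asarray(x, dtype=float)
    mu = np.percentile(x, np.linspace(10, 90, k))   # spread initial means over the data
    var = np.full(k, x.var())
    w = np.full(k, 1.0 / k)
    for _ in range(iters):
        # E-step: responsibility of each component for each observation, shape (n, k).
        dens = w * gaussian_pdf(x[:, None], mu, var)
        resp = dens / dens.sum(axis=1, keepdims=True)
        # M-step: re-estimate the parameters from the responsibilities.
        nk = resp.sum(axis=0)
        w = nk / len(x)
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk + 1e-6  # avoid zero variance
    return w, mu, var

def gmm_log_likelihood(x, w, mu, var):
    """Log-likelihood of the observations under the fitted mixture."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(np.log((w * gaussian_pdf(x[:, None], mu, var)).sum(axis=1))))
```

For classification, one mixture could be fitted per class and a new sample assigned to the class whose model gives the highest likelihood, which matches the GMM classifier described later in this chapter.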

8.4.1.4 Non-Linear Features

Non-linear methods employed for EOG signal feature extraction fall into two main groups:

- Entropy and complexity-based methods. Complexity methods are used to estimate the nonlinear dynamic parameters of EOG, electroencephalographic (EEG) and electromyographic (EMG) signals. Among them, entropy-based algorithms are robust estimators of signal regularity. Shannon's entropy is the best known; however, when the data used for decision-making cannot be measured accurately, other measures have been proposed, such as Rényi, sample, Tsallis, permutation, multiscale and approximate entropy [31].
- Fractal-based methods. These methods measure the fractal dimension of the irregular EOG waveform to determine its degree of self-similarity. The correlation dimension, the Lyapunov exponent and the Hurst exponent are examples. They first map the signal into phase space and then measure the self-similarity of its trajectory [32].

Both groups of techniques measure the roughness of the signal: the more irregular the signal, the higher its entropy. These techniques are effective at detecting stage transitions, but they do not characterise the signal bandwidth.
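Of the entropy measures listed above, permutation entropy is one of the simplest to implement: it counts how often each ordinal pattern (the ranking of `order` consecutive samples) appears and computes the Shannon entropy of that distribution. The sketch below follows the Bandt–Pompe definition, normalised to [0, 1]; the function name and default parameters are assumptions.

```python
import math
from collections import Counter

def permutation_entropy(x, order=3, delay=1, normalize=True):
    """Permutation entropy: Shannon entropy (bits) of ordinal patterns of length `order`."""
    n = len(x) - (order - 1) * delay
    if n <= 0:
        raise ValueError("signal too short for this order/delay")
    # Each window is replaced by the permutation that sorts its samples.
    patterns = Counter(
        tuple(sorted(range(order), key=lambda j: x[i + j * delay]))
        for i in range(n)
    )
    h = -sum((c / n) * math.log2(c / n) for c in patterns.values())
    return h / math.log2(math.factorial(order)) if normalize else h
```

A monotonic ramp contains a single pattern and yields 0, whereas white noise visits all patterns almost uniformly and yields a value close to 1, consistent with the idea that entropy grows with irregularity.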

8.4.2 Feature Selection

After feature extraction, feature selection techniques are applied to find a discriminative subset of features, reducing the number of features needed to train and feed the subsequent classification models; this helps avoid overfitting and reduces the computational time [33].

Minimum redundancy maximum relevance (mRMR) is a feature selection algorithm based on two criteria: minimum redundancy (the least correlation among the selected features) and maximum relevance (the highest correlation with the class). Redundancy can be computed using Pearson's correlation coefficient for continuous features or mutual information for discrete ones. Relevance can be calculated using the F-statistic for continuous features or mutual information for discrete ones [34].
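For discrete features, both criteria can be expressed with mutual information, and mRMR can be applied greedily: first pick the most relevant feature, then repeatedly add the feature whose relevance minus its mean redundancy with the already selected features is largest. The sketch below implements this difference criterion; the function names and the list-of-columns input format are assumptions.

```python
import math
from collections import Counter

def mutual_information(a, b):
    """Mutual information (bits) between two discrete sequences of equal length."""
    n = len(a)
    pa, pb, pab = Counter(a), Counter(b), Counter(zip(a, b))
    return sum((c / n) * math.log2((c / n) / ((pa[x] / n) * (pb[y] / n)))
               for (x, y), c in pab.items())

def mrmr(features, labels, n_select):
    """Greedy mRMR (relevance minus mean redundancy) over a list of discrete feature columns."""
    relevance = [mutual_information(f, labels) for f in features]
    selected = [max(range(len(features)), key=lambda j: relevance[j])]
    while len(selected) < n_select:
        remaining = [j for j in range(len(features)) if j not in selected]

        def score(j):
            redundancy = sum(mutual_information(features[j], features[s])
                             for s in selected) / len(selected)
            return relevance[j] - redundancy

        selected.append(max(remaining, key=score))
    return selected
```

In a small synthetic case where one feature duplicates another, mRMR skips the duplicate (high relevance but equally high redundancy) in favour of a feature that adds new information about the class.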

Another selection method is clearness-based feature selection (CBFS). CBFS computes the distance between the target sample and the centroid of each class and then checks whether the class of the closest centroid matches the class of the target sample. An efficient classification of EOG signals using this algorithm is reported in [35].
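The CBFS description above can be turned into a per-feature score: for each feature, compute the class centroids, find each sample's nearest centroid and measure how often that centroid belongs to the sample's own class. The sketch below is a simplified, one-feature-at-a-time reading of that description; the original CBFS formulation used in [35] may combine features differently, and the function names are hypothetical.

```python
import numpy as np

def clearness_score(feature, labels):
    """Fraction of samples whose nearest class centroid (on this feature) is their own class."""
    feature, labels = np.asarray(feature, dtype=float), np.asarray(labels)
    classes = np.unique(labels)
    centroids = np.array([feature[labels == c].mean() for c in classes])
    nearest = classes[np.argmin(np.abs(feature[:, None] - centroids[None, :]), axis=1)]
    return float(np.mean(nearest == labels))

def rank_features(X, labels):
    """Rank the columns of X by clearness score, best first."""
    X = np.asarray(X, dtype=float)
    scores = np.array([clearness_score(X[:, j], labels) for j in range(X.shape[1])])
    return np.argsort(-scores), scores
```

Features whose score is near chance level (0.5 for two balanced classes) could be discarded before training the classifier.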

8.4.3 Feature Normalization

Feature normalization can be applied to reduce the effects of inter-individual variability; it is performed separately on the values of each feature. This process prevents extremely high or low values from dominating the results. The procedure for feature normalization in an automatic sleep staging method is reported in [36]. Another example of normalization is shown in [37], where the original EOG signal is processed with a dynamic threshold (comprising a positive and a negative threshold) and transformed into a series of rectangular pulses with amplitudes of −1 or 1.
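A common per-feature normalization is the z-score, which rescales each feature (column) to zero mean and unit variance. The sketch below assumes this particular scheme, which is not necessarily the one used in [36] or [37], and returns the statistics so that the same transformation can be applied to new recordings.

```python
import numpy as np

def zscore_normalize(features, eps=1e-12):
    """Normalize each feature (column) to zero mean and unit variance.
    Returns the normalized matrix plus the per-feature mean and std."""
    X = np.asarray(features, dtype=float)
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    return (X - mean) / (std + eps), mean, std   # eps guards against constant features
```

New data should be normalized with the training statistics, `(X_new - mean) / (std + eps)`, rather than with their own, so that training and test features share the same scale.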

8.5 Classification

The automatic identification of eye movements (classification) is essential for generating accurate commands, especially in real-time applications. Classification techniques based on static or dynamic thresholds do not generalise easily, so methods based on artificial intelligence (AI) are needed. Many conventional machine learning algorithms and, more recently, deep learning methods, enabled by increased computational power, are becoming virtual assistants for clinicians, helping them classify EOG features and improve medical diagnosis. The most widely used classifiers are briefly described below.

The main measures of classification performance are accuracy, precision, sensitivity (also called recall or true positive rate), specificity, the F1 and F2 scores, the false-positive rate, and the Gini coefficient or Matthews correlation coefficient. Confusion matrices are also commonly used to compare the performance of different classification methods and to avoid misleading conclusions when the data are imbalanced.
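Most of these measures follow directly from the four entries of a binary confusion matrix. The sketch below computes them with the standard definitions; the function name and the example counts are illustrative assumptions.

```python
import math

def binary_metrics(tp, fp, tn, fn):
    """Common performance measures from a 2x2 confusion matrix."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # = sensitivity = true positive rate
    specificity = tn / (tn + fp)
    fpr = fp / (fp + tn)               # false positive rate = 1 - specificity
    accuracy = (tp + tn) / (tp + fp + tn + fn)

    def f_beta(beta):
        # beta > 1 weights recall more heavily than precision (F2 uses beta = 2).
        b2 = beta ** 2
        return (1 + b2) * precision * recall / (b2 * precision + recall)

    mcc = (tp * tn - fp * fn) / math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "specificity": specificity, "fpr": fpr,
            "f1": f_beta(1), "f2": f_beta(2), "mcc": mcc}

m = binary_metrics(tp=40, fp=10, tn=45, fn=5)
print(m["accuracy"], m["f1"], m["mcc"])
```

With these hypothetical counts, the accuracy is 0.85, F1 is about 0.842 and the Matthews correlation coefficient is about 0.70. Unlike accuracy, the MCC uses all four cells, which makes it more informative when the classes are imbalanced.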

8.5.1 Machine Learning Techniques

Conventional machine learning includes a wide variety of algorithms, all of which have shown better performance in classifying EOG features than the threshold-based techniques proposed in early EOG-based systems. The main machine learning techniques used to classify EOG features are briefly described below.

The k-nearest neighbours (k-NN) algorithm finds the training observations closest to the one being predicted and assigns it the majority class among them. The main parameter to set is k, the number of neighbours considered; the classes themselves must be known in advance.
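A minimal k-NN classifier only needs a distance function and a majority vote. In the sketch below, the two-dimensional feature vectors (for example, normalised horizontal and vertical EOG amplitudes) and the class names are hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x, k=3):
    """Classify x by majority vote among its k nearest training samples (Euclidean distance)."""
    neighbours = sorted(zip(train_X, train_y), key=lambda p: math.dist(p[0], x))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

# Hypothetical features: (horizontal amplitude, vertical amplitude), normalised.
train_X = [(-1.0, 0.0), (-0.9, 0.1), (-1.1, -0.1),
           (1.0, 0.0), (0.9, -0.1), (1.1, 0.1),
           (0.0, 1.0), (0.1, 0.9), (-0.1, 1.1)]
train_y = ["left"] * 3 + ["right"] * 3 + ["up"] * 3
print(knn_predict(train_X, train_y, (0.8, 0.05)))  # right
```

Because k-NN stores all training samples and compares each new sample with them, its prediction cost grows with the size of the training set.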

Support vector machines (SVMs) are another conventional supervised classifier. They find an optimal hyperplane that separates the classes, and they can use a nonlinear kernel function to map the input data into a space where such a separating hyperplane exists.

Decision trees are non-parametric supervised learning techniques that require little preprocessing and run fast enough to handle real-time tasks. The goal is to create a model that predicts the value of a target variable from several input variables [38].

Random forest (RF) is one of the most accurate algorithms for classifying large datasets. An RF is an ensemble of predictor trees, each of which depends on the values of a random vector; these random vectors are sampled independently and with the same distribution for all trees. Each tree is grown on a bootstrap sample of the training data. Figure 8.6 shows the general structure of an RF. The classification is obtained by letting each tree in the ensemble vote and selecting the most popular class [26]. Automatic sleep-stage scoring from EOG signals is reported in [39].

Linear discriminant analysis (LDA) is a supervised classification method in which two or more groups are known a priori and new observations are assigned to one of them according to their characteristics. Classification is performed by a nearest-centre classifier applied to the LDA outputs: after training, the distance between a point and the centre of each class is computed, and the point is assigned to the nearest class. In [40], an LDA classifier was applied to EOG classification with good training and testing accuracy, showing potential for use by disabled people.

Logistic Regression (LG) optimises a set of weights assigned to each input feature to provide the best classification performance using a training dataset. LG was used in the design of an omnidirectional robot controlled by eye movements because of its efficiency and the low computing resources needed [7].
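The weight optimisation described above can be sketched as batch gradient descent on the cross-entropy (log) loss. The learning rate, number of epochs and function names are assumptions chosen for a small example, not values from [7].

```python
import numpy as np

def _add_bias(X):
    X = np.asarray(X, dtype=float)
    return np.hstack([np.ones((len(X), 1)), X])     # column of ones for the bias weight

def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the mean cross-entropy loss; y holds 0/1 labels."""
    Xb, y = _add_bias(X), np.asarray(y, dtype=float)
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))           # predicted probabilities
        w -= lr * Xb.T @ (p - y) / len(y)           # gradient of the mean log loss
    return w

def predict_proba(w, X):
    """Probability of class 1 for each row of X."""
    return 1.0 / (1.0 + np.exp(-_add_bias(X) @ w))
```

Once trained, prediction is a single dot product and a sigmoid per sample, which explains the low computing resources mentioned above.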

When used as a classifier, a GMM learns the input features of each class; a new sample is evaluated with the scheme of Fig. 8.5 and assigned the label that yields the highest probability. The GMM provides a framework for modelling unknown distribution shapes. The key issue is choosing how many components to include in the model.

A hidden Markov model (HMM) is a statistical model whose states are hidden (not directly observed) and whose parameters must be estimated from data. Training is done using maximum likelihood. An HMM is described by the transition probabilities between states and the emission probabilities that relate each state to the observations, from which the most likely state sequence for a given observation sequence can be inferred. This classifier can tolerate time warping of the input data. References [8, 41] report a wheelchair navigation system based on an HMM for people with restricted mobility. Figure 8.7 shows an example of state transitions in an HMM.
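Inferring the most likely state sequence from the observations is usually done with the Viterbi algorithm. The sketch below implements it in log space; the two-state "fixation/saccade" model and its probabilities are hypothetical values chosen only to make the example concrete.

```python
import numpy as np

def viterbi(obs, start_p, trans_p, emit_p):
    """Most likely hidden-state sequence for a sequence of observation indices.
    start_p[i], trans_p[i][j] and emit_p[i][o] are probabilities; log space avoids underflow."""
    log_start, log_trans, log_emit = np.log(start_p), np.log(trans_p), np.log(emit_p)
    n_states, T = len(start_p), len(obs)
    score = np.empty((T, n_states))
    back = np.zeros((T, n_states), dtype=int)
    score[0] = log_start + log_emit[:, obs[0]]
    for t in range(1, T):
        cand = score[t - 1][:, None] + log_trans   # cand[i, j]: best path in state i, then i -> j
        back[t] = np.argmax(cand, axis=0)
        score[t] = cand[back[t], np.arange(n_states)] + log_emit[:, obs[t]]
    path = [int(np.argmax(score[-1]))]
    for t in range(T - 1, 0, -1):                  # follow the back-pointers
        path.append(int(back[t, path[-1]]))
    return path[::-1]

# Hypothetical model: state 0 = fixation, 1 = saccade;
# observations are quantised eye velocities: 0 = low, 1 = high.
start = [0.8, 0.2]
trans = [[0.9, 0.1], [0.3, 0.7]]
emit = [[0.9, 0.1], [0.2, 0.8]]
print(viterbi([0, 0, 1, 1, 0, 0], start, trans, emit))  # [0, 0, 1, 1, 0, 0]
```

The transition probabilities act as a smoothness prior: a single high-velocity sample is less likely to be labelled as a saccade than a run of them.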

Clustering is an unsupervised technique that groups unlabelled samples. The goal is to create groups of similar samples using criteria such as information, statistical measures and distance metrics. Because each eye movement has specific features, first grouping the signals into two categories, centre gazes and non-centre gazes, may be a useful step in some classification schemes. Figure 8.8 shows this concept applied to a hierarchical clustering procedure for classifying eye movements.

A first division of clustering algorithms can be made according to how objects are assigned to clusters. In hard clustering, each object belongs to a single cluster, so the clusters form a partition of the dataset. In soft (or fuzzy) clustering, objects belong to clusters with a degree of membership (e.g., fuzzy C-means). Clustering algorithms can also be classified by how the objects are related: flat, hierarchical, graph-based and density-based clustering. Examples of these algorithms can be found in [26, 42].

Even EOG signals from the same eye movement can differ in amplitude and timing, which produces recognition errors. The dynamic time warping (DTW) algorithm addresses this problem by finding the optimal alignment between two sequences through dynamic programming: the problem is broken recursively into subproblems whose results are stored and reused when needed. For large datasets, however, training the model is very time-consuming [8, 43].
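The dynamic programming recursion of DTW fills a cumulative-cost table in which each cell adds the local distance to the cheapest of its three predecessors (match, insertion, deletion). The sketch below uses the absolute difference as local cost; the template and the test sequences are hypothetical.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D sequences (absolute-difference cost)."""
    n, m = len(a), len(b)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            # Best of: diagonal match, repeat a sample of b, repeat a sample of a.
            D[i][j] = cost + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    return D[n][m]

template = [0, 0, 1, 2, 1, 0]              # hypothetical stored eye-movement template
slower = [0, 0, 0, 1, 1, 2, 2, 1, 0]       # same shape, executed more slowly
opposite = [0, 0, -1, -2, -1, 0]           # opposite movement
print(dtw_distance(template, slower))      # 0.0
```

A new movement could then be assigned to the stored template with the smallest DTW distance. The O(nm) table per comparison explains the cost on large datasets noted above.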

Artificial neural networks (ANNs) have numerous applications in medical pattern classification, where they help interpret EOG signals and diagnose problems more accurately. An ANN comprises many highly interconnected processing elements called neurons, organised into layers whose structure and function are loosely inspired by the human brain. A basic ANN has three types of layers, namely input, hidden and output, as depicted in Fig. 8.9 [44].

8.5.2 Deep Learning Techniques

Deep learning (DL) mimics the way the human brain passes information from one neuron to another and handles large amounts of data. DL can provide deeper insight than conventional machine learning techniques because it can learn multiple levels of representation from raw data, including through unsupervised learning, and can model more complex relationships. The core of DL is the ANN with multiple nonlinear hidden layers. DL requires considerable computing power and large datasets; DL studies generally use more recordings to develop and evaluate their methods than studies based on traditional machine learning classifiers.

A convolutional neural network (CNN) is a class of ANN composed of convolutional layers that extract features, pooling layers that reduce the size of the analysed data, fully connected layers, and a loss function that computes the error between the actual and desired network outputs. Backpropagation is applied through the cascade of convolutional and pooling layers to update the convolutional filter weights. Figure 8.10 shows an example of a deep neural network for eye movement classification.

Because its filters share weights, a CNN requires fewer parameters than a conventional fully connected neural network, and CNNs can also be applied to regression problems. For example, a CNN was used in [45] to eliminate eye-blink artefacts, and in [46] the authors used a CNN for drowsiness detection based on EOG signals.

A recurrent neural network (RNN) is an ANN developed under the premise that humans always consider the past when making decisions. An RNN automatically stores past information through a loop in its architecture. Exploiting this memory, [47] used an RNN for real-time eye-blink suppression in EEG recordings.

The time distributed convolutional neural network (TDConvNet) is a DL model comprising two main stages: a one-dimensional CNN epoch encoder, to extract the time-invariant features from raw EOG signals, and another one-dimensional CNN stage, to infer labels from the sequence of epochs. TDConvNet was applied to classify the sleep stages of polysomnography signals [48].

Unsupervised pre-training algorithms initialise the network parameters so that the subsequent optimisation trains faster. In [49, 50], two pre-training methods were presented for EOG signals: the restricted Boltzmann machine (RBM) and the deep belief network (DBN). Figure 8.11a shows an example of an RBM. The connections between its input and output layers allow the network to be trained much faster. RBMs can be stacked so that the output layer of one RBM becomes the input layer of the next, as shown in Fig. 8.11b.

Long short-term memory (LSTM) deep networks are designed to capture long-term dependencies in the data. As a classifier, an LSTM uses three kinds of gates (input, forget and output) to control the information flowing through the network. The most important information can be preserved across data segments by combining a forward and a backward LSTM. This architecture, named bidirectional long short-term memory (Bi-LSTM), presents each training sequence forwards and backwards to two separate LSTM layers connected to the same output layer.

Because long data sequences can cause the vanishing gradient problem, gated recurrent unit (GRU) networks can be used to learn representations of the EOG signal. This recurrent layer not only improves memory capacity but also eases training, since information is retained within the unit as the sequence flows through the gates [51].

8.6 Decision-Making

The major difficulty in classification and subsequent decision-making is the variability of the data, hence the importance of large datasets that support the learning process and the creation of generalisable models. Classification methods must be adapted to each user based on previous actions and their results. Eye movements and blinks (voluntary and involuntary) are considered commands in EOG-based systems and are used as inputs to medical diagnostic systems and operation interfaces, such as serious games, home automation, and communication and mobility solutions.

Some of the EOG-based communication solutions also include text-to-speech modules. These multilingual speech synthesisers are one of the recent forms of AI. They convert the stream of digital text selected by eye movements and blinks into natural-sounding speech. EOG can also be found in the design of industry-oriented robotic arms. In these systems, decision-making based on the results of previous commands improves real-time usability to provide the user with a reasonable degree of control.

8.6.1 Intelligent Decision Support Systems

Intelligent decision support systems (IDSS) use AI tools to improve the decision-making related to complex problems that involve a large amount of data in real-time. ANNs, Fuzzy logic, Expert systems, Case-based reasoning (CBR), and Intelligent agents (IA) can be considered IDSS. This section is a brief introduction to some of these techniques related to bio-signals for medical applications.

Fuzzy logic is a very promising technology for medical decision-making applications. Its main challenge is obtaining the required fuzzy data, especially when such data must be produced from patients. Fuzzy logic is usually used to classify EOG and EEG signals, but it needs calibration parameters obtained beforehand during user training. An example of a fuzzy logic-based controller for wheelchair motion control using EOG is given in [52].

An expert system tries to solve problems that normally require human expertise by embedding human knowledge in a computer. A typical application of expert systems is filtering ocular artefacts hidden in EEG signals without affecting clinically important EEG information [53]. Another application, multichannel sleep data analysis, is reported in [54].

ANNs have the advantage that a trained network executes quickly, which is key for signal processing applications. However, ANN training is iterative and can suffer from convergence problems. ANNs have many practical applications; for example, they can be used to diagnose a subnormal eye through the analysis of EOG signals [44].

To solve a new problem, CBR compares that problem with earlier solved problems and adjusts their well-known solutions instead of starting from scratch [55]. A CBR problem requires recovering relevant cases from the memory of cases, choosing the best cases, developing a solution, assessing the solution, and storing in the memory the newly solved case. A CBR system was used to classify ocular artefacts in EEG signals [56].

Alexa and Siri are examples of IA. They gather data from the internet without the help of the user. In the field of biomedical application, IA is used to diagnose, treat, and manage problems associated with dementia and Alzheimer’s [57]. In these cases, the agents may be any methodologies with decision-making abilities such as patient analysts, signal processing, neural network models and Bayesian systems. The information of each agent can be shared with other agents.

In the last ten years, Multi-Agent System (MAS) has gained interest due to the advances in AI, wireless sensor networks and sensors. In MAS, a larger problem can be divided into smaller subproblems. A task can be delegated among different agents, and each agent produces the output according to its task. Then, all outputs are joined and converted into the final answer to the complete problem. The interaction between agents increases the speed of problem resolution. MAS is a research topic in complex medical applications [58].

8.6.2 Learning Approaches

Learning approaches try to overcome the problems related to training data of the classification models in many EOG studies. Another important challenge is to improve the automatic classification.

Two of these learning approaches are transfer learning (TL) and deep transfer learning. These powerful methods reuse previously trained models as a starting point. This avoids the need for a large training dataset and saves time without reducing the accuracy of the assessment. Figure 8.12 outlines TL from the source domain to the target domain [59]. The base model is trained on data from the source domain and then fitted to data from the target domain, which completes the transfer of knowledge and makes EOG-based systems more reliable and accurate. With transfer learning, classification performance improves significantly in all learning cases compared with temporal models trained only on the target domain.
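As a minimal sketch of the source-to-target idea, the Python code below pretrains a logistic regression model on a large synthetic source domain and then fine-tunes it, starting from the source weights, on only ten samples from a shifted target domain. The synthetic domains, the gain and offset used to simulate a new user, and all hyperparameters are assumptions; deep transfer learning applies the same principle to the layers of a deep network rather than to a linear model.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, x):
    # w holds one weight per feature followed by the bias term.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + w[-1])


def train(data, w=None, epochs=200, lr=0.1):
    """Logistic regression trained by per-sample (stochastic) gradient descent.
    If w is given, training starts from those weights (the transfer step)."""
    w = list(w) if w is not None else [0.0] * (len(data[0][0]) + 1)
    for _ in range(epochs):
        for x, y in data:
            err = predict_proba(w, x) - y
            for i, xi in enumerate(x):
                w[i] -= lr * err * xi
            w[-1] -= lr * err
    return w


def accuracy(w, data):
    return sum((predict_proba(w, x) >= 0.5) == (y == 1) for x, y in data) / len(data)


rng = random.Random(0)


def make_domain(n, gain, offset):
    """Hypothetical synthetic domain: label 1 if x0 + x1 > 0; a new user is
    simulated by applying a different gain and offset to the features."""
    data = []
    for _ in range(n):
        x0, x1 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append(((gain * x0 + offset, gain * x1 + offset), int(x0 + x1 > 0)))
    return data


source = make_domain(500, gain=1.0, offset=0.0)
target_train = make_domain(10, gain=0.8, offset=0.2)
target_test = make_domain(200, gain=0.8, offset=0.2)

base = train(source)                            # base model: source domain only
tuned = train(target_train, w=base, epochs=20)  # fine-tuned on 10 target samples
print(accuracy(base, target_test), accuracy(tuned, target_test))
```

Comparing the two printed accuracies shows how much the base model degrades on the new domain and how much a short fine-tuning recovers; the exact figures depend on the random seed and are not given here.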

Because EOG-based interfaces have a limited ability to adapt to the characteristics of each user, a reinforcement learning (RL) algorithm can be included to adapt the interface to the user. The RL algorithm adapts the user’s commands to the responses of EOG-controlled interfaces. In computer serious games, it is usually implemented as a moderator of the intensity of user commands based on experience [60].

A model-free Q-learning method was proposed in [61] to plan robot motion from the user’s EOG signals, taking into account the obstacles surrounding the robotic platform. Figure 8.13 depicts this navigation approach in simplified form.
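The model-free character of Q-learning can be illustrated with a small tabular sketch in Python: the agent never learns a model of the environment, only a table of Q(s, a) values updated with the rule Q(s, a) ← Q(s, a) + α[r + γ max Q(s′, a′) - Q(s, a)]. The 4 × 4 grid, the obstacle layout, the reward values, and the mapping of four EOG commands to moves are hypothetical and are not the setup used in [61].

```python
import random

ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}  # EOG commands


def step(state, action, size, obstacles, goal):
    """Hypothetical environment: walls and obstacles block the move."""
    nxt = (state[0] + ACTIONS[action][0], state[1] + ACTIONS[action][1])
    if not (0 <= nxt[0] < size and 0 <= nxt[1] < size) or nxt in obstacles:
        return state, -10.0, False  # collision: stay in place, large penalty
    if nxt == goal:
        return nxt, 10.0, True
    return nxt, -1.0, False  # small cost per move favours short paths


def q_learning(size, obstacles, start, goal, episodes=2000,
               alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    rng = random.Random(seed)
    q = {}  # model-free: only Q(s, a) is stored, no transition model
    for _ in range(episodes):
        s = start
        for _ in range(100):
            if rng.random() < eps:  # epsilon-greedy exploration
                a = rng.choice(list(ACTIONS))
            else:
                a = max(ACTIONS, key=lambda act: q.get((s, act), 0.0))
            s2, reward, done = step(s, a, size, obstacles, goal)
            best_next = 0.0 if done else max(q.get((s2, act), 0.0) for act in ACTIONS)
            old = q.get((s, a), 0.0)
            q[(s, a)] = old + alpha * (reward + gamma * best_next - old)
            s = s2
            if done:
                break
    return q


def greedy_path(q, size, obstacles, start, goal, max_steps=50):
    path, s = [start], start
    while s != goal and len(path) <= max_steps:
        a = max(ACTIONS, key=lambda act: q.get((s, act), 0.0))
        s, _, _ = step(s, a, size, obstacles, goal)
        path.append(s)
    return path


obstacles = {(1, 1), (1, 2), (2, 1)}
q = q_learning(4, obstacles, start=(0, 0), goal=(3, 3))
print(greedy_path(q, 4, obstacles, (0, 0), (3, 3)))
```

The large collision penalty is what teaches the agent to steer around obstacles, while the small per-move cost favours short routes.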

Learning vector quantization (LVQ) is a supervised classification algorithm frequently used to recognise eye movements in EOG-based systems. LVQ is a prototype-based artificial neural network: the designer chooses how many prototype (codebook) vectors to use, and the algorithm learns what those prototypes should look like. EOG features serve as the training data used to build the recognition network. Although it is not particularly powerful compared with other methods, LVQ is simple and intuitive for recognising eye movements [62].
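The LVQ1 variant can be sketched in plain Python as follows. Each prototype carries a class label; for every training sample, the nearest prototype is pulled towards the sample if their labels agree and pushed away otherwise, with a linearly decaying learning rate. The synthetic two-dimensional features (horizontal and vertical deflection for four gaze directions), the noise level, the number of prototypes per class, and the hyperparameters are assumptions, not the features used in [62].

```python
import math
import random


def train_lvq1(data, n_per_class=1, epochs=30, lr0=0.3, seed=0):
    """LVQ1 training. data: list of (feature_vector, label) pairs.
    n_per_class sets how many prototypes (codebook vectors) each class gets."""
    rng = random.Random(seed)
    protos = []
    for lab in sorted({y for _, y in data}):
        members = [x for x, y in data if y == lab]
        # Initialise prototypes with randomly chosen samples of the class.
        protos += [(list(rng.choice(members)), lab) for _ in range(n_per_class)]
    total, t = epochs * len(data), 0
    for _ in range(epochs):
        order = list(data)
        rng.shuffle(order)
        for x, y in order:
            lr = lr0 * (1 - t / total)  # linearly decaying learning rate
            t += 1
            w, lab = min(protos, key=lambda p: math.dist(p[0], x))  # best-matching prototype
            sign = 1.0 if lab == y else -1.0  # attract if labels agree, repel otherwise
            for i in range(len(w)):
                w[i] += sign * lr * (x[i] - w[i])  # w is updated in place
    return protos


def classify(protos, x):
    return min(protos, key=lambda p: math.dist(p[0], x))[1]


noise = random.Random(1)
centres = {"left": (-1.0, 0.0), "right": (1.0, 0.0), "up": (0.0, 1.0), "down": (0.0, -1.0)}
data = [((cx + noise.gauss(0, 0.15), cy + noise.gauss(0, 0.15)), lab)
        for lab, (cx, cy) in centres.items() for _ in range(25)]
protos = train_lvq1(data, n_per_class=2)
print(classify(protos, (0.9, 0.1)))  # right
```

Classification only needs the small set of learned prototypes, not the training data, which keeps the trained model compact.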

8.7 Discussion

Considering the articles published in the last decade, we can say that the wavelet transform is the most useful technique for EOG denoising and data preprocessing, because this mathematical tool is well suited to transient and high-frequency phenomena. For EOG feature selection, CBFS reduces redundant features and increases the precision and accuracy of neural network-based classifiers. EOG compression improves signal transmission by representing the original signal with less data. As a result, the required memory is reduced, which is important for long polysomnographic recordings.
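To make the wavelet denoising idea concrete, the Python sketch below applies a multilevel Haar discrete wavelet transform, soft-thresholds the detail coefficients with the universal threshold σ√(2 ln N), where σ is estimated from the median absolute value of the finest detail coefficients divided by 0.6745, and then reconstructs the signal. The Haar wavelet, the four decomposition levels, and the synthetic saccade-like step are assumptions; the step is aligned with the dyadic grid, which is a favourable case for Haar. Practical EOG work often uses other wavelet families and a dedicated library such as PyWavelets.

```python
import math
import random
import statistics


def haar_forward(x):
    s = math.sqrt(2.0)
    approx = [(x[i] + x[i + 1]) / s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / s for i in range(0, len(x), 2)]
    return approx, detail


def haar_inverse(approx, detail):
    s = math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out += [(a + d) / s, (a - d) / s]
    return out


def soft(v, thr):
    # Soft thresholding: shrink towards zero by thr, zero out small values.
    return math.copysign(max(abs(v) - thr, 0.0), v)


def wavelet_denoise(x, levels=4):
    """Haar DWT denoising; len(x) must be divisible by 2**levels."""
    approx, details = list(x), []
    for _ in range(levels):
        approx, d = haar_forward(approx)
        details.append(d)  # details[0] holds the finest scale
    sigma = statistics.median(abs(v) for v in details[0]) / 0.6745  # noise estimate
    thr = sigma * math.sqrt(2.0 * math.log(len(x)))  # universal threshold
    for d in reversed(details):  # reconstruct from the coarsest scale upwards
        approx = haar_inverse(approx, [soft(v, thr) for v in d])
    return approx


def rmse(a, b):
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)) / len(a))


rng = random.Random(0)
clean = [0.0 if i < 128 else 100.0 for i in range(256)]  # hypothetical saccade-like step
noisy = [v + rng.gauss(0, 10) for v in clean]            # additive white noise
denoised = wavelet_denoise(noisy)
print(rmse(noisy, clean), rmse(denoised, clean))
```

Because the step edge is carried by the approximation coefficients, thresholding the details removes most of the noise while keeping the edge sharp, which is the property that matters for preserving saccade slopes and amplitudes.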

EOG signal classification can be done automatically using any conventional classification algorithm. K-NN is a typical supervised ML classification algorithm that offers good performance and simplicity. K-NN stores the complete training dataset and compares each new sample with every stored point, which is why it requires more memory than other classifiers. Therefore, K-NN is recommended for small datasets with few features.
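The memory cost of K-NN follows directly from how it works, as this minimal Python sketch shows: fit only stores the training samples, and every prediction computes the distance from the query to all of them before taking a majority vote among the k nearest. The two-dimensional features (normalised horizontal and vertical amplitudes) and their labels are hypothetical.

```python
import math
from collections import Counter


class KNN:
    """Minimal k-nearest-neighbour classifier. Training only stores every
    sample, so memory grows linearly with the size of the dataset."""

    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        self.X, self.y = [list(x) for x in X], list(y)
        return self

    def predict_one(self, x):
        # Compare the query with every stored point: O(n) distances per prediction.
        nearest = sorted(range(len(self.X)), key=lambda i: math.dist(self.X[i], x))[: self.k]
        return Counter(self.y[i] for i in nearest).most_common(1)[0][0]

    def predict(self, X):
        return [self.predict_one(x) for x in X]


X = [(0.9, 0.0), (1.1, 0.1), (-1.0, 0.1), (-0.9, -0.1),
     (0.0, 1.0), (0.1, 0.9), (0.0, -1.0), (-0.1, -0.9)]
y = ["right", "right", "left", "left", "up", "up", "down", "down"]
model = KNN(k=3).fit(X, y)
print(model.predict([(0.8, 0.05), (0.05, -0.8)]))  # ['right', 'down']
```

Both the stored data and the cost of each prediction grow with the number of training samples and features, which is why the method suits small datasets with few features.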

CNN is a very efficient classification method in EOG signal processing, especially for EOG-based HCIs. For large datasets, CNN yields models with significantly higher correlation coefficients than the traditional K-NN classification algorithm. An RL layer can be used to assist the user by selecting proper actions while learning from previous behaviour, for example, to prevent collisions of a robotic platform or to improve wheelchair navigation. Deep transfer learning makes it possible to build sleep stage classification models from a relatively small amount of data.

The development of sophisticated AI-based models, together with the availability of larger datasets, will allow a better interpretation of EOG signals. This will lead to the design of more efficient systems with an improved decision-making stage.

8.8 Conclusions

This chapter introduced and discussed the processing of electrooculographic signals, which is a challenging problem due to the wide variability in the morphology and features of electrooculograms across the population. The key aspect is to find the technique with the best overall performance in each of the basic signal processing stages: denoising, feature extraction, classification, and decision-making. Some applications require processing long electrooculograms, lasting several hours, to monitor the health status of patients. Such scenarios also call for powerful AI-based techniques for classification and modelling, as well as signal compression for efficient decision-making and storage.

References

E. Kowler, Eye movements: The past 25 years. Vis. Res. 51(13), 1457–1483 (2011). https://doi.org/10.1016/j.visres.2010.12.014

A. López, F.J. Ferrero, D. Yangüela, C. Álvarez, O. Postolache, Development of a computer writing system based on EOG. Sensors 17, 1505 (2017). https://doi.org/10.3390/s17071505

A. López, M. Fernández, H. Rodríguez, F.J. Ferrero, O. Postolache, Development of an EOG-based system to control a computer serious game. Measurement 127, 481–488 (2018). https://doi.org/10.1016/j.measurement.2018.06.017

G. Teng, Y. He, H. Zhao, D. Liu, J. Xiao, S. Rankumar, Design and development of human computer interface using electrooculogram with deep learning. Artif. Intell. Med. 102, 101765 (2021). https://doi.org/10.1016/j.artmed.2019.101765

Q. Huang, S. He, Q. Wang, Z. Gu, N. Peng, K. Li, Y. Zhang, M. Shao, Y. Li, An EOG-based human–machine interface for wheelchair control. IEEE Trans. Biomed. Eng. 65(9), 2023–2032 (2017). https://doi.org/10.1109/tbme.2017.2732479

R. Zhang, S. He, X. Yang, X. Wang, K. Li, Q. Huang, Z. Yu, X. Zhang, D. Tang, Y. Li, An EOG-based human-machine interface to control a smart home environment for patients with severe spinal cord injuries. IEEE Trans. Biomed. Eng. 66(1), 89–100 (2018). https://doi.org/10.1109/tbme.2018.2834555

F.D. Pérez-Reynoso, L. Rodríguez-Guerrero, J.C. Salgado-Ramírez, R. Ortega-Palacios, Human–machine interface: multiclass classification by machine learning on 1D EOG Signals for the Control of an Omnidirectional Robot. Sensors 21(17), 5882 (2021). https://doi.org/10.3390/s21175882

F. Fang, T. Shinozaki, Electrooculography-based continuous eye-writing recognition system for efficient assistive communication systems. PLOS One 13(2), e0192684 (2018). https://doi.org/10.1371/journal.pone.0192684

D. Kumar, K. Priyadharsini, Analysis of CNN model based classification of diabetic retinopathy diagnosis, in Proceedings of International Conference on Secure Cyber Computing and Communication (ICSCCC), (2021)

A. López, F.J. Ferrero, O. Postolache, An affordable method for evaluation of ataxic disorders based on electrooculographic signals. Sensors 19, 3756 (2019). https://doi.org/10.3390/s19173756

R.A. Becerra-García, R.V. García-Bermúdez, G. Joya-Caparrós, A. Fernández-Higuera, C. Velázquez-Rodríguez, M. Velázquez-Mariño, F.R. Cuevas-Beltrán, F. García-Lagos, R. Rodríguez-Labrada, Data mining process for identification of non-spontaneous saccadic movements in clinical electrooculography. Neurocomputing 250, 28–36 (2017). https://doi.org/10.1016/j.neucom.2016.10.077

R.J. Leigh, D.S. Zee, The neurology of eye movements. Encyclopedia of biomedical engineering, 5th edn. (Oxford, New York USA, 2015)

PowerLab 26 Series. ADInstruments. [Online]. Available: https://www.adinstruments.com/products/powerlab/35-and-26-series

BlueGain Cambridge Research Systems. BlueGain EOG Biosignal Amplifier. [Online]. Available: http://www.crsltd.com/tools-for-vision-science/eye-tracking/bluegain-eog-biosignal-amplifier/

ActiveTwo AD-box. Biosemi. [Online]. Available: https://www.biosemi.com/ad-box_activetwo.htm

g.USBAMP Research. G.Tec. [Online]. Available: https://www.gtec.at/product/gusbamp-research/

C. Velázquez-Rodríguez, R.V. García-Bermudez, F. Rojas-Ruiz, R. Becerra-García, Automatic glissade determination through a mathematical model in electrooculographic records, in Proceedings of Lecture Notes in Computer Science, (2017)

A.L. Goldberger, L.A. Amaral, L. Glass, J.M. Hausdorff, P.C. Ivanov, R.G. Mark, J.E. Mietus, G.B. Moody, C.K. Peng, H.E. Stanley, PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 101(23), e215–e220 (2000). https://doi.org/10.1161/01.cir.101.23.e215

G.Q. Zhang, L. Cui, R. Mueller, S. Tao, M. Kim, M. Rueschman, S. Mariani, D. Mobley, S. Redline, The national sleep research resource: towards a sleep data commons. J. Am. Med. Inform. Assoc. 25(10), 1351–1358 (2018). https://doi.org/10.1093/jamia/ocy064

C. O’Reilly, N. Gosselin, J. Carrier, T. Nielsen, Montreal archive of sleep studies: An open-access resource for instrument benchmarking & exploratory research. J. Sleep Res. 23(6), 628–635 (2014). https://doi.org/10.1111/jsr.12169

A. Sterr, J.K. Ebajemito, K.B. Mikkelsen, M.A. Bonmati-Carrion, N. Santhi, C. Della Monica, L. Grainger, G. Atzori, V. Revell, S. Debener, D.J. Dijk, M. De Vos, Sleep EEG derived from behind-the-ear electrodes (ceegrid) compared to standard polysomnography: A proof of concept study. Front. Hum. Neurosci. 12(452), 1–9 (2018). https://doi.org/10.3389/fnhum.2018.00452

M. Rezaei, H. Mohammadi, H. Khazaie, EEG/EOG/EMG data from a cross sectional study on psychophysiological insomnia and normal sleep subjects. Mendeley Data V4 (2017). https://doi.org/10.17632/3hx58k232n.4

N. Barbara, T.A. Camilleri, K.P. Camilleri, A comparison of EOG baseline drift mitigation techniques. Biomed. Sign. Proces. Cont. 57, 101738 (2020). https://doi.org/10.1016/j.bspc.2019.101738

A. Banerjee, A. Konar, D.N. Tibarewala, R. Janarthanan, Detecting eye movement direction from stimulated electro-oculogram by Intelligent Algorithms, in Proceedings of International Conference on Computing Communication & Networking Technologies (ICCCNT), (2012)

A. López, F.J. Ferrero, J.R. Villar, EOG signal compression using turning point algorithm, in Proceedings of IEEE International Instrumentation and Measurement Technology Conference (I2MTC), (2021)

R. Boostani, F. Karimzadeh, M. Nami, A comparative review on sleep stage classification methods in patients and healthy individuals. Comput. Methods Prog. Biomed. 140, 77–91 (2017). https://doi.org/10.1016/j.cmpb.2016.12.004

K. Mehta, A Review on different methods of EOG signal analysis. Internat. J. Innovat. Res. Sci. Eng. Technol. 5(2), 1862–1865 (2016). https://doi.org/10.15680/IJIRSET.2016.0502128

A. Banerjee, S. Datta, M. Pal, A. Konar, D.N. Tibarewala, R. Janarthanan, Classifying electrooculogram to detect directional eye movements, in Proceedings of International Conference on Computational Intelligence: Modeling Techniques and Applications (CIMTA), (2013)

S. D’Souza, N. Sriraam, Statistical based analysis of electrooculogram (EOG) signals: A Pilot Study. Internat. J. Biomed. Clin. Eng. 2(1), 12–25 (2013). https://doi.org/10.4018/ijbce.2013010102

A. López, F.J. Ferrero, S.M. Qaisar, O. Postolache, Gaussian mixture model of saccadic eye movements, in Proceedings of Medical Measurements and Applications (MeMeA), (2022)

H. Wang, C. Wu, T. Li, Y. He, P. Chen, A. Bezerianos, Driving fatigue classification based on fusion entropy analysis combining EOG and EEG. IEEE Access 7, 61975–61986 (2019). https://doi.org/10.1109/ACCESS.2019.2915533

G.R.M. Babu, S. Gopinath, E. Arunkumar, An intelligent EOG system using fractal features and neural networks. Test Eng. Manag. 83, 9920–9925 (2020)

S. Mala, K. Latha, Feature selection in categorizing activities by eye movements using electrooculograph signals, in Proceedings of Science Engineering and Management Research (ICSEMR), (2014)

C. Ding, H. Peng, Minimum redundancy feature selection from microarray gene expression data. J. Bioinforma. Comput. Biol. 3(2), 185–205 (2005). https://doi.org/10.1142/S0219720005001004

S. Mala, K. Latha, Efficient Classification of EOG using CBFS feature selection algorithm, in Proceedings of International Conference on Emerging Research in Computing Information, Communication and Applications (ERCICA), (2013)

C.-E. Kuo, G.-T. Chen, Automatic Sleep staging based on a hybrid stacked LSTM Neural Network Verification Using Large-Scale Dataset. IEEE Access 8, 111837–111849 (2020). https://doi.org/10.1109/ACCESS.2020.3002548

Z. Lv, X.-P. Wu, M. Li, D.-X. Zhang, Development of a human computer interface system using EOG. Health 1(1), 39–46 (2009). https://doi.org/10.4236/health.2009.11008

P. Babita Syal, P. Kumari, Comparative analysis of KNN, SVM, DT for EOG based human computer interface, in Proceedings of International Conference on Current Trends in Computer, Electrical, Electronics and Communication (CTCEEC), (2017)

M.M. Rahman, M.I.H. Bhuiyan, A.R. Hassan, Sleep stage classification using single-channel EOG. Comput. Biol. Med. 102, 211–220 (2018). https://doi.org/10.17605/OSF.IO/SCGJX

F. Anis, M. Mustafa, N. Sulaiman, M. Rashid, B. Sama, M. Islam, M. Hasan, N. Ali, The classification of Electrooculogram (EOG) through the application of Linear discriminant analysis (LDA) of selected time-domain signals, in Proceedings of Innovative Manufacturing, Mechatronics & Materials Forum (IM3F), (2020)

F. Aziz, H. Arof, N. Mokhtar, M. Mubin, HMM based automated wheelchair navigation using EOG traces in EEG. J. Neural Eng. 11(5), 1–11 (2014). https://doi.org/10.1088/1741-2560/11/5/056018

N. Flad, T. Fomina, H. Buelthoff, L. Chuang, Unsupervised clustering of EOG as a viable substitute for optical eye tracking, in Proceedings of Eye Tracking and Visualization (ETVIS), (2015)

Z. Lv, X.P. Wu, M. Li, D. Zhang, A novel eye movement detection algorithm for EOG driven human computer interface. Pattern Recogn. Lett. 31(9), 1041–1047 (2010). https://doi.org/10.1016/j.patrec.2009.12.017

L. Jia, N. Alias, Comparison of ANN and SVM for classification of eye movements in EOG signals. J. Phys. Conf. Ser. 971, 1–11 (2018). https://doi.org/10.1088/1742-6596/971/1/012012

M. Jurczak, M. Kolodziej, A. Majkowski, Implementation of a convolutional neural network for eye blink artifacts removal from the electroencephalography signal. Front. Neurosci. 16, 782367 (2022). https://doi.org/10.3389/fnins.2022.782367

X. Zhu, W.L. Zheng, B.L. Lu, X. Chen, S. Chen, C. Wang, EOG-based drowsiness detection using convolutional neural networks, in Proceedings of International Joint Conference on Neural Networks (IJCNN), (2014)

A. Erfanian, B. Mahmoudi, Real-time ocular artifact suppression using recurrent neural network for electro-encephalogram based brain-computer interface. Med. Biol. Eng. Comput. 43, 296–305 (2005). https://doi.org/10.1007/BF02345969

M. Dutt, M. Goodwin, C.W. Omlin, Automatic sleep stage identification with time distributed convolutional neural network, in Proceedings of International Joint Conference on Neural Networks (IJCNN), (2021)

B. Xia, Q. Li, J. Jia, J. Wang, U. Chaudhary, A. Ramos-Murguialday, N. Birbaumer, Electrooculogram based sleep stage classification using deep belief network, in Proceedings of International Joint Conference on Neural Networks (IJCNN), (2017)

P. Kawde, G.K. Verma, Deep belief network based affect recognition from physiological signals, in Proceedings of Uttar Pradesh Section International Conference on Electrical, Computer and Electronics (UPCON), (2017)

I. Niroshana, X. Zhu, Y. Chen, W. Chen, Sleep stage classification based on EEG, EOG, and CNN-GRU deep learning model, in Proceedings of IEEE International Conference on Awareness Science and Technology (iCAST), (2019)

N.M.M. Noor, S. Ahmad, Implementation of Fuzzy logic controller for wheelchair motion control based on EOG data. Appl. Mech. Mater. 661, 183–189 (2014). https://doi.org/10.4028/www.scientific.net/amm.661.183

M.T. Hellyar, E.C. Ifeachor, D.J. Mapps, E.M. Allen, N.R. Hudson, Expert system approach to electroencephalogram signal processing. Knowl.-Based Syst. 8(4), 164–175 (1995). https://doi.org/10.1016/0950-7051(95)96213-B

T.G. Chang, J.R. Smith, J.C. Principe, An expert system for multichannel sleep EEG/EOG signal analysis. ISA Trans. 28(1), 45–51 (1989). https://doi.org/10.1016/0019-0578(89)90056-6

I. Watson, F. Marir, Case-based reasoning: A review (Cambridge University, 2009)

S. Barua, S. Begum, M.U. Ahmed, P. Funk, Classification of ocular artifacts in EEG signals using hierarchical clustering and case-based reasoning, in Proceedings of International Conference on Case-Based Reasoning (ICCBR), (2014)

H.B. Abdessalem, A. Byrns, C. Frasson, Optimizing Alzheimer’s disease therapy using a neural intelligent agent-based platform. Inter. J. Biosens. Bioelectron. 11(2), 70–96 (2021). https://doi.org/10.4236/ijis.2021.112006

T.P. Filgueiras, P.B. Filho, Intelligent agents in biomedical engineering: a systematic review. Inter. J. Biosens. Bioelectron. 6(5), 123–128 (2020). https://doi.org/10.15406/ijbsbe.2020.06.00200

H. Phan, O.Y. Chén, P. Koch, Z. Lu, I. McLoughlin, A. Mertins, M. De Vos, Towards more accurate automatic sleep staging via deep transfer learning. IEEE Trans. Biomed. Eng. 68(6), 1787–1798 (2021). https://doi.org/10.1109/TBME.2020.3020381

J. Perdiz, L. Garrote, G. Pires, U.J. Nunes, A Reinforcement learning assisted eye-driven computer game employing a decision tree-based approach and CNN classification. IEEE Access 9, 46011–46021 (2021). https://doi.org/10.1109/ACCESS.2021.3068055

L. Garrote, J. Perdiz, G. Pires, U. Nunes, Reinforcement learning motion planning for an EOG-centered robot assisted navigation in a virtual environment, in Proceedings of IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), (2019)

P. Zhang, M. Ito, S.-I. Ito, M. Fukumi, Implementation of EOG mouse using Learning vector quantization and EOG-feature based methods, in Proceedings of Conference on Systems, Process & Control (ICSPC), (2013)


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

López, A., Ferrero, F. (2023). Biomedical Signal Processing and Artificial Intelligence in EOG Signals. In: Qaisar, S.M., Nisar, H., Subasi, A. (eds) Advances in Non-Invasive Biomedical Signal Sensing and Processing with Machine Learning. Springer, Cham. https://doi.org/10.1007/978-3-031-23239-8_8

DOI: https://doi.org/10.1007/978-3-031-23239-8_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-23238-1

Online ISBN: 978-3-031-23239-8

eBook Packages: Computer Science, Computer Science (R0)