Abstract

Scalar-on-image regression aims to investigate changes in a scalar response of interest based on high-dimensional imaging data. We propose a novel Bayesian nonparametric scalar-on-image regression model that utilises the spatial coordinates of the voxels to group voxels with similar effects on the response to have a common coefficient. We employ the Potts-Gibbs random partition model as the prior for the random partition in which the partition process is spatially dependent, thereby encouraging groups representing spatially contiguous regions. In addition, Bayesian shrinkage priors are utilised to identify the covariates and regions that are most relevant for the prediction. The proposed model is illustrated using simulated data sets.

Keywords

- Bayesian nonparametric

- Gibbs-type priors

- Potts model

- Clustering

- Generalised Swendsen-Wang

- High-dimensional imaging data

1 Introduction

Through advances in data acquisition, vast amounts of high-dimensional imaging data are collected to study phenomena in many fields. Such data are common in biomedical studies to understand a disease or condition of interest [2, 5, 39, 44], and in other fields such as psychology [3, 42], social sciences [7, 15, 17, 38], economics [12, 26, 27], climate sciences [30, 31], environmental sciences [4, 11, 22] and more. While extracting features from the images based on predefined regions of interest favours interpretation and eases computational and statistical issues, changes may occur in only part of a region or span multiple structures. In order to capture the complex spatial pattern of changes and improve accuracy and understanding of the underlying phenomenon, sophisticated approaches are required that utilize the entire high-dimensional imaging data. However, the massive dimension of the images, which is often in the millions, combined with the relatively small sample size, which at best is usually in the hundreds, poses serious challenges.

In the statistical literature, this is framed as a scalar-on-image regression (SIR) problem [10, 14, 16, 19]. SIR belongs to the “large p, small n” paradigm; thus, many SIR models utilise shrinkage methods that additionally incorporate the spatial information in the image [10, 14, 16, 18, 19, 24, 37, 40, 46]. In the SIR problem, the covariates represent the image value at a single pixel/voxel, i.e. a very tiny region, and the effect on the response is most often weak, unreliable and difficult to interpret. Moreover, neighbouring pixels/voxels are highly correlated, making standard regression methods, even with shrinkage, problematic due to multicollinearity.

To overcome these difficulties, we develop a novel Bayesian nonparametric (BNP) SIR model that extracts interpretable and reliable features from the images by grouping voxels with similar effects on the response to have a common coefficient. Specifically, we employ the Potts-Gibbs model [21] as the prior of the random image partition to encourage spatially dependent clustering. In this case, features represent regions that are automatically defined to be the most discriminative. This not only improves the signal and eases interpretability, but also reduces the computational burden by drastically decreasing the image dimension and addressing the multicollinearity problem. Moreover, it allows sharp discontinuities in the coefficient image across regions, which may be relevant in medical applications to capture irregularities [46].

In this direction, [19] proposed the Ising-DP SIR model, which combines an Ising prior to incorporate the spatial information in the sparsity structure with a Dirichlet Process (DP) prior to group coefficients. Still, the spatial information is only incorporated in the sparsity structure and not in the BNP clustering model, which could result in regions that are dispersed throughout the image. Instead, we propose to incorporate the spatial information in the random partition model, encouraging spatially contiguous regions. Further advantages of the nonparametric model include a data-driven number of clusters, interpretable parameters, and efficient computations. Moreover, we combine this with heavy-tailed shrinkage priors [41] to identify relevant covariates and regions.

The remainder of this article is organized as follows. Section 2 outlines the development of the SIR model based on the Potts-Gibbs models. Section 3 derives the MCMC algorithm for posterior inference using the generalized Swendsen-Wang (GSW) [47] algorithm for efficient split-merge moves that take advantage of the spatial structure. Section 4 illustrates the methods through simulation studies. Section 5 concludes with a summary and future work.

2 Model Specification

We introduce the statistical models that form the basis of the proposed Potts-Gibbs SIR model: SIR, random image partition model and shrinkage prior.

2.1 Scalar-on-Image Regression

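SIR is a linear statistical method used to study the relationship between a scalar outcome and two- or three-dimensional predictor images under a single regression model [10, 14, 16, 19]. For each data point, \(i = 1, \ldots ,n\), we have

$$ y_i = {\textbf {w}}_i^T \boldsymbol{\mu } + {\textbf {x}}_i^T \boldsymbol{\beta } + \epsilon _i, \quad \epsilon _i \sim \text {N}(0, \sigma ^2), \tag{1} $$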

where \(y_i\) is a scalar continuous outcome measure, \({\textbf {w}}_i = (w_{i1}, \ldots ,w_{iq})^T \in \mathbb {R}^q\) is a q-dimensional vector of covariates, and \({\textbf {x}}_i= (x_{i1}, \ldots ,x_{ip})^T \in \mathbb {R}^p\) is a p-dimensional image predictor. Each \(x_{ij}\) indicates the value of the image at a single pixel with spatial location \({\textbf {s}}_j = (s_{j1}, s_{j2})^T \in \mathbb {R}^2 \) for \(j = 1, \ldots ,p\). We define \(\boldsymbol{\mu } = (\mu _1, \ldots ,\mu _q)^T \in \mathbb {R}^q\) as a q-dimensional fixed effects vector and \(\boldsymbol{\beta } = \left( \beta ({\textbf {s}}_1), \ldots ,\beta ({\textbf {s}}_p)\right) ^T\) (with \(\beta _j := \beta ({\textbf {s}}_j)\)) as the spatially varying coefficient image defined on the same lattice as \({\textbf {x}}_i\). We model the high-dimensional \(\boldsymbol{\beta }\) by spatially clustering the pixels into M regions and assuming common coefficients \(\beta ^*_1, \ldots , \beta ^*_M\) within each cluster, i.e. \(\beta _j = \beta _m^*\) given the cluster label \(z_j =m\). Thus, the prior on the coefficient image is decomposed into two parts: the random image partition model for spatially clustering the pixels and a shrinkage prior for the cluster-specific coefficients \(\boldsymbol{\beta }^* = \left( \beta ^*_1, \ldots , \beta ^*_M\right) ^T\). The SIR model in (1) can be extended to other types of responses through the generalized linear model (GLM) framework [23].

2.2 Random Image Partition Model

The image predictors are observed on a spatially structured coordinate system. Exchangeability is therefore no longer an appropriate assumption, as the images contain covariate information that we wish to leverage to improve model performance in this high-dimensional setting. To do so, we combine BNP random partition models, which avoid the need to prespecify the number of clusters, allowing it to be determined by and grow with the data, with a Potts-like spatial smoothness component [36]. Spatial random partition models in this direction are a growing research area, including Markov random field (MRF) with the product partition model (PPM) [32], with DP [29, 47], with Pitman-Yor process (PY) [21] and with mixture of finite mixtures (MFM) [13, 48]. Precisely, within the BNP framework, we focus on the class of Gibbs-type random partitions [1, 9, 20, 35], motivated by their compromise between tractable predictive rules and richness of the predictive structure, including important cases, such as the DP [6], PY [33, 34], and MFM [25]. The Potts-Gibbs models induce a distribution on the partition \(\pi _p = \{ C_1, \ldots , C_{M} \}\) of p pixels into M nonempty, mutually exclusive, and exhaustive subsets \(C_1, \ldots , C_{M}\) such that \(\cup _{C \in \pi _p} C = \{1, \ldots ,p\}\). The model can be summarised as:

where \(z_j \in \{1, \ldots , M\}\), \(j \sim k\) means that j and k are neighbors, and \(\boldsymbol{1}_{z_j=z_k}\) equals 1 if j and k are in the same cluster and 0 otherwise. In the following, we assume the spatial locations lie on a rectangular lattice with first-order neighbors and a common coupling parameter \(\upsilon \) for all neighbor pairs; a higher value of \(\upsilon \) encourages more spatial smoothness in the partition. We use the general notation \(\phi \) to denote the parameters of the Gibbs-type partition models, and focus our study on three cases: 1) DP with concentration parameter \(\alpha > 0\); 2) PY with discount parameter \(\delta \in [0,1)\) and concentration parameter \(\alpha >-\delta \); and 3) MFM with parameter \(\gamma >0\) (larger values encouraging more equally sized clusters) and a distribution \(P_L(\cdot | \lambda )\) with parameter \(\lambda \) related to the prior on the number of clusters. The set \(\{ V_p(M) : p \ge 1, 1 \le M \le p\}\) denotes the non-negative weights, which solve the backward recurrence relation \(V_p(M) = (p-\delta M) V_{p+1}(M) + V_{p+1}(M+1)\) with \(V_1(1) = 1\). Table 1 describes the \(V_p(M)\) and \(W_m(\phi )\) for DP, PY and MFM models.
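The neighbourhood structure and the spatial smoothness term can be illustrated with a minimal Python sketch. It assumes that the smoothness term enters the log prior as \(\upsilon \sum _{j \sim k} \boldsymbol{1}_{z_j=z_k}\), as in a standard Potts model; the function names `lattice_neighbor_pairs` and `potts_log_weight` and the toy \(3 \times 3\) label images are hypothetical and serve only as illustration.

```python
def lattice_neighbor_pairs(n_rows, n_cols):
    """First-order (4-neighbour) pairs (j, k) with j < k on a rectangular lattice.

    Pixels are indexed row by row: j = row * n_cols + col.
    """
    pairs = []
    for row in range(n_rows):
        for col in range(n_cols):
            j = row * n_cols + col
            if col + 1 < n_cols:          # neighbour to the right
                pairs.append((j, j + 1))
            if row + 1 < n_rows:          # neighbour below
                pairs.append((j, j + n_cols))
    return pairs


def potts_log_weight(labels, pairs, coupling):
    """coupling * (number of neighbour pairs sharing a cluster label)."""
    return coupling * sum(1 for j, k in pairs if labels[j] == labels[k])


pairs = lattice_neighbor_pairs(3, 3)
striped = [0, 0, 0, 1, 1, 1, 2, 2, 2]      # three contiguous rows
scattered = [0, 1, 2, 1, 2, 0, 2, 0, 1]    # same cluster sizes, no equal neighbours

print(len(pairs))                                          # 12 neighbour pairs
print(potts_log_weight(striped, pairs, coupling=1.0))      # 6.0
print(potts_log_weight(scattered, pairs, coupling=1.0))    # 0.0
```

Both partitions have identical cluster sizes, so a non-spatial Gibbs-type prior would not distinguish them; only the smoothness term favours the contiguous one, and increasingly so as \(\upsilon \) grows.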

2.3 Shrinkage Prior

To identify relevant regions, we use heavy-tailed priors for the unique values \((\beta _1^*, \ldots , \beta _M^*)\) of \(\left( \beta ({\textbf {s}}_1), \ldots , \beta ({\textbf {s}}_p) \right) \). Specifically, a t-shrinkage prior is used, motivated by its computational efficiency and nearly optimal contraction rate and selection consistency [41]:

$$ \beta _m^* \mid \sigma \sim t_{\nu }(s \sigma ), \quad m = 1, \ldots , M, \tag{2} $$

where \( t_{\nu }(s \sigma )\) denotes the t-distribution with \(\nu \) degrees of freedom and scale parameter \(s \sigma \). For posterior inference, the t-distribution (2) is rewritten as a hierarchical inverse-gamma scaled Gaussian mixture,

$$\begin{aligned} \begin{aligned} \beta _m^* \mid \eta _m^*, \sigma ^2 \sim&\text {N} ( 0, \eta _m^* \sigma ^2),\\ \eta _m^* \sim&\text {IG} ( a_\eta , b_\eta ), \end{aligned} \end{aligned}$$

where \(a_\eta \) and \(b_\eta \) are the shape and scale parameters, respectively, of the mixing distribution for each \(\eta _m^*\), with \(\nu = 2 a_\eta \) and \(s = \sqrt{b_\eta /a_\eta }\).
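The correspondence \(\nu = 2 a_\eta \), \(s = \sqrt{b_\eta /a_\eta }\) can be checked by integrating out the mixing variable. The short derivation below is a sketch that assumes the mixture takes the standard form \(\beta _m^* \mid \eta _m^*, \sigma ^2 \sim \text {N}(0, \eta _m^* \sigma ^2)\) and \(\eta _m^* \sim \text {IG}(a_\eta , b_\eta )\), which is consistent with the full conditional of \(\eta _m^*\) given in Section 3:

$$\begin{aligned} \begin{aligned} p(\beta _m^* \mid \sigma ^2)&= \int _0^\infty \frac{1}{\sqrt{2 \pi \eta \sigma ^2}} \exp \left\{ -\frac{(\beta _m^*)^2}{2 \eta \sigma ^2} \right\} \frac{b_\eta ^{a_\eta }}{\Gamma (a_\eta )} \eta ^{-a_\eta -1} \exp \left\{ -\frac{b_\eta }{\eta } \right\} d\eta \\&= \frac{b_\eta ^{a_\eta } \, \Gamma (a_\eta + 1/2)}{\Gamma (a_\eta ) \sqrt{2 \pi \sigma ^2}} \left\{ b_\eta + \frac{(\beta _m^*)^2}{2 \sigma ^2} \right\} ^{-(a_\eta + 1/2)} \\&\propto \left\{ 1 + \frac{(\beta _m^*)^2}{2 a_\eta \, (b_\eta / a_\eta ) \, \sigma ^2} \right\} ^{-(2 a_\eta + 1)/2} . \end{aligned} \end{aligned}$$

The last line is the kernel of a t-distribution, \(\{ 1 + (\beta _m^*)^2 / (\nu c^2) \} ^{-(\nu + 1)/2}\), with \(\nu = 2 a_\eta \) degrees of freedom and scale \(c = \sigma \sqrt{b_\eta / a_\eta } = s \sigma \).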

3 Inference

We aim to infer the posterior distribution of the parameters based on the proposed Potts-Gibbs SIR model:

where \(x_{im}^* = \sum ^p_{j=1} x_{ij} \boldsymbol{1}(j \in C_m) / \sqrt{\mid C_m \mid }\) represents the rescaled total value, e.g. volume, in the mth region of the image, \({\textbf {m}}_\mu = (m_{\mu _1} , \ldots , m_{\mu _q})^T\), \(\Sigma _\mu = \text {diag}(c_{\mu _1}, \ldots , c_{\mu _q})\), and \(\Sigma _{\beta ^*} = \text {diag}(\eta _{1}^*, \ldots , \eta ^*_{M})\). Note that when defining \(x_{im}^*\), we rescale by the square root of the cluster size, which is equivalent to rescaling the variance of \(\beta ^*_m\) by the cluster size, encouraging more shrinkage for larger regions.
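The effect of the rescaling in \(x_{im}^*\) can be made concrete with a small Python sketch. The helper `region_features`, the toy image and the per-pixel coefficients are hypothetical. The sketch checks that a common per-pixel coefficient \(b_m\) in cluster m gives the same linear predictor as the region-level coefficient \(\sqrt{|C_m|}\, b_m\) applied to \(x_{im}^*\); equivalently, a prior variance v on the region-level coefficient corresponds to variance \(v/|C_m|\) on the per-pixel scale.

```python
import math


def region_features(image, labels, n_clusters):
    """Return x*_m = (sum of pixel values in cluster m) / sqrt(|C_m|) and the sizes.

    Assumes every cluster 0..n_clusters-1 is non-empty.
    """
    sizes = [0] * n_clusters
    totals = [0.0] * n_clusters
    for value, m in zip(image, labels):
        sizes[m] += 1
        totals[m] += value
    x_star = [t / math.sqrt(s) for t, s in zip(totals, sizes)]
    return x_star, sizes


image = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]   # one flattened toy image (p = 6)
labels = [0, 0, 0, 0, 1, 1]              # cluster label z_j of each pixel
pixel_coef = [0.5, -2.0]                 # hypothetical common per-pixel coefficients

x_star, sizes = region_features(image, labels, n_clusters=2)

# Region-level coefficients that reproduce the pixel-level linear predictor.
beta_star = [math.sqrt(s) * b for s, b in zip(sizes, pixel_coef)]

pixel_level = sum(x * pixel_coef[m] for x, m in zip(image, labels))
region_level = sum(xs * bs for xs, bs in zip(x_star, beta_star))
print(pixel_level, region_level)   # both equal -17 up to rounding
```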

We develop a Gibbs sampler to simulate from the posterior with a generalized Swendsen-Wang (GSW) algorithm to draw samples from the Potts-Gibbs model. Poor mixing can be seen in single-site Gibbs sampling [8] due to the high correlation between the pixel labels. The SW algorithm [43] addresses this by forming nested clusters of neighbouring pixels, then updating all of the labels within a nested cluster to the same value. The generalisation of the technique for standard Potts models to generalised Potts-partition models is called GSW [47]. Each iteration of the algorithm proceeds through the following steps:

1. Sample the image partition \(\pi _p\) given \(\boldsymbol{\eta }^*\) and the data (with \(\boldsymbol{\beta }^*, \boldsymbol{\mu }, \sigma ^2\) marginalized). GSW is used to simultaneously update nested groups of pixels and hence improve the exploration of the posterior. The algorithm relies on the introduction of auxiliary binary bond variables, where \(r_{jk} = 1\) if pixels j and k are bonded, otherwise 0. The bond variables define a partition of the pixels into nested clusters \(A_1, \ldots , A_O\), where O denotes the number of nested clusters and each \(A_o \subseteq C_m\) for some \(m=1, \ldots , M\). For each neighbor pair \(j \sim k\) for \(1 \le j < k \le p\), we sample the bond variables as follows, \(r_{jk} \sim \text {Ber}\{ 1 - \exp (- \upsilon _{jk} \zeta _{jk} \boldsymbol{1}_{z_j=z_k})\}\), where we define \(\zeta _{jk} = \kappa \exp \{-\tau d(\hat{\beta }_{j}, \hat{\beta }_{k})\}\), with \(\hat{\beta }_{j}\) denoting the estimated coefficient from univariate regression on the jth pixel and \(\kappa , \tau \) denoting the tuning parameters of the GSW sampler. Notice that the algorithm reduces to single-site Gibbs when \(\kappa =0\), and recovers classical SW when \(\kappa =1\) and \(\tau =0\). As we are dealing with non-conjugate priors, we update the cluster assignment by extending Gibbs sampling with the addition of auxiliary parameters, which is widely known as Algorithm 8 [28]. We denote by \(A_o\) the current nested cluster; \(C_1^{-A_o}, \ldots ,C_M^{-A_o}\) the clusters without nested cluster \(A_o\); \(M^{-A_o}\) the number of distinct clusters excluding \(A_o\); and h the number of temporary auxiliary variables. Each nested cluster \(A_o\) is assigned to an existing cluster \(m=1, \ldots ,M^{-A_o}\) or a new cluster \(m = M^{-A_o}+1, \ldots ,M^{-A_o}+h\) with the following probabilities:

$$\begin{aligned} \begin{aligned}&\text {pr}(A_o \in C_m^{-A_o} \mid \cdots ) \\&\propto {\left\{ \begin{array}{ll} \frac{\Gamma (|C_{m}^{-A_o}| +|A_o| - \delta )}{\Gamma (|C_{m}^{-A_o}|-\delta )} \text {pr}\left( {\textbf {y}} \mid \pi _p^{A_o \rightarrow m} , \boldsymbol{\eta ^*}\right) \\ \prod \nolimits _{ \{ (j,k) \mid j \in A_o, k \in C_m^{-A_o}, r_{jk}=0 \} } \exp \left\{ \upsilon _{jk} (1-\zeta _{jk}) \right\} , &{} \text {for } C_m^{-A_o} \in \pi _p^{-A_o},\\ \\ \frac{1}{h}\frac{V_{p}(M^{-A_o}+1)}{V_{p}(M^{-A_o})} \frac{\Gamma (|A_o| - \delta )}{\Gamma (1-\delta )} \text {pr}\left( {\textbf {y}} \mid \pi _p^{A_o \rightarrow M+1} , \boldsymbol{\eta ^*} \right) , &{} \text {for new } C_m^{-A_o}; \end{array}\right. } \end{aligned} \end{aligned}$$

where \(\text {pr}\left( {\textbf {y}} \mid \pi _p^{A_o \rightarrow m} , \boldsymbol{\eta ^*} \right) \) and \(\text {pr}\left( {\textbf {y}} \mid \pi _p^{A_o \rightarrow M+1} , \boldsymbol{\eta ^*} \right) \) denote the marginal likelihood of the data obtained by moving \(A_o\) from its current cluster to an existing cluster or to a newly created cluster, respectively. Before updating the cluster assignments, we sample the nested clusters and compute the volume of each nested cluster for all images, with computational cost \(\mathcal {O}( np)\). When updating the cluster assignments, the marginal likelihood dominates the computational cost, as it involves inversion and determinants of \((M+q)\times (M+q)\) matrices and updating the sufficient statistics for every nested cluster and every outer cluster allocation, i.e. the cost is \(\mathcal {O}([[M+q]^3 + n[M+q]] OM )\).

2. Sample \(\boldsymbol{\beta }^*, \boldsymbol{\mu }, \sigma ^2\) jointly given the partition \(\pi _p\), \(\boldsymbol{\eta }^*\) and the data. Notationally, we reformulate \(\tilde{{\textbf {x}}}_i = ( {\textbf {w}}_i^T, {\textbf {x}}^{*\, T}_i )^T\) and \(\tilde{\boldsymbol{\beta }}= (\boldsymbol{\mu }^T, \boldsymbol{\beta }^{* \, T} )^T\). We define \(\tilde{{\textbf {X}}}\) to be the matrix with rows equal to \(\tilde{{\textbf {x}}}_i^T\). The corresponding full conditionals for \(\tilde{\boldsymbol{\beta }}\) and \(\sigma ^2\) are

$$\begin{aligned} \begin{aligned} \sigma ^2 \mid \cdots \sim&\text {IG} ( \hat{a}_{\sigma }, \hat{b}_{\sigma }),\\ \tilde{\boldsymbol{\beta }}\mid \sigma ^2, \cdots \sim&\text {N} ( \hat{{\textbf {m}}}_{\tilde{\beta }}, \sigma ^2\hat{\Sigma }_{\tilde{\beta }}), \end{aligned} \end{aligned}$$

where \(\hat{\Sigma }_{\tilde{\beta }}= ( \Sigma _{\tilde{\beta }}^{-1} +\tilde{{\textbf {X}}}^T \tilde{{\textbf {X}}}) ^{-1}\), \(\hat{{\textbf {m}}}_{\tilde{\beta }}= \hat{\Sigma }_{\tilde{\beta }}(\Sigma _{\tilde{\beta }}^{-1} {\textbf {m}}_{\tilde{\beta }}+ \tilde{{\textbf {X}}}^T {\textbf {y}})\), and \(\text {IG}(\hat{a}_{\sigma }, \hat{b}_{\sigma })\) denotes the inverse-gamma distribution with updated shape \(\hat{a}_{\sigma }= a_{\sigma }+ n/2\) and scale \(\hat{b}_{\sigma }= b_{\sigma }+ [ {\textbf {m}}_{\tilde{\beta }}^T\Sigma _{\tilde{\beta }}^{-1}{\textbf {m}}_{\tilde{\beta }}+{\textbf {y}}^T{\textbf {y}}- \hat{{\textbf {m}}}_{\tilde{\beta }}^T\hat{\Sigma }_{\tilde{\beta }}^{-1}\hat{{\textbf {m}}}_{\tilde{\beta }}]/2\).

3. Sample \(\boldsymbol{\eta }^*\) given \(\boldsymbol{\beta }^*\) and \(\sigma ^2\). The corresponding full conditional for each \(\eta _m^*\) is an inverse-gamma distribution with updated shape \(\hat{a}_{\eta }= a_\eta +1/2\) and scale \(\hat{b}_{\eta }= b_\eta + (\beta ^*_m)^2/(2\sigma ^2)\):

$$\begin{aligned} \begin{aligned} \eta _m^* \mid \cdots \sim \text {IG} (\hat{a}_{\eta }, \hat{b}_{\eta }), \quad \text {for } m=1, \ldots , M . \end{aligned} \end{aligned}$$
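The bond-sampling part of step 1 can be sketched in Python as follows. This is a minimal illustration and not the full GSW update: it only draws the bonds \(r_{jk}\) and forms the nested clusters as connected components of the bond graph, without the subsequent reallocation of nested clusters. It assumes a common coupling \(\upsilon _{jk} = \upsilon \) and takes the distance \(d\) to be the absolute difference, which is an assumption since \(d\) is not specified above; the function names and the toy one-dimensional chain of pixels are hypothetical.

```python
import math
import random


def sample_bonds(pairs, labels, beta_hat, coupling, kappa, tau, rng):
    """Draw r_jk ~ Ber{1 - exp(-coupling * zeta_jk * 1[z_j == z_k])}; return bonded pairs."""
    bonds = []
    for j, k in pairs:
        if labels[j] != labels[k]:
            continue  # bond probability is exactly zero across clusters
        # Assumed distance d: absolute difference of the univariate estimates.
        zeta = kappa * math.exp(-tau * abs(beta_hat[j] - beta_hat[k]))
        if rng.random() < 1.0 - math.exp(-coupling * zeta):
            bonds.append((j, k))
    return bonds


def nested_clusters(n_pixels, bonds):
    """Connected components of the bond graph, found with union-find."""
    parent = list(range(n_pixels))

    def find(j):
        while parent[j] != j:
            parent[j] = parent[parent[j]]  # path halving
            j = parent[j]
        return j

    for j, k in bonds:
        parent[find(j)] = find(k)
    groups = {}
    for j in range(n_pixels):
        groups.setdefault(find(j), []).append(j)
    return list(groups.values())


# Toy example: six pixels on a chain, two clusters of three pixels each.
chain = [(j, j + 1) for j in range(5)]
labels = [0, 0, 0, 1, 1, 1]
beta_hat = [0.1, 0.2, 0.1, 2.0, 2.1, 1.9]

bonds = sample_bonds(chain, labels, beta_hat, coupling=1.0, kappa=1.0, tau=0.5,
                     rng=random.Random(1))
print(nested_clusters(len(labels), bonds))
```

With \(\kappa = 0\) no bonds are ever formed, so every pixel is its own nested cluster, in line with the reduction to single-site Gibbs noted in step 1. By construction, bonds only join pixels with the same label, so each nested cluster is a subset of a single outer cluster.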

4 Numerical Studies

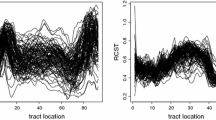

We study through simulations the performance of the proposed model and compare it with Ising-DP [19]. We consider 2D images in this simulation. The \(n=300\) images are simulated on a two-dimensional grid of size \(10 \times 10\), with spatial locations \({\textbf {s}}_j = (s_{j1}, s_{j2}) \in \mathbb {R}^2\) for \(1\le s_{j1}, s_{j2} \le 10\). For simplicity, we include an intercept but do not consider other covariates, \({\textbf {w}}_i\). We concentrate on two simulation scenarios with true \(M=2\) and \(M=5\), as shown in Figs. 1 and 2. For each experiment, we summarise the posterior of the clustering structure of the data sets by minimising the posterior expected Variation of Information (VI) [45].
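As an illustration of this summary, the following Python sketch computes the VI between two partitions (using natural logarithms) and selects, among a set of sampled partitions, the one with the smallest average VI to all samples. Restricting the search to the sampled partitions is a simplification made here for brevity and is not necessarily the search strategy of [45]; the function names and the toy samples are hypothetical.

```python
import math
from collections import Counter


def variation_of_information(z1, z2):
    """VI(z1, z2) = H(z1) + H(z2) - 2 I(z1, z2), with natural logarithms."""
    n = len(z1)
    c1, c2 = Counter(z1), Counter(z2)
    joint = Counter(zip(z1, z2))
    h1 = -sum(c / n * math.log(c / n) for c in c1.values())
    h2 = -sum(c / n * math.log(c / n) for c in c2.values())
    mi = sum(c / n * math.log(c * n / (c1[a] * c2[b]))
             for (a, b), c in joint.items())
    return h1 + h2 - 2.0 * mi


def min_expected_vi(samples):
    """Sampled partition with the smallest Monte Carlo estimate of expected VI."""
    def expected_vi(candidate):
        return sum(variation_of_information(candidate, s) for s in samples) / len(samples)
    return min(samples, key=expected_vi)


# Toy "posterior samples" of cluster labels for four pixels.
samples = [[0, 0, 1, 1], [1, 1, 0, 0], [0, 1, 0, 1]]
print(variation_of_information(samples[0], samples[1]))  # relabelling only, so VI is 0
print(min_expected_vi(samples))
```

Because VI depends only on the partitions and not on the label values, the summary is unaffected by label switching across MCMC iterations.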

The Potts-Gibbs models correctly detect the cluster structure under scenario 1 (Fig. 1). They are also capable of identifying the more complex cluster structure underlying the data for scenario 2 (Fig. 2), with adjusted Rand index (ARI) values of 0.621–0.830 (Table 2). In contrast, Ising-DP fails to recover the cluster structure for scenario 2, as illustrated in Fig. 2. Under the Potts-Gibbs models, most of the resultant clusters are spatially proximal, while under Ising-DP, the clusters are dispersed throughout the image. By taking into consideration spatial dependence in the random partition model via the Potts-Gibbs models, the proposed models produce spatially aware clustering and thus improve the predictions.
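For reference, the ARI between a true and an estimated partition can be computed from the contingency table of the two label vectors. The sketch below implements the standard Hubert-Arabie form of the index; the function name and the toy label vectors are hypothetical and do not correspond to the simulated data.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(z_true, z_est):
    """Adjusted Rand index between two partitions given as label vectors."""
    n = len(z_true)
    joint = Counter(zip(z_true, z_est))
    sum_joint = sum(comb(c, 2) for c in joint.values())
    sum_true = sum(comb(c, 2) for c in Counter(z_true).values())
    sum_est = sum(comb(c, 2) for c in Counter(z_est).values())
    expected = sum_true * sum_est / comb(n, 2)   # expected index under chance
    max_index = 0.5 * (sum_true + sum_est)
    if max_index == expected:                    # degenerate case, e.g. one cluster each
        return 1.0
    return (sum_joint - expected) / (max_index - expected)


print(adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0]))  # 1.0: identical up to relabelling
print(adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1]))  # -0.5: worse than chance
```

An ARI of 1 indicates perfect recovery of the true partition, and values near 0 indicate agreement no better than chance.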

The DP has a concentration parameter \(\alpha \), with larger values encouraging more new clusters, and exhibits a rich-get-richer property that favours allocation to larger clusters. The PY has an additional discount parameter \(\delta \in [0,1)\) that helps to mitigate both the rich-get-richer property and the phase transition of the Potts model. The MFM has a parameter \(\gamma \), with larger values encouraging more equal-sized clusters and helping to avoid the phase transition of the Potts model, as well as additional parameters \(\lambda \), which are related to the prior on the number of clusters.
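The rich-get-richer property and the role of the discount can be seen in the predictive allocation probabilities of the Gibbs-type prior when the spatial term is ignored. The Python sketch below assumes the standard PY weights \(V_p(M) = \prod _{i=1}^{M-1} (\alpha + i \delta ) / \prod _{i=1}^{p-1} (\alpha + i)\), which reduce to the DP when \(\delta = 0\). This closed form is taken from the general literature on Gibbs-type priors and is an assumption here, as Table 1 is not reproduced in this text. The function names and cluster sizes are hypothetical.

```python
def py_weight(p, m, alpha, delta):
    """V_p(M) for the Pitman-Yor process (the DP is the case delta = 0)."""
    num = 1.0
    for i in range(1, m):
        num *= alpha + i * delta
    den = 1.0
    for i in range(1, p):
        den *= alpha + i
    return num / den


def predictive_probs(sizes, alpha, delta):
    """Probabilities that the next item joins each existing cluster, or a new one."""
    p, m = sum(sizes), len(sizes)
    v = py_weight(p, m, alpha, delta)
    existing = [(n_m - delta) * py_weight(p + 1, m, alpha, delta) / v for n_m in sizes]
    new = py_weight(p + 1, m + 1, alpha, delta) / v
    return existing, new


sizes = [8, 1, 1]   # one large and two small clusters (p = 10, M = 3)

# The backward recurrence V_p(M) = (p - delta*M) V_{p+1}(M) + V_{p+1}(M+1)
# guarantees that the predictive probabilities sum to one.
lhs = py_weight(10, 3, 1.0, 0.5)
rhs = (10 - 0.5 * 3) * py_weight(11, 3, 1.0, 0.5) + py_weight(11, 4, 1.0, 0.5)
print(abs(lhs - rhs) < 1e-12)

print(predictive_probs(sizes, alpha=1.0, delta=0.0))   # DP
print(predictive_probs(sizes, alpha=1.0, delta=0.5))   # PY
```

With \(\alpha = 1\), the DP assigns probability 8/11 to the large cluster and 1/11 to a new cluster, whereas the PY with \(\delta = 0.5\) assigns 7.5/11 and 2.5/11, respectively, shifting mass from existing clusters towards new ones.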

5 Conclusion

We have developed novel Bayesian scalar-on-image regression models to extract interpretable features from the image by clustering and leveraging the spatial coordinates of the pixels/voxels. To encourage groups representing spatially contiguous regions, we incorporate the spatial information directly in the prior for the random partition through Potts-Gibbs random partition models. We have shown the potential of Potts-Gibbs models in detecting the correct cluster structure on simulated data sets. In our experiments, the hyperparameters of the Potts-Gibbs model were determined via a simple grid search on selected combinations of hyperparameters. Future work will investigate the influence of the various model parameters and develop guidelines and tools for choosing the hyperparameters. The model will then be applied to real images, e.g. neuroimages. Motivated by examining and identifying brain regions of interest in Alzheimer’s disease, we will use MRI images obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (www.adni-info.org). The proposed SIR model will also be extended to classification problems through the GLM framework.

References

Cerquetti, A.: Generalized Chinese restaurant construction of exchangeable Gibbs partitions and related results. arXiv:0805.3853 (2008)

Craddock, R.C., Holtzheimer, P.E., III., Hu, X.P., Mayberg, H.S.: Disease state prediction from resting state functional connectivity. Magn. Reson. Med. 62(6), 1619–1628 (2009)

Davatzikos, C., Shen, D., Gur, R.C., Wu, X., Liu, D., Fan, Y., Hughett, P., Turetsky, B.I., Gur, R.E.: Whole-brain morphometric study of schizophrenia revealing a spatially complex set of focal abnormalities. Arch. Gen. Psychiatry 62(11), 1218–1227 (2005)

Debois, D., Ongena, M., Cawoy, H., De Pauw, E.: MALDI-FTICR MS imaging as a powerful tool to identify Paenibacillus antibiotics involved in the inhibition of plant pathogens. J. Am. Soc. Mass Spectrom. 24(8), 1202–1213 (2013)

Fan, Y., Resnick, S.M., Wu, X., Davatzikos, C.: Structural and functional biomarkers of prodromal Alzheimer’s disease: a high-dimensional pattern classification study. NeuroImage 41(2), 277–285 (2008)

Ferguson, T.S.: A Bayesian analysis of some nonparametric problems. Ann. Stat. 1, 209–230 (1973)

Ferwerda, B., Schedl, M., Tkalcic, M.: Using instagram picture features to predict users’ personality. In: International Conference on Multimedia Modeling. Springer (2016)

Geman, S., Geman, D.: Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6, 721–741 (1984)

Gnedin, A., Pitman, J.: Exchangeable Gibbs partitions and Stirling triangles. J. Math. Sci. 138(3), 5674–5685 (2006)

Goldsmith, J., Huang, L., Crainiceanu, C.M.: Smooth scalar-on-image regression via spatial Bayesian variable selection. J. Comput. Graph Stat. 23(1), 46–64 (2014)

Gundlach-Graham, A., Burger, M., Allner, S., Schwarz, G., Wang, H.A.O., Gyr, L., Grolimund, D., Hattendorf, B., Günther, D.: High-speed, high-resolution, multielemental laser ablation-inductively coupled plasma-time-of-flight mass spectrometry imaging: Part i. instrumentation and two-dimensional imaging of geological samples. Anal. Chem. 87(16), 8250–8258 (2015)

Henderson, J.V., Storeygard, A., Weil, D.N.: Measuring economic growth from outer space. National Bureau of Economic Research, Cambridge, Mass (2009)

Hu, G., Geng, J., Xue, Y., Sang, H.: Bayesian spatial homogeneity pursuit of functional data: an application to the U.S. income distribution. arXiv:2002.06663 (2020)

Huang, L., Goldsmith, J., Reiss, P.T., Reich, D.S., Crainiceanu, C.M.: Bayesian scalar-on-image regression with application to association between intracranial DTI and cognitive outcomes. NeuroImage 83, 210–223 (2013)

Hum, N.J., Chamberlin, P.E., Hambright, B.L., Portwood, A.C., Schat, A.C., Bevan, J.L.: A picture is worth a thousand words: a content analysis of Facebook profile photographs. Comput. Hum. Behav. 27(5), 1828–1833 (2011)

Kang, J., Reich, B.J., Staicu, A.M.: Scalar-on-image regression via the soft-thresholded Gaussian process. Biometrika 105(1), 165–184 (2018)

Kim, Y., Kim, J.H.: Using computer vision techniques on instagram to link users’ personalities and genders to the features of their photos: an exploratory study. Inf. Process. Manage. 54(6), 1101–1114 (2018)

Lee, K., Cao, X.: Bayesian group selection in logistic regression with application to MRI data analysis. Biometrics 77(2), 391–400 (2021)

Li, F., Zhang, T., Wang, Q., Gonzalez, M.Z., Maresh, E.L., Coan, J.A.: Spatial Bayesian variable selection and grouping for high-dimensional scalar-on-image regression. Ann. Appl. Stat. 9(2), 687–713 (2015)

Lijoi, A., Prünster, I.: Models beyond the Dirichlet process. In: Bayesian Nonparametrics (2010)

Lü, H., Arbel, J., Forbes, F.: Bayesian nonparametric priors for hidden Markov random fields. Stat. Comput. 30(4), 1015–1035 (2020)

Maloof, K.A., Reinders, A.N., Tucker, K.R.: Applications of mass spectrometry imaging in the environmental sciences. Curr. Opin. Environ. Sci. Health. 18, 54–62 (2020)

McCullagh, P., Nelder, J.A.: Generalized linear models. Routledge (2019)

Mehrotra, S., Maity, A.: Simultaneous variable selection, clustering, and smoothing in function-on-scalar regression. Can. J. Stat. (2021)

Miller, J.W., Harrison, M.T.: Mixture models with a prior on the number of components. J. Am. Stat. Assoc. 113(521), 340–356 (2018)

Naik, N., Kominers, S.D., Raskar, R., Glaeser, E.L., Hidalgo, C.A.: Computer vision uncovers predictors of physical urban change. Proc. Natl. Acad. Sci. U.S.A. 114(29), 7571–7576 (2017)

Naik, N., Raskar, R., Hidalgo, C.A.: Cities are physical too: using computer vision to measure the quality and impact of urban appearance. Am. Econ. Rev. 106(5), 128–132 (2016)

Neal, R.M.: Markov chain sampling methods for Dirichlet process mixture models. J. Comput. Graph Stat. 9(2), 249–265 (2000)

Orbanz, P., Buhmann, J.M.: Nonparametric Bayesian image segmentation. Int. J. Comput. Vis. 77(1–3), 25–45 (2007)

O’Neill, S.J.: Image matters: climate change imagery in US, UK and Australian newspapers. Geoforum 49, 10–19 (2013)

O’Neill, S.J., Boykoff, M., Niemeyer, S., Day, S.A.: On the use of imagery for climate change engagement. Glob. Environ. Change 23(2), 413–421 (2013)

Pan, T., Hu, G., Shen, W.: Identifying latent groups in spatial panel data using a Markov random field constrained product partition model. arXiv:2012.10541 (2020)

Perman, M., Pitman, J., Yor, M.: Size-biased sampling of Poisson point processes and excursions. Probab. Theory Relat. Fields 92(1), 21–39 (1992)

Pitman, J.: Some developments of the Blackwell-Macqueen urn scheme. Lect. Notes-Monograph Ser. 30, 245–267 (1996)

Pitman, J.: Lecture Notes in Mathematics. Springer (2006)

Potts, R.B., Domb, C.: Some generalized order-disorder transformations. Math. Proc. Cambridge Philos. Soc. 48(1), 106 (1952). https://doi.org/10.1017/S0305004100027419

Reiss, P., Mennes, M., Petkova, E., Huang, L., Hoptman, M., Biswal, B., Colcombe, S., Zuo, X., Milham, M.: Extracting information from functional connectivity maps via function-on-scalar regression. NeuroImage 56, 140–148 (2011)

Samany, N.N.: Automatic landmark extraction from geo-tagged social media photos using deep neural network. Cities 93, 1–12 (2019)

Shi, J., Lepore, N., Gutman, B., Thompson, P., Baxter, L., Caselli, R., Wang, Y.: Genetic influence of apolipoprotein E4 genotype on hippocampal morphometry: an N = 725 surface-based Alzheimer’s disease neuroimaging initiative study. Hum. Brain Mapp. 35(8), 3903–3918 (2014)

Smith, M., Fahrmeir, L.: Spatial Bayesian variable selection with application to functional magnetic resonance imaging. J. Am. Stat. Assoc. 102(478), 417–431 (2007)

Song, Q., Liang, F.: Nearly optimal Bayesian shrinkage for high dimensional regression. arXiv:1712.08964 (2017)

Sun, D., van Erp, T.G., Thompson, P.M., Bearden, C.E., Daley, M., Kushan, L., Hardt, M.E., Nuechterlein, K.H., Toga, A.W., Cannon, T.D.: Elucidating a magnetic resonance imaging-based neuroanatomic biomarker for psychosis: Classification analysis using probabilistic brain atlas and machine learning algorithms. Biol. Psychiatry (1969) 66(11), 1055–1060 (2009)

Swendsen, R.H., Wang, J.S.: Nonuniversal critical dynamics in Monte Carlo simulations. Phys. Rev. Lett. 58(2), 86–88 (1987)

Van Walderveen, M., Kamphorst, W., Scheltens, P., Van Waesberghe, J., Ravid, R., Valk, J., Polman, C., Barkhof, F.: Histopathologic correlate of hypointense lesions on T1-weighted spin-echo MRI in multiple sclerosis. Neurology 50(5), 1282–1288 (1998)

Wade, S., Ghahramani, Z.: Bayesian cluster analysis: Point estimation and credible balls (with discussion). Bayesian Anal. 13(2), 559–626 (2018)

Wang, X., Zhu, H., Alzheimer’s Disease Neuroimaging Initiative: Generalized scalar-on-image regression models via total variation. J. Am. Stat. Assoc. 112(519), 1156–1168 (2017)

Xu, R.Y.D., Caron, F., Doucet, A.: Bayesian nonparametric image segmentation using a generalized Swendsen-Wang algorithm. arXiv:1602.03048 (2016)

Zhao, P., Yang, H.C., Dey, D.K., Hu, G.: Bayesian spatial homogeneity pursuit regression for count value data. arXiv:2002.06678 (2020)

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Teo, M.S.X., Wade, S. (2022). Bayesian Nonparametric Scalar-on-Image Regression via Potts-Gibbs Random Partition Models. In: Argiento, R., Camerlenghi, F., Paganin, S. (eds) New Frontiers in Bayesian Statistics. BAYSM 2021. Springer Proceedings in Mathematics & Statistics, vol 405. Springer, Cham. https://doi.org/10.1007/978-3-031-16427-9_5

DOI: https://doi.org/10.1007/978-3-031-16427-9_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16426-2

Online ISBN: 978-3-031-16427-9

eBook Packages: Mathematics and Statistics (R0)