Abstract

Predictive maintenance as one of the most prominent data-driven approaches enables companies to not only maximize the reliability of production processes but also to improve their efficiency. This is especially valuable in today’s volatile environment. Nevertheless, companies still struggle to implement digital technologies to track and improve their manufacturing processes, which includes data driven decision support systems. Based on practitioner interviews we identified the lack of guidance as a root cause. Additionally, literature reveals a shortcoming of methods especially suited for the needs of the manufacturing industry. This study contributes to this field by answering the question of how a procedural method can look like to guide practitioners to build decision support systems for effective interventions in manufacturing. Applying a design science research approach, the manuscript presents a seven-step procedural method to build decision support systems in manufacturing. The approach was designed and field tested at the example of a predictive maintenance model for a spring production process. The findings indicate that the incorporation of all stakeholders and the uncovering and use of implicit process knowledge in humans is of utmost importance for success.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The importance of managing manufacturing volatility has become ever more present due to recent events such as the COVID-19 pandemic and the Ukraine war. Despite them being external events, the ability to deal with internal disruptions and disturbances has become more important as well [1, 2]. According to Heil [3] and Peukert et al. [4] externally caused disruptions account for less than 30% of all disruptions. Hence, internal disruptions are important. These are, for example machine failures, quality defects, or personnel failures [4]. To cope with such internal events, two approaches are suitable: reactive or preventive [4]. The first approach describes measures reacting to an already occurred event, while the latter describes measures that will prevent disruptions from occurring [4]. Of particular importance are cyber-physical solutions, which are expected to “significantly contribute to the better transparency and to the more robust functioning of supply chains” [1]. This is due to their ability to quickly and reliably unveil potential events, while at the same time reducing potential negative consequences [1].

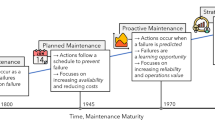

“One of the most prominent data-driven approaches for monitoring industrial systems aiming to maximize reliability and efficiency” is Predictive Maintenance (PdM) [5]. PdM “consists of assets or systems monitoring to predict trends, behavior patterns, and correlations by statistical or machine learning models aimed at developing prognostic methods for fault detection and diagnosis” [5]. Introducing PdM can reduce maintenance costs by up to 30% and at the same time reduce breakdowns by up to 75% [6, 7]. Bunzel [8] even states that 50% of the preventive maintenance costs are a waste. PdM can increase efficiency significantly, as maintenance accounts for a total of 15–60% of the total costs of manufacturing operations [9, 10]. Establishing a ratio: Mobley [10] states that “the U.S. industry spends more than $200 billion each year on maintenance”. As a result, PdM 1) can reduce machine breakdowns, which in turn reduces the possibilities of internal disturbances and 2) has the potential to reduce manufacturing costs [10, 11]. Therefore, PdM is one potential solution to solve the trade-off between cost efficiency and increase in robustness. As a result, the study focuses on this particular measure.

Despite a variety of use cases of data-driven improvements, companies are far from implementing such approaches [5]. Interviews with practitioners from three different companies reveal that one reason is the lack of a “roadmap” or “user manual” that guides an implementation. These statements show that current approaches lack a manufacturing focus and are too complex to apply.

Hence, we want to answer the research question of “how can a procedural method look like to guide practitioners to build decision support systems for effective interventions in manufacturing?” We do so by following the design science research (DSR) approach from Hevner [12]. The practical contributions include both the procedural method and insights about the role of humans. Additionally, scientifical contributions include the necessary steps to implement data-driven improvements in a manufacturing environment, and the importance of implicit human knowledge.

The remainder of this article is structured as follows: After the introduction, related literature on data-driven improvement approaches and PdM is discussed. Subsequently, the research methodology and the artifact development are introduced. Afterwards, the field testing is described. Lastly, we briefly discuss the theoretical and practical implications of this paper, as well as its limitations and possible future research.

2 Research Background

All major countries with a strong manufacturing industry have identified the integration of digital technology into manufacturing processes as important for future competitiveness. In Germany the concept is called Industry 4.0 [13], China initiated China 2025 [14], and in the USA, the National Institute of Standards and Technology has coined the term smart manufacturing [15]. Although the concepts differ, all promote the use of modern IT technologies and data in manufacturing [16], with one of the key technologies being big data analysis [17]. Despite the announcement a decade ago (e.g., 2011 in Germany) [13], companies still struggle to implement the technologies [5].

Big data analytics refers to a set of data with high volume and complex structure that cannot be handled by traditional methods of data processing [17]. It aims to transform data into meaningful and usable information [18]. Four levels of analytic capabilities are apparent: descriptive, diagnostic, predictive, and prescriptive [19,20,21,22]. Descriptive answers the question of “what happened”. At this first level of data analytics, no root-causes analysis is conducted [22]. Diagnostic addresses the question of “why did it happen” and identifies root-causes [22]. Predictive addresses the question of “what will happen” and seeks to predict potential future outcomes based on drivers of observed phenomena [22]. Prescriptive addresses the question of “what should be done” combining results of the previous stages [22]. We aim to develop a procedural method for stage three (prescriptive), since PdM can be of great value for companies (see Sect. 1) and companies so far struggle to make use of data, resulting in stage 4 being out of scope for a significant number of companies.

A literature review reveals many approaches for data mining and exploitation (i.e., [23,24,25,26,27,28,29,30,31,32,33]). One of the most famous is the Cross Industry Standard Process for Data Mining (CRISP-DM) [34]. It is industry-independent consisting of six iterative phases: business understanding, data understanding, data preparation, modeling, evaluation, and deployment [25, 34]. It emphasizes the following aspects as essential to succeed with big data projects: understanding the business problems, a well-planned project map, adoption of innovative visualization techniques, top management involvement, and a data-driven decision making culture [22]. Another approach is the Data Value Chain, a framework to manage data holistically from capture to decision making [31]. The chain provides a framework to examine how to bring disparate data together in an organized fashion and create valuable information that can inform decision making at the enterprise level [31]. The flexible analytics framework (FlexAnalytics) proposes several potential data-analytics placement strategies. It is applicable for data pre-processing, runtime data analysis and visualization, as well as for large scale data transfer [33]. Another well-known method is “Sample, Explore, Modify, Model and Assess” (SEMMA), which focuses to integrate data mining tools [35]. However, the lack of integration into organizational management results in a decreased importance [35].

To assess the suitability of existing methods, we derived literature-based criteria (i.e., [36]) and from practitioners (see Fig. 1). On the one hand, the assessment reveals that all analyzed approaches strive for general validity. Consequently, they fail to address the specific needs of the manufacturing industry. An example for this shortcoming is the CRISP-DM approach: Several attempts to increase the approach were undertaken [35]. One of the most acknowledged (i.e., Analytics Solutions Unified Method for Data Mining/Predictive Analytics (ASUM-DM)), developed by IBM, however, is not open source available [35, 37]. To still categorize ASUM-DM we used the works from Mockenhaupt [30] and Angée et al. [29]. On the other hand, existing solutions are complex, expensive, and too comprehensive for a simple bottom-up approach and not adapted for and tested in manufacturing companies. We found, for example, that process understanding is not considered as starting point of data-based decision making and process optimization. However, the involvement of human operators is considered key (i.e., research stream Human-In-The-Loop [38]) [39]. As a result, a procedural method that is suited for the manufacturing industry describing interactions between humans and non-human agents is lacking [39].

3 Research Approach

We opted for a DSR approach to design a procedural method that provides guidance to build decision support systems for effective interventions in operations. DSR originates from information systems (IS) research [40] and is well established in the field [41,42,43]. It supports the development of a “well-tested, well-understood and well-documented innovative generic design that has been field tested to establish pragmatic validity” [44]. The approach is well suited for operations management research as well [44]. For this particular study it is suited, as we intend to develop a generic method (see [45] for different types of artifacts), which is applicable to data-driven improvements.

Various processes and guidelines to conduct DSR exist [41, 43]. While the framework from Hevner et al. [46] is the most cited by IS researchers [47], the three cycle view from Hevner [12] provides a more detailed and improved version [48], which is why we rely on this version. It is divided into three cycles: relevance cycle, rigor cycle, and design cycle [12].

The DSR process starts with the relevance cycle by providing the “problem space” [46]. In this study, the application context is motivated by problem owners (POs) from three companies (i.e., two industrial companies and one smart factory solutions provider). The authors conducted interviews of about one hour each with a different number of participants (see Table 1). Based on the POs’ statements, and supported by related literature, the research team derived requirements for an artifact: 1) proper guidance to implement PdM, 2) include context aware information [49] also considering the human role, and 3) include a quality and maturity assessment of collected data.

The rigor cycle provides “past knowledge to the research project to ensure its innovation” [12]. The knowledge base for the conceptual development of this study is provided in Sect. 2. Its findings led to a first procedural method, which was evaluated by POs during the design cycle. Eventually, the results of the conducted DSR are added as contribution to the scientific community [12], which closes the rigor cycle.

The design cycle represents the core of DSR, aiming to develop the artifact and rigorously evaluate it, “until a satisfactory design is achieved” [12]. The first procedural method was evaluated and refined with POs during an eight-hour workshop. Afterwards, it was transferred to the field testing (i.e., relevance cycle). It was applied to a specific use-case of PdM by conducting an interview, a series of workshops, and utilizing company data (see Sect. 5). To ensure the completeness of the artifact, the outcome of the application of the procedural method and the method itself were validated with the POs (final interview and meeting). We stimulated a guided discussion a) to validate the procedural method and b) about success factors in each step. Based on the predefined requirements, the artifact was approved (i.e., giving proper guidance, including sufficient context aware information, and assessing the quality and maturity of data). Additionally, the POs highlighted the importance of implicit knowledge of humans in all steps of the method (e.g., step 7: the capability to visually assess the cutting burr quality). Moreover, the solution provider representatives stated that the artifact is suited for other use cases, and that they will incorporate it in their service offerings.

4 Development of the Artifact

The development of the artifact followed two phases: First, we searched the literature for existing methods and models to implement data-driven solutions. Based on this knowledge and the goal to develop a procedural method that is applicable and understandable for practitioners, we derived the first artifact. The design cycle was further enriched by the initial interviews with POs. Second, we evaluated and refined the first artifact during an eight-hour workshop with POs and based on an interview with one PO and manufacturing experts (i.e., operators). The outcome was the definition of the procedural method, which was at the same time the project plan. It comprises seven major steps and incorporates iterative elements (see Fig. 2).

Step 1: Establishing a sound process understanding

The interviews revealed the establishment of a thorough understanding of the underlying process as an essential precondition of all optimization efforts in the manufacturing industry. It ensures the analysis of the original problem without premature conclusions and that the right data is gathered. Consequently, it is important that not only manufacturing experts (e.g., process engineers) develop a thorough process understanding, but all stakeholders. This includes, for example machine operators, data-scientists, IT-experts, and project managers.

Step 2: Identification and classification of available data

The second step of the procedural method intends to identify and classify the data, which is available in the current configuration of the production equipment. The following information have been proven to be relevant: object that is described by data (e.g., torque of axis a); group to which the object belongs (e.g., process quality, material); type of data (e.g., boolean, string, array); availability (e.g., yes, yes under certain circumstances, no); frequency of measurement (e.g., every 1 ms, 5 ms, 1 s); type of measurement device (e.g., sensor, camera system, manual input). The resulting output of step two is a list of available data that can be used for further analysis.

Step 3: Understanding quality-critical factors

During step three, two aspects are relevant: 1) Identifying factors that have the potential to influence the quality of the produced part, and 2) determining if and which data is available within the current configuration of the production equipment to describe manifestations of the identified potential factors. The following example describes this: The incorrect fastening of screws (i.e., overtightened or not tight enough) can result in a substandard product quality. To describe the manifestation of the fastened screw, the torque used to fasten the screws would be an appropriate metric, which can be tracked by sensors. The output of step three is a list with quality-critical factors and its data sources to describe the manifestation.

Step 4: Formulation of hypotheses

The fourth step combines the collected knowledge from the previous steps to formulate admissible hypotheses. A hypothesis needs to clearly state two aspects: 1) the influence of a certain element of a machine on the output quality of a product and 2) the metric to measure deviations from the ideal manifestation.

Step 5: Data collection, verification, and complementation

Step five is composed of collecting the data with which the hypotheses in step four were formulated. Subsequently, it is necessary to validate if the collected data describes the causal relationship from the hypothesis. Additionally, the right frequency to collect the data has to be determined (e.g., every 1 ms). Finally, the causal relationship has to be analyzed for moderating effects (e.g., temperature and vibrations).

Step 6: Visualization & analysis

In order to assess the data and identify trends and anomalies, time series graphs can be applied. IT tools can support the handling of greater data amounts during this step. If no insights are generated, an iteration of step five is necessary. Such an iteration can lead to the collection of different data or frequencies. If an iteration of step five does not result in insights, a reanalysis of the hypotheses (i.e., step 4) has to be undertaken.

Step 7: Prediction

The last step of the procedural method is the definition of thresholds that allow to predict the transition from a desired to an undesired condition of a machine. As a result, the previous steps 1–6 enable the project team to predict the optimal point in time to maintain the machine or change a tool. It is recommended that many different systems settings are tested to validate the prediction model.

5 Field Testing

To field test the procedural method we conducted a case study in the spring industry (i.e., several workshops, relevant machine and process data, and documentations) (see Fig. 3). In consultation with the company, we chose the process of welding and cutting the wire to produce springs. The objective was to identify and develop a data-based use case for more effective quality related operator machine interventions.

First, we established a process understanding for the spring production process and visualized it (step 1). Afterwards, we identified and classified available data to describe the process (step 2). Based on the extensive implicit knowledge of the operators the following data were chosen: lag error of cutting knife, parameter of automatic correction of spring lengths of internal control unit, speed of wire, position of winding finger, motor currency, knife speed, and the corresponding spring quality. For the third step, we identified the length of the spring and the free cut of the wire as quality-critical factors. However, the process didn´t allow to measure the quality of the cut directly. Based on the process understanding, the persons involved concluded that the cut depends on the sharpness of the cutting knife, making it a quality-critical factor. This resulted in the hypothesis that the sharpness of the knife correlates with the quality of the cut and therefore with the quality of the burr of the spring. Nevertheless, the sharpness was not measurable directly, but the cutting system´s drive controller monitors the lag error of the cutting knife. The team assumed that a cut with a sharp knife shows a different time series of the lag error than a cut with a blunt knife (step 4). To test the hypotheses of the causal relationship between lag error of the cutting knife and the quality of the burr, the lag error was measured over a defined time period. We tested the hypotheses with different materials and systems stats to strengthen the traceability and generalizability of the prediction model (step 5). Plotting the lag error of the knife over time revealed that the graphs of the sharp and blunt knife differ (step 6). During step 7 thresholds of the lag error at which the blades of the knifes should be renewed were defined for certain instances. The instances were highly dependent on the input material and thresholds were difficult to derive due to a self-adjusting control system of the machine, preventing clearly distinguishable graphs. The implicit knowledge and visual inspection capability of the operators (i.e., for the burr) was a vital mean.

6 Conclusion

This study yielded a seven-step procedural method to guide practitioners to build decision support systems for effective interventions in operations. We conducted interviews to evaluate the artifact and field tested it in a spring production process.

During our study, the first step establishing a sound process understanding turned out to be of utmost importance. That is due to the involvement of different human stakeholders, which subsequently allows to capture the implicit knowledge of humans. First, the management board needs to back such projects, as it displays the relevance of such projects. This in turn leads to employee commitment. Second, the establishment of a process understanding with all stakeholders results in less fearful employees as they are part of the project team. Constant updates over the duration of the project ensure the commitment. Third, the implicit knowledge of humans is a vital part of the process understanding and needs to be extracted in all steps. Hence, the right execution of the first step, i.e., the correct involvement of all stakeholders is key for all subsequent steps.

This study contributes to science as it is empirically grounded in the manufacturing environment. Hence, addressing the specific needs of the manufacturing industry. Additionally, our work touches on the research of human-centric smart manufacturing, highlighting the requirement of built-in human-in-the-loop control and the knowledge about when and how to involve human operators. The procedural method contributes to practice, providing structured guidance to implement decision support systems.

A limitation of this study is the evaluation and field testing in one company and with one specific process only. However, a smaller sample allows for more in-depth research. In future studies this limitation may be addressed by applying the procedural method to different manufacturing processes in different companies.

References

Monostori, J.: Supply chains robustness: challenges and opportunities. Procedia CIRP 67, 110–115 (2018)

Bernard, G., Luban, K., Hänggi, R.: Resilienz in der Theorie. In: Luban, K., Hänggi, R. (eds.) Erfolgreiche Unternehmensführung durch Resilienzmanagement. Springer, Heidelberg (2022)

Heil, M.: Entstörung betrieblicher Abläufe (1995)

Peukert, S., Lohmann, J., Haefner, B., Lanza, G.: Towards increasing robustness in global production networks by means of an integrated disruption management. Procedia CIRP 93, 706–711 (2020)

Arena, S., Florian, E., Zennaro, I., Orrù, P.F., Sgarbossa, F.: A novel decision support system for managing predictive maintenance strategies based on machine learning approaches. Saf. Sci. 146, 105529 (2022)

Gao, R., et al.: Cloud-enabled prognosis for manufacturing. CIRP Ann. 64(2), 749–772 (2015)

Matyas, K., Nemeth, T., Kovacs, K., Glawar, R.: A procedural approach for realizing prescriptive maintenance planning in manufacturing industries. CIRP Ann. 66(1), 461–464 (2017)

Bunzel, M.: As much as half of every dollar you spend on preventive maintenance is wasted. IBM, 4 May 2016

Zonta, T., da Costa, C.A., da Rosa Righi, R., de Lima, M.J., da Trindade, E.S., Li, G.P.: Predictive maintenance in the Industry 4.0: a systematic literature review. Comput. Ind. Eng. 150, 106889 (2020)

Mobley, R.K.: An Introduction to Predictive Maintenance. Elsevier, Amsterdam (2002)

Coleman, C., Damodaran, S., Deuel, E.: Predictive maintenance and the smart factory: predictive maintenance connects machines to reliability professionals through the power of the smart factory, Deloitte Consulting LLP (2017)

Hevner, A.R.: A three cycle view of design science research. Scand. J. Inf. Syst. 19(2), 4 (2007)

Steinhoff, C.: Aktueller Begriff Industrie 4.0, Wissenschaftliche Dienste (2016)

Babel, W.: Industrie 4.0, China 2025, IoT. Springer Fachmedien Wiesbaden, Wiesbaden (2021)

Thoben, K.-D., Wiesner, S., Wuest, T.: “Industrie 4.0” and smart manufacturing – a review of research issues and application examples. Int. J. Autom. Technol. 11(1), 4–16 (2017)

Tao, F., Qi, Q., Liu, A., Kusiak, A.: Data-driven smart manufacturing. J. Manuf. Syst. 48, 157–169 (2018)

Kang, H.S., et al.: Smart manufacturing: past research, present findings, and future directions. Int. J. Precis. Eng. Manuf.-Green Technol. 3(1), 111–128 (2016). https://doi.org/10.1007/s40684-016-0015-5

Kletti, J.: MES - Manufacturing Execution System. Springer, Heidelberg (2015)

O’Donovan, P., Leahy, K., Bruton, K., O’Sullivan, D.T.J.: An industrial big data pipeline for data-driven analytics maintenance applications in large-scale smart manufacturing facilities. J. Big Data 2(1), 1–26 (2015). https://doi.org/10.1186/s40537-015-0034-z

Shuradze, G., Wagner, H.-T.: Towards a conceptualization of data analytics capabilities. In: 2016 49th Hawaii International Conference on System Sciences (HICSS), 2016 49th Hawaii International Conference on System Sciences (HICSS), Koloa, HI, USA, 05 January 2016–08 January 2016. IEEE (2016)

Shao, G., Shin, S.-J., Jain, S.: Data analytics using simulation for smart manufacturing. In: Proceedings of the Winter Simulation Conference 2014, 2014 Winter Simulation Conference - (WSC 2014), Savanah, GA, USA, 07 December 2014–10 December 2014. IEEE (2014)

Banerjee, A., Bandyopadhyay, T., Acharya, P.: Data analytics: hyped up aspirations or true potential? Vikalpa J. Decis. Mak. 38(4), 1–12 (2013)

Fayyad, U., Piatetsky-Shapiro, G., Smyth, P.: From data mining to knowledge discovery in databases. AI Mag. 3(17), 37 (1996)

Dutta, D., Bose, I.: Managing a big data project: the case of Ramco cements limited. Int. J. Prod. Econ. 165, 293–306 (2015)

Shaerer, C.: The CRISP-DM model: the new blueprint for data mining. J. Data Warehous. 5(4), 13–22 (2000)

Köhler, M., Frank, D., Schmitt, R.: Six Sigma. In: Pfeifer, T., Schmitt, R. (eds.) Masing Handbuch Qualitätsmanagement. Hanser, München (2014)

Gandomi, A., Haider, M.: Beyond the hype: big data concepts, methods, and analytics. Int. J. Inf. Manage. 35(2), 137–144 (2015)

Hu, H., Wen, Y., Chua, T.-S., Li, X.: Toward scalable systems for big data analytics: a technology tutorial. IEEE Access 2, 652–687 (2014)

Angée, S., Lozano-Argel, S.I., Montoya-Munera, E.N., Ospina-Arango, J.-D., Tabares-Betancur, M.S.: Towards an improved ASUM-DM process methodology for cross-disciplinary multi-organization big data & analytics projects. In: Uden, L., Hadzima, B., Ting, I.-H. (eds.) Knowledge Management in Organizations, vol. 877. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-95204-8_51

Mockenhaupt, A.: Datengetriebene Prozessanalyse. In: Mockenhaupt, A. (ed.) Digitalisierung und Künstliche Intelligenz in der Produktion. Springer Fachmedien Wiesbaden, Wiesbaden (2021)

Miller, H.G., Mork, P.: From data to decisions: a value chain for big data. IT Prof. 15(1), 57–59 (2013)

Vera-Baquero, A., Colomo-Palacios, R., Molloy, O.: Business process analytics using a big data approach. IT Prof. 15(6), 29–35 (2013)

Zou, H., Yu, Y., Tang, W., Chen, H.-W.M.: FlexAnalytics: a flexible data analytics framework for big data applications with I/O performance improvement. Big Data Res. 1, 4–13 (2014)

Schröer, C., Kruse, F., Gómez, J.M.: A systematic literature review on applying CRISP-DM process model. Procedia Comput. Sci. 181, 526–534 (2021)

Schäfer, F., Zeiselmair, C., Becker, J., Otten, H.: Synthesizing CRISP-DM and quality management: a data mining approach for production processes. In: 2018 IEEE International Conference on Technology Management, Operations and Decisions (ICTMOD), 2018 IEEE International Conference on Technology Management, Operations and Decisions (ICTMOD), Marrakech, Morocco, 21 November 2018–23 November 2018. IEEE (2018)

Greiffenberg, S.: Methoden als Theorien der Wirtschaftsinformatik. In: Uhr, W., Esswein, W., Schoop, E. (eds.) Wirtschaftsinformatik 2003/Band II: Medien - Märkte - Mobilität, s. l. Physica-Verlag HD, Heidelberg (2003)

Brenner, W., van Giffen, B., Koehler, J., Fahse, T., Sagodi, A.: Stand in Wissenschaft und Praxis. In: Brenner, W., van Giffen, B., Koehler, J., Fahse, T., Sagodi, A. (eds.) Bausteine eines Managements Künstlicher Intelligenz. Springer Fachmedien Wiesbaden, Wiesbaden (2021)

Nunes, D.S., Zhang, P., Sa Silva, J.: A survey on human-in-the-loop applications towards an internet of all. IEEE Commun. Surv. Tutor. 17(2), 944–965 (2015)

Cimini, C., Pirola, F., Pinto, R., Cavalieri, S.: A human-in-the-loop manufacturing control architecture for the next generation of production systems. J. Manuf. Syst. 54, 258–271 (2020)

Winter, R., Aier, S.: Design science research in business innovation. In: Hoffmann, C.P., Lennerts, S., Schmitz, C., Stölzle, W., Uebernickel, F. (eds.) Business Innovation: Das St. Galler Modell. BIUSG, pp. 475–498. Springer, Wiesbaden (2016). https://doi.org/10.1007/978-3-658-07167-7_25

Dresch, A., Lacerda, D.P., Antunes Jr, J.A.V.: Design Science Research. Springer, Cham (2015)

Gregor, S., Hevner, A.R.: Positioning and presenting design science research for maximum impact. MIS Q. 37(2), 337–355 (2013)

Winter, R.: Design science research in Europe. Eur. J. Inf. Syst. 17(5), 470–475 (2008)

van Aken, J., Chandrasekaram, A., Halman, J.: Conducting and publishing design science research. J. Oper. Manage. 47, 1–8 (2018)

March, S.T., Smith, G.F.: Design and natural science research on information technology. Decis. Support Syst. 15(4), 251–266 (1995)

Hevner, A.R., March, S.T., Park, J., Ram, S.: Design science in information systems research. MIS Q. 28(1), 75 (2004)

Hjalmarsson, A., Rudmark, D., Lind, M.: When designers are not in control – experiences from using action research to improve researcher-developer collaboration in design science research. In: Winter, R., Zhao, J.L., Aier, S. (eds.) DESRIST 2010. LNCS, vol. 6105, pp. 1–15. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-13335-0_1

Cahenzli, M., Deitermann, F., Aier, S., Haki, K., Budde, L.: Intra-organizational nudging: designing a label for governing local decision-making. In: itAIS2021: XVIII Conference of the Italian Chapter of AIS - Digital Resilience and Sustainability: People, Organizations, and Society, Trento, Italy (2021)

Schmidt, B., Wang, L.: Cloud-enhanced predictive maintenance. Int. J. Adv. Manuf. Technol. 99(1–4), 5–13 (2016). https://doi.org/10.1007/s00170-016-8983-8

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 IFIP International Federation for Information Processing

About this paper

Cite this paper

Deitermann, F., Budde, L., Friedli, T., Hänggi, R. (2022). A Procedural Method to Build Decision Support Systems for Effective Interventions in Manufacturing – A Predictive Maintenance Example from the Spring Industry. In: Kim, D.Y., von Cieminski, G., Romero, D. (eds) Advances in Production Management Systems. Smart Manufacturing and Logistics Systems: Turning Ideas into Action. APMS 2022. IFIP Advances in Information and Communication Technology, vol 663. Springer, Cham. https://doi.org/10.1007/978-3-031-16407-1_24

Download citation

DOI: https://doi.org/10.1007/978-3-031-16407-1_24

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16406-4

Online ISBN: 978-3-031-16407-1

eBook Packages: Computer ScienceComputer Science (R0)