Abstract

Wireless sensor networks (WSNs) are affected by faulty data because they are deployed in random and risky environments. The amount of sensitive data in computer systems is increasing drastically, so there is an urgent need for efficient cybersecurity. When security bugs are detected, software engineers discuss them privately, and the bugs are not made public until security patches are available. This can lead to many failures, such as communication, hardware, or software failures. This work aims to help software developers classify bug reports better by identifying security vulnerabilities as security bug reports (SBRs) through the tuning of learners and data preprocessors. In practice, machine learning (ML) techniques are used to detect intrusions from data and to learn how secure and nonsecure bugs can be differentiated. This work proposes a rudimentary classification model for bug prediction based on the Adaptive Ensemble Learning with Hyper Optimization (AEL-HO) technique. Classifier performance is analyzed using the F1-score, detection accuracy (DA), Matthews correlation coefficient (MCC), and true positive rate (TPR). The proposed classifier is compared with several existing classifiers.


1 Introduction

Wireless sensor networks (WSNs) play a major role in the world because of their applications in wildlife tracking, sensing military movements, health care systems, building health monitoring, and recording environmental observations [7]. Distributed sensor systems based on geographic routing have applications in the Internet of Things (IoT) domain. Greedy forwarding is considered one of the best geographic routing schemes because of its simplicity and efficiency. However, greedy data forwarding has a drawback: the communication void. Most geographic routing schemes comprise two components: (1) greedy forwarding and (2) a backup (recovery) mode. In this chapter, we propose a novel void-handling technique that identifies the void boundary. Moreover, a relay node is used so that packets from the source are routed around the void along a path with a lower hop count.

The nodes in a WSN interact with the surrounding environment through sensors and actuators. Sensor nodes in WSNs are subject to severe energy constraints [6]. Problems in the power, processing, sensing, and location units of the sensor hardware may result in hardware failure. Likewise, a poorly written sensor program may result in software failure. Moreover, problems in the sensor transceiver lead to communication failure in WSNs. The data are classified based on the data sensed by the WSN [12].

The two-dimensional square monitoring region of the WSN is randomly divided into Voronoi polygons around the static nodes. Then, the key regions of the static nodes are connected in counterclockwise order to determine the coverage gap regions. Depending on their concave shapes, these regions are reduced to convex hulls, and the center of each convex hull is used as the base point. Delaunay triangulation is combined with the convex hull vertices to calculate the position of the virtual repair node. Finally, a multi-factor cooperative matching decision table between the virtual repair nodes and the mobile nodes is built from the relative distances of the nodes, their remaining energy, and node criticality; according to this decision table, each mobile node moves a limited distance to optimize the repair of the coverage gap. Simulation experiments are designed for comparison with existing coverage gap algorithms, and the AEL-HO algorithm improves network coverage and extends the network life cycle.

2 Related Work

Faulty data take various forms: lost data, offset data, out-of-bounds data, gain data, spike data, stuck-at data, noisy data, or random data. Detecting such data in WSNs is complex because of resource-constrained sensors and varied deployment fields. In recent years, with advances in ad hoc networks, numerous researchers have actively contributed to the domains of IoT and sensor systems. However, unlike in a MANET, the mobility of vehicles in the IoT is commonly constrained by predefined roads. Vehicle speed is further limited by speed limits, the level of road congestion, and traffic control mechanisms. Therefore, building a realistic mobility model for the IoT is critical for evaluating and designing routing protocols. This link is used to maintain dynamic transmission, adapting the transmission range of the vehicle to local traffic conditions. The effect of vehicular traffic on Matt's traceroute (MTR) mostly breaks down when the density changes from steady flow to maximally congested conditions. Users must take urban infill into account, which is not simply a matter of copying vehicle lengths. In addition, traffic congestion lowers the average speed of vehicles. The assumption of local non-repudiation enables a node to apply Bayesian reasoning within its local neighborhood, where the node is able to recognize its neighbors.

Data detection techniques play a major role in maintaining a reliable operating condition in WSNs. The data detection algorithms used in WSNs are based on supervised or unsupervised learning. A support vector machine (SVM) classifier based on statistical learning theory [8] can be introduced to detect data in the networks. The classifier is trained using kernel functions, including radial basis function, polynomial, and linear kernels; however, the single-hop indoor dataset is not considered. Trend analysis based on least squares SVM [4] was developed for improved sensor data diagnosis to overcome these problems, but the error-correcting output code matrix used for data classification has limitations. SVM with statistical time-domain features [10] and one-class SVM with stochastic gradient descent [3] have been presented for data detection in WSNs, but they are suitable only for binary classification. For multi-class data classification, the classifier identification process has been combined with convolutional neural networks (CNN) [11] and random forest (RF) [13], which have also been used to account for sensor energy consumption during anomaly detection. However, there is no detailed study evaluating hybrid classifiers. Here, the comparison is made by applying these algorithms to different datasets, whose feature values are used to classify the sensed data.
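As a minimal illustration of the three kernel functions named above, the following Python sketch computes linear, polynomial, and radial basis function (RBF) kernels between two feature vectors and builds the kernel (Gram) matrix an SVM solver would consume. The function names and default hyperparameters (degree = 3, c = 1.0, gamma = 0.5) are illustrative assumptions, not values taken from the cited works.

```python
import math
from typing import List, Sequence


def linear_kernel(x: Sequence[float], y: Sequence[float]) -> float:
    """Linear kernel: K(x, y) = x . y."""
    return sum(a * b for a, b in zip(x, y))


def polynomial_kernel(x: Sequence[float], y: Sequence[float],
                      degree: int = 3, c: float = 1.0) -> float:
    """Polynomial kernel: K(x, y) = (x . y + c) ** degree."""
    return (linear_kernel(x, y) + c) ** degree


def rbf_kernel(x: Sequence[float], y: Sequence[float], gamma: float = 0.5) -> float:
    """Radial basis function kernel: K(x, y) = exp(-gamma * ||x - y||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


def gram_matrix(points: Sequence[Sequence[float]], kernel) -> List[List[float]]:
    """Kernel (Gram) matrix over all pairs of points."""
    return [[kernel(p, q) for q in points] for p in points]


if __name__ == "__main__":
    # Hypothetical normalized (temperature, humidity) readings.
    readings = [(0.20, 0.45), (0.21, 0.44), (0.90, 0.10)]
    for row in gram_matrix(readings, rbf_kernel):
        print([round(v, 3) for v in row])
```

The RBF kernel returns 1.0 for identical readings and decays toward 0 as readings move apart, which is why it is a common default for separating normal from anomalous sensor values.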

2.1 Challenges and Problem Statement

WSN data detection mechanisms face various challenges for the following reasons:

- Limited node-level resources allow classifiers [2] to be used only in restricted ways, because the nodes cannot afford complex computation.
- Sensor nodes must often be deployed in hazardous and uncertain environments.
- Medical information detection techniques [14] must be accurate to eliminate information loss. For example, such a method must identify the differences between normal data and sensor data; otherwise, it may fail to capture inaccurate information, which can lead to a misleading response.

2.2 Contributions

- An adaptive ensemble learning with hyper-optimization (AEL-HO) classifier is used to detect health information in WSNs.
- In addition, three other classifiers are applied to the datasets, and an extensive experimental evaluation is carried out on WSN data. In this chapter, the SVM, RF, and CNN classifiers are used for comparison with the proposed AEL-HO classifier.
- The performance of the classifiers is analyzed using the F1-score, detection accuracy (DA), Matthews correlation coefficient (MCC), and true positive rate (TPR).

In the work discussed in this chapter, a novel adaptive ensemble learning with hyper-optimization algorithm has been developed to reduce the complexity of the AEL-HO algorithm when dealing with large training datasets. The proposed technique keeps relevant data points and removes irrelevant ones from the dataset. First, k-means clustering is applied to select training data points. Next, the quickhull algorithm [5] collects the data points of each single-class cluster into a convex hull. The data points at the convex hull vertices, together with the data points of clusters containing more than one class label, are finally taken as the selected training data points for the AEL-HO classifier. Experimental results on a large dataset show that the proposed technique reduces the total number of training data points without reducing accuracy. A 90% reduction in training time is achieved compared with the AEL-HO method trained on the full dataset.

This chapter is organized as follows. A general explanation of WSNs is given in Sect. 1. Related work and the proposed contributions are presented in Sect. 2. The proposed methodology of the AEL-HO classifier is explained in Sect. 3. The experimental results are presented in Sect. 4, and the chapter is concluded in Sect. 5.

3 Proposed Methodology

3.1 Preprocessing

The aim of preprocessing is to eliminate unwanted words from the bug report. Unnecessary words are removed during analysis because they may degrade learning performance. This reduces the feature space, which makes learning and data analysis easier. The process comprises three steps: tokenization, stop-word removal, and stemming. First, a sequence of text is partitioned into numbers, words, punctuation, etc., which are termed tokens. Then, every punctuation mark is replaced with a space, non-printable escape characters are removed, all words are converted to lowercase, and stop words are discarded. Finally, words are replaced by their common stem, which is saved as a selected feature. For instance, "moving," "moved," "moves," and "move" are all replaced with "move." The words obtained once preprocessing is complete are termed features, as given in Eqs. (1) and (2).
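The three preprocessing steps described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the stop-word list is a small hypothetical sample, and the suffix-stripping `stem` function is a crude stand-in for a real stemmer such as Porter's. It maps "moving," "moved," "moves," and "move" to the shared stem "mov" rather than "move," but it still collapses the four forms into a single feature.

```python
import string
from typing import List

# Hypothetical stop-word list; a real system would use a fuller list.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "of", "to", "in",
              "and", "or", "when", "it", "on", "this"}

# Suffixes tried in order; "es" is tried before "s" so "crashes" -> "crash".
SUFFIXES = ("ing", "ed", "es", "s", "e")

# Translation table that maps every ASCII punctuation mark to a space.
PUNCT_TO_SPACE = str.maketrans(string.punctuation, " " * len(string.punctuation))


def tokenize(text: str) -> List[str]:
    """Replace non-printable characters and punctuation with spaces, lowercase, split."""
    # Spaces (not deletion) keep words on either side of "\n" or "\t" apart.
    text = "".join(ch if ch.isprintable() else " " for ch in text)
    text = text.translate(PUNCT_TO_SPACE)
    return text.lower().split()


def stem(word: str) -> str:
    """Crude suffix stripping (illustrative only, not the Porter algorithm)."""
    for suffix in SUFFIXES:
        # Keep at least three characters so short words are left intact.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(report: str) -> List[str]:
    """Tokenize, drop stop words, and stem; the survivors are the features."""
    return [stem(tok) for tok in tokenize(report) if tok not in STOP_WORDS]


if __name__ == "__main__":
    print(preprocess("The app crashes when moving, moved; moves & move!"))
```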

3.1.1 N-Gram Extraction

While extracting features, the information in a bug report is expressed as a feature vector. Transforming the bug report into various sets of n-gram data extends this representation, so the features are represented better. The n-gram method is used to capture semantic relationships and to estimate the frequency of feature sequences. It conditions each feature only on the preceding n − 1 features and organizes the sequence of i features into unigrams, bigrams, and trigrams:

$$p(f_1, \dots, f_i) \approx \prod_{k=1}^{i} p(f_k \mid f_{k-n+1}, \dots, f_{k-1})$$

where f_k and p(·) denote a feature (word) and a probability, respectively. In the unigram model, successive features are assumed to be independent of one another, so the features in the feature string share no mutual information. Hence, the conditional probability of a unigram is

$$p(f_k \mid f_1, \dots, f_{k-1}) = p(f_k)$$

In the bigram model, two adjacent features carry language information, so the conditional probability is

$$p(f_k \mid f_1, \dots, f_{k-1}) \approx p(f_k \mid f_{k-1})$$

Various n-gram models must be integrated to exploit their full capability. Several n-gram models can be used to analyze an individual sentence, and the results obtained are then combined. Thus, the relationship among n-gram features is expressed as a word-level analysis, which is given as follows.
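The n-gram representation and the unigram and bigram probabilities described above can be estimated from counts. The sketch below is a minimal illustration assuming maximum-likelihood (count-based) estimation without smoothing; the function names are hypothetical. `combined_features` pools unigrams, bigrams, and trigrams into one feature set, which is one simple way to combine several n-gram models.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def ngrams(features: Sequence[str], n: int) -> List[Tuple[str, ...]]:
    """All contiguous n-feature windows, in order."""
    return [tuple(features[i:i + n]) for i in range(len(features) - n + 1)]


def unigram_prob(features: Sequence[str], f: str) -> float:
    """MLE of p(f_k): relative frequency of f, independent of its history."""
    return Counter(features)[f] / len(features)


def bigram_prob(features: Sequence[str], prev: str, f: str) -> float:
    """MLE of p(f_k | f_{k-1}) = count(prev, f) / count(prev followed by anything)."""
    pair_counts = Counter(ngrams(features, 2))
    history_counts = Counter(features[:-1])  # the last token has no successor
    if history_counts[prev] == 0:
        return 0.0
    return pair_counts[(prev, f)] / history_counts[prev]


def combined_features(features: Sequence[str], max_n: int = 3) -> Counter:
    """Pool unigram, bigram, and trigram counts into one feature vector."""
    pooled: Counter = Counter()
    for n in range(1, max_n + 1):
        pooled.update(ngrams(features, n))
    return pooled
```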

Algorithm

Input ← Training data D_tr, testing data D_ts, and defective rate σ_d
Output → Predicted class label c_j of every instance in the testing data
For every instance of D_tr and D_ts:
1. Delete every duplicate instance from D_tr and D_ts.
2. Fill the missing values in D_tr and D_ts with the mean of the corresponding attribute values.
3. Normalize both D_tr and D_ts using the min–max method.
4. Input ← a_ik to the SDA to generate its deep representation, where a_ik is the kth metric of the ith instance with class label c_i; Output → Deep(a_ik), the deep representation of a_ik.
End for
For every base learner b_l in the EL phase (l = 1, 2, ..., 10):
5. Train on D_tr, performing cross-fold validation on D_tr according to b_l.
6. Calculate the average MCC (AvgMCC_i) and the average F-measure (AvgF).
End for
7. Tune x_k with y_k, where y_k = a_k + f × (b_k − c_k) and {np, f, cr} = {10n, 0.8, 0.9}.
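Two concrete pieces of the algorithm above, the data-cleaning steps and the tuning rule y_k = a_k + f × (b_k − c_k) with f = 0.8 and cr = 0.9, can be sketched as follows. We read the tuning rule as the classic DE/rand/1/bin step of differential evolution, in which a, b, and c are three distinct population members other than the target x; this interpretation, the column-wise mean imputation, and the helper names are our assumptions. The SDA and base-learner stages are not reproduced.

```python
import random
from typing import List, Optional, Sequence

Row = List[Optional[float]]


def clean(rows: Sequence[Row]) -> List[List[float]]:
    """Deduplicate, mean-impute missing values (None), then min-max normalize."""
    # Step 1: drop duplicate instances, keeping the first occurrence.
    seen, unique = set(), []
    for row in rows:
        key = tuple(row)
        if key not in seen:
            seen.add(key)
            unique.append(list(row))
    # Step 2: replace each missing value with the mean of its attribute (column).
    n_cols = len(unique[0])
    means = []
    for j in range(n_cols):
        present = [r[j] for r in unique if r[j] is not None]
        means.append(sum(present) / len(present) if present else 0.0)
    filled = [[means[j] if r[j] is None else r[j] for j in range(n_cols)] for r in unique]
    # Step 3: min-max normalization of every column to [0, 1].
    lows = [min(col) for col in zip(*filled)]
    highs = [max(col) for col in zip(*filled)]
    return [[(v - lo) / (hi - lo) if hi > lo else 0.0
             for v, lo, hi in zip(r, lows, highs)] for r in filled]


def de_trial(population: Sequence[Sequence[float]], target: int,
             f: float = 0.8, cr: float = 0.9, rng=random) -> List[float]:
    """DE/rand/1/bin trial vector for population[target] (needs >= 4 members)."""
    others = [i for i in range(len(population)) if i != target]
    a, b, c = (population[i] for i in rng.sample(others, 3))
    x = population[target]
    j_rand = rng.randrange(len(x))  # at least one component comes from the mutant
    # Mutant component a + f * (b - c) with probability cr, else keep the target's.
    return [a[j] + f * (b[j] - c[j]) if (rng.random() < cr or j == j_rand) else x[j]
            for j in range(len(x))]
```

In a full tuning loop, the population would hold np = 10n candidate hyperparameter vectors (n being the number of tuned hyperparameters), and a trial vector would replace its target only if it yields a better cross-validated score such as the average MCC.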

Data Point Clustering: First, the k-means clustering technique partitions the training data points into k clusters. The number of clusters is selected according to the dataset structure and the total number of data points. The accuracy of the clustering depends on two things: the initial centroids and the value of k. Singular clusters contain data points of only one class, whereas nonsingular clusters contain data points of more than one class. Using k-means, five clusters are formed on the data points: four singular clusters and one nonsingular cluster.
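The clustering and labeling step can be sketched with a plain Lloyd-style k-means followed by a check of how many class labels each cluster contains. This is a minimal sketch assuming Euclidean distance; the function names, the fixed random seed, and the toy data are illustrative assumptions.

```python
import random
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, ...]


def sq_dist(p: Point, q: Point) -> float:
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points: Sequence[Point], k: int, iters: int = 100, seed: int = 0) -> List[int]:
    """Return the cluster index of every point (Lloyd's algorithm)."""
    rng = random.Random(seed)
    centroids = [tuple(p) for p in rng.sample(list(points), k)]
    assign: List[int] = []
    for _ in range(iters):
        # Assignment step: nearest centroid for each point.
        new_assign = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new_assign == assign:
            break  # converged: no point changed cluster
        assign = new_assign
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(col) / len(members) for col in zip(*members))
    return assign


def label_clusters(assign: Sequence[int], labels: Sequence[int], k: int) -> Dict[int, str]:
    """'Singular' if a cluster holds one class only, otherwise 'Nonsingular'."""
    kinds = {}
    for c in range(k):
        classes = {y for a, y in zip(assign, labels) if a == c}
        kinds[c] = "Singular" if len(classes) <= 1 else "Nonsingular"
    return kinds
```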

Convex Hull Construction: The convex hull of every cluster, singular and nonsingular, is then constructed using the quickhull technique. V 1 and V 2 denote the vertex sets of class labels 1 and 2, respectively.

Redundant Data Points Elimination: In this step, the data points that do not form vertices in step 2 are eliminated. The data points that form the vertices are defined as "Rem" data points. Here, 41 data points are passed to the next step, the naïve Bayes classifier.
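The convex hull construction and redundant-point elimination can be sketched together: a recursive 2-D quickhull returns the extreme vertices of each singular cluster, only those vertices are kept, and nonsingular clusters are kept whole, forming the "Rem" set. This is a minimal sketch assuming two-dimensional points and a cluster assignment with cluster kinds computed beforehand (for example, by the k-means clustering step); collinear boundary points are treated as non-vertices, and all names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """Positive if b lies to the left of the directed line o -> a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def quickhull(points: Sequence[Point]) -> List[Point]:
    """Vertices of the 2-D convex hull (unordered), via recursive quickhull."""
    pts = sorted(set(points))
    if len(pts) < 3:
        return pts
    left, right = pts[0], pts[-1]  # leftmost and rightmost points are hull vertices
    hull = [left, right]

    def expand(a: Point, b: Point, candidates: List[Point]) -> None:
        outside = [p for p in candidates if cross(a, b, p) > 0]
        if not outside:
            return
        far = max(outside, key=lambda p: cross(a, b, p))  # farthest from line a-b
        hull.append(far)
        expand(a, far, outside)
        expand(far, b, outside)

    expand(left, right, pts)  # points on one side of the dividing line
    expand(right, left, pts)  # points on the other side
    return hull


def select_rem(points: Sequence[Point], labels: Sequence[int],
               assign: Sequence[int], kinds: Dict[int, str]) -> List[Tuple[Point, int]]:
    """Keep hull vertices of 'Singular' clusters and every point of 'Nonsingular' ones."""
    rem: List[Tuple[Point, int]] = []
    for c, kind in kinds.items():
        members = [(p, y) for p, y, a in zip(points, labels, assign) if a == c]
        if kind == "Nonsingular":
            rem.extend(members)
        else:
            vertices = set(quickhull([p for p, _ in members]))
            rem.extend((p, y) for p, y in members if p in vertices)
    return rem
```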

The system model of this work consists of two TelosB mote sensors and one desktop computer, which are used together to take the measurements. The model has three stages.

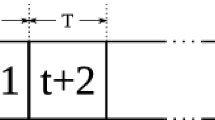

Stage 1

A TelosB sensor is used to take the sensed data readings. These readings enter the data preparation block as a new data measurement V_t, which is used to build a new observation vector. The dataset is formed by combining the temperature (T_1 and T_2) and humidity (H_1 and H_2) measurements. Each new observation vector is formed from three sequential data measurements V_t, V_(t-1), and V_(t-2).
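The construction of an observation vector from three sequential measurements can be sketched as follows. We assume each reading V_t is the tuple (T_1, T_2, H_1, H_2) and that the vector lists V_t first, then V_(t-1), then V_(t-2); the chapter does not fix this ordering, and the function name is hypothetical. With four values per reading and three readings, each vector has 12 entries, matching the 12 dimensions reported for the dataset.

```python
from typing import List, Sequence, Tuple

Reading = Tuple[float, float, float, float]  # (T_1, T_2, H_1, H_2)


def observation_vectors(readings: Sequence[Reading]) -> List[List[float]]:
    """Concatenate V_t, V_(t-1), V_(t-2) for every t that has two predecessors."""
    vectors = []
    for t in range(2, len(readings)):
        vectors.append([*readings[t], *readings[t - 1], *readings[t - 2]])
    return vectors


if __name__ == "__main__":
    # Hypothetical temperature (deg C) and humidity (%) readings from two motes.
    stream = [(22.1, 22.3, 41.0, 40.5), (22.2, 22.3, 41.2, 40.6), (22.2, 22.4, 41.1, 40.8)]
    print(observation_vectors(stream))
```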

Stage 2

In the second stage, faults are introduced into the training dataset. Four fault types (offset, gain, out of bounds, and stuck-at) are taken from [1]. In addition, data loss and spike faults are included in this system model.
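The six fault types can be injected into a clean sensor series with simple models, sketched below. The specific fault models and their magnitudes (offset +5, gain ×1.5, out-of-bounds value 1000, spike ±50) are our illustrative assumptions rather than the parameters used in [1]. Data loss is represented by None, stuck-at faults freeze the faulty positions at a single value (a simplification of a stuck run), and labels follow the dataset encoding of 1 for normal and 2 for fault.

```python
import random
from typing import List, Optional, Sequence, Tuple

FAULT_KINDS = ("offset", "gain", "stuck_at", "out_of_bounds", "spike", "data_loss")


def inject_fault(series: Sequence[float], kind: str, rate: float, seed: int = 0,
                 offset: float = 5.0, gain: float = 1.5, bound: float = 1000.0,
                 spike: float = 50.0) -> Tuple[List[Optional[float]], List[int]]:
    """Corrupt round(rate * len(series)) random positions; return (values, labels)."""
    if kind not in FAULT_KINDS:
        raise ValueError(f"unknown fault kind: {kind}")
    rng = random.Random(seed)
    n = len(series)
    faulty = sorted(rng.sample(range(n), int(round(rate * n))))
    out: List[Optional[float]] = list(series)
    stuck_value = series[faulty[0]] if faulty else None
    for i in faulty:
        if kind == "offset":
            out[i] = series[i] + offset          # constant bias
        elif kind == "gain":
            out[i] = series[i] * gain            # multiplicative error
        elif kind == "stuck_at":
            out[i] = stuck_value                 # frozen at one reading
        elif kind == "out_of_bounds":
            out[i] = bound                       # physically implausible value
        elif kind == "spike":
            out[i] = series[i] + rng.choice((-1, 1)) * spike  # isolated jump
        else:  # data_loss
            out[i] = None
    faulty_set = set(faulty)
    labels = [2 if i in faulty_set else 1 for i in range(n)]  # 1 normal, 2 fault
    return out, labels
```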

Stage 3

In the third stage, multiple clusters of nodes are connected to form the WSN. Each cluster has one cluster head that communicates with other nodes and the network layer. The naïve Bayes algorithm is incorporated in each cluster head to classify faults. The observation vectors are used to form the decision function. This process is inexpensive because the decision functions are incorporated in each cluster head along with the classifiers. Using the decision function, faults can be classified: the classifiers label the data as positive (fault) or negative (normal).

3.2 Attribute-Based Encryption

Attribute-based encryption is a form of public-key encryption in which the user's secret key and the ciphertext depend on attributes. In such a system, a ciphertext can be decrypted only if the set of attributes of the user's key matches the attributes of the ciphertext. A crucial security aspect of attribute-based encryption is collusion resistance: an adversary holding multiple keys should be able to access data only if at least one individual key grants access.

3.3 Symmetric-Key Algorithm

Implementations of symmetric-key encryption can be highly efficient, so users do not experience any significant time delay due to encryption and decryption. Symmetric-key encryption also provides a degree of authentication, since data encrypted with one symmetric key cannot be decrypted with any other symmetric key. Therefore, as long as the symmetric key is kept private by the two parties that use it to encrypt communications, each party can be sure that it is communicating with the other, provided that the decrypted messages continue to make sense.

3.4 Cipher Text Attribute–Based Encryption

Private keys are identified with a set of descriptive attributes. In our construction, a party that wishes to encrypt a message specifies, through an access tree structure, a policy that private keys must satisfy in order to decrypt. Each interior node of the tree is a threshold gate, and the leaves are associated with attributes. We use the same notation to describe the access trees, even though in our case the attributes are used to identify the keys (as opposed to the data) and are specified in the private key.
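The access-tree policy check described above can be illustrated without any cryptography: a key's attribute set satisfies a tree if enough children of each threshold gate are satisfied, with AND being an n-of-n gate and OR a 1-of-n gate. The sketch below only evaluates policy satisfaction; it does not perform the secret sharing or encryption of an actual ciphertext-policy scheme, and the class names and attribute names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set, Union


@dataclass
class Leaf:
    attribute: str


@dataclass
class Gate:
    threshold: int  # k-of-n gate: satisfied when >= threshold children are satisfied
    children: List["Node"] = field(default_factory=list)


Node = Union[Leaf, Gate]


def satisfies(node: Node, attributes: Set[str]) -> bool:
    """True if the attribute set in a private key satisfies the access tree."""
    if isinstance(node, Leaf):
        return node.attribute in attributes
    satisfied = sum(1 for child in node.children if satisfies(child, attributes))
    return satisfied >= node.threshold


if __name__ == "__main__":
    # Hypothetical policy: "cluster_head AND (admin OR maintainer)".
    policy = Gate(2, [Leaf("cluster_head"), Gate(1, [Leaf("admin"), Leaf("maintainer")])])
    print(satisfies(policy, {"cluster_head", "admin"}))  # True
    print(satisfies(policy, {"admin", "maintainer"}))    # False
```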

3.5 Applying the Naïve Bayes Classifier to the Remaining Data Points

In the last step, the remaining 41 data points are used to build the naïve Bayes classifier. Of the 85 training data points, only about half (41 points) are needed to obtain an accurate naïve Bayes classifier [38]. Because fewer data points remain, fewer computation steps are required to classify data points. In addition, computation time is reduced and higher accuracy is obtained.
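Training and applying a naïve Bayes classifier on the remaining data points can be sketched as below. The chapter does not state which naïve Bayes variant is used; this minimal sketch assumes a Gaussian likelihood per feature, a small constant added to each variance to avoid division by zero, and the dataset's label encoding (1 for normal, 2 for fault). The class name is hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


class GaussianNaiveBayes:
    """Naïve Bayes with independent Gaussian features per class."""

    eps = 1e-9  # assumed variance floor so constant features do not divide by zero

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int]) -> "GaussianNaiveBayes":
        groups: Dict[int, List[Sequence[float]]] = defaultdict(list)
        for x, label in zip(X, y):
            groups[label].append(x)
        self.priors: Dict[int, float] = {}
        self.stats: Dict[int, Tuple[List[float], List[float]]] = {}
        for label, rows in groups.items():
            self.priors[label] = len(rows) / len(X)
            cols = list(zip(*rows))
            means = [sum(c) / len(c) for c in cols]
            variances = [sum((v - m) ** 2 for v in c) / len(c) + self.eps
                         for c, m in zip(cols, means)]
            self.stats[label] = (means, variances)
        return self

    def log_posterior(self, x: Sequence[float], label: int) -> float:
        """log p(label) + sum_j log N(x_j; mean_j, var_j), up to a shared constant."""
        means, variances = self.stats[label]
        total = math.log(self.priors[label])
        for v, m, s2 in zip(x, means, variances):
            total += -0.5 * math.log(2 * math.pi * s2) - (v - m) ** 2 / (2 * s2)
        return total

    def predict(self, x: Sequence[float]) -> int:
        """Return the label with the highest posterior (the decision function)."""
        return max(self.stats, key=lambda label: self.log_posterior(x, label))
```

Training only on the "Rem" points keeps the per-class means and variances cheap to compute, which is the cost saving this step relies on.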

Proposed AEL-HO Algorithm

1. Choose the number of clusters K.
2. Perform k-means clustering.
3. For each cluster k (k ≤ K), do:
4. Based on cluster k, check the class labels of its data points.
5. If the cluster data points belong to a single class,
6. allocate the cluster label "Singular."
7. Else, allocate the cluster label "Nonsingular."
8. End.
9. End.
10. For each "Singular" cluster, do the following:
11. Perform the quickhull technique.
12. Estimate the convex hull (V 1), which denotes the vertex points of class label 1.
13. Estimate the convex hull (V 2), which denotes the vertex points of class label 2.
14. Form the set of vertex points.
15. Eliminate the samples of each cluster that are not in the vertex set.
16. End.
17. For each "Nonsingular" cluster, do the following:
18. Collect the data points of each cluster into a single set.
19. End.
20. Structure the remaining samples as the "Rem" dataset.
21. Apply the naïve Bayes classifier to the "Rem" data.

4 Experimental Results

This section evaluates the experimental results of the proposed system. The dataset used in this work is described, and the performance evaluation parameters and their equations are presented. Finally, the proposed method is compared with three other classifier algorithms.

System configuration:

- Operating system: Windows 8
- Processor: Intel Core i3
- RAM: 4 GB
- Platform: MATLAB

4.1 Dataset

In WSNs, sensor measurements and the different fault types are combined to form the labeled dataset. This dataset is based on the existing dataset produced by researchers at the University of North Carolina at Greensboro in 2010 [13]. The data were gathered with TelosB motes from single-hop, multi-hop, and two outdoor multi-hop WSNs. The sensed data contain temperature and humidity measurements. Each vector is formed from three successive instances t_0, t_1, and t_2, and each instance is constructed from the temperature (T_1 and T_2) and humidity (H_1 and H_2) measurements. Six different faults (stuck-at, data loss, offset, out of bounds, gain, and spike) are introduced into the dataset at different rates (10%, 20%, 30%, 40%, and 50%). From 9566 observations, 40 datasets have been prepared, each with 12 dimensions. Each dataset contains the measurement values and target values (1 for normal and 2 for fault). The naïve Bayes classifier is used to classify the whole dataset into two labels, i.e., normal and fault. Table 1 summarizes the results for the various fault types.

4.2 Performance Evaluation Parameters

DA is the first metric [1, 7]; it is given in Eq. (7).

TPR is the second metric [15]. It is the proportion of actual positives that are correctly identified, as given in Eq. (8):

$$\mathrm{TPR} = \frac{TP}{TP + FN} \tag{8}$$

where true positives (TP) are faulty observations correctly identified as faulty, and false negatives (FN) are faulty observations wrongly classified as negative (normal). MCC is the third metric [17–25]; it is used to rank the classifiers and ranges between −1 and 1. MCC is given in Eq. (9):

$$\mathrm{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{9}$$

where true negatives (TN) are non-faulty observations correctly identified as normal, and false positives (FP) are non-faulty observations incorrectly classified as faulty. The F1-score is the fourth metric [16]; it is the harmonic mean of precision and recall:

$$F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$
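The four evaluation metrics can be computed from a confusion matrix, as in the minimal sketch below. Following the chapter's label encoding, class 2 (fault) is treated as positive. The chapter's Eq. (7) for DA is not reproduced in the text, so computing DA as overall accuracy, (TP + TN) / (TP + TN + FP + FN), is our assumption; the function names are hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def confusion(y_true: Sequence[int], y_pred: Sequence[int],
              positive: int = 2) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN), with `positive` (2 = fault) as the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    tn = sum(t != positive and p != positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return tp, tn, fp, fn


def metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    tpr = tp / (tp + fn) if tp + fn else 0.0             # recall, Eq. (8)
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * tpr / (precision + tpr) if precision + tpr else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0  # Eq. (9); 0 by convention
    da = (tp + tn) / (tp + tn + fp + fn)  # assumed definition of DA (overall accuracy)
    return {"DA": da, "TPR": tpr, "F1": f1, "MCC": mcc}
```

Unlike overall accuracy, MCC stays at 0 for a classifier that labels every observation as normal on an imbalanced dataset, which is why it is useful for ranking classifiers.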

Table 1 ranks the proposed AEL-HO classifier and the existing CNN, SVM, and RF classifiers by MCC score. The AEL-HO classifier performs well in terms of MCC. An MCC of 1 indicates perfect classification. Here, the MCC value of the proposed naïve Bayes classifier is closer to 1 than those of the SVM, CNN, and RF classifiers. Therefore, the naïve Bayes classifier is found to be the best classifier (MCC value, 0.73), followed by the SVM classifier (MCC value, 0.65) [26–31].

Table 2 compares the accuracy and response time of the proposed AEL-HO classifier with those of the CNN, SVM, and RF classifiers [32–37]. The analysis shows that the proposed classifier achieves a higher accuracy of 96.42% with a response time of 0.97 s.

5 Conclusions and Future Work

Clustering is performed on the data points, and the convex hull algorithm is then used to find the vertices of the data points belonging to each cluster. The performance of the classifiers is analyzed using the F1-score, DA, MCC, and TPR. Based on the DA and TPR values, it is concluded that the proposed algorithm performs better than the existing methods. In future work, the same approach can be applied to new types of data that appear in WSNs. In addition, WSN data identification can be made accurate at both the network layer and the sensor nodes.

References

Alenezi, M., Magel, K., & Banitaan, S. (2013). Efficient bug triaging using text mining. Journal of Software, 8(9), 2185–2190.

Guo, S., Chen, R., Li, H., Zhang, T., & Liu, Y. (2019). Identify severity bug report with distribution imbalance by CR-SMOTE and ELM. International Journal of Software Engineering and Knowledge Engineering, 29(6), 139–175.

Jindal, R., Malhotra, R., & Jain, A. (2017). Prediction of defect severity by mining software project reports. International Journal of Systems Assurance Engineering and Management, 8, 334–351.

Kamei, Y., Shihab, E., Adams, B., et al. (2013). A large-scale empirical study of just-in-time quality assurance. IEEE Transactions on Software Engineering, 39(6), 757–773.

Kanwal, J., & Maqbool, O. (2012). Bug prioritization to facilitate bug report triage. Journal of Computer Science and Technology, 27(2), 397–412.

Lamkanfi, A., Demeyer, S., Giger, E., & Goethals, B. (2010). Predicting the severity of a reported bug. In Mining Software Repositories (MSR) (pp. 1–10).

Li, H., Gao, G., Chen, R., Ge, X., & Guo, S. (2019). The influence ranking for testers in bug tracking systems. International Journal of Software Engineering and Knowledge Engineering, 29(1), 1–21.

Sampathkumar, A., & Vivekanandan, P. (2018). Gene selection using multiple queen colonies in large scale machine learning. Journal of Electrical Engineering, 9(6), 97–111.

Singh, V. B., & Chaturvedi, K. K. (2011). Bug tracking and reliability assessment system. International Journal of Software Engineering and Its Applications, 5(4), 17–30.

Yang, X.-L., Lo, D., Xia, X., Huang, Q., & Sun, J.-L. (2017). High-impact bug report identification with imbalanced learning strategies. Journal of Computer Science and Technology, 32(1), 181–198.

Yu, H., Zhang, W. Y., & Li, H. (2019). Fault-tolerant compensation control based on sliding mode technique of unmanned marine vehicles subject to unknown persistent ocean disturbances. International Journal of Control, Automation and Systems, 18(9), 739–752.

Zhang, T., Chen, J., Yang, G., Lee, B., & Luo, X. (2016). Towards more accurate severity prediction and fixer recommendation of software bugs. Journal of Systems and Software, 117, 166–184.

Alrosan, A., Alomoush, W., Norwawi, N., Alswaitti, M., & Makhadmeh, S. N. (2020). An improved artificial bee colony algorithm based on mean best-guided approach for continuous optimization problems and real brain MRI images segmentation. Neural Computing and Applications, 33(3), 1671–1697.

Elgamal, Z. M., Yasin, N. B. M., Tubishat, M., Alswaitti, M., & Mirjalili, S. (2020). An improved Harris hawks optimization algorithm with simulated annealing for feature selection in the medical field. IEEE Access, 8, 186638–186652.

Tubishat, M., Alswaitti, M., Mirjalili, S., Al-Garadi, M. A., Alrashdan, M. T., et al. (2020). Dynamic butterfly optimization algorithm for feature selection. IEEE Access, 8, 194303–194314.

Rohit, R., Kumar, S., & Mahmood, M. R. (2020). Color object detection based image retrieval using ROI segmentation with multi-feature method. Wireless Personal Communications, 112(1), 169–192.

Sandeep, K., Jain, A., Shukla, A. P., Singh, S., Raja, R., Rani, S., Harshitha, G., AlZain, M. A., & Masud, M. (2021). A comparative analysis of machine learning algorithms for detection of organic and nonorganic cotton diseases. Mathematical Problems in Engineering, 2021. https://doi.org/10.1155/2021/1790171

Naseem, U., Razzak, I., Khan, S. K., & Prasad, M. (2020). A comprehensive survey on word representation models: From classical to state-of-the-art word representation language models. arXiv preprint arXiv, 15036.

Vikram, K. K., & Narayana, V. L. (2016). Cross-layer multi-channel MAC protocol for wireless sensor networks in 2.4-GHz ISM band. In 2016 IEEE Conference on Computing, Analytics and Security Trends (CAST), December 19–21, 2016, Department of Computer Engineering & Information Technology, College of Engineering, Pune, Maharashtra. https://doi.org/10.1109/CAST.2016.7914986

Tiwari, L., Raja, R., Awasthi, V., Miri, R., Sinha, G. R., Alkinani, M. H., & Polat, K. (2021). Detection of lung nodule and cancer using novel Mask-3 FCM and TWEDLNN algorithms. Measurement, 172, 108882. https://doi.org/10.1016/j.measurement.2020.108882

Raja, R., Raja, H., Patra, R. K., Mehta, K., & Gupta, A. (2020). Assessment methods of cognitive ability of human brains for inborn intelligence potential using pattern recognition. In Biometric systems. IntechOpen. ISBN 978-1-78984-188-6.

Vikram, K., & Sahoo, S. K. (2017, December). Load Aware Channel estimation and channel scheduling for 2.4GHz frequency band wireless networks for smart grid applications. International Journal on Smart Sensing and Intelligent Systems, 10(4), 879–902. https://doi.org/10.21307/ijssis-2018-023

Naseem, U., Khan, S. K., Farasat, M., & Ali, F. (2019). Abusive language detection: A comprehensive review. Indian Journal of Science and Technology, 12(45), 1–13.

Sharma, D. K., Singh, B., Regin, R., Steffi, R., & Chakravarthi, M. K. (2021). Efficient classification for neural machines interpretations based on mathematical models. In 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS) (pp. 2015–2020). https://doi.org/10.1109/ICACCS51430.2021.9441718

Arslan, F., Singh, B., Sharma, D. K., Regin, R., Steffi, R., & Suman Rajest, S. (2021). Optimization technique approach to resolve food sustainability problems. In 2021 International Conference on Computational Intelligence and Knowledge Economy (ICCIKE) (pp. 25–30). https://doi.org/10.1109/ICCIKE51210.2021.9410735

Ogunmola, G. A., Singh, B., Sharma, D. K., Regin, R., Rajest, S. S., & Singh, N. (2021). Involvement of distance measure in assessing and resolving efficiency environmental obstacles. In 2021 International Conference on Computational Intelligence and Knowledge Economy (ICCIKE) (pp. 13–18). https://doi.org/10.1109/ICCIKE51210.2021.9410765

Sharma, D. K., Singh, B., Raja, M., Regin, R., & Rajest, S. S. (2021). An efficient python approach for simulation of Poisson distribution. In 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS) (pp. 2011–2014). https://doi.org/10.1109/ICACCS51430.2021.9441895

Sharma, D. K., Singh, B., Herman, E., Regine, R., Rajest, S. S., & Mishra, V. P. (2021). Maximum information measure policies in reinforcement learning with deep energy-based model. In 2021 International Conference on Computational Intelligence and Knowledge Economy (ICCIKE) (pp. 19–24). https://doi.org/10.1109/ICCIKE51210.2021.9410756

Metwaly, A. F., Rashad, M. Z., Omara, F. A., & Megahed, A. A. (2014). Architecture of multicast centralized key management scheme using quantum key distribution and classical symmetric encryption. The European Physical Journal Special Topics, 223(8), 1711–1728.

Farouk, A., Zakaria, M., Megahed, A., & Omara, F. A. (2015). A generalized architecture of quantum secure direct communication for N disjointed users with authentication. Scientific Reports, 5(1), 1–17.

Naseri, M., Raji, M. A., Hantehzadeh, M. R., Farouk, A., Boochani, A., & Solaymani, S. (2015). A scheme for secure quantum communication network with authentication using GHZ-like states and cluster states controlled teleportation. Quantum Information Processing, 14(11), 4279–4295.

Wang, M. M., Wang, W., Chen, J. G., & Farouk, A. (2015). Secret sharing of a known arbitrary quantum state with noisy environment. Quantum Information Processing, 14(11), 4211–4224.

Zhou, N. R., Liang, X. R., Zhou, Z. H., & Farouk, A. (2016). Relay selection scheme for amplify-and-forward cooperative communication system with artificial noise. Security and Communication Networks, 9(11), 1398–1404.

Supritha, R., Chakravarthi, M. K., & Ali, S. R. (2016). An embedded visually impaired reconfigurable author assistance system using LabVIEW. In Microelectronics, electromagnetics and telecommunications (pp. 429–435). Springer.

Ganesh, D., Naveed, S. M. S., & Chakravarthi, M. K. (2016). Design and implementation of robust controllers for an intelligent incubation Pisciculture system. Indonesian Journal of Electrical Engineering and Computer Science, 1(1), 101–108.

Chakravarthi, M. K., Gupta, K., Malik, J., & Venkatesan, N. (2015, December). Linearized PI controller for real-time delay dominant second order nonlinear systems. In 2015 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT) (pp. 236–240). IEEE.

Yousaf, A., Umer, M., Sadiq, S., Ullah, S., Mirjalili, S., Rupapara, V., & Nappi, M. (2021). Emotion recognition by textual tweets classification using voting classifier (LR-SGD). IEEE Access, 9, 6286–6295. https://doi.org/10.1109/access.2020.3047831

Sadiq, S., Umer, M., Ullah, S., Mirjalili, S., Rupapara, V., & Nappi, M. (2021). Discrepancy detection between actual user reviews and numeric ratings of Google App store using deep learning. Expert Systems with Applications, 181, 115111. https://doi.org/10.1016/j.eswa.2021.115111


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ramirez-Asis, E.H., Zapata, M.A.S., Sivakumaran, A.R., Phasinam, K., Chaturvedi, A., Regin, R. (2023). Data Detection in Wireless Sensor Network Based on Convex Hull and Naïve Bayes Algorithm. In: Pandey, S., Shanker, U., Saravanan, V., Ramalingam, R. (eds) Role of Data-Intensive Distributed Computing Systems in Designing Data Solutions. EAI/Springer Innovations in Communication and Computing. Springer, Cham. https://doi.org/10.1007/978-3-031-15542-0_3


DOI: https://doi.org/10.1007/978-3-031-15542-0_3


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-15541-3

Online ISBN: 978-3-031-15542-0

eBook Packages: Engineering, Engineering (R0)