Abstract

In statistics of extremes, the estimation of the extreme value index (EVI) is an important and central topic of research. We consider the probability weighted moment estimator of the EVI, based on the largest observations. Due to the specificity of the properties of the estimator, a direct estimation of the threshold is not straightforward. In this work, we consider an adaptive choice of the number of order statistics based on the double bootstrap methodology. Computational and empirical properties of the methodology are here provided.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction and Scope of the Article

Let \((X_1, \dots , X_n)\) denote a random sample of size n from a population with unknown cumulative distribution function (CDF) \(F(x)=\mathbb {P}(X\le x)\) and consider the associated sample of ascending order statistics (OSs) \( (X_{1:n}:=\mathop {\min }\limits _{1\le i\le n} X_i\le \cdots \le X_{n:n}:= \mathop {\max }\limits _{1\le i\le n} X_i). \) Further assume that for large values of x, F(x) is a Pareto-type model, i.e., a model with a regular varying right tail with a negative index of regular variation equal to \(-1/\xi \) \((\xi >0)\). Consequently,

with \(L(\cdot )\) a slowly varying function, i.e.

Models satisfying the condition (1) are in the domain of attraction for maxima of a non-degenerate distribution. This means that there exist normalizing constants \(a_n>0\) and \(b_n\in \mathbb {R}\) such that

with \(G(\cdot )\) a non-degenerate CDF. With the appropriate choice of the normalizing constants in (2), and under a general framework, G is the general extreme value (EV) distribution,

given here in the von Mises-Jenkinson form (see [1, 2]). Whenever such a non-degenerate limit exists, we write \(F\in \mathcal{D_M}(EV_\xi )\), and the real parameter \(\xi \) is the extreme value index (EVI).

As already mentioned, we shall deal with Pareto right-tails, i.e. heavy right-tails or equivalently a model with a positive EVI. Then, the right-tail function is of regular variation with an index of regular variation equal to \(-1/\xi \), i.e.

where the notation \(RV_\alpha \) stands for the class of regularly varying functions at infinity with an index of regular variation equal to \(\alpha \), i.e. positive measurable functions g such that \(\lim \limits _{t\rightarrow \infty }g(tx)/g(t)=x^\alpha \), for all \(x>0\). With the notation

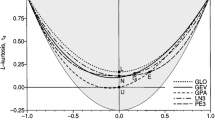

condition (4) is equivalent to \(U \in RV_\xi \). Pareto-type models are extremely important in practice due to the frequency and magnitude of extreme values and inference on extreme and large events is usually performed on the basis of the \(k+1\) largest order statistics in the sample, as sketched in Fig. 1.

1.1 EVI-Estimators Under Consideration

One of the first classes of semi-parametric estimators of a positive EVI was the class of Hill (H) estimators introduced in [3] and given by

This estimator can be highly sensitive to the choice of k, especially in the presence of a substantial bias. As an alternative, we shall also consider the Pareto probability weighted moments (PPWM) EVI-estimators, introduced in [4]. They are consistent for \(0<\xi <1\), compare favourably with the Hill estimator, and are given by

with

For other alternative estimators of the EVI see Refs. [5,6,7], among others. Consistency of the EVI-estimators in (6) and (7) is achieved if \(X_{n-k:n}\) is an intermediate OS, i.e. if

In order to derive the asymptotic normality of these EVI-estimators, it is often assumed the validity of a second-order condition, like

where \(U(\cdot )\) is defined in (5) and \(|A|\in RV_\rho \), \(\rho \le 0\). Under such a second-order framework, if \(\sqrt{k}A(n/k)\rightarrow \lambda _{_A}\), finite, as \(n\rightarrow \infty \), these EVI-estimators are asymptotically normal. Denoting \(\hat{\xi }_{k,n}^\bullet \), any of the estimators above, we have, with \(Z_k^\bullet \) an asymptotically standard normal random variable and for adequate \((b_\bullet ,\ \sigma _\bullet )\in (\mathbb {R},\ \mathbb {R}^+)\),

with \(b_\bullet \) the asymptotic bias, and \(\sigma _\bullet ^2\) the asymptotic standard deviation of the approximation, given in Table 1.

Under the above second-order framework, in (8), but with \(\rho <0\), let us use the parametrization

where \(\beta \) and \(\rho \) are generalized scale and shape second-order parameters, which need to be adequately estimated on the basis of the available sample. Let us denote the optimal level by

with MSE standing for mean squared error. With \(\mathbb {E}\) denoting the mean value operator and AMSE standing for asymptotic MSE, a possible substitute for MSE\((\hat{\xi }^\bullet _{k,n}\)) is

cf. Eq. (9). Then, with the notation \(k_{0}^\bullet (n) := \arg \min _k \textrm{AMSE}\big (\hat{\xi }^\bullet _{k,n}\big )\), we get

For the Hill estimator in (6), and as can be seen in Table 1, we have \((b_{_\textrm{H}},\sigma _{_\textrm{H}})=(1/(1-\rho ),\xi )\). Consequently, with \((\hat{\beta }, \hat{\rho })\) a consistent estimator of \((\beta , \rho )\) and [x] denoting the integer part of x, we have an asymptotic justification for the estimator

The same does not happen with the PPWM EVI-estimators, due to the fact that \(\sigma _{_\mathrm{\mathrm PPWM}}\), \(b_{_\textrm{PPWM}}\) and consequently \(k_0^\textrm{PPWM}\) depend on the value of \(\xi \) (see Table 1, again). It is thus sensible to use the bootstrap methodology for the adaptive choice of the threshold associated to the PPWM EVI-estimation.

1.2 Scope of the Article

The main goal is the adaptive estimation of the EVI. For that purpose, the choice of the threshold is crucial and we study computationally a recent bootstrap algorithm. After a review, in Sect. 2, of the role of the bootstrap methodology in the estimation of optimal sample fractions, we provide an algorithm for the adaptive estimation through the Hill and the PPWM EVI-estimators. In Sect. 3 we provide results from a Monte Carlo simulation study. In Sect. 4, as an illustration, we apply such methodology to a data set in the field of insurance. Section 5 concludes the paper.

2 Adaptive EVI-Estimation and the Bootstrap Methodology

Similarly to what has been done in Gomes and Oliveira [8], for the H estimator, and in Gomes et al. [9], for adaptive reduced-bias estimation, we can use the algorithm in Caeiro et al. [10] (see also [4]), considering the auxiliary statistic,

which converges to the known value zero, and double-bootstrap it adequately, in order to estimate \(k_{0}^\bullet (n)\), through a bootstrap estimate \(\hat{k}_{0}^{\bullet , *}\). Indeed, again under the second-order framework, in (8), we get, for the auxiliary statistic \(T_{k,n}^\bullet \), in (11), the asymptotic distributional representation,

with \(Q_k^\bullet \) asymptotically standard normal, and \((b_\bullet , \sigma _\bullet )\) given in Table 1. The AMSE of \(T_{k,n}^\bullet \) is thus minimal at a level \(k_{0|T}^\bullet (n)\) such that \(\sqrt{k}\ A(n/k)\rightarrow \lambda '_{_A}\not =0\), i.e. a level of the type of the one in (10), with \(b_\bullet \) replaced by \(b_\bullet (2^{\rho }-1)\), and we consequently have

2.1 The Bootstrap Methodology in Action

Given the sample \(\underline{X}_n=(X_1,\dots ,X_n)\) from an unknown model F, consider for any \(n_1=O(n^{1-\epsilon })\), with \(0<\epsilon <1\), the bootstrap sample \(\underline{X}_{n_1}^{*}=(X_1^{*},\dots ,X_{n_1}^{*})\), from the model \(F_n^{*}(x)=\frac{1}{n} \sum _{i=1}^{n}I_{\{X_i \le x\}}\), the empirical CDF associated with the original sample \(\underline{X}_n\). We choose the resample size \(n_1\) to be less than the original sample size to avoid underestimation of the bias (see Hall [11]). Next, associate to that bootstrap sample the corresponding bootstrap auxiliary statistic, denoted \(T_{k_1,n_1}^{\bullet ,*}\), \(1< k_1 < n_1\). Then, with the notation

we have that

Consequently, for another sample size \(n_2=n_1^2/n\),

We are now able to estimate \(k_{0}^{\bullet }(n)\), on the basis of any estimate \(\hat{\rho }\) of \(\rho \). With \(\hat{k}_{{0|T}}^{\bullet ,*}\) denoting the sample counterpart of \(k_{{0|T}}^{\bullet ,*}\), \(\hat{\rho }\) the \(\rho \)-estimate and taking into account (10), we can build the \(k_0\)-estimate,

and the \(\xi \)-estimate

A few questions, some of them with answers outside the scope of this paper, may be raised: How does the bootstrap method work for small or moderate sample sizes? Is the method strongly dependent on the choice of \(n_1\)? What is the type of the sample path of the EVI-estimator, as a function of \(n_1\)? What is the sensitivity of the bootstrap method with respect to the choice of the \(\rho \)-estimate? Although aware of the theoretical need to have \(n_1=o(n)\), what happens if we choose \(n_1=n\)?

2.2 An Algorithm for the Adaptive EVI-Estimation

The estimates \((\hat{\beta }, \hat{\rho })\), of the vector \((\beta , \rho )\) of second-order parameters, are the ones already used in previous papers:

-

1.

Given a sample \((x_1, \dots , x_n)\), consider the observed values of the \(\rho \)-estimators \(\hat{\rho }_\tau (k)\), introduced and studied in Fraga Alves et al.[12], for tuning parameters \(\tau =0\) and \(\tau =1\).

-

2.

Select \(\left\{ \hat{\rho }_\tau (k)\right\} _{k\in {\mathcal K}}\), with \(\mathcal{K}=([n^{0.995}],\ [n^{0.999}])\), and compute their median, denoted by \(\eta _\tau \), \(\tau =0,1\).

-

3.

Next compute \(I_\tau :=\sum _{k\in \mathcal{K}}\left( \hat{\rho }_\tau (k)-\eta _\tau \right) ^2\), \(\tau =0, 1\), and choose the tuning parameter \(\tau ^*=0\) if \(I_0 \le I_1\); otherwise, choose \(\tau ^*=1\).

-

4.

Work with \(\hat{\rho }\equiv \hat{\rho }_{{\tau ^*}}=\hat{\rho }_{{\tau ^*}}(k_1)\) and \(\hat{\beta }\equiv \hat{\beta }_{{\tau ^*}}:=\hat{\beta }_{ \hat{\rho }_{{\tau ^*}}}(k_1)\), \(k_1=[n^{0.999}]\) and \(\hat{\beta }_{\hat{\rho }}(k)\) given in Gomes and Martins [13].

Now, and with \(\hat{\xi }_{k,n}^\textrm{H}\) and \(\hat{\xi }_{k,n}^\textrm{PPWM}\) respectively defined in (6) and (7), the algorithm goes on with the following steps:

-

5.

Compute \(\hat{\xi }_{k,n}^{\bullet }\), \(k=1,\dots ,n-1\), \(\bullet =\textrm{H}\ \text{ and/or }\ \textrm{PPWM}\).

-

6.

Next, consider a sub-sample size \(n_1=o(n)\), and \(n_2=[n_1^2/n]+1\).

-

7.

For l from 1 until B, generate independently B bootstrap samples \((x_1^{*},\dots ,x_{n_2}^{*})\) and \((x_1^{*}, \dots ,x_{n_2}^{*},x_{n_2+1}^{*},\dots ,x_{n_1}^{*})\), of sizes \(n_2\) and \(n_1\), respectively, from the empirical CDF, \(F_n^{*}(x)=\frac{1}{n}\sum _{i=1}^{n} I_{\{X_i\le x\}}\), associated with the observed sample \((x_1, \dots , x_n)\).

-

8.

Denoting by \(T_{k,n}^{\bullet ,*}\) the bootstrap counterpart of \(T_{k,n}^{\bullet }\), defined in (11), obtain \((t_{k,n_1,l}^{\bullet , *},\ t_{k,n_2,l}^{\bullet , *})\), \(1\le l \le B\), the observed values of the statistic \(T_{k,n_i}^{\bullet , *},\ i=1,2\). For \(k=2,\dots ,n_i-1\), compute

$$ \textrm{MSE}^{\bullet , *}(n_i,k)=\frac{1}{B}\sum \limits _{l=1}^B\big (t_{k,n_i,l}^{\bullet , *}\big )^2,\ $$and obtain

$$ \hat{k}_{{0|T}}^{\bullet , *}(n_i) := \arg \min _{1< k < n_i} \textrm{MSE}^{\bullet , *} (n_i,k),\quad i=1,2. $$ -

9.

Compute the threshold estimate \(\hat{k}_{0}^{\bullet , *}\), in (12).

-

10.

Finally obtain

$$\hat{\xi }^{\bullet ,*}\equiv \hat{\xi }^{\bullet ,*}({n;n_1}) = \hat{\xi }_{\hat{k}_{{0|T}}^{\bullet ,*}(n;n_1), n},$$already provided in (13).

Such an algorithm needs to be computationally validated, a topic we deal with in the next section. Further, note that bootstrap confidence intervals (CIs) are easily associated with the estimates presented through the replication of this algorithm r times.

3 A Small-Scale Simulation Study

In this section, we have implemented a multi-sample Monte Carlo simulation experiment of size 1000, to obtain the distributional behaviour of the EVI adaptive bootstrap estimates \(\hat{\xi }^{H,*}\) and \(\hat{\xi }^{PPWM,*}\) in (6) and (7), respectively. We have considered a re-sample of size \(n_1=[n^{0.955}]\) for samples of size \(n=100\), 200, 500, 750, 1000, 2000 and 5000 from the following models:

-

the Fréchet model, with d.f.

$$F(x)=\exp (-x^{-1/\xi }),\quad x>0,\quad \xi >0,$$with \(\xi =0.25\) (\(\rho =-1\));

-

the Burr model, with d.f.

$$F(x)=1-(1+x^{-\rho /\xi })^{1/\rho },\quad x>0,$$with \((\xi ,\rho )=(0.25,-0.75)\);

-

the Half-\(t_4\) model, i.e., the absolute value of a Student’s t with \(\nu =4\) degrees of freedom (\(\xi =0.25\), \(\rho =-0.5\)).

In Table 2 we present, for the above mentioned models, the multi-sample simulated (mean) double bootstrap optimal sample fraction (OSF), the mean (E) and median (med) of the EVI-estimates and the simulated RMSE for both EVI-estimators, as a function of the sample size n. The less biased EVI-estimate and the smallest RMSE is presented in bold. Although both estimators over-estimate the EVI, the consideration of the PPWM EVI-estimator leads to a less biased EVI-estimate, as expected. The PPWM estimation can also lead to a smaller RMSE, for models with \(|\rho |<1\).

4 A Case Study

Here, the performance of the adaptive double bootstrap procedure is illustrated through the analysis of a real dataset. The analysis was made in R software with the computer code developed in Caeiro and Gomes [14]. We used the dataset AutoClaims from a motor insurance portfolio. The data is available in the R package insuranceData [15]. The variable of interest is the amount paid on a closed claim, in dollars. There are \(n = 6773\) claims available. Since large claims are a topic of great concern in the Insurance Industry, accurate modelling of the right tail of the underlying distribution is extremely important. The Histogram and the Pareto Quantile-Quantile (QQ) Plot, in Fig. 2, are compatible with a Pareto-type underlying distribution.

In Fig. 3, we present the EVI-estimates provided by the Hill and the PPWM EVI-estimators in (6) and (7), respectively. Both estimators are upward biased for large k.

In Fig. 4, as a function of the sub-sample size \(n_1\), ranging from \(n_1=3990\) until \(n_1=6700\), we picture, at the left, the estimates \(\hat{k}_{0}^{\bullet ,*}(n_1)/n\) of the optimal sample fraction, \(k_0^\bullet /n\), for the adaptive double bootstrap estimation of \(\xi \) through the H and the PPWM estimators. Associated bootstrap EVI-estimates are pictured at the right. Contrarily to the bootstrap Hill, the bootstrap PPWM EVI-estimates are quite stable as a function of the sub-sample size \(n_1\) (see Fig. 4, right).

For a re-sample size \(n_1=[n^{0.955}] = 4554\), and \(B=250\) bootstrap generations, we were led to \(\hat{k}_{0}^{H,*} = 67\) and to \(\hat{\xi }^{H,*} = 0.3463\). This same algorithm applied to the PPWM estimator provide the bootstrap estimates \(\hat{k}_{0}^{PPWM,*} = 88\) and \(\hat{\xi }^{PPWM,*}=0.3301\).

5 Conclusions

In this paper we addressed the adaptive estimation of the EVI with the double bootstrap methodology associated to the Hill and the PPWM estimators. The presented simulation study shows that the adaptive PPWM EVI-estimator is usually less biased and provides a similar or a smaller RMSE than the adaptive Hill EVI-estimator. Moreover, the efficiency of the adaptive PPWM estimator relatively to the adaptive Hill estimator seems to improve as the asymptotic bias of estimators increases (as \(\rho \) increases). Further research concerning the sensitivity of the method on the choice of \(n_1\) will be addressed in the future.

References

Jenkinson, A.F.: The frequency distribution of the annual maximum (or minimum) values of meteorological elements. Q. J. R. Meteorol. Soc. 81, 158–171 (1955)

Von Mises, R.: La distribution de la plus grande de \(n\) valuers. Rev. Math. Union Interbalcanique 1, 141–160 (1936)

Hill, B.M.: A simple general approach to inference about the tail of a distribution. Ann. Stat. 3(5), 1163–1174 (1975)

Caeiro, F., Gomes, M.I.: Semi-parametric tail inference through probability-weighted moments. J. Stat. Plann. Inference 141(2), 937–950 (2011)

Beirlant, J., Herrmann, K., Teugels, J.: Estimation of the extreme value index. In: Extreme Events in Finance, pp. 97–115. Wiley (2016)

Fedotenkov, I.: A review of more than one hundred Pareto-tail index estimators. Statistica (Bologna) 80(3), 245–299 (2020)

Beirlant, J., Caeiro, F., Gomes, M.I.: An overview and open research topics in statistics of univariate extremes. Revstat-Stat. J. 10(1), 1–31 (2012)

Gomes, M.I., Oliveira, O.: The bootstrap methodology in statistics of extremes–choice of the optimal sample fraction. Extremes 4(4), 331–358 (2001)

Gomes, M.I., Mendonça, S., Pestana, D.: Adaptive reduced-bias tail index and var estimation via the bootstrap methodology. Commun. Stat.-Theory and Methods 40(16), 2946–2968 (2011)

Caeiro, F., Gomes, M.I., Vandewalle, B.: Semi-parametric probability-weighted moments estimation revisited. Methodol. Comput. Appl. Probab. 16(1), 1–29 (2014)

Hall, P.: Using the bootstrap to estimate mean squared error and select smoothing parameter in nonparametric problems. J. Multivar. Anal. 32(2), 177–203 (1990)

Fraga Alves, M.I., Gomes, M.I., De Haan, L.: A new class of semi-parametric estimators of the second order parameter. Portugaliae Math. 60(2), 193–213 (2003)

Gomes, M.I., Martins, M.J.: Asymptotically unbiased estimators of the tail index based on external estimation of the second order parameter. Extremes 5(1), 5–31 (2002)

Caeiro, F., Gomes, M.: Threshold selection in extreme value analysis. In: Extreme Value Modeling and Risk Analysis. Chapman and Hall/CRC, Boca Raton, FL

Wolny-Dominiak, A., Trzesiok, M.: insuranceData: A collection of insurance datasets useful in risk classification in non-life insurance (2014). R package version 1.0

Acknowledgements

Research partially supported by National Funds through FCT—Fundação para a Ciência e a Tecnologia, projects UIDB/00006/2020 and UIDP/00006/2020 (CEA/UL) and UIDB/00297/2020 and UIDP/00297/2020 (CMA/UNL).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Caeiro, F., Gomes, M.I. (2022). Computational Study of the Adaptive Estimation of the Extreme Value Index with Probability Weighted Moments. In: Bispo, R., Henriques-Rodrigues, L., Alpizar-Jara, R., de Carvalho, M. (eds) Recent Developments in Statistics and Data Science. SPE 2021. Springer Proceedings in Mathematics & Statistics, vol 398. Springer, Cham. https://doi.org/10.1007/978-3-031-12766-3_3

Download citation

DOI: https://doi.org/10.1007/978-3-031-12766-3_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-12765-6

Online ISBN: 978-3-031-12766-3

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)