Abstract

The output of CT imaging (after reconstruction) of soil samples are grayscale images with a series of gray values corresponding to soil components with different densities. Most current techniques to quantify the soil pore system rely on binary images, with the target objects or foreground pixels (e.g. pores) labelled as 1 and the background (e.g. soil) labelled as 0. This means the grayscale images need to be segmented to binary images before further quantification. However, because of the partial volume effect and noise in the images, in most cases the histogram of the grayscale image does not exhibit clear, distinct peaks, which makes image segmentation a very challenging task. Many filtering techniques have been developed to smooth image noise and enhance the contrast between the target and background, which can greatly help image segmentation if correctly used. Numerous image segmentation techniques have also been developed to deal with specific problems in a variety of disciplines. In soil science, a range of global and local segmentation methods have been used and their performances compared and summarized in previous studies. Recently, artificial intelligence (AI) has been used to segment soil CT images and showed great potential in extracting not only soil pore space, but also other soil components. In this chapter, we briefly summarize the widely used traditional global and local segmentation methods and then present a new segmentation protocol that combines machine learning and deep learning strategies to segment pore space in soil aggregate images. The results of the AI-based method are very promising, and we propose the AI techniques will soon be extensively used in the processing of the soil CT images.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

5.1 Image Processing: Cropping and Filtering

Depending on the type and the size of the sample, different decisions on the selection of a region of interest (ROI), i.e. cropping of X-ray CT images for subsequent image processing will be necessary. For instance, large samples collected in plastic tubes might present some structural damage in their peripheries depending on how the sample has been collected and it may be desirable to avoid such regions in any subsequent analysis (see Chap. 3 on soil sampling). In this case, extracting (or cropping) a regular-shaped ROI (cubic or cylindrical) in the adjacencies of the centre of the reconstructed image, which corresponds to the core of the sample, is frequently carried out (Galdos et al., 2020; Borges et al., 2019; Ferreira et al., 2018; Pires et al., 2017; Backeberg et al., 2017; Vogel, 1997). In contrast, if the intention is to evaluate the effects of the sampling procedure on the soil structure itself, it is often more appropriate to choose a ROI that encompasses the sample’s external surroundings. Some software (e.g. Avizo) even offer options for selecting irregular shaped ROI, based on a free-hand drawing of the desired region (Ferreira et al., 2019). When the sample is irregular, such as natural soil aggregates, it is more appropriate to consider the entire volume for the image processing since closed and open intra-aggregate pores can be concomitantly investigated. In this analysis, the aggregate must be carefully separated from its background instead of simply extracting a regular or irregular ROI (Bacq-Labreuil et al., 2019; Khan et al., 2012; Wang et al., 2012).

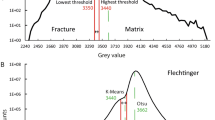

As previously described, after scanning and reconstruction, a set of 2-D digital images, each represented by a matrix of elementary data (pixels), is obtained. When stacked, the 2-D images slices form a 3-D image volume, which consists of elementary units called voxels. Usually, for visualization and subsequent analysis of the X-ray CT images, materials of diverse attenuation coefficients are linked to distinct shades of grey that characterizes the radiodensity of each voxel. X-ray CT images are normally generated with 256 (28) or 65,536 (216) grey values, which represent images with greyscale depths of 8- and 16-bits, respectively (Galdos et al., 2019; Marcheschi, 2008; Gillespy 3rd & Rowberg, 1994). Figure 5.1 presents a greyscale histogram in a typical soil X-ray CT image (16-bit). The frequency of appearance of a given grey value and consequently the presence of peaks in the histogram represent differences in the attenuation properties of the material: lower and higher grey values in the greyscale represent the photon attenuation by low and high-density materials in the soil, respectively. The presence of two noticeable peaks in the histogram is characteristic of a two-phase porous medium.

The correct delimitation of the peaks permits the identification of the phases of interest (segmentation) in the X-ray CT image. In the case of a ‘dried’ soil sample, the left and the right peak are associated with the soil pore space and the soil solid matrix, respectively (Fig. 5.1). However, it is common to find histograms from soil images presenting overlapping peaks, which requires more effort, and on some occasions considerably so, to define the peaks and identify the sample phases in the image. Among the factors that affect the possibility of delimiting the peaks in the histogram of an X-ray CT image is the signal-to-noise ratio attained during the acquisition of the image due to non-ideal photon statistics and the partial volume effect caused by the averaging of the X-ray intensity on the corresponding detector pixel that has to image a sharply contrasting boundary of the scanned object (Buzug, 2008). The use of specific filters, after image reconstruction, is recommended for denoising the image while preserving phase edges. Filters aimed at smoothing, sharpening, edge detection, and contrast enhancement generally improve the separation of peaks in the greyscale histogram and facilitate subsequent segmentation. In particular, edge detection filters can have significant impacts on the identification of phases and consequently on the obtention of reliable quantitative results. Thus, having the segmentation step in mind, the choice on the best filtering technique should consider its capability of producing a high-quality image without losing relevant original details.

Denoising algorithms can be classified as filtering methods in the spatial domain (operations are applied directly on the image matrix), transform domain (operations are applied after the image matrix is transformed into the frequency domain), or other domains (based, for instance, on statistical models schemes and random fields) (Goyal et al., 2020). Spatial domain methods for image denoising are classical and often applied when considering X-ray CT images. These methods exploit similarities between pixels or patches of an image through local filters, in which the denoising of a pixel is restricted by spatial distance, or non-local filters, which take advantage of the correlation amongst the entire range of pixels in an image (Goyal et al., 2020). The image filtering (in the spatial domain) is based on a spatial convolution operation between the image itself, represented by an M × N (in the simplest 2-D case) dimension matrix, and a pre-defined K × K matrix, known as the kernel or mask, which results in an image with the same original dimension (M × N). In other words, the filtering process consists of multiplying pixel values from an image by a kernel matrix that represents the filter (Marcheschi, 2008). In the filtering computation, the kernel is virtually moved pixel by pixel (voxel by voxel, in 3-D) over the input image. Commonly applied filters for X-ray CT image enhancement are: low-pass, Gaussian, median, non-local means, unsharp mask, among others (Tuller et al., 2013; Marcheschi, 2008).

The low-pass filter, sometimes called box filter, involves replacing each pixel of the image by the average of the neighbourhood pixels specified by the kernel (Keselbrener et al., 1992). Only low spatial frequencies are maintained in the digital images after the filter application. The elimination of high frequencies, associated with image noise, has the disadvantage of also eliminating well-defined edges, consequently blurring the resulted image (Marcheschi, 2008). The Gaussian filter considers a discrete 2-D Gaussian function to define the kernel (Pathmanabhan & Dinesh, 2007), so that the kernel coefficients are inversely proportional to the central value of the matrix (K × K). In this way, the pixels located in the centre have a higher weighting, i.e. greater importance, than those at the image border. One of the drawbacks of this method is there is no preservation of the image edges, producing a blurred and unclear filtered image (Marcheschi, 2008). The median filter represents a nonlinear method that, instead of considering weights as the kernel coefficients, computes the median of the pixel values in the specified kernel neighbourhood (Heinzl et al., 2018). For being characterized by preserving the edges between the different phases found in the digital images (Müter et al., 2012; Sun & Neuvo, 1994), the median filter is particularly useful and one of the most popular filters for processing of soil CT images (Oliveira et al., 2021; Zhou et al., 2020; Jarvis et al., 2017; Burr-Hersey et al., 2017).

The non-local means (NLM) filter was first introduced by Buades et al. (2005) and seeks to exploit similarity amongst pixels in a non-local manner. It is a linear filter that, instead of using small-sized kernels, potentially utilizes the entire image as a search window (Schlüter et al., 2014). The presence of similar features or patterns in the image is explored by the NLM filter (Goyal et al., 2020). Buades et al. (2011) highlighted that the most similar pixels to a given pixel have no reason to be spatially close, which is the assumption for the previously described local filters; instead, similar pixel neighbourhoods can occur anywhere in the image. This justifies the necessity to scan a large portion of the image in search of the pixels that are similar to the pixel under denoising. Thus, the neighbourhood of a pixel i can be defined as any set of pixels j in the image such that a window around j resembles a window around i (this can be performed in both 2-D and 3-D approaches). Therefore, the pixels in that 2-D or 3-D neighbourhoods are averaged to determine the new intensity value at i (the denoised pixel) (Buades et al., 2004). The NLM filter is known to be very efficient at reducing image noise without significantly losing information at phase edges and, therefore, has been the choice of many authors to filter X-ray CT images of porous media such as rocks (Garfi et al., 2020) and soils (Gao et al., 2019; Pöhlitz et al., 2019; Ferreira et al., 2018, 2019). However, a sharpening filter, for instance, the unsharp masking and sharpening high-boost filter, is often necessary after the image denoising to accentuate the high frequencies present in the image, making the discontinuous structures (e.g., contours) sharper (Pires et al., 2020; Distante & Distante, 2020; Tuller et al., 2013).

5.2 Image Segmentation

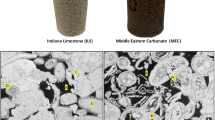

After filtering, the next step in image processing is usually segmentation, that is, the identification or separation of the phases of interest (e.g. solid matrix, pores, water, organic matter). This is the most important step for obtaining reliable quantitative information. A digital greyscale image is characterized by pixels each containing intensity value information. As mentioned, the grey values are distributed among pixels between black (0) and white (65,535) (assuming a 16-bit image) (Fig. 5.1). Depending on the objectives and the characteristics of the scanned material, different segmentation methods can be applied using either manual, semi-automatic, or full-automatic procedures (Yen et al., 1995). The manual procedure is limited by the time required for the segmentation of the samples. This is mainly due to the necessity to carefully check the edges that separate the phases of interest (e.g. pores from the solid matrix when the soil porous system is analysed to ensure an accurate segmentation). Semi-automatic procedures have the advantage that specific functions can be chosen to define the regions occupied by specific phases. These functions are used to select pixels having similar grey values and classifying them as pores or solids. This procedure speeds up the segmentation procedure, but typically requires powerful computer processing for the analysis of 3-D images. For studies on porous media, such as soils and rocks, global and locally adaptive segmentation methods are the most frequently used (Schlüter et al., 2014; Houston et al., 2013; Tuller et al., 2013; Iassonov et al., 2009; Wang, 2008; Jan, 2006), although machine learning-based segmentation has also been applied recently (Han et al., 2019; Chauhan et al., 2016).

5.2.1 Global Segmentation

Global segmentation uses a single threshold value for all pixels in the images. The value 1 (white) is assigned to pixels whose grey values are higher than the pre-defined threshold value, while 0 (black) is assigned to the remaining pixels (those whose grey values are equal or lower than the threshold value) (Iassonov et al., 2009). Methods to determine the threshold value are based on the greyscale histogram of the digital image in question (Fig. 5.1). Several widely used methods of global segmentation are based on maximum between-class variances, minimum error, maximum entropy, or Fuzzy C-Means (FCM) (Schlüter et al., 2014).

Otsu’s (1979) method is one of the most popular global segmentation methods used in soil sciences (Oliveira et al., 2020; Pires et al., 2019; Pöhlitz et al., 2019; Leue et al., 2019). The Otsu method was created to find a value that provides better separation between the background and foreground (i.e. object or phase of interest) by minimizing their weighted within-class variance. The method works better for images with good contrast between background and foreground, images with bimodal histograms, and uniform lighting conditions (Iassonov et al., 2009). The minimum error thresholding method assumes the existence of two Gaussian functions related to the background and the foreground, and the optimum threshold is determined by optimizing a criterion function related to the Bayes risk (Jiulun & Winxin, 1997; Kittler & Illingworth, 1986). The maximum entropy method is based on the calculation of the entropy of the object and background (Gull & Skilling, 1984). The FCM is related to a combination of the classic k-means, which is a hard clustering technique in which each pattern is allocated to a single cluster, and the fuzzy set theory (Landini et al., 2008). The FCM is an iterative clustering method that involves minimizing the objective function (weighted squared root error function) (Schlüter et al., 2014). The minimization of the c-means functional, which is represented by a nonlinear optimization problem, is solved by different methods such as genetic algorithms, iterative minimization, etc. The objective function is minimized to a fuzzy membership (U) and a set of cluster centroids. The fuzzy membership is recomputed for each iterative step until an unchangeable U is minimized.

Although the threshold values can be determined automatically by the above-mentioned global segmentation methods and others, they might fail to segment an object from the background when there are no distinct peaks in the histogram because of the complexity of the soil, i.e. heterogeneity in the soil fabric. In some cases, researchers have determined the threshold value via visual inspection of the soil images; however, this is subjective and lacks consistency and reproducibility, therefore great caution is needed to ensure consistent phase allocation when choosing the threshold values for different samples.

Despite their simplicity and low computational cost, global segmentation methods do not consider how the grey values of the pixels/voxels are spatially distributed in images (Schlüter et al., 2014). The quality of the generated binary image greatly depends on the extent and possibility of separation between the peaks corresponding to the foreground and background in the histogram. Frequently, even departing from properly filtered X-ray CT images, global methods fail in providing a good quality segmentation, which justifies the need for alternative approaches.

5.2.2 Local Segmentation

Different from the global methods, locally adaptive segmentation methods are known to account for pixel neighbourhood statistics in the class assignment problem (Schlüter et al., 2014). Examples of local segmentation methods are the hysteresis, indicator kriging, and watershed methods. The hysteresis segmentation is employed in problems that involve two-class segmentation (Pini & Madonna, 2016). The procedure is carried out first segmenting the digital image with an upper threshold (high-edge) to identify object pixels for which the uncertainty of class assignment is highest. Thus, pixels brighter than the upper threshold value are considered as belonging to the object. These pixels are assigned as true foreground pixels. The second threshold (low-edge) identifies pixels that belong to a low-intensity class. Pixels darker than this lower threshold will be assigned as the background. The use of a pre-defined kernel (for example, a square) is employed for connecting the pixels defined as high- and low-edges in the segmentation procedure (Pini & Madonna, 2016). Pixels with intermediate grey values (uncertain) are assigned to the low-intensity class when their neighbouring pixels belong to the low-intensity class. Otherwise, they are considered as objects when connected to pixels of this class (Schlüter et al., 2014). Unassigned pixels are classified as high-intensity class pixels. The quality of the segmentation by this method depends mainly on the choice of the low- and high-edge thresholds.

The indicator kriging method completes the segmentation via the calculation of the indicator covariance functions from the image-data (Oh & Lindquist, 1999). In the method, a lower threshold value is defined and the pixels with grey values below this cut-off belong to the background, while the second class includes those pixels with grey values larger than a defined higher threshold value, for example, the objects (Oh & Lindquist, 1999). Unassigned pixels, with grey values between the higher and lower threshold values, will be assigned to the first or second classes depending on the probability that the voxel belong to the background or foreground. The probability is estimated employing the indicator kriging method, which considers the spatial location of the undefined pixel and its neighbours (Oh & Lindquist, 1999).

The watershed segmentation method considers a digital image as a topographic surface, and the grey value of the pixels is interpreted as altitudes on the surface (Jan, 2006). By applying the gradient operator on the greyscale image, an output image is obtained in which the valleys (local minimum) correspond to the interior of phases (e.g. pores or solids) and are surrounded by ‘mountains’ that correspond to the edges of phases (boundaries between pores and solids) (Fig. 5.2) (Beucher & Meyer, 1993). In other words, the watershed transform decomposes an image into catchment basins that are filled with ‘water’ in a ‘flooding process’. When the waterfront from different basins reaches the highest altitudes, they are prevented from merging by the dams represented in the gradient image by the phase edges (Jan, 2006). The selection of markers (seeds selected to start the immersion process) inside the catchment basins is a crucial step for this method and can be manually or automatically defined, considering specific knowledge of the objects (phases) (Fig. 5.2). The method generally succeeds, even when there is poor contrast between regions of the digital image.

Schematics of watershed segmentation: (a) Greyscale 2-D section of a soil X-ray CT image showing the pore and solid phases in darker and brighter grey tones, respectively. (b) Output image of the gradient operator applied on the greyscale image; dark regions represent the ‘valleys’ (local minimum) corresponding to the interior of pores and solids; bright lines represent the ‘mountains’ or ‘dams’ corresponding to the boundaries of these pores and solids. (c, d) Catchment basins filled with markers (seeds) representing the solids (I) and pores (II). (e) Result of the ‘flooding’ process applied in the watershed segmentation

5.2.3 Machine Learning-Based Segmentation as a Solution to New X-ray CT Imaging Challenges

In recent years, artificial intelligence (AI) has become an interesting and powerful tool for the segmentation and analysis of tomographic images (Weikert et al., 2020). Among several definitions of AI, the following by Ertel (2017) is most appropriate: ‘the study of how to make computers do things at which, at the moment, people are better’. Machine learning is one of the techniques used by AI to achieve this purpose (Chowdhary, 2020). Machine learning algorithms are usually divided into unsupervised and supervised learning algorithms, among which the latter is currently more widely applied (Joshi, 2020). For a classification problem such as the segmentation of a digital image in different classes (phases), supervised algorithms need samples with labelled classes (training data) from which a mathematical model learns relevant parameters and becomes able to make predictions on new samples. Supervised learning comprises different approaches based on, for example, the concepts of decision trees, support vector machines (SVM), and artificial neural networks (Joshi, 2020; Chauhan et al., 2016).

Decision trees consist of a hierarchical decision-making process at each node of a tree-type structure, so that the classification task is guided through multiple branches of alternative decisions. Thus, creating and aggregating multiple trees of similar architecture results in an ensemble method (e.g., bagging, random forest, and boosting ensembles) that permits producing robust predictive models with greater classification performances (Joshi, 2020; Polikar, 2012). The SVM, on the other hand, was originally developed to perform binary classification based on the construction of an optimal hyperplane that imposes a maximum separation between two classes. Some other approaches are currently being considered to extend the SVM method for multi-class classification. Despite increasing the complexity of the problem, which requires tuning of some hyperparameters, it may provide high accuracy and generalization capabilities (Tzotsos & Argialas, 2008). With the recent technological advances, neural networks are emerging in the context of the learning process called deep learning, which is a particular area of machine learning. Deep artificial neural networks contain multiple hidden layers of neurons aimed at learning complex patterns in large volumes of data (Wani et al., 2020). It can only converge for satisfying accuracy if trained with enough data followed by consistent computational manipulation. The full potential of this technology has been enabled by the advent of graphics processor-based computation since it allows parallel training of deep networks (Joshi, 2020).

Known challenges when applying X-ray CT to soil-related investigations are (1) satisfying the repetition/replication requirements for appropriate statistical analysis, since X-ray CT measurements of dense samples (those substantially composed of high attenuation materials) are time-consuming and often costly, and (2) processing and segmenting the whole image dataset to obtain quantitative information. The former challenge will no longer be an issue for those with access to fourth-generation Synchrotron Light Source imaging as the high photon flux provided at these facilities, in the hard X-ray range, enables fast image acquisition even of materials composed of high Z (atomic number) elements (Craievich, 2020). This characteristic is essential for 4-D (time-resolved three-dimensional) experiments which consist of acquiring a sequence of 3-D images that allows understanding dynamic processes (Ferreira et al., 2022). For instance, Pak et al. (2020) recently demonstrated that this experimental approach, with a scanning time of ~6 min per 3-D image, had great importance for observations of pore-scale processes governing the multiphase flow in porous media. Comparatively, at MOGNO beamline (MicrO and NanO Tomography beamline at the Brazilian Synchrotron Light Source, SIRIUS), it is expected that for future research 3-D imagery will be possible in few seconds (1–5 s) (Costa et al., 2017; Ferreira et al., 2022). Thus, the necessity of fast processing and segmentation of very large image datasets is going to be a reality at MOGNO, and in upcoming beamlines dedicated to X-ray CT throughout the world (though it is worth noting the majority of studies using CT to explore soils are currently undertaken using benchtop systems).

In this chapter we propose the application of strategies based on Machine Learning followed by Deep Learning (Pinto et al., 2022; Spina et al., 2018; Vasconcelos et al., 2018) to segment soil 3-D images into its pore and solid phases. This will serve as a preparation to deal with large soil image datasets such as those used in time-resolved soil studies.

5.3 An X-ray CT Image Segmentation Protocol Based on Machine Learning and Deep Learning Strategies: A Case Study

5.3.1 X-ray CT Image Acquisition and Preparation for the Machine Learning Pipeline

3-D images of soil aggregates (~3 mm diameter each) generated at the X-ray microtomography beamline (IMX)—second-generation light source—at the Brazilian Synchrotron Light Laboratory, Brazilian Center for Energy Research and Materials (LNLS/CNPEM) were selected as demonstration specimens. The samples (33 in total) were scanned using a polychromatic beam, filtered by a 550 μm thick Si filter, and 1024 projections were acquired over 180° rotation of the sample. The exposure time for each projection was 2 s, leading to a total scan time of ~35 min per sample. An in-house filtered back projection-based algorithm (Miqueles et al., 2018) was applied to reconstruct the images in 16-bit, raw type, which had a maximum array of 2048 × 2048 × 2048 voxels, with a voxel side length of 1.64 μm, resulting in image volumes of 3.36 × 3.36 × 3.36 mm3 each.

For the development of the current segmentation protocol, a series of steps was adopted and is illustrated in Figs. 5.3, 5.4, 5.5. The steps presented in Fig. 5.3 were performed using the Avizo software v. 2019.4. They served for preparing the images for examination via the machine learning techniques (Note: this task can be performed using other software or included in the machine learning pipeline). To start, one 3-D image volume among the entire dataset was randomly selected, and a sub-volume of 2048 × 2048 × 1938 voxels (x, y, z coordinates), containing the aggregate, was extracted to avoid unnecessary computational processing in regions exclusively containing background information. Fig. 5.3a shows a slice from the raw image at the central region of the aggregate (z = 900). Intrinsically X-ray CT images are characterized by having a certain level of noise, mainly introduced by an unfavourable conversion rate of X-ray photons to visible light when scintillator-based detection systems are used (Banhart, 2008). Before any analysis, the image noise needs to be reduced to allow a more accurate segmentation, especially if the noise is too prominent, as the case presented in Fig. 5.3a. In this study, the image was denoised by a non-local means filter (INLM) (Mode: GPU Adaptive Manifold 3-D—spatial standard deviation: 1, intensity standard deviation: 0.3, search window: 10, local neighbourhood: 5) (Fig. 5.3b) and subsequently had its edges enhanced by an unsharp mask (INLM + UM) filter (Edge size: 4, Edge contrast: 0.7, Brightness threshold: 0) (Fig. 5.3c). This produced evident effects on the greyscale histogram associated with the images (Fig. 5.3d), imposing a separation between the peaks corresponding to the air (left peak) and the solid (right peak) phases. It is seen that the effect was more pronounced for INLM than for INLM + UM.

Tomographic slice at the central region of a soil aggregate (z = 900): (a) raw image, (b) after non-local means filter (INLM), and (c) non-local means filter followed by the unsharp mask filter (INLM + UM). (d) Greyscale histograms of the raw, INLM, and INLM + UM images. (e) Seeds for an immersion process at the aggregate (purple) and background (pink). (f) INLM after background masking and simple thresholding based on the valley between peaks (33,289) of INLM histogram (blue represents grey values <33,289)

(a) Tomographic slice at the central region of a soil aggregate (z = 900) after non-local means filter followed by unsharp mask filter (INLM + UM) and background masking (in black). Waterpixels in a zoomed fraction of (a) with seed spacing and compactness values, respectively, set to (b) 9 and 1000, and (c) 3 and 10. (d) Handcrafted annotations on (a) at background (pink), solid matrix (green) and pores (blue). (e) Classification result of (a) via machine learning, where pores and solid matrix are in royal and turquoise blue, respectively. (f) Seeds for an immersion process at the background (pink), solid matrix (turquoise blue), and pores (royal blue). (g) Result of watershed algorithm computation based on (f)

(a) Orthogonal tomographic slices of a soil aggregate after non-local means filter (INLM), and background masking (in black), constituting the Data (see Table 5.1). (b) Same orthogonal slices showing pores in blue from the corresponding label image resulted from Fig. 5.4g. (c) Slice z = 900 of (a). (d) Greyscale histogram of the (e) inference probability distribution output for the pore class, with the threshold of 9.5 × 108. (f) The final classification of pores in blue with the threshold of 10.5 × 108. (g) Same orthogonal slices of (a) showing pores in blue from the final classification via deep learning. (h) Orthogonal tomographic slices of another soil aggregate (INLM) with the masked background (in black) for a blind inference test and (i) the resulting classification of pores with the threshold of 10.5 × 108

It is important to explain that restricting the X-ray CT analysis to a regular ROI (e.g. cube) inside a naturally irregular soil aggregate image, something frequently performed in studies involving soil aggregate image analysis (Camargo et al., 2022; Ma et al., 2020; Ferreira et al., 2019; Peth et al., 2008), disregards some information from the borders of the aggregate. An interesting way of exploring the entire aggregate volume is to assign a label to the background to account for its volume whenever necessary (e.g. in Zhao et al., 2020). For that, markers slightly smaller (purple-coloured, Fig. 5.3e) and larger (pink-coloured) than the aggregate boundaries were generated, representing seeds for the aggregate and background, respectively, to be subsequently considered in an immersion process. The markers were created using a combination of simple thresholding, morphological filters such as erosion/dilation, and fill-holes operations. High-gradient zones in the greyscale image were identified by an algorithm of edge detection (default Canny method in Avizo) and the unclassified zones were then filled by applying a watershed algorithm (Schlüter et al., 2014; Beucher & Lantuejoul, 1979), resulting in well-defined labels for the background and the aggregate regions. In Fig. 5.3f, the black area (zero value assigned) represents the background region after using the result from the watershed method to mask the INLM (Fig. 5.3b). Figure 5.3f shows, in blue, the result of simply considering the grey value between the air and solid peaks (33,289, INLM curve, Fig. 5.3d) as a threshold value to segment the pore and solid phases. The outcome of this choice is, in general, regions of under and overestimation of pores mainly at the centre and close to the borders of the aggregate, respectively. Similar strategies using thresholding, morphological filters, and fill-holes operations failed in the attempt of generating accurate markers for the pore and solid phases, to apply a watershed algorithm. This happened as slight variations in the chosen threshold value eliminated important markers inside pores or created fake markers in the solid matrix. Thus, this reinforces the necessity of alternative segmentation solutions that not only make the traditional methods faster but also provide reliable results.

5.3.2 Machine Learning Pipeline

Aiming to accomplish the real-time segmentation of large 4-D X-ray CT data especially at SIRIUS synchrotron facility, Annotat3D*, a software based on Machine Learning and High-Performance Computing (HPC) techniques (Pinto et al., 2022; Spina et al., 2018; Vasconcelos et al., 2018) has been developed by the Scientific Computing Group at the Brazilian Synchrotron Light Laboratory, Brazilian Center for Energy Research and Materials (LNLS/CNPEM) (*in-house software, i.e. not currently released for wider use). Annotat3D was employed to conduct the steps illustrated in Fig. 5.4a-e. Different from the available machine learning-based segmentation algorithms, such as Waikato Environment for Knowledge Analysis (WEKA) (Arganda-Carreras et al., 2017), which works on a pixel-level classification, the Annotat3D software is based on a superpixel classification (Vasconcelos et al., 2018). A superpixel is formed by a group of neighbouring pixels with similar intensities, meaning that fewer data, composed of local contextual information, need to be processed for the final segmentation. In addition, since the method is implemented using HPC with parallel programming via multiple GPUs, the superpixel classification requires much less computational time than a pixel-level classification. In this context, a method to classify superpixels in soil images, belonging to either solid or pore space, must be optimized by setting important parameters related to: feature extraction, superpixel arrangement, and the type of classifier to be used.

Feature extraction consists of filtering the input greyscale image to enrich the information captured by the superpixels, i.e. ensuring that relevant properties of the objects of interest are considered. In other words, extracted feature maps are filtered versions of the input image. The following filter options are currently available: None (Original Image), Fast Fourier Transform (FFT) Gauss, FFT Gabor, FFT DoG, Sobel, Membrane Projections, Minimum, Maximum, Average, Variance, Median, and LBP (local binary patterns). Also, a feature selection procedure can be enabled to impose a percentage threshold criterion (1% is the default) on the importance of features for classification accuracy. In this way, those contributing with less than the importance threshold value may be disregarded, resulting in less computational processing time without a reduction in the classification quality. The current version of the Annotat3D software offers two approaches to estimate the superpixels using multi-GPU code: the Simple Linear Iterative Clustering (SLIC) algorithm (Achanta et al., 2012) and a method based on the watershed transformation (Gonçalves et al., 2019). In the latter case, the resulting superpixels are called ‘waterpixels’ and they may be computed in 2-D, for each of the z-slices, or in 3-D. Both approaches allow controlling the spatial distribution of the superpixels by setting the two following parameters: seed spacing (increasing this number generates a lower amount of superpixels) and compactness (increasing this number smooths the borders of superpixels, making them more regular). Additionally, two types of classifiers are currently available: the Random Forest and the Support Vector Machines (Ho, 1998; Cortes & Vapnik, 1995).

Figure 5.4a shows the same slice (z = 900) presented in Fig. 5.3, after using the result from the watershed method (Fig. 5.3e) to mask the background (zero value assigned) of INLM + UM (Fig. 5.3c). Hence, the masked INLM + UM was used as an input image for the machine-learning pipeline. INLM + UM was chosen over INLM for this study but there would be no restriction to use the INLM, which as well demonstrated good results. For the tested protocol, all filters were enabled for feature extraction with the ‘default feature selection procedure’. The watershed transform-based method was selected to compute 2-D waterpixels and the Random Forest classifier with 200 trees was selected to be trained.

It is important to emphasize that when choosing among the approaches for superpixel estimation, one must keep in mind that to avoid errors in the subsequent classification, the superpixels should fit the edges of the object of interest in the image, e.g. pores in the case of the soil. For instance, for better visualization, Fig. 5.4b shows the waterpixels formed in a zoomed fraction of the same slice with seed spacing and compactness values set to 9 and 1000, which seemed to be well adapted to the pore borders at first glance. However, this approach provided low-quality classification, especially for narrower pores (not presented). The best classification result was obtained by setting seed spacing and compactness values to 3 and 10, respectively. This produced a narrower distribution of waterpixels (Fig. 5.4c), which was tested to confirm its functionality.

Once the superpixels were generated, the next step consisted in declaring the classes (labels) for image segmentation, which were in the present case: background, soil solid matrix, and pores. As a supervised method, the Annotat3D software operator uses his/her judgement to add markers (or annotations) on a given slice of the input image in regions of background (pink), solid matrix (green), and soil pores (blue), as exemplified in Fig. 5.4d (superpixels not shown). The marked superpixels serve as a training set for the classifier. In other words, the classifier learns from the input labelled superpixels, which are mathematically correlated with the extracted feature maps and provide a full classification (segmentation) of the remaining superpixels of that particular slice or of the image as a whole. As the classifier takes longer in the latter case, the best-tested approach was to successively check the classification result for slices where markers were added (saving the cumulative markers at the end of the optimization for each slice), until the operator judges it is time to check the result for the entire image. In this process, which is optimized as more slices are marked, it is important to identify the existence of frequently misclassified regions and make sure that correct markers are placed on them, so that the classifier can be optimized.

One can assume that the necessity of few markers for a good classification makes the classifier more efficient and consequently less operator time will be required in the process. For the tested protocol, markers were added in approximately 200 out of 1938 slices when the classification quality for the whole image was considered reasonable. It is important to mention that reaching an acceptable classification for the whole image allows the operator to save the classifier method and/or the training features as different files. One of these files can be later uploaded and used for classifying any other image from the original dataset. Nonetheless, these images need to undergo the same preparation steps (i.e. filtering and background masking, Fig. 5.3). The file containing the classifier model cannot be further modified for other input images, whereas the file containing the training features allows the operator to change the superpixel estimation algorithm, the classifier type, and, if non-reliable results are achieved, add new markers to improve the classification.

Figure 5.4e shows the classification result for the previously mentioned slice (z = 900) which shows pores were better delimited via machine learning than by simple thresholding (Fig. 5.3f). There was less under and overestimation of pores at the centre and the outer regions of the aggregate, respectively. Nevertheless, it is still possible to identify some flaws by carefully comparing Fig. 5.4e with Fig. 5.4a. Therefore, the resultant labels from the machine learning classification were used to define seeds for a final watershed segmentation of INLM + UM in Avizo. Aiming to leave an unclassified zone (grey) for the watershed algorithm computation (Fig. 5.4f), the pore (royal blue) and solid matrix (turquoise blue) labels were minimally eroded, while the background (pink) was not modified. The result of this procedure is depicted in Fig. 5.4g, which demonstrates a very good agreement of pore delimitations when compared with Fig. 5.4a.

With respect to the segmentation protocol described so far, one aspect worth noting is that the steps illustrated in Fig. 5.3, which were conducted using the Avizo software v. 2019.4, could alternatively have been included in the machine learning pipeline. One alternative would be to train the classifier from scratch, that is, without masking the background of INLM + UM. However, this imposes a higher level of difficulty for the optimization of the classifier, and, based on the current experience, it would be more time-consuming. Another option would be to split the machine learning pipeline, creating two different classifiers: one specialized in classifying the soil aggregate and background, which would replace the steps described in Fig. 5.3 to create the background mask (not tested here), and a second one specialized in classifying the solid matrix and pores, just like that described in Fig. 5.4.

Another relevant aspect to consider is that approximately 10 days were required to reach the result presented in Fig. 5.4g. In these 10 days, the conducted steps were: the preparation of the input image for the machine learning pipeline, the addition of markers in ~200 slices, and final watershed segmentation. The addition of markers was the most time-consuming step and was more related to the current efficiency of the machine learning algorithms than with computer processing power limitations. Presently, this operation is far from being a fast process, but the great advantage lies in the possibility of applying the trained classifier or training features originated from this laborious process for fast segmentation of the remaining images from the same dataset (this currently takes just few minutes per image), which was tested and presented a visual classification quality similar to that obtained in Fig. 5.4e (not presented here). Annotat3D software is under continuous development and has been shown to become faster and more efficient with the on-going improvements, thus requiring fewer markers per slice and fewer slices in total containing markers, which is currently what slows the process. Although there are alternative machine learning tools available that can be used for image processing, such as ImageJ (WEKA) and Dragonfly, Annotat3D is being specifically designed to handle large amounts of data in a multi-node/multi-GPU HPC environment. The software encompasses highly optimized tools to visualize, process, and segment these data in timely manner, preferably faster than other available alternatives.

5.3.3 Deep Learning Pipeline

At this point, the proposed segmentation protocol advances from the classification result obtained via machine learning and watershed methods (Fig. 5.4g) to the application of deep learning techniques to make the whole process more general, robust, and automated. More specifically, deep learning techniques aim at finding good representations of the input data as a hierarchy of features, with more abstract representations computed in terms of less abstract ones (Goodfellow et al., 2016). While traditional machine learning algorithms cannot learn features directly from the data and thus rely more on human effort (Fig. 5.4d), deep learning algorithms perform both feature extraction and classification from previously computed training label images (Bengio, 2009). The same in-house software, Annotat3D, was used to apply deep learning techniques to train a convolutional neural network to reproduce a classification considered as reference (ground truth), and also classify new similar data. Thus, it was necessary to methodically test the parameters listed in Table 5.1 to reach an optimized set of training constraints.

In this study, as a start, a workspace was created, which consists of a directory automatically structured by the Annotat3D software to save all the decisions from the deep learning pipeline. Next, a dataset was built to serve as a basis for the training step (Table 5.1). The dataset included: the masked INLM as the input data (16-bit; raw type—orthogonal planes of the image shown in Figs. 5.5a, c), the corresponding label image resulting from the machine learning and watershed methods (8-bit; raw type—orthogonal planes of the image with pores represented in blue, Fig. 5.5b), and the weight map (8-bit; raw type) based on the label image. The label image consists of values 0, 1, and 2, attributed to the background, pores, and solid matrix, respectively, and these values essentially identify the different classes present in the label image. On the other hand, the weight map was used to compel the network to focus on the pores, as this class represented, in the analysed case, only 1% of the entire image and consequently represented a more challenging task in the performed classification. In the weight map from the investigated image, intensities of 1, 255, 100, and 20 were attributed to the background, centre of pores, borders of pores, and solid matrix, respectively. After loading the data, label, and the weight map (images with dimensions of 2048 × 2048 × 1964 voxels and a voxel side length of 1.64 μm), the sampling was arranged by assigning the following values to the number of classes, sample size, and patch size: 3, 40, and 500 × 500 × 500, respectively, resulting in a dataset of 30.7 GB. In the Annotat3D software, the sample size characterizes the number of sub-images, called patches, to be selected from the input images (data, label, and weight map) to subsequently feed the neural network training, while the patch size represents the fixed 3-D dimensions of each of these sub-images. One can also include image augmentation strategies such as vertical/horizontal flip, contrast variation, additional Gaussian blur, and elastic deformation (Table 5.1) to enrich the training data (not tested here). However, some precautions should be taken as the size of the dataset may be dramatically increased by doing this.

Convolution Neural Networks (CNNs) are the most successful artificial neural networks for extracting features from sub-images used for image classification (Peixinho, 2017). In essence, CNNs are composed of a sequence of layers that combine linear convolution and non-linear operations such as activation, pooling, and normalization. The Annotat3D software offers two different CNN architectures: the Unet (2-D and 3-D approaches) and the Vnet (only for the 3-D approach) (Milletari et al., 2016; Ronneberger et al., 2015). It was observed that Vnet provided the best classification results for the current segmentation protocol. After choosing the neural network architecture (Vnet), the dataset was loaded, and, at this point, the Annotat3D software shows a list of the dataset’s characteristics (Table 5.1) that allows the verification of its correctness. Afterwards, in the settings menu, four GPUs (NVIDIA Tesla V100 32 Gb) were selected for the training step. It is known that the gradient descent-based backpropagation is a useful learning algorithm that aims to minimize a loss function calculated using the desired output (the label image) and the obtained output classification (Wani et al., 2020). The loss function can take into consideration one, all, or a fixed intermediate number of patches (the batch size) per iteration of the backpropagation algorithm (Peixinho, 2017). The loss function tends to provide lower values as the number of iterations increases. Nonetheless, one needs to find a balance among error minimization, training time, and visual accuracy between the obtained classification and the label image. In the present case, the batch size, number of iterations, learning rate, and type of loss function that provided the best results were: 1, 60,000, 10−5, cross-entropy, respectively. It resulted in a training time of ~24 h. It is important to mention that, in the current implementation of the Annotat3D software, each GPU processed one batch, which means that setting a batch size equal to one, and using four GPUs, resulted in a real batch size of 4.

When the training was finished, the network was exported and loaded together with the greyscale INLM (same data used for training, Figs. 5.5a, c) in the inference menu (Table 5.1). Four GPUs were selected to perform the inference task. By default, the inference generates a 32-bit float tiff greyscale image for each class. Each image contains the probability distribution over the specified class (the brighter the regions, the higher the probability of that region to belong to the specified class: 0—background, 1—pores, or 2—solid matrix). For instance, Fig. 5.5d shows the greyscale histogram of the probability distribution output for class 1 (pores) (Fig. 5.5e), after converting it to a 32-bit unsigned raw image type, for convenience. To reach a final segmentation and consequently limit the pore regions, a threshold value was carefully chosen from the greyscale histogram. For example, the threshold value of 9.5 × 108 overestimated the pore class (Fig. 5.5e) while the threshold value of 10.5 × 108 provided an excellent classification of pores (Fig. 5.5f). Figure 5.5g shows orthogonal slices of the resulting segmentation, which showed excellent visual agreement with the label used for training of the neural network (Fig. 5.5b).

As the main purpose of the developed protocol was to classify new data, a greyscale tomographic image from another soil aggregate (Fig. 5.5h), belonging to the same experimental set described at the beginning of this section, was submitted to the trained CNN, in a blind test mode. The segmentation result for this blind test, using the same threshold value of 10.5 × 108, is shown in Fig. 5.5i. Visually the pore class was also correctly segmented for this other soil aggregate. The remaining 31 tomographic images were also segmented, taking 2 to 4 hours of inference per image, depending on the volume occupied by the soil aggregate. So, all images could be segmented with good quality within a few days. Although this trained CNN has demonstrated to be suitable for the segmentation of a specific set of images, which were all generated in approximately the same experimental conditions, this can be useful for other sets of similar data, e.g., soil images generated with other magnifications (different voxel side length) and other X-ray beam and detector characteristics. Occasionally, the segmentation result might not be as good as it was for the original dataset, but the user can add new datasets as examples to fine-tune the CNN training and optimize its performance on the inference of new data.

Another important aspect is that, for this protocol, a whole segmented image was provided to build the dataset, but this is not mandatory. In other words, smaller fractions of the image could have been tested to investigate the potential of training a CNN. For certain, it would demand less prior effort to generate a target label via machine learning and watershed techniques. Similarly, extending its applicability to other sets of images can be performed by providing small datasets based on such different images. The timeframe for training (24 hours) and inference (a few days for 31 images) has already accelerated considerably compared to traditional segmentation methods (where months might have been necessary). Nonetheless, Annotat3D software is being improved to handle an increasing number of image repetitions and new time-resolved imaging demands at Sirius Synchrotron Facility. It is worth mentioning that the current version of Annotat3D is being deployed for usage by the beamlines of Sirius to enhance the user experience and do final debugging/optimization. The work presented in this chapter was paramount for aiding in this process. It is expected that the software will become available to the community in the near future.

5.4 Conclusions

X-ray CT imaging has become an important tool for soil sciences research. Performing high-quality segmentation is a critical step for revealing details of inner sections or volumes of soil X-ray CT images. This can open up the possibility of modelling and/or characterizing morphometric characteristics of the intricate structure of the porous system. Frequently, depending on some characteristics of the X-ray CT measurement and the condition of the sample, the related greyscale histogram does not exhibit a clear distinction between peaks associated with its phases (air and solids in general). In such cases, smoothing the image noise and enhancing phase edges by filtering are necessary, but often not sufficient to allow segmentation by a traditional global threshold method. Even local segmentation methods such as the watershed transform, which usually outperform global thresholding, becomes challenging as it depends on accurate markers for the immersion process.

Recent developments have presented complementary, or perhaps alternative, properties to the global and local segmentation methods, some of which have been employing AI for the segmentation process. In this chapter, we presented the use of a new software (Annotat3D) and protocols, based on machine learning and deep learning, that permitted the investigation, with good results, of samples with poor phase distinction. Besides providing good quality segmentation for challenging images, the followed pipelines led to faster than the usual segmentation which will extend the analysis capacity and facilitate the conduction of time-resolved studies.

References

Achanta, R., Shaji, A., Smith, K., Lucchi, A., Fua, P., & Süsstrunk, S. (2012). SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Transactions on Pattern Analysis and Machine Intelligence, 34, 2274–2281. https://doi.org/10.1109/TPAMI.2012.120

Arganda-Carreras, I., Kaynig, V., Rueden, C., Eliceiri, K. W., Schindelin, J., Cardona, A., & Seung, H. S. (2017). Trainable Weka segmentation: A machine learning tool for microscopy pixel classification. Bioinformatics, 33, 2424–2426.

Backeberg, N. R., Iacoviello, F., Rittner, M., Mitchell, T. M., Jones, A. P., Day, R., Wheeler, J., Shearing, P. R., Vermeesch, P., & Striolo, A. (2017). Quantifying the anisotropy and tortuosity of permeable pathways in clay-rich mudstones using models based on X-ray tomography. Scientific Reports, 7, 14838.

Bacq-Labreuil, A., Crawford, J., Mooney, S. J., Neal, A. L., & Ritz, K. (2019). Cover crop species have contrasting infuence upon soil structural genesis and microbial community phenotype. Scientific Reports, 9, 7473.

Banhart, J. (2008). Advanced tomographic methods in materials research and Engeneering. Oxford University Press.

Bengio, Y. (2009). Learning deep architectures for AI. Found. Trends® Mach. Learn., 2, 1–127. https://doi.org/10.1561/2200000006

Beucher, S., & Lantuejoul, C. (1979). Use of watersheds in contour detection. In International Workshopon image processing: Real-time edge and motion detection/estimation (pp. 12–21). Rennes.

Beucher, S., & Meyer, F. (1993). The morphological approach to segmentation: The watershed transformation. In E. Dougherty (Ed.), Mathematical morphology in image processing (pp. 433–481). Marcel Dekker.

Borges, J. A. R., Pires, L. F., Cássaro, F. A. M., Auler, A. C., Rosa, J. A., Heck, R. J., & Roque, W. L. (2019). X-ray computed tomography for assessing the effect of tillage systems on topsoil morphological attributes. Soil & Tillage Research, 189, 25–35. https://doi.org/10.1016/j.still.2018.12.019

Buades, A., Coll, B., & Morel, J.-M. (2011). Non-local means Denoising. Image Processing On Line, 1, 208–212. https://doi.org/10.5201/ipol.2011.bcm_nlm

Buades, A., Coll, B., & Morel, J. M. (2005). A non-local algorithm for image denoising, in: Proceedings of the IEEE computer society conference on computer vision and pattern recognition (pp. 60–65). IEEE Comput. Soc. https://doi.org/10.1109/CVPR.2005.38

Buades, A., Coll, B., Morel, J.M., (2004). On image denoising methods. Technical Report 2004, Centre de Mathematiques et de Leurs Applications (CMLA), 2004.

Burr-Hersey, J. E., Mooney, S. J., Bengough, A. G., Mairhofer, S., & Ritz, K. (2017). Developmental morphology of cover crop species exhibit contrasting behaviour to changes in soil bulk density, revealed by X-ray computed tomography. PLoS One, 12, e0181872. https://doi.org/10.1371/journal.pone.0181872

Buzug, T. M. (2008). Fundamentals of X-ray physics. In Computed tomography: From photon statistics to modern cone-beam CT (pp. 15–73). Springer. https://doi.org/10.1007/978-3-540-39408-2_2

Camargo, M. A., Cássaro, F. A. M., & Pires, L. F. (2022). How do geometric factors influence soil water retention? A study using computerized microtomography. Bulletin of Engineering Geology and the Environment, 81, 137. https://doi.org/10.1007/s10064-022-02632-z

Chauhan, S., Rühaak, W., Khan, F., Enzmann, F., Mielke, P., Kersten, M., & Sass, I. (2016). Processing of rock core microtomography images: Using seven different machine learning algorithms. Computers & Geosciences, 86, 120–128.

Chowdhary, K. R. (2020). Introducing artificial intelligence, in: Fundamentals of artificial intelligence (pp. 1–23). Springer. https://doi.org/10.1007/978-81-322-3972-7_1

Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20, 273–297. https://doi.org/10.1007/bf00994018

Costa, G. S. R., Vasconcelos, G. J. Q., O’Dowd, F. P., & Archilha, N. L. (2017). Automation solutions and prototypes for the X-ray tomography beamline of Sirius, the new brazilian synchrotron light source. In I. Costa (Ed.), ICALEPCS 2017 (pp. 923–927).

Craievich, A. F. (2020). Synchrotron radiation in Brazil. Past, present and future. Radiation Physics and Chemistry, 167, 108253. https://doi.org/10.1016/j.radphyschem.2019.04.003

Distante, A., & Distante, C. (2020). Handbook of image processing and computer vision: Volume 2: From image to pattern. Springer International Publishing. https://doi.org/10.1007/978-3-030-42374-2

Ertel, W. (2017). Introduction to artificial intelligence (2nd ed.). Springer International Publishing. https://doi.org/10.1007/978-3-319-58487-4_1

Ferreira, T. R., Pires, L. F., & Reichardt, K. (2022). 4-D X-ray computed tomography in soil science: An overview and future perspectives at Mogno/Sirius. Brazilian Journal of Physics, 52, 33. https://doi.org/10.1007/s13538-021-01043-x

Ferreira, T. R., Pires, L. F., Wildenschild, D., Brinatti, A. M., Borges, J. A. R., Auler, A. C., & dos Reis, A. M. H. (2019). Lime application effects on soil aggregate properties: Use of the mean weight diameter and synchrotron-based X-ray μCT techniques. Geoderma, 338, 585–596.

Ferreira, T. R., Pires, L. F., Wildenschild, D., Heck, R. J., & Antonino, A. C. D. (2018). X-ray microtomography analysis of lime application effects on soil porous system. Geoderma, 324, 119–130. https://doi.org/10.1016/j.geoderma.2018.03.015

Galdos, M., Brown, E., Rosolem, C., Pires, L. F., Hallett, P., & Mooney, S. J. (2020). Brachiaria species influence nitrate transport in soil by modifying soil structure with their root system. Scientific Reports, 10, 5072.

Galdos, M. V., Pires, L. F., Cooper, H. V., Calonego, J. C., Rosolem, C. A., & Mooney, S. J. (2019). Assessing the long-term effects of zero-tillage on the macroporosity of Brazilian soils using X-ray computed tomography. Geoderma, 337, 1126–1135.

Gao, W., Schlüter, S., Blaser, S. R. G. A., Shen, J., & Vetterlein, D. (2019). A shape-based method for automatic and rapid segmentation of roots in soil from X-ray computed tomography images: Rootine. Plant and Soil, 441, 643–655. https://doi.org/10.1007/s11104-019-04053-6

Garfi, G., John, C. M., Berg, S., & Krevor, S. (2020). The sensitivity of estimates of multiphase fluid and solid properties of porous rocks to image processing. Transport in Porous Media, 131, 985–1005. https://doi.org/10.1007/s11242-019-01374-z

Gillespy, T., 3rd, & Rowberg, A. H. (1994). Dual lookup table algorithm: An enhanced method of displaying 16-bit-grey-scale images on 8-bit RGB graphic systems. Journal of Digital Imaging, 7, 13–17.

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. MIT Press.

Goyal, B., Dogra, A., Agrawal, S., Sohi, B. S., & Sharma, A. (2020). Image denoising review: From classical to state-of-the-art approaches. Information Fusion, 55, 220–244. https://doi.org/10.1016/j.inffus.2019.09.003

Gonçalves, H. M., Vasconcelos, G. J. Q., Rosa, P. R. R., Carvalho, M., Archilha, N. L., Spina, T. V., 2019. cudaIFT: 180x faster image foresting transform for Waterpixel estimation using CUDA. In: 14th International Conference on Computer Vision Theory and Applications (VISAPP), 395–404.

Gull, S. F., & Skilling, J. (1984). Maximum entropy method in image processing. IEE Proceedings F, 131, 646–659.

Han, Q., Zhao, Y., Liu, L., Chen, Y., & Zhao, Y. (2019). A simplified convolutional network for soil pore identification based on computed tomography imagery. Soil Science Society of America Journal, 83, 1309–1318. https://doi.org/10.2136/sssaj2019.04.0119

Heinzl, C., Amirkhanov, A., & Kastner, J. (2018). Processing, analysis and visualization of CT data. In S. Carmignato, W. Dewulf, & R. Leach (Eds.), Industrial X-ray computed tomography (pp. 99–142). Springer International Publishing.

Ho, T. K. (1998). The random subspace method for constructing decision forests. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20, 832–844. https://doi.org/10.1109/34.709601

Houston, A. N., Schmidt, S., Tarquis, A. M., Otten, W., Baveye, P. C., & Hapca, S. M. (2013). Effect of scanning and image reconstruction settings in X-ray computed microtomography on quality and segmentation of 3-D soil images. Geoderma, 207(208), 154–165.

Iassonov, P., Gebrenegus, T., & Tuller, M. (2009). Segmentation of X-ray computed tomography images of porous materials: A crucial step for characterization and quantitative analysis of pore structures. Water Resources Research, 45, W09415.

Jan, J. (2006). Medical image processing reconstruction and restoration: Concepts and methods. Taylor & Francis.

Jarvis, N., Larsbo, M., & Koestel, J. (2017). Connectivity and percolation of structural pore networks in a cultivated silt loam soil quantified by X-ray tomography. Geoderma, 287, 71–79. https://doi.org/10.1016/j.geoderma.2016.06.026

Jiulun, F., & Winxin, X. (1997). Minimum error thresholding: A note. Pattern Recognition Letters, 18, 705–709.

Joshi, A. V. (2020). Machine learning and artificial intelligence. Springer International Publishing. https://doi.org/10.1007/978-3-030-26622-6_4

Keselbrener, L., Shimoni, Y., & Akselrod, S. (1992). Nonlinear filters applied on computerized axial tomography: Theory and phantom images. Medical Physics, 19, 1057–1064.

Khan, F., Enzmann, F., Kersten, M., Wiegmann, A., & Steiner, K. (2012). 3-D simulation of the permeability tensor in a soil aggregate on basis of nanotomographic imaging and LBE solver. Journal of Soils and Sediments, 12, 86–96. https://doi.org/10.1007/s11368-011-0435-3

Kittler, J., & Illingworth, J. (1986). Minimum error thresholding. Pattern Recognition, 19, 41–47.

Landini, L., Positano, V., & Santarelli, M. F. (2008). 3-D medical imaging processing. In E. Neri, D. Caramella, & C. Bartolozzi (Eds.), Image processing in radiology. Springer-Verlag.

Leue, M., Uteau-Puschmann, D., Peth, S., Nellesen, J., Kodešová, R., & Gerke, H. H. (2019). Separation of soil macropore types in three-dimensional X-ray computed tomography images based on pore geometry characteristics. Vadose Zone Journal, 18, 180170. https://doi.org/10.2136/vzj2018.09.0170

Ma, R., Jiang, Y., Liu, B., & Fan, H. (2020). Effects of pore structure characterized by synchrotron-based micro-computed tomography on aggregate stability of black soil under freeze-thaw cycles. Soil & Tillage Research, 104855. https://doi.org/10.1016/j.still.2020.104855

Marcheschi, P. (2008). Elaboration of the images in the spatial domain. In E. Neri, D. Caramella, & C. Bartolozzi (Eds.), Image processing in radiology. Springer-Verlag.

Milletari, F., Navab, N., & Ahmadi, S.-A. (2016). V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the fourth international conference on 3-D vision (3-DV) (pp. 565–571).

Miqueles, E., Koshev, N., & Helou, E. S. (2018). A Backprojection slice theorem for tomographic reconstruction. IEEE Transactions on Image Processing, 27, 894–906. https://doi.org/10.1109/TIP.2017.2766785

Müter, D., Pedersen, S., Sϕrensen, H. O., Feidenhans’l, R., & Stipp, S. L. S. (2012). Improved segmentation of X-ray tomography data from porous rocks using a dual filtering approach. Computers & Geosciences, 49, 131–139.

Oh, W., & Lindquist, W. B. (1999). Image thresholding by indicator kriging. IEEE Transactions on Pattern Analysis and Machine Intelligence, 21, 590–602.

Oliveira, J. A. T., Cássaro, F. A. M., & Pires, L. F. (2020). The porous size distribution obtained and analyzed by free acess software. Revista Brasileira de Ensino de Física, 42, e20200192.

Oliveira, J. A. T., Cássaro, F. A. M., & Pires, L. F. (2021). Estimating soil porosity and pore size distribution changes due to wetting-drying cycles by morphometric image analysis. Soil & Tillage Research, 205, 104814.

Otsu, N. (1979). A threshold selection method from grey-level histograms. IEEE Transactions on Systems, Man, and Cybernetics, 9, 62–66.

Pak, T., Luz, L. F., Tosco, T., Costa, G. S. R., Rosa, P. R. R., & Archilha, N. L. (2020). Pore-scale investigation of the use of reactive nanoparticles for in situ remediation of contaminated groundwater source. Proceedings of the National Academy of Sciences, 2019, 18683. https://doi.org/10.1073/pnas.1918683117

Pathmanabhan, A., & Dinesh, S. (2007). The effect of Gaussian blurring on the extraction of peaks and pits from digital elevation models. Discrete Dynamics in Nature and Society, 62137, 1–12.

Peixinho, A.Z., 2017. Learning image features by convolutional networks under supervised data constraint. Ph.D. Thesis (Unicamp).

Peth, S., Horn, R., Beckmann, F., Donath, T., Fischer, J., & Smucker, A. J. M. (2008). Three-dimensional quantification of intra-aggregate pore-space features using synchrotron-radiation-based microtomography. Soil Science Society of America Journal, 72, 897–907.

Pini, R., & Madonna, C. (2016). Moving across scales: A quantitative assessment of X-ray CT to measure the porosity of rocks. Journal of Porous Materials, 23, 325–338.

Pinto, A., Borin, G., Carlos, B., Bernardi, M., Sarmento, M., Peixinho, A., Spina, T., & Miqueles, E. (2022). Annotat3D: a modern web application for interactive segmentation of volumetric images at Sirius/LNLS. Synchrotron Radiation News. https://doi.org/10.1080/08940886.2022.2112501

Pires, L. F., Auler, A. C., Roque, W. L., & Mooney, S. J. (2020). X-ray microtomography analysis of soil pore structure dynamics under wetting and drying cycles. Geoderma, 362, 114103.

Pires, L. F., Roque, W. L., Rosa, J. A., & Mooney, S. J. (2019). 3-D analysis of the soil porous architecture under long term contrasting management systems by X-ray computed tomography. Soil & Tillage Research, 191, 197–206. https://doi.org/10.1016/j.still.2019.02.018

Pires, L. F., Borges, J. A. R., Rosa, J. A., Cooper, M., Heck, R. J., Passoni, S., & Roque, W. L. (2017). Soil structure changes induced by tillage systems. Soil & Tillage Research, 165, 66–79.

Pöhlitz, J., Rücknagel, J., Schlüter, S., Vogel, H. J., & Christen, O. (2019). Computed tomography as an extension of classical methods in the analysis of soil compaction, exemplified on samples from two tillage treatments and at two moisture tensions. Geoderma, 346, 52–62. https://doi.org/10.1016/j.geoderma.2019.03.023

Polikar, R. (2012). Ensemble learning. In Y. Ma (Ed.), Zhang, C (pp. 1–34). Ensemble Machine Learning. https://doi.org/10.1007/978-1-4419-9326-7_1

Ronneberger, O., Fischer, P., & Brox, T. (2015). U-net: Convolutional networks for biomedical image segmentation. In N. Navab, J. Hornegger, W. Wells, & A. Frangi (Eds.), Medical image computing and computer-assisted intervention–MICCAI 2015 (pp. 234–241). Springer. https://doi.org/10.1007/978-3-319-24574-4_28

Schlüter, S., Sheppard, A., Brown, K., & Wildenschild, D. (2014). Image processing of multiphase images obtained via X-ray microtomography: A review. Water Resources Research, 50, 3615–3639. https://doi.org/10.1002/2014WR015256

Spina, T. V., Vasconcelos, G. J. Q., Gonçalves, H. M., Libel, G. C., Pedrini, H., Carvalho, T. J., & Archilha, N. L. (2018). Towards real time segmentation of large-scale 4-D micro/Nanotomography images in the Sirius synchrotron light source. Microscopy and Microanalysis, 24, 92–93. https://doi.org/10.1017/s1431927618012849

Sun, T., & Neuvo, Y. (1994). Detail-preserving median based filters in image processing. Pattern Recognition Letters, 15, 341–347.

Tzotsos, A., & Argialas, D. (2008). Support vector machine classification for object-based image analysis. In T. Blaschke, S. Lang, & G. J. Hay (Eds.), Object-based image analysis (pp. 663–677). Springer. https://doi.org/10.1007/978-3-540-77058-9_36

Tuller, M., Kulkarni, R., & Fink, W. (2013). Segmentation of X-ray CT data of porous materials: A review of global and locally adaptive algorithms. In S. H. Anderson & J. W. Hopmans (Eds.), Soil–water–root processes: Advances in tomography and imaging (pp. 157–182). John Wiley & Sons, Ltd. https://doi.org/10.2136/sssaspecpub61.c8

Vasconcelos, G. J. Q., Antonieti, G., Libel, G. C., Rosa, P. R. R., Archilha, N. L., Carvalho, T. J., Pedrini, H., & Spina, T. V. (2018). Evaluation of segmentation methods based on classification patterns for micro-tomography applications in rock analysis. In Conference on Graphics, Patterns and Images, 31. (SIBGRAPI). Sociedade Brasileira de Computação, Foz do Iguaçu, PR, Brazil.

Vogel, H. J. (1997). Morphological determination of pore connectivity as a function of pore size using serial sections. European Journal of Soil Science, 48, 365–377.

Wang, W. (2008). Rock particle image segmentation and systems. In P.-Y. Yin (Ed.), Pattern recognition techniques, technology and applications. I-Tech.

Wang, W., Kravchenko, A. N., Smucker, A. J. M., Liang, W., & Rivers, M. L. (2012). Intra-aggregate pore characteristics: X-ray computed microtomography analysis. Soil Science Society of America Journal, 76, 1159. https://doi.org/10.2136/sssaj2011.0281

Wani, M. A., Bhat, F. A., Afzal, S., & Khan, A. I. (2020). Introduction to deep learning, in: Advances in deep learning (pp. 1–11). Springer. https://doi.org/10.1007/978-981-13-6794-6_1

Weikert, T., Cyriac, J., Yang, S., Nesic, I., Parmar, V., & Stieltjes, B. (2020). A practical guide to artificial intelligence–based image analysis in radiology. Investigative Radiology, 55, 1–7. https://doi.org/10.1097/RLI.0000000000000600

Yen, J.-C., Chang, F. J., & Chang, S. (1995). A new criterion for automatic multilevel thresholding. IEEE Transactions on Image Processing, 46, 82–95.

Zhao, Y., Hu, X., & Li, X. (2020). Analysis of the intra-aggregate pore structures in three soil types using X-ray computed tomography. Catena, 193, 104622. https://doi.org/10.1016/j.catena.2020.104622

Zhou, H., Whalley, W. R., Hawkesford, M. J., Ashton, R. W., Atkinson, B., Atkinson, J. A., Sturrock, C. J., Bennett, M. J., & Mooney, S. J. (2020). The interaction between wheat roots and soil pores in structured field soil. Journal of Experimental Botany. https://doi.org/10.1093/jxb/eraa475

Acknowledgements

This research used resources of the Brazilian Synchrotron Light Laboratory (LNLS), an open national facility operated by the Brazilian Center for Research in Energy and Materials (CNPEM) for the Brazilian Ministry for Science, Technology, Innovations, and Communications (MCTIC). The IMX beamline staff is acknowledged for assistance during the experiments. The Scientific Computing Group is acknowledged for assistance on the use of the in-house software (Annotat3D). TRF thanks Lucas Portes Ramos, participant student in the 29° Summer Scholarship Program held by CNPEM, for collaborating in the methodical testing of the deep learning parameters. LFP would like to acknowledge the financial support provided by the Brazilian National Council for Scientific and Technological Development (CNPq) and the Coordination for the Improvement of Higher Education Personnel (Capes) through the Grants 304925/2019-5 (Productivity in Research) and 88881.119578/2016-01 (Visiting Professor).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ferreira, T.R., Cássaro, F.A.M., Zhou, H., Pires, L.F. (2022). X-ray Computed Tomography Image Processing & Segmentation: A Case Study Applying Machine Learning and Deep Learning-Based Strategies. In: Jon Mooney, S., Young, I.M., Heck, R.J., Peth, S. (eds) X-ray Imaging of the Soil Porous Architecture. Springer, Cham. https://doi.org/10.1007/978-3-031-12176-0_5

Download citation

DOI: https://doi.org/10.1007/978-3-031-12176-0_5

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-12175-3

Online ISBN: 978-3-031-12176-0

eBook Packages: Physics and AstronomyPhysics and Astronomy (R0)