Abstract

In this paper, we present a Fast Motion Deblurring-Conditional Generative Adversarial Network (FMD-cGAN) that helps in blind motion deblurring of a single image. FMD-cGAN delivers impressive structural similarity and visual appearance after deblurring an image. Like other deep neural network architectures, GANs also suffer from large model size (parameters) and computations. It is not easy to deploy the model on resource constraint devices such as mobile and robotics. With the help of MobileNet [1] based architecture that consists of depthwise separable convolution, we reduce the model size and inference time, without losing the quality of the images. More specifically, we reduce the model size by 3–60x compare to the nearest competitor. The resulting compressed Deblurring cGAN faster than its closest competitors and even qualitative and quantitative results outperform various recently proposed state-of-the-art blind motion deblurring models. We can also use our model for real-time image deblurring tasks. The current experiment on the standard datasets shows the effectiveness of the proposed method.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Image degradation by motion blur generally occurs due to movement during the capture process from the camera or capturing using lightweight devices such as mobile phones and low intensity during camera exposure. Blur in the images degrades the perceptual quality. For example, blur distorts the object’s structure (Fig. 1).

Image Deblurring is a method to remove the blurring artifacts and distortion from a blurry image. Human vision can easily understand the blur in the image. However, it is challenging to create metrics that can estimate the blur present in the image. Image degradation model using non-uniform blur kernel [4, 5] is given in Eq. 1.

where, \(I_B\) denotes a blurred image, K(M) denotes unknown blur kernels depending on M’s motion field. \(I_S\) denotes a latent sharp image, \(*\) denotes a convolution operation, and N denotes the noise. As an inverse problem, we retrieve sharp image \(I_S\) from blur image \(I_B\) during the deblurring process. The deblurring problem generally classified as non-blind deblurring [9] and blind deblurring [10, 11], according to knowledge of blur kernel K(M) is known or not.

Our work aims at a single image blind motion deblurring task using deep-learning. The deep-learning methods are effective in performing various computer vision tasks such as object removal [15, 16], style transfer [17], and image restoration [3, 19, 20]. More specifically, convolution neural networks (CNNs) based approaches for image restoration tasks are increasing, e.g., image denoising [18], super-resolution [19], and deblurring [3, 20].

The applications of Generative Adversarial Networks (GANs) [30] are increasing immensely, particularly image-to-image conversion GANs [7] have been successfully used on image enhancement, image synthesis, image editing and style transfer. Image deblurring could be formulated as an image-to-image translation task. Generally, applications that interact with humans (e.g., Object Detection) require to be faster and lightweight for a better experience. Image deblurring could be useful pre-processing steps of other computer vision tasks such as Object Detection (Fig. 1 and Fig. 2).

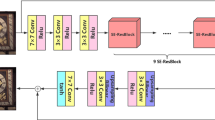

In this paper, we propose a Fast Motion Deblurring conditional Generative Adversarial Network architecture (FMD-cGAN). Our FMD-cGAN architecture is based on conditional GANs [40] and the resnet network architecture [6] (Fig. 5). We also used depthwise separable convolution (Fig. 3) inspired from MobileNet to improve efficiency. A MobileNet network [1] has fewer Multiplications and Additions (smaller complexity) operations, and fewer parameters (smaller model size) compare to the same network with regular convolution operation.

Unlike other GAN frameworks, where we give the sharp image (real example) and output image from generator network (fake example) as the inputs into Discriminator network [7, 40], we train our Discriminator (Fig. 4) by providing input as combining blurred image with the output image from the generator network (or blurred image with sharp image).

Different from previous work, we propose to use Hinge loss [31] and Perceptual loss [8] to improve the quality of the output image. Hinge loss improves the fidelity and diversity of the generated images [49]. Using the Hinge loss in our FMD-cGAN allows building lightweight neural network architectures for the single image motion deblurring task compared to standard Deep ResNet architectures. The Perceptual loss [8] is used as content loss to generate photo-realistic images in our GAN framework.

Contributions: The major contributions are summarized as below.

-

We propose a faster and light-weight conditional GAN architecture (FMD-cGAN) for blind motion deblurring tasks. We show that FMD-cGAN (ours) is efficient with lesser inference time than DeblurGAN [3], DeblurGANv2 [27], and DeepDeblur [22] models (Table 1).

-

We have performed extensive experiments on GoPro dataset and REDS dataset (Sect. 6). The results shows that our FMD-cGAN outputs images with good visual quality and structure similarity (Fig. 6, Fig. 7, and Table 2).

-

We also provide two variants (WILD and Comb) of FMD-cGAN to show that image deblurring task could be improved by pre-training network (Table 1 and Sect. 5).

-

We have also performed ablation study to illustrate that our network design choices improves the deblurring performance (Sect. 7).

2 Background

2.1 Image Deblurring

Images can have different types of blur problems, such as motion blur, defocus blur, and handshake blur. We have described that image deblurring is classified into two types: Non-blind image deblurring and Blind image deblurring (Sect. 1).

Non-blind deblurring is an ill-posed problem. The noise inverse process is unstable; a small quantity of noise can cause critical distortions. Most of the earlier works [12,13,14] aims to perform non-blind deblurring task by assuming that blur kernels K(M) are known. Blind deblurring techniques for a single image, which use Deep-learning based approaches, are observed to be effective in single image deblurring tasks [22, 39] because most of the kernel-based methods are not sufficient to model the real world blur [37]. The task is to estimates both the sharp image \(I_S\) and the blur kernel K(M) for image restoration. There are also classical approaches such as low-rank prior [46] and dark channel prior [47] that are useful for deblurring, but they also have shortcomings.

2.2 Generative Adversarial Networks

Generative Adversarial Network (GAN) was initially developed and introduced by Ian Goodfellow and his fellow workers in 2014 [30]. GAN framework includes two competing network architectures: a generator network G and a discriminator network D. Generator (G) task is to generate fake samples similar to input by capturing the input data distribution, and on the opposite side, the Discriminator (D) aims to differentiate between the fake and real samples; and pass this information to the G so that G can learn. Generator G and Discriminator D follows the minimax objective defined as follows.

Here, in Eq. 2, the generator G aims to minimize the value function V, and the discriminator D tries to maximize the value function V. Moreover, the generator G faces problems such as mode collapse and gradient diminishing (e.g., Vanilla GAN).

WGAN and WGAN-GP: To deal with mode collapse and gradient diminishing, WGAN method [25] uses Earth-Mover (Wasserstein-1) distance in the loss function. In this implementation, the discriminator output layer is a linear one, not sigmoid (discriminator output’s a real value). WGAN [25] performs weight clipping \([{-c},\ c]\) to enforce the Lipschitz constraint on the critic (i.e., discriminator). This method faces the issue of gradient explosion/vanishing without proper value of weight clipping parameter c. WGAN with Gradient penalty (WGAN-GP) [26] resolve above issues with WGAN [25]. WGAN-GP enforces a penalty on the gradient norm for random samples \(\tilde{x} \sim P_{\tilde{x}}\). The objective function of WGAN-GP is as below.

WGAN-GP [26] makes the WGAN [25] training more stable and does not require hyperparameter tuning. The DeblurGAN [3] used WGAN-GP method (Eq. 3) for single image blind motion deblurring.

Hinge Loss: In our method, we used Hinge loss [31, 32] which is giving better result as compared to WGAN-GP [26] based deblurring method. Hinge loss output also a real value. Generator loss \(L_G\) and Discriminator loss \(L_D\) in the presence of Hinge loss is defined as follows.

Here, D tries that a real image will get a large value, and a fake or generated image will get a small value.

3 Related Works

The deep learning-based methods attempt to estimate the motion blur in the degraded image and use this blurring information to restore the sharp image [21]. The methods which use the multi-scale framework [22] to recover the deblurred image are computationally expensive. The use of GANs also increasing in blind kernel free single image deblurring tasks such as Ramakrishnan et al. [24] used image translation framework [7] and densely connected convolution network [23]. The methods above performs image-deblurring task, when input image may have blur due to multiple sources. Kupyn et al. [3] proposed the DeblurGAN method, which uses the Wasserstein GAN [25] with gradient penalty [26] and the Perceptual loss [8]. Kupyn et al. [27] proposed a new method DeblurGAN-v2, which is faster and has better results than the previously proposed method; this method uses the feature pyramid network [28] in the generator. A study of various single image blind deblurring methods is provided in [29].

4 Our Method

In our proposed method, the blur kernel knowledge is not present, and from a given blur image \(I_B\) as an input, our purpose is to develop a sharp image \(I_S\) from \(I_B\). For the deblurring task, we train a Generator network denoted by \(G_{\theta _G}\). During the training period, along with Generator, there is one another CNN also present \(D_{\theta _D}\) referred to as the critic network (i.e., Discriminator). The Generator \(G_{\theta _G}\) and the Discriminator \(D_{\theta _D}\) are trained in an adversarial manner. In what follows, we describe the network architecture and the loss functions for our method.

4.1 Network Architecture

The generator network, a chief component of proposed model, is a transformed version of residual network architecture [6] (Sect. 4.1). The discriminator architecture, which helps to learn the Generator, is a transformed version of Markovian Discriminator (PatchGAN) [7] (Sect. 4.1). The residual network architecture helps us to build deeper CNN architectures. Also, this architecture is effective because we want our network to learn only the difference between pairs of sharp and blur images as they are almost alike in values.

We used the depthwise separable convolution in place of the standard convolution layer to reduce the inference time and model size [1]. Generator aims to generate sharp images given the blurred images as input. Note that generated images need to be realistic so that the Discriminator thinks that generated images are from the real data distribution. In this way, the Generator helps to generate a visually attractive sharp image from an input blurred image. Discriminator goal is to classify if the input is from the real data distribution or output from the generator. Discriminator accomplish this by analyzing the patches in the input image for making a decision. The changes which we made in the resnet block displayed in Fig. 3, we convert structure (a) into structure (b).

Generator Architecture. The Generator’s CNN architecture is displayed in Fig. 5. This architecture is alike to the style transfer architecture which is proposed by Johnson et al. [8]. The generator network has two strided convolution blocks in begining with stride 2, nine depthwise separable convolutions based residual blocks (MobileResnet Block) [1, 6], and two transposed convolution blocks, and the global skip connection. In our architecture, most of the computation is done by MobileResNet-Block. Therefore, we use depthwise separable convolution here to reduce computation cost without affecting accuracy.

Every convolution and transposed convolution layer have an instance normalization layer [33] and a ReLU activation layer [34] behind it. Each Mobile Resnet block consists of two depthwise separable convolutions [1], a dropout layer [35], two instance normalization layers after each separable convolution block, and a ReLU activation layer. In each mobile resnet block, after the first depth-wise separable convolution layer, a dropout regularization layer with a probability of zero is added. Furthermore, we add a global skip connection in the model, also referred to as ResOut.

When we use many convolution layers, it will become difficult to generalize over first-level features, deep generative CNNs often unintentionally memorize high-level representations of edges. The network will be unable to retrieve sharp boundaries at proper positions from the blur photos as a result of this. We combine the head and tail of the network. Since the gradients value now can reach from the tail straight to the beginning and affect the update in the lower layers, generation efficiency improves significantly [36]. In the blurred image \(I_B\), CNN learns residual correction \(I_R\), so the resulting sharp image is \(I_S = I_B + I_R\). From experiments, we come to know that such formulation improves the training time, and generalizes the resulting model better.

Discriminator Architecture. In our model, we create a critic network \(D_{\theta _D}\) also refer to as Discriminator. \(D_{\theta _D}\) guides Generator network \(G_{\theta _G}\) to generate sharp images by giving feedback on the input is from real data distribution or generator output. The architecture of Discriminator network is shown in Fig. 4. We avoid high-depth Discriminator network as it’s goal is to perform the classification task unlike image synthesis task of Generator network. In our FMD-cGAN framework, the Discriminator network is similar to the Markovian patch discriminator, also refer to as PatchGAN [7]. Except for the last convolutional layer, InstanceNorm layer and LeakyReLU with a value of 0.2, follow all convolutional layers of the network. This architecture looks for explicit structural characteristics at many local patches. It also ensures that the generated raw images have a rich color.

4.2 Loss Functions

The total loss function for FMD-cGAN deblurring framework is the mixture of adversarial loss and content loss.

In Eq. 6, \(L_{GAN}\) represents the advesarial loss (Sect. 4.2), \(L_X\) represents the content loss (Sect. 4.2) and \(\lambda \) represents the hyperparameter which controls the effect of \(L_X\). The value of \(\lambda \) is equal to 100 in the current experiment.

Adversarial Loss. To train a learning-based image restoration network, we need to compare the difference between the restored and the original images during the training stage. Many image restoration works are using an adversarial-based network to generate sharp images [19, 20]. During the training stage, the adversarial loss after pooling with other losses helps to determine how good the Generator is working against the Discriminator [22]. Initial works based on conditional GANs use the objective function of the vanilla GAN as the loss function [19]. Lately, least-square GAN [42] was observed to be better balanced and produce the good quality desired outputs. We apply Hinge loss [31] (Eq. 4 and Eq. 5) in our model to provide good results with the generator architecture [49]. Generator loss (\(L_G\)) and Discriminator loss (\(L_D\)) are computed as follows (Eq. 7 and Eq. 8).

If we do not use adversarial loss in our network, it still converges. However, the output images will be dull with not many sharp edges, and these output images are still blurry because the blur at edges and corners is still intact. If we only use adversarial loss in our network, edges are retained in images, and more practical color assignment happens. However, it has two issues: still, it has no idea about the structure, and Generator is working according to the guidance provided by Discriminator based on the generated image. We remove these issues with the adversarial loss by combining adding with the Perceptual loss.

Content Loss. Generally, there are two choices for the pixel-based content loss: (a) L1 or MAE loss and (b) L2 or MSE loss. Moreover, above loss functions may produce blurry artifacts on the generated image due to the average of pixels [19]. Due to this issue, we used Perceptual loss [8] function for content loss. Unlike L2 Loss, Perceptual compares the difference between CNN feature maps of the restored image and the original image. This loss function puts structural knowledge into the Generator, which helps it against the patch-wise decision of the Markovian Discriminator. The equation of the Perceptual loss is as follows:

where \(W_{i,j}\) and \(H_{i,j}\) are the width and height of the \((i,j)^{th}\) ReLU layer of the VGG-16 network [43], here i and j denote \({j^{th}}\) convolution ( after activation) before the \(i^{th}\) max-pooling layer. \(\phi _{i,j}\) denotes the feature map. In our current method, we use the output of activations from \(VGG_{3,3}\) convolutional layer. The output from activations of the end layers of the network represents more features information [19, 44]. The Perceptual loss helps to restore the general content [7, 19]; on the other side adversarial loss helps to restore texture details. If we do not use the Perceptual loss in our network or use simple MSE based loss on pixels, the network will not converge to a good state.

4.3 Training Datasets

GoPro Dataset. The images of the GoPro dataset [22] are generated using the GoPro Hero 4 camera. The camera captures 240 frames per second video sequences. The blurred images are captured by averaging consecutive short-exposure frames. It is the most commonly used benchmark dataset in motion deblurring tasks, containing 3214 pairs of blur and sharp images. We use 1111 pairs of images for testing purposes and the remaining 2103 pairs of images for training [22].

REDS Dataset. The Realistic and Dynamic Scenes dataset [38] was designed for video deblurring and super-resolution, but it is also helpful in the image deblurring. The dataset comprises 300 video sequences having a resolution of 720 \(\times \) 1280. Here, the training set contains 240 videos, the validation set contains 30 videos, and the testing set contains 30 videos. Each video has 100 frames. REDS dataset is generated from 120 fps videos, synthesizing blurry frames by merging subsequent frames. We have 240*100 pairs of blur and sharp images for training, 30*100 pairs of blur and sharp images for testing.

5 Training Details

The PytorchFootnote 1 deep learning library is used to implement our model. The training of the model is accomplished on a single Nvidia Quadro RTX 5000 GPU using different datasets. The model takes image patches as input and fully convolutional to be used on images of arbitrary size. There is no change in the learning rate for the first 150 epochs; after it, we decrease the learning rate linearly to zero for the subsequent 150 epochs. We used Adam [45] optimizers for loss functions in both the Generator and the Discriminator with a learning rate of 0.0001. During the training time, we kept the batch size of 1, which gives a better result. Furthermore, we used the dropout layer (rate = 0) and the Instancenormalization layer instead of the batch-normalization layer concept both for the Generator and the Discriminator [7]. The training time of the network is approximately 2.5 days, which is significantly less than its competitive network. We have provided training details in Table 3. We discuss the two variants of FMD-cGAN as follows.

(1) FMD-cGAN\(_{wild}\): our first trained model is WILD, which represents that the model is trained only on a single dataset such as GoPro and REDS dataset on which we are going to evaluate it. For example, in the case of the GoPro dataset model is trained on 2103 pairs of blur and sharp images of the GoPro dataset.

(2) FMD-cGAN\(_{comb}\): The second trained model is Comb, which is first trained on the REDS training dataset; after training, we evaluate its performance on the REDS testing dataset. Now we train this pre-trained model on the GoPro dataset. We test both trained models Comb and WILD final performance on the GoPro dataset’s 1111 test images.

6 Experimental Results

We compare the results of our FMD-cGAN with relevant models using the standard performance metrics (PSNR, SSIM). We also show inference time of each model (i.e., average running time per image) on a single GPU (Nvidia RTX 5000) and CPU (2 X Intel Xeon 4216 (16C)). To calculate Number of parameters and Number of MACs operations in PyTorch based model, we use pytorch-summaryFootnote 2 and torchprofileFootnote 3 libraries.

6.1 Quantitative Evaluation on GoPro Dataset

Here, we discuss the performance of our method on GoPro Dataset. We used 1111 pairs of blur and sharp images from GoPro test dataset for evaluation. We compare our model’s results with other state-of-the-art model’s results: where Sun et al. [5] is a traditional method, while others are deep learning-based methods: Xu et al. [41], DeepDeblur [22], DeepFilter [24], DeblurGAN [3], DeblurGANv2 [27] and SRN [39]. We use PSNR and SSIM value of other methods from their respective papers.

We show the results in Table 1. It could be observed that FMD-cGAN (ours) has high efficiency in terms of performance and inference time. FMD-cGAN also has the lowest inference time, and in terms of no. of parameters and macs operations also has the lowest value. Furthermore, FMD-cGAN output PSNR and SSIM values comparable to the other models in comparison.

6.2 Quantitative Evaluation on REDS Dataset

We also show the performance of our framework on the REDS dataset. We used 3000 pairs of blur and sharp images from REDS test dataset for evaluation. We compare the performance of FMD-cGAN (ours) with the DeepDeblur model [22]. We used the results of DeepDeblur from official GitHub repository - DeepDeblur-PyTorchFootnote 4.

We show the results in Table 2. It could be observed that our method achieves high SSIM and PSNR values which are comparable to DeepDeblur [22]. We emphasise that our network has a significantly lesser size as compared to DeepDeblur [22]. Currently, only the DeepDeblur model used the REDS dataset for training and performance evaluation.

6.3 Visual Comparison

Figure 6 shows the visual comparison on the REDS dataset. It could be observed that FMD-cGAN (ours) restore images comparable to the relevant top-performing works such as DeepDeblur [22] and SRN [39]. For example, row 1 of Fig. 6 shows that our method preserves the fine object structure details (i.e., building) which are missing in the blurry image.

Figure 7 shows the visual comparison results on the GoPro dataset. It could be observed that the output of our method is visually appealing in the presence of motion blur in the input image (see Example 3 of Fig. 7). To provide more clarity, we show the results for both FMD-cGAN\(_{Wild}\) and FMD-cGAN\(_{Comb}\) (Sect. 5). FMD-cGAN (ours) is faster and output better reconstruction than other motion deblurring methods even though our model has fewer parameters (Table 1). We have provided the extended versions of Fig. 6 and Fig. 7 in the supplementary material for better visual comparisons.

7 Ablation Study

Table 4 shows an ablation study on the generator network architecture for different design choices. Here, we train and test our network’s performance only on the GoPro dataset. Suppose #ngf denotes the initial layer’s filters count in the generator network, affecting filters count of subsequent layers. Table 4 demonstrates how #ngf affects model performance. It could be observed that if we increase the #ngf then image quality (PSNR) will increase. However, it increases #parameters and MACs operations also, affecting inference time and model size.

We divide our generator network into three parts according to its structure: Downsample (two 3 \(\times \) 3 convolutions), ResnetBlocks (9 blocks), and Upsample (two 3 \(\times \) 3 deconvolutions). To check the network performance, we put separable convolution into different parts. Table 5 demonstrates model performance after applying convolution decomposition in different parts of the generator network. ResNet blocks do most of the computation in the network; from Table 5, we can see applying convolution decomposition in this part giving better performance.

8 Conclusion

We proposed a Fast Motion Deblurring method (FMD-cGAN) for a single image. FMD-cGAN does not require knowledge of the blur kernel. Our method uses the conditional generative adversarial network for this task and is optimized using the multi-part loss function. Our method shows that using MobileNetv1 architecture consists of depthwise separable convolution to reduce computational cost and memory requirement without losing accuracy. We also proposed that using Hinge loss in the network gives good results. Our method produces better blur-free images, as confirmed by the quantitative and visual comparisons. FMD-cGAN is faster with low inference time and memory requirements, and it outperforms various state-of-the-art models for blind motion deblurring of a single image (Table 1). We propose as future work to deploy our model in lightweight devices for real-time image deblurring tasks.

References

Howard, A.G., et al.: MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv preprint arXiv:1704.04861v1 (2017)

Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You Only Look Once: Unified, Real-Time Object Detection. arXiv e-prints, June 2015

Kupyn, O., Budzan, V., Mykhailych, M., Mishkin, D., Matas, J.: DeblurGAN: blind motion deblurring using conditional adversarial networks. In: CVPR (2018)

Gong, D., et al.: From motion blur to motion flow: a deep learning solution for removing heterogeneous motion blur. IEEE (2017)

Sun, J., Cao, W., Xu, Z., Ponce, J.: Learning a convolutional neural network for non-uniform motion blur removal. In: CVPR (2015)

He, K., Hang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR (2016)

Isola, P., Zhu, J.Y., Zhou, T., Efros, A.: Image-to-image translation with conditional adversarial networks. In: CVPR (2017)

Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9906, pp. 694–711. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46475-6_43

Hansen, P.C., Nagy, J.G., O’Leary, D.P.: Deblurring images:matrices, spectra, and filterin. SIAM (2006)

Almeida, M.S.C., Almeida, L.B.: Blind and semi-blind deblurring of natural images. IEEE (2010)

Levin, A., Weiss, Y., Durand, F., Freeman, W.T.: Understanding blind deconvolution algorithms. IEEE (2011)

Szeliski, R.: Computer Vision: Algorithms and Applications. Springer, London (2011). https://doi.org/10.1007/978-1-84882-935-0

Richardson, W.H.: Bayesian-based iterative method of image restoration. JoSA 62(1), 55–59 (1972)

Wiener, N.: Extrapolation, interpolation, and smoothing of stationary time series, with engineering applications. Technology Press of the MIT (1950)

Cai, X., Song, B.: Semantic object removal with convolutional neural network feature-based inpainting approach. Multimedia Syst. 24(5), 597–609 (2018)

Chen, J., Tan, C.H., Hou, J., Chau, L.P., Li, H.: Robust video content alignment and compensation for rain removal in a CNN framework. In: CVPR (2018)

Luan, F., Paris, S., Shechtman, E., Bala, K.: Deep photo style transfer. In: CVPR (2017)

Zhang, K., Zuo, W., Che, Y., Meng, D., Zhang, L.: Beyond a gaussian denoiser: residual learning of deep CNN for image denoising. IEEE (2017)

Ledig, C., et al.: Photo-realistic single image super-resolution using a generative adversarial network. In: CVPR (2017)

Zhang, J., et al.: Dynamic scene deblurring using spatially variant recurrent neural networks. In: CVPR (2018)

Sun, J., Cao, W., Xu, Z., Ponce, J.: Learning a convolutional neural network for non-uniform motion blur removal. IEEE (2015)

Nah, S., Kim, T.H., Lee, K.M.: Deep multi-scale convolutional neural network for dynamic scene deblurring. In: CVPR (2017)

Huang, G., Liu, Z., van der Maaten, L., Weinberger, K.Q.: Densely connected convolutional networks. In: CVPR (2017)

Ramakrishnan, S., Pachori, S., Gangopadhyay, A., Raman, S.: Deep generative filter for motion deblurring. In: ICCVW (2017)

Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein GAN. arXiv preprint arXiv:1701.07875 (2017)

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., Courville, A.C.: Improved training of wasserstein GANs. In: Advances in Neural Information Processing Systems (2017)

Kupyn, O., Martyniuk, T., Wu, J., Wang, Z.: DeblurGAN-v2: deblurring (orders-of-magnitude) faster and better. In: ICCV, August 2019

Lin, T., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongieg, S.: Feature pyramid networks for object detection. In: CVPR, July 2017

Lai, W., Huang, J.B., Hu, Z., Ahuja, N., Yang, M.H.: A comparative study for single image blind deblurring. In: CVPR (2016)

Goodfellow, I.J., et al.: Generative adversarial nets. In: NIPS (2014)

Lim, J.H., Ye, J.C.: Geometric GAN. arXiv preprint arXiv:1705.02894 (2017)

Zhang, H., Goodfellow, I., Metaxas, D., Odena, A.: Self-Attention GANs. arXiv:1805.08318v2 (2019)

Ulyanov, D., Vedaldi, A., Lempitsky, V.S.: Instance normalization: the missing ingredient for fast stylization. CoRR, abs/1607.08022 (2016)

Fred, A., Agarap, M.: Deep Learning using Rectified Linear Units (ReLU). arXiv:1803.08375v2, February 2019

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., Salakhutdinov, R.: Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15(1), 1929–1958 (2014)

He, K., Zhang, X., Ren, S., Sun, J.: Identity mappings in deep residual networks. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9908, pp. 630–645. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46493-0_38

Liang, C.H., Chen, Y.A., Liu, Y.C., Hsu, W.H.: Raw image deblurring. IEEE Trans. Multimedia (2020)

Nah, S., et al.: NTIRE 2019 challenge on video deblurring and super-resolution: dataset and study. In: CVPR Workshops, June 2019

Tao, X., Gao, H., Shen, X., Wang, J., Jia, J.: Scale-recurrent network for deep image deblurring. In: CVPR (2018)

Mirza, M., Osindero, S.: Conditional Generative Adversarial Nets. arXiv preprint arXiv:1411.1784v1, November 2014

Xu, L., Zheng, S., Jia, J.: Unnatural L0 sparse representation for natural image deblurring. In: CVPR (2013)

Mao, X., Li, Q., Xie, H., Lau, R.Y.K., Wang, Z.: Least squares generative adversarial networks. arxiv:1611.04076 (2016)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: ICLR (2015)

Zeiler, M.D., Fergus, R.: Visualizing and understanding convolutional networks. CoRR, abs/1311.2901 (2013)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. CoRR, abs/1412.6980 (2014)

Ren, W., Cao, X., Pan, J., Guo, X., Zuo, W., Yang, M.H.: Image deblurring via enhanced low-rank prior. IEEE Trans. Image Process. 25(7), 3426–3437 (2016)

Pan, J., Sun, D., Pfister, H., Yang, M.H.: Blind image deblurring using dark channel prior. In: CVPR, June 2016

Nah, S.: DeepDeblur-PyTorch. https://github.com/SeungjunNah/DeepDeblur-PyTorch

Gong, X., Chang, S., Jiang, Y., Wang, Z.: AutoGAN: neural architecture search for GANs. In: ICCV (2019)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

1 Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Kumar, J., Mastan, I.D., Raman, S. (2022). FMD-cGAN: Fast Motion Deblurring Using Conditional Generative Adversarial Networks. In: Raman, B., Murala, S., Chowdhury, A., Dhall, A., Goyal, P. (eds) Computer Vision and Image Processing. CVIP 2021. Communications in Computer and Information Science, vol 1568. Springer, Cham. https://doi.org/10.1007/978-3-031-11349-9_32

Download citation

DOI: https://doi.org/10.1007/978-3-031-11349-9_32

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11348-2

Online ISBN: 978-3-031-11349-9

eBook Packages: Computer ScienceComputer Science (R0)