Abstract

Object removal is a popular image manipulation technique, which mainly involves object segmentation and image inpainting two technical problems. In the conventional object removal framework, the object segmentation part needs a mask or artificial pre-processing; and the inpainting technique still requires further improving the quality. In this paper, we propose a new framework of object removal using the techniques of deep learning. Conditional random fields as recurrent neural networks (CRF-RNN) is used to segment the target in sematic, which can avoid the trouble of mask or artificial pre-processing for object segmentation. In inpainting part, a new method for inpainting the missing region is proposed. Besides, the representation features are calculated from the convolutional neural network (CNN) feature maps of the neighbor regions of the missing region. Then, large-scale bound-constrained optimization (L-BFGS) is used to synthesize the missing region based on the CNN representation features of similarity neighbor regions. We evaluate the proposed method by applying it to different kinds of images and textures for object removal and inpainting. Experimental results demonstrate that our method is better than the conventional method in terms of inpainting applications and object removal.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Object removal is an important tool in digital photography for image restoring, which aims to remove the object and recovery of missing parts of an image, so that the restored image looks natural. In object removal task, Object segmentation and image inpainting are the key parts.

For the traditional object segmentation which is conducted by artificial or assisted by a mask and so on, the development of research on semantic segmentation based on deep learning [1,2,3,4,5,6] makes the pre-process of segmentation by artificial or mask no longer needed. Zheng et al. [7] proposed a conditional random fields with Gaussian pairwise potentials as recurrent neural networks (CRF-RNN) for semantic segmentation which is competitive in accuracy for semantic object segmentation. Besides, CRF as a classic algorithm applied to image segmentation has been relatively mature and robust. Therefore, in this paper, the method of CRF-RNN is used to segment the object in semantic, which can avoid the trouble of mask or artificial pre-processing for object segmentation. In the research field of inpainting, exemplar-based inpainting methods have made great achievements in recent years. There are a lot of improvements in this area [8,9,10,11]. However, the restored image inpainted by exemplar-based method remains some kind of blur problem in inpainting region. Besides, inpainting becomes increasingly more difficult if missing region is large or if scenes are complex. In recent years, there are many of interesting research results based on the convolutional neural network [12,13,14,15]. One of interesting studies is Gatys et al. [16] generating texture using the convolutional neural network (CNN) features at different layers through matching the CNN features of an input texture. Another way of generating images is Simonyan [17, 18], which maximizes the responses of CNN units. There are already some studies of inpainting based on deep learning[19, 20], but so far still need to further improve the quality of the inpainting of such kind of algorithm.

Inspired by the prosperity of texture synthesis based on CNN features, we consider inpainting image based on convolutional neural network. Moreover, in light of the similarities between performance of convolutional neural networks and biological vision [21,22,23], our work takes the feature maps in forward processes of convolutional neural network as the important information that how humans understand and perceive image content.

In inpainting region, the CNN features are extracted at convolution and pooling layers for missing region and corresponding neighbor region in image. Then, we set the feature-based loss energy to be the square of the difference between the feature maps of missing region and corresponding neighbor region. Besides, we set the Gram matrix-based loss energy to be the square of the difference between the Gram matrix of missing region and corresponding neighbor region. The total loss function is weighted sum of two finite distortion functions, which is a convex optimization problem. Therefore, we use large-scale bound-constrained optimization (L-BFGS) method to optimize the target function and obtain the ultimate inpainting region. Since we take feature maps as a whole unit to optimize, the local optimal problem is avoided.

The remainder of this paper is organized as follows. Section 2 reviews related work and symmetry group theory. In Sect. 3, we first present the framework of our method of semantic object removal and introduce the details of representation features of our method in Sect. 3.1. Section 3.2 explains how we can generate inpainting image and Sect. 4 provides several experiment results in our method. We conclude this paper in Sect. 5.

2 Previous work

2.1 CRF-RNN for object segmentation

Conditional random fields (CRF) is often used for pixel-wise label prediction. CRFs can model these labels when the label of the pixel is used as a random variable to the Markov field and can be globally observed. This global observation is usually the input image. Zheng et al. [7] introduced a new form of convolutional neural network which combines the strengths of convolutional neural networks (CNNs) and CRFs-based probabilistic graphical modelling. This network is well designed for sematic segmentation. To segment the object automatically, we use this network in our framework. In the framework of CRF-RNN, the features for the image are derived by CNN. In the CNN layers, the filters are replaced by edge-preserving Gaussian filters [24] which coefficients depend on the original spatial and appearance information of the image. Besides, CRF is used for pixel-wise labelling. The high-level architecture of CRF-RNN which uses caffe model and network is based on the VGG-16 network.

2.2 Image inpainting

The classical inpainting methods are often based on either local or non-local information in the input image. Total variation (TV) [25]-based approaches, low rank (LR) [26] and PatchMatch (PM) [27], are these kinds of successful inpainting methods. However, these methods are unable to recover the missing information when the missing region is large or arbitrary shape. The inpainting method based on generative models and deep learning become popular recently. Generative adversarial networks (GANs) have been applied in image generating, which can produce high-quality images [28]. However, GANs cannot be directly applied to image inpainting. GAN can only be used to produce an entire image. Variational autoencoders (VAEs) [29] are another popular approach to generate overly smooth images. The context encoder (CE) [19] can be viewed as an autoencoder technique for image inpainting. However, it results in blurry or unrealistic images.

In recent years, CNN-based method for texture synthesis becomes popular. Gatys et al. [30] proposed a new parametric texture model which used CNN as the foundation for their texture model. Cimpoi et al. [31, 32] have provided a fruitful new analysis tool for studying visual perception with CNN. Based on their work, the feature representation in this paper is represented with CNN. The VGG network was extensively used and introduced in recent object recognition research works which are on the basis of convolutional neural network. Considering the well design of VGG, we also use VGG-16 (VGG-19 is suitable in this paper, and we are just randomly choosing one of them) network in our work.

Inspired by VGG-16 network’s architecture, our convolutional neural network computations are mainly based on linearly rectified convolution and average pooling. The convolution filters have the size of \(3\times 3\times k\), where k is the number of input feature maps. The size of pooling windows is \(2\times 2\) in non-overlapping regions and the convolutional layer is followed by an average pooling layer.

3 Object removal using deep learning

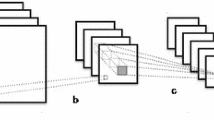

The proposed framework of our method is shown in Fig. 1. To segment the object semantically, the CRF-RNN method is used in segmentation part in our framework. Our others contributions are focused on inpainting the removed parts in which each removed region is divided into two inpainting parts (see Fig. 2). For example, in Fig. 2, we divide the image into three kinds of regions. The region to be inpainted is combined by the central region and the surrounding region. We call the rest part in the image the neighbor region. The central region is a white area without any information and the surrounding region contains some information. These two kinds of regions are inpainted separately. We take the surrounding region as the transition region between central region and neighbor region. In the step of inpainting the surrounding region, in general, the surrounding region is divided into four sub-parts. The most similar region in the neighbor region is found for each sub-part. The similarity is defined by sum absolute difference (SAD). We use the method of [33] to obtain the best candidate patch for the surrounding neighbor region. Then, the CNN features are extracted at convolution and pooling layers (layers of Conv1-1, pool1, pool2, pool3, and pool4) for the surrounding region and its corresponding neighbor region. Based on the CNN features, we design loss function. At last, large-scale bound-constrained optimization (L-BFGS, [34]) method is used to optimize the loss function and get the ultimate result. In the step of inpainting the central region, to avoid the discontinuity between central region and the surrounding region, there exists overlaps region between them. The overlap region is usually 5 pixels. The treatment process of central region is similar to the process of surrounding region and the flow chart which we described before is shown in Fig. 3. The details of extracting CNN features and optimization method are separately introduced in Sects. 3.1 and 3.2.

3.1 Loss function based on feature maps of CNN

We obtain feature representation for one image using the feature space provided by the convolutional and pooling layers in convolutional neural network. Briefly, convolutional neural network is described in previous in this paper. Based on VGG network, we use the feature space provided by the convolutional and pooling layers. The fully connected layers are not used in our method. Besides, the caffe model and trained network can be publicly available and be explored in the convolutional architecture for fast feature embedding caffe framework [35].

Vector \(\mathbf {x}\) is the image block which we want to extract features. Then, \(\mathbf {x}\) is passed through the convolutional neural forward network and compute the activations for each layer l in the network. If a layer has \(N_{l}\) distinct filters, then there are \(N_{l}\) feature maps in one layer. We set the size of feature map as \(S_{l}\) which is the product of height and width of the feature map. The activation of the ith filter at position j in layer l is \(f^{l}_{ij}\). Therefore, the responses in a layer l are a matrix \({F^{l}} \in {R^{N_{l} \times {M_{l}}}}\), and the elements are \(f^{l}_{ij}\).

When we inpaint the surrounding region, on one hand, we want to partially preserve the information of the surrounding region. On the other hand, we are synthesizing new textures based on neighborhood information. Therefore, the existing texture of surrounding region c is treated as the content control information. In addition, the texture in candidate neighbor region S is treated as the style control information. Correspondingly, two kinds of loss functions are calculated. One is feature map-based loss function which is used to be the initial image optimized to be similar to the existing texture of surrounding region in content. Another one is Gram matrix-based loss function which is used to be the initial image optimized to be similar to the texture of candidate neighbor region in style.

Supposing that t is the image block that we want to generate inpainted region. It is randomly initialized. Let \(\mathbf {c}\) is one image block in the surrounding region image which is treated as the content control texture, and \(F_{c}\) and \(F_{t}\) are, respectively, feature maps, representing c and t in layer l. Then, the squared-error loss between the two feature map representations in layer l can be defined by following equation:

The feature map-based total loss is as follows:

where \(w_{c,l}\) are weighting factors of the contribution of each layer to the feature map-based total loss. The content weight for each layer equals 1.

Supposing that s is one of best match image blocks in neighbor region. We put s through convolutional neural network. On top of the CNN responses in each feature map of the network, a style representation is built which computes the spatial correlations reflecting the image information of the feature map to some extent. These feature correlations are provided by the Gram matrix \({G^{sl}} \in {R^{N_{l} \times {M_{l}}}}\), where \(G^{sl}_{ij}\) is the inner product between the vectorised feature map i and j in layer l:

To compute the correlations between the different filter responses, the feature correlations are given by the \({G^{fl}} \in {R^{N_{l} \times {M_{l}}}}\) either, where \(G^{fl}_{ij}\) is the inner product between the vectorised feature map i and j in layer l:

It can be found that:

\(G^{sl}_{ij}\) is the transpose of \(G^{fl}_{ij}\). Therefore, if we set \(G^{l}=G^{sl}=(G^{fl})^{T}\), the \(G^{l}\) not only represents the spatial correlation information but also stands for the correlation information between the different filter responses.

To generate a new inpainting region that is similar to the candidate image block in neighbor region in style, we use gradient descent from one initial image to find another image that matches the style representation of the neighbor region. This is done through minimizing the mean-squared distance between the entries of the Gram matrix [36,37,38].

Therefore, let \(\mathbf {s}\) and \(\mathbf {t}\) be the best match image block in neighbor region and the inpainting region to be generated, and set \(S^{l}=S^{sl}=(S^{fl})^{T}\) and \(T^{l}=T^{sl}=(T^{fl})^{T}\) are their respective Gram matrixes in layer l. The contribution of that layer to the loss is then as follows:

The Gram matrix-based total loss is as follows:

where \(w_{s,l}\) are weighting factors of the contribution of each layer to the style control total loss. Style control weight equals 5 for each layer in our experiment.

If we want to calculate the values of loss functions between central region and corresponding candidate neighbor region, we just replace \(\mathbf {c}\) and \(\mathbf {s}\) in Eqs. (1) and (6) with the padded central region (central region added overlap region) and the candidate neighbor region corresponded to padded central region.

3.2 Inpainting region generated using L-BFGS

3.2.1 The total loss function

At the beginning of Sect. 3, we have presented that we will inpaint surrounding and central regions separately. The core idea is based on the original texture information to generate new texture in white space. For the central region, although it is a white space, in the practical process, we add a padding to the central region. Besides, the padding region is the overlap region and the purpose of doing this is to concern the continuity of texture.

Based on the loss functions, we calculated in Sect. 3.1 which we define the total loss function as follows:

where \(\alpha =0.0001\) and \(\beta =1\). We set \(\alpha \ll \beta \), because, on one hand, we want to make sure that the style image works in the optimization process, and on the other hand, we expect the generated image to be statistically more similar to the target image rather than the content consistent with the target image. When we are inpainting the surrounding region, c is the image block in surrounding region and s is the corresponding image block in neighbor region. We replace c and s accordingly with padded central region and corresponding image block in neighbor region when we are inpainting the central region. t refers to our ultimate synthesized inpainted image block.

From Eq. (8), we can find that the object function is weight sum of two finite distortion function. The boundaries are in the control of two source textures of image. Therefore, finding the best inpainted image block \(\mathbf {t}\) for \(f(\mathbf {t})\) is a convex optimization problem. Then, our target is to find \(\mathbf {t}\) to minimize \(f(\mathbf {t})\), which is

We perform gradient descent on \(f(\mathbf {t})\) to find the Hessian matrix satisfied the minimization and the gradient of \(f(\mathbf {t})\) is as follows:

From Eq. (1), the derivative of content control total loss \(E_{c,l}\) with respect to the activations in layer l equals:

From Eq. (6), the derivative of Gram matrix-based total loss \(E_{s,l}\) with respect to the activations in layer l equals:

To use L-BFGS to optimize \(f(\mathbf {t})\), we save the 20 (this value is usually between 3 and 20) times iterative values of \(f(\mathbf {t})\) and \(\frac{\partial f}{\partial \mathbf {t}}\). The inverse Hessian matrix \(B_{i}=H^{-1}_{i}\) has the iterative relation:

where

where \(S_i=f(\mathbf {t}_{i+1})-f(\mathbf {t}_{i})\), and \(r_i=\frac{1}{(\frac{{\partial f}}{{\partial \mathbf {t}_{i+1}}} -\frac{{\partial f}}{{\partial \mathbf {t}_{i}}})^{T}S_i}\) is the learn rate. According to the equation, we described before we send the total loss function \(f(\mathbf {t})\) and gradient value \(\frac{{\partial f}}{{\partial \mathbf {t}}}\) to the L-BFGS model to calculate the best value t to satisfy Eq. (9).

3.2.2 Large-scale bound-constrained optimization

Large-scale bound-constrained optimization which is a limited-memory algorithm for solving large nonlinear optimization problems subjects to simple bounds on the variables developed from Quasi-Newton method. Before the application of Quasi-Newton method, there were gradient descent method, Newton method, and conjugate gradient method. All the methods mentioned before are designed to solve the optimization problem. Meanwhile, these methods are through calculating Hessian matrix or gradient descent to find the optimize result. In Quasi-Newton method, let g(x) be the objective function and the g(x) can be represented in the following equation:

where \(x_{i+1}\) is one value in domain and \(H_{i+1}\) is the Hessian matrix in \(x_{i+1}\).

From Eq. (14) formula derivation and ignoring high small order item, we can get

Let \(x=x_{i}\), then

If we let \(B_{i+1}=H_{i+1}^{-1},\) then we need to calculate the \(B_{i}\) for the optimized result. Let

and

and we save the nearest number of m \(t_{i}\) and \(s_i\). Therefore, L-BFGS has

where \(r_i=\frac{s_{i-1}^{T}t_{i-1}}{t_{i-1}^{T}t_{i-1}}\). We set the initial value \(B_{i}^{0}=r_{i}I\). In our method of inpainting image, we will use Eq. (15) to calculate the restored regions. \(\mathbf {c}\) is the surrounding region and \(\mathbf {s}\) is candidate neighbor region in Eq. (8) when we inpaint the surrounding region. \(\mathbf {t_1}\) is random initialization in the size of the \(\mathbf {c}\). Besides, the corresponding total loss function \(f(\mathbf {t_1})\) and gradient value \(\frac{{\partial f_1}}{{\partial \mathbf {t_1}}}\) are used to calculate \(B_{1}\) in Eq. (13). Based on the equations in L-BFGS we described before calculating the \(\mathbf {t_2}\). Then, \(\mathbf {t_2}\) is used to calculate \(B_{2}\). This iterative process will continue until it reaches to the maximum steps of the iteration or the value of \(B_{i}\) is nearly unchanged. The last value of \(\mathbf {t_i}\) is the ultimate result that we needed. We transfer the \(\mathbf {t_i}\) to an image which is the inpainting region for surrounding region. \(\mathbf {c}\) is the padded central region and \(\mathbf {s}\) is corresponding candidate neighbor region in Eq. (8) when we inpaint the central region. The following processing is the same as the process of surrounding region. We transfer the \(\mathbf {t_i}\) to an image which is the inpainting region for central region. The whole inpainting region is combined by these two inpainting regions and our method results are shown in Sect. 4.

4 Results and analysis

We use VGG filters of Simonyan and Zisserman [17], and use the platform of caffe framework [35]. We implemented our algorithm in python and ipython. Our implementation is on graphic processing unit (GPU) of NVIDIA GTX980 and our computer is a 24-core Dell server with an Intel Xeon CPU of 2.5-GHz and 64-GB RAM. Here are the listed parameters in experiments: content layers: [’conv1\(\_\)1’, ’ conv2\(\_\)1’, ’ conv3\(\_\)1’, ’ conv4\(\_\)1’, and ’ conv5\(\_\)1’]

content weights: [1,1,1,1,1]

learning rate: 0.001

loss network: ’models/vgg16.t7’

LBFGS \(\hbox {num}\_\hbox {iterations}\): 2000

padding type: ’reflect-start’

pre-processing: ’vgg’

style layers: [’conv1\(\_\)1’, ’ conv2\(\_\)1’, ’ conv3\(\_\)1’, ’ conv4\(\_\)1’, ’ conv5\(\_\)1’]

style weights: [5, 5, 5, 5,5]

use cudnn: 1

We first compare our method with the classical exemplar-based method [39] and then compare our method with the method of context encoders [19] and nearest neighbor (NN) inpainting (which forms the basis of Hays et al. [40]). The method of context encoders is based on deep learning. For semantic inpainting, we compare against NN-inpainting. NN-inpainting is the classical semantic inpainting. At last, we shown the compared results of peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) of our method and the other three kind methods. The images in our paper are derived from the site http://www.cgtextures.com/ and PASCAL VOC 2007 [41].

In Figs. 4 and 5, we experiment with images from ImageNet data set. We randomly select the images containing object of single or multi. In Fig. 4c exists obviously blur problem in the inpainting region. While compared exemplar-based method, the result of our method is more clear. More results are shown in Fig. 5. In Fig. 5, Column (a) is the original image; column (b) is the result inpainted by exemplar-based method which exists blur problem; and column (c) is the result synthesized by our method. Rows 1, 2, and 4 are experiment results for single object. Row 3 and 5 are experiment results for multi objects. Compared method of exemplar-based method our method is more clear in inpainting region.

To be compared with the exemplar-based method in quality assessment, we randomly remove one part region from original image and inpaint it separately using method of ours and exemplar-based. We use PSNR to evaluate restored image quality and the experimental results are shown in Fig. 6. The example images are choose from the data sets of Paris StreetView and ImageNet. Where column (a) is the original image; column (b) is the damaged image; column (c) is the result inpainted by exemplar-based method; column (d) is the result synthesized by our method. In these experiment results, the PSNR of our inpainting image is a little higher than exemplar-based one. In row one, the stone pillars inpainted by exemplar-based method are twist. Such kind of synthesized errors appeared in other rows of result of exemplar-based method. Besides, these errors do not appear in the image restored by our method.

Figure 7 shows two example results of our method compared with NN-inpainting and context encoders. As shown in Fig. 7, that our method improve the improve results over a hand-designed damaged image. Table 1 reports quantitative results on StreetView and ImageNet data sets. Figure 8 is the quantitative results of PSNR and SSIM. Where the 30 images are randomly selected from the data sets. These results show that our method is competitive for image inpainting.

Our method can be used to generate a transition region by combining two textures. The textures in Figs. 9 and 10 are derived from the site http://www.cgtextures.com/. Figures 9, 10 are experimental results that textures from one kind gradually to another one. Figure 9 is the experimental result that texture of regular changes gradually to irregular one. In texture exemplar1, the bricks are arranged in a rule. These bricks graded into different shapes of pebbles. Figure 9a is the experimental result of exemplar-based method, in which the brick twisted in transition zone, and there are some large-scale blocks which not exist in texture exemplar1. Figure 9b is experimental result of our method. From Fig. 9b, we can find that our synthesized texture not exists such kind of artificial. In Fig. 10a, there are blurred areas in transition zone, which is the disadvantage in method of exemplar-based method. The mistake in the experimental results for the method of exemplar-based origins from the patch-based synthesis method always brings about large-scale structures in synthesized texture. Their method is easy to fall in local optimal. Since we take feature maps as a whole unit to optimize, the local optimal problem is avoided. From the experimental results shown above, we can find that our method can generate more comfortable biological vision texture than the method of exemplar-based.

5 Conclusion

In this conceptual paper, we explore a new framework for semantic object removal with inpainting based on CNN feature maps, and illustrate that it is robust for most kind of images. Our method can remove the semantic object automatically without a mask or artificial pre-processing. However, the sematic segmentation uses method of CRF-RNN whose quality needed a further improvement. The next step of our works maybe focus on the quality improvement on sematic segmentation. Besides, the optimization efficiency of L-BFGS is not high, so, in the next step, we consider optimizing this step.

References

Li, Z., Tang, J.: Weakly supervised deep matrix factorization for social image understanding. IEEE Trans. Image Process. 26(1), 276–288 (2017)

Girshick, R., Donahue, J., Darrell, T., et al.: Region-based convolutional networks for accurate object detection and segmentation. Pattern Anal. Mach. Intell. IEEE Trans. 38(1), 142–158 (2016)

Alexe, B., Deselaers, T., Ferrari, V.: Measuring the objectness of image windows. IEEE Trans. Pattern Anal. Mach. Intell. 34(11), 2189C2202 (2012)

Han, J., Zhang, D., Cheng, G., Guo, L., Ren, J.: Object detection in optical remote sensing images based on weakly supervised learning and high-level feature learning. IEEE Trans. Geosci. Remote Sens. 53(6), 3325–3337 (2015)

Zhang, D., Han, J., Li, C., Wang, J., Li, X.: Detection of co-salient objects by looking deep and wide. Int. J. Comput. Vis. 120(2), 215–232 (2016)

Zhang, D., Han, J., Han, J., Shao, L.: Cosaliency detection based on intrasaliency prior transfer and deep intersaliency mining. IEEE Trans. Neural Netw. Learn. Syst. 27(6), 1163–1176 (2016)

Zheng, S., Jayasumana, S., Romera-Paredes, B.,Vineet, V., Su, Z., Du, D.: Conditional random fields as recurrent neural networks. In: Proceedings of the IEEE international conference on computer vision, pp 1529–1537 (2015)

Darabi, S., Shechtman, E., Barnes, C., Goldman, D. B., Sen, P.: Image melding: combining inconsistent images using patch -based synthesis. Trans. Gr. 31(3), article 82 (2012)

Liang, Z., Yang, G., Ding, X., et al.: An efficient forgery detection algorithm for object removal by exemplar-based image inpainting. J. Vis. Commun. Image Represent. 30, 75–85 (2015)

Ruzic, T., Pizurica, A.: Context-aware patch-based image inpainting using Markov random field modeling. Image Process. IEEE Trans. 24(1), 444–456 (2015)

Carreira, J., Sminchisescu, C.: CPMC: automatic object segmentation using constrained parametric min-cuts. IEEE Trans. Pattern Anal. Mach. Intell. 34(7), 1312C1328 (2012)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems, pp 1097–1105 (2012)

Taigman, Y., Yang, M., Ranzato, M., Wolf, L.: Deepface: Closing the gap to human-level performance in face verification. In: Computer vision and pattern recognition (CVPR),2014 IEEE conference on, 1701C1708 (2014)

Cheng, G., Zhou, P., Han, J.: Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 54(12), 7405–7415 (2016)

Yao, X., Han, J., Cheng, G., Qian, X., Guo, L.: Semantic annotation of high-resolution satellite images via weakly supervised learning. IEEE Trans. Geosci. Remote Sens. 54(6), 3660–3671 (2016)

Gatys, L.A., Ecker, A.S., Bethge, M.A.: Neural algorithm of artistic style. arXiv preprint arXiv:1508.06576 (2015)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

Simonyan, K., Vedaldi, A., & Zisserman, A.: Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034 (2013)

Pathak, D., Krahenbuhl, P., Donahue, J., et al.: Context encoders: Feature learning by inpainting. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp 2536–2544 (2016)

Li, Z., Tang, J.: Weakly supervised deep metric learning for community-contributed image retrieval. IEEE Trans. Multimed. 17(11), 1989–1999 (2015)

Cadieu, C.F., Hong, H., Yamins, D.L.K., et al.: Deep neural networks rival the representation of primate IT cortex for core visual object recognition. PLoS Comput. Biol. 10(12), e1003963 (2014)

Gl, U., van Gerven, M.A.J.: Deep neural networks reveal a gradient in the complexity of neural representations across the ventral stream. J. Neurosci. 35(27), 10005–10014 (2015)

Khaligh-Razavi, S.M., Kriegeskorte, N.: Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS comput. biol. 10(11), e1003915 (2014)

Paris, S., Durand, F.: A fast approximation of the bilateral filter using a signal processing approach. IJCV 81(1), 24C52 (2013)

Afonso, M.V., BioucasDias, J.M., Figueiredo, M.A.T.: An augmented Lagrangian approach to the constrained optimization formulation of imaging inverse problems. IEEE Trans. Image Process. 20(3), 681 (2011)

Hu, Y., Zhang, D., Ye, J., Li, X., He, X.: Fast and accurate matrix completion via truncated nuclear norm regularization. IEEE Trans. Pattern Anal. Mach. Intell. 35(9), 2117 (2013)

Barnes, C., Shechtman, E., Dan, B. G., Dan, B.G.: PatchMatch: a randomized correspondence algorithm for structural image editing. ACM SIGGRAPH 28, 24 (2009)

Zhu, J.Y., Kr?henbhl, P., Shechtman, E., Efros, A.A.: Generative visual manipulation on the natural image manifold. Comput. Vis. ECCV 2016. Springer, Berlin (2016)

Kingma, D. P., Welling, M.: Auto-encoding variational bayes. arXiv preprint 1312.6114 arXiv:1312.6114 (2013)

Gatys, L., Ecker, A.S., Bethge, M.: Texture synthesis using convolutional neural networks. In: Advances in Neural Information Processing Systems, pp 262–270 (2015)

Cimpoi, M., Maji, S., Kokkinos, I., Mohamed, S., Vedaldi, A.: Describing textures in the wild. In: IEEE Conference in Computer Vision and Pattern Recognition (CVPR), pp 3606–3613 (2014)

Cimpoi, M., Maji, S., Kokkinos, I., Vedaldi, A.: Deep filter banks for texture recognition, description, and segmentation. Inter. J. Comput. Vis. 118(1), 65–94 (2016)

Zhu, S., Ma, K.-K.: A new diamond search algorithm for fast block matching motion estimation. Image Process. IEEE Trans. 9(2), 287–290 (2000)

Zhu, C., Byrd, R.H., Lu, P., Nocedal, J.: Algorithm 778: L-BFGS-B: Fortran subroutines for large-scale bound-constrained optimization. ACM Trans. Math. Softw. TOMS 23(4), 550C560 (1997)

Jia, Y., Shelhamer, E., Donahue, J., Karayev, S., Long, J., Girshick, R., Darrell, T.: Caffe: Convolutional architecture for fast feature embedding. In: Proceedings of the 22nd ACM international conference on Multimedia. ACM, Orlando, Florida, USA. pp 675–678 (2014)

Heeger, D.J., Bergen, J.R.: Pyramid-based texture analysis/synthesis. In: Proceedings of the 22nd annual conference on Computer graphics and interactive techniques. pp 229–238 (1995)

Portilla, J., Simoncelli, P.: A parametric texture model based on joint statistics of complex wavelet coefficients. Int. J. Comput. Vis. 40(1), 49–71 (2000)

Xie, X., Tian, F., Seah, H.S.: Feature guided texture synthesis (fgts) for artistic style transfer. In: Proceedings of the 2nd international conference on Digital interactive media in entertainment and arts. pp 44–49 (2007)

Criminisi, A., Prez, P., Toyama, K.: Region filling and object removal by exemplar-based image inpainting. IEEE Trans. image Process. 13(9), 1200–1212 (2004)

Hays, J., Efros, A.A.: Scene completion using millions of photographs. Commun. ACM 51(10), 87–94 (2008)

Everingham, M., Eslami, S. A., Van Gool, L., Williams, C. K., Winn, J., Zisserman, A.: The pascal visual object classes challenge: A retrospective. Int. J. comput. vis. 111(1), 98–136 (2015)

Acknowledgements

We thank the anonymous reviewers and the editor for their valuable comments. This work has been supported by The National Natural Science Foundation of China (Nos. 61772387 and 61372068), the Research Fund for the Doctoral Program of Higher Education of China (No. 20130203110005), the Fundamental Research Funds for the Central Universities (No. K5051301033), the 111 Project (No. B08038), and also supported by the ISN State Key Laboratory.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by C. Xu.

Rights and permissions

About this article

Cite this article

Cai, X., Song, B. Semantic object removal with convolutional neural network feature-based inpainting approach. Multimedia Systems 24, 597–609 (2018). https://doi.org/10.1007/s00530-018-0585-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00530-018-0585-x