Abstract

Ensuring correct functionality of an integrated system requires accurate evaluation of the on-chip electrical characteristics. General purpose analysis methods, based on modified nodal analysis (MNA) and the partial element equivalent circuit method, enable accurate analysis of the behavior of power networks. The runtime of these methods however grows superlinearly with the size of the system, rendering these techniques impractical for analyzing modern VLSI systems. Different methods for accelerating MNA are reviewed in this chapter. In domain decomposition, for example, circuit analysis is efficiently parallelized, enabling faster processing if large computational resources are available. Using hierarchical matrices, the sparsity pattern of the Laplacian matrix is exploited to reduce the complexity of the analysis process. Alternative algorithms for circuit analysis are also reviewed, including the infinite mesh grid model and random walks. These techniques reduce the complexity of the circuit analysis process by avoiding costly MNA to evaluate the electrical behavior of an integrated system.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- History of circuit analysis

- Modified nodal analysis

- Laplacian matrix

- Capacitance matrix

- Inductance matrix

- Transient analysis

- Scattering parameters (S-parameters)

- Random walk based circuit analysis

- Multigrid techniques

- Domain decomposition

- Iterative numerical methods

- Incidence matrix

- Degree matrix

- S − parameters

- Algebraic multigrid

- Geometric multigrid

- Hierarchical matrix

- History of circuit analysis tools

- Infinite grid

- Circuit analysis

High level design of VLSI systems assumes correct functionality of the underlying electrical circuits. Digital systems, for example, utilize clearly distinguishable binary signals. At lower abstraction layers, however, the electrical signals behave similar to analog signals. The electrical waveforms therefore satisfy stringent requirements, such as propagation delay, slew rate, and power dissipation, to satisfy signal integrity requirements. To evaluate these waveforms, circuit level analysis of VLSI systems is necessary. Due to the significant increase in the speed and complexity of integrated systems, accurate and computationally efficient circuit analysis has gained critical importance over the past decades.

Since the establishment of a mathematical structure for circuit theory in 1827 by G. S. Ohm [394], different methods for circuit analysis have been reported in the literature. A graph theoretic basis for circuit analysis was described in 1847 by G. R. Kirchhoff [256] by postulating two laws governing the current and voltage relationship within an arbitrary electrical circuit. By the late 19th century, the theory of transient and alternating current was developed, incorporating the concepts of capacitance and inductance into the circuit analysis process [395]. Nonlinear circuit theory emerged in the early 20th century, driven by the advent of nonlinear devices, particularly vacuum tubes [396].

Entering the era of integration, the need for accurate analysis of complex systems motivated the development of circuit simulation tools. Tensor analysis of electrical circuits, pioneered in 1934 by G. Kron [397], was a crucial precursor of early circuit simulators. The Transistor Analysis Program (TAP), developed in 1959 [398], is considered the earliest circuit simulation program [399]. Based on TAP, more advanced simulation tools were developed, including NET1 in 1963 [400] and SCEPTRE in 1967 [401], capable of handling a wide range of circuits, including both passive and nonlinear components. Important advancements in numerical integration, driven primarily by H. Shichman [402, 403], were vital in creating CIRcuit analysis PACkage (CIRPAC) [403] which exhibited an order of magnitude speedup as compared to other simulators of the time.

The application of sparse matrix analysis to circuit simulation was a crucial advancement in the Advanced STatistical Analysis Program (ASTAP), developed in IBM in 1971 [404], significantly reducing memory requirements. Variable time step integration further improved accuracy and runtime by increasing or reducing the time resolution if the rate of change in the parameters is, respectively, high or low [404]. Other notable circuit simulators of the early 1970’s include Computer Analysis of Nonlinear Circuits, Excluding Radiation (CANCER) [260], and Simulator for Linear Integrated Circuits (SLIC) [261], which utilized advanced linear algebraic methods for linearization, numerical stability, and accuracy control. An important feature of CANCER and SLIC was the user friendly input description language that contributed to the widespread adoption of these tools in both the industrial and academic communities. An example of a circuit described in the SLIC language is shown in Fig. 5.1. Note the similarity with current circuit simulation tools. Since an electrical circuit is fundamentally a graph, only connectivity information is required to describe a circuit, enabling efficient textual representation of the system.

An example of AC analysis of a low pass filter using SLIC [261]. a) Target circuit, and b) SLIC code. Resistor R1, inductor L1, and capacitor C1 are described on lines 4 to 6. Note the similarity with SPICE syntax. The TEMP statement specifies the operating temperature of the circuit. The GAIN statement specifies the output (03 and 00) and input (01 and 00) ports and the type of analysis (e.g., AC voltage transfer function). The range of frequencies is 0.1 to 100 MHz with ten points per decade, as specified in the FREQ statement on line 3. The input is terminated with the END statement.

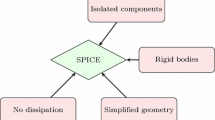

The popularity of CANCER in the academic community motivated the development in 1973 of the open source Simulation Program with Integrated Circuit Emphasis, commonly known today as SPICE [399]. The second version of SPICE, released in 1975 [50], became the worldwide standard for circuit simulation. The success of SPICE2 can be largely attributed to the applicability of the tool to a wide range of linear and nonlinear circuits. This crucial feature of SPICE2 is achieved by utilizing modified nodal analysis (MNA), a robust method for numerical circuit analysis [405].

5.1 Modified nodal analysis

First presented in 1975 [64], MNA is a versatile method for analyzing linear circuits. The impedances, current sources, voltage sources, and nonlinear devices within a circuit are described in matrix form. If the conductance of each wire, voltage of each voltage source, and current of each current source is known, the potential difference across each edge (i.e., a circuit element, such as a resistor or current source) can be determined.

Suppose a circuit is represented by a directed multigraph G = (V, E), the direction of the edges is arbitrary chosen, and the edge set is composed of five subsets,

each representing, respectively, independent voltage sources, independent current sources, resistors, capacitors, and inductors. Recall from Subsection 3.4.1 that Y d is the incidence matrix of a directed graph where an entry is

The elements within the network can be ordered such that

where \(\mathbf {v} \in \mathbb {R}^{|E|}\) and \(\mathbf {i} \in \mathbb {R}^{|E|}\) are vectors of, respectively, the voltage across and current through the corresponding element, and subscripts v, i, r, c, and l indicate the type of circuit element, respectively, the independent voltage and current sources, resistors, capacitors, and inductors. The elements of \({\mathbf {i}}_i \in \mathbb {R}^{|E_i|}\) represent the current through the independent current sources and are known a priori. The remaining current and voltage vectors are related via the following relationships [406],

where \(\mathcal {G} \in \mathbb {R}^{|E_r|\times |E_r|}\) and \(\mathcal {C} \in \mathbb {R}^{|E_c|\times |E_c|}\) are diagonal matrices representing, respectively, the conductance and capacitance of the respective elements, and \(\mathcal {L} \in \mathbb {R}^{|E_l|\times |E_l|}\) is the inductance matrix representing the self- and mutual inductance within a circuit. Note that \(\mathcal {L}\) is a diagonal matrix if the mutual inductances are ignored.

The primary equation governing the static analysis of circuits without dependent sources can be formulated as

where \(\mathbf {e}\in \mathbb {R}^{|V|}\) is the vector of voltage at each node, and \(G = Y_g\mathcal {G}Y_g^T\) is the conductance matrix of a resistive network.

By constructing and solving (5.9), the steady state voltage at each node can be determined. Practical VLSI circuits however contain circuit elements that display transient behavior. These elements include linear primitives, such as capacitors and inductors, and nonlinear elements, such as transistors and memristors. To model the behavior of these elements, numerical differentiation is applied. Each transient element is replaced by an equivalent circuit element called a companion model that includes resistors and independent sources. For example, the transient current i C(t) through a capacitor as a function of time t is

where C is the capacitance and v C is the voltage across the capacitor. Discretization by the Backward Euler method yields

where t k−1 and t k are consecutive discrete time instants, and h is the time step. This expression is equivalent to

where g eq is the equivalent instantaneous conductance of the capacitor,

and i eq is the equivalent current source across the capacitor,

During transient analysis, a capacitor is replaced by an equivalent companion model, as shown in Fig. 5.2a. During each time step, the transient parameters within the model are adjusted, modeling the instantaneous behavior of the element.

In matrix form, the companion models transform (5.9) into

or, in a more compact form,

where

and \(C = Y_c\mathcal {C}Y_c^T\) is the capacitance matrix [406].

Discretizing (5.15) yields

where k is the iteration number. Equation (5.19) is a system of linear equations. MNA-based transient analysis therefore requires an iterative solution of a system of linear matrix equations for each time step.

The primary advantage of MNA is versatility. Any linear circuit can be analyzed with MNA. To analyze nonlinear devices within a circuit, such as transistors, memristors, and magnetic tunnel junctions, linearized models are used [407, 408]. These models approximate the device behavior around a specific operating point. The computational and memory complexity of MNA is however of great concern. The runtime to solve a linear equation grows superlinearly with the number of nodes, requiring significant computational time for large systems, as in VLSI systems [145]. Furthermore, matrices \(\tilde {G}\) and \(\tilde {C}\) lose the symmetric positive definite (SPD) property in the presence of independent voltage sources. Efficient algorithms suited for SPD matrices, such as Cholesky factorization [409] or conjugate gradient method [410], can therefore no longer be used to solve (5.15), requiring more expensive algorithms such as LU factorization [411].

To preserve the SPD property, those circuit elements producing the voltage source and additional nodes within the network can be transformed using a Norton equivalent circuit to eliminate the voltage source and any associated rows and columns. An example of a Norton equivalent of an independent voltage source connected in series with a resistor is shown in Fig. 5.2b [412]. By eliminating the independent voltage sources, (5.15) becomes

yielding the SPD matrix \(\left (\tilde {G} + \frac {2}{h}\tilde {C}\right )\) in (5.19) [406].

Despite restoring the SPD property, the analysis of large circuits requires significant computational resources. Direct linear matrix solvers place excessive demand on the memory during computations of extremely large networks. Alternative methods have been introduced to circumvent the superlinear complexity of the circuit analysis process. For example, a significant structural similarity exists between linear electrical circuits and finite element discretization of partial differential equations (PDE). Methods for accelerated solution of PDEs are therefore often applicable to the analysis of linear circuits. Many approaches utilize graph theory to accelerate the analysis process. A variety of techniques for fast circuit analysis is described in the upcoming sections.

5.2 Iterative numerical methods

Both DC and transient forms of MNA can be represented as a standard system of linear equations [65],

where x is the vector representing the voltage at each node and the current through each voltage source within a network, and b is the vector of the current being injected and the voltage sources. Network models of modern ICs are prohibitively large, disallowing the use of direct solution methods such as LU factorization or Cholesky factorization [411]. Iterative solvers, such as the conjugate gradient (CG) method [410] or generalized minimal residual method (GMRES) [413], should therefore be used to circumvent this limitation.

Reformulating (5.21) yields

If vector x is replaced by vector x ′, (5.21) becomes

where r is called a residual. Observe that the norm of the residual ||r|| becomes smaller if x ′ is close to x. Iterative linear equation solvers attempt to minimize ||r|| by iteratively adjusting vector x ′, thereby closely approximating the exact solution x.

Classic iterative algorithms are stationary methods [414] that represent a system of equations as

where x k is the approximation of the solution after the k th iteration, and matrix B and vector c depend upon the chosen iterative method. Note that matrix B and vector c remain invariant during the iterative process, hence the methods are called stationary [415]. Classical stationary methods operate by splitting matrix A into two matrices [415],

The k th iteration of these methods is

Common iterative methods suggest different methods for splitting matrix A [416],

where ω is the relaxation parameter, E and F are, respectively, the strictly lower and strictly upper triangular parts of A, and D is the diagonal part of A.

Advanced iterative methods are typically not stationary, i.e., the terms of (5.24) are not maintained constant. The CG method [410] is one of the most common non-stationary iterative method for solving systems of the form described by (5.21) when matrix A is symmetric positive definite (SPD). The algorithm is based upon the observation that the exact solution x minimizes a convex function,

The solution of (5.21) is determined by minimizing f(x) via gradient descent [417]. The gradient of function f(x) is

The solution is found by iteratively shifting f(x) in the direction of steepest descent,

or, alternatively,

where α k is the step size during iteration k.

A limitation of the CG method is the limited applicability of the algorithm, since only SPD matrices are considered. Furthermore, the upper bound on the number of CG iterations before converging is equal to the size of the matrix, impractical for large systems. A large variety of solvers is proposed that partially or fully overcome these limitations, including the BIConjugate Gradient (BICG), BIConjugate Gradient STABilized (BICGSTAB), MINimal RESidual (MINRES), and Generalized Minimal RESidual methods (GMRES) [418].

Preconditioning is often used to accelerate the convergence of the iterative methods. During preconditioning, a linear matrix equation is transformed into

where

and M = M 1 M 2 is a nonsingular matrix called a preconditioner [418]. After solving (5.32), (5.34) is solved to determine x. Proper choice of the preconditioner accelerates the convergence of the iterative solvers, ensuring that determining M and solving (5.32) and (5.34) are more efficient than solving the original system. The computational cost of producing the preconditioner should however be small since the difficulty in producing matrix M negates the computational benefits.

Matrix M, described in (5.25), and (5.27a) to (5.27d) can be used as a preconditioner during the circuit analysis process [416]. Several features make methods (5.27a) to (5.27d) attractive. Systems involving matrix M are relatively easy to solve, since M is either diagonal or triangular. Systems produced in practical VLSI systems are sparse diagonally dominant matrices. The diagonal elements of a Laplacian matrix of an underlying graph are typically larger than the non-diagonal elements. The number of nodes of an underlying circuit graph is proportional to the number of edges, since most nodes are only connected to the immediate neighbors. Systems composed of sparse diagonally dominant matrices are well suited for preconditioning using split matrices, significantly accelerating the convergence process [418].

Other popular preconditioning approaches exist that include incomplete factorization and approximate inverse. Incomplete LU (ILU) factorization, for example, is based on approximating \(A \approx \tilde {L}\tilde {U}\) [416], where \(\tilde {L}\) and \(\tilde {U}\) are sparse upper and lower triangular matrices, yielding a preconditioned system,

Incomplete Cholesky decomposition is a similar procedure restricted to positive definite matrices where the sparse approximation A ≈ R T R is determined. The SParse Approximate Inverse (SPAI) [419] preconditioner exhibits performance superior to incomplete factorization methods [420] when applied to diagonally dominant problems.

The iterative methods and preconditioners described in this section are considered general purpose, effectively handling a wide range of problems while significantly reducing the memory requirements. Superior performance can however be achieved by applying advanced analysis methods, exploiting special features of practical circuit graphs, such as sparsity, smoothness, and graph partitioning. The upcoming subsections describe enhancements to MNA-based circuit analysis, including domain decomposition, multigrids, and hierarchical matrices.

5.2.1 Domain decomposition

Due to the superlinear complexity of linear system solvers, the divide-and-conquer approach [421] can be effective in tackling these problems. Two advantages make divide-and-conquer algorithms particularly attractive for circuit analysis. If solving a problem requires \(O\left (n^p\right )\) time, where n is the problem size and p > 1, decomposing the problem into m sequentially solved parts yields a runtime of \(O\left (\frac {n^p}{m^{p-1}}\right )\), assuming negligible computational overhead. Due to the superlinear complexity of linear system solvers, i.e., p > 1, this approach can be effective in reducing the computational burden. Furthermore, these m parts can be processed in parallel, further reducing the runtime.

Domain decomposition (DD) is one of the most successful divide-and-conquer strategies for circuit analysis. The main principle of the DD technique is partitioning a circuit graph G = (V, E) into multiple subgraphs G i = (V i, E i), i ∈{1, …, m} and a subgraph of interface nodes G 0 = (V 0, E 0). An illustrative example is shown in Fig. 5.3. Observe that the interface nodes do not belong to any subgraph, and no node belongs to more than one partition. Note that this limitation not only prohibits conduction between the subgraphs, but also forbids capacitive and inductive coupling between subdomains. The standard linear equation of (5.21) can therefore be represented as an “arrowhead matrix” [422],

where A i represents the connectivity within subgraph G i, E i and F i represent the connectivity between G i and interface G 0, A 0 represents the connectivity among the interface nodes, x i denotes the unknown voltages and currents within G i, and b i is the vector of current and voltage sources connected to G i. Equation (5.37) can be split into two parts,

where A is a block diagonal matrix produced from subgraph matrices A i, and F, E, x, and b are produced by concatenating F i, E i, x i, and b i. Solving (5.38) for x and substituting into (5.39) yields

To solve (5.41), P = A −1 E and q = A −1 b are determined. Due to the block diagonal structure of A, these equations can be decomposed into m independent equations,

and

Matrix P and vector q are substituted into (5.41), yielding

Since the size of the interface set |V 0| is typically much smaller than the size of any subgraph |V i|, (5.44) requires relatively small computational resources. Equation (5.40) is transformed into

Similar to (5.42) and (5.43), (5.45) can be decomposed into m independent systems,

Due to the mutual independence of these expressions, (5.42), (5.43), and (5.46) can be solved in parallel. Furthermore, due to the small dimensions (i.e., relatively few interface nodes), the total runtime to solve m systems is smaller than the runtime to solve the original system, yielding additional performance improvement.

Domain decomposition process within a grid. a) A large mesh is divided into m = pq subdomains { Ω1, …, Ωpq} (gray nodes) and interfaces (black nodes). b) Connectivity within domain i is described by matrix A i, while connections with the interface are described in E i and F i. A Γ encodes the connectivity within the interface. The dimensions of A Γ are typically smaller than the dimensions of A i.

One of the earliest applications of domain decomposition in circuit analysis is discussed in [423]. A DRAM system composed of 130,000 transistors was successfully analyzed using 27 connected workstations operating in parallel. On-chip power delivery system analysis using domain decomposition is proposed in [422]. Domain decomposition combined with direct LU factorization of the subdomains achieved the maximum performance in case studies, completing a DC analysis of a ten million node system in 450 seconds.

The major advantage of domain decomposition is parallelization. Increasing the number of subdomains however increases the size of the interface graph G 0, potentially negating any performance gains. Overlapping domain decomposition modifies the original non-overlapping technique by allowing partitions to overlap [424], as illustrated in Fig. 5.4. The combined analysis of overlapping domains is based on the Schwarz method [425] and is used in [426] to complete the analysis of a power grid with 192 million nodes in five minutes while utilizing 1,200 processors operating in parallel.

5.2.2 \(\mathcal {H}\)-matrix

Another divide-and-conquer approach to circuit analysis is the application of the hierarchical matrix (\(\mathcal {H}\)-matrix) technique. Assume the target graph G = (V, E) is described by a nodal analysis matrix A. The method commences with hierarchical clustering of the entries within matrix A, yielding cluster tree T, as illustrated in Fig. 5.5d. The first step of top-down clustering [427] splits matrix A into multiple submatrices,

Blocks \(A_{i,j}\in \mathbb {R}^{p\times q}\) become the children of the root node of cluster tree T. The diagonal blocks A i,i, i ∈{1, …, m} are typically full rank matrices, while the off diagonal blocks are rank deficient. If rank k i,j of block A i,j is smaller than the specified threshold \(k_{\min }\), the matrix can be efficiently factorized as the product of two small matrices,

where \(M\in \mathbb {R}^{p\times k}\), \(N\in \mathbb {R}^{q\times k}\), and k ≪ p, q. Any block within T is split if the size of the block is greater than the specified minimum size \(m_{\min }\) and if the rank of the matrix is greater than \(k_{\min }\). Otherwise, the block is not split and is stored in factored form.

Construction of an \(\mathcal {H}\)-matrix of a sparse matrix describing a circuit with 256 nodes and 471 edges. The black dots represent the sparsity pattern of the original matrix. a) The original matrix is divided into four submatrices. b) Submatrices 1 and 2 are significantly rank deficient and are therefore not divided. Submatrices 0 and 3 are further split into four submatrices. c) \(\mathcal {H}\)-matrix after the third iteration. d) Cluster tree T after three iterations. e) Final \(\mathcal {H}\)-matrix after five iterations. The densest regions along the diagonal are split until the minimum submatrix size is achieved.

The main purpose of an \(\mathcal {H}\)-matrix is a cluster tree representation of matrix A. The resulting block matrix is illustrated in Fig. 5.5c. Those leaves stored in factored form require relatively low processing runtime. These features enable an efficient approximation of the LU factorization and inverse of matrix A, yielding significant improvement in runtime and memory. For example, the complexity of LU factorization is reduced from \(O\left (n^3\right )\) to \(O\left (n\left (\log {n}\right )^2\right )\). Partial element equivalent circuit analysis of a power supply layout is presented in [428], achieving up to four orders of magnitude speedup and up to a 50 fold reduction in memory requirements. The compatibility of the \(\mathcal {H}\)-matrix with finite element analysis enables efficient fine grained thermal analysis of a three-dimensional IC with more than a million discrete points [429].

5.2.3 Multigrid methods

The earliest works on multigrids date back to the 1960’s when R. P. Fedorenko suggested doubling the mesh spacing to solve the Poisson’s equation [430]. This approach allowed an approximate solution of a coarse grid to be efficiently determined. The coarse grid solution is subsequently mapped onto the original grid, providing a good initial point for solving the original grid. An order of magnitude reduction in the number of iterations was reported when using a coarser grid [430]. The method, applied in [430], was subsequently formalized in the 1970’s by A. Brandt [431] and W. Hackbusch [432].

Three cornerstone operations constitute the multigrid method, namely smoothing, restriction, and prolongation. Fundamentally, smoothing is the partial application of an iterative solver, as described in Section 5.2. Several iterations of an iterative solver significantly reduce the residual error, thereby shifting the approximate solution closer to the exact solution. Consider, for example, a one-dimensional Poisson’s equation,

with boundary conditions f(0) = f(1) = 0. The problem is discretized using the trapezoidal rule,

where h is the discretization step. In matrix form, the equation becomes

The system is solved using the Loose GMRES (LMGRES) method [433]. Convergence of the algorithm is depicted in Fig. 5.6. Observe that any difference between the adjacent points is quickly reduced, i.e., the high frequency errors are significantly dampened during each iteration.

Convergence of Poisson’s equation using LMGRES method. The one-dimensional space is discretized using 1,001 points. The initial guess is a vector of random numbers. After the first iteration, the high frequency components of the initial vector are significantly reduced, producing a smoother curve. To eliminate the remaining low frequency components, additional iterations are necessary. In this example, the solution is achieved after 62 iterations.

Eliminating low frequency errors however requires significantly longer runtime. To overcome this issue, a restriction step is performed to coarsen the domain of the system. The frequency of the error is therefore effectively increased, permitting efficient elimination by smoothing. Formally, restriction of function f : E → F is function f|A : A → F, where A ⊂ E and f(x) = f|A(x)∀x ∈ A. In the context of multigrids, this operation is effectively coarsening, reducing the number of points within the grid. Suppose the grid is described as a graph G 0 = (V 0, E 0), and function \(v: V \rightarrow \mathbb {R}\) maps each node to the node voltage. The goal of the coarsening operation is a reduced version of an initial grid G 1 = (V 1 ⊂ V 0, E 1), where the voltage within the original system v 0(n) is equal to the voltage within coarse system v 1(n) at any node n ∈ V 1. To recover the original solution from the approximate coarse solution, a prolongation operation is performed. Using interpolation, the solution of a coarse grid is mapped onto a fine grid. The resulting vector is typically a close approximation of a solution requiring few iterations to converge.

A single restriction procedure is often insufficient for significant acceleration. The restriction process is therefore repeatedly applied to further reduce the size of the mesh. Multiple prolongation operations are therefore necessary to recover the original grid. These procedures are formalized in cycles where a system undergoes a series of restrictions, prolongations, and smoothing. The most common cycles are V-cycle, W-cycle, and F-cycle [434], as illustrated in Fig. 5.7. These techniques tradeoff robustness with computational speed. The V-cycle is typically faster than the other cycle types but may fail to converge to a correct result. In contrast, the F-cycle requires more operations but is highly robust and accurate.

One of the earliest applications of geometric multigrids to VLSI systems is described in [435]. A relatively straightforward application of geometric multigrids in [435] yields a 300 fold improvement in runtime with a peak error of over 20%. Geometric multigrids are highly suitable for analyzing regular physical layouts, supporting the efficient analysis of circuits with tens of millions of nodes [436].

The theoretical computational complexity of the geometric multigrid is O(|V |) [437], an attractive feature in the analysis of large systems. A major limitation of geometric multigrids is reliance on the structural regularity of the problem domain. The algebraic multigrid (AMG) is an important generalization of geometric multigrids where no structural information is required for restriction and prolongation. One of the earliest applications of AMG to circuit analysis is proposed in [438], where a 16 to 20 fold improvement in runtime is achieved. Another notable result is PowerRush, an AMG-based DC and transient simulator exhibiting linear complexity [439, 440]. Up to nine levels of grid reduction are reported in [439], completing the analysis in 169 seconds after reducing a circuit with 38 million nodes to 264 nodes. The efficiency of AMG simulation enables large scale circuit optimization. For example, in [441], decoupling capacitor allocation is performed using AMG, optimizing circuits with up to a million nodes.

Restriction, smoothing, and prolongation are highly parallelizable due to the small number of steps with few dependencies [442]. These features enable GPU-based geometric multigrid acceleration of the circuit analysis process, achieving up to two orders of magnitude speedup [442, 443].

5.3 Non-MNA techniques

MNA and associated enhancements enable the efficient analysis of a wide range of complex circuits. Alternative techniques however exist that avoid MNA-based equations, often yielding superior performance as compared to MNA-based methods. Three techniques are presented in this section, namely, scattering parameters, random walks, and lattice graph analysis.

5.3.1 Scattering parameters

The detailed structure of an IC component is often unknown. This situation frequently occurs in two cases. If the components of the integrated system are supplied by a third party vendor, the internal structure of the components is treated as intellectual property (IP) and is typically not described or is purposely obfuscated [444, 445]. The structure of a component can also be highly complex, complicating the construction of a distributed model [75]. A scattering parameter (S parameter) model is often utilized in these cases, characterizing the frequency response of a circuit to input stimuli without revealing the internal structure (a black box). Examples of an S parameter model with two and n ports are depicted in Fig. 5.8. Parameters a k and b k correspond to normalized power waves [446],

where Z k is the reference impedance at port k. \(Z_k^*\) denotes the complex conjugate of Z k and

By measuring the response b m of a circuit at port m in response to a unit power wave at port k, scattering parameter s k,m is

In a multiport network, scattering parameter matrix S is produced that describes the relationship among the signals at different ports,

Note that any scattering parameter is a function of frequency. Measurements should therefore be performed at different frequencies to evaluate the response over the entire bandwidth of interest.

Scattering parameter (S parameter) model of a component. a) Two port network, and b) multiport network. The component is treated as a black box with no knowledge of the internal structure, as opposed to a grey or white box utilizing, respectively, partial or complete structural information [447]. By applying stimuli at different ports of the components, the response of the system at each port is determined. The relationship between an excitation at port i and the response at port j is described by S parameters.

A major advantage of the S parameter model is the applicability of the model to an arbitrary system. The S parameter model requires no information describing the internal structure of the system. Furthermore, based on an S parameter matrix, other electromagnetic characteristics of a system can be determined [446]. Based on open circuit impedance (Z) parameters, for example, crucial parameters can be determined such as the self- and mutual inductances within a network [332].

where I is the identity matrix,

and

Other parameters widely used during the design of analog circuits, such as Y , ABCD, or h parameters [446], can also be derived from the S parameters.

5.3.2 Random walks

A random walk is a stochastic process that describes a succession of steps of an object within a mathematical space [448]. A classic example of a random walk is a random walk along a one-dimensional integer axis, as illustrated in Fig. 5.9a. The particle is initially at position 0 and, every time step, the particle moves in a random direction. Different types of space and probability distributions of the transitions in a random walk exist, such as a discrete two-dimensional space, continuous two-dimensional space with a variable step length (such as Lévy Flight [449]), or a biased, continuous walk in three-dimensional space (see Figs. 5.9b to 5.9d). Common issues relating to a random walk include the expected distance of an object from the source after n steps, probability of a return to the origin after n steps, and probability of reaching a before reaching b, where a and b are arbitrary points within the space.

Examples of a random walk. a) Discrete time one-dimensional random walk on an integer axis. b) Three unbiased random walks within a two-dimensional integer grid. c) Unbiased random walk within a continuous two-dimensional space. The direction of the step is uniformly random. The step size follows a Cauchy distribution. This type of random walk is commonly referred to as Lévy Flight [449]. d) Biased random walk within a three-dimensional integer space. The probability of a transition toward + ∞ is greater than the probability of a transition toward −∞ along the x, y, and z axes.

Manifestations of a random walk in physical systems have been studied before this term was first coined. In 1880, Lord Rayleigh studied the amplitude of oscillations due to multiple strings vibrating at the same frequency with a random phase [450]. This problem is analogous to a random walk on a one-dimensional axis. The erratic movement of dust particles, what will later be called Brownian motion, was discovered as early as 1784 by the Dutch scientist, J. Ingen-Housz [451]. A formal study of random walks has been applied to different physical phenomena, including diffusion in molecular physics [452], genetic drift in genetics [453], and measuring certain features of the World Wide Web [454, 455].

One of the most extensively studied spaces of a random walk is a graph, where a particle moves towards the neighboring vertex at each time step. The probability of moving from vertex a toward vertex b is proportional to the weight of the edge (a, b). The analogy between a random walk and an electrical network was studied by C. St. J. A. Nash-Williams in [456]. The random walk equivalent of the effective resistance between a and b is the commute time between nodes a and b, i.e., the expected number of steps in a random walk starting at a, visiting b, and returning to a (see Fig. 5.10). The conductance of a resistor is equivalent to the weight of an edge within a network. The probability of a transition along a specific edge is proportional to the weight of that edge. A particle moving in a random walk is therefore less likely to transition along a high resistance edge (or path).

Effective resistance between arbitrary nodes a and b is equivalent to the expected number of steps for a random walk to visit node b while starting and returning to node a, a) electrical circuit, and b) equivalent graph. The probability of transitioning towards a neighboring node is proportional to the conductance of the corresponding edge.

The analogy between electrical circuits and random walks can be exploited in the analysis of electrical circuits. Different simulation tools based on random walks have been explored in the literature. By performing a random walk experiment multiple times, the average number of steps converges towards the commute time which corresponds to the effective resistance. The earliest application of a random walk to linear circuit analysis is described in [70]. A major advantage highlighted in [70] is the linear relationship between circuit size and computational complexity. Different circuit simulation tools have been described in the literature, achieving a significant speedup as compared to conventional circuit analyses [70, 457,458,459]. Another aspect is the locality of the random walk. If the target nodes are located close to each other within a network, the random walk is more likely to terminate while exploring only a small portion of the network. This result is highly desirable when studying system perturbations, since only the affected portion of the system is analyzed. This advantage is exploited in [460] in the analysis of incremental changes in power grids.

A major issue pertaining to random walk-based simulation tools is the number of random walk experiments required to achieve a sufficiently small error. The error ε of a random walk is inversely proportional to the square root of the number of experiments M,

To reduce the error by 50%, the number of experiments should be increased four fold. To overcome this issues, the ’importance sampling’ technique is introduced in [461], significantly improving the speed of convergence. Another challenge of random walk-based tools is the possibility of excessively long walks, negating any computational speedup [70]. This issue can be eliminated by limiting the length of the random walk. The accuracy of the solution is however degraded by limiting the length.

Random walks are found in a wide variety of VLSI applications. A sensitivity analysis of VLSI power networks [462], for example, is a notable application, where the critical parameters affecting a power grid are evaluated. Matrix preconditioning based on random walks is described in [463], significantly accelerating the circuit analysis process. Other notable applications of random walks in VLSI include modeling of thermal behavior [464], decoupling capacitor placement [465], and electromigration analysis [466].

5.3.3 Lattice graph

Due to the large scale of VLSI systems, the physical structures are often highly regular, composed of millions to billions of identical elements distributed within a system. The on-chip power grid is a prominent example of a regular structure composed of two or more layers of identical interconnects. An example of a power grid is shown in Fig. 5.11a. Grids are highly reliable due to the many redundant paths. The number of paths connecting two corners of a grid is [467]

where x and y are, respectively, the horizontal and vertical dimensions of a grid. The number of paths in (5.61) grows superlinearly with x and y, yielding a high degree of redundancy even in relatively small grids. The failure of a single or multiple wire segments can be tolerated since the remaining wires provide the necessary connections. Additional benefits of a grid include shielding and decoupling that reduce parasitic capacitive and inductive coupling in global clock and data lines [468]. A grid structured power network can be modeled as a two-dimensional resistive lattice, as shown in Fig. 5.11b [469]. Depending upon the metal pitch and die size, the dimensions of a grid can vary from hundreds to tens of thousands of segments [72]. The large dimensions enable the use of infinite mesh methods for analyzing grid structured power networks.

Multidimensional mesh structures assuming infinite mesh dimensions have been extensively studied in the literature. In 1936, W. H. McCrea studied the following problem [470],

“In a rectangular lattice, at every time instant a point P moves from one lattice point to one of the neighboring points. Each adjacent point has equal probability of being selected. Determine the probability that the particular boundary point is ultimately reached.”

Variations of this problem on different two- and three-dimensional lattices were solved by W. H. McCrea and F. J. W. Whipple in 1940 [471], where different finite and infinite rectangular lattices are analyzed. A notable result is an expression for the average flow of particles between the source and target points within an infinite two-dimensional lattice,

where \(\cos {}(\lambda )+\cosh (\mu )=2\), and x and y denote the number of resistors separating the source and target points in, respectively, the horizontal and vertical direction.

The link between random walk and circuit theory was not widely recognized in the 1940’s. An electrical formulation of the problem solved by W. H. McCrea and F. J. W. Whipple in [471] is

Determine the effective resistance between two arbitrary points (x 0, y 0) and (x, y) within a two dimensional grid of resistors with resistance r (see Fig. 5.11 b)

An easier problem of determining the effective resistance between adjacent nodes in an infinite resistive lattice was solved in 1949 [472] based on the principles of symmetry and superposition. Suppose current 4i is injected at an arbitrary node a, as illustrated in Fig. 5.12a. Due to symmetry, the current through each of the four adjacent branches is i. Now withdraw current 4i from neighboring node b. The current though each adjacent branch is also i, as shown in Fig. 5.12b. By superimposing these solutions, the current through a resistor connecting a and b is 2i, as illustrated in Fig. 5.12c. The effective resistance is found by equating the voltage drop across resistor ab with the voltage drop across the effective resistance of the grid,

yielding

Superposition applied to determine the effective resistance between adjacent nodes a and b in an infinite two-dimensional resistive lattice. a) Current 4i is injected into node a. Due to symmetry, the current through each adjacent resistor is i, flowing away from node a. b) Current 4i is drawn from node b. Due to symmetry, the current through each adjacent resistor is i, flowing towards node b. c) Superposition of current injection and withdrawal. The current through resistor ab is 2i.

Despite the relative simplicity of the problem for adjacent nodes, the general problem requires advanced mathematical methods. Different alternative solutions to the problem of determining the effective resistance within a grid have been presented in the literature [472] in the context of operational calculus [473], discrete analytic functions [474], partial differential equations [475,476,477], random walks [471, 478], and lattice Green’s function [479]. Notable examples include the expressions by A. Stöhr [475],

and

F. Spitzer [478],

B. van der Pol [473],

and W. H. McCrea and F. J. W. Whipple [471], later rediscovered by G. Venezian [476] and J. Cserti [479],

Expressions (5.65) to (5.71) describe uniform resistive lattices. Many practical VLSI grids are anisotropic, i.e., the resistance along the horizontal dimension is not the same as the resistance along the vertical dimension. An expression for the resistance within an infinite anisotropic resistive grid is presented in [469],

where k is the ratio of the horizontal resistance to the vertical resistance, and

This result has significant value for the analysis of power grids. To determine the equivalent resistance within an M × N grid using MNA, a solution of the linear equation of size MN × MN is necessary, requiring prohibitive computational time. In contrast, the effective resistance between two nodes within a grid can be found in constant time, assuming these nodes are sufficiently far from the grid boundaries. A linear complexity, IR voltage drop analysis algorithm is introduced in [480]. The contribution of the voltage sources and current loads to IR voltage drops is evaluated separately based on the effective resistance computed in constant time. The solutions are superimposed to determine the total IR voltage drop within a circuit. The solution is further accelerated by observing that the IR voltage drop contribution of distant voltage sources and loads is negligible. By restricting the analysis to the vicinity of a node, the runtime can be drastically reduced while maintaining the error below 0.5%.

5.4 Summary

Due to the stringent performance requirements of modern VLSI systems, the demand for accurate circuit analysis has drastically increased over the past decades. The immense complexity of modern VLSI systems however makes standard circuit analysis based on MNA impractical. A wide range of algorithms have been proposed to reduce the runtime of the circuit analysis process while maintaining sufficient accuracy. The most prominent techniques are described in this chapter.

Domain decomposition methods split a circuit into multiple independent domains, thereby reducing the computational complexity and enabling parallelization. In the \(\mathcal {H}\)-matrix representation, the sparsity of practical matrices is exploited to produce a cluster tree, enabling efficient algorithms with less memory requirements and lower computational complexity. Using multigrid techniques, a solution is initially approximated using a coarse version of the system. The solution is subsequently determined after interpolation and smoothing operations.

Alternative circuit analysis techniques attempt to accelerate the circuit analysis process by avoiding costly MNA-based analysis. A complex or obfuscated circuit can be represented by a multiport network model, efficiently described by S parameters. In random walk-based methods, the voltage within a grid is determined statistically, yielding linear computational complexity at a fixed accuracy. The infinite lattice model can often be used to analyze large grids, often encountered in on-chip power distribution systems.

Common circuit analysis methods are discussed in this chapter. These methods enhance traditional MNA processes or follow alternative approaches. Despite the immense potential, few of these techniques are used in mainstream circuit analysis methodologies. Further research is required to improve the versatility and performance of the advanced circuit analysis techniques discussed in this chapter. For example, a significant limitation of infinite lattice analysis is poor accuracy near the boundaries of the grid. This limitation is overcome by applying the image method [71] and infinity mirror technique [72], described, respectively, in Chapters 6 and 7.

References

L. W. Nagel, “SPICE2: a Computer Program to Simulate Semiconductor Circuits,” Memorandum No. ERL-M520, University of California, Berkeley, May 1975.

C. Ho, A. Ruehli, and P. Brennan, “The Modified Nodal Approach to Network Analysis,” IEEE Transactions on Circuits and Systems, Vol. 22, No. 6, pp. 504–509, June 1975.

R. Bairamkulov, A. Roy, M. Nagarajan, V. Srinivas, and E. G. Friedman, “SPROUT - Smart Power ROUting Tool for Board-Level Exploration and Prototyping,” Proceedings of the ACM/IEEE Design Automation Conference, pp. 283–288, December 2021.

H. Qian, S. R. Nassif, and S. S. Sapatnekar, “Random Walks in a Supply Network,” Proceedings of the ACM/IEEE Design Automation Conference, pp. 93–98, June 2003.

R. Bairamkulov and E. G. Friedman, “Effective Resistance of Two-Dimensional Truncated Infinite Mesh Structures,” IEEE Transactions on Circuits and Systems I: Regular Papers, Vol. 66, No. 11, pp. 4368–4376, November 2019.

R. Bairamkulov and E. G. Friedman, “Effective Resistance of Finite Two-Dimensional Grids based on Infinity Mirror Technique,” IEEE Transactions on Circuits and Systems I: Regular Papers, Vol. 67, No. 9, pp. 3224–3233, September 2020.

R. Bairamkulov, K. Xu, M. Popovich, J. S. Ochoa, V. Srinivas, and E. G. Friedman, “Power Delivery Exploration Methodology based on Constrained Optimization,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Vol. 39, No. 9, pp. 1916–1924, September 2020.

R. Bairamkulov, A. Roy, M. Nagarajan, V. Srinivas, and E. G. Friedman, “SPROUT - smart power routing tool for board-level exploration and prototyping,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Vol. 41, No. 7, pp. 2263–2275, July 2022

G. R. Kirchhoff, “Ueber die Auflösung der Gleichungen, auf welche man bei der Untersuchung der linearen Vertheilung galvanischer Ströme geführt wird,” Annalen der Physik, Vol. 148, No. 12, pp. 497–508, October 1847.

L. Nagel and R. Rohrer, “Computer Analysis of Nonlinear Circuits, Excluding Radiation (CANCER),” IEEE Journal of Solid-State Circuits, Vol. 6, No. 4, pp. 166–182, August 1971.

W. J. McCalla and W. G. Howard, “BIAS-3-A Program for the Nonlinear D.C. Analysis of Bipolar Transistor Circuits,” IEEE Journal of Solid-State Circuits, Vol. 6, No. 1, pp. 14–19, February 1971.

C.-T. Tsai and W.-Y. Yip, “An Experimental Technique for Full Package Inductance Matrix Characterization,” IEEE Transactions on Components, Packaging, and Manufacturing Technology: Part B, Vol. 19, No. 2, pp. 338–343, May 1996.

G. S. Ohm, Die galvanische Kette, mathematisch bearbeitet, Bei T. H. Reimann, Berlin, 1827.

J. C. Maxwell, A Treatise on Electricity and Magnetism, Clarendon Press, 1873.

J. R. Carson, “A Theoretical Study of the Three-Element Vacuum Tube,” Proceedings of the Institute of Radio Engineers, Vol. 7, No. 2, pp. 187–200, April 1919.

G. Kron, “Non-Riemannian Dynamics of Rotating Electrical Machinery,” Journal of Mathematics and Physics, Vol. 13, No. 1–4, pp. 103–194, April 1934.

F. H. Branin, “D-C and Transient Analysis of Networks using a Digital Computer,” Proceedings of the SHARE Design Automation Workshop, p. 4.1–4.23, January 1964.

D. O. Pederson, “A Historical Review of Circuit Simulation,” IEEE Transactions on Circuits and Systems, Vol. 31, No. 1, pp. 103–111, January 1984.

A. F. Malmberg and F. L. Cornwell, NET-1 Network Analysis Program, Los Alamos Scientific Laboratory of the University of California, Los Alamos, New Mexico, 1963.

H. W. Mathers, S. R. Sedore, and J. R. Sents, Automated Digital Computer Program for Determining Responses of Electronic Circuits to Transient Nuclear Radiation (SCEPTRE), IBM Federal Systems Division, Electronics Systems Center, Oswego, New York, 1967.

H. Shichman, “Computation of DC Solutions for Bipolar Transistor Networks,” IEEE Transactions on Circuit Theory, Vol. 16, No. 4, pp. 460–466, November 1969.

H. Shichman, “Integration System of a Nonlinear Transient Network-Analysis Program,” IEEE Transactions on Circuit Theory, Vol. 17, No. 3, pp. 378–386, August 1970.

G. Hachtel, R. Brayton, and F. Gustavson, “The Sparse Tableau Approach to Network Analysis and Design,” IEEE Transactions on Circuit Theory, Vol. 18, No. 1, pp. 101–113, January 1971.

A. R. Newton and D. O. Pederson, “Analysis Time, Accuracy and Memory Requirement Tradeoffs in Spice2,” Proceedings of the IEEE Asilomar Conference on Circuits, Systems and Computers, pp. 6–9, November 1977.

T.-H. Chen, C. Luk, and C. C.-P. Chen, “INDUCTWISE: Inductance-Wise Interconnect Simulator and Extractor,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Vol. 22, No. 7, pp. 884–894, July 2003.

A. B. Bhattacharyya, Compact MOSFET Models for VLSI Design, John Wiley & Sons (Asia), 2009.

Y. Zhang, X. Wang, Y. Li, and E. G. Friedman, “Memristive Model for Synaptic Circuits,” IEEE Transactions on Circuits and Systems II: Express Briefs, Vol. 64, No. 7, pp. 767–771, July 2017.

C. A. Floudas and P. M. Pardalos, Encyclopedia of Optimization, Springer Science & Business Media, 2008.

M. R. Hestenes and E. Stiefel, “Methods of Conjugate Gradients for Solving Linear Systems,” Journal of Research of the National Bureau of Standards, Vol. 49, No. 6, pp. 409–435, December 1952.

A. Buttari, J. Langou, J. Kurzak, and J. Dongarra, “A Class of Parallel Tiled Linear Algebra Algorithms for Multicore Architectures,” Parallel Computing, Vol. 35, No. 1, pp. 38–53, January 2009.

T.-H. Chen and C. C.-P. Chen, “Efficient Large-Scale Power Grid Analysis based on Preconditioned Krylov-Subspace Iterative Methods,” Proceedings of the ACM/IEEE Design Automation Conference, pp. 559–562, June 2001.

Y. Saad and M. H. Schultz, “GMRES: a Generalized Minimal Residual Algorithm for Solving Nonsymmetric Linear Systems,” SIAM Journal on Scientific and Statistical Computing, Vol. 7, No. 3, pp. 856–869, July 1986.

R. Barrett, M. Berry, T. F. Chan, J. Demmel, J. Donato, J. Dongarra, V. Eijkhout, R. Pozo, C. Romine, and H. Van der Vorst, Templates for the Solution of Linear Systems: Building Blocks for Iterative Methods, SIAM, 1994.

Y. Saad, Iterative Methods for Sparse Linear Systems, Second Edition, PWS Publishing, 1996.

D. S. Watkins, Fundamentals of Matrix Computations, Vol. 64, John Wiley & Sons, 2004.

D. Mandic, “A Generalized Normalized Gradient Descent Algorithm,” IEEE Signal Processing Letters, Vol. 11, No. 2, pp. 115–118, February 2004.

C. F. Van Loan and G. Golub, Matrix Computations, The Johns Hopkins University Press, 1996.

M. J. Grote and T. Huckle, “Parallel Preconditioning with Sparse Approximate Inverses,” SIAM Journal on Scientific Computing, Vol. 18, No. 3, pp. 838–853, May 1997.

M. Benzi and M. Tûma, “A Comparative Study of Sparse Approximate Inverse Preconditioners,” Applied Numerical Mathematics, Vol. 30, No. 2, pp. 305–340, June 1999.

W. Shi and F. Yu, “A Divide-and-Conquer Algorithm for 3-D Capacitance Extraction,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Vol. 23, No. 8, pp. 1157–1163, July 2004.

K. Sun, Q. Zhou, K. Mohanram, and D. C. Sorensen, “Parallel Domain Decomposition for Simulation of Large-Scale Power Grids,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 54–59, November 2007.

U. Kleis, O. Wallat, U. Wever, and Q. Zheng, “Domain Decomposition Methods for Circuit Simulation,” Proceedings of the Workshop on Parallel and Distributed Simulation, pp. 183–186, July 1994.

Y. Zhong and M. D. F. Wong, “Fast Block-Iterative Domain Decomposition Algorithm for IR Drop Analysis in Large Power Grid,” Proceedings of the IEEE International Symposium on Quality Electronic Design, pp. 277–283, March 2010.

D. E. Keyes, A. Sameh, and V. Venkatakrishnan, Parallel Numerical Algorithms, Vol. 4, Springer Science & Business Media, 2012.

T. Yu, Z. Xiao, and M. D. F. Wong, “Efficient Parallel Power Grid Analysis via Additive Schwarz Method,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 399–406, November 2012.

S. Le Borne and L. Grasedyck, “\(\mathcal {H}\)-Matrix Preconditioners in Convection-Dominated Problems,” SIAM Journal on Matrix Analysis and Applications, Vol. 27, No. 4, pp. 1172–1183, December 2006.

M. L. Zitzmann, Fast and Efficient Methods for Circuit-Based Automotive EMC Simulation, Ph.D. Thesis, University of Erlangen-Nuremberg, 2007.

H.-B. Chen, Y.-C. Li, S. X.-D. Tan, X. Huang, H. Wang, and N. Wong, “\(\mathcal {H}\)-Matrix-Based Finite-Element-Based Thermal Analysis for 3D ICs,” ACM Transactions on Design Automation of Electronic Systems, Vol. 20, No. 4, September 2015.

R. P. Fedorenko, “A Relaxation Method for Solving Elliptic Difference Equations,” USSR Computational Mathematics and Mathematical Physics, Vol. 1, No. 4, pp. 1092–1096, September 1962.

A. Brandt, “Multi-Level Adaptive Solutions to Boundary-Value Problems,” Mathematics of Computation, Vol. 31, No. 138, pp. 333–390, April 1977.

W. Hackbusch, “On the Multi-Grid Method Applied to Difference Equations,” Computing, Vol. 20, No. 4, pp. 291–306, December 1978.

A. H. Baker, E. R. Jessup, and T. Manteuffel, “A Technique for Accelerating the Convergence of Restarted GMRES,” SIAM Journal on Matrix Analysis and Applications, Vol. 26, No. 4, pp. 962–984, 2005. ;

J. Mandel and S. V. Parter, “On the Multigrid F-Cycle,” Applied Mathematics and Computation, Vol. 37, No. 1, pp. 19–36, May 1990.

S. R. Nassif and J. N. Kozhaya, “Fast Power Grid Simulation,” Proceedings of the ACM/IEEE Design Automation Conference, pp. 156–161, June 2000.

S. Williams, D. D. Kalamkar, A. Singh, A. M. Deshpande, B. Van Straalen, M. Smelyanskiy, A. Almgren, P. Dubey, J. Shalf, and L. Oliker, “Optimization of Geometric Multigrid for Emerging Multi- and Manycore Processors,” Proceedings of the International Conference on High Performance Computing, Networking, Storage and Analysis, pp. 1–11, November 2012.

R. D. Falgout, An Introduction to Algebraic Multigrid, Lawrence Livermore National Lab, Livermore, California, 2006.

J. N. Kozhaya, S. R. Nassif, and F. N. Najm, “A Multigrid-Like Technique for Power Grid Analysis,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Vol. 21, No. 10, pp. 1148–1160, October 2002.

J. Yang, Z. Li, Y. Cai, and Q. Zhou, “PowerRush: a Linear Simulator for Power Grid,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 482–487, December 2011.

J. Yang, Z. Li, Y. Cai, and Q. Zhou, “PowerRush: Efficient Transient Simulation for Power Grid Analysis,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 653–659, November 2012.

C. Zhuo, J. Hu, M. Zhao, and K. Chen, “Fast Decap Allocation based on Algebraic Multigrid,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 107–111, November 2006.

Z. Feng and P. Li, “Multigrid on GPU: Tackling Power Grid Analysis on Parallel SIMT Platforms,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 647–654, November 2008.

Z. Feng and Z. Zeng, “Parallel Multigrid Preconditioning on Graphics Processing Units (GPUs) for Robust Power Grid Analysis,” Proceedings of the ACM/IEEE Design Automation Conference, pp. 661–666, June 2010.

V. V. Rao and I. Savidis, “Parameter Biasing Obfuscation for Analog IP Protection,” Proceedings of the IEEE International Symposium on Hardware Oriented Security and Trust, pp. 161–161, May 2017.

R. S. Chakraborty and S. Bhunia, “HARPOON: an Obfuscation-Based SoC Design Methodology for Hardware Protection,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Vol. 28, No. 10, pp. 1493–1502, October 2009.

T. Reveyrand, “Multiport Conversions between S, Z, Y , h, ABCD, and T Parameters,” Proceedings of the International Workshop on Integrated Nonlinear Microwave and Millimetre-Wave Circuits, pp. 1–3, July 2018.

D. Schreurs and J. Verspecht, “Large-Signal Modelling and Measuring Go Hand-in-Hand: Accurate Alternatives to Indirect S-Parameter Methods,” International Journal of RF and Microwave Computer-Aided Engineering, Vol. 10, No. 1, pp. 6–18, December 2000.

G. F. Lawler and V. Limic, Random Walk: a Modern Introduction, Cambridge University Press, 2010.

B. B. Mandelbrot, The Fractal Geometry of Nature, W. H. Freeman, New York, 1982.

Lord Rayleigh F. R. S., “XII. On the Resultant of a Large Number of Vibrations of the Same Pitch and of Arbitrary Phase,” The London, Edinburgh, and Dublin Philosophical Magazine and Journal of Science, Vol. 10, No. 60, pp. 73–78, August 1880.

D. Abbott, B. R. Davis, N. J. Phillips, and K. Eshraghian, “Simple Derivation of the Thermal Noise Formula using Window-Limited Fourier Transforms and Other Conundrums,” IEEE Transactions on Education, Vol. 39, No. 1, pp. 1–13, February 1996.

D. Ben-Avraham and S. Havlin, Diffusion and Reactions in Fractals and Disordered Systems, Cambridge University Press, 2000.

J. E. Neigel and J. C. Avise, “Application of a Random Walk Model to Geographic Distributions of Animal Mitochondrial DNA Variation,” Genetics, Vol. 135, No. 4, pp. 1209–1220, December 1993.

S. J. Hardiman and L. Katzir, “Estimating Clustering Coefficients and Size of Social Networks via Random Walk,” Proceedings of the International Conference on World Wide Web, pp. 539–550, May 2013.

A. Dasgupta, G. Das, and H. Mannila, “A Random Walk Approach to Sampling Hidden Databases,” Proceedings of the International Conference on Management of Data, pp. 629–640, June 2007.

C. St. J. A. Nash-Williams, “Random Walk and Electric Currents in Networks,” Mathematical Proceedings of the Cambridge Philosophical Society, Vol. 55, pp. 181–194, April 1959.

W. Guo, S. X.-D. Tan, Z. Luo, and X. Hong, “Partial Random Walk for Large Linear Network Analysis,” Proceedings of the IEEE International Symposium on Circuits and Systems, Vol. 5, pp. 173–176, May 2004.

Z. Yu and M. D. F. Wong, “Fast Algorithms for IR Drop Analysis in Large Power Grid,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 351–357, November 2005.

H. Qian, S. R. Nassif, and S. S. Sapatnekar, “Power Grid Analysis using Random Walks,” IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, Vol. 24, No. 8, pp. 1204–1224, August 2005.

B. Boghrati and S. S. Sapatnekar, “Incremental Analysis of Power Grids using Backward Random Walks,” ACM Transactions on Design Automation of Electronic Systems, Vol. 19, No. 3, pp. 1–29, June 2014.

T. Miyakawa, K. Yamanaga, H. Tsutsui, H. Ochi, and T. Sato, “Acceleration of Random-Walk Based Linear Circuit Analysis using Importance Sampling,” Proceedings of the ACM Great Lakes Symposium on VLSI, pp. 211–216, May 2011.

P. Li, “Variational Analysis of Large Power Grids by Exploring Statistical Sampling Sharing and Spatial Locality,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 645–651, November 2005.

J. Wang, “Deterministic Random Walk Preconditioning for Power Grid Analysis,” Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, pp. 392–398, November 2012.

Y. Liang, W. Yu, and H. Qian, “A Hybrid Random Walk Algorithm for 3-D Thermal Analysis of Integrated Circuits,” Proceedings of the ACM/IEEE Asia and South Pacific Design Automation Conference, pp. 849–854, January 2014.

Y. Cai, L. Kang, J. Shi, X. Hong, and S. X.-D. Tan, “Random Walk Guided Decap Embedding for Power/Ground Network Optimization,” IEEE Transactions on Circuits and Systems II: Express Briefs, Vol. 55, No. 1, pp. 36–40, January 2008.

D.-A. Li, M. Marek-Sadowska, and S. R. Nassif, “T-VEMA: a Temperature- and Variation-Aware Electromigration Power Grid Analysis Tool,” IEEE Transactions on Very Large Scale Integration (VLSI) Systems, Vol. 23, No. 10, pp. 2327–2331, October 2015.

A. Çiprut, Grids in Very Large Scale Integration Systems, Ph.D. Thesis, University of Rochester, May 2019.

A. V. Mezhiba and E. G. Friedman, “Inductive Properties of High-Performance Power Distribution Grids,” IEEE Transactions on Very Large Scale Integration (VLSI) Systems, Vol. 10, No. 6, pp. 762–776, December 2002.

S. Köse and E. G. Friedman, “Effective Resistance of a Two Layer Mesh,” IEEE Transactions on Circuits and Systems II: Express Briefs, Vol. 58, No. 11, pp. 739–743, November 2011.

W. H. McCrea, “A Problem on Random Paths,” The Mathematical Gazette, Vol. 20, No. 241, pp. 311–317, December 1936.

W. H. McCrea and F. J. W. Whipple, “Random Paths in Two and Three Dimensions,” Proceedings of the Royal Society of Edinburgh, Vol. 60, No. 3, pp. 281–298, January 1940.

H. Flanders, “Infinite Networks: II–Resistance in an Infinite Grid,” Journal of Mathematical Analysis and Applications, Vol. 40, No. 1, pp. 30–35, October 1972.

B. van der Pol and H. Bremmer, Operational Calculus based on the Two-Sided Laplace Integral, Cambridge University Press, 1950.

R. J. Duffin, “Basic Properties of Discrete Analytic Functions,” Duke Mathematical Journal, Vol. 23, No. 2, pp. 335–363, June 1956.

A. Stöhr, “Über einige lineare partielle Differenzengleichungen mit konstanten Koeffizienten,” Mathematische Nachrichten, Vol. 3, No. 6, pp. 330–357, September 1950.

G. Venezian, “On the Resistance between Two Points on a Grid,” American Journal of Physics, Vol. 62, No. 11, pp. 1000–1004, November 1994.

D. Atkinson and F. J. Van Steenwijk, “Infinite Resistive Lattices,” American Journal of Physics, Vol. 67, No. 6, pp. 486–492, June 1999.

F. Spitzer, Principles of Random Walk, Vol. 34, Springer Science & Business Media, 2013.

J. Cserti, “Application of the Lattice Green’s Function for Calculating the Resistance of an Infinite Network of Resistors,” American Journal of Physics, Vol. 68, No. 10, pp. 896–906, October 2000.

S. Köse and E. G. Friedman, “Efficient Algorithms for Fast IR Drop Analysis Exploiting Locality,” Integration, the VLSI Journal, Vol. 45, No. 2, pp. 149–161, March 2012.

Author information

Authors and Affiliations

Rights and permissions

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Bairamkulov, R., Friedman, E. (2023). Circuit analysis. In: Graphs in VLSI. Springer, Cham. https://doi.org/10.1007/978-3-031-11047-4_5

Download citation

DOI: https://doi.org/10.1007/978-3-031-11047-4_5

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11046-7

Online ISBN: 978-3-031-11047-4

eBook Packages: EngineeringEngineering (R0)