Abstract

Open relation extraction (ORE) aims to assign semantic relationships between arguments and is essential to the automatic construction of knowledge graphs. Previous methods either depend on external NLP tools (e.g., PoS-taggers) and language-specific relation formations, or suffer from inherent problems in sequence representations, leading to unsatisfactory extraction across diverse languages and domains. To address these problems, we propose a Query-based Open Relation Extractor (QORE). QORE utilizes a Transformers-based language model to derive a representation of the interaction between arguments and context, and can process multilingual texts effectively. Extensive experiments on seven datasets covering four languages show that QORE models significantly outperform conventional rule-based systems and the state-of-the-art method LOREM [6]. Regarding the practical challenges [1] of Corpus Heterogeneity and Automation, our evaluations show that QORE models have excellent zero-shot domain transferability and few-shot learning ability.

Keywords

- Open relation extraction

- Information extraction

- Knowledge graph construction

- Transfer learning

- Few-shot learning

1 Introduction

Relation extraction (RE) from unstructured text is fundamental to a variety of downstream tasks, such as constructing knowledge graphs (KG) and computing sentence similarity. Conventional closed relation extraction considers only a predefined set of relation types on small and homogeneous corpora, which is far less effective when shifting to general-domain text mining that has no limits in relation types or languages. To alleviate the constraints of closed RE, Banko et al. [1] introduce a new paradigm: open relation extraction (ORE), predicting a text span as the semantic connection between arguments from within a context, where a span is a contiguous sub-sequence. This paper proposes a novel query-based open relation extractor QORE that can process multilingual texts for facilitating large-scale general-domain KG construction.

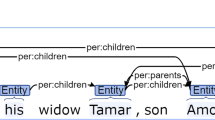

Open relation extraction identifies an arbitrary phrase to specify a semantic relationship between arguments within a context. (An argument is a text span representing an adverbial, adjectival, or nominal phrase, and so on; it is not limited to an entity.) Given the context “Researchers develop techniques to acquire information automatically from digital texts.” and the argument pair \(\left\langle \text{Researchers}, \text{information}\right\rangle\), an ORE system would extract the span “acquire” from the context to denote the semantic connection between “Researchers” and “information”.

Conventional ORE systems are largely based on syntactic patterns and heuristic rules that depend on external NLP tools (e.g., PoS-taggers) and language-specific relation formations. For example, ClausIE [2] and OpenIE4 [10] for English, and CORE [15] for Chinese, leverage external tools to obtain part-of-speech tags or dependency features and generate syntactic patterns to extract relational facts. Faruqui et al. [4] present a cross-lingual ORE system that first translates a sentence to English, performs rule-based ORE in English, and finally projects the relation back to the original sentence. These pattern-based approaches cannot handle the complexity and diversity of languages well, and the extraction is usually far from satisfactory.

To alleviate the burden of designing manual features, multiple neural ORE models have been proposed, typically adopting either sequence labeling or span selection. MGD-GNN [9] for Chinese ORE constructs a multi-grained dependency graph and utilizes a span selection model to predict based on character features and word boundary knowledge. Compared with our method, MGD-GNN relies heavily on dependency information and cannot deal with various languages. Ro et al. [12] propose the sequence-labeling-based Multi\(^{2}\)OIE, which performs multilingual open information extraction by combining BERT with multi-head attention blocks; however, Multi\(^{2}\)OIE is constrained to extract the predicate of a sentence as the relation. Jia et al. [7] transform English ORE into a sequence labeling process and present a hybrid neural network NST; however, its dependency on PoS-taggers may introduce error propagation. Improving on NST, the current state-of-the-art ORE method LOREM [6] works as a multilingual-embedded sequence-labeling model based on CNN and BiLSTM. Like our model, LOREM does not rely on language-specific knowledge or external NLP tools. However, based on our comparison of architectures in Sect. 4.1, LOREM suffers from inherent problems in learning long-range sequence dependencies [16], which are basic to computing token relevances to gold relations, and thus performs less satisfactorily than the QORE model.

Inspired by the broad applications of machine reading comprehension (MRC) and Transformers-based pre-trained language models (LM) like BERT [3] and SpanBERT [8], we design a query-based open relation extraction framework QORE to solve the ORE task effectively and avoid the inherent problems of previous extractors. Given an argument pair and its context, we first create a query template containing the argument information and derive a contextual representation of query and context via a pre-trained language model, which provides a deep understanding of query and context, and models the information interaction between them. Finally, the span extraction module finds an open relation by predicting the start and end indexes of a sub-sequence in the context.

Besides introducing the ORE paradigm, Banko et al. [1] identified major challenges for ORE systems, including Corpus Heterogeneity and Automation. We therefore evaluate QORE on these two challenges in terms of zero-shot domain transferability and few-shot learning ability, as explained below. (a) Corpus Heterogeneity: Heterogeneous datasets form an obstacle for deep linguistic tools such as syntactic or dependency parsers, since they commonly work well when trained and applied to a specific domain, but are prone to produce incorrect results when used on a different genre of text. As QORE models are intended for domain-independent usage, we do not require any external NLP tool, and we assess performance on this challenge via zero-shot domain transfer. (b) Automation: The manual labor of creating suitable training data or extraction patterns must be reduced to a minimum by requiring only a small set of hand-tagged seed instances or a few manually defined extraction patterns. The QORE framework needs no predefined extraction patterns, but it does need training data. We therefore evaluate this challenge through few-shot learning, shrinking the size of the training data.

To summarize, the main contributions of this work are:

- We propose a novel query-based open relation extractor QORE that utilizes a Transformers-based language model to derive a representation of the interaction between the arguments and context.

- We carry out extensive experiments on seven datasets covering four languages, showing that QORE models significantly outperform conventional rule-based systems and the state-of-the-art method LOREM.

- Considering the practical challenges of ORE, we investigate the zero-shot domain transferability and the few-shot learning ability of QORE. The experimental results illustrate that our models maintain high precision when transferring to another domain or training on less data.

2 Approach

An overview of our QORE framework is visualized in Fig. 1. Given an argument pair and its context, we first create a query from the arguments based on a template and encode the combination of query and context using a Transformers-based language model. Finally, the span extraction module predicts a continuous sub-sequence in the context as an open relation.

2.1 Task Description

Given a context \(\boldsymbol{C}\) and an argument pair \(\boldsymbol{A} = (A_1, A_2)\) in \(\boldsymbol{C}\), an open relation extractor needs to find the semantic relationship between the pair \(\boldsymbol{A}\). We denote the context as a word token sequence \(\boldsymbol{C} = \left\{ x_{i}^{c} \right\} _{i=1}^{l_c}\) and an argument as a text span \(A_k = \left\{ x_{i}^{a_k} \right\} _{i=1}^{l_{a_k}}\), where \(l_c\) is the context length and \(l_{a_k}\) is the k-th argument length. Our goal is to predict a span \(R = \left\{ x_{i}^{r} \right\} _{i=1}^{l_{r}}\) in the context as an open relation, where \(l_{r}\) is the length of a relation span.

2.2 Query Template Creation

Provided an argument pair \((A_1, A_2)\), we adopt a rule-based method to create the query template

\[\boldsymbol{T} = \left\langle s_1 \right\rangle A_1 \left\langle s_2 \right\rangle A_2 \left\langle s_3 \right\rangle\]

having three slots, where \(\left\langle s_i \right\rangle \) indicates the i-th slot. The tokens filling a slot are separators of the adjacent arguments (e.g., double-quotes, a comma, or words of natural languages) or a placeholder for a relation span (e.g., a question mark or words of natural languages). In this paper, we design two different query templates: (1) the question-mark (QM) style \(\boldsymbol{T}_{QM}\), taking the form of a structured argument-relationship triple, and (2) the language-specific natural-language (NL) style \(\boldsymbol{T}_{NL}\), where each language has a particular template that is close in meaning. (English: En, Chinese: Zh, French: Fr, Russian: Ru.)
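As a rough illustration of slot filling, the sketch below builds a query string from two arguments and three slot fillers. The slot order (slot, argument, slot, argument, slot) and both sets of slot fillers (`QM_SLOTS`, `NL_SLOTS_EN`) are assumptions made for this example; they are not the exact templates used in the paper.

```python
from typing import Tuple


def build_query(arg1: str, arg2: str, slots: Tuple[str, str, str]) -> str:
    """Fill a three-slot template around two arguments: s1 A1 s2 A2 s3."""
    s1, s2, s3 = slots
    return f"{s1}{arg1}{s2}{arg2}{s3}"


# Hypothetical slot fillers, chosen only for illustration.
QM_SLOTS = ('"', '" ? "', '"')  # a question mark stands in for the relation
NL_SLOTS_EN = ("What is the relation between ", " and ", "?")

qm_query = build_query("Researchers", "information", QM_SLOTS)
nl_query = build_query("Researchers", "information", NL_SLOTS_EN)

print(qm_query)
print(nl_query)
```

Keeping the slot fillers as data rather than code makes it easy to swap in a different template per language without touching the query builder.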

2.3 Encoder

BERT [3] is a pre-trained encoder of deep bidirectional transformers [16] for monolingual and multilingual representations. Inspired by BERT, Joshi et al. [8] propose SpanBERT to better represent and predict text spans. SpanBERT extends BERT by masking random spans whose lengths follow a geometric distribution and by using a span boundary objective (SBO), which requires the model to predict masked spans from the span boundaries so that structure information is integrated into pre-training. Both language models achieve strong performance on the span extraction task. We use BERT and SpanBERT as the encoders of QORE.

Given a context \(\boldsymbol{C} = \left\{ x_{i}^{c} \right\} _{i=1}^{l_c}\) with \({l_c}\) tokens and a query \(\boldsymbol{Q} = \left\{ x_{j}^{q} \right\} _{j=1}^{l_q}\) with \(l_q\) tokens, we employ a pre-trained language model as the encoder to learn the contextual representation for each token. First, we concatenate the query \(\boldsymbol{Q}\) and the context \(\boldsymbol{C}\) to derive the input \(\boldsymbol{I}\) of the encoder:

\[\boldsymbol{I} = \left\{ \mathtt{[CLS]}, x_{1}^{q}, \ldots, x_{l_q}^{q}, \mathtt{[SEP]}, x_{1}^{c}, \ldots, x_{l_c}^{c}, \mathtt{[SEP]} \right\}\]

where [CLS] and [SEP] denote the beginning token and the segment token, respectively.

Next, we generate the initial embedding \(\boldsymbol{e}_i\) for each token by summing its word embedding \(\boldsymbol{e}_{i}^{w}\), position embedding \(\boldsymbol{e}_{i}^{p}\), and segment embedding \(\boldsymbol{e}_{i}^{s}\). The sequence embedding \(\boldsymbol{E}=\{\boldsymbol{e}_1, \boldsymbol{e}_2, ..., \boldsymbol{e}_m\}\) is then fed into the deep Transformer layers to learn a contextual representation with long-range sequence dependencies via the self-attention mechanism [16]. Finally, we obtain the last-layer hidden states \(\boldsymbol{H}=\{\boldsymbol{h}_1, \boldsymbol{h}_2, ..., \boldsymbol{h}_m\}\) as the contextual representation for the input sequence \(\boldsymbol{I}\), where \(\boldsymbol{h}_i \in \mathbb {R}^{d_h}\) and \(d_h\) indicates the dimension of the last hidden layer of encoder. The length of the sequences \(\boldsymbol{I}\), \(\boldsymbol{E}\), \(\boldsymbol{H}\) is denoted as m where \(m = l_q + l_c + 3\).
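The input layout and the length relation \(m = l_q + l_c + 3\) can be checked with a small sketch. It assumes whitespace tokenization (a real BERT encoder uses subword tokenization), a hypothetical question-mark-style query, and the usual BERT convention of segment id 0 for the query part and 1 for the context part.

```python
from typing import List


def build_encoder_input(query: List[str], context: List[str]) -> List[str]:
    """Concatenate query and context as [CLS] Q [SEP] C [SEP]."""
    return ["[CLS]"] + query + ["[SEP]"] + context + ["[SEP]"]


# Hypothetical query tokens; whitespace tokens stand in for subword tokens.
query = ['"', "Researchers", '"', "?", '"', "information", '"']
context = (
    "Researchers develop techniques to acquire information "
    "automatically from digital texts ."
).split()

tokens = build_encoder_input(query, context)

# Segment ids: 0 covers [CLS], the query and the first [SEP];
# 1 covers the context and the final [SEP].
segment_ids = [0] * (len(query) + 2) + [1] * (len(context) + 1)

# Three special tokens are added, so m = l_q + l_c + 3.
assert len(tokens) == len(query) + len(context) + 3
assert len(segment_ids) == len(tokens)
```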

2.4 Span Extraction Module

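The span extraction module aims to find a continuous sub-sequence in the context as an open relation. We utilize two learnable parameter matrices (feed-forward networks) \(f_{start} \in \mathbb {R}^{d_h}\) and \(f_{end} \in \mathbb {R}^{d_h}\), each followed by softmax normalization over the sequence, and take each contextual token representation \(\boldsymbol{h}_i\) in \(\boldsymbol{H}\) as the input to produce the probability of each token i being selected as the start/end of the relation span:

\[p^{start}_i = \frac{\exp \left( f_{start}(\boldsymbol{h}_i) \right)}{\sum _{j=1}^{m} \exp \left( f_{start}(\boldsymbol{h}_j) \right)} \tag{8}\]

\[p^{end}_i = \frac{\exp \left( f_{end}(\boldsymbol{h}_i) \right)}{\sum _{j=1}^{m} \exp \left( f_{end}(\boldsymbol{h}_j) \right)} \tag{9}\]

We denote \(\boldsymbol{p}^{start} = \{p^{start}_i\}_{i=1}^m\) and \(\boldsymbol{p}^{end} = \{p^{end}_i\}_{i=1}^m\).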
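A minimal pure-Python sketch of the start/end scoring follows, assuming each scorer is a single linear map from a hidden state to a scalar and that the softmax runs over all m positions. The toy hidden states and weight vectors are made up for illustration; a real model would use the encoder output and learned parameters.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def boundary_probs(hidden: Sequence[Sequence[float]],
                   weights: Sequence[float],
                   bias: float = 0.0) -> List[float]:
    """Score every token with a linear layer, then normalize over positions."""
    scores = [sum(w * h for w, h in zip(weights, h_i)) + bias for h_i in hidden]
    return softmax(scores)


# Toy hidden states with m = 4 tokens and d_h = 2 (illustrative values only).
hidden = [[0.1, 0.3], [1.2, -0.4], [0.0, 0.9], [-0.5, 0.2]]
w_start = [1.0, 0.0]  # hypothetical start-scorer weights
w_end = [0.0, 1.0]    # hypothetical end-scorer weights

p_start = boundary_probs(hidden, w_start)
p_end = boundary_probs(hidden, w_end)
```

Each of `p_start` and `p_end` sums to one across the sequence, so they can be read as distributions over candidate boundary positions.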

2.5 Training and Inference

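The training objective is defined as minimizing the cross entropy loss for the start and end selections,

\[\mathcal {L} = -\frac{1}{N} \sum _{k=1}^{N} \left[ \log p^{start}_{y_k^s} + \log p^{end}_{y_k^e} \right]\]

where \(y_k^s\) and \(y_k^e\) are the ground-truth start and end positions of example k, respectively, and N is the number of examples.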
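The objective can be sketched as the negative log-probability assigned to the gold start and end positions, averaged over the examples. Averaging over N and summing the start and end terms without further weighting are assumptions of this sketch; some implementations halve the sum.

```python
import math
from typing import List, Sequence


def span_loss(p_start_batch: Sequence[Sequence[float]],
              p_end_batch: Sequence[Sequence[float]],
              gold_starts: Sequence[int],
              gold_ends: Sequence[int]) -> float:
    """Mean cross entropy of the gold start and end positions."""
    n = len(gold_starts)
    total = 0.0
    for p_s, p_e, y_s, y_e in zip(p_start_batch, p_end_batch,
                                  gold_starts, gold_ends):
        # Cross entropy with a one-hot target reduces to -log p[gold].
        total += -(math.log(p_s[y_s]) + math.log(p_e[y_e]))
    return total / n


# One toy example with two positions (illustrative probabilities).
loss = span_loss([[0.25, 0.75]], [[0.5, 0.5]], gold_starts=[1], gold_ends=[0])
```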

In the inference process, an open relation is extracted by finding the indices (s, e):

\[(s, e) = \underset{s \le e}{\arg \max } \; p^{start}_s \cdot p^{end}_e\]
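One common way to decode a span from the two distributions is an exhaustive search over index pairs. The sketch below assumes the score of a span is the product of its start and end probabilities, that the start may not come after the end, and that the search can be restricted to context positions through a hypothetical `context_offset` parameter.

```python
from typing import Sequence, Tuple


def best_span(p_start: Sequence[float],
              p_end: Sequence[float],
              context_offset: int = 0) -> Tuple[int, int]:
    """Return the (start, end) pair with start <= end maximizing p_start * p_end."""
    best = (context_offset, context_offset)
    best_score = -1.0
    for s in range(context_offset, len(p_start)):
        for e in range(s, len(p_end)):
            score = p_start[s] * p_end[e]
            if score > best_score:
                best_score = score
                best = (s, e)
    return best


p_start = [0.1, 0.6, 0.2, 0.1]
p_end = [0.5, 0.1, 0.3, 0.1]

# Taking each argmax separately would give start=1, end=0, which is not a
# valid span; the joint search with start <= end avoids this.
span = best_span(p_start, p_end)
```

The double loop is quadratic in the sequence length, which is acceptable for sentence-length inputs; a maximum span length would bound it further.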

3 Experimental Setup

We propose the following hypotheses and design a set of experiments to examine the performance of QORE models. The hypotheses are motivated as follows: (1) \(\mathbf {H_1}\): By conducting extensive comparisons with existing ORE systems, we aim to analyze the advantages of the QORE framework. (2) \(\mathbf {H_2}\) and \(\mathbf {H_3}\): As stated in the Introduction, it is important to evaluate an open relation extractor on the challenges of Corpus Heterogeneity and Automation. Thus, we investigate the zero-shot domain transferability and the few-shot learning ability of QORE models.

- \(\mathbf {H_1}\): For extracting open relations in seven datasets of different languages, QORE models can outperform conventional rule-based extractors and the state-of-the-art neural method LOREM.

- \(\mathbf {H_2}\): Considering the zero-shot domain transferability, the QORE model is able to perform effectively when transferring to another domain.

- \(\mathbf {H_3}\): When the training data size is reduced, the QORE model shows an excellent few-shot learning ability and maintains high precision.

3.1 Datasets

We evaluate the performances of our proposed QORE framework on seven public datasets covering four languages, i.e., English, Chinese, French, and Russian (denoted as En, Zh, Fr, and Ru, respectively). In the data preprocessing, we only retain binary-argument triples whose components are spans of the contexts.

- OpenIE4\(^\mathrm{En}\) was bootstrapped from extractions of OpenIE4 [10] from Wikipedia.

- LSOIE-wiki\(^\mathrm{En}\) and LSOIE-sci\(^\mathrm{En}\) [13] were algorithmically re-purposed from the QA-SRL BANK 2.0 dataset [5], covering the domains of Wikipedia and science, respectively.

- COER\(^\mathrm{Zh}\) is a high-quality Chinese knowledge base, created by an unsupervised open extractor [15] from heterogeneous web text.

- SAOKE\(^\mathrm{Zh}\) [14] is a human-annotated large-scale dataset for Chinese open information extraction.

- WMORC\(^\mathrm{Fr}\) and WMORC\(^\mathrm{Ru}\) [4] consist of manually annotated open relation data (WMORC\(_\mathrm{human}\)) for French and Russian, and relation data tagged automatically, and thus less reliably, by a cross-lingual projection approach (WMORC\(_\mathrm{auto}\)) for the two languages. The sentences are gathered from Wikipedia. We take WMORC\(_\mathrm{auto}\) for the training and development sets while using WMORC\(_\mathrm{human}\) as the test data.

3.2 Implementations

Encoders. We utilize the bert-base-cased or spanbert-base-cased language models as the encoders on English datasets (SpanBERT only provides the English version up to now), and bert-base-chinese on Chinese datasets. Since there exist few high-quality monolingual LMs for French and Russian, we employ a multilingual LM bert-base-multilingual-cased on the datasets of the two languages.

Evaluation Metrics. We keep track of the token-level open relation extraction metrics of F1 score, precision, and recall.
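Token-level scoring can be sketched as an overlap count between the predicted and gold relation spans. The exact counting protocol is not spelled out here, so the version below is one common reading: precision and recall are computed over token positions, with inclusive (start, end) indices, for a single example.

```python
from typing import Tuple


def token_prf(pred_span: Tuple[int, int],
              gold_span: Tuple[int, int]) -> Tuple[float, float, float]:
    """Token-level precision, recall and F1 for inclusive (start, end) spans."""
    pred = set(range(pred_span[0], pred_span[1] + 1))
    gold = set(range(gold_span[0], gold_span[1] + 1))
    overlap = len(pred & gold)
    precision = overlap / len(pred) if pred else 0.0
    recall = overlap / len(gold) if gold else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# The prediction covers tokens 4-5 while the gold relation is token 4 only.
precision, recall, f1 = token_prf((4, 5), (4, 4))
```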

3.3 Baselines

In the experiments, we compare QORE models with a variety of previously proposed methods, some of which were used in the evaluation of the SOTA open relation extractor LOREM [6]. We denote the English (En) and Chinese (Zh) extractors and the models capable of processing multilingual (Mul) texts using the superscripts.

- OLLIE\(^\mathrm{En}\) [11] is a pattern-based extraction approach with complex relation schemas and context information of attribution and clausal modifiers.

- ClausIE\(^\mathrm{En}\) [2] exploits linguistic knowledge about English grammar to identify clauses as relations and their arguments.

- Open IE-4.x\(^\mathrm{En}\) [10] combines a rule-based extraction system and a system analyzing the hierarchical composition between semantic frames to generate relations.

- MGD-GNN\(^\mathrm{Zh}\) [9] constructs a multi-grained dependency graph and predicts based on character features and word boundary knowledge.

- LOREM\(^\mathrm{Mul}\) [6] is a multilingual-embedded sequence-labeling method based on CNN and BiLSTM, not relying on language-specific knowledge or external NLP tools.

- Multi\(^{2}\)OIE\(^\mathrm{Mul}\) [12] is a multilingual sequence-labeling-based information extraction system combining BERT with multi-head attention blocks.

4 Experimental Results

4.1 H1: QORE for Multilingual Open Relation Extraction

In \(\mathbf {H_1}\), we evaluate our QORE models on seven datasets of different languages (Tables 1 and 2) to compare with the rule-based and neural baselines. QORE models outperform all the baselines on each dataset.

For OLLIE, ClausIE, Open IE-4.x, and MGD-GNN, the unsatisfactory results are primarily due to the dependence on intricate language-specific relation formations and to error propagation from the external NLP tools they use (e.g., MGD-GNN utilizes a dependency parser to construct its multi-grained dependency graph). The SOTA method LOREM, a neural sequence-labeling-based model, outperforms the rule-based systems but still does not reach results comparable to our QORE models. In the following, we focus on comparing the architectures of QORE and LOREM.

LOREM encodes an input sequence using pre-trained word embeddings and adds argument tag vectors to the word embeddings. The argument tag vectors are simple one-hot encoded vectors indicating if a word is part of an argument. Then LOREM utilizes CNN and BiLSTM layers to form a representation of each word. The CNN is used to capture the local feature information, as LOREM considers that certain parts of the context might have higher chances of containing relation words than others. Meanwhile, the BiLSTM captures the forward and backward context of each word. Next, a CRF layer tags each word using the NST tagging scheme [7]: \(\mathtt {S}\) (Single-word relation), \(\mathtt {B}\) (Beginning of a relation), \(\mathtt {I}\) (Inside a relation), \(\mathtt {E}\) (Ending of a relation), \(\mathtt {O}\) (Outside a relation).
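To make the tagging scheme concrete, the sketch below converts a relation span into a tag sequence over the five labels listed above. The helper name and the inclusive span indices are assumptions of this example, not part of LOREM or NST.

```python
from typing import List


def span_to_tags(length: int, start: int, end: int) -> List[str]:
    """Tag a sentence of `length` tokens given an inclusive relation span."""
    tags = ["O"] * length
    if start == end:
        tags[start] = "S"          # single-word relation
    else:
        tags[start] = "B"          # beginning of the relation
        for i in range(start + 1, end):
            tags[i] = "I"          # inside the relation
        tags[end] = "E"            # ending of the relation
    return tags


# "acquire" is token 4 of the 11-token example sentence, a one-word relation.
single = span_to_tags(11, 4, 4)
# A three-token relation covering positions 1 to 3 of a five-token sentence.
multi = span_to_tags(5, 1, 3)
```

A sequence labeler has to get every tag in the span right, whereas a span extractor predicts only the two boundary positions.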

Advantages of QORE over LOREM. Our QORE framework generates an initial sequence representation with word, position, and segment embeddings. Unlike the simple one-hot argument vectors of LOREM, QORE derives the argument information by creating a query template of arguments. We combine the query with the context to form the input of the encoder, and the encoder outputs a contextual representation that we utilize to compute the relevance of each token to a gold relation (Eqs. 8 and 9). Moreover, by employing the self-attention mechanism of a Transformers-based encoder, QORE learns long-range dependencies more easily and derives a better representation for computing relevances, as we explain in the following. Learning long-range dependencies is a key challenge in encoding sequences and solving related tasks [16]. One key factor affecting the ability to learn such dependencies is the length of the paths that forward and backward signals have to traverse between any two input and output positions in the network. The shorter these paths between any combination of positions in the input and output sequences, the easier it is to learn long-range dependencies. Vaswani et al. [16] also provide the maximum path length between any two input and output positions in self-attention, recurrent, and convolutional layers, which are O(1), O(n), and \(O(\log _k(n))\), respectively, where n is the sequence length and k is the kernel width of a convolutional layer. The constant path length of self-attention makes it easier to learn long-range dependencies than with CNN and BiLSTM layers. Overall, QORE achieves substantial improvements over LOREM due to its better sequence representations with long-range dependencies, which are the basis for computing token relevances to gold relations.
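The gap between these orders can be made tangible with a small calculation. The sketch below drops all constant factors and treats the asymptotic orders as exact counts, which is a simplification for illustration only; the function name and the default kernel width are hypothetical.

```python
def max_path_length(n: int, layer: str, k: int = 3) -> int:
    """Illustrative maximum path length between two positions (constants dropped)."""
    if layer == "self-attention":
        return 1                      # O(1): every pair is directly connected
    if layer == "recurrent":
        return n                      # O(n): signals pass through each step
    if layer == "convolutional":
        # O(log_k n): number of stacked layers until the receptive field,
        # growing by a factor k per layer, covers n positions.
        layers, reach = 0, 1
        while reach < n:
            reach *= k
            layers += 1
        return layers
    raise ValueError(f"unknown layer type: {layer}")


for layer in ("self-attention", "recurrent", "convolutional"):
    print(layer, max_path_length(512, layer))
```

The integer loop avoids floating-point rounding that a direct logarithm could introduce at exact powers of k.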

In Table 1, comparing the BERT-encoded and SpanBERT-encoded QORE models, we find that the SpanBERT-encoded models achieve better results than the BERT-encoded models on all English datasets, which is in line with the advantage of SpanBERT over BERT on the span extraction task [8].

4.2 H2: Zero-shot Domain Transferability of QORE

A model trained on data from the general domain does not necessarily achieve equal performance when tested on a specific domain such as biology or literature. In \(\mathbf {H_2}\), we evaluate the zero-shot domain transferability of QORE by training models on the general-domain LSOIE-wiki\(^\mathrm{En}\) and testing them on the science-domain benchmark LSOIE-sci\(^\mathrm{En}\). We compare our QORE\(_\mathrm{BERT+QM}\) model with BERT Tagger (non-query-based, implemented by Multi\(^{2}\)OIE). Table 3 shows that when transferring from the general domain to the science domain, the F1 score of QORE\(_\mathrm{BERT+QM}\) decreases by only 0.15%, whereas that of BERT Tagger decreases by 14.58%. The smaller decline in QORE’s performance shows that our model has superior domain transferability.

4.3 H3: Few-Shot Learning Ability of QORE

For few-shot learning ability, we carry out a set of experiments with shrinking training data to compare our QORE model with BERT Tagger (non-query-based, implemented by Multi\(^{2}\)OIE) on LSOIE-wiki\(^\mathrm{En}\). Figure 2 indicates that when the training samples are reduced to 50%, the F1 of BERT Tagger declines by 2.75% while QORE\(_\mathrm{BERT+QM}\) achieves an even higher F1 (+0.26%). When the training set is reduced to 6.25%, the F1 of QORE\(_\mathrm{BERT+QM}\) drops by 1.28% in total compared with using the whole training set, whereas that of BERT Tagger drops by 7.02% in total. These comparisons suggest that our query-based span extraction framework gives the QORE model a stronger few-shot learning ability.
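A shrinking-data experiment of this kind can be set up with a small sampling helper. The sketch below assumes repeated halving from 100% down to 6.25%, nested subsets (each smaller set is contained in the larger ones), and a fixed random seed; only the 50% and 6.25% points are stated in the text, and how the subsets were actually drawn is not specified.

```python
import random
from typing import Dict, List, Sequence, TypeVar

T = TypeVar("T")


def shrinking_subsets(
    examples: Sequence[T],
    fractions: Sequence[float] = (1.0, 0.5, 0.25, 0.125, 0.0625),
    seed: int = 13,
) -> Dict[float, List[T]]:
    """Draw nested training subsets: shuffle once, then keep prefixes."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    return {
        f: shuffled[: max(1, int(len(shuffled) * f))]
        for f in fractions
    }


# Integers stand in for training examples.
subsets = shrinking_subsets(list(range(160)))
sizes = {f: len(s) for f, s in subsets.items()}
```

Using prefixes of a single shuffle keeps the comparison across sizes from being confounded by which examples happen to be sampled at each size.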

5 Conclusion

Our work targets open relation extraction using a novel query-based extraction framework QORE. The evaluation results show that our model achieves significant improvements over the SOTA method LOREM. Regarding the practical challenges of Corpus Heterogeneity and Automation, we show that QORE models have excellent zero-shot domain transferability and few-shot learning ability. In the future, we will explore further demands of the ORE task (e.g., extracting multi-span open relations and detecting non-existent relationships) and present corresponding solutions.

References

1. Banko, M., Cafarella, M.J., Soderland, S., Broadhead, M., Etzioni, O.: Open information extraction from the web. In: Veloso, M.M. (ed.) Proceedings of the 20th International Joint Conference on Artificial Intelligence (IJCAI 2007), Hyderabad, 6–12 January 2007, pp. 2670–2676 (2007). http://ijcai.org/Proceedings/07/Papers/429.pdf

2. Corro, L.D., Gemulla, R.: Clausie: clause-based open information extraction. In: Schwabe, D., Almeida, V.A.F., Glaser, H., Baeza-Yates, R., Moon, S.B. (eds.) 22nd International World Wide Web Conference (WWW 2013), Rio de Janeiro, 13–17 May 2013, pp. 355–366. International World Wide Web Conferences Steering Committee/ACM (2013). https://doi.org/10.1145/2488388.2488420

3. Devlin, J., Chang, M., Lee, K., Toutanova, K.: BERT: pre-training of deep bidirectional transformers for language understanding. In: Burstein, J., Doran, C., Solorio, T. (eds.) Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2019), Minneapolis, 2–7 June 2019, vol. 1 (Long and Short Papers), pp. 4171–4186. Association for Computational Linguistics (2019). https://doi.org/10.18653/v1/n19-1423

4. Faruqui, M., Kumar, S.: Multilingual open relation extraction using cross-lingual projection. In: Mihalcea, R., Chai, J.Y., Sarkar, A. (eds.) The 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL HLT 2015), Denver, 31 May–5 June 2015, pp. 1351–1356. The Association for Computational Linguistics (2015). https://doi.org/10.3115/v1/n15-1151

5. FitzGerald, N., Michael, J., He, L., Zettlemoyer, L.: Large-scale QA-SRL parsing. In: Gurevych, I., Miyao, Y. (eds.) Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL 2018), Melbourne, 15–20 July 2018, vol. 1: Long Papers, pp. 2051–2060. Association for Computational Linguistics (2018). https://doi.org/10.18653/v1/P18-1191

6. Harting, T., Mesbah, S., Lofi, C.: LOREM: language-consistent open relation extraction from unstructured text. In: Huang, Y., King, I., Liu, T., van Steen, M. (eds.) The Web Conference 2020 (WWW 2020), Taipei, 20–24 April 2020, pp. 1830–1838. ACM/IW3C2 (2020). https://doi.org/10.1145/3366423.3380252

7. Jia, S., Xiang, Y., Chen, X.: Supervised neural models revitalize the open relation extraction. arXiv preprint arXiv:1809.09408 (2018)

8. Joshi, M., Chen, D., Liu, Y., Weld, D.S., Zettlemoyer, L., Levy, O.: Spanbert: improving pre-training by representing and predicting spans. Trans. Assoc. Comput. Linguist. 8, 64–77 (2020). https://transacl.org/ojs/index.php/tacl/article/view/1853

9. Lyu, Z., Shi, K., Li, X., Hou, L., Li, J., Song, B.: Multi-grained dependency graph neural network for Chinese open information extraction. In: Karlapalem, K., et al. (eds.) PAKDD 2021. LNCS (LNAI), vol. 12714, pp. 155–167. Springer, Cham (2021). https://doi.org/10.1007/978-3-030-75768-7_13

10. Mausam: Open information extraction systems and downstream applications. In: Kambhampati, S. (ed.) Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence, IJCAI 2016, New York, 9–15 July 2016, pp. 4074–4077. IJCAI/AAAI Press (2016). http://www.ijcai.org/Abstract/16/604

11. Mausam, Schmitz, M., Soderland, S., Bart, R., Etzioni, O.: Open language learning for information extraction. In: Tsujii, J., Henderson, J., Pasca, M. (eds.) Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL 2012), 12–14 July 2012, Jeju Island, pp. 523–534. ACL (2012). https://aclanthology.org/D12-1048/

12. Ro, Y., Lee, Y., Kang, P.: Multi\(^{2}\)OIE: multilingual open information extraction based on multi-head attention with BERT. In: Cohn, T., He, Y., Liu, Y. (eds.) Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: Findings, EMNLP 2020, Online Event, 16–20 November 2020. Findings of ACL, vol. EMNLP 2020, pp. 1107–1117. Association for Computational Linguistics (2020). https://doi.org/10.18653/v1/2020.findings-emnlp.99

13. Solawetz, J., Larson, S.: LSOIE: a large-scale dataset for supervised open information extraction. In: Merlo, P., Tiedemann, J., Tsarfaty, R. (eds.) Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume (EACL 2021), Online, 19–23 April 2021, pp. 2595–2600. Association for Computational Linguistics (2021). https://aclanthology.org/2021.eacl-main.222/

14. Sun, M., Li, X., Wang, X., Fan, M., Feng, Y., Li, P.: Logician: a unified end-to-end neural approach for open-domain information extraction. In: Chang, Y., Zhai, C., Liu, Y., Maarek, Y. (eds.) Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining (WSDM 2018), Marina Del Rey, 5–9 February 2018, pp. 556–564. ACM (2018). https://doi.org/10.1145/3159652.3159712

15. Tseng, Y., et al.: Chinese open relation extraction for knowledge acquisition. In: Bouma, G., Parmentier, Y. (eds.) Proceedings of the 14th Conference of the European Chapter of the Association for Computational Linguistics (EACL 2014), 26–30 April 2014, Gothenburg, pp. 12–16. The Association for Computer Linguistics (2014). https://doi.org/10.3115/v1/e14-4003

16. Vaswani, A., et al.: Attention is all you need. In: Guyon, I., et al. (eds.) Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, 4–9 December 2017, Long Beach, pp. 5998–6008 (2017). https://proceedings.neurips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html

Acknowledgements

This work is supported by the NSFC-General Technology Basic Research Joint Funds under Grant (U1936220), the National Natural Science Foundation of China under Grant (61972047) and the National Key Research and Development Program of China (2018YFC0831500).

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Yang, H., Li, DW., Li, Z., Yang, D., Qi, J., Wu, B. (2022). Open Relation Extraction via Query-Based Span Prediction. In: Memmi, G., Yang, B., Kong, L., Zhang, T., Qiu, M. (eds) Knowledge Science, Engineering and Management. KSEM 2022. Lecture Notes in Computer Science(), vol 13369. Springer, Cham. https://doi.org/10.1007/978-3-031-10986-7_6

DOI: https://doi.org/10.1007/978-3-031-10986-7_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-10985-0

Online ISBN: 978-3-031-10986-7

eBook Packages: Computer Science, Computer Science (R0)