Abstract

In this paper we introduce a new queueing model with a special kind of input process. It is assumed that the numbers of arrivals during consecutive time intervals form an autoregressive sequence with conditional Poisson distributions. A single server serves the input flows one by one in cyclic order with instantaneous switching, using a d-limited policy. The mathematical model of the queueing process takes the form of a multidimensional discrete-time Markov chain that keeps track of the server state, the most recent numbers of arrivals, and the queue lengths. The necessary and sufficient condition for the existence of the stationary probability distribution is found. The possibility of obtaining an explicit solution of the stationary equations for the probability generating functions is discussed.


Keywords

- Autoregressive Poisson process

- polling system

- cyclic service

- stationarity conditions

- probability generating functions

1 Introduction

Studies of many real flows in telecommunication networks and in vehicular traffic control at junctions have made it evident that a simple Poisson model or a renewal model [1] is often statistically inadequate. Over the last five decades, models with various kinds of dependence between flow constituents have been proposed. There are at least two ways to introduce dependence into the model. One can make the arrival rate random; this leads to Cox's doubly stochastic flows [2] and to the Markov-modulated flows of Neuts and Lucantoni [3]. Alternatively, the conditional probability distribution of inter-arrival intervals can be made explicitly dependent on past arrivals. This route leads, for instance, to autoregressive time-series models formed by successive inter-arrival times (see [4]). In [6], following [5], a single-server queueing system with group arrivals is considered in which the group sizes form a certain Markov chain. Since each of the above-mentioned models keeps track of every single arrival time, this approach can be called local [7].

In [7], a non-classical approach was proposed and developed. Under this approach, the flow is observed only at specially chosen epochs, and only the total random number of arrivals between two consecutive observation epochs becomes known. This approach is called non-local. Let us cite the corresponding definition.

Definition 1

Let \(0=\tau ^{\mathrm {(obs)}}_0<\tau ^{\mathrm {(obs)}}_1<\ldots \) be a point sequence on the axis Ot (here the superscript “obs” stands for “observation”), not coinciding with (1); let \(\eta ^{\mathrm {(obs)}}_i\) be the random number of requests from the flow \(\varPi \) during the time interval \(\bigl (\tau ^{\mathrm {(obs)}}_i, \tau ^{\mathrm {(obs)}}_{i+1}\bigr ]\), and let \(\nu ^{\mathrm {(obs)}}_i\) be some characteristic (a mark) of the requests that arrive during the same time interval \(\bigl (\tau ^{\mathrm {(obs)}}_i, \tau ^{\mathrm {(obs)}}_{i+1}\bigr ]\). The random vector sequence
\[\bigl \{(\tau ^{\mathrm {(obs)}}_i, \eta ^{\mathrm {(obs)}}_i, \nu ^{\mathrm {(obs)}}_i);\ i=0, 1, \ldots \bigr \}\]
is called a flow of non-homogeneous requests under its incomplete (non-local) description.

Informally speaking, our non-local autoregressive flow is understood as a flow for which the regression of \(\eta ^{\mathrm {(obs)}}_i\) on \(\eta ^{\mathrm {(obs)}}_0\), \(\eta ^{\mathrm {(obs)}}_1\), ..., \(\eta ^{\mathrm {(obs)}}_{i-1}\) has the linear form \(a \eta ^{{(\mathrm {obs})}}_{i-1}+b\). For count time series, stochastic processes of this kind were studied, e.g., in [8].

The queueing system belongs to the class of polling systems [9]. Apart from the inputs, it differs from classical polling systems in its assumptions on the service process. Service time distributions are not specified (in real queueing systems, service times can be dependent and have different probability distributions); instead, the server's sojourn time at each node is given, together with an upper limit on the number of customers served. Such a model describes, for example, a road intersection controlled by a fixed-cycle traffic light, or data transmission nodes governed by a Round Robin algorithm.

We will demonstrate that, even under simple assumptions on the structure of the queueing system, the equation for the generating function of the stationary probability distribution is hard to solve. Nevertheless, we obtain conditions for the existence of the stationary probability distribution using the iterative-dominating approach [10, 11].

2 The Queueing System

Let us assume that all random variables and random elements in what follows are defined on a probability space \((\varOmega , \mathfrak F, \mathbb P)\). Then \(\mathbb E(\cdot )\) denotes the mathematical expectation with respect to the probability measure \(\mathbb P\). Set \(\varphi (x;a)=a^x e^{-a}/x!\) for \(a>0\) and \(x=0\), 1, ....

Consider a queueing system with \(m<\infty \) input flows and a single server. Customers from the j-th flow join an infinite-capacity buffer \(O_j\). The probabilistic properties of the input flows will be defined later. The server spends a constant time \(T>0\) at each queue, after which it switches instantaneously to the next queue; after the last queue, the first queue is visited again. The server implements a d-limited policy: during its stay at the j-th queue, the server can serve at most \(d=\ell _j\) customers from that queue, regardless of their exact arrival times, provided they arrive before the time T expires.

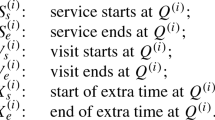

Let \(\tau _0=0\), \(\tau _{i+1}=\tau _i+T=(i+1)T\), \(i=0\), 1, ..., be the time instants when the server switches to the next queue. Denote by \(\varGamma ^{(r)}\) the server state when it is at the r-th queue, \(r=1\), 2, ..., m, and let \(\varGamma =\{\varGamma ^{(1)}, \varGamma ^{(2)}, \ldots , \varGamma ^{(m)}\}\) be the server state space. Let a random variable \(\varGamma _i\in \varGamma \) be the server state during the time interval \((\tau _{i-1}, \tau _i]\) for \(i=1\), 2, ..., and let \(\varGamma _0\in \varGamma \) be the random server state at time \(\tau _0\). Let \(r\oplus 1=r+1\) for \(r<m\) and \(m\oplus 1=1\). Then \(\varGamma _{i+1}=\varGamma _{i+1}(\omega )=\varGamma ^{(r\oplus 1)}\) for all \(\omega \in \varOmega \) such that \(\varGamma _i=\varGamma ^{(r)}\).
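The cyclic switching rule can be illustrated with a minimal Python sketch. Here the states \(\varGamma ^{(r)}\) are represented by their indices r, and `successor` and `server_states` are hypothetical helper names introduced only for this illustration.

```python
def successor(r: int, m: int) -> int:
    """Cyclic successor r ⊕ 1 on {1, ..., m}: r + 1 if r < m, and 1 if r = m."""
    return r + 1 if r < m else 1


def server_states(r0: int, m: int, steps: int) -> list[int]:
    """Indices r of Gamma_0, Gamma_1, ..., Gamma_steps, starting from Gamma_0 = Gamma^(r0)."""
    states = [r0]
    for _ in range(steps):
        # Gamma_{i+1} = Gamma^(r ⊕ 1) whenever Gamma_i = Gamma^(r), for every outcome omega
        states.append(successor(states[-1], m))
    return states


print(server_states(2, 3, 5))  # [2, 3, 1, 2, 3, 1]
```

Because the whole server path is determined by \(\varGamma _0\) and repeats with period m, any Markov chain that contains \(\varGamma _i\) as a component is periodic.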

Denote by \(\eta _{j,i}\), \(i=0\), 1, ..., the random number of new customers arriving from the flow \(\varPi _j\) during the time interval \((\tau _i, \tau _{i+1}]\), \(j=1\), 2, ..., m. Let \(\eta _{j,-1}\) be a non-negative integer-valued random variable, \(j=1\), 2, ..., m. Let us assume that the conditional probability distribution of \(\eta _{j,i+1}\) given \(\eta _{j,-1}=x_{-1}\), \(\eta _{j,0}=x_0\), ..., \(\eta _{j,i}=x_i\) is the Poisson distribution with parameter \((a_jx_i+b_j)\) for some \(a_j>0\) and \(b_j>0\), so that the regression of \(\eta _{j,i+1}\) on the past numbers of arrivals equals
\[\mathbb E\bigl (\eta _{j,i+1}\mid \eta _{j,-1}, \eta _{j,0}, \ldots , \eta _{j,i}\bigr )=a_j\eta _{j,i}+b_j.\]

We will call such an input flow an autoregressive Poisson flow. The previous number of arrivals, \(\eta _{j,i-1}\), can be used as a mark of the requests arriving during the time interval \((\tau _i, \tau _{i+1}]\). Then the non-local description of the autoregressive Poisson flow \(\varPi _j\) is the marked point process
\[\bigl \{(\tau _i, \eta _{j,i}, \eta _{j,i-1});\ i=0, 1, \ldots \bigr \}.\]

In particular, if \(\varPi _j\) is a classical Poisson flow with intensity \(\lambda _j\), then \(a_j=0\) and \(b_j=\lambda _j T\). Further, let us assume that the stochastic sequences \(\{\eta _{j,i}; i=-1, 0, 1, \ldots \}\), \(j=1\), 2, ..., m, are independent.
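To make the autoregressive Poisson flow concrete, here is a minimal simulation sketch for a single flow, assuming NumPy is available. The function `simulate_ar_poisson` and its arguments are hypothetical; `x_init` plays the role of \(\eta _{j,-1}\), and setting `a = 0` gives the classical Poisson case with `b` \(=\lambda _jT\).

```python
import numpy as np


def simulate_ar_poisson(a: float, b: float, n: int, x_init: int = 0, seed: int = 0) -> np.ndarray:
    """Return eta_0, ..., eta_{n-1}, where eta_{i+1} given eta_i is Poisson(a * eta_i + b).

    x_init plays the role of eta_{-1}; a = 0 gives i.i.d. Poisson(b) counts.
    """
    rng = np.random.default_rng(seed)
    eta = np.empty(n, dtype=np.int64)
    prev = x_init
    for i in range(n):
        # conditional Poisson law whose parameter is linear in the previous count
        prev = int(rng.poisson(a * prev + b))
        eta[i] = prev
    return eta


eta = simulate_ar_poisson(a=0.5, b=1.0, n=100_000, seed=1)
print(eta.mean())                                    # close to b / (1 - a) = 2
slope, intercept = np.polyfit(eta[:-1], eta[1:], 1)
print(slope, intercept)                              # close to (a, b) = (0.5, 1.0)
```

The fitted lag-one regression line recovers a and b, consistent with the linear regression \(a_j\eta _{j,i}+b_j\) assumed in the model; the value \(b/(1-a)\) is the stationary mean when \(0<a<1\).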

Denote by \(\kappa _{j,i}\) the random number of customers in the queue \(O_j\) at time instant \(\tau _i\). Denote by \(\xi _{j,i}\) the largest number of customers that can be served from \(O_j\) during the time interval \((\tau _i, \tau _{i+1}]\). Then the probability

equals 0 if \(y_j>0\) and \(y_k>0\) for some \(k\ne j\); it equals 1 if \(y_{r\oplus 1}=\ell _j\). We have
\[\kappa _{j,i+1}=\max \{0,\ \kappa _{j,i}+\eta _{j,i}-\xi _{j,i}\},\quad i=0, 1, \ldots \qquad (2)\]

The recurrence relations and probability distributions given above imply the following claim.

Theorem 1

For a given probability distribution of the vector

random sequences

are irreducible periodic Markov chains.
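The queue-length dynamics behind these chains can be sketched in Python. The sketch assumes that the update is the reflected recursion \(\kappa _{j,i+1}=\max \{0,\kappa _{j,i}+\eta _{j,i}-\xi _{j,i}\}\) (customers arriving during a visit can still be served before T expires) and that \(\xi _{j,i}=\ell _j\) when the server attends \(O_j\) during \((\tau _i,\tau _{i+1}]\) and \(\xi _{j,i}=0\) otherwise; `queue_lengths` and its arguments are hypothetical names.

```python
def queue_lengths(arrivals: list[int], serving: list[bool], ell: int, kappa0: int = 0) -> list[int]:
    """Return kappa_0, ..., kappa_n for one queue O_j.

    arrivals[i] = eta_{j,i}; serving[i] is True when the server is at O_j
    during (tau_i, tau_{i+1}].
    """
    kappa = [kappa0]
    for eta, on in zip(arrivals, serving):
        xi = ell if on else 0                        # d-limited service: at most ell per visit
        kappa.append(max(0, kappa[-1] + eta - xi))   # random walk reflected at zero
    return kappa


print(queue_lengths([2, 1, 4, 0, 1], [False, True, False, True, False], ell=3))
# [0, 2, 0, 4, 1, 2]
```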

3 Analysis of the Model

The main purpose of this section is to establish necessary and sufficient conditions for the existence of the stationary probability distribution of the Markov chain \(\{(\varGamma _i, \kappa _{j,i}, \eta _{j,i-1}); i=0, 1, \ldots \}\) for \(j=1\), 2, ..., m, since it is then easy to prove that the Markov chain

has a stationary probability distribution if and only if each single

does. In the remainder of this section the value of the index j is fixed.

First of all, for the stationary distributions of these Markov chains to exist, the inputs \(\{\eta _{j,i}; i=0, 1, \ldots \}\) must have stationary probability distributions. This is possible only if \(0<a_j<1\) for all \(j=1\), 2, ..., m, which we assume in the rest of the section.
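A quick numerical illustration of the role of \(a_j<1\) (it concerns expected values only and is not a proof): taking expectations in the regression gives \(\mathbb E\eta _{j,i+1}=a_j\mathbb E\eta _{j,i}+b_j\). The hypothetical helper `mean_arrivals` iterates this recursion; for \(a_j<1\) it converges to \(b_j/(1-a_j)\) from any starting mean, whereas for \(a_j\geqslant 1\) it grows without bound.

```python
def mean_arrivals(a: float, b: float, mu_init: float, steps: int) -> float:
    """Iterate mu_{i+1} = a * mu_i + b, the recursion satisfied by E eta_{j,i}."""
    mu = mu_init
    for _ in range(steps):
        mu = a * mu + b
    return mu


print(mean_arrivals(0.5, 1.0, mu_init=10.0, steps=60))  # ≈ 2.0 = b / (1 - a)
print(mean_arrivals(1.0, 1.0, mu_init=0.0, steps=50))   # 50.0: linear growth when a = 1
```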

Let us define

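Let \(I(\cdot )\) denote the indicator random variable of the event given in the parentheses. For \(|z|\leqslant 1\), \(|w|\leqslant 1\) and \(i=0\), 1, ..., let us introduce the probability generating functions
\[\varPsi _{j,i}(z,w;r)=\mathbb E\bigl (z^{\kappa _{j,i}}w^{\eta _{j,i-1}}I(\varGamma _i=\varGamma ^{(r)})\bigr ),\quad r=1, 2, \ldots , m.\]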

Theorem 2

The following recurrent equations with respect to \(i=0\), 1, ... hold:

for \(r\oplus 1=j\).
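The step without service can be checked numerically. For \(r\oplus 1\ne j\) the server does not attend \(O_j\), so \(\kappa _{j,i+1}=\kappa _{j,i}+\eta _{j,i}\), and the Poisson generating function \(\mathbb E(s^{\eta _{j,i}}\mid \eta _{j,i-1}=n)=e^{(a_jn+b_j)(s-1)}\) suggests \(\varPsi _{j,i+1}(z,w;r\oplus 1)=e^{b_j(zw-1)}\varPsi _{j,i}(z,e^{a_j(zw-1)};r)\). This is our own derivation, assuming \(\varPsi _{j,i}(z,w;r)=\mathbb E(z^{\kappa _{j,i}}w^{\eta _{j,i-1}}I(\varGamma _i=\varGamma ^{(r)}))\); the sketch compares both sides on a small, arbitrary joint distribution, and all names and numbers are hypothetical.

```python
import cmath
import math


def poisson_pmf(k: int, lam: float) -> float:
    """phi(k; lam) = lam^k e^{-lam} / k!, evaluated in log space."""
    return math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))


def psi(p: dict[tuple[int, int], float], z: complex, w: complex) -> complex:
    """Generating function sum of p(x, n) z^x w^n over a finite support."""
    return sum(prob * z**x * w**n for (x, n), prob in p.items())


def step_direct(p, z, w, a, b, k_max=60):
    """E z^{kappa + eta} w^{eta} on the event, summing eta ~ Poisson(a n + b) term by term."""
    total = 0j
    for (x, n), prob in p.items():
        lam = a * n + b
        total += prob * z**x * sum(poisson_pmf(k, lam) * (z * w) ** k for k in range(k_max))
    return total


# Hypothetical sub-probabilities P(kappa = x, eta_prev = n, Gamma = Gamma^(r)).
p = {(0, 0): 0.1, (1, 2): 0.15, (3, 1): 0.2}
a, b = 0.4, 0.7
z, w = 0.6 + 0.2j, -0.3 + 0.5j
lhs = step_direct(p, z, w, a, b)
rhs = cmath.exp(b * (z * w - 1)) * psi(p, z, cmath.exp(a * (z * w - 1)))
print(abs(lhs - rhs))  # of the order of rounding error
```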

Proof

Let \(r\oplus 1\ne j\). Then

For \(r\oplus 1=j\),

Using the methods of [10, 11], we obtain the following result.

Theorem 3

For the existence of the stationary probability distribution of the Markov chain \(\{(\varGamma _i, \kappa _{j,i}, \eta _{j,i-1}); i=0, 1, \ldots \}\) it is necessary and sufficient that
\[\frac{mb_j}{1-a_j}<\ell _j. \qquad (3)\]

The condition in the last theorem has a natural physical interpretation: the quantity \(mb_j(1-a_j)^{-1}\) is the stationary expected number of arrivals from the flow \(\varPi _j\) during a complete cycle of the server, while \(\ell _j\) is the largest number of customers from \(O_j\) that can be served during one cycle.
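This interpretation can be probed by simulation. The sketch below tracks a single queue \(O_j\), assuming NumPy, the reflected update \(\kappa _{j,i+1}=\max \{0,\kappa _{j,i}+\eta _{j,i}-\xi _{j,i}\}\), \(\varGamma _0=\varGamma ^{(m)}\) and \(\eta _{j,-1}=0\); `simulate_polled_queue` and the parameter values are hypothetical. With \(m=2\), \(a_j=0.5\), \(b_j=0.6\), the mean number of arrivals per cycle is \(mb_j/(1-a_j)=2.4\), so \(\ell _j=3\) should give a stable queue and \(\ell _j=2\) a growing one.

```python
import numpy as np


def simulate_polled_queue(m: int, j: int, ell: int, a: float, b: float,
                          n_slots: int, seed: int = 0) -> np.ndarray:
    """Queue length of O_j at tau_0, ..., tau_{n_slots}.

    Assumes Gamma_0 = Gamma^(m), so during (tau_i, tau_{i+1}] the server is at
    queue (i mod m) + 1, and eta_{j,-1} = 0.
    """
    rng = np.random.default_rng(seed)
    eta_prev, kappa = 0, 0
    path = np.empty(n_slots + 1, dtype=np.int64)
    path[0] = kappa
    for i in range(n_slots):
        eta = int(rng.poisson(a * eta_prev + b))   # autoregressive Poisson arrivals
        xi = ell if i % m == j - 1 else 0          # up to ell services while at O_j
        kappa = max(0, kappa + eta - xi)           # reflected random walk
        path[i + 1] = kappa
        eta_prev = eta
    return path


for ell in (3, 2):
    path = simulate_polled_queue(m=2, j=1, ell=ell, a=0.5, b=0.6, n_slots=40_000)
    print(ell, path.mean(), path[-1])
```

For \(\ell _j=3\) the queue stays small, while for \(\ell _j=2\) it drifts upward by roughly \(2.4-2=0.4\) customers per cycle.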

In the course of the proof of Theorem 3, the following lemma is essential.

Lemma 1

If \(0<a<1\) then the equation \(w=e^{a(wz-1)}\) has a unique solution

convergent in the open disk \(|z|<a^{-1}e^{a-1}\), such that \(w(1)=1\), \(|w(z)|<1\) for \(|z|<1\).
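The series itself is not legible here. As a cross-check (our own computation by the Lagrange inversion theorem, not the paper's displayed formula), the solution with \(w(0)=e^{-a}\) is \(w(z)=\sum _{k\geqslant 0}\frac{((k+1)a)^k e^{-(k+1)a}}{(k+1)!}z^k\), so that \(zw(z)\) is the generating function of the Borel distribution with parameter a. The sketch evaluates partial sums and checks the equation \(w=e^{a(wz-1)}\), \(w(1)=1\) and \(w'(1)=a/(1-a)\); the helper names are hypothetical.

```python
import cmath
import math


def w_coefficients(a: float, n_terms: int) -> list[float]:
    """c_k = ((k+1) a)^k e^{-(k+1) a} / (k+1)!, computed in log space to avoid overflow."""
    return [math.exp(k * math.log((k + 1) * a) - (k + 1) * a - math.lgamma(k + 2))
            for k in range(n_terms)]


def w_series(z: complex, a: float, n_terms: int = 400) -> complex:
    """Partial sum of w(z) = sum_k c_k z^k, evaluated by Horner's scheme."""
    acc = 0j
    for c in reversed(w_coefficients(a, n_terms)):
        acc = acc * z + c
    return acc


a = 0.5
z = 0.3 + 0.4j
w = w_series(z, a)
print(abs(w - cmath.exp(a * (w * z - 1))))   # residual of w = e^{a(wz-1)}, near rounding level
print(abs(w) < 1)                            # True inside the unit disk
print(w_series(1.0, a).real)                 # close to w(1) = 1
print(sum(k * c for k, c in enumerate(w_coefficients(a, 400))))  # close to a / (1 - a) = 1
print(math.exp(a - 1) / a)                   # radius a^{-1} e^{a-1} ≈ 1.213
```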

Proof

Let’s fix \(|z|<1\). We have an estimate from below for the magnitude of the complex quantity

So, on the circle \(|w|=1\) we have

By the classical Rouché theorem, for any such z there is a unique solution \(w=w(z)\) of the equation \(w=e^{a(wz-1)}\) such that \(|w(z)|\leqslant 1\). It can be computed by evaluating the integral

where \(\mathbf {i}=\sqrt{-1}\) and \(F(z,w)=w-e^{a(zw-1)}\). It remains to prove that w(z) is analytic in the open unit disk.
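Numerically, such a contour integral can be approximated by the trapezoidal rule on \(|w|=1\). The sketch assumes \(F(z,w)=w-e^{a(zw-1)}\), whose only zero in the closed unit disk is w(z) for \(|z|<1\), and uses the argument-principle form \(w(z)=(2\pi \mathbf {i})^{-1}\oint _{|w|=1}wF'_w(z,w)/F(z,w)\,dw\). It cross-checks the result against the fixed-point iteration \(w\leftarrow e^{a(zw-1)}\), which is a contraction on the closed unit disk because the derivative \(aze^{a(zw-1)}\) has modulus at most \(a<1\) there. The function names are hypothetical.

```python
import cmath
import math


def w_by_contour(z: complex, a: float, n_nodes: int = 256) -> complex:
    """Trapezoidal rule for (2*pi*i)^{-1} times the integral of w F'_w / F over |w| = 1."""
    total = 0j
    for k in range(n_nodes):
        w = cmath.exp(2j * math.pi * k / n_nodes)   # node on the unit circle
        e = cmath.exp(a * (z * w - 1))
        f = w - e                                    # F(z, w)
        df = 1 - a * z * e                           # partial derivative of F in w
        total += w * w * df / f                      # dw = i w dtheta gives w^2 F'/F / (2 pi) dtheta
    return total / n_nodes


def w_by_iteration(z: complex, a: float, n_iter: int = 200) -> complex:
    """Fixed-point iteration w <- e^{a(zw-1)}, a contraction on the closed unit disk."""
    w = 0j
    for _ in range(n_iter):
        w = cmath.exp(a * (z * w - 1))
    return w


z, a = 0.3 + 0.4j, 0.5
print(abs(w_by_contour(z, a) - w_by_iteration(z, a)))  # agreement near rounding level
```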

Let \(|w|=1\) and \(0<r<1\) be fixed. Let us consider the function w(z) in a disk \(|z|\leqslant r\). Since

the function \(wF'_w(z,w)/F(z,w)\) is analytic in z inside the open disk \(|z|<r\) and has a uniformly bounded absolute value, being a ratio of two functions continuous in both variables on the closed set \(\{(z,w):|z|\leqslant r, |w|=1\}\) with a non-vanishing denominator. By a corollary of Vitali's theorem, w(z) is an analytic function of z in the open disk \(|z|<r\), and hence, since \(r<1\) is arbitrary, in the open disk \(|z|<1\).

From inequality (4) it follows that

so that the integral can be represented by a series:

Using Cauchy's integral formula, we get

So,

The convergence radius R is found from

Now let us prove that the sum of the series at \(z=1\) equals \(w(1)=1\). A power series defines a continuous function inside its disk of convergence. Here we restrict ourselves to real values of z and \(w>0\). Then, taking logarithms in \(w=e^{a(wz-1)}\),
\[z=\frac{a+\ln w}{aw}.\]

In a neighborhood of \(w=1\) this is a continuous, monotonically increasing function for \(0<w<e^{1-a}\), and it takes the value \(z=1\) at \(w=1\). Hence its inverse function takes values \(w<1\) for \(z<1\) and the value \(w=1\) for \(z=1\).
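For real arguments, the inverse relation \(z=(a+\ln w)/(aw)\), obtained by taking logarithms in \(w=e^{a(wz-1)}\), can be inspected numerically. The sketch below (hypothetical helper names) checks that \(z(1)=1\), that the maximum value at \(w=e^{1-a}\) equals the radius \(a^{-1}e^{a-1}\) from Lemma 1, and inverts the relation by bisection on \((0,1]\) to show that \(w<1\) when \(z<1\).

```python
import math


def z_of_w(w: float, a: float) -> float:
    """Real inverse relation of w = e^{a(wz-1)}: z = (a + ln w) / (a w), for w > 0."""
    return (a + math.log(w)) / (a * w)


def w_of_z(z: float, a: float, n_iter: int = 200) -> float:
    """Invert z_of_w on (0, 1] by bisection; valid for real z <= 1 since z_of_w increases there."""
    lo, hi = 1e-300, 1.0          # z_of_w(lo) is hugely negative, z_of_w(1) = 1
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if z_of_w(mid, a) < z:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


a = 0.5
print(z_of_w(1.0, a))                                     # 1.0
print(z_of_w(math.exp(1 - a), a), math.exp(a - 1) / a)    # both ≈ 1.2131
w = w_of_z(0.8, a)
print(w, abs(w - math.exp(a * (w * 0.8 - 1))))            # w < 1, residual near rounding level
```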

Proof

(of Theorem 3). 1) Necessity. Let us assume that the stationary probability distribution exists. By taking it as the initial probability distribution, we guarantee the existence of the limits

equal to the stationary probabilities. Let r(j) be the solution of \(r\oplus 1=j\). To obtain equations for the stationary probability generating functions, we can omit the indices i and \(i+1\) in the equations of Theorem 2. Substituting there \(w=w(z)\) from Lemma 1, where \(a=a_j\) and \(b=b_j\), and denoting

we get

Summing Eqs. (5) and (6) over \(r=1\), 2, ..., m yields

In the left neighborhood of \(z=1\) (on the real axis), we have the Taylor expansions

Substituting these expansions into (7) and collecting terms, we get

Dividing by \((z-1)\) and letting z tend to 1 from the left, we get

Substituting \(z=1\) into (5) and (6) leads to \(\varPsi _j(1,1;r)=m^{-1}\) for all \(r=1\), 2, ..., m. So, we finally come to

Taking into account that \(\ell _j-x-n+\frac{na}{1-a}>0\) for those x and n that occur in the summation, we conclude that, for the existence of a stationary probability distribution, it is necessary that

2) Sufficiency. Let us assume that Inequality (3) holds, but no stationary probability distribution exists. Since all the states of the Markov chain are essential and belong to a single class of communicating states, one must have

for all x, y, and r. It follows that the sequence of mathematical expectations \(\mathbb E \kappa _{j,i}\), \(i=0\), 1, ..., grows without bound. We claim that, on the contrary, these mathematical expectations are bounded if the condition of the theorem holds.

Let us set up the initial probability distribution so that the probability generating functions \(\varPsi _{j,0}(z,w;r)\) are analytic in \((z,w)\in \mathbb C^2\). Then all the subsequent probability generating functions \(\varPsi _{j,i}(z,w;r)\), \(i=1\), 2, ..., admit analytic continuations to the whole of \(\mathbb C^2\). Consequently, the functions \(\varPsi _{j,i}(z,w(z);r)\) are analytic in the disk \(|z|<1+\varepsilon <1/(ae^{1-a})\) (i.e., inside the disk of convergence of the series w(z)) and satisfy the equations

Let us fix z with \(1<z<1+\varepsilon \), and let \(r\oplus m=r=j\). Then one has (\(A_j(x,n)\leqslant 1\)):

Since

the sequence

converges, and hence is bounded. At the same time, for all \(i=0\), 1, ... we have

It follows that, for this z, all the numbers \(\varPsi _{j,i}(z,w(z);r)\), \(r=1\), 2, ..., m, \(i=0\), 1, ..., are bounded by some constant \(C>0\). Then,

This contradiction proves the claim.

To solve Eqs. (5) and (6) for the functions \(\varPsi _j(z,w(z); r)\), \(r=1\), 2, ..., m, one needs to determine \(\ell _j(\ell _j+1)/2\) unknown constants \(A_j(x,n)\), where x and n are non-negative integers with \(0\leqslant x+n<\ell _j\). We get

Case 1. If \(\ell _j=1\), then the only unknown constant is \(A_j(0,0)\). Recalling that \(\varPsi _j(1,1;r)=1/m\) and expanding the terms \(z-e^{mb_j(zw(z)-1)}\) and \((1-z^{-1})\) in the left neighborhood of \(z=1\), we get

Case 2. If \(\ell _j >1\), we have \(\ell _j(\ell _j+1)/2>1\) unknown constants. Let us study the equation

It follows from the modified Rouché theorem [12] and Lemma 2 below that, when the stationarity condition (3) is fulfilled, this equation has exactly \(\ell _j-1\) zeros inside the unit disk \(|z|<1\).

Lemma 2

If inequality (3) is fulfilled, then \(|e^{b_j(zw(z)-1)}|<1\) for all \(|z|=1\), \(z\ne 1\).

Proof

Let \(z=e^{\mathbf {i}u}\), \(w(z)=Re^{\mathbf {i}\varphi }\), \(0\leqslant u<2\pi \), \(0\leqslant \varphi <2\pi \). Then

Its right-hand side equals \(e^{a(R e^{\mathbf {i}(u+\varphi )}-1)}\). By comparing moduli, we get

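We have \(R=1\) if and only if \(a\cos (u+\varphi )-a=0\), whence \(\cos (u+\varphi )=1\). But then \(\sin (u+\varphi )=0\), and since, by comparing arguments, \(aR\sin (u+\varphi )\) equals the argument \(\varphi \) of the complex number \(Re^{\mathbf {i}\varphi }\) modulo \(2\pi \), we obtain \(\varphi =0\). Finally, from \(1=\cos (u+\varphi )=\cos u\) we get \(u=0\). Thus \(|w(z)|<1\) for \(|z|=1\), \(z\ne 1\) (recall that \(|w(z)|\leqslant 1\) there by continuity), so that \(\mathrm {Re}\,(zw(z))<1\) and \(|e^{b_j(zw(z)-1)}|=e^{b_j(\mathrm {Re}\,(zw(z))-1)}<1\).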
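The conclusion of Lemma 2 can be checked numerically on a grid of the unit circle. The sketch computes w(z) by the fixed-point iteration \(w\leftarrow e^{a(zw-1)}\) (a contraction on the closed unit disk for \(0<a<1\)) and reports the largest values of \(|w(z)|\) and \(|e^{b(zw(z)-1)}|\) away from \(z=1\); the parameter values and function names are hypothetical.

```python
import cmath
import math


def w_fixed_point(z: complex, a: float, n_iter: int = 300) -> complex:
    """Solve w = e^{a(zw-1)} for |z| <= 1 by fixed-point iteration started at w = 0."""
    w = 0j
    for _ in range(n_iter):
        w = cmath.exp(a * (z * w - 1))
    return w


def max_moduli_on_circle(a: float, b: float, n_points: int = 720, u_min: float = 0.05):
    """Largest |w(z)| and |e^{b(z w(z) - 1)}| over z = e^{iu}, u in [u_min, 2*pi - u_min]."""
    max_w = max_e = 0.0
    for k in range(n_points + 1):
        u = u_min + k * (2 * math.pi - 2 * u_min) / n_points
        z = cmath.exp(1j * u)
        w = w_fixed_point(z, a)
        max_w = max(max_w, abs(w))
        max_e = max(max_e, abs(cmath.exp(b * (z * w - 1))))
    return max_w, max_e


print(max_moduli_on_circle(a=0.5, b=0.6))   # both values are strictly below 1
print(abs(w_fixed_point(1.0, 0.5) - 1))     # w(1) = 1 up to rounding
```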

Denote these zeros by \(\beta _1\), \(\beta _2\), ..., \(\beta _{\ell _j-1}\).

Theorem 4

If inequality (3) is fulfilled, then the following equations hold:

The number of linear equations given by Theorem 4 is less than the number of unknown constants. This is to be expected, since Eqs. (5) and (6) are not equivalent to the equations of Theorem 2: by substituting \(w=w(z)\) into them, we evidently lose an essential part of the information about the generating functions of interest. Moreover, once we obtain all \(A_j(x,n)\), \(0\leqslant x+n<\ell _j\), we still need to solve a functional equation relating \(\varPsi _{j}(z,w;r\oplus 1)\) to \(\varPsi _j(z,e^{a_j(zw-1)};r)\) in the polydisk \(\{(z,w):|z|\leqslant 1, |w|\leqslant 1\}\subset \mathbb C^2\).
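For reference, the number \(\ell _j(\ell _j+1)/2\) of unknown constants \(A_j(x,n)\) is the number of pairs of non-negative integers \((x,n)\) with \(x+n<\ell _j\), which a short enumeration confirms (the helper name is hypothetical):

```python
def boundary_pairs(ell: int) -> list[tuple[int, int]]:
    """All (x, n) with integers x, n >= 0 and x + n < ell: the indices of the constants A_j(x, n)."""
    return [(x, n) for x in range(ell) for n in range(ell - x)]


for ell in range(1, 6):
    assert len(boundary_pairs(ell)) == ell * (ell + 1) // 2
print(boundary_pairs(1))  # [(0, 0)]: the single constant A_j(0, 0) of Case 1
print(boundary_pairs(2))  # [(0, 0), (0, 1), (1, 0)]
```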

Since the main functional transformation (2) for the queue length produces a random walk with reflection at zero, a process studied many times under a variety of assumptions, it is of interest to compare the assumptions on the input processes (input flows and service processes) made in our work and in classical works. Usually (cf. [13]) it is assumed that the sequence (in our notation)

is a stationary process. In our case, this would imply not only that the input sequence \(\{\eta _{j,i}; i=-1,0, 1, \ldots \}\) is stationary, but also that the initial server state \(\varGamma _0\) is random with the uniform probability distribution on \(\varGamma \). Our exposition leaves more freedom for the input flow and the initial server state.

4 Conclusion

It was shown in this work that discrete-time models of queueing systems with autoregressive input processes may lead to a challenging problem in the theory of several complex variables, formulated in terms of multivariate probability generating functions. This problem still awaits its solution. Nevertheless, the necessary and sufficient conditions on the parameters of the queueing system that guarantee the existence of the stationary probability distribution can be found by a careful analysis of these (as yet unsolved) equations. For the polling queueing system under study, these conditions are easily verifiable and have a natural physical interpretation in terms of the mean values of basic quantities such as the numbers of arrivals and the saturation flow intensity.

References

Klimow, G.P.: Bedienungsprozesse. Springer, Basel (1979)

Grandell, J.: Doubly Stochastic Poisson Processes. Springer, Berlin, Heidelberg, New York (1976). https://doi.org/10.1007/BFb0077758

Lucantoni, D.M.: New results on the single server queue with a batch Markovian arrival process. Commun. Stat.: Stochast. Models 7(1), 1–46 (1991)

Jagerman, D.L., Melamed, B., Willinger, W.: Stochastic modeling of traffic processes. In: Dshalalow, J.H. (ed.) Frontiers in Queueing: Models and Applications in Science and Engineering, pp. 271–320 (1997)

Hwang, G.U., Sohraby, K.: On the exact analysis of a discrete-time queueing system with autoregressive inputs. Queueing Syst. 43(1–2), 29–41 (2003)

Leontyev, N.D., Ushakov, V.G.: Analysis of a queueing system with autoregressive arrivals. Inform. Appl. 8(3), 39–44 (2014)

Fedotkin, M.A.: Incomplete description of flows of non-homogeneous requests. In: Queueing Theory, pp. 113–118. MGU-VNIISI, Moscow (1981). (in Russian)

Heinen, A.: Modelling Time Series Count Data: An Autoregressive Conditional Poisson Model (2003). https://dx.doi.org/10.2139/ssrn.1117187

Vishnevskii, V.M., Semenova, O.V.: Mathematical methods to study the polling systems. Autom. Remote Control 67(2), 173–220 (2006)

Zorine, A.: Stochastic model for communicating retrial queuing systems with cyclic control in random environment. Cybern. Syst. Anal. 49(6), 890–897 (2013)

Zorine, A.V.: Study of a service process by a loop algorithm by means of a stopped random walk. Commun. Comput. Inf. Sci. 1109, 121–135 (2019)

Klimenok, V.: On the modification of Rouche’s theorem for the queueing theory problems. Queueing Syst. 38, 431–434 (2001)

Borovkov, A.A.: Stochastic Processes in Queueing Theory. Springer, New York (1976). https://doi.org/10.1007/978-1-4612-9866-3


© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Zorine, A.V. (2022). On the Existence of the Stationary Distribution in a Cyclic Polling System with Autoregressive Poisson Inputs. In: Dudin, A., Nazarov, A., Moiseev, A. (eds) Information Technologies and Mathematical Modelling. Queueing Theory and Applications. ITMM 2021. Communications in Computer and Information Science, vol 1605. Springer, Cham. https://doi.org/10.1007/978-3-031-09331-9_8


DOI: https://doi.org/10.1007/978-3-031-09331-9_8


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-09330-2

Online ISBN: 978-3-031-09331-9

eBook Packages: Computer Science, Computer Science (R0)