Abstract

Glioma is a common malignant brain tumor with heterogeneous survival among patients. The isocitrate dehydrogenase (IDH) gene mutation provides critical diagnostic and prognostic value for glioma, so it is of crucial significance to predict IDH mutation non-invasively from pre-treatment MRI. Machine learning/deep learning models show reasonable performance in predicting IDH mutation using MRI. However, most models neglect the systematic brain alterations caused by tumor invasion, even though widespread infiltration along white matter tracts is a hallmark of glioma. The structural brain network provides an effective tool to characterize brain organisation, and it could be captured by graph neural networks (GNNs) to predict IDH mutation more accurately.

Here we propose a method to predict IDH mutation using a GNN based on the structural brain networks of patients. Specifically, we first construct a network template from healthy subjects, consisting of atlases of edges (white matter tracts) and nodes (cortical/subcortical brain regions) that provide regions of interest (ROIs). Next, we employ autoencoders to extract latent multi-modal MRI features from the edge and node ROIs in patients, which are used to train a GNN architecture for predicting IDH mutation. The results show that the proposed method outperforms baseline 3D-CNN and 3D-DenseNet models. In addition, model interpretation suggests that the model can identify the tracts infiltrated by tumor, consistent with prior clinical knowledge. In conclusion, integrating brain networks with GNNs offers a new avenue to study brain lesions using computational neuroscience and computer vision approaches.

Y. Wei and Y. Li contributed equally.

1 Introduction

1.1 Significance of Predicting IDH Mutational Status

Gliomas are common malignant brain tumors with variable prognosis [16]. The mutation status of the isocitrate dehydrogenase (IDH) genes is one of the most important biomarkers for the diagnosis and prognosis of gliomas, with IDH-mutant tumors tending to have a better prognosis than IDH wild-type tumors [29]. Owing to this clinical value, IDH mutation has been established as a landmark molecular marker in glioma, and the World Health Organization classification of tumors of the Central Nervous System recommends its routine assessment in glioma patients [13].

Currently, the most widely used approaches to determine IDH mutation status, i.e., immunohistochemistry and targeted gene sequencing, rely on tumor samples [13] and therefore cannot be applied to patients who are not suitable for tumor resection or biopsy. Further, because these assays are usually time-consuming and expensive, they are not available in some institutions.

Meanwhile, the radiogenomic approach has shown promise in predicting molecular markers from radiological images. Mounting evidence supports the feasibility of predicting IDH mutation status using pre-operative MRI [4, 6, 11]. The most commonly used MRI sequences include pre-contrast T1, post-contrast T1, T2, and T2-weighted fluid-attenuated inversion recovery (FLAIR). Integrating the quantitative information from multi-modal MRI promises a non-invasive approach to characterize glioma and predict IDH mutation for better treatment planning and prognostication [9, 10].

1.2 Brain Structural Networks

The tissue of the human brain is divided into grey matter and white matter. The grey matter, which forms the cerebral cortex on the brain surface, can be parcellated into cortical/subcortical regions, e.g., based on cortical gyri and sulci. Such parcellation allows a more precise association between brain function and cortical structure. The white matter beneath the cortex contains the axons connecting these cortical/subcortical regions. The structural brain network is a mathematical simplification of the connectivity among the cortical/subcortical regions [3], in which the nodes represent the cortical/subcortical regions and the edges are the connecting white matter tracts.
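
As a minimal illustration of this abstraction, a structural brain network can be stored as a symmetric adjacency matrix whose entry (i, j) is 1 when a white matter tract connects regions i and j. The region indices and tracts below are hypothetical toy values, not taken from the AAL atlas.

```python
import numpy as np


def build_adjacency(n_nodes, edges):
    """Return a symmetric binary adjacency matrix for a structural brain network.

    n_nodes: number of cortical/subcortical regions (nodes).
    edges: iterable of (i, j) region-index pairs, one per white matter tract.
    """
    adj = np.zeros((n_nodes, n_nodes), dtype=np.int8)
    for i, j in edges:
        if i == j:
            raise ValueError("a tract must connect two different regions")
        adj[i, j] = adj[j, i] = 1  # tracts are treated as undirected connections
    return adj


# Hypothetical toy network: 4 regions and 3 tracts.
adj = build_adjacency(4, [(0, 1), (1, 2), (1, 3)])
degree = adj.sum(axis=1)  # number of tracts attached to each region
print(degree.tolist())  # [1, 3, 1, 1]
```

Region 1 acts as a small hub here; degree and similar graph measures are what network analyses of lesioned brains typically inspect.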

Accumulating research has reported the relevance of structural brain networks in neurological diseases, including stroke, traumatic brain injury, and brain tumors [5, 12, 19, 27]. Moreover, evidence shows that glioma cells tend to invade along white matter pathways [26] and infiltrate the whole brain [24, 27]. Investigating structural brain networks could therefore offer a tool to study glioma invasion in both the tumor core and normal-appearing brain regions. Further, a previous study revealed that IDH mutation could be associated with different invasive phenotypes of glioma [18]. We therefore hypothesize that employing structural brain networks could add value in predicting IDH mutation status. In particular, incorporating prior knowledge of brain structure and anatomy could yield a more robust prediction model.

1.3 Graph Neural Networks

Graph neural networks (GNNs) are a branch of deep learning specialized for data with irregular structure, such as graphs with varying numbers of edges and arbitrary node orderings [14]. Unlike traditional convolutional neural networks (CNNs), which slide convolution kernels over grid-structured data, GNNs aggregate information into each node from its neighbors and can simultaneously learn a representation of the whole graph. By applying GNNs to structural brain networks, the topological information contained in these networks could be effectively exploited, thereby incorporating prior knowledge of brain organization and capturing critical information on tumor invasion at the whole-brain level.
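
The neighbor-aggregation idea can be sketched in a few lines of NumPy. This is a generic message-passing layer (a node's own features plus the sum of its neighbors' features, followed by a ReLU) with a sum readout over nodes, shown only to illustrate the principle; it is not the operator used in this paper, and the weights are fixed toy values rather than learned ones.

```python
import numpy as np


def message_passing_layer(adj, h, w_self, w_nbr):
    """Generic GNN layer (illustrative, not the paper's operator).

    adj: (N, N) adjacency matrix; h: (N, F_in) node features;
    w_self, w_nbr: (F_in, F_out) weight matrices (learned in practice).
    """
    neighbour_sum = adj @ h  # row i = sum of the features of nodes adjacent to i
    return np.maximum(0.0, h @ w_self + neighbour_sum @ w_nbr)


def graph_readout(h):
    """Whole-graph representation that does not depend on node order: sum over nodes."""
    return h.sum(axis=0)


# Toy path graph 0 - 1 - 2 with one feature per node and unit weights.
adj = np.array([[0, 1, 0],
                [1, 0, 1],
                [0, 1, 0]], dtype=float)
h = np.array([[1.0], [2.0], [3.0]])
w_self = np.array([[1.0]])
w_nbr = np.array([[1.0]])
h1 = message_passing_layer(adj, h, w_self, w_nbr)
print(h1.ravel().tolist())  # [3.0, 6.0, 5.0]
```

Because the layer only uses adjacency, relabelling the nodes permutes the rows of the output without changing the summed readout, which is what lets GNNs handle arbitrary node orderings.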

1.4 Related Work

Current methods of predicting IDH mutation status are radiomics/machine learning-based, deep learning-based, or a combination of both. Radiomics/machine learning-based methods extract high-dimensional handcrafted features from the MRI, e.g., tumor intensity, shape, and texture, to train machine learning models that predict molecular markers, tumor grades, or patient survival [6]. Deep learning-based approaches provide end-to-end models without pre-defined imaging features [11]. Other methods integrate radiomic features into a deep neural network to enhance prediction performance [4]. Despite reasonable prediction accuracy, most of these methods are driven mainly by computer vision tasks and do not consider the systematic alteration of brain organization during tumor invasion. Incorporating prior knowledge from neuroscience shows promise for improving prediction models.

1.5 Proposed Methods

Here we propose an approach that uses a GNN to predict IDH mutation status, based on structural brain networks generated from multi-modal MRI and prior human brain atlases. Our contributions include:

- A method to incorporate the prior knowledge of brain atlases with anatomical MRI to generate structural brain networks.
- A novel GNN architecture with a specialized graph convolutional operator for aggregating multi-dimensional latent features of the multi-modal MRI.
- To the best of our knowledge, this is the first study that applies a GNN to multi-modal MRI to predict the IDH mutation status of glioma.

2 Methods

2.1 Datasets

This study included the pre-operative multi-modal MRI (pre-contrast T1, post-contrast T1, T2, and FLAIR) of 389 glioma patients. MRI scans of 274 patients were downloaded from The Cancer Imaging Archive (TCIA) website [17, 20, 21], and those of 115 patients were obtained from an in-house cohort. Seventeen of the 389 patients with missing IDH mutation status or incomplete MRI modalities were excluded. Of the 372 included patients, 103 are IDH mutant and 269 are IDH wild-type.
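
A quick arithmetic check, using only the numbers stated above, confirms that the cohort counts are internally consistent and quantifies the class imbalance: only about 28% of the included patients are IDH mutant, which is the imbalance the weighted loss in Sect. 2.4 is meant to mitigate.

```python
tcia, in_house, excluded = 274, 115, 17
mutant, wild_type = 103, 269

total = tcia + in_house        # patients with pre-operative MRI
included = total - excluded    # patients kept for analysis
assert mutant + wild_type == included  # class counts cover the included cohort

mutant_fraction = mutant / included
print(total, included, round(mutant_fraction, 3))  # 389 372 0.277
```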

2.2 Imaging Pre-processing

We processed the multi-modal MRI following a standard pipeline [2]. First, the T1, T2, and FLAIR images were co-registered to the post-contrast T1 using FMRIB's Linear Image Registration Tool (FLIRT) [8]. Then, brain extraction was performed on all MRI modalities to remove the skull using the Brain Extraction Tool (BET) in the FMRIB Software Library (FSL) [7, 22]. We also performed histogram matching [15] and voxel smoothing with SUSAN noise reduction [23]. A neurosurgeon and a researcher manually corrected the brain masks, and their corrections were cross-validated using the Dice score. Finally, all modalities were non-linearly co-registered to the MNI152 standard space, i.e., the MNI-152-T1-2MM-brain template provided by FSL, using Advanced Normalization Tools (ANTs) [1] (Fig. 1A).
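
Two of these steps are easy to make concrete. The NumPy sketch below (function names are hypothetical) computes the Dice coefficient used to compare two raters' brain masks. It also implements a simplified, landmark-based piecewise-linear intensity mapping in the spirit of the standardization method of [15]. The mapping assumes the standard-scale landmarks have already been learned, and it clamps intensities outside the landmark range instead of extrapolating. It is an illustration, not the pipeline actually used.

```python
import numpy as np


def dice(mask_a, mask_b):
    """Dice similarity coefficient 2|A & B| / (|A| + |B|) of two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # convention: two empty masks agree perfectly
    return 2.0 * np.logical_and(a, b).sum() / denom


# Assumed landmark percentiles (a common choice, not taken from the paper).
PERCENTILES = (1, 10, 20, 30, 40, 50, 60, 70, 80, 90, 99)


def landmark_standardize(image, brain_mask, std_landmarks, percentiles=PERCENTILES):
    """Map the image's intensity percentiles (inside the brain) onto standard landmarks.

    Intensities between landmarks are mapped linearly; values below the first or
    above the last landmark are clamped by np.interp (a simplification of [15]).
    """
    src = np.percentile(image[brain_mask], percentiles)
    return np.interp(image, src, std_landmarks)
```

For example, two masks that each contain two voxels and overlap in one voxel give a Dice score of 0.5.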

2.3 Constructing Patient Structural Brain Networks

Brain Network Template. We leveraged a brain network template derived from healthy subjects to construct brain networks in lesioned brains [19]. First, we used prior brain atlases of healthy subjects as the template of brain networks, generating regions of interest (ROIs) for characterizing the brain networks of patients based on multi-modal MRI. Specifically, we used the Automated Anatomical Labelling (AAL) atlas [25], which includes 90 cortical and subcortical brain regions, as the node ROIs (Fig. 1B). Further, we generated a brain connectivity atlas from ten healthy subjects scanned with high-resolution diffusion MRI to derive the edge ROIs of the structural brain networks (Fig. 1C), using an approach similar to [5, 28]. In brief, pairwise tractography among the 90 regions of the AAL atlas was first performed in the healthy subjects, and the resulting tract pathways were co-registered to the MNI152 standard space. Next, for each edge between two nodes, the corresponding tracts of all healthy subjects were averaged. Finally, the top 5% of voxels by tract density were retained and binarized to generate robust edge ROIs.
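
The averaging-and-thresholding steps can be sketched for a single edge as follows (NumPy; the tractography itself is out of scope, and the function names are hypothetical). Two details are assumptions. First, the 5% cut-off is computed over voxels with nonzero mean density. Second, the Fig. 1 caption's criterion of edges existing "in 9 of 10 healthy subjects" is read as at least 9 subjects with a nonempty tract.

```python
import numpy as np


def edge_roi(tract_maps, top_fraction=0.05):
    """Binary ROI for one edge from subject-level tract-density maps.

    tract_maps: (n_subjects, X, Y, Z) densities of the tract linking one pair of
    AAL regions, already in MNI152 space. Assumption: the top-5% cut-off is taken
    over voxels with nonzero mean density.
    """
    mean_density = tract_maps.mean(axis=0)  # average across healthy subjects
    nonzero = mean_density[mean_density > 0]
    if nonzero.size == 0:
        return np.zeros(mean_density.shape, dtype=bool)
    cutoff = np.quantile(nonzero, 1.0 - top_fraction)
    return mean_density >= cutoff  # retain the densest voxels, binarized


def reconstructed_in(tract_maps, min_subjects=9):
    """Assumed edge-retention rule: tract nonempty in at least `min_subjects` subjects."""
    found = (tract_maps.reshape(tract_maps.shape[0], -1) > 0).any(axis=1)
    return int(found.sum()) >= min_subjects
```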

Fig. 1. Study workflow. Upper: the pipeline of constructing patient brain networks. A: Patient MRIs are pre-processed and co-registered to the atlas space. B: The AAL atlas of 90 ROIs is used as the node atlas. C: The edge atlas is generated by performing tractography among the 90 ROIs on the diffusion MRI of healthy subjects. D & E: Multi-modal MRI voxels within the node/edge ROIs are extracted and concatenated into voxel vectors to characterize each node/edge. The 90 nodes are from the AAL atlas, while the 2309 edges are those present in the tractography of 9 of the 10 healthy subjects. F & G: Two autoencoders are trained using the edge and node voxel vectors. H & I: The encoders of the trained autoencoders are used to extract the low-dimensional latent features z from the high-dimensional node/edge voxel vectors, respectively. J: The latent node/edge features are then rearranged into graph format as the input of the GNN. K: The graph convolutional neural network consists of three hidden graph convolutional layers, one graph embedding layer, and two fully-connected (FC) layers.

Latent Features of Nodes and Edges from Autoencoders. MRI voxels within the ROIs of the node and edge atlases across the whole brain were extracted and concatenated into voxel vectors (Fig. 1D & E). We then used two autoencoders to extract latent features from the node and edge voxel vectors, respectively. The vector length was set to 2500 (voxels) \(\times \) 4 (modalities) = 10000; for edges and nodes with fewer voxels, the vectors were zero-padded. The patient cohort was shuffled and split at an 80:20 ratio into training and testing data. The two autoencoders were trained on the edge and node voxel vectors of the training data (Fig. 1F & G). Finally, the latent features of the edge and node voxels were derived, reducing the dimension of each edge or node vector from 10000 to 12 (Fig. 1H & I). These 12 latent features were used as the input of the GNN (Fig. 1J). The logistic sigmoid function was used as the transfer function for both the encoder and the decoder, L2 regularization with a coefficient of 0.001 was applied, and 'msesparse' was set as the loss function.
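
A minimal NumPy sketch of the per-ROI voxel vector and of the encoder's forward pass follows. Several choices are assumptions made for illustration: the voxels are concatenated modality by modality, ROIs larger than 2500 voxels raise an error, and the weights are random and untrained. In the described method, the encoder weights come from training each autoencoder on the training split only.

```python
import numpy as np

MAX_VOXELS = 2500   # voxels per ROI, as stated in the text
N_MODALITIES = 4    # pre-contrast T1, post-contrast T1, T2, FLAIR
LATENT_DIM = 12     # latent features per node/edge


def voxel_vector(volumes, roi_mask):
    """Concatenate the ROI voxels of each modality into one zero-padded vector.

    volumes: (4, X, Y, Z) co-registered modalities; roi_mask: bool (X, Y, Z).
    Assumptions: modality-by-modality concatenation; larger ROIs are rejected.
    """
    n = int(roi_mask.sum())
    if n > MAX_VOXELS:
        raise ValueError(f"ROI has {n} voxels, more than {MAX_VOXELS}")
    vec = np.zeros((N_MODALITIES, MAX_VOXELS))
    vec[:, :n] = volumes[:, roi_mask]  # (4, n) intensities, remainder stays zero
    return vec.ravel()                 # length 4 * 2500 = 10000


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def encode(vec, w_enc, b_enc):
    """Encoder half of a one-hidden-layer autoencoder with a logistic sigmoid transfer."""
    return sigmoid(w_enc @ vec + b_enc)


# Toy usage with random data and untrained weights (shapes only).
rng = np.random.default_rng(0)
volumes = rng.random((4, 20, 20, 20))
mask = np.zeros((20, 20, 20), dtype=bool)
mask[:5, :5, :5] = True  # hypothetical 125-voxel ROI
vec = voxel_vector(volumes, mask)
w_enc = rng.normal(scale=0.01, size=(LATENT_DIM, vec.size))
z = encode(vec, w_enc, np.zeros(LATENT_DIM))
print(vec.shape, z.shape)  # (10000,) (12,)
```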

2.4 Predicting IDH Mutation Status Using GNN

The patient brain networks constructed above were used to train the GNN, with the multi-modal MRI latent features as inputs. After the 80:20 split into training and testing data, the training data were further split at an 80:20 ratio for cross-validation. The proposed GNN consists of three graph convolutional layers similar to the one defined in [14], one node-to-graph embedding layer, and two fully connected feed-forward layers (Fig. 1K). We used a binary cross-entropy loss and the Adam optimizer.
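
The two-level 80:20 split can be sketched as below. The seed and the rounding of split sizes are hypothetical choices. The split is not stratified by IDH status because the text only states that the cohort was shuffled.

```python
import numpy as np


def nested_split(n_patients, seed=0):
    """Shuffle patient indices, hold out 20% for testing, then split the remaining
    training data 80:20 into training and validation subsets (hypothetical rounding)."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_patients)
    n_test = round(0.2 * n_patients)
    test, train_all = idx[:n_test], idx[n_test:]
    n_val = round(0.2 * len(train_all))
    val, train = train_all[:n_val], train_all[n_val:]
    return train, val, test


train, val, test = nested_split(372)  # 372 included patients
print(len(train), len(val), len(test))  # 238 60 74
```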

The graph convolutional operator is defined as follows:

where \(\mathbf {x_i^{\prime }}\) denotes the features of node i after convolution, and \( \mathbf {\Theta }_1\) and \( \mathbf {\Theta }_2\) denote the trainable network weights. \(\cdot \) denotes multiplication. \(e_{j,i,z}\) represents the zth edge feature from source node j to target node i. \(j\in \mathcal {N}(i)\) denotes all indices of nodes j connected to node i with nonzero edge features. Z denotes the size of the latent edge features.
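
The definitions above admit more than one concrete operator. The following NumPy sketch implements one hypothetical reading. It takes the neighbourhood aggregation of [14] (own-feature term plus a sum over neighbours), scales each neighbour's message by an edge feature, \(\mathbf{x}_i^{(z)} = \mathbf{\Theta}_1 \mathbf{x}_i + \sum_{j\in\mathcal{N}(i)} e_{j,i,z} \cdot \mathbf{\Theta}_2 \mathbf{x}_j\), applies this separately for each of the Z edge-feature channels, and concatenates the Z outputs. Treat it as an illustration of how multi-dimensional edge features can enter the aggregation, not as the authors' exact definition.

```python
import numpy as np


def graph_conv(x, edge_index, edge_attr, theta1, theta2):
    """Edge-feature-weighted graph convolution (hypothetical reading of the operator).

    x: (N, F) node features; edge_index: (2, E) rows = (source j, target i);
    edge_attr: (E, Z) latent edge features e_{j,i,z}; theta1, theta2: (F, F_out).
    Returns (N, Z * F_out): one aggregation per edge channel z, concatenated.
    """
    src, dst = edge_index
    self_term = x @ theta1      # Theta1 x_i for every node, (N, F_out)
    messages = x[src] @ theta2  # Theta2 x_j for every edge, (E, F_out)
    outputs = []
    for z in range(edge_attr.shape[1]):
        agg = np.zeros_like(self_term)
        # Scatter-add e_{j,i,z} * Theta2 x_j into each target node i.
        np.add.at(agg, dst, edge_attr[:, z:z + 1] * messages)
        outputs.append(self_term + agg)
    return np.concatenate(outputs, axis=1)


# Toy usage: 90 nodes with 12 latent features and 3 hypothetical undirected edges.
rng = np.random.default_rng(0)
x = rng.random((90, 12))
pairs = np.array([[0, 1], [1, 2], [2, 3]]).T
edge_index = np.concatenate([pairs, pairs[::-1]], axis=1)  # store both directions
edge_attr = np.tile(rng.random((3, 12)), (2, 1))          # same features per direction
out = graph_conv(x, edge_index, edge_attr, rng.random((12, 8)), rng.random((12, 8)))
print(out.shape)  # (90, 96)
```

Storing each tract in both directions makes the directed formulation behave like an undirected brain network.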

The graph embedding operator is defined as follows:

where \(\mathcal {G}^Z\) denotes the graph embedding of size Z computed over all nodes of the graph, \( \mathbf {\Theta }\) denotes the trainable network weights, N denotes the number of nodes in the graph, and Z denotes the size of the latent node features.
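
Since the form of \(\mathbf{\Theta}\) and of the pooling over nodes is not spelled out in the surrounding text, the sketch below assumes one simple form of a node-to-graph embedding layer: a shared linear projection of each node followed by a sum over all N nodes. Other readouts (mean, max, or attention pooling) are equally plausible, and this should not be read as the paper's definition. The feature widths are arbitrary.

```python
import numpy as np


def graph_embedding(x, theta):
    """Hypothetical node-to-graph readout.

    x: (N, F) node features after the graph convolutions; theta: (F, Z) weights.
    Every node is projected with the same weights and the results are summed over
    all N nodes, giving a length-Z graph vector that ignores node order.
    """
    return (x @ theta).sum(axis=0)


rng = np.random.default_rng(0)
x = rng.random((90, 16))      # 90 AAL nodes; feature width 16 is arbitrary
theta = rng.random((16, 12))  # Z = 12, arbitrary here
g = graph_embedding(x, theta)
print(g.shape)  # (12,)
```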

Random edge dropping was applied to augment the data during training. A weighted loss was used to mitigate the effect of class imbalance. Learning rate decay was used to stabilize the training process. Early stopping, weight decay, and dropout layers after the fully connected layers were used to prevent over-fitting.
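
Two of these training devices can be sketched in NumPy (names are hypothetical). Edge dropping here removes directed edges independently; for an undirected brain network one might instead drop both directions of a tract together. The positive-class weight \(n_{\text{wild-type}} / n_{\text{mutant}}\) is one common choice and an assumption, since the exact weighting scheme is not stated. Computing it from the training split rather than the whole cohort would avoid using test labels.

```python
import numpy as np


def drop_edges(edge_index, edge_attr, p, rng):
    """Training-time augmentation: remove each (directed) edge with probability p."""
    keep = rng.random(edge_index.shape[1]) >= p
    return edge_index[:, keep], edge_attr[keep]


def weighted_bce(y_true, p_pred, pos_weight, eps=1e-7):
    """Binary cross-entropy with positive (IDH-mutant) samples weighted by pos_weight."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)
    losses = -(pos_weight * y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    return float(losses.mean())


# Assumed weighting: n_negative / n_positive, using the cohort counts of Sect. 2.1.
pos_weight = 269 / 103
```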

2.5 Benchmark Models

We adopted a three-dimensional Densely Connected Convolutional Network (3D-DenseNet) (Fig. 2A) and a three-dimensional convolutional neural network (3D-CNN) (Fig. 2B) as benchmarks. Specifically, the 3D-DenseNet follows the classic 121-layer architecture described in [11], while the 3D-CNN is a traditional network with four hidden convolutional layers with batch normalization and pooling, followed by a max-pooling layer and an output layer. Data were split using the same method as for the GNN model. As in the GNN settings, a weighted loss, learning rate decay, and early stopping were applied, together with the same loss function and optimiser. Two experiments with different inputs were conducted: whole-brain MRI, and MRI voxels inside the tumor ROIs (contrast-enhancing tumor core and necrosis) generated according to [2].

Fig. 2. Architecture of the benchmark models. A: The classic three-dimensional Densely Connected Convolutional Network (3D-DenseNet) consists of four convolutional layers and four densely connected blocks. B: The three-dimensional convolutional neural network (3D-CNN) consists of four hidden convolutional layers with max-pooling and batch normalization, one global pooling layer followed by dropout, and one fully connected dense layer.

3 Results and Discussion

3.1 Model Performance

Our experiments show that the proposed model performs better than the baseline models (Table 1) in both cross-validation and testing. Interestingly, the benchmark models with tumor voxels as inputs perform better than those with the whole brain as inputs, suggesting that the extensive brain regions beyond the local tumor may bias the models. Notably, our proposed GNN model, which leverages the brain network generated from the prior atlas and whole-brain MRI, performs better than all the benchmark models. This may suggest that incorporating prior knowledge of brain networks helps deep learning models capture more informative features of tumor invasion than either the local tumor or the whole brain alone.

3.2 Model Interpretation

To interpret the learning process of the GNN model, we applied GNNExplainer [30]. GNNExplainer outputs a probability score indicating the importance of each edge in the prediction task, together with a compact subnetwork of the input network. This is achieved by maximizing the mutual information between the GNN's prediction and the distribution of possible subnetworks. Only edges with probability scores greater than \(50\%\) were retained in the subnetworks.
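
The post-processing of the explainer output can be sketched as follows. It keeps the edges above a probability threshold and counts, for each voxel, how many retained tracts cross it, which is the tract density shown in Fig. 3. The explainer itself is not reimplemented; the probabilities below are hypothetical inputs, and the function names are illustrative.

```python
import numpy as np


def important_edges(edge_prob, threshold=0.5):
    """Indices of edges whose explainer importance probability exceeds threshold."""
    return np.flatnonzero(np.asarray(edge_prob, dtype=float) > threshold)


def tract_density(edge_rois, kept):
    """Per-voxel count of retained tracts, i.e. how many kept edge ROIs cover each voxel.

    edge_rois: bool array (E, X, Y, Z) of binary edge ROIs from the edge atlas.
    """
    return edge_rois[kept].sum(axis=0)


edge_prob = np.array([0.2, 0.6, 0.95, 0.5])     # hypothetical explainer output, 4 edges
print(important_edges(edge_prob).tolist())       # [1, 2]
print(important_edges(edge_prob, 0.9).tolist())  # [2]
```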

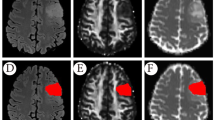

Overall, we observe that IDH wild-type tumors are associated with a wider distribution of edge invasion captured by the GNN model. Figure 3 presents two typical cases, one IDH mutant and one IDH wild-type, showing the distribution of the key white matter tracts (edges) that are important for the prediction. In line with the prior knowledge that IDH wild-type tumors generally cause more widespread invasion, these interpretation results further support the usefulness of the proposed GNN model.

Fig. 3. Examples of IDH mutant and wild-type. A: IDH mutant. B: IDH wild-type. For both patients, the left panels show the T1-weighted images and the right panels show the output of GNNExplainer, illustrating the voxel distribution of edges that have over 50% and 90% probability of importance in IDH mutation prediction. The tract density of a voxel is defined as the number of tracts crossing the voxel.

4 Conclusion

In this paper, we propose a method to generate brain networks from multi-modal MRI and to predict IDH mutation status using a GNN applied to the generated brain networks. Numerical results demonstrate that the proposed method outperforms the benchmark methods. In future work, radiomic approaches could be used to extract representative features from the node and edge ROIs. Furthermore, dedicated end-to-end GNN models could be developed that take the high-dimensional multi-modal MRI voxels directly as inputs. To conclude, combining brain networks with GNNs promises to serve as a powerful new tool for deep learning model development in radiogenomic studies.

References

[1] Avants, B.B., Tustison, N., Song, G., et al.: Advanced normalization tools (ANTS). Insight J. 2(365), 1–35 (2009)

[2] Bakas, S., et al.: Advancing The Cancer Genome Atlas glioma MRI collections with expert segmentation labels and radiomic features. Sci. Data 4(1), 1–13 (2017)

[3] Bullmore, E.T., Bassett, D.S.: Brain graphs: graphical models of the human brain connectome. Annu. Rev. Clin. Psychol. 7, 113–140 (2011)

[4] Choi, Y.S., et al.: Fully automated hybrid approach to predict the IDH mutation status of gliomas via deep learning and radiomics. Neuro Oncol. 23(2), 304–313 (2021)

[5] Fagerholm, E.D., Hellyer, P.J., Scott, G., Leech, R., Sharp, D.J.: Disconnection of network hubs and cognitive impairment after traumatic brain injury. Brain 138(6), 1696–1709 (2015)

[6] Hyare, H., et al.: Modelling MR and clinical features in grade II/III astrocytomas to predict IDH mutation status. Eur. J. Radiol. 114, 120–127 (2019)

[7] Jenkinson, M., Beckmann, C.F., Behrens, T.E., Woolrich, M.W., Smith, S.M.: FSL. Neuroimage 62(2), 782–790 (2012)

[8] Jenkinson, M., Smith, S.: A global optimisation method for robust affine registration of brain images. Med. Image Anal. 5(2), 143–156 (2001)

[9] Li, C., et al.: Multi-parametric and multi-regional histogram analysis of MRI: modality integration reveals imaging phenotypes of glioblastoma. Eur. Radiol. 29(9), 4718–4729 (2019)

[10] Li, C., et al.: Characterizing tumor invasiveness of glioblastoma using multiparametric magnetic resonance imaging. J. Neurosurg. 132(5), 1465–1472 (2019)

[11] Liang, S., et al.: Multimodal 3D DenseNet for IDH genotype prediction in gliomas. Genes 9(8), 382 (2018)

[12] Liu, Y., et al.: Altered rich-club organization and regional topology are associated with cognitive decline in patients with frontal and temporal gliomas. Front. Hum. Neurosci. 14, 23 (2020)

[13] Louis, D.N., et al.: The 2016 World Health Organization classification of tumors of the central nervous system: a summary. Acta Neuropathol. 131(6), 803–820 (2016)

[14] Morris, C., et al.: Weisfeiler and Leman go neural: higher-order graph neural networks. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, pp. 4602–4609 (2019)

[15] Nyúl, L.G., Udupa, J.K., Zhang, X.: New variants of a method of MRI scale standardization. IEEE Trans. Med. Imaging 19(2), 143–150 (2000)

[16] Ostrom, Q.T., et al.: CBTRUS statistical report: primary brain and other central nervous system tumors diagnosed in the United States in 2009–2013. Neuro Oncol. 18(suppl_5), v1–v75 (2016)

[17] Pedano, N., et al.: Radiology data from The Cancer Genome Atlas Low Grade Glioma [TCGA-LGG] collection. Cancer Imaging Arch. (2016). https://doi.org/10.7937/K9/TCIA.2016.L4LTD3TK

[18] Price, S.J., et al.: Less invasive phenotype found in isocitrate dehydrogenase-mutated glioblastomas than in isocitrate dehydrogenase wild-type glioblastomas: a diffusion-tensor imaging study. Radiology 283(1), 215–221 (2017)

[19] Salvalaggio, A., De Filippo De Grazia, M., Zorzi, M., Thiebaut de Schotten, M., Corbetta, M.: Post-stroke deficit prediction from lesion and indirect structural and functional disconnection. Brain 143(7), 2173–2188 (2020)

[20] Scarpace, L., et al.: Radiology data from The Cancer Genome Atlas Glioblastoma Multiforme [TCGA-GBM] collection [data set]. Cancer Imaging Arch. (2016). https://doi.org/10.7937/K9/TCIA.2016.RNYFUYE9

[21] Shah, N., Feng, X., Lankerovich, M., Puchalski, R.B., Keogh, B.: Data from Ivy GAP [data set]. Cancer Imaging Arch. (2016). https://doi.org/10.7937/K9/TCIA.2016.XLWAN6NL

[22] Smith, S.M.: Fast robust automated brain extraction. Hum. Brain Mapp. 17(3), 143–155 (2002)

[23] Smith, S.M., Brady, J.M.: SUSAN: a new approach to low level image processing. Int. J. Comput. Vis. 23(1), 45–78 (1997)

[24] Stoecklein, V.M., et al.: Resting-state fMRI detects alterations in whole brain connectivity related to tumor biology in glioma patients. Neuro Oncol. 22(9), 1388–1398 (2020)

[25] Tzourio-Mazoyer, N., et al.: Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage 15(1), 273–289 (2002)

[26] Wang, J., et al.: Invasion of white matter tracts by glioma stem cells is regulated by a NOTCH1-SOX2 positive-feedback loop. Nat. Neurosci. 22(1), 91–105 (2019)

[27] Wei, Y., et al.: Structural connectome quantifies tumor invasion and predicts survival in glioblastoma patients. bioRxiv (2021)

[28] Wei, Y., Li, C., Price, S.: Quantifying structural connectivity in brain tumor patients. medRxiv (2021)

[29] Yan, H., et al.: IDH1 and IDH2 mutations in gliomas. N. Engl. J. Med. 360(8), 765–773 (2009)

[30] Ying, R., Bourgeois, D., You, J., Zitnik, M., Leskovec, J.: GNNExplainer: generating explanations for graph neural networks. Adv. Neural. Inf. Process. Syst. 32, 9240 (2019)

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Wei, Y., Li, Y., Chen, X., Schönlieb, CB., Li, C., Price, S.J. (2022). Predicting Isocitrate Dehydrogenase Mutation Status in Glioma Using Structural Brain Networks and Graph Neural Networks. In: Crimi, A., Bakas, S. (eds) Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries. BrainLes 2021. Lecture Notes in Computer Science, vol 12962. Springer, Cham. https://doi.org/10.1007/978-3-031-08999-2_11

DOI: https://doi.org/10.1007/978-3-031-08999-2_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-08998-5

Online ISBN: 978-3-031-08999-2

eBook Packages: Computer Science, Computer Science (R0)