Abstract

In the 1990s Yankov published two papers containing important contributions to proof theory and based on the application of a dialogical interpretation of proofs. In both cases the method is used for providing constructive proofs of important metalogical results concerning classical logic and fundamental mathematical theories. In the first paper it is shown that impredicative extensions of intuitionistic versions of arithmetic, analysis and set theory, enriched with suitable bar induction schemata, are sufficiently strong for proving the consistency of their classical counterparts. In the second paper the same method is applied to provide a constructive proof of the completeness theorem for classical logic. In both cases a version of a one-sided sequent calculus in Schütte-style is used and cut elimination is established in the second case. Although the obtained results are important, and the applied method is original and interesting, they have not received the attention they deserve from the wider community of researchers in proof theory. In this paper we briefly recall the content of both papers. We focus on essential features of Yankov’s approach and provide comparisons with other results of similar character.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Proof theory

- Dialogical interpretation of proofs

- Consistency proofs

- Bar induction

- Arithmetic

- Analysis

- Set theory

1 Introduction

In the early 1990s, shortly after returning from internal exile in Siberia, Yankov published two papers containing important contributions to proof theory. More precisely the first of them Yankov (1994) presents constructive consistency proofs of classical arithmetic, analysis and set theory. The second one Yankov (1997) just shows the application of the general method developed in the first paper to obtain a constructive proof of the completeness of first order logic FOL. It is evident that both works were prepared in extremely difficult times of his political imprisonment where he had no access to vast literature in ordinal proof theory. It explains why both papers have limited references. Only Spector’s well known result on the consistency of analysis (Spector (1962)) is mentioned in addition to Shoenfield’s textbook (Shoenfield (1967)) and some of Kreisel’s papers on proof theory. Even Gentzen’s works are not mentioned directly although it is evident that they are known to Yankov and provide some general background to his approach. What is sad is the fact that the reception of Yankov’s work was sparse. There are no references to it in the works of leading experts in proof theory. In this paper we try to briefly characterise the results he obtained and put them in the wider context of proof-theoretic work which was conducted elsewhere.

In the next section we briefly recall the main trends and results obtained in proof theory after Gentzen’s seminal works on the consistency of arithmetic. Then we characterise the main features of Yankov’s approach as developed in these two papers. In particular, in Sect. 3.4 we present sequent calculi applied by Yankov and compare them with other ways of formalising mathematical theories. In Sect. 3.5 we describe his dialogue theory of proofs and compare it with other dialogical/game based approaches. Since Yankov’s proof of consistency is based neither on cut elimination nor on any Gentzen-like reduction procedures but on direct justification of cut applications, it requires Brouwer’s bar induction principle. In Sect. 3.6 bar induction will be generally explained and its specific forms introduced by Yankov briefly characterised. We close the presentation of his approach in Sect. 3.7 with a sketch of his proofs. The last section provides a summary and evaluation of his result in comparison to other approaches.

2 Consistency Proofs

One of the most important results in metamathematics which are known (at least by name) even to laymen are Gödel’s two incompleteness theorems. In common opinion they have shown that an ambitious version of Hilbert’s program is not realizable, at least in the original form. Gödel himself believed that his results do not destroy Hilbert’s program decisively but some other outstanding scholars, like von Neumann, were convinced that the expectations for establishing a safe basis for arithmetic were buried. The strong tradition of proof theoretic research which soon started with Gentzen is a good evidence that Gödel’s, more balanced, view was closer to truth. In fact, the result on the embeddability of classical arithmetic in intuitionistic arithmetic which was proved independently by Gödel and Gentzen may be seen as a first kind of consistency result for classical arithmetic in the sense that its consistency problem was reduced to the problem of the consistency of intuitionistic theory.

Gentzen recognized that the main problem with Hilbert’s program was with its unclear explanation of the notion of finitist methodFootnote 1 and started to investigate what kind of principles are sufficiently strong to provide a consistency proof for arithmetic, yet may be treated as sufficiently safe. He provided four proofs of consistency of suitable formalizations of Peano arithmetic PA. In fact, the first of them (unpublished after Bernays’ criticism.) was based on the application of intuitionistically acceptable principles, namely the fan theorem which is a counterpart of König’s lemma and a corollary of the bar induction theorem, which is the basis of Yankov’s approach. Later Gentzen changed the strategy and based a proof of consistency on the principle of transfinite induction up to \(\epsilon _0\).

It is well known that Gentzen (1934) introduced special kinds of calculi enabling better analysis of proofs—natural deduction and sequent calculi. Frege and Hilbert-style axiomatic systems were formally correct and easy to describe but the construction of proofs was heavy and artificial, giving no insight as to how to discover a concrete proof and far from mathematical practice. Natural deduction was, as its name suggests, constructed as a formal system which corresponds to the actual practice of proof construction, in particular it enables an introduction of temporary assumptions for conditional and indirect proofsFootnote 2 instead of formalising the derivation of theses from axioms. Moreover, Gentzen was convinced that natural deduction calculi allow for better analysis of the structure of proofs, in particular by their transformation into special normal form. Sequent calculi were at first treated as a kind of technical device but soon started independent life in the realm of proof theory. The problem for Gentzen was that he was able to obtain normalizability of proofs only for intuitionistic logic whereas in sequent calculus the corresponding result, called by him Hauptsatz (cut elimination theorem), was established for classical and intuitionistic logic in an uniform way. Normal form theorems for classical and other logics were established in the 1960s by Prawitz (1965) (and independently by Raggio 1965) whereas Gentzen’s original proof for intuitionism was rediscovered only recently by von Plato (2008). In the meantime sequent calculus became more and more popular due to the works of such logicians as Ketonen, Curry, Kleene, to mention just a few names. The earliest two proofs of the consistency of PA constructed by Gentzen (1936a) (and galley proofs added to the English translation of this paper in Szabo 1969) were established for a calculus which was a hybrid of natural deduction and sequent calculus (sequent natural deductionFootnote 3). The third Gentzen (1938) (and fourth Gentzen 1943) one was provided for the sequent calculus version of PA.

Although it may be debatable whether Gentzen provided a properly finitist proof of the consistency of PA it is evident that he provided an important result which was also an improvement of Gödel’s result. In fact, many mathematicians neglected Gödel’s result claiming that his kind of unprovable statement is artificial and not a ‘real’ arithmetic true sentence. Gentzen’s principle of transfinite induction up to \(\epsilon _0\) is an example of a ‘real’ true and nonprovable arithmetic sentence. Moreover, he has shown in Gentzen (1943) that it is the best possible since his fourth proof shows that weaker principles (for ordinals below \(\epsilon _0\)) are provable in his system. This way he opened the way for the foundation of ordinal proof theory developed by Takeuti, Schütte and his followers. It should be understood that what he obtained was not a trivial game where the ordinary induction principle is proved by means of stronger transfinite induction up to \(\epsilon _0\). His application of transfinite induction is restricted to recursive predicates; only so it has a constructive character in contrast to the nonrestricted induction principle which is a primitive rule of the system PA which is shown to be consistent.

Yet Gentzen believed that this was only the beginning and tried to prove the consistency of analysis.Footnote 4 His tragic death closed his research but other logicians continued his investigation.

Gentzen had a clear picture of three levels of infinitary involvement connected with arithmetic, analysis and set theory respectively. In his lecture (Gentzen (1936c)) elementary number theory is characterised as dealing only with the infinity of natural numbers which may be interpreted constructively in terms of potential infinity. Analysis introduces a higher level of commitments to infinity since we must deal with infinite sets of numbers. In case of set theory an unlimited freedom is allowed in dealing with infinity in the sense that it deals with infinite sets of infinite sets of any further order.

Gentzen’s quest for a proof of the consistency of mathematics was continued in many ways and with the application of several ingenious techniques. We briefly point out only some chosen achievements, namely those which—in our opinion—may serve as the most direct points of reference to Yankov’s results. More extensive information may be found in several textbooks and surveys, in particular: Takeuti (1987),Footnote 5 Pohlers (2009), Schwichtenberg (1977), Rathjen and Sieg (2018). One of the important features of Gentzen’s original consistency proofs is the remarkable fact that despite differences all are based on some reduction steps rather than on full unrestricted cut elimination which yields consistency in a trivial way like in the case of pure classical and intuitionistic logic. In particular, in the third proof (the second one published in Gentzen 1938) no full cut elimination for a suitable sequent calculus is obtained due to the additional induction rule. Instead we have a reduction of proofs having some ordinal assigned, to proofs having lesser ordinals assigned. It opens the way for searching cut-free systems where consistency will be obtained as a simple corollary, similarly like in the case of pure logic, and even arithmetic without induction.Footnote 6

Soon it appeared that the problems may be solved by extending the notion of a calculus in such a way that infinity is introduced in the realm of syntactic proofs. Independently, Novikov (1943) and Schütte (1977) showed how to avoid the difficulty with cut elimination in infinitary systems although the former work was rather unnoticed. In Schütte’s approach a so called semiformal system with the \(\omega \)-rule is introduced instead of a special rule of induction. It has the effect that cut elimination is a trivial extension of the ordinary proof but the cost is that the calculus is infinitary, i.e. proofs are well-founded trees with a denumerable number of branches. Additionally, Schütte introduced a simplified version of sequent calculus—the one-sided calculus which became very popular in proof theoretic investigations. Sequents are just collections (usually finite lists) of formulae and the number of rules is reduced. Such kind of calculus was also applied by Yankov and will be described below in detail. In fact, the level of infinity introduced to syntactical calculi by Schütte was earlier signalled by Hilbert and in fact even more economical infinitary systems are possible if we introduce also infinitary sequents. This was the solution proposed a little earlier by Novikov (1943) but wider known later due to Tait (1968). In such kind of calculi further simplifications follow since quantifiers are reduced to infinite conjunctions/disjunctions.

Different ways (avoiding the introduction of infinity to the realm of syntactic systems) of searching for cut elimination in enriched calculi were also investigated. Takeuti formulated a conjecture that cut is eliminable in the sequent calculi for full second-order logic Z2 (and logics of higher degrees) which, as is well known, is sufficient for the formulation of analysis. The troubles with Takeuti’s conjecture was that rules for introduction of second-order quantifiers (\(\forall \) into the antecedent or \(\exists \) into the succedent) lack the subformula property since terms instantiated for second-order variables may be of any complexity, which destroys standard ways of proving this result. Proofs confirming Takeuti’s conjecture were offered independently by Tait, Takahashi, Prawitz and Girard (see Rathjen 2018 for details). The problem with all these proofs is that they are not purely syntactical and highly non-constructive. Cut elimination is indirectly shown be means of applying the semantical resources due to Schütte. Problems with the lack of subformula property may be resolved by means of some stratification of formulae in the spirit of the ramified hierarchy of sets. In this way we enter into the field of predicative analysis.

In fact it is not necessary to be a conservative follower of Gentzen’s syntactic approach to obtain similar results. In the meantime Spector (1962) proved the consistency of analysis with no use of sequent calculi or natural deduction but on the basis of Gödel’s Dialectica interpretation and with the help of Brouwer’s bar induction suitably generalised. It seems that his proof strongly influenced Yankov, especially concerning the role played by bar induction. We will return to this question in Sect. 3.6.

However, most of the outcomes concerning consistency results were developed in the spirit of Schütte’s approach by the Munich school (Buchholz, Jäger, Pohlers) where several subsystems of analysis and set theory were investigated. In fact a development of so called ordinal proof analysis is strongly based on Gentzen’s result (Gentzen 1943) where it is shown that in his sequent calculus for PA all induction principles for ordinals \(< \epsilon _0\) are provable. In contemporary proof theory this approach was developed into a separate field of research which classifies systems by assigning to them the least ordinals such that suitable induction principles are not provable in them. The level of sophistication of these investigations is very high and was summarised by Rathjen (1999) as “tending to be at the limit of human tolerance”.

This sort of analysis was also successfully applied to set theory, among others by Jäger, Arai, Rathjen. It is remarkable that most of the works on the consistency of set theory is concerned with different versions of Kripke-Platek theory KP not with ZF as in Yankov’s paper. In KP the power set axiom is omitted and the axiom of separation and collection are restricted. Nonetheless this weak axiomatization is sufficient for the most of important applications of set theory and, moreover, it is amenable to ordinal analysis which makes it a favourite system for proof theoretic investigations. This branch of proof theory is sometimes called admissible proof theory since Barwise’s admissible sets provide natural models for it.

Let me conclude this section with some remarks concerning the eliminability of axioms and cut as the main technique for obtaining the consistency of mathematical theories. It is known that cut is not fully eliminable in SC with axioms. Of course if axioms are atomic this is not a serious problem. Many approaches to arithmetic resolve the problem of axioms of PA in such a way but the principle of induction is not amenable to such treatment. Gentzen provided different forms of rules corresponding to the induction axiom (see below Sect. 3.4) but they did not allow for extending his proof of cut elimination. Negri and von Plato (2001) developed techniques for changing some types of axioms (like e.g. universal geometric formulae) into one-sided rules which allows for extending standard cut elimination proofs. But induction cannot be formalised in such a way. Of course any axiomatic sequent may be transformed into rules representing several schemata (see e.g. Indrzejczak 2018) but not necessarily fitting our purposes, in particular yielding rules which are suitable for proving cut elimination. Omega rules allow for elimination of induction principles but at the cost of introducing semi-formal (infinitary) calculi. Yankov’s proof shows a different route.

I’m convinced that this short and very incomplete surveyFootnote 7 is necessary to put Yankov’s proposal in the proper context, to show that his ambitious but not recognized project belongs to a field of very active research developed before its publication and still actively continued, unfortunately without noting his interesting contribution.

3 Yankov’s Approach

Both papers discussed below represent Yankov’s conception of the so called ‘dialogue’ approach to solving metalogical problems. In Yankov (1994) it is used to prove the consistency of classical theories of Peano arithmetic, analysis and ZF set theory, whereas in Yankov (1997) the completeness of FOL is demonstrated. In both cases proofs are carried out in a constructive way by means of the notion of the defensibility of a formula (sequent). This notion is introduced in the context of a dialogue (game) between two subjects called an opponent and a proponent. Each formula (sequent) is an object of discussion in which an opponent is trying to refute it and a proponent is trying to provide a defence. A formula (sequent) is defensible if for every opponent’s attempt a proponent is able to find a true literal which is its subformula.

In case of consistency proofs the general strategy is simple. It is shown that the axioms of the theories under consideration are defensible and that every rule is defensibility-preserving from premisses to conclusions. Since no false formula is defensible it follows that the respective theories are consistent. The apparent simplicity of these proofs rests on the remarkable complexity of their concrete steps which involve the application of special versions of bar induction principles and arithmetization of the opponent’s strategies, required for application of bar induction. Theories are formalised as one-sided sequent calculi with added axiomatic sequents. Proving defensibility-preservation for all rules except cut is relatively simple. Although Yankov in (1994) is sometimes referring to part of his proof as cut elimination it cannot be treated as such, at least in the standard sense of this word. The result is shown not by cut elimination but directly by showing that cut is also defensibility-preserving. It is this case which needs very complex argument and in particular bar induction in a variety of special forms must be applied to cover the cases of the quantifiers.

In the case of the completeness of FOL, soundness is just a corrolary of the consistency of arithmetic. In order to prove that FOL is complete again the dialogue interpretation is applied to a root-first proof search procedure. But since completeness is proved by means of constructive resources the usual strategy is not applicable. Thus instead of an indirect proof by construction of a model on the basis of an open infinite branch a direct proof is provided.

There are three main components of Yankov’s method that are applied in both papers: (a) a (one-sided) cut-free sequent calculus used for the formalization of the respective theories; (b) the specific dialogical framework used for proving the defensibility of provable sequents, and (c) the application of specific bar induction principles required for the demonstration of the preservation of defensibility by cut. In the following sections we will briefly comment on all of them and sketch the proofs.

4 The Calculus

Yankov applies the one-sided sequent calculus invented by Novikov and Schütte and popularised by Tait and researchers from the Munich School (Buchholz, Pohlers) although his formalization of arithmetic is different. As we already mentioned this approach is usually based on the application of infinitary rules (so called semiformal systems), whereas Yankov’s formalization is finitary and uses only additional axioms. The standard language of FOL is used with denumerably many variables and denumerably many predicate letters of any arity with constants: \(\lnot , \wedge , \vee , \forall , \exists \), but with formulae in negation normal form, i.e. with negations applied only to atomic formulae. Thus every occurrence of \(\lnot \varphi \) with \(\varphi \) nonatomic is to be treated as an abbreviation according to the rules: \(\lnot \lnot \varphi := \varphi , \lnot (\varphi \wedge \psi ) := \lnot \varphi \vee \lnot \psi , \lnot (\varphi \vee \psi ) := \lnot \varphi \wedge \lnot \psi , \lnot \forall x\varphi := \exists x\lnot \varphi , \lnot \exists x\varphi := \forall x\lnot \varphi \). In the case of the languages for the theories considered only predicate and function symbols for recursive relations are permitted (no specification is provided which ones are preferred) so it is assumed that the law of excluded middle holds for atomic formulae. Also in case of analysis two sorts of variables are introduced, for numbers and functions from N to N (i.e. infinite sequences).

It is worth underlining that in the course of proof Yankov applies Smullyan’s trick to the effect that free variables are substituted consecutively with the objects from the domain of the constructed model so in fact he is working only with closed formulae of a special kind. What objects are substituted for free variables depends on the theory under consideration. In case of PA these are natural numbers, for ZF these are sets. In analysis there are two domains for each sort of variables: numbers and infinite sequences of numbers.

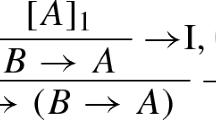

Sequents are finite lists of formulae. Restriction to negative forms allows for a very economic calculus consisting of the following rules:

\(\textstyle (W)\)\(\dfrac{\Gamma }{\Gamma , \varphi }\) | \(\textstyle (C)\)\(\dfrac{\Gamma , \varphi , \varphi }{\Gamma , \varphi }\) | \(\textstyle (P)\)\(\dfrac{\Gamma , \varphi , \psi , \Delta }{\Gamma , \psi , \varphi , \Delta }\) | \(\textstyle (Cut)\)\(\dfrac{\Gamma , \varphi \qquad \Delta , \lnot \varphi }{\Gamma , \Delta }\) |

\(\textstyle (\vee 1)\)\(\dfrac{\Gamma , \varphi }{\Gamma , \varphi \vee \psi }\) | \(\textstyle (\vee 2)\)\(\dfrac{\Gamma , \psi }{\Gamma , \varphi \vee \psi }\) | \(\textstyle (\wedge )\)\(\dfrac{\Gamma , \varphi \qquad \Gamma , \psi }{\Gamma , \varphi \wedge \psi }\) | \(\textstyle (\forall )\)\(\dfrac{\Gamma , \varphi }{\Gamma , \forall x\varphi }\) \(\textstyle (\exists )\)\(\dfrac{\Gamma , \varphi [x/t]}{\Gamma , \exists x\varphi }\) |

with the usual eigenvariable condition for x being fresh in \((\forall )\). In \((\exists )\) t is an arbitrary term of the respective theory.

In the system for FOL the only axioms are of the form: \(\varphi , \lnot \varphi \), where \(\varphi \) is atomic. Such a formalization is well known to be adequate but Yankov provided a different kind of proof which we briefly describe in Sect. 3.7. In case of arithmetic, and other systems, this system must be enriched with axioms or rules. In general Yankov provides only axiomatic additions keeping the set of rules fixed. Moreover, in case of the theories considered the only logical axiom \(\varphi , \lnot \varphi \) is formulated without restriction to atomic formulae.

In case of PA additional axioms (or rules) are needed for identity, definitions of recursive operations and for induction. Yankov did not introduce any special set of axioms except for the induction axiom. In fact, if we just postulate that every true identity \(t = s\) or its negation with closed terms is accepted as an axiom we do not even need special rules/axioms for identity since they are provable (see e.g. Mendelson 1964). Even logical axioms are provable so Yankov could safely dispense with them. Following Negri’s and von Plato’s approach (2001) we could as well express all such elementary nonlogical axioms by means of rules enabling cut elimination. In the framework of one-sided sequents they take the following form:

\(\textstyle (true)\)\(\dfrac{\Gamma , t\ne s}{\Gamma }\) for any true \(t=s\) | \(\textstyle (false)\)\(\dfrac{\Gamma , t = s}{\Gamma }\) for any false \(t=s\) |

But this is not necessary since for Yankov’s proof of consistency cut elimination is not required. The only substantial axiom express the induction principle and has the following expected form:

\(\lnot \varphi (0) \vee \exists x(\varphi (x) \wedge \lnot \varphi (sx)) \vee \forall x\varphi (x)\)

Below for comparison’s sake we recall two forms of Gentzen’s rules (but again in one-sided sequent variation, not in his original version) and \(\omega \)-rule of Schütte:

\(\textstyle (Ind1)\)\(\dfrac{\Gamma , \varphi (0) \qquad \Gamma , \lnot \varphi (a), \varphi (sa)}{\Gamma , \forall x\varphi }\) | \(\textstyle (Ind2)\)\(\dfrac{\Gamma , \lnot \varphi (a), \varphi (sa)}{\Gamma , \lnot \varphi (0), \forall x\varphi }\) |

\(\textstyle (\omega )\)\(\dfrac{\Gamma , \varphi (0) \qquad \Gamma , \varphi (1) \qquad \dots }{\Gamma , \forall x\varphi }\) |

with the usual eigenvariable condition of a being fresh variable (parameter). (Ind1) was used for the first and second consistency proof based on natural deduction system in sequent form, whereas (Ind2) was applied in the third consistency proof in the context of sequent calculus. One can easily show that the system with Yankov’s axiom is equivalent to the one with any of the Gentzen’s rules. In case of \((\omega )\) it can be shown that the induction principle is deducible but due to its infinitary character it cannot be derived in ordinary formalization of PA.

In case of analysis the language changes into a two-sorted one; in addition to ordinary individual variables denoting natural numbers we have variables denoting functions from N to N. Accordingly the rules for quantifiers are doubled. In fact we obtain a restricted form of a second-order system. The axiom of induction is preserved, additionally a principle of choice is added:

\(\lnot \forall x\exists y\varphi (x,y) \vee \exists f\forall x\varphi (x, f(x))\) where f is a functional variable.

The statement of ZF is more complicated since sequent counterparts of set-theoretic axioms of ZF are needed. We omit their formulation to save space.

5 The Dialogue Method

In all proofs Yankov consequently applies a method which he calls dialogue theory, or dialogue interpretation of proofs in respective systems. There are close affinities between Yankov’s method and the dialogical method of Lorenzen (1961) as well as other approaches which are based on some kind of game involved in searching for a proof or a model (see e.g. Hintikka 1968). In fact, initially any attempt to compare mathematical activity to games was seen as odd within the intuitionistic approach. Hilbert was happy with such a comparison and considered it to be well-suited to the formalist stance in the philosophy of mathematics, but for Brouwer any such analogy was rather offensive.

Soon this approach found its way into the constructivist framework due to Lorenzen. Despite the similarities there are serious differences between the Lorenzen-style dialogical approach and Yankov’s dialogues. It is common that in this kind of constructivist framework the roles of two game-players are assigned according to the kind of formula that is investigated. Thus, for example, we can have an \(\forall \)-player who is responsible for a choice in the case of a conjunctive or universally quantified formula, and an \(\exists \)-player who deals with disjunctions and existential formulae. The roles of both players may change during the game in the sense that any of them may either attack or defence some chosen formula at a given stage of the game.

There is an interesting paper of Coquand (1990) where such a Lorenzen-style approach is applied to Novikov’s system (1943) and his notion of regular formulae. The latter may be seen as a sort of intuitionistic truth definition for classical infinitary one-sided sequent calculus. The main result in Novikov’s paper is the original proof of admissibility of a special form of cut (or rather modus ponens). In Coquand’s approach the concept of regularity obtains a natural reading in terms of a debate between two players. In this way a game-theoretic semantics of evidence for classical arithmetic is built, and a proof of admissibility of cut is obtained, which yields the consistency of the system. Coquand is unaware of the work of Yankov and despite applying some game-theoretic solution provides an approach which is significantly different. The strategies of players are characterised similarly to the Lorenzen approach, and the proof is based on the elimination of cut. Moreover, only classical arithmetic is considered although some remarks on a possible extension to analysis are formulated in the conclusion.

In Yankov’s approach the strategies of both players are defined for all kinds of formulae but in a slightly different way. There is an opponent whose goal is to refute a formula and a proponent who tries to defend it. Moreover, an opponent is responsible for defining an (supposedly falsifying) interpretation, and she is not limited in any way in her choices. On the other hand, a proponent is only a proponent of the formula which is under consideration, but she is working with a model provided by the opponent. Moreover, a proponent is a constructive subject; her strategies are effectively computable functions of the opponent’s strategies. In particular, it is assumed that she is capable of marking the steps of calculation with some system of ordinals of sufficiently constructive character. In PA, integers are sufficient. In analysis this system must allow for the enumeration of any collections of natural numbers and functions. In ZF these are simply the ordinals of the set-theoretic domain.

More formally, the opponent’s tactics OT is an ordered pair \(\langle g, f \rangle \) with g being an opponent’s interpretation (OI), and f an opponent refutation tactics (ORT). OI depends on the theory which is being investigated. Thus in the case of arithmetic (but also first-order logic) it is simply an assignment of natural numbers to variables and extensions to predicates defined on numbers, so that all literals are definitely determined as true or false. In the case of analysis and ZF OI is determined accordingly on numbers/infinite sequences and on sets.

ORT is defined uniformly in all cases by induction on the length of a formula:

-

1.

for a literal, a choice of any object from the domain of OI (natural number for analysis);

-

2.

for \(\varphi \wedge \psi \), a choice of \(\varphi \) or \(\psi \) and an ORT for the chosen subformula;

-

3.

for \(\varphi \vee \psi \), a pair consisting of an ORT for \(\varphi \) and for \(\psi \);

-

4.

for \(\forall x\varphi \), a choice of an object o from the domain, and an ORT for \(\varphi [x/o]\);

-

5.

for \(\exists x\varphi \), a function assigning to each object o an ORT for \(\varphi [x/o]\).

Of course in analysis we have a division of cases for the quantifiers due to the two sorts of variables.

The proponent tactics PTs for some formula \(\varphi \) are constructive functionals on all possible OTs for \(\varphi \) with values being natural numbers for PA, their sequences for analysis and sets for ZF. Of course, to do this an arithmetization of OT in PA and ZF must be performed, in particular in ZF sequences of ordinals are assigned (for analysis it is not necessary).

Intuitively, every OT determines a list of subformulae of \(\varphi \) which are supposed to be refutable and then PT for this OT should determine a literal from this list which is true; the value of OT on this PT is just a number that is assigned to this literal (see item 1 in the definition of ORT). We omit a detailed description. A PT for \(\varphi \) is called a defence of \(\varphi \) if for each OT for \(\varphi \) a designated literal which is a subformula of \(\varphi \) which is true. Such a formula is called defensible. These notions are extended in a natural way to sequents. Let a sequent S be \(\varphi _1, \dots , \varphi _n\), then an OT for S is \(\langle \langle g, f_1 \rangle \dots \langle g, f_n \rangle \rangle \) where g is some fixed OI and \(f_i\) is an ORT for \(\varphi _i\). A PT for such a sequent is a computable function whose arguments are all possible OTs for S and whose values are pairs of natural numbers with the first item denoting a number of a formula in S and the second item denoting the designated number of its chosen subformula that is a literal. If PT for a sequent S designates a true literal that is a subformula of some \(\varphi _i\), then S is defensible and this PT provides a defence for it.

6 Bar Induction

The last component of Yankov’s method of proof is bar induction which appears in many specific forms suitable for the considered theories. Let us recall that bar induction was introduced by Brouwer as an intuitionistically acceptable counterpart of the ordinary induction principle. Informally bar induction is an inductive process which is running on sequences and is usually formulated in terms of well-founded trees (nodes as finite sequences) satisfying some conditions. It allows to “spread” any condition from the terminal nodes of a well-formed tree to all its nodes. The essential inductive step shows that if the condition holds for all immediate successors of some node (i.e. its one element extensions), then it holds for this node. More precisely, Brouwer’s original bar induction may be stated as follows. The basic notion of a bar may be understood as a subset B of finite sequences of natural numbers such that for every \(f:N \longrightarrow N\) there is n such that the sequence \(s = \langle f(0), \dots , f(n-1)\rangle \) is in B. It is sometimes said (in particular in Yankov 1994) that s secures B. Now, for given B and any predicate \(\varphi \) defined on sequences bar induction states that: (a) if it satisfies every sequence in B and (b) satisfies a sequence if it satisfies all its one-element extensions, then it satisfies the empty sequence (the root of a tree).

Bar induction, together with the principle of continuous choice and with the fan theorem may be seen as one of the fundamental Brouwerian principles related to his understanding of choice sequences. It is remarkable that bar induction although constructively acceptable is a very strong principle, stronger than transfinite induction considered by Gentzen. In fact the class of choice sequences assumed by bar induction is much wider than the class of general recursive functions. For example, bar induction added to a constructive version of ZF allows for proving its consistency (see Rathjen 2006) and added to classical logic yields the full second-order comprehension principle. Anyway in the context of intuitionism it is much weaker and we do not obtain the full force of the second-order system Z2. In fact, Brouwer’s original bar principle is still too strong and leads to intuitionistically unwanted conclusions. To avoid them one must add some restrictive conditions on B; either that it is decidable for every finite sequence s that it belongs to B or not (decidable or detachable bar induction), or the stronger condition that every one-element extension of a sequence in B also belongs to B (monotonic bar induction).

It seems that Yankov’s predilection to bar induction and corresponding bar recursion was influenced by Spector’s proof of the consistency of analysis. This proof belongs to a significantly different tradition than all consistency proofs and other related proof theoretic works in Gentzen’s tradition. On the basis of Gödel’s dialectica interpretation Spector defined functionals by means of bar recursion. Yankov explicitly refers to his work. In particular, he developed the family of basic and auxiliary bar induction principles for all theories he considered. It is not possible to present all his considerations so we only briefly describe Yankov’s original formulation of basic principles.

The central notion in the case of arithmetic is that of constructive functionals mapping sequences of natural numbers to natural numbers, and that of an initial segment. Let \(\phi \) be such functional which plays the role of a bar and f an arbitrary function \(N \longrightarrow N\) (sequence), then there is a finite initial segment \(\kappa = \langle k_0, \dots , k_n\rangle \) of f which secures \(\phi \) in the sense that \(\phi (f) = \phi (g)\) for any g that has this initial segment \(\kappa \) common with f. This is the basic form of the initial segment principle which is then enriched with formulations of two auxiliary forms. The latter are derivable from the basic one and are introduced only to facilitate the proof so we omit their formulation.

Now Yankov’s original bar induction principle may be formulated for any constructive functional \(\phi \) and predicate \(\varphi \) running over finite sequences of natural numbers (i.e. possible initial segments) in the following way:

Basis. \(\varphi (\kappa )\) holds for any \(\kappa \) securing \(\phi \).

Step. If \(\varphi (\kappa ')\) holds for any single-element extension \(\kappa '\) of \(\kappa \), then it holds for \(\varphi (\kappa )\).

Conclusion: \(\varphi (\curlywedge )\) holds, where \(\curlywedge \) is the empty sequence.

Two auxiliary forms of bar induction are then introduced which facilitate the proof but are derivable from the basic form.

For analysis, two bar induction principles are formulated, one for natural numbers and one for functions of the type: \(N \longrightarrow N\). Since they are similar we recall only the first. Let \(\Phi \) run over functions defined on well-ordered sets of natural numbers and let \(d(\Phi )\) denote the domain of \(\Phi \) with its well ordering, then it may be formulated as follows:

Basis. \(\varphi (\Phi )\) holds if \(d(\Phi )\) encompasses all natural numbers.

Finite step. If \(\varphi (\Phi ')\) holds for any single-element extension \(\Phi '\) of \(\Phi \) (i.e. a new number is added to \(d(\Phi )\)), then it holds for \(\varphi (\Phi )\).

Transfinite step. If \(d(\Phi ')\) is ordered by a limit ordinal and \(\varphi (\Phi ')\) holds, then there exists a \(\Phi \) such that \(d(\Phi )\) is a proper initial segment of \(d(\Phi ')\), \(\Phi \) and \(\Phi '\) coincide on \(d(\Phi )\), and \(\varphi (\Phi )\) holds.

Conclusion: \(\varphi (\curlywedge )\) holds, where \(\curlywedge \) is a function with empty domain.

It is interesting that in case of analysis the notion of constructive functional is not needed for suitable bar induction principles. Each ordinal sequence of functions is barred from above by functions which contain all the objects of the domain. Because of that the barring they introduce is called ‘natural’ by Yankov. Yankov acknowledges that his bar principles for analysis are not intuitionistically acceptable. However he believes that for an agent with somewhat extended constructive capacities dealing with potential infinity they may be as obvious as arithmetical bar induction in the finite case. In case of set theory the schema of introducing suitable bar induction principles and their assessment is similar (but with ordinals and their sequences) to the case of analysis and we omit presentation.

All bar induction principles have their corresponding bar recursion schemata.

As we mentioned in order to apply bar induction in consistency proofs an arithmetization of proof theoretic notions is required. In particular OTs are represented as “code sequences” (CS) and PTs as functionals on these sequences. We again leave out the details of the construction.

7 Proofs

As we mentioned above the structure of Yankov’s consistency proof is simple. It is just shown that all axioms are defensible and all rules (including cut) are defensibility-preserving. Since inconsistent statements are obviously not defensible, consistency follows. Note that the strategy applied is different than in the consistency proofs briefly mentioned in Sect. 3.2. In general, in all approaches based on the application of some kind of sequent calculus the results are basically consequences of the subformula property of rules and because of that the position of cut is central. The most popular strategy works by showing that cut is eliminable; it was the work done by Gentzen (1934) for logic and arithmetic without induction and by Schütte, Tait and others for systems with infinitary rules. In general this strategy fails for systems with some axioms added but it may be refined by suitably restricting the class of axioms (e.g. basic, i.e. atomic sequents of Gentzen) which makes it possible to restrict the applications of cut to inessential ones and still obtain the result. In fact axioms of many specific forms may be introduced not only as basic sequents but also as corresponding rules working on only one side of a sequent and thus enabling full cut elimination. This is a strategy developed successfully by Negri and von Plato (2001). Unfortunately it does not work for PA. The induction principle either in the form of an additional (but not basic) sequent, or as a rule, is not amenable to these strategies. Thus (as in Gentzen 1936a and 1938) some additional kinds of reduction steps must be introduced to take control of the applications of cut and limit its use only to those which are not troublesome. Yankov does not try to eliminate or restrict the use of cut, instead he shows that it is also defensibility-preserving. Unsurprisingly this is the most difficult part of his consistency proofs. Without going into details we briefly sketch how it proceeds.

Showing that all logical and structural rules (except cut) are defensibility-preserving is easy. For example consider an application of \((\wedge )\) and assume that both premisses are defensible. If an opponent is trying to disprove the left conjunct \(\varphi \) it is sufficient to apply a defense of the first premiss. Otherwise, a defense of the right premiss does the job.

In case of logical axioms \(\varphi , \lnot \varphi \) the proof goes by induction on the length of \(\varphi \) and applies a so called “mirror-chess” strategy.Footnote 8 In the basis a defense simply consists in chosing a true formula (both literals cannot be false), and this is possible since the proponent (being constructive) is assumed to be always in a position to compute the values of the involved terms. In the case of arithmetic it is obvious but it may be doubtful in the case of analysis and ZF. The induction step is easy. For example, in case of \(\varphi := \psi \vee \chi \) if the opponent attacks \(\psi \), then the proponent must choose \(\lnot \psi \) in \(\lnot \varphi := \lnot \psi \wedge \lnot \chi \) and follow a defense of \(\psi , \lnot \psi \) which holds by inductive hypothesis.

In case of nonlogical axioms we will illustrate the point with the case of the induction axiom. Any ORT f for it must consist of three components: \(f_1\) for \(\lnot \varphi (0)\), \(f_2\) for \(\exists x(\varphi (x) \wedge \lnot \varphi (sx))\) and \(f_3\) for \(\forall x\varphi (x)\). Consider \(f_3\); if the chosen number is 0, then \(f_3'\) is an ORT for \(\varphi (0)\) and the chess-mirror strategy wins against \(f_1, f_3'\). If it is some \(k \ne 0\), then \(f_3'\) is an ORT for \(\varphi (k)\) and we consider \(f_2\) to the effect that a sequence \(\varphi (0)\wedge \lnot \varphi (1), \dots , \varphi (k-1)\wedge \lnot \varphi (k)\) is obtained. Let n be the ordinal of the first conjunction in which the opponent chooses its first conjunct, i.e. \(\varphi (n-1)\) for refutation. It cannot be 1 with some \(f_3''\) since the proponent wins against \(f_1, f_3''\). Continuing this way with other values of n the proponent eventually wins with \(n=k\).

The last, and the most complex argument must be provided to show the defensibility-preservation of cut. It is again performed by induction on the length of the cut formula. Actually two proofs are given, indirect and direct but the whole proof is provided only for the latter which has the following form:

Suppose that two PTs \(\phi _1\) and \(\phi _2\) are given for both premisses of cut and \(f_1, f_2\) are ORTs for \(\Gamma \) and \(\Delta \) respectively. Then it is possible to construct ORTs \(f_3, f_4\) for \(\varphi \) and \(\lnot \varphi \) such that \(\phi _1, \phi _2\) are subject to the following conditions when applied to premisses of cut:

(a) either \(\phi _1(f_1, f_3)\) designates a literal occuring in \(\Gamma \);

(b) or \(\phi _2(f_2, f_4)\) designates a literal occuring in \(\Delta \);

(c) or \(\phi _1(f_1, f_3)\) designates a literal that is a subformula of \(\varphi \) and \(\phi _2(f_2, f_4)\) designates a literal that is a subformula of \(\lnot \varphi \) and both literals are contradictory.

It is not difficult to observe that in case \(\phi _1, \phi _2\) are defenses of premisses this assertion implies that the conclusion is also defensible since (c) is impossible in this case.

A proof is carried out by induction on the length of cut formula. In all cases the proof consists in constructing an extension of given strategies to determine a pair of contradictory literals that are subformulae of cut formulae. The basis is obvious since these are contradictory literals. The case of conjunction and its negation, i.e. the disjunction of the negated conjuncts, is also relatively easy. The main problem is with quantified formulae which additionally in case of analysis must be demonstrated twice for both kinds of bound variables. It is this point of the proof where the abilities of a constructive subject, i.e. a proponent, are needed for the construction of bar recursion. Basically a family of ORTs for \(\exists x\lnot \varphi \) is considered for all possible initial segments of respective sequences (depending on the theory under consideration). The construction is similar to the one provided by Spector in his proof of the consistency of analysis. In each case suitable bar induction principles are required to provide a verification of the recursion to the effect that the ternary disjunction (a), (b), (c) stated above holds and the recursive process terminates. Of course despite the general similarity of all proofs significant differences may be noticed. For example, in PA the computation process is uniquely determined whereas in case of analysis there is by contrast a search for suitable functions.

The general strategy of these proofs shows that Yankov’s approach is in the middle between Gentzen-like approaches based on cut elimination or ordinal reduction and Spector’s approach to consistency proof. It is also worth remarking his proof of the completeness of FOL since it is remarkably different from other proofs provided for cut-free formalizations of classical logic. The standard strategy is indirect in the sense that it is shown how to construct a proof-search tree for every unprovable sequent, with at least one, possibly infinite, open branch. Eventually such a branch provides sufficient resources for the construction of a falsifying model for the root-sequent. Similarly like in standard proofs Yankov defines some kind of fair procedure of systematic decomposition of compound formulae in the root-sequent, taking care of existentially quantified formulae since they may be invoked infinitely many times. It is carried out in terms of ORTs associated to each possible branch. But his aim is to provide an intuitionistic proof of the completeness of FOL and the general strategy sketched above is not feasible. In particular, instead of classical induction he applies bar induction and instead of König’s lemma its intuitionistic counterpart, the fan theorem,Footnote 9 and all these replacements lead to changes in the proof which make it rather direct. It is shown that if a sequent is defensible, then all branches starting with them in a root-first proof search must finish with an axiomatic sequent, hence it is defensible. By construction cut is not used, so as a corrolary we obtain a non-constructive semantic proof of cut elimination for one-sided sequent calculus for FOL. Additionally, the proof is extended to the case of derivability of a sequent from an arbitrary set of premisses. To cover the case of infinite sets the notion of a sequent is also extended to cover infinite sequents. In this way Yankov has shown that his specific dialogic interpretation of proofs can work both ways in a uniform way—to show soundness of FOL and consistency of mathematical theories, and to show completeness of FOL.

8 Concluding Remarks

Yankov started his career as a programmer and his PhD as well as one of his early papers Yankov (1963) was devoted to Kleene’s notion of realizability. His background is evident in his description of the constructive character of a proponent which is often presented in terms of computation processes. As he confesses his consistency proof for arithmetic was completed in 1968 whereas proofs for analysis and set theory were completed in 1991. For the contemporary reader two facts are very striking: the long period between the first (unpublished at the time of its discovery) proof and the final result, and very poor references. Both issues are certainly strongly connected with, not to say grounded in, the political and social activity of Yankov which had hard consequences for his life and career. It is not my role to focus on these aspects of Yankov’s activity (but see the introduction to this volume) but it should be noted here that political imprisonment certainly had serious consequences for his scientific research. At the time when he was working on his proofs Yankov was not aware of the huge literature devoted to ordinal proof theory, including consistency proofs for a variety of subsystems of analysis and set theory. His background is in fact based on very limited access to works of some authors known in the 1960s. It is evident not only in case of his method and proofs but also in some other remarks he is making, for example concerning research on nonclassical implication (see the concluding remarks of Yankov 1994). Despite the modest links of his work with main achievements in the field, his results and the method applied are of great interest. Both papers are witnesses of the phenomena that science that is developed in isolation and outside main trends of research may lead to significantly different solutions than those obtained in the mainstream.

In particular, in contrast to research developed within ordinal proof theory Yankov did not care about establishing precise bounds on proofs. He developed his own view on the constructive capabilities of an agent solving problems, extended in such a way as to allow for establishing his results. He is aware that the bar induction principles he introduced are very strong and makes a remark that perhaps it is possible to find weaker tools enabling such proofs. Special bar principles for analysis and set theory are in fact justified in a way which can hardly be seen as constructive. One should notice at this point that Spector (1962) was also doubtful if the bar recursion applied in his proof is really constructive and Kreisel was even more critical in this respect.

Such attempts at proving the consistency of mathematical theories are sometimes criticised as being a pure formalist game, without any, or very little, foundational and epistemological value. In particular, Girard (2011) is very critical, not to say rude, in commenting on the achievements of the Munich school. It is obvious that such criticism may be also directed against Yankov’s proofs. Anyway, it is a piece of very ambitious and ingenious work done in extremely hard circumstances and, as I pointed out above, in isolation. It certainly deserves high estimation and it is sad that so far it was not recognized by scholars working on proof theory and foundational problems.

We finish this short exposition of Yankov’s achievements in proof theory with a citation from Yankov (1994) which best reflects his own view of what he obtained:

“David Hilbert identified the existence of mathematical objects with the consistency of the theory of these objects. Our arguments show that the verification of such consistency is connected with the possibility of including the implied collection of objects in some “constructive” context by conceiving the field of objects as the sphere of application of some constructive means by a constructive subject. Here the means must be powerful enough so that consistency can be proved, and, on the other hand, obvious enough so that we can evaluate their constructibility, that is, the means must be an extension of our finite constructive capabilities still close to us. I attempt to express this situation by the somewhat descriptive thesis that, in the final analysis, mathematical existence is the possibility of some constructive approach to us of mathematical objects.”

Notes

- 1.

Nowadays the view of Tait is often accepted according to which finitism is identified with the quantifier-free system of primitive recursive arithmetic PRA.

- 2.

In fact, a similar system of this kind independently introduced by Jaśkowski (1934) was called ‘suppositional calculus’.

- 3.

More on the history and types of natural deduction and sequent calculi is in Indrzejczak (2010).

- 4.

In the meantime he proved in a purely finitist way the consistency of simple type theory (Gentzen 1936b) but without the axiom of infinity so it is a rather weak result.

- 5.

The second edition is preferred since it contains also valuable appendices written by leading researchers in the field.

- 6.

Such a result was already present in Gentzen (1934).

- 7.

In particular research concerning bounded arithmetic (Parikh, Buss), reverse mathematics or predicative analysis were not mentioned since they are not related to Yankov’s work.

- 8.

Illustrated with an anecdote about two chess players who have a simultaneous match with a chess master and successively repeat his previous moves.

- 9.

Classically the fan theorem is just the contrapositive of König’s lemma, but intuitionistically they are not equivalent.

References

Coquand, T. (1995). A semantics for classical arithmetic. The Journal of Symbolic Logic, 60(1), 325–337.

Gentzen, G. (1934). Untersuchungen über das Logische Schliessen. Mathematische Zeitschrift, 39, 176–210 and 39, 405–431.

Gentzen, G. (1936a). Die Widerspruchsfreiheit der reinen Zahlentheorie’. Mathematische Annalen, 112, 493–565.

Gentzen, G. (1936b). Die Widerspruchsfreiheit der Stufenlogik. Mathematische Zeitschrift, 3, 357–366.

Gentzen, G. (1936c). Die Unendlichkeitsbegriff in der Mathematik, Semester-Berichte, Münster in: W., 9th Semester, Winter 1936–37, 65–80. Mathematische Annalen, 112, 493–565.

Gentzen, G. (1938). Neue Fassung des Widerspruchsfreiheitsbeweises für die reine Zahlentheorie, Forschungen zur Logik und zur Grundlegung der exakten Wissenschaften. New Series, 4, Leipzig 19–44.

Gentzen, G. (1943). Beweisbarkeit und Unbeweisbarkeit der Anfangsfällen der transfiniten Induktion in der reinen Zahlentheorie. Mathematische Annalen, 120, 140–161.

Girard, J. Y. (2011). The blind spot. Lectures on logic: European mathematical society.

Hintikka, J. (1968). Language-games for quantifiers. In Studies in logical theory (pp. 46–72). Blackwell.

Indrzejczak, A. (2010). Natural deduction. Hybrid systems and modal logics. Springer.

Indrzejczak, A. (2018). Rule-generation theorem and its applications. The Bulletin of the Section of Logic, 47(4), 265–282.

Jaśkowski, S. (1934). On the rules of suppositions in formal logic. Studia Logica, 1, 5–32.

Lorenzen, P. (1961). Ein dialogisches Konstruktivitätskriterium. In Infinitistic methods (pp. 193–200). Warszawa: PWN.

Mendelson, E. (1964). Introduction to mathematical logic. Chapman and Hall.

Negri, S., & von Plato, J. (2001). Structural proof theory. Cambridge: Cambridge University Press.

Novikov, P. S. (1943). On the consistency of certain logical calculus. Matematiceskij Sbornik, 1254, 230–260.

von Plato, J. (2008). Gentzen’s proof of normalization for ND. The Bulletin of Symbolic Logic, 14(2), 240–257.

Pohlers, W. (2009). Proof theory. The first steps into impredicativity. Springer.

Prawitz, D. (1965). Natural deduction. Stockholm: Almqvist and Wiksell.

Raggio, A. (1965). Gentzen’s Hauptsatz for the systems NI and NK. Logique et Analyse, 8, 91–100.

Rathjen, M. (1999). The realm of ordinal analysis. In B. S. Cooper & J. K. Truss (Eds.), Sets and proofs (pp. 219–280). Cambridge.

Rathjen, M. (2006). A note on bar induction in constructive set theory. Mathematical Logic Quarterly, 52, 253–258.

Rathjen, M., Sieg, W. (2018). Proof theory. The Stanford encyclopedia of philosophy, E. Zalta (Ed.). https://plato.stanford.edu/archives/fall2018/entries/proof-theory/.

Schütte, K. (1977). Proof theory. Berlin: Springer.

Schwichtenberg, H. (1977). Proof theory. In J. Barwise (Ed.), Handbook of mathematical logic (Vol. 1). Amsterdam: North-Holland.

Shoenfield, J. R. (1967). Mathematical logic. Addison-Wesley.

Spector, C. (1962). Provably recursive functionals in analysis: A consistency proof of analysis by an extension of principles formulated in current intuitionistic mathematics. American Mathematical Society, Providence, 5, 1–27.

Szabo, M. E. (1969). The collected papers of Gerhard Gentzen. Amsterdam: North-Holland.

Tait, W. W. (1968). Normal derivability in classical logic. In The syntax and semantics of infinitary languages, LNM (Vol. 72, pp. 204–236).

Takeuti, G. (1987). Proof theory. Amsterdam: North-Holland.

Yankov, V. A. (1963). On realizable formulas of propositional logic’. Soviet Mathematics Doklady, 4, 1035–1037.

Yankov, V. A. (1995). Dialogue theory of proofs for arithmetic, analysis and set theory. Russian Academy of Science Izvestiya: Mathematics, 44(3), 571–600. [Russian version 1994].

Yankov, V. A. (1997). Dialogue interpretation of the classical predicate calculus. Izvestiya RAN: Seriya Matematicheskaya, 611, 215–224.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2022 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Indrzejczak, A. (2022). Dialogues and Proofs; Yankov’s Contribution to Proof Theory. In: Citkin, A., Vandoulakis, I.M. (eds) V.A. Yankov on Non-Classical Logics, History and Philosophy of Mathematics. Outstanding Contributions to Logic, vol 24. Springer, Cham. https://doi.org/10.1007/978-3-031-06843-0_3

Download citation

DOI: https://doi.org/10.1007/978-3-031-06843-0_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-06842-3

Online ISBN: 978-3-031-06843-0

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)