Abstract

We deal with nested affine variational inequalities, i.e., hierarchical problems involving an affine (upper-level) variational inequality whose feasible set is the solution set of another affine (lower-level) variational inequality. We apply this modeling tool to the multi-portfolio selection problem, where the lower-level variational inequality models the Nash equilibrium problem made up by the different accounts, while the upper-level variational inequality is instrumental to perform a selection over this equilibrium set. We propose a projected averaging Tikhonov-like algorithm for the solution of this problem, which only requires the monotonicity of the variational inequalities for both the upper- and the lower-level in order to converge. Finally, we provide complexity properties.


Keywords

- Multi-portfolio selection

- Nested variational inequality

- Purely hierarchical problem

- Tikhonov method

- Complexity analysis

1 Introduction: Context and Motivation for the Nested Affine Variational Inequalities Model

Nested affine variational inequalities are a flexible modeling tool for many real-world applications, such as the well-known multi-portfolio selection problem (see, e.g., [5]). To introduce the general formulation of the model, we first briefly describe the specific instance of the multi-portfolio optimization problem.

Consider N accounts, indexed by ν = 1, …, N. The budget \(b^\nu \in \mathbb R_+\) of each account ν is invested in K assets of a market. The decision variables \(y^\nu \in Y_\nu \subseteq \mathbb R^K\) stand for the fractions of \(b^\nu\) invested in each asset, where \(Y_\nu\) is a nonempty compact polyhedron containing the feasible portfolios, e.g., the standard simplex. Let \(r\in \mathbb R^K\) be a vector of random variables, where \(r_k\) is the return on asset k ∈ {1, …, K} over a single-period investment. We define \(\mu ^\nu = \mathbb E^\nu (r) \in \mathbb R^K\) as the vector of expected asset returns for account ν, and \(\Sigma ^\nu = \mathbb E^\nu ((r - \mu ^\nu )(r - \mu ^\nu )^\top )\) as the corresponding positive semidefinite covariance matrix. We measure the portfolio income by \(I_\nu\) and the risk by \(R_\nu\), using the portfolio variance as the risk measure: \(I_\nu (y^\nu ) \triangleq b^\nu (\mu ^\nu )^\top y^\nu \), \(R_\nu (y^\nu ) \triangleq \frac {1}{2} (b^\nu )^2 (y^\nu )^\top \Sigma ^\nu y^\nu \).
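To make the two measures concrete, the following minimal Python sketch evaluates \(I_\nu\) and \(R_\nu\) for a single account. The function names and the numerical data (budget, expected returns, covariance matrix, portfolio) are hypothetical and chosen only for illustration.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def portfolio_income(budget: float, mu: Vector, y: Vector) -> float:
    """Income I(y) = b * mu^T y for one account."""
    return budget * sum(m * w for m, w in zip(mu, y))


def portfolio_risk(budget: float, sigma: Matrix, y: Vector) -> float:
    """Risk R(y) = 0.5 * b^2 * y^T Sigma y (portfolio variance)."""
    n = len(y)
    quad = sum(y[i] * sigma[i][j] * y[j] for i in range(n) for j in range(n))
    return 0.5 * budget ** 2 * quad


# Hypothetical data: one account, two assets, portfolio on the simplex.
budget = 100.0
mu = [0.05, 0.10]
sigma = [[0.04, 0.01], [0.01, 0.09]]
y = [0.5, 0.5]

income = portfolio_income(budget, mu, y)
risk = portfolio_risk(budget, sigma, y)
```

With these numbers the income is 100 · (0.025 + 0.05) = 7.5 and the risk is 0.5 · 100² · 0.0375 = 187.5.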

When trades from multiple accounts are pooled for common execution, individual accounts can suffer the market impact that stems from a lack of liquidity. To take account of this transaction cost effect, we introduce a positive semidefinite market impact matrix \(\Omega ^\nu \in \mathbb R^{K \times K}\) whose entry at position (i, j) is the impact of the liquidity of asset i on the liquidity of asset j. For each account ν we consider a linear market impact unitary cost function. The total transaction costs term for ν is:

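The multi-portfolio problem can be formulated as the following Affine Variational Inequality \(\text{AVI}(M^{\text{low}}, d^{\text{low}}, Y)\): find \(y \in Y \triangleq Y_1 \times \cdots \times Y_N \) such that

\[ \left(M^{\text{low}} y + d^{\text{low}}\right)^\top (w - y) \geq 0 \quad \forall\, w \in Y, \tag{1} \]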

where \(d^{\text{low}} \triangleq \left(-b^\nu \mu ^\nu \right)_{\nu = 1}^N \in \mathbb R^{NK}\) and

We assume the matrix \(M^{\text{low}}\) to be positive semidefinite and, in turn, \(\text{AVI}(M^{\text{low}}, d^{\text{low}}, Y)\) to be monotone: these properties can be guaranteed under mild assumptions, see [5, Theorem 3.3]. We denote by \(\text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\) the solution set of \(\text{AVI}(M^{\text{low}}, d^{\text{low}}, Y)\), which is a polyhedron (see [2, Theorem 2.4.13]). Note that \(\text{AVI}(M^{\text{low}}, d^{\text{low}}, Y)\) corresponds to an equivalent Nash Equilibrium Problem (NEP), where the players’ objective functions are convex and quadratic. Since the set \(\text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\) is not necessarily a singleton in the framework we consider, one has to discriminate among the solutions of \(\text{AVI}(M^{\text{low}}, d^{\text{low}}, Y)\) according to some further upper-level criterion. Thus, to model the resulting selection problem, we introduce the monotone nested affine variational inequality \(\text{AVI}(M^{\text{up}}, d^{\text{up}}, \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y))\), that is, the problem of calculating \(y \in \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\) that solves

\[ \left(M^{\text{up}} y + d^{\text{up}}\right)^\top (w - y) \geq 0 \quad \forall\, w \in \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y), \tag{2} \]

where \(M^{\text{up}} \in \mathbb R^{NK \times NK}\), \(M^{\text{up}} \succeq 0\), and \(d^{\text{up}} \in \mathbb R^{NK}\). Problem (2), which has a hierarchical structure, includes as a special instance the minimization of the convex quadratic objective function \(\frac {1}{2} y^\top M^{\text{up}} y + {d^{\text{up}}}^\top y\), where \(M^{\text{up}}\) is symmetric, over \(\text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\). It is also worth mentioning the special instance where the N accounts form an upper-level (jointly convex) NEP to select over \(\text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\); in this case, \(M^{\text{up}}\) turns out to be nonsymmetric. We refer the reader to [1] for further information about NEPs.
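The quadratic special instance can be checked with the standard first-order optimality condition for a convex differentiable function over a convex set. In the short derivation below, \(f\) and \(S\) are shorthand introduced here for illustration only: let \(f(y) \triangleq \frac{1}{2} y^\top M^{\text{up}} y + {d^{\text{up}}}^\top y\) with \(M^{\text{up}}\) symmetric and positive semidefinite, and let \(S \triangleq \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\), which is convex. Then

\[ \nabla f(y) = \tfrac{1}{2}\left(M^{\text{up}} + {M^{\text{up}}}^\top\right) y + d^{\text{up}} = M^{\text{up}} y + d^{\text{up}}, \]

so that \(y \in S\) minimizes \(f\) over \(S\) if and only if

\[ \left(M^{\text{up}} y + d^{\text{up}}\right)^\top (w - y) \geq 0 \quad \forall\, w \in S, \]

which is exactly the nested affine variational inequality with feasible set \(S\). When \(M^{\text{up}}\) is nonsymmetric, the first equality fails and the variational inequality is in general not the optimality condition of a quadratic program.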

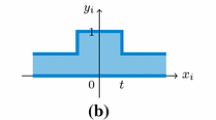

Remark Convergent solution procedures have been devised in the literature (see, e.g., [3, 4]) to address monotone nested AVIs when \(M^{\text{up}}\) is positive semidefinite plus, i.e., \(M^{\text{up}}\) is positive semidefinite and \(y^\top M^{\text{up}} y = 0 \Rightarrow M^{\text{up}} y = 0\) (see [2, Ex. 2.9.24]). Requiring \(M^{\text{up}}\) to be positive semidefinite plus is restrictive: for example, taking NK = 2, any matrix

with \(m_1\), \(m_2\) nonnegative scalars and \(m_{3} \neq - \sqrt {m_1 m_2}\), is positive semidefinite but not positive semidefinite plus. Actually, the class of positive semidefinite plus matrices is only “slightly” larger than the classes of symmetric positive semidefinite matrices and of positive definite matrices.
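As a separate, hand-picked illustration (not the parametrized family of the remark), the skew-symmetric matrix with rows (0, 1) and (−1, 0) satisfies \(y^\top M y = 0\) for every y, so it is positive semidefinite, while \(M y \neq 0\) for every \(y \neq 0\), so it is not positive semidefinite plus. The following minimal Python check uses hypothetical helper names and samples the quadratic form on a small grid.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(M: Matrix, y: Vector) -> Vector:
    """Return the product M y."""
    return [sum(M[r][c] * y[c] for c in range(len(y))) for r in range(len(M))]


def quad_form(M: Matrix, y: Vector) -> float:
    """Return the quadratic form y^T M y."""
    return sum(yr * vr for yr, vr in zip(y, matvec(M, y)))


# Illustrative skew-symmetric matrix (an assumption of this sketch).
M = [[0.0, 1.0], [-1.0, 0.0]]

# The quadratic form is nonnegative (in fact zero) on every sampled point.
grid = [[a / 4.0, b / 4.0] for a in range(-4, 5) for b in range(-4, 5)]
all_nonnegative = all(quad_form(M, y) >= 0.0 for y in grid)

# At y = (1, 0) the quadratic form vanishes but M y does not.
y = [1.0, 0.0]
q = quad_form(M, y)
My = matvec(M, y)
violates_plus = (q == 0.0) and any(v != 0.0 for v in My)
```

Here `violates_plus` being true witnesses that the implication \(y^\top M y = 0 \Rightarrow M y = 0\) fails for this matrix.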

Recently, a projected averaging Tikhonov-like algorithm has been proposed in [6] to cope with monotone nested VIs allowing for matrices \(M^{\text{up}}\) that are not required to be positive semidefinite plus.

We present a solution method for problem (2). We apply the results presented in [6] to the specific instance of monotone nested affine variational inequalities, taking full advantage of some strong properties AVIs enjoy, such as error bound results. This allows us to put forward an algorithm that addresses problems like multi-portfolio selection in a more general framework than the one considered in the literature, where the upper-level operator is invariably assumed to be monotone plus (see, e.g., [5]).

2 The Tikhonov Approach

We require the following mild conditions to hold:

- (A1) \(M^{\text{up}}\) is positive semidefinite;

- (A2) \(M^{\text{low}}\) is positive semidefinite;

- (A3) Y is nonempty and compact.

The set \(\text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\) is nonempty, convex and compact, but not necessarily a singleton, due to (A2) and (A3), see e.g. [2, Section 2.3]. It follows that the feasible set of the nested affine variational inequality (2) is not necessarily a singleton. Moreover, since (A1) only requires \(M^{\text{up}}\) to be positive semidefinite, the solution set of (2) can include multiple points.

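Let us introduce the Tikhonov operator:

\[ \Phi_\tau(y) \triangleq \left(M^{\text{low}} y + d^{\text{low}}\right) + \frac{1}{\tau}\left(M^{\text{up}} y + d^{\text{up}}\right). \]

For any τ > 0, by assumptions (A1) and (A2), \(\Phi_\tau\) is monotone and affine.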

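The following quantities, which are finite thanks to (A3), will be useful in the forthcoming analysis:

\[ H \triangleq \max_{y \in Y} \left\|M^{\text{up}} y + d^{\text{up}}\right\|_2, \qquad D \triangleq \max_{v, y \in Y} \|v - y\|_2. \]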

We propose a Linear version of the Projected Averaging Tikhonov Algorithm (L-PATA) to compute solutions of (2).

Algorithm 1: Linear version of the Projected Averaging Tikhonov Algorithm (L-PATA)

Index i refers to the outer iterations, which occur whenever the condition in step (S.4) is verified and which correspond to the (approximate) solutions \(w^{i+1}\) of the AVI subproblems

\[ \Phi_\tau(y)^\top (w - y) \geq -\varepsilon_{\text{sub}} \quad \forall\, w \in Y, \tag{3} \]

with \(\varepsilon_{\text{sub}} = i^{-2}\) and τ = i. The sequence \(\{y^k\}\) includes all the points obtained by making classical projection steps with the given diminishing stepsize rule, see step (S.2). The sequence \(\{z^k\}\) consists of the inner iterations needed to compute (approximate) solutions of the AVI subproblem (3), and it is obtained by performing a weighted average on the points \(y^j\), see step (S.3). Index l lets the sequence of the stepsizes restart at every outer iteration, while considering solely the points \(y^j\) belonging to the current subproblem for the computation of \(z^{k+1}\). We remark that the condition in step (S.4) only requires the solution of a linear program, since Y is polyhedral.
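The steps described above can be sketched in Python as follows. This is a minimal sketch under several assumptions that are not taken from the paper: Y is the box \([0,1]^n\) (so the projection is a componentwise clipping and the linear program in step (S.4) is separable), the Tikhonov operator has the form \(\Phi_\tau(y) = (M^{\text{low}} y + d^{\text{low}}) + \frac{1}{\tau}(M^{\text{up}} y + d^{\text{up}})\), the stepsize is \(1/(2\sqrt{k - l + 1})\), and the average z is weighted by the stepsizes. All function names and the toy data are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def affine(M: Matrix, d: Vector, y: Vector) -> Vector:
    """Return M y + d."""
    n = len(y)
    return [sum(M[r][c] * y[c] for c in range(n)) + d[r] for r in range(n)]


def tikhonov(M_up: Matrix, d_up: Vector, M_low: Matrix, d_low: Vector,
             y: Vector, tau: float) -> Vector:
    """Assumed operator: (M_low y + d_low) + (1/tau) (M_up y + d_up)."""
    f_low = affine(M_low, d_low, y)
    f_up = affine(M_up, d_up, y)
    return [a + b / tau for a, b in zip(f_low, f_up)]


def box_gap(g: Vector, z: Vector, lo: float, hi: float) -> float:
    """min over y in [lo, hi]^n of g^T (y - z): a separable linear program."""
    return sum(gj * ((lo if gj > 0 else hi) - zj) for gj, zj in zip(g, z))


def l_pata_sketch(M_up: Matrix, d_up: Vector, M_low: Matrix, d_low: Vector,
                  y0: Vector, lo: float = 0.0, hi: float = 1.0,
                  max_iter: int = 2000) -> Tuple[Vector, int]:
    """Return the last outer iterate w and the current outer index i."""
    y, w = list(y0), list(y0)
    i, l = 1, 0                      # outer index and restart index
    num, den = [0.0] * len(y), 0.0   # running weighted sum for the average z
    for k in range(max_iter):
        tau, eps = float(i), float(i) ** -2
        gamma = 1.0 / (2.0 * math.sqrt(k - l + 1))   # assumed stepsize rule
        phi = tikhonov(M_up, d_up, M_low, d_low, y, tau)
        # Projection step onto the box (clipping).
        y = [min(hi, max(lo, yj - gamma * pj)) for yj, pj in zip(y, phi)]
        # Weighted average of the points of the current subproblem.
        den += gamma
        num = [nj + gamma * yj for nj, yj in zip(num, y)]
        z = [nj / den for nj in num]
        # Outer test: z solves the subproblem up to the tolerance eps.
        g = tikhonov(M_up, d_up, M_low, d_low, z, tau)
        if box_gap(g, z, lo, hi) >= -eps:
            w, i, l = z, i + 1, k + 1
            num, den = [0.0] * len(y), 0.0
    return w, i


# Toy data: the lower-level solution set is the segment y1 + y2 = 1 in the box.
M_low, d_low = [[1.0, 1.0], [1.0, 1.0]], [-1.0, -1.0]
M_up, d_up = [[1.0, 0.0], [0.0, 1.0]], [-0.5, 0.0]
w, outer = l_pata_sketch(M_up, d_up, M_low, d_low, [0.5, 0.5])
```

For this toy instance the upper level amounts to minimizing \(\frac{1}{2}\|y\|^2 - 0.5\,y_1\) over the segment \(y_1 + y_2 = 1\), whose minimizer can be computed by hand as (0.75, 0.25); the returned `w` is the last point accepted by the outer test, so by construction it solves the subproblem with \(\tau\) = `outer - 1` up to the tolerance `(outer - 1) ** -2`.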

We now deal with the convergence properties of L-PATA. With the following result we relate (approximate) solutions of the AVI subproblem (3), where \(\varepsilon_{\text{sub}} \geq 0\), to approximate solutions of problem (2).

Proposition 1

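Assume conditions (A1)–(A3) to hold, and let y ∈ Y satisfy (3) with τ > 0 and \(\varepsilon_{\text{sub}} \geq 0\). It holds that

\[ \left(M^{\text{up}} y + d^{\text{up}}\right)^\top (w - y) \geq -\varepsilon_{\text{up}} \quad \forall\, w \in \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y), \tag{4} \]

with \(\varepsilon_{\text{up}} = \varepsilon_{\text{sub}}\, \tau\), and

\[ \left(M^{\text{low}} y + d^{\text{low}}\right)^\top (w - y) \geq -\varepsilon_{\text{low}} \quad \forall\, w \in Y, \tag{5} \]

with \(\varepsilon_{\text{low}} = \varepsilon_{\text{sub}} + \frac {1}{\tau } H D\).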

Proof

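We have for all \(w \in \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\):

\[ \begin{aligned} \left(M^{\text{up}} y + d^{\text{up}}\right)^\top (w - y) &= \tau \left[\Phi_\tau(y) - \left(M^{\text{low}} y + d^{\text{low}}\right)\right]^\top (w - y) \\ &\geq -\varepsilon_{\text{sub}}\, \tau - \tau \left(M^{\text{low}} y + d^{\text{low}}\right)^\top (w - y) \\ &\geq -\varepsilon_{\text{sub}}\, \tau - \tau \left(M^{\text{low}} w + d^{\text{low}}\right)^\top (w - y) \\ &\geq -\varepsilon_{\text{sub}}\, \tau, \end{aligned} \]

where the first inequality is due to (3), the second one comes from (A2), and the last one is true because y ∈ Y and then \(\left (M^{\text{low}}w + d^{\text{low}}\right )^\top (y-w) \geq 0\). Hence, (4) is true.

Moreover, we have for all w ∈ Y:

\[ \begin{aligned} \left(M^{\text{low}} y + d^{\text{low}}\right)^\top (w - y) &= \left[\Phi_\tau(y) - \frac{1}{\tau}\left(M^{\text{up}} y + d^{\text{up}}\right)\right]^\top (w - y) \\ &\geq -\varepsilon_{\text{sub}} - \frac{1}{\tau}\left(M^{\text{up}} y + d^{\text{up}}\right)^\top (w - y) \\ &\geq -\varepsilon_{\text{sub}} - \frac{1}{\tau} H D, \end{aligned} \]

where the first inequality is due to (3) and the second one follows from the Cauchy–Schwarz inequality, since \(\|M^{\text{up}} y + d^{\text{up}}\|_2 \leq H\) and \(\|w - y\|_2 \leq D\). Therefore, we get (5). □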
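The two tolerances of Proposition 1 pull in opposite directions as τ grows, which is why the algorithm couples \(\varepsilon_{\text{sub}} = i^{-2}\) with τ = i. The following minimal Python sketch evaluates both tolerances; the function name and the values of H and D are hypothetical.

```python
from typing import Tuple


def prop1_tolerances(eps_sub: float, tau: float, H: float, D: float) -> Tuple[float, float]:
    """Return (eps_up, eps_low) = (eps_sub * tau, eps_sub + H * D / tau)."""
    eps_up = eps_sub * tau
    eps_low = eps_sub + H * D / tau
    return eps_up, eps_low


# Hypothetical bounds H and D; outer iteration i = 10 of the algorithm.
i = 10
eps_up, eps_low = prop1_tolerances(eps_sub=i ** -2, tau=float(i), H=2.0, D=1.5)
```

With these values \(\varepsilon_{\text{up}} = 10^{-2} \cdot 10 = 0.1\) and \(\varepsilon_{\text{low}} = 0.01 + 3/10 = 0.31\); both behave like \(1/i\) and vanish as i grows.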

Here follows the convergence result for L-PATA.

Theorem 1

Assume conditions (A1)–(A3) to hold. Every limit point of the sequence \(\{w^i\}\) generated by L-PATA is a solution of problem (2).

Proof

First of all, we show that i →∞. Assume by contradiction that this is false, hence an index \(\bar k\) exists such that either \(\bar k = 0\) or the condition in step (S.4) is satisfied at the iteration \(\bar k - 1\), and the condition in step (S.4) is violated for every \(k \geq \bar k\). In this case, it is true that \(i \to \bar \imath \), and then \(\tau ^k = \bar \tau \triangleq \bar \imath \) for every \(k \ge \bar k\).

For every \(j \in [\bar k, k]\), and for any v ∈ Y , we have

and, in turn,

Summing, we get

which implies

where the last inequality holds thanks to the monotonicity of \(\Phi _{\bar \tau }\). Denoting by z ∈ Y any limit point of the sequence \(\{z^k\}\) and taking the limit k → ∞ in the latter relation along a suitable subsequence, the following inequality holds true:

because \(\sum _{j=\bar k}^{\infty } \frac {1}{2(j-\bar k)^{0.5}} = +\infty \) and \(\left (\sum _{j=\bar k}^{\infty } \frac {1}{4(j-\bar k)}\right )/\left (\sum _{j=\bar k}^{\infty } \frac {1}{2(j-\bar k)^{0.5}}\right ) = 0\), due to [6, Proposition 4], and then z is a solution of the dual problem

Hence, the sequence \(\{z^k\}\) converges to a solution of problem (3) with \(\varepsilon_{\text{sub}} = 0\) and \(\tau = \bar \tau \), see e.g. [2, Theorem 2.3.5], in contradiction to \(\min _{y \in Y} \Phi _{\bar \tau }(z^{k+1})^\top (y - z^{k+1}) < - \varepsilon ^k = - {\bar \imath }^{-2}\) for every \(k \ge \bar k\). Therefore we can say that i → ∞.

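Consequently, the algorithm produces an infinite sequence \(\{w^i\}\) such that \(w^{i+1} \in Y\) and

\[ \Phi_i(w^{i+1})^\top (y - w^{i+1}) \geq -i^{-2} \quad \forall\, y \in Y, \]

that is, (3) holds at \(w^{i+1}\) with \(\varepsilon_{\text{sub}} = i^{-2}\) and τ = i. By Proposition 1, specifically from (4) and (5), we obtain

\[ \left(M^{\text{up}} w^{i+1} + d^{\text{up}}\right)^\top (y - w^{i+1}) \geq -i^{-1} \quad \forall\, y \in \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y), \]

and

\[ \left(M^{\text{low}} w^{i+1} + d^{\text{low}}\right)^\top (y - w^{i+1}) \geq -i^{-2} - \frac{1}{i} H D \quad \forall\, y \in Y. \]

Taking the limit i → ∞ we get the desired convergence property for every limit point of \(\{w^i\}\). □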

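We consider the natural residual map for the lower-level \(\text{AVI}(M^{\text{low}}, d^{\text{low}}, Y)\)

\[ V(y) \triangleq \left\| P_Y\!\left(y - \left(M^{\text{low}} y + d^{\text{low}}\right)\right) - y \right\|_2, \]

where \(P_Y\) denotes the Euclidean projection onto Y. Function V is continuous and nonnegative, as recalled in [4]. Also, V(y) = 0 if and only if \(y \in \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\). Condition

\[ V(y) \leq \widehat \varepsilon_{\text{low}}, \tag{9} \]

with \(\widehat \varepsilon _{\text{low}} \geq 0\), is an alternative to (5) for measuring the feasibility of a point for problem (2).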
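The natural residual is cheap to evaluate whenever the projection onto Y is available in closed form. The minimal Python sketch below assumes that V has the standard form \(V(y) = \|P_Y(y - (M^{\text{low}} y + d^{\text{low}})) - y\|_2\) and that Y is the box \([0,1]^n\), so the projection is a componentwise clipping; the function name and the toy data are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def natural_residual(M_low: Matrix, d_low: Vector, y: Vector,
                     lo: float = 0.0, hi: float = 1.0) -> float:
    """V(y) = || P_Y(y - (M_low y + d_low)) - y ||_2 with Y = [lo, hi]^n."""
    n = len(y)
    f = [sum(M_low[r][c] * y[c] for c in range(n)) + d_low[r] for r in range(n)]
    # Projection onto the box is a componentwise clipping.
    p = [min(hi, max(lo, yj - fj)) for yj, fj in zip(y, f)]
    return math.sqrt(sum((pj - yj) ** 2 for pj, yj in zip(p, y)))


# Toy lower level: its solution set is the segment y1 + y2 = 1 in the box.
M_low = [[1.0, 1.0], [1.0, 1.0]]
d_low = [-1.0, -1.0]

v_solution = natural_residual(M_low, d_low, [0.75, 0.25])  # a lower-level solution
v_corner = natural_residual(M_low, d_low, [0.0, 0.0])      # not a solution
```

At the point (0.75, 0.25) the lower-level operator vanishes and the residual is zero, whereas at the corner (0, 0) the projected step lands on (1, 1) and the residual equals \(\sqrt{2}\).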

Remark Since both the variational inequalities (1) and (2) are affine, \(\varepsilon_{\text{up}}\) and either \(\varepsilon_{\text{low}}\) or \(\widehat \varepsilon _{\text{low}}\) give actual upper bounds on the distances between y and \(\text{SOL}(M^{\text{up}}, d^{\text{up}}, \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y))\) and \(\text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\), respectively.

Theorem 2 If \(y \in \text{SOL}(M^{\text{low}}, d^{\text{low}}, Y)\) satisfies (4), then there exists \(c_{\text{up}} > 0\) such that

If y ∈ Y satisfies (5), then there exists \(c_{\text{low}} > 0\) such that

If y ∈ Y satisfies (9), then there exists \(\widehat c_{\text{low}}> 0\) such that

Proof The claim follows from [2, Proposition 6.3.3] and [6, Proposition 3]. □

In view of Theorem 2, conditions (4) and either (5) or (9) define points that are approximate solutions for problem (2), also from a geometrical perspective. In particular, the lower the values of \(\varepsilon_{\text{up}}\) and either \(\varepsilon_{\text{low}}\) or \(\widehat \varepsilon _{\text{low}}\), the closer the point gets to the solution set of the nested affine variational inequality (2).

We give an upper bound on the number of iterations needed to drive both the upper-level error \(\varepsilon_{\text{up}}\), given in (4), and the lower-level error \(\widehat \varepsilon _{\text{low}}\), given in (9), under some prescribed tolerance δ.

Theorem 3

Assume conditions (A1)–(A3) to hold and, without loss of generality, \(L_\Phi \triangleq \|M^{\text{up}}+M^{\text{low}}\|_2 < 1\). Consider L-PATA. Given a precision δ ∈ (0, 1), let us define the quantity

Then, the upper-level approximate problem (4) is solved for \(y = z^{k+1}\) with \(\varepsilon_{\text{up}} = \delta\), the lower-level approximate problem (9) is solved for \(y = z^{k+1}\) with \(\widehat \varepsilon _{\text{low}} = \delta \), and the condition in step (S.4) is satisfied in at most

iterations k, where η > 0 is a small number, and

Proof

See the proof of [6, Theorem 2]. □

References

[1] Facchinei, F., Kanzow, C.: Generalized Nash equilibrium problems. Ann. Oper. Res. 175(1), 177–211 (2010)

[2] Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer, New York (2003)

[3] Facchinei, F., Pang, J.S., Scutari, G., Lampariello, L.: VI-constrained hemivariational inequalities: distributed algorithms and power control in ad-hoc networks. Math. Program. 145(1–2), 59–96 (2014)

[4] Lampariello, L., Neumann, C., Ricci, J.M., Sagratella, S., Stein, O.: An explicit Tikhonov algorithm for nested variational inequalities. Comput. Optim. Appl. 77(2), 335–350 (2020)

[5] Lampariello, L., Neumann, C., Ricci, J.M., Sagratella, S., Stein, O.: Equilibrium selection for multi-portfolio optimization. Eur. J. Oper. Res. 295(1), 363–373 (2021)

[6] Lampariello, L., Priori, G., Sagratella, S.: On the solution of monotone nested variational inequalities. Technical report, Preprint (2021)


© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lampariello, L., Priori, G., Sagratella, S. (2022). On Nested Affine Variational Inequalities: The Case of Multi-Portfolio Selection. In: Amorosi, L., Dell’Olmo, P., Lari, I. (eds) Optimization in Artificial Intelligence and Data Sciences. AIRO Springer Series, vol 8. Springer, Cham. https://doi.org/10.1007/978-3-030-95380-5_3


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-95379-9

Online ISBN: 978-3-030-95380-5

eBook Packages: Mathematics and Statistics (R0)