Abstract

We consider linear magneto-quasistatic field equations which arise in the simulation of low-frequency electromagnetic devices coupled to electrical circuits. A finite element discretization of such equations on 3D domains leads to a singular system of differential-algebraic equations. First, we study the structural properties of such a system and present a new regularization approach based on projecting out the singular state components. Furthermore, we consider a Lyapunov-based balanced truncation model reduction method which preserves stability and passivity. By making use of the underlying structure of the problem, we develop an efficient model reduction algorithm. Numerical experiments demonstrate its performance on a test example.


Keywords

- Magneto-quasistatic equations

- Differential-algebraic equations

- Matrix pencils

- Model order reduction

- Balanced truncation

- Stability

- Passivity

1 Introduction

Nowadays, integrated circuits play an increasingly important role. Modelling of electromagnetic effects in high-frequency and high-speed electronic systems leads to coupled field-circuit models of high complexity. The development of efficient, fast and accurate simulation tools for such models is of great importance in the computer-aided design of electromagnetic structures offering significant savings in production cost and time.

In this paper, we consider model order reduction of linear magneto-quasistatic (MQS) systems obtained from Maxwell’s equations by assuming that the contribution of displacement current is negligible compared to the conductive currents. Such systems are commonly used for modeling of low-frequency electromagnetic devices like transformers, induction sensors and generators. Due to the presence of non-conducting subdomains, MQS models take the form of partial differential-algebraic equations whose dynamics are restricted to a manifold described by algebraic constraints. A spatial discretization of MQS systems using the finite integration technique (FIT) [32] or the finite element method (FEM) [5, 19, 23] leads to differential-algebraic equations (DAEs) which are singular in the 3D case. The structural analysis and numerical treatment of singular DAEs face serious challenges because the inhomogeneity has to satisfy certain restrictive conditions to guarantee the existence of solutions and/or the solution space is infinite-dimensional. To overcome these difficulties, different regularization techniques have been developed for MQS systems [6, 8, 9, 15]. Here, we propose a new regularization approach which is based on a special state space transformation and withdrawal of overdetermined state components and redundant equations.

Furthermore, we exploit the special block structure of the regularized MQS system to determine the deflating subspaces of the underlying matrix pencil corresponding to zero and infinite eigenvalues. This makes it possible to extend the balanced truncation model reduction method to 3D MQS problems. Similarly to [17, 26], our approach relies on projected Lyapunov equations and preserves passivity in a reduced-order model. It should be noted that the balanced truncation method presented in [17] for 2D and 3D gauging-regularized MQS systems cannot be applied to the regularized system obtained here, since it is stable, but not asymptotically stable. To get rid of this problem, we proceed as in [26] and project out state components corresponding not only to the eigenvalue at infinity, but also to zero eigenvalues. Our method is based on computing certain subspaces of incidence matrices related to the FEM discretization which can be determined by using efficient graph-theoretic algorithms developed in [16].

2 Model Problem

We consider a system of MQS equations in vector potential formulation given by

where \(\mathbf {A}:\varOmega \times (0,T)\rightarrow \mathbb {R}^3\) is the magnetic vector potential, \(\chi :\varOmega \rightarrow \mathbb {R}^{3\times \, m}\) is a divergence-free winding function, \(\iota :(0,T)\rightarrow \mathbb {R}^m\) and \(u:(0,T)\rightarrow \mathbb {R}^m\) are the electrical current and voltage through the stranded conductors with m terminals. Here, \(\varOmega \subset \mathbb {R}^3\) is a bounded simply connected domain with a Lipschitz boundary \(\partial \varOmega \), and \(n_o\) is an outer unit normal vector to \(\partial \varOmega \). The MQS system (1) is obtained from Maxwell’s equations by neglecting the contribution of the displacement currents. It is used to study the dynamical behavior of magnetic fields in low-frequency applications [14, 27]. The integral equation in (1) with a symmetric, positive definite resistance matrix \(R\in \mathbb {R}^{m\times m}\) results from Faraday’s induction law. This equation describes the coupling of the electromagnetic devices to an external circuit [28]. Thereby, the voltage u is assumed to be given and the current \(\iota \) has to be determined. In this case, the MQS system (1) can be considered as a control system with the input u, the state \([\mathbf {A}^T,\iota ^T]^T\) and the output \(y=\iota \).

We assume that the domain \(\varOmega \) is composed of the conducting and non-conducting subdomains \(\varOmega _1\) and \(\varOmega _2\), respectively, such that \(\overline{\varOmega }=\overline{\varOmega }_1\cup \overline{\varOmega }_2\), \(\varOmega _1\cap \varOmega _2=\emptyset \) and \(\overline{\varOmega }_1\subset \varOmega \). Furthermore, we restrict ourselves to linear isotropic media implying that the electrical conductivity \(\sigma \) and the magnetic reluctivity \(\nu \) are scalar functions of the spatial variable only. The electrical conductivity \(\sigma :\varOmega \rightarrow \mathbb {R}\) is given by

with some constant \(\sigma _1>0\), whereas the magnetic reluctivity \(\nu :\varOmega \rightarrow \mathbb {R}\) is bounded, measurable and uniformly positive such that \(\nu (\xi )\ge \nu _0>0\) for a.e. \(\xi \in \varOmega \). Note that since \(\sigma \) vanishes on the non-conducting subdomain \(\varOmega _2\), the initial condition \(\mathbf {A}_0\) can only be prescribed in the conducting subdomain \(\varOmega _1\). Finally, for the winding function \(\chi =[\chi _1,\ldots ,\chi _m]\), we assume that

These conditions mean that the conductor terminals are located in \(\varOmega _2\) and they do not intersect [28].

2.1 FEM Discretization

First, we present a weak formulation for the MQS system (1). For this purpose, we multiply the first equation in (1) with a test function \(\phi \in H_0(\text{ curl }, \varOmega )\) and integrate it over the domain \(\varOmega \). Using Green’s formula, we obtain the variational problem

The existence, uniqueness and regularity results for this problem can be found in [25].

For a spatial discretization of (4), we use Nédélec edge and face elements as introduced in [23]. Let \(\mathcal {T}_h(\varOmega )\) be a regular simplicial triangulation of \(\varOmega \), and let \(n_n\), \(n_e\) and \(n_f\) denote the number of nodes, edges and facets, respectively. Furthermore, let \(\varPhi ^e=[ \phi _1^e, \ldots , \phi _{n_e}^e]\) and \(\varPhi ^f=[ \phi _1^f, \ldots , \phi _{n_f}^f]\) be the edge and face basis functions, respectively, which span the corresponding finite element spaces. They are related via

where \(C\in \mathbb {R}^{n_f\times n_e}\) is a discrete curl matrix with entries

see [5, Sect. 5]. Substituting an approximation to the magnetic vector potential

into the variational Eq. (4) and testing it with \(\phi _i^e\), we obtain a linear DAE system

where \(a=\begin{bmatrix} \alpha _1, \ldots , \alpha _{n_e} \end{bmatrix}^T\) and the conductivity matrix \(M\in \mathbb {R}^{n_e\times \,n_e}\), the curl-curl matrix \(K\in \mathbb {R}^{n_e\times \,n_e}\) and the coupling matrix \(X\in \mathbb {R}^{n_e\times \,m}\) have entries

Note that the matrices M and K are symmetric, positive semidefinite. Using the relation (5), we can rewrite the matrix K as

where the entries of the symmetric and positive definite matrix \(M_\nu \) are given by

The coupling matrix X can also be represented in a factored form using the discrete curl matrix C. This can be achieved by taking into account the divergence-free property of the winding function \(\chi \), which implies \(\chi =\nabla \times \gamma \) for a certain matrix-valued function

Using the cross product rule, Gauss’s theorem as well as relations (5) and \(\phi _i^e\times n_o=0\) on \(\partial \varOmega \), we obtain

Then the matrix X can be written as \(X=C^T{\varUpsilon }\), where the entries of \({\varUpsilon }\in \mathbb {R}^{n_f\times m}\) are given by

Note that due to (3), the matrix X has full column rank. This immediately implies that \({\varUpsilon }\) is also of full column rank.

3 Properties of the FEM Model

In this section, we study the structural and physical properties of the FEM model (6). We start with reordering the state vector \(a=[a_1^T,\, a_2^T]^T\) with \(a_1\in \mathbb {R}^{n_1}\) and \(a_2\in \mathbb {R}^{n_2}\) according to the conducting and non-conducting subdomains \(\varOmega _1\) and \(\varOmega _2\). Then the matrices M, K, X and C can be partitioned into blocks as

where \(M_{11}^{}\in \mathbb {R}^{n_1\times \, n_1}\) is symmetric, positive definite, \(K_{11}^{}\in \mathbb {R}^{n_1\times \, n_1}\), \(K_{21}^{}=K_{12}^T\in \mathbb {R}^{n_2\times \, n_1}\), \(K_{22}\in \mathbb {R}^{n_2\times \, n_2}\), \(X_1\in \mathbb {R}^{n_1\times \, m}\), \(X_2\in \mathbb {R}^{n_2\times \, m}\), \(C_1\in \mathbb {R}^{n_f\times \, n_1}\), and \(C_2\in \mathbb {R}^{n_f\times \, n_2}\). Note that conditions (2) and (3) imply that \(X_1=0\) and \(X_2\) has full column rank. In what follows, however, we consider for completeness a general block \(X_1\). Solving the second equation in (6) for \( \iota =-R^{-1}X^T\tfrac{\mathrm{d}}{\mathrm{d}t} a +R^{-1}u \) and inserting this vector into the first equation in (6) yields the DAE control system

with the matrices

Using the block structure of the matrices E and K, we can determine their common kernel.

Theorem 1

Assume that \(M_{11}\), R and \(M_\nu \) are symmetric and positive definite. Let the columns of \(Y_{C_2}\in \mathbb {R}^{n_2\times \, k_2}\) form a basis of \(\ker (C_2)\). Then \(\ker (E)\cap \ker (K)\) is spanned by columns of the matrix \(\begin{bmatrix} 0,\, Y_{C_2}^T\end{bmatrix}^T\).

Proof

Assume that \(w=\begin{bmatrix} w_1^T,\, w_2^T\end{bmatrix}^T\in \ker (E)\cap \ker (K)\). Then due to the positive definiteness of \(M_{11}\) and R, it follows from \(w^T E w=0\) with E as in (9) that

Therefore, \(w_1=0\) and \({\varUpsilon }^TC_2w_2=0\). Moreover, using the positive definiteness of \(M_\nu \), we get from \(w^T Kw=0\) with \(w_1=0\) that \(C_2 w_2=0\). This means that \(w_2\in \ker (C_2)=\text{ im }(Y_{C_2})\), i.e., \(w_2=Y_{C_2}z\) for some vector z. Thus, \(w=[ 0,\, Y_{C_2}^T ]^Tz\).

Conversely, assume that \(w=[0,\, Y_{C_2}^T]^T\!z\) for some \(z\in \mathbb {R}^{k_2}\). Then using (9) and \(C_2^{}Y_{C_2}=0\), we obtain \(E w=0\) and \(Kw=0\). Thus, \(w\in \ker (E)\cap \ker (K)\). \(\square \)
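The statement of Theorem 1 can be checked numerically on small random data. The following is only a sketch under stated assumptions: the displayed matrices are not reproduced here, so the code assumes \(E=M+XR^{-1}X^T\) (as obtained by eliminating \(\iota \) from (6)), \(K=C^TM_\nu C\), \(X=C^T{\varUpsilon }\) and \(M=\text{diag}(M_{11},0)\). The sizes, the random matrices and the helper `null_space` are illustrative and not part of the original method.

```python
import numpy as np

def null_space(A, tol=1e-10):
    """Orthonormal basis of ker(A), read off from the SVD of A."""
    _, s, vt = np.linalg.svd(A)
    rank = int(np.sum(s > tol * s[0]))
    return vt[rank:].T

rng = np.random.default_rng(0)
n1, n2, nf, m, r = 3, 4, 6, 1, 2       # r = rank(C2), hence k2 = n2 - r

C1 = rng.standard_normal((nf, n1))
# Rank-deficient block C2, so that ker(C2) is nontrivial.
C2 = rng.standard_normal((nf, r)) @ rng.standard_normal((r, n2))
C = np.hstack([C1, C2])

Upsilon = rng.standard_normal((nf, m))
X = C.T @ Upsilon                      # factored coupling matrix X = C^T Upsilon
R = np.eye(m)                          # symmetric positive definite
M_nu = np.eye(nf)                      # symmetric positive definite
M = np.zeros((n1 + n2, n1 + n2))
M[:n1, :n1] = 2.0 * np.eye(n1)         # M11 > 0, zero on the non-conducting part

E = M + X @ np.linalg.solve(R, X.T)    # assumed form of E
K = C.T @ M_nu @ C                     # curl-curl matrix

Y_C2 = null_space(C2)                  # basis of ker(C2)
k2 = Y_C2.shape[1]
W = np.vstack([np.zeros((n1, k2)), Y_C2])   # candidate basis [0; Y_C2]

# ker(E) ∩ ker(K) is the kernel of the stacked matrix [E; K].
common = null_space(np.vstack([E, K]))
print("dim ker(C2) =", k2, " dim of common kernel =", common.shape[1])
```

In this sketch the common kernel has the same dimension as \(\ker (C_2)\), and the columns of \([0,\,Y_{C_2}^T]^T\) are annihilated by both E and K.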

It follows from this theorem that if \(C_2\) has a nontrivial kernel, then

\[\det (\lambda E+K)=0\]

for all \(\lambda \in \mathbb {C}\) implying that the pencil \(\lambda E+K\) (and also the DAE system (8)) is singular. This may cause difficulties with the existence and uniqueness of the solution of (8). In the next section, we will see that the divergence-free condition of the winding function \(\chi \) guarantees that (8) is solvable, but the solution is not unique. This is a consequence of nonuniqueness of the magnetic vector potential \(\mathbf {A}\) which is defined up to a gradient of an arbitrary scalar function.

3.1 Regularization

Our goal is now to regularize the singular DAE system (8). In the literature, several regularization approaches have been proposed for semidiscretized 3D MQS systems. In the context of the FIT discretization, the grad-div regularization of MQS systems has been considered in [8, 9] which is based on a spatial discretization of the Coulomb gauge equation \(\nabla \cdot {\mathbf{A}}=0\). For other regularization techniques, we refer to [6, 7, 15, 22]. Here, we present a new regularization method relying on a special coordinate transformation and elimination of the over- and underdetermined parts.

To this end, we consider a matrix \(\hat{Y}_{C_2}\in \mathbb {R}^{n_2\times \, (n_2-k_2)}\) whose columns form a basis of \(\text{ im }(C_2^T)\). Then the matrix

is nonsingular. Multiplying the state equation in (8) from the left with \(T^T\) and introducing a new state vector

the system matrices of the transformed system take the form

This implies that the components of \(a_{22}\) are actually not involved in the transformed system and, therefore, they can be chosen freely. Moreover, the third equation \(0=0\) is trivially satisfied showing that system (8) is solvable. Removing this equation, we obtain a regular DAE system

with \(x_r=[a_1^T,\; a_{21}^T]^T\in \mathbb {R}^{n_r}\), \(n_r=n_1+n_2-k_2\), and

where

The regularity of \(\lambda E_r-A_r\) follows from the symmetry of \(E_r\) and \(A_r\) and the fact that \(\text{ ker }(E_r)\cap \text{ ker }(A_r)=\{0\}\).
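The regularization step can be illustrated on a small random example. This is a sketch under assumptions, since the displayed formulas are not reproduced here: it takes \(T=\text{diag}(I,[\hat{Y}_{C_2},\,Y_{C_2}])\), \(E=M+XR^{-1}X^T\) with \(R=I\), \(K=C^TC\) (that is, \(M_\nu =I\)), and obtains \(E_r\) and \(A_r\) by deleting the rows and columns belonging to \(a_{22}\). All sizes and random data are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
n1, n2, nf, m, r = 3, 4, 6, 1, 2

C1 = rng.standard_normal((nf, n1))
C2 = rng.standard_normal((nf, r)) @ rng.standard_normal((r, n2))  # rank r < n2
C = np.hstack([C1, C2])
X = C.T @ rng.standard_normal((nf, m))     # X = C^T Upsilon

M = np.zeros((n1 + n2, n1 + n2))
M[:n1, :n1] = np.eye(n1)                   # M11 = I
E = M + X @ X.T                            # assumed E with R = I
K = C.T @ C                                # assumed K with M_nu = I

# SVD of C2: leading right singular vectors span im(C2^T),
# the remaining ones span ker(C2).
_, s, vt = np.linalg.svd(C2)
rank = int(np.sum(s > 1e-10 * s[0]))
Y_hat = vt[:rank].T                        # basis of im(C2^T)
Y = vt[rank:].T                            # basis of ker(C2)

# Assumed transformation T = diag(I, [Y_hat, Y]).
T = np.block([[np.eye(n1), np.zeros((n1, n2))],
              [np.zeros((n2, n1)), np.hstack([Y_hat, Y])]])
Et = T.T @ E @ T
Kt = T.T @ K @ T

# Drop the trailing block that belongs to the free component a_22.
n_r = n1 + rank
E_r = Et[:n_r, :n_r]
A_r = -Kt[:n_r, :n_r]

# Regularity: lambda*E_r - A_r is nonsingular for lambda = 1.
min_eig = np.linalg.eigvalsh(E_r - A_r).min()
print("n_r =", n_r, " smallest eigenvalue of E_r - A_r:", min_eig)
```

The rows and columns of the transformed matrices associated with \(a_{22}\) vanish, and \(E_r-A_r\) is positive definite, so the reduced pencil is regular in this example.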

3.2 Stability

Stability is an important physical property of dynamical systems characterizing the sensitivity of the solution to perturbations in the data. A pencil \(\lambda E_r-A_r\) is called stable if all its finite eigenvalues have non-positive real part, and eigenvalues on the imaginary axis are semi-simple in the sense that they have the same algebraic and geometric multiplicity. In this case, any solution of the DAE system (11) with \(u=0\) is bounded. Furthermore, \(\lambda E_r-A_r\) is called asymptotically stable if all its finite eigenvalues lie in the open left complex half-plane. This implies that any solution of (11) with \(u=0\) satisfies \(x_r(t)\rightarrow 0\) as \(t\rightarrow \infty \).

The following theorem establishes a quasi-Weierstrass canonical form for the pencil \(\lambda E_r-A_r\) which immediately provides information on the finite spectrum and index of this pencil.

Theorem 2

Let the matrices \(E_r\), \(A_r\in \mathbb {R}^{n_r\times n_r}\) be as in (13). Then there exists a nonsingular matrix \(W\in \mathbb {R}^{n_r\times n_r}\) which transforms the pencil \(\lambda E_r-A_r\) into the quasi-Weierstrass canonical form

where \(E_{11}\), \(-A_{11}\in \mathbb {R}^{n_s\times n_s}\) are symmetric, positive definite, and \(n_s+n_0+n_\infty =n_r\). Furthermore, the pencil \(\lambda E_r-A_r\) has index one and all its finite eigenvalues are real and non-positive.

Proof

First, note that the existence of a nonsingular matrix W transforming \({\lambda E_r-A_r}\) into (14) immediately follows from the general results for Hermitian pencils [30]. However, here, we present a constructive proof to better understand the structural properties of the pencil \(\lambda E_r-A_r\).

Let the columns of the matrices \(Y_\sigma \in \mathbb {R}^{n_r\times \, n_\infty }\) and \(Y_\nu \in \mathbb {R}^{n_r\times \, n_0}\) form bases of \(\text{ ker }(F_\sigma ^T)\) and \(\text{ ker }(F_\nu ^T)\), respectively. Then we have

Moreover, the matrices \(Y_\nu ^TE_r^{}Y_\nu ^{}\) and \(Y_\sigma ^TA_r^{}Y_\sigma ^{}\) are both nonsingular, and \(\left[ Y_\nu ,\; Y_\sigma \right] \) has full column rank. These properties follow from the fact that

Consider a matrix

\[W=\begin{bmatrix} W_1,&Y_\nu ,&Y_\sigma \end{bmatrix},\]

where the columns of \(W_1\) form a basis of \(\text{ ker }\bigl ([E_rY_\nu ,\; A_rY_\sigma ]^T\bigr )\). First, we show that this matrix is nonsingular. Assume that there exists a vector v such that \(W^Tv=0\). Then \(W_1^Tv=0\), \(Y_\nu ^Tv=0\) and \(Y_\sigma ^Tv=0\). Thus,

and, hence, W is nonsingular.

Furthermore, using (15) and

we obtain (14) with \(E_{11}=W_1^TE_rW_1^{}\) and \(A_{11}=W_1^TA_rW_1^{}\). Obviously, \(E_{11}\) and \(-A_{11}\) are symmetric and positive semidefinite. For any \(v_1\in \text{ ker }(E_{11})\), we have \({F_\sigma ^TW_1v_1=0}\). This implies \(W_1v_1\in \text{ ker }(F_\sigma ^T)=\text{ im }(Y_\sigma )\). Therefore, there exists a vector z such that \(W_1v_1=Y_\sigma z\). Multiplying this equation from the left with \(Y_\sigma ^TE_r\), we obtain \(Y_\sigma ^TE_rY_\sigma ^{} z=Y_\sigma ^TE_rW_1v_1=0\). Then \(z=0\) and, hence, \(v_1=0\). Thus, \(E_{11}\) is positive definite. Analogously, we can show that \(-A_{11}\) is positive definite too. This implies that all eigenvalues of the pencil \(\lambda E_{11}-A_{11}\) are real and negative. The index-one property follows immediately from (14). \(\square \)

As a consequence, we obtain that the DAE system (11) is stable but not asymptotically stable since the pencil \(\lambda E_r-A_r\) has zero eigenvalues.
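The distinction between stability and asymptotic stability can be made concrete on a toy pencil. The sketch below is not taken from the MQS model: it builds a symmetric \(4\times 4\) pencil whose spectrum is, by construction, \(\{-3,\,-0.5,\,0,\,\infty \}\), and recovers it with a shift-and-invert transformation. The helper `pencil_eigenvalues`, the shift `mu` and the congruence matrix `W` are hypothetical choices made for illustration.

```python
import numpy as np

def pencil_eigenvalues(E, A, mu=1.0, tol=1e-10):
    """Finite eigenvalues of lambda*E - A and the number of infinite ones.

    If theta is an eigenvalue of (A - mu*E)^{-1} E, then lambda = mu + 1/theta
    is an eigenvalue of the pencil, and theta = 0 corresponds to an eigenvalue
    at infinity. The shift mu must not itself be an eigenvalue of the pencil.
    """
    theta = np.linalg.eigvals(np.linalg.solve(A - mu * E, E))
    finite = np.abs(theta) > tol
    lam = mu + 1.0 / theta[finite]
    return np.sort(lam.real), int(np.sum(~finite))

# Diagonal pencil with eigenvalues -3, -1/2, 0 and one eigenvalue at infinity.
E0 = np.diag([1.0, 2.0, 1.0, 0.0])
A0 = np.diag([-3.0, -1.0, 0.0, -1.0])

# A congruence transformation keeps symmetry and the spectrum of the pencil.
W = np.eye(4) + 0.5 * np.triu(np.ones((4, 4)), 1)
E = W.T @ E0 @ W
A = W.T @ A0 @ W

finite, n_inf = pencil_eigenvalues(E, A)

# Only the sign condition is tested here; semi-simplicity of the zero
# eigenvalue is not checked by this sketch.
stable = bool(np.all(finite <= 1e-8))
asymptotically_stable = bool(np.all(finite < -1e-8))
print(finite, n_inf, stable, asymptotically_stable)
```

As for the regularized MQS pencil, the zero eigenvalue rules out asymptotic stability while all finite eigenvalues remain non-positive.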

We consider now the output Eq. (12). Our goal is to transform this equation into the standard form \(y=C_rx_r\) with an output matrix \(C_r\in \mathbb {R}^{m\times \, n_r}\). For this purpose, we introduce first a reflexive inverse of \(E_r\) given by

Simple calculations show that this matrix satisfies

Next, we show that \(\hat{Y}_{C_2}^T X_2^{}\) has full column rank. Indeed, if there exists a vector v such that \(\hat{Y}_{C_2}^T X_2^{} v=0\), then \(X_2v\in \ker (\hat{Y}_{C_2}^T)\). On the other hand,

implying \(X_2v=0\). Since \(X_2\) has full column rank, we get \(v=0\).

Using nonsingularity of \(X_2^T\hat{Y}_{C_2}^{}\hat{Y}_{C_2}^TX_2^{}\), the input matrix \(B_r\) in (13) can be represented as

with \(Z=\hat{Y}_{C_2}^T X_2^{} (X_2^T\hat{Y}_{C_2}^{}\hat{Y}_{C_2}^T X_2^{})^{-1}\). Then employing the first relation in (18) and the state Eq. (11), the output (12) can be written as

It follows from the first relation in (18) and (19) that

Thus, the output takes the form

\[y=C_rx_r \tag{20}\]

with \(C_r=-B_r^TE_r^-A_r^{}\).

3.3 Passivity

Passivity is another crucial property of control systems especially in interconnected network design [1, 33]. The DAE control system (11), (20) is called passive if for all \(t_f>0\) and all inputs \(u\in L_2(0,t_f)\) admissible with the initial condition \(E_r x_r(0)=0\), the output satisfies

\[\int _0^{t_f} y^T(t)\,u(t)\,\mathrm{d}t\ge 0.\]

This inequality means that the system does not produce energy. In the frequency domain, passivity of (11), (20) is equivalent to the positive realness of its transfer function

\[H_r(s)=C_r(sE_r-A_r)^{-1}B_r,\]

meaning that this function is analytic in \(\mathbb {C}_+=\{z\in \mathbb {C}\; :\; \text{ Re }(z)>0\}\) and \(H_r(s)+H_r^*(s)\ge 0\) for all \(s\in \mathbb {C}_+\), see [1]. Using the special structure of the system matrices in (13), we can show that the DAE system (11), (20) is passive.

Theorem 3

The DAE system (11), (13), (20) is passive.

Proof

First, observe that the transfer function \(H_r(s)\) of (11), (13), (20) is analytic in \(\mathbb {C}_+\). This fact immediately follows from Theorem 2. Furthermore, using the relations

we obtain for \(F(s)=(sE_r -A_r )^{-1}B_r\) and all \(s\in \mathbb {C}_+\) that

holds. In the last inequality, we utilized the property that the matrices \(E_r^{} E_r^- (-A_r^{} )E_r^- E_r^{} \) and \(A_r^{} E_r^- A_r^{} \) are both symmetric and positive semidefinite. Thus, \(H_r(s)\) is positive real, and, hence, system (11), (13), (20) is passive. \(\square \)
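The positive realness argument can be checked numerically in a simplified setting. The sketch below assumes that E is nonsingular, so that the reflexive inverse \(E_r^-\) reduces to the ordinary inverse and \(C=-B^TE^{-1}A\). It does not use the MQS matrices; E, A and B are random symmetric definite test data, and the sample points in \(\mathbb {C}_+\) are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(2)
n, m = 6, 2

Q = rng.standard_normal((n, n))
E = Q @ Q.T + n * np.eye(n)            # symmetric positive definite
P = rng.standard_normal((n, n))
A = -(P @ P.T + np.eye(n))             # symmetric negative definite
B = rng.standard_normal((n, m))
C = -B.T @ np.linalg.solve(E, A)       # output matrix C = -B^T E^{-1} A

def transfer(s):
    """Evaluate H(s) = C (sE - A)^{-1} B at a complex frequency s."""
    return C @ np.linalg.solve(s * E - A, B)

def min_eig_hermitian_part(s):
    """Smallest eigenvalue of H(s) + H(s)^*."""
    H = transfer(s)
    return np.linalg.eigvalsh(H + H.conj().T).min()

# Arbitrary sample points in the open right half-plane.
samples = [0.1 + 0.0j, 1.0 + 5.0j, 0.01 - 100.0j, 10.0 + 0.3j]
margins = [min_eig_hermitian_part(s) for s in samples]
print(margins)
```

With \(F(s)=(sE-A)^{-1}B\), the identity \(H(s)+H^*(s)=2F^*(s)\bigl (-\text{Re}(s)A+AE^{-1}A\bigr )F(s)\) explains why all sampled margins are nonnegative in this simplified case.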

4 Balanced Truncation Model Reduction

Our goal is now to approximate the DAE system (11), (13), (20) by a reduced-order model

where \(\tilde{E}_r\), \(\tilde{A}_r\in \mathbb {R}^{\ell \times \ell }\), \(\tilde{B}_r\), \(\tilde{C}_r^T\in \mathbb {R}^{\ell \times m}\) and \(\ell \ll n_r\). This model should capture the dynamical behavior of (11). It is also important that it preserves the passivity and has a small approximation error. In order to determine the reduced-order model (21), we aim to employ a balanced truncation model reduction method [3, 20]. Unfortunately, we cannot apply this method directly to (11), (13), (20) because, as established in Sect. 3.2, this system is stable but not asymptotically stable due to the fact that the pencil \(\lambda E_r -A_r \) has zero eigenvalues. Another difficulty is the presence of infinite eigenvalues due to the singularity of \(E_r\). This may cause problems in defining the controllability and observability Gramians which play an essential role in balanced truncation.

To overcome these difficulties, we first observe that the states of the transformed system \((W^TE_rW, W^TA_rW, W^TB_r, C_rW)\) corresponding to the zero and infinite eigenvalues are uncontrollable and unobservable at the same time. This immediately follows from the representations

with \(B_1=W_1^TB_r\) and \(C_1=-B_r^TE_r^{-}A_r^{}W_1^{}=-B_1^TE_{11}^{-1}A_{11}^{}\). Therefore, these states can be removed from the system without changing its input-output behavior. Then the standard balanced truncation approach can be applied to the remaining system. Since the system matrices of the regularized system (11), (20) have the same structure as those of RC circuit equations studied in [26], we proceed with the balanced truncation approach developed there which avoids the computation of the transformation matrix W.

For the DAE system (11), (20), we define the controllability and observability Gramians \(G_c\) and \(G_o\) as unique symmetric, positive semidefinite solutions of the projected continuous-time Lyapunov equations

where \({\varPi }\) is the spectral projector onto the right deflating subspace of \(\lambda E_r -A_r \) corresponding to the negative eigenvalues. Using the quasi-Weierstrass canonical form (14) and (16), this projector can be represented as

where \(\hat{W}_1\in \mathbb {R}^{n_r\times \,n_s}\) satisfies

Similarly to [17, Theorem 3], a relation between the controllability and observability Gramians of system (11), (13), (20) can be established.

Theorem 4

Let \(G_c\) and \(G_o\) be the controllability and observability Gramians of system (11), (13), (20) which solve the projected Lyapunov Eqs. (23) and (24), respectively. Then

\[E_r G_o E_r=A_rG_cA_r.\]

Proof

Consider the reflexive inverse \(E_r^-\) of \(E_r\) given in (17) and the reflexive inverse of \(A_r\) given by

Then multiplying the Lyapunov Eq. (23) (resp. (24)) from the left and right with \(E_r^-\) (resp. with \(A_r^-\)) and using the relations

we obtain

Since \(E_r^-\) and \(-A_r^-\) are symmetric and positive semidefinite and \({\varPi }^T\) is the spectral projector onto the right deflating subspace of \(\lambda E_r^- -A_r^- \) corresponding to the negative eigenvalues, the Lyapunov Eqs. (27) and (28) are uniquely solvable, and, hence, \(E_r G_o E_r=A_rG_cA_r\). \(\square \)

Theorem 4 implies that we need to solve only the projected Lyapunov Eq. (23) for the Cholesky factor \(Z_c\) of \(G_c=Z_c^{}Z_c^T\). Then it follows from the relation

that the Cholesky factor of the observability Gramian \(G_o=Z_o^{}Z_o^T\) can be calculated as \(Z_o=-E_r^- A_rZ_c\). In this case, the Hankel singular values of (11), (20) can be computed from the eigenvalue decomposition

where \(\begin{bmatrix} U_1,\; U_2 \end{bmatrix}\) is orthogonal, \(\varLambda _1=\text{ diag }(\lambda _1,\ldots ,\lambda _{\ell })\) and \(\varLambda _2=\text{ diag }(\lambda _{\ell +1},\ldots ,\lambda _{n_r})\) with \(\lambda _1\ge \ldots \ge \lambda _{\ell }\gg \lambda _{\ell +1}\ge \ldots \ge \lambda _{n_r}\). Then the reduced-order model (21) is computed by projection

with the projection matrices \(V=Z_c^{}U_1^{}\varLambda _1^{-\frac{1}{2}}\) and \(U=Z_o^{}U_1^{}\varLambda _1^{-\frac{1}{2}}=-E_r^- A_rV\). The reduced matrices have the form

The balanced truncation method for the DAE system (11), (13), (20) is presented in Algorithm 1, where for numerical efficiency reasons, the Cholesky factor \(Z_c\) of the Gramian \(G_c\) is replaced by a low-rank Cholesky factor \(\tilde{Z}_c\) such that \(G_c\approx \tilde{Z}_c^{}\tilde{Z}_c^T\).

Note that the matrices \(\tilde{E}_r\) and \(-\tilde{A}_r\) in (29) are both symmetric and positive definite. This implies that the reduced-order model (21), (29) is asymptotically stable. Then the transfer function \(\tilde{H}_r(s)=\tilde{C}_r(s\tilde{E}_r-\tilde{A}_r)^{-1}\tilde{B}_r\) is analytic in \(\mathbb {C}_+\) and for all \(s\in \mathbb {C}_+\), it satisfies

Thus, \(\tilde{H}_r(s)\) is positive real and, hence, the reduced-order model (21) is passive. Moreover, taking into account that the controllability and observability Gramians \(\tilde{G}_c\) and \(\tilde{G}_o\) of (21) satisfy \(\tilde{G}_c=\tilde{G}_o=\varLambda _1>0\), we conclude that (21) is balanced and minimal. Finally, we obtain the following bound on the \(\mathcal {H}_\infty \)-norm of the approximation error

which can be proved analogously to [11, 12]. Here, \(\Vert \cdot \Vert \) denotes the spectral matrix norm. Using (14) and (22), the error system can be written as

with

Since \(E_e\) and \(-A_e\) are both symmetric, positive definite and \(B_e^{}=C_e^T\), it follows from [26, Theorem 4.1(iv)] that

Using the output Eq. (12) instead of (20), the transfer function \(H_r(s)\) can also be written as

Then the computation of the \(\mathcal {H}_\infty \)-error is simplified to

We will use this relation in numerical experiments to verify the efficiency of the error bound (30).
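The interplay between Hankel singular values, truncation and the error bound can be illustrated on a standard symmetric state-space system. This is a simplified sketch and not Algorithm 1: it assumes \(E=I\), a symmetric negative definite A and \(C=B^T\), so that no projector is needed and both Gramians coincide. The dense Kronecker-based solver `lyap` is a hypothetical helper that is suitable only for very small dimensions.

```python
import numpy as np

def lyap(A, Q):
    """Solve A G + G A^T = -Q by Kronecker vectorization (small n only)."""
    n = A.shape[0]
    I = np.eye(n)
    L = np.kron(I, A) + np.kron(A, I)
    return np.linalg.solve(L, -Q.reshape(-1)).reshape(n, n)

rng = np.random.default_rng(3)
n, m, ell = 8, 2, 3

P = rng.standard_normal((n, n))
A = -(P @ P.T + 0.1 * np.eye(n))       # symmetric, asymptotically stable
B = rng.standard_normal((n, m))        # input matrix, output matrix is B^T

# For a symmetric system both Gramians coincide, G_c = G_o = G, and the
# Hankel singular values are the eigenvalues of G.
G = lyap(A, B @ B.T)
G = 0.5 * (G + G.T)
lam, U = np.linalg.eigh(G)
order = np.argsort(lam)[::-1]
lam, U = lam[order], U[:, order]

# The orthogonal eigenvector basis balances the system; keep ell states.
U1 = U[:, :ell]
A_red = U1.T @ A @ U1
B_red = U1.T @ B

def H(s, A_, B_):
    """Transfer function B^T (sI - A)^{-1} B."""
    return B_.T @ np.linalg.solve(s * np.eye(A_.shape[0]) - A_, B_)

bound = 2.0 * np.sum(np.maximum(lam[ell:], 0.0))   # twice the truncated values
err0 = np.linalg.norm(H(0.0, A, B) - H(0.0, A_red, B_red), 2)
print("error at s = 0:", err0, " bound:", bound)
```

In this sketch the error at \(s=0\) stays below twice the sum of the truncated Hankel singular values, which is the behavior expressed by the bound (30).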

Note that the presented model reduction method for the DAE system (11), (13), (20) is not balanced truncation applied to the frequency-inverted system with the transfer function

as it might be presumed at first glance. Our method can rather be interpreted as balanced truncation applied to the transformed system obtained by multiplying the state Eq. (11) from the left with the nonsingular transformation matrix \(T_r=(-A_r+E_r{\varPi }_0)(E_r+A_r{\varPi }_\infty )^{-1}\), where

are the spectral projectors onto the right deflating subspaces of \(\lambda E_r-A_r\) corresponding to the zero and infinite eigenvalues, respectively. Observe that the transformed system with the system matrices

has the same transfer function as (11), (20) and is symmetric in the sense that \(\hat{E}\) and \(\hat{A}\) are both symmetric and \(\hat{B}=\hat{C}^T\). Then projecting this system with the projection matrix \(V={\varPi }V\), we obtain

Consequently, the model reduction method in Algorithm 1 inherits the properties of the balanced truncation method for symmetric systems [18, 26]. In particular, it provides a symmetric reduced-order model which is exact at the frequency \(s=\infty \) and, as follows from (31), achieves the maximal error at \(s=0\).

5 Computational Aspects

In this section, we discuss the computational aspects of Algorithm 1. This includes solving the projected Lyapunov Eq. (23) and computing the basis matrices for certain subspaces.

For the numerical solution of the projected Lyapunov Eq. (23) in Step 1 of Algorithm 1, we apply the low-rank alternating directions implicit (LR-ADI) method as presented in [29] with appropriate modifications proposed in [4] for cheap evaluation of the Lyapunov residuals. First, note that due to (22) the input matrix satisfies \({\varPi }^TB_r=B_r\). Then setting

the LR-ADI iteration is given by

with negative shift parameters \(\tau _k\) which strongly influence the convergence of this iteration. Note that they can be chosen to be real, since the pencil \(\lambda E_r-A_r\) has real finite eigenvalues. This also makes it possible to determine the optimal ADI shift parameters by the Wachspress method [31] once the spectral bounds \(a=-\lambda _{\max }(E_r,A_r)\) and \(b=-\lambda _{\min }(E_r,A_r)\) are available. Here, \(\lambda _{\max }(E_r,A_r)\) and \(\lambda _{\min }(E_r,A_r)\) denote the largest and smallest nonzero eigenvalues of \(\lambda E_r-A_r\). They can be computed simultaneously by applying the Lanczos procedure to \(E_r^-A_r^{}\) and \(v={\varPi }v\), see [13, Sect. 10.1]. As a starting vector v, we can take, for example, one of the columns of the matrix \(E_r^-B_r^{}\). In the Lanczos procedure and also in Step 3 of Algorithm 1, it is required to compute the products \(E_r^-A_r{\varPi }v\). Of course, we never compute and store the reflexive inverse \(E_r^-\) explicitly. Instead, we can use the following lemma to calculate such products in a numerically efficient way.

Lemma 1

Let \(E_r\) and \(A_r\) be given as in (13), \(Z=\hat{Y}_{C_2}^TX_2^{}(X_2^T\hat{Y}_{C_2}^{}\hat{Y}_{C_2}^TX_2^{})^{-1}\), and \(v\in \mathbb {R}^{n_r}\). Then the vector \(z=E_r^-A_r^{}{\varPi }v\) can be determined as

where \({\varPi }_\infty \) is the spectral projector as in (38), and

is a basis matrix for \(\text{ im }(F_{\sigma })\).

Proof

We show first that the full column rank matrix \(\hat{Y}_\sigma \) in (36) satisfies the equation \(\text{ im }(\hat{Y}_\sigma )=\text{ im }(F_{\sigma })\). This property immediately follows from the relation

Since \(F_\sigma ^T\hat{Y}_\sigma ^{}\) has full column rank, the matrix \(\hat{Y}_\sigma ^TE_r^{}\hat{Y}_\sigma ^{}=\hat{Y}_\sigma ^TF_\sigma ^{}F_\sigma ^T\hat{Y}_\sigma ^{}\) is nonsingular, i.e., z in (35) is well-defined. Obviously, this vector fulfills \({\varPi }_\infty z=0\). Furthermore, we have

Then

Since \([\hat{Y}_\sigma , \,Y_\sigma ]\) is nonsingular, these equations imply \(E_rz=A_r^{}{\varPi }v\). Multiplying this equation from the left with \(E_r^-\), we get

This completes the proof. \(\square \)

Using (36), we find by simple calculations that

Next, we discuss the computation of \(Y_{\sigma }(Y_{\sigma }^TA_rY_{\sigma })^{-1}Y_{\sigma }^Tv\) for a vector v. By taking \(v=A_rw\), this allows us to calculate the product \({\varPi }_\infty w=Y_{\sigma }^{}(Y_{\sigma }^TA_r^{}Y_{\sigma }^{})^{-1}Y_{\sigma }^TA_r^{}w\) required in (35).

Lemma 2

Let \(A_r\) be as in (13) and let \(Y_{\sigma }\) be a basis of \(\,\ker (F_{\sigma }^T)\). Then for the vector \(v=[v_1^T,\, v_2^T ]^T\in \mathbb {R}^{n_r}\), the product

can be determined as \(z=[0,\, z_2^T]^T\), where \(z_2\) satisfies the linear system

Proof

We first show that \(z=Y_{\sigma }(Y_{\sigma }^TA_rY_{\sigma })^{-1}Y_{\sigma }^Tv\) if and only if

where \(\hat{Y}_{\sigma }\) is as in (36). Let \([z^T,\, \hat{z}^T]^T\) solve Eq. (39). Then \(\hat{Y}_\sigma ^T z=0\) and, hence, \(z\in \text{ ker }(\hat{Y}_\sigma ^T)=\text{ im }(Y_\sigma )\). This means that there exists a vector \(\hat{w}\) such that \(z=Y_\sigma \hat{w}\). Inserting this vector into the first equation in (39), we obtain \(A_rY_\sigma \hat{w} + \hat{Y}_\sigma \hat{z}=v\). Multiplying this equation from the left with \(Y_\sigma ^T\) and solving it for \(\hat{w}\), we get \(z=Y_\sigma ^{}(Y_\sigma ^TA_r^{} Y_\sigma ^{})^{-1}Y_\sigma ^T v\).

Conversely, for z as in (37) and \(\hat{z}= (\hat{Y}_{\sigma }^T\hat{Y}_{\sigma }^{})^{-1}\hat{Y}_{\sigma }^T(v-A_r^{}z)\), we have \(\hat{Y}_{\sigma }^T z=0\) and

Using \(\hat{Y}_{\sigma }^{}(\hat{Y}_{\sigma }^T\hat{Y}_{\sigma }^{})^{-1}\hat{Y}_{\sigma }^T+ Y_{\sigma }^{}(Y_{\sigma }^TY_{\sigma }^{})^{-1}Y_{\sigma }^T=I\) twice, we obtain

Thus, \([z^T,\, \hat{z}^T]^T\) satisfies Eq. (39).

Equation (39) can be written as

with \(z=[z_1^T,\, z_2^T]^T\), \(\hat{z}=[z_3^T,\, z_4^T]^T\) and \(v=[v_1^T,\, v_2^T]^T\). The third equation in (40) yields \(z_1=0\). Furthermore, multiplying the fourth equation in (40) from the left with \(X_2^T\hat{Y}_{C_2}^{}\hat{Y}_{C_2}^TX_2^{}\) and introducing a new variable \(\hat{z}_2=(X_2^T\hat{Y}_{C_2}^{}\hat{Y}_{C_2}^TX_2^{})^{-1}z_4\), we obtain Eq. (38) which is uniquely solvable since \(\hat{Y}_{C_2}^TK_{22}\hat{Y}_{C_2}\) is symmetric, positive definite and \(\hat{Y}_{C_2}^TX_2^{}\) has full column rank. Thus, \(z =[0,\, z_2^T]^T\) with \(z_2\) satisfying (38). \(\square \)
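The equivalence used in the proof of Lemma 2 can be verified on generic data. The sketch below assumes, as read off from the proof, that the saddle point system consists of the two relations \(Az+\hat{Y}\hat{z}=v\) and \(\hat{Y}^Tz=0\). The matrices are random stand-ins for \(A_r\) and \(\hat{Y}_\sigma \), not the structured MQS blocks, and A is deliberately singular to mimic the semidefinite \(A_r\).

```python
import numpy as np

rng = np.random.default_rng(4)
n, p = 7, 3                              # p = number of columns of Y_hat

Y_hat = rng.standard_normal((n, p))      # full column rank (generic)
_, _, vt = np.linalg.svd(Y_hat.T)        # Y_hat.T has shape (p, n)
Y = vt[p:].T                             # orthonormal basis of ker(Y_hat^T)

# Symmetric negative semidefinite and singular, but generically definite
# on im(Y), which is what the projected formula needs.
P = rng.standard_normal((n, n - 1))
A = -(P @ P.T)
v = rng.standard_normal(n)

# Direct evaluation of z = Y (Y^T A Y)^{-1} Y^T v.
z_direct = Y @ np.linalg.solve(Y.T @ A @ Y, Y.T @ v)

# Saddle point formulation: A z + Y_hat z_hat = v,  Y_hat^T z = 0.
S = np.block([[A, Y_hat],
              [Y_hat.T, np.zeros((p, p))]])
sol = np.linalg.solve(S, np.concatenate([v, np.zeros(p)]))
z_saddle = sol[:n]

print(np.linalg.norm(z_direct - z_saddle))
```

The saddle point form avoids an explicit basis of the kernel, which is the reason it is attractive when only \(\hat{Y}_\sigma \) is available in sparse form.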

We summarize the computation of \(z=E_r^-A_r^{}v\) with \(v={\varPi }v\) in Algorithm 2.

The major computational effort in the LR-ADI method (34) is the computation of \((\tau _k E_r+A_r)^{-1}w\) for some vector w. If \(\tau _k E_r+A_r\) remains sparse, we just solve the linear system \((\tau _k E_r+A_r)z=w\) of dimension \(n_r\). If \(\tau _k E_r+A_r\) gets fill-in due to the multiplication with \(\hat{Y}_{C_2}\), then we can use the following lemma to compute \(z=(\tau _k E_r+A_r)^{-1}w\).

Lemma 3

Let \(E_r\) and \(A_r\) be as in (13), \(w=[w_1^T,\,w_2^T]^T\in \mathbb {R}^{n_r}\), and \(\tau <0\). Then the vector \(z=(\tau E_r+A_r)^{-1}w\) can be determined as

where \(z_1\) and \(z_2\) satisfy the linear system

of dimension \(n+m+k_2\).

Proof

First, note that due to the choice of \(Y_{C_2}\) the coefficient matrix in system (41) is nonsingular. This system can be written as

It follows from (42d) that \(z_2\in \ker (Y_{C_2}^T)=\text{ im }(\hat{Y}_{C_2})\). Then there exists \(\hat{z}_2\) such that \(z_2=\hat{Y}_{C_2}\hat{z}_2\). Since \(\hat{Y}_{C_2}\) has full column rank, it holds

Further, from Eq. (42c) we obtain \(z_3=\tau R^{-1}X_1^T z_1 +\tau R^{-1}X_2^T z_2\). Substituting \(z_2\) and \(z_3\) into (42a) and (42b) and multiplying Eq. (42b) from the left with \(\hat{Y}_{C_2}^T\) yields

This equation together with (43) implies that

which completes the proof. \(\square \)

Finally, we discuss the computation of the basis matrices \(Y_{C_2}\) and \(\hat{Y}_{C_2}\) required in Algorithm 2 and the LR-ADI iteration. To this end, we introduce a discrete gradient matrix \(G_0\in \mathbb {R}^{n_e\times n_n}\) whose entries are defined as

Note that the discrete curl and gradient matrices C and \(G_0\) satisfy the relations \(\text{ rank }(C)=n_e-n_n+1\), \(\text{ rank }(G_0)=n_n-1\) and \(CG_0=0\), see [5]. Then by removing one column of \(G_0\), we get the reduced discrete gradient matrix G whose columns form a basis of \(\text{ ker }(C)\). The matrices C and \(G^T\) can be considered as the loop and incidence matrices, respectively, of a directed graph whose nodes and branches correspond to the nodes and edges of the triangulation \(\mathcal {T}_h(\varOmega )\), see [10]. Then the basis matrices \(Y_{C_2}\) and \(\hat{Y}_{C_2}\) can be determined by using the graph-theoretic algorithms as presented in [16].
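
The rank relations and the identity \(CG_0=0\) can be checked on the smallest possible mesh, a single tetrahedron. The following sketch is only an illustration: the orientation conventions (edges directed from the lower to the higher node index, entry \(-1\) at the start node and \(+1\) at the end node, faces oriented by increasing node index) are assumptions made for this example and need not coincide with the definition used in the paper, and the helper names `rank` and `matmul` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def rank(matrix):
    """Rank by exact Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][c] / rows[r][c]
            if f != 0:
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def matmul(A, B):
    """Plain dense matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

nodes = range(4)                       # one tetrahedron: n_n = 4
edges = list(combinations(nodes, 2))   # n_e = 6, each edge directed low -> high
faces = list(combinations(nodes, 3))   # n_f = 4

# Discrete gradient G0 (n_e x n_n): -1 at the start node, +1 at the end node.
G0 = [[-1 if n == a else 1 if n == b else 0 for n in nodes] for (a, b) in edges]

def curl_row(face):
    """Row of the discrete curl C (n_f x n_e) for a face (a, b, c) with a < b < c."""
    a, b, c = face
    signs = {(a, b): 1, (b, c): 1, (a, c): -1}   # circulation a -> b -> c -> a
    return [signs.get(e, 0) for e in edges]

C = [curl_row(f) for f in faces]

n_n, n_e = len(nodes), len(edges)
CG0 = matmul(C, G0)                    # should vanish entrywise
G = [row[:-1] for row in G0]           # reduced gradient: drop one column of G0

print(rank(C), rank(G0), rank(G))
```

For this mesh, \(n_e-n_n+1=3\) and \(n_n-1=3\), and the reduced matrix `G` has full column rank, so its columns form a basis of \(\text{ ker }(C)\).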

Let the reduced gradient matrix \(G=\begin{bmatrix} G_1^T&G_2^T\end{bmatrix}^T\) be partitioned into blocks according to \(C=\begin{bmatrix}C_1, \; C_2\end{bmatrix}\). It follows from [16, Theorem 9] that

where the columns of the matrix \(Z_1\) form a basis of \(\ker (G_1)\). Then \(\hat{Y}_{C_2}\) can be determined as \(\hat{Y}_{C_2}=\texttt {kernelAk}(Z_1^TG_2^T)\) with the function \(\texttt {kernelAk}\) from [16, Sect. 4.2], where the basis \(Z_1\) is computed by applying the function \(\texttt {kernelAT}\) from [16, Sect. 3] to \(G_1^T\).

6 Numerical Results

In this section, we present some results of numerical experiments demonstrating the balanced truncation model reduction method for 3D linear MQS systems. For the FEM discretization with Nédélec elements, the 3D tetrahedral mesh generator NETGEN (Footnote 1) and the MATLAB toolbox (Footnote 2) from [2] were used as described in [21]. All computations were done with MATLAB R2018a.

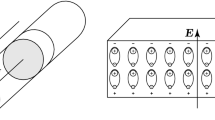

As a test model, we consider a coil wound round a conducting tube surrounded by air. Such a model was studied in [24] in the context of optimal control. A bounded domain

consists of the conducting subdomain \(\varOmega _1 = \varOmega _\mathrm{iron}\) of the iron tube and the non-conducting subdomain \(\varOmega _2 = \varOmega _\mathrm{coil}\cup \varOmega _\mathrm{air}\), where

with \(r_1<r_2<r_3<r_4\) and \(z_1<z_3<z_4<z_2\), see Fig. 1(a). The divergence-free winding function \(\chi :\varOmega \rightarrow \mathbb {R}^3\) is defined by

where \(N_c\) is the number of coil turns and \(S_c\) is the cross section area of the coil. The dimensions, geometry and material parameters are given in Fig. 1(b).

The DAE system (8) has \(n_1+m=1267\) differential variables, \(n_2-m-k_2=2643\) algebraic variables and \(k_2=399\) singular variables. The regularized pencil \(\lambda E_r-A_r\) has \(n_s=1004\) negative eigenvalues and \(n_0=263\) zero eigenvalues. The zero eigenvalues appear to be caused by the interface conditions between the conducting and non-conducting subdomains: their number is equal to \(n_{n,\mathrm{iron/air}}-1\), where \(n_{n,\mathrm{iron/air}}\) is the number of nodes on the interface boundary between the iron tube and the surrounding air.
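
As a quick consistency check of these counts (an observation from the reported numbers, not an additional result), the differential and algebraic variables add up to the dimension \(n_r=3910\) reported for the regularized system, the finite eigenvalues account for all differential variables, and the eigenvalue count fixes the number of interface nodes:

\[
(n_1+m)+(n_2-m-k_2) = 1267+2643 = 3910 = n_r, \qquad
n_s+n_0 = 1004+263 = 1267 = n_1+m, \qquad
n_{n,\mathrm{iron/air}} = n_0+1 = 264 .
\]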

The controllability Gramian was approximated by a low-rank matrix \(G_c\approx Z_{n_c}^{}Z_{n_c}^T\) with \(Z_{n_c}\in \mathbb {R}^{n_r\times \, n_c}\) and \(n_c=24\). The normalized residual norm

for the LR-ADI iteration (34) is presented in Fig. 2a. Here, \(\Vert \cdot \Vert _F\) denotes the Frobenius matrix norm. Figure 2b shows the Hankel singular values \(\lambda _1,\ldots ,\lambda _{n_c}\). We approximate the regularized MQS system (11), (12) of dimension \(n_r=3910\) by a reduced model of dimension \(\ell =5\). In Fig. 3a, we present the absolute values of the frequency responses \(|H_r(i\omega )|\) and \(|\tilde{H}_r(i\omega )|\) of the full and reduced-order models for the frequency range \(\omega \in [10^{-4}, 10^6]\). The absolute error \(|H_r(i\omega )-\tilde{H}_r(i\omega )|\) and the error bound computed as

are given in Fig. 3b. Furthermore, using (32) we compute the error

showing that the error bound is very tight.
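
For orientation, the classical a priori bound for balanced truncation [11, 12] is twice the sum of the discarded Hankel singular values. The sketch below evaluates this classical bound; whether it coincides in every detail with the formula used for Fig. 3b is an assumption, since that formula is not reproduced here. The function name `bt_error_bound` and the listed singular values are hypothetical and are not taken from the experiment.

```python
import math

def bt_error_bound(hsv, ell):
    """Classical balanced truncation bound: 2 * (sum of the Hankel singular
    values discarded when the model is truncated to order ell)."""
    if not 0 <= ell <= len(hsv):
        raise ValueError("ell must lie between 0 and the number of singular values")
    ordered = sorted(hsv, reverse=True)   # largest first, as in a balanced realization
    return 2.0 * sum(ordered[ell:])

# Hypothetical, rapidly decaying Hankel singular values (illustration only).
hsv = [1.0, 0.5, 0.1, 1e-3, 1e-5, 1e-8, 1e-11]
bound = bt_error_bound(hsv, ell=5)        # keep the five largest values
print(bound)
```

With a low-rank Gramian approximation, only the \(n_c\) computed values \(\lambda _1,\ldots ,\lambda _{n_c}\) are available, so a bound evaluated in this way neglects the contribution of the values that were not computed.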

In Fig. 4a, we present the outputs y(t) and \(\tilde{y}(t)\) of the full and reduced-order systems on the time interval [0, 0.08]s computed for the input \(u(t)=5\cdot 10^4 \sin (300\pi t)\) and zero initial condition using the implicit Euler method with 300 time steps. The relative error

is given in Fig. 4b. One can see that the reduced-order model approximates the original system well in both the time and frequency domains.
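
The time integration can be illustrated on a toy problem. The sketch below applies the implicit Euler method to a descriptor system \(E\dot{x}=Ax+Bu\) with singular \(E\), using the input, time interval and number of steps stated above. The \(2\times 2\) matrices are hypothetical and chosen only so that the second equation is a purely algebraic constraint; they are unrelated to the MQS model, and the function name `implicit_euler_dae` is an assumption of this example.

```python
import math

def implicit_euler_dae(E, A, B, u, t_end, steps):
    """Implicit Euler for a 2x2 descriptor system E x' = A x + B u(t), x(0) = 0.

    Each step solves (E - h A) x_new = E x_old + h B u(t_new) by Cramer's rule.
    """
    h = t_end / steps
    M = [[E[i][j] - h * A[i][j] for j in range(2)] for i in range(2)]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    if det == 0.0:
        raise ValueError("E - h*A is singular for this step size")
    x = [0.0, 0.0]
    traj = [(0.0, x[:])]
    for k in range(1, steps + 1):
        t = k * h
        rhs = [E[i][0] * x[0] + E[i][1] * x[1] + h * B[i] * u(t) for i in range(2)]
        x = [(rhs[0] * M[1][1] - M[0][1] * rhs[1]) / det,
             (M[0][0] * rhs[1] - M[1][0] * rhs[0]) / det]
        traj.append((t, x[:]))
    return traj

# Hypothetical toy data: the second row of E is zero, so the second equation
# 0 = x1 - 2*x2 is an algebraic constraint.
E = [[1.0, 0.0], [0.0, 0.0]]
A = [[-1.0, 1.0], [1.0, -2.0]]
B = [1.0, 0.0]

def u(t):
    """Input signal used in the time-domain experiment."""
    return 5e4 * math.sin(300.0 * math.pi * t)

traj = implicit_euler_dae(E, A, B, u, t_end=0.08, steps=300)
print(traj[-1])
```

Because the scheme is implicit, the algebraic constraint is enforced at every new time step without first eliminating the algebraic variable, which is why such methods are a natural choice for DAE systems.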

Notes

- 1. https://sourceforge.net/projects/netgen-mesher/

- 2.

References

Anderson, B., Vongpanitlerd, S.: Network Analysis and Synthesis. Prentice Hall, Englewood Cliffs, NJ (1973)

Anjam, I., Valdman, J.: Fast MATLAB assembly of FEM matrices in 2D and 3D: edge elements. Appl. Math. Comput. 267, 252–263 (2015)

Antoulas, A.: Approximation of Large-Scale Dynamical Systems. SIAM, Philadelphia, PA (2005)

Benner, P., Kürschner, P., Saak, J.: An improved numerical method for balanced truncation for symmetric second order systems. Math. Comput. Model. Dyn. Systems 19(6), 593–615 (2013)

Bossavit, A.: Computational Electromagnetism. Academic Press, San Diego (1998)

Bossavit, A.: “Stiff” problems in eddy-current theory and the regularization of Maxwell’s equations. IEEE Trans. Magn. 37(5), 3542–3545 (2001)

Cendes, Z., Manges, J.: A generalized tree-cotree gauge for magnetic field computation. IEEE Trans. Magn. 31(3), 1342–1347 (1995)

Clemens, M., Schöps, S., Gersem, H.D., Bartel, A.: Decomposition and regularization of nonlinear anisotropic curl-curl DAEs. COMPEL 30(6), 1701–1714 (2011)

Clemens, M., Weiland, T.: Regularization of eddy-current formulations using discrete grad-div operators. IEEE Trans. Magn. 38(2), 569–572 (2002)

Deo, N.: Graph Theory with Applications to Engineering and Computer Science. Prentice-Hall, Englewood Cliffs, N.J. (1974)

Enns, D.: Model reduction with balanced realization: an error bound and a frequency weighted generalization. In: Proceedings of the 23rd IEEE Conference on Decision and Control, pp. 127–132. Las Vegas (1984)

Glover, K.: All optimal Hankel-norm approximations of linear multivariable systems and their \(\rm L^\infty \)-error bounds. Internat. J. Control 39, 1115–1193 (1984)

Golub, G., Van Loan, C.: Matrix Computations, 4th edn. The Johns Hopkins University Press, Baltimore (2013)

Haus, H., Melcher, J.: Electromagnetic Fields and Energy. Prentice Hall, Englewood Cliffs (1989)

Hiptmair, R.: Multilevel gauging for edge elements. Computing 64(2), 97–122 (2000)

Ipach, H.: Grafentheoretische Anwendung in der Analyse elektrischer Schaltkreise. Bachelor thesis, Universität Hamburg (2013)

Kerler-Back, J., Stykel, T.: Model reduction for linear and nonlinear magneto-quasistatic equations. Int. J. Numer. Meth. Eng. 111(13), 1274–1299 (2017)

Liu, W., Sreeram, V., Teo, K.: Model reduction for state-space symmetric systems. Syst. Control Lett. 34(4), 209–215 (1998)

Monk, P.: Finite Element Methods for Maxwell’s Equations. Numerical Mathematics and Scientific Computation, Oxford University Press (2003)

Moore, B.: Principal component analysis in linear systems: controllability, observability, and model reduction. IEEE Trans. Automat. Control AC-26(1), 17–32 (1981)

Muetzelfeld, I.: Model order reduction of magneto-quasistatic equations in 3D domains. Master thesis, Universität Augsburg (2017)

Munteanu, I.: Tree-cotree condensation properties. ICS Newsletter (International Compumag Society) 9, 10–14 (2002)

Nédélec, J.: Mixed finite elements in \(\mathbb{R}^3\). Numerische Mathematik 35(3), 315–341 (1980)

Nicaise, S., Stingelin, S., Tröltzsch, F.: On two optimal control problems for magnetic fields. Comput. Methods Appl. Math. 14(4), 555–573 (2014)

Nicaise, S., Tröltzsch, F.: A coupled Maxwell integrodifferential model for magnetization processes. Mathematische Nachrichten 287(4), 432–452 (2013)

Reis, T., Stykel, T.: Lyapunov balancing for passivity-preserving model reduction of RC circuits. SIAM J. Appl. Dyn. Syst. 10(1), 1–34 (2011)

Rodriguez, A., Valli, A.: Eddy Current Approximation of Maxwell Equations: Theory, Algorithms and Applications. Springer, Milan (2010)

Schöps, S., Gersem, H.D., Weiland, T.: Winding functions in transient magnetoquasistatic field-circuit coupled simulations. COMPEL 32(6), 2063–2083 (2013)

Stykel, T.: Low-rank iterative methods for projected generalized Lyapunov equations. Electron. Trans. Numer. Anal. 30, 187–202 (2008)

Thompson, R.: The characteristic polynomial of a principal subpencil of a Hermitian matrix pencil. Linear Algebra Appl. 14, 135–177 (1976)

Wachspress, E.: The ADI Model Problem. Springer, New York (2013)

Weiland, T.: A discretization method for the solution of Maxwell’s equations for six-component fields. Electron. Commun. 31(3), 116–120 (1977)

Willems, J., Takaba, K.: Dissipativity and stability of interconnections. Int. J. Robust Nonlinear Control 17, 563–586 (2007)

Acknowledgements

The authors would like to thank Hanko Ipach for providing the MATLAB functions for computing the kernels and ranges of incidence matrices and Inga Muetzelfeldt for providing the semidiscretized MQS model.


© 2022 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Kerler-Back, J., Stykel, T. (2022). Balanced Truncation Model Reduction for 3D Linear Magneto-Quasistatic Field Problems. In: Beattie, C., Benner, P., Embree, M., Gugercin, S., Lefteriu, S. (eds) Realization and Model Reduction of Dynamical Systems. Springer, Cham. https://doi.org/10.1007/978-3-030-95157-3_15


DOI: https://doi.org/10.1007/978-3-030-95157-3_15


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-95156-6

Online ISBN: 978-3-030-95157-3

eBook Packages: Mathematics and Statistics (R0)