Abstract

Irrespective of the elegance or mathematical complexity of one’s modelling framework, it has little practical usefulness without proper parametrization. This observation is particularly pertinent in the credit-risk setting. Transition and default probabilities, factor correlations and loadings, systemic weights, default dependence, recovery dispersion, spread duration, and credit spreads all need to be estimated and specified to produce meaningful economic-capital estimates. This necessarily lengthy and detailed chapter (metaphorically) rolls up its sleeves and sequentially addresses, explains, and motivates our choices with respect to each of these key parameter families. Where possible and relevant, alternatives and implications are presented. Finally, during the discussion, the consequences of the generally accepted and centrally important through-the-cycle perspective are also explored.

If you have a procedure with ten parameters, you probably missed some.

(Alan Perlis)


We can loosely think of a firm-wide credit-risk economic-capital model as a complicated industrial system, such as a power plant, an air-traffic control system, or an interconnected assembly line. The operators of such systems typically find themselves—at least, conceptually—sitting in front of a large control panel with multiple switches, levers, and buttons.Footnote 1 Getting the system to run properly, for their current objective, requires setting everything to its proper place and level. Failure to align the controls correctly can lead to inefficiencies, defective results, or even disaster. In a modelling setting, unfortunately, there is no exciting control room. There are, however, invariably a number of important parameters to be determined. It is useful to imagine the parameter selection process as being roughly equivalent to adjusting the switches, levers, and buttons in an industrial process. There are many possible combinations and their specific constellation depends on the task at hand. Good choices require experience, reflection, and judgement. Transparency and constructive challenge also contribute importantly to this process.

The previous chapter identified a rich array of parameters associated with our large-scale industrial model. The principal objective of this chapter is to motivate our actual parameter choices. It is neither particularly easy nor terribly relaxing to walk through a detailed explanation of such a complex system. The labyrinth of questions that need to be answered can easily lead us astray or obscure the thread of the discussion. Figure 3.1 attempts to mitigate this problem somewhat through the introduction of a parameter tree. It highlights five main categories of parameters: credit states, systemic factors, portfolio-level quantities, recovery rates, and credit migration. Each of the five divisions of Fig. 3.1 corresponds to one of the main sections of this chapter. These categories are further broken down into a number of sub-categories identifying the specific parameter questions to be answered. Overall, there are more than a dozen avenues of parameter investigation to be covered. Figure 3.1 thus forms not only a rough outline of this chapter, but also a logical guide for navigating the complexity of our credit-risk economic-capital control panel.

A parameter tree: This schematic highlights five main classes of parameters and further illustrates the key questions associated with each grouping. This parameter tree forms a rough outline of this chapter—providing the main sections—and acts as a logical guide through the complexity of our credit-risk economic capital control panel.

Before jumping in and answering the various parameter questions raised in Fig. 3.1, it is useful to establish a few ground rules. The first rule, already highlighted in previous chapters, is that economic capital is intended to be a long-term, unconditional, or through-the-cycle estimate of asset riskiness. This means that we need to ensure a long-term perspective in our parameter selection. A second essential rule is that, wherever possible, we wish to select our parameters in an objective, defensible fashion. In other words, we need to rely on data. Data, however, brings its own set of challenges. We will not always be able to find the perfect dataset to precisely measure what we wish; as a small institution with limited history, NIB often finds itself faced with such challenges. This suggests the need to employ some form of logical proxy. Moreover, consistency of data across different parameter types, the choice of time horizons, and the relative weight on extreme observations are all tricky questions to be managed. We will not pretend to have resolved all of these questions perfectly, but will endeavour to be transparent about our choices.

A final rule, or perhaps guideline, is that a model owner or operator needs to be keenly aware of the sensitivity of model outputs to various parameter choices. This is exactly analogous to our industrial process: the proper setting for a lever is hard to determine absent knowledge of how its setting impacts one’s production process. Such analysis is a constant component of our internal computations and regular review of parameter choices. It will not, however, figure much in this chapter.Footnote 2 The reason is twofold. First of all, such an analysis inevitably brings one into the specific details of the portfolio: regional and industrial breakdowns and the impact on specific clients. This information is naturally sensitive and cannot be shared in this forum. The second, more practical, reason relates to the volume of material. This chapter is already extremely long and detailed, perhaps too much so, and adding analysis of parameter sensitivity would only make matters worse. Let the reader nonetheless be assured that parameter sensitivity is an important internal theme and forms a central component of the parameter-selection process.

The mathematical structure of any model is deeply important, but it is the specific values of the individual parameters that really bring it to life. The remaining sections of this chapter will lean upon the structure introduced in Fig. 3.1 to describe and motivate our parametric choices. Although the discussion is necessarily very NIB-centric, the general themes and key questions apply to a broad range of financial institutions.

1 Credit States

As is abundantly clear from the previous chapter, the default and migration risk estimates arising from the t-threshold model depend importantly on the obligor’s credit state. One can make a legitimate argument that this is the most important aspect of the credit-risk economic-capital model. The corollary of this argument is that the treatment of credit states, and their related properties, is the natural starting point for any discussion of parameterization.

1.1 Defining Credit Ratings

NIB has, for decades, made use of a 21-notch credit-rating scale.Footnote 3 This is a fairly common situation among lending institutions; control over one’s internal rating scale is a very helpful tool in loan origination and management. For large institutions, it is also the source of rich internal firm-specific data on default, transition, and recovery outcomes. NIB, like many other lending organizations, is unfortunately too small to enjoy such a situation. The consequence is a lack of sufficient internal credit-rating data and history to estimate firm-specific quantities. It is thus necessary to identify and use longer and broader external credit-state datasets for this purpose. A natural source for this data is the large credit-rating agencies: S&P, Moody’s, or Fitch. We have taken the decision to generally rely upon S&P transition probabilities, but we also examine Moody’s data for comparison purposes. One can certainly question the representativeness of this data for our specific application, but there are unfortunately not many legitimate alternatives. We will attempt to adjust for representativeness as appropriate.

Moody’s and S&P allocate 19 and 20 non-default credit ratings, respectively; each is described with a different alphanumeric identifier, as shown in Table 3.1. Typically, we refer to the top ten ratings, in either scale, as investment grade. The remaining lower groups are usually called speculative grade. The most obvious solution, for small- to medium-sized firms, would be to create a one-to-one mapping between one’s internal scale and the S&P and Moody’s categories; this would avoid the need to reconcile different numbers of groupings. This is, however, a problem for NIB (and many other lending institutions), since the very bottom of the S&P and Moody’s scales lies simply too far outside our typical lending risk appetite.Footnote 4 These categories are described as either “extremely speculative” or in “imminent risk of default.” This is simply not representative of our business mandate and practice. For this reason, everything below S&P’s B- and Moody’s B3 categories is lumped together into the final PD20 NIB credit rating. This makes the mapping rather more difficult: we need to link 20 (non-default) NIB categories to 17 (non-default) S&P and Moody’s credit notches.

Finding a sensible mapping between these two mismatched scales requires some thought. Because the scales contain different numbers of notches, no unique mapping exists; some external logic or justification is required to move forward.Footnote 5 The first ten (investment-grade) categories are easy: they are simply matched on a one-to-one basis across the S&P, Moody’s, and NIB scales. The hard part relates to the speculative grade. Our practical solution involves sharing a pair of S&P and Moody’s rating categories within each of three triplets of NIB groupings. This links six credit-rating agency groups to nine NIB categories, thereby absorbing the three-notch mismatch. The overlap occurs for rating triplets PD11-13, PD14-16, and PD17-19. The specific values are summarized in Table 3.1. As a final step, PD20 maps directly to the S&P CCC and Moody’s Caa categories.
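Table 3.1 holds the actual mapping, and its speculative-grade rows are not reproduced here. The following Python sketch reconstructs only the structural logic just described, under an explicit assumption: within each NIB triplet, the first notch takes the better agency notch of the shared pair, the third takes the worse one, and the middle notch straddles both (combined, purely for illustration, by a geometric mean). The function names `build_nib_mapping` and `project_to_nib` are hypothetical.

```python
import math

# Hypothetical reconstruction of the mapping logic, NOT the contents of Table 3.1.
# Agency notches are indexed 1..17 (1 = AAA/Aaa, ..., 16 = B-/B3, 17 = CCC/Caa).


def build_nib_mapping():
    """Return {NIB notch: tuple of agency notch indices it draws on}."""
    mapping = {k: (k,) for k in range(1, 11)}  # investment grade: one-to-one
    agency, nib = 11, 11
    for _ in range(3):  # triplets PD11-13, PD14-16, PD17-19
        better, worse = agency, agency + 1     # the shared pair of agency notches
        mapping[nib] = (better,)
        mapping[nib + 1] = (better, worse)     # assumption: middle notch straddles the pair
        mapping[nib + 2] = (worse,)
        agency, nib = agency + 2, nib + 3
    mapping[20] = (17,)                        # PD20 absorbs CCC/Caa
    return mapping


def project_to_nib(agency_values, mapping):
    """Project agency-scale values onto the 20 NIB notches (geometric mean when shared)."""
    return [
        math.prod(agency_values[a - 1] for a in notches) ** (1.0 / len(notches))
        for _, notches in sorted(mapping.items())
    ]


print(build_nib_mapping()[12])  # (11, 12)
```

The geometric mean is a natural choice for quantities, such as default probabilities, that grow multiplicatively along the scale; other interpolation rules are equally defensible.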

Colour and Commentary 25

(Credit Rating Scale and Mappings) : NIB’s internal 21-notch credit scale is not a modelling choice. It was designed, and has evolved over the years, to meet its institutional needs. These include creating a common language around credit quality, supporting the loan-origination process, and aiding risk-management activities.(a) Our modelling activities need to reflect this internal reality. Our position as a small lending institution nonetheless requires looking outwards for data. To do this, explicit decisions about the relationships between the internal scale and external ratings need to be established. This is a modelling question. The solution is a rather practical mapping between NIB’s 20 non-default notches and the first 17 non-default credit ratings for S&P and Moody’s. Its ultimate form, summarized in Table 3.1 , was designed to manage the notch mismatch and the nature of our internal rating scale. We will return to this decision frequently in the following parameter discussion as well as in subsequent chapters.

(a) Internal rating scales are a common tool among lending institutions all over the world.

1.2 Transition Matrices

The previous credit-rating categorization has some direct implications. In particular, it implicitly assumes that the credit quality of its obligors can be roughly described by a small, discrete, and finite set of q states. From a mathematical modelling perspective, this strongly suggests the idea of a discrete-time, discrete-state Markov-chain process.Footnote 6 While there are limits to how far we can push this idea (Chap. 7 will explore this question in much more detail), treating the collection of credit states as a Markov chain is a useful starting point. It immediately provides a number of helpful mathematical results. In particular, the central parametric object used to describe a Markov chain is the transition matrix. Denoted as \(P\in \mathbb {R}^{q\times q}\), it provides a full, parsimonious characterization of the one-period movements from any given state to all other states (including default). Chapter 2 has already demonstrated the central role that the transition matrix plays in determining default and migration thresholds. The question we face now is how to defensibly determine the individual entries of P.

One might, very reasonably, argue for the use of multiple transition matrices differentiated by the type of credit obligor. There are legitimate reasons to expect that transition dynamics are not constant across substantially different business lines. A very large lending institution, for example, may employ a broad range of transition matrices for different purposes. In our current implementation, however, only two transition matrices are employed for all individual obligors: one for corporate borrowers and another for sovereign obligors. This allows for a slight differentiation in transition dynamics between these two classes of credit counterparty.Footnote 7 With q × q individual entries, a transition matrix is already a fairly high-dimensional object. This discussion will focus principally on the corporate transition matrix; the same ideas, it should be stressed, apply equally in the sovereign setting.

Prior to the data analysis, we seek some clarity on what we expect to observe in a generic credit-migration transition matrix. Kreinin and Sidelnikova [31], a helpful source in this area, identify four properties of a regular credit-migration model.Footnote 8 These include:

1. There exists a single, absorbing default state. In other words, once default occurs, it is permanent. While in real life there may be the possibility of workouts or negotiations, this is an extremely useful abstraction in a modelling setting.

2. There exists some \(\tilde {t}\) such that \(p_{iq}(\tilde {t})>0\) for all i = 1, …, q − 1. In words, all states will, at some time horizon, possess a positive probability of default. Empirically, \(\tilde {t}\) lies somewhere between one and three or four years, even for AAA-rated credit counterparties.

3. The determinant of P is positive, \(\det (P)>0\), and all of the eigenvalues of P are distinct. These are necessary conditions for the computation of the matrix logarithm of P, which is important if one wishes to compute a generator matrix to lengthen (or shorten) the time perspective.Footnote 9

4. The matrix, P, is diagonally dominant. Since each entry \(p_{ij}\in [0,1]\) for all i, j = 1, …, q and each row sums to unity, diagonal dominance, \(p_{ii}>\sum _{j\neq i}p_{ij}\), implies \(p_{ii}>1/2\): the majority of the probability mass is assigned to an entity remaining in its current credit state. Simply put, credit ratings are characterized by a relatively high degree of inertia.
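These four properties are easy to check numerically. The sketch below does so for a small, entirely hypothetical four-state matrix (three rating states plus default), not for the S&P or Moody's estimates; it assumes states are ordered from best to worst with default in the last row and column, and the helper name `check_regular_properties` is illustrative only.

```python
import numpy as np

# Hypothetical 4-state example (three rating states plus default); NOT agency data.
P_TOY = np.array([
    [0.90, 0.08, 0.015, 0.005],
    [0.05, 0.85, 0.08, 0.02],
    [0.01, 0.09, 0.80, 0.10],
    [0.00, 0.00, 0.00, 1.00],
])


def check_regular_properties(P, max_horizon=10, tol=1e-10):
    """Check the four regularity properties; default is assumed to be the last state."""
    P = np.asarray(P, dtype=float)
    q = P.shape[0]
    # 1. Absorbing default: the last row is the unit vector (0, ..., 0, 1).
    absorbing = np.allclose(P[-1], np.eye(q)[-1])
    # 2. Some horizon t at which every non-default state has p_iq(t) > 0.
    reachable = any(
        np.all(np.linalg.matrix_power(P, t)[:-1, -1] > 0)
        for t in range(1, max_horizon + 1)
    )
    # 3. Positive determinant and pairwise-distinct eigenvalues.
    eig = np.linalg.eigvals(P)
    gaps = np.abs(eig[:, None] - eig[None, :]) + np.eye(q)  # mask self-differences
    distinct = np.all(gaps > tol)
    positive_det = np.linalg.det(P) > 0
    # 4. Strict row diagonal dominance: p_ii exceeds the sum of the other row entries.
    diag = np.diag(P)
    dominant = np.all(diag > P.sum(axis=1) - diag)
    return {
        "absorbing_default": bool(absorbing),
        "default_reachable": bool(reachable),
        "positive_determinant": bool(positive_det),
        "distinct_eigenvalues": bool(distinct),
        "diagonally_dominant": bool(dominant),
    }


print(check_regular_properties(P_TOY))
```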

These specific properties are useful to keep in mind for credit-risk analysis. An important consequence of the second property, together with the existence of an absorbing default state, is that

\[
\lim _{t\to \infty } p_{iq}(t) = 1, \qquad i = 1,\ldots ,q.
\]

That is, eventually everyone defaults.Footnote 10 In practice, of course, this can take a very long time and, as a consequence, this property does not undermine the usefulness of the Markov chain for modelling credit-state transitions.
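The limiting behaviour can be seen by raising a transition matrix to increasing powers, since the t-period matrix of a time-homogeneous Markov chain is \(P^t\). This minimal sketch reuses a hypothetical four-state toy matrix (not agency data) and tracks the cumulative default probabilities \(p_{iq}(t)\); the helper name `cumulative_default` is illustrative.

```python
import numpy as np

# Hypothetical 4-state toy matrix (default is the last state); NOT agency data.
P_TOY = np.array([
    [0.90, 0.08, 0.015, 0.005],
    [0.05, 0.85, 0.08, 0.02],
    [0.01, 0.09, 0.80, 0.10],
    [0.00, 0.00, 0.00, 1.00],
])


def cumulative_default(P, t):
    """t-period default probabilities p_iq(t) for every non-default starting state."""
    return np.linalg.matrix_power(np.asarray(P, dtype=float), t)[:-1, -1]


# Cumulative default probabilities rise monotonically towards one with the horizon.
for horizon in (1, 10, 100, 1000):
    print(horizon, np.round(cumulative_default(P_TOY, horizon), 4))
```

Even in this deliberately risky toy example, convergence to certain default takes hundreds of periods, which is why the property does not undermine the practical usefulness of the Markov chain.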

Discussing and analyzing actual transition matrices is a bit tricky given their significant dimension. Our generic transition matrix, \(P\in \mathbb {R}^{21 \times 21}\), has 441 individual elements. This does not translate into 441 model parameters, but it is close. The absorbing default state makes the final row redundant, reducing the total parameter count to 420. The final column, which contains the default probabilities, is specified separately, as discussed in the next section. This still leaves a dizzying 400 transition-probability parameters to be determined.
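The parameter count can be written out explicitly for the 21-state case:

\[
\underbrace{21\times 21}_{\text{all entries}} \;-\; \underbrace{21}_{\text{absorbing default row}} \;-\; \underbrace{20}_{\text{default column, set separately}} \;=\; 441-21-20 \;=\; 400 .
\]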

How do we go about informing these 400 parameters? Despite seeming like an insurmountable task, the answer is simple. These values are borrowed, and appropriately transformed, from rating agency estimates.Footnote 11 The principal twist is that some logic is required, as previously discussed, to map the low-dimensional rating agency data into NIB’s 21-notch scale. This important question, along with a number of other key points relating to default and transition properties, is relegated to Chap. 7.Footnote 12 In this parametric discussion, we will examine the basic statistical properties of these external transition matrices. In doing so, an effort will be made to verify, where appropriate, Kreinin and Sidelnikova [31]’s properties. We will also decide if there is any practical difference in employing S&P or Moody’s transition probabilities.

Such a large collection of numbers does not really lend itself to conventional analysis, so it makes sense to attempt some dimension reduction. For each rating category, over a given period of time, there are only four logical things that can happen: an entity can downgrade, upgrade, move into default, or stay the same. While there is only one way to default or stay the same, there are typically many ways to upgrade or downgrade. If we sum over all of these potential outcomes, and stick with the four categories, the result is a simpler viewpoint. Figure 3.2 illustrates these four possibilities for each of the pertinent 18 S&P and Moody’s credit ratings.
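The four-outcome reduction amounts to summing each row of the transition matrix over three index ranges. The sketch below assumes states are ordered from best (index 0) to worst with default last, and applies the reduction to a hypothetical toy matrix rather than to the agency estimates behind Fig. 3.2; `four_outcome_summary` is an illustrative name.

```python
import numpy as np

# Hypothetical toy matrix: states ordered best (index 0) to worst, default last.
P_TOY = np.array([
    [0.90, 0.08, 0.015, 0.005],
    [0.05, 0.85, 0.08, 0.02],
    [0.01, 0.09, 0.80, 0.10],
    [0.00, 0.00, 0.00, 1.00],
])


def four_outcome_summary(P):
    """Collapse each non-default row into (upgrade, stay, downgrade, default) probabilities."""
    P = np.asarray(P, dtype=float)
    q = P.shape[0]
    summary = np.empty((q - 1, 4))
    for i in range(q - 1):                    # skip the absorbing default row
        summary[i] = (
            P[i, :i].sum(),                   # upgrade: any better (lower-index) state
            P[i, i],                          # stay in the current state
            P[i, i + 1:q - 1].sum(),          # downgrade: worse, but non-default, state
            P[i, q - 1],                      # default
        )
    return summary


print(four_outcome_summary(P_TOY))
```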

Figure 3.2 illustrates a number of interesting facts. First, far and away the most probable outcome is staying put. This underscores our fourth property: each transition matrix is diagonally dominant. Second, the probability of downgrade increases steadily as we move down the credit spectrum. Downgrade nonetheless remains substantially more probable than default for all but the lowest credit-quality categories. Indeed, the probability of default is not even visible until we reach the lowest five rungs on the credit scale. Interestingly, the probability of upgrade tends to decline as credit quality decreases, but its profile appears generally flatter than that of downgrades. The final, and perhaps most important, conclusion is that there do not appear to be any important qualitative differences between the S&P and Moody’s transition-probability estimates. This does not immediately imply that there are no differences, but suggests broad similarity at this level of analysis.

Figure 3.3 employs a heat map to further illustrate the long-term, one-year corporate S&P and Moody’s transition matrices. A heat map uses colours to represent the magnitude of the 400+ individual elements in both matrices; lighter colours indicate higher transition probabilities, whereas darker colours represent lower probabilities. The predominance of light colours along the diagonal of both heat maps underscores the strong degree of diagonal dominance already highlighted in Fig. 3.2; indeed, most of the action is in the neighbourhood of the diagonal. Comparison of the left- and right-hand graphics in Fig. 3.3 reveals that, visually at least, the two matrices are quite similar. This is additional evidence suggesting that we need not be terribly concerned about which of these two data sources is employed.

One final technical property needs to be considered. Figure 3.4 illustrates the q eigenvalues associated with our S&P and Moody’s transition matrices. All eigenvalues are comfortably positive, distinct, and less than or equal to unity. The eigenvalue structures of our two alternative transition-matrix estimates are quite similar. Moreover, the determinant of both matrices is a small positive number, and their condition numbers are less than 3.Footnote 13 All of these attributes, consistent with Kreinin and Sidelnikova [31]’s third property, can be interpreted in many ways when combined with the theory of Markov chains. For our purposes, however, we may conclude that P is non-singular and can be used with both the matrix exponential and the matrix (natural) logarithm. These technical properties are not of immediate usefulness, but will prove very helpful in Chap. 7 when building forward-looking stress scenarios.
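The eigenvalue, determinant, and condition-number checks, together with the matrix logarithm and exponential they enable, can be sketched with NumPy and SciPy. The matrix below is a hypothetical four-state toy example, not the S&P or Moody's estimate, and the candidate generator it produces is shown only to illustrate the mechanics; in practice such a logarithm may contain small negative off-diagonal entries that require repair before it is a valid generator.

```python
import numpy as np
from scipy.linalg import expm, logm

# Hypothetical 4-state toy matrix (default last); NOT agency data.
P_TOY = np.array([
    [0.90, 0.08, 0.015, 0.005],
    [0.05, 0.85, 0.08, 0.02],
    [0.01, 0.09, 0.80, 0.10],
    [0.00, 0.00, 0.00, 1.00],
])

eigenvalues = np.linalg.eigvals(P_TOY)
determinant = np.linalg.det(P_TOY)
condition_number = np.linalg.cond(P_TOY)

# Candidate generator Q with expm(Q) = P. The principal logarithm of a real matrix
# with no eigenvalues on the negative real axis is real, so any imaginary part is noise.
Q = np.real(logm(P_TOY))

# Shorter horizon, e.g. half a period: P(1/2) = expm(Q / 2).
P_half = expm(0.5 * Q)

print("eigenvalues:", np.round(np.sort(eigenvalues.real)[::-1], 4))
print("det:", round(determinant, 4), "cond:", round(condition_number, 2))
```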

Colour and Commentary 26

(The Transition Matrix) : Our t-threshold credit-risk economic capital model, as the name clearly indicates, requires the specification of a large number of default and migration thresholds. These are inferred from estimated transition probabilities, which are traditionally stored and organized in a transition matrix. A transition matrix is a wonderfully useful object, which has a number of important properties. The most central is the existence of a permanent absorbing default state that is accessible from all states. Despite all of its benefits, the transition matrix has one drawback: it includes a depressingly large number of parameters. With 20 (non-default) credit states, about 400 transition probabilities need to be determined. We, like (almost) all small lending institutions, simply do not have sufficient internal data to defensibly estimate these values. The solution is to look externally. Examination of comparable S&P and Moody’s transition matrices happily reveals that both sources provide results that are qualitatively very similar. This supports our decision to adopt, subject to an appropriate transformation to our internal scale, the long-term, through-the-cycle transition probabilities published by S&P.

1.3 Default Probabilities

Default probabilities are quietly embedded in the final column of our transition matrix. For credit-risk economic capital, they play a starring role: because they determine the default threshold, they effectively describe each obligor’s distance to default. As a consequence, they merit special examination and attention. We have thus constructed a separate logical approach to their determination. This is an important distinction from the remaining transition probabilities, which are adopted (fairly) directly from external rating-agency estimates.
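To see why the default probability translates into a distance to default, recall that in a threshold model an obligor defaults when its latent creditworthiness variable falls below a threshold chosen so that the probability of crossing it equals the default probability. The sketch below assumes, purely for illustration, a standard Student-t marginal with a hypothetical ν = 10 degrees of freedom and a handful of made-up default probabilities; the chapter's own t-threshold specification (Chap. 2) may differ in normalization and parameters, and `default_thresholds` is an illustrative name.

```python
import numpy as np
from scipy.stats import t as student_t

# Hypothetical one-year default probabilities for a few notches (illustrative only).
pd_example = np.array([0.0002, 0.001, 0.005, 0.02, 0.08])


def default_thresholds(pd, nu=10):
    """Default thresholds as Student-t quantiles; nu = 10 is an assumed value."""
    return student_t.ppf(np.asarray(pd, dtype=float), df=nu)


thresholds = default_thresholds(pd_example)
print(np.round(thresholds, 3))
```

Smaller default probabilities push the threshold further into the left tail, which is the sense in which they measure the distance to default.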

Default is, at least for investment-grade loans, a rather rare event. To this point, given their relatively small values, it has been difficult to assess the default-probability assumptions embedded in our transition matrix. Indeed, they were hardly visible in Fig. 3.2. Figure 3.5 rectifies this situation with a close-up of the S&P and Moody’s default probabilities. Unlike our previous analysis, these 18 credit notches have been projected onto the 20-step NIB scale using the mapping logic from Table 3.1.Footnote 14 The left-hand graphic illustrates the raw values, which exhibit an exponentially increasing trend in default probabilities as we move down the credit spectrum. This is the classical form of default probabilities; each step down the credit-rating scale leads to a multiplicative increase in the likelihood of default.Footnote 15

Default probabilities: The raw values in the left-hand graphic illustrate the exponentially increasing trend in default probabilities as we move down the credit spectrum. The right-hand graphic performs a natural logarithmic transformation of these values; its linear form verifies the exponential observation. Internal NIB values are compared to the implied S&P and Moody’s estimates.

Exponential growth is tough to visualize. It is difficult, for example, to verify the magnitude and strict positivity of the default probabilities of strong credit ratings. The right-hand graphic thus performs a natural logarithmic transformation of the default probabilities. Since the natural logarithm is undefined at zero, the two or three highest external ratings, whose estimated default probabilities are identically zero, cannot be displayed. Incorporating these zeros directly into our model would imply that, at a one-year horizon, default would be impossible for the stronger credits. This is a difficult point. Simply because default over a one-year horizon has never been observed for such counterparties does not imply that it is impossible. Rare, certainly, but not impossible. Setting these values to zero, as a model parameter, is simply not a conservative choice. We resolve this issue by assigning small positive values to the first three default-probability classes.

How does this assignment occur? The data provides a helpful clue. If we divide each successive (non-zero) default probability by its adjacent value, we arrive at a roughly constant ratio of about 1.5; in other words, the exponential growth factor is about 1.5. Extrapolating forwards and backwards using this trend yields the blue line in Fig. 3.5. This might seem a bit naive, but it actually yields values that are highly consistent with, and even slightly more conservative than, those produced by S&P and Moody’s. The only exception is the final category, termed PD20. This corresponds to S&P’s CCC and Moody’s Caa, which is basically an amalgamation of the lowest rungs in their credit scales. It is simply not representative of our lending business. For this reason, the PD20 default-probability value has been capped at 0.2. This is an example of using modelling judgement and business knowledge to tailor the results to one’s specific circumstances.
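A minimal sketch of the constant-growth-factor idea follows. Rather than dividing adjacent values, it estimates the common ratio with a least-squares fit to the logarithm of the strictly positive default probabilities (a closely related, swapped-in technique), extrapolates to the zero-valued top grades, and caps the final notch at 0.2. The input is synthetic, generated with a growth factor of exactly 1.5 and zeros in the top three grades; it is not S&P or Moody's data, and `smooth_default_probabilities` is an illustrative name.

```python
import numpy as np


def smooth_default_probabilities(raw_pd, cap=0.2):
    """Fit log(p_k) = a + b*k on the strictly positive PDs and extrapolate to all grades."""
    raw_pd = np.asarray(raw_pd, dtype=float)
    grades = np.arange(1, raw_pd.size + 1)
    positive = raw_pd > 0                      # zeros cannot be log-transformed
    b, a = np.polyfit(grades[positive], np.log(raw_pd[positive]), deg=1)
    fitted = np.exp(a + b * grades)            # strictly positive for every grade
    fitted[-1] = min(fitted[-1], cap)          # cap the bottom notch (PD20)
    return fitted, np.exp(b)                   # exp(b) is the growth factor


# Synthetic 20-notch input: exact growth factor 1.5, top three grades observed as zero.
raw = 1e-4 * 1.5 ** np.arange(20)
raw[:3] = 0.0
pd_smoothed, growth = smooth_default_probabilities(raw)
print(round(growth, 3), pd_smoothed[:3], pd_smoothed[-1])
```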

Colour and Commentary 27

(Default Probabilities) : Not all transition probabilities, from an economic-capital perspective at least, are created equal. Default probabilities, used to determine the distance to default, play a disproportionately important role in our risk computations. Their central importance motivates a deviation from broad-based adoption of external credit-rating agency estimates. The set of default probabilities is determined by exploiting their empirical exponential form: each subsequent rating is simply assumed to be a fixed multiple of the previous value. Some additional complexity occurs at the end points. External rating agencies’ estimates, due to a lack of actual observations, imply zero (one-year) default probabilities for the highest credit-quality obligors. In the spirit of conservatism, we allocate small positive values, consistent with the multiplicative definition, to these rating classes. At the other end of the spectrum, the lowest rating category is simply not representative of the credit quality in our portfolio. Consequently, the 20th NIB default-probability estimate is capped at 0.2. The end product is a logically consistent, conservative, and firm-specific set of default-probability estimates.

2 Systemic Factors

Although the assumption of default independence would dramatically simplify our computations, it is inconsistent with economic reality. A modelling alternative is an absolute necessity. The introduction of systemic factors is the mechanism used to induce default (and migration) correlation among the credit obligors in our portfolio. While conceptually clear and rather elegant, it immediately raises a number of practical questions. What should be the number and composition of these factors? How do we inform their dependence structure? What is the relative importance of the individual factors for a given credit obligor? All of these important queries need to be answered before the model can be implemented. This section addresses each in turn.

2.1 Factor Choice

The systemic factors driving default correlation are, unlike in many popular model implementations, assumed to be correlated. With orthogonal factors, it is possible to maintain a latent (or unobservable) form. This choice is unavailable in this setting: since we ultimately need to estimate the cross correlations between these factors, it will be necessary to give them concrete identities. Our credit-risk economic-capital model thus includes a total of J = 24 systemic factors; these fall into industrial and regional (or geographic) categories. There are 11 industrial sectors and 13 geographic regions. The dependence structure between these individual factors is, as is often the case in practice, informed by analysis of equity index returns. This means that we require historical equity indices for each of our systemic factors, which somewhat constrains the choice.

Even within these constraints, there is a broad range of choice. Were you to place five people into a room and ask them to give their opinion on the correct set of systemic factors, you would likely get five, or more, opinions. The reason is simple; there is no one correct answer. On one hand, completeness argues for the largest possible factor set. Too many factors, however, will be difficult to manage. Judiciously managing the age-old trade-off between granularity and parsimony is not easy. It ultimately comes down to a consideration of the costs and benefits of including each systemic factor.

These specific sector systemic-factor choices are summarized in Table 3.2. Such an approach requires some kind of internal or external industrial taxonomy, which may, or may not, be specialized for one’s purposes.Footnote 16 With one exception, our industrial classification is mapped to a rather high level. Paper and forest products, which can probably be best viewed as a sub-category of the material or industrial groupings, is further broken out due to its importance to the Nordic region. This is another clear example of tuning the level of model granularity required for the analysis of one’s specific problem.

Table 3.3 provides a visual overview of the set of 13 geographic systemic factors. A very broad or very detailed partition along this dimension is possible, but the granularity is tailored to meet specific business needs. Roughly half of the regional systemic factors, as one would expect given our mandate-driven focus, fall into the Baltic and Nordic regions. Europe is broken down into two main categories: Europe and new Europe. The latter category relates primarily to Eurozone accession countries in central and eastern Europe. The remaining geographic factors split the globe into a handful of large, but typical, zones.

Colour and Commentary 28

(Number of Systemic Factors) : Selecting the proper set of systemic risk factors is not unlike putting together a list of invitees for a wedding. The longer the list, the greater the cost. Some people simply have to be there, some would be nice to have but are unnecessary, while others might actually cause problems. Finally, different people are likely to have diverging opinions. We have opted for 24 industrial and regional factors. This is a fairly large wedding, but it is hard to argue for a much smaller one. With one exception, the lowest level of granularity is used for the industrial classification. On the regional side, roughly one half of the factors relate to NIB member countries. The remaining geographic factors are important for liquidity investments on the treasury side of the business. While there is always scope for discussion and disagreement, from a business perspective, the majority of the selected systemic factors are necessary guests.

2.2 Systemic-Factor Correlations

Having determined the identity of our systemic factors, we move to the central question of systemic-factor dependence. These so-called asset correlations are sadly not observable quantities. Default data can be informative in this regard.Footnote 17 We have elected to follow the well-accepted approach of informing asset correlation through a readily available proxy: equity prices. This is not a crazy idea. Equity prices, and more particularly returns, communicate information about the value of a firm. Cross correlations between movements in a given firm’s value and other firm-value movements provide some insight into the question at hand. Moreover, a broad range of firm-level, geographic, and industry equity-return data is available.

The logical reasonableness of the link between asset and equity data, as well as its ready availability, should not lure us into believing that equity data does not have its faults. Equities are bought and sold in markets; these markets may react to general macroeconomic trends, supply and demand, and investor sentiment in ways not entirely consistent with asset-value dynamics. The inherent market-based interlinkages between individual equities will tend to overstate the true dependence at the firm level. By precisely how much, of course, is rather difficult to state with any degree of accuracy.Footnote 18 The granularity and dimensionality of the model construction, however, leaves us with no other obvious alternatives. It is nonetheless important to be frank and transparent about the quality of equity data as a proxy. In short, it is useful, available, and logically sensible, but far from perfect.

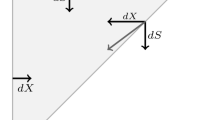

Risk-factor correlations are thus proxied by equity returns; these returns are, practically, computed as logarithmic differences of equity indices. Armed with a matrix of equity returns, \(X\in \mathbb {R}^{\tau \times J}\), where τ represents the number of collected equity return time periods, we may immediately write \(S=\mathrm {cov}(X)\in \mathbb {R}^{J\times J}\). Technically, it is straightforward to decompose our covariance matrix, S, as follows:

\[ S = V\,\Omega\,V, \tag{3.2} \]

where \(V\in \mathbb {R}^{J\times J}\) is a diagonal matrix collecting the individual volatility terms associated with each systemic factor and \(\Omega \in \mathbb {R}^{J\times J}\) is a positive-definite, symmetric correlation matrix. τ, for this analysis, is set to 240 months or 20 years of index data.
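A minimal sketch of this step, assuming Python with NumPy and index levels stored as a (τ + 1) × J array with one column per systemic factor. The simulated data, the helper names `log_returns` and `decompose_covariance`, and the √12 annualization (which presumes serially independent monthly returns) are illustrative assumptions, not the production implementation.

```python
import numpy as np

def log_returns(levels):
    """Log differences of a (tau + 1) x J array of index levels -> tau x J returns."""
    return np.diff(np.log(np.asarray(levels, dtype=float)), axis=0)

def decompose_covariance(X):
    """Split S = cov(X) into a diagonal volatility matrix V and a correlation
    matrix Omega such that S = V @ Omega @ V (Eq. 3.2)."""
    S = np.cov(X, rowvar=False)            # J x J sample covariance
    vol = np.sqrt(np.diag(S))              # factor volatilities
    V = np.diag(vol)
    V_inv = np.diag(1.0 / vol)
    Omega = V_inv @ S @ V_inv              # unit diagonal by construction
    return S, V, Omega

# Hypothetical data: 241 month-end levels for J = 3 simulated indices
rng = np.random.default_rng(0)
shocks = rng.normal(0.0, 0.05, size=(240, 3))
levels = 100.0 * np.exp(np.cumsum(np.vstack([np.zeros((1, 3)), shocks]), axis=0))

X = log_returns(levels)                    # tau = 240 monthly returns
S, V, Omega = decompose_covariance(X)
annual_vol = np.sqrt(12.0) * np.diag(V)    # assumes serially independent monthly returns
```

Building Ω from S and V in this way gives the same matrix as a direct correlation routine such as `np.corrcoef`; the explicit split simply makes the two ingredients of Eq. 3.2 visible.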

Analogous to the transition-matrix setting, it is not particularly informative to examine large-scale tables with literally hundreds of pairwise correlation estimates. Figure 3.6 attempts to gain visual insight into this question through another application—in the left-hand graphic—of a heat map. The lighter the shading of the colour in Fig. 3.6, the closer the correlation is to unity. This explains the light yellow—or almost white—stripe across the diagonal. The right-hand graphic examines the distribution of all (roughly 300) distinct off-diagonal elements.Footnote 19 Overall, the smallest pairwise correlation between two systemic factors is roughly 0.15, with the largest exceeding 0.90. The average lies between 0.5 and 0.6. This supports the general conclusion that there is a strong degree of positive correlation between the selected systemic factors.

(Linear) Systemic correlations: This figure attempts to graphically illustrate the roughly 300 distinct pairwise estimated product-moment (or Pearson) correlation-coefficient outcomes associated with our system of J = 24 industrial and geographic systemic factors. The left-hand side provides a heat map of the correlation matrix, while the right-hand side focuses on the cross section.

This is hardly a surprising observation. All variables are equity indices, which have structural similarities due to typical co-movements of equity markets. The high concentration in the Nordic sector, with strong regional interdependencies, further complicates matters. Many institutions would gather together all Nordic and Baltic factors under the umbrella of a single European risk factor. NIB does not have this luxury. The granularity in this region can hardly be avoided if we desire to distinguish between member country contributions to economic capital—its centrality to our mission makes it a modelling necessity. Nevertheless, this is a highly positively correlated collection of random financial-market variables. It will be important to recall this fact when we turn to the question of systemic-factor loadings.
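The cross-sectional view in the right-hand panel of Fig. 3.6 amounts to collecting each distinct pair of factors exactly once. With J = 24 this yields J(J − 1)/2 = 276 pairs, the "roughly 300" referred to above. The sketch below assumes NumPy; the stylized one-factor matrix is a hypothetical stand-in for the estimated Ω, chosen only so that its pairwise values span a range similar to the one described.

```python
import numpy as np

def off_diagonal_cross_section(Omega):
    """Return the J(J-1)/2 distinct off-diagonal entries of a symmetric correlation matrix."""
    J = Omega.shape[0]
    rows, cols = np.triu_indices(J, k=1)   # strict upper triangle: each pair once
    return Omega[rows, cols]

def summarize(values):
    """Count, minimum, mean, and maximum of the cross section."""
    return {"count": values.size, "min": values.min(),
            "mean": values.mean(), "max": values.max()}

# Stylized 24 x 24 matrix (NOT the estimated one): a one-factor structure
# with hypothetical loadings between 0.4 and 0.95
J = 24
loadings = np.linspace(0.4, 0.95, J)
Omega = np.outer(loadings, loadings)
np.fill_diagonal(Omega, 1.0)

stats = summarize(off_diagonal_cross_section(Omega))
print(J * (J - 1) // 2)   # number of distinct pairs
```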

The next step involves examining the factor volatilities. Figure 3.7 illustrates the elements of our diagonal volatility matrix; each corresponds to an individual equity return series. The average annualized return volatility is approximately 19%, which appears reasonable for equities. Consumer staples, health care and utilities—as one might expect—appear to have the most stable returns with volatility in the 10–15% range. On the upper end of the volatility scale, exceeding 20%, are paper-and-forest products and IT. Generally, deviations of the regional indices from the overall average level are relatively modest. The exception is Iceland, with annualized equity return volatility of approximately 45%, nearly two and a half times the average. This is certainly influenced by the relatively small size of the Icelandic economy, its large financial sector, and Iceland’s macroeconomic challenges in the period following the 2008 financial crisis.

Factor volatilities: This figure illustrates the annualized-percentage factor (i.e., equity index return) volatility of each of the J = 24 industrial and geographic systemic factors. This information is only pertinent if we employ the covariance matrix rather than the correlation matrix for describing the systemic-risk factor dependence.

A legitimate question, which will be addressed in the following sections, is whether or not we have any interest in the volatility of these systemic risk factors. Equity return data is a convenient and sensible proxy for our systemic risk factors. In principle, however, this proxy seeks to inform the dependence between these factors, not their overall level of uncertainty. Volatility is centrally important in market-risk computations, but the threshold-model approach actually involves normalizing away these volatilities.

Outfitted with 20 years of monthly equity index data for 24 logically sensible geographical and industrial systemic-risk factors, a number of practical decisions still need to be taken. Indeed, one could summarize these decisions in the form of three important and interrelated questions:

1. Should we employ the covariance or the correlation matrix to model the dependence structure of our systemic risk-factor system?
2. If we opt to use correlation, should we use the classical, product-moment Pearson correlation coefficient or the rank-based, Spearman measure?
3. Should we employ our full 20-year dataset or some sub-period?

While these are all pertinent questions that need answering, let us begin with the first. It is more of a mathematical choice and, once it is answered, we may turn our attention to the latter two, more empirical, decisions.

2.2.1 Which Matrix?

Should we employ a covariance or a correlation matrix? This might, to the reader, appear to be a rather odd question.Footnote 20 In principle, if managed properly, it should not really matter. Equation 3.2 illustrates, rather clearly, that the same information is found in both matrices. The only difference is scaling; covariances are adjusted, in a quadratic manner, for the factor volatility. The correlation matrix summarizes raw correlation values.

What would be the benefit of using covariances rather than correlations? There does not appear to be any concrete advantage. The factor volatilities play, in the credit-risk economic-capital calculation, absolutely no role. Indeed, their presence requires an additional adjustment. That is, when using the covariance matrix, we must take extra pains to exclude these elements through their inclusion in the normalization constant. It is the correlation between these common systemic factors that bleeds through to our latent creditworthiness index, ΔX, and ultimately, induces default and migration correlations among our individual risk owners. At best, the factor volatilities are a distraction, while at worst, they might possibly have some unforeseen scaling impact on our latent creditworthiness indices. As a consequence, we may as well eliminate them at the outset.

Use of the covariance matrix may also confuse the interpretation of the factor-loading parameters. One would conceptually prefer that, in the final implementation, the factor loadings are not quietly being modified by the factor volatilities. Overall, the difference is not dramatic, but anything that simplifies our understanding of the model and the interpretation of model parameters—without undermining the basic requirements of the model—is difficult to argue against. For this reason, we have definitively elected to use the correlation matrix as the fundamental measure of systemic risk-factor dependence.
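The point about volatilities quietly modifying the loadings can be made concrete. The sketch assumes NumPy and a normalization by \(\sqrt{\beta_i M \beta_i^{T}}\), with M either Ω or S; the two-factor system, its volatilities (10% and 45%, loosely echoing the extremes in Fig. 3.7), and the helper `effective_weights` are hypothetical. Both routes deliver a unit-variance systemic component, but with the covariance matrix the effective weight on each standardized factor becomes \(\beta_{ij}\sigma_j\) after rescaling, so the volatile factor dominates despite equal nominal loadings.

```python
import numpy as np

def effective_weights(beta_i, M):
    """Weights applied to the factor shocks after normalizing by sqrt(beta_i M beta_i^T)."""
    return beta_i / np.sqrt(beta_i @ M @ beta_i)

# Hypothetical two-factor system
Omega = np.array([[1.0, 0.6],
                  [0.6, 1.0]])
vol = np.array([0.10, 0.45])            # annualized volatilities (illustrative)
V = np.diag(vol)
S = V @ Omega @ V                       # Eq. 3.2
beta_i = np.array([0.5, 0.5])           # equal loadings, as intended

# Correlation route: standardized shocks dz ~ N(0, Omega)
w_corr = effective_weights(beta_i, Omega)

# Covariance route: raw shocks are V @ dz, so the weight on each standardized
# factor picks up that factor's volatility
w_cov = effective_weights(beta_i, S) * vol

print(np.round(w_corr, 3))   # equal weights
print(np.round(w_cov, 3))    # tilted towards the volatile factor
```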

2.2.2 Which Correlation Measure?

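The classical notion of correlation, often referred to as the product-moment definition, is typically called Pearson correlation.Footnote 21 It basically compares, for a set of observations associated with two random variables, how each pair of joint outcomes deviates from their respective means. The classic construction, for two arbitrary random variables X and Y, is described by the following familiar (and already employed) expression

\[ \rho(X,Y) = \frac{\mathrm{cov}(X,Y)}{\sqrt{\mathrm{var}(X)\,\mathrm{var}(Y)}} = \frac{\mathbb{E}\big[(X-\mathbb{E}[X])(Y-\mathbb{E}[Y])\big]}{\sqrt{\mathbb{E}\big[(X-\mathbb{E}[X])^{2}\big]\,\mathbb{E}\big[(Y-\mathbb{E}[Y])^{2}\big]}}. \tag{3.3} \]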

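An alternative approach, termed rank correlation, tackles the computation from a different angle. The so-called Spearman’s rank correlation is defined as

\[ r = \frac{\mathrm{cov}\big(r(X),r(Y)\big)}{\sqrt{\mathrm{var}\big(r(X)\big)\,\mathrm{var}\big(r(Y)\big)}}, \tag{3.4} \]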

where r(⋅) denotes the rank outcome of a random variable.Footnote 22 Instead of comparing the distance of each observation from its mean, it compares the rank of each observation to the mean rank. It is thus basically an order-statistic version of the correlation coefficient.Footnote 23 Otherwise, Eqs. 3.3 and 3.4 are structurally identical.
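The structural identity of Eqs. 3.3 and 3.4 can be made explicit: Spearman's coefficient is simply the Pearson formula applied to ranks. A sketch assuming NumPy; the helper names are hypothetical, and the `ranks` function assumes no ties (a library routine such as `scipy.stats.spearmanr` assigns average ranks to tied observations). For a monotone but non-linear relationship such as \(y = x^2\) on positive values, the rank correlation is exactly one, while the product-moment coefficient falls slightly short.

```python
import numpy as np

def pearson(x, y):
    """Product-moment correlation (Eq. 3.3) from paired samples."""
    dx = np.asarray(x, dtype=float) - np.mean(x)
    dy = np.asarray(y, dtype=float) - np.mean(y)
    return np.sum(dx * dy) / np.sqrt(np.sum(dx**2) * np.sum(dy**2))

def ranks(x):
    """Ranks 1..n of the observations; assumes no ties."""
    order = np.argsort(x)
    r = np.empty(len(x))
    r[order] = np.arange(1, len(x) + 1)
    return r

def spearman(x, y):
    """Rank correlation (Eq. 3.4): the Pearson formula applied to ranks."""
    return pearson(ranks(x), ranks(y))

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = x**2                       # monotone but non-linear relationship
rho, r = pearson(x, y), spearman(x, y)
```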

Spearman’s coefficient is popular due to its lower degree of sensitivity to outliers; broadly speaking, it has many parallels, in this regard, to the median. For relatively well-behaved random variables, there is only a modest amount of difference between the two measures. Indeed, for bivariate Gaussian random variates, the relationship between Spearman’s r and Pearson’s ρ is given as,Footnote 24

\[ r = \frac{6}{\pi}\arcsin\left(\frac{\rho}{2}\right), \]

which directly implies that

\[ \rho = 2\sin\left(\frac{\pi r}{6}\right). \]

This may appear to be a complicated relationship, but practically, over the domain of the coefficients, [−1, 1], there is not much difference. Figure 3.8 provides a graphical perspective on Eq. 3.7. While they do not match perfectly, the differences are minute; consequently, imposition of Spearman’s rank correlation—in a Gaussian setting—will imply an extremely similar level of correlation to that of the typical Pearson definition. The point of this discussion is to indicate that the decision to use rank or product-moment correlation, in a Gaussian setting, is more a logical than an empirical choice. In a non-Gaussian setting, however, differences can be more important. This becomes particularly pertinent in the presence of large and potentially distorting outlier observations, which are common in financial-market data.

Comparing Pearson’s ρ to Spearman’s r: The preceding figure examines, across the range of − 1 to 1, the link between two notions of correlation for bivariate Gaussian data: Pearson’s ρ and Spearman’s r. While they do not match perfectly, the differences are minute; consequently, imposition of Spearman’s rank correlation—in a Gaussian setting—will imply a rather similar level of correlation relative to the typical Pearson definition. In non-Gaussian settings, the differences can be more important.
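The Gaussian mapping and its inverse are easy to evaluate numerically. The sketch below, assuming NumPy, scans ρ over [−1, 1] to locate the largest gap between the two measures (about 0.018, near |ρ| ≈ 0.59) and adds a Monte Carlo check at a single hypothetical value, ρ = 0.55, by computing the rank correlation of simulated bivariate Gaussian draws.

```python
import numpy as np

def spearman_from_pearson(rho):
    """Rank correlation implied by Pearson's rho for bivariate Gaussian data."""
    return (6.0 / np.pi) * np.arcsin(rho / 2.0)

def pearson_from_spearman(r):
    """Inverse mapping: Pearson's rho implied by Spearman's r."""
    return 2.0 * np.sin(np.pi * r / 6.0)

grid = np.linspace(-1.0, 1.0, 2001)
gap = spearman_from_pearson(grid) - grid
worst = np.argmax(np.abs(gap))
print(f"largest |r - rho| = {abs(gap[worst]):.4f} at rho = {grid[worst]:+.3f}")

# Monte Carlo check at a single, hypothetical point
rng = np.random.default_rng(0)
rho = 0.55
z = rng.multivariate_normal([0.0, 0.0], [[1.0, rho], [rho, 1.0]], size=200_000)
rank_z = np.argsort(np.argsort(z, axis=0), axis=0)    # column-wise ranks
r_mc = np.corrcoef(rank_z, rowvar=False)[0, 1]        # sample Spearman coefficient
```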

Figure 3.9 provides two heat maps comparing the roughly 300 pairwise linear and rank correlation coefficients across our 24 separate systemic risk variables. While there is little in the way of qualitative difference between the two measures, due to their lower sensitivity to outliers, the rank correlation coefficients are slightly lower than their linear compatriots. The rank correlation heat map is noticeably, albeit not dramatically, darker. Both figures are computed using 20 years of monthly equity return data; this leads to a total of 240 observations for each pairwise correlation estimate. The first twenty years of the twenty-first century have not been, from a financial perspective at least, particularly calm. Equity markets have experienced a number of rather extreme events during this period. The rank correlation will capture these extremes, but in a less dramatic way: an extreme return can, at most, occupy the lowest or highest rank, however large its magnitude. This stability property is rather appealing.

Competing heat maps: The preceding heat maps compare the roughly 300 pairwise linear and rank correlation coefficients across our 24 separate systemic risk variables. There is little in the way of qualitative difference between the two measures, but due to their lower sensitivity to outliers, the rank correlation coefficients are slightly lower than their linear compatriots.

We wish these crisis periods to have an impact on the final results, but not to dominate them. To judge this question, however, we need a bit more information than is found in Fig. 3.9. Figure 3.10 accordingly attempts to help by examining the cross section of off-diagonal elements of Ω. The centres of these two cross-section correlation-coefficient distributions are rather close: 0.57 in the linear case and 0.52 in the rank setting. The ranges of correlation values are qualitatively quite similar, spanning values of about 0.1 to 0.9. There is, however, a slight difference. The cross section of rank correlation coefficients appears to be somewhat more symmetric than that of the linear estimates. Both sets of values lean somewhat towards the upside of the unit interval, but the rank correlation graphic is less extreme.Footnote 25 This is, in fact, exactly how the rank correlation is advertised.
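A small simulation illustrates the advertised robustness. The sketch assumes NumPy and uses two independently generated, hypothetical return series of 200 months, to which a single joint crash month, 20 standard deviations below the mean in both series, is appended. That one observation shifts the Pearson coefficient substantially, while the rank correlation barely moves, since the crash simply takes the lowest rank in each series.

```python
import numpy as np

def pearson(x, y):
    return np.corrcoef(x, y)[0, 1]

def spearman(x, y):
    # Pearson correlation of ranks; continuous data, so ties occur with probability zero
    return np.corrcoef(np.argsort(np.argsort(x)), np.argsort(np.argsort(y)))[0, 1]

rng = np.random.default_rng(42)
x = rng.standard_normal(200)   # hypothetical standardized returns, generated independently
y = rng.standard_normal(200)

# Append one joint "crisis" month, 20 standard deviations below the mean for both series
x_crisis, y_crisis = np.append(x, -20.0), np.append(y, -20.0)

pearson_shift = pearson(x_crisis, y_crisis) - pearson(x, y)
spearman_shift = spearman(x_crisis, y_crisis) - spearman(x, y)
print(f"Pearson moves by {pearson_shift:+.3f}; Spearman moves by {spearman_shift:+.3f}")
```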

There does not appear to be an unequivocally correct choice. The product-moment definition of correlation allows the extremes to exert more influence on the final results; as a risk manager, this has some value, because crisis episodes receive a larger weight. The rank correlation approach—like its sister measure, the median—attempts to generate a more balanced view of dependence. The lower impact of data outliers—typically, in this case, stemming from crisis outcomes—implies slightly lower and more symmetric correlation estimates. We seek, in our economic-capital estimates, to construct a long-term, unconditional estimate of risk consumption.Footnote 26 With this in mind, given the preceding conclusions, we have a preference for the rank correlation.

There are also more technical arguments. Both correlation matrices, as previously indicated, exhibit a significant number of individual correlation coefficient entries exceeding 0.75. Such a highly correlated system is often referred to as multicollinear.Footnote 27 If we were using this system to estimate a set of statistical parameters, this could potentially pose serious problems. Although this is not our specific application of Ω, even a slight dampening of the high level of correlations implies a greater numerical distinction between our J = 24 individual systemic risk factors. Many models, after all, impose orthogonality on their systemic state variables to avoid such issues. On this dimension, therefore, we also have a slight preference for the rank-correlation measure.
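The dampening effect on multicollinearity can be gauged with a condition number, the ratio of the largest to the smallest eigenvalue. The sketch below assumes NumPy and, as a deliberately stylized simplification, replaces each estimated matrix with an equicorrelation matrix at the reported averages (0.57 for linear, 0.52 for rank); the real matrices are not equicorrelated. For this structure the eigenvalues are \(1+(J-1)\rho\) and \(1-\rho\), and the condition number falls from roughly 33 to roughly 27.

```python
import numpy as np

def equicorrelation(J, rho):
    """J x J correlation matrix with every off-diagonal entry equal to rho."""
    Omega = np.full((J, J), rho)
    np.fill_diagonal(Omega, 1.0)
    return Omega

def condition_number(Omega):
    """Ratio of largest to smallest eigenvalue; large values signal multicollinearity."""
    eig = np.linalg.eigvalsh(Omega)   # ascending order for symmetric matrices
    return eig[-1] / eig[0]

J = 24
kappa_linear = condition_number(equicorrelation(J, 0.57))   # average linear correlation
kappa_rank = condition_number(equicorrelation(J, 0.52))     # average rank correlation
```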

Colour and Commentary 29

(Flavour of Correlation) : Correlation refers to the interdependence between a pair of random variables. There are a variety of ways that it might be practically measured. Two well-known alternatives are the product-moment and rank correlation coefficients. Although they attempt to address the same question, they approach the problem from different angles. Since we find ourselves in the business of computing a large number of pairwise systemic-factor correlations, this distinction is quite relevant for us. On the basis of lower sensitivity to extreme events, greater cross-sectional symmetry, and a modest dampening of the highly collinear nature of our systemic risk-factor system, we have opted to employ the rank-correlation measure to estimate systemic-factor correlations. In the current analysis, the difference is relatively small. Moreover, nothing suggests that these two measures will deviate dramatically in the future. Our choice is thus based upon the perceived conceptual superiority of the rank-correlation measure.

2.2.3 What Time Period?

There are two time-related extremes to be considered in the measurement of risk: long-term unconditional and short-term conditional. A virtual infinity of possible alternatives exists along the spectrum between these two endpoints. Following basic regulatory principles, an economic-capital model is a long-term, unconditional, through-the-cycle risk estimator. This implies that a relatively lengthy time period should be employed for the estimation of our systemic risk-factor correlations. While this helps frame the choice, it does not entirely answer our question. We have managed to source 20 years of monthly equity time-series data for each of our J = 24 systemic risk factors. Should we use it all, or should we employ some subset of this data? A 10-, 15-, or 20-year period would still be consistent, at least in principle, with through-the-cycle estimation.

Figure 3.11 illustrates, one final time, two rank correlation heat maps for our J = 24 systemic risk factors. The only distinction between the two heat maps is that they are estimated using varying time periods. The 10-year values are based on the months from January 2010 to December 2019, while the 20-year period utilizes the 240 monthly observations from January 2000 to December 2019. On a superficial level, the colour patterns in the two heat maps look highly similar. The 20-year estimates, however, look to be, on average, a bit lighter. This implies higher levels of correlation, which is not terribly surprising, given that the longer period incorporates the most severe parts of the great financial crisis. There is general agreement that financial-variable correlations tend to trend higher—thereby reducing the value of diversification—during periods of turmoil.Footnote 28

Time-indexed heat maps: These heat maps compare the roughly 300 pairwise rank correlation coefficients—over the last 10 and 20 years working backwards from December 2019—across our 24 separate systemic risk variables. The most recent 10-year period appears structurally similar, but exhibits significantly lower levels of correlation than the associated 20-year time horizon.

Figure 3.12 illustrates, again using only the rank dependence measure, the cross section of pairwise (rank) correlation coefficients over our 10- and 20-year periods, respectively. Average correlation, as we’ve seen before, is roughly 0.52 over the full 20-year period. It falls to 0.46 when examining only the last 10 years. We further observe that both the location and dispersion of the two cross sections appear to differ; the most recent 10-year period exhibits generally lower levels of systemic-risk factor dependence.

Time-indexed cross sections: This figure illustrates, again using the rank dependence measure, the cross section of pairwise correlation coefficients over the 10- and 20-year periods working backwards from December 2019, respectively. Both the location and dispersion of the two cross sections appear to differ; the last 10-year period exhibits generally lower levels of systemic-risk factor dependence.
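The window comparison can be sketched as follows, assuming NumPy and rows ordered from oldest to newest. The simulated panel is hypothetical: a common shock carries a weight of 0.9 in the first decade (a stylized crisis era) and 0.3 in the second, so the 20-year window should show a higher average rank correlation than the most recent 10 years. It mirrors the direction, not the magnitude, of the pattern in Fig. 3.12.

```python
import numpy as np

def rank_correlation_matrix(X):
    """Spearman correlation matrix of the columns of a tau x J array (assumes no ties)."""
    R = np.argsort(np.argsort(X, axis=0), axis=0)   # column-wise ranks
    return np.corrcoef(R, rowvar=False)

def mean_off_diagonal(Omega):
    """Average of the distinct pairwise correlations."""
    rows, cols = np.triu_indices(Omega.shape[0], k=1)
    return Omega[rows, cols].mean()

def window(X, months):
    """Most recent `months` observations, assuming rows run from oldest to newest."""
    return X[-months:]

# Hypothetical monthly panel covering 240 months
rng = np.random.default_rng(7)
tau, J = 240, 8
b = np.where(np.arange(tau) < 120, 0.9, 0.3)[:, None]   # common-shock weight by month
common = rng.standard_normal((tau, 1))
X = b * common + np.sqrt(1.0 - b**2) * rng.standard_normal((tau, J))

avg_20y = mean_off_diagonal(rank_correlation_matrix(window(X, 240)))
avg_10y = mean_off_diagonal(rank_correlation_matrix(window(X, 120)))
print(f"20-year average: {avg_20y:.2f}; 10-year average: {avg_10y:.2f}")
```

Extending the dataset over time, as described below, amounts to appending rows to `X` and recomputing over whichever window is retained.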

Other than conservatism, few logical arguments favour the 20-year period over the shorter 10-year time span. Either time interval fulfils the basic requirements of a through-the-cycle risk estimator. A 20-year period could, of course, be considered more appropriate for a long-term unconditional correlation estimate. More importantly, the full 20-year period includes a broader range of equity return outcomes—including the great financial crisis—and consequently generates slightly more conservative estimates. For this reason, the decision is to use the full 20-year period and, over time, simply continue to add to the existing dataset. Each year, this decision on the overall span of data is revisited to ensure both representativeness and consistency with our economic-capital objectives.

Colour and Commentary 30

(Length of Parameter-Estimation Horizon) : When estimating parameters for a long-term through-the-cycle perspective, we would theoretically prefer the longest possible collection of historical time series. Such a dataset is likely to permit averaging over the greatest possible number of observed business cycles. There are two catches. First, pulling together such a dataset can be both difficult and expensive. Second, even if you succeed, there are dangers in going far back in time. Too far into the past and—due to structural changes in economic relationships—the data may not be representative of current conditions. This forces the analyst into an awkward dance: the through-the-cycle perspective requires a lengthy data history, but not too lengthy. Our approach is, where available, to start with a roughly 20-year time horizon. We then work with this data and carefully examine various sub-periods to understand the implications of different choices. Endeavouring to find conservative and defensible parameters, we ultimately select an appropriate horizon.

2.3 Distinguishing Systemic Weights and Factor Loadings

Systemic-factor weights and loading parameters, while related, are asking two slightly different questions. The systemic weight attempts to answer the following query:

How important is the overall systemic component, in the determination of the creditworthiness index, relative to the idiosyncratic dimension?

The systemic weight is, following from this point, simply a number between zero and one. These are the parameter values, \(\{\alpha_1,\ldots,\alpha_I\}\), introduced in Chap. 2. A value of zero, for a given credit obligor, suggests that only idiosyncratic risk matters for determination of its migration and default risk outcomes. Were this to apply to all credit counterparties, we would, in essence, have an independent-default model. On the other hand, a systemic weight of unity places all of the importance on the systemic factor. Gordy [18]’s asymptotic single-risk-factor model is an example of an approach that implies the presence of only systemic risk.Footnote 29 A defensible position, of course, lies somewhere in between these two extremes.

Factor loadings focus on a different, but again related, dimension. They seek to answer the following question:

How important is each of the J individual systemic risk factors—again, for the calculation of the creditworthiness index—to a given credit obligor?

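Factor loadings, for a given counterparty, are thus not, as in the systemic-weight case, a single value, but rather a vector in \(\mathbb {R}^{J}\). Let’s continue to refer to each of these vectors as \(\beta _i\in \mathbb {R}^{1\times J}\) for the ith credit counterpart; this allows us to consistently write the entire matrix of factor loadings as,

\[ \beta = \begin{bmatrix} \beta_1 \\ \vdots \\ \beta_I \end{bmatrix} = \begin{bmatrix} \beta_{11} & \cdots & \beta_{1J} \\ \vdots & \ddots & \vdots \\ \beta_{I1} & \cdots & \beta_{IJ} \end{bmatrix} \in \mathbb{R}^{I\times J}. \]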

Such a constellation of parameters, for each i = 1, …, I, implies a potentially very rich mathematical structure. With J = 24 and I ≈ 600, our β factor loading matrix contains almost 15,000 individual entries. Such flexibility can offer benefits, but it may also dramatically complicate matters.

The structure of each \(\beta_i\) essentially creates a linear combination of our J risk factors to act as the systemic contribution for that obligor. Let’s consider a few extreme cases, since they might provide a bit of insight. Imagine that each \(\beta_i\) were constant (however one might wish to define it) across all counterparties. The consequence would be, in fact, a single-factor model. We could easily, in this case, simply replace our J factors with a single linear combination of these variables. Each pair of obligors in the model would thus have a common level of factor-, asset-, and default correlation. Such an approach would, of course, undermine the whole idea of introducing a collection of J systemic variables.

At the opposite end of the spectrum, one could assign distinctly different non-zero values to each of the roughly 15,000 elements in β. The implication—setting aside the practical difficulties of such an undertaking for the moment—is that each obligor’s systemic contribution would be a unique linear combination of our J systemic variables. Given two obligors, i and j, the vectors \(\beta_i\) and \(\beta_j\) would play a central role in determining their level of default correlation. Indeed, this latter point holds in a general sense.
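To see how \(\beta_i\), \(\beta_j\), and the systemic weights combine into a pairwise asset correlation, the sketch below assumes a particular form for the latent creditworthiness index, namely \(\Delta X_i = \sqrt{\alpha_i}\,\beta_i\Delta z/\sqrt{\beta_i\Omega\beta_i^{T}} + \sqrt{1-\alpha_i}\,\epsilon_i\) with \(\Delta z \sim N(0,\Omega)\) and independent standard-normal \(\epsilon_i\). This form is an illustrative assumption in the spirit of Chap. 2 and the normalization in Eq. 3.11, not a restatement of the model. The three factors, the loadings, and α are hypothetical. When two obligors share identical loadings, the correlation collapses to \(\sqrt{\alpha_i\alpha_j}\), which is exactly the single-factor situation described above.

```python
import numpy as np

def asset_correlation(beta_i, beta_j, Omega, alpha_i, alpha_j):
    """Correlation of two latent creditworthiness indices under the ASSUMED form
    dX_i = sqrt(alpha_i) * (beta_i dz) / sqrt(beta_i Omega beta_i^T) + sqrt(1 - alpha_i) * eps_i,
    with dz ~ N(0, Omega) and independent standard-normal eps_i."""
    norm_i = np.sqrt(beta_i @ Omega @ beta_i)
    norm_j = np.sqrt(beta_j @ Omega @ beta_j)
    return np.sqrt(alpha_i * alpha_j) * (beta_i @ Omega @ beta_j) / (norm_i * norm_j)

# Hypothetical 3-factor system: [industry A, country X, country Y]
Omega = np.array([[1.0, 0.5, 0.4],
                  [0.5, 1.0, 0.7],
                  [0.4, 0.7, 1.0]])
b_same = np.array([0.5, 0.5, 0.0])    # industry A, country X
b_other = np.array([0.5, 0.0, 0.5])   # industry A, country Y
alpha = 0.3                           # hypothetical common systemic weight

rho_same = asset_correlation(b_same, b_same, Omega, alpha, alpha)    # identical loadings
rho_cross = asset_correlation(b_same, b_other, Omega, alpha, alpha)  # different countries
```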

Once again, an appropriate response to this challenge certainly lies somewhere between these two extreme ends of the spectrum. The actual estimation of the factor loadings and systemic weights should also be informed, in principle, by empirical data. A significant distinction between the factor loadings and systemic weights is that the former possesses dramatically greater dimensionality. The specific data that one employs and, perhaps equally importantly, how these parameters are structured are important questions. The following sections outline our choices, and the related thought processes, in both of these related areas.

2.4 Systemic-Factor Loadings

Let us begin with the factor loadings; these values are—algebraically speaking—closer to the actual systemic factor. Determination of our factor loadings will, as a consequence, turn out to be rather important in the specification of the systemic weights. As indicated, each \(\beta_i\) vector determines the relative weight of the individual systemic factors in the creditworthiness of the ith distinct credit obligor. Some procedure is required to determine the magnitude of each \(\beta_{ij}\) parameter for i = 1, …, I and j = 1, …, J.

2.4.1 Some Key Principles

When beginning any modelling effort, it is almost invariably useful to give some thought to one’s desired conceptual structure. In this case, there is a potentially enormous number of parameters involved, which will create problems of dimensionality and, more practically, statistical identification. Some direction would be helpful. A bit of reflection reveals a few logical principles that we might wish to impose upon this problem:

1. Obligors with the same sectoral and geographical profiles should share the same loadings onto the systemic factors. If this did not hold, it would be very difficult, or even impossible, to interpret and communicate the results.Footnote 30
2. A given obligor should load only onto those sectoral and geographical factors to which it is directly exposed. The correlation matrix, Ω, describes the dependence structure of the underlying equity return factors. As a consequence, the interaction between the factors is already captured. If an obligor were then to load onto all factors, untangling the dependence relationships would become rather messy. This principle can thus inform sensible parameter restrictions.
3. Factor loadings should be both positive and restricted to the unit interval. There is no mathematical or statistical justification for this principle; it stems solely from a desire to enhance our ability to interpret and communicate these choices.
4. A minimal number of systemic factors should be targeted; this is the principle of model parsimony. Not only does this facilitate implementation—in terms of dimensionality and computational complexity—but it also minimizes problems associated with collinearity.
5. To the extent possible, the factor loadings should be informed by empirical data. Since asset returns are not, strictly speaking, observable, it is necessary to identify a sensible proxy. Moreover, the previous principles may restrict the goodness of fit to this proxy data. Nonetheless, this would form an important anchor for the estimates.

While each of these principles makes logical sense, nothing suggests that all can be simultaneously achieved. It may be the case, for example, that some of these principles are mutually exclusive.

2.4.2 A Loading Estimation Approach

The structure of the threshold model provides clear insights into a possible estimation procedure for the factor loadings. In particular, for a given obligor i, we have that

\[ \beta_i\,\Delta z = \sum_{j=1}^{J}\beta_{ij}\,\Delta z_j, \tag{3.9} \]

where \(\Delta z\in \mathbb {R}^{J\times 1}\). The right-hand side of Eq. 3.9 clearly illustrates the fact that each column of the \(\beta_i\) row vector is a linear weight upon the systemic factors. Imagine that we could identify K individual equities with similar properties: that is, stemming from the same geographic region, operating in the same industry, and possessing similar overall size. Given τ return observations of the kth equity—corresponding to the equivalent time periods for our systemic factors—we could construct the following equation:

\[ r_{kt} = \sum_{j=1}^{J}\beta_{ijk}\,\Delta z_{jt} + \epsilon_{kt}, \tag{3.10} \]

for t = 1, …, τ and k = 1, …, K, and where \(r_{kt}\) is the t-period return of the kth equity in our collection. This is, of course, an ordinary least squares (OLS) problem.Footnote 31 In this setting, the β’s simply reduce to regression coefficients. Further inspection of Eq. 3.10 reveals a certain logic; the return of the kth equity is written as a linear combination of the set of systemic factors plus an idiosyncratic component. This is rather close to what we seek. Again, this amounts to using equity behaviour to estimate asset returns.

Equation 3.10, while logically promising, is not without problems. First of all, there are K equities in each category. The natural consequence is thus K × J individual factor-loading estimates. One could presumably solve this problem by taking an average—basically integrating out the K dimension—of the individual \(\hat {\beta }_{ijk}\) parameters over k = 1, …, K for each j = 1, …, J.

The second problem, however, is dimensionality. Use of Eq. 3.10 implies a separate weight on each of the J systemic risk factors. Such a complex structure is not easy to interpret. The larger number of parameters also raises issues of parameter robustness. Estimating J = 24 separate parameters with perhaps 10 or 20 years of monthly data is certainly possible, but the sheer number of regression coefficients, the high degree of collinearity between the systemic-risk explanatory variables, and the necessity of averaging estimates collectively represent significant estimation challenges. Computing standard errors is not easy in such a setting and, more importantly, they are unlikely to be entirely trustworthy.

A third problem, making matters even worse, is the fact that nothing in the OLS framework prevents individual \(\beta_{ij}\) values from being negative, further complicating interpretation. Some additional normalization is possible to force positivity, of course, but this only adds to the overall complexity and introduces an ad hoc, difficult-to-justify element into the estimation procedure.

There are, therefore, at least three separate problems: averaging, dimensionality, and non-positivity. Some of these problems can be mitigated with clever tricks, but the point is that estimating factor-loading coefficients—even with strong simplifying assumptions—is fraught with practical headaches. The results are neither particularly robust nor satisfying. We will, in a few short sections, find ourselves in a similar situation with regard to the systemic weights. In this case, the complexity and dimensionality are unavoidable, which argues for maximal simplicity.
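The regression-and-averaging procedure of Eq. 3.10 can be sketched to make these three problems tangible. The sketch assumes NumPy; the simulated factors (equicorrelated at 0.55), the "true" loadings of 0.5 on two hypothetical factor indices (3 and 17), the noise level, and the helper `ols_loadings` are all illustrative assumptions. Even though the data are generated with only two positive loadings, the averaged OLS estimates spread small non-zero values, some of them negative, across the remaining 22 factors.

```python
import numpy as np

def ols_loadings(r, dz):
    """Column-by-column OLS fit of Eq. 3.10 (no intercept).
    r: tau x K equity returns; dz: tau x J factor returns. Returns a J x K array."""
    coef, *_ = np.linalg.lstsq(dz, r, rcond=None)
    return coef

rng = np.random.default_rng(1)
tau, J, K = 240, 24, 10

# Hypothetical, highly collinear factor returns: equicorrelation of 0.55
Omega = np.full((J, J), 0.55)
np.fill_diagonal(Omega, 1.0)
dz = rng.standard_normal((tau, J)) @ np.linalg.cholesky(Omega).T

# Hypothetical "true" loadings: 0.5 on one industry factor (3) and one regional factor (17)
true_beta = np.zeros(J)
true_beta[[3, 17]] = 0.5
r = (dz @ true_beta)[:, None] + 0.5 * rng.standard_normal((tau, K))

beta_hat_k = ols_loadings(r, dz)           # J x K individual estimates
beta_hat = beta_hat_k.mean(axis=1)         # average over k, i.e. integrate out K
n_negative = int(np.sum(beta_hat < 0))     # loadings with the "wrong" sign
print(f"{n_negative} of {J} averaged loadings are negative")
```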

2.4.3 A Simplifying Assumption

These sensible reasons to strongly restrict systemic-factor loadings lead to the fairly reasonable question: should we even formally estimate these parameters? One might simply load—for these non-public-sector cases—equally onto each obligor’s geographic and industrial systemic factors. The consequence of this legitimate reflection is the following extremely straightforward set of factor-loading coefficients:

- only a credit entity’s geographic and industrial factor loadings are non-zero;
- each non-zero factor loading is set to 0.5; and
- the only exception is a weight of unity on a public-sector counterpart’s geographic loading.

The consequence is a sparse β-matrix entirely populated with non-estimated parameters.Footnote 32 This approach allows us to fulfil four of our five previously highlighted principles. It controls the number of parameters, creates consistency, ensures positivity, and dramatically aids interpretability. The only shortcoming is that the parameter values are not informed by empirical data. This would appear to be the price to be paid for the attainment of the other points.
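The three loading rules translate directly into code. The sketch assumes NumPy; the six-factor list, the obligors, and the helper `loading_row` are hypothetical stand-ins for the actual 24 factors and roughly 600 counterparts. Each row sums to one and contains at most two non-zero entries, so with J = 24 more than 90% of the roughly 14,400 entries of β would be zero.

```python
import numpy as np

# Hypothetical, abbreviated factor list (the actual model uses J = 24 factors)
FACTORS = ["Industrials", "Utilities", "IT", "Finland", "Sweden", "Iceland"]
IDX = {name: j for j, name in enumerate(FACTORS)}

def loading_row(region, industry=None, public_sector=False):
    """One row of beta: 0.5 on the obligor's region and industry factors, or
    1.0 on the region factor alone for a public-sector counterpart; zero elsewhere."""
    row = np.zeros(len(FACTORS))
    if public_sector:
        row[IDX[region]] = 1.0
    else:
        row[IDX[region]] = 0.5
        row[IDX[industry]] = 0.5
    return row

# Hypothetical obligors
obligors = [
    {"region": "Finland", "industry": "Utilities"},
    {"region": "Sweden", "industry": "IT"},
    {"region": "Iceland", "public_sector": True},
]
beta = np.vstack([loading_row(**o) for o in obligors])   # I x J, mostly zeros
sparsity = 1.0 - np.count_nonzero(beta) / beta.size
```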

There is another constructive way to think about this important simplifying assumption. The systemic risk-factor correlation matrix, Ω, possesses a certain dependence structure. The choice of factor loadings further combines our systemic factors to establish some additional variation of obligor-level correlations. There are, however, many possible combinations of factor-loading parameters that achieve basically the same set of results. In a statistical setting, such a situation is referred to as overidentification.Footnote 33 To simplify this fancy term, we can imagine a situation of trying to solve two equations in three unknowns. The problem is not that it cannot be solved, but rather that it admits an infinity of possible solutions. Resolving such a situation typically requires restricting it somewhat through the imposition of some kind of constraint.Footnote 34 The current set of non-estimated factor loadings can thus be thought of as a collection of constraints, or over-identifying restrictions, used to permit a sensible model specification.

Colour and Commentary 31

(Factor-Loading Choices) : Having established a set of five key principles for the specification of factor-loading parameters, a number of potential estimation options are found wanting. They require averaging, lead to relatively high degrees of dimensionality, and pose difficulties for statistical inference. Dimension reduction and normalization solve some of these problems, but this leads to relatively small degrees of (economic) variation between individual obligors. Ultimately, therefore, we have decided to employ a simple set of rules for the specification of the factor-loading parameters. This choice fulfils all of our principles, save one: the desire to empirically estimating our factor-loading values. This choice was not taken lightly and can, conceptually, be viewed as a set of overidentifying restrictions upon the usage of our collection of systemic risk factors.
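A small numerical illustration of why loadings are not identified, under assumed inputs: once each obligor's systemic component is normalized to unit variance, only the direction of its loading vector matters, so any positive rescaling of an obligor's loadings yields exactly the same systemic correlation. The three-factor correlation matrix and the loading vectors below are hypothetical.

```python
import numpy as np

def systemic_correlation(beta_i, beta_k, omega):
    """Correlation between two obligors' normalized systemic components."""
    cov = beta_i @ omega @ beta_k
    return cov / np.sqrt((beta_i @ omega @ beta_i) * (beta_k @ omega @ beta_k))

# Hypothetical factor order: [region 1, region 2, sector]
omega = np.array([[1.0, 0.6, 0.3],
                  [0.6, 1.0, 0.4],
                  [0.3, 0.4, 1.0]])
beta_a = np.array([0.5, 0.0, 0.5])   # region 1 + sector
beta_b = np.array([0.0, 0.5, 0.5])   # region 2 + sector

base = systemic_correlation(beta_a, beta_b, omega)
# Rescaling either obligor's loadings leaves the correlation unchanged,
# so (0.5, 0.5), (1, 1), (0.3, 0.3), ... are observationally equivalent.
scaled = [systemic_correlation(c_a * beta_a, c_b * beta_b, omega)
          for c_a, c_b in [(2.0, 1.0), (0.6, 3.4), (4.0, 4.0)]]
print(base, scaled)  # the four values coincide up to rounding
```

Fixing each non-zero loading at 0.5 is one way of picking a single representative from each such family of equivalent solutions.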

2.4.4 Normalization

Introduction of correlated systemic factors, without some adjustment, will distort the variance structure of our collection of latent creditworthiness state variables. Unit variance of these threshold state variables is necessary for the straightforward determination of default and migration events. A small correction is consequently necessary to preserve this important property of each systemic random observation. Mathematically, the task is relatively simple. For the ith risk obligor, the contribution of the systemic component—abstracting, for now, from the systemic-weight parameter—is given as,

where \(\beta _i\in \mathbb {R}^{1\times J}\) is the ith row of the \(\beta \in \mathbb {R}^{I\times J}\) matrix.Footnote 35

The definition in Eq. 3.11 was already introduced in Chap. 2. Equipped with our factor correlations and loading choices, it is interesting to examine the magnitude of these normalization constants (i.e., the denominator of Eq. 3.11). Figure 3.13 displays the range of these values, for an arbitrary date in 2020, used to ensure that the systemic component has unit variance. The impact is rather gentle: the adjustment factors range from roughly 0.75 to one, with an average value of approximately 0.95. Normalization thus represents a small, but essential, adjustment to maintain the integrity of the model’s threshold structure.
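To see why the adjustment is modest, the normalization constants can be computed directly. The sketch assumes, as is standard for this kind of normalization, that the denominator of Eq. 3.11 is the standard deviation \(\sqrt{\beta_i\Omega\beta_i^T}\) of the unnormalized systemic component; the factor correlations below are hypothetical.

```python
import numpy as np

# Hypothetical factor order: [region, sector A, sector B]
omega = np.array([[1.0, 0.125, 0.62],
                  [0.125, 1.0, 0.3],
                  [0.62, 0.3, 1.0]])

beta = np.array([[0.5, 0.5, 0.0],    # corporate: region + sector A
                 [0.5, 0.0, 0.5],    # corporate: region + sector B
                 [1.0, 0.0, 0.0]])   # public sector: region only

# sqrt(beta_i @ omega @ beta_i) for every row i, computed in one pass
norm_const = np.sqrt(np.einsum("ij,jk,ik->i", beta, omega, beta))
print(norm_const)  # approximately [0.75, 0.9, 1.0]

# Dividing each row by its constant restores unit systemic variance
beta_normalized = beta / norm_const[:, None]
variances = np.einsum("ij,jk,ik->i", beta_normalized, omega, beta_normalized)
```

With equal loadings of 0.5, the constant reduces to \(\sqrt{(1+\rho)/2}\), where ρ is the correlation between the obligor's geographic and industrial factors, so it can never exceed one; a value of 0.75 corresponds to ρ = 0.125, and public-sector obligors always receive exactly one.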

2.5 Systemic Weights

We were able to avoid some complexity in the specification of the factor loadings; we are not quite as lucky in the case of systemic weights. A methodology is required to approximate the systemic-weight parameters associated with each group of credit counterparties sharing common characteristics. Consider, as in the previous setting, a collection of N credit entities from the same region with roughly the same total assets (i.e., firm size) and the same industry classification. Let us begin with a proposition. Imagine that the following identity holds:

What does this mean? First, we are assuming that each of the N individual obligors in this sub-category has a common systemic weight, \(\alpha_N\). The proposition holds that a reasonable estimator for \(\alpha_N\) is the average correlation between these N assets. There are, of course, \(N^2\) possible pairwise combinations of these N entities. Subtracting the N diagonal elements yields \(N(N-1)\) combinations. Only half of these elements, of course, are unique. We need only examine the lower off-diagonal elements, which explains the conditions on the double sum and the \(\frac{1}{2}\) in the denominator of the constant.
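The estimator described above, the average of the \(N(N-1)/2\) unique pairwise correlations, can be sketched as follows. The function names are hypothetical, and the input is assumed to be a (T, N) array of equity returns for a single sub-category.

```python
import numpy as np

def average_offdiagonal(corr):
    """Average of the strictly lower-triangular entries of a correlation matrix."""
    corr = np.asarray(corr)
    n = corr.shape[0]
    rows, cols = np.tril_indices(n, k=-1)   # the N(N-1)/2 unique pairs
    return corr[rows, cols].sum() / (n * (n - 1) / 2)

def estimate_systemic_weight(returns):
    """Systemic-weight estimate for one sub-category; returns has shape (T, N)."""
    corr = np.corrcoef(returns, rowvar=False)  # columns are equity series
    return average_offdiagonal(corr)

example = np.array([[1.0, 0.2, 0.4],
                    [0.2, 1.0, 0.6],
                    [0.4, 0.6, 1.0]])
print(average_offdiagonal(example))  # mean of 0.2, 0.4, and 0.6
```

Restricting attention to the strict lower triangle avoids both the unit diagonal and the double counting of symmetric pairs.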

Under what conditions is Eq. 3.13 true? This requires a bit of tedious algebra and a few observations. First, the expected value of each \(\Delta X_n\) and \(\Delta X_m\) is, by construction, equal to zero for all \(n, m = 1, \dots, N\). Second, the idiosyncratic factors, \(\{\Delta w_n; n = 1, \dots, N\}\), are independent of all of the systemic factors; the expectation of the product of any idiosyncratic and systemic factor will thus vanish. Finally, we recall that only two factor loadings, as highlighted in previous discussion, are non-zero. These final happy facts eliminate many terms from our development.

Let’s begin with the correlation term in the double sum of our identity from Eq. 3.13 and see how it might be simplified. Working from first principles, we have

where \(I_k\) and \(G_k\) denote the industrial and geographic loading from the kth equity series, respectively. We now have a clearer idea of the condition required to establish our identity in Eq. 3.13. If the expectation equals unity, then the identity holds. In this case, the double sum yields the same value as the denominator in the constant preceding the sum. These terms cancel one another, establishing equality between the left- and right-hand sides; our proposition holds.

Under what conditions does the expectation in Eq. 3.14 reduce to one? It turns out that the collection of N equity series needs to share the same industrial and geographic factors (and factor loadings). In other words, the factor correlations among the N members of our dataset must be identical. Practically, this means that \(\mathrm {B}_{I_{m}}=\mathrm {B}_{I_{n}}\) and \(\mathrm {B}_{G_{m}}=\mathrm {B}_{G_{n}}\).Footnote 36 In this case, we have that

by construction from Eq. 3.12. Naturally, this estimator is only appropriate under the assumption that the model actually holds.
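The condition can be checked numerically under assumed inputs. Writing the model, as an assumption about the form of Eq. 3.12, as \(\Delta X_n = \sqrt{\alpha_N}\,Y_n + \sqrt{1-\alpha_N}\,\Delta w_n\), where \(Y_n\) is the normalized, unit-variance systemic component, the pairwise correlation is \(\alpha_N\,\mathbb{E}[Y_n Y_m]\). The sketch below uses a hypothetical three-factor correlation matrix to show that the expectation equals one when two obligors share factors and loadings, and falls below one otherwise, which would bias the average-correlation estimator downward.

```python
import numpy as np

def model_pairwise_correlation(alpha, beta_n, beta_m, omega):
    """alpha * E[Y_n Y_m] for unit-variance normalized systemic components."""
    expectation = (beta_n @ omega @ beta_m) / np.sqrt(
        (beta_n @ omega @ beta_n) * (beta_m @ omega @ beta_m))
    # Idiosyncratic terms are independent of everything else, so they drop out
    return alpha * expectation

# Hypothetical factor order: [region, sector A, sector B]
omega = np.array([[1.0, 0.5, 0.3],
                  [0.5, 1.0, 0.4],
                  [0.3, 0.4, 1.0]])
alpha = 0.35
sector_a_firm = np.array([0.5, 0.5, 0.0])   # region + sector A
sector_b_firm = np.array([0.5, 0.0, 0.5])   # region + sector B

same = model_pairwise_correlation(alpha, sector_a_firm, sector_a_firm, omega)
mixed = model_pairwise_correlation(alpha, sector_a_firm, sector_b_firm, omega)
print(same, mixed)  # same equals alpha; mixed is smaller
```

Because \(Y_n\) and \(Y_m\) both have unit variance, the Cauchy–Schwarz inequality guarantees \(\mathbb{E}[Y_nY_m] \le 1\), so mixing obligors with different factors can only pull the estimate below \(\alpha_N\).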

The consequence of this development is that if we organize our estimation categories into a grid with each square sharing a common industrial and geographic systemic factor, then Eq. 3.13 will provide a reasonable estimate of the equity-based systemic weight. In a more general sense, it would appear that as long as the granularity of the systemic-weight estimation matches that of the systemic factor structure, this estimator will work. The analysis thus strongly suggests the use of a regional-sectoral grid to inform the various systemic-weight parameters.

Geographical and industrial identities are sensible and desirable categorizations for systemic weights. They are not, however, the only dimensions that we would like to consider. There is, for example, a reasonable amount of empirical evidence suggesting that systemic importance is also a function of firm size.Footnote 37 Adding a size dimension to the determination of systemic weights is possible, but it transforms a region-industry grid into a cube including region, industry and firm size.

Figure 3.14 provides a schematic view of this systemic-weight cube. It clearly nests the industrial-sector and geographical-region grid introduced in the derivation of the systemic-weight estimator. With 11 sectors and 13 regions, this grid yields 143 individual systemic weights. Each firm-size category therefore adds another 143 parameters, so these categories must be selected judiciously. Too many firm-size groups would not only violate the principle of model parsimony, but also create a significant data burden. As a consequence, we have opted to employ only three firm-size groups:

- Small: total assets in the interval EUR (0, 1] billion;
- Medium: total assets in the interval EUR (1, 10] billion; and
- Large: total assets in excess of EUR 10 billion.

Fig. 3.14 A cube of systemic weights: To include the notion of firm size and respect the estimation method introduced in Eq. 3.13, we have elected to create a cube of systemic-weight parameters.

One can clearly dispute the thresholds separating these three categories, but it is hard to disagree that this represents a minimal characterization of the firm-size dimension. With fewer than three size categories, this dimension would best be ignored; a larger set of groups, on the other hand, would only magnify existing issues surrounding data parsimony and sufficiency.
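The interval boundaries of the three firm-size groups map naturally to a bucketing rule. The following is a minimal sketch; the function name is hypothetical and total assets are assumed to be expressed in EUR billion.

```python
def firm_size_bucket(total_assets_eur_bn: float) -> str:
    """Assign a firm to one of the three size groups (assets in EUR billion)."""
    if total_assets_eur_bn <= 0:
        raise ValueError("total assets must be positive")
    if total_assets_eur_bn <= 1:
        return "Small"     # (0, 1]
    if total_assets_eur_bn <= 10:
        return "Medium"    # (1, 10]
    return "Large"         # above 10

print([firm_size_bucket(a) for a in (0.4, 1.0, 2.5, 10.0, 55.0)])
# ['Small', 'Small', 'Medium', 'Medium', 'Large']
```

The right-closed intervals place a firm with exactly EUR 1 billion of assets in the small group and one with exactly EUR 10 billion in the medium group.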

Although this cube format provides a sensible decomposition of the main factors required to inform systemic weights and offers a convenient representation—along these three dimensions—it is not without a few challenges. The following sections detail the measures taken to address them.

2.5.1 A Systemic-Weight Dataset

There is no way around it: estimation of systemic weights requires a significant amount of data. With 11 industries, 13 regions, and 3 firm-size categories, a set of K = 11 × 13 × 3 = 429 cube sub-categories sharing common characteristics is required. If we hope to have 15–30 equity time series in each sub-category, to ensure a reasonably robust estimate of its average equity-return cross-correlations, this would necessitate roughly 6,500 to 13,000 individual equity time series.
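The data requirement follows from simple arithmetic, reproduced here as a check on the figures quoted above.

```python
n_sectors, n_regions, n_sizes = 11, 13, 3

grid_cells = n_sectors * n_regions   # systemic weights per firm-size category
K = grid_cells * n_sizes             # total cube sub-categories

# Series needed for 15 to 30 equity time series per sub-category
low, high = 15 * K, 30 * K
print(grid_cells, K, low, high)  # 143 429 6435 12870
```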

While the actual parameters are determined annually based on an extensive internal analysis, we will use a sample dataset to illustrate the key elements of the estimation procedure.Footnote 38 Our starting point is a collection of 20,000 individual, 120-month, equity index time series across various industries, regions, and firm sizes.Footnote 39 At first glance, this would appear to fall approximately within our (overall) desired dataset size. This is only true, of course, if the underlying equity time series are uniformly distributed across our 429 cube entries. The first order of business, therefore, is to closely examine our dataset to understand how it covers our three cube dimensions.

Table 3.4 takes the first step in exploring our dataset.Footnote 40 It examines, from a marginal perspective, the total number of equity series within each region, industry, and firm-size classification. Our hope of a uniform distribution along our key dimensions does not appear to be fulfilled. Along the regional front, Developed Asia and North America dominate the equity series; they account for roughly 40% of the total. Some important regions for NIB—such as the Baltics and Iceland—are only very lightly represented. With only two equity series, for example, we have virtually no information for Iceland. Indeed, both the Nordic and Baltic regions—as one might expect by virtue of their size—exhibit only a modest amount of data. Given the central importance of these member countries to our mandate, and the need to have granularity for these regions within the economic capital model, it will be necessary to adapt to these data deficiencies.

The industrial and size dimensions generally exhibit a broader spread of equity series. Financials and industrials are, by a sizable margin, the largest categories, accounting for almost one half of all series. The paper industry looks somewhat thin, but appears to be in better shape than the worst regional categories. Finally, the size decomposition is not split into equally sized groups, but each group contains a substantial number of series.

While the marginal perspective is a useful starting point, it does not tell the full story. Table 3.5 illustrates the first of three pairwise joint perspectives. It shows the number of equities found within a two-dimensional grid of geographic and industry categories. Again, our roughly 20,000 time series are arranged in a manner that reveals how uniformly they are distributed along our dimensions of interest. Fifteen grid entries, or about 10% of the 143 total, are empty; more than half of these, of course, stem from the Icelandic region. Numerous individual grid points have hundreds of observations. About one third of the grid entries, conversely, have five or fewer equity series. It is possible to construct an estimate in these cases, but it will not necessarily provide the strongest signal of equity-return correlations in the sub-sector.

Since we are considering a cube, there are two other possible two-dimensional viewpoints to be examined: region versus size and sector versus size. Table 3.6 outlines the equity counts for these perspectives. Once again, the regional aspect is the most problematic. Slicing each region into three size categories does not, of course, improve the situation in Iceland and the Baltics. Denmark, Finland, and Norway also exhibit a rather small number of individual series within the largest size category. The sector-size breakdown is somewhat less problematic, although the paper industry again has relatively few equity series. From each of our three two-dimensional perspectives, the equity series are far from equally spread across the cube's dimensions. This is less a data-quality issue and more a feature of the regional, sectoral, and size distributions of listed equities.
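The marginal and pairwise tables are all projections of a single region × sector × size count cube. A minimal NumPy sketch, using randomly generated placeholder counts rather than the actual dataset, shows how each view is obtained by summing over one or two axes; the axis order is an assumption.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
# Placeholder count cube: axis 0 = region (13), axis 1 = sector (11), axis 2 = size (3)
counts = rng.poisson(lam=40, size=(13, 11, 3))

# Marginal views: collapse two dimensions at a time
by_region = counts.sum(axis=(1, 2))
by_sector = counts.sum(axis=(0, 2))
by_size = counts.sum(axis=(0, 1))

# Pairwise views: collapse one dimension at a time
region_sector = counts.sum(axis=2)   # 13 x 11 grid
region_size = counts.sum(axis=1)     # 13 x 3
sector_size = counts.sum(axis=0)     # 11 x 3

# Share of empty cells in the region-sector grid
empty_share = (region_sector == 0).mean()
```

Every view preserves the overall total, so discrepancies between tables built this way would point to a data-handling error rather than a genuine feature of the equity universe.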

With 20,000 total series and 429 entries, a uniform distribution would place about 47 equity series in each individual cube entry. Our preliminary analysis has made it clear that this will not occur. Figure 3.15 thus examines how far we are from this ideal. It provides a visualization of the number of equity series associated with each of the 429 individual cube elements. Colours, following a familiar traffic-light scheme, organize entries into three categories. Red, the most problematic, represents zero equity series associated with a given entry. Yellow, which suggests that an estimate is possible although perhaps not terribly robust, is applied for cases of 2 to 5 equity series. Finally, green indicates the presence of more than 5 equity series; this is the desired outcome. The results are as expected. While we observe substantial amounts of green and yellow, the number of problematic red dots clearly increases as we move from small to large firm size. It is consequently difficult to imagine that each of our cube points will be equally well informed by our dataset.
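The traffic-light scheme amounts to a simple classification of cube counts. The sketch below follows the thresholds in the text; because the text does not assign a colour to an entry with exactly one series (for which no pairwise correlation exists), treating such entries as red is an assumption. It also reproduces the uniform benchmark of 20,000 / 429 series per entry.

```python
import numpy as np

def traffic_light(counts):
    """Label cube entries red (<2 series), yellow (2 to 5), or green (>5).

    Assumption: a single series is labelled red, since it yields no pairwise
    correlation; the text only defines red for entries with zero series.
    """
    counts = np.asarray(counts)
    return np.where(counts < 2, "red",
                    np.where(counts <= 5, "yellow", "green"))

uniform_per_entry = 20_000 / 429   # about 46.6 series per cube entry
labels = traffic_light([0, 1, 2, 5, 6, 120])
print(labels.tolist())  # ['red', 'red', 'yellow', 'yellow', 'green', 'green']
```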