Abstract

Context: Recent progress in the use of commit data for software defect prediction has driven research on personalized defect prediction. The idea of applying one developer’s personalized model to another developer emerged as a way to find an alternative model that predicts better than the developer’s own model. This raised the question of whether an exemplary developer (a bellwether) exists, as has been observed for projects in traditional defect prediction. Objective: To investigate whether bellwether developers exist and how they behave. Method: Experiments were conducted on 9 OSS projects. Models based on active developers in a project were compared with each other to seek bellwethers, whose models beat the models of the other active developers. Their performance was evaluated with new unseen data from the other active developers and the remaining non-active developers. Results: Bellwether developers were identified in all nine projects. Their performance on new unseen data from the other active developers was not higher than that of the models learned by those developers. The bellwether was a practical choice only for the non-active developers. Conclusion: Bellwethers were a useful prediction model for the non-active developers but not for the other active developers.

Keywords

1 Introduction

Software defect prediction (SDP) is an active research area in software engineering. Traditionally, it uses static code metrics from source files to represent the characteristics of modules such as classes. Machine learning approaches are often used to train prediction models with pairs of the metrics and historical records of identified bugs. A large number of prediction approaches have been proposed so far.

A recent study [10] coined just-in-time software defect prediction (JIT SDP), which utilizes a change in a version control system as the unit of prediction. JIT SDP extracts metrics such as the number of added lines from a commit and trains a prediction model to identify bug-inducing commits. An advantage of JIT SDP is an immediate and finer-grained prediction at the time a developer makes a commit. It also brings another advantage: prediction models can utilize developer-related information, such as experience on a system, in addition to code-related metrics. For those reasons, JIT SDP has been a popular research topic [7, 12, 21, 22]. This feature of JIT SDP implies that prediction models can be trained with the commit records of an individual developer. Personalized software defect prediction focuses on a developer’s personal data to train models and predict the fault-proneness of changes [8]. It was expected to improve the prediction performance by focusing on and capturing developers’ unique characteristics.

A common issue, regardless of the unit of software defect prediction, is the small amount of data available for training prediction models. The shortage of training data might result in poor performance or in abandoning software defect prediction in practice. Cross-project defect prediction (CPDP) tackles this issue by using data from outside a project for training prediction models. Many CPDP approaches have been proposed so far [5, 6, 23]. Most of those studies assumed traditional software defect prediction based on static code metrics. Recent studies have also tried to improve JIT SDP in the context of CPDP [2, 9, 18, 24]. One of the topics in CPDP is which cross-project data should be chosen for training when multiple source projects are available. Some studies assumed a single source project and did not address this choice, or combined all source projects into a single dataset. Other studies proposed approaches for selecting among the source projects [4, 5, 25].

Krishna et al. [11] proposed a bellwether method for the cross-project selection issue. They defined the bellwether method as one that searches for an exemplar project from a set of projects worked on by developers in a community and applies it to all future data generated by that community. The authors then demonstrated with OSS projects that such exemplar projects could be found and were effective for predicting faulty modules of the other projects. This raised the question of whether seeking an exemplary developer is beneficial in the context of cross-personalized software defect prediction. Many contributors to OSS projects are non-active developers who make a small number of commits over a short term. No personalized defect prediction model can be built for them. If an exemplar developer exists, it would be helpful for predicting the fault-proneness of their commits. On the one hand, bellwether candidates, who are active developers making enough commits to train personalized prediction models, have worked together on the same project. On the other hand, they are very different from each other [17]. We therefore expected that an exemplar developer could be found, just as an exemplar project could.

In this paper, for cross-personalized software defect prediction, we set out to search for bellwether developers. Through empirical experiments, we addressed the following research questions:

- RQ\(_1\): How often do bellwethers exist among active developers in a project?

- RQ\(_2\): How effective are the bellwethers for predicting faults made by the other active developers in a project?

- RQ\(_3\): How effective are the bellwethers for predicting faults made by the rest of the developers in a project?

To answer these research questions, we applied the bellwether method to developers of 9 OSS projects. Bellwethers found were used to train personalized software defect prediction models and applied to unseen commit data of the other active developers. Personalized software defect prediction models by the active developers, including the bellwethers, were also compared with each other regarding the prediction performance on the commit data made by the rest of the developers.

The rest of this paper is organized as follows: Sect. 2 describes past studies related to personalized software defect prediction and bellwethers. Section 3 explains the methodology we adopted. Section 4 shows the experiment results with figures and tables and answers the research questions. Section 5 discusses the threats to the validity of our experiments. Section 6 provides a summary of this paper.

2 Related Work

Software defect prediction (SDP) aims to prioritize software modules by their fault-proneness for efficient software quality assurance activities. Different granularity levels, such as function and file, have been considered in past studies. As software version control systems became prevalent, SDP at the change level (often called just-in-time (JIT) SDP [10]) became popular in software engineering research. An advantage of JIT SDP is that a faulty change can be attributed to a developer, as changes are recorded together with information about their authors. Another advantage is that developers’ characteristics can be utilized for prediction in addition to code changes.

Building JIT SDP models for each developer was promising, as relationships between developer characteristics and faults had also been revealed. For instance, Schröter et al. [17] reported that defect density differed greatly among developers. Rahman et al. [16] also showed that an author’s specialized experience in the target file is more important than general experience. Jiang et al. [8] constructed a personalized defect prediction approach based on characteristic vectors of code changes. They also created another model that combines personal data and the other developers’ change data with different weights. Furthermore, they created a meta classifier that uses a general prediction model and the above models. Empirical experiments with OSS projects showed the proposed models were better than the general prediction model. Xia et al. [20] proposed a personalized defect prediction approach that combines a personalized prediction model and other developers’ models with a multi-objective genetic algorithm. Empirical experiments with the same data as [8] showed better prediction performance. These personalized defect prediction approaches utilized other developers’ data to improve the prediction performance.

Cross-project defect prediction (CPDP) is a research topic that uses data from outside a target project to compensate for the small amount of data available. Many CPDP approaches have been proposed so far [5, 6, 23]. Combining defect prediction models based on other projects was also studied as CPDP [15]. In the same sense, the personalized defect prediction approaches above, which draw on other developers’ data, can be considered cross-personalized defect prediction approaches. Cross-personalized defect prediction has not been studied well yet, and it seems a promising research topic.

Krishna et al. proposed a cross-project defect prediction approach based on the bellwether effect [11]. Their bellwether method searches for an exemplar project and applies it to all future data generated by that community. Transferred to developers, this approach is simple: a subset of the developers in a project is specified as bellwethers. We thus focused on this approach first to see whether the bellwether effect was observed in the context of cross-personalized defect prediction.

3 Methodology

3.1 Bellwethers Approach

According to [11], we defined the following two operators for seeking bellwether developers in a project:

- GENERATE: Check if the project has bellwether developers using historical commit data as follows.

  1. For all pairs of developers from a project \(D_i, D_j \in P\), predict the fault-proneness of historical commits of \(D_j\) using a prediction model learned with past commits of \(D_i\).

  2. Specify a bellwether developer if any \(D_i\) made the most accurate prediction in a majority of \(D_j \in P\).

- APPLY: Predict the fault-proneness of new commit data using the prediction model learned on the past commit data of the bellwether developer.

The GENERATE operator is a process to find a bellwether developer. The model of each developer was applied to the training data of each of the other developers. The most accurate prediction was specified using a statistical method described in Sect. 3.4.
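The selection step of GENERATE can be illustrated with a minimal sketch. It assumes that a single ED score (lower is better) is already available for every ordered pair of developers, and it picks the best source per target by the lowest score. This is a simplification: the study decided "most accurate" with the Scott-Knott test over repeated runs. The function name `find_bellwethers`, the nested-dictionary layout, and the reading of "majority" as more than half of a developer's possible targets are assumptions made for illustration.

```python
def find_bellwethers(ed):
    """Return developers whose model is the best on a majority of targets.

    ed[source][target] holds the ED score (lower is better) of the model
    trained on `source`'s past commits and applied to `target`'s commits.
    Only entries with source != target are read.
    """
    developers = list(ed)
    wins = {dev: 0 for dev in developers}

    for target in developers:
        sources = [dev for dev in developers if dev != target]
        # Simplification: lowest score wins; the paper used a statistical test.
        best = min(sources, key=lambda src: ed[src][target])
        wins[best] += 1

    # A developer can be the best source for at most len(developers) - 1 targets.
    majority = (len(developers) - 1) / 2
    return [dev for dev in developers if wins[dev] > majority]


# Hypothetical scores for three developers.
scores = {
    "a": {"b": 0.3, "c": 0.3},
    "b": {"a": 0.5, "c": 0.6},
    "c": {"a": 0.4, "b": 0.7},
}
print(find_bellwethers(scores))
```

With these made-up scores, developer `a` is the best source for both `b` and `c` and is therefore the only bellwether returned.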

APPLY operator is a process to validate whether a bellwether can really make a good prediction on future commit data. As the bellwether was defined in the context of cross-personalized defect prediction, the prediction was made on the commit data of the other developers only.

Finally, we omitted the MONITOR operator defined in [11] because we set aside only one testing commit data set from each developer. Such a chronological evaluation is left for future work.

3.2 Datasets

We used commit datasets collected from 9 OSS projects (Footnote 1) in a past study [1]. The nine datasets were available through a replication package (Footnote 2). Table 1 describes the definitions of change metrics in the datasets. The change metrics consist of 14 metrics of 5 dimensions defined in [10].

The datasets had no author information, so commits were linked to authors by matching the UNIX timestamps recorded in the datasets with those of the commits in the corresponding git repositories. Commits sharing the same timestamp were all removed, as it was impossible to connect those commits to their authors. The datasets also contained cases with negative values in metrics that should have recorded counts; we removed these, along with suspicious cases whose zero values meant that nothing had been committed.

In general, many OSS developers make only a small number of commits, too few to build personalized defect prediction models. We thus needed to identify active developers who had enough commits to build a personalized defect prediction model (i.e., training data) and to validate the model (i.e., testing data) using a git repository and a bug-fixing history. The GENERATE and APPLY operators required older commits for training and newer commits for testing, respectively. The commit data of each developer were thus separated into two parts according to their timestamps. Both the training data and the testing data had to contain enough faulty and non-faulty commits. To this end, we selected developers having at least 20 faulty commits and 20 non-faulty commits in the training data and at least 10 faulty commits and 10 non-faulty commits in the testing data. A separation was found as follows: the commits of an author were ordered chronologically, and a separator was then moved backward from the latest commit, one commit at a time, until the above condition was satisfied. Note that the separations did not ensure that the training data of active developers had the same number of commits.
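The separator search described above can be sketched as follows. This is an illustrative reading of the procedure, not the authors' script: the function name `find_separator`, the boolean label list, and the early exit are assumptions. The early exit relies on the fact that moving the separator further back only shrinks the training part, so if the training condition fails at the first position where the testing part is large enough, it can never hold.

```python
def find_separator(labels, min_train=20, min_test=10):
    """Find the chronological split for one developer's commits.

    `labels` lists the commits from oldest to newest; True marks a faulty
    commit. Returns the index of the first testing commit, so that
    labels[:sep] is the training data and labels[sep:] the testing data,
    or None if the developer does not qualify as active.
    """
    total_faulty = sum(labels)
    test_faulty = test_clean = 0

    # Move the separator backward from the latest commit.
    for sep in range(len(labels) - 1, 0, -1):
        if labels[sep]:
            test_faulty += 1
        else:
            test_clean += 1

        if test_faulty >= min_test and test_clean >= min_test:
            train_faulty = total_faulty - test_faulty
            train_clean = sep - train_faulty
            if train_faulty >= min_train and train_clean >= min_train:
                return sep
            return None  # training data can only shrink from here on
    return None


# Hypothetical developer: 60 commits alternating faulty / non-faulty.
history = [True, False] * 30
print(find_separator(history))
```

For the alternating history above, the newest 20 commits are the first suffix holding 10 faulty and 10 non-faulty commits, and the remaining 40 commits satisfy the training condition.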

Table 2 shows statistics of the original datasets, the number of selected commits, and the number of active developers identified. These numbers varied among the datasets, which made the datasets suitable for evaluation. Note that the commit data of non-active developers were also set aside to address RQ3.

3.3 Prediction

We followed the prediction approach in [11], in which Random Forests were employed to predict the fault-proneness of commits and SMOTE [3], a well-known over-sampling technique, was used to mitigate the issue caused by the imbalance of class labels. We used the SMOTE implementation of the imblearn package and RandomForestClassifier of the scikit-learn package. No parameter optimization was applied. Because SMOTE introduces randomness, the model construction and prediction were repeated 40 times.
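A minimal sketch of this pipeline is given below, assuming default parameters for both `SMOTE` and `RandomForestClassifier`. The function names, the use of the repetition index as the random seed, and the pluggable `predictor` argument are illustrative assumptions; the original scripts may differ. The two libraries are imported inside `fit_predict` so that the repetition helper can also be exercised with a stand-in predictor.

```python
def fit_predict(X_train, y_train, X_test, seed):
    """Over-sample the training data with SMOTE, then fit a Random Forest."""
    from imblearn.over_sampling import SMOTE
    from sklearn.ensemble import RandomForestClassifier

    # Balance the class labels of the training data only.
    X_res, y_res = SMOTE(random_state=seed).fit_resample(X_train, y_train)

    # Default hyperparameters: no parameter optimization is applied.
    model = RandomForestClassifier(random_state=seed)
    model.fit(X_res, y_res)
    return model.predict(X_test)


def repeated_scores(X_train, y_train, X_test, y_test, score,
                    repeats=40, predictor=fit_predict):
    """Repeat model construction and prediction, one seed per repetition.

    `score(y_true, y_pred)` is any performance measure; the list of scores
    can then be passed to a statistical comparison.
    """
    return [
        score(y_test, predictor(X_train, y_train, X_test, seed))
        for seed in range(repeats)
    ]
```

Passing a different `predictor` makes it possible to swap the learner without touching the repetition logic.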

3.4 Performance Evaluation

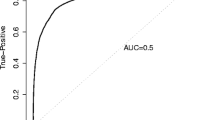

This study adopted distance from perfect classification (ED) [13] as well as [11]. The ED measures the distance between a pair of Pd (recall) and Pf (false alarm) and the ideal point on the ROC (1, 0), weighted by cost function \(\theta \) as follows.

where TP, FN, FP, TN represent true positive, false negative, false positive, and true negative, respectively. The smaller the ED, the better the personalized prediction model. \(\theta \) was set to \(0.6\) as well as [11].
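As a worked illustration, ED can be computed from a confusion matrix as below. The sketch assumes the weighted Euclidean form from [13], in which \(\theta \) weights the squared miss rate \((1 - Pd)^2\) and \(1 - \theta \) weights the squared false alarm rate \(Pf^2\). The function name `ed_score` is hypothetical, and the function expects at least one faulty and one non-faulty commit in the evaluated data.

```python
import math


def ed_score(tp, fn, fp, tn, theta=0.6):
    """Distance from the perfect classification point (Pd, Pf) = (1, 0)."""
    pd = tp / (tp + fn)  # recall
    pf = fp / (fp + tn)  # false alarm rate
    return math.sqrt(theta * (1 - pd) ** 2 + (1 - theta) * pf ** 2)


print(ed_score(tp=10, fn=0, fp=0, tn=10))  # perfect classifier
print(ed_score(tp=5, fn=5, fp=5, tn=5))    # Pd = Pf = 0.5
```

A perfect classifier yields an ED of 0, and a classifier that labels every commit incorrectly yields an ED of 1, so smaller values indicate better models.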

The Scott-Knott test [14] was used to statistically compare the performance of methods on each dataset. This test makes some clusters, each of which consists of homogeneous personalized software defect prediction models regarding their prediction performance. A cluster with the highest performance holds treatments that are clearly better than the others while the performance of those treatments is equivalent.

4 Results

4.1 RQ1: Is There a Bellwether Developer in a Project?

Approach: To address RQ1, we first plotted the prediction performance of active developers in boxplots to see how they were similar to and different from each other. Then, GENERATE operator defined in Sect. 3.1 was applied to those active developers to obtain a bellwether. As we occasionally found no statistical difference among some developers while they were significantly better than the others, we grouped them as a flock of bellwethers, which held bellwether developers with the same prediction performance. The sizes of flocks were observed to see whether they were a majority of the active developers or not.

Results: Figure 1 shows the performance variations among developers. Each subfigure in Fig. 1 corresponds to each project and shows boxplots of EDs of developers on the training data of other active developers. Developer names were anonymized in the subfigures. The boxplots were ordered according to the median ED performance. The left-most developer provided the best prediction model while the right-most developer did not. We observed trends of the performance distributions as follows:

Brackets: Figure 1(a) shows that the median prediction performance varied from 0.32 to 0.71. The trend was gently upward from the left side to the right side. No chasm was found between any adjacent developers except for the two right-most developers.

Broadleaf: Figure 1(b) shows that the median prediction performance varied from 0.36 to 0.76. The trend was gently upward, and a chasm was found on the right side.

Camel: Figure 1(c) shows that the median prediction performance varied from 0.32 to 0.66. Some left-side developers looked similar, and their performance did not differ from each other. The performance of the others worsened steadily.

Fabric: Figure 1(d) shows that the median prediction performance varied from 0.32 to 0.71. The two left-most developers were apparently better than the others. The others formed a gentle slope with no apparent chasm.

Neutron: Figure 1(e) shows that the median prediction performance varied from 0.31 to 0.54. The developers formed a gentle slope, and some developers did not look different from each other. The performance of the others worsened steadily.

Nova: Figure 1(f) shows that the median prediction performance varied from 0.36 to 0.73. The developers formed a gentle slope, and many developers did not look different from each other. No clear chasm appeared except for the right-most developer.

Npm: Figure 1(g) shows that the median prediction performance varied from 0.5 to 0.64. The range was narrow, but the boxes were thin. The left-most developer thus looked significantly better than the others.

Spring-integration: Figure 1(h) shows that the median prediction performance varied from 0.32 to 0.65. The three left-most developers looked significantly better than the others. No clear chasm appeared among the others.

Tomcat: Figure 1(i) shows that the median prediction performance varied from 0.32 to 0.68. The left-most developer was apparently better than the others. No clear chasm appeared among the others.

These observations shared some characteristics. The median performance values changed gradually from the left side to the right side. Steep changes were occasionally observed, which set apart the best and the worst prediction models. The ranges of prediction performance were not so different among projects. The median performance varied from approximately 0.3 to 0.8. Some projects showed narrower ranges, which suggested a group of personalized defect prediction models of equivalent performance. However, the best models often made significantly better predictions.

Table 3 shows the number of bellwether developers found as a result of the Scott-Knott test. A single bellwether was found in four out of the nine projects. A flock of bellwether developers was specified in the other projects. Figure 1 visually supported these results.

An interesting observation was that the number of bellwethers was not necessarily related to the number of active developers shown in Table 2. For instance, Brackets had a single bellwether developer while Neutron had six bellwethers, though the two projects had a similar number of active developers. From this point of view, the Neutron and Nova projects were different from the other projects: their flocks of bellwethers held more than half of the active developers in the project. The difference seemed due to the homogeneity of development activities among active developers.

4.2 RQ2: How Are the Bellwethers Effective for Predicting Faults Made by the Other Active Developers in a Project?

Approach: This research question asked whether a bellwether model could replace models of other active developers that were applied to their own commits. To address RQ2, we applied the APPLY operator shown in Sect. 3.1. We evaluated the prediction performance of the bellwethers as follows:

1. Prepare local models, each of which was learned on the training data of one of the other active developers.

2. Predict the testing commit data using the local model corresponding to the active developer of the testing data.

3. Predict the same commit data provided by the other active developers using each of the bellwether models.

4. Compare the result from the bellwether and each result from the local models using the Scott-Knott test.

5. Make a decision on the prediction performance of the bellwethers.

We decided that a bellwether was “effective” if the bellwether made predictions significantly better than or equivalent to all the local models. A bellwether was decided as “ineffective” if the bellwether made predictions worse than all the local models. Otherwise, we decided that it was marginal. That is, the bellwether was better than some local models but worse than other local models.
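The three-way decision rule can be written down compactly. The sketch below assumes that the statistical comparison against each local model has already been reduced to one of three hypothetical outcome labels, `"better"`, `"equivalent"`, or `"worse"`, stated from the bellwether's point of view; the function name `judge_bellwether` is likewise an assumption.

```python
def judge_bellwether(outcomes):
    """Classify a bellwether from its comparisons with all local models.

    `outcomes` holds one label per local model: "better", "equivalent",
    or "worse", each describing the bellwether relative to that model.
    """
    if all(o in ("better", "equivalent") for o in outcomes):
        return "effective"
    if all(o == "worse" for o in outcomes):
        return "ineffective"
    # Better than or equal to some local models but worse than others.
    return "marginal"


print(judge_bellwether(["better", "equivalent", "better"]))
print(judge_bellwether(["better", "worse", "equivalent"]))
print(judge_bellwether(["worse", "worse"]))
```

The three calls print `effective`, `marginal`, and `ineffective`, respectively.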

Results: Table 4 shows the number of bellwether models that were decided as effective, marginal, or ineffective. We found that only one bellwether developer, in the NPM project, made significantly better predictions than all the local models. Also, no bellwether developer was decided as ineffective. Most of the bellwether developers were decided as marginal. This result implied that active developers in the investigated projects were so diverse that bellwether developers were neither effective nor ineffective. Therefore, bellwether developers were not useful for supporting other active developers in defect prediction. It can also be said that bellwether models might help some active developers. However, it was unknown which developers would be appropriate recipients. A practical recommendation was thus for active developers to use their own local models.

4.3 RQ3: Do the Bellwether Developers Also Predict Faulty Commits of the Others Than the Bellwether Candidates?

Approach: To address RQ3, we compared the performance of personalized prediction models learned with training commit data of the active developers, including bellwethers. These prediction models were applied to commit data of the non-active developers defined in Sect. 3.2. Then, the prediction results were supplied to the Scott-Knott test to see whether bellwethers in RQ1 kept their places. The purpose is to observe changes between the rankings shown in RQ1 and those on the non-active developers. Therefore, we also adopted Spearman’s \(\rho \) to see how the rankings in RQ1 changed.
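For reference, Spearman’s \(\rho \) between two rankings of the same developers can be computed with the classic rank-difference formula \(\rho = 1 - 6\sum d_i^2 / (n(n^2-1))\). The sketch below assumes that there are no tied ranks and that both lists give the ranks of the same developers in the same order; the function name and the example ranks are hypothetical and do not reproduce any result of this study.

```python
def spearman_rho(ranks_a, ranks_b):
    """Spearman's rank correlation for two tie-free rankings."""
    n = len(ranks_a)
    # Sum of squared rank differences per developer.
    d_squared = sum((a - b) ** 2 for a, b in zip(ranks_a, ranks_b))
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Hypothetical ranks of four developers in RQ1 and on non-active developers.
rq1_ranks = [1, 2, 3, 4]
non_active_ranks = [2, 1, 3, 4]
print(spearman_rho(rq1_ranks, non_active_ranks))
```

Identical rankings give 1, fully reversed rankings give -1, and swapping only the top two of four developers still leaves a high coefficient.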

Results: Table 5 shows the results of the comparisons. The second column denotes values of Spearman’s \(\rho \). The bold figures mean the correlation was statistically significant at \(\alpha =0.05\). All the coefficients were high. As the insignificance for the NPM project seemed due to its small number of active developers (\(n=4\)), we could say the trends observed in Fig. 1 were preserved well. The third column shows whether the bellwethers in RQ1 were still bellwethers here. All the bellwethers kept their positions in four out of the nine projects, namely, the Broadleaf, Fabric, NPM, and Tomcat projects. For these projects, the bellwether developers were useful for predicting the fault-proneness of commits by the non-active developers. In the Brackets and Camel projects, the bellwethers were no longer the best choice for defect prediction for the non-active developers. The fourth column of Table 5 shows to which ranks those bellwethers moved. They kept second or third places and thus were practically good choices among more than ten active developers. The same logic applied to the rest of the projects. The Neutron, Nova, and Spring-integration projects had both types of bellwethers, but overall those bellwethers kept high ranks.

5 Threats to Validity

Our study suffered from some threats to validity that are often observed in data-oriented software engineering research. First, we relied on commit data from a past study. Commits and bugs in the data were linked with Commit Guru (Footnote 3). Therefore, some classes of defects might have been missed due to limitations of this tool. The change measures shown in Table 1 are popular, but recent studies (e.g., [19]) proposed new measures to improve the predictive performance. These factors might affect our conclusions. Furthermore, these data had no information regarding the developers who made the commits, as described in Sect. 3.2. We thus linked developers and commits based on timestamps and dropped a considerable number of commits, as shown in Table 2. These automatic processes might have missed correct links and produced incorrect links. The accuracy of this linking might have affected our experiment results.

Second, our study divided commits of each developer into training data and testing data chronologically but did not set the same separation among developers. Some commits were thus predicted using commits made in the future. Furthermore, we might miss the effects of chronological proximity between commits. Experiments in a chronological online situation are desirable in future work.

Finally, the results of our study were limited to the projects investigated. Experiments on different projects might lead to different conclusions, such as the absence of bellwether developers. We think this threat to external validity was slightly mitigated because the OSS projects differed in size, number of active developers, and so on.

6 Conclusion

This study investigated the existence and performance of bellwether developers for cross-personalized defect prediction. The first experiment revealed that bellwether developers existed in all the nine projects we investigated. Their personalized defect prediction models achieved better performance on training data of personalized defect prediction models of the other active developers in a project. However, the second experiment showed that these personalized defect prediction models were rarely the best choice for new unseen commit data made by the active developers. We then found that the bellwethers were practical choices for non-active developers to predict the fault-proneness of their commits.

In future work, we will conduct experiments under chronological online situations, which are a more realistic setting for developers. This setting will enable us to analyze, for example, when an active developer becomes a bellwether and when a bellwether steps down. Also, comparisons with other cross-personalized defect prediction approaches are an interesting topic for improving prediction performance.

Notes

- 1.

Originally ten datasets were provided but one (JGroups) was removed because only one active developer remained after preprocessing described in this section.

- 2.

- 3.

References

1. Cabral, G.G., Minku, L.L., Shihab, E., Mujahid, S.: Class imbalance evolution and verification latency in just-in-time software defect prediction. In: 2019 IEEE/ACM 41st International Conference on Software Engineering (ICSE), pp. 666–676 (2019). https://doi.org/10.1109/ICSE.2019.00076

2. Catolino, G., Di Nucci, D., Ferrucci, F.: Cross-project just-in-time bug prediction for mobile apps: An empirical assessment. In: 2019 IEEE/ACM 6th International Conference on Mobile Software Engineering and Systems (MOBILESoft), pp. 99–110 (2019). https://doi.org/10.1109/MOBILESoft.2019.00023

3. Chawla, N.V., Bowyer, K.W., Hall, L.O., Kegelmeyer, W.P.: SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002)

4. He, Z., Peters, F., Menzies, T., Yang, Y.: Learning from open-source projects: an empirical study on defect prediction. In: Proceedings of ESEM 2013, pp. 45–54. IEEE (2013)

5. Herbold, S.: Training data selection for cross-project defect prediction. In: Proceedings of PROMISE ’13, pp. 6:1–6:10. ACM (2013)

6. Hosseini, S., Turhan, B., Gunarathna, D.: A systematic literature review and meta-analysis on cross project defect prediction. IEEE Trans. Softw. Eng. 45(2), 111–147 (2019)

7. Jahanshahi, H., Jothimani, D., Başar, A., Cevik, M.: Does chronology matter in JIT defect prediction? A partial replication study. In: Proceedings of the Fifteenth International Conference on Predictive Models and Data Analytics in Software Engineering, pp. 90–99 (2019). https://doi.org/10.1145/3345629.3351449

8. Jiang, T., Tan, L., Kim, S.: Personalized defect prediction. In: Proceedings of International Conference on Automated Software Engineering, pp. 279–289 (2013)

9. Kamei, Y., Fukushima, T., McIntosh, S., Yamashita, K., Ubayashi, N., Hassan, A.E.: Studying just-in-time defect prediction using cross-project models. Empir. Softw. Eng. 21(6), 2072–2106 (2016). https://doi.org/10.1007/s10664-015-9400-x

10. Kamei, Y., et al.: A large-scale empirical study of just-in-time quality assurance. IEEE Trans. Softw. Eng. 39(6), 757–773 (2013). https://doi.org/10.1109/TSE.2012.70

11. Krishna, R., Menzies, T., Fu, W.: Too much automation? The bellwether effect and its implications for transfer learning. In: Proceedings of International Conference on Automated Software Engineering, pp. 122–131 (2016)

12. Li, W., Zhang, W., Jia, X., Huang, Z.: Effort-aware semi-supervised just-in-time defect prediction. Inf. Softw. Technol. 126, 106364 (2020). https://doi.org/10.1016/j.infsof.2020.106364

13. Ma, Y., Cukic, B.: Adequate and precise evaluation of quality models in software engineering studies. In: Proceedings of International Workshop on Predictor Models in Software Engineering, p. 9 (2007)

14. Mittas, N., Angelis, L.: Ranking and clustering software cost estimation models through a multiple comparisons algorithm. IEEE Trans. Softw. Eng. 39(4), 537–551 (2013)

15. Panichella, A., Oliveto, R., De Lucia, A.: Cross-project defect prediction models: L’Union fait la force. In: Proceedings of CSMR-WCRE ’14, pp. 164–173. IEEE (2014)

16. Rahman, F., Devanbu, P.: Ownership, experience and defects: a fine-grained study of authorship. In: Proceedings of International Conference on Software Engineering, pp. 491–500 (2011)

17. Schröter, A., Zimmermann, T., Premraj, R., Zeller, A.: Where do bugs come from? SIGSOFT Softw. Eng. Notes 31(6), 1–2 (2006)

18. Tabassum, S., Minku, L.L., Feng, D., Cabral, G.G., Song, L.: An investigation of cross-project learning in online just-in-time software defect prediction. In: Proceedings of International Conference on Software Engineering, New York, NY, USA, pp. 554–565 (2020). https://doi.org/10.1145/3377811.3380403

19. Trautsch, A., Herbold, S., Grabowski, J.: Static source code metrics and static analysis warnings for fine-grained just-in-time defect prediction. In: 2020 IEEE International Conference on Software Maintenance and Evolution (ICSME), pp. 127–138 (2020)

20. Xia, X., Lo, D., Wang, X., Yang, X.: Collective personalized change classification with multiobjective search. IEEE Trans. Reliab. 65(4), 1810–1829 (2016)

21. Yang, X., Lo, D., Xia, X., Sun, J.: TLEL: a two-layer ensemble learning approach for just-in-time defect prediction. Inf. Softw. Technol. 87, 206–220 (2017)

22. Yang, Y., et al.: Effort-aware just-in-time defect prediction: simple unsupervised models could be better than supervised models. In: Proceedings of the 2016 24th ACM SIGSOFT International Symposium on Foundations of Software Engineering, pp. 157–168 (2016)

23. Zhou, Y., et al.: How far we have progressed in the journey? An examination of cross-project defect prediction. ACM Trans. Softw. Eng. Methodol. 27(1), 1–51 (2018)

24. Zhu, K., Zhang, N., Ying, S., Zhu, D.: Within-project and cross-project just-in-time defect prediction based on denoising autoencoder and convolutional neural network. IET Softw. 14(3), 185–195 (2020). https://doi.org/10.1049/iet-sen.2019.0278

25. Zimmermann, T., Nagappan, N., Gall, H., Giger, E., Murphy, B.: Cross-project defect prediction: a large scale experiment on data vs. domain vs. process. In: Proceedings of ESEC/FSE ’09, pp. 91–100. ACM (2009)

Acknowledgment

This work was partially supported by JSPS KAKENHI Grant #18K11246, #21K11831, #21K11833, and Wesco Scientific Promotion Foundation.

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Amasaki, S., Aman, H., Yokogawa, T. (2021). Searching for Bellwether Developers for Cross-Personalized Defect Prediction. In: Ardito, L., Jedlitschka, A., Morisio, M., Torchiano, M. (eds) Product-Focused Software Process Improvement. PROFES 2021. Lecture Notes in Computer Science(), vol 13126. Springer, Cham. https://doi.org/10.1007/978-3-030-91452-3_12

DOI: https://doi.org/10.1007/978-3-030-91452-3_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-91451-6

Online ISBN: 978-3-030-91452-3

eBook Packages: Computer Science, Computer Science (R0)