Abstract

The word cybernetics comes from the Greek word kubernētēs (κυβερνήτης), which translates as helmsman or governor. The term was first used in the 1800s by the French physicist André-Marie Ampère, who, in his classification of the sciences, applied it to the science of governing (Britannica, 2014). The term was soon forgotten, and nearly a century passed before it was used again, when the American mathematician Norbert Wiener described cybernetics in his book as “the science of control and communications in the animal and machine” (Britannica, 2014). Cybernetic systems, as we know them today, are digital systems embedded in living organisms. As they grow in prevalence, they will give humans multifaceted capabilities and health benefits beyond anything they had before. Most current cybernetic platforms consist of electrodes attached to or in close contact with human epithelial surfaces and brain structures; these electrodes transmit external signals to human systems more efficiently and improve human processing speed. Neuralink, for instance, is a brain–machine interface in which polymer strings and electrodes record electrophysiological data from the brain. Prosthetics that use electromyography (EMG) electrodes are also frequently used to restore lost capabilities in humans. Likewise, artificial retinas can now partially restore eyesight. The future of these technologies is yet to be seen, since most existing ones are prototypes. However, they hold great potential in human health and even in augmenting computer intelligence to more closely mimic human capabilities. As cybernetic systems grow in prevalence, ethical concerns arise regarding whether it is right to extend human capabilities with computers embedded directly into the human body and experience.

Introduction

Cybernetic systems are defined by having two feedback loops. One allows the system to adapt and learn, while the other makes small adjustments that make learning possible (Montouri, 2011). A third, less essential feedback loop is used less frequently; it allows the system to adapt by replacing old sensory information with newer information. At their core, cybernetic systems are based on feedback mechanisms, and they can be used to control living organisms via machines (Montouri, 2011). Cybernetic systems have considerable potential to revolutionize the healthcare industry, biomechanical parts, and communication in living systems (Warwick, 2020).
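The two-loop idea can be sketched as a toy controller. An inner loop makes small proportional corrections toward a set point, and an outer loop adapts the inner loop's gain whenever the error persists. The plant model, the constant disturbance (drift), the gain values, and the adaptation rule below are all hypothetical choices for illustration; they are not taken from Montouri (2011).

```python
def run_two_loop_controller(setpoint, steps=200, gain=0.1, adapt_rate=0.05,
                            drift=-0.2, tolerance=0.5, check_every=10):
    """Toy cybernetic system with an inner and an outer feedback loop.

    The hypothetical plant's state moves by the control signal plus a
    constant drift, so a fixed proportional gain leaves a residual error.
    """
    state = 0.0
    for step in range(steps):
        # Inner loop: a small adjustment proportional to the current error
        error = setpoint - state
        state += gain * error + drift
        # Outer loop: periodically "learn" by raising the gain
        # while the error is still larger than the tolerance
        if (step + 1) % check_every == 0 and abs(setpoint - state) > tolerance:
            gain += adapt_rate
    return state, gain


state, gain = run_two_loop_controller(setpoint=10.0)
print(f"final error {10.0 - state:.3f}, learned gain {gain:.2f}")
```

With a fixed gain, the drift leaves a steady error of about 0.2 / gain. The outer loop keeps raising the gain until that residual falls within the tolerance, which loosely mirrors how the adaptive loop makes the inner loop's small adjustments effective.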

Currently, cybernetic systems are not fully available in the commercial market; they remain a research topic with few prototypes. That said, some cybernetic systems are already in real-world use, such as heart pacemakers and prosthetic organs, which collect data from surrounding tissue in order to function (Weir et al., 2009). Large volumes of readily available data are critical to creating these cybernetic systems. For example, with neural electrodes, one must acquire electrical data from impulses in the brain and translate those signals into messages the receiver can comprehend. The more data are accumulated, the more the system learns about its surroundings; these data become the basis of machine learning and artificial intelligence systems. One of the more recent developments in the cybernetics industry is Neuralink Corporation, which collects large volumes of data and uses them to pursue medical advances in the neurological field (Statt, 2017). Given how fast this field is growing, cybernetic systems could significantly enhance human power and health, but they will raise ethical questions as the field advances and could create health inequity, since these systems are often expensive (Timmermans & Kaufman, 2020).

Existing Working Systems

How the Technology Was Created and Designed

A brain–machine interface (BMI), also referred to as a brain–computer interface (BCI), is a system that translates neuronal information into commands that can control external devices such as prosthetics (Kumarasinghe et al., 2021). The field traces back to 1924, when the German psychiatrist and physiologist Hans Berger made the first recording of human brain activity, using a method called electroencephalography (EEG) (Millett, 2001). The term brain–computer interface (BCI) was coined by Jacques Vidal, a faculty member at the University of California, Los Angeles (UCLA) (McFarland & Wolpaw, 2017). In 1973, during a sabbatical, he published a paper on controlling external objects using EEG signals.
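To make “translating neuronal information into commands” concrete, the sketch below uses a nearest-centroid classifier, one of the simplest possible decoders. It averages labeled feature vectors recorded while the user intends each command, then maps a new recording to the closest average. The two-value feature vectors (imagined as signal power on two electrodes) and the command names are hypothetical; practical BCIs use richer features and decoders, such as the spiking neural networks studied by Kumarasinghe et al. (2021).

```python
from math import dist


def train_centroids(samples):
    """Average the feature vectors recorded for each intended command."""
    sums, counts = {}, {}
    for features, label in samples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in total]
            for label, total in sums.items()}


def decode(features, centroids):
    """Return the command whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(features, centroids[label]))


# Hypothetical calibration data: (signal power on two electrodes, command)
training = [
    ([0.9, 0.2], "move_left"), ([0.8, 0.3], "move_left"),
    ([0.2, 0.9], "move_right"), ([0.3, 0.7], "move_right"),
]
centroids = train_centroids(training)
print(decode([0.85, 0.25], centroids))  # move_left
```

Adding more calibration samples refines each centroid, which is one simple way of seeing why the amount of recorded data matters for these systems.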

Human use of BMI was already under way around the time Vidal’s paper was released: in 1978, the researcher William Dobelle successfully implanted a device to help people who had lost their vision to see again (Lewis & Rosenfeld, 2016). A similar device is discussed further in the Human Condition Restoration section.

Future Applications on Humans

Neuralink Corporation is a neurotechnology start-up company co-founded by billionaire Elon Musk and based in San Francisco, CA (Winkler, 2017). The company’s main goal is to provide better integration between the human brain and machines. Neuralink began developing a new kind of brain–machine interface (BMI) that holds promise for restoring sensory and motor function and treating neurological disorders (Musk, 2019). This BMI system is relatively small compared to existing systems, yet it has as many as 3072 electrodes per array, distributed across 96 threads of 32 independent electrodes each (Musk, 2019). Neuralink claims that this number of electrodes is an order of magnitude higher than in existing BMIs. The company has created more than 20 electrode and thread types; two of these designs, the Linear Edge and Tree types, are shown in Fig. 1.
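The channel count follows directly from the thread layout: 96 threads × 32 electrodes = 3072 electrodes. The sketch below checks that arithmetic and estimates the resulting raw (uncompressed) data rate. The sampling rate and bit depth in the usage lines are hypothetical placeholders, not figures taken from Musk (2019); the point is only that thousands of channels produce a large data stream.

```python
THREADS = 96
ELECTRODES_PER_THREAD = 32


def total_channels(threads=THREADS, per_thread=ELECTRODES_PER_THREAD):
    """Number of independent recording electrodes in one array."""
    return threads * per_thread


def raw_data_rate_mbps(channels, sample_rate_hz, bits_per_sample):
    """Uncompressed data rate in megabits per second."""
    return channels * sample_rate_hz * bits_per_sample / 1_000_000


channels = total_channels()
print(channels)  # 3072
# Hypothetical acquisition settings: 20 kHz sampling, 10 bits per sample
print(raw_data_rate_mbps(channels, 20_000, 10))  # 614.4
```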

What is important to note about Neuralink’s ambitious promises is that the company is trying to make these BMI implants more widely usable and available, e.g., through wireless communication and charging. While existing systems are big and cumbersome, Neuralink’s main computer is the size of a large coin, and its wires are more biocompatible than those of earlier BMI implementations, in which scar tissue historically interfered with the signal (Wiggers, 2020). Although human trials are still on hold pending FDA approval, Neuralink claims to have performed 19 animal implants with an 87% success rate (Wiggers, 2020).

Medical Applications

Cybernetic systems could be applied to many medical scenarios as they advance. Because cybernetic systems blend in with their surrounding environments, including hematogenic systems, key advances in micro-miniature technology and intra-system devices make it more likely that these data can be employed successfully (Britannica, 2019). For example, Neuralink’s device uses many polymer strings and electrodes to convert detectable action potentials into electrophysiological data, which can then be used to make inferences about the brain. Such seamless wiring can also be used for biological and neural control (Fig. 2).
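A common textbook way to turn a raw electrode trace into discrete action-potential events is threshold crossing: estimate the background noise, then flag samples that dip well below it. The sketch below uses the robust noise estimate median(|x|) / 0.6745 from the spike-sorting literature. The threshold multiplier k, the refractory window, and the synthetic signal are hypothetical, and this is not a description of Neuralink’s own processing.

```python
import random
import statistics


def detect_spikes(signal, k=4.0, refractory=30):
    """Return indices where the trace crosses a negative threshold.

    Noise is estimated robustly as median(|x|) / 0.6745, so a few large
    spikes barely affect the estimate. After each detection, the next
    `refractory` samples are ignored to avoid counting one spike twice.
    """
    noise = statistics.median(abs(x) for x in signal) / 0.6745
    threshold = -k * noise
    spikes, last = [], -refractory
    for i, x in enumerate(signal):
        if x < threshold and i - last >= refractory:
            spikes.append(i)
            last = i
    return spikes


# Synthetic trace: bounded background noise plus three large negative spikes
rng = random.Random(0)
trace = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
for index in (200, 500, 800):
    trace[index] = -20.0
print(detect_spikes(trace))  # [200, 500, 800]
```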

The diagram shows how the human brain connects with a cybernetic limb and the way in which they operate together (PNGJoy, n.d.)

Such seamless wireless control of the brain could support many noninvasive procedures and might even make some minimally invasive procedures noninvasive. Elon Musk points out that “while these successes suggest that high fidelity information transfer between brains and machines is possible, development of BMI has been critically limited by the inability to record the signals from large numbers of neurons. Noninvasive approaches can record the average of millions of neurons through the skull, but this signal is distorted and nonspecific” (Musk, 2019).

In addition, prosthetics can mimic many organs, but they are especially useful for extremities and distal limbs such as arms, hands, legs, and feet. In the most recent decade, human–cybernetic interfaces have grown in sophistication and prevalence in the form of prosthetic limbs, most of which are electromyogram (EMG) prosthetics. Electromyography is a medical technique that records the electrical activity of skeletal muscles and converts it, via a transducer, into a signal that the electrode can record. Overall, such a system allows medical professionals to collect patient data seamlessly and use these data to aid diagnostic procedures. Without necessarily performing surgery or other invasive procedures, professionals can collect valuable patient data and further improve future prosthetic designs.
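A standard first step in EMG processing, shown here only as a sketch, is to rectify the raw signal and smooth it into an “envelope” that tracks how strongly the muscle is contracting. A simple threshold on that envelope can then signal that the muscle is active. The window length, threshold, and synthetic signal are hypothetical and not drawn from any specific prosthetic described in the cited work.

```python
def emg_envelope(samples, window=50):
    """Full-wave rectify raw EMG and smooth it with a moving average."""
    rectified = [abs(s) for s in samples]
    envelope, running = [], 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        envelope.append(running / min(i + 1, window))
    return envelope


def muscle_active(envelope, threshold=0.5):
    """Mark each sample whose smoothed activity exceeds the threshold."""
    return [level > threshold for level in envelope]


# Synthetic EMG: 200 samples of weak resting activity, then 200 of a contraction
raw = [0.05, -0.05] * 100 + [1.0, -1.0] * 100
env = emg_envelope(raw)
active = muscle_active(env)
print(active[199], active[-1])  # False True
```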

Human Condition Restoration

In addition to the direct medical applications in the healthcare field, where the data collected by these user–machine interfaces can help reveal trends, these systems can restore or even enhance the human condition. Recently, there has been a large surge in research on artificial retinas. Emphasis has been placed on retinas that can capture light and relay a signal to the brain, as well as on procedures that let the brain process these light data. As with the other medical advances discussed here, data are the key for these newer embedded devices to function correctly. Artificial retina devices restore electrical stimulation of the visual neurons: they convert incoming light into electrical impulses that evoke phosphenes (perceived spots of light) through a form of neural stimulation. For example, a recent study of permanent artificial retinas found that, although the image is not yet clear, the system is functional and could become an economically viable solution as image quality improves over time (Chichilnisky, n.d.).
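One way to see why early artificial-retina images are not yet clear is that a camera image must be squeezed onto a small number of electrodes, each of which can evoke only a coarse spot of light. The sketch below block-averages a grayscale image onto a hypothetical 6 × 10 electrode grid and quantizes each cell into a few stimulation levels. The grid size and number of levels are assumptions for illustration, not the design of any particular device.

```python
def to_electrode_grid(image, rows=6, cols=10, levels=4):
    """Map a grayscale image (rows of 0-255 values) onto an electrode grid.

    Each electrode receives the average brightness of its image block,
    quantized to a stimulation level from 0 (off) to levels - 1 (strongest).
    The image must be at least rows x cols pixels so no block is empty.
    """
    height, width = len(image), len(image[0])
    grid = []
    for r in range(rows):
        y0, y1 = r * height // rows, (r + 1) * height // rows
        row = []
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            block = [image[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            mean = sum(block) / len(block)
            row.append(min(levels - 1, int(mean * levels / 256)))
        grid.append(row)
    return grid


# Synthetic 60 x 100 scene: dark on the left half, bright on the right half
scene = [[0] * 50 + [255] * 50 for _ in range(60)]
for row in to_electrode_grid(scene):
    print(row)  # each row: [0, 0, 0, 0, 0, 3, 3, 3, 3, 3]
```

Thousands of pixels collapse into 60 coarse values here, which illustrates, under these assumptions, how resolution is lost between the camera and the perceived image.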

In addition to artificial retinas, myoelectric prostheses have demonstrated their ability to aid in human condition restoration, that is, to provide humans with limbs whose functionality mimics that of their natural counterparts. They are similar to EMG prosthetics in the sense that electrical data from the muscles are used to control the limb. The key difference between the two methods is that myoelectric prosthetics are essentially robots at their core. Earlier research models were built as myoelectric limbs controlled with a separate remote; now, they have evolved to the point where the host’s brain can control these limbs. Thus, the movement of these prostheses is driven by the electrical signals of the user’s twitch-fiber skeletal muscles.
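A widely used scheme for this kind of control is “two-site” proportional control. One electrode sits over a muscle that flexes the hand and another over a muscle that extends it, and the difference between their smoothed EMG envelopes sets how fast the prosthetic hand closes or opens. The sketch below is a minimal version; the deadband, gain, time step, and input values are hypothetical.

```python
def two_site_command(flexor_env, extensor_env, deadband=0.1, gain=1.0):
    """Signed hand velocity from two EMG envelopes.

    Positive closes the hand, negative opens it; small differences inside
    the deadband are ignored so resting muscle noise does not move the hand.
    """
    difference = flexor_env - extensor_env
    if abs(difference) < deadband:
        return 0.0
    return gain * difference


def simulate_grip(envelope_pairs, dt=0.1):
    """Integrate the command into a hand position (0 = open, 1 = closed)."""
    position = 0.0
    for flexor, extensor in envelope_pairs:
        position += two_site_command(flexor, extensor) * dt
        position = max(0.0, min(1.0, position))  # respect mechanical limits
    return position


print(simulate_grip([(1.0, 0.0)] * 20))   # 1.0: a sustained flexor signal closes the hand
print(simulate_grip([(0.3, 0.25)] * 20))  # 0.0: the difference stays inside the deadband
```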

Human Condition Enhancement

Ultrasonic Sense for the Blind

In today’s world, society is moving forward at a rapid pace, and people with disabilities are often forgotten or left behind, as they are frequently marginalized and seen as a burden to society. According to the World Health Organization (WHO), visual impairment affects about 1% of the global population (Pascolini & Mariotti, 2012). While the WHO monitors and looks for ways to prevent visual impairment, others, such as Sylvain Cardin, Daniel Thalmann, and Frederic Vexo from the Virtual Reality Laboratory (VRlab) at the École Polytechnique Fédérale de Lausanne (EPFL) in Switzerland, aim to improve people’s mobility by giving them a new sense (Cardin et al., 2006). Their wearable system alerts users to close-range objects as they walk. In short, it uses two ultrasonic sensors for input and two vibrators for output. Later models used four sensors and eight vibrators (Adhe et al., 2015) (Fig. 3).

This diagram shows a representation of a shirt with sensors and vibrating actuators attached to it (Clipart Library, n.d.-a)

So how does this system work? Sonars (the ultrasonic sensors) (Britannica, 2019) measure the distance to an object. They use a transducer to send and receive ultrasonic sound waves, and from the timing of the echo one can calculate the distance: because the sound wave travels to the object and back to the sensor, the distance equals the speed of sound multiplied by the round-trip time, divided by two (Fig. 4).
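The echo relationship, d = v × t / 2, where v is the speed of sound and t is the round-trip time (the pulse covers the distance twice), is simple enough to show directly. The sketch assumes sound in air at about 20 °C (roughly 343 m/s); a real sensor driver would also need to handle timeouts and missed echoes, which are left out here.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C


def echo_distance_m(round_trip_s, speed_m_s=SPEED_OF_SOUND_M_S):
    """Distance to an object from the time an ultrasonic pulse takes to return.

    The pulse travels to the object and back, so the one-way distance is
    half of the speed multiplied by the round-trip time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return speed_m_s * round_trip_s / 2


# An echo that returns after 10 ms comes from an object about 1.7 m away
print(f"{echo_distance_m(0.010):.3f}")  # 1.715
```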

Cardin, Thalmann, and Vexo used a jacket to hold a sonar sensor on each shoulder and vibrators on the sleeves. A microcontroller calculated the distance between the person and nearby objects. This distance was then sent to a digital-to-analog converter and transformed into a precise voltage to pass to each vibrator, so the intensity of the vibration indicated how close the individual was to an object. One limitation of this system was that each sonar had only a roughly 60° “field of view” from where it was mounted, so even relatively close objects outside that cone could not be detected (Adhe et al., 2015).
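The chain described above (a microcontroller computes a distance, a digital-to-analog converter turns it into a voltage, and vibration strength signals closeness) can be sketched as a linear mapping. The detection range, the 5 V maximum, and the 8-bit converter resolution below are hypothetical values chosen for illustration; they are not the parameters reported by Cardin et al. (2006).

```python
def vibration_volts(distance_m, near_m=0.2, far_m=3.0, max_volts=5.0):
    """Closer obstacles give a stronger vibration; beyond `far_m` it is off."""
    if distance_m >= far_m:
        return 0.0
    if distance_m <= near_m:
        return max_volts
    closeness = (far_m - distance_m) / (far_m - near_m)  # 0 at far, 1 at near
    return max_volts * closeness


def dac_code(volts, max_volts=5.0, bits=8):
    """Integer code a hypothetical DAC would need to output `volts`."""
    return round(volts / max_volts * (2 ** bits - 1))


for d in (3.5, 1.6, 0.1):
    v = vibration_volts(d)
    print(f"{d} m -> {v:.2f} V -> DAC code {dac_code(v)}")
```

A linear ramp is only the simplest choice; a designer might instead use a nonlinear curve so that intensity rises sharply as an obstacle gets very close.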

Victor Mateevitsi and colleagues Haggadone, Leigh, Kunzer, and Kenyon (2013), from the University of Illinois at Chicago, developed a system similar to Cardin’s, called SpiderSense. It had 13 ultrasonic sensors, and it used servos with pressure arms attached instead of vibrators. The main advantage of this design was that it gave the individual a sensory field of roughly 360°. Further development of this work could help not only visually impaired people but also improve safety for others, such as cyclists riding on the road and police officers on duty alone.

Deep Brain Stimulation

Similar in concept to Neuralink’s device, deep brain stimulation (DBS) is a surgical procedure used to treat the symptoms of Parkinson’s disease and other movement disorders (Medical Advisory Secretariat, 2005). While the cause of Parkinson’s disease is still unknown, researchers have identified that it involves cell death in the midbrain region responsible for producing dopamine (the substantia nigra); dopamine is an important hormone and neurotransmitter that influences how one feels and transmits messages between nerve cells (Britannica, 2020). This neurodegeneration causes an imbalance in dopamine levels and thus creates the motor control issues associated with Parkinson’s disease, the most distinctive of which are tremors. The only drug currently available that effectively treats Parkinson’s disease is levodopa, and deep brain stimulation is used to supplement it (Medical Advisory Secretariat, 2005).

The development of deep brain stimulation started in the 1980s, after scientists identified how to suppress the neurological symptoms. The device has three components: a neurostimulator that generates electrical impulses, a lead (a thin coiled wire insulated with polyurethane), and an extension that connects the two. Depending on whether the motor disorder is unilateral or bilateral, a second device may also be used (Medical Advisory Secretariat, 2005) (Fig. 5).

This diagram shows the mechanisms behind an early deep brain stimulator (DBS) (Clipart Library, n.d.-b)

Reverse Systems: Developing a Human Brain in a Robotic System

One of the purposes of designing robotic systems based on the human brain is to make human–robot interaction less awkward. Humans have learned to interact with each other over generations, and without realizing it, we communicate by mimicking each other’s gestures, ways of speaking, or even stance and frame of mind.

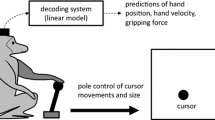

By focusing on how the brain functions and how humans behave, a group of engineers looked for new ways to approach the design of robots (Markram et al., 2011). Instead of programming every function of the robot, these engineers set out to create a robotic system that would mimic the human central nervous system, which gives us multiple adaptive control mechanisms that keep learning as situations change. This adaptability was observed in an experiment in which a human was asked to hold a handle moved by a robot. As the handle moved, the human adapted to the robot’s actions by counteracting its perturbations. This suggests that the central nervous system uses energy efficiently by building internal predictive models that generate force in anticipation of a perturbation (Oztop et al., 2015). This goes back to the idea that cybernetics is the control loop of “input, decision, and output,” in which the predictive models are part of decision making.
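The anticipatory behavior described above is often modeled with a simple error-driven update: on each trial, the part of the robot’s push that was not predicted becomes an error, and the internal model’s predicted force moves a fraction of the way toward the true perturbation. The sketch below uses that textbook learning rule as a stand-in; it is not the specific model used by Oztop et al. (2015), and the force and learning-rate values are hypothetical.

```python
def adapt_to_perturbation(perturbation_n=10.0, trials=10, learning_rate=0.5):
    """Trial-by-trial learning of an anticipatory (feedforward) force.

    input:    the robot's perturbation on each trial
    decision: the internal model's prediction of that force
    output:   the counter-force produced in anticipation (the prediction)
    Returns the final prediction and the unanticipated force on each trial.
    """
    predicted = 0.0
    errors = []
    for _ in range(trials):
        error = perturbation_n - predicted   # force that was not anticipated
        errors.append(error)
        predicted += learning_rate * error   # update the internal model
    return predicted, errors


predicted, errors = adapt_to_perturbation()
print(errors[:4])  # [10.0, 5.0, 2.5, 1.25]
print(predicted)   # 9.990234375
```

The unanticipated force halves on every trial under these assumptions, which is the pattern one would expect if the predictive model gradually takes over the work that feedback corrections did at first.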

To further investigate this robotic system that imitates the human brain, researchers gave robots visual and sensory devices to increase their awareness. Like humans, a robot needs to perceive the current state of its body before moving to the next state. This neural representation of the body in the brain is referred to as the body schema (Naito & Morita, 2014). A new paradigm called “robot skill generation using human sensorimotor learning” (RSHL) was proposed to teach robots human skills. This paradigm draws on the human motor control system by establishing an intuitive teleoperation system in which a human operator learns to control the joints of the robot (Oztop et al., 2015). As these operations are performed more often, the robot seems to incorporate the human operator’s body schema.

Future use of the RSHL paradigm could produce robots that learn to perform tasks as if they were performed by humans. The only caveat, or perhaps a future job opportunity, is that a human would be required to teach these skills to the robot. Job markets could change as robots replace human laborers: Harvest CROO Robotics designed a fruit-picking robot that would do the work of 30 humans (Paquette, 2014). Some labor-intensive jobs are already being replaced; robots like the Roomba can sweep, vacuum, and mop floors, and lawn robots can mow grass (Vaglica, 2016). Paradigms like RSHL could change the way humans interact with the world around them even further.

Ethical Implications of Cybernetic Systems

Two key ethical questions raised by cybernetic systems are whether it is “natural” for humans to have extraordinary capabilities and whether humans should have them. These concerns are unlikely to become a major point of contention until much later, when cybernetic systems are commercially available and biological enhancements using such systems start to become the new norm.

Many of these concerns are actually aligned with the key ethical considerations regarding genetic engineering changes to the human state. This is likely because both genetic engineering and embedded cybernetic systems have the ability to enhance humans beyond the natural biologic capabilities with which they are endowed. By artificially enhancing humans, one of the major questions becomes whether defying the natural course of evolution should be allowed (Almeida & Diogo, 2019).

Along with the prospect of rapid enhancement, one ethical concern that should be discussed in advance is the unfair advantage conferred by first access to such technologies. We mentioned advances in helping visually impaired people “to see with their ears.” This type of echolocation technology could also be used to give one side an unfair advantage in night battles or in other situations where visibility is a crucial tactical problem.

In competitive sports, doping, i.e., the use of illegal substances to enhance the natural human condition, is generally banned and is grounds for disqualification (Macur, 2014). Take cycling, for example. If an athlete used sensory devices to track his competitors, he could manage his energy efficiently over the race: burning extra energy when other athletes were in front or behind, and conserving energy when competitors fell far behind. In baseball, the batter could have a sensor on his helmet or shoulder telling him when to swing. In car racing, the driver could focus only on accelerating, while the system handled deceleration (braking). Although such systems do not yet exist or are not in use, using these augmenting devices could be considered unfair to those without access to them. One recent controversy arose in 2019, when Eliud Kipchoge ran a marathon in under 2 hours while wearing prototype running shoes. Although the shoes were not banned from upcoming competitions, it took the International Olympic Committee (IOC) nearly 6 months to make that decision. In this example, the shoes were not an active robotic system that made the user run faster, but their design did provide a passive feedback loop that enhanced the runner’s speed, both by making the runner’s legs more comfortable and by adding extra spring to each step, which improved speed and efficiency. Going back to the idea that cybernetics is the control loop of “input, decision, and output,” the shoe design greatly increases the athlete’s output.

Conclusion

Cybernetics is, as Norbert Wiener defined it, “the science of control and communications in the animal and machine” (Britannica, 2014). This rapidly growing field is pushing the boundaries of science and medicine as we know them today. From neuroprosthetics and other data-driven artificial organs to robotic systems, cybernetic systems encompass the possibilities that arise at the direct intersection of computer systems and humans.

In this chapter, we have mostly focused on the medical uses of prosthetics. First, we focused on noninvasive systems that use electromyography to record skeletal muscle activity and translate it into prosthetic limb movements. Second, we looked at advanced robotic myoelectric prostheses. Third, we discussed more invasive systems like artificial retinas and the Neuralink Corporation, which take cybernetic systems one step further by integrating computer and robotic machines with the human brain.

Future applications of brain–machine interfaces like Neuralink’s open the field to many possibilities that humans have yet to achieve, such as increasing human memory, psychomotor accuracy, and speed.

However, because of the extensive and untapped potential of cybernetic systems, ethicists remain wary of the development of these tools, which raise questions about whether humans should be endowed with the enhanced potential afforded by embedded computation. Beyond ethical issues, our current knowledge of how neural processes work is still dwarfed by what we have yet to understand and explore.

The human acceptance and use of cybernetic systems will probably occur in multiple phases. The initial phases will focus on human reconditioning through artificial retinas, other prosthetics, and brain–machine interfaces like Neuralink. These will be followed by human enhancement, in which we might use such systems to improve human cognition. This will most likely be the slowest phase, since each government has its own laws regarding medical policies and practices (Wittes & Chong, 2014).

References

Adhe, S., Kunthewad, S., Shinde, P., & Kulkarni, M. (2015). Ultrasonic Smart Stick for Visually Impaired People. IOSR Journal of Electronics and Communication Engineering.

Almeida, M., & Diogo, R. (2019). Human enhancement: Genetic engineering and evolution. Evolution, Medicine, and Public Health, 2019(1), 183–189. https://doi.org/10.1093/emph/eoz026.

Britannica, T. Editors of Encyclopaedia (2014, January 15). Cybernetics. Encyclopedia Britannica. https://www.britannica.com/science/cybernetics

Britannica, T. Editors of Encyclopaedia (2019, May 30). Sonar. Encyclopedia Britannica. https://www.britannica.com/technology/sonar

Britannica, T. Editors of Encyclopaedia (2020, January 30). Organ. Encyclopedia Britannica. https://www.britannica.com/science/organ-biology

Cardin, S., Thalmann, D., & Vexo, F. (2006). A wearable system for mobility improvement of visually impaired people. The Visual Computer, 23, 109–118. https://doi.org/10.1007/s00371-006-0032-4.

Chichilnisky, E. (n.d.). Approach. Retrieved November 24, 2020, from https://med.stanford.edu/artificial-retina/research/approach.html

Clipart Library. (n.d.-a). Transparent T Shirts [Image]. Retrieved from http://clipart-library.com/clip-art/transparent-t-shirts-12.htm

Clipart Library. (n.d.-b). Clipart Brain [Image]. Retrieved from http://clipart-library.com/clipart/clip-art-brain_4.htm

Kumarasinghe, K., Kasabov, N., & Taylor, D. (2021). Brain-inspired spiking neural networks for decoding and understanding muscle activity and kinematics from electroencephalography signals during hand movements. Scientific Reports, 11, 2486. https://doi.org/10.1038/s41598-021-81805-4.

Lewis, P. M., & Rosenfeld, J. V. (2016). Electrical stimulation of the brain and the development of cortical visual prostheses: A historical perspective. Brain Research, 1630, 208–224. https://doi.org/10.1016/j.brainres.2015.08.

Markram, H., Meier, K., Lippert, T., Grillner, S., Frackowiak, R., Dehaene, S., Knoll, A., Sompolinsky, H., Verstreken, K., DeFelipe, J., Grant, S., Changeux, J., & Saria, A. (2011). Introducing the human brain project. Procedia Computer Science, 7, 39–42. https://doi.org/10.1016/j.procs.2011.12.015.

Mateevitsi, V., Haggadone, B., Leigh, J., Kunzer, B., & Kenyon, R. V. (2013). Sensing the environment through SpiderSense. Proceedings of the 4th Augmented Human International Conference, pp. 51–57.

McFarland, D. J., & Wolpaw, J. R. (2017). EEG-based brain-computer interfaces. Current Opinion in Biomedical Engineering, 4, 194–200. https://doi.org/10.1016/j.cobme.2017.11.004.

Medical Advisory Secretariat. (2005, March 1). Deep brain stimulation for Parkinson’s disease and other movement disorders: An evidence-based analysis. Ontario health technology assessment series, 5(2), 1–56.

Millett, D. (2001). From psychic energy to the EEG. Perspectives in Biology and Medicine, 44(4), 522–542. https://doi.org/10.1353/pbm.2001.0070.

Montouri, A. (2011). Cybernetics. Retrieved November, 2020 from https://www.sciencedirect.com/topics/neuroscience/cybernetics

Musk, E. (2019). An integrated brain-machine Interface platform with thousands of channels. Journal of Medical Internet Research, 21(10). https://doi.org/10.2196/16194.

Naito, E., & Morita, T. (2014). Brain and nerve = Shinkei kenkyu no shinpo, 66(4), 367–380.

Oztop, E., Ugur, E., Shimizu, Y., et al. (2015). Chapter 2: Humanoid brain science. In G. Cheng (Ed.), Humanoid robotics and neuroscience: Science, engineering and society. Boca Raton: CRC Press/Taylor & Francis.

Paquette, D. (2014). Farmworker vs. Robot. The Washington Post. Retrieved October 13, 2020, from https://www.washingtonpost.com/news/national/wp/2019/02/17/feature/inside-the-race-to-replace-farmworkers-with-robots/

Pascolini, D., & Mariotti, S. P. (2012). Global estimates of visual impairment: 2010. The British Journal of Ophthalmology, 96(5), 614–618. https://doi.org/10.1136/bjophthalmol-2011-300539.

PNGJoy. (n.d.). Brain Human Anatomy [Image]. Retrieved from https://www.pngjoy.com/preview/t1x2q7w9f7v6x4_human-brain-clipart-brain-human-anatomy-png-download/

Statt, N. (2017, March 27). Elon Musk launches Neuralink, a venture to merge the human brain with AI. The Verge. Archived from the original on February 6, 2018. Retrieved September 6, 2020.

Timmermans, S., & Kaufman, R. (2020). Technologies and health inequities. Annual Review of Sociology, 46(1), 583–602. https://doi.org/10.1146/annurev-soc-121919-054802.

Vaglica, S. (2016, April 12). Does a robotic lawn mower really cut it? The Wall Street Journal. Retrieved October 13, 2020, from https://www.wsj.com/articles/does-a-robotic-lawn-mower-really-cut-it-1460488911.

Warwick, K. C. U. (2020, April 2). The Future of Artificial Intelligence and Cybernetics. Retrieved March 31, 2021, from https://www.technologyreview.com/2016/11/10/156141/the-future-of-artificial-intelligence-and-cybernetics/

Weir, R. F., Troyk, P. R., DeMichele, G. A., Kerns, D. A., Schorsch, J. F., & Maas, H. (2009). Implantable myoelectric sensors (IMESs) for intramuscular electromyogram recording. IEEE transactions on bio-medical engineering, 56(1), 159–171. https://doi.org/10.1109/TBME.2008.2005942.

Wiggers, K. (2020, August 31). Neuralink demonstrates its next-generation brain-machine interface. Retrieved October 12, 2020 from https://venturebeat.com/2020/08/28/neuralink-demonstrates-its-next-generation-brain-machine-interface/

Winkler, R. (2017, March 27). Elon Musk Launches Neuralink to Connect Brains With Computers. Wall Street Journal. Archived from the original on May 5, 2017.

Wittes, B., & Chong, J. (2014). Our Cyborg Future: Law and Policy Implications. The Brookings Institution. Retrieved November 15, 2020 from https://www.brookings.edu/research/our-cyborg-future-law-and-policy-implications/

Acknowledgments

We thank the Department of Learning Technologies and the Biomedical AI Lab at the University of North Texas.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Pilla, P., Moreira, R.A.A. (2022). Cybernetic Systems: Technology Embedded into the Human Experience. In: Albert, M.V., Lin, L., Spector, M.J., Dunn, L.S. (eds) Bridging Human Intelligence and Artificial Intelligence. Educational Communications and Technology: Issues and Innovations. Springer, Cham. https://doi.org/10.1007/978-3-030-84729-6_11

DOI: https://doi.org/10.1007/978-3-030-84729-6_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-84728-9

Online ISBN: 978-3-030-84729-6

eBook Packages: Education, Education (R0)