Abstract

This paper discusses the potential benefits of image assimilation in the context of operational oceanography, with the goal of eventually exploiting the dynamical information contained in sequences of ocean images to improve ocean model predictions. Successful assimilation of ocean images would answer affirmatively the question of whether meaningful dynamical information can be extracted from sequences of satellite ocean color images to improve analyses and forecasts of the ocean circulation. In situ observational campaigns are costly and usually very limited in space and time. Meanwhile, satellites with visible bands are increasing in number and coverage and provide images at very high temporal frequency. For these reasons, operational centers should consider making image assimilation an integral part of their future assimilation systems. Beyond the motivation, we also discuss whether images should be assimilated directly or indirectly, the latter consisting of assimilating information derived from images.

1 Introduction

Velocity is a fundamental quantity in the dynamics of any fluid. Ocean currents transport heat, salt and nutrients, and they also affect the movement of ships, gliders, drifting buoys, waves and ice. Currents play a significant role in the variability of ocean conditions at both regional and global scales. Accurate knowledge of ocean currents is critical for navigation and for search and rescue.

Recent experiments demonstrated that ocean surface current observations can substantially improve ocean circulation analyses and forecasts (Carrier et al. 2016; Ngodock et al. 2015; Muscarella et al. 2015). Other studies have shown that assimilating observed ocean velocity data improves ocean predictability (Mariano et al. 2002; Fan et al. 2004; Taillandier et al. 2006; Nilsson et al. 2012). However, apart from coastal high frequency (HF) radars and sparse moored buoys, ocean currents are rarely observed.

The majority of observations used to correct the ocean circulation are sea surface temperature (SST), sea surface height (SSH), and subsurface temperature and salinity (T/S) profiles. Assimilating these observations provides some correction to the velocity field. For example, SSH assimilation provides a geostrophic correction of the velocity field for the mesoscale circulation, and T/S profile assimilation corrects the velocity field through the pressure gradient. However, the spatial distribution of these observations does not allow a reliable reconstruction of the velocity field. Accurate forecasts may also be needed in locations where T/S profiles cannot be sampled, or in coastal waters shallower than 200 m, where SSH is not available. Other types of remotely sensed observations that enable the correction of the circulation therefore need to be exploited.

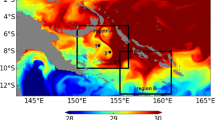

Sequences of ocean color images from satellites can capture the dynamics of the ocean, as they depict the optical evolution of physical quantities and properties in the ocean. For example, Fig. 1 shows a pair of Gulf Stream eddies observed by MODIS in the infrared channel (sea surface temperature) and in the visible channel (chlorophyll concentration). The similarity of the structures in the two channels shows that information on model variables (e.g., temperature) can be obtained from other quantities (e.g., chlorophyll concentration). Lateral displacements of these quantities are mainly due to advection by ocean currents. The evolution of the images thus contains information about ocean currents, fronts and eddies. This information can be either extracted from the images or exploited through the assimilation of image sequences, in order to provide more accurate analyses and forecasts of the circulation. In some areas, in situ observations cannot be collected and only satellite observations are available. Sometimes traditional SST and SSH observations are not enough to constrain the circulation, and velocity observations are not available either. In such cases, satellite images contain information that can be used to correct model forecasts via data assimilation.

Images of sea surface temperature and chlorophyll concentration (Courtesy of NASA for research and educational use, oceancolor.gsfc.nasa.gov)

Ocean images from satellites are abundant but are not yet exploited for the dynamical correction of the ocean circulation. Several satellites provide frequent imagery of the ocean at different locations around the globe. The images extend up to the coast, where SSH is not reliable and in situ observations cannot be sampled. The assimilation of image sequences is expected to improve the analysis and forecast of ocean currents, fronts and eddies, a gap that has been identified in US Navy operations.

Prior studies show that velocity fields can be extracted from a sequence of images. Extraction methods range from particle image velocimetry (Adrian 1991) to the assimilation of images into simple models describing the image motion (Herlin et al. 2006). Velocities derived by such methods can be used as velocity observations, as is the case for the cloud motion vectors used in data assimilation for atmospheric models (Schmetz et al. 1993). The drawback of such approaches is that the model considered for the image evolution is totally decoupled from the underlying physical process, and thus yields unrealistic velocities. The inferred velocity is usually inaccurate because the inference process is not constrained, i.e., it is detached from the dynamics of the modeled fluid.
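The cross-correlation idea behind particle image velocimetry can be sketched in a few lines. This is a minimal, single-window illustration that assumes rigid translation and periodic image borders. It is not the full method of Adrian (1991) or Herlin et al. (2006). The helper `estimate_displacement`, the frame interval and the pixel size are hypothetical. The sketch also makes the drawback above concrete: no fluid dynamics enters the estimate.

```python
import numpy as np


def estimate_displacement(frame0, frame1):
    """Integer (dy, dx) shift that best maps frame0 onto frame1.

    Single-window cross-correlation, the core idea of particle image
    velocimetry; assumes rigid translation and periodic image borders.
    """
    f0 = frame0 - frame0.mean()
    f1 = frame1 - frame1.mean()
    # Circular cross-correlation: corr[s] = sum_x f0[x] * f1[x + s]
    corr = np.fft.ifft2(np.conj(np.fft.fft2(f0)) * np.fft.fft2(f1)).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Convert peak indices in [0, N) to signed shifts in [-N/2, N/2)
    dy, dx = (int(p) if p < n // 2 else int(p) - n for p, n in zip(peak, corr.shape))
    return dy, dx


# Synthetic "advection": a random texture moved 3 pixels down and 5 pixels left
rng = np.random.default_rng(0)
frame0 = rng.random((64, 64))
frame1 = np.roll(frame0, shift=(3, -5), axis=(0, 1))
dy, dx = estimate_displacement(frame0, frame1)

dt = 3600.0    # hypothetical time between frames (s)
pixel = 500.0  # hypothetical pixel size (m)
print("velocity estimate (m/s):", dy * pixel / dt, dx * pixel / dt)
```

The estimate is a pure pattern-matching displacement. Errors in it are not constrained by the momentum equations, which is why such derived velocities carry large, correlated errors.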

Other studies show that images can be successfully assimilated directly into models such as the shallow water equations coupled with an image evolution model (Titaud et al. 2010; Souopgui 2010). Such methods generate dense velocity fields. Combining the images, or the velocities extracted from them, with observations of other model variables (temperature, surface elevation, etc.) therefore requires either the construction of cross-covariances between variables or a data assimilation method that inherently handles these cross-covariances.

2 Images Source and Processing: The Ocean Example

The Earth is now permanently observed by a large number of satellites in several wavelength bands. For the ocean, quantities of interest captured by satellites are sea surface temperature (SST), sea surface height (SSH), ocean color and other quantities in the visible bands (e.g., Fig. 1).

Note that images of the ocean are two-dimensional signatures of three-dimensional phenomena. An image is basically a set of pixels, but it contains visible structures such as fronts, vortices and singularities, and the information in the image is transported by these structures. The image can therefore be considered from two viewpoints. In the Eulerian viewpoint, the evolution of the flow is described from a fixed frame. In the Lagrangian viewpoint, the description follows the evolution of the flow. A difficulty arises when a flow has both an Eulerian and a Lagrangian character. Such is the case for lenticular clouds in the atmosphere: they look almost steady, but in reality they are the signature of a strong wind. If the winds were estimated from the displacement of a lenticular cloud, the result would be far from the truth. The water forming such a cloud is in the gaseous phase near the ground. It condenses into the liquid phase and becomes visible as the air rises, then evaporates back to the gaseous phase during the descent.

As a consequence, it is necessary to take into account the physics of “what is seen”. This means that the images themselves should be assimilated directly, rather than the effects seen in the images. It follows that sequences of images should be considered for assimilation rather than individual images, as the latter contain very limited information about the underlying dynamics. Sequences are also needed because they contain information about the dynamics of discontinuities such as fronts and singularities, which single images do not provide. It is thus important to isolate or extract the discontinuities and define them in a functional space with an adequate topology that allows for variational calculus in conjunction with the dynamical model at hand. Care should be taken that the topology is not too regularizing; otherwise the information in the discontinuities will be lost.

For the particular case of the ocean, images can be obtained at high temporal frequency and high horizontal resolution from the visible-band imagers of geostationary meteorological satellites. Examples of such satellites are listed in Table 1.

The GOES-R series satellites are geostationary meteorological observation platforms, and the Advanced Baseline Imager (ABI) is their primary instrument. ABI is a passive imaging radiometer with spectral bands from the visible through the infrared. Its horizontal spatial resolution is 500 m to 2 km. Its observation frequency is 5 min in CONUS mode (GOES-East) and as high as 30 s in mesoscale mode. Despite this very high temporal frequency, one obstacle has limited the use of these data for oceanographic applications: the ABI has only two very broad bands in the visible (470 and 640 nm). These bands are not designed to detect the comparatively weak radiant signal emanating from the surface ocean, known as the water-leaving radiance (Lw).

For many coastal scenes, however, these limitations may be overcome by new techniques. These techniques convolve the GOES-ABI visible band data with information from coincident, dedicated ocean color radiometers such as the Visible Infrared Imaging Radiometer Suite (VIIRS) and the Ocean and Land Colour Instrument (OLCI) (Jolliff et al. 2019). These sensors are on board polar-orbiting satellites (Suomi NPP, NOAA-20, Sentinel-3A/B) and thus provide only about one image per day (barring cloud cover). Yet the calibration/validation activities that support these sensors enable a very precise determination of Lw across the visible spectrum. When this information is used to post-process GOES-ABI data for coastal scenes, unprecedented details of the coastal circulation are immediately evident in the true color image sequences. Particularly conspicuous in the high temporal frequency images (every 5 min) is the movement of turbidity plumes emanating from rivers and estuaries. The frontal boundaries between turbid shelf waters and the open ocean are equally conspicuous. Previous studies have shown that it is feasible to extract ocean surface velocity estimates from ocean color image sequences (Yang et al. 2015). The major obstacle to any practical application has been that, without very high temporal frequency (of the order of minutes), these estimates are prone to significant errors. In principle, the frequency of color-enhanced GOES-ABI image sequences is more than sufficient to overcome this obstacle. Yet aerosol correction, ABI signal noise and other issues remain to be addressed and require a dedicated research effort to exploit the full oceanographic potential of GOES-R datasets.

3 Methods for Image Assimilation and Their Limitations

Data assimilation is the process of minimizing the discrepancies between observed and modeled phenomena. It requires a direct relationship between the observed and modeled quantities: the model must have variables that relate to the observations. The assimilation of images can then be classified as indirect or direct. In indirect assimilation, observations are transformed into their model-variable counterparts, e.g., radiances into temperature or images into atmospheric motion vectors (AMV). In direct assimilation, the model variables are transformed into observations. Alternatively, a common transformation (into the same metric space) is applied to both the model variables and the images so that they can be compared.

3.1 Indirect Assimilation of Images

In indirect assimilation, velocities are first estimated from the evolution of the images and then assimilated as regular observations. As stated in the introduction, the model considered for the image evolution is totally decoupled from the underlying physical process. This approach thus yields unrealistic velocities with large and correlated observation errors. Extracting velocities assumes linear dynamics from frame to frame, which differ from the modeled dynamics. The inferred velocity is usually inaccurate because the inference process is not constrained, i.e., it is detached from the dynamics of the modeled fluid. In general, transforming observations into their model counterparts (image sequences into velocity, radiances into temperature) is an ill-posed problem. It should thus be avoided, especially for images: they are two-dimensional signatures of three-dimensional phenomena whose underlying physics and dynamics are unknown.

3.2 Direct Assimilation of Images

In direct image assimilation, no attempt is made to extract the equivalent of model variables. Instead, a well-suited mathematical space of images is chosen or defined, and the calculus of variations is carried out in that space. The question is how to define such a space, and there are three main difficulties. The first is the definition of the space of images, i.e., what is really “seen” in the images: discontinuities, fronts, vortices or singularities? The second is that images have to be defined in a metric space, so that the usual optimization procedures applied to the assimilation of regular observations can also be carried out for images. The third is that the observed-modeled relationship fundamental to data assimilation requires pseudo or modeled images, from which the discrepancies to the observed images are computed. Producing them requires extending the dynamical model with a component that simulates the image evolution.

3.2.1 Mathematical Spaces for Images

An image is a two-dimensional array of pixels. The dynamical information seen in a sequence of images is located in the discontinuities and their evolution. For that reason, treating an image as an array of pixels is not appropriate for image assimilation. This is confirmed by prior studies (Titaud et al. 2010; Souopgui 2010) and illustrated by Fig. 2, which compares image assimilation in the pixel space and in other spaces.

Analyzed initial velocity field computed by direct image sequence assimilation with different image observation operators: Identity operator (top left); curvelet decomposition and hard thresholding (top right); curvelet decomposition and scale by scale thresholding (bottom left); curvelet decomposition and hard thresholding zeroing coarsest scale (bottom right)
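A toy one-dimensional example, not taken from the cited studies, suggests one reason why the pixel space is a poor choice. With the identity observation operator, the squared misfit between an observed and a modeled feature stops growing once the displacement exceeds the feature width. The cost function then carries almost no gradient information about how far away the feature is. The grid, the Gaussian feature shape and its width are illustrative assumptions.

```python
import numpy as np

x = np.linspace(0.0, 100.0, 2001)  # 1D "pixel" grid, spacing 0.05


def feature(center, width=2.0):
    """Gaussian blob standing in for a small eddy seen in an image."""
    return np.exp(-((x - center) / width) ** 2)


def pixel_misfit(shift):
    """Squared pixel-by-pixel difference (identity observation operator)
    between an observed blob at x = 30 and a modeled blob displaced by `shift`."""
    return float(np.sum((feature(30.0) - feature(30.0 + shift)) ** 2))


for d in (0.5, 2.0, 10.0, 30.0):
    print(f"displacement {d:5.1f} -> misfit {pixel_misfit(d):8.2f}")
```

The misfit is essentially identical for displacements of 10 and 30 units. A gradient-based minimization started from a badly placed eddy therefore receives almost no information about which way to move it.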

The first clue in the definition of a mathematical space for images is the isolation of discontinuities, which is a pre-processing stage for images. Titaud et al. (2010) define the space associated with discontinuities in the image as the “space of structures.” Discontinuities are well characterized in spectral spaces using familiar tools such as the Fourier, wavelet or curvelet transforms. An example of curvelets is shown in Fig. 3. Another candidate in this category is the level-set method. The assimilation of images then requires two additional operators: the image-to-structure operator and the model-to-structure operator. The first converts the images from their original space, given by the array of pixels, to the space of structures. The second converts the model solution to the space of structures. Together, these two operators enable the computation of the image innovations, i.e., the discrepancies between the observed and modeled images, which are minimized in the cost function.
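The pair of operators described above can be sketched with a one-level orthonormal Haar wavelet transform standing in for curvelets, which are not available in NumPy. This is a minimal illustration under stated assumptions, not the operator of Titaud et al. (2010). The coarse approximation is discarded, and only detail coefficients that are significant in the observed image are kept. The threshold and the helper names `haar2d` and `structure_innovation` are hypothetical. Computing the significance mask from the observation alone keeps the map applied to the modeled image linear.

```python
import numpy as np


def haar2d(img):
    """One level of the orthonormal 2D Haar transform (image sides must be even).

    Returns the approximation and the horizontal, vertical and diagonal detail
    sub-bands; detail coefficients are large where the image has edges or fronts.
    """
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    return ((a + b + c + d) / 2.0, (a + b - c - d) / 2.0,
            (a - b + c - d) / 2.0, (a - b - c + d) / 2.0)


def structure_innovation(observed, modeled, threshold):
    """Innovation in a simple 'space of structures'.

    The coarse approximation is discarded and only detail coefficients that
    are significant in the observed image are kept. The mask depends on the
    observation alone, so the map applied to the modeled image is linear.
    """
    obs = np.stack(haar2d(observed)[1:])
    mod = np.stack(haar2d(modeled)[1:])
    mask = np.abs(obs) > threshold
    return np.where(mask, obs - mod, 0.0)


# A bright square patch, and a model counterpart displaced by one pixel
observed = np.zeros((32, 32))
observed[11:21, 11:21] = 1.0
modeled = np.roll(observed, 1, axis=1)
innovation = structure_innovation(observed, modeled, threshold=0.1)
print("nonzero structure innovations:", np.count_nonzero(innovation))
```

Because the transform is orthonormal, the energy of the image is preserved across the four sub-bands. Innovations appear only where the edges of the patch disagree.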

On the model side, the literature identifies three methods to define the model-to-structure operator: advection of a passive tracer, advection of structures, and Lyapunov exponents. The passive tracer method extends the model state to include a passive tracer that is advected by the model velocity; its concentration defines the model counterpart of the image. The image-to-structure operator is then used as the observation operator for image observations. The advection of structures method extends the model state with the structures of interest and advects them with the model velocity. The Lyapunov exponents method defines Lagrangian coherent structures (LCS; Haller 2015) as the structures in the model and compares them to the structures in the images. In the first two methods, advection defines the image model, and the velocity field provides the coupling between the ocean model and the image model. Advection is not only the coupling mechanism between image propagation and the ocean model. It is also the dominant dynamical driver (Ren et al. 2011) on the short time scales between consecutive images in a high-frequency sequence. In general, the image model is assumed to be two-dimensional because the images are assumed to represent the ocean surface.

The assimilation process requires the observation operator and its adjoint (transpose), so it is important to limit the complexity and nonlinearity of the observation operator as much as possible. Lyapunov exponents and level-set transformations are complex and nonlinear, which makes their linearization and transposition challenging. Wavelets and curvelets, on the other hand, define linear transformations and are discussed below. For more information on Lyapunov exponents in image assimilation, see Titaud et al. (2011) and Le Dimet et al. (2015). Figures 4 and 5 show the potential of the backward finite-time Lyapunov exponent (BFTLE) and backward finite-time Lyapunov vector (BFTLV) fields (Haller 2001). These fields can extract structures comparable to those present in images.
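How a BFTLE field is computed can be sketched as follows: advect a grid of particles backward in time, differentiate the resulting flow map, and take the largest stretching rate of the Cauchy-Green tensor. The velocity field here is an assumed toy example, a steady linear strain u = x, v = -y, chosen because its exact FTLE is 1 everywhere. In practice the model velocities would be used, and ridges of the BFTLE field would mark attracting LCSs to be compared with fronts in the images. The function names are hypothetical.

```python
import numpy as np


def velocity(t, x, y):
    """Assumed toy field: steady linear strain (saddle) flow u = x, v = -y."""
    return x, -y


def flow_map(x, y, t0, T, nsteps=200):
    """Advect particles from t0 to t0 + T with fourth-order Runge-Kutta.
    A negative T integrates backward, as needed for the backward FTLE."""
    dt = T / nsteps
    t = t0
    for _ in range(nsteps):
        k1 = velocity(t, x, y)
        k2 = velocity(t + dt / 2, x + dt / 2 * k1[0], y + dt / 2 * k1[1])
        k3 = velocity(t + dt / 2, x + dt / 2 * k2[0], y + dt / 2 * k2[1])
        k4 = velocity(t + dt, x + dt * k3[0], y + dt * k3[1])
        x = x + dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y = y + dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        t += dt
    return x, y


def ftle(xs, ys, t0, T):
    """FTLE on a regular grid: log(sqrt(largest eigenvalue of C)) / |T|, where
    C = F^T F and F is the finite-difference gradient of the flow map."""
    X, Y = np.meshgrid(xs, ys)  # shape (ny, nx); X varies along axis 1
    PX, PY = flow_map(X, Y, t0, T)
    dPXdy, dPXdx = np.gradient(PX, ys, xs)
    dPYdy, dPYdx = np.gradient(PY, ys, xs)
    # Entries of the symmetric Cauchy-Green tensor C = F^T F
    c11 = dPXdx ** 2 + dPYdx ** 2
    c12 = dPXdx * dPXdy + dPYdx * dPYdy
    c22 = dPXdy ** 2 + dPYdy ** 2
    lam_max = 0.5 * (c11 + c22) + np.sqrt(0.25 * (c11 - c22) ** 2 + c12 ** 2)
    return np.log(np.sqrt(lam_max)) / abs(T)


xs = np.linspace(-1.0, 1.0, 41)
bftle = ftle(xs, xs, t0=0.0, T=-2.0)  # backward FTLE over 2 time units
print(bftle.min(), bftle.max())  # both close to 1 for this flow
```

The computation involves a nonlinear flow map and an eigenvalue problem. This illustrates why the text above calls the Lyapunov-based operator challenging to linearize and transpose.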

3.2.2 Multiscale Analysis of Images: Curvelets

Recent years have seen a rapid development of new tools for harmonic analysis. Geophysical flows contain coherent structures evolving in an incoherent random background. If the flow is considered as an ensemble of structures, then the geometrical representation of flow structures might seem to be restricted to a well-defined set of curves along the singularities in the data. The first step in using images as observations in data assimilation is to separate two kinds of structures. The resolved structures are large, coherent and energetic. The unresolved ones are assumed to be small, incoherent and to carry little energy. One of the first studies in this direction (Farge 1992) showed that the coherent flow component is highly concentrated in wavelet space. Wavelet analysis is a particular space-scale representation of signals that in recent years has found a wide range of applications in physics, signal processing and applied mathematics. The literature on wavelets is rich (e.g., Mallat 1989; Coifman 1990; Cohen 1992). A major drawback of wavelets is that they tend to ignore the geometric properties of structures and do not account for the regularity of edges. This issue is addressed by the curvelet transform. The curvelet transform is a multiscale directional transform that allows an almost optimal nonadaptive sparse representation of objects with edges (Candès and Donoho 2004, 2005a, b; Candès et al. 2006). In \(R^{2}\), the curvelet transform allows an optimal representation, up to logarithmic factors, of structures with \(C^{2}\)-singularities. Because curvelets are anisotropic (see Fig. 3), they have a high directional sensitivity and are very efficient in representing vortex edges.

A function \(f \in L^{2}(R^{2})\) is expressed in terms of curvelets as follows:

\[ f = \sum_{j,l,k} c_{j,l,k} \, \psi_{j,l,k} \]

where \(\psi_{j,l,k}\) is the curvelet function at scale \(j\), orientation \(l\) and spatial position \(k = (k_{1}, k_{2})\). The orientation parameter is the main difference from the wavelet transform. Unlike some families of wavelets, the set of curvelet functions \(\psi_{j,l,k}\) does not form an orthonormal basis; it forms a tight frame. The curvelet transform nevertheless satisfies the Parseval relation, so that the \(L_{2}\)-norm of the function \(f\) is given by:

\[ \| f \|_{L_{2}}^{2} = \sum_{j,l,k} \left| c_{j,l,k} \right|^{2} \]

where \(c_{j,l,k} = \langle f, \psi_{j,l,k} \rangle\) are the curvelet coefficients.

Figure 2 shows an illustrative comparison of the approximation of a circle by wavelets and by curvelets. The curvelets provide a better approximation of this curved edge. The convergence of curvelets is also faster. Consider a function \(f\) that is \(C^{2}\) away from \(C^{2}\) edges. The best m-term approximation \(f_{m}\) of \(f\) has the representation error

\[ \| f - f_{m} \|_{L_{2}}^{2} = O\left( m^{-1} \right) \]

for wavelets and

\[ \| f - f_{m} \|_{L_{2}}^{2} = O\left( m^{-2} (\log m)^{3} \right) \]

for curvelets.

Another interesting property of curvelets in the framework of variational data assimilation follows from their forming a tight frame: the adjoint of the curvelet transform is also its inverse (reconstruction) transform. Therefore, to represent an image, we consider a truncation of its curvelet expansion.
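The "adjoint equals inverse" property is a consequence of the Parseval tight-frame structure. It can be checked on a small toy tight frame: the union of two orthonormal bases of \(R^{n}\), scaled by \(1/\sqrt{2}\). This frame is an illustrative assumption, not a curvelet frame. It is redundant, like curvelets, and shows the three properties that matter in the variational setting.

```python
import numpy as np

n = 8
rng = np.random.default_rng(1)
# Two orthonormal bases of R^n: the identity (a "pixel" basis) and a random rotation
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
# Analysis operator of a Parseval tight frame with 2n atoms (redundancy 2):
# a frame rather than a basis, as for curvelets
A = np.vstack([np.eye(n), Q.T]) / np.sqrt(2.0)

f = rng.standard_normal(n)
c = A @ f  # frame coefficients c_i = <f, psi_i>

# Parseval relation: ||f||^2 equals the sum of squared coefficients
print(np.allclose(np.sum(c ** 2), np.sum(f ** 2)))  # True
# The adjoint (transpose) of the analysis operator reconstructs f exactly
print(np.allclose(A.T @ c, f))  # True
# A is not square and A A^T is not the identity: the coefficients are redundant
print(np.allclose(A @ A.T, np.eye(2 * n)))  # False
```

In a 4DVAR setting, this means the adjoint of the image observation operator costs no more than the forward transform. No separate inversion has to be coded.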

3.2.3 Level-Set

The use of level-set theory has also been proposed for assimilating the information contained in images (Li et al. 2017). Two-dimensional shapes of features (eddies, oil slicks) on the ocean surface can be represented by a subdomain \(\Omega\) whose boundary is defined by the zero level set of a mapping \(\phi : R^{2} \to R\):

\[ \partial \Omega = \left\{ x \in R^{2} : \phi(x) = 0 \right\} \]

With the inclusion of time, the function \(\phi(t,x)\) describes the evolution of the shape as advected by a velocity field \(v(t,x)\), following the advection–diffusion equation

\[ \frac{\partial \phi}{\partial t} + v \cdot \nabla \phi = \nu \, \Delta \phi \]

where \(\nu\) is a diffusion coefficient.

The evolution of the subdomain \(\Omega\) is thus equivalent to the evolution of a concentration advected by a given velocity field. The initial condition for this equation can be treated as a control variable. Its first guess is obtained by extracting the shapes from the first image in the sequence, given a threshold on what can be seen in the images. The shape extraction is an “image-to-shape” process that also serves as the “shape” observation operator, i.e., what is now assimilated is the shape or set of shapes extracted from the image. The same process, applied to the evolving concentration that simulates what is seen in the images, provides the model-to-shape operator. In this case, the discrepancy between the observed and modeled images is expressed as the discrepancy between two sets of shapes: those extracted from the observed images and those extracted from the evolved concentration. Note that shape extraction is a nonlinear and non-differentiable process. Some modifications of the process are thus necessary so that it can be linearized and transposed, as required by the variational assimilation formulation.
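The image-to-shape, model-to-shape and shape-misfit steps can be sketched in two dimensions under several simplifying assumptions:

- a uniform, non-negative velocity;
- a periodic grid;
- first-order upwind advection;
- the diffusion term dropped;
- a fixed extraction threshold.

This is an illustration, not the scheme of Li et al. (2017). The helper names are hypothetical.

```python
import numpy as np


def image_to_shape(img, threshold):
    """Hypothetical image-to-shape operator: phi = img - threshold, so the
    visible feature is {phi > 0} and its boundary is the zero level set."""
    return img - threshold


def advect(phi, u, v, dx, dt, nsteps):
    """First-order upwind advection of phi by a uniform velocity (u, v) >= 0 on
    a periodic grid (axis 1 is x, axis 0 is y). The diffusion term is omitted."""
    if u < 0 or v < 0 or (u + v) * dt / dx > 1.0:
        raise ValueError("this sketch needs u, v >= 0 and (u + v) dt / dx <= 1")
    for _ in range(nsteps):
        dphi_dx = (phi - np.roll(phi, 1, axis=1)) / dx
        dphi_dy = (phi - np.roll(phi, 1, axis=0)) / dx
        phi = phi - dt * (u * dphi_dx + v * dphi_dy)
    return phi


def shape_misfit(phi_obs, phi_mod):
    """Number of pixels inside one shape but not the other (area of the
    symmetric difference): piecewise constant, hence not differentiable."""
    return int(np.count_nonzero((phi_obs > 0) != (phi_mod > 0)))


# An eddy-like blob seen at t0, and the same blob 10 pixels further east at t1
n, dx = 64, 1.0
yy, xx = np.mgrid[0:n, 0:n]
phi0 = image_to_shape(np.exp(-((xx - 20) ** 2 + (yy - 32) ** 2) / 30.0), 0.5)
phi_obs = image_to_shape(np.exp(-((xx - 30) ** 2 + (yy - 32) ** 2) / 30.0), 0.5)

# Candidate eastward velocities, integrated over 10 time units (dt = 0.5, 20 steps)
for u in (0.0, 0.5, 1.0):
    print(u, shape_misfit(phi_obs, advect(phi0, u, 0.0, dx, 0.5, 20)))
```

The misfit is smallest for the velocity that actually moved the feature. It changes in integer jumps as the velocity varies, so it has no useful gradient. This is the non-differentiability that must be addressed before the shape operator can be linearized and transposed.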

4 The Cost Function

First, the “image model” or “shape model” is added to the dynamics. Then the image space and the image observation operator (i.e., the relationship between the observed and modeled images) are defined. A new cost function can then be defined as an extension of the original cost function (for assimilating regular ocean observations) that includes the discrepancies between the observed and modeled images. Images should only be assimilated within this extended cost function. It is the only means of constraining the corrections from image observations by the regular ocean observations and the dynamics of the ocean model.
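The extended cost function can be sketched as the sum of three terms: a background term, a term for the regular ocean observations, and an image term evaluated in the common space of structures. This follows common 4DVAR notation, \(J(x_0) = J_b + J_o + J_{img}\), as an assumption; the chapter does not spell out the formula. Diagonal error covariances and all function names are illustrative. In a real system, `states` would come from integrating the coupled ocean and image models from \(x_0\).

```python
import numpy as np


def half_weighted_sq(d, var):
    """0.5 * d^T R^-1 d for a diagonal error covariance R = diag(var)."""
    return 0.5 * float(np.sum(np.asarray(d) ** 2 / var))


def extended_cost(x0, xb, b_var, states, obs, H, obs_var, images, S_obs, S_mod, img_var):
    """J = Jb + Jo + Jimg with diagonal covariances (illustrative only).

    states[k] : model state (ocean + image model) at time k, obtained by
                integrating the coupled model from the initial state x0
    obs[k], H : regular observations and their observation operator
    images[k] : observed image; S_obs and S_mod map observed images and model
                states into the common space of structures
    """
    jb = half_weighted_sq(x0 - xb, b_var)
    jo = sum(half_weighted_sq(y - H(x), obs_var) for x, y in zip(states, obs))
    jimg = sum(half_weighted_sq(S_obs(im) - S_mod(x), img_var)
               for x, im in zip(states, images))
    return jb + jo + jimg


# Toy state [u, c]: one velocity value and one tracer ("image") value
x0 = np.array([1.0, 2.0])
xb = np.array([0.0, 2.0])
states = [x0]  # a single time level for brevity
J = extended_cost(x0, xb, b_var=np.ones(2),
                  states=states, obs=[np.array([1.0])], H=lambda x: x[:1],
                  obs_var=np.array([0.5]),
                  images=[np.array([3.0])], S_obs=lambda im: im,
                  S_mod=lambda x: x[1:], img_var=np.array([1.0]))
print(J)  # 0.5 (background) + 0.0 (velocity obs) + 0.5 (image) = 1.0
```

Keeping all three terms in one function reflects the point above. The image term is never minimized on its own, so image-driven corrections are always balanced against the background and the regular observations.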

The minimization of this cost function can be carried out with the existing algorithms for the assimilation of regular ocean observations. We note, however, that methods such as three-dimensional variational assimilation (3DVAR) or sequential filters such as the ensemble Kalman filter (EnKF) should be avoided. They treat observations as if they were sampled at the analysis time. This freezes the time dimension of the observations and their underlying dynamics, which is precisely what the assimilation seeks to extract from the images. Methods such as four-dimensional variational assimilation (4DVAR) or the ensemble Kalman smoother (EnKS) are therefore better suited for image assimilation. In the EnKS, the time-dependent cross-correlation between the variables of the ocean and image models is inherent in the ensemble covariance. It allows corrections from the images to propagate to the ocean model variables and vice versa. In 4DVAR, the adjoint of the image model allows the image corrections to flow back to the adjoint of the ocean model through the adjoint velocity variables. The ocean corrections also flow to the image model through the forward coupling by the ocean velocity field.

The literature abounds with the formal derivations and algorithms related to the minimization of the cost function, especially with 4DVAR. Those are not repeated here. For detailed formulation of the 4DVAR algorithm for the minimization of the cost function we refer the readers to an excellent academic resource, Bennett (2002) and references therein. Li et al. (2017) also contains derivations using both the physical and the tracer concentration evolution models.

Assimilation of image sequences with 4DVAR requires the implementation of the image evolution model that is coupled with the ocean circulation model through the velocity field. The lateral evolution of the image is assumed to result from advection by the lateral velocity field. The adjoint of the image evolution model will also need to be developed, and both the forward and adjoint of the image evolution model will be integrated with the existing 4DVAR assimilation system. This will enable the propagation of information from the image evolution to all other model variables through the adjoint of the momentum equation.
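A one-dimensional sketch of an image evolution model, its tangent linear and its adjoint follows, with the standard dot-product test used to check such code. The assumptions are:

- a first-order upwind scheme on a periodic grid;
- a positive, steady velocity;
- \(\Delta t / \Delta x = 0.4\).

This is an illustration, not the NCOM-4DVAR implementation. The nonzero adjoint velocity shows how image misfits project onto the velocity, the pathway through which the adjoint momentum equation would then spread them to other variables.

```python
import numpy as np

LAM = 0.4  # dt / dx (assumed); with 0 < u <= 2.5 the scheme is stable


def step(u, c):
    """Forward image model: one first-order upwind step of
    dc/dt + u dc/dx = 0 on a periodic 1D grid, valid for u > 0."""
    return c - LAM * u * (c - np.roll(c, 1))


def step_tl(u, c, du, dc):
    """Tangent linear of `step` about (u, c), perturbing velocity and tracer."""
    return dc - LAM * u * (dc - np.roll(dc, 1)) - LAM * du * (c - np.roll(c, 1))


def step_adj(u, c, ac_out):
    """Adjoint of `step_tl`. With D = diag(LAM * u) and (S x)_i = x_{i-1},
    the TL is dc -> (I - D + D S) dc and du -> -LAM diag(c - S c) du."""
    w = LAM * u * ac_out
    ac_in = ac_out - w + np.roll(w, -1)       # (I - D + S^T D) applied to ac_out
    au = -LAM * (c - np.roll(c, 1)) * ac_out  # -LAM diag(c - S c) applied to ac_out
    return au, ac_in


def run(u, c0, nsteps):
    """Integrate the image model, storing the trajectory needed by the adjoint."""
    traj = [c0]
    for _ in range(nsteps):
        traj.append(step(u, traj[-1]))
    return traj


def run_tl(u, traj, du, dc0):
    """Propagate a perturbation (du, dc0) along the stored trajectory."""
    dc = dc0
    for c in traj[:-1]:
        dc = step_tl(u, c, du, dc)
    return dc


def run_adj(u, traj, ac_final):
    """Backward sweep: the steady velocity collects adjoint forcing at every step."""
    au_total = np.zeros_like(u)
    ac = ac_final
    for c in reversed(traj[:-1]):
        au, ac = step_adj(u, c, ac)
        au_total += au
    return au_total, ac


# Dot-product test: <TL(du, dc0), a> must equal <(du, dc0), ADJ(a)>
rng = np.random.default_rng(2)
n, nsteps = 50, 30
u = 0.5 + rng.random(n)  # positive, steady velocity (assumption)
traj = run(u, rng.random(n), nsteps)
du, dc0, a = rng.standard_normal(n), rng.standard_normal(n), rng.standard_normal(n)
lhs = float(np.dot(run_tl(u, traj, du, dc0), a))
au, ac0 = run_adj(u, traj, a)
rhs = float(np.dot(du, au) + np.dot(dc0, ac0))
print(np.isclose(lhs, rhs))  # True
```

Passing the dot-product test to rounding precision is the usual check before coupling a new adjoint component to an existing 4DVAR system.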

The 4DVAR data assimilation system of Ngodock and Carrier (2014) is based on the tangent linear and adjoint models of the Navy Coastal Ocean Model (NCOM; Martin 2000). As a numerical model, NCOM already has components for the evolution of tracer fields such as temperature and salinity. Including an additional tracer field to simulate the image evolution is thus straightforward in the model dynamics, as well as in the tangent linear and adjoint models. In this way, the NCOM-4DVAR system can be extended with a tracer evolution component used to assimilate ocean images directly, for the purpose of correcting the ocean circulation. The same extension can be made to a 4DVAR system at any research center.

The assimilation of image sequences results, in particular, in an update of the velocity at high resolution over a large area. The resulting velocity field can be validated against independent observations of surface currents. This is especially feasible in coastal areas, where such observations are available from high frequency (HF) radars. And because velocity is coupled to the other model variables through advection, the velocity update also contributes to updating the other model variables. This results from an implicit cross-correlation between the image model and the dynamical model variables, which in 4DVAR is provided by the dynamics of the tangent linear and adjoint models. An open question is whether this dynamical cross-correlation is sufficient to propagate information from the images to all other model variables. Alternatively, additional constraints or regularization terms may be necessary to ensure that image assimilation provides dynamically consistent corrections of model variables other than velocity.

5 Conclusion

This paper discussed the assimilation of images, particularly in the context of 4DVAR. 4DVAR is better suited for image assimilation because it accounts for the model dynamics and the timing of the observations. Images can be assimilated directly or indirectly. In either case, the dynamical model needs to be extended to include an image evolution component. The study is general enough to be applied to many fields besides oceanography. We emphasized the ocean because it is poorly observed and can thus greatly benefit from the assimilation of images arising from the plethora of Earth-observing satellites. Image assimilation should be an integral part of the future of operational oceanography. In situ observational campaigns are costly and usually very limited in space and time, while satellites with visible bands are increasing in number and coverage. These satellites provide images at very high temporal frequency, especially in regions where in situ instruments cannot be deployed. Although images are treated as two-dimensional fields of the ocean surface, their assimilation within a three-dimensional ocean model yields corrections to other ocean model variables. These corrections arise through the coupling provided by the model velocity field. Implementing image assimilation can be straightforward for research and operational centers that already have a 4DVAR data assimilation system.

References

Adrian RJ (1991) Particle imaging techniques for experimental fluid mechanics. Ann Rev Fluid Mech 23:261–304

Bennett AF (2002) Inverse modeling of the ocean and atmosphere. Cambridge University Press

Candès E, Donoho D (2004) New tight frames of curvelets and optimal representations of objects with piecewise C2 singularities. Comm Pure Appl Math 57:219–266

Candès E, Donoho D (2005a) Continuous curvelet transform I. Resolution of the wavefront set. Appl Comput Harmon Anal 19(3):162–197

Candès E, Donoho D (2005b) Continuous curvelet transform II. Discretization and frames. Appl Comput Harmon Anal 19(3):198–222

Candès E, Demanet L, Donoho D, Ying L (2006) Fast discrete curvelet transforms. Multiscale Model Simul 5(3):861–899

Carrier MJ, Ngodock HE, Muscarella PA, Smith SR (2016) Impact of assimilating surface velocity observations on the model sea surface height using the NCOM-4DVAR. Mon Wea Rev 144(3):1051–1068. https://doi.org/10.1175/MWR-D-14-00285.1

Cohen A (1992) Ondelettes et traitement numérique du signal. Masson

Coifman RR (1990) Wavelet analysis and signal processing, signal processing, Part I: Signal processing theory. Springer, pp 59–68

Fan S, Oey LY, Hamilton P (2004) Assimilation of drifter and satellite data in a model of the Northeastern Gulf of Mexico. Cont Shelf Res 24(9):1001–1013

Farge M (1992) Wavelet transforms and their applications to turbulence. Annu Rev Fluid Mech 24:395–457

Haller G (2001) Distinguished material surfaces and coherent structures in three-dimensional fluid flows. Physica D 149:248–277

Haller G (2015) Lagrangian coherent structures. Annu Rev Fluid Mech 47(1):137–162

Herlin I, Huot E, Berroir JP, Le Dimet FX, Korotaev G (2006) Estimation of a motion field on satellite images from a simplified ocean circulation model. ICIP 2006:1077–1080

Jolliff JK, Lewis MD, Ladner S, Crout RL (2019) Observing the ocean submesoscale with enhanced-color GOES-ABI visible band data. Sensors 19:3900

Le Dimet FX, Souopgui I, Titaud O, Shutyaev V, Hussaini MY (2015) Toward the assimilation of images. Nonlinear Process Geophys 22(1):15–32. https://doi.org/10.5194/npg-22-15-2015

Li L, Le Dimet FX, Ma J, Vidard A (2017) A level-set based image assimilation method: Potential applications for predicting the movement of oil spills. IEEE Trans Geosci Remote Sens 55(11):6330–6343. https://doi.org/10.1109/TGRS.2017.2726013

Mallat SG (1989) A theory for multiresolution signal decomposition: The wavelet representation. IEEE Trans Pattern Anal Mach Intell 11(7):674–693

Mariano AJ, Griffa A, Ozgokmen TM, Zambianchi E (2002) Lagrangian analysis and predictability of coastal and ocean dynamics 2000. J Atmos Oceanic Technol 19(7):1114–1126

Martin P (2000) Description of the navy coastal ocean model version 1.0. NRL report NRL/FR/7322—00-9961

Muscarella PA, Carrier MJ, Ngodock HE, Smith SR (2015) Do assimilated drifter velocities improve Lagrangian predictability in an operational ocean model? Mon Wea Rev 143:1822–1832. https://doi.org/10.1175/MWR-D-14-00164.1

Ngodock HE, Carrier MJ (2014) A 4DVAR system for the navy coastal ocean model. Part I: system description and assimilation of synthetic observations in Monterey Bay. Mon Wea Rev 142(6):2085–2107. https://doi.org/10.1175/MWR-D-13-00221.1

Ngodock HE, Muscarella PA, Souopgui I, Smith SR (2015) Assimilation of HF radar observations in the Chesapeake-Delaware Bay region using the Navy coastal ocean model (NCOM) and the four-dimensional variational (4DVAR) method. In Liu Y, Kerkering H, Weisberg RH (eds) Coastal ocean observing systems. Elsevier, pp 373–391. https://doi.org/10.1016/B978-0-12-802022-7.00020-1

Nilsson JAU, Dobricic S, Pinardi N, Poulain PM, Pettenuzzo D (2012) Variational assimilation of Lagrangian trajectories in the mediterranean ocean forecasting system. Ocean Sci 8(2):249–259

Ren L, Speer K, Chassignet EP (2011) The mixed layer salinity budget and sea ice in the Southern Ocean. J Geophys Res 116:C08031. https://doi.org/10.1029/2010JC006634

Schmetz J, Holmlund K, Hoffman J, Strauss B, Mason B, Gaertner V, Koch A, Van De Berg L (1993) Operational cloud-motion winds from Meteosat infrared images. J Appl Meteor 32(7):1206–1225. https://doi.org/10.1175/1520-0450(1993)032<1206:OCMWFM>2.0.CO;2

Souopgui I (2010) Assimilation d'images pour les fluides géophysiques. PhD thesis

Taillandier V, Griffa A, Molcard A (2006) A variational approach for the reconstruction of regional scale Eulerian velocity fields from Lagrangian data. Ocean Model 13(1):1–24

Titaud O, Vidard A, Souopgui I, Le Dimet FX (2010) Assimilation of image sequences in numerical models. Tellus A: Dyn Meteorol Ocean 62(1):30–47. https://doi.org/10.1111/j.1600-0870.2009.00416.x

Titaud O, Brankart JM, Verron J (2011) On the use of finite-time Lyapunov exponents and vectors for direct assimilation of tracer images into ocean models. Tellus A: Dyn Meteorol Ocean 63(5):1038–1051. https://doi.org/10.1111/j.1600-0870.2011.00533.x

Yang H, Arnone R, Jolliff J (2015) Estimating advective near-surface currents from ocean color satellite images. Remote Sens Environ 158:1–14

Acknowledgements

This work was sponsored in part by the Office of Naval Research Program Element 62435N as part of the “Local Analysis Through Tactical Ensonification” project. This paper is NRL paper contribution number NRL/JA/7320-20-xxxx.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Le Dimet, FX., Ngodock, H.E., Souopgui, I. (2022). Images Assimilation: An Ocean Perspective. In: Park, S.K., Xu, L. (eds) Data Assimilation for Atmospheric, Oceanic and Hydrologic Applications (Vol. IV). Springer, Cham. https://doi.org/10.1007/978-3-030-77722-7_15

DOI: https://doi.org/10.1007/978-3-030-77722-7_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-77721-0

Online ISBN: 978-3-030-77722-7

eBook Packages: Earth and Environmental Science (R0)