Abstract

Traditional approaches to cyber-security resilience, assuring the overall socio-technical system is secure from immediate known attacks and routes to potential future attacks, have relied on three pillars of people, process, and technology.

In any complex socio-technical system, human behaviour can disrupt the secure and efficient running of the system with risk accumulating through individual and system-wide errors and compromised security behaviours that may be exploited by actors with malicious intent.

Practitioners’ experience and use of different assessment methods and approaches to establish cyber-security vulnerabilities and risk are evaluated. Qualitative and quantitative methods and data are used for different stages of investigations in order to derive risk assessments and access contextual experience for further analyses. Organisational security culture and development approaches along with safety assessment methods are discussed in this case study to understand how well the people, the system, and the organisation interact.

Cyber-security Human Factors practice draws on other application areas such as safety, usability, behaviours and culture to progressively assess security posture; the benefits of each approach are discussed.

This study identifies the most effective methods for vulnerability identification and risk assessment, with focus on modelling large, dynamic and complex socio-technical systems, to be those which identify cultural factors with impact on human-system interactions.

Keywords

- Human factors

- Cyber-security

- Behavioural science

- Organisational culture

- Security culture

- Cyber-resilience

- Socio-technical

- Safety assessment

- Climate

- Behavioral

- Organizational culture

- Socio-behavioural

- Sociotechnical

1 Introduction

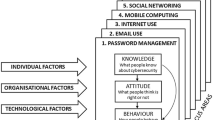

Traditional approaches to cyber-security resilience, that is assuring the overall socio-technical system is secure from immediate known attacks and routes to potential future attacks, have relied on three pillars of people, process, and technology. Historically greater emphasis has been placed on technology solutions with reduced attention placed on the human element; now human behaviours, culture and organisational human factors are considered in every cyber-resilience improvement programme.

There are multiple different understandings of ‘human factors’ (HF). The International Ergonomics Association (IEA) defines human factors as ‘…the scientific discipline of interactions among humans and other elements of a system, and the profession that applies theory, principles, data and methods to design in order to optimize human well-being and overall system performance’ [1]. Part of understanding these human-system interactions involves understanding the drivers for behaviours, e.g. capability, opportunity and motivation. It also involves ensuring the design supports the needs of the user, identifying where the user will find the system complex to interact with, ensuring the design minimises the likelihood of human error, and ensuring the design maximises the opportunities for error tolerance.

In any complex socio-technical system, the significant risk of certain “human factors” disrupting the secure and efficient running of the system is now widely recognised. The accumulated effect of individual and system-wide human errors and compromised security behaviours leads to vulnerabilities that may be exploited by threat actors, or attackers, with malicious intent. Identifying those vulnerabilities, assessing the risk arising from them, and evidencing the argument for making improvements are critical to developing cyber-resilience.

Within safety, James Reason’s (1997) [2] ‘Swiss Cheese’ model of accident causation is used in risk analysis and risk management, where the risk of a hazard causing harm in the system is reduced as layers of defence are added. For straightforward security compromises, where layers of defence prevent the risk from developing, this model still applies to security, as demonstrated in Fig. 1.
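The layered-defence reasoning can be illustrated with a minimal numerical sketch. It assumes, purely for illustration, that each layer fails independently with a known probability; the probabilities below are hypothetical and are not taken from the case study. A deliberate attacker can invalidate the independence assumption, so the sketch applies only to circumstantial failure.

```python
from math import prod


def breach_probability(layer_failure_probs):
    """Probability that a hazard passes through every layer of defence.

    Assumes the layers fail independently, which is a simplification.
    """
    return prod(layer_failure_probs)


# Hypothetical per-layer failure probabilities (illustrative values only).
one_layer = breach_probability([0.1])
three_layers = breach_probability([0.1, 0.2, 0.05])

print(f"one layer:    {one_layer:.4f}")
print(f"three layers: {three_layers:.4f}")
```

Under these assumptions each added layer multiplies the residual probability by a factor below one, which is the sense in which risk reduces as layers are added.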

However, in cyber-security a threat actor seeks to attack targeted systems and can manipulate these layers to exploit any vulnerabilities within the wider socio-technical system to create an attack path. Furthermore, rather than a linear path of circumstantial failure, threat actors can actively weave their way through defences to engineer a system failure as demonstrated in Fig. 2.

The Murphy’s law adage stresses that ‘what can go wrong will go wrong’, with acknowledgement that it is often considered in reality as a case of ‘what can go wrong might go wrong at some point’. However, in cyber-security assurance it is necessary to go beyond Murphy’s law as ‘what can go wrong will be actively sought out and manipulated to make it go wrong’.

When considering ‘the human’ and human behaviour in a large, complex socio-technical system, a distinction should be made between the different roles adopted by humans as ‘end-users’, attackers, defenders and bystanders. While the threats posed by individual threat actors, with the intention of actively creating and exploiting vulnerabilities, are well-documented, they can be emphasised at the expense of potential vulnerabilities posed by humans in the system carrying out their everyday work tasks.

To understand the human-centred activities, a key element of the investigation focused on the day-to-day tasks of all humans in the organisation as they interact with the system. Drawing on both goal-orientated and social interactions enables the identification of vulnerabilities which can be exploited by threat actors, and the recommendation of human-centred mitigations to increase security.

From working within high-hazard domains, HF have a pedigree in understanding and identifying the potential vulnerabilities within a system, in sharing knowledge across domains, and transferring best practices to create a more rigorous cyber-security process.

Comparisons have been made across both safety and cyber-security, drawing on HF practitioner experience. On the surface, safety hazards and cyber-attack paths look very different and the processes used to identify the underlying vulnerabilities within the system are also different. A cyber threat is often perceived as an adversary deliberately targeting a system; however, that is not the only way to assess vulnerabilities in a cyber-security context. As stated by Dekker [4] “…people in safety critical jobs are generally motivated to stay alive, to keep their passengers, their patients, their customers alive. They do not go out of their way to deliver overdoses; to fly into mountainsides…”. The majority of people want to do a good job in both a cyber-security and a safety context. One of the most striking similarities between the two domains is the requirement to understand the human element of risk and what drives risky behaviours. Most people do not intend to undertake risky security behaviour, but multiple factors can influence their actions, such as time pressure, outdated system or task design, or deliberate manipulation through social engineering, all of which can result in workarounds, lapses and behaviour that leads to people unintentionally compromising security.

As HF practitioners, assessing the human cyber risks within a system requires a shift in focus from the malicious outsider threat, to incorporating a wider focus to include social engineering, organisational culture and system design that can create the opportunity for attack paths.

In the socio-technical system of interest it is the vulnerabilities that are sought out. In terms of cyber-security, a vulnerability is an element of the system which has the potential to be exploited as part of an attack path, and is assessed with an associated risk of compromise.

1.1 Case Study

This paper introduces the case study activities experienced by the authors and their organisation with a number of clients in different sectors. It explores the overlaps and differences from across domains and discusses how this knowledge can be applied to a risk investigation to identify the widest set of human-based and system vulnerabilities. The method and processes described draw upon existing best practices in technical, cyber-security and behavioural science safety approaches into one homogenised methodology.

Cyber-security investigations have been conducted into a variety of systems, ranging from single technology applications, for example an app on a mobile phone, to multiple inter-related systems, with interactions from multiple parties, based in different locations. For the purpose of this paper, an example transactional system ‘System 1’ is accessed and interacted with from two office sites, and the investigation scope is identified accordingly, as shown in Fig. 3.

People and processes are intrinsically interwoven with technology throughout its design, manufacture, installation, use and maintenance, and ultimately disposal. Cyber-security risk is therefore assessed for each of these stages, and for all interactions, within the socio-technical system in order to identify vulnerabilities which could enable an adversary to gain access to the information contained within. In the example above, authorised access to System 1’s transactions by users in office sites A and Z would be examined, along with the cases where System 1 users required approval by senior users, or needed technical support in order to complete a transaction. In addition, unauthorized use of System 1’s connection between office sites, would also be a plausible vulnerability line of enquiry. Note that other systems and interactions in both office sites are beyond the scope boundary of this investigation.

2 Recognised HF Approaches

Human Factors practitioners adopt a human-centred approach to work across different industries and with multi-disciplinary teams. This wide range of work allows us valuable access to a range of tools and techniques. The following sections draw upon and pull together experience of utilising HF processes within the technology domain, the safety domain, behavioural science and cultural assessment, and of following a human-centred assessment approach. Collating best practice from each of these domains has allowed the creation of a bespoke approach for cyber-security HF investigations to date, combining the best techniques from across multiple domains in high-hazard industries.

2.1 HF Adoption of Formal Cyber-Security Methods

When investigating an organisation’s security defences against a potential cyber-security attack, it is important that the system is considered in its entirety and that potential vulnerabilities are assessed from the mindset of an attacker. This section outlines the existing methods and practices drawn on by HF and cyber-security domain experts for investigating and identifying cyber-security vulnerabilities.

The aim of the reviews, investigations and assessments is to develop an accurate, ‘real world’ view of the socio-technical system. These are required to produce a detailed analysis of the vulnerabilities which may lie within. The focal point of an investigation could be narrow, such as a piece of technical equipment, or broad, such as an establishment or group of people.

A typical security project is divided into phases namely: Familiarisation & Modelling, Investigation, Analysis and Risk Assessment. These phases are demonstrated in Fig. 4 and outlined below.

Familiarisation and Modelling

The aim of the initial phases of the investigation is to gain a primary understanding of the socio-technical system under investigation by gathering existing technical and process material, and engaging stakeholders. It is important to confirm the scope of the process and the boundaries of the socio-technical system that will be explored, and ultimately analysed.

Modelling provides a central focal point for all information found to date and reflects the initial high-level analysis of the critical components within the system. This allows the team to discuss the high-level impacts identified for those components.

Investigation

Investigation of a socio-technical system is planned and conducted in order to progressively discover and identify likely areas of potential vulnerability. After initial identification, further qualification through deep-dive exploration of all candidate areas reveals the extent of the vulnerabilities that may exist. Data is collected through a range of quantitative and qualitative methods and analysed to evaluate technical security assurance, along with security and organisational culture. As the data accumulates, a developed picture emerges of the initial assessment outcomes and the potential impacts of vulnerabilities on the system as a whole.

Analysis and Risk Assessment

During the ‘Analysis’ phase the accumulated data is analysed from the perspective of an adversary in order to establish how the vulnerabilities could be exploited and manipulated into potential attack paths to infiltrate the system. Following this, risks are quantified during the ‘Risk Assessment’ phase according to their likelihood of occurrence and the impact on the organisation. Again this overlaps with, and leads directly into, the next phase where risk mitigation strategies are formulated and proposed.
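One way to picture how individual vulnerabilities chain into attack paths is to treat the socio-technical model as a directed graph and enumerate routes from an adversary to the target. The following is a minimal sketch only: the node names, the edges and the `attack_paths` helper are hypothetical illustrations loosely based on the ‘System 1’ example, not the model used in the investigations.

```python
from typing import Dict, List, Optional

# Hypothetical socio-technical model. An edge means "a vulnerability lets an
# adversary move from this element to that one". All names are illustrative.
MODEL: Dict[str, List[str]] = {
    "outsider": ["site_A_user", "site_Z_user"],
    "site_A_user": ["senior_approver", "system_1"],
    "site_Z_user": ["tech_support"],
    "senior_approver": ["system_1"],
    "tech_support": ["system_1"],
    "system_1": [],
}


def attack_paths(model: Dict[str, List[str]], start: str, target: str,
                 path: Optional[List[str]] = None) -> List[List[str]]:
    """Enumerate every loop-free route from start to target (depth-first)."""
    path = (path or []) + [start]
    if start == target:
        return [path]
    found: List[List[str]] = []
    for nxt in model.get(start, []):
        if nxt not in path:  # do not revisit an element already on this route
            found.extend(attack_paths(model, nxt, target, path))
    return found


paths = attack_paths(MODEL, "outsider", "system_1")
for p in paths:
    print(" -> ".join(p))
```

Each printed route is a candidate attack path whose constituent vulnerabilities can then be carried forward for risk assessment.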

2.2 HF Practitioner Experience

In undertaking HF activities on complex systems, HF practitioners are experienced in the application of structured, rigorous methodologies, and providing strong substantiation arguments in support of safety and security cases which are presented to regulators. This experience afforded the opportunity to select, learn and create best practice in translating methods, and rigour to new domains such as cyber-security assurance. The following outlines some of our learning:

- System scope – the importance of bounding the system that is being assessed.

- Work-as-imagined and work-as-done – the identification of the differences which can appear between work-as-imagined and work-as-done.

- Risk and resilience – the likelihood that some form of unintended outcome will have an impact on the organisation.

System Scope

It is important to ensure the scope of the system is fully bounded and that the practitioner assessing the system fully understands how it is used, by developing a task analysis to identify human interaction with the system under investigation. For example, a technical system may have limited human interactions compared with a site which may have multiple digital systems within scope. From experience gained in the cyber-security domain, a system of systems approach has been adopted, looking at the individual technological system and the context in which it operates. The system of systems approach proposed here incorporates the features of a typical HF system of systems approach but goes beyond it in order to evaluate the scope of the accumulated risk and attack paths. Therefore, to undertake a successful cyber investigation the scope of the system should be clearly defined and the human interactions within the system understood and documented.

Work Done vs Work Imagined

From investigating human error, a significant part of the HF input is to review, understand and analyse how work is intended to be carried out (‘work-as-imagined’) compared with how work is conducted in reality (‘work-as-done’) [3]. This approach involves utilising task analyses (hierarchical and tabular task analysis (HTA, TTA), for example), behavioural and system modelling, and engagement with end-users through interviews and focus groups. In addition, observations are carried out to review ‘work-as-done’, including any workarounds to cyber-security procedures that may pose a greater risk. Evaluating the differences and variation between work-as-done and work-as-imagined will help the client to understand the interaction of many factors in the overall system, such as organisational pressure, poorly written or out-of-date procedures, and inadequate training, to name a few. As will be discussed later, the gaps between ‘work-as-done’ and ‘work-as-imagined’ are a good indicator of where system weaknesses or potential vulnerabilities may lie that could be exploited by an adversary.

Risk and Resilience

Definitions of risk in cyber-security vary; for the purposes of this case study, it can be conceived as a form of unintended outcome that has the ability to impact the mission, whether in a commercial or defence environment. These impacts are loss of: finance, reputation, operational capability and, in some contexts, loss of life.

The risks of cyber-security attack paths and vulnerabilities being exploited are assessed against a standard risk matrix that combines the impact of the unintended outcomes with their likelihood. The level of risk, however, varies for each case and, for maximum effectiveness, is aligned with the risk appetite of the organisation. Some may have a conservative, low security risk appetite whereas others may be more risk tolerant in a security context. The risk-benefit analysis (RBA) is therefore unique for each organisation and system.
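The effect of risk appetite on the same matrix score can be sketched as follows. The five-point scales, the multiplicative score and the threshold values are assumptions made for illustration; the case study does not specify the matrix used.

```python
# Hypothetical five-point scales (assumed for illustration only).
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost_certain": 5}
IMPACT = {"negligible": 1, "minor": 2, "moderate": 3, "major": 4, "severe": 5}


def risk_score(likelihood: str, impact: str) -> int:
    """Score a risk as likelihood x impact on the assumed 5 x 5 matrix."""
    return LIKELIHOOD[likelihood] * IMPACT[impact]


def exceeds_appetite(likelihood: str, impact: str, appetite_threshold: int) -> bool:
    """True when the score is above what the organisation is willing to accept."""
    return risk_score(likelihood, impact) > appetite_threshold


# The same risk viewed by two organisations with different (hypothetical) appetites.
score = risk_score("possible", "major")
conservative = exceeds_appetite("possible", "major", appetite_threshold=8)
tolerant = exceeds_appetite("possible", "major", appetite_threshold=15)

print(score, conservative, tolerant)
```

The identical vulnerability is flagged for the conservative organisation and accepted by the more tolerant one, which is why the resulting analysis differs per organisation.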

Furthermore, there may be a set of risks associated with system ‘A’, for instance a mobile phone, which may be deemed to be acceptable, and another set of risks associated with system ‘B’, for instance a server room, which are also deemed to be acceptable. However, when the mobile phone is in the server room the accumulated risks of the larger, combined system will be different, and may become unacceptable. In this context a system of systems approach can be recognised, and the importance of clearly defining the boundaries of the targeted system and the scope of the investigation at the outset is highlighted.
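The mobile phone and server room example can be sketched as a small risk register in which some risks exist only when two systems share a context. All risk names, scores and the acceptance threshold are hypothetical, and the structure is an illustrative assumption rather than the authors' tooling.

```python
from itertools import combinations

# Hypothetical stand-alone risk scores for each system.
STANDALONE = {
    "mobile_phone": {"lost device": 4, "unpatched app": 6},
    "server_room": {"tailgating": 6, "unlabelled media": 3},
}

# Hypothetical risks that only arise when two systems are combined.
INTERACTION = {
    frozenset({"mobile_phone", "server_room"}): {"camera or hotspot near servers": 15},
}


def combined_risks(systems):
    """Collect stand-alone risks plus any risks created by pairing systems."""
    risks = {}
    for system in systems:
        for name, score in STANDALONE[system].items():
            risks[f"{system}: {name}"] = score
    for a, b in combinations(sorted(systems), 2):
        for name, score in INTERACTION.get(frozenset({a, b}), {}).items():
            risks[f"{a}+{b}: {name}"] = score
    return risks


def unacceptable(risks, threshold):
    """Keep only the risks scoring above the acceptance threshold."""
    return {name: score for name, score in risks.items() if score > threshold}


THRESHOLD = 8  # assumed acceptance threshold
phone_only = unacceptable(combined_risks(["mobile_phone"]), THRESHOLD)
room_only = unacceptable(combined_risks(["server_room"]), THRESHOLD)
together = unacceptable(combined_risks(["mobile_phone", "server_room"]), THRESHOLD)

print(phone_only, room_only, together)
```

Neither system breaches the threshold alone, but the combined scope does, which illustrates why the boundary chosen at the outset changes the assessed risk.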

2.3 Culture

In order to understand how well the people and systems in an organisation interact, it is increasingly important to recognize and assess how the organisational and security environment affects work-as-done and work-as-imagined. Organisational cultures where blame is high for security breaches, or those where operational focus is consistently prioritized over security issues, could be exploited as potential vulnerabilities.

By collecting data on both the security culture and organisational culture throughout the investigation process and timeframe, it is possible to standardize some responses as a basis for assessing risk, and to identify anomalous areas for further in-depth interviews. This is achieved through incorporating questions and commentary into all interviews, surveys, observed group discussions and tasks, and making use of security culture questionnaires and pulse surveys for climate. In addition, organisational change and development methods can be utilized to improve the security implications of cultural factors. Furthermore, readiness or baseline assessments of the impact of cultural factors can then be incorporated into an overall strategy for cultural change development.

Expected human-computer interactions, flows of information and security decision-making points can be identified on the socio-technical systems model, even where complex systems are in use. Identifying cultural factors that alter or interrupt those interactions, information or decisions across the breadth of the target system yielded effective vulnerability identification and risk assessment. These models and factors are then overlaid with complementary security data using robust assessment frameworks developed for the organisation.

Qualitative and quantitative methods and data were used for different stages of the investigation in order to derive risk assessments and access contextual experience for further analyses. For example, exposure to the risk of social engineering was assessed using ‘direct’ questions, whereas expectations of blame for an incident was asked ‘indirectly’ with opportunities to comment.

The primary focus of the approach is to assess the impact of cultural factors on cyber-security risk. Potential vulnerabilities can be exploited in a direct attack on an identified cultural weakness, or by engineering the situation to take advantage of cultural factors.

In activities that focus on modelling large, dynamic and complex socio-technical systems, identifying cultural factors that affect human interactions across the breadth of the system was most effective for vulnerability identification and risk assessment.

2.4 Safety Assurance Applied to Security

Safety assurance is a formal and systematic process which aims to demonstrate that an organisation, functional system, plant or process is tolerably safe. Safety assurance can result in risks being effectively managed and lead to improved system performance. HF forms an integral part of the safety assurance process, with HF specialists working alongside safety specialists to help ensure the ‘human’ element of risk is identified and effectively managed throughout.

HF are integrated into safety assurance in multiple industries such as aviation, nuclear, defence and rail. Some of the worst major accidents have highlighted the complex role of the ‘human’ within wider socio-technical systems. These accidents have helped to demonstrate how system failures and human failures can align to result in disaster (for example, Piper Alpha, Chernobyl and Herald of Free Enterprise). Having HF effectively integrated within the safety assurance process can help to identify human failure within the complex socio-technical system, where the human is an integral part, and to develop effective mitigations that minimise the likelihood of human error occurring.

Providing HF safety assurance within a complex socio-technical system is detailed, and a proportionate approach must be adopted depending on the level of ‘risk’ involved. A typical approach to HF safety assurance is presented in Fig. 5. This approach is systematic, detailed and can be time consuming. Focusing HF efforts on the areas of highest risk, such as safety-critical, safety-related or complex tasks, ensures efforts are proportionate to the risk. Once the overall set of these tasks is identified, task analysis is conducted on each to analyse the task detail undertaken by operators and maintainers. Error analysis is conducted to identify credible human error. The extent to which human errors are quantified with the derivation of human error probabilities (HEPs) depends on the requirements from the safety case. Regardless of whether it is done qualitatively or quantitatively, HF practitioners indicate the likelihood of error occurrence and identify the performance shaping factors (PSFs) that will make that error more or less likely to occur.
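Where errors are quantified, the relationship between a nominal HEP and its PSFs can be sketched with a simple multiplicative adjustment. This scheme is an assumption chosen for illustration, not the method used in the case study, and the nominal value and multipliers below are hypothetical.

```python
from math import prod


def adjusted_hep(nominal_hep: float, psf_multipliers: dict) -> float:
    """Scale a nominal human error probability by the product of PSF multipliers.

    Multipliers above 1 make the error more likely, below 1 less likely.
    The result is capped at 1.0 so that it remains a valid probability.
    """
    return min(1.0, nominal_hep * prod(psf_multipliers.values()))


# Hypothetical PSF multipliers for one task (illustrative values only).
psfs = {
    "time_pressure": 5.0,     # degrades performance
    "good_procedures": 0.5,   # improves performance
    "poor_interface": 2.0,    # degrades performance
}

hep = adjusted_hep(0.01, psfs)
print(f"adjusted HEP: {hep:.3f}")
```

Even without numerical claims, listing the PSFs in this form makes explicit which factors are judged to raise or lower the likelihood of a given error.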

A key part of the process is to identify opportunities to mitigate the human error. The ‘as low as reasonably practicable’ (ALARP) approach is adopted within HF safety assurance. Therefore, whilst eliminating human error is the preference (based on ERICPD), several factors are considered, including the cost of the proposed change and the consequence and likelihood of the error occurring. One option, which is used particularly within operational plants where engineered changes are more costly, is to derive procedural controls such as human-based safety claims (HBSCs) to protect against system and human error. Procedural controls rely on operators or maintainers to form a key layer of defence against an unintended consequence (see Fig. 1, which highlights layers of defence against an unintended consequence). Therefore, any HBSCs made will need to be qualitatively substantiated to ensure the necessary arrangements are in place to demonstrate that the HBSCs form a reliable layer of defence against an unintended consequence.

This HF assurance approach utilizes a number of HF tools and techniques, including hierarchical and tabular task analysis (HTA, TTA), error analysis, walk-throughs and talk-throughs with operators and maintainers, desk-top reviews of documentation such as operating procedures, derivation of HEPs and qualitative substantiation of HBSCs. These HF tools and techniques are not unique to HF safety assurance and can be utilised across any domain to support HF assessment work. In addition, whilst the traditional approach to HF safety assurance has been presented here, it can be adapted to suit a range of different domains such as cyber-security. This ensures that HF are integrated, that the ‘human’ remains a key focus in identifying human failures or vulnerabilities, and that any potential defence and solution can be delivered consistently and reliably.

3 Methods

From the previous sections it is apparent that there are multiple HF qualitative and quantitative methods available for HF practitioners to use when undertaking a cyber-security review. The following section outlines the process utilised by HF for cyber-security investigations drawing upon the knowledge and techniques applied across wider domains. It should be recognized the process defined below is iterative throughout, with further investigation or analysis being conducted as required, until all parties are satisfied that the socio-technical system has been analysed in full (Fig. 6).

Familiarisation

Assurances of stakeholder and user confidentiality are made during initial contact in this stage. As outlined in Sect. 2.1 the output of the familiarization stage summarises the ‘what and why’ of the existing socio-technical system as well as any vulnerabilities that immediately emerge.

The methods that are used include literature reviews and internal briefings, as well as reviews of security processes, procedures and documentation regarding the existing systems. The reviews include training records, cyber-security training, organisational charts and technical processes in order to establish the ‘work-as-imagined’ and facilitate the development of investigation strategies unique to that system.

Modelling

Human-system interactions are identified to develop the initial socio-technical model, which acts as a baseline for the investigation and is updated as the process evolves. The methods used include task analysis, system modelling and behaviour modelling.

Investigation

All interactions are examined using a number of methods including quantitative questionnaires and surveys along with qualitative interviews, discussions and observations of behaviours, work environment and system use which could include assessments of usability and human computer interaction (HCI).

After the initial engagement, thematic analysis is conducted in order to prepare a focused question set developed to encapsulate themes enabling further exploration in subsequent engagements. The data produced is used to build up a picture of how the socio-technical system actually operates and where potential vulnerabilities may lie.

Analysis

Wider practitioner experience is employed to create a full understanding of the data set by applying theory, skills, knowledge and expertise along with external application of guidance, standards and recommended good practice. Utilising a number of human factors methods enables a comprehensive overview of system vulnerabilities to be captured.

Methods include quantitative and qualitative analysis of interviews as well as gap analysis between identified ‘work-as-imagined’ and ‘work-as-done’ processes. The emergent gaps indicate where vulnerabilities may lie, such as security shortcuts and workarounds, as processes are not carried out as intended. Workshops may be held with users and stakeholders to confirm the accuracy of the models.
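A gap analysis between ‘work-as-imagined’ and ‘work-as-done’ can be sketched by comparing the documented task steps with the observed ones. The example uses Python's standard `difflib.SequenceMatcher`; the task steps and the `procedure_gaps` helper are hypothetical, and real task analyses would be richer than flat step lists.

```python
from difflib import SequenceMatcher

# Hypothetical procedure as documented (work-as-imagined).
work_as_imagined = [
    "lock screen when leaving desk",
    "log in with own account",
    "request senior approval",
    "complete transaction",
    "log out",
]

# Hypothetical procedure as observed (work-as-done).
work_as_done = [
    "log in with shared account",
    "complete transaction",
    "request senior approval",
    "log out",
]


def procedure_gaps(imagined, done):
    """Return the non-matching spans between the two step sequences.

    Each gap is (kind, imagined_steps, done_steps), where kind is one of
    'replace', 'delete' or 'insert' as reported by SequenceMatcher.
    """
    matcher = SequenceMatcher(a=imagined, b=done, autojunk=False)
    return [
        (tag, imagined[i1:i2], done[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


gaps = procedure_gaps(work_as_imagined, work_as_done)
for kind, expected, observed in gaps:
    print(f"{kind}: expected {expected}, observed {observed}")
```

Each reported gap is a prompt for further enquiry (for example, why a shared account is used) rather than a finding in its own right.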

Risk Assessment

Based on the findings from accumulated investigation data, the overall risk matrix is evaluated for the impact and likelihood of human-system vulnerabilities leading to attack paths. Methods include a human risk assessment matrix, with risks assessed individually and as part of the wider system. The resulting matrix is then validated across the team for inter-rater consistency and socio-technical risk mitigations.
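Inter-rater consistency can be checked numerically. The study does not name a statistic, so the sketch below assumes Cohen's kappa for two raters as one common choice; the ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the raters actually agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's category frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical risk ratings from two team members for the same six vulnerabilities.
rater_1 = ["high", "high", "medium", "low", "medium", "low"]
rater_2 = ["high", "medium", "medium", "low", "medium", "low"]

kappa = cohens_kappa(rater_1, rater_2)
print(f"kappa = {kappa:.2f}")
```

Items on which raters disagree can then be discussed by the team before the matrix is finalised.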

4 Discussion

The following section discusses the findings from utilising the defined process to identify vulnerabilities within a defined system. This section has been broken down into the following subsections.

The Different Humans in the System

In large, complex cyber-security socio-technical environments, it is important to consider the full range of different human roles and tasks, rather than homing in on one group. Behaviours vary for different humans in the system depending on whether they are attackers, defenders, or end-users of systems with either specific tasks or occasional use. By expanding the perspective beyond the traditional focus of preventing the harm caused by threat actors alone, security and day-to-day work behaviours can be placed at the centre of a resilient cyber-security system.

Beyond the ‘Technology-only’ Approach

By adopting a broad HF-led human-centred approach, vulnerabilities and risks can be identified earlier than a technology-first approach would yield. Even in the most technologically complex environments, there are always some human task-related interactions that contribute to vulnerabilities.

Earlier Engagement, Wider System Scope

In early practitioner activities, HF were invited, after the initial project engagement, to review problems that were deemed beyond the bounds of the technical system. This resulted in the need to ask questions of the wider socio-technical system retrospectively, in order to identify the causes of vulnerabilities rooted in social and cultural issues. Wider understanding of the impact of human factors on the system has since been incorporated into further developments of the models, which reflect the full scope of the socio-technical system, not just the technical element.

A lesson learned from practice was that understandings of the user task could have been further developed earlier in the process, depending on the system scope, which would have helped when assessing risk.

4.1 Iterative Model Development and Validation

Building models and assurance arguments, from which to generate further areas of investigation and risk mitigations, provided artefacts for discussion and feedback at stakeholder workshops. In addition, re-usable instruments and tools, intended for future investigations and to evidence recommendations, were evaluated and reviewed with teams as part of ongoing validation. This construction of models and validation with stakeholders can be an iterative process depending on project design. The accuracy of the model needs to be agreed and accepted by all parties, with sufficient evidence to explain differences to key stakeholders. Further data collection to confirm the accuracy of models and to eradicate discrepancies may be required at times. On other occasions, evidence may surprise individuals within the organisations being assessed who take a more macro view of operations and processes, when micro system intricacies are identified of which they may have been unaware.

4.2 Integrating Cyber and HF Approaches

A key to the success of the investigation practice set out in this study has been the adoption of cyber-security domain expertise and the integration of HF processes into the cyber-security investigation team, in order to fully assess the risks within complex socio-technical systems. HF, as a discipline, has a long-standing history of successfully integrating into receptive multidisciplinary teams, for example working closely with safety specialists as part of safety assurance activities, and now in a close one-team approach with cyber-security domain teams. Integrated contributions to potential vulnerabilities, system and attack path modelling, and risk assessments were produced as a collaborative effort across disciplines.

Initially the scope of some clients’ work allowed for limited integration activities, but it was important for HF to be a fully integrated part of the cyber team in order to elicit the relevant information from end-users. Effort was put into integration and collaboration activity, ensuring that HF maintained its independence but contributed practices which would support and complement the exercise as a whole. For example, HF introduced a consistent, transparent and positive investigation environment, in which people were able to speak out, aware of the ethics of the exercise, its confidentiality and the actions that would follow from their reports. This has been critical to the success of each investigation.

Terminology Presents a Barrier

Another reason for putting participants at ease was that some cyber-security terms, and the general use of ‘cyber’, could be confusing for participants outside the security domain. The term ‘cyber’ was not generally understood; it was too ambiguous and somewhat misleading when, in reality, the process was to analyse a complex socio-technical system. More problematic still was the term ‘investigation’, which immediately implied wrongdoing and that a perpetrator was being sought out. It was important to overcome these barriers for the participants to invest fully in the process.

Introduction of HF Ethics to Investigations

The importance of clear ethical briefings was highlighted to all investigation teams, and to participating interviewees, explaining informed consent, use and handling of data, the boundaries of anonymity and that participants could be identifiable if they divulged information that only they could know. Participants were informed that if they revealed information that could do harm to themselves or others, the team would be obliged to report it.

A key benefit of offering a safe and anonymous environment for participants to communicate their experiences is that they have the opportunity to speak freely about the workings of the system, without fear of it reflecting badly on them and damaging future prospects. Therefore, known bad security practices or potential for vulnerabilities are more likely to be revealed in the absence of the worry of reprisals. A further benefit is an understanding by all of the reasons for processes not being carried out as envisaged and the remedial actions required.

Aligning Understanding of Work-as-Done

Gap analysis between ‘work-as-done’ and ‘work-as-imagined’ reveals system weaknesses or potential vulnerabilities that could be exploited by an adversary. Both senior and security management may hold out-of-touch or over-idealised views of work in their organisation that are not aligned with reality, that is, with work-as-done. They value feedback on behaviours that reveal gaps in processes. Highlighting these areas to management enables them to improve the security and efficiency of their processes.
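As a minimal sketch of the comparison described above, the following Python fragment contrasts a documented procedure (work-as-imagined) with the steps recorded during observation (work-as-done). The function name `work_gap`, the `GapReport` structure and the example steps are all hypothetical and are used purely for illustration; they are not drawn from any investigation reported here, and a real analysis would rest on richer qualitative evidence than a list of step labels.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class GapReport:
    omitted: list[str]      # documented steps never observed in practice
    workarounds: list[str]  # observed steps absent from the documented process


def work_gap(as_imagined: list[str], as_done: list[str]) -> GapReport:
    """Compare a documented procedure with the steps observed in practice."""
    imagined, done = set(as_imagined), set(as_done)
    return GapReport(
        omitted=[step for step in as_imagined if step not in done],
        workarounds=[step for step in as_done if step not in imagined],
    )


# Hypothetical example data (assumption, for illustration only).
documented = ["lock screen", "log visitor", "escort visitor", "shred printouts"]
observed = ["log visitor", "share login with colleague", "shred printouts"]

report = work_gap(documented, observed)
print("Omitted steps:", report.omitted)
print("Undocumented workarounds:", report.workarounds)
```

Omitted steps and undocumented workarounds are both candidate lines of enquiry: the former may indicate controls that are impractical in context, the latter behaviours that an adversary could exploit.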

4.3 Qualitative and Quantitative Methods

The importance and purpose of adopting individual quantitative or qualitative methods for analyses of different data is acknowledged in HF practice. Within cyber-security HF investigations, both qualitative and quantitative data can be utilised throughout all phases of the process. This enables investigators to derive risk assessments and access contextual experience for further analyses. For example, exposure to the risk of social engineering was assessed using ‘direct’ questions, whereas expectations of blame for an incident were explored ‘indirectly’, with opportunities to comment.

Experience has shown there are significant benefits to be gained from utilising a mixed methods approach for some stages of the investigation process. For example, observations of behaviour can be recorded alongside self-reports of stated intention: visual cues, such as people glancing towards the location of a password crib, can be compared with their stated password practice.

Value of Quantitative Investigation Methods

The use of quantitative surveys and questionnaires has enabled the effective sampling of large populations, and provides the opportunity for statistical measurements of trends and cultural attitudes as well as validation to evidence findings. Quantitative methods are important for measuring the extent and risk of a human-centred problem and for comparison with other wider populations and overall security culture, where available.

Value of Qualitative Investigation Methods

In order to capture the socio-behavioural system in its entirety, qualitative methods, including interviews, discussion groups and observations, were also widely utilised. These methods provided valuable insights into the unique experiences of groups and individuals within the system. For example, a participant who appeared visibly frustrated during a discussion, but did not speak because their seniors were present in the room, proved to be a great source of information when interviewed alone. Comments made during discussions revealed a rich level of detail that often led to the revelation of significant vulnerabilities which may otherwise have remained undiscovered.

Use of Mixed Methods

The benefits of using mixed methods, that is collecting both quantitative and qualitative commentary responses for analysis, were significant for identifying, developing and quantifying areas for further investigation and potential vulnerabilities. Analyses were also enhanced by adopting alternative perspectives, from a cognitive approach focusing on the person purely as a rational information processing individual, to evaluating stimuli-response drivers of security behaviours. Interview texts were examined through the lens of discourse analysis [5], and primarily phenomenological approaches, using thematic analysis of first-person interviews to explore the lived experiences of individuals [6] within the socio-technical system. This was highly successful in facilitating deeper analysis, producing rich findings and a nuanced understanding of the investigation environment. Rather than asking questions that would only require quantitative, binary style ‘yes’ or ‘no’ responses, it was found that using open-ended questions, which were deliberately designed to elicit deeper responses, would provide personal insights that were invaluable to a holistic understanding of the socio-technical system.

A lesson learned was that utilising a survey covering a broad range of potential issues and vulnerabilities at the outset of an investigation is effective in narrowing the lines of enquiry to those of most concern before physical engagements with users and stakeholders commence. By doing so, the value of interview time is maximised during initial interviews and discussion groups, resulting in greater efficiency and better evidence being collected.
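One way such an initial survey could be used to narrow lines of enquiry is sketched below in Python. It assumes, purely for illustration, that each topic is rated on a 1 to 5 concern scale and that topics are ranked by mean score; the function `rank_topics`, the scale, the topics and the scores are all hypothetical, and averaging ordinal ratings is a simplification rather than a recommended statistical treatment.

```python
from __future__ import annotations


def rank_topics(
    responses: dict[str, list[int]], top_n: int = 2
) -> list[tuple[str, float]]:
    """Rank survey topics by mean concern score (1 = low, 5 = high concern)."""
    scored = [
        (topic, sum(scores) / len(scores))
        for topic, scores in responses.items()
        if scores  # skip topics nobody answered
    ]
    # Highest mean concern first.
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_n]


# Hypothetical survey responses (assumption, for illustration only).
survey = {
    "password sharing": [4, 5, 4, 3],
    "tailgating": [2, 1, 2, 3],
    "removable media": [5, 4, 5, 4],
    "phishing reports": [3, 3, 2, 4],
}

priorities = rank_topics(survey)
for topic, score in priorities:
    print(f"{topic}: mean concern {score:.1f}")
```

The highest-ranked topics would then shape the question sets for the first interviews and discussion groups, while lower-ranked topics remain available for later follow-up.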

Stakeholder Workshop Feedback

Workshops held with the users and stakeholders can be a critical part of the investigation process. Once systems had been analysed, models constructed, and vulnerabilities identified, it was important to return to the people operating within the system to validate the findings and gather end-user feedback on recommended courses of action. There would often be comments such as ‘you should speak to [this person]’ or ‘you may want to look at [this information]’, which would lead to further insight and data collection for review. Findings may also be disputed by a stakeholder, in which case further evidence would be collected to either support or revise them. Furthermore, the risks arising from some of the vulnerabilities discovered may be mitigated by other processes, and so diminish in significance. Workshop feedback is an iterative process that continues until all avenues have been explored within the boundaries of the system that were established at the outset.

5 Conclusion

Adopting a defined and systematic process from the start of any investigation, and ensuring that the system under investigation is well bounded, continues to be important for effectiveness. In addition, creating a robust HF data capture plan before any investigations start is valuable to later success.

It remains important also that HF practitioners do not just utilise the existing technical process in place, but bring their knowledge from other domains to support and develop existing practices and enable full integration and knowledge sharing with the multi-disciplinary team.

Adopting a mixed methods approach and drawing from methodologies beyond a purely cognitive approach can add richness and insight from experience to the data collected from those who operate within the socio-technical system daily. This enables a wider data set and deeper analysis to be conducted, from which a more extensive range of vulnerabilities can be identified.

The most secure assessments of risk and resilience require evidenced analysis from both observations and self-reports, in order to access the widest data set and to generate, support and evidence the analysis argument for risks to resilience.

A significant takeaway is the need to ensure that the social element of the socio-technical system is investigated, by developing robust human-system models of interaction and by identifying the impact of organisational and security culture issues on vulnerabilities and risk. Once the impact of cultural issues in the organisation is identified, risks can be mitigated and cyber-security resilience and security culture improved.

Finally, it should be noted that whilst selective adoption of relevant approaches from the safety and cyber-security realms is effective, threat actors are actively seeking out vulnerabilities in order to manipulate and weave a path through them. Outcomes therefore shift from unintended failures to intended failures. This subtle difference changes the dynamic of the approach to evaluating resilience with an attacker mindset. Practitioners need to go beyond ‘Murphy’s law’ to analyse how vulnerabilities, the ‘holes in the cheese’, could be exploited, and how humans could be manipulated to unwittingly align them, aiding attack path navigation.

Notes

- 1. Eliminate, Reduce, Isolate, Control, Personal Protective Equipment and Discipline.

References

1. IEA (2016). In: Shorrock, S., Williams, C.: Human Factors and Ergonomics in Practice, p. 4. CRC Press, Boca Raton (2017)

2. Reason, J.: Managing the Risks of Organisational Accidents. Ashgate Publishing Limited, Aldershot (1997)

3. Hollnagel, E., Woods, D., Leveson, N.: Resilience Engineering: Concepts and Precepts. Ashgate, UK (2006)

4. Dekker, S.: The Field Guide to Understanding ‘Human Error’, 3rd edn., p. 12. CRC Press, Boca Raton (2014)

5. Tileaga, C., Stokoe, E. (eds.): Discursive Psychology: Classic and Contemporary Issues. Routledge, Abingdon (2016)

6. Langdridge, D.: Phenomenological Psychology: Theory, Research and Method. Pearson Education Limited, Harlow (2007)

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Fairburn, N., Shelton, A., Ackroyd, F., Selfe, R. (2021). Beyond Murphy’s Law: Applying Wider Human Factors Behavioural Science Approaches in Cyber-Security Resilience. In: Moallem, A. (eds) HCI for Cybersecurity, Privacy and Trust. HCII 2021. Lecture Notes in Computer Science, vol 12788. Springer, Cham. https://doi.org/10.1007/978-3-030-77392-2_9

DOI: https://doi.org/10.1007/978-3-030-77392-2_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-77391-5

Online ISBN: 978-3-030-77392-2

eBook Packages: Computer Science (R0)