Abstract

Many parts of the world face natural disasters of many kinds, including earthquakes, landslides, epidemics, droughts and floods. In recent decades, the impact of disasters worldwide has grown. A higher frequency of intense hydro-meteorological events, most likely due to climate change, and the growth of vulnerable populations may be the main reasons for this trend. To reduce disaster risk, risk reduction approaches that emphasize risk evaluation, risk mapping and hazard assessment, all of which have a significant spatial component, need to be developed further. The integration of remote sensing products and Geographic Information Systems (GIS) has become an automated, well-developed and effective technique for disaster risk management. This chapter presents a critical and detailed overview of recent multi-hazard risk analyses performed using remotely sensed data and geospatial techniques, which allow participants to be involved in the various phases of model development. The chapter also presents machine learning and crowdsourcing methods, particularly for multi-hazard modelling, as valuable tools for risk management and disaster mitigation.

1.1 Introduction

‘Multi-hazard’ is a concept used by the United Nations as part of a collective initiative to encourage risk prevention and emergency management in the context of sustainable development and Agenda 21 (Pourghasemi et al., 2019), which would contribute to the sustainable development of the Earth (Keesstra et al., 2018). A multi-hazard strategy addresses multiple threats with varying probabilities and intensities (Eshrati et al., 2015). During rescue operations, chaos, misdirection, misallocation of personnel and miscommunication impose immense costs, from lost rescue time to a higher casualty toll. The lack of viable contingency plans or up-to-date evacuation plans constrains emergency management. Hazards can be described as possible risks to people, infrastructure and the environment resulting from ‘the intersections of human systems, natural processes and technology systems’ (Cutter 2003). They may or may not escalate into a disaster or catastrophe, and they are named after the disaster or emergency they could precede (Haddow et al. 2008). Such hazards may arise from geological, meteorological, oceanographic, hydrological or biological systems on Earth, or from technological intervention. Because pre-disaster prevention and preparedness measures often form a critical part of current emergency response strategies, the scope can be extended to include ‘planning and response’. Furthermore, while natural and human threats cause disasters, these need not be discrete, single events. Many impoverished people, particularly in developing nations, frequently experience repeated shocks to their families and livelihoods, which erode any opportunity to accumulate resources and savings; such shocks are often similar and concurrent (Wisner et al., 2004). These continuing humanitarian crises may be exacerbated by natural and human hazards, but they are also aggravated and amplified by human behaviour and social forces.

Risk may be described as the combination of a potentially dangerous phenomenon (the hazard, characterized by its probability, severity and spatial extent) and vulnerable elements (people, facilities, etc.) within the conceptual framework of the study (Glatron and Beck 2008). Risk therefore arises only where both hazard and vulnerability are present. Vulnerability can be defined either as the degree of susceptibility to a hazard (biophysical vulnerability) or as the social capacity to anticipate and cope with an environmental problem (social vulnerability) (Cutter 2006). Assessments of risk and vulnerability form an essential part of the pre-disaster phase of the hazard and disaster management (HADM) continuum. A number of factors, including a rapidly growing world population and highly uncertain environmental and economic conditions, mean that many fragile societies are expected to be at risk from natural and technological hazards in the future (IFRCRCS, 2003).

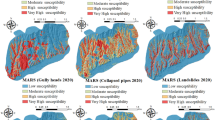

Strategies for multi-hazard risk management can broadly be divided into two approaches: (a) evaluating individual hazards in a given geographical setting independently (Carpignano et al., 2009; Schmidt et al., 2011) and (b) assessing the possible interactions and/or cascade effects among the different possible hazardous events (Garcia-Aristizabal et al., 2015; Zhang & Zhang, 2017). Nevertheless, applied multi-hazard evaluation has been under-emphasized in natural disaster prevention and planning. More significantly, most scientific investigations have focused exclusively on natural hazard forecasting and monitoring, disregarding human impacts and exposure (Fig. 1.1). In the geographical and hazards literature, risk (R) has been understood as the combination of hazard exposure (H) and societal vulnerability (V). This relationship can be expressed as the ‘pseudo-equation’ R = H × V (Wisner et al., 2004). There is, however, no risk unless a hazard and a vulnerable population coincide in a specific area.
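
As a minimal illustration of the pseudo-equation R = H × V, the sketch below multiplies a hazard grid by a vulnerability grid cell by cell. The function name `risk_grid`, the 0–1 scaling of both inputs and the example values are assumptions made for illustration only; they are not taken from Wisner et al. (2004).

```python
def risk_grid(hazard, vulnerability):
    """Return R = H * V for every cell of two equally shaped grids.

    Both grids are lists of rows holding values scaled to 0-1
    (an assumption of this sketch, not part of the pseudo-equation).
    """
    if len(hazard) != len(vulnerability):
        raise ValueError("grids must have the same number of rows")
    risk = []
    for h_row, v_row in zip(hazard, vulnerability):
        if len(h_row) != len(v_row):
            raise ValueError("grid rows must have the same length")
        risk.append([h * v for h, v in zip(h_row, v_row)])
    return risk


# Hypothetical 2 x 2 study area.
hazard = [[0.0, 0.5], [1.0, 0.8]]
vulnerability = [[0.9, 0.4], [0.0, 0.5]]
risk = risk_grid(hazard, vulnerability)
```

Cells where either factor is zero receive zero risk, which mirrors the point that risk exists only where a hazard and a vulnerable population coincide.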

A growing number of Earth observation (EO) satellites have been placed in low-Earth, near-polar orbits in recent decades, improving our capacity to detect solid-earth hazards. Almost 40 million Landsat scenes have been downloaded via the US Geological Survey portal since late 2008, when Landsat imagery was made freely available to all users, and the download rate is still growing. However, most satellite observations today have limited coverage and compatibility, because they are driven by the differing priorities of national space programmes. Both active radar (synthetic aperture radar, SAR) and passive optical (multi-spectral) imaging systems have shared in this continued expansion of satellite capability. The Copernicus programme of the European Space Agency (ESA), with its daily Sentinel-1/2 acquisitions, has been instrumental in making such data collection more regular. Free and open data policies, aided by the growth and accessibility of open-source processing toolboxes, have significantly enlarged the user base. A spatial approach to risk management supports disaster risk reduction (DRR) by providing vital data on the locations of risk sources, potential impact areas and the spatial distribution of vulnerable populations and hazardous infrastructure (Greiving et al. 2006). Spatial frameworks can also help to identify suitable sites for disaster prevention facilities and support relocation, response, resource distribution and mitigation policymaking. Consequently, the use of satellite data, GIS and publicly available social, demographic and economic data can advance modelling in terms of spatial precision, computational power, the scientific rigour of quantitative techniques and productive information exchange (Bishop et al., 2012; Hoque et al., 2018).
For example, at the International Conference on Satellite EO and Geohazards, EO applications for hazard detection, quantification and monitoring in support of protection, resilience, emergency management, post-emergency recovery and prevention were an integral part of the discussions on community priorities. Moreover, the Copernicus Emergency Management Service (EMS) provides large volumes of DRR data and is a very useful resource for end-users in this area. Among international satellite EO and DRR initiatives, a host of programmes, including the Geohazards Supersites and Natural Laboratories, the GEODARMA project, GEO EO4SDG and the CEOS Working Group on Disasters, are sponsored by the Group on Earth Observations (GEO) and the Committee on Earth Observation Satellites (CEOS). Over the past 60 years, an integrated and diverse field of infectious disease modelling has developed, and our understanding of human and disease-specific transmission processes, including risk factors, pathogens and spatio-temporal patterns of disease distribution, has advanced (Riley, 2007). To target limited prevention, monitoring and control resources, it is important to understand the geographical dynamics of human risk of exposure to vector-borne disease agents (e.g. geographic targeting of vaccination; administering drugs or information campaigns; using sentinel sites for vector control; and identifying locations where pesticides can be used more efficiently). These modelling approaches range from biostatistical methods to large biophysical models, ordinary differential equation (ODE)-based and agent-based models, and ecological niche models (Corley et al., 2014).

Moreover, space-based observations are explicitly recognized as significant contributions to disaster risk reduction policy. The Sendai Framework sets priorities for action, such as ‘understanding disaster risk’, ‘investing in disaster risk reduction for resilience’ and ‘enhancing disaster preparedness’, all of which can be supported by strengthened Earth observations (Fig. 1.2).

In certain cases, however, space-based, in situ and airborne measurements do not directly support disaster planning; instead, they feed an intermediate layer of research, which in turn informs users about risk management (Salichon et al., 2007). This review of the literature seeks to describe in detail the technological innovations developed to minimise and avoid the risk caused by natural hazards. Three academic databases that are widely used in literature reviews were searched: Scopus, Google Scholar and Web of Science. In line with other comprehensive reviews in the area, the search terms were flood, drought, landslide, water conservation, catastrophe, sustainability, climate change and adaptation. The aim of this chapter is to offer a critical and detailed overview of recent multi-hazard risk analyses performed using satellite data and geospatial methods. It describes the inventory and detection of natural hazards, mapping and monitoring, and vulnerability and risk mitigation at a variety of scales using geospatial data. Measurements from these satellites offer useful supplementary input for a number of emergency management applications: monitoring the intensity of tropical disturbances, including typhoons, cyclones and hurricanes worldwide; tracking the evolution of volcanic domes during eruptive episodes; monitoring the dispersion of ash released during volcanic eruptions; mapping, often under overcast conditions, the spatial extent of flooded areas; measuring the extent of forest fires and oil spills; and analysing the impact of droughts on soils, trees and crops.

1.2 Satellite Data and Multi-hazard Assessment

In the EO community, satellite-based emergency mapping (SEM), or rapid damage and recovery mapping, is widely used (Van Westen, 2013). Data from the TIROS-1 satellite first supported meteorological forecasting as early as the 1960s. This major advance in Earth observation opened up new horizons for disaster risk management, allowing improved tracking, awareness and eventually anticipation of meteorological hazards (Manna, 1985). The scientific community would welcome a flood-hazard mapping method based purely on satellite observation, provided it is reliable, accurate and applicable worldwide, particularly in areas where ground surveys are not possible (Giustarini et al., 2015). Multi-temporal Moderate-Resolution Imaging Spectroradiometer (MODIS) imagery was used by Sakamoto et al. (2007) and Islam et al. (2010) to monitor flood inundation frequencies in Cambodia and the Mekong Delta, and in Bangladesh, respectively. Thomas and Leveson (2011) used Landsat imagery to map annual floods in Australia, while a combination of thermal (ASTER) and SAR (ENVISAT) data was used effectively after the 2011 Tohoku (Japan) tsunami to determine the overall flood extent and to track the recession of water in the subsequent weeks. Owing to their synoptic view and frequent revisit, remote sensing data and image processing methods can be used for in-depth hazard mapping and monitoring, particularly in high mountain regions. To develop landslide susceptibility maps and a landslide hazard index, Golovko et al. (2017) used data from several satellites (e.g. Landsat, SPOT, ASTER, IRS-1C, LISS-III and RapidEye) and automatic identification methods. For landslide detection and mapping, Lu et al. (2011) used QuickBird imagery. To establish multi-temporal landslide susceptibility maps, Guzzetti et al. (2012) used aerial imagery, a high-resolution LiDAR DEM and satellite images (e.g. Landsat-7, IRS, IKONOS-2, QuickBird-2, WorldView-2 and GeoEye-1/2). Alongside existing landslide inventory maps, SAR and interferometric synthetic aperture radar (InSAR) imagery play an important role in updating the landslide inventory through the incorporation of auxiliary data. The creation of digital elevation models (DEMs), for instance those derived from Indian Remote Sensing satellite (IRS) P5 images and from TerraSAR-X/TanDEM-X images by InSAR, is among the most useful applications, supporting the assessment of erosion, landslides and multi-temporal topographic change (Du et al., 2017). For landslide change detection, Hölbling et al. (2015) used SPOT-5 data, while Kang et al. (2017) used ALOS/PALSAR imagery and InSAR techniques for landslide detection. National Oceanic and Atmospheric Administration (NOAA) satellite data acquired by the Advanced Very High-Resolution Radiometer (AVHRR) were used to assess the prevalence and track the periodic outbreak of cholera, with the aim of establishing an early warning system in Bangladesh (Lobitz et al., 2000).

Since topography is among the most crucial components of most hazard assessments, the development of a DEM and geomorphometric analysis are essential. Elevation data can be obtained from existing topographic maps, topographic levelling, Electronic Distance Measurement (EDM), differential Global Positioning System (DGPS) measurements, digital photogrammetry, Interferometric Synthetic Aperture Radar (InSAR) and Light Detection and Ranging (LiDAR). GTOPO30 (Hastings & Dunbar, 1998) and the Shuttle Radar Topography Mission (SRTM) (Farr & Kobrick, 2000) are the main sources of global DEMs used in hazard assessment and risk analysis. Global optically derived topography is also available at 30 m from the Panchromatic Remote-Sensing Instrument for Stereo Mapping (PRISM) through the ALOS World 3D (AW3D) product, with higher-resolution PRISM datasets available commercially. Optically derived topography is also available from the Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER).
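
Slope is one of the most common geomorphometric variables derived from a DEM. The sketch below is a minimal illustration that estimates slope with central differences on a small grid; the function name `slope_degrees`, the 30 m cell size and the example elevations are assumptions, and production workflows would normally rely on a GIS package rather than hand-written loops.

```python
import math


def slope_degrees(dem, cell_size):
    """Slope in degrees for the interior cells of a DEM (list of rows).

    Border cells are left as None because central differences need
    a neighbour on both sides.
    """
    rows, cols = len(dem), len(dem[0])
    slope = [[None] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Elevation change per metre in the x and y directions.
            dz_dx = (dem[r][c + 1] - dem[r][c - 1]) / (2 * cell_size)
            dz_dy = (dem[r + 1][c] - dem[r - 1][c]) / (2 * cell_size)
            gradient = math.hypot(dz_dx, dz_dy)
            slope[r][c] = math.degrees(math.atan(gradient))
    return slope


# Hypothetical 3 x 3 DEM (metres) rising 30 m per 30 m cell towards the east.
dem = [[0, 30, 60],
       [0, 30, 60],
       [0, 30, 60]]
slope = slope_degrees(dem, cell_size=30)
```

For this uniformly tilted surface, the centre cell has a rise equal to its run, so the computed slope there is 45 degrees.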

1.3 Spatial Modelling and Multi-hazard Risk Assessment

Over the last few decades, multi-hazard studies have become more popular (Pourghasemi & Kerle, 2016), yet in the twenty-first century they remain a challenge for scientists and researchers (UN, 2002). Many approaches and models have been used to prepare hazard maps with geospatial technology (Adab et al., 2013; Teodoro and Duarte 2013; Hembram et al. 2011). Statistical approaches recently studied for risk evaluation include multivariate adaptive regression splines (Pourghasemi et al., 2018), logistic regression (Arabameri et al., 2018) and generalized additive models (Ravindra et al., 2019). In New Zealand, Schmidt et al. (2011) developed a multi-risk modelling methodology with adaptable software that enables researchers to ‘plug in’ the processes of interest. Pourghasemi et al. (2019) conducted a multi-hazard risk evaluation based on artificial intelligence techniques in Fars Province, Iran. Artificial intelligence and machine learning have also been discussed. In addition, the analytical hierarchy process (AHP) (Youssef, 2015), combined with multi-criteria decision analysis (MCDA) and spatial decision support systems (Ghorbanzadeh et al., 2018), has been used in multi-hazard risk analysis. Soft-computing models, such as artificial neural networks (Yilmaz & Ercanoglu, 2019), fuzzy logic (Vakhshoori & Zare, 2016), decision trees (Wang et al., 2016), support vector machines (SVM) (TienBui et al., 2016), random forest (RF) (Youssef et al., 2016), deep learning (Xiao et al., 2018), the adaptive neuro-fuzzy inference system (ANFIS) (Chen et al., 2019), kernel logistic regression (TienBui et al., 2016) and ensembles of ANFIS (RazaviTermeh et al., 2018), have been used for multi-hazard analysis and modelling. The assessment module of the ILWIS GIS guides users through spatial multi-criteria assessments for multi-hazard analysis.
The input is a set of maps that give the spatial representation of the criteria. They are grouped, standardized and weighted in a ‘criteria tree’. The output is one or more ‘composite index maps’ that indicate how well the model criteria are met. Research organizations around the world maintain comprehensive spatial tools, such as HAZUS, a GIS-based natural disaster analysis tool used to determine flood risk, and HEC-FDA, a computer program that supports Corps of Engineers staff in analysing flood risk mitigation strategies. The AHP is one of the most common and effective techniques for hazard modelling tasks such as flood forecasting and for the visualization and analysis of complex problems (Chen et al., 2011). In addition to AHP, other suitable models for hazard analysis are MCDA (Samanta et al., 2016), weights of evidence (WofE) (Rahmati et al., 2016a, b), logistic regression (LR) (Tehrany et al., 2015), the adaptive neuro-fuzzy inference system (Sezer et al., 2011), artificial neural networks (ANN) (Tiwari & Chatterjee, 2010) and the frequency ratio (FR) model (Rahmati et al., 2016a, b). Gómez-Limón et al. (2003) proposed an innovative approach for weighting the criteria that describe aversion to agricultural risk in decision-making. It uses numerical ratings based on multiple choices: utility value functions convert all the disparate criteria onto a single 0–1 scale on which the final judgement is made. Among disease models, those that capture the epidemiological (population-level) features of a disease were grouped together as ‘Epidemiological’. Variables directly addressing the agent or pathology were classified under ‘Etiology’. ‘Geospatial modelling’ included models that used satellite data such as the normalised difference vegetation index (NDVI), which quantifies live green vegetation in a target area. Some models also included ‘Quantifiable’ methods, such as tillage processes, along with ‘Temporal’ and ‘Agricultural’ factors.
The associations between mechanistic disease transmission factors can be used to explain the geographical distribution of disease risk (Krefis et al., 2011), to support early warning systems or to build mechanistic models of vector populations and disease transmission. Schuster et al. (2011) used remote sensing (RS) data from the Tropical Rainfall Measuring Mission (TRMM) to build logistic regression models based on the TRMM Multi-satellite Precipitation Analysis (TMPA), and documented a clear correlation between tropical rainfall and MVEV outbreaks in the areas studied. Landsat data have been used to estimate the green leaf area index (LAI) of rice fields and compare it with the build-up of Aedes mosquito density. Glass et al. (2006) built a logistic regression model based on 1992–2005 Landsat Thematic Mapper (TM) imagery to estimate the risk of hantavirus pulmonary syndrome (HPS) in 2006, and reported that increased rainfall in northern New Mexico and southern Colorado following the previous drought years raised the risk of HPS. The spread of vector-borne diseases (VBDs), viruses, reservoirs and vectors can be modelled using mathematical and statistical models. Mathematical models use a single equation or a series of equations that simulate or describe a system and/or predict its future behaviour, while statistical models use approaches that involve collecting, analysing and/or interpreting datasets (e.g. regressions) (Sadeghieh et al., 2020). Mapping applications such as Google Earth™ and MS Virtual Earth™ offer simple, easy-to-use capabilities for producing not only spatial data overlaid on existing satellite imagery or maps but also complex space-time series visualizations that can be played as animations.
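
The criteria-tree procedure described earlier in this section (standardize each criterion map, weight it, and combine the results into a composite index map) can be sketched in a few lines. This is an illustrative weighted overlay, not the ILWIS implementation: the function names, the min-max standardization, the criterion names and the weights are all assumptions, and the sketch further assumes that higher values of every criterion mean higher hazard.

```python
def standardize(layer):
    """Min-max rescale a criterion map (list of rows) to the 0-1 range."""
    values = [v for row in layer for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant layer carries no information; map it to zero.
        return [[0.0 for _ in row] for row in layer]
    return [[(v - lo) / (hi - lo) for v in row] for row in layer]


def composite_index(layers, weights):
    """Weighted sum of standardized criterion maps.

    layers  : dict of criterion name -> grid (all grids the same shape)
    weights : dict of criterion name -> weight (normalised here to sum to 1)
    """
    total = sum(weights[name] for name in layers)
    scaled = {name: standardize(grid) for name, grid in layers.items()}
    first = next(iter(scaled.values()))
    rows, cols = len(first), len(first[0])
    return [
        [
            sum(weights[name] / total * scaled[name][r][c] for name in layers)
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Hypothetical criterion maps and weights (for example, weights from an AHP exercise).
layers = {
    "rainfall_mm": [[100, 200], [300, 400]],
    "slope_deg": [[30, 0], [10, 20]],
}
weights = {"rainfall_mm": 0.6, "slope_deg": 0.4}
index_map = composite_index(layers, weights)
```

Because every layer is rescaled to 0–1 and the weights are normalised, each cell of `index_map` also lies between 0 and 1, which makes composite maps built from different criteria comparable.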

1.4 Integration Between Data Science and Geoinformatics: A Practical Guide to Manage Multi-hazard

The urgent need for hazard control and protection is illustrated by the significant and irreparable damage that disasters cause to agriculture, transport, bridges and many other facets of urban infrastructure (Mojaddadi et al., 2017). Moreover, the COVID-19 pandemic has made it apparent that biological and environmental hazards converge and increase the complexity of the overall impacts of disasters on communities and economies. However, emergency response and risk analytics have been slow to capture the interactions of natural and biological threats or to manage the interconnectedness and ripple effects across social, economic and environmental systems. The lack of viable disaster plans or up-to-date evacuation plans limits management options. Administrators also need to be aware of all flaws and constraints. Inadequate use and allocation of funds is a major complication in emergency operations. To avoid wrong decisions that could cost lives, emergency managers need a clear view of the emergency situation that rests on reliable data. At the same time, dwelling on irrelevant data, or losing the flow of information amid too much meaningless data, leads to failures such as misidentifying the actual first responder.

Around 1.8 ZB of data was generated in late 2011, according to IDC (2014). Globally, around 1.2 ZB (1 ZB = 10^21 bytes) of electronic data is generated by various sources each year (Hilbert & López, 2011). The volume was projected to reach 40 ZB by 2020 (Sagiroglu, 2013). Artificial Intelligence (AI) uses computational and mathematical approaches, implemented as software, to support decision-making, allowing machines to carry out a given human task effectively without explicit instructions to the algorithm. Present shortcomings in risk assessment, such as siloed roles, datasets, studies, simulation and control, should be resolved. Decisions historically reached largely ‘from the gut’ or by benchmarking would become data-driven and systematic. The growing use of data science and machine learning (ML) is becoming one of the greatest challenges for data-driven enterprises, data scientists and legal staff. Today there is enormous interest in corporate data processing, primarily due to ‘the dawn of the Big Data era’ (Ekeu-wei & Blackburn, 2018).

Geospatial Artificial Intelligence (GeoAI) combines spatial science (e.g. geographic information systems, GIS), AI, data mining and high-performance computing to derive useful knowledge from big spatial data. In the multi-hazard context, GeoAI is a location-based intelligence field that extracts actionable knowledge that can be used to enhance risk management. New sources of high-volume spatial data, for instance social media, electronic health records, satellite data and personal sensors, together with advances in public health science (particularly in the context of ‘smart and safe cities’), feature prominently in GeoAI applications at the population and individual levels, providing new possibilities to address traditional questions more comprehensively. Figure 1.3 shows possible applications of GeoAI in multi-hazard risk management. To make good design choices for the emerging technologies of today and tomorrow, and to avoid repeating past mistakes, it is important to consider the history and context of enterprise data management. Data volume and use continue to grow rapidly across various silos, both on-premises and in the cloud, and in a number of data formats, and this growth now includes sensitive master data. Most importantly, Internet of Things (IoT) sensors and systems deployed in urban areas are new and effective sources of geo-tagged big data (Kamel Boulos & Al-Shorbaji, 2014).

To process, interpret and make sense of such a huge amount of spatial big data in real time, robust GeoAI technologies are indispensable. Mojaddadi et al. (2017) proposed an ensemble approach, combining the frequency ratio (FR) method with a support vector machine (SVM) using a radial basis function, that proved useful in GIS-based flood modelling to produce flood probability indices for the catchment of the Damansara River in Malaysia. A growing number of studies have demonstrated that machine learning (ML) algorithms can be used with spatial datasets and satellite images to create regional-scale landslide susceptibility models; examples include decision trees (DT) (Tsai et al., 2013), entropy- and evolution-based algorithms (Kavzoglu et al., 2015), fuzzy theory (Zhu et al., 2014), neuro-fuzzy systems (Xu et al., 2015; Bui et al., 2012) and random forest algorithms (Lai & Tsai, 2019), helped by advances in computational resources, geospatial data and technologies. For instance, to predict daily concentrations of particulate matter <2.5 μm in diameter (PM2.5) in the USA, a neural network was trained on numerous predictors, including satellite-based aerosol optical depth (AOD) from the Moderate Resolution Imaging Spectroradiometer (MODIS) (Di et al., 2016). In another study, to address the lack of building maps in less developed nations for development goals related to emergency relief and poverty reduction, WorldView-2 satellite data and volunteered geographic information (VGI) were used with deep learning (convolutional neural networks, CNNs) to automate the mapping of buildings in Nigeria (Yuan et al., 2018). Spence et al. (2016) also reviewed recent developments in the use of social media for recruitment, data analysis and the measurement of public needs and preferences.
In China, a geographically weighted gradient boosting machine (GW-GBM) algorithm was used to model PM2.5 exposure, accounting for spatial non-stationarity in the relationships between predictors and PM2.5 by means of spatial smoothing kernels (Zhan et al., 2017). GeoAI has been used in epidemiology to identify and examine the geographical spread of diseases and to explore the effect of location-based factors on disease outcomes. For example, machine learning (K-means clustering) was used to evaluate spatio-temporal trends in gestational age at delivery for 145 million births in over 3000 US counties from 1971 to 2008, using the National Center for Health Statistics Natality Files, in order to generate hypotheses about the aetiology of preterm birth (Byrne et al., 1992). In Ivory Coast, researchers used a machine learning algorithm (support vector regression) to examine HIV prevalence on the basis of mobility and communication features derived from mobile phone data (Brdar et al., 2016). In genetics, deep learning has been applied to fields such as functional genomics (e.g. predicting the sequence specificity of DNA- and RNA-binding proteins) (Zou et al., 2019).
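
The frequency ratio (FR) method mentioned above for flood and landslide modelling compares how often past events fall in a class of a conditioning factor with how common that class is in the study area. The sketch below computes FR per class under its usual definition; the function name, the two elevation classes and the cell values are hypothetical, and the sketch does not reproduce the ensemble of Mojaddadi et al. (2017).

```python
from collections import Counter


def frequency_ratio(classes, events):
    """Frequency ratio per class of a conditioning factor.

    classes : class label of each cell (for example, an elevation class)
    events  : 1 if a past event (such as a flood) was recorded in the cell, else 0
    FR = (event cells in class / all event cells) / (cells in class / all cells)
    """
    if len(classes) != len(events):
        raise ValueError("classes and events must have the same length")
    total_events = sum(events)
    if total_events == 0:
        raise ValueError("at least one event cell is required")
    total_cells = len(classes)
    cells_per_class = Counter(classes)
    events_per_class = Counter(c for c, e in zip(classes, events) if e)
    return {
        label: (events_per_class[label] / total_events) / (count / total_cells)
        for label, count in cells_per_class.items()
    }


# Hypothetical study area of 10 cells: 6 on high ground, 4 on low ground.
classes = ["high_ground"] * 6 + ["low_ground"] * 4
events = [1, 0, 0, 0, 0, 0, 1, 1, 1, 0]
fr = frequency_ratio(classes, events)
```

An FR above 1 indicates that events are over-represented in a class relative to its area, and an FR below 1 indicates the opposite; here the low-ground class has the higher ratio.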

Figure 1.4 illustrates a risk assessment framework that helps data science and legal teams to build faster, more accurate and more compliant ML models. For example, data scientists may be better placed to describe the key desired outcomes, whereas legal staff can describe specific undesired outcomes that could give rise to legal liability. Lines of defence refer to the roles and responsibilities of data scientists and others involved in the development, deployment and auditing of ML models. The management of the data infrastructure, from the data pipeline to the model, is one of the most important and most neglected facets of ML governance. Understanding the outputs of a model is essential for monitoring its health and any potential risks, both during training and in deployment.

1.5 Integration with Crowdsourcing and Geoinformatics on Multi-hazard Risk Assessment

As social networks have advanced, academic projects have increasingly concentrated on the use of social media for emergency relief. The main reason is that social networks can provide not only rich data but also near-real-time information. With 1.79 billion monthly active Facebook users and 500 million tweets per day, social networks create environments in which comments, photos and videos are exchanged within seconds (Sarvari et al., 2019). Panagiotopoulos et al. (2016) focused on the use of social media (Twitter) to communicate risks to the public, in order either to raise awareness or to keep public reaction from escalating. The theoretical part of their analysis merges two perspectives on risk and emergency communication: the Social Amplification of Risk Framework (SARF) (Kasperson et al., 1988) and the Crisis and Emergency Risk Communication (CERC) model (Reynolds & Seeger, 2005). Further studies have applied technologies such as machine learning, big data analysis and image processing to identify disaster information reliably. However, data fusion requires a detailed view of the threatened areas. Fry and Binner (2016) investigated compartmental analysis and simple evacuation simulation. They modelled the behaviour of individuals and the influence of social media with optimal counter-strategies, and developed a Bayesian algorithm for optimal evacuation.

Unlike crowdsourced social media information discussed in the earlier segment, the word ‘crowdsensing’ is employed here to pronounce methods that rely on dedicated software systems to capture precise and organised information, as well as to leverage citizens’ interpretive and analytical skills and local awareness (Gebremedhin et al., 2020). Several ‘crowdsensing’ schemes were developed, including devoted mobile disaster control and earth observation apps (Ferster & Coops, 2013). One example of the Ushahidi framework technology is the Flood Citizen Observatory prototype deployed in Brazil to permit people to report on the local status of river levels, flooded areas and the effects of flooding (Horita et al., 2015). Some programs, like Did You Feel It?, are explicitly constructed for disaster situations. U.S. Geological Survey (USGS) is used DYFI report to measure earthquake shaking intensity. The meteorological Phenomenon Identification Near the Ground (mPING), which tracks meteorological measurements and permits operators to display observations, was set up by the US National Oceanic and Atmospheric Administration (NOAA).NOAA uses mPING data to increase its dual-polarization radar and improve winter weather models, while ground-based meteorological measurements are necessary to verify that the radar has correctly calculated the amount of precipitation (Hultquist & Cervone, 2020). A particular category of knowledge and sharing portal is another crowdsourced geoinformation: the collaborative version of geographic features to conform with internet-based digital maps. This category includes the well-established Wikimapia and OpenStreetMap (OSM) platforms, in addition to the ‘crowdsourcing’ portion of the widespreadGoogleMaps framework, known as GoogleMapMaker (de Albuquerque et al., 2016). 
Such imagery is a very useful source of information for mappers, and it also allows volunteers from all around the world to participate, not just those located in the affected regions.
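As an illustration of how structured citizen reports of the kind described above could be turned into a mappable product, the sketch below bins point reports into a regular latitude/longitude grid and summarises each cell by the median reported value, which is less sensitive to a single erroneous report than the mean. This is a hedged example only: the report fields, the 0.1-degree cell size and the minimum number of reports per cell are assumptions made for illustration, and the code does not reproduce the actual processing used by DYFI, mPING or the Flood Citizen Observatory.

```python
import math
from collections import defaultdict
from statistics import median


def grid_cell(lat, lon, cell_size):
    """Map a coordinate to the integer index of its grid cell."""
    return (math.floor(lat / cell_size), math.floor(lon / cell_size))


def aggregate_reports(reports, cell_size=0.1, min_reports=3):
    """Summarise (lat, lon, value) reports as a median value per grid cell.

    Cells with fewer than min_reports reports are dropped, on the
    assumption that sparse cells are too unreliable to map.
    """
    cells = defaultdict(list)
    for lat, lon, value in reports:
        cells[grid_cell(lat, lon, cell_size)].append(value)
    return {
        cell: median(values)
        for cell, values in cells.items()
        if len(values) >= min_reports
    }


if __name__ == "__main__":
    # Hypothetical reports: (latitude, longitude, reported intensity).
    reports = [
        (10.01, 20.01, 4),
        (10.02, 20.03, 5),
        (10.04, 20.02, 9),  # outlier within the same cell
        (10.55, 20.51, 3),  # lone report, dropped by min_reports
    ]
    print(aggregate_reports(reports))
```

In this example the three nearby reports fall into one cell and are summarised by their median, while the isolated report is discarded. In practice the threshold and cell size would be chosen to match report density and the spatial scale of the hazard.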

1.6 Conclusion

Crowdsourced geographic information (CGI) has tremendous capacity not just to deal with the impacts of earthquakes but also to support proactive steps that boost the resilience of metropolitan areas to natural hazards and extreme events. As vast volumes of data continue to be captured and collected, data protection issues remain paramount. Ethical mechanisms are therefore important to adequately inform research participants about risks and to protect individual privacy. A range of GeoAI approaches are currently being used to support risk management phases such as risk identification, risk estimation and risk assessment. Historical and real-time data are also used to build machine learning models capable of supplying inputs to conventional risk management strategies. In addition, future research should place more emphasis on the use of CGI in the mitigation and planning phases. For example, this could be achieved by building on early examples of using CGI from collaborative maps to support disaster risk management practices, such as identifying critical infrastructure to facilitate emergency planning. Future work should also extend existing decision support systems with suitable deep learning algorithms and IoT architectures. Finally, future studies should build on existing GeoAI technologies, including location-based modelling of data that have not previously been collected at a high spatio-temporal resolution and newly emerging spatial big data sources, to open new research opportunities and advance our understanding of multi-hazard risk.

References

Adab, H., DeviKanniah, K., & Solaimani, K. (2013). Modeling forest fire risk in the northeast of Iran using remote sensing and GIS techniques. Natural Hazards, 65, 1723–1743.

Arabameri, A., Pradhan, B., Rezaei, K., Yamani, M., Pourghasemi, H. R., & Lombardo, L. (2018). Spatial modelling of gully erosion using Evidential Belief Function, Logistic Regression and new ensemble EBF-LR algorithm. Land Degradation & Development, 29, 4035–4049.

Bishop, M. P., James, L. A., Shroder, J. F., Jr., & Walsh, S. J. (2012). Geospatial technologies and digital geomorphological mapping: Concepts, issues and research. Geomorphology, 137(1), 5–26.

Brdar, S., Gavric, K., Culibrk, D., & Crnojevic, V. (2016). Unveiling spatial epidemiology of HIV with mobile phone data. Scientific Reports, 6, 19342.

Bui, D. T., Pradhan, B., Lofman, O., Revhaug, I., & Dick, O. B. (2012). Landslide susceptibility mapping at Hoa Binh province (Vietnam) using an adaptive neuro-fuzzy inference system and GIS. Computers & Geosciences, 45, 199–211.

Byrne, D. E., Sykes, L. R., & Davis, D. M. (1992). Great thrust earthquakes and aseismic slip along the plate boundary of the Makran Subduction Zone. Journal of Geophysical Research: Solid Earth, 97, 449–478. https://doi.org/10.1029/91JB02165

Carpignano, A., Golia, E., Di Mauro, C., Bouchon, S., & Nordvik, J. P. (2009). A methodological approach for the definition of multi-risk maps at regional level: First application. Journal of Risk Research, 12(3–4), 513–534.

Chen, Y. R., Yeh, C. H., & Yu, B. (2011). Integrated application of the analytic hierarchy process and the geographic information system for flood risk assessment and flood plain management in Taiwan. Natural Hazards, 59(3), 1261–1276.

Chen, W., Panahi, M., Tsangaratos, P., Shahabi, H., Llia, L., Panahi, S., Li, S. J., Jaafari, A., & Ahmad, B. B. (2019). Applying population-based evolutionary algorithms and a neuro-fuzzy system for modelling landslide susceptibility. Catena, 172, 212–231.

Corley, C. D., Pullum, L. L., Hartley, D. M., Benedum, C., Noonan, C., Rabinowitz, P. M., et al. (2014). Disease prediction models and operational readiness. PLoS One, 9(3), e91989. https://doi.org/10.1371/journal.pone.0091989

Cutter, S. L. (2003). The vulnerability of science and the science of vulnerability. Annals of the Association of American Geographers, 93(1), 1–12.

de Albuquerque, J. P., Eckle, M., Herfort, B., & Zipf, A. (2016). Crowdsourcing geographic information for disaster management and improving urban resilience: An overview of recent developments and lessons learned. In C. Capineri, M. Haklay, H. Huang, V. Antoniou, J. Kettunen, F. Ostermann, & R. Purves (Eds.), European handbook of crowdsourced geographic information (pp. 309–321). Ubiquity Press. https://doi.org/10.5334/bax.w

Di, Q., Kloog, I., Koutrakis, P., Lyapustin, A., Wang, Y., & Schwartz, J. (2016). Assessing PM2.5 exposures with high spatiotemporal resolution across the continental United States. Environmental Science & Technology, 50(9), 4712–4721.

Du, Y., Xu, Q., Zhang, L., Feng, G., Li, Z., Chen, R., et al. (2017). Recent landslide movement in Tsaoling, Taiwan tracked by TerraSAR-X/TanDEM-X DEM time series. Remote Sensing, 9, 353.

Ekeu-wei, I. T., & Blackburn, G. A. (2018). Applications of open-access remotely sensed data for flood modelling and mapping in developing regions. Hydrology, 5, 39. https://doi.org/10.3390/hydrology5030039

Eshrati, L., Mahmoudzadeh, A., & Taghvaei, M. (2015). Multi hazards risk assessment, a new methodology. International Journal of Health System and Disaster Management, 3(2), 79–88. https://doi.org/10.4103/2347-9019.151315

Farr, T. G., & Kobrick, M. (2000). Shuttle Radar Topography Mission produces a wealth of data. Eos Transactions American Geophysical Union, 81, 583–585.

Ferster, C. J., & Coops, N. C. (2013). A review of earth observation using mobile personal communication devices. Computers & Geosciences, 51, 339–349. http://www.sciencedirect.com/science/article/pii/S0098300412003184

Fry, J., & Binner, J. M. (2016). Elementary modelling and behavioural analysis for emergency evacuations using social media. European Journal of Operational Research, 249(3), 1014–1023. https://doi.org/10.1016/j.ejor.2015.05.049

Garcia-Aristizabal, A., Gasparini, P., & Uhinga, G. (2015). Multi-risk assessment as a tool for decision-making. In Urban vulnerability and climate change in Africa. Springer.

Gebremedhin, E. T., Basco-Carrera, L., Jonoski, A., Iliffe, M., & Winsemius, H. (2020). Crowdsourcing and interactive modelling for urban flood management. Journal of Flood Risk Management, 13, e12602. https://doi.org/10.1111/jfr3.12602

Ghorbanzadeh, O., Feizizadeh, B., & Blaschke, T. (2018). Multi-criteria risk evaluation by integrating an analytical network process approach into GIS-based sensitivity and uncertainty analyses. Geomatics, Natural Hazards and Risk, 9(1), 127–151.

Giustarini, L., Chini, M., Hostache, R., Pappenberger, F., & Matgen, P. (2015). Flood hazard mapping combining hydrodynamic modeling and multi annual remote sensing data. Remote Sensing, 7(10), 14200–14226.

Glatron, S., & Beck, E. (2008). Evaluation of socio-spatial vulnerability of city dwellers and analysis of risk perception: Industrial and seismic risks in Mulhouse. Natural Hazards and Earth System Sciences, 8(5). https://doi.org/10.5194/nhess-8-1029-2008

Golovko, D., Roessner, S., Behling, R., Wetzel, H.-U., & Kleinschmit, B. (2017). Evaluation of remote-sensing-based landslide inventories for hazard assessment in southern Kyrgyzstan. Remote Sensing, 9, 943. https://doi.org/10.3390/rs9090943

Gómez-Limón, J. A., Arriaza, M., & Riesgo, L. (2003). An MCDM analysis of agricultural risk aversion. European Journal of Operational Research, 151, 569–585.

Greiving, S., Fleischhauer, M., & Lückenkötter, J. (2006). A methodology for an integrated risk assessment of spatially relevant hazards. Journal of Environmental Planning and Management, 49(1), 1–19. https://doi.org/10.1080/09640560500372800

Guzzetti, F., Mondini, A. C., Cardinali, M., Fiorucci, F., Santangelo, M., & Chang, K.-T. (2012). Landslide inventory maps: New tools for an old problem. Earth-Science Reviews, 112, 42–66. https://doi.org/10.1016/j.earscirev.2012.02.001

Haddow, G. D., Bullock, J. A., & Coppola, D. P. (2008). Introduction to emergency management (3rd ed.). Elsevier.

Hastings, D. A., & Dunbar, P. K. (1998). Development and assessment of the global land 1 km base elevation digital elevation model (GLOBE). International Archives of Photogrammetry and Remote Sensing, 32(4), 218–221.

Hembram, T. K., Paul, G. C., & Saha, S. (2011). Comparative analysis between morphometry and geo-environmental factor based soil erosion risk assessment using weight of evidence model: A study on Jainti River basin, eastern India. Environmental Processes, 6(1). https://doi.org/10.1007/s40710-019-00388-5

Hilbert, M., & López, P. (2011). The world’s technological capacity to store, communicate, and compute information. Science, 332(6025), 60–65.

Hölbling, D., Friedl, B., & Eisank, C. (2015). An object-based approach for semi-automated landslide change detection and attribution of changes to landslide classes in northern Taiwan. Earth Science Informatics, 8, 327–335. https://doi.org/10.1007/s12145-015-0217-3

Hoque, M. A. A., Phinn, S., Roelfsema, C., & Childs, I. (2018). Assessing tropical cyclone risks using geospatial techniques. Applied Geography, 98, 22–33.

Horita, F. E. A., Albuquerque, J. P., Degrossi, L. C., Mendiondo, E. M., & Ueyama, J. (2015). Development of a spatial decision support system for flood risk management in Brazil that combines volunteered geographic information with wireless sensor networks. Computers & Geosciences, 80, 84–94. https://doi.org/10.1016/j.cageo.2015.04.001

Hultquist, C., & Cervone, G. (2020). Integration of crowdsourced images, USGS networks, remote sensing, and a model to assess flood depth during Hurricane Florence. Remote Sensing, 12(5), 834. https://doi.org/10.3390/rs12050834

IDC. (2014). Analyze the future. http://www.idc.com/

IFRCRCS. (2003). Disaster types. International Federation of Red Cross and Red Crescent Societies (IFRCRCS). Website: http://www.ifrc.org/what/disasters/types/

Islam, A. S., Bala, S. K., & Haque, M. A. (2010). Flood inundation map of Bangladesh using MODIS time-series images. Journal of Flood Risk Management, 3, 210–222.

Kamel Boulos, M. N., & Al-Shorbaji, N. M. (2014). On the Internet of Things, smart cities and the WHO healthy cities. International Journal of Health Geographics, 13, 10.

Kang, Y., Zhao, C., Zhang, Q., Lu, Z., & Li, B. (2017). Application of InSAR techniques to an analysis of the Guanling landslide. Remote Sensing, 9(10), 1046. https://doi.org/10.3390/rs9101046

Kasperson, R. E., Renn, O., Slovic, P., Brown, H. S., Emel, J., Goble, R., Kasperson, J. X., & Ratick, S. (1988). The social amplification of risk: A conceptual framework. Risk Analysis, 8(2), 177–187. https://doi.org/10.1111/j.1539-6924.1988.tb01168.x

Kavzoglu, T., Sahin, E. K., & Colkesen, I. (2015). Selecting optimal conditioning factors in shallow translational landslide susceptibility mapping using genetic algorithm. Engineering Geology, 192, 101–112. https://doi.org/10.1016/j.enggeo.2015.04.004

Keesstra, S., Mol, G., de Leeuw, J., Okx, J., de Cleen, M., & Visser, S. (2018). Soil-related sustainable development goals: Four concepts to make land degradation neutrality and restoration work. Land, 7(4), 133.

Krefis, A. C., Schwarz, N. G., Nkrumah, B., Acquah, S., Loag, W., et al. (2011). Spatial analysis of land cover determinants of malaria incidence in the Ashanti Region, Ghana. PLoS One, 6, e17905.

Lai, J. S., & Tsai, F. (2019). Improving GIS-based landslide susceptibility assessments with multi-temporal remote sensing and machine learning. Sensors, 19(17), 3717. https://doi.org/10.3390/s19173717

Lobitz, B., Beck, L., Huq, A., Wood, B., Fuchs, G., et al. (2000). Climate and infectious disease: Use of remote sensing for detection of Vibrio cholerae by indirect measurement. Proceedings of the National Academy of Sciences of the United States of America, 97, 1438–1443.

Lu, P., Stumpf, A., Kerle, N., & Casagli, N. (2011). Object-oriented change detection for landslide rapid mapping. IEEE Geoscience and Remote Sensing Letters, 8(4), 701–705. https://doi.org/10.1109/LGRS.2010.2101045

Manna, A. J. (1985). 25 years of TIROS satellites. Bulletin of the American Meteorological Society, 66(4), 421–423.

Mojaddadi, H., Pradhan, B., Nampak, H., Ahmad, N., & Ghazali, A. H. B. (2017). Ensemble machine-learning-based geospatial approach for flood risk assessment using multi-sensor remote-sensing data and GIS. Geomatics, Natural Hazards and Risk, 8(2), 1080–1102. https://doi.org/10.1080/19475705.2017.1294113

Panagiotopoulos, P., Barnett, J., Bigdeli, A. Z., & Sams, S. (2016). Social media in emergency management: Twitter as a tool for communicating risks to the public. Technological Forecasting and Social Change, 111, 86–96. https://doi.org/10.1016/j.techfore.2016.06.010

Pourghasemi, H. R., & Kerle, N. (2016). Random forests and evidential belief function-based landslide susceptibility assessment in western Mazandaran Province, Iran. Environmental Earth Sciences, 75(3), 1–17.

Pourghasemi, H. R., Gayen, A., Park, S., Lee, C. W., & Lee, S. (2018). Assessment of landslide prone areas and its zonation using logistic regression, Logit Boost, and Naïve Bayes machine learning algorithms. Sustainability, 10(10), 3697.

Pourghasemi, H. R., Gayen, A., Panahi, M., Rezaie, F., & Blaschke, T. (2019). Multi-hazard probability assessment and mapping in Iran. Science of the Total Environment, 692, 556–571.

Rahmati, O., Pourghasemi, H. R., & Zeinivand, H. (2016a). Flood susceptibility mapping using frequency ratio and weights-of-evidence models in the Golastan Province, Iran. Geocarto International. https://doi.org/10.1080/10106049.2015.1041559

Rahmati, O., Zeinivand, H., & Besharat, M. (2016b). Flood hazard zoning in Yasooj region, Iran, using GIS and multi-criteria decision analysis. Geomatics, Natural Hazards and Risk, 7(3), 1000–1017. https://doi.org/10.1080/19475705.2015.1045043

Ravindra, K., Rattan, P., Mor, S., & Aggarwal, A. N. (2019). Generalized additive models: Building evidence of air pollution, climate change and human health. Environment International, 132, 104987.

RazaviTermeh, S. V., Kornejady, A., Pourghasemi, H. R., & Keesstra, S. (2018). Flood susceptibility mapping using novel ensembles of adaptive neuro fuzzy inference system and metaheuristic algorithms. Science of the Total Environment, 615, 438–451.

Reynolds, B., & Seeger, M. W. (2005). Crisis and emergency risk communication as an integrative model. Journal of Health Communication, 10(1), 43–55. https://doi.org/10.1080/10810730590904571

Riley, S. (2007). Large-scale spatial-transmission models of infectious disease. Science, 316, 1298–1301.

Sadeghieh, T., Waddell, L. A., Ng, V., Hall, A., & Sargeant, J. (2020). A scoping review of importation and predictive models related to vector-borne diseases, pathogens, reservoirs, or vectors (1999–2016). PLoS One, 15(1), e0227678. https://doi.org/10.1371/journal.pone.0227678

Sagiroglu, S. D. (2013). Big data: A review. In Proceedings of the International Conference on Collaboration Technologies and Systems (CTS’13) (pp. 42–47). San Diego, CA.

Sakamoto, T., van Nguyen, N., Kotera, A., Ohno, H., Ishitsuka, N., & Yokozawa, M. (2007). Detecting temporal changes in the extent of annual flooding within the Cambodia and the Vietnamese Mekong Delta from MODIS time-series imagery. Remote Sensing of Environment, 109, 295–313.

Salichon, J., Le Cozannet, G., Modaressi, H., Hosford, S., Missotten, R., McManus, K., Marsh, S., Paganini, M., Ishida, C., Plag, H. P., Labrecque, J., Dobson, C., Quick, J., Giardini, D., Takara, K., Fukuoka, H., Casagli, N., & Marzocchi, W. (2007). 2nd IGOS Geohazards Theme report. BRGM.

Samanta, S., Koloa, C., Pal, D. K., & Palsamanta, B. (2016). Flood risk analysis in lower part of Markham River based on multi-criteria decision approach (MCDA). Hydrology, 3(3), 29.

Sarvari, P. A., Nozari, M., & Khadraoui, D. (2019). The potential of data analytics in disaster management. In F. Calisir et al. (Eds.), Industrial engineering in the big data era, lecture notes in management and industrial engineering. Springer. https://doi.org/10.1007/978-3-030-03317-0_28

Schmidt, J., Matcham, I., Reese, S., King, A., Bell, R., Henderson, R., Smart, G., Cousins, J., Smith, W., & Heron, D. (2011). Quantitative multi-risk analysis for natural hazards: A framework for multi-risk modelling. Natural Hazards, 58(3), 1169–1192.

Schuster, G., Ebert, E. E., Stevenson, M. A., Corner, R. J., & Johansen, C. A. (2011). Application of satellite precipitation data to analyse and model arbovirus activity in the tropics. International Journal of Health Geographics, 10, 8. https://doi.org/10.1186/1476-072X-10-8

Sezer, E. A., Pradhan, B., & Gokceoglu, C. (2011). Manifestation of an adaptive neuro-fuzzy model on landslide susceptibility mapping: Klang Valley Malaysia. Expert Systems with Applications, 38(7), 8208–8219.

Spence, P. R., Lachlan, K. A., & Rainear, A. M. (2016). Social media and crisis research: Data collection and directions. Computers in Human Behavior, 54, 667–672. https://doi.org/10.1016/j.chb.2015.08.045

Tehrany, M. S., Pradhan, B., & Jebur, M. N. (2015). Flood susceptibility analysis and its verification using a novel ensemble support vector machine and frequency ratio method. Stochastic Environmental Research and Risk Assessment, 29, 1149–1165. https://doi.org/10.1007/s00477-015-1021-9

Teodoro, A. C., & Duarte, L. (2013). Forest fire risk maps: A GIS open source application – a case study in Norwest of Portugal. International Journal of Geographical Information Science, 27(4), 699–720.

TienBui, D., Tuan, T. A., Klempe, H., Pradhan, B., & Revhaug, I. (2016). Spatial prediction models for shallow landslide hazards: A comparative assessment of the efficacy of support vector machines, artificial neural networks, kernel logistic regression, and logistic model tree. Landslides, 13, 361–378.

Tiwari, M. K., & Chatterjee, C. (2010). Uncertainty assessment and ensemble flood forecasting using bootstrap based artificial neural networks (BANNs). Journal of Hydrology, 382(1), 20–33.

Thomas, J., & Leveson, N. G. (2011). Performing hazard analysis on complex, software- and human-intensive systems. In Proceedings of the 29th ISSC Conference about System Safety.

Tsai, F., Lai, J.-S., Chen, W. W., & Lin, T.-H. (2013). Analysis of topographic and vegetative factors with data mining for landslide verification. Ecological Engineering, 61, 669–677. https://doi.org/10.1016/j.ecoleng.2013.07.070

UN. (2002). Johannesburg Plan of Implementation of the World Summit on Sustainable Development (Technical report). United Nations.

Vakhshoori, V., & Zare, M. (2016). Landslide susceptibility mapping by comparing weight of evidence, fuzzy logic, and frequency ratio methods. Geomatics, Natural Hazards and Risk, 7(5), 1731–1752.

Van Westen, C. J. (2013). 3.10 remote sensing and GIS for natural hazards assessment and disaster risk management. In Treatise on geomorphology (pp. 259–298). Elsevier.

Wang, L.-J., Guo, M., Sawada, K., Lin, J., & Zhang, J. (2016). A comparative study of landslide susceptibility maps using logistic regression, frequency ratio, decision tree, weights of evidence and artificial neural network. Geosciences Journal, 20, 117–136.

Wisner, B., Blaikie, P., Cannon, T., & Davis, I. (2004). At risk: Natural hazards, people’s vulnerability and disasters. Routledge.

Xiao, L., Zhang, Y., & Peng, G. (2018). Landslide susceptibility assessment using integrated deep learning algorithm along the China-Nepal Highway. Sensors, 18(12), 4436.

Xu, K., Guo, Q., Li, Z., Xiao, J., Qin, Y., Chen, D., & Kong, C. (2015). Landslide susceptibility evaluation based on BPNN and GIS: A case of Guojiaba in the Three Gorges Reservoir Area. International Journal of Geographical Information Science, 29, 1111–1124. https://doi.org/10.1080/13658816.2014.992436

Yilmaz, I., & Ercanoglu, M. (2019). Landslide inventory, sampling and effect of sampling strategies on landslide susceptibility/hazard modelling at a glance. In Natural hazards GIS-based spatial modeling using data mining techniques (pp. 205–224).

Youssef, A. M. (2015). Landslide susceptibility delineation in the Ar-Rayth area, Jizan, Kingdom of Saudi Arabia, using analytical hierarchy process, frequency ratio, and logistic regression models. Environmental Earth Sciences, 73, 8499–8518.

Youssef, A. M., Pourghasemi, H. R., Pourtaghi, Z. S., & Al-Katheeri, M. M. (2016). Landslide susceptibility mapping using random forest, boosted regression tree, classification and regression tree, and general linear models and comparison of their performance at Wadi Tayyah Basin, Asir Region, Saudi Arabia. Landslides, 13, 839–856.

Yuan, J., Roy Chowdhury, P. K., McKee, J., Yang, H. L., Weaver, J., & Bhaduri, B. (2018). Exploiting deep learning and volunteered geographic information for mapping buildings in Kano, Nigeria. Scientific Data, 5, 180217.

Zhan, Y., Luo, Y., Deng, X., Chen, H., Grieneisen, M. L., Shen, X., Zhu, L., & Zhang, M. (2017). Spatiotemporal prediction of continuous daily PM2.5 concentrations across China using a spatially explicit machine learning algorithm. Atmospheric Environment, 155, 129–139.

Zhang, L., & Zhang, S. (2017). Approaches to multi-hazard landslide risk assessment. In Geotechnical safety and reliability.

Zhu, A.-X., Wang, R., Qiao, J., Qin, C.-Z., Chen, Y., Liu, J., Du, F., Lin, Y., & Zhu, T. (2014). An expert knowledge-based approach to landslide susceptibility mapping using GIS and fuzzy logic. Geomorphology, 214, 128–138. https://doi.org/10.1016/j.geomorph.2014.02.003

Zou, J., Huss, M., Abid, A., Mohammadi, P., Torkamani, A., & Telenti, A. (2019). A primer on deep learning in genomics. Nature Genetics, 51(1), 12–18.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Bhunia, G.S., Shit, P.K. (2022). Geospatial Technology for Multi-hazard Risk Assessment. In: Shit, P.K., Pourghasemi, H.R., Bhunia, G.S., Das, P., Narsimha, A. (eds) Geospatial Technology for Environmental Hazards. Advances in Geographic Information Science. Springer, Cham. https://doi.org/10.1007/978-3-030-75197-5_1

DOI: https://doi.org/10.1007/978-3-030-75197-5_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-75196-8

Online ISBN: 978-3-030-75197-5

eBook Packages: Earth and Environmental Science, Earth and Environmental Science (R0)