Abstract

Signal-based AE techniques use the entire transient waveform resulting from an AE event. As such, more information is available allowing for improved interpretation of fracture processes in a material or structure. Two signal-based approaches are presented and discussed in this chapter: Waveform analysis and quantitative analysis. The former has received increasing attention due to the recent developments and wide availability of machine learning algorithms. The latter is a classic approach that has its origin in seismology. The main approach associated with quantitative analysis is moment tensor inversion (MTI). While MTI requires accurate 3D source localization from an extensive network of sensors, waveform analysis can theoretically be performed with a single sensor. A comparison between signal- and parameter-based AE analyses is presented first. Subsequently, the measurement process is explained and its main influences on the recorded signals are discussed. Finally, waveform analysis and quantitative analysis approaches are described in detail, along with application examples from the literature.

1 Introduction

Approaches for recording and analyzing acoustic emission (AE) signals can be divided into two main groups: parameter-based and signal-based AE techniques (AET). Both approaches are currently applied successfully, each to different applications, so it is useful to recognize their fundamental differences. A detailed description of parameter-based techniques is provided in chapter “Parameter Analysis”. The reason that two approaches exist is related to the rapid developments in microelectronics over the last few decades. Early on, it was not possible to record, sample, and store a large number of waveforms (signals) over a sufficiently short period of time, which is a requirement for signal-based techniques. Even though significant technical advances have been made in recent years, it is still not feasible to use some signal-based techniques to monitor large structures. The relatively high cost of the large number of sensors and associated data recorders, as well as the time required to apply quantitative analysis techniques, are the main reasons why parameter-based techniques are still popular. However, some of the AE recorders used for classical (parameter-based) AE analysis are now able to store the waveforms of the detected AE signals, even though this is not the primary function of these devices. For applications using quantitative analysis techniques, multi-channel high-speed transient recorders are typically used. The best instruments are those that can be adapted to different applications: they can record waveforms if a signal-based approach is taken, or record large numbers of AE hits if a parameter-based approach, which requires the statistical analysis of many signals, is taken. In the following two sub-sections, the two analysis approaches are defined.
For a more detailed discussion about the differences between the different analysis techniques, their strengths, and limitations, the reader is referred to chapter “AE Monitoring of Real Structures: Applications, Strengths, and Limitations”, Sect. 1.6.

1.1 Parameter-Based Analysis

If AE events are recorded with one or more sensors such that a set of parameters is extracted from each signal and stored, but the signal itself is not stored, the procedure is usually referred to as a parameter-based (or classical, qualitative) AET. The idea is that the signals can be sufficiently described by a set of characteristic parameters (or features), and storing this relatively small amount of information consumes far less time and storage space than processing entire waveforms. Some typical parameters that can be automatically extracted by commercial AE recording equipment (see, e.g. chapter “Sensors and Instruments”, Sect. 7) are: arrival time (defined as the first crossing of a given amplitude trigger level, or threshold), maximum signal amplitude, rise time (defined as the duration between the arrival time and the time of maximum signal amplitude), and signal duration (defined as the time between the first and the last crossing of the threshold) (ASTM E610 1982; CEN 1330-9 2009).
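As a minimal sketch, assuming a single digitized waveform held in a NumPy array and a fixed amplitude threshold, the four parameters defined above could be extracted as follows. The function name `ae_parameters` and the synthetic test burst are hypothetical; commercial equipment applies additional timing rules that are not modeled here.

```python
import numpy as np

def ae_parameters(signal, fs, threshold):
    """Extract classical AE hit parameters from one digitized waveform.

    signal    -- 1D array of samples (e.g. volts)
    fs        -- sampling rate in Hz
    threshold -- trigger level, same units as `signal`
    Returns a dict (times in seconds), or None if the threshold is never reached.
    """
    abs_sig = np.abs(np.asarray(signal, dtype=float))
    above = np.flatnonzero(abs_sig >= threshold)
    if above.size == 0:
        return None                          # no hit: threshold never crossed
    i_first, i_last = above[0], above[-1]    # first and last threshold crossings
    i_peak = int(np.argmax(abs_sig))         # sample of maximum absolute amplitude
    return {
        "arrival_time": i_first / fs,
        "max_amplitude": float(abs_sig[i_peak]),
        "rise_time": (i_peak - i_first) / fs,
        "duration": (i_last - i_first) / fs,
    }

# Hypothetical burst: 150 kHz decaying sine starting at sample 500, sampled at 1 MHz
fs = 1e6
k = np.arange(1000)
sig = np.zeros(2000)
sig[500:1500] = np.sin(2 * np.pi * 150e3 * k / fs) * np.exp(-k / 200)
params = ae_parameters(sig, fs, threshold=0.1)
```

Amplitude is taken from the absolute value of the signal, matching the convention described for civil engineering applications. Raising the threshold shortens the measured duration and delays the arrival time, which is one reason why parameters depend on recording settings.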

Figure 1 illustrates these parameters on a hypothetical AE signal. Note that some of the parameters are adopted from seismology, and their definitions are not always identical across fields. For example, maximum amplitude in parameter-based AE analyses for civil engineering applications is usually defined as the maximum of the absolute value of the recorded signal (see, e.g. chapter “Parameter Analysis”, Fig. 1). The extracted AE parameters, and others described in chapter “Parameter Analysis”, can then be plotted over time, or statistical analyses can be applied to them, with the objective of capturing changes that might be associated with fracture or degradation processes occurring in a structure. The main advantages of parameter-based AET are that they can be performed with as few as one sensor and in real time. Because of this, they are the most cost-effective way of monitoring a structure. On the other hand, this simplified approach provides only a very limited view of the physics of the actual AE sources. Additionally, recording settings such as the trigger level and the type of sensor used directly affect the extracted parameters and, therefore, how their evolution over time is perceived. As such, parameter-based AET are also referred to as qualitative, or relative, i.e. they allow capturing changes over time for a specific structure or process. However, a comparison between datasets from different projects is often difficult or impossible because of the limitations described above.

1.2 Signal-Based Analysis

Signal-based approaches have been adopted from seismology and introduced to AE analysis in civil engineering to overcome some of the limitations of parameter-based analysis. Using signal-based AE analysis, as many signals as possible are recorded and stored, along with their full waveforms, which are sampled, i.e. converted from analog-to-digital (A/D), at sampling rates typically in the MHz range. A more comprehensive (and time-consuming) analysis of the data is possible using this approach, but usually only in a post-processing environment, i.e. not in real-time. This chapter discusses two signal-based analyses: Waveform analysis (see Sect. 3) and quantitative AE analysis (see Sect. 4). The fundamental difference between these two is that the former can theoretically be performed with a single sensor; the latter requires an extensive sensor network in order to localize and characterize AE events.

Waveform analysis as discussed in Sect. 3 refers to techniques that analyze, characterize, and compare AE waveforms over time. The concept is that changes observable within recorded signals can be associated with changes in the structure being monitored. In contrast to parameter-based techniques, entire waveforms are analyzed and compared rather than only a few select parameters. Waveform analysis has received increasing attention because of its suitability for application of machine learning and artificial intelligence techniques.

The term ‘quantitative’, as it is used in conjunction with AE analysis, was introduced by several authors (Scruby 1985; Sachse and Kim 1987) in the 1980s to distinguish this approach from the classical, qualitative techniques based on waveform parameters. The objective of quantitative AE analysis (see Sect. 4) is to describe the nature of each AE source as accurately as possible, rather than drawing conclusions from its effects measured at sensor locations away from the source. This approach requires a network of high-fidelity sensors in conjunction with a sophisticated and time-consuming analysis. While considered the most accurate approach, it is still used mostly in the laboratory because of its significant requirements on equipment, computation, and interpretation.

When discussing the pros and cons of these two approaches it is important to keep the specific application in mind. This is true for the simple counting of the number of AE occurrences in a material under load by a single sensor, as well as for networks of many sensors used for locating and analyzing data in the time and frequency domains. A more detailed discussion of the applications and limitations of parameter and signal-based AE analysis techniques along with a comparison can be found in chapter “AE Monitoring of Real Structures: Applications, Strengths, and Limitations”, Sect. 1.6.

2 Measurement Setup and Process and Their Influence on Signal-Based AE Techniques

Signal-based AE techniques depend on the characteristics of all parts of the measurement setup used to record and analyze AE, in particular, the type of sensors used. Therefore, and in addition to chapter “Sensors and Instruments”, some general remarks about sensors are given in this section. Note that the terms “sensor” and “transducer” are used interchangeably. Technically, a transducer is a device that can both sense and actuate, while a sensor is a type of transducer that can only sense.

2.1 The Concept of Systems Theory and Transfer Functions

AE signals from actual sources, such as small fractures due to cracking, are of low magnitude compared to those from artificial sources applied by the operator, such as a pencil lead break (PLB) or an ultrasonic transducer. Thus, the signals arriving at the sensor are weak and must be amplified if they are to be detected at all. AE signals are subject to a variety of influences, such as variations in the material along the ray path (heterogeneities, anisotropy) and the measurement setup (coupling, characteristics of sensors and preamplifiers, etc.). Note that these influences can also change over time due to environmental factors as well as degradation processes. To consider their effects on the recorded signals, methods from systems theory can be applied. This approach is also known as linear filter theory: the output signal is described as the result of applying a sequence of linear filters, each characterized by a complex-valued transfer function, to an input signal. Each filter accounts for one aspect of the wave propagation and measurement process. The AE source represents the input signal and the recorded waveforms are the output signal. To apply systems theory, each known influence is assigned its own transfer function, consisting of an amplitude (frequency) response and a phase response function. In AE applications it is important to know or estimate the shape and importance of these functions as a precondition for eliminating their influence, where possible. The different influences (or system components) are depicted conceptually in Fig. 2, which is also valid for other methods, such as ultrasonic testing.

Concept of transfer functions to characterize the AE measurement process (adapted from Grosse and Schumacher 2013). The figure on the right shows recorded signals with picked P-wave arrival times, stacked vertically according to the distance between the sensor that recorded the signal and the estimated AE source. Note that this representation requires a preceding source localization. The slope of the orange line represents the mean P-wave velocity

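Mathematically, the measurement process can be represented by the following function:

$$\mathrm{Rec}(\nu) = S(\nu)\,\mathrm{TF}_G(\nu)\,\mathrm{TF}_S(\nu)\,\mathrm{TF}_R(\nu) \qquad (1)$$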

where Rec represents the recorded signal, S is the source function, TFG are the Green’s functions describing the wave propagation in a material, TFS is the transfer function of the sensor (incl. coupling), and TFR is the transfer function of the data recorder. As expressed here in the frequency domain, the complex-valued components are linked through simple pointwise multiplication; in the time domain, the corresponding operation would be convolution. To obtain a minimally biased representation of the source function, deconvolution is employed, which removes unwanted influences from the recorded signal. Most commonly, this is done to remove the influence of the sensor response from a recorded signal, provided the transfer function of the sensor is available (see Sect. 2.4 for more details).

In quantitative analysis, the source function, S, is of great interest because it represents the fracture type. Approaches to determine the fracture type are discussed in Sect. 4. While the intention of non-destructive testing and structural health monitoring methods is the investigation of material parameters or degradation and failure processes, and not the characterization of the measurement system, the effects related to sensors, coupling, and the recording system have to be minimized as much as possible. In the following subsections, the components of the measurement process and their influence on the measurement are described individually, along with how they can be quantified.

2.2 Sensors

Sensors (and transducers) represent the “ears” of a measurement process and can have a significant effect on the measured quantity, depending on their characteristics and how they are coupled to a structure. In the following, an overview of sensors, how they work, and their main characteristics relevant to signal-based AET is provided. Additional details about sensors and instruments are provided in chapter “Sensors and Instruments”.

Working Principle and Relevant Characteristics

The transducers used almost exclusively in AET are sensors that exploit the piezoelectric effect of lead zirconate titanate (PZT), which transforms displacements into a voltage. While piezoelectric sensors and their design are described in numerous books and papers (e.g. Krautkrämer and Krautkrämer 1986; Kino 1987; Hykes et al. 1992), some characteristics play an influential role in AE measurements and need to be highlighted. These features are important for the sensitive recording of AE (sensitivity) and for the broadband analysis of the signals with reference to fracture mechanics (frequency).

To increase the distance over which piezoelectric sensors can detect AE sources, i.e. to detect smaller signals over longer distances, sensors are often operated in resonance, i.e. the signals are recorded within a narrow frequency range determined by the frequency characteristics of the transducer. The disadvantage is that an analysis of the frequencies present in the signal is of little or no value, because the recorded frequencies are dominated by the sensor resonance and are therefore always the same. Highly damped sensors, such as those used for vibration analysis, are operated outside of their resonant frequency, allowing high-fidelity analyses to be performed, but are usually less sensitive to AE signals. Progress in the development of AE techniques has led to the need for highly sensitive, wideband displacement sensors with a flat, i.e. high-fidelity, frequency response (the sensor gives the same response over a wide frequency range).

Many papers address this problem. For many years, a conical transducer developed at NIST (National Institute of Standards and Technology) by Proctor (1982, 1986) out of a Standard Reference Material (SRM) and mass-backed (600 g) was used as a reference for AE measurements. Several newer approaches have explored other transducer materials, such as polyvinylidene fluoride (PVDF) or copolymers (Hamstad 1994, 1997; Hamstad and Fortunko 1995; Bar-Cohen et al. 1996), as well as embedded sensors (Glaser et al. 1998).

However, most sensors currently used for AE applications on concrete are manufactured in a more traditional way and show either a single resonance or several discrete resonances. The latter, called multi-resonance transducers, have a higher sensitivity than mass-backed sensors operated outside of their resonant frequency. Such sensors should not, however, be considered (true) broadband, and it is essential to know their transfer function, also referred to as calibration curve (or instrument response). Otherwise, signal characteristics originating from the source cannot be distinguished from artifacts introduced by incorrect knowledge of the frequency response.

Figure 3 shows frequency response functions for the select types of sensors discussed above. As can be observed, there are significant differences between the responses of different types of sensors. The resonant sensor, for which the response is shown in Fig. 3 (top), is sensitive over a narrow frequency range around 40 kHz. The multi-resonant sensor (shown in the middle) is sensitive over a broader range between 120 and 350 kHz with resonances at approximately 140, 195, 230, 275, 290, and 330 kHz. The frequency response of the sensor shown at the bottom can be considered high-fidelity and broadband, as it has a relatively flat response over a wide range of frequencies.

Frequency response functions (or calibration curves) for select types of AE sensors. Amplitudes are on a linear scale and have been normalized (Grosse 2021)
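Why a resonant sensor makes frequency analysis of little value can be illustrated with a toy model. The sketch below assumes the sensor behaves like a damped single-degree-of-freedom resonator tuned to 40 kHz (the resonance of the sensor in Fig. 3, top) with an assumed quality factor Q = 20, and feeds it two hypothetical Gaussian pulses of different width, i.e. sources with different spectra. Real sensors are not this simple, and all names and values are illustrative.

```python
import numpy as np

def resonator_response(f, f0, q):
    """Hypothetical single-degree-of-freedom resonator with a peak of about q at f0."""
    r = f / f0
    return 1.0 / (1.0 - r**2 + 1j * r / q)

def dominant_frequency(pulse_width, f0=40e3, q=20.0, fs=1e6, n=16384):
    """Frequency of the spectral peak after a Gaussian pulse passes the sensor model."""
    t = (np.arange(n) - n // 4) / fs
    pulse = np.exp(-0.5 * (t / pulse_width) ** 2)   # broadband "source" signal
    f = np.fft.rfftfreq(n, 1 / fs)
    output = np.abs(np.fft.rfft(pulse) * resonator_response(f, f0, q))
    return f[np.argmax(output)]

for width in (1e-6, 3e-6):
    print(f"pulse width {width * 1e6:.0f} us -> peak at {dominant_frequency(width) / 1e3:.1f} kHz")
```

Both outputs peak at about 40 kHz: the spectrum of the recorded signal reports the sensor resonance, not the difference between the two sources.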

A calibration of the sensors’ frequency response, as well as an understanding of their directional sensitivity, is important for many AE applications and is discussed in more detail in Sect. 2.4.

Coupling

Coupling between sensors and specimen is important because the amplitudes of AE signals are usually small, and poor coupling can result in signal loss. In addition to the different ways of coupling, various methods exist for attaching the sensors to the structure. Adhesives or gluey coupling materials, and substances like wax or grease, are often used due to their low impedance. Acoustic impedance, defined as the product of a material’s density and wave velocity, is the most important factor in the selection of a coupling technique, because the impedance mismatch between materials determines how much signal energy is lost as AE waves travel from the surface of the structure into the sensor. If the structure has a metallic surface, magnetic or immersion techniques are widely used. Different methods using a spring mechanism or rapid-setting cement can be applied for other surfaces. In general, the coupling should reduce the loss of signal energy and have lower acoustic impedance than the material being tested. In all cases, the total volume of air between the sensor and the surface must be minimized. Some surface preparation might be necessary to remove loose particles and create a flat surface for optimal contact across the entire aperture of the sensor. Surface-coupled sensors should be tested regularly to determine whether the coupling conditions have changed.
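How strongly an impedance mismatch limits energy transfer can be estimated with the normal-incidence transmission coefficient for a plane wave crossing a single plane interface. The material values below are rounded, assumed figures for illustration only, and the model ignores the finite thickness of a real couplant layer, so it only indicates orders of magnitude.

```python
def acoustic_impedance(density, velocity):
    """Characteristic acoustic impedance Z = rho * c in kg/(m^2 s)."""
    return density * velocity

def energy_transmission(z1, z2):
    """Fraction of wave energy transmitted through a plane interface at
    normal incidence: T = 4 Z1 Z2 / (Z1 + Z2)^2 (equals 1.0 for matched media)."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2

# Rounded, illustrative material values (assumed, not taken from the chapter)
z_concrete = acoustic_impedance(2400.0, 4000.0)
z_grease = acoustic_impedance(900.0, 1500.0)
z_air = acoustic_impedance(1.2, 343.0)

print(f"concrete -> grease: {energy_transmission(z_concrete, z_grease):.2f}")
print(f"concrete -> air:    {energy_transmission(z_concrete, z_air):.1e}")
```

With these assumed values, roughly 0.43 of the incident energy crosses a concrete-grease interface, whereas less than 0.001 crosses into air, which illustrates why trapped air under the sensor must be avoided.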

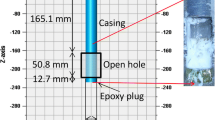

For concrete applications, embeddable transducers can also be used. These can be used both for new structures as well as existing structures, where they are inserted into a small borehole, which is then filled with expansive grout. An example of a recently developed robust embeddable ultrasonic transducer is presented in Niederleithinger et al. (2015). The advantage of these devices is that the coupling is likely to be more reliable and consistent long-term compared to surface-coupled sensors. Also, the strong surface waves, which usually mask the shear wave arrival (see Sect. 4.1), are avoided. The challenge with embedded sensors is that their location might not be accurately known and, depending on their shape and size, the point of measurement might vary and might have to be determined iteratively by locating the source first.

While commonly used in ultrasonic testing, contactless (or air-coupled) measurements are an exception in the field of AE monitoring, because the low signal amplitudes of AE sources already make detection challenging.

2.3 Preamplifiers and Data Recorders

Commercially available AE recorders differ from general-purpose transient recorders. Some of them are also able to record AE events but must be treated as black-box devices. This chapter therefore deals mainly with devices based on transient recorders (TR) or plug-in TR boards.

As discussed in Sect. 2.2, piezoelectric sensors transform displacements into a voltage, and preamplifiers are usually used to amplify these signals. Because the cables from the sensor to the (primary) amplifier are susceptible to electromagnetic noise, short, shielded cables should be used. Preamplifiers with state-of-the-art transistors should be used to minimize electronic noise, and a flat response in the frequency range of interest is optimal. If available, transducers with integrated preamplifiers for an appropriate frequency band are often desirable. Another issue is the large dynamic range of AE signals, which demands gain-ranging amplifiers. Ideally, these would store the signal in analog form in a buffer so that the amplification can be optimally adjusted prior to digitization. Unfortunately, such devices are not yet available in commercial AE data recorders.

Regarding data acquisition, there are two main requirements, concerning A/D conversion and triggering. Fast A/D units have to be used to ensure that a large number of events can be recorded; usually each channel of the recording unit is equipped with its own A/D converter. This also ensures accurate time synchronization between channels, which is key for quantitative analyses. Anti-aliasing (low-pass) filters are required so that, in accordance with Shannon’s sampling theorem, the digitized signal contains no components above half the sampling rate (the Nyquist frequency) and can be properly transformed to the frequency domain (Rikitake et al. 1987).
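The consequence of violating the sampling theorem can be shown numerically. In this sketch, with an assumed sampling rate of 1 MHz (Nyquist frequency 500 kHz), a hypothetical 600 kHz component that reaches the A/D converter unfiltered appears at 400 kHz in the digitized signal, where it cannot be distinguished from a genuine 400 kHz component. The function names are illustrative.

```python
import numpy as np

def folded_frequency(f_signal, fs):
    """Frequency at which a tone at f_signal appears after sampling at rate fs."""
    return abs(f_signal - fs * round(f_signal / fs))

def peak_frequency_after_sampling(f_signal, fs, n=4096):
    """Numerical check: sample a pure tone and locate its spectral peak."""
    t = np.arange(n) / fs
    x = np.sin(2 * np.pi * f_signal * t) * np.hanning(n)   # Hann window limits leakage
    return np.fft.rfftfreq(n, 1 / fs)[np.argmax(np.abs(np.fft.rfft(x)))]

fs = 1e6
for f_true in (200e3, 600e3):
    print(f_true, folded_frequency(f_true, fs), peak_frequency_after_sampling(f_true, fs))
```

Because the folding happens at the moment of sampling, it cannot be undone digitally afterwards; the low-pass filter has to act on the analog signal.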

If possible, applying different triggering conditions can reduce the amount of noise that is recorded. Simple fixed-threshold triggers are usually not adequate for signal-based AET because of their inability to accurately detect signals across a wide dynamic range. More sophisticated techniques such as slew-rate, slope, or reference band triggers are more appropriate. Note that the trigger time determined here is usually not an accurate wave arrival (or onset) time and is thus not directly used for source localization (see chapter “Source Localization”) or quantitative analysis (see Sect. 4).

In modern AE systems, the time it takes to convert a signal from analog to digital, and to store it to a hard disk, is in the range of microseconds, depending on signal length and sampling rate. There are still many areas, however, where AE equipment can be improved.

2.4 Sensor Calibration

The objective of sensor calibration is to determine the inherent characteristics of a sensor (or transducer), which are captured by its transfer function. Technically, calibration is usually performed on the entire measurement system, including sensors, preamplifiers, cabling, and data recorders. Since the term “sensor calibration” is commonly used in the literature, it is also used herein.

In the literature (e.g. Hsu and Breckenridge 1981; Wood and Harris 1982; Miller and McIntire 1987) different methods of calibrating sensors and determining their transfer (or frequency response) functions are described. Measurements used in sensor calibration should include the frequency, as well as the phase response function. To demonstrate the basic principles, only the frequency domain is considered in the following discussion.

Most calibration methods described in the literature are based on the ‘face-to-face’ method, in which two sensors of the same kind are coupled using one as a transmitter and the other as a receiver. Another method of calibration is to use a defined sharp pulse (e.g. by breaking a glass capillary rod) while the sensor is coupled to a large block made of a homogeneous material (steel or aluminum) or to a steel rod.

Hatano and Mori (1976) suggested the use of a reciprocity method, where sensors are coupled onto a steel plate. This approach uses Rayleigh waves. Other methods described in the literature suggest using a laser vibrometer to measure the displacement of the free surface of the sensor, or a network analyzer (Weiler and Grosse 1995; Grosse 1996).

The sensor calibration process encounters several problems due to aperture effects (diameter of the sensor element is of the order of the wavelength), the mass of the sensor (which affects the measurement of the displacement) or the measurement technique itself (recording of unidirectional motions instead of a three-dimensional vector). Because of these factors, calibration is never fully absolute, and subject to some simplifications.

In the following, the results of the face-to-face method are compared to measurements of the displacement of the freely oscillating surface of the sensor.

In Fig. 4, typical frequency response functions for a multi-resonant broadband sensor are presented, where the top curve was obtained using a laser vibrometer and the bottom one with the face-to-face method. The former was obtained by sweeping through a frequency range from 0 to 500 kHz (Lyamshev et al. 1995). For the face-to-face measurements, a delta pulse containing all relevant frequencies in a selected range was used. As can be observed, the two curves are comparable, and the positions of the natural frequencies appear to be independent of the calibration technique.

McLaskey and Glaser (2012) present a procedure for absolute calibration of AE sensors that is of particular interest for signal-based AET, especially the quantitative analysis described in Sect. 4. The calibration was performed on a thick steel plate for several different commercial AE sensors. The sources used were steel ball impact and glass capillary fracture, which have well-established analytical solutions. Therefore, transfer functions can be estimated from theory without requiring a reference transducer. Next, aperture effects are removed using an autoregressive-moving average (ARMA) model, which allows the actual displacement time history to be recovered. The displacement time history is a key input if moment tensor inversion (MTI) techniques are to be applied to the measured signal (see Sect. 4.3).

Frequency response functions on calibration documents provided by sensor manufacturers are often displayed on a logarithmic scale. Resonances are more difficult to detect in this format and, therefore, it is advisable to ask for calibration curves on both linear and logarithmic scales. The frequency responses should also be available in digital form, in addition to paper, to allow for the application of deconvolution techniques. The result of a deconvolution is the source function, S, which requires the transfer functions of all components to be available (see Eq. (1)). For more information on deconvolution applied to AE signals, see, e.g. Simmons (1991).

Additional information on sensor calibration can also be found in chapter “Sensors and Instruments”, Sect. 4.

Effect of Incident Angle on Sensor Response

There are many other sensor characteristics that affect an AE signal. One example is that the sensitivity depends on the incident angle of the signal with respect to the sensor orientation. The measurement techniques for frequency response described above assume that the signal arrives perpendicular to the contact plane of the sensor, an assumption that is often invalid for AE measurements. Analyses based on amplitude calculations are thus inconsistent if the angle dependence of the sensor is not taken into account. Note that estimating the incidence angle requires a source localization (see chapter “Source Localization”). This directivity effect varies from sensor to sensor and can be measured using a cylindrical aluminum probe, as illustrated in Fig. 5. The piezoelectric sensors evaluated can show a maximum response at 90° incidence and some peaks at other incidence angles. Besides this, the aperture size of a sensor can affect its high-frequency sensitivity. Unfortunately, data on the directional sensitivity pattern of commercial AE sensors are usually not available from the manufacturer.

If the sensors are attached to the surface of a specimen, which is typically the case, the P and S waveforms must be adjusted to account for the free surface amplification using the formulae from Aki and Richards (1980). The surface amplification is caused by the incident elastic wave constructively interfering with the wave reflected back into the specimen. These formulae are based on a plane wave incident on a plane surface. The free surface effect is a factor of 2 for SH waves, but for P and SV waves it is a function of the incidence angle (and of the ratio of P- to S-wave velocity).

3 Waveform Analysis

In this section, approaches that consider entire AE waveforms are discussed. These signal-based techniques do not rely on 3D localization and can theoretically be performed with a single sensor. The basic premise is that AE waveforms are recorded and their changes over time are characterized and interpreted. If possible, it is still recommended that events be localized so that signals can be grouped by their region of origin. Waveform analysis can also be performed in conjunction with the quantitative analysis discussed in Sect. 4, as it might provide complementary information.

3.1 Frequency Analysis and Waveform Correlation

Frequency analysis and frequency-based correlation techniques are widely discussed in the AE community. After the introduction of the Fast Fourier Transform (FFT) in the 1960s, frequency analysis techniques became easy to apply, and numerous applications for AE testing have been suggested. These range from simple filtering techniques to eliminate noise to more sophisticated approaches that correlate the frequency content of a signal with source parameters in order to classify the signals in terms of fracture mechanics.

For example, the similarity of two different AE waveforms can be assessed by determining their coherence function. Such an assessment is useful because similar signals, having similar frequency content, can indicate that their source mechanisms are also similar (assuming that the influence of the medium and the transducer characteristics is small). The similarity of two signals can be quantified using a mathematical tool called Magnitude Squared Coherence (MSC) (Carter and Ferrie 1979). By applying a Discrete Fourier Transform (DFT), the signals are converted from the time to the frequency domain. Consider two transient signals, x and y (e.g. signals (a) and (b) in Fig. 6). The auto spectral densities, Gxx and Gyy, and the cross spectral density, Gxy, of the signals must first be calculated. Then, the coherence spectrum, Cxy, is defined as the squared magnitude of the cross spectrum divided by the product of the two autospectra:

$$C_{xy}(\nu) = \frac{\left|G_{xy}(\nu)\right|^2}{G_{xx}(\nu)\,G_{yy}(\nu)} \qquad (2)$$

where ν indicates frequency. A complete derivation of the formulae can be found in Balázs et al. (1993). The mean coherence, C̄xy, is obtained by averaging the coherence functions over all channels that recorded the two signals.

An example of the mean coherence for two waveform pairs is given in Fig. 7. This figure was generated using two events with high similarity (Fig. 6a, b) and two others with less similarity (Fig. 6a, c). In the frequency range where the signals exceed the noise level of this experiment, approximately 0 to 400 kHz, high coherence is found for the first signal pair (Fig. 7, left). Perfect coherence is only obtained for two identical signals and would result in a coherence value of 1 over the entire frequency range. For comparison, the coherence function of two less similar events (Fig. 7, right) is shown. A single value representing the overall coherence of two signals can be obtained by integrating the mean coherence function over a selected frequency range:

$$\hat{C}_{xy} = \int_{\nu_{min}}^{\nu_{max}} \bar{C}_{xy}(\nu)\,d\nu \qquad (3)$$

For the case of discrete signals, Grosse (1996) suggested deriving this coherence sum, Ĉxy, as the area underneath the curves using numerical integration (i.e. summation) over the range νmin to νmax. Here, the upper limit νmax is chosen as the upper corner frequency, above which noise dominates the signal. The lower limit can usually be set to zero if there is no signal offset in the data.

Selecting a frequency band of 0–400 kHz, coherence sums of Ĉxy = 0.533 and 0.151 are calculated using Eq. 3 for the similar and the less similar pair, respectively. Figure 8 gives an example of MSC analysis using the software Signal Similarity Analysis MSC (SiSimA-MSC, University of Stuttgart).

By transforming the waveforms to the frequency domain and calculating their coherence functions, the degree of similarity between recorded waveforms can be quantified and similar source mechanisms might be identified. This method is intended to allow for rapid, systematic classification of AE signals to quantify similarities and differences in signal patterns. One recent related application of this approach is reported in Hafiz and Schumacher (2018) on active ultrasonic stress wave monitoring of concrete. In this work, the researchers used MSC to monitor internal stress variations in concrete in both laboratory specimens and an in-service bridge.

An extension of the above approach is reported in Kurz et al. (2004) whereby similarity matrices are constructed that allow a visual interpretation of a collection of signals and their changes over time. The approach was demonstrated on two small-scale concrete specimens, one unreinforced and the other one fiber-reinforced. Additionally, cross-spectral coherence analysis was employed to determine the minimum number of AE events required to sufficiently characterize a particular fracture type.
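A similarity matrix of the kind described above can be sketched as a symmetric array of pairwise scores. To keep the block short and self-contained, the pairwise measure here is the peak normalized cross-correlation, swapped in for the cross-spectral coherence used by Kurz et al. (2004); any pairwise measure, such as a coherence sum, can be passed instead. The helper names are illustrative.

```python
import numpy as np


def peak_ncc(x, y):
    """Peak normalised cross-correlation (illustrative; 1 for identical signals)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x = (x - x.mean()) / (x.std() * len(x))
    y = (y - y.mean()) / y.std()
    return float(np.max(np.correlate(x, y, mode="full")))


def similarity_matrix(signals, measure=peak_ncc):
    """Symmetric matrix of pairwise similarities (diagonal assumed to be 1)."""
    n = len(signals)
    s = np.eye(n)
    for i in range(n):
        for j in range(i + 1, n):
            s[i, j] = s[j, i] = measure(signals[i], signals[j])
    return s
```

Ordering the signals by recording time and plotting the matrix as an image gives the kind of visual overview of a signal collection, and of its change over time, that the approach aims for.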

There are other signal classification approaches available that can be used to detect different fracture mechanisms using various measures of signal similarity. These are often referred to as ‘feature extraction algorithms’ and are introduced next.

3.2 Time-Frequency Analysis

This family of techniques is well suited for non-stationary signals and includes the wavelet transform (WT) (see, e.g. Hamstad 2001; Vallen and Forker 2001; Pazdera and Smutny 2001), the short-time Fourier transform (STFT) (see, e.g. Kaphle 2012), and the Hilbert-Huang transform (HHT) (see, e.g. Lu et al. 2008). The wavelet transform was developed in the 1980s by the geophysicist Jean Morlet as a generalization of the STFT (Daubechies 1996). An advantage of these techniques is that they relate changes in the frequency content of a signal to the time domain. This is particularly useful for AE signals with dispersive characteristics, which is the case for waves traveling in thin elements such as steel plates. Another application is improved onset detection of AE signals (Grosse et al. 2004).
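The idea of relating frequency content to time can be illustrated with a plain STFT. This is a minimal NumPy sketch, assuming a Hann window of 128 samples and a hop of 32 samples (illustrative values); it tracks the strongest frequency bin in each frame, which for a dispersive or chirp-like signal changes over time. The helper names are illustrative.

```python
import numpy as np


def stft_magnitude(x, fs, nperseg=128, hop=32):
    """Magnitude STFT with a Hann window (illustrative helper).

    Returns frame centre times (s), frequencies (Hz) and an array of shape
    (n_frames, n_freqs).
    """
    x = np.asarray(x, dtype=float)
    win = np.hanning(nperseg)
    starts = np.arange(0, len(x) - nperseg + 1, hop)
    frames = np.stack([win * x[s:s + nperseg] for s in starts])
    spec = np.abs(np.fft.rfft(frames, axis=1))
    times = (starts + nperseg / 2) / fs
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return times, freqs, spec


def dominant_frequency_track(x, fs, **kwargs):
    """Frequency of the strongest STFT bin in each frame (a simple ridge)."""
    times, freqs, spec = stft_magnitude(x, fs, **kwargs)
    return times, freqs[np.argmax(spec, axis=1)]
```

The window length trades time resolution against frequency resolution (here 64 µs and about 15.6 kHz at 2 MHz sampling); wavelet transforms relax this fixed trade-off by scaling the window with frequency.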

3.3 Machine Learning-Based Approaches

Over the last couple of decades, many applications of artificial intelligence (AI) techniques have been reported. A recent brief overview of machine learning (ML) techniques, a subset of AI, used in the field of NDE is provided in Harley and Sparkman (2019). The most common techniques that show promise for AE waveform analysis are summarized below.

Pattern recognition and clustering have been proposed to discriminate between different types of AE events (see, e.g. Nair et al. 2019; Anastasopoulos 2005; Rippengill et al. 2003). The goal is to group (or cluster) waveforms with similar features, such that each group corresponds to a different source mechanism or failure type. A set of characteristics, or parameters, is extracted from each signal and then used by the algorithm to determine the clusters. An example of this approach evaluated on small fiber-reinforced polymer (FRP) specimens is reported by Sause et al. (2012).
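A minimal clustering sketch, assuming that a few features (for example, peak frequency and rise time; the choice is hypothetical) have already been extracted from each signal. It uses plain k-means with a deterministic farthest-point initialization. This is one common clustering algorithm, not necessarily the one used in the cited studies, and the helper name `kmeans` is illustrative.

```python
import numpy as np


def kmeans(features, k, n_iter=100):
    """Plain k-means on standardised feature vectors (illustrative helper).

    features: array (n_signals, n_features); every feature must vary,
    otherwise the standardisation divides by zero.
    """
    X = np.asarray(features, dtype=float)
    X = (X - X.mean(axis=0)) / X.std(axis=0)    # put all features on one scale
    # Farthest-point initialisation: start at the first signal, then repeatedly
    # take the signal farthest from all centres chosen so far.
    centres = [X[0]]
    for _ in range(1, k):
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centres], axis=0)
        centres.append(X[np.argmax(d)])
    centres = np.array(centres)
    for _ in range(n_iter):
        dist = np.linalg.norm(X[:, None, :] - centres[None, :, :], axis=2)
        labels = np.argmin(dist, axis=1)        # assign each signal to nearest centre
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                        else centres[j] for j in range(k)])
        if np.allclose(new, centres):
            break
        centres = new
    return labels
```

Standardizing the features matters because raw AE parameters differ by many orders of magnitude (Hz versus seconds); without it, the largest-valued feature would dominate the distance.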

Matrix decomposition techniques such as singular value decomposition, independent component analysis, and dictionary learning use entire waveforms to determine changes occurring over time. These techniques decompose the signals into a set of components, with the objective of finding components that might be related to physical processes of interest. Ideally, components exist that are representative of fracture processes while being insensitive to noise and independent of environmental variations. These techniques have found application in ultrasonic guided wave monitoring and might also have potential in AE analysis.
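The singular value decomposition variant can be sketched in a few lines. This sketch assumes equal-length waveforms stacked as rows and subtracts the average waveform first. The right singular vectors are then component waveforms, the scaled left singular vectors give each waveform's weight on them, and a sharp drop in the singular values indicates how many components carry structure above the noise. The helper name is illustrative.

```python
import numpy as np


def waveform_components(waveforms, n_components):
    """Decompose a stack of waveforms (rows) with the SVD (illustrative helper).

    Returns per-waveform scores, the component waveforms and all singular values.
    """
    W = np.asarray(waveforms, dtype=float)
    W = W - W.mean(axis=0)                       # remove the average waveform
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    scores = U[:, :n_components] * s[:n_components]
    return scores, Vt[:n_components], s
```

Tracking the scores over recording time is one way to look for changes; whether a component corresponds to a fracture process rather than to, for example, temperature or coupling has to be established separately.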

Neural networks consist of an input layer and an output layer that are connected via a number of hidden layers. The layers are related through nonlinear functions whose parameters (weights) must be estimated, i.e. learned from training data. Applications of neural networks for AE source localization have been reported by, e.g. Kalafat and Sause (2015) and Ebrahimkhanlou and Salamone (2018).

Combinations of ML techniques are also reported. For example, Emamian et al. (2003) discuss an approach that aims to classify (or cluster) AE signals using neural networks based on subspace projections of signals.

For additional information on ML techniques and their application to structural health monitoring (SHM), the reader is referred to Farrar and Worden (2012).

3.4 Closing Remarks

AE waveform analysis faces the problem that usually only a short part of an AE signal, the very first coherent portion, is directly influenced by the fracture process in an undisturbed way. After a few oscillations, the signal is generally dominated not by the source itself but by side reflections or other influences related to the material (anisotropy, heterogeneity, etc.), the propagation path, or the sensor characteristics (sensor resonance, coupling, etc.) (Köppel 2002). Similar limitations exist for all of the aforementioned applications. In particular, effects due to the instrumentation used, the geometry, and the wave travel path might dominate the characteristics of a recorded waveform. For example, events from the same source region with similar failure mechanisms would result in a very low coherence sum if they were recorded with different types of sensors. Furthermore, recording these signals with sensors of the same type at different locations, with different coupling or wave travel conditions, would also result in a low coherence sum. Without good knowledge of all these influences, and without properly accounting for them, signal classification algorithms are of little value, especially if the material is complex in structure and geometry.

ML techniques, while promising, face three challenges (Harley and Sparkman 2019): the feature, black box, and data challenges. The first is related to accuracy, which depends strongly on the selected waveform features. The black box challenge is a particular problem for neural network approaches, which offer little insight into the relationships they have learned. The third challenge is the large amount of data necessary to train ML algorithms reliably. Together, these challenges may explain why ML-based approaches have so far been applied only to well-controlled experimental studies. In conclusion, waveform analysis, while appealing and promising, needs to be applied with caution. While it might work well for well-defined situations, i.e. for tests where the aforementioned conditions are very similar, it does not provide a generally applicable solution.

4 Quantitative Analysis

Since quantitative analysis relies on inversion methods, it is worth introducing the concept of inversion. Numerical modeling of data, d, for a given set of model parameters, m, is referred to as a forward problem, where F is an operator representing the governing equations relating the model and the data, as follows:

\[
d = F\left( m \right) \tag{4}
\]

In the inverse problem, a model is assumed to approximate the physics of the problem, and the model parameters are determined from the data, as follows:

\[
m = F^{-1}\left( d \right) \tag{5}
\]

The forward problem starts with the causes and then calculates the effects, while the inverse problem analyzes the effects and aims to estimate the causes. An entire branch of mathematics known as inverse theory has emerged to study the solution of inverse problems, and many algorithms are available to perform the inversion. Examples include the singular value decomposition method (Lawson and Hanson 1974) and the generalized linearized inverse method (e.g. Barker and Langston 1982).
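For the special case of a linear forward operator, where F is a matrix, the singular value decomposition method reduces to applying a pseudo-inverse. This is a minimal sketch, assuming such a linear problem. Singular values below a relative cutoff (an illustrative default) are discarded so that nearly unresolvable parameter combinations do not amplify noise. The helper name `svd_solve` is illustrative.

```python
import numpy as np


def svd_solve(F, d, rcond=1e-10):
    """Least-squares solution of d = F m via a truncated SVD pseudo-inverse.

    Singular values below rcond * s_max are discarded to stabilise
    ill-conditioned problems (illustrative helper).
    """
    F = np.asarray(F, dtype=float)
    d = np.asarray(d, dtype=float)
    U, s, Vt = np.linalg.svd(F, full_matrices=False)
    keep = s > rcond * s[0]
    # m = V_k diag(1/s_k) U_k^T d, using only the retained singular triplets
    return Vt[keep].T @ ((U[:, keep].T @ d) / s[keep])
```

For a well-conditioned, overdetermined system, this gives the same result as an ordinary least-squares solve; the truncation only matters when some singular values are close to zero.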

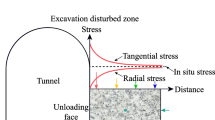

Through inversion, quantitative analysis techniques use parts of, or the entire, AE waveforms recorded by a sensor network to determine information regarding the nature of an AE source (Fig. 9). They rely on accurate 3D localization and require signals with a relatively high signal-to-noise ratio (SNR) to be successful. Two inverse problems are discussed below. The first is the source localization problem, in which arrival times are inverted to obtain the 3D source location. The second, more involved, problem is moment tensor inversion (MTI), in which phase amplitudes and polarities are inverted to determine the source mechanism.

4.1 Overview of Source Localization

Quantitative AE analysis relies on source localization to determine the spatial and temporal coordinates of an AE source, so that fracture sources in a material or structure can be imaged. The approach used to localize AE events depends on the size and geometry of the structure or element being tested or monitored, and on whether a solution is required in two or three dimensions. While a detailed description of these methods is the focus of chapter “Source Localization”, a brief overview is provided in this section as it is relevant for inversion techniques.

Determination of Onset Times and Amplitudes

Before the parameters of an AE source can be estimated, the onset (or arrival) times of the wave modes of interest have to be determined for each recorded AE signal. In seismograms from a mining environment, P- and S-wave arrivals are typically well separated, and hence both can be picked. For AE in civil engineering materials, where wave travel paths are comparatively short, this separation is typically small. In addition, when surface-mounted transducers are used, the signals contain a strong surface (Rayleigh) wave component that closely follows the S-wave, which makes reliable S-wave picking a challenge (Linzer et al. 2015). Therefore, usually only the P-wave arrival time, tP, can be determined reliably. For inversion techniques such as MTI, the amplitude of the P-wave, uP, needs to be determined as well. Figure 10 shows an example of a typical AE signal recorded from a large reinforced concrete beam test (Mhamdi 2015).

A number of wave arrival (or signal onset) picking algorithms have been developed for AE applications over the years; they are discussed in detail in chapter “Source Localization”, Sect. 2.1. During a routine test, hundreds or even thousands of events are usually recorded, so automatic algorithms for picking the arrival times at each sensor are essential. A simple fixed threshold algorithm is not sufficient in many cases. For signals with a high SNR (an example is shown in Fig. 10), the precision of automatic algorithms should be within several data samples. A comparison between different pickers for application to concrete can be found in Schechinger (2005). A multi-step process that starts with a fixed threshold pick and then estimates a more accurate onset time using an AIC-based picker has been found to produce reliable results for concrete applications (Mhamdi 2015; Gollob 2017).
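The two-step picking idea can be sketched as follows. This sketch assumes the widely used variance-based AIC formulation, which is not necessarily identical to the implementations in the cited works: a signal window is split at every sample k into a "noise" and a "signal" segment, and the onset is the k that minimizes AIC(k) = k·log(var(x[:k])) + (n − k − 1)·log(var(x[k:])). The window lengths before and after the threshold pick, and all helper names, are illustrative.

```python
import numpy as np


def threshold_pick(x, threshold):
    """Index of the first sample whose absolute value reaches the threshold."""
    idx = np.flatnonzero(np.abs(x) >= threshold)
    return int(idx[0]) if idx.size else None


def aic_pick(x):
    """Onset index minimising the variance-based AIC (illustrative helper)."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    aic = np.full(n, np.inf)
    for k in range(2, n - 2):
        v1, v2 = np.var(x[:k]), np.var(x[k:])   # "noise" and "signal" segments
        if v1 > 0 and v2 > 0:
            aic[k] = k * np.log(v1) + (n - k - 1) * np.log(v2)
    return int(np.argmin(aic))


def two_step_pick(x, threshold, pre=200, post=100):
    """Threshold pick first, then refine with the AIC picker around it."""
    t0 = threshold_pick(x, threshold)
    if t0 is None:
        return None
    lo, hi = max(t0 - pre, 0), min(t0 + post, len(x))
    return lo + aic_pick(x[lo:hi])
```

The threshold pick is robust but typically late, because the first oscillation must grow above the threshold. Restricting the AIC search to a window around it avoids spurious minima elsewhere in a long record.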

The P-wave amplitude, uP, is read either in the time domain (as illustrated in the inset of Fig. 10) or, alternatively, in the frequency domain. The latter might provide more reliable results, especially for signals with a low SNR. The unit and sign of uP are used as input for a time-domain MTI (see Sect. 4.3), so uP should be either the actual displacement or directly proportional to it.

3D Source Localization and Visualization

In order to employ quantitative analysis techniques on AE events recorded from civil engineering structures, their locations need to be determined as accurately as possible. While many 3D localization methods have been reported in the literature, the oldest and possibly still the most commonly used one is the so-called Geiger method, an iterative approach developed by the German seismologist Ludwig Geiger (1910). The determination of the AE hypocenter (or source) from the arrival times is an inverse problem, which has an exact solution when four arrival times are used to calculate the four unknown source parameters: the three spatial coordinates and the source time of the event. When more than four arrival times are available, the problem is overdetermined, and the solution is calculated using an iterative algorithm in which the source parameters are estimated by minimizing the residuals between the observed and calculated arrival times (see e.g., Salamon and Wiebols 1974; Buland 1976). The more recordings are available, the more overdetermined the system is, and the more reliable the evaluation will be. A detailed description of how iterative localization works is provided in chapter “Source Localization”, Sect. 3.4.
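The iterative scheme can be sketched as a Gauss-Newton least-squares update, which is the core of Geiger's method. The sketch assumes a homogeneous, isotropic medium with a single constant P-wave velocity, equal weights for all sensors, and a starting point inside the array that does not coincide with a sensor (where the derivatives are undefined). Besides the location and source time, it returns the standard deviations, the number of iterations, and the residuals, in line with the software requirements listed below. The function name is illustrative.

```python
import numpy as np


def geiger_locate(sensors, t_obs, v, x0, t0=0.0, max_iter=50, tol=1e-9):
    """Iterative hypocentre estimation, homogeneous medium (illustrative helper).

    Returns location, source time, their standard deviations, the number of
    iterations and the final arrival-time residuals (observed - calculated).
    """
    sensors = np.asarray(sensors, dtype=float)
    t_obs = np.asarray(t_obs, dtype=float)
    x = np.asarray(x0, dtype=float).copy()

    def residuals_and_jacobian(x, t0):
        diff = x - sensors                               # (n, 3)
        dist = np.linalg.norm(diff, axis=1)
        r = t_obs - (t0 + dist / v)
        # Partial derivatives of the calculated arrival time w.r.t. (x, y, z, t0)
        J = np.hstack([diff / (v * dist[:, None]), np.ones((len(dist), 1))])
        return r, J

    for it in range(1, max_iter + 1):
        r, J = residuals_and_jacobian(x, t0)
        delta, *_ = np.linalg.lstsq(J, r, rcond=None)    # least-squares update
        x += delta[:3]
        t0 += delta[3]
        if np.linalg.norm(delta[:3]) < tol:
            break

    r, J = residuals_and_jacobian(x, t0)
    dof = len(t_obs) - 4
    sigma2 = (r @ r) / dof if dof > 0 else np.nan        # residual variance
    std = np.sqrt(np.diag(sigma2 * np.linalg.inv(J.T @ J)))
    return x, t0, std, it, r
```

Per-sensor weights, as in the weighting schemes discussed under accuracy and reliability, would enter as row scaling of `J` and `r` before the least-squares step.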

Software to perform AE source localization should be able to calculate the standard deviations of the hypocenter and the source time, the weight of each station (or sensor), the number of iterations performed, and the residual of each sensor with respect to the determined best arrival time (e.g. Oncescu and Grosse 1998; Grosse 2000). The equations used generally assume that the material is homogeneous and isotropic and that the AE source can be modeled as a point source. If this is not the case, e.g. for an anisotropic material like wood, the computational approach has to be modified, as covered in detail in chapter “Source Localization”, Sects. 3.2 and 5.6. Figure 11 shows an example of how AE source location estimates can be visualized. Providing error information for location results is essential for interpreting them; without this information, there is no way to assess the reliability of a result.

Example of 3D source location visualizations from a large-scale reinforced concrete beam test. For each AE event, the mean location as well as an error ellipsoid consisting of the principal standard deviations, σ1, σ2, σ3 are provided. Color indicates time of occurrence where blue and red correspond to early and late AE events, respectively. Visible and hidden sensors are shown as purple and empty circles, respectively. Figure adapted from Mhamdi (2015)

Accuracy and Reliability

The accuracy of AE localization estimates is affected by a number of factors, but most significantly by the accuracy of the picked wave arrival times. In applications where a detailed analysis of failure is required, the need for high location precision is combined with a high AE event rate, and more advanced onset picking algorithms should be used. The main trends are outlined in chapter “Source Localization”. The reader is also referred to publications written by Landis et al. (1992), Zang et al. (1998), Grosse and Reinhardt (1999) and Grosse (2000).

Other factors include the number of sensors used, the sensor arrangement, the SNR of the recorded signals, and the assumed wave velocity. A detailed comparison of these factors is provided in chapter “Source Localization”, Sect. 4.3.

Accuracy and reliability of source locations can also be improved by applying weighting schemes to the arrival times used. The simplest approach is to omit entirely those arrival times that come from AE signals with a low SNR or that appear as outliers (Mhamdi 2015). In a more refined approach, the differences between the calculated arrival times (based on the estimated source coordinates) and the initially picked arrival times, referred to as residuals, are used to compute weighting factors. These essentially give more importance (or weight) to signals recorded by sensors located closer to the source, which are thus likely to be more reliable (Spottiswoode and Linzer 2005). This approach is presented in detail in chapter “Source Localization”, Sect. 5.5.

4.2 Overview of Source Inversion Techniques

Introduction

Source inversion methods use the waveforms of recorded AE events to estimate the fracture type and orientation of a rupture (fault), as well as the seismic moment, which scales with the rupture area and the average slip and is related to the released energy.

Using an inversion algorithm in combination with 3D location estimates, a fault plane solution can be determined that enables the analysis of the fracture process in a material. Another more comprehensive method of fracture analysis is the application of MTI techniques.

Fracture types are of interest in fracture mechanics for understanding and classifying the way a material fails. Different terms are used to describe cracking behavior. In the following section, the terms ‘opening crack’ and ‘Mode I’ are used synonymously; similarly, ‘Mode II’ and ‘Mode III’ denote shear cracks, with shear forces acting in the crack plane either perpendicular to the crack front (in-plane shear) or parallel to the crack front (out-of-plane shear), respectively. In seismology, shear dislocations are described by a double couple (DC) source because the DC force representation allows simplification of some mathematics, which is discussed in more detail in Sect. 4.3.

An overview of the three main approaches for estimating the fracture type of an AE source is presented next: the first motion technique, moment tensor inversion techniques, and full waveform inversion.

First Motion Technique

There are several ways to determine the crack type and orientation of AE sources. One way is to use the polarities of initial P-wave pulses—this is known as the first motion technique. The distribution of the two senses of the wave polarity around the focus is determined by the radiation pattern of the source. Using the distribution of the polarities, it is possible to estimate the orientation of the nodal planes (where no displacement takes place) and thus the mechanism of the source. However, it is important to bear in mind that, due to the symmetry of the radiation pattern, two orthogonal planes can be fitted. These planes are often referred to as the ‘fault plane’ and the ‘auxiliary plane’.

For an opening crack (Mode I), positive polarities would be measured at all sensors. For a shear fracture (Modes II and III), the polarity of the P-wave onset changes from positive (upward displacement of the waveform) to negative (downward displacement) depending on the position of the sensor relative to the source and the shear planes (Fig. 12). These two examples assume that the sensors have been calibrated properly, so that a positive displacement in the signal indicates movement away from the source (i.e. compression). If the radiation pattern of the source is to be analyzed, the pattern must be sampled adequately over the focal sphere. This requires many sensors providing adequate coverage of the focal sphere, i.e. a good distribution of sensors over all angles with respect to the fault plane. For this technique, a minimum of 23 sensors is required to uniquely characterize the mechanism (Lockner et al. 1993). For a smaller set of sensors, MTI (see Sect. 4.3) is more suitable for estimating the failure mechanism.
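The polarity patterns described above can be predicted for a given point source. This sketch assumes a homogeneous, isotropic medium and far-field P waves, for which the first-motion amplitude along the unit ray g from source to sensor is proportional to gᵀMg, with M the moment tensor introduced in Sect. 4.3. The opening-crack tensor in the usage check assumes Lamé constants λ = μ. The function name is illustrative.

```python
import numpy as np


def first_motion_polarities(M, source, sensors):
    """Predicted P-wave first-motion polarities for a point source (illustrative).

    +1 = compression (motion away from the source), -1 = dilatation,
    0 = sensor lies exactly on a nodal plane.
    """
    g = np.asarray(sensors, dtype=float) - np.asarray(source, dtype=float)
    g /= np.linalg.norm(g, axis=1, keepdims=True)        # unit rays source -> sensor
    amp = np.einsum("ni,ij,nj->n", g, np.asarray(M, dtype=float), g)
    return np.sign(amp).astype(int)
```

For a double couple in the x-y plane, the signs alternate between quadrants. For an opening crack with all-positive principal values, every sensor sees compression, matching the Mode I case above.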

Unfortunately, it is not possible to quantify the deviation from a pure shear dislocation and to determine isotropic components of the source with this first motion technique.

Fault plane solutions can also be used to infer stress orientations. This applies to the case where new fractures develop in response to the ambient stress field (e.g. mining-induced fracturing, failure in laboratory tests) but is limited in tectonic studies since earthquakes often occur on preexisting faults that could have developed during different stress regimes.

The stress directions are halfway between the nodal planes, so that the maximum compressive stress (P-axis) can be found by bisecting the dilatational quadrant and the minimum compressive stress (T-axis) is located by bisecting the compressional quadrant. The intermediate stress axis (B-axis) is orthogonal to the P and T-axes.
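When a moment tensor is available, the same axes can be obtained algebraically. For a double couple, the eigenvector of the largest eigenvalue bisects the compressional quadrant (T-axis), the eigenvector of the smallest eigenvalue bisects the dilatational quadrant (P-axis), and the remaining eigenvector is the B-axis. The sketch assumes a symmetric tensor; the sign of each returned eigenvector is arbitrary. The function name is illustrative.

```python
import numpy as np


def principal_axes(M):
    """P, B and T axes of a symmetric moment tensor (illustrative helper)."""
    vals, vecs = np.linalg.eigh(np.asarray(M, dtype=float))   # ascending eigenvalues
    return {"P": vecs[:, 0],       # smallest eigenvalue: pressure axis
            "B": vecs[:, 1],       # intermediate: null axis
            "T": vecs[:, 2],       # largest eigenvalue: tension axis
            "eigenvalues": vals}
```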

Moment Tensor Inversion–Overview

A number of moment tensor inversion (MTI) techniques have been proposed in the literature. The methods applied differ greatly according to the available data and the purpose of the study. For the purposes of this textbook, it is useful to distinguish between ‘absolute’ and ‘relative’ methods. These two broad classes differ in how they estimate the Green’s functions, which describe the wave propagation between a source and a receiver (sensor). A graphical overview of different MTI techniques is given in Fig. 13, followed by a more detailed description of each method.

Overview of different MTI techniques. Figure adapted from Mhamdi (2015)

Moment Tensor Inversion–Absolute Method

In the absolute methods, the Green’s functions are evaluated theoretically, or determined empirically from observations and a known source. The most straightforward approach is to use a homogeneous isotropic material model (Ohtsu 1991). However, such a model is often not adequate for the materials used in civil engineering. More suitable are approaches based on finite-difference methods (Fukunaga and Kishi 1986; Enoki and Kishi 1988; Napier et al. 2005; Hildyard et al. 2005), which can take into account the heterogeneity of the materials. Methods based on a combination of the above-mentioned techniques (Landis 1993; Landis and Shah 1995) are often not sufficient due to the attenuation of the material.

The absolute methods are often used in global seismology, but are difficult to apply in environments where there are possible lateral inhomogeneities such as the underground mining environment and in materials often used in civil engineering. In these cases, it is not always possible to calculate the Green’s functions with adequate accuracy, resulting in systematic errors being introduced into the moment tensor solution.

For the absolute methods to be properly applied, the influence of material parameters must be well known. This is usually not the case for fractures in concrete, where aggregates, reinforcement and air-filled pores are common. In addition, the sensor characteristics must be well known (this is not a problem in geophysics where seismometers with a flat response over the signal frequency range are usually used). Poor coupling of transducers also limits the accuracy of absolute inversion techniques.

Moment Tensor Inversion–Relative Method

In contrast to the absolute methods, the relative inversion methods do not require the computation of the theoretical Green’s functions for each event. Relative methods are based on the concept of a common raypath between a cluster of seismic sources and any sensor, and assume that all the events in the cluster experience the same wave propagation effects to each sensor.

Figure 14 illustrates the applicability to clusters of AE events where the distance between the hypocenters (AE event locations) of an AE cluster is small compared to the length of the travel paths to the sensors.

In the relative methods, the Green’s functions of one or more well-known reference events can be used to compute the Green’s functions of the medium for other nearby events (e.g. Patton 1980; Strelitz 1980; Oncescu 1986). This approach also has its limitations when only a few reference events are available or when their fracture mechanisms are not well known.

In the relative method proposed by Dahm (1996), known as the relative method without a reference mechanism, the path effects described by the Green’s functions are eliminated analytically, thereby completely avoiding the explicit use of the Green’s functions. However, this method is applicable only to clusters of AE events with different radiation patterns, and, because there is no absolute reference, any of the six components of the moment tensor can be incorrect by an unknown scaling factor. This problem can be reduced, but not eliminated, by reference to scalar moment estimates. Furthermore, when the mechanisms are similar, the method is extremely sensitive to noise. This is a serious disadvantage in cases where clusters of recorded events might have very similar mechanisms, and the problem is accentuated when the method is applied to very small events with similar mechanisms and signals just above the noise level (Andersen 2001).

Moment Tensor Inversion–Hybrid Method

A problem common to both the absolute and relative MTI methods is their sensitivity to noise and errors in the observations and their dependency on accurate Green’s functions to describe the wave propagation effects. Factors such as the focusing and defocusing of the raypaths due to lateral inhomogeneities in complex materials, the degradation of the velocity model due to fracturing, the presence of voids on the seismic raypaths, and a low SNR all have adverse effects on the accuracy of the MTI solution. For example, the deviations in the raypaths due to the presence of an inhomogeneity between a cluster of seismic sources and a particular sensor can result in consistently high or low amplitudes being recorded at that sensor (for all the events in a cluster). This would result in systematic errors being introduced into the moment tensor elements. To compensate for such errors, hybrid MTI methods were developed by Andersen (2001). These methods apply to clusters of AE events, are iterative, and are a combination of the absolute and relative MTI methods.

The hybrid methods are essentially weighting schemes that aim to increase the accuracy of the computed moment tensor by reducing the effect of noisy data on the system of equations and correct for site effects. These methods can also be used to enhance signals recorded near a nodal plane in the radiation pattern or to decrease the influence of a low quality observation. Various weighting schemes, which apply to individual events, have been proposed in the literature (Udias and Baumann 1969; Šílený et al. 1992). The new aspect of the hybrid methods is that the correction or weight applied to a particular observation is based on the residuals (for a particular geophone site, channel and phase) calculated using all of the events in the cluster—this constitutes the relative component of the hybrid methods.

Figure 15 shows stereonets of the radiation patterns and nodal plane solutions computed using the absolute and the hybrid MTI methods for data recorded from a small-scale concrete beam test reported by Linzer et al. (2015). The MTI Toolbox (Linzer 2012) was used to produce the results discussed below. These ten events were expected to have similar solutions because they all originated from an opening crack. As is evident from Fig. 15, there is a fair amount of scatter in the patterns. The hybrid MTI method “sharpens” the radiation patterns, which is achieved iteratively, as discussed above. This is particularly evident for AE event 119, where the normalized standard error of the solution decreased notably during the iteration process (see Fig. 16).

Radiation patterns for a selected cluster, from moment tensors computed using (a) a single-event absolute MTI method and (b) the hybrid MTI method with median correction, for data recorded from a small-scale concrete beam test. Blue represents movement towards the source (tension) and red indicates movement away from the source (compression). Stereonets are projected on the X-Y plane, i.e. a top view of the beam. Figure source: (reuse with permission from publisher) Linzer et al. (2015)

Full Waveform Inversion

This method uses the entire waveforms recorded at the sensors to estimate not only the fracture type and fault plane but also the force-time function associated with the kinematics of the source. While this technique is established in seismology (see, e.g. Virieux et al. 2017), it has found very little application in the study of AE from civil engineering materials. An example of a closely related study was performed by To and Glaser (2005) on artificial rock. The researchers used a piezoelectric disc, which had been embedded in a gypsum plate, to generate a well-defined AE source.

The inversion in this case can be performed by deconvolution (i.e. by solving the inverse problem directly) or by posing it as a forward problem, in which Green’s functions are generated for the estimated source origin and the source model is adjusted until the difference between the recorded and the estimated sensor measurements is minimized. The first approach can be difficult under noisy conditions, and the second approach is computationally expensive and might have non-unique solutions.
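The deconvolution route can be sketched for a single sensor whose Green's function (including the sensor response) is known, so that the record is u = s ∗ g. Spectral division recovers the source function s, but it blows up wherever the spectrum of g is small. The "water level" used here is a common stabilization, swapped in for illustration rather than taken from the cited studies. The sketch also assumes circular (FFT) convolution, and the function name is illustrative.

```python
import numpy as np


def water_level_deconvolve(u, g, water_level=1e-3):
    """Estimate s from u = s * g by regularised spectral division (illustrative).

    |G|^2 is clipped from below at water_level * max|G|^2 so that frequencies
    where G is nearly zero do not amplify noise without bound.
    """
    n = len(u)
    U = np.fft.rfft(u, n)
    G = np.fft.rfft(g, n)
    power = np.abs(G) ** 2
    denom = np.maximum(power, water_level * power.max())
    return np.fft.irfft(U * np.conj(G) / denom, n)
```

Raising the water level suppresses noise at the cost of a smoother, biased source estimate, which reflects the difficulty of the deconvolution approach under noisy conditions.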

4.3 Moment Tensor Inversion for AE Analysis

Concept of Moment Tensor

The seismic moment tensor is a useful concept because it can be used to completely describe the wave radiation pattern and the strength of a source. The moment tensor, M, is defined by a combination of force couples and dipoles as:

\[
\mathbf{M} = \begin{pmatrix} M_{xx} & M_{xy} & M_{xz} \\ M_{yx} & M_{yy} & M_{yz} \\ M_{zx} & M_{zy} & M_{zz} \end{pmatrix} \tag{6}
\]

where each element of the matrix represents the force couples or dipoles as shown in Fig. 17.

The first subscript of the force couple represents the direction of the two forces and the second subscript gives the direction of the arm of the couple. For example, the element Mxy is a force couple comprising two forces acting in the +x and –x directions on an arm parallel to the y-axis (Fig. 17, top row, middle column). The magnitude of Mxy is called the ‘moment’ of the force couple. As another example, the element Mxx is a vector dipole consisting of two forces acting in the +x and –x directions, where the arm is parallel to the x-axis (Fig. 17, top row, left column), and hence has no moment.

In the case of a purely explosive source, the source mechanism can be described by summing the diagonal components of the moment tensor, as shown in Fig. 18.

The source mechanism of an idealized shearing source can be described by summing the off-diagonal components, –(Mxy + Myx) of the moment tensor, as is shown in Fig. 19.
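The explosive and shear examples can be checked numerically by splitting a moment tensor into an isotropic part, which is one third of the trace times the identity, and a deviatoric remainder. A purely explosive source is entirely isotropic, while an idealized shear source has zero trace. The scalar isotropic fraction returned here, |tr(M)/3| / (|tr(M)/3| + max|deviatoric eigenvalue|), is one of several conventions in use and is an assumption of this sketch. The function name is illustrative.

```python
import numpy as np


def iso_dev_split(M):
    """Split a symmetric moment tensor into isotropic and deviatoric parts.

    Returns (M_iso, M_dev, iso_fraction); the fraction definition is one
    common convention, not the only one (illustrative helper).
    """
    M = np.asarray(M, dtype=float)
    m_iso = np.trace(M) / 3.0
    M_iso = m_iso * np.eye(3)
    M_dev = M - M_iso
    dev_max = np.max(np.abs(np.linalg.eigvalsh(M_dev)))
    total = abs(m_iso) + dev_max
    iso_fraction = abs(m_iso) / total if total > 0 else 0.0
    return M_iso, M_dev, iso_fraction
```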

Inversion for the moment tensor is possible because, as first noted by Gilbert (1973), the ground displacement depends linearly on the moment tensor components, with the Green’s functions as coefficients (Fig. 20). Typical input data for an MTI consist of the network geometry (location coordinates of the sensors), knowledge of sensor polarity (i.e. whether an upward displacement at the sensor indicates compression or tension), sensor orientation, estimated AE source locations, P- and/or S-wave displacement amplitudes recorded at each sensor (time-domain inversion) or P- and/or S-wave spectral amplitudes (frequency-domain inversion), and the polarities of the wave phases. It should be stressed that unbiased measurements are critical for an MTI to produce reliable results. This means that either (1) high-fidelity sensors with a flat response curve are used or (2) the sensor’s transfer function is available and is employed to deconvolve the sensor’s influence from the recordings.

Summary of MTI Methodology

The goal of all MTI methods is to use observed values of ground displacement to infer properties of the source, as characterized by the moment tensor. By using the representation theorem for seismic sources (Aki and Richards 1980) and assuming a point source, the displacement field, uk recorded at a receiver, k is given by:

where \(G_{ki,j} \left( {x,t;\zeta ,t'} \right)\) are the elastodynamic Green’s functions containing the propagation effects between the source, \(\left( {\zeta ,t'} \right)\) and receiver, \(\left( {x,t} \right)\). The comma between the subscript indices in Eq. (7) describes the partial derivatives at the source with respect to the coordinates after the comma, i.e. \(G_{ki,j} = \frac{{\partial G_{ki} }}{{\partial \xi_{j} }}\). The \(M_{ij} \left( {\xi ,t} \right)\) terms are the nine time-dependent components of the moment tensor. This equation can be simplified dramatically by assuming that the source time function is an impulse and that all of the moment tensor components have the same time-dependency (synchronous source approximation). In addition, since the equivalent body forces conserve angular momentum, Mij = Mji, only six of the components are required. Applying these assumptions, Eq. (8) reduces to the following linear relationship:

which can be written in matrix notation as:

\(\mathbf{u} = \mathbf{G}\,\mathbf{m}\)  (9)

where u is an n-dimensional vector of sampled values of the integrated ground displacement (time-domain approach) or of spectral plateau amplitudes (frequency-domain approach); G is an n × 6 matrix of Green’s functions in the coordinate system of the receivers; and m is the vector of the six independent moment tensor components, \(M_{11}\), \(M_{12}\), \(M_{13}\), \(M_{22}\), \(M_{23}\) and \(M_{33}\). Since the number of sensor observations, n, usually exceeds six, the system of equations is, in principle, overdetermined.

Vectors and matrices are denoted by bold letters, and their elements by the same letter in italics. For example, \(\mathbf{u}_i\) is the vector of observed amplitude data for event i, with elements \(u_{ij}\); the first subscript, \(i = 1, 2, \ldots, N\), is the event number, where N is the total number of events, and the second subscript, \(j = 1, 2, \ldots, p\), is the site number, where p is the total number of geophones or sensors. Andersen (2001) gives the equations for triaxial recordings of both P- and S-wave phases, which require two additional indices. Because the sensors used in quantitative AE analysis are uniaxial, and only P-waves are used since the separation between the P- and S-wave arrivals is very small, the equations simplify as follows.

The elements of the vector \(\mathbf{u}_i\) are:

\(\mathbf{u}_i = \left(u_{i1}, u_{i2}, \ldots, u_{ip}\right)^{T}\)  (10)

The Green’s function matrix, \({\mathbf{G}}_{i}\), for the ith event consists of elements of the form \(G_{ij1}, G_{ij2}, G_{ij3}, \ldots, G_{ij6}\), where the third subscript describes the component of the Green’s function.

Each element in the Green’s function is described by

where \(\gamma_{ij1} = \frac{x_{i1} - \xi_{j1}}{r_{ij}}\), \(\gamma_{ij2} = \frac{x_{i2} - \xi_{j2}}{r_{ij}}\) and \(\gamma_{ij3} = \frac{x_{i3} - \xi_{j3}}{r_{ij}}\) are the direction cosines of the source-receiver vector, where source i is positioned at \(x_{i} = \left(x_{i1}, x_{i2}, x_{i3}\right)\) and receiver j at \(\xi_{j} = \left(\xi_{j1}, \xi_{j2}, \xi_{j3}\right)\). The direction cosines \(\eta_{j1}, \eta_{j2}, \eta_{j3}\) describe the orientation of sensor j, ρ is the density, α is the P-wave velocity and \(r_{ij} = \left|x_{i} - \xi_{j}\right|\) is the source-receiver distance.
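To make the structure of \(\mathbf{G}_i\) concrete, the Python sketch below computes one row of the Green’s function matrix for a single uniaxial sensor. It assumes a homogeneous, isotropic full space and far-field P waves, for which the integrated displacement projected onto the sensor axis is \((\boldsymbol{\eta}\cdot\boldsymbol{\gamma})\,\gamma_p\gamma_q M_{pq}/(4\pi\rho\alpha^3 r)\); this is a textbook simplification and not necessarily the exact form used by Andersen (2001). Here the direction vector is taken to point from source to receiver. Because every element is odd in \(\boldsymbol{\gamma}\), reversing that convention flips the sign of the whole row, so it must be consistent with the sensor polarity convention. The function name `green_row` is hypothetical, and the columns follow the ordering \(M_{11}, M_{12}, M_{13}, M_{22}, M_{23}, M_{33}\) adopted for \(\mathbf{m}_i\) in this section.

```python
import math


def green_row(src, rcv, eta, rho, alpha):
    """One row of G for a uniaxial sensor: far-field P wave in a homogeneous,
    isotropic full space (assumed model). Columns follow
    m = (M11, M12, M13, M22, M23, M33)."""
    d = [rc - sc for rc, sc in zip(rcv, src)]      # source -> receiver vector (assumed sign convention)
    r = math.sqrt(sum(c * c for c in d))            # source-receiver distance
    g1, g2, g3 = (c / r for c in d)                 # direction cosines gamma_1..3
    proj = eta[0] * g1 + eta[1] * g2 + eta[2] * g3   # P motion projected onto the sensor axis
    scale = proj / (4.0 * math.pi * rho * alpha ** 3 * r)
    # M12, M13 and M23 each appear twice in sum_pq gamma_p gamma_q M_pq, hence the factor 2.
    return [scale * g1 * g1, 2.0 * scale * g1 * g2, 2.0 * scale * g1 * g3,
            scale * g2 * g2, 2.0 * scale * g2 * g3, scale * g3 * g3]
```

For an explosion, \(\mathbf{m} = (M_0, 0, 0, M_0, 0, M_0)\), recorded by a sensor pointing along \(\boldsymbol{\gamma}\), the row reduces to a positive amplitude \(M_0/(4\pi\rho\alpha^3 r)\), i.e. outward first motion.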

The great utility of Eq. (11) is that the Green’s functions are rotated into the coordinate system of the sensors so that measurements from uniaxial directional sensors can be used. This approach differs from most derivations in crustal seismology where the measurements are rotated into the P-Sh-Sv directions and the Green’s functions are calculated for the P-Sh-Sv coordinate system.

The vector of moment tensor components for the ith event, \({\mathbf{m}}_{i}\), is given by:

\(\mathbf{m}_i = \left(m_{i1}, m_{i2}, \ldots, m_{i6}\right)^{T}\)  (12)

where \(m_{i1} = M_{11}\), \(m_{i2} = M_{12}\), \(m_{i3} = M_{13}\), \(m_{i4} = M_{22}\), \(m_{i5} = M_{23}\), \(m_{i6} = M_{33}\) and \(M_{11} ,M_{12}\), etc. are the Cartesian moment tensor components defined by Eq. (6) and illustrated in Fig. 17 in the coordinate system of the seismic network.
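Because the inversion works with the six-element vector \(\mathbf{m}_i\) while the interpretation steps work with the symmetric 3 × 3 tensor, it helps to convert between the two explicitly using the ordering above. A minimal Python sketch (the function names are hypothetical):

```python
def tensor_to_vector(M):
    """Symmetric 3x3 moment tensor -> m = (M11, M12, M13, M22, M23, M33)."""
    for i in range(3):
        for j in range(i + 1, 3):
            if M[i][j] != M[j][i]:
                raise ValueError("moment tensor must be symmetric (Mij = Mji)")
    return [M[0][0], M[0][1], M[0][2], M[1][1], M[1][2], M[2][2]]


def vector_to_tensor(m):
    """m = (M11, M12, M13, M22, M23, M33) -> symmetric 3x3 moment tensor."""
    m11, m12, m13, m22, m23, m33 = m
    return [[m11, m12, m13],
            [m12, m22, m23],
            [m13, m23, m33]]
```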

To solve for the components of the moment tensor, m, Eq. (9) is written as an inverse problem such that:

\(\mathbf{m} = \mathbf{G}^{-1}\mathbf{u}\)  (13)

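where \(\mathbf{G}^{-1}\) is the generalized inverse of G. For an overdetermined system in which G has full column rank, this is the least-squares inverse \(\left(\mathbf{G}^{T}\mathbf{G}\right)^{-1}\mathbf{G}^{T}\), so the system of linear equations can be solved using standard least-squares techniques.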
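A minimal Python sketch of the least-squares step, assuming G has full column rank so that the generalized inverse is \(\left(\mathbf{G}^{T}\mathbf{G}\right)^{-1}\mathbf{G}^{T}\). It forms the normal equations and solves them by Gaussian elimination with partial pivoting, using only the standard library; the function names are illustrative. Forming \(\mathbf{G}^{T}\mathbf{G}\) squares the condition number, so in practice a QR- or SVD-based solver such as `numpy.linalg.lstsq` is usually preferable, particularly with few sensors or poor angular coverage.

```python
def solve_square(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    aug = [list(A[i]) + [b[i]] for i in range(n)]   # augmented working copy
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if aug[piv][col] == 0.0:
            raise ValueError("singular normal matrix: geometry does not constrain all components")
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                   # back substitution
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def invert_moment_tensor(G, u):
    """Least-squares m minimizing ||u - G m||^2 via the normal equations G^T G m = G^T u."""
    k = len(G[0])
    GtG = [[sum(row[a] * row[b] for row in G) for b in range(k)] for a in range(k)]
    Gtu = [sum(row[a] * ua for row, ua in zip(G, u)) for a in range(k)]
    return solve_square(GtG, Gtu)
```

In an application, each row of G would hold one sensor’s Green’s function elements and the corresponding entry of u its measured P-wave amplitude.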

In general, MTIs involve two major assumptions. Firstly, it is assumed that the source (fault plane) dimensions are smaller than the wavelengths of the seismic waves used in the inversion (the point-source assumption) and, secondly, that the effect of the propagation medium (the earth structure, in seismology) on the waves is modeled correctly. If either of these assumptions does not hold, the resulting moment tensor may contain “false” components that do not represent the physics of the source.

Interpretation of the Moment Tensor

Once the moment tensor has been estimated, a number of source parameters can be computed to aid interpretation. The most basic of these is the scalar seismic moment, \(M_0\), which is a measure of the irreversible inelastic deformation in the area of the rupture and is the root-mean-squared average of the moment tensor elements:

\(M_0 = \sqrt{\tfrac{1}{2}\sum_{i=1}^{3}\sum_{j=1}^{3} M_{ij}^{2}}\)  (14)

The scalar moment can be related to attributes of the source:

\(M_0 = \mu A \bar{D}\)

where μ is the rigidity (shear modulus) of the medium, A is the surface area of the rupture, and \(\bar{D}\) is the average final static displacement (slip) after the rupture. The rigidity, μ, has a relatively simple physical meaning: it measures the resistance of the elastic body to shearing deformation.
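Both expressions for the scalar moment can be evaluated directly. The Python sketch below assumes the normalization \(M_0 = \sqrt{\tfrac{1}{2}\sum_{ij} M_{ij}^{2}}\), a common choice that returns exactly \(M_0\) for a pure double-couple of moment \(M_0\). The function names and the numbers in the note below are illustrative assumptions.

```python
import math


def scalar_moment(M):
    """M0 = sqrt(0.5 * sum_ij Mij^2) for a 3x3 moment tensor (assumed normalization)."""
    return math.sqrt(0.5 * sum(M[i][j] ** 2 for i in range(3) for j in range(3)))


def moment_from_rupture(mu, area, mean_slip):
    """M0 = mu * A * mean slip; SI units Pa * m^2 * m = N*m."""
    return mu * area * mean_slip
```

For example, with an assumed μ = 30 GPa, a crack area of 1 cm² (\(10^{-4}\) m²) and an average slip of 1 µm give \(M_0 = 3 \times 10^{10} \times 10^{-4} \times 10^{-6} = 3\) N·m.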

Slightly more complicated analyses involve decomposing the moment tensor into its isotropic and deviatoric components, which represent the volume change and shearing contributions to the source mechanism, respectively. To do this, the moment tensor is first diagonalized, and the deviatoric eigenvalues are calculated by subtracting one third of the trace from each of the eigenvalues:

\(e_k^{*} = e_k - \tfrac{1}{3}\,\mathrm{tr}(\mathbf{M}), \quad k = 1, 2, 3\)

where tr(M) is the trace of the moment tensor, and is equal to the sum of the eigenvalues: tr(M) = e1 + e2 + e3. The trace is a measure of the volume change at the source and the sign of tr(M) gives the direction of motion relative to the source with positive being outward. For example, a negative value of tr(M) indicates an implosion.
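The decomposition above requires the eigenvalues of a symmetric 3 × 3 matrix. To stay dependency-free, the Python sketch below swaps in the closed-form trigonometric solution for real symmetric 3 × 3 matrices (a library routine such as `numpy.linalg.eigvalsh` would normally be used instead) and then subtracts one third of the trace. The function names are hypothetical.

```python
import math


def sym_eigenvalues(M):
    """Eigenvalues of a real symmetric 3x3 matrix, largest first (closed-form trigonometric method)."""
    a11, a22, a33 = M[0][0], M[1][1], M[2][2]
    a12, a13, a23 = M[0][1], M[0][2], M[1][2]
    p1 = a12 ** 2 + a13 ** 2 + a23 ** 2
    q = (a11 + a22 + a33) / 3.0                   # one third of the trace
    if p1 == 0.0:                                  # already diagonal
        return sorted([a11, a22, a33], reverse=True)
    p2 = (a11 - q) ** 2 + (a22 - q) ** 2 + (a33 - q) ** 2 + 2.0 * p1
    p = math.sqrt(p2 / 6.0)
    # B = (M - qI) / p; its determinant fixes the angle of the trigonometric solution.
    b11, b22, b33 = (a11 - q) / p, (a22 - q) / p, (a33 - q) / p
    b12, b13, b23 = a12 / p, a13 / p, a23 / p
    det_b = (b11 * (b22 * b33 - b23 * b23)
             - b12 * (b12 * b33 - b23 * b13)
             + b13 * (b12 * b23 - b22 * b13))
    r = max(-1.0, min(1.0, det_b / 2.0))           # clamp against rounding
    phi = math.acos(r) / 3.0
    e1 = q + 2.0 * p * math.cos(phi)
    e3 = q + 2.0 * p * math.cos(phi + 2.0 * math.pi / 3.0)
    e2 = 3.0 * q - e1 - e3                         # eigenvalues sum to the trace
    return [e1, e2, e3]


def iso_dev(M):
    """Return (tr(M), deviatoric eigenvalues e_k* = e_k - tr(M)/3), largest eigenvalue first."""
    e = sym_eigenvalues(M)
    tr = sum(e)
    return tr, [ek - tr / 3.0 for ek in e]
```

For the double-couple tensor with \(M_{13} = M_{31} = 1\) and all other components zero, the eigenvalues are 1, 0 and −1 and the trace is zero, so the source is purely deviatoric.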

The deviatoric component can be further decomposed into a variety of eigenvalue combinations that represent simple arrangements of equivalent body forces, adapted from fracture mechanics, like that of an opening crack (Mode I) or of shear cracks (Mode II or III) (Fig. 21).

The coseismic volume change at the source can be calculated using:

\(\Delta V = \dfrac{\mathrm{tr}(\mathbf{M})}{3\lambda + 2\mu}\)

where λ and μ are Lamé’s elastic moduli (McGarr 1992). Lamé’s first parameter, λ, is not simply related to experimentally observed quantities; its value is usually calculated from μ and one other experimentally determined elastic constant, such as Young’s modulus or Poisson’s ratio. As an example, for quartzitic strata, 3λ + 2μ = \(1.63 \times 10^{5}\) MPa (McGarr 1992).
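The volume-change formula and the conversion from laboratory elastic constants can be sketched as follows. The sketch assumes an isotropic medium and computes λ and μ from Young’s modulus E and Poisson’s ratio ν using the standard relations λ = Eν/((1 + ν)(1 − 2ν)) and μ = E/(2(1 + ν)); with these, 3λ + 2μ equals E/(1 − 2ν), i.e. three times the bulk modulus. The function names are hypothetical.

```python
def lame_parameters(young, poisson):
    """Lame parameters (lambda, mu) of an isotropic solid from E and nu."""
    lam = young * poisson / ((1.0 + poisson) * (1.0 - 2.0 * poisson))
    mu = young / (2.0 * (1.0 + poisson))
    return lam, mu


def volume_change(trace_m, lam, mu):
    """Coseismic volume change dV = tr(M) / (3*lambda + 2*mu); N*m / Pa = m^3."""
    return trace_m / (3.0 * lam + 2.0 * mu)
```

With the quartzitic value quoted above, 3λ + 2μ = \(1.63 \times 10^{11}\) Pa, a trace of \(1.63 \times 10^{6}\) N·m corresponds to ΔV = \(1 \times 10^{-5}\) m³, i.e. 10 cm³.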

The deviatoric moment tensor can also be expressed as a sum of double-couples, M1 and M2:

Each of the double-couple terms has a scalar seismic moment defined by Eq. (14). Therefore, the total shear deformation can be computed using:

Perhaps one of the most useful results that can be computed from the deviatoric moment tensor is the fault plane solution. This consists of the orientations of the two planes, usually expressed as strike, dip and rake (Fig. 22), that can be fitted to the deviatoric moment tensor and across which the P-wave displacement is zero (i.e. the nodal planes). The moment tensor components for a double-couple of arbitrary orientation are given by Aki and Richards (1980) as:

\(M_{ij} = M_0\left(s_i n_j + s_j n_i\right)\)

Definition of fault orientation parameters: strike, dip and rake (Andersen 2001)

where s is a unit slip vector lying in the fault plane and n is the unit vector normal to that plane. Because the moment tensor is symmetric (\(M_{ij} = M_{ji}\)), s and n can be interchanged without changing M: the normal to one plane can equally be the slip vector on the other plane, and vice versa. These two nodal planes are called the fault plane and the auxiliary plane, and together they form the fault plane solution. The fault plane solution indicates the type of faulting that is taking place in the source area (Fig. 23).
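The double-couple expression can be evaluated from strike, dip and rake. The Python sketch below assumes the Aki and Richards (1980) convention, with x pointing north, y east and z down, so its vectors would have to be rotated into the sensor network’s coordinate system before being compared with an inverted moment tensor. The function names are illustrative.

```python
import math


def fault_vectors(strike_deg, dip_deg, rake_deg):
    """Unit fault normal n and slip s (Aki and Richards convention: x north, y east, z down)."""
    phi, delta, lam = (math.radians(a) for a in (strike_deg, dip_deg, rake_deg))
    n = [-math.sin(delta) * math.sin(phi),
         math.sin(delta) * math.cos(phi),
         -math.cos(delta)]
    s = [math.cos(lam) * math.cos(phi) + math.cos(delta) * math.sin(lam) * math.sin(phi),
         math.cos(lam) * math.sin(phi) - math.cos(delta) * math.sin(lam) * math.cos(phi),
         -math.sin(lam) * math.sin(delta)]
    return n, s


def double_couple(m0, n, s):
    """M_ij = M0 (s_i n_j + s_j n_i): symmetric, and traceless because n . s = 0."""
    return [[m0 * (s[i] * n[j] + s[j] * n[i]) for j in range(3)] for i in range(3)]
```

Because \(\sum_{ij} M_{ij}^{2} = 2M_0^{2}\) for this tensor, the normalization \(\sqrt{\tfrac{1}{2}\sum_{ij} M_{ij}^{2}}\) recovers \(M_0\). Swapping n and s in `double_couple` returns the same tensor, which is the fault-plane/auxiliary-plane ambiguity described above.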

Triangle diagram for displaying the focal mechanism projections (also referred to as stereonets) for common fault types. The three vertices correspond to pure strike-slip (top), reverse (right) and normal fault (left) mechanisms. Planes a and b represent either the fault plane or the auxiliary plane. Shaded regions (++) indicate compressional P-wave motions (Andersen 2001)

Fault plane solutions can also be used to infer stress orientations. This applies to the case where new fractures develop in response to the ambient stress field (e.g. mining-induced fracturing, failure in laboratory tests) but is limited in tectonic studies since earthquakes often occur on preexisting faults that could have developed during different stress regimes. The stress directions are halfway between the nodal planes, so that the maximum compressive stress (P-axis) can be found by bisecting the dilatational quadrant and the minimum compressive stress (T-axis) is located by bisecting the compressional quadrant. The intermediate stress axis (B-axis) is orthogonal to the P and T-axes.
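For a pure double-couple with fault normal n and slip vector s, the axes can be computed directly: the T-axis is parallel to n + s, the P-axis to n − s (both normalized), and the B-axis to n × s. For an inverted tensor with significant non-double-couple components, the eigenvectors of the deviatoric tensor would be used instead. A minimal Python sketch with a hypothetical function name:

```python
import math


def principal_axes(n, s):
    """P, T and B axes of a double couple from unit normal n and unit slip s.

    T ~ n + s (tension, positive eigenvalue of M0(s n^T + n s^T)),
    P ~ n - s (pressure, negative eigenvalue), B = n x s (null axis).
    Axes are lines, so their overall sign is arbitrary."""
    k = 1.0 / math.sqrt(2.0)
    t_axis = [k * (a + b) for a, b in zip(n, s)]
    p_axis = [k * (a - b) for a, b in zip(n, s)]
    b_axis = [n[1] * s[2] - n[2] * s[1],
              n[2] * s[0] - n[0] * s[2],
              n[0] * s[1] - n[1] * s[0]]
    return p_axis, t_axis, b_axis
```

Each axis lies at 45° to both nodal planes, which is the simplification discussed below.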

Figure 24 illustrates the relationship between fault planes and the maximum and minimum compressive stresses, and Fig. 25 shows the same relationship for different fault types.

Simplistic relationship between the maximum (P) and minimum (T) principal stresses and fault planes (modified from Stein and Wysession 2003)

Simplistic relationship between the P- and T-axes and normal, reverse and oblique slip fault types. The P and T axes can be obtained by drawing a meridian line that connects the poles to the fault planes. The points halfway between the nodal planes are the P- and T-axes (modified from Stein and Wysession 2003)

Note that placing the directions of maximum and minimum compressive stress at 45° to the slip plane, as discussed above, is a simplification. Laboratory experiments show that the fracture plane often lies at about 25° to the maximum principal stress direction.

A plethora of decompositions are possible, but many are physically implausible. It is important to have an idea of the likely failure modes, and apply the relevant decomposition. Reports are available illustrating different ways to decompose the moment tensor (e.g. Jost and Hermann 1989).

Another parameter that can be calculated is the deviation, ε, of the seismic source from a pure double-couple:

\(\varepsilon = -\dfrac{e_{3}^{*}}{\left|e_{1}^{*}\right|}\)

where \(e_{1}^{*}\) and \(e_{3}^{*}\) are the deviatoric eigenvalues with the largest and smallest absolute values, respectively, so that \(\left|e_{1}^{*}\right| \ge \left|e_{2}^{*}\right| \ge \left|e_{3}^{*}\right|\); ε = 0 for a pure double-couple source, and ε = ±0.5 for a pure compensated linear vector dipole (CLVD) (Dziewonski et al. 1981). The percentage of double-couple contribution, %DC, to the deviatoric moment tensor can be calculated from ε using:

\(\%DC = \left(1 - 2\left|\varepsilon\right|\right) \times 100\)

(Jost and Hermann 1989). The percentage of CLVD contribution, %CLVD, to the deviatoric moment tensor can be calculated from ε using:

\(\%CLVD = 2\left|\varepsilon\right| \times 100\)

The sum of %DC and %CLVD should be 100.
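The three quantities can be computed from the deviatoric eigenvalues as follows. The sketch sorts the eigenvalues by absolute value, computes ε, and uses |ε| in the percentages so that CLVD sources of either sign give 100 % CLVD; the function name is hypothetical.

```python
def dc_clvd(dev_eigenvalues):
    """Return (epsilon, %DC, %CLVD) from the three deviatoric eigenvalues.

    epsilon = -e3*/|e1*| with |e1*| >= |e2*| >= |e3*|;
    %DC = 100 (1 - 2|epsilon|), %CLVD = 200 |epsilon|."""
    e1, e2, e3 = sorted(dev_eigenvalues, key=abs, reverse=True)
    if e1 == 0.0:
        raise ValueError("deviatoric part is zero: purely isotropic source")
    eps = -e3 / abs(e1)
    return eps, 100.0 * (1.0 - 2.0 * abs(eps)), 200.0 * abs(eps)
```

For instance, deviatoric eigenvalues (1, −0.25, −0.75) give ε = 0.25, i.e. 50 % DC and 50 % CLVD.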

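Another measure of the nature of the moment tensor is the R-ratio introduced by Feignier and Young (1992). R is essentially the ratio of the volumetric to the shear components and is defined as:

\(R = \dfrac{100\,\mathrm{tr}(\mathbf{M})}{\left|\mathrm{tr}(\mathbf{M})\right| + \sum_{k=1}^{3}\left|e_{k}^{*}\right|}\)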

where \(e_{k}^{*}\) are the deviatoric eigenvalues. If R > 30, the event is considered to be dominantly tensile; if –30 ≤ R ≤ 30, it is a dominantly shear event; and if R < –30, it is dominantly implosive. An event could show implosive components if there is collapse towards a void, for example during closure of an underground excavation.
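The R-ratio and the classification thresholds above can be sketched as follows, taking the three eigenvalues of the full moment tensor as input. The function names are illustrative.

```python
def r_ratio(eigenvalues):
    """R = 100 tr(M) / (|tr(M)| + sum_k |e_k*|) (Feignier and Young 1992)."""
    tr = sum(eigenvalues)
    dev = [e - tr / 3.0 for e in eigenvalues]      # deviatoric eigenvalues e_k*
    denom = abs(tr) + sum(abs(d) for d in dev)
    if denom == 0.0:
        raise ValueError("zero moment tensor")
    return 100.0 * tr / denom


def classify_r(r):
    """Classification using the +/-30 thresholds given in the text."""
    if r > 30.0:
        return "tensile"
    if r < -30.0:
        return "implosive"
    return "shear"
```

An isotropic explosion gives R = 100 and a pure double-couple R = 0. A tensile crack with eigenvalues proportional to (λ + 2μ, λ, λ), taking λ = μ, i.e. (3, 1, 1), gives R ≈ 65 and is classified as tensile.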

An example of the R-ratios computed for AE events recorded during a large-scale reinforced concrete beam test is presented in Fig. 26. The majority of AE events have R-ratios between –45 and +30 and thus lie predominantly in what is considered the shear region. Note that the events in Cluster 1 originated from an inclined crack in the high-shear region of the beam. Details of the test are reported in Mhamdi (2015).

Elevation view (top) and R-ratios versus AE events plot for select cluster (bottom) from large-scale reinforced concrete beam test. Figure adapted from Mhamdi (2015)

4.4 Concluding Remarks

While MTI has been used in seismology and mining to characterize seismic sources for many decades, it has not yet found widespread application and acceptance in fracture monitoring of civil engineering materials. One reason may be that the wave characteristics are easier to interpret in the larger-scale media of the earth. In addition, instrumenting civil engineering structures with many high-fidelity sensors and recording hardware is expensive and cumbersome, and analyzing and interpreting the data requires highly specialized expertise.

The MTI Toolbox is a software package that was originally developed for the mining environment (Andersen 2001; Linzer 2012) but has also proven useful for quantitative analysis of AE from civil engineering materials (Mhamdi 2015; Linzer et al. 2015; Finck et al. 2003). Once AE locations and wave-mode amplitudes have been estimated, the software can be used to perform MTI using the absolute, relative or hybrid method. A wide range of parameters is provided, along with stereonets, to help interpret the results. Figure 27 shows a screenshot of the input mask and Fig. 28 shows a sample result.

Screenshot of input mask from MTI Toolbox (Linzer 2012)

Screenshot of results provided by MTI Toolbox (Linzer 2012)

The derivation presented in this chapter has been adapted for application to AE analysis of civil engineering materials, i.e. for P-wave measurements by uniaxial sensors. The difference between this derivation and those given in crustal seismology texts is that here the Green’s functions are rotated into the coordinate system of the sensors, whereas in crustal seismology the Green’s functions are calculated for the P-Sh-Sv coordinate system, which requires triaxial data that can be rotated into the same system.

References

Aki K, Richards PG (1980) Quantitative seismology. Freeman, San Francisco

Anastasopoulos A (2005) Pattern recognition techniques for acoustic emission based on condition assessment of unfired pressure vessels. J Acoust Emiss 23:318–330

Andersen LM (2001) A relative moment tensor inversion technique applied to seismicity induced by mining. PhD thesis, University of the Witwatersrand, Johannesburg, p 230

ASTM E610 (1982) Standard definitions of terms relating to acoustic emission. ASTM, pp 579–581

Balázs GL, Grosse CU, Koch R, Reinhardt HW (1993) Acoustic emission monitoring on steel-concrete interaction. Otto Graf J 4:56–90

Bar-Cohen Y, Xue T, Lih SS (1996) Polymer piezoelectric transducers for ultrasonic NDE. NDTnet 1(9):7

Barker JS, Langston CA (1982) Moment tensor inversion of complex earthquakes. Geophys J Int 46(3):341–371

Berger H (ed) (1977) Nondestructive testing standards—a review. Gaithersburg, ASTM, Philadelphia

Buland R (1976) The mechanics of locating earthquakes. Bull Seismol Soc Am 66(1):173–187

Carter GC, Ferrie JF (1979) A coherence and cross spectral estimation program. IEEE Press, pp 1–2.3

CEN European Standard (2009) EN 1330-9—nondestructive testing—terminology—Part 9: Terms used in acoustic emission analysis. Comité Européen de Normalisation CEN. (1330-9) Rel. 2000-3, pp 1–23

Dahm T (1996) Relative moment tensor inversion based on ray theory: theory and synthetic tests. Geophys J Int 124:245–257

Daubechies I (1996) Where do wavelets come from?—a personal point of view. Proc IEEE 84(4):510–513

DGZfP (1991) Merkblatt SE-3 – Richtlinie zur Charakterisierung des Schallemissionsprüfgerätes im Labor. Deutsche Gesellschaft für Zerstörungsfreie Prüfung. Recommendation SE-3