Abstract

Existing clustering ensemble selection methods adopt internal and external evaluation indexes to measure the quality and diversity of base clusterings. The significance of base clustering is quantified by the average or weighted average of multiple evaluation indexes. However, there exist two limitations in these methods. First, the evaluation of base clusterings in the form of linear combination of multiple indexes lacks the structural analysis and relative comparison between clusterings and measures. Second, the consistency between the final evaluation and the multiple evaluations from different measures cannot be guaranteed. To tackle these problems, we propose a clustering ensemble selection method with Analytic Hierarchy Process (AHPCES). Experimental results validate the effectiveness of the proposed method.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

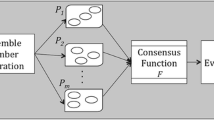

Clustering ensemble selection creates the final clustering by evaluating and selecting a subset of base clusterings, and it performs as good as even better than full clustering ensemble [6, 13, 17, 18]. Quality and diversity of base clustering are two critical issues of clustering ensemble selection. A subset of base clusterings of both high quality and diversity will produce a precise ensemble result.

Most existing clustering ensemble selection methods adopt internal and external evaluation indexes to measure the quality and diversity of base clusterings. The significance of base clustering is directly quantified by the average (or weighted average) of multiple evaluation indexes [18]. However, there exist the following problems in these methods. First, the final evaluation of base clusterings is obtained by the linear combination of multiple indexes, which is a single level evaluation assemble and lacks the structural analysis and relative comparison between clusterings and measures. Second, the consistency between the final evaluation of base clusterings and the multiple evaluations from different measures cannot be guaranteed.

To tackle the problems above, we propose a clustering ensemble selection method based on Analytic Hierarchy Process (AHPCES) [15] in this paper. AHP is a structured technique for organizing and analyzing complex group decisions. As to the characteristics of AHP for group decision making, we apply it to formulate the clustering ensemble selection process. Specifically, we design an analytic hierarchy process to evaluate base clusterings, which achieves the hierarchical ensemble of the weights of evaluations from base clustering level to criteria level. Considering the significances of evaluation measures and integrating the weights of base clusterings under multiple measures in hierarchy, AHP-based method can generate more reasonable and consistent evaluations of base clustering results which facilitates the selective clustering ensemble.

The rest of this paper is organized as follows. Section 2 introduces the proposed method in detail, which includes comparison matrix construction, weight computation and algorithm implementation. In Sect. 3, experiment results validate the effectiveness of the AHP-based selection for clustering ensemble.

2 Clustering Ensemble Selection with AHP

The framework of the proposed AHPCES consists of three levels. On the bottom level of base clusterings, all the base clusterings are measured with multiple evaluation indexes under the criteria of quality and diversity; On the second level of evaluation index, multiple evaluation indexes are grouped and composed to form the overall evaluation of base clusterings in the aspects of quality and diversity respectively; On the top level of criteria, the final significance of base clusterings is obtained by integrating the significances of the criteria of quality and diversity. The detailed computation of the base clustering significance is introduced below.

On the top level, the significance of a clustering result \({C_i}\) is obtained by

in which the weights \({\lambda _q}\) and \({\lambda _d}\) denote the significances of the criteria of clustering quality and diversity and we set \({\lambda _q} = 0.7,{\lambda _d} = 0.3\) as default. \({q_{{C_i}}}\) and \({d_{{C_i}}}\) denote the evaluations of the quality and diversity of the clustering \({C_i}\).

On the second level of evaluation index, given L quality measures and T diversity measures, \({q_{{C_i}}}\) and \({d_{{C_i}}}\) are obtained by

where \(0 \le w{q_l},w{d_t} \le 1\mathrm{{ }}\) and \(\mathrm{{ }} \sum \limits _{l = 1}^L {w{q_l}} = \sum \limits _{t = 1}^T {w{d_t}} = 1\). \({q_{{C_i}}^l}\) and \({d_{{C_i}}^t}\) denote the evaluations of the clustering \({C_i}\) with the lth quality measure and the tth diversity measure respectively, \({w{q_l}}\) and \({w{d_t}}\) are the normalized significance weights of the measures.

On the bottom level of base clustering results, adopting L quality and T diversity measures to evaluate M base clusterings, we suppose \({\{ q_{{C_i}}^1,...,q_{{C_i}}^l,...,q_{{C_i}}^L\}}\) are L quality evaluations and \({\{ d_{{C_i}}^1,...,d_{{C_i}}^t,...,d_{{C_i}}^T\}}\) are T diversity evaluations of a clustering \({C_i}\), \(1 \le i \le M\) and normalize all the evaluations to \(\left[ {0,1} \right] \). The pairwise comparison of clusterings \({C_i}\) and \({C_j}\) in quality can be formulated by

and the pairwise comparison of clusterings in diversity can be formulated by

Based on the pairwise comparison, given an evaluation measure, we can construct a comparison matrix of all the clusterings.

Definition 1

Pairwise-Comparison Matrix of Clustering Results. Given M clusterings and an evaluation measure, the pairwise comparison of clusterings \({C_i}\) and \({C_j}\) is \(L\left( {i,j} \right) _{q|d}^{l|t}\) denoted by \(L\left( {i,j} \right) \). Suppose a mapping \(f:L\left( {i,j} \right) \rightarrow [1,9]\) ranks continuous pairwise comparison into 9 levels through sorting and partitioning all the values, the comparison matrix of clusterings is constructed as follows.

For convenience, we use \({\mathbf{{P}}_q^l}\) to denote the comparison matrix of the lth quality measure and \({\mathbf{{P}}_d^t}\) to denote the matrix of the tth diversity measure.

According to the AHP theory, the elements of the comparison matrix indicate the levels of relative importance between the different clustering results. ‘1 | 9’ means ‘equal | extreme importance’. Moreover, because \(\mathbf{{P}}\left( {i,j} \right) \cdot \mathbf{{P}}\left( {j,i} \right) = 1\), the comparison matrix \(\mathbf{{P}}\) is a positive reciprocal matrix. Referring to [15], we can further define the consistent comparison matrix of clusterings.

Definition 2

Consistent Comparison Matrix. If the pairwise-comparison matrix \({\mathbf{{P}} = \left[ {\mathbf{{P}}\left( {i,j} \right) |1 \le i,j \le M} \right] }\) is a positive reciprocal matrix, it is consistent if and only if the following relationship holds.

A comparison matrix \(\mathbf{{P}}\) is ‘perfectly consistent’, if \(\mathbf{{P}}\) satisfies the \(C.R. = 0\). A comparison matrix \(\mathbf{{P}}\) is ‘near consistent’, if the consistent ratio \(0 \le C.R. \le 0.1\). The ratio \(C.R. = \frac{{C.I.}}{{R.I.}}\), R.I. is a random index whose value depends on M and \(C.I. = \frac{{\lambda {}_{\max } - M}}{{M - 1}}\), in which \({\lambda _{\max }}\) is the maximum eigenvalue of the matrix. If a comparison matrix is consistent (or near), the principle eigenvector of the matrix is the consistent low-rank representation of all the pairwise comparisons [15]. The experiments will validate the consistency of the constructed comparison matrix.

Due to the principal eigenvector of a consistent comparison matrix \(\mathbf{{P}}\) is a necessary representation of the priorities derived from the pairwise comparisons, we can calculate the evaluations of clusterings through the eigendecomposition of the comparison matrix. The elements of the obtained principal eigenvector represent the significance of the corresponding clustering results, which are consistent to the pairwise comparisons. For the \({M \times M}\) pairwise comparison matrix \(\mathbf{{P}}_q^l\) of the lth quality measure, we normalize the element sum of the principle eigenvector \({\mathbf{{q}}^l}\) to one and obtain the quality weights of M clustering results.

For multiple L quality measures, the principle eigenvectors of L comparison matrices lead to L groups of quality weights \(\{ {\mathbf{{q}}^1},...,{\mathbf{{q}}^L}\} \) as the overall quality evaluation of the clustering results. In a similar way, we can calculate the diversity weights of clustering results \({\mathbf{{d}}^t} = \left\{ {d_{{C_1}}^1, \cdots ,d_{{C_M}}^T} \right\} \) through the eigendecomposition of comparison matrices of diversity measures \(\mathbf{{P}}_d^t,\mathrm{{ 1}} \le t \le T\).

Besides constructing the comparison matrices to compute the weights of clustering results \(q_{{C_i}}^l\) and \(d_{{C_i}}^t\), we should also construct comparison matrices to calculate the significance weights of evaluation measures, i.e. \(w{q_l},\mathrm{{ }}w{d_t}\). In this paper, we adopt four internal indexes as the quality measures (L \(=\) 4) and three external indexes as the diversity measures (T \(=\) 3). The internal indexes include Calinski Harabasz index (CHI) [3], Davies-Bouldin index (DBI) [5], Compactness (CP) and Separation (SP). The external indexes include Cluster Accuracy (CA), Adjusted Rand index (ARI) and Normalized Mutual Information (NMI). Referring to [14], we can construct a \(4 \times 4\) comparison matrix \({\mathbf{{P}}_{eq}}\) for quality measures and a \(3 \times 3\) comparison matrix \({\mathbf{{P}}_{ed}}\) for diversity measures.

Similar to the calculation of weights of clusterings, the significance weights of evaluation measures are computed through eigendecomposition of \({\mathbf{{P}}_{eq}}\) and \({\mathbf{{P}}_{ed}}\).

where \(\mathbf{{eq}} = \left\{ {w{q_1}, \cdots ,w{q_L}} \right\} \mathrm{{ }}\),\(\mathrm{{ }}\sum \limits _{l = 1}^L {w{q_l} = 1} \mathrm{{ }}\) and \(\mathrm{{ }}{} \mathbf{{ed}} = \left\{ {w{d_1}, \cdots ,w{d_T}} \right\} \),\(\mathrm{{ }}\sum \limits _{t = 1}^T {w{d_t} = 1}\). The normalized principal eigenvectors \(\mathbf{{eq}}\) and \(\mathbf{{ed}}\) provide the comparative significance weights of the evaluation measures in quality and diversity respectively.

As introduced above, synthesizing the weights of clustering results and evaluation measures as shown in Formulas (1) and (2), we can quantify the overall evaluation of clustering results under multiple measures and thereby select the significant clusterings for ensemble. The process of clustering ensemble selection based on AHP is presented in Algorithm 2.

3 Experimental Results

We implement three experiments to validate AHPCES. In these experiments, we adopt kmeans to generate 50 base clusterings, set the selection ratio ranges from 20\(\%\) to 60\(\%\), use LinkCluE [10] as the consensus function and adopt three external criteria (CA, ARI, NMI) and three internal criteria (SC, DI, CHI) to evaluate the clusterings, high evaluation values indicate the high performances of the clusterings. All the experiments are performed on 11 data sets. The detailed descriptions of the data sets are shown in Table 1.

3.1 Test of Comparison Matrix Consistency

In the first experiment, in order to demonstrate the consistency of the constructed pairwise comparison matrices of clustering quality and diversity (see Sect. 2), we compute the consistency ratio C.R. of all the pairwise comparison matrices for the base clusterings on each data set.

Figure 1 shows the average consistency ratio of the quality and diversity comparison matrices \(C.R. \le 0.1\) (namely near consistent), which indicates that the principal eigenvectors of comparison matrices can be used to quantify the significance of base clusterings in quality and diversity.

3.2 Test of AHP-based Clustering Selection

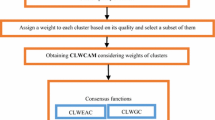

In the second experiment, we implement two tests to verify the AHPCES. In the first test, we compare AHPCES with four clustering selection strategies, which include Full Clustering Ensemble (FCE), Random Clustering Ensemble Selection (RCES), Top-k Quality Clustering Ensemble Selection (TQCES) and Top-k Diversity Clustering Ensemble Selection (TDCES).

Figure 2 shows the average evaluations on all the data sets against the selection ratios ranging from 20% to 60%. We can find that, under the same selection proportions, AHPCES achieves the most precise ensemble results than the other selection strategies, which means the AHPCES is more effective to select the significant base clusterings for ensemble.

In the second test, we adopt four consensus functions to ensemble the clusterings selected by AHPCES and the full clusterings without selection. The consensus functions include CSPA, HGPA, MCLA [16] and TOME [19]. With different consensus functions, Fig. 3 shows the comparison between the ensemble results with AHPCES and without selection. We can find that, for all the consensus functions, the AHPCES can improve the full ensemble results, which validates the robustness of the AHP strategy for base clustering selection.

3.3 Overall Evaluation

In the final experiment, we expect to overall evaluate the proposed AHPCES method through comparing with three elegant clustering ensemble selection methods. The methods for comparison include Cluster and Selection algorithm (CAS) [6], Adaptive Cluster Ensemble Selection algorithm (ACES) [2] and Selective Spectral Clustering Ensemble algorithm (SELSCE) [12]. Because of the limitation of paper length, we just present the average evaluations generated by different selection strategies on all the data sets.

Table 2 presents the comparison between AHPCES and other clustering ensemble selection methods. We show the max average value evaluated by each criterion and attach the corresponding selected proportion. It is obvious that AHPCES achieves the best performances. Abundant experiments indicate that our proposed method is effective to select the qualified and diverse base clusterings, and thereby produce precise clustering ensemble results.

4 Conclusions

we propose a clustering ensemble selection method with analytic hierarchy process (AHPCES) to address the limitations of the most existing clustering ensemble selection methods. Experimental results validate AHPCES can generate reasonable and consistent evaluations of base clusterings for selective ensemble. Our future work will focus on the theoretical analysis of the consistency of pairwise comparison matrices and try to improve the construction of the comparison matrices of evaluations indexes.

References

Asuncion, A., Newman, D.: UCI machine learning repository (2007)

Azimi, J., Fern, X.: Adaptive cluster ensemble selection. In: Twenty-First International Joint Conference on Artificial Intelligence, 9, pp. 992–997 (2009)

Caliński, T., Harabasz, J.: A dendrite method for cluster analysis. Commun. Stat. Theory Methods 3(1), 1–27 (1974)

Chang, H., Yeung, D.Y.: Robust path-based spectral clustering. Pattern Recogn. 41(1), 191–203 (2008)

Davies, D.L., Bouldin, D.W.: A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 2, 224–227 (1979)

Fern, X.Z., Lin, W.: Cluster ensemble selection. Stat. Anal. Data Min. ASA Data Sci. J. 1(3), 128–141 (2008)

Fränti, P., Virmajoki, O.: Iterative shrinking method for clustering problems. Pattern Recogn. 39(5), 761–775 (2006)

Fu, L., Medico, E.: Flame, a novel fuzzy clustering method for the analysis of dna microarray data. BMC Bioinform. 8(1), 3 (2007)

Gionis, A., Mannila, H., Tsaparas, P.: Clustering aggregation. ACM Trans. Knowl. Discov. from Data (TKDD) 1(1), 4 (2007)

Iam-on, N., Garrett, S., et al.: Linkclue: a matlab package for link-based cluster ensembles. J. Stat. Softw. 36(9), 1–36 (2010)

Jain, A.K., Law, M.H.C.: Data clustering: a user’s dilemma. In: Pal, S.K., Bandyopadhyay, S., Biswas, S. (eds.) PReMI 2005. LNCS, vol. 3776, pp. 1–10. Springer, Heidelberg (2005). https://doi.org/10.1007/11590316_1

Jia, J., Xiao, X., Liu, B., Jiao, L.: Bagging-based spectral clustering ensemble selection. Pattern Recogn. Lett. 32(10), 1456–1467 (2011)

Jie, Z., Zhihui, L., Duoqian, M., Can, G., Xiaodong, Y.: Multigranulation rough-fuzzy clustering based on shadowed sets. Inf. Sci. 507, 553–573 (2018)

Liu, Y., Li, Z., Xiong, H., Gao, X., Wu, J., Wu, S.: Understanding and enhancement of internal clustering validation measures. IEEE Trans. Cybern. 43(3), 982–994 (2013)

Saaty, T.L.: Decision-making with the ahp: why is the principal eigenvector necessary. Eur. J. Oper. Res. 145(1), 85–91 (2003)

Strehl, A., Ghosh, J.: Cluster ensembles-a knowledge reuse framework for combining multiple partitions. J. Mach. Learn. Res. 3(Dec), 583–617 (2002)

Yue, X., Miao, D., Cao, L., Wu, Q., Chen, Y.: An efficient color quantization based on generic roughness measure. Pattern Recogn. 47(4), 1777–1789 (2014)

Zhao, X., Liang, J., Dang, C.: Clustering ensemble selection for categorical data based on internal validity indices. Pattern Recogn. 69, 150–168 (2017)

Zhong, C., Yue, X., Zhang, Z., Lei, J.: A clustering ensemble: two-level-refined co-association matrix with path-based transformation. Pattern Recogn. 48(8), 2699–2709 (2015)

Acknowledgment

This work was supported by National Natural Science Foundation of China (Nos. 61976134, 61991410, 61991415) and Open Project Foundation of Intelligent Information Processing Key Laboratory of Shanxi Province (No. CICIP2018001).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Liu, W., Yue, X., Zhong, C., Zhou, J. (2020). Clustering Ensemble Selection with Analytic Hierarchy Process. In: Yang, H., Pasupa, K., Leung, A.CS., Kwok, J.T., Chan, J.H., King, I. (eds) Neural Information Processing. ICONIP 2020. Communications in Computer and Information Science, vol 1332. Springer, Cham. https://doi.org/10.1007/978-3-030-63820-7_5

Download citation

DOI: https://doi.org/10.1007/978-3-030-63820-7_5

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-63819-1

Online ISBN: 978-3-030-63820-7

eBook Packages: Computer ScienceComputer Science (R0)