Abstract

Recent advances in TEM instrumentation, with faster and more sensitive detectors, together with the ever-increasing number of advanced algorithms capable of producing high-quality 3D reconstructions from fewer acquired projections, are transforming electron tomography into one of the most versatile tools available to materials scientists, as the technique can be applied to virtually any nanoscale material. The complete three-dimensional characterization of magnetic nanoparticles is no exception. Not only can the 3D morphology be resolved, but also the 3D elemental composition, by combining the available reconstruction algorithms with TEM spectral characterization techniques to retrieve the so-called spectrum volume. Among these techniques, electron energy loss spectroscopy (EELS) stands out, given its unrivalled lateral resolution and its unique ability to resolve variations in oxidation state, and even atomic coordination, through the analysis of the fine structure of the elemental edges in the acquired spectra. This chapter reviews electron tomography strategies applied to magnetic nanomaterials, beginning with a chronologically ordered description of some commonly used algorithms and their underlying mathematical principles: from the historical Radon transform and WBP, through the iterative ART and SIRT algorithms, to the later DART and the recently added compressed sensing-based algorithms with superior performance. Regarding spectral reconstruction, dimensionality reduction techniques such as PCA and ICA are presented as a viable way to reduce the complexity of the problem, by applying the reconstruction algorithms to the weighting maps of the physically meaningful resolved components. In this context, the recent addition of clustering algorithms to the available spectral unmixing tools is also described, as a proof of concept of their potential as part of an analytical electron tomography routine. 
Throughout the text, a series of published experiments are described, in which electron tomography and advanced EELS data treatment techniques are used in conjunction to retrieve the spectrum volume of several magnetic nanomaterials, revealing details of the NPs under study such as the 3D distribution of oxidation states.


Keywords

- Electron tomography

- EELS

- PCA

- ICA

- Clustering

- HCA

- Spectrum volume

- SIRT

- ART

- Radon transform

- WBP

- DART

- Compressed sensing

- Magnetic-NPs

- MVA

- Fe-oxide

- Mn-oxide

- TEM

- HAADF

- TVM

- La-oxide

- BLU

- Missing-wedge

- EFTEM

- X-EDS

1 Introduction: Fundamentals of Electron Tomography and Overview of Classic Reconstruction Methods

Electron tomography in the transmission electron microscope (TEM) refers to the reconstruction of 3D volumes from the 2D projection images obtained in the microscope. TEM tomography was first applied in the field of biology [1]. In the last couple of decades, operational and instrumental advances, as well as the formulation of new reconstruction algorithms, have introduced tomography into the realm of materials and physical sciences. Nowadays, it plays a central role in the study and fabrication of nanostructured materials. Moreover, the combination of tomography with TEM spectroscopic techniques (electron energy loss spectroscopy, EELS, and energy-dispersive X-ray spectroscopy, EDX/EDS) has recently opened new perspectives for the characterization of nanomaterials.

In order to carry out tomography experiments in the TEM successfully, the acquired signal (projected images) must fulfil the projection requirement: the image contrast must vary monotonically with a given property of the sample (e.g. thickness or atomic number Z) [2]. This automatically rules out high-resolution TEM images, formed through phase contrast, as well as bright- and dark-field diffraction contrast imaging modes. For crystalline samples, scanning TEM high-angle annular dark-field (STEM-HAADF) images are therefore preferred, as the incoherent HAADF signal depends monotonically on thickness and Z.

Experimentally, tomography in the TEM is carried out by acquiring a set of images at different tilt angles [3]. Hence, a certain degree of discretization is inherently introduced, and the quality of the reconstruction is affected by the number of projections (the angular step) and the angular range covered. The resolution in TEM tomography is anisotropic. For simplicity, let us consider a single-tilt-axis experiment. Along the tilt axis, the resolution is that of the projected images, but along the other two orthogonal axes it is limited by the number of projections acquired and the diameter of the reconstructed volume; a smaller angular step improves the resolution, especially at high tilt angles. However, a smaller step increases the acquisition time, possibly jeopardizing sample stability and increasing beam damage. A compromise between angular step and acquisition time that avoids a significant loss of resolution is therefore necessary.
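The trade-off between angular step and resolution is often quantified with the Crowther criterion, d = πD/N, where D is the diameter of the reconstructed volume and N the number of projections. The criterion is not stated in the text above and assumes projections evenly spread over a full 180° range, so for a limited tilt range it is only an optimistic estimate. The function names and the 50 nm particle below are hypothetical.

```python
import math

def crowther_resolution(diameter_nm: float, n_projections: int) -> float:
    """Crowther estimate d = pi * D / N (assumes N projections evenly spread over 180 degrees)."""
    return math.pi * diameter_nm / n_projections

def n_projections(max_tilt_deg: float, step_deg: float) -> int:
    """Number of images in a single-axis series from -max_tilt to +max_tilt with a constant step."""
    return int(round(2 * max_tilt_deg / step_deg)) + 1

# Hypothetical example: a 50 nm particle imaged over +/-70 degrees
for step in (1.0, 2.0, 5.0):
    n = n_projections(70, step)
    print(f"step {step} deg: {n} images, d ~ {crowther_resolution(50, n):.2f} nm")
```

Halving the step roughly doubles the number of images (and the dose and acquisition time) while roughly halving d, which is the compromise described above.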

Furthermore, since the space between the pole pieces inside the TEM column is limited, it is practically impossible to cover the whole \(\pm 90^\circ\) tilt range with conventional sample holders. This causes undersampling along the illumination direction and is responsible for the most important artefact in TEM tomography: the missing wedge [2]. It degrades the resolution by causing an elongation along the illumination direction which, for a single-axis tilt series with maximum tilt angle \(\alpha\), can be estimated by the elongation factor \(e_{yz} = \sqrt{(\alpha + \sin\alpha\cos\alpha)/(\alpha - \sin\alpha\cos\alpha)}\). There are several approaches to deal with the effect of the missing wedge. Experimentally, preparing samples in a needle shape [4, 5] is one possible solution (allowing the full \(\pm 90^\circ\) tilt range to be covered), but in principle it excludes the study of nanoparticles. Double-tilt experiments [6] are a viable option, reducing the missing wedge to a missing cone at the cost of a more difficult image acquisition. DART algorithms [7, 8] are an alternative when the composition of the sample is well known.
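The missing-wedge elongation factor for a single-axis series, with the maximum tilt angle α taken in radians, can be evaluated directly. This is a minimal sketch; the function name is hypothetical.

```python
import math

def elongation_factor(max_tilt_deg: float) -> float:
    """Missing-wedge elongation factor e_yz for a single-axis series over +/- max_tilt_deg."""
    a = math.radians(max_tilt_deg)
    sc = math.sin(a) * math.cos(a)
    return math.sqrt((a + sc) / (a - sc))

for tilt in (60, 70, 80, 90):
    print(tilt, round(elongation_factor(tilt), 2))
```

A typical ±70° holder limit gives an elongation of about 1.31, ±60° gives about 1.55, and the factor only reaches 1 for the full ±90° range.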

1.1 Mathematical Principles and Reconstruction Methods

1.1.1 Radon Transform and Fourier Methods

The first attempts to solve the problem of reconstructing 3D objects by tomography were based on the Radon transform [9] and on the properties of Fourier space, through the application of the so-called central section theorem: the Fourier transform of the projection of an object at a given angle is a central section through the Fourier transform of that object. In other words, calculating the Fourier transforms of the projected images acquired at different angles is equivalent to sampling the Fourier space of the object, which can then be recovered by an inverse Fourier transform.
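The central section theorem can be checked numerically in its simplest discrete case: for a projection along one image axis, the 1D FFT of the projection equals the line through the origin of the 2D FFT of the object. Projections at arbitrary angles would additionally require interpolation onto the Cartesian Fourier grid. The random object is a hypothetical example.

```python
import numpy as np

rng = np.random.default_rng(0)
obj = rng.random((64, 64))             # hypothetical 2D object; rows = y, columns = x

projection = obj.sum(axis=0)           # projection along y (tilt angle 0)
ft_projection = np.fft.fft(projection)

ft_object = np.fft.fft2(obj)
central_section = ft_object[0, :]      # the k_y = 0 line through the origin of Fourier space

print(np.allclose(ft_projection, central_section))   # True
```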

The definition of the missing wedge is straightforward in terms of the Fourier space. Applying the central section theorem, we can relate the undersampling in the projection tilt series with non-sampled spatial frequencies in the Fourier space and, thus, to the resolution degradation and elongation of the reconstruction [2].

Although these Fourier methods are theoretically and historically relevant, the discretization imposed by the actual experiment inevitably forces a certain degree of interpolation in the reconstruction and, thus, an increase in computational time. This triggered the development of the so-called direct methods, in which the reconstruction is carried out in direct space instead of Fourier space.

1.1.2 Weighted Back-Projection (WBP)

This technique reconstructs the original object from the projections in direct space [10]. To that end, back-projection bodies are built by smearing each projected image back along its original projection angle. WBP has been used extensively for single-tilt-axis experiments (mainly in medical and biological applications [11, 12]). Not only can it be solved analytically, but it is also equivalent to the Fourier methods. Besides, some of the artefacts intrinsically associated with WBP, which stem from the discrete nature of the experiments, are easily removed by applying a combination of ramp and low-pass filters. However, WBP performance is far from that of the iterative methods described below.
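To make the back-projection idea concrete, here is a minimal 2D parallel-beam sketch in NumPy. The function names, the linear-interpolation projector and the phantom are illustrative assumptions, and only an ideal ramp filter is applied (no low-pass apodization).

```python
import numpy as np

def forward_project(img, angles_deg):
    """Parallel-beam sinogram of a square image (pixel-driven, linear splatting onto detector bins)."""
    n = img.shape[0]
    c = (n - 1) / 2
    y, x = np.mgrid[0:n, 0:n]
    vals = img.ravel()
    sino = np.zeros((len(angles_deg), n))
    for i, a in enumerate(np.deg2rad(angles_deg)):
        t = ((x - c) * np.cos(a) + (y - c) * np.sin(a) + c).ravel()   # detector coordinate
        t0 = np.floor(t).astype(int)
        w = t - t0
        for idx, weight in ((t0, 1.0 - w), (t0 + 1, w)):
            ok = (idx >= 0) & (idx < n)
            np.add.at(sino[i], idx[ok], (vals * weight)[ok])
    return sino

def weighted_back_projection(sino, angles_deg):
    """Ramp-filter each projection, then smear it back along its acquisition direction."""
    n_angles, n = sino.shape
    ramp = np.abs(np.fft.fftfreq(2 * n))                      # ideal ramp, zero-padded to 2n
    spectrum = np.fft.fft(sino, n=2 * n, axis=1) * ramp
    filtered = np.real(np.fft.ifft(spectrum, axis=1))[:, :n]
    c = (n - 1) / 2
    y, x = np.mgrid[0:n, 0:n]
    detector = np.arange(n)
    rec = np.zeros((n, n))
    for i, a in enumerate(np.deg2rad(angles_deg)):
        t = (x - c) * np.cos(a) + (y - c) * np.sin(a) + c
        rec += np.interp(t, detector, filtered[i], left=0.0, right=0.0)
    return rec * np.pi / n_angles                               # d(theta) = pi / n_angles

# Hypothetical phantom: a disc plus a denser square inclusion
n = 64
yy, xx = np.mgrid[0:n, 0:n]
phantom = (((xx - 32) ** 2 + (yy - 30) ** 2) < 12 ** 2).astype(float)
phantom[20:26, 36:42] += 1.0
angles = np.arange(0, 180, 2)
rec = weighted_back_projection(forward_project(phantom, angles), angles)
corr = np.corrcoef(rec.ravel(), phantom.ravel())[0, 1]
print(f"correlation with phantom: {corr:.3f}")
```

Dropping the ramp weighting turns this into plain back-projection, which returns a strongly blurred object; the weighting compensates for the over-sampling of low spatial frequencies near the centre of Fourier space.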

1.1.3 Algebraic Reconstruction Techniques (ART)

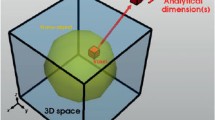

To understand the mathematical procedure behind this first iterative method, let us consider a single-axis tilt experiment [13]. The object to be reconstructed can be seen as a set of planes (slices) perpendicular to the tilt axis. Each of these planes projects onto a 1D line in the TEM image acquired at each tilt angle of the experiment. By reconstructing the set of 2D planes, the 3D volume corresponding to the sample can be recovered; hence, the dimensionality of the problem is reduced in the calculations (Fig. 11.1).

Each plane of the volume is regarded as a grid of n × n points (i, j) that completely contains the sample slice within its boundaries (Fig. 11.1). In the reconstruction, each (i, j) position is assigned an optical density \({\rho_{i,j}}\), determined by the object. Each ray \(\left( {k,\theta } \right)\) of the projection has the integrated density \({R_{k,\theta }} = \sum {\rho_{i,j}}\), where the summation extends over all the grid points (i, j) contained within the ray. Experimentally, the acquired images are projections at a series of angles \(\theta\), and the measured intensities are \({P_{k,\theta }}\) for each ray \(\left( {k,\theta } \right)\). Therefore, the problem to be solved is obtaining the set of \({\rho_{i,j}}\) (n × n values) from the measured \({P_{k,\theta }}\).

The iterative nature of ART implies that the algorithm needs to converge. Two versions are available, multiplicative (mult.) and additive (add.); in iteration q, the densities of the grid points contained in ray \(\left( {k,\theta } \right)\) are updated as

\[\rho_{i,j}^{q+1} = \rho_{i,j}^{q}\,\frac{P_{k,\theta}}{R_{k,\theta}^{q}} \quad \text{(mult.)}, \qquad \rho_{i,j}^{q+1} = \max\left( \rho_{i,j}^{q} + \frac{P_{k,\theta} - R_{k,\theta}^{q}}{N_{k,\theta}},\; 0 \right) \quad \text{(add.)},\]

where the initial guess for the optical density is a homogeneous distribution of the total intensity T of a plane (slice), \(\rho_{i,j}^{0} = T/n^2\). In the additive method, \({N_{k,\theta }}\) is the number of grid points (i, j) in the ray \(\left( {k,\theta } \right)\). Both algorithms keep \({\rho_{i,j}} \geqslant 0\), as they take into account the fact that the signal is positive (\(\rho \left( {x,y,z} \right) \geqslant 0\)).
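A toy version of both ART updates can be written on an n × n grid. As a simplifying assumption, rays are restricted to four directions (rows, columns and both diagonals), so that a ray is simply the set of grid points sharing a label and N is the number of points per ray; the phantom and all function names are hypothetical. The additive rule is rho += (P − R)/N clipped at 0, the multiplicative rule is rho *= P/R, and both start from the homogeneous guess T/n².

```python
import numpy as np

def ray_labels(n):
    """For an n x n grid, map each point (i, j) to the index k of the ray containing it,
    for four hypothetical ray directions: rows, columns and the two diagonals."""
    i, j = np.mgrid[0:n, 0:n]
    return {0: i, 90: j, 45: i - j + (n - 1), 135: i + j}

def ray_sums(rho, labels):
    """Integrated density R_{k,theta} of every ray, for every direction theta."""
    return {th: np.bincount(lab.ravel(), weights=rho.ravel()) for th, lab in labels.items()}

def art(P, labels, n, n_sweeps=50, mode="add"):
    """ART: correct rho one projection (direction) at a time.
    add.: rho += (P - R) / N, clipped at 0;  mult.: rho *= P / R."""
    T = P[0].sum()                                   # total intensity of the slice
    rho = np.full((n, n), T / n**2)                  # homogeneous initial guess
    for _ in range(n_sweeps):
        for th, lab in labels.items():               # rays of one direction are disjoint
            R = np.bincount(lab.ravel(), weights=rho.ravel())
            if mode == "mult":
                ratio = np.divide(P[th], R, out=np.zeros_like(R), where=R > 0)
                rho = rho * ratio[lab]
            else:
                N = np.bincount(lab.ravel())         # grid points per ray
                rho = np.maximum(rho + ((P[th] - R) / N)[lab], 0.0)
    return rho

def residual(rho, P, labels):
    R = ray_sums(rho, labels)
    return sum(np.linalg.norm(P[th] - R[th]) for th in labels)

n = 8
truth = np.zeros((n, n))
truth[2:5, 3:7] = 1.0
truth[6, 1] = 2.0
labels = ray_labels(n)
P = ray_sums(truth, labels)                          # simulated measurements P_{k,theta}
r0 = residual(np.full((n, n), P[0].sum() / n**2), P, labels)
for mode in ("add", "mult"):
    print(mode, r0, residual(art(P, labels, n, mode=mode), P, labels))
```

Because the rays of a single direction do not overlap, updating all of them at once is equivalent to updating them one by one, which is what ART prescribes.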

1.1.4 Simultaneous Iterative Reconstruction Technique (SIRT)

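This second iterative method for tomographic reconstruction arises from the same mathematical framework as ART [14]. The main difference lies in how information is used. In each iteration, ART updates \({\rho_{i,j}}\) one projection (angle \(\theta\)) at a time, using only information from that projection, whereas SIRT algorithms use all projections at the same time. In the additive form,

\[\rho_{i,j}^{q+1} = \max\left( \rho_{i,j}^{q} + \frac{\sum P_{k,\theta}}{\sum L_{k,\theta}} - \frac{\sum R_{k,\theta}^{q}}{\sum N_{k,\theta}},\; 0 \right),\]

where the sums extend over all rays \(\left( {k,\theta } \right)\) passing through the grid point (i, j), and the term \({L_{k,\theta }}\) is the length of the ray \(\left( {k,\theta } \right)\).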

Stability under noisy conditions is greatly enhanced in SIRT algorithms (especially compared to ART). SIRT converges more slowly than ART, but the quality of the results is better overall. Nonetheless, care is required when running ART and SIRT algorithms: the results may converge after a few iterations and then begin to diverge again (i.e. the reprojected intensities may drift further away from the measured projections).
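SIRT can be sketched in the matrix form x ← x + C Wᵀ R (p − W x), where R and C hold the inverse row and column sums of the binary projection matrix W. This widely used formulation is an assumption here and may differ in detail from the ray-length normalization of [14]; the toy grid with four ray directions, the phantom and the function names are hypothetical. Every update uses the residuals of all rays at once.

```python
import numpy as np

def system_matrix(n):
    """Binary projection matrix W for an n x n grid and four hypothetical ray directions
    (rows, columns and both diagonals); W[r, pixel] = 1 if the pixel lies in ray r."""
    i, j = np.mgrid[0:n, 0:n]
    rows = []
    for lab in (i, j, i - j + (n - 1), i + j):
        for k in range(lab.max() + 1):
            rows.append((lab == k).ravel().astype(float))
    return np.array(rows)

def sirt(W, p, n_iter=200):
    """SIRT in the matrix form x <- x + C W^T R (p - W x), updating from all rays at once."""
    R = 1.0 / W.sum(axis=1)   # inverse ray sums (grid points per ray)
    C = 1.0 / W.sum(axis=0)   # inverse number of rays through each grid point
    x = np.zeros(W.shape[1])
    for _ in range(n_iter):
        x = x + C * (W.T @ (R * (p - W @ x)))
        x = np.maximum(x, 0.0)   # non-negativity constraint
    return x

n = 8
truth = np.zeros((n, n))
truth[2:5, 3:7] = 1.0
truth[6, 1] = 2.0
W = system_matrix(n)
p = W @ truth.ravel()
x = sirt(W, p)
print("relative residual:", np.linalg.norm(p - W @ x) / np.linalg.norm(p))
```

Averaging the corrections from all rays is what makes SIRT less sensitive to noise in any single projection, at the price of slower convergence.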

1.1.5 Discrete Algebraic Reconstruction Technique (DART)

DART algorithm belongs to the field of discrete tomography (DT), dealing with volume reconstruction from a low number of projections [7, 8]. It is driven by the idea of a priori object segmentation (i.e. component separation assigning a single grey level for each material present in the object). It operates iteratively in two steps: (1) reconstruction step, (2) segmentation step. For simplicity, let us consider a binary case (i.e. only one material species and the background) for a 2D reconstruction from 1D projections, that can be easily generalized to 3D volume reconstructions. The problem (reconstruction) can be represented by a linear system \(\vec p = W\vec x\), where p are the projection values, x the image/object and W represents the projection process.

The reconstruction step uses a continuous tomographic reconstruction method: ART [13], SIRT [14] or any other iterative algorithm can be used. From now on, we refer to this step as the algebraic reconstruction method (ARM). The segmentation is carried out by separating the material from the background (thresholding the grey levels) and identifying the set of boundary pixels \({B^{\left( t \right)}}\) and the set of ‘fixed’ pixels \({F^{\left( t \right)}}\) (where t denotes the iteration step).

The set \({B^{\left( t \right)}}\) is updated by the ARM in each iteration, while \({F^{\left( t \right)}}\) remains fixed. This way, the number of variables in the linear system is reduced and the computational power required is greatly decreased. Since the segmentation is applied to ARM-reconstructed images (possibly suffering from the missing wedge), the separation of grey levels may be defective at first. To allow boundary regions to form inside the material, a random subset of pixels from \({F^{\left( t \right)}}\) is also included in the ARM update, each with probability p (adjustable from 0 to 1 depending on the problem). Also, a smoothing filter is applied after each iteration to counter the strong local oscillations in the grey levels of \({B^{\left( t \right)}}\) after the ARM step.
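The segmentation step can be sketched for a binary object as follows. This is a hypothetical illustration, not the implementation of [7, 8]: the threshold, the 4-connected neighbourhood used to define boundary pixels and the name `dart_segmentation_step` are assumptions. Pixels in the returned `free` mask (the boundary set plus randomly released fixed pixels) would be passed to the ARM; all other pixels keep their segmented grey level.

```python
import numpy as np

def dart_segmentation_step(rec, threshold, p, rng):
    """Hypothetical sketch of one DART segmentation step for a binary (material/background) object.

    Returns the segmented image and a boolean mask of the pixels left free for the next ARM step.
    """
    seg = (rec > threshold).astype(int)          # 1 = material, 0 = background
    boundary = np.zeros(seg.shape, dtype=bool)
    # A pixel is a boundary pixel if any 4-connected neighbour has a different label.
    # np.roll wraps around the edges, so the object is assumed not to touch the image border.
    for shift, axis in ((1, 0), (-1, 0), (1, 1), (-1, 1)):
        boundary |= seg != np.roll(seg, shift, axis=axis)
    # Release each non-boundary ('fixed') pixel with probability p.
    free = boundary | (rng.random(seg.shape) < p)
    return seg, free

rng = np.random.default_rng(0)
rec = np.zeros((7, 7))
rec[2:5, 2:5] = 0.9                              # hypothetical ARM output: a 3 x 3 particle
seg, free = dart_segmentation_step(rec, threshold=0.5, p=0.0, rng=rng)
print(int(free.sum()))                           # 20 boundary pixels
```

With p = 0 only the 8 outer particle pixels and the 12 adjacent background pixels remain free; the particle centre stays fixed, which is how DART shrinks the number of unknowns.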

It has been proven that DART converges faster than any ARM available, when the number of grey levels does not exceed 5 [7]. The accuracy of the reconstruction (measured comparing phantom images and the results of the reconstruction for different algorithms) is usually higher, independently of the number of projections or the angular range. Tests on the effect of the missing wedge reveal a much-reduced impact in final solutions achieved by DART. Since electron dose is reduced when a lower number of projections are required, DART appears as a viable option in cases of severe sample damage due to beam sensitivity.

Despite the advantages described for DART, it remains a heuristic algorithm, meaning that convergence cannot always be assured. This makes the selection of a single termination criterion difficult. DART also relies on prior knowledge of the sample composition. Although it is robust with respect to the chosen segmentation criterion (threshold), if the number of components (different grey levels) is not correctly identified, the reconstruction may present serious artefacts (Fig. 11.2).

2 Analytical Tomography and the Spectrum Volume Approach

As tomographic reconstruction techniques become more accurate for volume reconstruction in materials science, and taking advantage of the new technical advances in transmission electron microscopes, the possibility of generalizing tomography to include spectroscopic signals arises. This is a particularly interesting approach in the case of magnetic nanostructures.

To this end, the combination of different analytical techniques in the TEM with tomographic reconstruction, such as energy filtered TEM tomography (EFTEM-Tomo), energy-dispersive X-ray tomography (EDX-Tomo) and electron energy loss spectroscopy tomography (EELS-Tomo) is considered.

EFTEM-Tomo [15, 16] is based on performing a classic tomography experiment on a data set of energy-filtered TEM images. In the EFTEM imaging mode, a characteristic electron energy loss, usually corresponding to an element-specific signature (core-level ionization or bulk plasmon modes), is selected by defining three energy windows [3]. For each element present in the sample, an image is obtained at a characteristic energy loss corresponding to a given transition of the element (or to a characteristic plasmon energy of the compound). The shorter acquisition times compared to other techniques and the sample stability during acquisition (i.e. the minimization of spatial drift thanks to the small number of images needed) made it the technique of choice in the first analytical tomography experiments. Nevertheless, EFTEM faces two shortcomings that are difficult to overcome. The first is its limited energy resolution, which compromises elemental separation when an energy window spans several overlapping features of the spectrum. The second is the narrow energy range covered by each window: to be effective, each energy window must be as small as possible to avoid misidentification (as just discussed). Hence, covering large portions of the spectrum may require a huge number of filtered images.

Nowadays, EDX-Tomo [17,18,19] is one of the most popular techniques for obtaining a 3D elemental reconstruction of nanostructured materials. The new generation of detectors and multi-detector geometries in the TEM column have greatly decreased acquisition times and improved collection efficiency. This way, X-ray spectra can be collected fast enough to limit sample damage while the sample is tilted to acquire the projections required for the tomographic reconstruction. Nonetheless, EDX does not match EELS in spatial resolution, and it provides no information on oxidation states.

Finally, EELS spectrum image (SI) [20, 21] tomography has become the option of choice to reconstruct a 3D volume analytically when high spatial and energy resolution are required. Advances in TEM instrumentation (e.g. Cs correctors [22], Cc correctors [23], monochromators [24], cold FEGs [25] and better spectrometers) have decreased the acquisition time for EELS SI in recent years, as well as providing access to higher resolution. Furthermore, EELS SI contain more information than the elemental composition alone. Fine-structure information about the local atomic environment in the sample, such as the atomic coordination, valence state and type of bonding, is available through the analysis of the energy loss near-edge structure (ELNES). Thus, the oxidation state can be determined by analysing EELS SI data. ELNES arises from the quantum nature of the excitation, by the incident beam, of an electron in a given atomic shell of the probed atom. Physically, the near-edge structure (spanning several tens of eV from the ionization edge) can be directly linked to the density of empty states above the Fermi level [3, 26]. Among the most commonly exploited ELNES features are the white lines (sharp peaks caused by the excitation of core electrons into well-defined empty states in transition metals and rare earths) [27].

In the following, a series of key experiments on the development of the EELS tomography technique carried out in recent years is described, as well as the mathematical and physical considerations that made them possible.

2.1 Compressed Sensing (CS)

Before the detailed description of the experiments, the introduction of one last reconstruction algorithm developed in recent times is advisable, due to the nature of the experiments themselves. Given the increment of the data volume required for analytical electron tomography experiments, faster and more accurate approaches must be pursued.

An alternative reconstruction method, based on the same theoretical background as image compression algorithms (JPEG and JPEG-2000) [28], is compressed sensing (CS) [5, 29,30,31,32].

It has been shown that CS generally yields higher-quality reconstructions than classic SIRT and WBP given the same number of iterations, and that it can retrieve highly accurate reconstructions under severe undersampling (i.e. when only a low number of projections is available). Examples of CS-ET reconstructions of magnetic nanoparticles are available in the literature [32,33,34], illustrating the power of this method to reconstruct complex 3D structures accurately for quantitative analysis and, thus, enabling the comparative study of magnetic properties and structural information.

2.1.1 Mathematical Principles

Understanding the principles behind the CS theoretical framework requires a fair knowledge of the concepts of sparse representation, compressible signal and sensing processes (measurement).

In a standard signal acquisition process (e.g. image acquisition in the TEM), an initial signal \(\vec x\) with n components is measured against the so-called sensing waveform (i.e. a functional basis \(\varPhi\)), giving a recorded signal \(\vec p\) with m components. That is:

\[\vec p = \varPhi \vec x\]

In general, the process suffers from undersampling (i.e. \(m \ll n\)) and the equation system is underdetermined. CS theory shows that a unique solution can be calculated for this problem under two major conditions: (i) \(\vec x\) is sparse in a certain basis \(\varPsi\), and (ii) the basis for the sparse representation \(\varPsi\) and that of the sensing waveform \(\varPhi\) are incoherent.

A signal \(\vec x\) is considered sparse in a certain domain (i.e. basis \(\varPsi\)) when all of its information can be expressed through a small set of s nonzero coefficients \({c_{\text{s}}} \ne 0\) of the vector \(\vec c\). Mathematically, the sparse transform is expressed as:

\[\vec c = \varPsi \vec x\]

The signal is said to be sparse only if \(s \ll n\), \(\vec c\) being the sparse representation of \(\vec x\). In practical applications, CS allows the strict constraint of sparsity to be relaxed to compressibility. For a compressible \(\vec x\), \(\vec c\) contains k nonzero elements with \(k > s\). Thus, the transform \(\varPsi\) is allowed to return a small number of coefficients \(\left( {k - s} \right)\) that are less significant for recovering the information than the remaining \(s\), while still \(k \ll n\), so that \(\vec x\) is sufficiently represented by the \(s < k \ll n\) most significant coefficients. Therefore, the \(\left( {k - s} \right)\) small coefficients can be filtered out (set to 0), provided that the inverse transform can still recover the initial image with minimal information loss. In that case, \(\vec x\) is said to be a compressible signal.
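A minimal NumPy sketch of the thresholding idea, assuming Ψ is the discrete Fourier transform (a choice made only for this example): the test signal is exactly sparse in that basis, so keeping its four nonzero coefficients reproduces it, while keeping only two loses the weaker component. For a merely compressible signal, the discarded coefficients would be small but nonzero, and so would the error. The function name is hypothetical.

```python
import numpy as np

def keep_largest(x, k):
    """Keep the k largest-magnitude Fourier coefficients of x and invert the transform."""
    c = np.fft.fft(x)                       # here Psi is the discrete Fourier transform
    small = np.argsort(np.abs(c))[:-k]      # indices of all but the k largest coefficients
    c[small] = 0.0
    return np.real(np.fft.ifft(c))

n = 256
t = np.arange(n)
x = np.sin(2 * np.pi * 3 * t / n) + 0.5 * np.cos(2 * np.pi * 10 * t / n)

for k in (2, 4):
    err = np.linalg.norm(x - keep_largest(x, k)) / np.linalg.norm(x)
    print(k, round(err, 3))
```

Only 4 of the 256 coefficients are needed here, which is the kind of redundancy CS exploits.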

The incoherence of \(\varPsi\) and \(\varPhi\) means that the sensing waveforms cannot be sparsely represented in the basis \(\varPsi\). Hence, it ensures that each \({p_i}\) contains information about many of the coefficients \({x_j}\) and, thus, guarantees that undersampling artefacts (in the \(\varPhi\) domain) are spread in a noise-like fashion over the signal in the sparse domain (\(\varPsi\)).

The practical application of CS requires an optimization process to recover the sparse coefficients from the measurements, since the acquired signal is compressed and the optimal transform \(\varPsi\) is not known a priori. To that end, a nonlinear algorithm is required that minimizes the number of nonzero coefficients (promoting sparsity) without compromising consistency with the measured data. It has been shown that the minimization can be carried out over the so-called \({l_1}\)-norm [35], \({\left\| {\vec c} \right\|_{{l_1}}} = \sum\nolimits_i \left| {c_i} \right|\).
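The l1-minimization can be illustrated with iterative shrinkage-thresholding (ISTA), a simple solver for the Lagrangian form min ½‖Φx − p‖² + λ‖x‖₁. Here Ψ is the identity (the signal itself is sparse) and a random Gaussian Φ plays the role of an incoherent sensing matrix. ISTA, the problem sizes and λ are assumptions for this sketch; it is not the solver used in the cited CS-ET works.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def ista(Phi, p, lam, n_iter=5000):
    """ISTA for min_x 0.5 * ||Phi x - p||_2^2 + lam * ||x||_1."""
    step = 1.0 / np.linalg.norm(Phi, 2) ** 2          # 1 / Lipschitz constant of the gradient
    x = np.zeros(Phi.shape[1])
    for _ in range(n_iter):
        x = soft_threshold(x - step * (Phi.T @ (Phi @ x - p)), step * lam)
    return x

rng = np.random.default_rng(1)
n, m, s = 128, 64, 5                                 # m << n: underdetermined system
support = rng.choice(n, size=s, replace=False)
x_true = np.zeros(n)
x_true[support] = rng.choice([-1.0, 1.0], size=s) * rng.uniform(1.0, 2.0, size=s)
Phi = rng.standard_normal((m, n)) / np.sqrt(m)       # random, incoherent sensing matrix
p = Phi @ x_true                                     # m measurements of an n-component signal

x_rec = ista(Phi, p, lam=0.01)
print("relative error:", np.linalg.norm(x_rec - x_true) / np.linalg.norm(x_true))
```

With 64 measurements for 128 unknowns, plain least squares has infinitely many exact solutions; the l1 term is what selects the sparse one.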

2.1.2 Compressed Sensing in Electron Tomography (CS-ET)

CS-ET can be formulated from two equivalent perspectives: (i) with \(\vec p\) the projection data (sinogram) and \(\varPhi\) the real-space projection operator, in the frame of a discretized Radon transform, or (ii) applying the central slice theorem in the frame of a discretized Fourier transform (FT). This second approach sets \(\vec p\) as the FT of the projection data (i.e. discrete radial samples of the object in Fourier space) and \(\varPhi\) as a discrete Fourier operator. The undersampling artefacts (already discussed in the introduction) that arise from an incomplete radial sampling of Fourier space can then be minimized through the application of a CS-based reconstruction algorithm.

The sparsity of the recorded signals (a necessary condition for applying CS) must be promoted through a certain transform \(\varPsi\). Several transforms are described in the literature, depending on the nature of the sample and the information content of the projection images (acquisition mode). The most common choice is a combination of sparsity in the image domain itself (\(\varPsi = \mathbb{I}\), the identity transform) and in the spatial gradient domain (\(\varPsi\) = spatial finite-differences transform). Sparsity is then promoted by minimizing the \({l_1}\)-norm in both spaces (called the TV-norm in the spatial gradient space). This is a convex optimization problem that can be formulated as:

\[{\vec x^\prime } = \mathop{\arg\min}\limits_{\vec x} \left\{ \left\| \varPhi \vec x - \vec p \right\|_{l_2}^2 + \lambda_{\mathbb{I}} \left\| \vec x \right\|_{l_1} + \lambda_{\text{TV}} \left\| \nabla \vec x \right\|_{l_1} \right\}\]

where the coefficients \({\lambda_{\mathbb{I}}}\) and \({\lambda_{\text{TV}}}\) weight the \({l_1}\)-norm minimization in each transform domain (image \(\mathbb{I}\) and gradient TV), the \({l_2}\)-norm term sets the tolerance to noise in the data set (\({\left\| {\varPhi {{\vec x}^\prime } - \vec p} \right\|_{l_2}} \leqslant \varepsilon\)), and \({\vec x^\prime }\) is the reconstruction of the signal \(\vec x\) from the projection data \(\vec p\). The minimization is an iterative process (see Fig. 11.3).

The quality of the reconstructed image will be affected by the values of the weighting factors \({\lambda_{\mathbb{I}}},{\lambda_{\text{TV}}}\). These values are not given a priori by any theory and must be approximated in each reconstruction problem independently. Under/overestimating them will lead to defective reconstructions, given that the minimization iterative process will incorrectly filter information in the sparse domain.
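The role of λ_TV can be explored in a reduced 1D setting, assuming Φ = identity and λ_I = 0, so that the problem becomes TV denoising, min ½‖x − p‖² + λ_TV‖Dx‖₁ with D the finite-difference operator. The sketch solves it by projected gradient on the dual problem, a standard approach chosen here for brevity; the profile, noise level and λ values are hypothetical. With λ_TV = 0 the noisy data are returned unchanged, a moderate value removes noise while keeping the steps, and a much larger value would progressively shrink and finally flatten the real steps.

```python
import numpy as np

def tv_denoise_1d(y, lam, n_iter=20000):
    """min_x 0.5 * ||x - y||_2^2 + lam * ||D x||_1, solved by projected gradient on the dual."""
    def D(x):                                   # forward finite differences
        return np.diff(x)

    def Dt(z):                                  # adjoint of D
        return -np.diff(np.concatenate(([0.0], z, [0.0])))

    z = np.zeros(y.size - 1)
    for _ in range(n_iter):
        # dual step of size 1/4, since ||D||^2 <= 4, then projection onto |z_i| <= lam
        z = np.clip(z + 0.25 * D(y - Dt(z)), -lam, lam)
    return y - Dt(z)

rng = np.random.default_rng(0)
clean = np.repeat([0.0, 1.0, 0.3], [60, 80, 60])        # hypothetical piecewise-constant profile
noisy = clean + 0.2 * rng.standard_normal(clean.size)

def rmse(a):
    return np.sqrt(np.mean((a - clean) ** 2))

for lam in (0.0, 1.0):
    print(f"lambda_TV = {lam}: RMSE = {rmse(tv_denoise_1d(noisy, lam)):.3f}")
print(f"noisy data: RMSE = {rmse(noisy):.3f}")
```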

2.2 EELS Tomography: From Spectrum Image Tilt Series to the Spectrum Volume

The end goal of analytical tomography is to retrieve the complete information about a 3D structure at the nanoscale. This is equivalent to reconstructing a four-dimensional object, where the first three dimensions correspond to the geometry of the sample, and the fourth dimension is related to the chemical composition. In the case of EELS tomography, the fourth dimension is the energy loss. This object is known as the spectrum volume (SV), where each voxel contains an EELS spectrum (see Fig. 11.4) [36, 37].

The first approximation to the spectrum volume reconstruction was carried out by acquiring sets of spectrum images (SI) at different tilt angles, simultaneous with the acquisition of HAADF images [37]. To avoid sample damage, the experiments are usually performed using short acquisition times for the EEL spectra. These conditions reduce the signal to noise ratio (SNR) of the spectra; thus, statistical treatment is routinely required to retrieve significant information. The large number of spectra acquired for the complete set of projections increases the statistics, allowing the successful retrieval of a denoised signal through multivariable analysis methods (MVA) [20, 38,39,40].

The information fed to the tomographic reconstruction algorithms is intended to be the intensity of the EELS edges in the acquired spectrum images \(I\left( {x,y,\theta } \right)\) (i.e. the integrated area under each edge). This way, a map of the separated elements according to their EELS edge intensities is available for each projected image. In the single-scattering approximation, the intensity of an EELS edge in the core-loss region is given by:

\[I_k^A(\beta ,\Delta ) \approx N^A\,\sigma_k^A(\beta ,\Delta )\,I_T\,\exp\left( -t/\lambda \right)\]

for edge k of element A, integrating over a collection angle \(\beta\) and an energy range \(\Delta\), where \({I_k}^A\) is the integrated intensity, \({N^A}\) is the areal density, \({\sigma_k}^A\) is the ionization cross-section and \({I_T}\) is the total transmitted beam intensity; t is the sample thickness and \(\lambda\) the inelastic mean free path. (If \(t/\lambda \geqslant 0.3\), plural scattering events are expected and the equation may not hold.) In the absence of plural scattering, the edge intensities vary monotonically with thickness, and the signals are suitable for tomographic reconstruction. This is the usual scenario for magnetic nanoparticles, due to their small size.

In cases where plural scattering is relevant (thicker or larger samples), some problems may arise. One clear example is the case of the ‘cupping artefact’, characterized by an inversion of the spectrum image contrast in thicker zones of the sample [41]. This leads to a miscalculation of the edge intensity and, thus, of the elemental quantification. Hence, in those cases, EELS edge intensities may no longer be suitable signals for tomography reconstruction.
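Why the edge signal can invert in thick regions can be seen from a simplified single-scattering model, assumed here for illustration: with the areal density proportional to t and the signal damped by exp(−t/λ), the edge intensity behaves as (t/λ)·exp(−t/λ) in arbitrary units. This toy model ignores the redistribution of intensity by plural scattering, so it only shows the trend.

```python
import numpy as np

# Simplified single-scattering model (assumption): N^A proportional to t, signal damped by exp(-t/lambda)
t_over_lambda = np.linspace(0.0, 3.0, 301)
edge_signal = t_over_lambda * np.exp(-t_over_lambda)    # relative edge intensity, arbitrary units

t_peak = t_over_lambda[np.argmax(edge_signal)]
thin = t_over_lambda <= 0.3
print(f"edge signal peaks at t/lambda = {t_peak:.2f}")
print("monotonic for t/lambda <= 0.3:", bool(np.all(np.diff(edge_signal[thin]) > 0)))
print("decreasing beyond the peak:", bool(np.all(np.diff(edge_signal[t_over_lambda >= t_peak]) < 0)))
```

Beyond t ≈ λ, thicker regions give a weaker edge signal, which is the contrast inversion that characterizes the cupping artefact.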

The correct identification of cupping artefacts in SI is not always straightforward, since they can be mistaken for a structural feature of the image (e.g. a core-shell structure). Therefore, a thorough study of the material is required before undertaking the tomographic reconstruction. Although the spectrum volume cannot be retrieved if EELS edge intensities are unsuitable for tomographic reconstruction, 3D information on the elemental distribution in the samples can still be extracted [37].

2.2.1 Multivariable Analysis Methods (MVA)

SNR is usually low in EELS-SI experiments, due to image acquisition constraints to avoid sample damage. Hence, statistical treatment of the signals in the SI and noise reduction are imperative. Besides, the problem requires the separation of the contribution of each element to the spectra per pixel and their identification, which can be regarded as a blind source separation (BSS) problem [20, 38,39,40].

First, the data \(\left( {x,y,\theta ,\Delta E} \right)\) need to be corrected for energy drift. Then, weighted principal component analysis (wPCA) [38] is applied, with the weighting adapted to the dominant Poissonian noise. The PCA algorithm computes a new spectral basis for the spectrum image, whose components are ordered by the spectral variance they account for in the original SI. Thus, each pixel is no longer described by the intensity of each energy loss channel but by the weight of each new basis component. The method rests on three major assumptions: (i) the problem to be solved is linear, (ii) the signal has higher variance than the noise and (iii) the components are orthogonal. Linearity and orthogonality mean that the separated components can be treated as a basis for the space of energy loss spectra (i.e. each spectrum can be expressed as a weighted sum of the components). The higher-variance components resolved by the algorithm are generally related to meaningful features of the sample (e.g. thickness and elemental composition), whereas the lower-variance components are usually associated with pure noise and offer no further information on the spatial distribution of the elements.
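The weighting and truncation steps can be sketched on a synthetic spectrum image. The weighting used here, dividing the unfolded data by the square root of the outer product of the mean image and the mean spectrum (a scaling commonly used for Poisson-limited data), is an assumption, as is everything in the synthetic example: two hypothetical Gaussian "edges" on a flat background, mixed with linearly varying abundances, plus Poisson noise.

```python
import numpy as np

def weighted_pca_denoise(si, n_components):
    """Poisson-weighted PCA denoising of a spectrum image with shape (ny, nx, n_channels)."""
    ny, nx, ne = si.shape
    D = si.reshape(ny * nx, ne).astype(float)      # unfold: one spectrum per row
    g = D.mean(axis=1, keepdims=True)              # mean counts of each pixel
    h = D.mean(axis=0, keepdims=True)              # mean counts of each energy channel
    scale = np.sqrt(g * h)                         # Poisson weighting (assumed scaling)
    U, s, Vt = np.linalg.svd(D / scale, full_matrices=False)
    low_rank = (U[:, :n_components] * s[:n_components]) @ Vt[:n_components]
    return (low_rank * scale).reshape(ny, nx, ne), s

# Hypothetical spectrum image: two Gaussian "edges" on a flat background, linearly mixed
rng = np.random.default_rng(0)
E = np.arange(100)
spectra = np.stack([5 + 40 * np.exp(-0.5 * ((E - 30) / 4) ** 2),
                    5 + 40 * np.exp(-0.5 * ((E - 65) / 6) ** 2)])
yy, xx = np.mgrid[0:20, 0:20]
fraction = xx / 19.0
abundances = np.stack([fraction, 1.0 - fraction], axis=-1)   # (20, 20, 2)
clean = abundances @ spectra                                  # (20, 20, 100) expected counts
noisy = rng.poisson(clean).astype(float)

denoised, s = weighted_pca_denoise(noisy, n_components=2)
print("leading singular values:", s[:4].round(1))
print("error noisy:", np.linalg.norm(noisy - clean).round(1),
      "error wPCA:", np.linalg.norm(denoised - clean).round(1))
```

The first two singular values stand well above the noise floor, so truncating after them keeps the physical variation and discards most of the Poisson noise. As the next paragraph explains, the retained PCA components themselves need not be physically meaningful.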

The problem with PCA, and the reason it is not regarded as a valid BSS method by itself, is that it relies on second-order statistics (variance) to separate components. That is, the separation of components in PCA is not based on physical considerations, so the components may have no physical meaning [42]. Therefore, they are also, in principle, unsuitable for tomographic reconstruction, since they may fail to fulfil the projection requirement. Nonetheless, wPCA plays an important role as a noise reduction step, since the BSS algorithms described below should not be applied to noisy data sets. It also reduces the number of components in the calculation, thus reducing the computational time required.

Independent component analysis (ICA) is the first possible method for BSS. It deals with the data as a mixture of independent, and therefore uncorrelated, components. Those components are found according to their non-Gaussian distribution and they should unveil physically meaningful components of the dataset [39, 40].
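The principle of ICA can be illustrated with a minimal symmetric FastICA (tanh nonlinearity) on two synthetic 1D sources. FastICA is one common ICA algorithm, chosen here as an assumption; the sources, the mixing matrix and the function name are hypothetical. In an EELS-SI application, the rows of the input would be the PCA-reduced data rather than these toy mixtures.

```python
import numpy as np

def fastica(X, n_components, n_iter=200, seed=0):
    """Symmetric FastICA with a tanh (log-cosh) nonlinearity.
    X has shape (n_mixtures, n_samples); returns estimated sources (n_components, n_samples)."""
    X = X - X.mean(axis=1, keepdims=True)
    d, E = np.linalg.eigh(np.cov(X))
    order = np.argsort(d)[::-1][:n_components]
    K = (E[:, order] / np.sqrt(d[order])).T          # whitening matrix
    Z = K @ X                                        # whitened data, identity covariance

    def decorrelate(W):                              # W <- (W W^T)^(-1/2) W
        s, u = np.linalg.eigh(W @ W.T)
        return (u / np.sqrt(s)) @ u.T @ W

    W = decorrelate(np.random.default_rng(seed).standard_normal((n_components, n_components)))
    for _ in range(n_iter):
        G = np.tanh(W @ Z)
        W = decorrelate(G @ Z.T / Z.shape[1] - (1.0 - G**2).mean(axis=1)[:, None] * W)
    return W @ Z

# Hypothetical sources: a square wave (sub-Gaussian) and Laplacian noise (super-Gaussian)
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 2000)
S = np.vstack([np.sign(np.sin(2 * np.pi * 5 * t)), rng.laplace(size=t.size)])
A = np.array([[1.0, 0.5], [0.7, 1.0]])               # hypothetical mixing matrix
S_est = fastica(A @ S, n_components=2)

# ICA recovers sources only up to order, sign and scale, so compare |correlation|
C = np.abs(np.corrcoef(np.vstack([S, S_est]))[:2, 2:])
print(C.round(3))
```

The separation relies on the non-Gaussianity of the sources; two Gaussian sources could not be unmixed this way, which is one reason why the independence assumption matters for EELS endmembers.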

A second possible method for BSS is the Bayesian linear unmixing (BLU) approach [42, 43], described by N. Dobigeon. One reason to choose BLU over ICA is that the latter has been shown to fail at endmember extraction precisely when the spectral sources (components or endmembers) are not statistically independent, which is a strict requirement of ICA [42, 44]. Besides, the Bayesian formulation allows several constraints to be introduced into the calculation, such as (1) sparsity, (2) non-negativity and (3) full additivity. The EELS-SV [36] calculation clearly benefits from introducing the number of components, the non-negative nature of the signal and the proportion constraint (i.e. full additivity of the proportions of the components expected in the sample) into the data treatment, as a way of increasing the accuracy of endmember identification. In addition, the Dobigeon model [42] estimates the parameters of the new basis within a Bayesian framework in a lower-dimensional space identified by a standard dimension reduction technique such as PCA. This is expected to reduce the degrees of freedom of the parameters.
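BLU itself samples a Bayesian posterior, which is beyond a short example. The sketch below only illustrates the effect of two of the constraints listed above, non-negativity and full additivity, by estimating the abundances of known endmembers with projected-gradient least squares onto the probability simplex. This swapped-in estimator, the function names and the synthetic endmembers are assumptions, not the method of [42, 43].

```python
import numpy as np

def project_to_simplex(v):
    """Euclidean projection of v onto {a : a >= 0, sum(a) = 1} (sort-based algorithm)."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u) - 1.0
    k = np.arange(1, v.size + 1)
    rho = np.nonzero(u - css / k > 0)[0][-1]
    theta = css[rho] / (rho + 1.0)
    return np.maximum(v - theta, 0.0)

def constrained_abundances(M, y, n_iter=2000):
    """Least-squares abundances with a >= 0 and sum(a) = 1, for known endmembers M (channels x comps)."""
    step = 1.0 / np.linalg.norm(M, 2) ** 2
    a = np.full(M.shape[1], 1.0 / M.shape[1])
    for _ in range(n_iter):
        a = project_to_simplex(a - step * (M.T @ (M @ a - y)))
    return a

# Hypothetical endmembers: three Gaussian peaks over 100 energy channels
E = np.arange(100)
M = np.stack([np.exp(-0.5 * ((E - c) / 5.0) ** 2) for c in (20, 50, 80)], axis=1)
a_true = np.array([0.2, 0.5, 0.3])
rng = np.random.default_rng(0)
y = M @ a_true + 0.01 * rng.standard_normal(E.size)
a_est = constrained_abundances(M, y)
print(a_est.round(3), a_est.sum())
```

Whatever the noise, the estimated proportions stay non-negative and sum to one, which is the kind of physically consistent output the constraints are meant to enforce.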

Although both methods have proven able to solve the BSS problem for tomographic reconstruction, new advances are expected in the field, driven by the growing resources dedicated to big data processing across a wide range of scientific and technological fields [45].

2.2.2 EELS-SV. Tomographic Reconstruction from the Elemental Maps

After PCA noise reduction and the separation of the EEL spectra into a sum of weighted spectral components identified by ICA/BLU, these components are taken as the axes of the new spectral basis. Every EEL spectrum in every SI of the acquired tilt series is then represented as a weighted sum of the identified spectral components.
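Once the spectral basis is fixed, the weight maps fed to the reconstruction follow from expressing every spectrum of every SI as a linear combination of the basis spectra. A minimal sketch with unconstrained least squares (`numpy.linalg.lstsq`), assuming a tilt series stored as an array of shape (n_tilts, ny, nx, n_channels); the array layout, the helper name `component_maps` and the random test data are hypothetical.

```python
import numpy as np

def component_maps(tilt_series, basis):
    """Return weight maps of shape (n_components, n_tilts, ny, nx).

    tilt_series: (n_tilts, ny, nx, n_channels) spectrum images.
    basis:       (n_components, n_channels) spectral components (e.g. from ICA/BLU).
    """
    n_tilts, ny, nx, p = tilt_series.shape
    spectra = tilt_series.reshape(-1, p)               # one spectrum per row
    # Solve basis.T @ w = spectrum for all spectra at once (one column each).
    w, *_ = np.linalg.lstsq(basis.T, spectra.T, rcond=None)
    return w.reshape(basis.shape[0], n_tilts, ny, nx)

rng = np.random.default_rng(2)
basis = rng.random((3, 50))
true_w = rng.random((3, 4, 8, 8))
series = np.einsum("ktyx,kp->tyxp", true_w, basis)    # noise-free synthetic tilt series
maps = component_maps(series, basis)
print(maps.shape, np.allclose(maps, true_w))
```

Each `maps[k]` is then a stack of 2D projections of component k, one per tilt angle.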

The final step is the tomographic reconstruction itself, in which the weight map of each spectral component, separated from the SI, is used as the set of projections fed to the algorithm. The required reconstruction quality, the number of projections and the available computational resources determine the algorithm of choice, as already discussed.
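To make the reconstruction step concrete, the following is a minimal SIRT sketch for a single 2D slice in parallel-beam geometry, with the tilt axis perpendicular to the slice. The projector is a simple pixel-driven model that splits each pixel linearly between the two nearest detector bins, stored as a dense matrix; this is only practical for toy sizes, and real data would be handled with a dedicated tomography toolbox. The phantom, the ±60° tilt range, the relaxation `lam` and the non-negativity clip are illustrative assumptions.

```python
import numpy as np

def projection_matrix(n, angles_deg):
    """Dense parallel-beam projector of shape (n_angles * n, n * n), n detector bins."""
    centre = (n - 1) / 2.0
    ys, xs = np.mgrid[0:n, 0:n]
    x = (xs - centre).ravel()
    y = (ys - centre).ravel()
    cols = np.arange(n * n)
    A = np.zeros((len(angles_deg) * n, n * n))
    for i, theta in enumerate(np.deg2rad(angles_deg)):
        # Detector coordinate of each pixel centre, in bin units.
        t = x * np.cos(theta) + y * np.sin(theta) + centre
        lo = np.floor(t).astype(int)
        frac = t - lo
        # Split each pixel linearly between the two nearest bins.
        for bins, weight in ((lo, 1.0 - frac), (lo + 1, frac)):
            ok = (bins >= 0) & (bins < n)
            np.add.at(A, (i * n + bins[ok], cols[ok]), weight[ok])
    return A

def sirt(A, b, n_iter=20, lam=1.0):
    """SIRT: x <- x + lam * C A^T R (b - A x), with x clipped to be non-negative."""
    row, col = A.sum(axis=1), A.sum(axis=0)
    R = np.divide(1.0, row, out=np.zeros_like(row), where=row > 0)
    C = np.divide(1.0, col, out=np.zeros_like(col), where=col > 0)
    x = np.zeros(A.shape[1])
    history = []                                  # R-weighted squared residual
    for _ in range(n_iter):
        r = b - A @ x
        history.append(float(np.sum(R * r**2)))
        x = np.clip(x + lam * C * (A.T @ (R * r)), 0.0, None)
    r = b - A @ x
    history.append(float(np.sum(R * r**2)))
    return x, history

n = 32
yy, xx = np.mgrid[0:n, 0:n] - (n - 1) / 2.0
phantom = ((xx**2 + yy**2) < 10**2).astype(float)
phantom += ((xx - 4)**2 + (yy + 3)**2 < 3**2)     # denser inclusion
angles = np.arange(-60, 61, 3)                     # limited tilt range, 3 degree steps
A = projection_matrix(n, angles)
b = A @ phantom.ravel()                            # noise-free sinogram (one component map row per angle)
x, history = sirt(A, b, n_iter=20)
rel_err = np.linalg.norm(x - phantom.ravel()) / np.linalg.norm(phantom)
print(f"weighted residual {history[0]:.1f} -> {history[-1]:.2f}; relative error {rel_err:.2f}")
```

With the row and column sum weighting and `lam = 1`, each iteration cannot increase the weighted residual, which is what makes SIRT robust; in the EELS-SV workflow the same loop would be run once per component map.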

At this point the EELS-SV is available, since the full spectrum in each reconstructed voxel can be calculated from the separated components and the corresponding reconstructed weighting factors.
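Because every reconstructed voxel holds one weight per component, its full spectrum follows from the same linear model used for the projections. A minimal sketch, assuming the reconstructed weights are stored as an array of shape (n_components, nz, ny, nx) and the basis as (n_components, n_channels); both layouts and the helper name `spectrum_volume` are hypothetical.

```python
import numpy as np

def spectrum_volume(weight_volumes, components):
    """Combine reconstructed weight volumes into a spectrum volume.

    weight_volumes: (n_components, nz, ny, nx) reconstructed weights.
    components:     (n_components, n_channels) spectral basis.
    Returns an (nz, ny, nx, n_channels) array: a full spectrum per voxel.
    """
    return np.einsum("kzyx,kp->zyxp", weight_volumes, components)

rng = np.random.default_rng(3)
components = rng.random((3, 100))
volumes = rng.random((3, 16, 16, 16))
sv = spectrum_volume(volumes, components)
voxel = sv[8, 4, 5]                               # spectrum of a single voxel
print(sv.shape, np.allclose(voxel, volumes[:, 8, 4, 5] @ components))
```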

As a paradigmatic example, let us briefly review the results obtained for the reconstruction of ferromagnetic (FM) CoFe2O4 (CFO) nanocolumns embedded in a ferroelectric (FE) BiFeO3 (BFO) matrix grown on a LaNiO3-buffered LaAlO3 substrate (BFO–CFO//LNO/LAO) (see Fig. 11.5a). It is a prototypical multiferroic vertical nanostructure, in which the magnetic properties depend strongly on the substrate material and orientation, the ferroic phases and the phase ratio. Thus, a complete characterization linking functional properties to material structure requires precise knowledge of the local composition (EELS) coupled with 3D structural reconstruction (electron tomography) [36].

a HAADF image of a projection of the multiferroic compound. Green: area chosen for the EELS-SV reconstruction. b EELS spectrum components extracted by the BSS process as the new parametric basis: 1: background, 2: FexOy, 3: LaxOy. c SI corresponding to a vertical orthoslice through the reconstructed SV. d Volume reconstructions for the parametric basis in b. e Spectrum profile for the spectrum line shown in red in c

Focused ion beam (FIB) [46] preparation of a nanopillar TEM specimen ensured an almost constant thickness in the projections regardless of the tilt angle. The electron mean free path exceeds the sample thickness along all transmitted trajectories, so multiple scattering events should contribute only residually to the EELS signal. This is confirmed by the absence of contrast inversion towards the centre of the nanocolumn in the SI, which validates the use of the EELS components as the input dataset for tomographic reconstruction.
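Whether the thin-sample condition holds can be checked pixel by pixel with the standard log-ratio estimate of relative thickness, t/λ = ln(I_t/I_0), where I_t is the total (integrated low-loss) intensity and I_0 the zero-loss intensity. A minimal sketch, assuming both intensities have already been integrated into 2D maps; the synthetic maps are hypothetical, and the t/λ < 1 check simply restates "mean free path exceeds thickness" rather than a threshold taken from [36].

```python
import numpy as np

def relative_thickness(total, zero_loss):
    """Log-ratio estimate of t/lambda from integrated total and zero-loss intensities."""
    return np.log(total / zero_loss)

# Hypothetical intensity maps of a FIB specimen with nearly constant thickness.
rng = np.random.default_rng(10)
t_true = 0.6 + 0.02 * rng.standard_normal((16, 16))   # t/lambda per pixel
zero_loss = np.full((16, 16), 1.0e5)
total = zero_loss * np.exp(t_true)                    # Poisson relation I_t = I_0 exp(t/lambda)
t_rel = relative_thickness(total, zero_loss)
print(f"t/lambda: mean {t_rel.mean():.2f}, max {t_rel.max():.2f}; "
      f"thin-sample condition: {bool(np.all(t_rel < 1))}")
```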

BLU, together with PCA, was used for endmember separation, and SIRT was the reconstruction algorithm of choice, converging after 20 iterations. The parametric basis extracted after MVA consisted of four components, identified as (1) iron oxide (FexOy), (2) lanthanum oxide (LaxOy), (3) a background contribution and (4) a vacuum noise contribution (kept for the calculations but not reconstructed), as shown in Fig. 11.5b. The volume reconstructions for each component of the basis in Fig. 11.5b are shown in Fig. 11.5d.

One of the many advantages of access to the EELS-SV is illustrated by Fig. 11.5c, e. The former is a vertical orthoslice through the EELS-SV, and the latter displays the spectrum profile along the spectrum line traced in the orthoslice. Access to spectral information from inside the structure, unaffected by the material above and below it (as in the selected spectrum line), is unique to this technique.
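In array terms, an orthoslice and a spectrum line such as those of Fig. 11.5c, e are simple slices of the 4D spectrum volume. The sketch below assumes a hypothetical (nz, ny, nx, n_channels) layout filled with random numbers; the indices are arbitrary.

```python
import numpy as np

# Hypothetical spectrum volume: (nz, ny, nx, n_channels).
rng = np.random.default_rng(4)
sv = rng.random((20, 30, 30, 128))

x0 = 15
orthoslice = sv[:, :, x0, :]                         # vertical slice: (nz, ny, n_channels)
z0, y_start, y_stop = 10, 5, 25
spectrum_line = orthoslice[z0, y_start:y_stop, :]    # (n_positions, n_channels)
# Each row is the reconstructed spectrum of one voxel, so no material above or
# below that voxel (along the original beam direction) is summed into it.
print(orthoslice.shape, spectrum_line.shape)
```

Displaying `spectrum_line` as an image, with position on one axis and energy channel on the other, gives the kind of spectrum profile shown in Fig. 11.5e.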

2.2.3 Cupping Artefact and the Spectrum Volume

Unfortunately, EELS edge intensity does not always fulfil the projection requirement. When plural scattering is not negligible, thickness effects cause contrast inversion (the so-called cupping artefact), which prevents the intensity from varying monotonically with the material composition; tomography algorithms then fail to reconstruct the SV [37].
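Why plural scattering breaks the projection requirement can be seen in a simple Poisson model: the fraction of electrons that undergo exactly one inelastic event scales as (t/λ)·exp(−t/λ), which peaks at t = λ and then decreases. The sketch below assumes that this single-scattering fraction is the signal of interest; real edge intensities also depend on cross sections and collection conditions.

```python
import numpy as np

t_over_lambda = np.linspace(0.0, 3.0, 301)
# Poisson probability of exactly one inelastic scattering event.
single = t_over_lambda * np.exp(-t_over_lambda)

peak = t_over_lambda[np.argmax(single)]
monotonic = bool(np.all(np.diff(single) > 0))
print(f"signal peaks at t/lambda = {peak:.2f}; monotonic in thickness: {monotonic}")
```

Beyond t ≈ λ a thicker region gives less signal, so the centre of a thick object appears darker than its rim: the cupping artefact.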

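One possible solution to this problem is to extract the elemental concentrations from the edge intensities. Let us consider the case of three elements, A, B and C, identified after MVA. Taking advantage of the exponential relation shown in subsection 11.2.2 for the intensity \(I_k^A(\beta,\Delta)\) of core-loss EELS edges, the concentration of element A is

\[ C_A = \frac{I_k^A(\beta,\Delta)/\sigma_k^A(\beta,\Delta)}{I_k^A(\beta,\Delta)/\sigma_k^A(\beta,\Delta) + I_k^B(\beta,\Delta)/\sigma_k^B(\beta,\Delta) + I_k^C(\beta,\Delta)/\sigma_k^C(\beta,\Delta)} \]

where \(\sigma_k^i(\beta,\Delta)\) is the partial ionization cross section of element i for the same collection semi-angle \(\beta\) and energy window \(\Delta\), assuming that the mean free path \(\lambda\) does not effectively change in these materials. This signal already fulfils the projection requirement and is suitable for ET algorithms.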

In addition, MVA commonly yields a spectral component related to sample thickness, which is itself suitable for tomographic reconstruction. If this thickness-related signal is combined pixel by pixel with the quantification data, the intensity of the new signal fulfils the projection requirement, as it can be regarded as the contribution of a given element to the thickness found in each pixel (density-thickness contrast images). The thickness inversion effect (‘cupping artefact’) is thereby eliminated, and a signal that fulfils the projection requirement and contains elemental quantification becomes available for tomographic reconstruction [37].
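A minimal sketch of this pixel-by-pixel combination, assuming three elements with known edge-intensity maps and partial cross sections, and a relative-thickness map t/λ from the MVA thickness component (or the log-ratio method). Concentrations are computed with the standard ratio method, i.e. each cross-section-normalized intensity divided by the sum over all elements. Taking the "merged" signal to be the product of concentration and relative thickness is an assumption made here for illustration, and the function names, cross sections and line profile are hypothetical.

```python
import numpy as np

def concentration(intensities, cross_sections):
    """Atomic fractions from edge intensities I_k and partial cross sections sigma_k.

    intensities:    (n_elements, ny, nx) background-subtracted edge intensities.
    cross_sections: (n_elements,) cross sections for the same beta and Delta.
    """
    areal = intensities / cross_sections[:, None, None]   # proportional to atoms per area
    return areal / areal.sum(axis=0)

def density_thickness(intensities, cross_sections, t_rel):
    """Each element's share of the relative thickness t/lambda in every pixel (assumed product)."""
    return concentration(intensities, cross_sections) * t_rel

# Toy line profile across a particle whose thickness reaches 2.5 lambda at the centre.
x = np.linspace(-0.95, 0.95, 101)
t_rel = 2.5 * np.sqrt(1.0 - x**2)
single = t_rel * np.exp(-t_rel)                 # cupped single-scattering signal
sigma = np.array([1.0, 2.0, 0.5])               # hypothetical cross sections
frac = np.array([0.5, 0.3, 0.2])                # true atomic fractions
I = (frac * sigma)[:, None, None] * single[None, None, :]   # (3, 1, 101) edge intensities

dt = density_thickness(I, sigma, t_rel[None, :])
print("edge signal max at index", int(np.argmax(I[0, 0])),
      "| density-thickness max at index", int(np.argmax(dt[0, 0])))
```

The raw edge signal peaks off-centre (cupping), whereas the concentration-weighted thickness peaks at the thickest point, as the projection requirement demands.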

This method recovers a 3D reconstruction that segments the elements identified through MVA, although the SV itself is not recovered (i.e. each voxel of the reconstructed volume does not contain a full EEL spectrum). It nevertheless proves an effective way of minimizing the negative effects of spectrum image artefacts while retaining as much quantitative chemical information as possible in the reconstructed 3D volume.

2.3 A Case Study: 3D Visualization of Iron Oxidation State in FeO/Fe3O4 Core–Shell Nanocubes Through Compressed Sensing

Determining the oxidation state of chemical species is of paramount importance for understanding the physicochemical properties of nanomaterials in a wide range of applications, particularly when studying magnetic properties in nanostructured materials [34]. The high spatial and spectral resolution of EELS makes it suitable for recovering detailed compositional and electronic information, as required for core–shell magnetic nanoparticles. Furthermore, the energy loss near-edge structure (ELNES) can be used to determine the oxidation state of the probed chemical species. Combining this technique with a highly accurate ET reconstruction method (i.e. CS), able to retrieve fine structural details of a 3D distribution from fewer projection images and thus reducing sample damage, enables recovery of the complete EELS-SV with access to oxidation state information.

To test this possibility, an experiment was designed to recover the EELS-SV of an iron oxide nanocube with a FeOx/FeOy core–shell structure (Torruella, Pau et al. 2016. 3D Visualization of the Iron Oxidation State in FeO/Fe3O4 Core–Shell Nanocubes from Electron Energy Loss Tomography. Nano Letters, 16(8), 5068–5073) [34]. This problem was challenging because the contrast difference between core and shell, in both HREM and STEM-HAADF, was insufficient to segment the images accurately.

To obtain a quantitative 3D oxidation state map of the particle, an EELS-SI tilt series was acquired at low voltage (80 kV) from −69° to +67° in 4° steps, each SI containing 64 × 64 pixels with spectra in the energy range 478–888 eV.

The fine energy dispersion (0.2 eV/pixel) needed to analyse ELNES features, together with a dwell time of 0.015 s/pixel, caused severe damage to the sample by the focused beam during the second half of the tilt series acquisition, owing to the accumulated dose. In addition, some SIs were discarded because of significant coherent diffraction at specific tilt angles. Fortunately, the high symmetry of the nanocube allowed the reconstruction to be carried out by assuming that the projections at positive tilt angles mirror those at negative angles.
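The acquisition parameters above give a feel for the accumulated exposure. The back-of-the-envelope sketch below assumes that every nominal tilt in the stated range was acquired and ignores overheads such as beam blanking, drift correction and discarded SIs; the channel count simply follows from the 410 eV range at 0.2 eV per channel.

```python
import numpy as np

tilts = np.arange(-69, 68, 4)            # -69, -65, ..., +67 degrees
n_pixels = 64 * 64
dispersion = 0.2                         # eV per channel
dwell = 0.015                            # s per pixel
n_channels = round((888 - 478) / dispersion)

time_per_si = n_pixels * dwell           # beam time per spectrum image, in s
total_time = len(tilts) * time_per_si    # if every nominal tilt were acquired
print(f"{len(tilts)} tilts, {n_channels} channels, "
      f"{time_per_si:.2f} s per SI, {total_time / 60:.1f} min in total")
```

Roughly a minute of focused-probe exposure per SI, repeated over tens of tilts, helps explain why damage became apparent in the second half of the series.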

The MVA procedure for noise reduction (namely PCA) and BSS was applied only to the region of interest of the EEL spectra, i.e. around the iron L2,3 edges, which effectively reduced the computational time. The BSS algorithm chosen was Fast-ICA, as implemented in HyperSpy [47]. Fast-ICA was performed on the first derivative of the six spectral components retained after PCA denoising. Of the six resulting components, two were related to the Fe ionization edge and are labelled C1 and C2. They show clear ELNES features that can be identified as the Fe L2,3 white lines (Fig. 11.6). The maximum of the Fe L3 line of C2 is shifted by +1.9 eV with respect to the Fe L3 maximum of C1. For comparison, Fig. 11.6 also shows reference EEL spectra of the Fe L2,3 edges of wüstite (Fe1−xO, Fe2+) and haematite (Fe2O3, Fe3+). This allowed the extraction of Fe oxidation state maps, depicted as the weight of the respective components in each pixel, as shown in Fig. 11.6 (right) for the 0° projection. Similar maps were obtained for the whole angular range of the SI tilt series, giving rise to two sets of images suitable for 3D reconstruction.
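Measuring a shift such as the +1.9 eV between the L3 maxima of C1 and C2 at 0.2 eV per channel calls for sub-channel peak location. One simple option, shown here as an assumption rather than the procedure of [34], is a three-point parabola fitted to the logarithm of the intensities around the maximum, which is exact for a Gaussian peak. The synthetic Gaussian lines and the helper names `peak_position` and `gaussian` are hypothetical stand-ins for the two components.

```python
import numpy as np

def peak_position(energy, intensity):
    """Sub-channel peak position from a parabola through log(intensity) at the top 3 channels."""
    i = int(np.argmax(intensity))
    y0, y1, y2 = np.log(intensity[i - 1:i + 2])
    offset = 0.5 * (y0 - y2) / (y0 - 2.0 * y1 + y2)   # vertex offset, in channels
    return energy[i] + offset * (energy[1] - energy[0])

def gaussian(energy, centre, width=0.8):
    return np.exp(-0.5 * ((energy - centre) / width) ** 2)

energy = 700.0 + 0.2 * np.arange(150)          # 0.2 eV per channel
c1 = gaussian(energy, 708.0)                   # stand-in for the L3 line of C1
c2 = gaussian(energy, 709.9)                   # stand-in for the L3 line of C2
shift = peak_position(energy, c2) - peak_position(energy, c1)
print(f"L3 shift between components: {shift:+.2f} eV")
```

A plain `argmax` would only resolve the shift to the nearest 0.2 eV channel; real white lines are not Gaussian, so on measured data the interpolation is an approximation.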

For the tomographic reconstruction of the SV, the obtained components fulfilled the projection requirement, given that the nanocube size ranged between 35 and 40 nm and no cupping artefact was observed. CS was chosen as the reconstruction algorithm, given the low number of projections available and the precision required in the SV. The sparsity-promoting transform was the one described in the previous section (sparsity was promoted in both the image and gradient domains).
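The sparsity principle behind CS can be illustrated with a generic toy problem. The sketch below is not the tomographic CS solver of [34]: it recovers a sparse vector from fewer random linear measurements than unknowns by minimizing 0.5·‖Ax − b‖² + λ‖x‖₁ with ISTA (iterative soft thresholding). It promotes sparsity in the signal domain only, without the gradient-domain (TV) term, and the sizes, λ and iteration count are illustrative choices.

```python
import numpy as np

def ista(A, b, lam, n_iter=5000):
    """Minimise 0.5 * ||A x - b||^2 + lam * ||x||_1 by iterative soft thresholding."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2     # 1 / Lipschitz constant of the data-term gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        z = x - step * (A.T @ (A @ x - b))     # gradient step on the data term
        x = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)   # soft threshold (L1 prox)
    return x

def rel_error(x, ref):
    return np.linalg.norm(x - ref) / np.linalg.norm(ref)

rng = np.random.default_rng(5)
n, m, k = 200, 80, 5                           # unknowns, measurements, non-zeros
x_true = np.zeros(n)
support = rng.choice(n, k, replace=False)
x_true[support] = rng.uniform(1.0, 2.0, k) * rng.choice([-1.0, 1.0], k)
A = rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n))
b = A @ x_true                                 # fewer measurements than unknowns

x_cs = ista(A, b, lam=0.05)
x_ls = np.linalg.pinv(A) @ b                   # minimum-norm least squares, no sparsity prior
print(f"relative error  sparse (ISTA): {rel_error(x_cs, x_true):.3f}  "
      f"least squares: {rel_error(x_ls, x_true):.3f}")
```

Without a sparsity prior the underdetermined problem has no unique answer and least squares spreads the signal over all unknowns; the L1 term selects the sparse solution, which is the property CS-ET exploits to cope with few projections.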

The results of the reconstruction are shown in Fig. 11.7. The Fe2+ orthoslice (Fig. 11.7d) reveals this oxidation state in both the core and the shell of the nanoparticle, whereas Fe3+ (Fig. 11.7e) is confined to the shell. This is consistent with a Fe3O4 shell (Fig. 11.7b) and a FeO core (Fig. 11.7a).

The reconstructed object is a perfect example of an EELS-SV containing oxidation state information through ELNES analysis. Again, one clear advantage of the SV reconstruction is that information that would usually be inaccessible experimentally is easily recovered by isolating specific voxels, for example single spectra from the core region without any shell contribution (Fig. 11.8a). In particular, spectrum lines in which the different oxidation states are clearly separated and spatially resolved are accessible inside the reconstructed volume. This is shown in Fig. 11.8c, a 2D representation of the EEL spectra along the line marked in Fig. 11.8b, with the position along the line on the ordinate and the energy loss on the abscissa; the intensity of each energy channel is represented by a colour scale. The chemical shift of 1.4 eV between the different oxidation states at the iron edge is observable both in the single spectrum and in the spectrum line extracted from the SV reconstruction, demonstrating the success of the reconstruction.

3 Emerging Techniques and Future Perspectives for EELS-SV

The incorporation of EEL spectra into electron tomography reconstruction made it possible to reconstruct the spectrum volume of a given sample (i.e. the 4D dataset containing both structural and compositional information of a three-dimensional sample). Although the results obtained prove the successful implementation of this technique, even allowing the recovery of ELNES features from the reconstructed SV, there is clearly room for further improvement.

The separation of spectral components that carry physically meaningful information and are suitable for tomographic reconstruction remains the main issue in the standard EELS-SV recovery process described here. Up to now, a combination of PCA (signal denoising) and ICA or BLU (BSS) has been used as the core of the MVA approach for treating EELS-SI datasets. Although these methods have so far yielded good results, they rely on the ability of the scientist to interpret the output components physically, which is not always straightforward. This motivates the application of clustering (or cluster analysis) to EELS-SV recovery.

3.1 Clustering Analysis: Mathematical Principles

An EELS-SI of \(n = X \cdot Y\) pixels and \(E = p\) channels per spectrum can be represented by an \(n \times p\) matrix, each spectrum being a different row. A clustering algorithm groups spectra according to the similarity of their characteristic features (e.g. the position of a given edge, or intensity ratios for edges at the same positions). Many options are available for implementing clustering, such as K-means [48], density-based methods [49] and agglomerative clustering algorithms [50]. Here, the implementation of hierarchical agglomerative clustering (HAC) for the segmentation of EELS-SI is briefly described, following the work in [45].

Let us consider each spectrum (one per pixel) as a single object in a p-dimensional (pD) space. That is, all the features of the spectrum in a single pixel define a single point in this pD space. The distance between points in a given metric characterizes the similarity between spectra. For instance, let us consider the Euclidean metric:

\[ d_{i,j} = \sqrt{\sum_{k=1}^{p} \left( x^{i}_{k} - x^{j}_{k} \right)^2} \]

where \(x^{i}_{k}\) and \(x^{j}_{k}\) \((k = 1, \ldots, p)\) are the coordinates of spectra i and j in the pD space. The HAC algorithm measures this distance and iteratively groups the closest elements into clusters. At each iteration h, the number of clusters decreases by one and the distance \((d_{i,j})^h\) between the closest clusters increases. If no stopping condition is set, all spectra eventually end up in a single cluster and the segmentation information is lost. In general, \((d_{i,j})^h\) undergoes a sudden increase (of orders of magnitude) after a certain number of iterations, which can be related to a certain degree of segmentation having been achieved; this can be used as the stopping criterion. The process is well illustrated by the so-called dendrogram (Fig. 11.9), a ‘tree’ representation of the elements in each cluster against the minimum distance between them (the distance at which a new cluster was formed from two previous clusters, linked by a horizontal line).
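The agglomerative procedure and the "sudden increase" stopping rule described above can be sketched with SciPy's hierarchical clustering. The example assumes Ward linkage on Euclidean distances (the linkage criterion is an assumption here, not taken from the text) and three hypothetical spectral classes with additive noise; the number of clusters is the one left just before the largest increase in merge distance.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

rng = np.random.default_rng(6)
channels = np.arange(200)

def peak(centre, width=5.0):
    return np.exp(-0.5 * ((channels - centre) / width) ** 2)

# 50 noisy spectra for each of three hypothetical classes with features at different energies.
prototypes = [peak(50), peak(100), peak(150)]
spectra = np.vstack([p + rng.normal(0.0, 0.05, (50, channels.size)) for p in prototypes])
labels_true = np.repeat([0, 1, 2], 50)

Z = linkage(spectra, method="ward", metric="euclidean")
heights = Z[:, 2]                           # merge distance at each iteration h
jump = int(np.argmax(np.diff(heights)))     # largest increase between consecutive merges
n_clusters = spectra.shape[0] - (jump + 1)  # clusters left just before that increase
labels = fcluster(Z, t=n_clusters, criterion="maxclust")

# Cluster-averaged spectra are means of measured spectra, so their features are directly interpretable.
cluster_means = np.vstack([spectra[labels == c].mean(axis=0) for c in np.unique(labels)])
print(n_clusters, cluster_means.shape)
```

`scipy.cluster.hierarchy.dendrogram(Z)` draws the corresponding tree, in which the large jump appears as the long vertical branches above the three clusters.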

3.2 Application of Clustering to EELS

EELS-SI and the data analysis techniques introduced so far, PCA and ICA/BLU, aim to map the spatial distribution of compounds and properties of the sample by studying the shape of individual EEL spectra. The nature of the problem, together with the characteristics of EEL spectra (good energy resolution and distinct spectral features for each compound), makes it a suitable candidate for clustering techniques.

Furthermore, clustering has the advantage of resolving different regions without any prior assumption about the data. Also, owing to the nature of cluster analysis, the averaged signal in a single cluster always contains physically meaningful information (free of the negative edges and other features commonly found in ICA or PCA components, which require further manual analysis). This makes clustering a new step towards the automation of EEL spectroscopic techniques, especially EELS-SI segmentation.

The first practical implementation of clustering algorithms in EELS data treatment can be found in Torruella, Pau, et al. 2018. Clustering analysis strategies for electron energy loss spectroscopy (EELS). Ultramicroscopy, 185, 42–48 [45].

In that work, simulated and experimental EELS datasets were successfully segmented with the HAC algorithm following three different strategies: (i) HAC on raw data, (ii) HAC followed by PCA, and (iii) PCA followed by HAC. All three strategies produced successful segmentations, but the accuracy and the amount of information provided differed in each case.

3.2.1 EELS Simulation Dataset

The simulated EELS dataset consisted of a 128 × 128 pixel SI with 1024 channels per spectrum. Four zones with different spectral features were created to test the ability of the algorithm to separate spectral components when detailed analysis is required. The basic structure resembles a spherical core–shell NP with two additional elliptical internal zones (see Fig. 11.10a). The core of the particle was filled with spectra corresponding to a constant FeO composition. The shell was filled with Fex−1Ox+1, where the Fe/O ratio varied linearly, with O increasing towards the exterior. One of the elliptical zones inside the core was filled with FeCoO spectra to simulate a Co precipitate, and the other was a void. Gaussian and Poisson noise was added to all spectra to mimic a real case study.

a EEL spectral phantom used in the simulation of clustering techniques. b Results of the application of HAC to the raw data, showing the segmentation under three different threshold counts: 5000, 2500 and 1500. c Results of applying PCA to the shell region after HAC (green in b). d Result of applying HAC to the PCA scores of the phantom

Clustering segmentation of the raw data identified the regions of different composition without any prior assumption about the data (such as the composition and expected distribution of elements), distinguishing the four zones of the phantom. Despite the acceptable separation (Fig. 11.10b), some FeO spectra near the core/shell boundary are assigned to the wrong cluster, and the Fe/O gradient in the shell is not well represented. This reflects the inability of the clustering approach to produce components with negative, non-physical edges, and hence to detect a composition gradient. A hint of the gradient can be obtained by relaxing the pD distance threshold (i.e. relaxing the stopping criterion): the shell then appears to contain three different clusters (Fig. 11.10b).

To avoid losing this information by grouping, within the same cluster, spectra with similar features that arise from different physical properties of the sample, the first solution explored was to apply PCA to the HAC results. This identified the linear gradient of the Fe/O composition in the shell, retrieving a non-physical component with a negative edge in the decomposed cluster, linked to a decreasing amount of iron towards the exterior (Fig. 11.10c).
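Why PCA recovers the gradient that clustering misses can be shown on a toy shell cluster. If every spectrum is a mixture of an "Fe-rich" and an "O-rich" end spectrum whose proportion varies linearly with position, the first principal component is proportional to their difference (hence it has a negative lobe) and its score tracks the composition. The peak positions, mixing fractions and noise level below are hypothetical stand-ins, not the phantom of [45].

```python
import numpy as np

rng = np.random.default_rng(7)
channels = np.arange(300)

def peak(centre, width=5.0):
    return np.exp(-0.5 * ((channels - centre) / width) ** 2)

# Hypothetical end spectra: an "O" feature at channel 80 and an "Fe" feature at channel 200.
fe_rich = 1.0 * peak(80) + 1.0 * peak(200)
o_rich = 1.3 * peak(80) + 0.6 * peak(200)

radius = np.linspace(0.0, 1.0, 120)         # inner -> outer position across the shell
f = 0.2 + 0.6 * radius                      # O-rich fraction grows towards the exterior
shell = np.outer(1 - f, fe_rich) + np.outer(f, o_rich)
shell += rng.normal(0, 0.01, shell.shape)

centred = shell - shell.mean(axis=0)
u, s, vt = np.linalg.svd(centred, full_matrices=False)
score1, loading1 = u[:, 0] * s[0], vt[0]
r = np.corrcoef(score1, radius)[0, 1]
print(f"|corr(score1, radius)| = {abs(r):.3f}, "
      f"loading min/max = {loading1.min():.2f}/{loading1.max():.2f}")
```

The loading has opposite signs at the two features, exactly the kind of non-physical component that a cluster average can never produce but that encodes the gradient.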

The second solution was to apply HAC to the score components extracted by PCA from the original EELS dataset. This method yielded results similar to the previous one (see Fig. 11.10d); the main difference lies in the computational time. By applying PCA first, the number of spectral components is reduced to 3, so the HAC algorithm iterates over \(n = 128\cdot128 = 16384\) elements with only \(p = 3\) spectral components each (instead of the 1024 initial channels).
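The PCA-then-HAC strategy can be sketched on a small synthetic phantom. The zone geometry, spectra and count levels below are hypothetical stand-ins (a 32 × 32 map with 256 channels rather than the 128 × 128 × 1024 phantom of [45]); PCA is computed with numpy's SVD, and Ward linkage with a fixed target of four clusters is an assumed choice.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

rng = np.random.default_rng(8)
n_side, p = 32, 256
yy, xx = np.mgrid[0:n_side, 0:n_side] - (n_side - 1) / 2.0
rr = np.hypot(xx, yy)

# Hypothetical zone map: 0 vacuum, 1 core, 2 shell, 3 precipitate inside the core.
zones = np.zeros((n_side, n_side), dtype=int)
zones[rr < 14] = 2
zones[rr < 9] = 1
zones[np.hypot(xx - 3, yy + 2) < 3] = 3

channels = np.arange(p)

def peak(c):
    return np.exp(-0.5 * ((channels - c) / 8.0) ** 2)

means = 100 * np.stack([np.full(p, 0.02),                   # vacuum: near-zero counts
                        peak(60) + peak(150),               # core
                        peak(60) + 0.5 * peak(150),         # shell
                        peak(60) + peak(150) + peak(220)])  # precipitate
counts = rng.poisson(means[zones.ravel()])                  # Poisson noise, (n, p)

# PCA to three components, then HAC on the scores only.
centred = counts - counts.mean(axis=0)
u, s, _ = np.linalg.svd(centred, full_matrices=False)
scores = u[:, :3] * s[:3]                                   # (n, 3) instead of (n, 256)
labels = fcluster(linkage(scores, method="ward"), t=4, criterion="maxclust")
print(scores.shape, len(np.unique(labels)))
```

The linkage now works on 3 coordinates per pixel instead of 256, which is where the saving in computational time comes from.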

3.2.2 Example. Magnetic Fe3O4–Mn3O4/MnO Nanoparticle

The experimental dataset analyzed corresponds to a core–shell magnetic nanoparticle composed of a mixture of Fe3O4 and Mn3O4/MnO [51].

Once again, clustering segmentation of the raw data successfully separated regions of different composition. The core was identified as mainly iron oxide with minor contributions of manganese oxide (presumably arising from the shell overlapping the core in projection), and the shell as manganese oxide. To obtain an accurate core–shell separation, the EELS dataset had to be normalized to eliminate thickness effects in the signal. One main advantage over the PCA + ICA approach is that none of the segmented components contained non-physical features (such as negative edges) requiring further interpretation.

Unfortunately, fine-structure spectral information (ELNES) is lost if only HAC is performed. PCA was therefore applied to the cluster-analysis results for the shell, as previously done for the phantom. Two different components were found in the cluster, corresponding to different oxidation states of manganese oxide identified through ELNES analysis, which separated Mn3O4 and MnO (see Fig. 11.11b). However, the two oxidation states were still poorly resolved spatially, which could lead to the misinterpretation that they are homogeneously mixed.

Results for the HAC-EELS data treatment in the Fe3O4–Mn3O4/MnO magnetic nanoparticle. a HAC in raw data. Core and shell clearly separated. b Shell component separation by ELNES analysis, after applying PCA over the clusters retrieved by HAC. c HAC on the score spectra extracted by PCA over EELS dataset

To address this, HAC was applied to the score signals extracted by PCA from the original EELS dataset, following the same procedure as in the phantom case study. This method effectively separated the two zones with different oxidation states (Mn3O4 and MnO) in the manganese oxide shell (Fig. 11.11c), improving the segmentation accuracy and spatial resolution and reducing the computational time (since HAC is carried out over only four spectral components).

3.3 Future Perspectives. Clustering Data in EELS-SV Tomographic Reconstructions

The potential benefits of combining new ET algorithms with better segmentation procedures are manifold, starting with overcoming the problems arising from short acquisition times, few projections or short pixel dwell times. This will lead to better EELS-SV reconstructions with a higher degree of complexity (ELNES analysis included), and to reconstructions in otherwise intractable cases (e.g. samples susceptible to beam damage). In this sense, systematic approaches have recently been reported that apply wavelet transforms [28, 52] to reduce the impact of Poisson noise [53, 54] in HAADF images prior to 3D reconstruction with a total variation minimization (TVM) algorithm [55, 56]. The same method is likely to be implemented in EELS-SV reconstruction, given the unavoidable Poisson noise present in EELS due to the nature of the signal.
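A common way to handle Poisson noise, and the subject of refs. [53, 54], is to apply the Anscombe variance-stabilizing transform, denoise as if the noise were Gaussian with unit variance, and transform back. The sketch below uses a Gaussian filter as a stand-in for the wavelet denoising of [28, 52] and the simple algebraic inverse, which is known to be biased at low counts (the optimal inverses of [53, 54] address this). The count levels, image and filter width are illustrative assumptions.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

def anscombe(x):
    """Variance-stabilizing transform: Poisson counts -> approximately unit-variance Gaussian."""
    return 2.0 * np.sqrt(x + 3.0 / 8.0)

def inverse_anscombe(y):
    """Simple algebraic inverse (biased for low counts)."""
    return (y / 2.0) ** 2 - 3.0 / 8.0

rng = np.random.default_rng(9)
# Variance check: raw Poisson variance equals the mean; after the transform it is close to 1.
samples = rng.poisson(20.0, size=100_000)
print(f"variance raw: {samples.var():.2f}, after Anscombe: {anscombe(samples).var():.3f}")

# Denoise a smooth, noisy "projection" in the stabilized domain.
yy, xx = np.mgrid[0:128, 0:128]
clean = 20.0 + 10.0 * np.sin(xx / 20.0) * np.cos(yy / 25.0)
noisy = rng.poisson(clean).astype(float)
denoised = inverse_anscombe(gaussian_filter(anscombe(noisy), sigma=2.0))

def mse(img):
    return np.mean((img - clean) ** 2)

print(f"MSE noisy: {mse(noisy):.2f}, MSE denoised: {mse(denoised):.2f}")
```

Stabilizing the variance first lets a denoiser designed for Gaussian noise treat bright and dark regions consistently, which is the motivation for applying it before TVM reconstruction.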

The recently introduced clustering approach for EELS-SI segmentation has yet to be tested on EELS-SV reconstructions. In principle, given the physical nature of the components extracted by cluster analysis, they should be a valid input for most ET algorithms, provided that no thickness-related artefacts break the projection requirement. A systematic study of the accuracy of EELS-SV reconstruction with different algorithms, under different acquisition conditions and including clustering segmentation techniques, is still needed. An in-depth study of the suitability of the wide variety of available clustering algorithms (e.g. the HAC described here, K-means [48] and Ward's agglomerative clustering [57], among others) for EELS data treatment should also be undertaken.

References

V. Lučić, F. Förster, W. Baumeister, Structural studies by electron tomography: from cells to molecules. Annu. Rev. Biochem. 74, 833–865 (2005)

P.A. Midgley, M. Weyland, 3D electron microscopy in the physical sciences: the development of Z-contrast and EFTEM tomography. Ultramicroscopy 96, 413–431 (2003)

D.B. Williams, C.B. Carter, The transmission electron microscope. in Transmission Electron Microscopy (Springer US, 1996), pp. 3–17. https://doi.org/10.1007/978-1-4757-2519-3_1

P.A. Midgley, R.E. Dunin-Borkowski, Electron tomography and holography in materials science. Nat. Mater. 8, 271–280 (2009)

Z. Saghi et al., Compressed sensing electron tomography of needle-shaped biological specimens—potential for improved reconstruction fidelity with reduced dose. Ultramicroscopy 160, 230–238 (2016)

P. Penczek, M. Marko, K. Buttle, J. Frank, Double-tilt electron tomography. Ultramicroscopy 60, 393–410 (1995)

K.J. Batenburg et al., 3D imaging of nanomaterials by discrete tomography. Ultramicroscopy 109, 730–740 (2009)

T. Roelandts et al., Accurate segmentation of dense nanoparticles by partially discrete electron tomography. Ultramicroscopy 114, 96–105 (2012)

J. Radon, Über die Bestimmung von Funktionen durch ihre Integralwerte längs gewisser Mannigfaltigkeiten, pp. 71–86 (1983). https://doi.org/10.1090/psapm/027/692055

M. Radermacher, Weighted back-projection methods, in Electron Tomography: Methods for Three-Dimensional Visualization of Structures in the Cell (Springer New York, 2006), pp. 245–273. https://doi.org/10.1007/978-0-387-69008-7_9

R.A. Brooks, G. Di Chiro, Principles of computer assisted tomography (CAT) in radiographic and radioisotopic imaging. Phys. Med. Biol. 21, 689–732 (1976)

G.A. Perkins et al., Electron tomography of large, multicomponent biological structures. J. Struct. Biol. 120, 219–227 (1997)

R. Gordon, R. Bender, G.T. Herman, Algebraic reconstruction techniques (ART) for three-dimensional electron microscopy and X-ray photography. J. Theor. Biol. 29, 471–481 (1970)

P. Gilbert, Iterative methods for three dimensional reconstruction of an object from projections. J. Theor. Biol. 36, 105–117 (1972)

M. Weyland, P.A. Midgley, Extending energy-filtered transmission electron microscopy (EFTEM) into three dimensions using electron tomography. Microsc. Microanal. 9, 542–555 (2003)

B. Goris, S. Bals, W. Van den Broek, J. Verbeeck, G. Van Tendeloo, Exploring different inelastic projection mechanisms for electron tomography. Ultramicroscopy 111, 1262–1267 (2011)

G. Möbus, R.C. Doole, B.J. Inkson, Spectroscopic electron tomography. Ultramicroscopy 96, 433–451 (2003)

G. Möbus, B.J. Inkson, Nanoscale tomography in materials science. Mater. Today 10, 18–25 (2007)

J. Frank (ed.), Electron Tomography: Methods for Three-Dimensional Visualization of Structures in the Cell, 2nd edn. (Springer, 2006). https://doi.org/10.1007/978-0-387-69008-7

N. Dobigeon, N. Brun, Spectral mixture analysis of EELS spectrum-images. Ultramicroscopy 120, 25–34 (2012)

C. Jeanguillaume, C. Colliex, Spectrum-image: the next step in EELS digital acquisition and processing. Ultramicroscopy 28, 252–257 (1989)

B. Freitag, S. Kujawa, P.M. Mul, J. Ringnalda, P.C. Tiemeijer, Breaking the spherical and chromatic aberration barrier in transmission electron microscopy. Ultramicroscopy 102, 209–214 (2005)

B. Kabius et al., First application of Cc-corrected imaging for high-resolution and energy-filtered TEM. J. Electron. Microsc. (Tokyo) 58, 147–155 (2009)

K. Kimoto, G. Kothleitner, W. Grogger, Y. Matsui, F. Hofer, Advantages of a monochromator for bandgap measurements using electron energy-loss spectroscopy. Micron 36, 185–189 (2005)

M.T. Otten, W.M.J. Coene, High-resolution imaging on a field emission TEM. Ultramicroscopy 48, 77–91 (1993)

R.F. Egerton, in Electron Energy-Loss Spectroscopy in the Electron Microscope. (Springer, 2011)

L. Cavé, T. Al, D. Loomer, S. Cogswell, L. Weaver, A STEM/EELS method for mapping iron valence ratios in oxide minerals. Micron 37, 301–309 (2006)

Q. Du, J.E. Fowler, Hyperspectral image compression using JPEG2000 and principal component analysis. IEEE Geosci. Remote Sens. Lett. 4, 201–205 (2007)

E. Candès, J. Romberg, Sparsity and incoherence in compressive sampling. Inverse Probl. 23, 969–985 (2007)

B. Bougher, Introduction to compressed sensing. Lead. Edge 34, 1256–1258 (2015). https://doi.org/10.1190/tle34101256.1

R. Leary, Z. Saghi, P.A. Midgley, D.J. Holland, Compressed sensing electron tomography. Ultramicroscopy 131, 70–91 (2013)

J.M. Thomas, R. Leary, P.A. Midgley, D.J. Holland, A new approach to the investigation of nanoparticles: electron tomography with compressed sensing. J. Colloid Interface Sci. 392, 7–14 (2013)

Z. Saghi et al., Three-dimensional morphology of iron oxide nanoparticles with reactive concave surfaces. A compressed sensing-electron tomography (CS-ET) approach. Nano Lett. 11, 4666–4673 (2011)

P. Torruella et al., 3D visualization of the iron oxidation state in FeO/Fe3O4 core-shell nanocubes from electron energy loss tomography. Nano Lett. 16, 5068–5073 (2016)

L.I. Rudin, S. Osher, E. Fatemi, Nonlinear total variation based noise removal algorithms. Physica D 60, 259–268 (1992)

L. Yedra et al., EELS tomography in multiferroic nanocomposites: from spectrum images to the spectrum volume. Nanoscale 6, 6646–6650 (2014)

L. Yedra et al., EEL spectroscopic tomography: towards a new dimension in nanomaterials analysis. Ultramicroscopy 122, 12–18 (2012)

H. Abdi, L.J. Williams, Principal component analysis. WIREs Comp. Stat. 2, 433–459 (2010)

A. Hyvärinen, J. Karhunen, E. Oja, Independent component analysis (2004)

J.D. Bayliss, J.A. Gualtieri, R.F. Cromp, Analyzing hyperspectral data with independent component analysis, in Proc. SPIE 3240, ed. by J.M. Selander, pp. 133–143 (International Society for Optics and Photonics, 1998)

W. Van den Broek et al., Correction of non-linear thickness effects in HAADF STEM electron tomography. Ultramicroscopy 116, 8–12 (2012)

N. Dobigeon, S. Moussaoui, M. Coulon, J.-Y. Tourneret, A.O. Hero, Joint bayesian end member extraction and linear unmixing for hyperspectral imagery. IEEE Trans. Signal Process. 57, 4355–4368 (2009)

N. Dobigeon, N. Brun, Spectral mixture analysis of EELS spectrum-images. Ultramicroscopy 120, 25–34 (2012)

J. Nascimento, J. Bioucas-Dias, Does independent component analysis play a role in unmixing hyperspectral data? IEEE Trans. Geosci. Remote Sens. 43, 175–187 (2005)

P. Torruella et al., Clustering analysis strategies for electron energy loss spectroscopy (EELS). Ultramicroscopy 185, 42–48 (2018)

L.A. Giannuzzi, F.A. Stevie, A review of focused ion beam milling techniques for TEM specimen preparation. Micron 30, 197–204 (1999). https://doi.org/10.1016/S0968-4328(99)00005-0

F. de la Peña et al., hyperspy/hyperspy: HyperSpy 1.3 (2017). https://doi.org/10.5281/zenodo.583693

A.K. Jain, Data clustering: 50 years beyond K-means. Pattern Recognit. Lett. 31, 651–666 (2010)

J. Sander, M. Ester, H.-P. Kriegel, X. Xu, Density-based clustering in spatial databases: the algorithm GDBSCAN and its applications. Data Min. Knowl. Discov. 2, 169–194 (1998)

I. Davidson, S.S. Ravi, Agglomerative hierarchical clustering with constraints: theory and empirical results, in 9th European Conference on Principles and Practice of Knowledge Discovery in Databases, PKDD 2005, pp. 59–70 (2005)

L. Yedra et al., Oxide wizard: an EELS application to characterize the white lines of transition metal edges. Microsc. Microanal. 20, 698–705 (2014)

T. Printemps et al., Self-adapting denoising, alignment and reconstruction in electron tomography in materials science. Ultramicroscopy 160, 23–34 (2016)

M. Makitalo, A. Foi, Optimal inversion of the Anscombe transformation in low-count Poisson image denoising. IEEE Trans. Image Process. 20, 99–109 (2011)

M. Makitalo, A. Foi, Optimal inversion of the generalized Anscombe transformation for Poisson-Gaussian noise. IEEE Trans. Image Process. 22, 91–103 (2013)

M. López-Haro et al., A macroscopically relevant 3D-metrology approach for nanocatalysis research. Part. Part. Syst. Charact. 35, 1700343 (2018)

B. Goris, W. Van den Broek, K.J. Batenburg, H.H. Mezerji, S. Bals, Electron tomography based on a total variation minimization reconstruction technique. Ultramicroscopy 113, 120–130 (2012)

F. Murtagh, P. Legendre, Ward’s hierarchical agglomerative clustering method: which algorithms implement ward’s criterion? J. Classif. 31, 274–295 (2014)

Copyright information

© 2021 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Torruella, P. et al. (2021). Electron Tomography. In: Peddis, D., Laureti, S., Fiorani, D. (eds) New Trends in Nanoparticle Magnetism. Springer Series in Materials Science, vol 308. Springer, Cham. https://doi.org/10.1007/978-3-030-60473-8_11

DOI: https://doi.org/10.1007/978-3-030-60473-8_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-60472-1

Online ISBN: 978-3-030-60473-8

eBook Packages: Chemistry and Materials Science